1. Introduction

The Dual Active Bridge (DAB) DC-DC converter has been widely applied in fields such as renewable energy, electric vehicles (EVs), and energy storage, owing to its flexibility and efficiency. Its support for bidirectional power flow, galvanic isolation, and high power density makes it particularly suitable for applications that require robust energy exchange, such as EV battery charging, grid-connected storage, and interfacing with renewable power sources [1]. The DAB converter’s ability to operate over a wide voltage range adds to its flexibility and makes it an important building block of modern energy systems. These topologies employ different types of filters to limit the harmful harmonics produced by high switching speeds. In particular, the CLLC filter enables soft switching (both zero-voltage and zero-current switching) over a wide input range, which reduces EMI and improves power density. Because of these features, CLLC filters are especially suitable for DAB systems that require a small form factor and low power [2,3,4]. Nevertheless, control of the DAB converter remains difficult because of its nonlinear, fast-switching behavior and its sensitivity to load and input variations. These characteristics may lead to performance degradation, output variability, and inconsistent dynamic behavior. They are best addressed by a control technique that is adaptable and robust and that delivers dependable, consistent performance across operating conditions [5,6]. An ideal solution should account for the converter’s nonlinearities while maintaining fast transient response and stable, efficient operation.

Over the years, a wide range of advanced control strategies has been developed and implemented for the DAB converter to deal with its strongly nonlinear behavior and to improve performance under different operating conditions. Sliding Mode Control (SMC) [7,8], Fuzzy Logic Control [9], and Backstepping Control [10,11,12] have been widely acknowledged for their robustness to parameter uncertainties and external disturbances, in addition to guaranteeing fast transient response and accurate tracking for nonlinear systems. Neural Network-based Control, which can learn and adapt to complex nonlinearities through data-driven modeling, has also emerged as a promising approach [13,14]. However, this type of controller generally demands heavy computational resources for training and real-time implementation, which makes it less suitable for high-switching-frequency systems such as the DAB converter. Although these controllers have favorable aspects, the DAB converter is inherently a time-varying system; hence, adaptive control design based on system dynamics is emphasized. It is well known that the disturbance-rejection performance of an adaptive controller surpasses that of a fixed-gain controller, especially under changing parameters, loads, and external disturbances [15,16,17]. To address the complexity and high computational cost associated with advanced nonlinear control techniques, fractional-order controllers have emerged as a preferred alternative. The Fractional-Order PID (FOPID) controller is particularly well-suited for fine-tuned control in systems involving pressure/flow dynamics, offering enhanced flexibility in shaping control responses [18,19,20,21]. By extending the classical PID framework with non-integer orders of integration and differentiation, FOPID provides superior control precision and better dynamic adaptation over a broader frequency range. Unlike classical controllers, FOPID enables more flexible adjustment of system dynamics, making it efficient for addressing nonlinear and dynamic behaviors. Its functional advantages include higher robustness, enhanced disturbance-rejection capabilities, faster transient response, and improved steady-state performance. Additionally, its relatively simple algorithm makes it convenient for real-time applications [19,20].
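To make the extension of the classical PID framework concrete, the FOPID law is commonly written as u(t) = Kp e(t) + Ki D^(-λ) e(t) + Kd D^(μ) e(t), where λ and μ are the fractional orders. The following is a minimal sketch of one possible discrete realization using the Grünwald-Letnikov approximation with a truncated history (the short-memory principle). The class name, the default memory length, and the choice of approximation are assumptions made for illustration; the paper does not state which realization it uses.

```python
import numpy as np


def gl_coeffs(alpha, n):
    """Grünwald-Letnikov weights w_j = (-1)^j * binom(alpha, j) for j = 0..n-1."""
    w = np.empty(n)
    w[0] = 1.0
    for j in range(1, n):
        w[j] = w[j - 1] * (1.0 - (alpha + 1.0) / j)
    return w


class FOPID:
    """Illustrative discrete FOPID controller (hypothetical helper, not the paper's code)."""

    def __init__(self, kp, ki, kd, lam, mu, dt, memory=200):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.lam, self.mu, self.dt = lam, mu, dt
        self.w_int = gl_coeffs(-lam, memory)  # order -lambda: fractional integral
        self.w_der = gl_coeffs(mu, memory)    # order +mu: fractional derivative
        self.history = []                     # past errors, newest first

    def step(self, error):
        # Keep only the most recent `memory` samples (short-memory truncation).
        self.history.insert(0, error)
        del self.history[len(self.w_int):]
        e = np.asarray(self.history)
        k = len(e)
        integ = self.dt ** self.lam * np.dot(self.w_int[:k], e)
        deriv = self.dt ** (-self.mu) * np.dot(self.w_der[:k], e)
        return self.kp * error + self.ki * integ + self.kd * deriv
```

With λ = μ = 1 the weights reduce to a rectangular-rule integral and a backward-difference derivative, so the sketch collapses to an ordinary discrete PID, which is a convenient sanity check.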

Recent studies have demonstrated the usefulness of FOPID control in power systems and power converters [19,22,23,24,25]. For example, the application of FOPID controllers to DC-DC converters has shown substantial improvements in voltage regulation, reduced overshoot, and enhanced dynamic response under load changes. In grid-connected power systems, FOPID-based controllers have also been utilized to regulate inverter dynamics, leading to smoother power delivery and improved system stability in the presence of grid disturbances [21]. These results highlight the practical benefits of FOPID controllers in real-world applications, as they provide an optimal balance between performance and implementation feasibility. Due to their high degree of flexibility and relatively low computational demand, FOPID controllers are increasingly considered viable alternatives for intelligent control in power electronics, particularly in DAB converters [25]. One of the critical aspects of a successful FOPID controller is effective gain tuning. The performance and effectiveness of FOPID controllers depend heavily on the proper selection of proportional, integral, and derivative gains, along with the fractional orders of integration and differentiation. While fixed gain values are easier to implement, they often fail to adapt to varying operating conditions, resulting in suboptimal performance, increased steady-state error, and reduced robustness to disturbances or parameter variations. Given that the DAB converter is a nonlinear system with time-varying loads, the use of fixed-gain controllers may not be sufficient to exploit the full potential of the FOPID framework [19,24].

To overcome these shortcomings, recent studies have focused on the optimization and dynamic tuning of FOPID controller gains to achieve better adaptability and efficiency. Recent developments in power electronics control have explored the combination of FOPID controllers with other control techniques to improve dynamic performance and transitional behavior [26,27,28,29]. These hybrid strategies aim to leverage the strengths of multiple control methods to address the complex dynamics of modern systems. One notable advancement is the development of the FOPID-SMC controller. Sliding Mode Control (SMC) is well known for its strong robustness to system uncertainties and external disturbances [30]. With the incorporation of fractional-order elements, the resulting controller benefits from precise tuning of performance indices, which enhances transient response, reduces chattering effects, and minimizes overshoot compared to conventional SMC. However, these advantages come at the cost of increased design complexity and potentially higher processing requirements for implementation. Another hybrid approach is the integration of FOPID with Fuzzy Logic Control. Fuzzy controllers are well suited for nonlinear and uncertain systems that lack an accurate mathematical model [31,32]. When integrated with FOPID, the controller can finely tune system responses with suitable damping values across a broader frequency range than integer-order PID controllers. This combination also helps to smooth control actions and improve the system’s ability to respond to load variations. Nevertheless, the design process is more complex and typically requires extensive optimization to achieve optimal performance. FOPID has also been combined with Neural Network-based Control. Neural networks are capable of learning and adapting to temporal changes within dynamic systems by adjusting internal weights. When integrated with FOPID, the controller gains the ability to adapt its parameters in real-time, resulting in greater flexibility and robustness [33,34]. However, this approach demands significant computational resources, and the need to train neural networks may hinder real-time applicability. These hybrid control strategies demonstrate that it is possible to enhance the dynamic behavior of DAB converters by combining the precision tuning and adaptability of FOPID with the robustness and flexibility of other advanced control designs. Nonetheless, the increased design complexity and computational demands must be carefully managed to ensure practical feasibility, particularly for real-time applications.

Reinforcement Learning (RL) schemes coupled with FOPID controllers provide a potent methodology to further improve the control of complex systems. RL allows a controller to learn and cope with time-varying environments by interacting with the system and optimizing control actions according to feedback, which avoids the shortcomings of fixed-gain controllers [35,36]. The key benefit of integrating RL with FOPID controllers is online, real-time tuning of the control parameters. This flexibility helps keep performance near optimal over diverse operating conditions and increases the disturbance rejection and robustness of the system [37]. Furthermore, RL-based control approaches can address the nonlinearities, uncertainties, and disturbances of power electronic systems without an accurate model, which greatly simplifies the controller design process. Several RL-enhanced controllers of this kind have been reported recently. For example, a model-based RL approach using probabilistic inference for learning control was employed to refine PID parameters and was tested for robust performance in underactuated mechanical systems [31,38,39]. These developments demonstrate that RL-embedded FOPID controllers can offer adaptive, high-performance, and robust control solutions for power converters, especially in systems where high precision and reliability are required. Although combining RL with various controllers is promising, three major issues must be tackled to fully exploit its strengths [40,41]. The first is to enhance the adaptability and robustness of the controller under a broader range of disturbances and operating conditions. Although RL can adapt, achieving stable and reliable performance in diverse scenarios requires a reliable and efficient learning model [42,43]. This is especially important for power converters, whose operation is nonlinear and time-varying. Improving the adaptability of the RL-FOPID system is therefore necessary to control the system reliably in dynamic and uncertain environments. The second issue concerns the high computational cost and time needed to obtain the initial gains of the FOPID controller through RL [41]. The initialization phase, in which the RL agents explore and learn the parameter space, is often long and computationally expensive, and this overhead can cause significant delay in real-time devices. The difficulty grows when fractional-order parameters are included, since they further enlarge the search domain [31]. Finding an appropriate strategy to initialize the RL algorithm is therefore essential for speeding up convergence and reducing complexity in practice. The third challenge is to select a policy for the RL framework that yields optimal control dynamics without introducing excessive computational load. Balancing exploration and exploitation is vital for reaching optimal solutions in a time-effective way. A good policy not only improves learning efficiency but also lets the controller converge well to the system dynamics without consuming excessive computational resources. Solving these three problems is the key to RL-enhanced FOPID controllers that deliver robust, adaptive, and efficient solutions for advanced power electronic systems (PES).

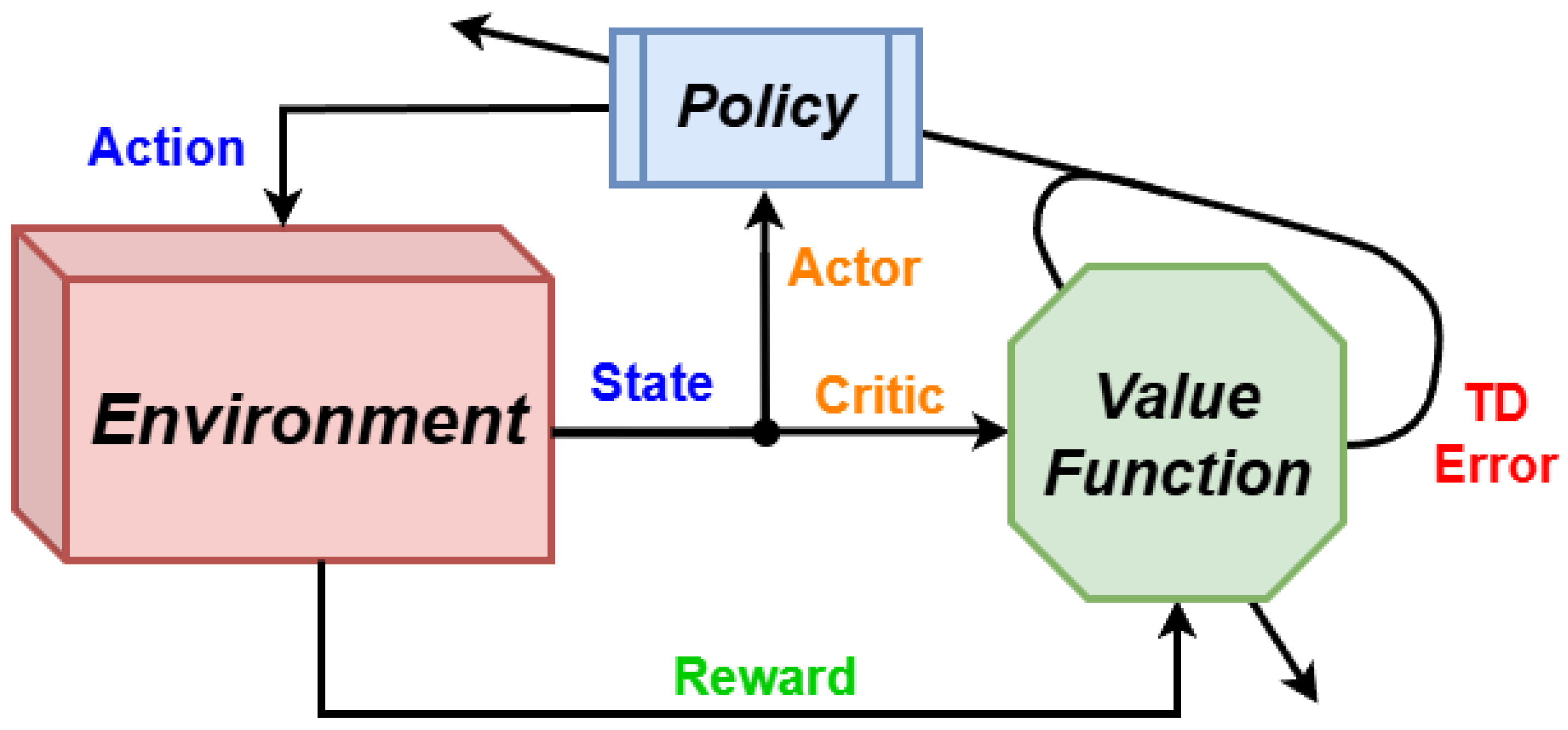

To enhance the adaptivity and robustness of the controller under different types of disturbances and operating points, as well as its generalization capability, in this paper we employ the Deep Deterministic Policy Gradient (DDPG) algorithm [44,45]. DDPG is a model-free actor-critic algorithm; actor-critic architectures are well suited to finding optimal policies for controller design in continuous action spaces, where the output can be the control action for power converters [46,47,48]. It combines Q-learning and policy gradients, learns a deterministic policy, and can handle the nonlinear dynamics of converters. Because the algorithm is off-policy, it can use experience replay buffers to increase sample efficiency and training stability. DDPG has several advantages compared to other RL algorithms [49,50]. Building on the earlier Deterministic Policy Gradient (DPG), DDPG approximates the actor (policy) and critic (value function) with deep models, which makes it more scalable as system complexity grows. Deep Q-learning (DQN), in contrast, has proved very effective for discrete action spaces but does not work well for continuous control tasks such as power converters, which require high-precision control [51,52]. Another popular algorithm, Soft Actor-Critic (SAC), concentrates on stochastic policies to encourage exploration and could add more computational overhead than the deterministic approach of DDPG [53,54]. DDPG is therefore a good choice for the DAB application: it copes with continuous control inputs, provides stable policy updates, and remains computationally efficient, which makes it a strong and practical option for real-time power electronics control.
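The off-policy ingredients mentioned above (experience replay, a bootstrapped critic target, and slowly tracking target networks) can be sketched in a few lines. This is a schematic illustration under stated assumptions, not the training code used in this work: the class and function names, the dictionary-of-arrays parameter format, and the default values of `gamma` and `tau` are all hypothetical, and the actor and critic networks themselves are omitted.

```python
import random
from collections import deque

import numpy as np


class ReplayBuffer:
    """Fixed-size store of (s, a, r, s_next, done) transitions."""

    def __init__(self, capacity):
        self.buf = deque(maxlen=capacity)  # oldest transitions are dropped first

    def add(self, s, a, r, s_next, done):
        self.buf.append((s, a, r, s_next, done))

    def sample(self, batch_size):
        batch = random.sample(list(self.buf), batch_size)
        s, a, r, s_next, done = map(np.array, zip(*batch))
        return s, a, r, s_next, done


def td_target(r, done, q_next, gamma=0.99):
    """Critic target y = r + gamma * (1 - done) * Q'(s', mu'(s')).

    q_next is the target critic's value at the target actor's action.
    """
    return r + gamma * (1.0 - done) * q_next


def soft_update(target, online, tau=0.005):
    """Polyak averaging: theta_target <- tau * theta + (1 - tau) * theta_target."""
    return {k: tau * online[k] + (1.0 - tau) * target[k] for k in target}
```

In a full DDPG loop, the critic would be regressed toward `td_target`, the actor would be updated along the critic's gradient with respect to the action, and `soft_update` would be applied to both target networks after each step.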

To handle simultaneous tuning of multiple gains of the FOPID controller, we use Multi-Agent Reinforcement Learning (MRL) [55,56]. In this setting, each gain is controlled by a separate agent, resulting in decentralized learning and optimization. This structure gives the controller more flexibility and scalability, since the gain assigned to each agent can be tuned according to the dynamics of the system. MRL promotes cooperative control and enhances performance in complex environments [56]. For example, in industrial wave energy converters, MRL controllers have exhibited better energy capture than conventional controllers [57]. MRL has been applied in a growing number of fields and has shown a distinct advantage over single-agent approaches. In power grid control, environments designed specifically for complex power system operations, such as PowerGridworld, have been developed. Such environments enable agents to represent heterogeneous systems and learn policies that improve power flow solutions, resulting in better control of grid-level variables and operational costs [58,59]. Similarly, MRL has been used in frequency regulation, with each generator controller modeled as an individual agent. This distributed method allows the agents to learn their own control policies, which in turn provides good frequency and system stability [60]. MRL has also been used in load frequency control of multi-area power systems, where cooperative learning among agents has produced optimized joint controllers that greatly enhance system performance and stability. These applications demonstrate MRL’s advantages in scalability, resilience, and efficiency, especially where collective action and decentralized decision making are involved. MRL is more versatile and adaptable than single-agent methods for controlling complex, non-stationary environments. Although the approach is effective, multi-agent RL can have trouble determining the initial gains needed for effective operation of the system. The optimization is very sensitive to the initial gain values, which may slow convergence or lead to poor solutions if not properly chosen [61,62]. To resolve this problem and improve the performance of the algorithm, we use a metaheuristic algorithm to select proper initial gains. By offering well-tuned starting points, such algorithms can reduce the search effort required at each iteration, leading to faster convergence and, ultimately, better overall performance in complex scenarios.
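The one-agent-per-gain structure described above can be illustrated as follows. This is only a structural sketch: the parameter bounds, the three-element observation, the relative step size, and the linear-tanh actor (standing in for a trained DDPG actor network) are assumptions introduced for the example and are not taken from the paper.

```python
import numpy as np

# Hypothetical search ranges for the five FOPID parameters (illustrative only).
BOUNDS = {
    "kp": (0.0, 10.0),
    "ki": (0.0, 100.0),
    "kd": (0.0, 1.0),
    "lam": (0.1, 1.5),
    "mu": (0.1, 1.5),
}


class GainAgent:
    """One agent per FOPID parameter, adjusting it around an initial value."""

    def __init__(self, name, initial, obs_dim, max_step, rng):
        self.name = name
        self.initial = initial    # starting gain, e.g. supplied by a metaheuristic
        self.max_step = max_step  # largest allowed deviation from the initial gain
        self.weights = rng.normal(scale=0.01, size=obs_dim)  # placeholder actor

    def act(self, obs):
        lo, hi = BOUNDS[self.name]
        delta = self.max_step * np.tanh(self.weights @ obs)  # bounded adjustment
        return float(np.clip(self.initial + delta, lo, hi))


def build_agents(initial_gains, obs_dim=3, rel_step=0.2, seed=0):
    rng = np.random.default_rng(seed)
    return [
        GainAgent(name, gain, obs_dim, rel_step * (BOUNDS[name][1] - BOUNDS[name][0]), rng)
        for name, gain in initial_gains.items()
    ]


def joint_action(agents, obs):
    """Every agent sees the same observation and returns its own gain."""
    return {agent.name: agent.act(obs) for agent in agents}
```

A call such as `joint_action(build_agents({"kp": 2.0, "ki": 20.0, "kd": 0.1, "lam": 0.9, "mu": 0.8}), obs)` returns one bounded value per FOPID parameter, which could then be passed to the controller at each control step.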

Recent studies have also explored the use of advanced control strategies and hybrid intelligence in emerging energy systems, emphasizing the integration of machine learning and metaheuristics for real-time optimization [63,64,65,66]. These works highlight the importance of adaptive, data-driven control frameworks that are robust under dynamic and uncertain operating conditions, which aligns with the approach adopted in this study. Metaheuristic algorithms such as PSO, GA, and ALO have been studied for finding optimal gain values in specific applications [67,68,69]. Nevertheless, gain tuning with such methods is not yet satisfactory under wide disturbances [70,71]. To avoid the time-consuming task of discovering optimal initial gains for the RL mechanism, we use the Grey Wolf Optimization (GWO) algorithm [72]. GWO is a metaheuristic optimization algorithm that mimics the social hierarchy and hunting behavior of grey wolves; it is simple and explores complex search spaces efficiently. Using GWO to find the initial values of the gains gives the RL agents a better starting point, which leads to faster convergence of learning and less computation. This coordination helps the controller improve its performance more effectively. GWO has several advantages over other metaheuristics. One of its major strengths is its capacity to balance exploration and exploitation: it first explores the solution space and then refines existing solutions to improve their quality [73,74,75,76]. GWO has shown competitive numerical performance on a wide range of problems, including benchmark functions and real-world engineering problems, which supports its generality and flexibility. Comparative studies with other metaheuristic techniques report that GWO performs better in particular cases, especially in finding an optimal solution efficiently, making it an effective tool for optimization tasks in different fields [77]. However, despite significant advances in adaptive control algorithms for DAB converters, some fundamental issues remain unresolved. Conventional FOPID controllers, although more flexible than classical PID controllers, are often designed with static or manually tuned parameters, which restricts their applicability in dynamic operating environments [78,79,80,81]. Traditional RL-based controllers for power converters are often based on a single-agent framework, which makes tuning multiple parameters in a high-dimensional space complex and slows convergence. Furthermore, the initialization of RL agents is still a major bottleneck, because poor initial conditions can result in longer training time or suboptimal control performance. This paper fills these gaps by introducing a GWO-aided MARL framework for real-time, more accurate, control-oriented FOPID design for DAB converters.
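For readers unfamiliar with GWO, the sketch below shows the standard position-update rule, in which every wolf moves toward the three best solutions found so far (alpha, beta, delta) while the coefficient `a` decays linearly from 2 to 0 to shift from exploration to exploitation. The function name, population size, iteration count, and the stand-in cost function are assumptions for illustration; in the setting of this paper the cost would instead come from a converter simulation evaluated for a candidate set of FOPID gains.

```python
import numpy as np


def gwo(cost, lower, upper, n_wolves=20, n_iter=100, seed=0):
    """Minimal Grey Wolf Optimizer sketch (minimization, box constraints)."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    wolves = rng.uniform(lower, upper, size=(n_wolves, lower.size))
    leaders, leader_f = None, None

    for it in range(n_iter):
        fitness = np.array([cost(w) for w in wolves])
        # Keep the three best solutions seen so far: alpha, beta, delta.
        if leaders is None:
            pool, pool_f = wolves, fitness
        else:
            pool = np.vstack([leaders, wolves])
            pool_f = np.concatenate([leader_f, fitness])
        order = np.argsort(pool_f)[:3]
        leaders, leader_f = pool[order].copy(), pool_f[order].copy()

        a = 2.0 * (1.0 - it / n_iter)  # decays linearly from 2 toward 0
        moved = np.zeros_like(wolves)
        for leader in leaders:
            A = 2.0 * a * rng.random(wolves.shape) - a
            C = 2.0 * rng.random(wolves.shape)
            D = np.abs(C * leader - wolves)  # distance to this leader
            moved += leader - A * D
        wolves = np.clip(moved / 3.0, lower, upper)  # average of the three pulls

    return leaders[0], leader_f[0]


if __name__ == "__main__":
    # Stand-in cost; a real use would score a simulated step response instead.
    best_x, best_f = gwo(lambda x: float(np.sum(x ** 2)), [-10.0] * 5, [10.0] * 5)
    print(best_x, best_f)
```

The returned vector would serve as the initial gain set handed to the RL agents, so that learning starts from a reasonable operating point rather than from random values.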

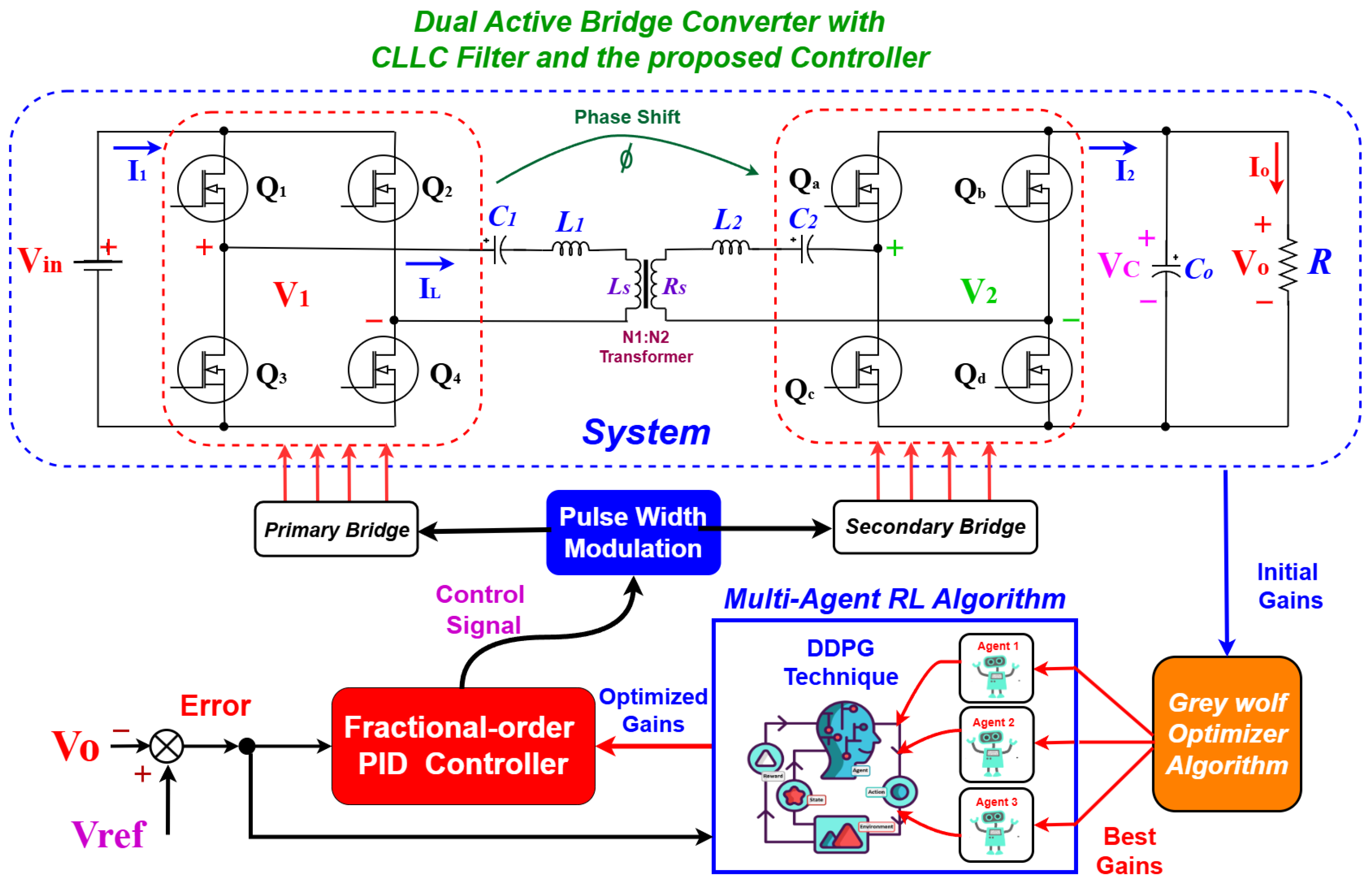

Figure 1 is presented in detail to illustrate the system topology using the proposed controller and its operation.

By combining DDPG for policy optimization, MARL for decentralized gain regulation, and GWO for efficient initialization, our approach addresses the key challenges in implementing RL-enhanced FOPID controllers for DAB converters. This comprehensive strategy enhances the controller’s adaptability, reduces computational complexity, and ensures robust performance across a wide range of operating conditions. The main contributions of this work can be summarized as follows:

Designed a FOPID controller tailored for DAB converters, leveraging its extended flexibility and superior dynamic performance compared to classical PID controllers.

Adopted the RL-DDPG algorithm for real-time tuning of FOPID controller gains, ensuring adaptability to varying operating conditions and disturbances. Our work utilizes a Model-free approach by adopting this method, leading to reduced dependency on system modeling and significantly lowering computational burden.

Implemented a Multi-agent framework for decentralized and dynamic regulation of individual controller gains, improving scalability and robustness.

To ensure faster convergence and improved learning stability, the Grey Wolf Optimizer (GWO) was employed to initialize the actor’s parameters, providing the best starting gains for the DDPG algorithm.

Conducted real-time validation of the proposed control strategy using a Hardware-in-Loop (HIL) setup to assess its performance under practical operating conditions. Demonstrated the controller’s effectiveness in achieving precise voltage and current regulation, robust disturbance rejection, and improved transient and steady-state performance.

6. Experimental Results

The Typhoon HIL microgrid setup, shown in Figure 12, is a real-time simulation platform designed for testing control systems, power electronics, and microgrid operations. It offers a Hardware-in-the-Loop (HIL) environment where engineers can simulate complex electrical grids, including renewable energy sources, energy storage systems, and distributed generation, with high accuracy. By allowing seamless integration of controllers, the platform enables precise performance evaluation under various conditions, such as faults and grid disturbances, without the need for physical prototypes.

This setup accelerates development, enhances safety, and provides a real-time assessment of system stability and response.

Figure 13 presents the HIL simulation and Supervisory Control And Data Acquisition (SCADA) environment implemented in this study. Figure 13a shows the online platform for the DAB converter with the proposed controller, and Figure 13b shows the real-time SCADA platform for the online results. To evaluate the performance of the proposed controller for the DAB converter’s voltage regulation, different cases are tested using this real-time testing setup.

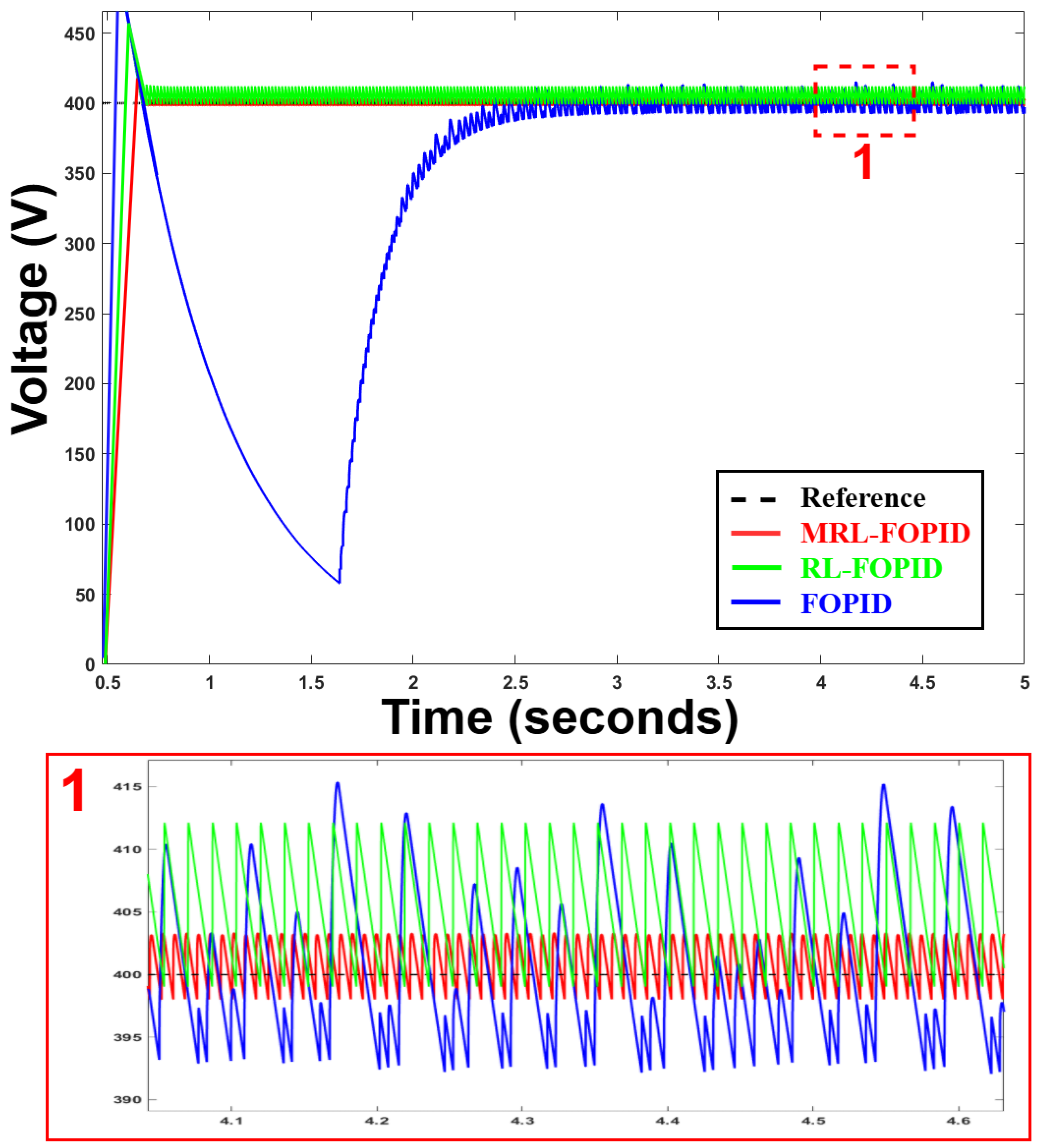

6.1. Case 1: Output Regulation

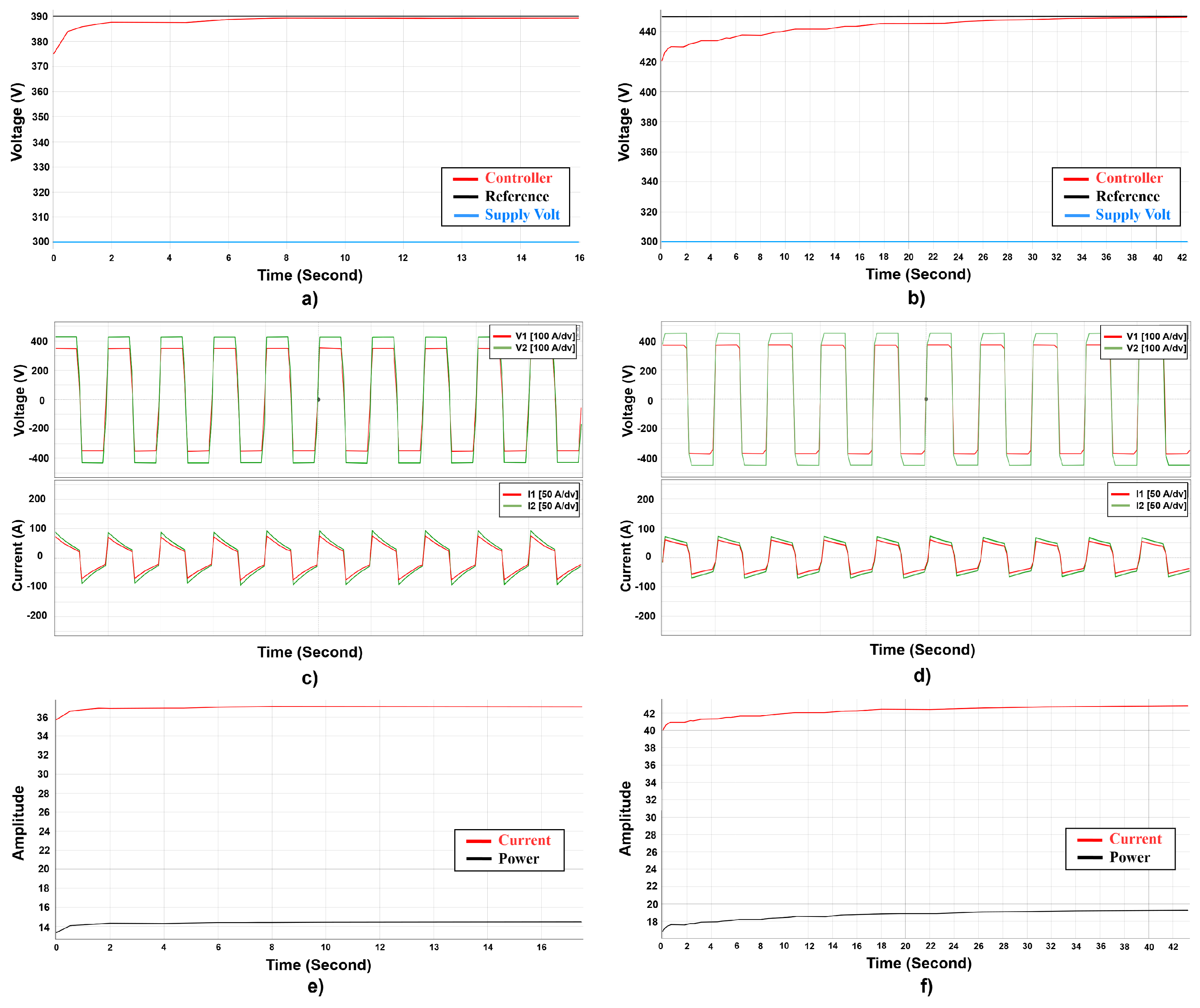

Figure 14 presents the experimental results of the proposed MRL-FOPID controller for the DAB converter, validated through a HIL setup. The voltage tracking performance, as shown in Figure 14a,b, demonstrates rapid convergence to the reference values of 390 V and 440 V, respectively, with minimal overshoot and smooth settling. The symmetrical phase voltage waveforms (V1 and V2) in Figure 14c,d reflect effective control of the converter’s switching dynamics, while the corresponding current waveforms (I1 and I2) exhibit minimal distortion and precise tracking, indicating robust ripple mitigation. Figure 14e,f further highlight the industrial viability of the setup, showcasing stable current and power dynamics that ensure efficient power transfer and load adaptability. This stability and precision in regulating current and power output are crucial for industrial applications such as electric vehicle charging, renewable energy integration, and grid-tied power systems, where consistent performance and high reliability are essential. Overall, the results underline the MRL-FOPID controller’s capability to achieve precise voltage and current regulation while supporting scalable and efficient industrial energy systems.

These smooth, well-synchronized waveforms show the controller’s effective current and voltage management. Low overshoot and undershoot and short settling times indicate that the controller’s adaptive mechanism adjusts efficiently to load and operating conditions. In the next case, the robustness of the proposed method is tested under reference voltage changes.

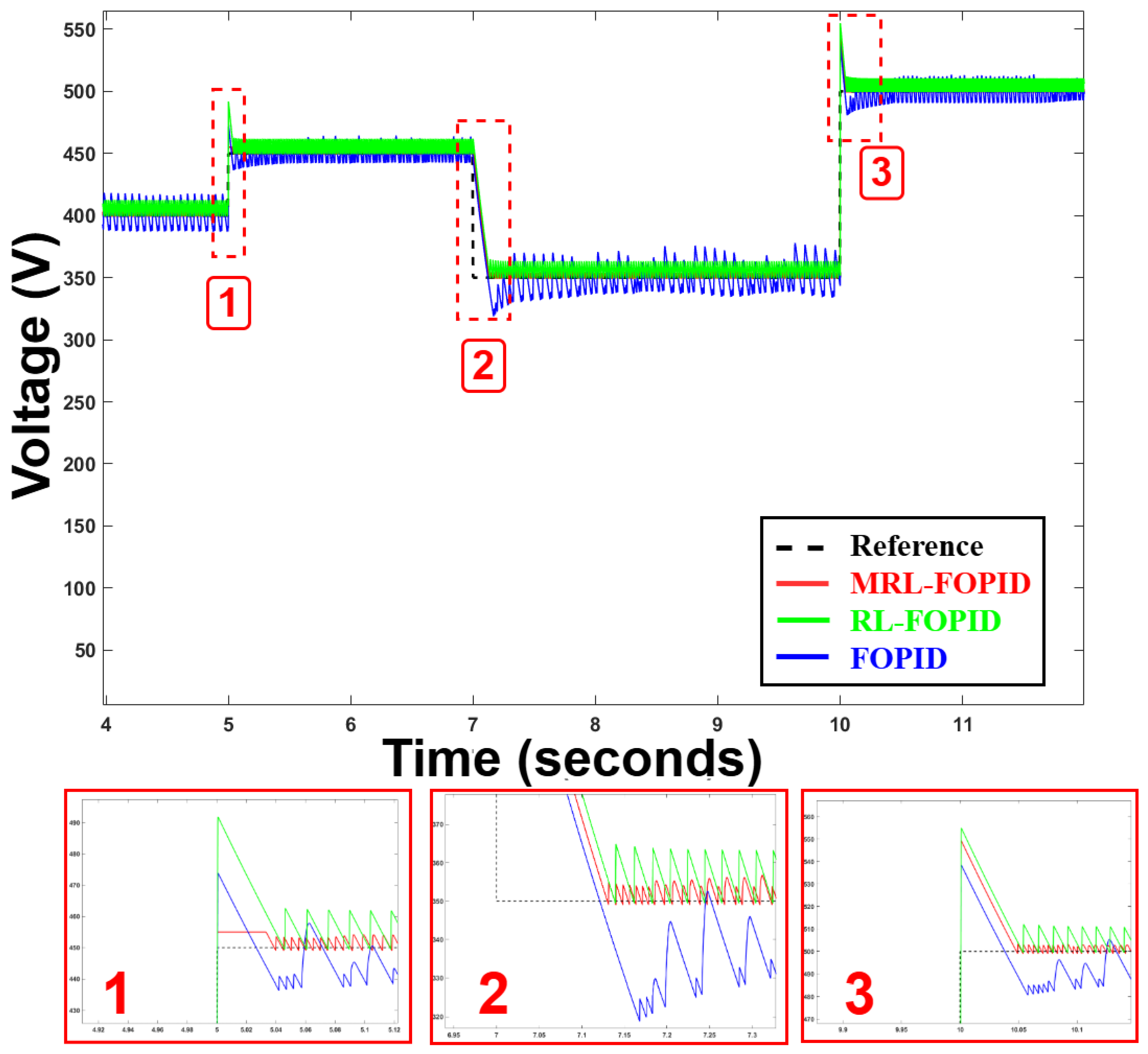

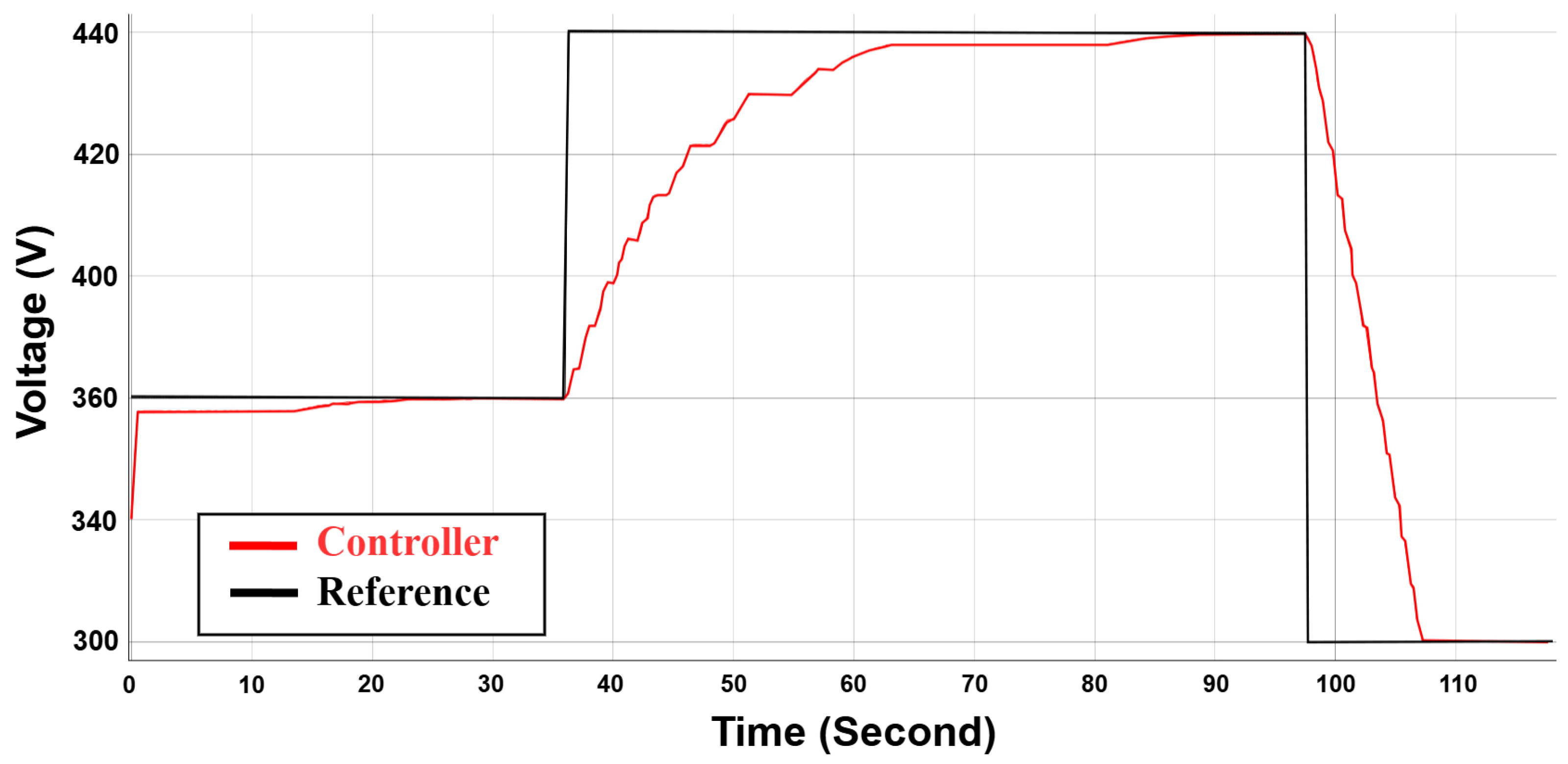

6.2. Case 2: Reference Tracking

Figure 15 illustrates the tracking performance of the proposed MRL-FOPID controller under a dynamic reference voltage signal, with the supply voltage set at 250 V. The black line represents the reference voltage, and the red line shows the controller’s output response. The controller maintains minimal steady-state error and achieves fast transient responses during step changes in the reference signal. The system effectively tracks the transition from 360 V to 440 V and back to 360 V, with smooth adjustments and stability during the dynamic changes. This highlights the robustness of the MRL-FOPID controller in managing varying operating conditions while ensuring precise voltage regulation. Such reference-tracking capability is essential for industrial applications that need consistent and adaptive power management under changing load and voltage conditions.
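Qualitative terms such as minimal steady-state error and fast transient response can be quantified from a logged trace. The helper below is a hedged sketch of how overshoot, settling time, and steady-state error might be computed for one reference step; the function name, the 2% settling band, and the sample data are assumptions for illustration and are not measurements from this study.

```python
import numpy as np


def step_metrics(t, y, y0, ref, band=0.02):
    """Overshoot (% of step size), settling time, and steady-state error for one step.

    t, y : time and output samples recorded after the reference changes
    y0   : output level before the step; ref : new reference value
    band : settling tolerance as a fraction of the reference
    """
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    step = ref - y0
    # Largest excursion past the reference, measured in the direction of the step.
    excursion = np.max(np.sign(step) * (y - ref))
    overshoot_pct = max(0.0, excursion) / abs(step) * 100.0

    outside = np.abs(y - ref) > band * abs(ref)
    if outside[-1]:
        settling_time = None  # never settles within the recorded window
    elif not outside.any():
        settling_time = 0.0
    else:
        last_outside = np.nonzero(outside)[0][-1]
        settling_time = t[last_outside + 1] - t[0]

    return {
        "overshoot_pct": float(overshoot_pct),
        "settling_time": settling_time,
        "steady_state_error": float(abs(ref - y[-1])),
    }
```

For an upward step from 360 V to 440 V, the function would be called with `y0=360.0, ref=440.0`; for the return step the two values are swapped and the overshoot is then measured below the reference.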

6.3. Case 3: Supply Voltage Variations

Supply voltage variations are a common and challenging disturbance in power electronic systems, particularly in industrial and renewable energy applications where input fluctuations can occur due to grid instability, dynamic loads, or intermittent energy sources. The ability to maintain stable output performance in the face of such disturbances is critical for ensuring reliable operation and protecting downstream components. A robust control system must effectively counteract these variations to provide consistent voltage regulation, safeguard connected equipment, and enhance the overall system’s reliability and efficiency.

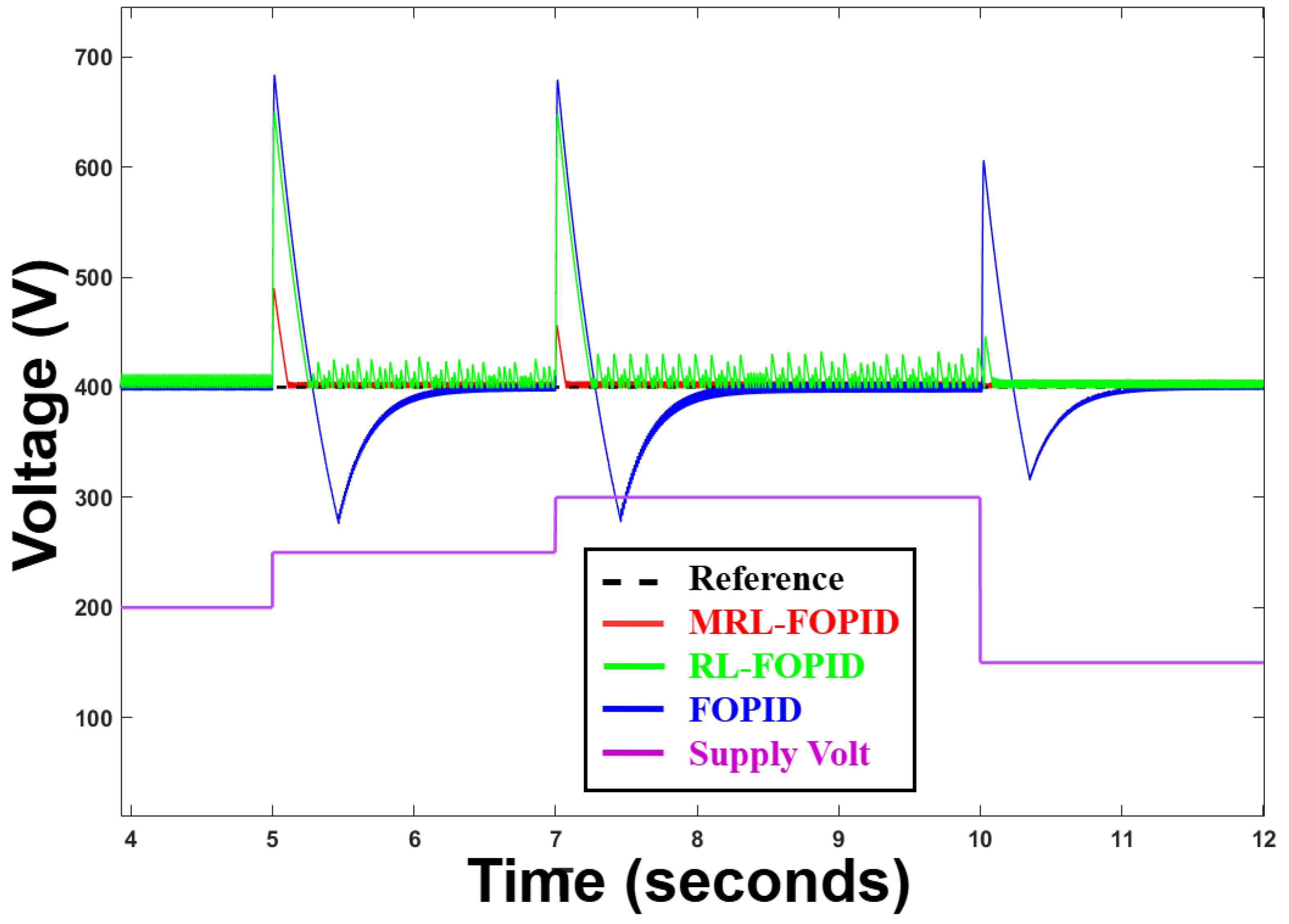

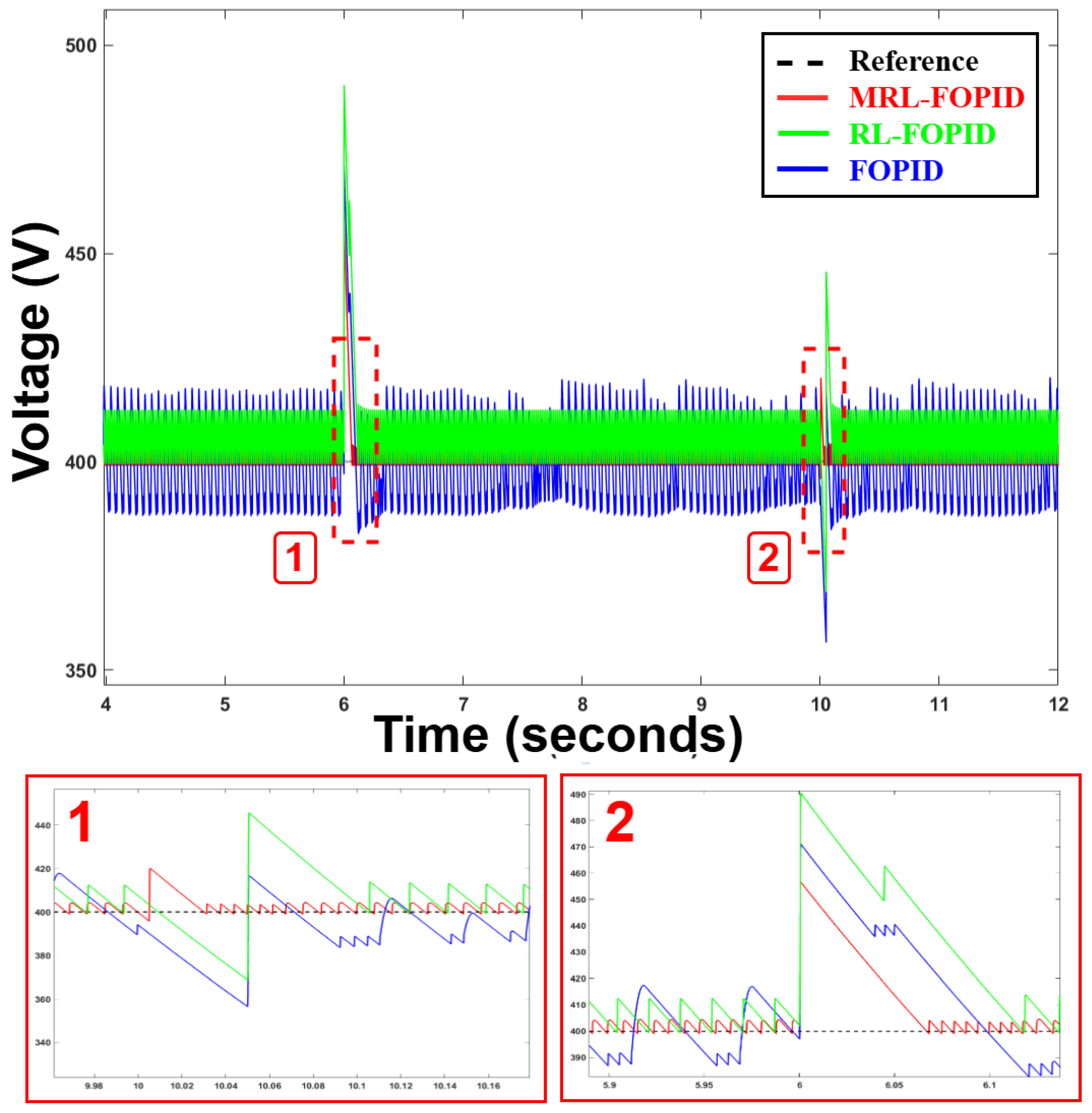

Figure 16 illustrates the performance of the proposed MRL-FOPID controller under supply voltage variations, highlighting its capability to handle dynamic changes in the input voltage. The controller’s ability to mitigate the effects of these fluctuations demonstrates its robustness, ensuring the output voltage remains closely aligned with the reference value, even during significant disturbances. This characteristic is vital for applications such as electric vehicle charging stations, renewable energy systems, and industrial converters, where steady and reliable performance is a fundamental requirement.

This result showcases the robustness of the proposed controller, which effectively compensates for supply voltage disturbances through its adaptive mechanism. It ensures stable performance even under extreme variations, maintaining a consistent output voltage and quickly recovering from fluctuations. The controller’s ability to overcome such disturbances while maintaining tight regulation highlights its suitability for applications that demand high performance and reliability, even in dynamic and unpredictable environments.

6.4. Case 4: Parametric Variations

Maintaining consistent output performance despite such disturbances is essential for reliable operation and for protecting downstream components. To provide consistent voltage control, protect connected equipment, and improve overall system reliability and efficiency, a robust control system must properly offset these fluctuations. The performance of the proposed controller under supply voltage changes can be clearly observed in Figure 16. Two sudden step changes are applied to the system in the range of 300 V to 350 V, with the controller set point at 400 V. The proposed robust adaptive controller shows significant robustness in this case, with minimal deviation from the reference signal and low overshoot or undershoot. This case clearly demonstrates the strength of the method in industrial applications, where the controller must adapt to unpredicted scenarios.

Figure 17 illustrates the performance of the MRL-FOPID controller under dynamic load variations, highlighting its robustness in handling significant parametric changes in the system. The load resistance is varied across different time intervals, with clear transitions from 5 Ω to 10 Ω, 10 Ω to 1 Ω, and 1 Ω to 50 Ω. These changes simulate real-world scenarios where load conditions fluctuate due to varying operational demands. The controller maintains stable output voltage throughout these variations, closely tracking the reference value of 400 V. During the transition from 5 Ω to 10 Ω, the controller achieves smooth adjustment with minimal overshoot. Similarly, when the load changes drastically from 10 Ω to 1 Ω, the controller swiftly compensates for the increased demand without significant deviation. The final transition from 1 Ω to 50 Ω demonstrates the controller’s ability to handle abrupt load reductions, quickly stabilizing the output voltage despite the sharp change in system dynamics. These results emphasize the robustness and adaptability of the MRL-FOPID controller in managing dynamic load and parametric variations, making it a suitable solution for applications requiring high reliability and performance under fluctuating conditions, such as renewable energy systems, industrial power supplies, and electric vehicle charging infrastructure.
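To give a sense of how wide this load sweep is, the short calculation below evaluates the steady-state load current I = V/R and power P = V²/R at each resistance value. It assumes ideal regulation at exactly 400 V and a purely resistive load, which is a simplification made here for illustration; the function name is hypothetical.

```python
def load_operating_point(v_out, r_load):
    """Steady-state current (A) and power (W) for an ideal resistive load."""
    current = v_out / r_load
    power = v_out * current
    return current, power


if __name__ == "__main__":
    for r_load in (5.0, 10.0, 1.0, 50.0):
        current, power = load_operating_point(400.0, r_load)
        print(f"R = {r_load:5.1f} ohm -> I = {current:6.1f} A, P = {power / 1e3:6.1f} kW")
```

Under these assumptions the four load levels correspond to 32 kW, 16 kW, 160 kW, and 3.2 kW, so the final transition from 1 Ω to 50 Ω represents a fifty-fold reduction in demanded power.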

The controller’s ability to manage these transitions with minimal current overshoot or oscillations underscores its robustness and suitability for battery management applications.

6.5. Case 5: Noise Impact

In real-world scenarios, electronic systems are often subjected to noise disturbances, which can originate from environmental factors, switching devices, or nearby equipment. These disturbances can disrupt the stability and accuracy of control systems, making noise rejection a critical feature for robust controller design. The ability to maintain stable and accurate output voltage under noise injection demonstrates a controller’s effectiveness in minimizing the impact of such external disturbances, ensuring reliable performance in practical applications.

Figure 18 presents the performance of the MRL-FOPID controller under noise injection conditions, with two levels of variance noise introduced into the system: a high variance noise (3 variance). The black line represents the reference voltage of 400 V, while the red line indicates the controller’s output response. The controller demonstrates exceptional robustness by maintaining accurate voltage tracking despite the injected noise. For the 3-variance noise phase, the output voltage exhibits negligible fluctuations around the reference value, indicating the controller’s high disturbance rejection capability. This result emphasizes the MRL-FOPID controller’s ability to suppress the effects of noise on system dynamics, making it suitable for deployment in noisy environments such as industrial facilities, renewable energy systems, and electric vehicle charging stations, where external disturbances are inevitable. The controller’s robustness ensures uninterrupted and precise operation, even under challenging conditions.

To further validate the robustness and applicability of the proposed MARL-FOPID controller in EV contexts, the next section presents a set of experiments integrating a realistic battery load into the DAB converter system.

6.6. Battery Management System Integration and Validation

DAB converters are widely used for bidirectional power exchange between batteries and DC buses in EV chargers and onboard systems. Unlike fixed resistive loads, battery systems introduce nonlinear load characteristics due to their internal electrochemical dynamics, state-of-charge (SOC)-dependent voltage behavior, and exponential discharge regions. The battery model also has distinct voltage behavior in its nominal and exponential zones, which significantly affects converter dynamics and control response. The goal of this section is to evaluate the controller’s performance in stabilizing the DAB converter output while interacting with a nonlinear battery load under various charging scenarios. Key performance indicators include output voltage tracking, disturbance rejection, and control adaptability across changing SOC conditions, which together indicate the controller’s suitability for real-world BMS-integrated EV systems. The characteristics of the battery used for this study are shown in Table 6.

6.6.1. Case 1: Output Regulation in BMS

To evaluate the performance of the proposed MARL-FOPID controller under realistic operating conditions, a nonlinear lithium-ion battery was introduced as the load on the Dual Active Bridge (DAB) converter. The controller was tasked with regulating the output voltage of the DAB converter to 450 V during the battery charging process.

Figure 19 illustrates the voltage tracking performance during this test. The red line represents the converter output voltage, while the black dashed line marks the constant 450 V reference. Initially, the controller maintains a gradual ramp-up toward the target voltage while compensating for battery-induced nonlinearity. A sharp transient occurs mid-sequence due to dynamic SOC adjustment and internal resistance shifts within the battery model. Despite this disturbance, the controller rapidly restores the voltage and continues to maintain tracking, though with increased oscillatory activity as the system reaches higher SOC levels. These results confirm the robustness and adaptability of the proposed controller in maintaining voltage regulation in the presence of complex, nonlinear battery dynamics—demonstrating its suitability for real-world battery management and EV charging applications.

6.6.2. Case 2: SOC-Regulated Charging/Discharging Test

To extend the BMS-oriented validation of the proposed MARL-FOPID controller, a dynamic SOC regulation case was designed. In this scenario (Figure 20), the controller is tasked not only with maintaining output voltage but also with ensuring that the battery’s SOC remains within a defined safety window of 90–100%. The system operates in two modes: when the SOC drops to 90%, the controller initiates charging via the DAB converter; conversely, when SOC reaches 100%, the controller switches to discharge mode to supply energy to the load. This closed-loop SOC hysteresis control mimics practical EV battery operation and ensures the battery stays within optimal limits for health and longevity.
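The SOC hysteresis logic described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function names `next_mode` and `simulate`, the fixed SOC change per step, and the initial conditions are assumptions, and only the 90% and 100% thresholds come from the text.

```python
def next_mode(soc, mode, low=90.0, high=100.0):
    """Return the operating mode for the current SOC (in percent).

    Hysteresis: switch to charging at the lower threshold, switch to
    discharging at the upper threshold, otherwise keep the present mode.
    """
    if soc <= low:
        return "charge"
    if soc >= high:
        return "discharge"
    return mode


def simulate(soc0=95.0, mode="discharge", steps=200, rate=0.5):
    """Toy SOC trajectory with a fixed SOC change per step.

    Assumption: `rate` (percent per step) stands in for the battery
    current; a real model would integrate the measured current instead.
    """
    history = []
    soc = soc0
    for _ in range(steps):
        mode = next_mode(soc, mode)
        soc += rate if mode == "charge" else -rate
        history.append((soc, mode))
    return history


history = simulate()
soc_min = min(soc for soc, _ in history)   # lowest SOC reached
soc_max = max(soc for soc, _ in history)   # highest SOC reached
```

Because the mode changes only at the two thresholds, the SOC in this toy run cycles between 90% and 100% without chattering around a single switching point, which is the motivation for using a hysteresis band rather than one threshold.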

The controller successfully maintains the battery’s SOC within the predefined 90–100% range by alternating between charging and discharging modes (Figure 20a). The SOC rises to 100%, at which point the controller initiates discharging, and when it drops to 90%, charging resumes. The corresponding current waveform clearly shows the mode transitions, with positive current indicating charging and negative current indicating discharge (Figure 20b). This confirms the controller’s effectiveness in enforcing SOC-based operation boundaries and regulating energy flow dynamically in a bidirectional DAB-battery system.

The MRL-FOPID controller demonstrates exceptional performance for the DAB converter by maintaining precise voltage regulation and robust tracking under various dynamic conditions, including reference changes, supply voltage fluctuations, load variations, and noise disturbances. The controller’s ability to suppress disturbances, adapt to abrupt parameter changes, and ensure steady-state accuracy highlights its suitability for real-world applications such as renewable energy systems, electric vehicle charging, and industrial power converters. Overall, the results validate the controller’s effectiveness in achieving reliable and high-performance operation, making it a robust solution for dynamic and demanding power electronic environments.

6.7. Hardware Configuration and Training Setup

The real-time implementation and validation of the proposed controller were carried out on the Typhoon HIL 606 platform, connected to the SCADA environment via an analog I/O breakout board. The system operated at a sampling time of 25 ns, allowing high-fidelity simulation of power converter dynamics and precise control signal handling. The control algorithm was developed and trained in MATLAB and then converted to C code for integration into the SCADA environment.

Table 7 summarizes the training hyperparameters and execution details for the DDPG agents used in the proposed multi-agent FOPID controller. Each agent independently tunes one of the controller gains under RL settings.

In early trials, multi-agent convergence issues were observed, including slow adaptation and occasional divergence of learning agents. This was mitigated by employing GWO-based initialization of each agent’s parameters, which helped stabilize training and ensure reliable convergence throughout the experimental process.
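To make the idea of GWO-based initialization concrete, the sketch below implements the standard Grey Wolf Optimizer position update in plain Python and uses it to pick a starting gain vector. The source does not describe the GWO configuration or exactly which agent parameters were initialized, so everything specific here is an assumption: the search is taken to run over a three-element gain vector, `placeholder_cost` is a hypothetical quadratic standing in for a closed-loop performance index, and the bounds, population size, and iteration count are illustrative only.

```python
import random


def gwo(cost, bounds, n_wolves=20, n_iter=100, seed=0):
    """Minimize `cost` over box `bounds` with a basic Grey Wolf Optimizer."""
    rng = random.Random(seed)
    wolves = [[rng.uniform(lo, hi) for lo, hi in bounds]
              for _ in range(n_wolves)]
    best, best_cost = None, float("inf")
    for t in range(n_iter):
        # The three best wolves (alpha, beta, delta) lead the pack.
        leaders = [w[:] for w in sorted(wolves, key=cost)[:3]]
        if cost(leaders[0]) < best_cost:
            best, best_cost = leaders[0][:], cost(leaders[0])
        a = 2.0 * (1.0 - t / n_iter)   # decreases from 2 toward 0
        for w in wolves:
            for d, (lo, hi) in enumerate(bounds):
                estimate = 0.0
                for leader in leaders:
                    A = a * (2.0 * rng.random() - 1.0)
                    C = 2.0 * rng.random()
                    estimate += leader[d] - A * abs(C * leader[d] - w[d])
                # Average the three leader-guided moves, clip to bounds.
                w[d] = min(max(estimate / 3.0, lo), hi)
    final = min(wolves, key=cost)
    if cost(final) < best_cost:
        best, best_cost = final[:], cost(final)
    return best, best_cost


def placeholder_cost(gains):
    """Hypothetical cost: squared distance to made-up 'good' gains."""
    target = [2.0, 0.5, 0.1]   # illustrative values, not from the paper
    return sum((g - t) ** 2 for g, t in zip(gains, target))


bounds = [(0.0, 10.0), (0.0, 5.0), (0.0, 1.0)]   # assumed search box
initial_gains, initial_cost = gwo(placeholder_cost, bounds)
```

In a setup along these lines, `initial_gains` would seed each learning agent's starting point so that exploration begins near a reasonable operating region; in practice the placeholder cost would be replaced by a simulated tracking-error metric for the converter.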