1. Introduction

In 2021, roughly 77% of the energy produced within the United States came from fossil fuels such as coal, oil, and natural gas [1,2]. While the pace of implementation varies from region to region, the overall trend in recent years indicates a shift as the world moves toward adopting renewable sources [3]. Multiple reasons exist for this shift in energy production, such as climate change and unsustainable fuel extraction [4,5]. While renewable energy offers an alternative to destructive fossil fuels, its widespread adoption is hindered by some inherent challenges.

As efforts are made to combat climate change, wind turbines have helped alleviate the problem. In 2022, wind power accounted for approximately 7.33% of electricity generation worldwide [6] and remains the leading non-hydro renewable technology, generating over 2100 TWh [7]. With the increasing number of wind turbines globally, the maintenance and upkeep of these systems have introduced a large capital barrier to investment. For example, in addition to wind turbine blade replacement costing up to USD 200k, further losses are incurred during blade inspection and replacement, as the turbine needs to be halted, thus hindering power production [8]. This downtime results in average losses ranging from USD 800 to USD 1600, depending on the wind speed in the area [9]. These factors highlight the growing need for improved inspection and preventative maintenance methods.

Crew safety is another factor driving investment in improved maintenance and inspection technology, as traditional methods can be both dangerous and time-consuming. Commercial wind turbines in the United States average around 280 feet in height, with each blade weighing 35 tons [10]. Thus, it can be dangerous for laborers to be exposed to such heights, as well as the elements, during the inspection of the turbine blades. Furthermore, they face health issues such as motion sickness on the voyage to offshore wind sites [11,12]. Human inspectors are also prone to misdiagnosis and may miss hard-to-see imperfections. With all these issues in mind, a solution must be able to detect faults and damage at early stages, reduce the overall cost involved with inspection, and improve the accuracy of fault diagnosis.

Current wind turbine fault diagnosis and identification systems fall roughly into two categories: visual-based inspection systems and sensor-based Supervisory Control and Data Acquisition (SCADA) modeling systems. Prior to the explosion of deep learning research and the application of deep learning to computer vision, traditional machine learning methods were applied to the large data streams fed by SCADA systems. SCADA systems are commonly integrated into wind turbines to monitor multiple sensors, including internal temperatures, wind speed, and power generation. With this information, researchers have deployed several algorithms and optimization methods to model and predict the failure of wind turbine components. In a study by Liu et al. [13], an innovative approach was introduced employing extreme gradient boosting to establish a predicted normal temperature for gearbox oil. Subsequently, a weighted average was utilized to quantify the deviation between the predicted and measured temperatures. The authors demonstrated that with the proposed system alongside a dynamic fault threshold, errors could be identified with advance notice of 4.25 h for generators and 2.75 h for gearboxes [13].
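The residual-monitoring idea described above can be illustrated with a minimal sketch. It does not reproduce the method of [13]: the predicted temperatures are passed in directly as a stand-in for the gradient boosting model, the weighted average is assumed to be an exponentially weighted moving average, and the dynamic threshold is assumed to be the running mean of past smoothed residuals plus `k` standard deviations. The function name and the parameters `alpha`, `k`, and `warmup` are hypothetical.

```python
def ewma_residual_alarms(measured, predicted, alpha=0.3, k=3.0, warmup=5):
    """Flag samples whose smoothed residual exceeds a dynamic threshold.

    measured, predicted: equal-length sequences of temperatures.
    alpha: smoothing weight of the exponentially weighted average (assumed).
    k: number of standard deviations above the mean that triggers an alarm.
    warmup: samples collected before any alarm can be raised.
    """
    alarms = []
    smoothed = 0.0
    history = []  # smoothed residuals observed while no alarm was active

    for m, p in zip(measured, predicted):
        residual = m - p
        # Weighted average of the deviation between measurement and prediction.
        smoothed = alpha * residual + (1.0 - alpha) * smoothed

        is_alarm = False
        if len(history) >= warmup:
            mean = sum(history) / len(history)
            var = sum((h - mean) ** 2 for h in history) / len(history)
            # The threshold adapts as more normal-operation data arrives.
            threshold = mean + k * var ** 0.5
            is_alarm = smoothed > threshold

        alarms.append(is_alarm)
        if not is_alarm:
            # Only normal samples update the baseline statistics.
            history.append(smoothed)

    return alarms


# Usage: 20 samples of normal operation, then a sustained 5 degree rise.
predicted = [60.0] * 25
measured = [60.0 + (0.5 if i % 2 == 0 else -0.5) for i in range(20)] + [65.0] * 5
alarms = ewma_residual_alarms(measured, predicted)
```

Excluding alarmed samples from the baseline keeps a developing fault from inflating the threshold. This is one possible design choice rather than a detail taken from the cited study.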

Recognizing the intricate relationships inherent in SCADA data streams, Khan and Byun proposed a stacking ensemble classifier that leverages the strengths of AdaBoost, K-nearest neighbors, and logistic regression [14]. Their approach yielded enhanced accuracy in anomaly detection within SCADA data. Similarly, a comprehensive comparison of feature selection methods, architectures, and hyperparameter optimization was conducted in [15]. The findings indicated that employing K-nearest neighbors with a bagging regressor and Principal Component Analysis (PCA) for feature extraction enabled faults to be detected four weeks in advance [15]. Further exploiting the time-series data from SCADA systems, an approach based on nonlinear auto-regressive neural networks was deployed. Once the signals were denoised with wavelet transforms, this approach was shown to be a possible early warning solution for wind turbine maintenance [16]. While leveraging SCADA data has proven effective for early warning systems, it is noteworthy that such data inherently suffer from imbalance [17]. Velandia-Cardenas et al. investigated the imbalanced data from real SCADA systems and proposed preprocessing techniques along with RUSBoost, which increased overall accuracy [17].
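To make the imbalance issue concrete, the sketch below shows random undersampling, the sampling step that RUSBoost applies before fitting each boosting round. It is only an illustration of that step under simple assumptions: it is not the preprocessing pipeline of [17], the boosting stage is omitted, and the function name and the class labels in the usage example are hypothetical.

```python
import random
from collections import defaultdict


def random_undersample(samples, labels, seed=0):
    """Balance a dataset by randomly discarding majority-class samples.

    Every class is reduced to the size of the smallest class.
    Returns the balanced samples and labels in shuffled order.
    """
    rng = random.Random(seed)

    # Group samples by class label.
    by_class = defaultdict(list)
    for x, y in zip(samples, labels):
        by_class[y].append(x)

    # The minority class sets the number of samples kept per class.
    n_min = min(len(xs) for xs in by_class.values())

    balanced = []
    for y, xs in by_class.items():
        for x in rng.sample(xs, n_min):  # sampling without replacement
            balanced.append((x, y))

    rng.shuffle(balanced)
    return [x for x, _ in balanced], [y for _, y in balanced]


# Usage: 95 normal records and 5 fault records, as is typical of SCADA logs.
samples = list(range(100))
labels = ["normal"] * 95 + ["fault"] * 5
xs, ys = random_undersample(samples, labels)
```

After undersampling, both classes contain five records, so a classifier can no longer reach high accuracy simply by predicting the majority class.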

With the breakthroughs in deep learning, deep neural networks have been applied to a variety of problems with increasing accuracy. In the area of computer vision, these architectures have been leveraged for tasks such as medical imaging analysis [18], image reconstruction and sharpening [19], crack detection in new building construction [20], and many more. Additionally, deep learning can be utilized for speech signal analysis, allowing for advancements in voice detection and control [21]. Another emerging sector making use of these algorithms is wearable technology. One such application utilized wearable sensors and a multi-layer perceptron to classify different postures [22].

The application of deep learning architectures to fault diagnosis and detection over traditional machine learning methodology has also increased in recent years. Lui et al. proposed a clustering and dimensionality reduction preprocessing technique prior to inputting data into a deep neural network [23]. Another novel approach, utilizing advances in deep learning, introduced an attention-based octave convolution. This approach reduces the computations of traditional convolutional layers by separating low- and high-frequency channels. By altering the ResNet50 architecture with this convolution, it was shown to provide an accuracy of 98% in wind turbine converter failure detection [24]. Finally, a controlled experiment over 11 years was conducted to investigate the suitability of SCADA-based monitoring for fault diagnosis. Murgia et al. developed a custom convolutional neural network (CNN) architecture and indicated that SCADA-based monitoring is a possible solution, allowing early warning of critical internal component failure [25].
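The computational saving attributed to octave convolution above can be estimated with a short calculation. The sketch below follows the cost accounting of the standard octave convolution formulation, not necessarily the attention-based variant in [24]. It assumes that the same fraction `alpha` of input and output channels is assigned to the low-frequency branch, that low-frequency feature maps are stored at half the spatial resolution, and that both cross-frequency paths are computed at the low resolution. The cost of any attention module is ignored, and the function name is hypothetical.

```python
def octave_conv_cost_ratio(alpha):
    """Multiply-accumulate cost of an octave convolution relative to a
    standard convolution with the same kernel size and channel counts.

    alpha: fraction of channels in the low-frequency branch (0 to 1).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")

    high = 1.0 - alpha  # fraction of high-frequency channels
    low = alpha         # fraction of low-frequency channels

    # High-to-high path runs at full spatial resolution.
    full_res_cost = high * high

    # High-to-low, low-to-high, and low-to-low paths run on maps with half
    # the height and half the width, i.e. one quarter of the positions.
    low_res_cost = (high * low + low * high + low * low) / 4.0

    return full_res_cost + low_res_cost


# Usage: with half of the channels in the low-frequency branch, the layer
# needs 43.75% of the multiply-accumulates of a standard convolution.
ratio = octave_conv_cost_ratio(0.5)
```

Under these assumptions the ratio simplifies to 1 − (3/4)·alpha·(2 − alpha), so the saving grows as more channels are moved to the low-frequency branch.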

While SCADA systems provide a solution to the early detection of critical internal failures through sensor analysis and prediction, the diagnosis of external faults remains a concern. In this case, a visual-based inspection and monitoring system provides a better solution. One approach made use of a CNN with pretrained weights transferred from ImageNet to form the feature extraction base. These deep features were then fed into an SVM for classification [26]. Similarly, Moreno et al. conducted a proof-of-concept simulation on the ability of a custom CNN architecture to classify wind turbine blade faults. In this case, the proposed model was trained on real-world turbine data, and a scaled 3D-printed blade with manufactured defects was used for verification. The approach achieved 81.25% accuracy, showing promising improvement in visual-based systems [27]. To address the low accuracy in current visual-based systems, Chen et al. proposed an attention-based CNN architecture that allowed feature maps to be re-weighted according to an attention algorithm. Through ablation experiments of this method with ResNet50 and VGG16 networks, it was concluded that VGG16 provided the best accuracy of 92.86% [28]. Another architecture was proposed in [29], where attention mechanisms were deployed together with Enhanced Asymmetric Convolutional (EAC) blocks, reducing the computational complexity of the model. This model outperformed other established architectures such as MobileNet and ShuffleNetV1 [29]. Leveraging the complementary strengths of RGB and thermal imaging, a comprehensive fault classification analysis of 35 diverse architectures was performed on small wind turbine blades [30].

Another emerging area of blade fault diagnosis research is the use of object detection models. An example is the modified You Only Look Once (YOLO) version 5 that was proposed in [31]. The attention module, loss function, and feature fusion were modified to create an improved small-defect detection algorithm that outperformed the base YOLOv5 by 4% [31]. Analogously, a modified YOLOv4 architecture was presented in [32]. The authors replaced the backbone with MobileNetV1, reducing computation and complexity. The proposed model was also pretrained on the PASCAL VOC dataset, a popular dataset for object detection and classification commonly used to benchmark computer vision models, allowing faster learning convergence [33]. While the detection speed increased, the model became marginally less accurate [32]. To extend the information that these models can provide, a two-stage approach for crack and contour detection, utilizing Haar-like features and a novel clustering algorithm, was investigated in [34]. This approach showcases the direction of current research and the breadth of information these novel deep learning algorithms can provide with sufficient data.

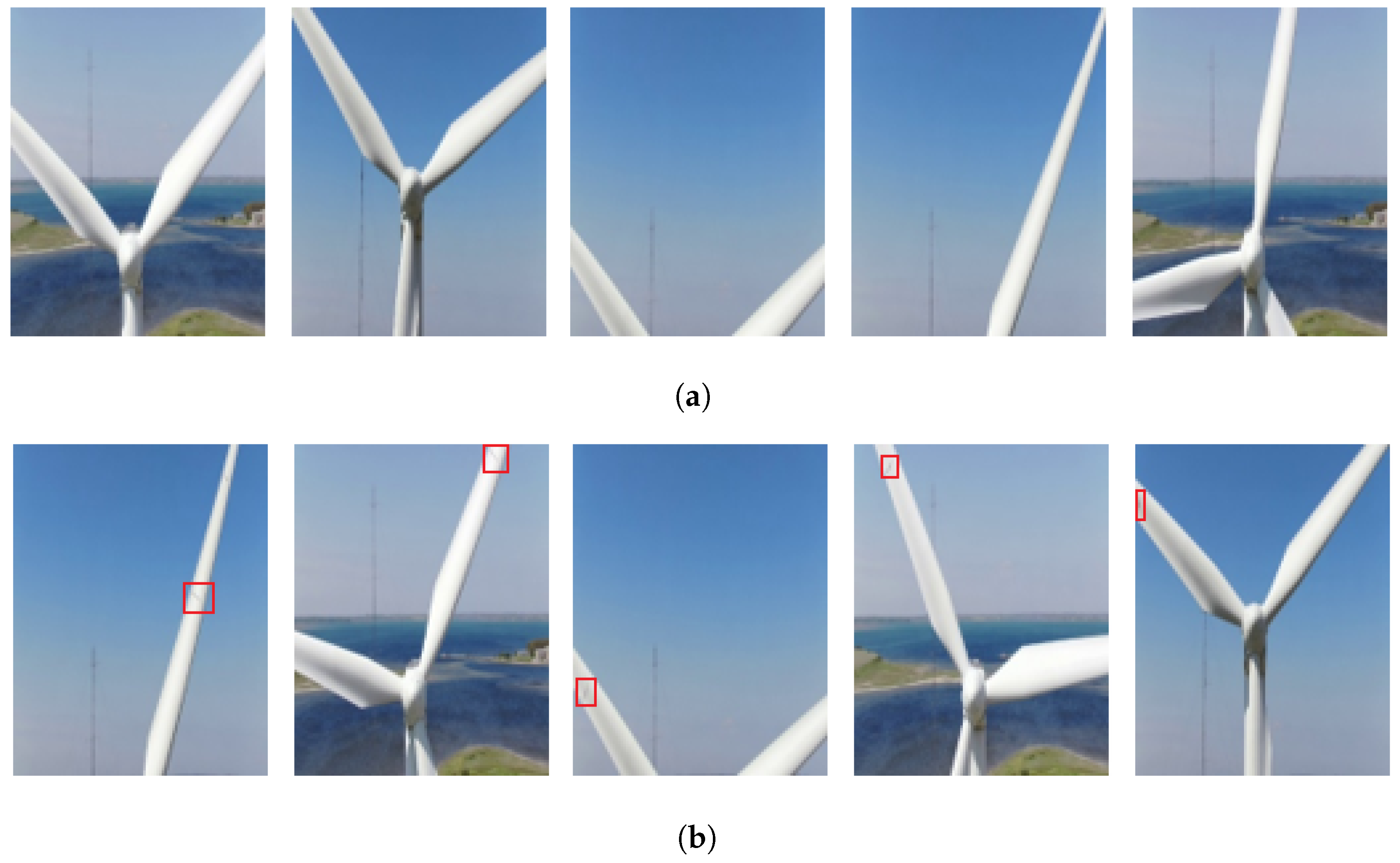

An important aspect of wind turbine fault analysis is the investigation of dataset creation and the inspection method. Due to the height and remote nature of wind turbine farms, drones are often deployed to gather sufficient data for training deep learning models. Moreover, through drone path-planning research, drones are now capable of flying autonomously to wind turbines to capture images of the blades. In this area, smaller-scale experiments have been conducted to verify the concept and implement the algorithms in a controlled environment [35,36].

Recent works have used drone inspection and machine learning to analyze wind turbine blade damage. Previous approaches to blade fault detection include the use of residual and wavelet-based neural networks, feature detection using Haar-like features, and SVM with fuzzy logic [37,38,39]. In China, an alternative approach was recently employed in which Unmanned Aerial Vehicles (UAVs) first capture images of wind turbine blades and a cascading classifier algorithm then identifies and locates blade cracks [34,40]. ResNet-50, pretrained with ImageNet weights and with custom fully connected top layers, has been used in other works to provide high test precision and recall in identifying small blade chips and cracks [41].

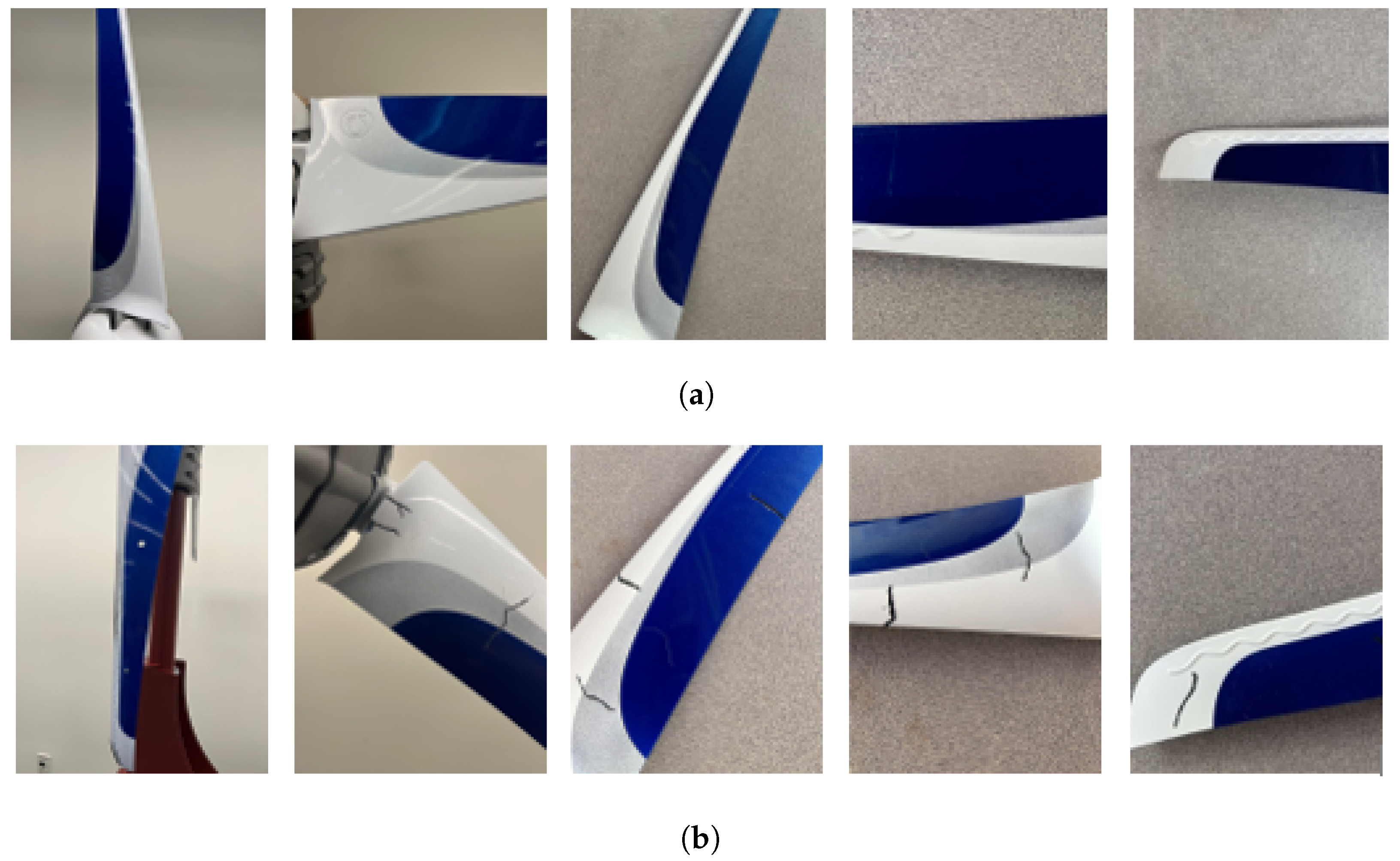

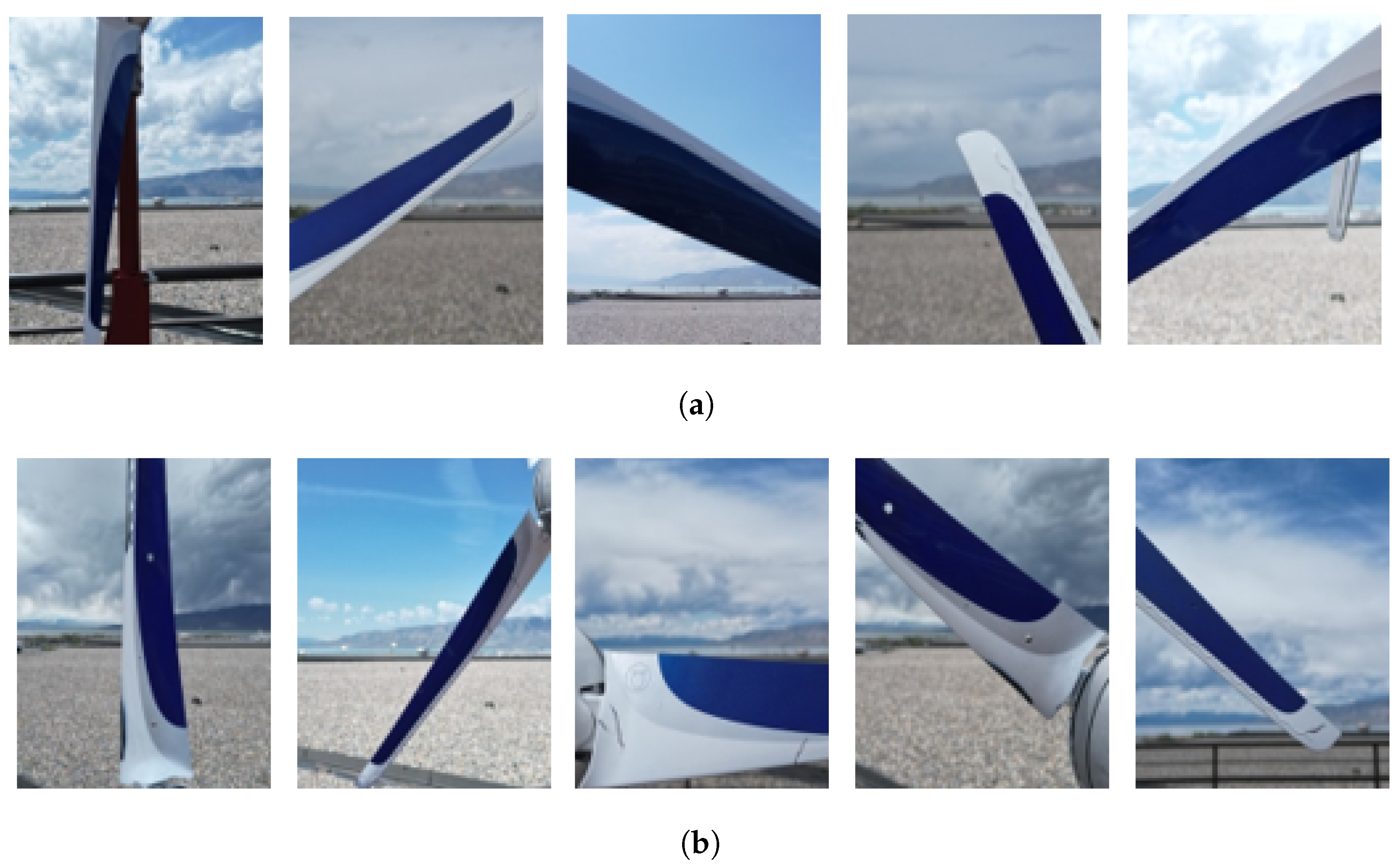

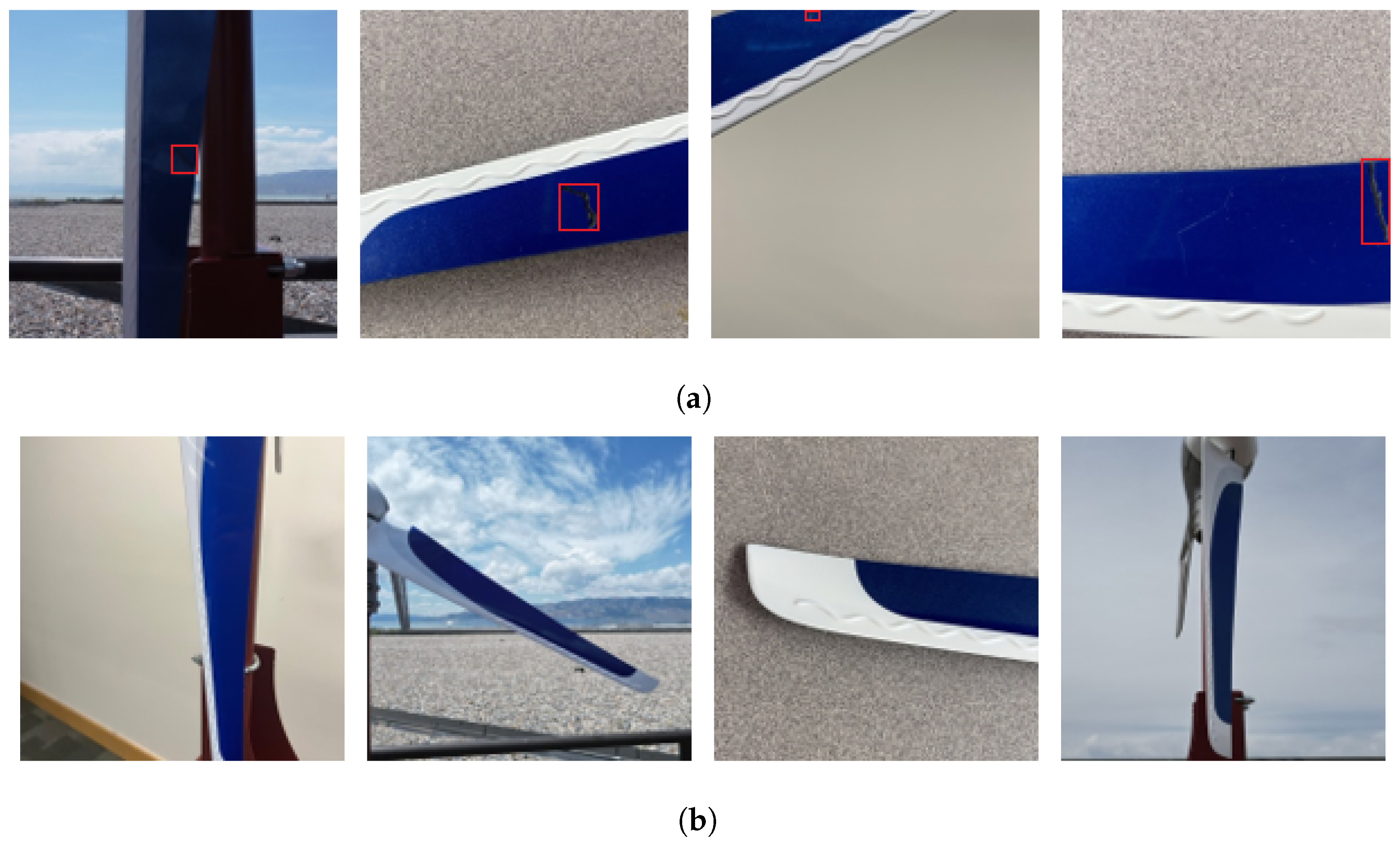

This research investigates the use of CNNs for the detection of faults within small wind turbine blade images. The CNN models are trained on a newly created dataset containing images of small wind turbine blades. This unique dataset contains multicolored images of wind turbine blades of the Primus AirMax turbine, rather than just white, monochromatic blades, and the damage on these blades includes cracks, holes, and edge erosion. These additional fault types are not included in the datasets utilized in other works in this area.

The main contributions of this research include the following:

The creation of a novel fault identification dataset named Combined Aerial and Indoor Small Wind Turbine Blade (CAI-SWTB), utilizing images of healthy and damaged blades on a Primus AirMax wind turbine with a power output range of 0– kW.

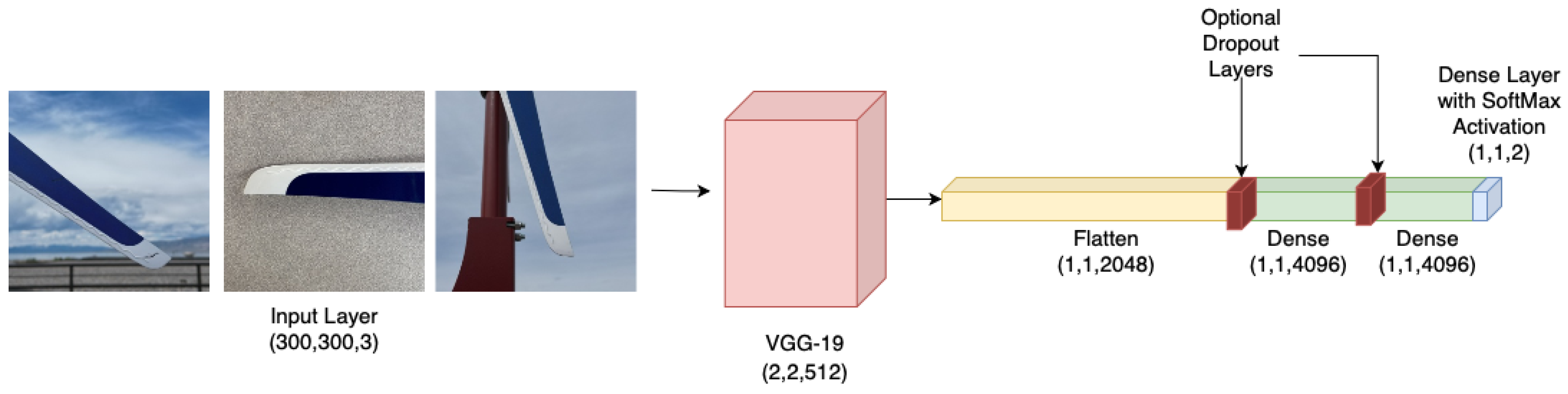

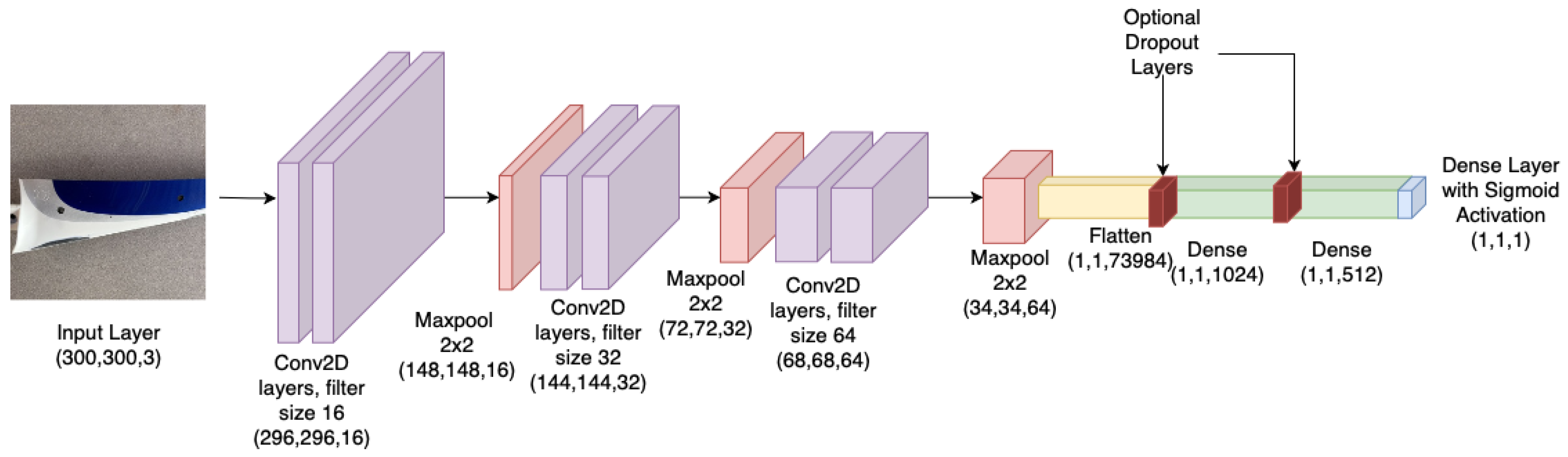

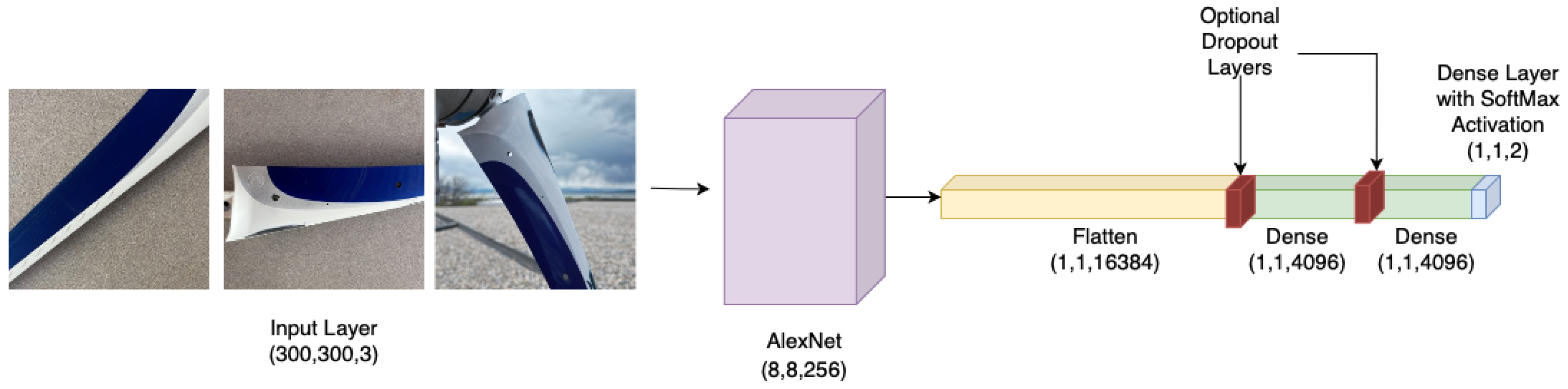

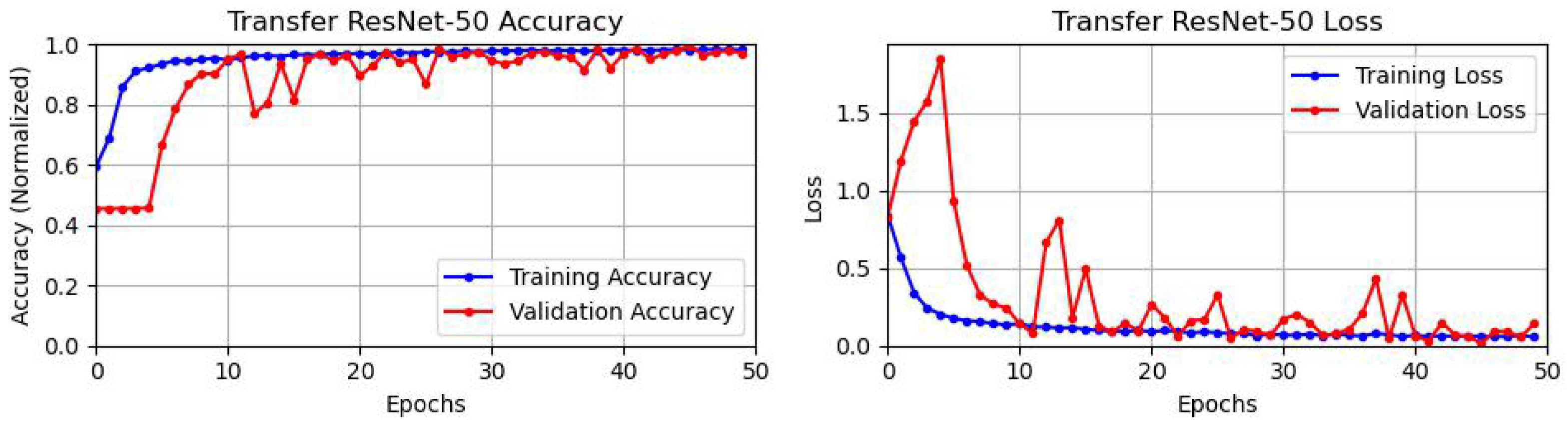

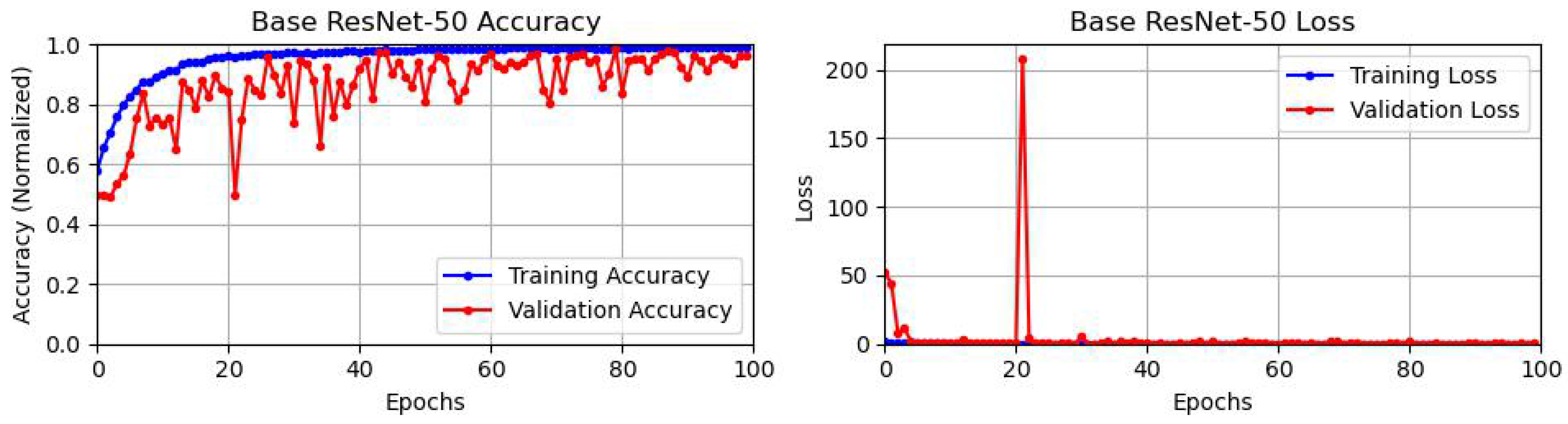

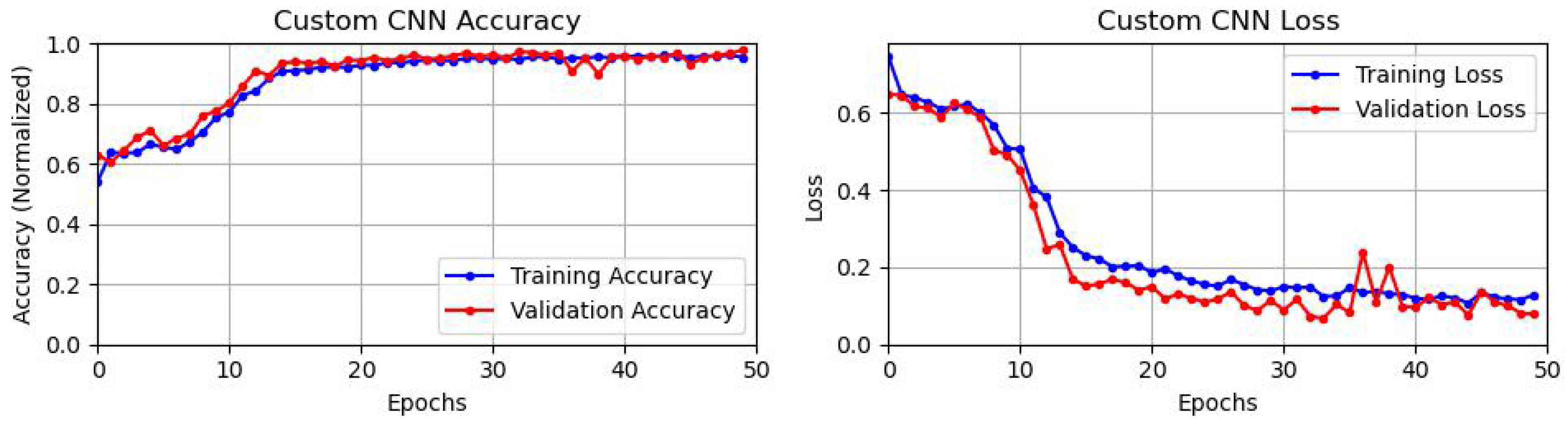

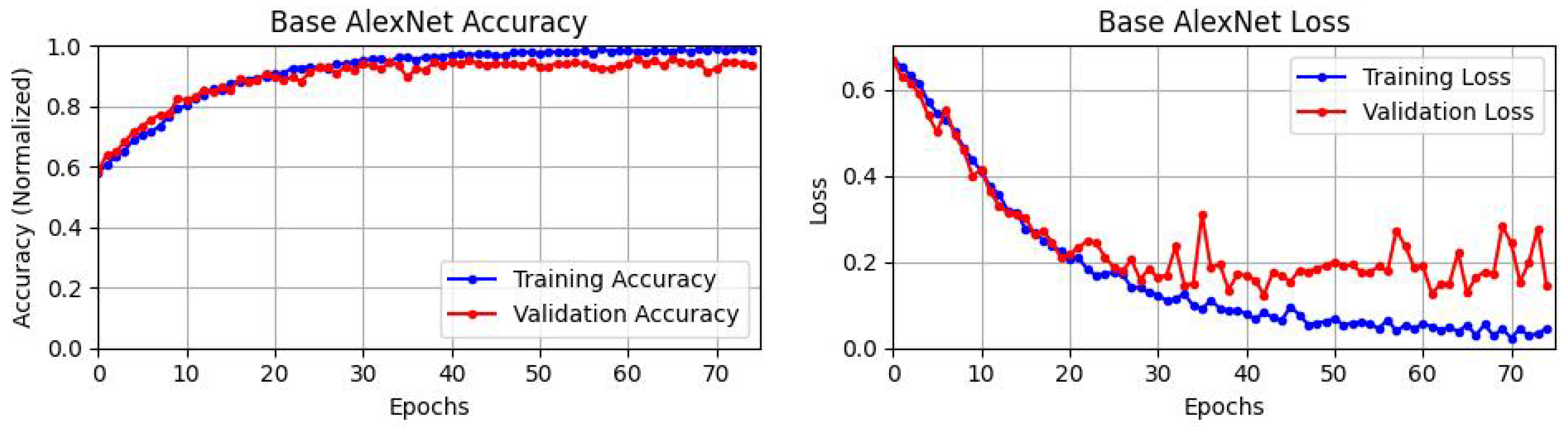

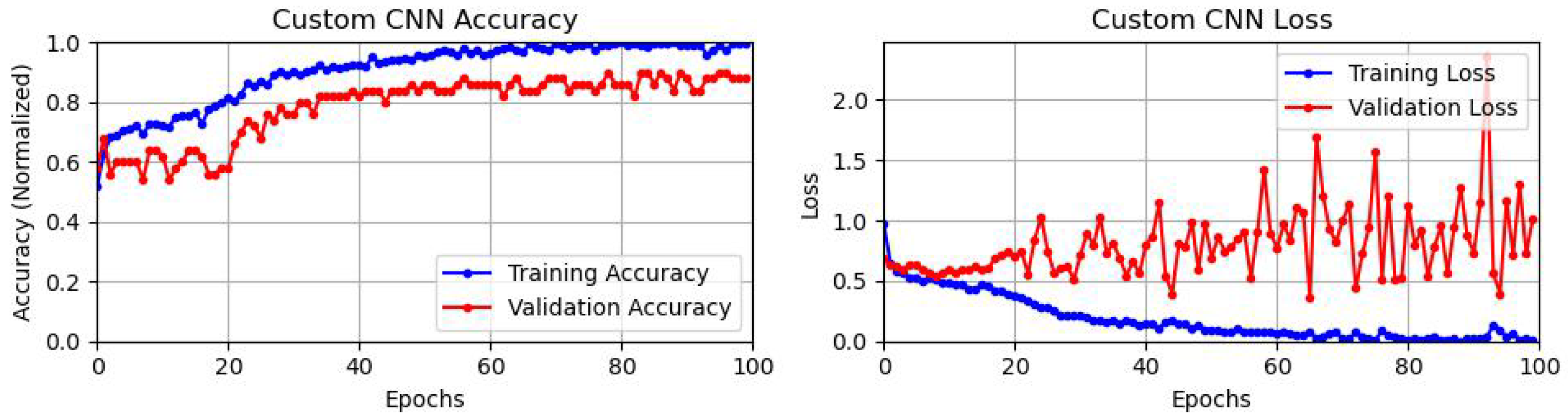

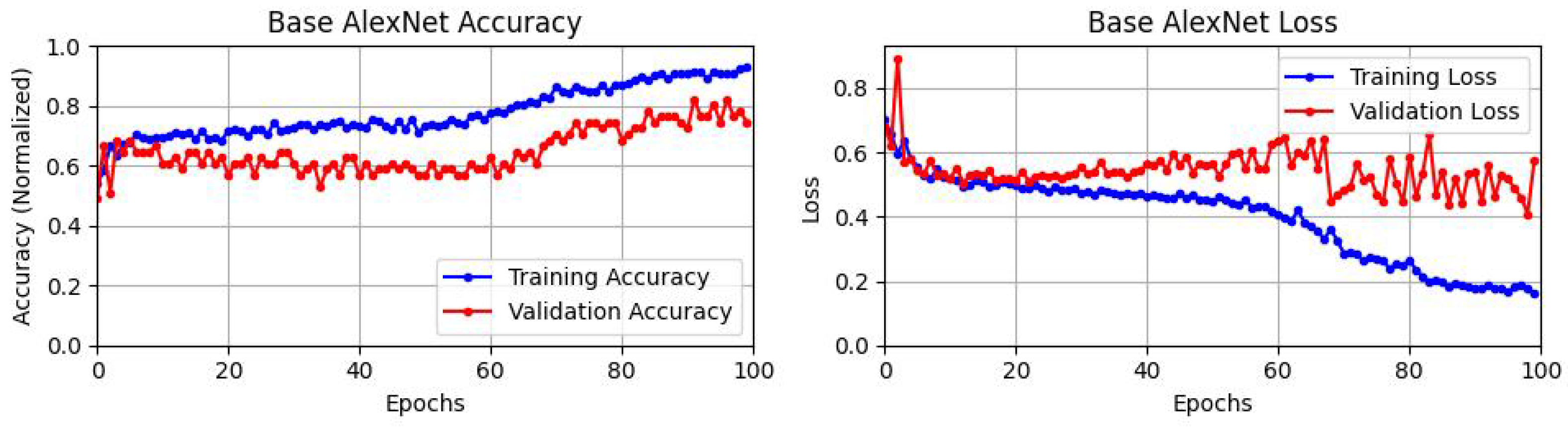

The development, training, and testing of a new custom CNN architecture and transfer learning models, making use of pretrained backbone networks to detect anomalies and damage on small wind turbine blades.

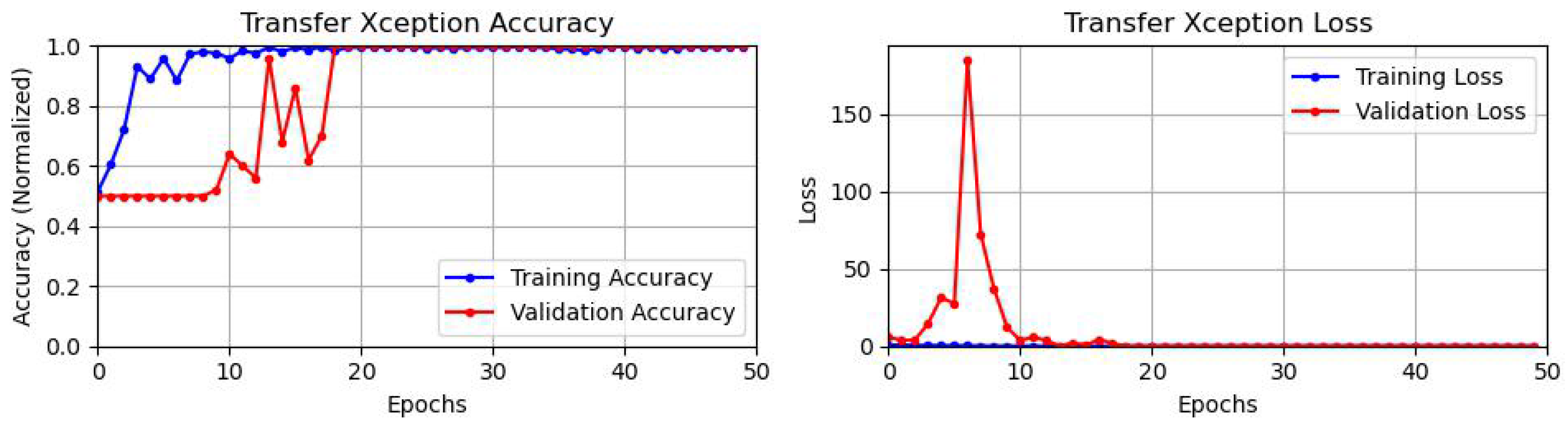

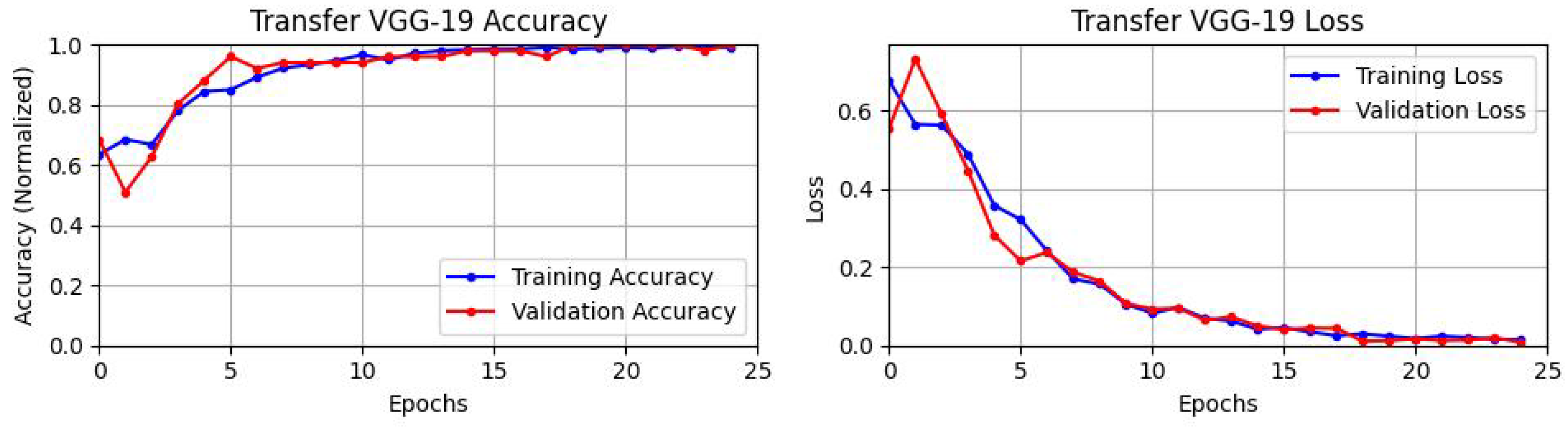

The investigation of transfer learning techniques in wind turbine fault classification.

Hyperparameter tuning and testing for optimal performance on the newly created CAI-SWTB dataset.

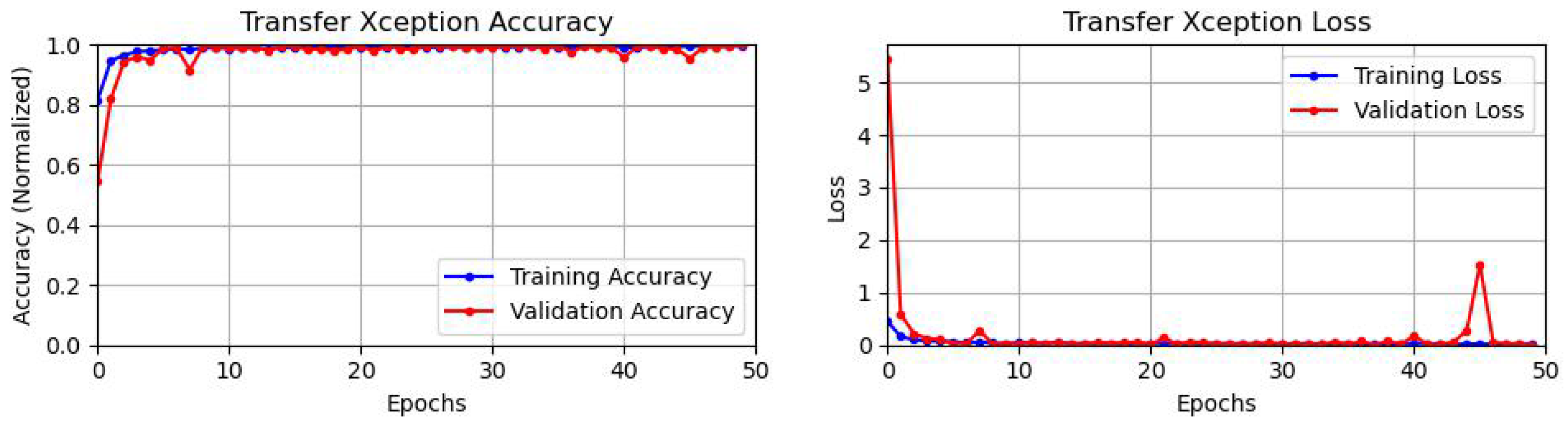

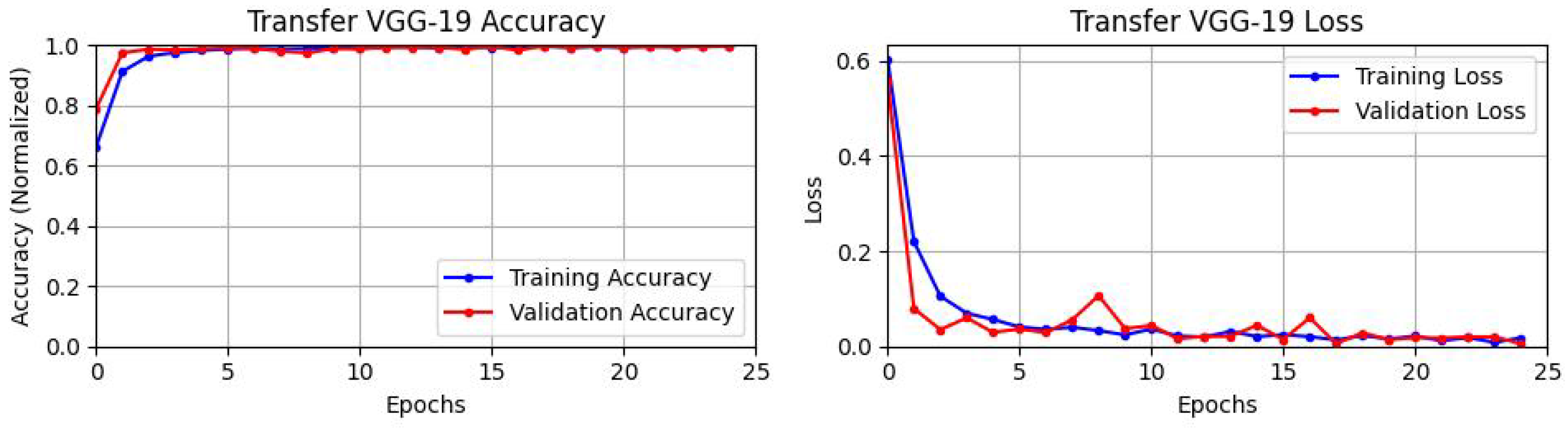

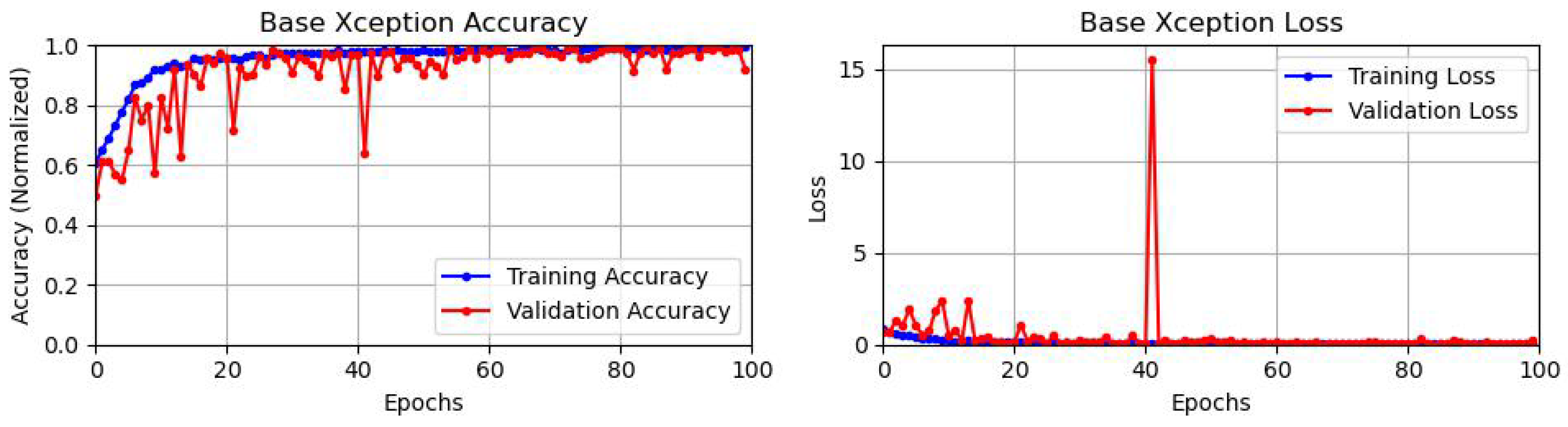

A comparison of Xception, ResNet-50, VGG-19, and AlexNet against the custom architecture and proposed transfer learning methods.

The rest of this paper is organized as follows. Section 2 discusses the deep learning architectures deployed in our research of anomaly detection. The newly created dataset, Combined Aerial and Indoor Small Wind Turbine Blade (CAI-SWTB), is detailed in Section 4, followed by simulations, results, and a discussion. Finally, some conclusions and suggestions for future work are presented in Section 5.