Detection of Pumping Unit in Complex Scenes by YOLOv7 with Switched Atrous Convolution

Abstract

1. Introduction

2. Related Work

3. Proposed Method

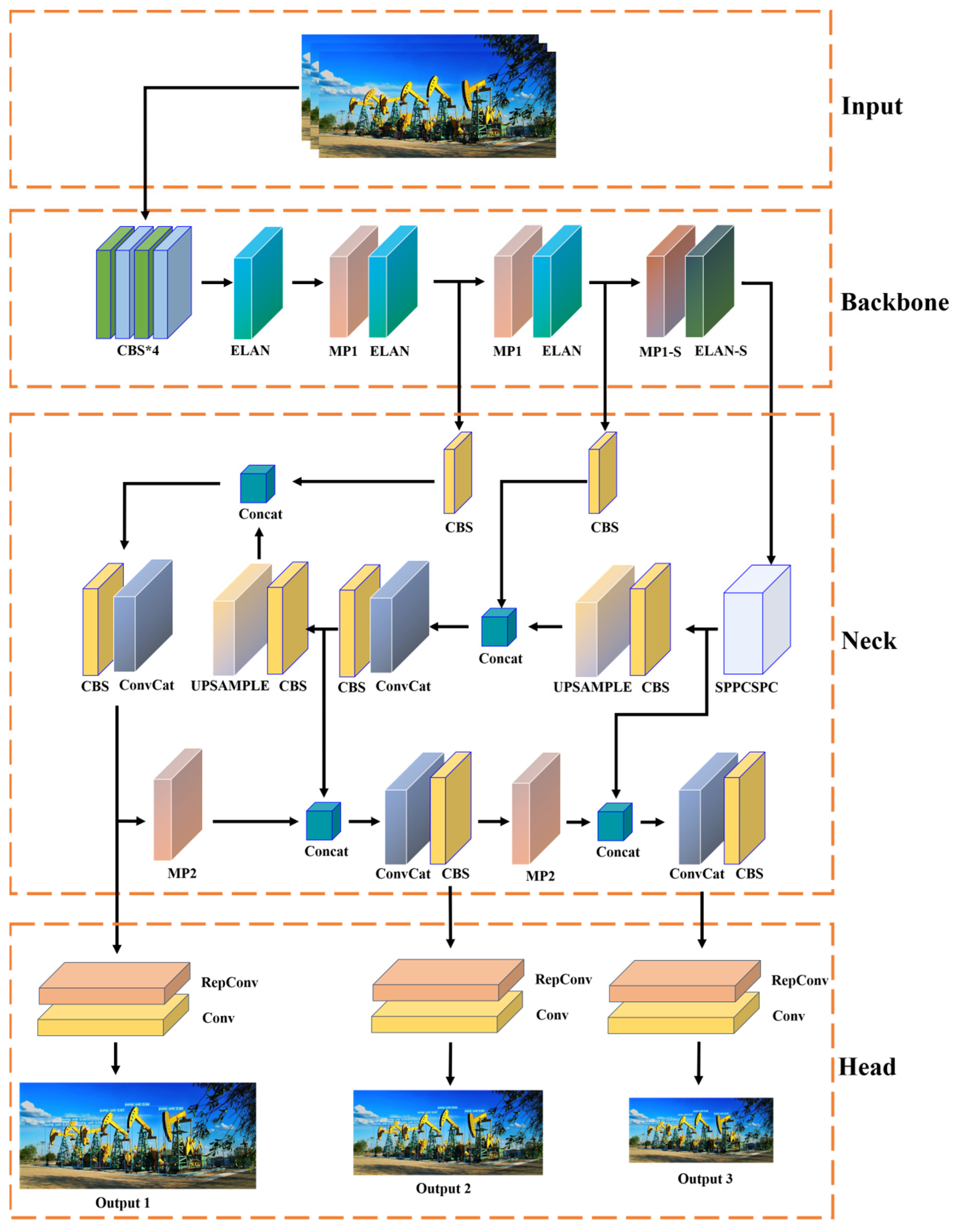

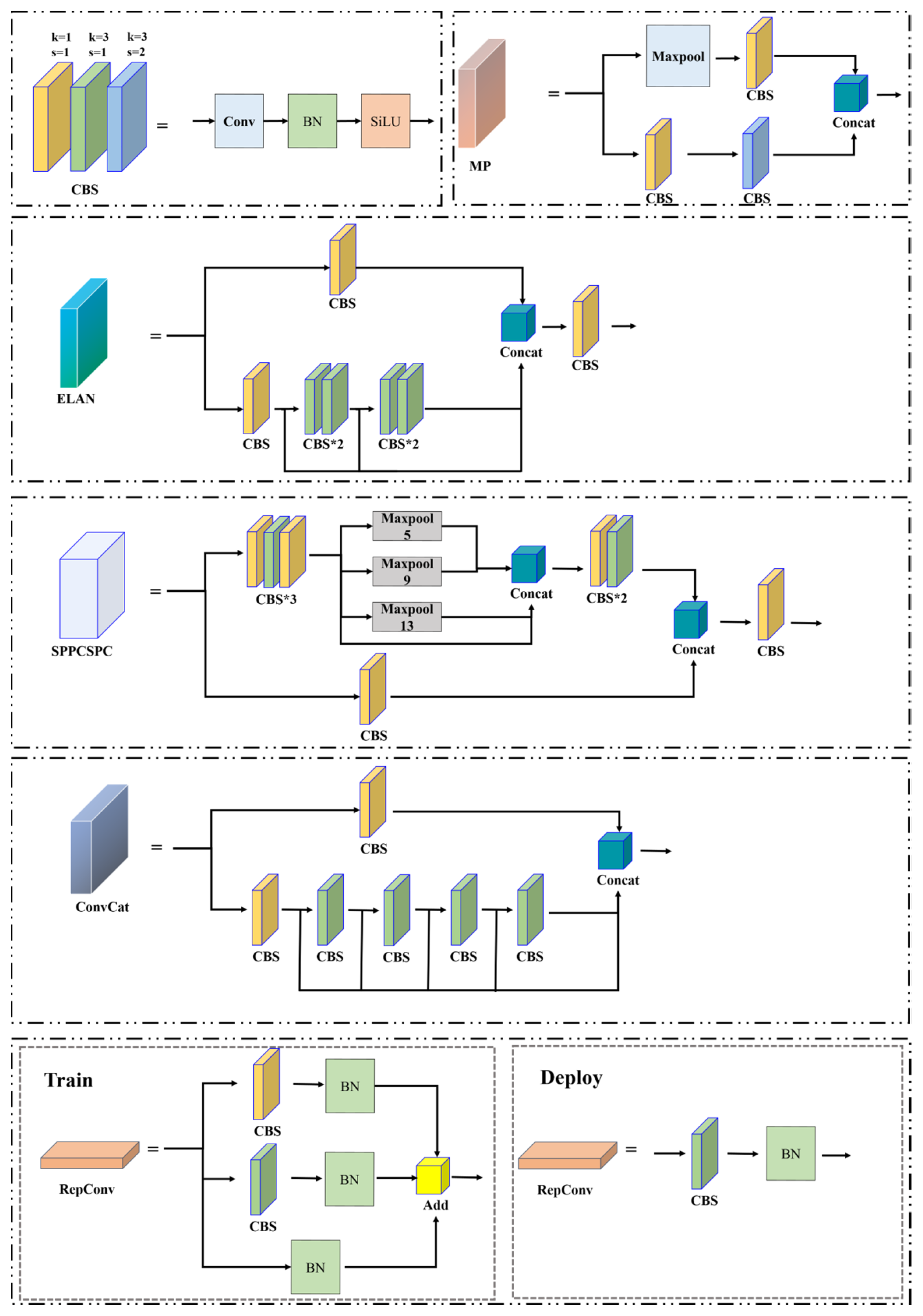

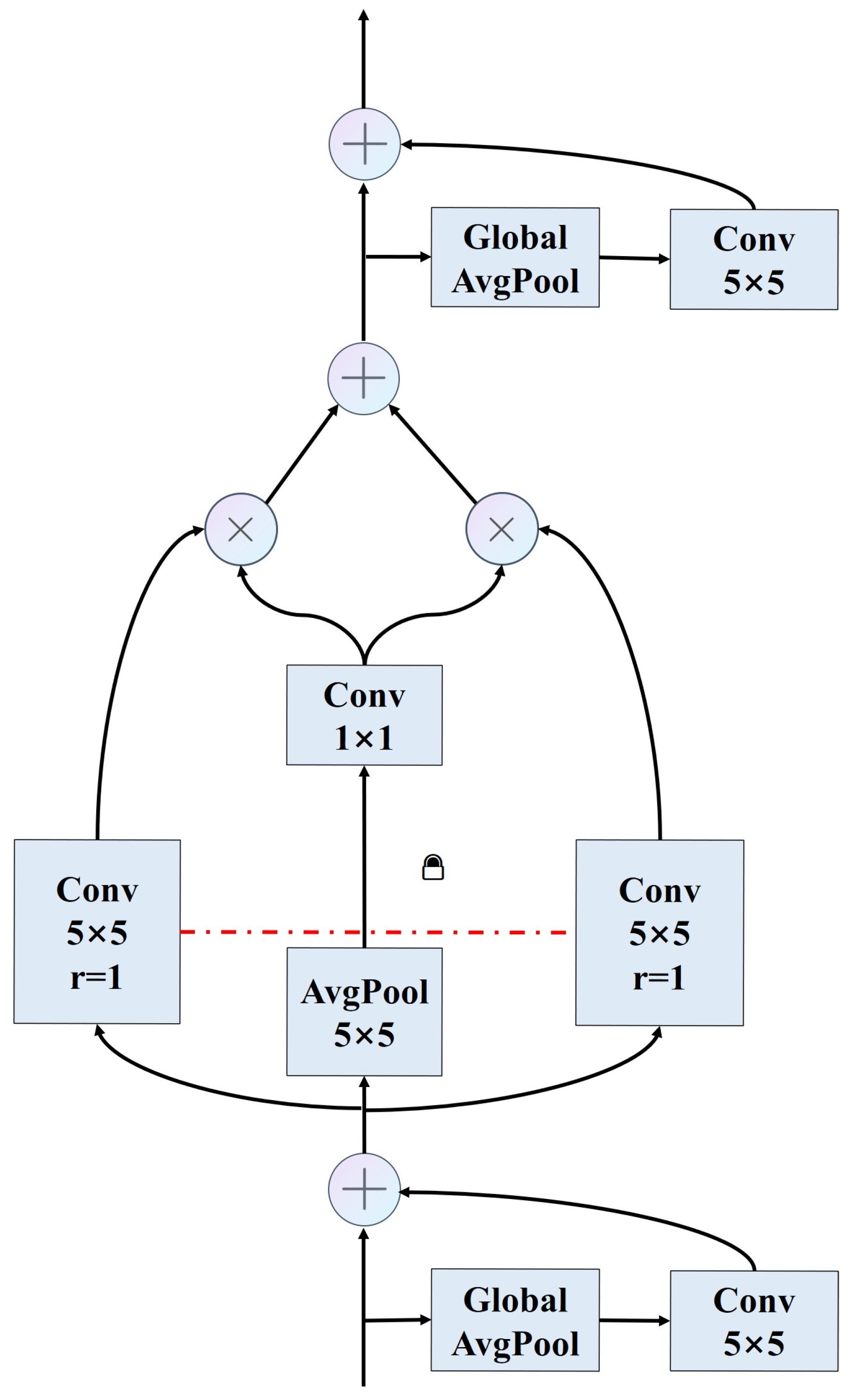

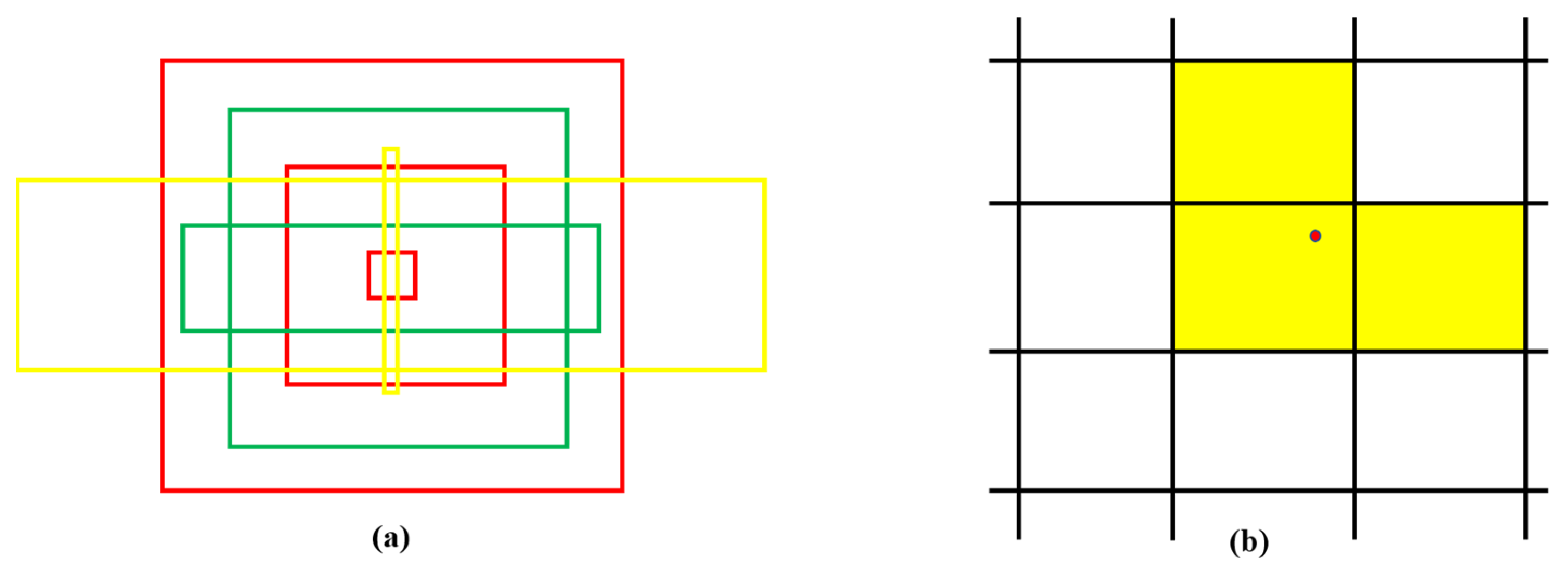

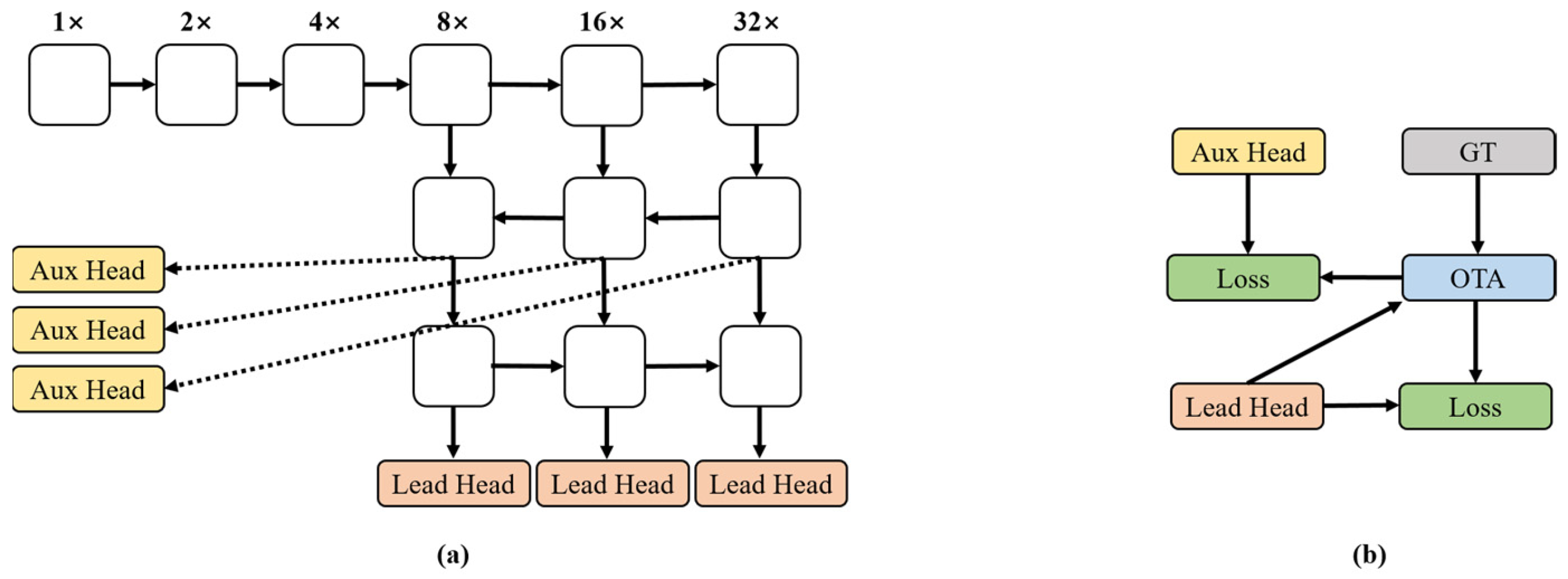

3.1. Network Structure
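The title names switched atrous convolution. In the switchable atrous convolution (SAC) of Qiao et al. (DetectoRS), a standard convolution Conv(x, w, 1) is replaced by S(x)·Conv(x, w, 1) + (1 − S(x))·Conv(x, w + Δw, r). Here S(x) is a per-pixel switch computed by a 5×5 average pooling followed by a 1×1 convolution, and r is a larger atrous (dilation) rate, 3 by default. The plain-Python sketch below shows that general formulation on a single-channel feature map. It makes several simplifying assumptions: a 3×3 kernel, zero padding, an average over in-bounds neighbours instead of padded pooling, and no global-context modules or weight standardization. It is not necessarily how the authors integrated SAC into YOLOv7. The names `conv3x3`, `avg_pool5` and `sac` are hypothetical.

```python
from typing import List

Grid = List[List[float]]  # single-channel feature map, row-major


def conv3x3(x: Grid, w: Grid, dilation: int) -> Grid:
    """'Same'-size 3x3 cross-correlation; out-of-bounds taps read as zero."""
    h, wd = len(x), len(x[0])
    out = [[0.0] * wd for _ in range(h)]
    for i in range(h):
        for j in range(wd):
            acc = 0.0
            for ki in range(3):
                for kj in range(3):
                    # Kernel taps are spaced `dilation` pixels apart (atrous sampling).
                    ii = i + (ki - 1) * dilation
                    jj = j + (kj - 1) * dilation
                    if 0 <= ii < h and 0 <= jj < wd:
                        acc += w[ki][kj] * x[ii][jj]
            out[i][j] = acc
    return out


def avg_pool5(x: Grid) -> Grid:
    """Stride-1 5x5 mean over the in-bounds neighbours of each pixel (simplified padding)."""
    h, wd = len(x), len(x[0])
    out = [[0.0] * wd for _ in range(h)]
    for i in range(h):
        for j in range(wd):
            vals = [x[ii][jj]
                    for ii in range(max(0, i - 2), min(h, i + 3))
                    for jj in range(max(0, j - 2), min(wd, j + 3))]
            out[i][j] = sum(vals) / len(vals)
    return out


def sac(x: Grid, w: Grid, dw: Grid, s_weight: float, s_bias: float, rate: int = 3) -> Grid:
    """Switchable atrous convolution on one channel:
    y = S(x) * Conv(x, w, 1) + (1 - S(x)) * Conv(x, w + dw, rate),
    with the switch S(x) = s_weight * AvgPool5(x) + s_bias (a 1x1 conv on one channel).
    """
    small = conv3x3(x, w, 1)  # ordinary receptive field
    # The dilated branch shares w and adds a trainable offset dw.
    w_large = [[w[a][b] + dw[a][b] for b in range(3)] for a in range(3)]
    large = conv3x3(x, w_large, rate)  # enlarged receptive field
    pooled = avg_pool5(x)
    h, wd = len(x), len(x[0])
    out = [[0.0] * wd for _ in range(h)]
    for i in range(h):
        for j in range(wd):
            s = s_weight * pooled[i][j] + s_bias  # per-pixel switch value
            out[i][j] = s * small[i][j] + (1.0 - s) * large[i][j]
    return out
```

With `dw` set to zero and the switch held at S = 1 (`s_weight = 0`, `s_bias = 1`), `sac` returns exactly the ordinary 3×3 convolution. With S = 0 it returns only the dilated branch. Sharing `w` between the two branches, as in this sketch, is what lets an existing convolution's weights be reused as the starting point.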

3.2. Training Strategy

3.3. Loss Function

4. Application

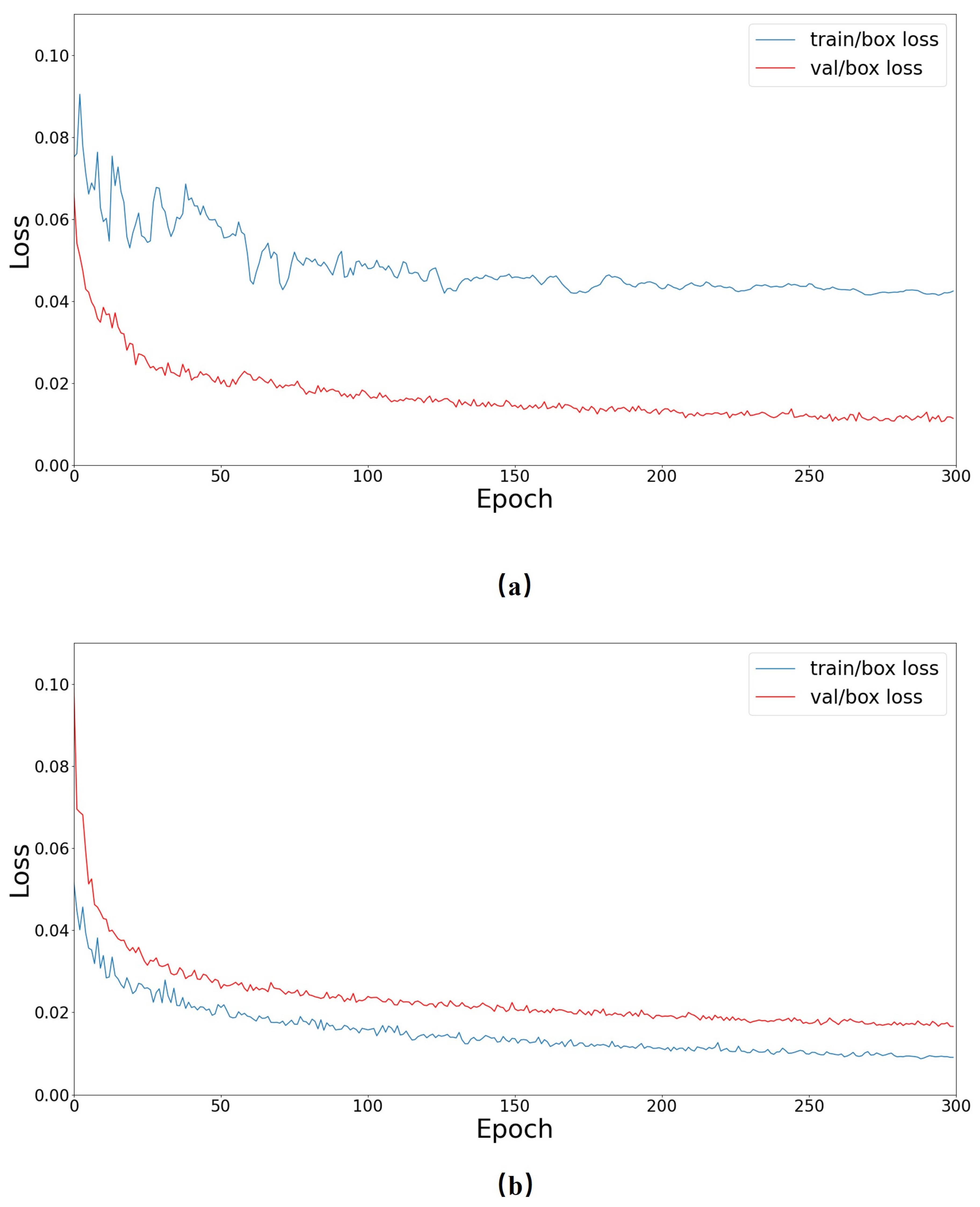

4.1. Model Construction

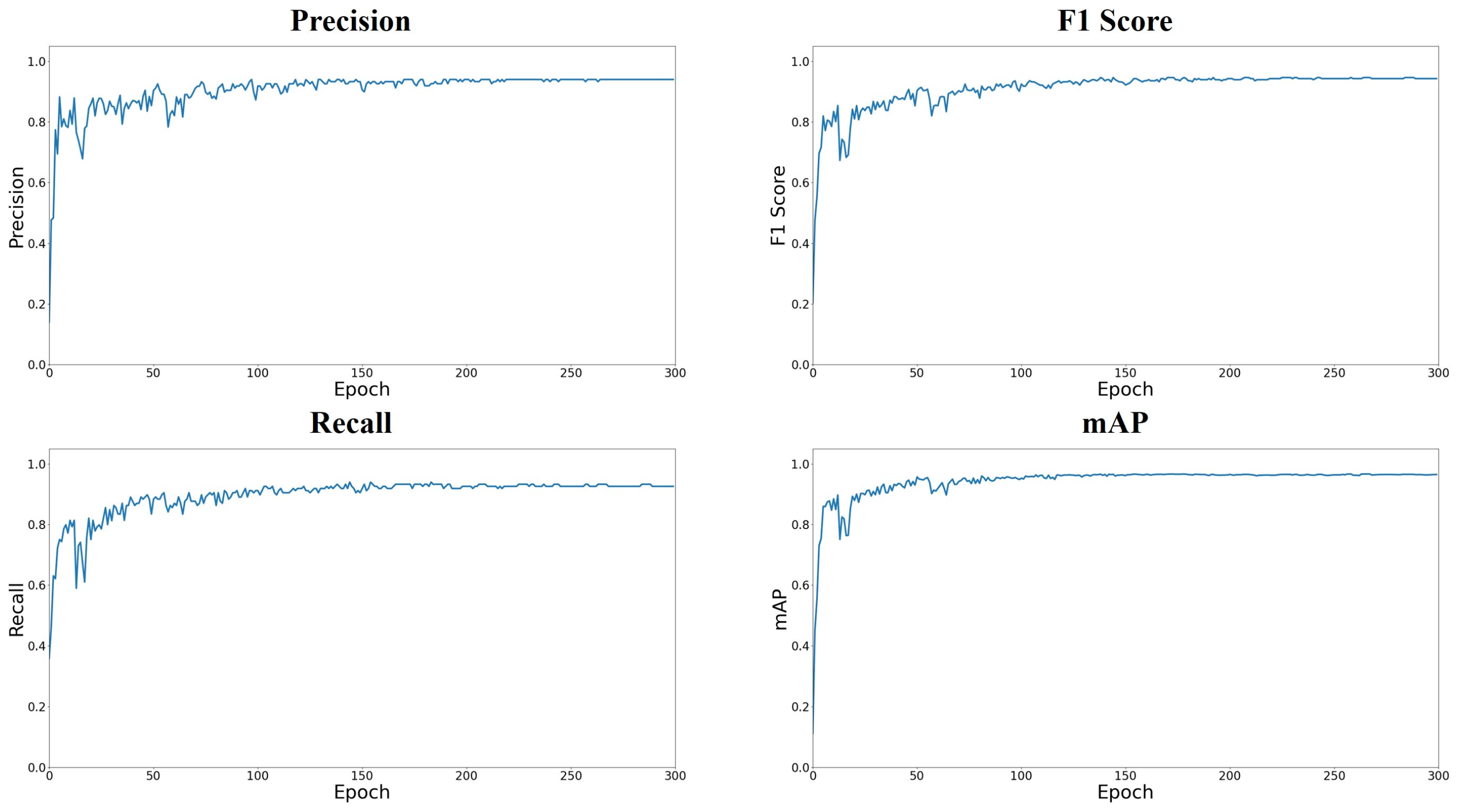

4.2. Model Evaluation

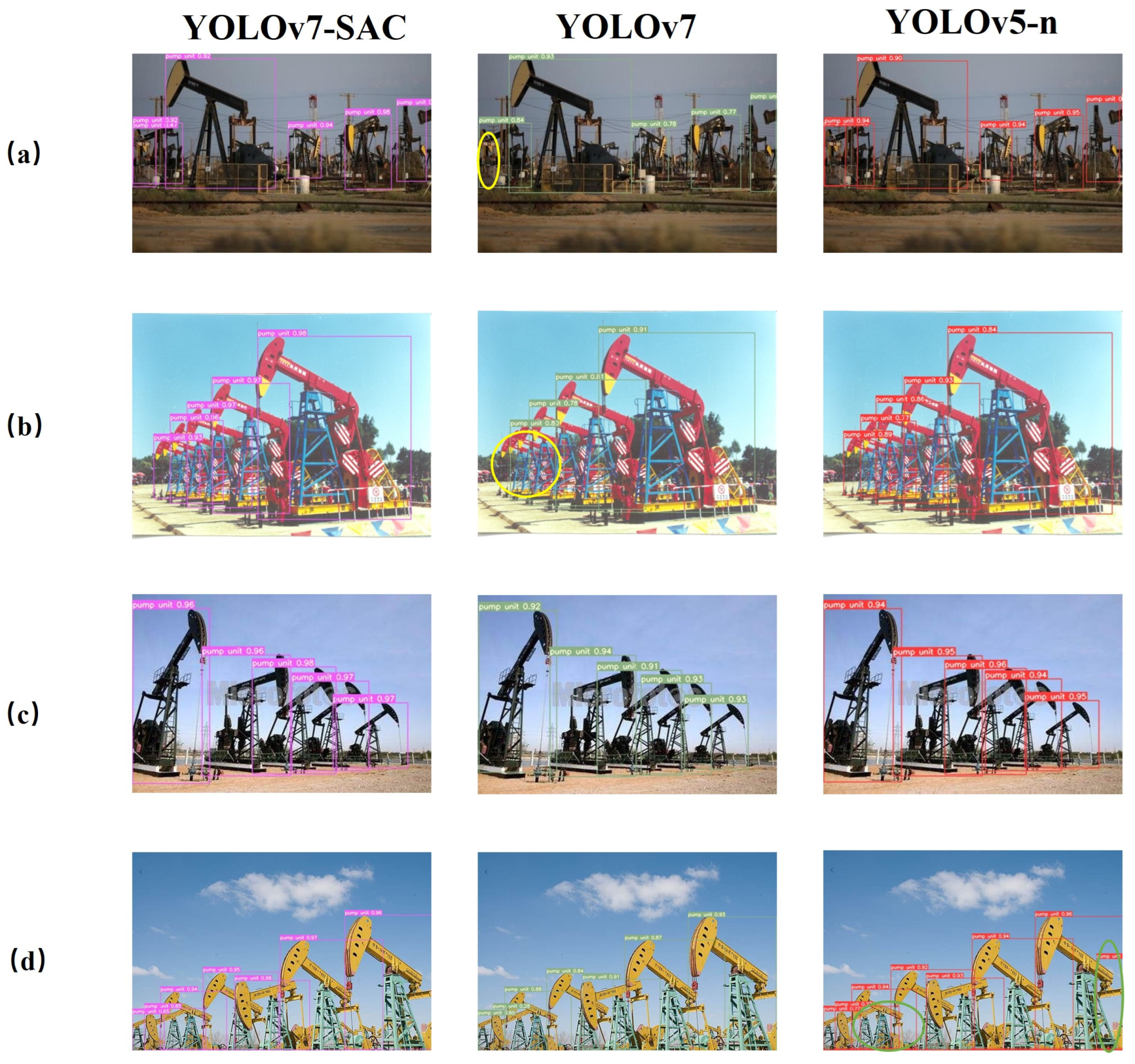

4.3. Results and Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model | mAP (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| YOLOv5-n | 92.38 | 90.41 | 85.80 | 88.04 |
| YOLOv7 | 97.76 | 94.15 | 95.52 | 94.83 |
| YOLOv7SAC | 98.47 | 97.69 | 96.27 | 96.97 |
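The F1 score is the harmonic mean of precision and recall, F1 = 2PR / (P + R). Recomputing it from the reported precision and recall gives a quick consistency check on the table. The minimal Python sketch below copies the table's values into a dictionary. The helper name `f1_score` is a hypothetical illustration and not taken from the paper.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %, reported F1 %) copied from the table above.
ROWS = {
    "YOLOv5-n": (90.41, 85.80, 88.04),
    "YOLOv7": (94.15, 95.52, 94.83),
    "YOLOv7SAC": (97.69, 96.27, 96.97),
}

for model, (p, r, reported) in ROWS.items():
    print(f"{model}: recomputed F1 = {f1_score(p, r):.3f}, reported = {reported}")
```

Each recomputed value matches the reported F1 to within the rounding of the two-decimal figures. For example, 2 × 97.69 × 96.27 / 193.96 ≈ 96.97 for YOLOv7SAC.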

| Model | Number of Images | Average Time (ms) |
|---|---|---|
| YOLOv5-n | 280 | 12.1 |
| YOLOv7 | 280 | 14.2 |
| YOLOv7SAC | 280 | 13.9 |
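Assume the "Average Time" column is the mean processing time per image over the 280 test images. The header does not say whether pre- and post-processing are included. Under that assumption, the column converts to throughput as FPS = 1000 / t, with t in milliseconds. A minimal Python sketch of that arithmetic follows. The helper names are hypothetical.

```python
# Average times (ms) and image count copied from the table above.
AVG_TIME_MS = {"YOLOv5-n": 12.1, "YOLOv7": 14.2, "YOLOv7SAC": 13.9}
NUM_IMAGES = 280


def images_per_second(avg_ms: float) -> float:
    """Throughput implied by a mean per-image time in milliseconds (assumed meaning of the column)."""
    return 1000.0 / avg_ms


def total_seconds(avg_ms: float, n_images: int) -> float:
    """Total time for n_images at the given mean per-image time."""
    return avg_ms * n_images / 1000.0


for model, ms in AVG_TIME_MS.items():
    print(f"{model}: {images_per_second(ms):.1f} images/s, "
          f"{total_seconds(ms, NUM_IMAGES):.2f} s for {NUM_IMAGES} images")
```

Under this reading, YOLOv7SAC processes about 71.9 images per second, compared with about 70.4 for YOLOv7 and 82.6 for YOLOv5-n. It handles the full set of 280 images in roughly 3.9 s.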

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Song, Z.; Zhang, K.; Xia, X.; Zhang, H.; Yan, X.; Zhang, L. Detection of Pumping Unit in Complex Scenes by YOLOv7 with Switched Atrous Convolution. Energies 2024, 17, 835. https://doi.org/10.3390/en17040835