Abstract

In this study, an in-depth analysis is presented on forecasting aggregated wind power production at the regional level, using advanced Machine-Learning (ML) techniques and feature-selection methods. The main problem consists of selecting the wind speed measuring points within a large region, as the wind plant locations are assumed to be unknown. For this purpose, the main cities (province capitals) are considered as possible features and four feature-selection methods are explored: Pearson correlation, Spearman correlation, mutual information, and Chi-squared test with Fisher score. The results demonstrate that proper feature selection significantly improves prediction performance, particularly when dealing with high-dimensional data and regional forecasting challenges. Additionally, the performance of five prominent machine-learning models is analyzed: Long Short-Term Memory (LSTM) networks, Artificial Neural Networks (ANNs), Support Vector Machines (SVMs), Convolutional Neural Networks (CNNs), and Extreme-Learning Machines (ELMs). Through rigorous testing, LSTM is identified as the most effective model for the case study in northern Italy. This study offers valuable insights into optimizing wind power forecasting models and underscores the importance of feature selection in achieving reliable and accurate predictions.

1. Introduction

The increasing dependence on renewable energy sources is essential to meet the rising energy demand of a global population that is expected to exceed 10 billion by 2050 [1]. Renewable energy sources, such as wind, solar, and hydro-power, offer sustainable alternatives to depleting fossil fuels, significantly reducing environmental pollution and mitigating the adverse effects of climate change [2]. Recent technological advancements have made renewable energy technologies more economically viable and improved their integration into the energy market [1]. Accurately predicting wind power generation is crucial for maintaining the balance between supply and demand in electricity systems, yet the inherent variability of wind resources complicates this task [3,4]. Fuzzy models [5] provide an effective means for managing uncertainty and nonlinearity in complex systems, making them particularly advantageous for ultra-short-term wind speed and power forecasting. A two-stage fuzzy nonlinear fusion model, using empirical mode decomposition and T-S fuzzy models combined with IT2-based aggregation, has demonstrated superior accuracy in ultra-short-term forecasting for wind farms in Colorado, USA [6]. A data-driven probabilistic Wind Power Ramp (WPR) forecasting method (p-WPRF) has been developed in [7], utilizing machine learning and a generalized Gaussian mixture model to predict wind power ramp events with high accuracy. A model employing meteorological data and fuzzy c-means clustering has demonstrated improved accuracy over traditional methods [8]. Additionally, in [9], a two-stage adaptive approach combining the Hilbert transform and neural networks has been proposed for forecasting nonstationary time series, showing effectiveness in managing highly variable power flows.
Enhanced versions of SVM, such as those combined with optimization algorithms like the improved dragonfly algorithm, have demonstrated superior prediction performance compared to traditional models such as Back Propagation Neural Networks (BPNNs) and Gaussian process regression [10]. ELM, known for its fast training times and high accuracy, outperforms classical ANNs in both short-term and multi-step-ahead forecasting scenarios, especially in complex terrains [11]. LSTM networks, a type of Recurrent Neural Network (RNN), excel in capturing temporal dependencies in wind speed data, providing robust short-term predictions [12]. CNNs, often used in hybrid models with other deep-learning techniques such as Gated Recurrent Units (GRUs), automatically extract complex spatial features from wind power data, enhancing prediction accuracy [13]. Other notable models include hybrid deep-learning approaches such as Variational Mode Decomposition (VMD)-CNN-GRU, which combine various techniques to reduce volatility and improve both short-term and long-term forecasting accuracy [14].

When the aggregated wind power in a large region is to be predicted, a standard approach is to aggregate the single power forecasts of all wind plants within the region. This approach has many disadvantages. One significant issue is obtaining real-time power generation data from all wind power plants, as many offline plants lack the necessary infrastructure for continuous data collection [15]. Additionally, the spatial and temporal correlations between different wind farms must be accurately captured [13]. The high-dimensional feature sets often encountered in regional forecasting further complicate the process, necessitating feature-selection and reduction techniques to manage redundant information [16]. Moreover, the variability in meteorological conditions across large areas demands robust models capable of integrating numerical weather predictions with historical data to enhance forecast accuracy [17]. Advanced forecasting methods are essential for handling the uncertainty in wind power predictions, but they introduce additional challenges in model construction and data processing [18]. Obviously, in this context, feature selection is pivotal, and advanced feature-selection methods allow for better determination of the significance and stability of various features, significantly enhancing forecasting model performance [19,20]. These methods streamline input data, for example by clustering features into sets and mapping them onto graphs or using PCA to reduce dimensionality and eliminate non-informative features [19,21,22]. The importance of feature selection is further underscored in [23], where deep feature-selection frameworks ensure that only the most pertinent data influences the initial layer of ML models. 
A PV power prediction method, presented in [24], is based on the Pearson coefficient and addresses factors such as ambient temperature, relative humidity, and solar irradiance by using correlation tests to remove irrelevant features while using an LSTM network. Similarly, ref. [25] employs LSTM neural networks for ultra-short-term prediction, and the Spearman rank correlation is used to determine hyper-parameters, for high-precision wind power forecasting in a wind farm in western China.

Most of the cited papers address the hourly prediction of the production of a single wind farm. Even regional forecasts are usually obtained as the sum of the predictions for every single plant. In this kind of task, the problem is well defined: the output to be predicted is the plant power, while the input is the predicted wind speed and direction at the geographical position of the plant (more than one wind measurement point may be considered for very large plants).

This research faces a different and more complex task: the hourly prediction of aggregated wind energy production in a very large region, such as northern Italy, while considering the positions of the wind plants and their production to be unknown. This is a completely different task because there is a single known aggregated power to be predicted as an output, but detailed information about each plant in the region cannot be obtained. The region is too large, and acquiring real-time power generation data from all wind power plants within it presents a significant challenge. Thus, while there is a well-defined output to be predicted, the input is not well-defined.

In this work, a new approach is proposed to select the “input points” (denoted as measurement points) by identifying the main cities (province capitals in Italy) of the region. Additionally, since the capital cities comprise a substantial number (47 in the northern region of Italy), an exhaustive comparison of several feature-selection techniques is performed to reduce the number of input features. Furthermore, many state-of-the-art machine-learning and deep-learning regression methods are compared.

The research begins by highlighting the specific challenges of accurately predicting wind power at the regional level, followed by an introduction to the case under study. A range of machine-learning methods is then explored, including SVM, ANN, ELM, CNN, and LSTM networks. Preliminary predictions are made using these methods to identify the most effective approach for this case. Next, several feature-selection techniques are assessed: mutual information, Spearman correlation, Pearson correlation, and the Chi-squared test with Fisher score. Based on established criteria, the most suitable feature-selection method is selected and applied to improve wind power forecasts in the region. By demonstrating the impact of refined feature selection, this comprehensive approach aims to enhance the accuracy and reliability of aggregated power predictions for regional wind power plants.

2. Problem Description: Regional Wind Power Forecasting with Unknown Plant Position and Production Data

Wind speed is the most significant factor influencing turbine output, with power generation increasing proportionally to the cube of wind speed. However, factors like turbulence, wind direction, and air density introduce additional complexity. Turbulence can reduce power output and increase stress on turbine components, while deviations in wind direction and yaw misalignment affect the performance of turbines across wind farms [26,27]. Moreover, air density, influenced by temperature and pressure, plays an essential role in power generation but was excluded from this study due to discrepancies in measurement locations [28].
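For reference, the cubic dependence mentioned above is commonly written in the textbook form below. The rotor swept area $A$ and the power coefficient $C_p$ are standard symbols introduced here for illustration only; they are not quantities used elsewhere in this study.

$$
P = \tfrac{1}{2}\, \rho_{\mathrm{air}}\, A\, C_p\, v^{3}
$$

Under this relation, and with all other factors held constant, doubling the wind speed $v$ multiplies the available power by $2^3 = 8$, while the air density $\rho_{\mathrm{air}}$ enters only linearly.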

The primary challenge in regional forecasting is the limited availability of detailed data for each wind power plant. In this case, only wind speed and temperature data are available for specific points, which may not correspond closely to the locations of the wind plants. As a result, precise predictions for individual plants are not feasible within this framework.

Regional forecasting, while less accurate on a plant-specific level, offers practical advantages by addressing broader areas with more efficiency. Therefore, this study aims to enhance the accuracy of regional wind power forecasts by employing advanced feature-selection techniques, providing a more scalable approach to predicting power production across multiple wind farms.

The main contribution of this study is a proposed approach to select the measuring points (locations) within a large geographical area in order to predict the aggregated power produced from the wind resource in that area when the locations of the wind plants are unknown. Wind speed forecasts at these locations are acquired from a provider such as Aeronautica Militare. The machine-learning methods are then used to relate the gathered wind speeds from several locations to the single aggregated power of the region. The time horizon is fixed to 24 h, which is the horizon required for the day-ahead market, where this aggregated forecast is mainly intended to be used, in addition to defining power flows and unit commitment.

3. Case Study Description

The case study in this work focuses on a zone in northern Italy that includes the regions of Emilia Romagna, Veneto, Friuli Venezia Giulia, Trentino Alto Adige, Lombardy, Piedmont, Liguria, and Aosta Valley. Within this zone, the total production power of all wind power plants is available; however, specific details about the production capacities of individual power plants are unavailable. Additionally, meteorological data, including wind speed and temperature, are available for 47 locations, corresponding to the most important cities in this zone [29,30]. The available data span from 20 January 2022 to 20 March 2023, with a granularity of one hour.

Hourly wind speed data are available for all 47 most important cities, with each dataset divided into two components: ugrd_10m, wind speed along the x-axis or the east–west direction (m/s), and vgrd_10m, wind speed along the y-axis or the north–south direction (m/s).
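As a minimal sketch of how the two components could be combined into the wind speed magnitude plotted in Figure 1, the snippet below takes the Euclidean norm of the two components. The source does not state how the magnitude was computed, so this formula is an assumption, and the function names and sample values are hypothetical.

```python
import math


def wind_speed_magnitude(u, v):
    """Return the horizontal wind speed (m/s) from its east-west (u)
    and north-south (v) components."""
    return math.hypot(u, v)


def magnitudes(ugrd_10m, vgrd_10m):
    """Element-wise magnitude for two equally long hourly series."""
    if len(ugrd_10m) != len(vgrd_10m):
        raise ValueError("component series must have the same length")
    return [wind_speed_magnitude(u, v) for u, v in zip(ugrd_10m, vgrd_10m)]


# Hypothetical sample values, not taken from the dataset.
sample = magnitudes([3.0, -1.5, 0.0], [4.0, 2.0, -2.5])
```

The sign of each component only encodes direction, so it does not affect the magnitude.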

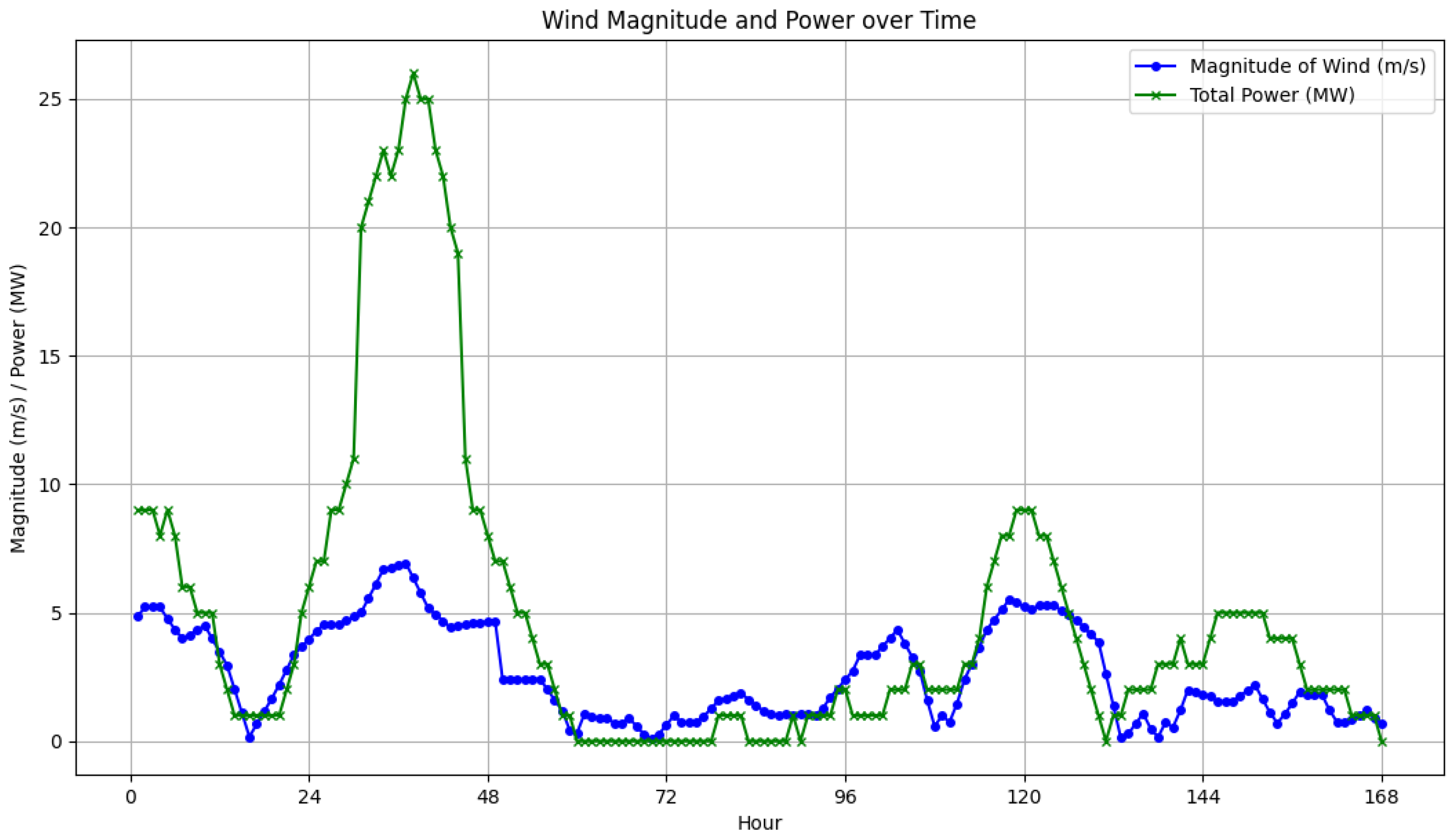

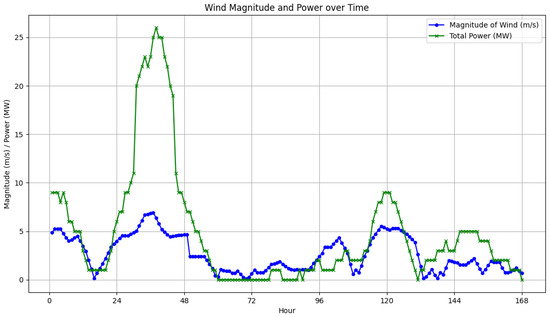

Also, information on the total production power of wind power plants is available hourly for the entire region. To enhance the clarity of the data presentation, Figure 1 illustrates the magnitude of wind speeds recorded at measurement point 8, along with the total power production for the entire zone over one week from 21 January to 27 January 2022 (168 h).

Figure 1.

Magnitude of wind speeds recorded at measurement point 8 compared to the total power production for the entire zone.

However, the exact distribution of the wind power plants across the region and their specific locations remain unclear. This lack of detailed spatial information presents a challenge for accurately predicting power production. Figure 2 illustrates the study zone and the locations of the meteorological stations.

Figure 2.

Study area—Northern Italy.

The complexity of predicting the total production power under these conditions requires a more nuanced approach. Unlike scenarios where predictions are made for individual power plants using their specific meteorological data, this case demands sophisticated analysis and the application of various ML methods. Emphasizing feature selection is crucial to improve the accuracy of predictions, given the limited information on the spatial distribution of the power plants.

4. Machine-Learning Method Selection

In recent years, the application of ML techniques in predicting the production power of wind power plants has garnered significant attention. Based on the comprehensive review provided in [31], five techniques have been identified as particularly prominent and effective: LSTM networks, ELM, ANN, CNN, and SVM. These methods have been extensively utilized due to their ability to handle complex and nonlinear relationships in data, making them suitable for the intricate task of wind power prediction. In this section, each of these methods will be explored in detail, and then they will be utilized for forecasting wind power production using wind speed as a feature. This study will demonstrate which method performs best for this specific case study.

4.1. Description of the Considered Methods

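LSTM: LSTM networks are a type of recurrent neural network that is particularly well suited to time-series forecasting because of its ability to capture long-term dependencies in sequential data. This method was proposed in 1997 [32]. LSTM networks are designed to overcome the vanishing gradient problem encountered in traditional recurrent neural networks by incorporating memory cells that can maintain information over long periods. The key components of an LSTM cell include the input gate, forget gate, and output gate, which regulate the flow of information. The cell state and the hidden state are updated using the following equations:

$$
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) \\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) \\
\tilde{C}_t &= \tanh\left(W_C x_t + U_C h_{t-1} + b_C\right) \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) \\
h_t &= o_t \odot \tanh\left(C_t\right)
\end{aligned}
$$

where $f_t$, $i_t$, and $o_t$ represent the forget, input, and output gates, respectively; $x_t$ is the input at time $t$; $C_t$ and $h_t$ are the cell state and the hidden state; $\tilde{C}_t$ is the candidate cell state; $\odot$ denotes element-wise multiplication; $\sigma$ is the sigmoid function; and tanh is the hyperbolic tangent function. The matrices $W$ and $U$ are weights and the vectors $b$ are biases.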

In the context of wind power plant forecasting, LSTM networks have been shown to effectively predict power output by leveraging historical wind speed data. Based on [31], 27% of the papers utilize this method for predicting the production power of wind power plants.
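The gate mechanism described above can be sketched as a single time step in NumPy. This is an illustrative sketch under stated assumptions, not the implementation used in the study: the stacking order of the gates inside `W`, `U`, and `b`, the layer sizes, and the random inputs are all hypothetical choices.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W has shape (4*H, D), U has shape (4*H, H), and b has shape (4*H,).
    The four blocks are stacked in the (assumed) order:
    forget gate, input gate, candidate cell state, output gate.
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    f_t = sigmoid(z[0:H])                # forget gate
    i_t = sigmoid(z[H:2 * H])            # input gate
    c_tilde = np.tanh(z[2 * H:3 * H])    # candidate cell state
    o_t = sigmoid(z[3 * H:4 * H])        # output gate
    c_t = f_t * c_prev + i_t * c_tilde   # new cell state
    h_t = o_t * np.tanh(c_t)             # new hidden state
    return h_t, c_t


# Hypothetical sizes: D input features, H hidden units, 5 time steps.
rng = np.random.default_rng(0)
D, H = 3, 4
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(5, D)):
    h, c = lstm_step(x_t, h, c, W, U, b)
```

Because the cell state is updated additively through the forget and input gates, information can persist across many steps, which is the property that mitigates the vanishing gradient problem.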

ANNs: ANNs are computational models inspired by the human brain, capable of recognizing complex patterns and relationships in data. An ANN consists of interconnected layers of neurons, including an input layer, one or more hidden layers, and an output layer. Each neuron applies a weighted sum of its inputs, passes the result through an activation function, and transmits the output to the neurons in the next layer. The training process involves adjusting the weights to minimize the error between the predicted and actual outputs using backpropagation.

The output of a single neuron can be described by the following equation:

$$
y = \phi\left(\sum_{i=1}^{n} w_i x_i + b\right)
$$

where $y$ is the neuron’s output, $\phi$ is the activation function (such as sigmoid, ReLU, or tanh), $x_i$ are the inputs, $w_i$ are the weights, and $b$ is the bias.

ANNs have proven effective in capturing the nonlinear relationships between wind speed and power output for wind power plant forecasting. As mentioned in [31], 17% of the articles focused on predicting the production power of wind power plants have employed this method. This significant usage underscores the effectiveness and reliability of this method in forecasting wind power production.

SVMs: SVMs are supervised learning models used for classification and regression tasks. SVMs are particularly effective for high-dimensional spaces and are known for their ability to model complex, nonlinear relationships using kernel functions. The basic idea of an SVM is to find the hyperplane that best separates the data into different classes or, in the case of regression, to find the optimal margin that minimizes the prediction error.

For regression tasks, SVMs use a method known as Support Vector Regression (SVR). The objective of SVR is to find a function that deviates from the actual target values by a value no greater than $\varepsilon$ for all training data points while being as flat as possible. This is expressed mathematically as:

$$
f(x) = \sum_{i=1}^{n} \left(\alpha_i - \alpha_i^{*}\right) K(x_i, x) + b
$$

where $\alpha_i$ and $\alpha_i^{*}$ are the Lagrange multipliers, $K(x_i, x)$ is the kernel function, and $b$ is the bias term. Common kernel functions include linear, polynomial, and radial basis function kernels.

SVMs have shown promise for wind power plant forecasting due to their ability to handle nonlinear and high-dimensional data efficiently. This method is utilized in 16% of articles within the field of wind power plant energy forecasting, highlighting its high capability in accurately predicting power production [31].
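To make the kernel expansion used by SVR concrete, the sketch below evaluates a prediction from a set of support vectors and shows the $\varepsilon$-insensitive loss that ignores deviations smaller than $\varepsilon$. The support vectors, dual coefficients, `gamma`, and bias are hypothetical values chosen for illustration; in practice they come from solving the SVR optimization problem, which is not shown here.

```python
import math


def rbf_kernel(x, z, gamma=0.5):
    """Radial basis function kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def svr_predict(x, support_vectors, dual_coefs, bias, kernel=rbf_kernel):
    """Evaluate f(x) = sum_i (alpha_i - alpha_i^*) K(x_i, x) + b.

    dual_coefs[i] holds the difference alpha_i - alpha_i^*.
    """
    return sum(c * kernel(sv, x)
               for sv, c in zip(support_vectors, dual_coefs)) + bias


def epsilon_insensitive_loss(y_true, y_pred, epsilon=0.1):
    """Errors inside the epsilon tube cost nothing; larger ones grow linearly."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


# Hypothetical model with two support vectors.
support_vectors = [[0.0, 0.0], [1.0, 1.0]]
dual_coefs = [2.0, -1.0]
prediction = svr_predict([0.0, 0.0], support_vectors, dual_coefs, bias=0.5)
```

Only training points with a nonzero coefficient contribute to the sum, which is why the fitted model depends on the support vectors alone.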

ELM: ELMs are a type of single-layer feedforward neural network that offer fast learning speeds and good generalization performance. Unlike traditional neural networks, ELMs randomly assign the weights between the input and hidden layers and only train the weights between the hidden and output layers, resulting in a much faster training process. This method eliminates the need for iterative tuning of the weights, making it computationally efficient.

The following equation can describe the output of an ELM:

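$$
H\beta = T
$$

where $H$ is the hidden layer output matrix, $\beta$ is the weight matrix between the hidden and output layers, and $T$ is the target output matrix. For $L$ hidden neurons and $N$ training samples, the hidden layer output matrix $H$ is calculated as:

$$
H =
\begin{bmatrix}
g(w_1 \cdot x_1 + b_1) & \cdots & g(w_L \cdot x_1 + b_L) \\
\vdots & \ddots & \vdots \\
g(w_1 \cdot x_N + b_1) & \cdots & g(w_L \cdot x_N + b_L)
\end{bmatrix}
$$

where $g$ is the activation function, $w_i$ are the input weights, $x_j$ are the input data, and $b_i$ are the biases.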

Due to their ability to efficiently manage large datasets and accurately model complex relationships between wind speed and power output, ELMs have demonstrated considerable potential for wind power plant forecasting. According to the results of [31], this method has been utilized in 13% of the articles related to wind power production forecasting, indicating its significant presence in the field.
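The training procedure described above, with random hidden weights and a closed-form solution for the output weights, can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions: the tanh activation, the number of hidden neurons, the use of the Moore-Penrose pseudo-inverse, and the synthetic data are illustrative choices, not the configuration used in the study.

```python
import numpy as np


def elm_fit(X, T, n_hidden=20, seed=0):
    """Fit an ELM: draw random input weights, then solve H @ beta = T."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(X.shape[1], n_hidden))  # random input weights, never trained
    b = rng.normal(size=n_hidden)                # random hidden biases, never trained
    H = np.tanh(X @ W + b)                       # hidden layer output matrix
    beta = np.linalg.pinv(H) @ T                 # least-squares output weights
    return W, b, beta


def elm_predict(X, W, b, beta):
    return np.tanh(X @ W + b) @ beta


# Synthetic regression problem (hypothetical data, for illustration only).
rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, size=(200, 2))
T = np.sin(X[:, 0]) + 0.5 * X[:, 1]
W, b, beta = elm_fit(X, T, n_hidden=30)
train_rmse = float(np.sqrt(np.mean((elm_predict(X, W, b, beta) - T) ** 2)))
```

Since only `beta` is estimated, and in a single linear-algebra step, no iterative weight tuning is needed.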

CNNs: CNNs are a specialized type of neural network primarily used for processing grid-like data structures, such as images. However, they have also been effectively applied in time-series forecasting, including predicting wind power production. CNNs are composed of convolutional layers, pooling layers, and fully connected layers. The convolutional layers apply convolution operations to the input data using filters (kernels) to extract local features, while the pooling layers reduce the spatial dimensions, making the computation more efficient and robust to variations.

The operation of a convolutional layer can be expressed as:

$$
Y(i, j) = \sum_{m}\sum_{n} X(i + m,\, j + n)\, W(m, n) + b
$$

where $X$ is the input matrix, $W$ is the filter (kernel) matrix, and $b$ is the bias. The output of this operation, $Y$, called the feature map, highlights the presence of specific patterns in the input data.

CNNs can capture spatial and temporal correlations in wind speed data. By applying convolutional layers to time-series data, CNNs can identify relevant patterns that are predictive of wind power output. This capability has led to CNNs being utilized in 11% of the articles related to forecasting wind power plant production [31].
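For time series, the same sliding-filter idea is applied along a single axis. The sketch below is a one-dimensional illustration with a hand-picked filter rather than a learned one; the series values, the filter, and the pooling size are hypothetical.

```python
def conv1d_valid(x, w, b=0.0):
    """Slide kernel w over series x without padding and add bias b."""
    k = len(w)
    return [sum(x[i + m] * w[m] for m in range(k)) + b
            for i in range(len(x) - k + 1)]


def relu(values):
    return [max(0.0, v) for v in values]


def max_pool1d(values, size=2):
    """Keep the maximum of each non-overlapping window of the given size."""
    return [max(values[i:i + size])
            for i in range(0, len(values) - size + 1, size)]


# Hypothetical hourly wind speed values.
series = [1.0, 2.0, 4.0, 3.0, 1.0, 0.0]
feature_map = conv1d_valid(series, [-1.0, 1.0])  # responds to hour-to-hour increases
pooled = max_pool1d(relu(feature_map))
```

Here the filter `[-1.0, 1.0]` acts as a first-difference detector, so after the ReLU the feature map is nonzero only where the series rises. In a trained CNN the filter values are learned from data instead.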

As is evident, approximately 84% of the articles in the field of forecasting wind power plant production have utilized these five methods. Consequently, this study applies all five to the case study in order to identify the most effective one. For the case study, wind speed and production power data are available for 14 months, from 20 January 2022 to 20 March 2023. Initially, the hyperparameters of these models are tuned using data from the first two months, i.e., 20 January 2022 to 20 March 2022. Subsequently, predictions are made for the following year to determine the best method.

4.2. Hyperparameter Tuning

Hyperparameter tuning is a critical step in the development of effective ML models, significantly influencing their performance and ability to generalize to new data. Adjusting hyperparameters such as learning rate, batch size, and the number of layers or neurons in a network is essential for optimizing the model’s accuracy and efficiency. According to [33], systematic hyperparameter tuning can substantially improve model performance. The study [34] emphasizes that effective hyperparameter optimization is crucial for enabling the model to capture complex patterns in the data while avoiding both overfitting and underfitting, which is essential for making accurate predictions.

Moreover, Bergstra and Bengio [33] emphasized the effectiveness of automated hyperparameter search methods, such as grid search and random search. These methods systematically explore the hyperparameter space and often outperform manual tuning by efficiently identifying the optimal settings [35]. Proper tuning is particularly important in applications like wind power forecasting, where accurate predictions can enhance the efficiency and reliability of power generation systems. Therefore, meticulous tuning of hyperparameters is indispensable for achieving optimal results in predictive modeling tasks. Cross-validation is a crucial technique employed alongside hyperparameter tuning to evaluate a model’s generalization performance and mitigate overfitting. By dividing the dataset into several subsets, known as folds, cross-validation facilitates training the model on one subset while assessing its performance on a separate subset. This iterative process is conducted across all folds, with the average performance metric providing an estimate of the model’s overall generalization capability. K-fold cross-validation, a widely used variant, partitions the data into K equal folds, where each fold is used as the validation set once, and the remaining folds constitute the training data [36].
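The K-fold procedure described above can be sketched without any library support. The helper names below are hypothetical, and the folds are taken as contiguous blocks without shuffling, which is an assumption; the source does not say whether the samples were shuffled.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train_idx, val_idx) pairs for k contiguous, nearly equal folds."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


def cross_val_score(n_samples, fit_and_score, k=5):
    """Average a user-supplied score over all folds.

    fit_and_score(train_idx, val_idx) is a hypothetical callback that
    trains a model on train_idx and returns its error on val_idx.
    """
    scores = [fit_and_score(tr, va) for tr, va in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)


folds = list(k_fold_indices(10, k=5))
```

With `k=5`, each iteration trains on four folds and validates on the remaining one, so every sample is used for validation exactly once.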

Implementing cross-validation offers a more robust performance estimation than a single train–test split. By integrating cross-validation during hyperparameter tuning, one can identify hyperparameter values that yield the best model performance on unseen data, thereby enhancing the reliability and accuracy of predictions, such as in solar PV power forecasting [37]. To tune hyperparameters, data from the first two months (20 January 2022, to 20 March 2022) was utilized, implementing a thorough hyperparameter tuning approach involving grid search and five-fold cross-validation. The data are divided into five folds, and the training and validation process is repeated five times. In each iteration, four folds are utilized as training data while one fold serves as the test data. The goal of the grid search was to find the optimal hyperparameters by minimizing the Normalized Root Mean Squared Error (NRMSE), which is derived from the Root Mean Squared Error (RMSE) using the following formula [38]:

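$$
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2},
\qquad
\mathrm{NRMSE} = \frac{\mathrm{RMSE}}{y_{\max} - y_{\min}}
$$

where:

- $n$ represents the number of samples

- $y_i$ is the actual value of the target variable for sample $i$

- $\hat{y}_i$ is the predicted value of the target variable for sample $i$

- RMSE stands for Root Mean Squared Error

- Range denotes the range of the target variable ($y_{\max} - y_{\min}$), where $y_{\max}$ and $y_{\min}$ are the maximum and minimum values of the true target variable, respectively.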
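A direct implementation of this metric is sketched below, with the normalization by the range of the true values as defined above. The sample numbers are hypothetical and serve only to show the calculation; multiplying by 100 gives the percentage form used when results are reported.

```python
import math


def rmse(y_true, y_pred):
    """Root Mean Squared Error over paired samples."""
    n = len(y_true)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(y_true, y_pred)) / n)


def nrmse(y_true, y_pred):
    """RMSE divided by the range (max - min) of the true target values."""
    value_range = max(y_true) - min(y_true)
    if value_range == 0:
        raise ValueError("NRMSE is undefined when the target is constant")
    return rmse(y_true, y_pred) / value_range


# Hypothetical values, not taken from the dataset.
y_true = [10.0, 20.0, 30.0, 40.0]
y_pred = [12.0, 18.0, 33.0, 37.0]
score_percent = 100.0 * nrmse(y_true, y_pred)
```

Normalizing by the range makes errors comparable across periods with different production levels, at the cost of being sensitive to outliers in the true values.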

The outcomes of the hyperparameter adjustments are summarized in Tables 1–5.

Table 1.

Summary of hyperparameters for LSTM.

Table 2.

Summary of hyperparameters for ANN.

Table 3.

Summary of hyperparameters for SVM.

Table 4.

Summary of hyperparameters for CNN.

Table 5.

Summary of hyperparameters for ELM.

4.3. Comparison of Machine-Learning Methods without Feature Selection

In this section, the focus is on forecasting the production power in the studied area using the introduced ML methods and the optimized hyperparameter settings to identify the best model for this case study.

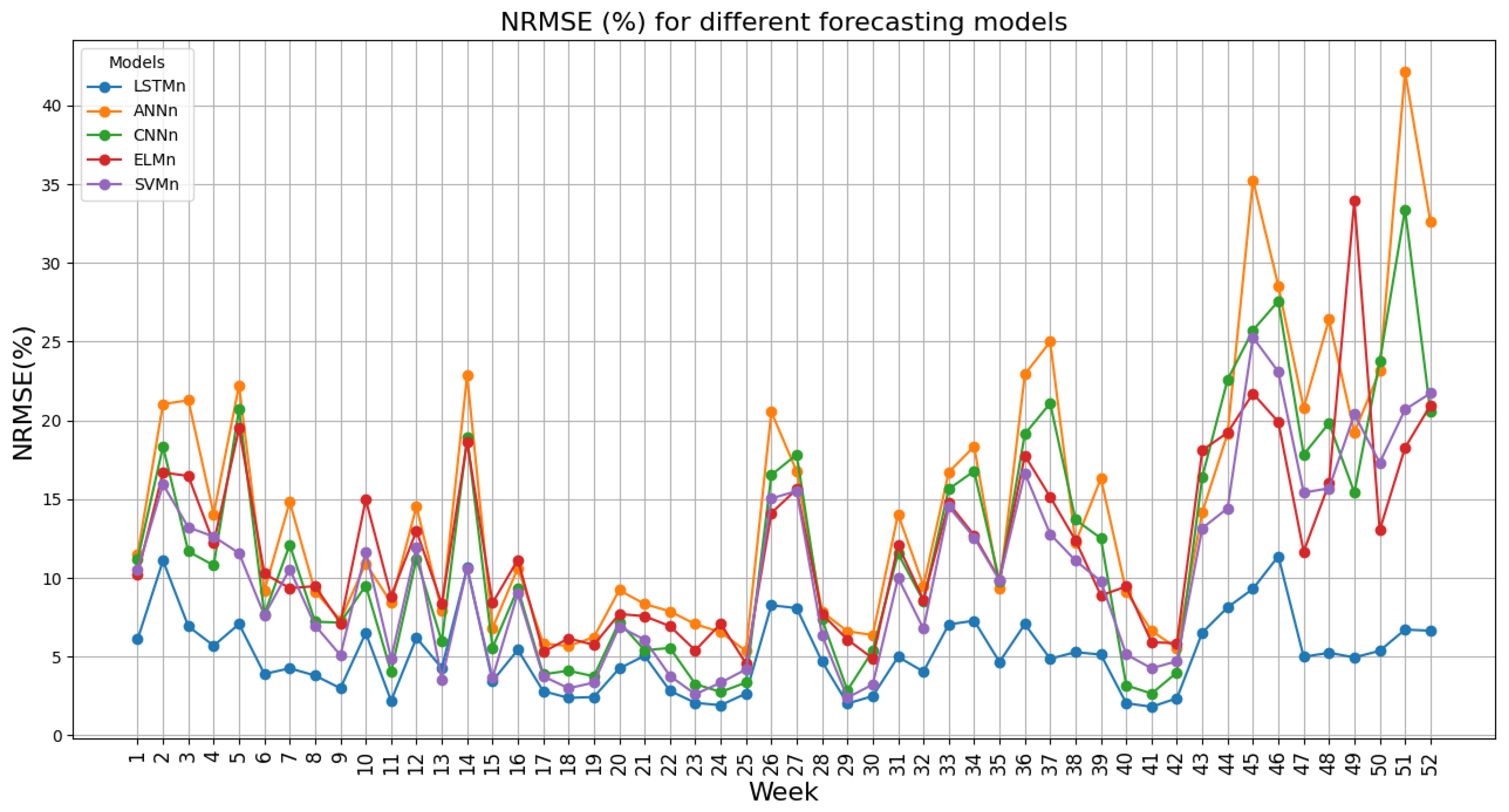

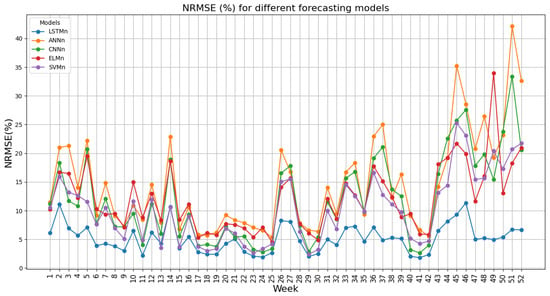

The prediction scenario is as follows: Initially, two months of data from 20 January 2022 to 20 March 2022 are used as training data, and the week after this period is used as test data. Subsequently, all the data are shifted forward by one week, and this process is repeated for 52 weeks. This approach ensures one year of forecasting, thereby enhancing the comprehensiveness of the method and results. The simulation results spanning 52 weeks are presented in Figure 3. The figure includes the NRMSE expressed as a percentage. Table 6 provides an overview of the overall NRMSE for all five methods.
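The rolling scheme described above can be sketched as a generator of training and test windows. The function name is hypothetical, and the treatment of each window as a half-open interval (start included, end excluded) is an assumption; the source gives only the calendar dates.

```python
from datetime import datetime, timedelta


def rolling_windows(train_start, train_end, n_weeks=52, test_days=7):
    """Yield (train_start, train_end, test_start, test_end) for each step.

    Each interval is half-open: the start is included, the end is not.
    Both windows move forward by one test period per step.
    """
    shift = timedelta(days=test_days)
    for week in range(n_weeks):
        offset = week * shift
        tr_start = train_start + offset
        tr_end = train_end + offset
        yield tr_start, tr_end, tr_end, tr_end + shift


windows = list(rolling_windows(datetime(2022, 1, 20), datetime(2022, 3, 20)))
```

Under these assumptions the first test week starts on 20 March 2022 and the last one ends on 19 March 2023, which stays within the available data.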

Figure 3.

Performance of different models over weeks.

Table 6.

Comparison of model performance.

The results in Figure 3 and Table 6 show that LSTM outperforms the other methods in terms of prediction accuracy in this case study. Therefore, LSTM is chosen as the best method for forecasting the production power of the studied area, and the analyses in the rest of this research are carried out only with this method.

5. Feature-Selection Methods

5.1. Define Feature-Selection Methods

Feature selection is a crucial step in developing accurate wind power forecasting models for this case study. Given the lack of information about the locations of wind power plants and the presence of 47 different wind measuring points—many of which may be unrelated to the wind power plants and could introduce noise into the forecasting process—feature selection becomes essential. This process involves selecting a subset of relevant features from a larger set of potential predictors to enhance the model’s accuracy and interpretability while reducing its complexity and computational cost.

This section provides an overview of the feature-selection methods used in wind power forecasting, highlighting key techniques within the filter methods category. Subsequently, several of these methods are applied to the problem to identify the most effective one.

1. Filter Methods: Filter methods evaluate the relevance of features independently of the chosen learning algorithm. Examples include the Pearson correlation coefficient, which is used to measure the linear relationship between features and the target variable (e.g., wind power output), as demonstrated in related works [39]. The Spearman rank correlation coefficient captures monotonic relationships between features and target variables in wind forecasting studies [23]. The Chi-squared test and Fisher score evaluate the independence of categorical features from the target variable [40]. Mutual information measures statistical dependence between variables, effectively capturing nonlinear relationships, and is widely used in wind power forecasting [41]. Relief-F estimates feature quality based on their ability to distinguish between instances of different classes, effectively handling noisy and irrelevant features [42]. Variance threshold selects features with significant variance, filtering out low-variance predictors.

2. Wrapper Methods: Wrapper methods use the learning algorithm to evaluate feature subsets, often improving performance but with higher computational costs. Recursive feature elimination iteratively removes the least impactful features to enhance prediction accuracy in wind forecasting. Sequential forward selection adds features one by one, while sequential backward elimination removes the least significant features, both optimizing model performance [43]. Genetic algorithms mimic natural selection to explore and exploit feature subsets and are widely applied in wind power forecasting [44].

3. Embedded Methods: Embedded methods integrate feature selection with model training, offering a more efficient approach. L1 Regularization (Lasso) adds a penalty term to shrink irrelevant feature coefficients to zero, effectively eliminating them, and has been useful in identifying key predictors in wind power forecasting [45]. L2 Regularization (Ridge) uses a different penalty term to handle multicollinearity, stabilizing models with highly correlated predictors [46]. Decision-tree-based methods, like random forests and gradient boosting trees, perform feature selection by ranking feature importance based on split criteria and have been widely applied in wind forecasting [47].

Filter methods offer distinct advantages over wrapper and embedded methods in feature selection. They are computationally efficient and scalable, making them particularly suitable for handling large datasets with numerous features [48]. Unlike wrapper methods, which involve iterative model training and are computationally demanding, filter methods evaluate feature relevance independently of the learning algorithm, thereby reducing computational overhead and avoiding overfitting concerns during feature selection [49]. Additionally, filter methods, such as correlation-based approaches, assess each feature’s importance in isolation, making them transparent and interpretable in identifying relevant features without being influenced by multicollinearity [50]. These characteristics underline the practical advantages of filter methods in scenarios where computational efficiency, scalability, and straightforward interpretability are crucial considerations. In this study, four distinct filter methods—Pearson correlation, Spearman correlation, Chi-squared test with Fisher score, and mutual information—were compared to determine which method most enhances prediction accuracy. These methods were selected for their capability to effectively handle high-dimensional data without relying on prior knowledge of feature relationships to power output.

5.2. Choosing Feature-Selection Method

As mentioned earlier, filter methods select features only based on the data, focusing on statistical relationships between each feature and the output. Since feature selection is independent of the learning algorithm, training is not required to assign feature scores. However, selecting the most relevant features requires setting a threshold based on their scores, and the method’s performance must be evaluated for different threshold values to determine the optimal one.

The following section introduces the filtering methods in more detail and identifies the most effective features.

5.2.1. Pearson Correlation

The Pearson correlation coefficient, often denoted as $\rho_{X,Y}$, is a measure of the linear relationship between two variables, typically used to assess the strength and direction of association between them. It quantifies how much a change in one variable is associated with a change in another variable. The Pearson correlation coefficient between two variables X and Y is calculated as:

$$
\rho_{X,Y} = \frac{\mathrm{cov}(X, Y)}{\sigma_X\, \sigma_Y}
$$

where:

$\rho_{X,Y}$ is the Pearson correlation coefficient between variables X and Y.

$\mathrm{cov}(X, Y)$ is the covariance of X and Y.

$\sigma_X$ and $\sigma_Y$ are the standard deviations of X and Y, respectively.

The covariance is calculated as:

$$
\mathrm{cov}(X, Y) = \frac{1}{n}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)\left(y_i - \bar{y}\right)
$$

where $\bar{x}$ and $\bar{y}$ are the means of X and Y, respectively, and n is the number of data points.

The Pearson correlation coefficient ranges from −1 to 1:

- $\rho_{X,Y} = 1$: Perfect positive linear relationship.

- $\rho_{X,Y} = -1$: Perfect negative linear relationship.

- $\rho_{X,Y} = 0$: No linear relationship (variables are not linearly correlated).

In feature selection, Pearson correlation can be used to measure the linear relationship between each feature and the target variable (such as wind power output). Features with high absolute correlation coefficients are typically considered more relevant for prediction tasks. However, it is important to note that Pearson correlation assumes a linear relationship and may not capture nonlinear associations between variables [39].
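As an illustration of this scoring step, the following minimal sketch computes the absolute Pearson correlation of each candidate feature with the target and keeps the features above a threshold. The synthetic data, the variable names (`wind_a`, `wind_b`, `power`), and the function `pearson_scores` are assumptions made for this example only; the study's own implementation is not described in the text.

```python
import numpy as np

def pearson_scores(X, y):
    """Absolute Pearson correlation between each column of X and the target y."""
    Xc = X - X.mean(axis=0)                 # centre every feature
    yc = y - y.mean()                       # centre the target
    cov = Xc.T @ yc / (len(y) - 1)          # sample covariance per feature
    denom = X.std(axis=0, ddof=1) * y.std(ddof=1)
    return np.abs(cov / denom)              # undefined for constant columns

# Synthetic stand-in data (hypothetical, not the study's measurements).
rng = np.random.default_rng(0)
n = 500
wind_a = rng.normal(size=n)                 # measuring point related to the output
wind_b = rng.normal(size=n)                 # measuring point unrelated to the output
power = 2.0 * wind_a + 0.1 * rng.normal(size=n)

X = np.column_stack([wind_a, wind_b])
scores = pearson_scores(X, power)
selected = np.flatnonzero(scores > 0.21)    # indices of the retained features
print(scores, selected)
```

In this toy case only the first column survives the filter, because the second one is generated independently of the target.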

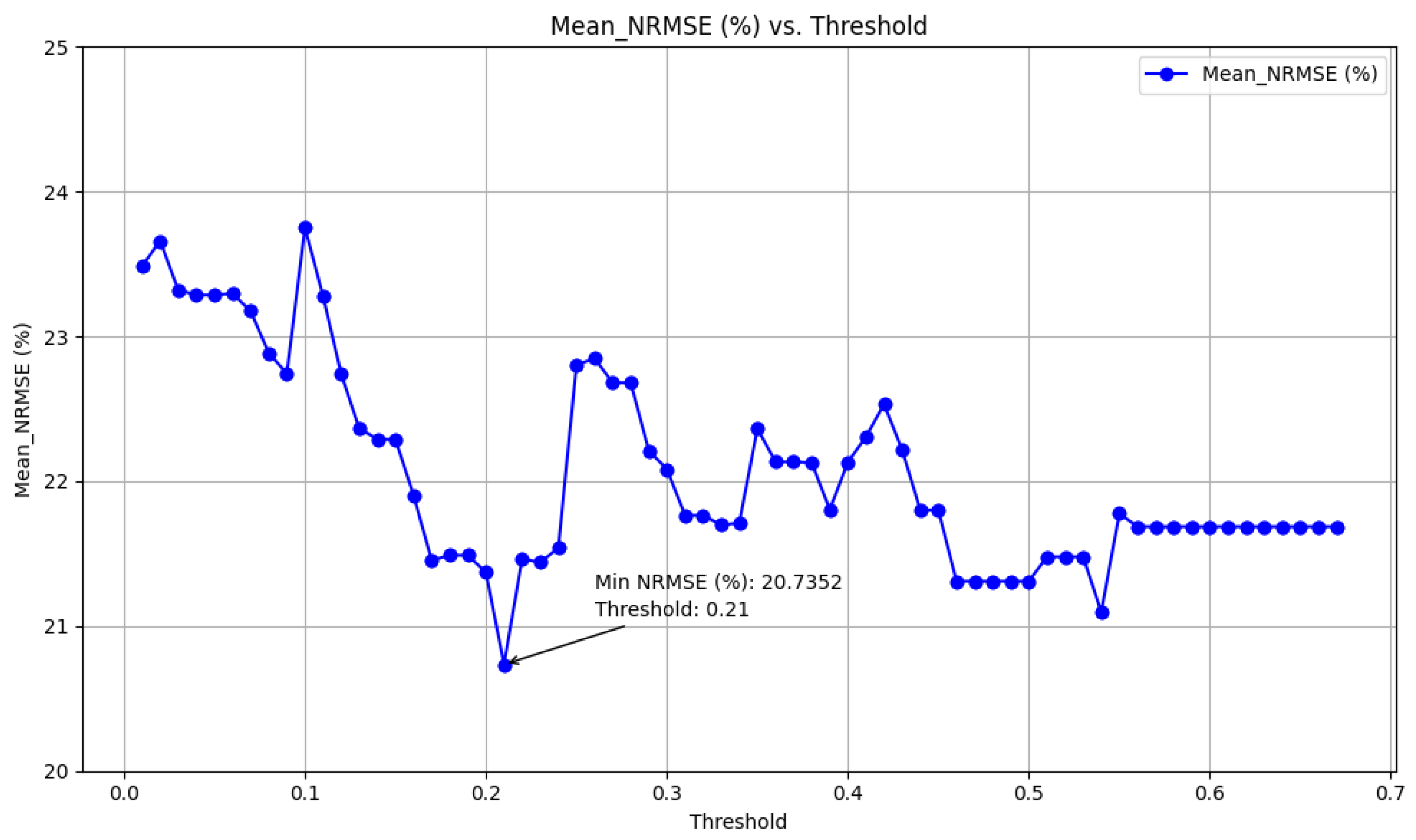

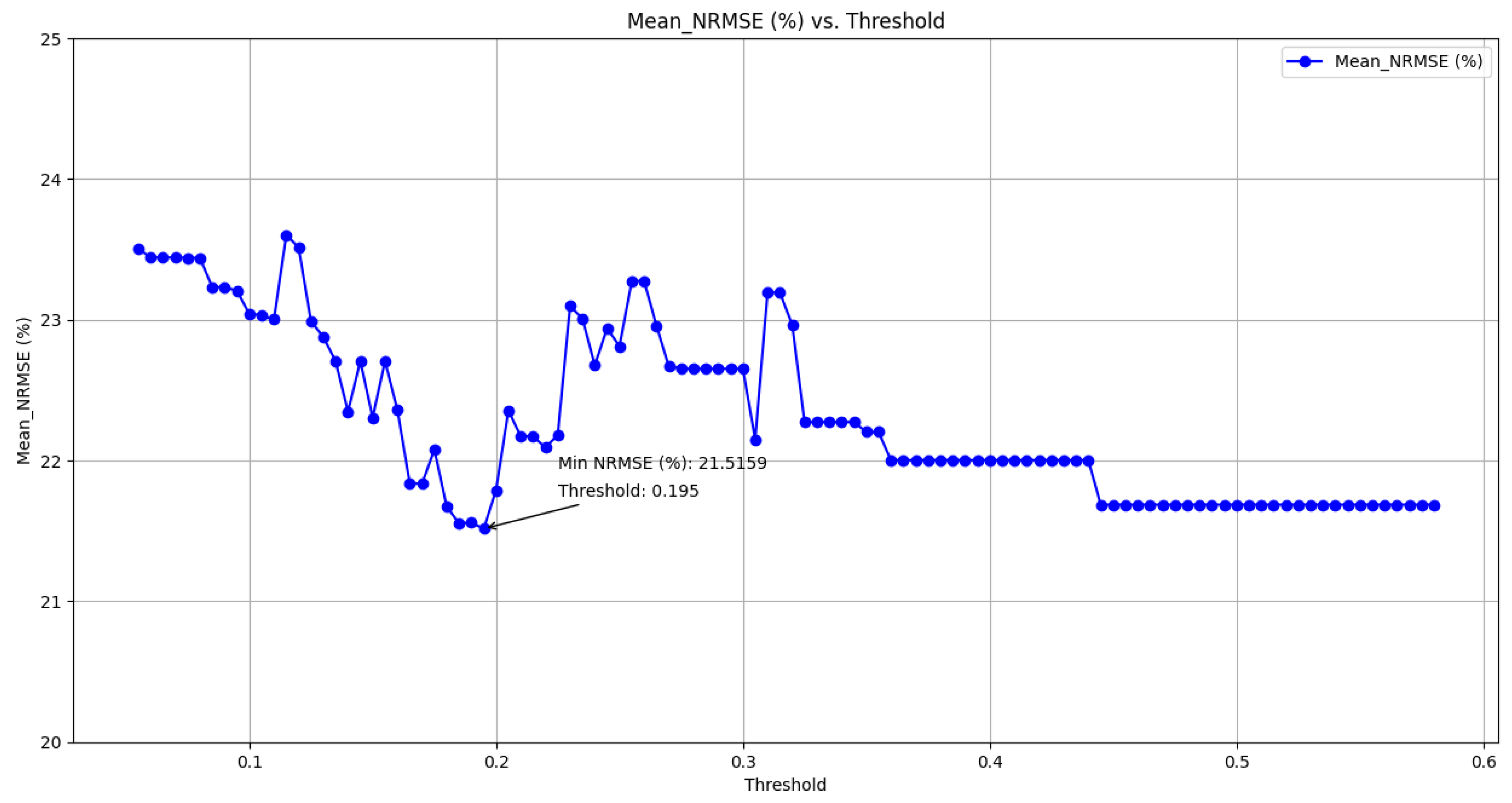

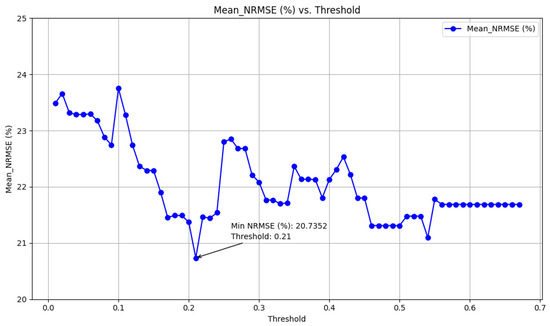

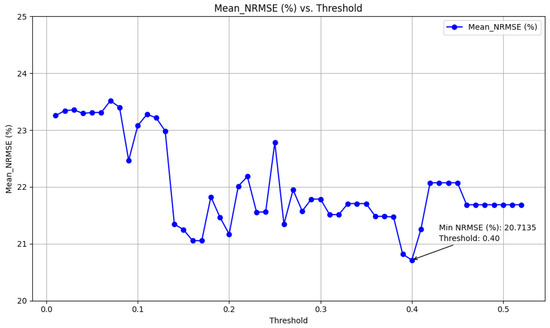

To employ this feature-selection method, data from 20 January 2022 to 20 March 2022 were analyzed to assess the importance of features for power forecasting. Initially, the ranking of feature importance was determined; however, establishing an appropriate threshold for filtering the most effective features remained ambiguous. To address this, the range of feature scores was divided into equal intervals, and each interval boundary was treated as a candidate threshold. For each candidate threshold, cross-validation was conducted using five folds and an 80:20 training-to-test ratio. Finally, the threshold that minimized the average NRMSE was identified as optimal. Figure 4 illustrates the detailed process of selecting the best threshold.

Figure 4. Finding the best threshold (Pearson).

The figure shows that the lowest NRMSE value, 20.7352%, is achieved by selecting the features whose score exceeds a threshold of 0.21.
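The threshold search described above can be sketched as follows. This is only an outline under stated assumptions: a least-squares linear model stands in for the forecasting model, the NRMSE is normalized by the observed range of the target, the folds are contiguous blocks, and the names `nrmse_percent`, `cv_nrmse`, and `sweep_thresholds` are hypothetical. Five folds give the 80:20 training-to-test ratio mentioned in the text.

```python
import numpy as np

def nrmse_percent(y_true, y_pred):
    """RMSE as a percentage of the observed range (normalization is an assumption)."""
    rmse = np.sqrt(np.mean((y_true - y_pred) ** 2))
    return 100.0 * rmse / (y_true.max() - y_true.min())

def cv_nrmse(X, y, n_folds=5):
    """Average NRMSE over contiguous folds using a linear stand-in model."""
    folds = np.array_split(np.arange(len(y)), n_folds)
    errors = []
    for test_idx in folds:
        train = np.ones(len(y), dtype=bool)
        train[test_idx] = False             # 80% train, 20% test for five folds
        A = np.column_stack([X[train], np.ones(train.sum())])
        coef, *_ = np.linalg.lstsq(A, y[train], rcond=None)
        A_test = np.column_stack([X[test_idx], np.ones(len(test_idx))])
        errors.append(nrmse_percent(y[test_idx], A_test @ coef))
    return float(np.mean(errors))

def sweep_thresholds(X, y, scores, n_intervals=10):
    """Evaluate equally spaced thresholds on non-negative scores; return the best."""
    thresholds = np.linspace(0.0, scores.max(), n_intervals, endpoint=False)
    results = {}
    for t in thresholds:
        keep = scores > t                   # features passing this threshold
        results[float(t)] = cv_nrmse(X[:, keep], y)
    best = min(results, key=results.get)    # threshold with the lowest average NRMSE
    return best, results

# Synthetic example: two informative features and four noise features.
rng = np.random.default_rng(1)
n = 400
X = rng.normal(size=(n, 6))
y = 3.0 * X[:, 0] + 2.0 * X[:, 1] + 0.5 * rng.normal(size=n)
scores = np.abs([np.corrcoef(X[:, j], y)[0, 1] for j in range(X.shape[1])])
best_t, results = sweep_thresholds(X, y, scores)
print(best_t, results[best_t])
```

The same sweep applies unchanged to any of the four scoring methods, since only the `scores` vector differs between them.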

5.2.2. Spearman Correlation

The Spearman correlation coefficient ($\rho$) is a statistical measure that evaluates the strength and direction of the monotonic relationship between two variables. It is calculated using the formula:

$$ \rho = 1 - \frac{6 \sum_{i=1}^{n} d_i^2}{n (n^2 - 1)} $$

where $d_i = R(X_i) - R(Y_i)$ represents the difference in ranks for each paired observation $(X_i, Y_i)$, n is the number of paired observations, and $R(X_i)$ and $R(Y_i)$ are the ranks of the i-th observation of variables X and Y, respectively.

To compute the Spearman correlation coefficient:

1. Ranking: Assign ordinal ranks to each variable's observations, from 1 (lowest) to n (highest).

2. Calculate differences: Compute the difference $d_i$ for each pair of ranked observations.

3. Square differences: Square each difference, $d_i^2$.

4. Summation: Sum all squared differences, $\sum_{i=1}^{n} d_i^2$.

5. Apply formula: Substitute the sum of squared differences into the formula to calculate $\rho$.

The resulting coefficient ranges from −1 to 1:

- $\rho = 1$ indicates a perfect positive monotonic relationship.

- $\rho = -1$ indicates a perfect negative monotonic relationship.

- $\rho = 0$ indicates no monotonic relationship.

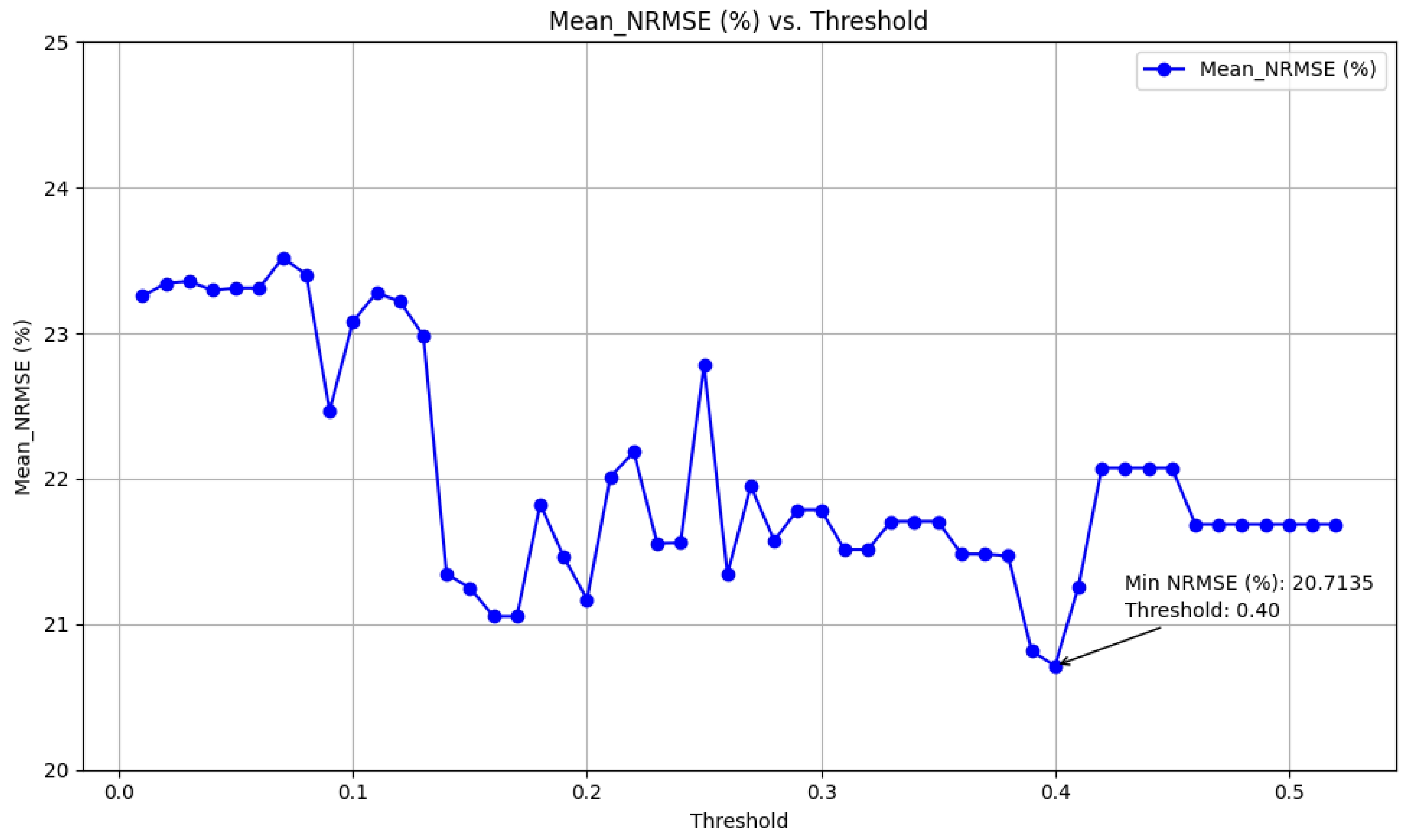

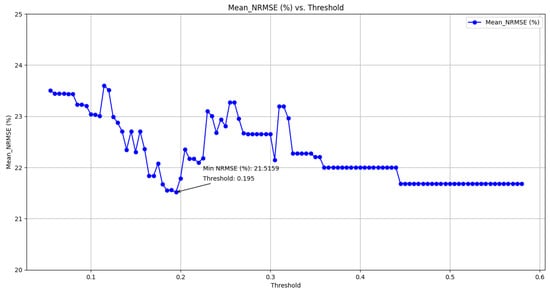

Spearman correlation is particularly useful in analyzing relationships between variables measured on ordinal scales or when assumptions of linearity and normality are not met. It provides a robust measure of association that is less sensitive to outliers compared to other correlation measures [50]. To determine the optimal threshold in this method, a process similar to that used with the Pearson method is followed. The outcome of this analysis is depicted in Figure 5.

Figure 5. Finding the best threshold (Spearman).

The figure indicates that the lowest NRMSE value, 20.7135%, is achieved by selecting the features whose score exceeds a threshold of 0.4.
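The ranking procedure for the Spearman coefficient can be illustrated with a short sketch. It follows the rank-difference formula directly and therefore assumes that there are no tied observations; the helper names `ranks` and `spearman_rho` and the exponential test signal are chosen for illustration only.

```python
import numpy as np

def ranks(values):
    """Ordinal ranks from 1 (lowest) to n (highest); assumes no ties."""
    order = np.argsort(values)
    r = np.empty(len(values), dtype=float)
    r[order] = np.arange(1, len(values) + 1)
    return r

def spearman_rho(x, y):
    """Spearman coefficient from the sum of squared rank differences."""
    d = ranks(x) - ranks(y)                 # rank difference per paired observation
    n = len(x)
    return 1.0 - 6.0 * np.sum(d ** 2) / (n * (n ** 2 - 1))

# A monotonic but strongly nonlinear relationship (purely illustrative).
x = np.linspace(0.0, 3.0, 50)
y = np.exp(x)

rho = spearman_rho(x, y)                    # rank-based measure
r = np.corrcoef(x, y)[0, 1]                 # linear (Pearson) measure
print(rho, r)
```

Because the ranks of `x` and `y` coincide, the Spearman coefficient reaches its maximum, whereas the Pearson coefficient stays below 1 for the same data. This is the kind of monotonic, nonlinear dependence for which a rank-based score can be the more informative filter.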

5.2.3. Mutual Information

Mutual information is a measure from information theory that quantifies the amount of information gained about one random variable through another. It is a crucial tool for understanding the dependency between variables, capturing the reduction in uncertainty about one variable given the knowledge of another. Formally, for two discrete random variables, X and Y, mutual information is defined as:

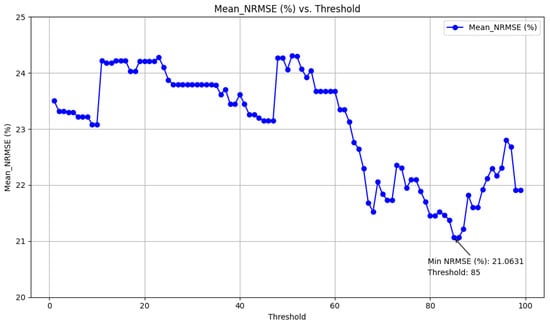

where is the joint probability distribution of X and Y and and are their marginal distributions. For continuous variables, the definition involves integrals. MI is non-negative and symmetric, meaning , and equals zero if and only if the variables are independent [51]. The same procedure as used in the previous two methods is employed to determine the optimal threshold for selecting the most effective features. The result is illustrated in Figure 6.

Figure 6. Finding the best threshold (mutual information).

The figure shows that the minimum NRMSE value, 21.5159%, is obtained by selecting the features whose score exceeds a threshold of 0.195.
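For continuous inputs such as wind speed, the discrete definition is often approximated by binning the data. The sketch below uses a simple two-dimensional histogram (plug-in) estimate; the number of bins, the function name `mutual_information`, and the synthetic signals are assumptions, and the text does not state which estimator was actually used in the study.

```python
import numpy as np

def mutual_information(x, y, bins=16):
    """Plug-in MI estimate in nats from a 2-D histogram of x and y."""
    joint, _, _ = np.histogram2d(x, y, bins=bins)
    pxy = joint / joint.sum()               # joint probabilities p(x, y)
    px = pxy.sum(axis=1, keepdims=True)     # marginal p(x), column vector
    py = pxy.sum(axis=0, keepdims=True)     # marginal p(y), row vector
    nz = pxy > 0                            # skip empty cells (0 * log 0 = 0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))

rng = np.random.default_rng(2)
x = rng.normal(size=5000)
y_dep = x ** 2 + 0.1 * rng.normal(size=5000)   # dependent on x, but not monotonic
y_ind = rng.normal(size=5000)                  # independent of x

mi_dep = mutual_information(x, y_dep)
mi_ind = mutual_information(x, y_ind)
r_dep = np.corrcoef(x, y_dep)[0, 1]            # linear correlation misses the link
print(mi_dep, mi_ind, r_dep)
```

The dependent pair receives a clearly higher score than the independent pair even though its linear correlation is close to zero. The estimate for the independent pair is small but not exactly zero, because histogram estimates are biased upward for finite samples.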

5.2.4. Chi-Squared Test and Fisher Score

The Chi-squared test is a statistical method used to examine the relationship between two categorical variables, determining if they are independent or associated. It involves comparing the observed frequencies in a contingency table to the expected frequencies, calculated under the assumption that the variables are independent. The test statistic is calculated using the formula:

$$ \chi^2 = \sum_{i} \frac{(O_i - E_i)^2}{E_i} $$

where $O_i$ represents the observed frequency and $E_i$ represents the expected frequency in cell i of the contingency table. A significant Chi-squared statistic, indicated by a low p-value, suggests that the variables are not independent and that there is a relationship between them. This test is widely used in hypothesis testing to validate the independence of categorical data.
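A small worked sketch of the statistic is given below. Because the test is defined for categorical variables, continuous quantities have to be discretized first; the quartile binning used in `contingency_table`, the function names, and the synthetic data are assumptions for illustration and are not taken from the study.

```python
import numpy as np

def chi_squared_statistic(observed):
    """Chi-squared statistic of a contingency table of observed counts."""
    observed = np.asarray(observed, dtype=float)
    row = observed.sum(axis=1, keepdims=True)
    col = observed.sum(axis=0, keepdims=True)
    expected = row @ col / observed.sum()   # counts expected under independence
    return float(np.sum((observed - expected) ** 2 / expected))

def contingency_table(feature, target, bins=4):
    """Cross-tabulate two continuous series after quantile binning (assumption)."""
    inner = np.linspace(0.0, 1.0, bins + 1)[1:-1]
    f_bin = np.digitize(feature, np.quantile(feature, inner))
    t_bin = np.digitize(target, np.quantile(target, inner))
    table = np.zeros((bins, bins))
    np.add.at(table, (f_bin, t_bin), 1)     # count each (feature bin, target bin) pair
    return table

# Hand-checkable case: every expected count is 20, so the statistic is 4 * 100 / 20.
chi_small = chi_squared_statistic([[30, 10], [10, 30]])

# Synthetic series: one related to the target, one unrelated (hypothetical data).
rng = np.random.default_rng(3)
speed = rng.uniform(0.0, 20.0, size=1000)
unrelated = rng.uniform(0.0, 20.0, size=1000)
target = speed ** 3 + 50.0 * rng.normal(size=1000)
chi_dep = chi_squared_statistic(contingency_table(speed, target))
chi_ind = chi_squared_statistic(contingency_table(unrelated, target))
print(chi_small, chi_dep, chi_ind)
```

A larger statistic indicates a stronger departure from independence, which is why it can serve as a feature score once the variables have been discretized.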

In contrast, the Fisher score is a feature-selection technique used in ML to identify the most relevant features for classification tasks. It measures the importance of a feature by calculating the ratio of the variance between different classes to the variance within the same class, thereby highlighting features that best distinguish between classes. The formula for the Fisher Score is [52]:

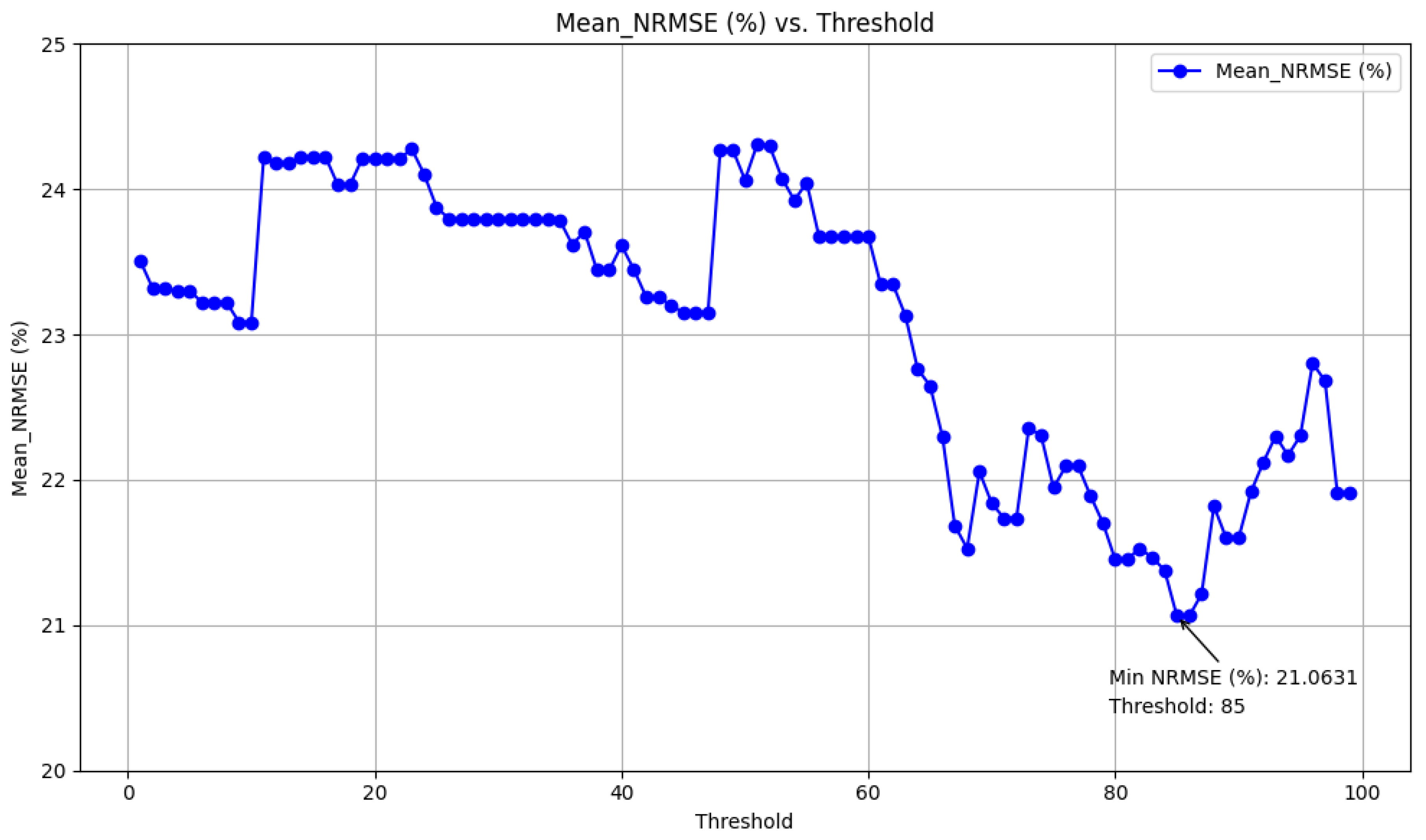

where j is the feature index, C is the number of classes, is the number of samples in class c, is the mean of the feature j in class c, is the overall mean of the feature j, and is the standard deviation of the feature j in class c. A higher Fisher score indicates a feature with greater discriminative power. To identify the most effective features, two indicators are used simultaneously to measure feature quality. The features are divided into 100 parts based on both indicators. Any feature that meets the criteria for at least one of the indicators is included in the final table. The result is shown in Figure 7.

Figure 7. Finding the best threshold (Chi-squared Test and Fisher score).

The figure indicates that the minimum NRMSE value of 21.0631% is achieved when features are selected with a threshold greater than 85.
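The Fisher score itself can be computed as in the following sketch, which uses the population variance within each class. The function name `fisher_score` and the toy data are assumptions; how the continuous power output was mapped to classes in the study is not stated in the text, so the class labels here are given directly.

```python
import numpy as np

def fisher_score(feature, labels):
    """Between-class scatter divided by within-class scatter for one feature."""
    overall_mean = feature.mean()
    between = 0.0
    within = 0.0
    for c in np.unique(labels):
        values = feature[labels == c]
        between += len(values) * (values.mean() - overall_mean) ** 2
        within += len(values) * values.var()    # population variance in class c
    return between / within

# Hand-checkable case: class means 2 and 6, overall mean 4, class variances 1.
feature = np.array([1.0, 3.0, 5.0, 7.0])
labels = np.array([0, 0, 1, 1])
score = fisher_score(feature, labels)           # (2*4 + 2*4) / (2*1 + 2*1)

# A feature that does not separate the classes scores lower.
mixed = np.array([1.0, 7.0, 3.0, 5.0])
score_mixed = fisher_score(mixed, labels)
print(score, score_mixed)
```

Features whose values cluster tightly within each class and differ between classes obtain the highest scores.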

Thus, by identifying the optimal threshold for each of the four methods, the most effective features were selected according to each method’s criteria.

6. Results and Discussion

In this section, the features selected by each feature-selection method are utilized to forecast production capacity in the region. The results from each method are compared to evaluate their effectiveness.

For forecasting, two months of data from 20 January 2022 to 20 March 2022 are used as the training set, and the subsequent week serves as the test set. After each prediction, all the data are shifted by one week, and the prediction process is repeated. This procedure is carried out for 52 weeks to cover the entire year, allowing us to assess the method’s effectiveness with a high degree of certainty. As previously mentioned, the data have an hourly granularity.
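The rolling evaluation scheme can be written down as index arithmetic on the hourly series. The sketch below assumes that the training window covers 20 January through 20 March 2022 inclusive (60 days, so that the first test week begins on 21 March) and that the window keeps a fixed length while sliding; the function name `rolling_weekly_splits` is hypothetical.

```python
from datetime import date

HOURS_PER_DAY = 24
HOURS_PER_WEEK = 7 * HOURS_PER_DAY

# Length of the first training window, counting both end dates (assumption).
train_days = (date(2022, 3, 21) - date(2022, 1, 20)).days
TRAIN_HOURS = train_days * HOURS_PER_DAY

def rolling_weekly_splits(n_train_hours=TRAIN_HOURS, n_weeks=52):
    """Yield (train, test) index ranges, shifting both by one week each step."""
    for week in range(n_weeks):
        start = week * HOURS_PER_WEEK
        train = range(start, start + n_train_hours)
        test = range(start + n_train_hours,
                     start + n_train_hours + HOURS_PER_WEEK)
        yield train, test

splits = list(rolling_weekly_splits())
first_train, first_test = splits[0]
last_train, last_test = splits[-1]
print(len(splits), len(first_train), len(first_test), last_test[-1] + 1)
```

Under these assumptions, each training window contains 1440 hourly samples, each test window contains 168, and the 52 repetitions require 10,176 consecutive hours of data in total.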

6.1. Forecasting Using Selected Features by the Pearson Method

By applying the Pearson method with a threshold of 0.21, features that met this criterion were selected. From the 94 available features, 48 features were identified as relevant. These selected features are listed in Table 7. As a guide, ugrd_10m1 is related to the east–west wind speed at measurement point 1 and vgrd_10m3 is related to the north–south wind speed at measurement point 3.

Table 7. Selected features with Pearson method.

Using wind speed data from these selected areas as features, regional power production was predicted. Feature selection decreased the NRMSE from 5.69% to 5% and reduced the number of features employed for forecasting from 94 to 48. Furthermore, the average elapsed time for one-week forecasting fell from 410.68 s to 348.96 s, reflecting improved computational efficiency under consistent conditions on the same computer.

Effective feature selection therefore improves predictive accuracy while also reducing computational cost. With fewer input features, the forecasting model becomes more streamlined, which facilitates quicker model updates and better responsiveness in dynamic forecasting scenarios.

6.2. Forecasting Using Selected Features by the Spearman Method

By utilizing the Spearman method with a threshold of 0.4, features that satisfied this criterion were identified. Out of the 94 available features, 5 were deemed relevant. These selected features are presented in Table 8.

Table 8. Selected features with Spearman method.

With the Spearman method, feature selection reduces the NRMSE from 5.69% to 3.92%. The number of features used for forecasting decreases from 94 to 5, and the average elapsed time for one-week forecasting decreases from 410.68 s to 235.06 s under identical conditions on the same computer.

6.3. Forecasting Using Selected Features by the Mutual Information Method

By applying the mutual information method with a threshold of 0.195, features that met this criterion were selected. From the 94 available features, 42 were identified as relevant. These selected features are listed in Table 9.

Table 9. Selected features with mutual information method.

With the mutual information feature-selection method, predictive accuracy is enhanced, reducing the NRMSE from 5.69% to 4.87%. Additionally, the number of features utilized for forecasting declines from 94 to 42, and the average time required for one-week forecasting drops from 410.68 s to 311.94 s under identical conditions on the same computer.

6.4. Forecasting Using Selected Features by the Chi-Squared Test and Fisher Score Method

By utilizing the Chi-squared test and Fisher score method with a threshold of 85, 19 relevant features were identified out of the 94 available. These features met the specified criterion and are detailed in Table 10.

Table 10. Selected features with Chi-squared test and Fisher score method.

Using this method decreases the NRMSE from 5.69% to 4.43%. The number of features used for forecasting drops from 94 to 19, and the average time required for one-week forecasting decreases from 410.68 s to 289.63 s, all under the same conditions and on the same computer.

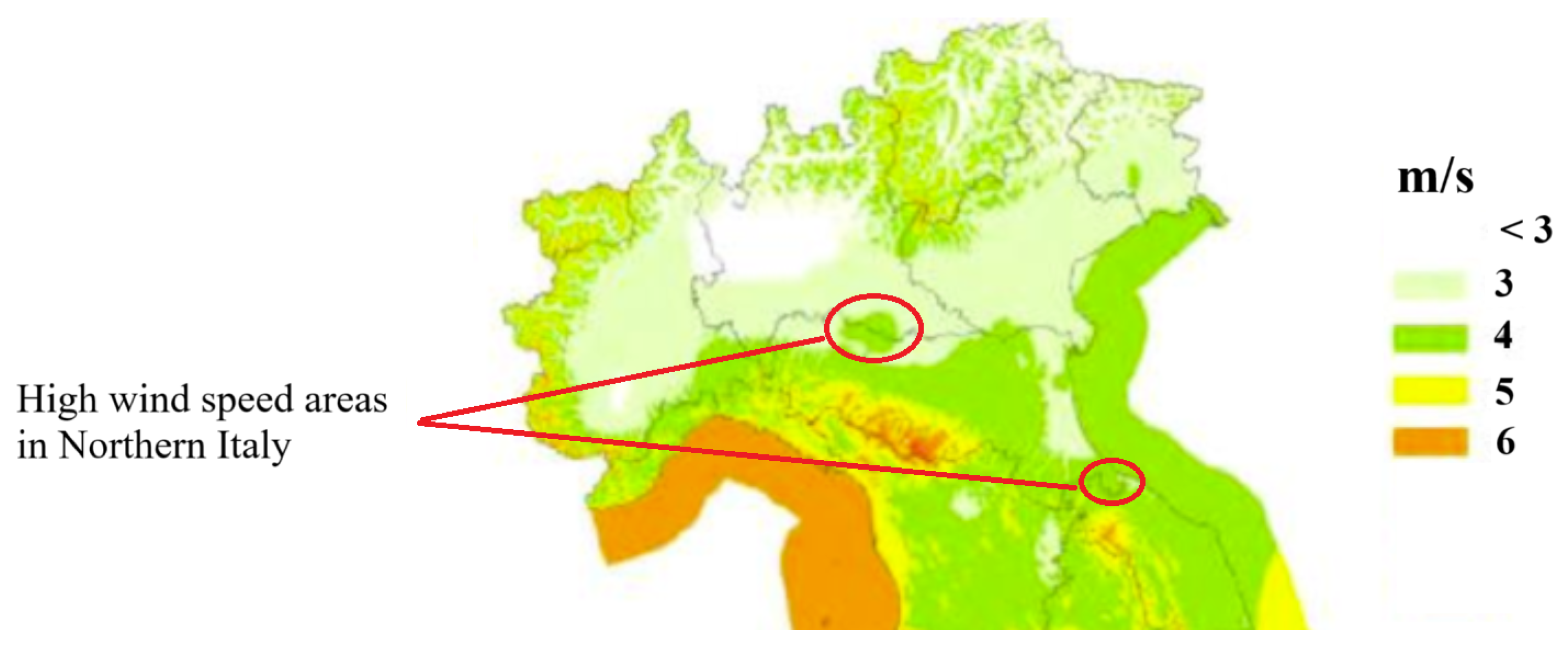

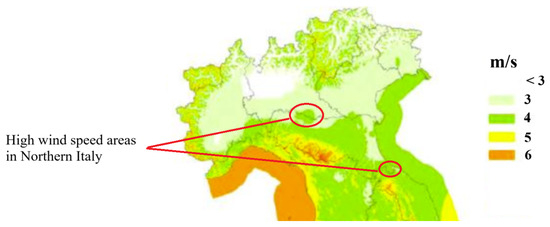

The results of all methods are presented in Table 11. Based on these results, Spearman's method demonstrates superior performance among the four evaluated methods: it selects the fewest features (5) and achieves the lowest NRMSE (3.92%) and the shortest prediction time (235.06 s) for the study area. Consequently, Spearman's method is highly efficient and effective in feature selection for this case study. The cities selected by this method include Piacenza, Rimini, Cremona, and Mantova, whose locations are shown in Figure 8. The figure shows that the average wind speed (at 75 m) is high in the regions near those selected cities.
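To make the comparison easier to follow, the figures reported in Sections 6.1 to 6.4 can be collected and converted into relative reductions with respect to the baseline without feature selection. The sketch below uses only the values quoted in the text; the dictionary layout and the helper `relative_reduction` are illustrative choices.

```python
# Values as reported in Sections 6.1-6.4 (baseline: all 94 features).
baseline = {"features": 94, "nrmse": 5.69, "time_s": 410.68}
results = {
    "Pearson": {"features": 48, "nrmse": 5.00, "time_s": 348.96},
    "Spearman": {"features": 5, "nrmse": 3.92, "time_s": 235.06},
    "Mutual information": {"features": 42, "nrmse": 4.87, "time_s": 311.94},
    "Chi-squared and Fisher": {"features": 19, "nrmse": 4.43, "time_s": 289.63},
}

def relative_reduction(before, after):
    """Reduction of a quantity as a percentage of its baseline value."""
    return 100.0 * (before - after) / before

summary = {
    name: {
        "nrmse_reduction_pct": relative_reduction(baseline["nrmse"], r["nrmse"]),
        "time_reduction_pct": relative_reduction(baseline["time_s"], r["time_s"]),
    }
    for name, r in results.items()
}
best = min(results, key=lambda name: results[name]["nrmse"])

for name, s in summary.items():
    print(f"{name}: NRMSE -{s['nrmse_reduction_pct']:.1f}%, "
          f"time -{s['time_reduction_pct']:.1f}%")
print("Lowest NRMSE:", best)
```

Expressed this way, the Spearman selection lowers the NRMSE by roughly 31% and the one-week forecasting time by roughly 43% relative to the baseline, which is the largest improvement of the four methods on both measures.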

Table 11. Comparison of feature-selection method results.

Figure 8. Wind speed at selected areas by Spearman method.

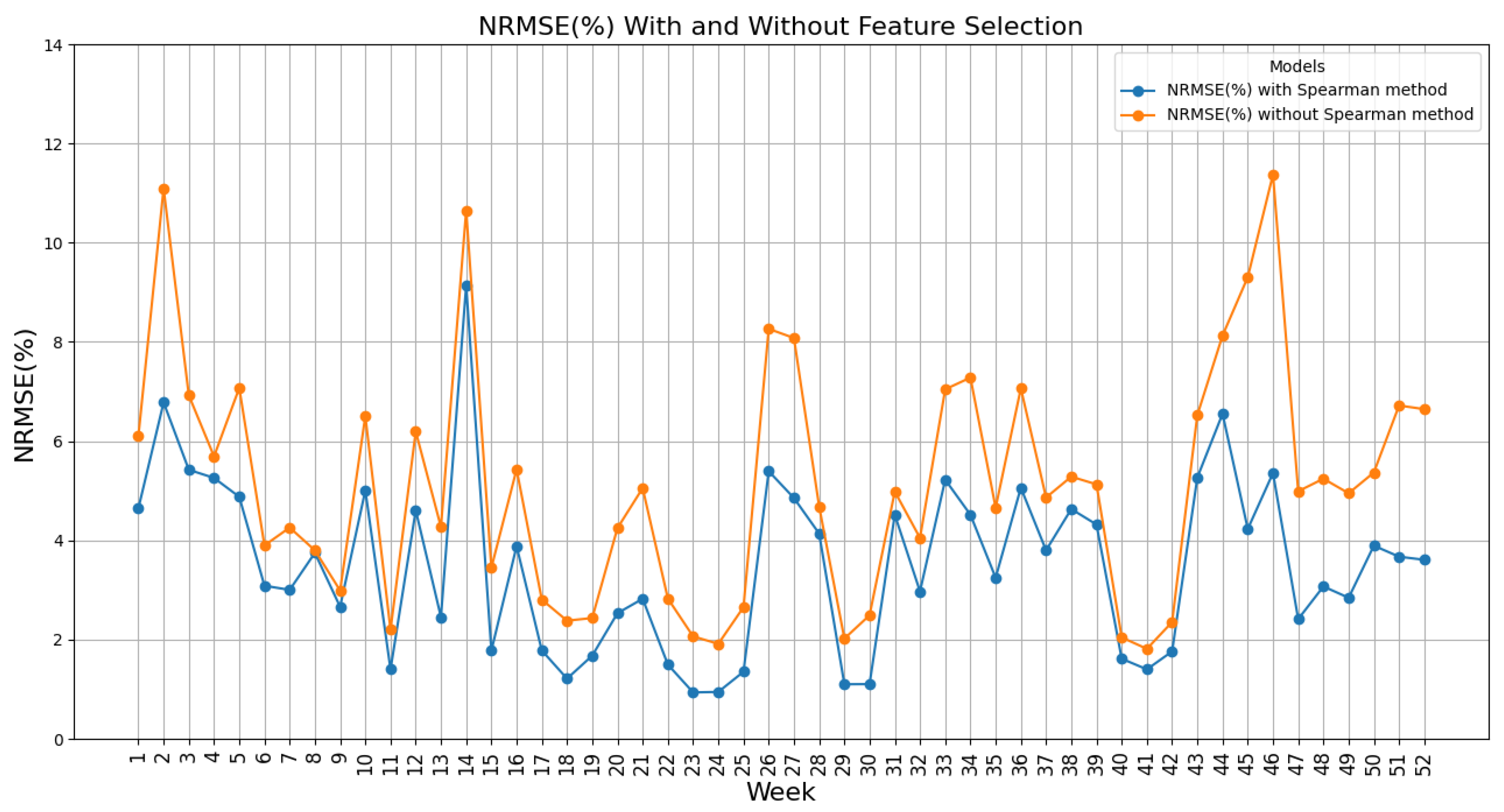

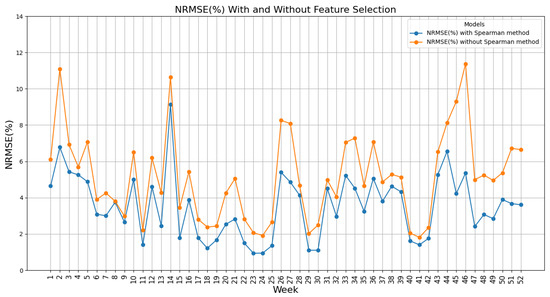

Figure 9 presents the NRMSE of the weekly forecasts. With Spearman's method, the weekly NRMSE ranges between 0.94% and 9.13%, whereas without feature selection it ranges between 1.82% and 11.37%.

Figure 9. Comparison of NRMSE (%): with and without feature selection (Spearman method).

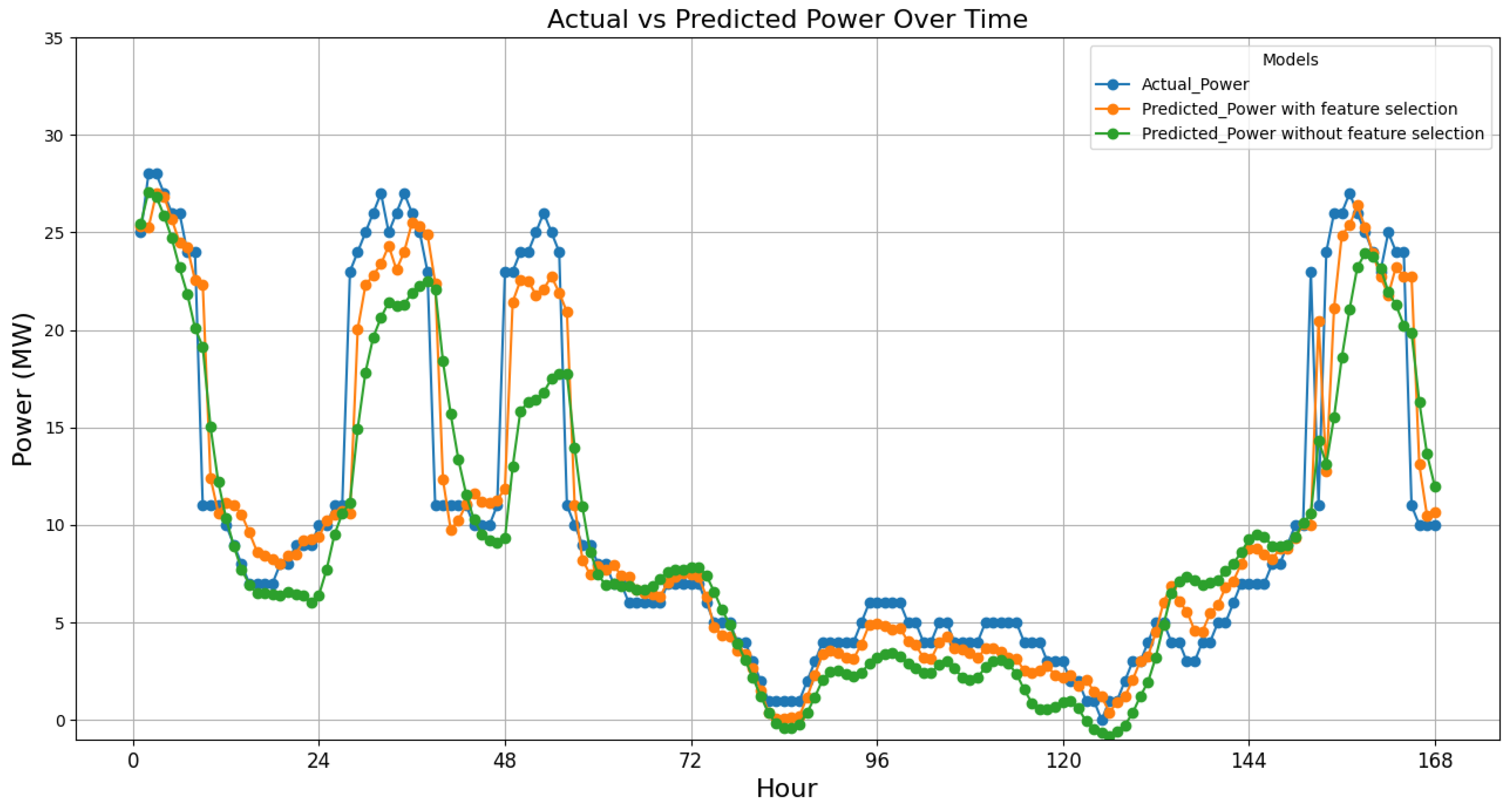

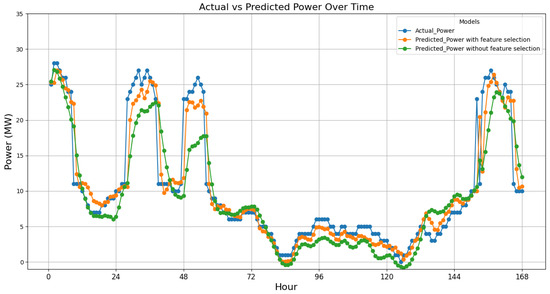

To demonstrate the effect of improving forecasting accuracy, the actual and forecasted data for a week from 21 March 2022 to 27 March 2022 (168 h) were compared in both cases: with and without feature selection. This comparison is illustrated in Figure 10.

Figure 10. Comparison of actual power and predicted power by LSTM method with and without feature selection.

Figure 10 clearly shows that incorporating effective feature selection has notably increased the prediction accuracy. Combining LSTM for forecasting and Spearman for feature selection yields the best overall performance for this study area and optimizes both performance and efficiency, making it the most advantageous combination among the tested methods. This efficiency is crucial for large datasets and real-time applications where quick and accurate predictions are necessary.

7. Conclusions

This study proposes an advanced methodology for enhancing regional wind power forecasting through the integration of machine-learning techniques and feature-selection methods. By systematically evaluating several machine-learning models—LSTM, ANN, SVM, CNN, and ELM—within the context of northern Italy, LSTM emerged as the most effective model for regional forecasting. Four distinct feature-selection methods (Pearson correlation, Spearman correlation, mutual information, and the Chi-squared test with Fisher score) were comprehensively investigated, demonstrating that Spearman correlation was the most efficient for this dataset.

The key advantage of regional evaluation combined with feature selection, compared to existing approaches in the literature, lies in its ability to optimize prediction accuracy and computational efficiency in regions with sparse or incomplete data on individual wind farms. Most existing methods focus on plant-specific predictions, which require detailed data for each wind farm. However, in regions such as northern Italy, where such detailed data are often unavailable, regional forecasting offers a practical alternative. By utilizing meteorological data from multiple stations, corresponding to province capital cities, and applying effective feature-selection techniques, this approach not only improved forecasting accuracy but also significantly reduced the number of features required for reliable predictions (from 94 to 5 with the Spearman method). This leads to more efficient computations and makes the method applicable to other regions facing similar data constraints.

Furthermore, this study demonstrates that regional forecasting, when optimized with appropriate machine-learning models and feature selection, can bridge the gap between plant-specific forecasting and large-scale grid management. It provides a robust solution for grid operators to better manage renewable energy integration, balance supply and demand, and reduce uncertainty in power system operations. The reductions in NRMSE and computation time further highlight the practical contributions of this approach, enhancing both predictive performance and operational efficiency.

As a final remark, the Spearman correlation and the LSTM method proved to be the best-performing options for the northern region of Italy. However, if this approach were to be applied to another region, such as the south of Italy, the entire procedure of cross-validation and grid search would need to be repeated to identify the best ML method and the most effective feature-selection method, which may differ in that case. The mathematical justification that the Spearman correlation is the best choice for this test case is a numerical one, which is a common practice in the machine-learning community, where the best method is assessed by means of an exhaustive grid search based on a cross-validation approach.

In conclusion, the regional evaluation presented in this work offers substantial benefits over existing methods by addressing the challenges posed by limited plant-specific data, improving forecasting accuracy and optimizing computational resources. Future research could extend this methodology to other renewable energy sources and explore hybrid approaches to further enhance predictive accuracy and scalability.

Author Contributions

Conceptualization, N.T.; methodology, N.T.; software, N.T.; validation, N.T.; formal analysis, N.T.; investigation, N.T.; resources, N.T.; data curation, N.T.; writing—original draft preparation, N.T.; writing—review and editing, M.T.; visualization, N.T.; supervision, M.T.; project administration, M.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The power data used in this study are available on public platforms as referenced in the text. However, the meteorological data were purchased from the cited provider and are not publicly available.

Acknowledgments

We express our gratitude to i-EM s.r.l. for their support in this research.

Conflicts of Interest

The authors declare that no commercial or financial relationships were involved in the research that could be interpreted as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| ML | Machine Learning |

| LSTM | Long Short-Term Memory |

| ANNs | Artificial Neural Networks |

| SVMs | Support Vector Machines |

| CNNs | Convolutional Neural Networks |

| ELMs | Extreme-Learning Machines |

| SVR | Support Vector Regression |

| NRMSE | Normalized Root Mean Squared Error |

| RMSE | Root Mean Squared Error |

References

- Bilgen, S.; Kaygusuz, K.; Sari, A. Renewable energy for a clean and sustainable future. Energy Sources 2004, 26, 1119–1129.

- Shahzad, U. The Need For Renewable Energy Sources. ITEE J. Need Renew. Energy Sources 2012, 2, 1–12.

- Natarajan, V.; Karatampati, P. Survey on renewable energy forecasting using different techniques. In Proceedings of 2019 2nd International Conference on Power and Embedded Drive Control (ICPEDC), Chennai, India, 21–23 August 2019; pp. 349–354.

- Foley, A.M.; Leahy, P.G.; Marvuglia, A.; McKeogh, E.J. Current methods and advances in forecasting of wind power generation. Renew. Energy 2012, 37, 1–8.

- Zhang, Y.; Wang, J.; Wang, X. Review on probabilistic forecasting of wind power generation. Renew. Sustain. Energy Rev. 2014, 32, 255–270.

- Ren, Y.; Zhou, X.; Liu, J.; Xie, Y.; Li, J. A two-stage fuzzy nonlinear combination method for utmost-short-term wind speed prediction based on TS fuzzy model. J. Renew. Sustain. Energy 2023, 15, 0123456.

- Cui, M.; Zhang, J.; Wang, Q.; Krishnan, V.; Hodge, B.-M. A data-driven methodology for probabilistic wind power ramp forecasting. IEEE Trans. Smart Grid 2019, 10, 1326–1338.

- Liu, F.; Li, R.; Dreglea, A. Wind speed and power ultra short-term robust forecasting based on Takagi–Sugeno fuzzy model. Energies 2019, 12, 3551.

- Kurbatskii, V.G.; Sidorov, D.N.; Spiryaev, V.A.; Shramkov, A.A. On the neural network approach for forecasting of nonstationary time series on the basis of the Hilbert-Huang transform. Autom. Remote Control 2011, 72, 1405–1414.

- Li, L.L.; Zhao, X.; Tseng, M.L.; Tan, R.R. Short-term wind power forecasting based on support vector machine with improved dragonfly algorithm. J. Clean. Prod. 2020, 242, 118447.

- Acikgoz, H.; Yildiz, C.; Sekkeli, M. An extreme learning machine based very short-term wind power forecasting method for complex terrain. Energy Sources Part A Recovery Util. Environ. Eff. 2020, 42, 2715–2730.

- Chandran, V.; Patil, C.K.; Merline Manoharan, A.; Ghosh, A.; Sumithra, M.G.; Karthick, A.; Rahim, R.; Arun, K. Wind Power Forecasting Based on Time Series Model Using Deep Machine Learning Algorithms. Mater. Today 2021, 47, 115–126.

- Pei, M.; Ye, L.; Li, Y.; Luo, Y.; Song, X.; Yu, Y.; Zhao, Y. Short-term regional wind power forecasting based on spatial–temporal correlation and dynamic clustering model. Energy Rep. 2022, 8, 10786–10802.

- Zhao, Z.; Yun, S.; Jia, L.; Guo, J.; Meng, Y.; He, N.; Li, X.; Shi, J.; Yang, L. Hybrid VMD-CNN-GRU-based model for short-term forecasting of wind power considering spatio-temporal features. Eng. Appl. Artif. Intell. 2023, 121, 105982.

- Ozkan, M.B.; Pinar, K. Data mining-based upscaling approach for regional wind power forecasting: Regional statistical hybrid wind power forecast technique (RegionalSHWIP). IEEE Access 2019, 7, 171790–171800.

- Wang, Z.; Weisheng, W.; Bo, W. Regional wind power forecasting model with NWP grid data optimized. Front. Energy 2017, 7, 175–183.

- Eikeland, O.F.; Hovem, F.D.; Olsen, T.E.; Chiesa, M.; Bianchi, F.M. Probabilistic forecasts of wind power generation in regions with complex topography using deep learning methods: An Arctic case. Energy Convers. Manag. X 2022, 15, 100239.

- Yu, Y.X.; Yang, M.; Han, X.S.; Zhang, Y.M.; Ye, P.F. A Regional Wind Power Probabilistic Forecast Method Based on Deep Quantile Regression. IEEE Trans. Ind. Appl. 2021, 57, 4420–4427.

- Shao, H.; Xing, D.; Yingtao, J. A novel deep learning approach for short-term wind power forecasting based on infinite feature selection and recurrent neural network. J. Renew. Sustain. Energy 2018, 10, 043303.

- Liu, X.; Zhang, H.; Kong, X.; Lee, K.Y. Wind speed forecasting using deep neural network with feature selection. Neurocomputing 2020, 397, 393–403.

- Konstantinou, T.; Hatziargyriou, N. Regional wind power forecasting based on Bayesian feature selection. IEEE Trans. Power Syst. 2024, 1–12.

- Kong, X.; Zhang, G.; Liu, Y.; Liang, Y.; Kang, H.; Huang, Z. Wind speed prediction using reduced support vector machines with feature selection. Neurocomputing 2015, 169, 449–456.

- Sun, H.; Ye, C.; Wan, C.; Yao, H.; Zhang, K. Data-Driven Day-ahead Probabilistic Forecasting of Wind Power Based on Features Sensitivity Analysis and Meteorological Scenario Classification. In Proceedings of the 2023 IEEE Power & Energy Society General Meeting (PESGM), Orlando, FL, USA, 16–20 July 2023; pp. 1–5.

- Chen, H.; Xianfa, C. Photovoltaic power prediction of LSTM model based on Pearson feature selection. Energy Rep. 2021, 7, 1047–1054.

- Li, J.; Geng, D.; Zhang, P.; Meng, X.; Liang, Z.; Fan, G. Ultra-Short Term Wind Power Forecasting Based on LSTM Neural Network. In Proceedings of the 2019 IEEE 3rd International Electrical and Energy Conference (CIEEC), Beijing, China, 7–9 September 2019; pp. 1815–1818.

- Wharton, S.; Julie, K.L. Assessing atmospheric stability and its impacts on rotor-disk wind characteristics at an onshore wind farm. Wind Energy 2012, 15, 525–546.

- Carta, J.A.; Ramirez, P.; Velazquez, S. A review of wind speed probability distributions used in wind energy analysis: Case studies in the Canary Islands. Renew. Sustain. Energy Rev. 2009, 13, 933–955.

- Manwell, J.F.; McCowan, J.G.; Rogers, A.L. Wind energy explained: Theory, design and application. Wind. Eng. 2006, 30, 169.

- Meteorological Data Provided by the Italian Air Force—Areonautica Militare, 2023. Data Collected by the Italian Air Force and Made Available to the Public. Available online: https://www.meteoam.it/it/home (accessed on 12 April 2024).

- Transparency Platform of European Network of Transmission System Operators for Electricity, 2023. European Network of Transmission System Operators for Electricity. Available online: https://transparency.entsoe.eu/ (accessed on 12 April 2024).

- Yang, Y.; Lou, H.; Wu, J.; Zhang, S.; Gao, S. A survey on wind power forecasting with machine learning approaches. Neural Comput. Appl. 2024, 36, 12753–12773.

- Schmidhuber, J.; Hochreiter, S. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Bergstra, J.; Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 2012, 13, 281–305.

- Greff, K.; Srivastava, R.K.; Koutnik, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A Search Space Odyssey. IEEE Trans. Neural Networks Learn. Syst. 2016, 28, 2222–2232.

- Makarovskikh, T.; Abotaleb, M.; Albadran, Z.; Ramadhan, A.J. Hyper-parameter tuning for the long short-term memory algorithm. AIP Conf. Proc. 2023, 2977, 020097.

- Arlot, S.; Celisse, A. A survey of cross-validation procedures for model selection. Stat. Surv. 2010, 4, 40–79.

- Varma, S.; Simon, R. Bias in error estimation when using cross-validation for model selection. BMC Bioinform. 2006, 7, 91.

- Sabadus, A.; Blaga, R.; Hategan, S.-M.; Calinoiu, D.; Paulescu, E.; Mares, O.; Boata, R.; Stefu, N.; Paulescu, M.; Badescu, V. A cross-sectional survey of deterministic PV power forecasting: Progress and limitations in current approaches. Renew. Energy 2024, 226, 120385.

- Zheng, J.; Niu, Z.; Han, X.; Wu, Y.; Cui, X. Short-Term Wind Power Forecasting Based on Two-Stage Feature Selection. In Proceedings of the 2023 6th International Conference on Energy, Electrical and Power Engineering (CEEPE), Guangzhou, China, 12–14 May 2023; pp. 1181–1186.

- Kutlug Sahin, E.; Ipbuker, C.; Kavzoglu, T. Investigation of automatic feature weighting methods (Fisher, Chi-square and Relief-F) for landslide susceptibility mapping. Geocarto Int. 2017, 32, 956–977.

- Ghadimi, N.; Akbarimajd, A.; Shayeghi, H.; Abedinia, O. Application of a New Hybrid Forecast Engine with Feature Selection Algorithm in a Power System. Int. J. Ambient Energy 2019, 40, 494–503.

- Khandakar, A.; Chowdhury, M.E.H.; Kazi, M.K.; Benhmed, K.; Touati, F.; Al-Hitmi, M.; Gonzales, A.J.S.P. Machine Learning Based Photovoltaics (PV) Power Prediction Using Different Environmental Parameters of Qatar. Energies 2019, 12, 2782.

- Eskandari, A.; Nedaei, A.; Milimonfared, J.; Aghaei, M. A multilayer integrative approach for diagnosis, classification and severity detection of electrical faults in photovoltaic arrays. Expert Syst. Appl. 2024, 252, 124111.

- Eseye, A.T.; Lehtonen, M.; Tukia, T.; Uimonen, S.; Millar, R.J. Machine Learning Based Integrated Feature Selection Approach for Improved Electricity Demand Forecasting in Decentralized Energy Systems. IEEE Access 2019, 7, 91463–91475.

- Viet, D.T.; Phuong, V.V.; Duong, M.Q.; Tran, Q.T. Models for Short-Term Wind Power Forecasting Based on Improved Artificial Neural Network Using Particle Swarm Optimization and Genetic Algorithms. Energies 2020, 13, 2873.

- Demir-Kavuk, O.; Kamada, M.; Akutsu, T.; Knapp, E.W. Prediction using step-wise L1, L2 regularization and feature selection for small data sets with large number of features. BMC Bioinform. 2011, 12, 412.

- Omer, Z.M.; Shareef, H. Comparison of Decision Tree based Ensemble Methods for Prediction of Photovoltaic Maximum Current. Energy Convers. Manag. X 2022, 16, 100333.

- Saeys, Y.; Inza, I.; Larrañaga, P. A review of feature selection techniques in bioinformatics. Bioinformatics 2007, 23, 2507–2517.

- Liu, H.; Setiono, R. Incremental feature selection. Appl. Intell. 1998, 9, 217–230.

- Peng, H.; Long, F.; Ding, C. Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1226–1238.

- Osorio, G.J.; Matias, J.C.O.; Catalao, J.P.S. Short-term wind power forecasting using adaptive neuro-fuzzy inference system combined with evolutionary particle swarm optimization, wavelet transform and mutual information. Renew. Energy 2015, 75, 301–307.

- Sharifai, G.A.; Zainol, Z. Feature selection for high-dimensional and imbalanced biomedical data based on robust correlation based redundancy and binary grasshopper optimization algorithm. Genes 2020, 11, 717.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).