Application of Quantum Neural Network for Solar Irradiance Forecasting: A Case Study Using the Folsom Dataset, California

Abstract

1. Introduction

- To help fill the gap in the scientific literature on solar irradiance forecasting with QML predictive models.

- To assess the feasibility of a QML model for forecasting solar irradiance up to 3 h ahead.

- To evaluate different configurations of the QNN framework, investigating how the parameters that make up its structure affect the model's output.

- To investigate different optimization strategies and their impact on the model's output.

- To compare the performance of the QNN with that of classical ML counterparts.

2. Materials and Methods

2.1. Solar Irradiance

2.2. The Folsom Dataset

2.3. Classical Machine Learning Models

2.4. Qubits and Quantum Gates

2.5. Quantum Machine Learning

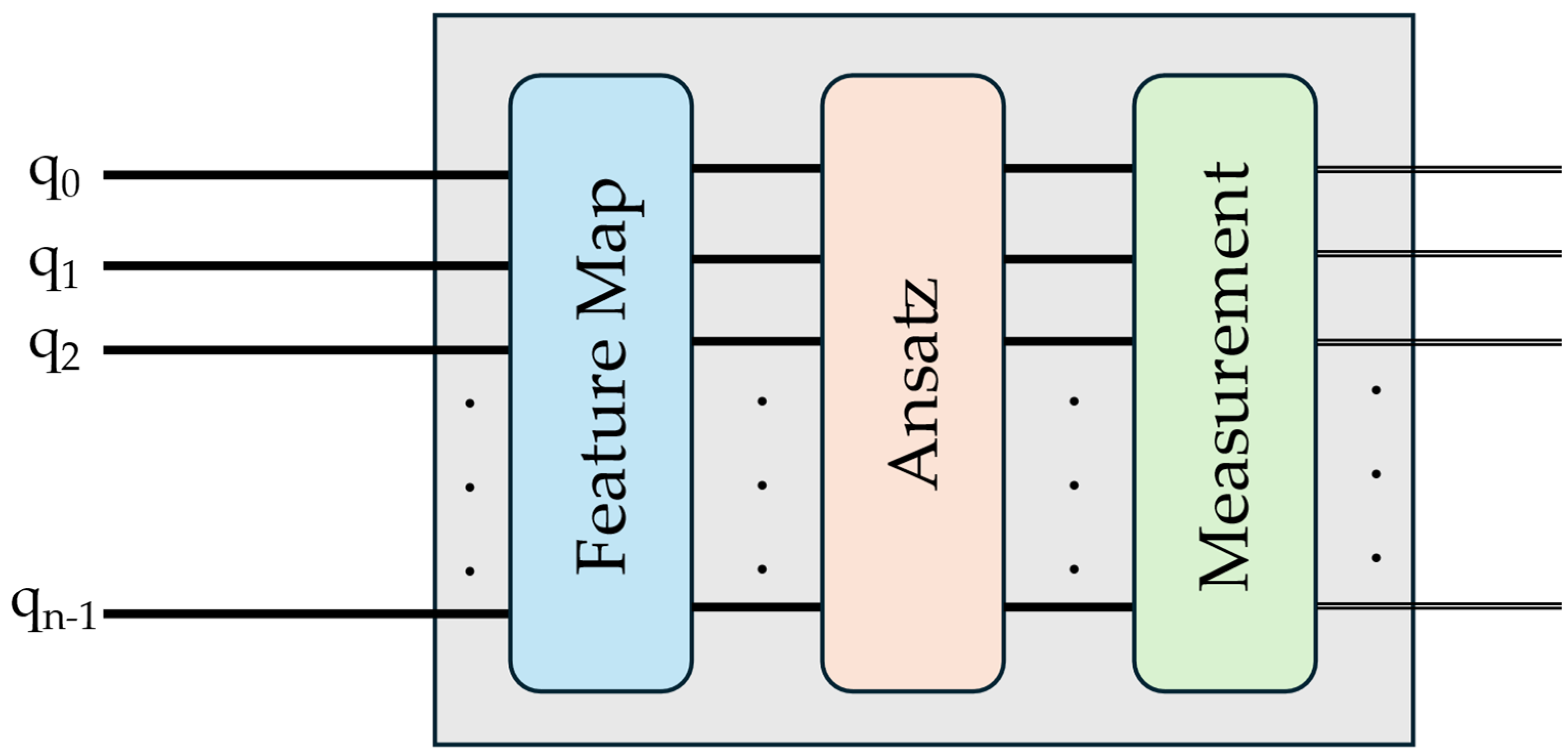

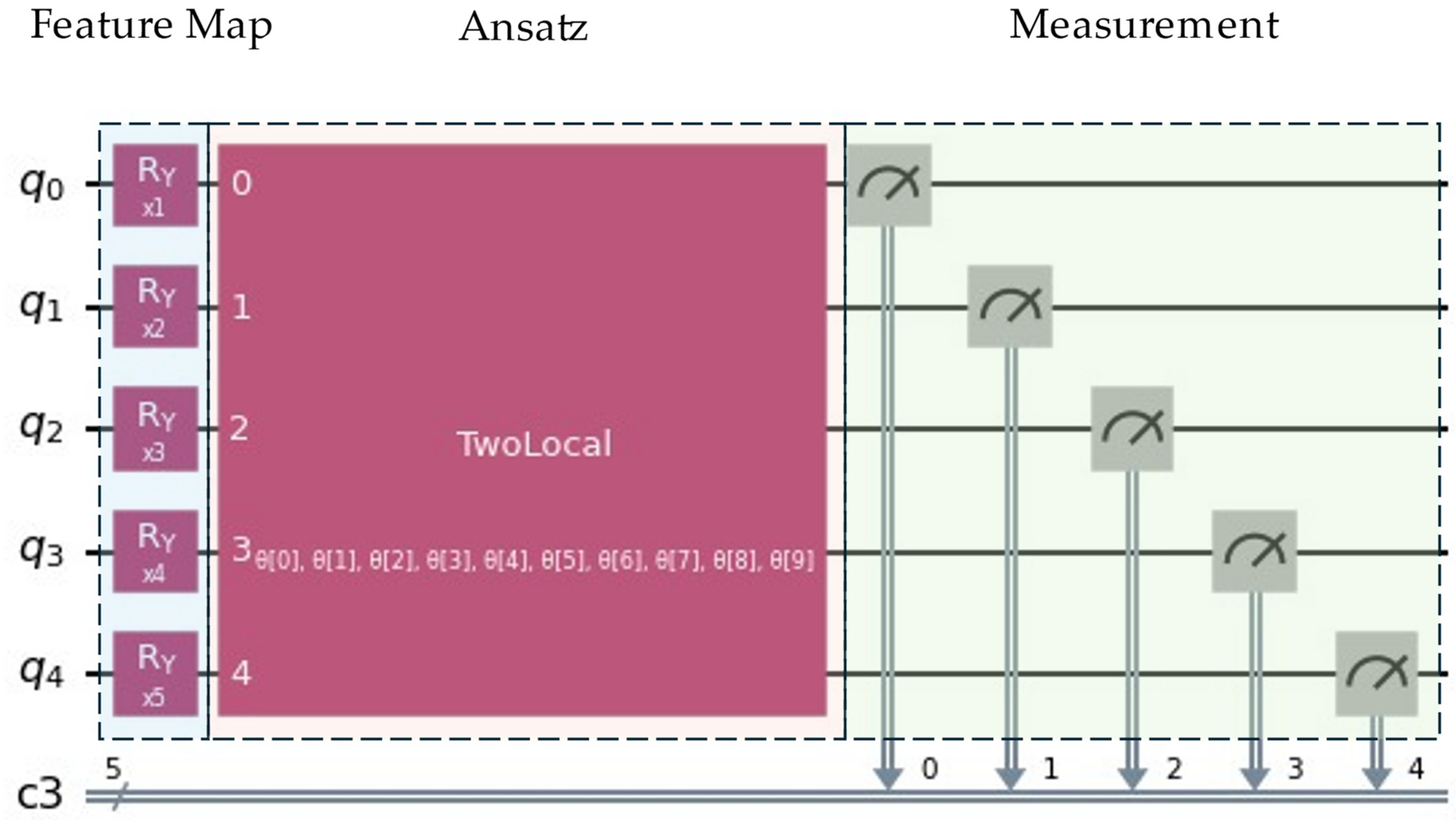

2.5.1. QNN Structure

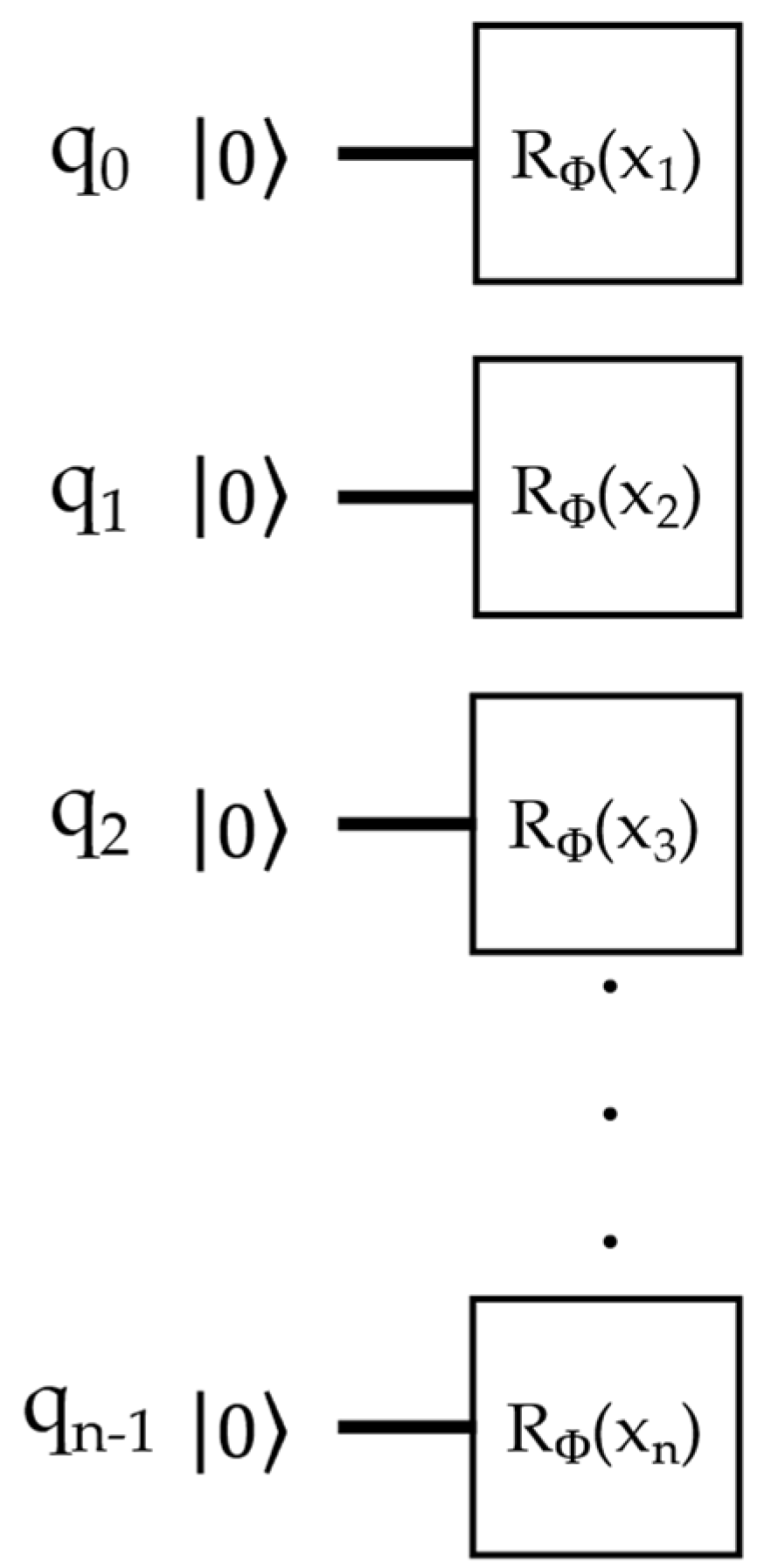

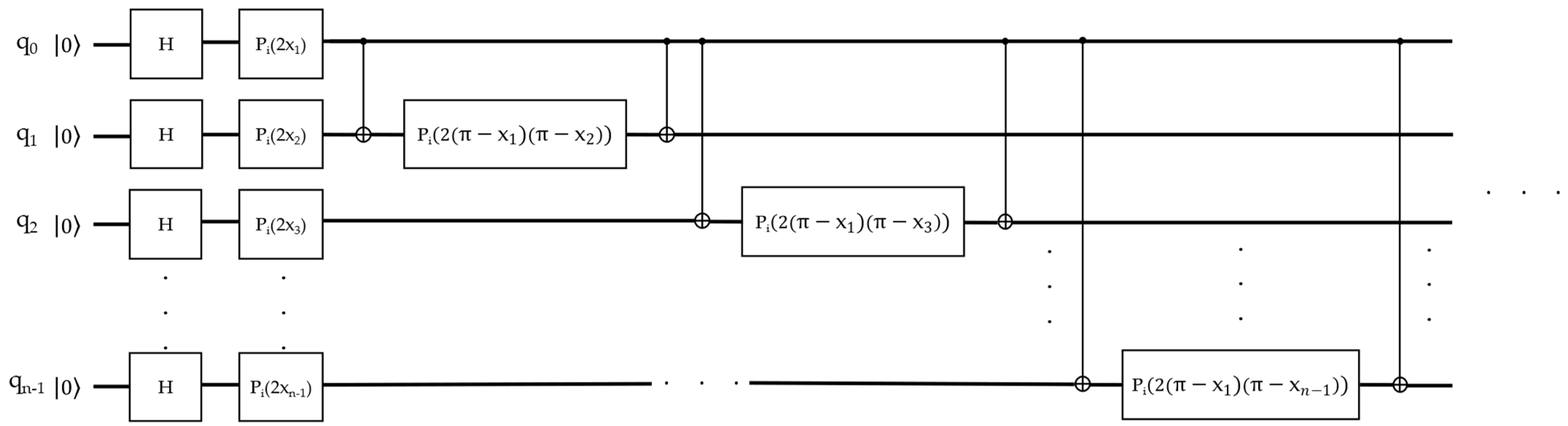

2.5.2. Feature Map

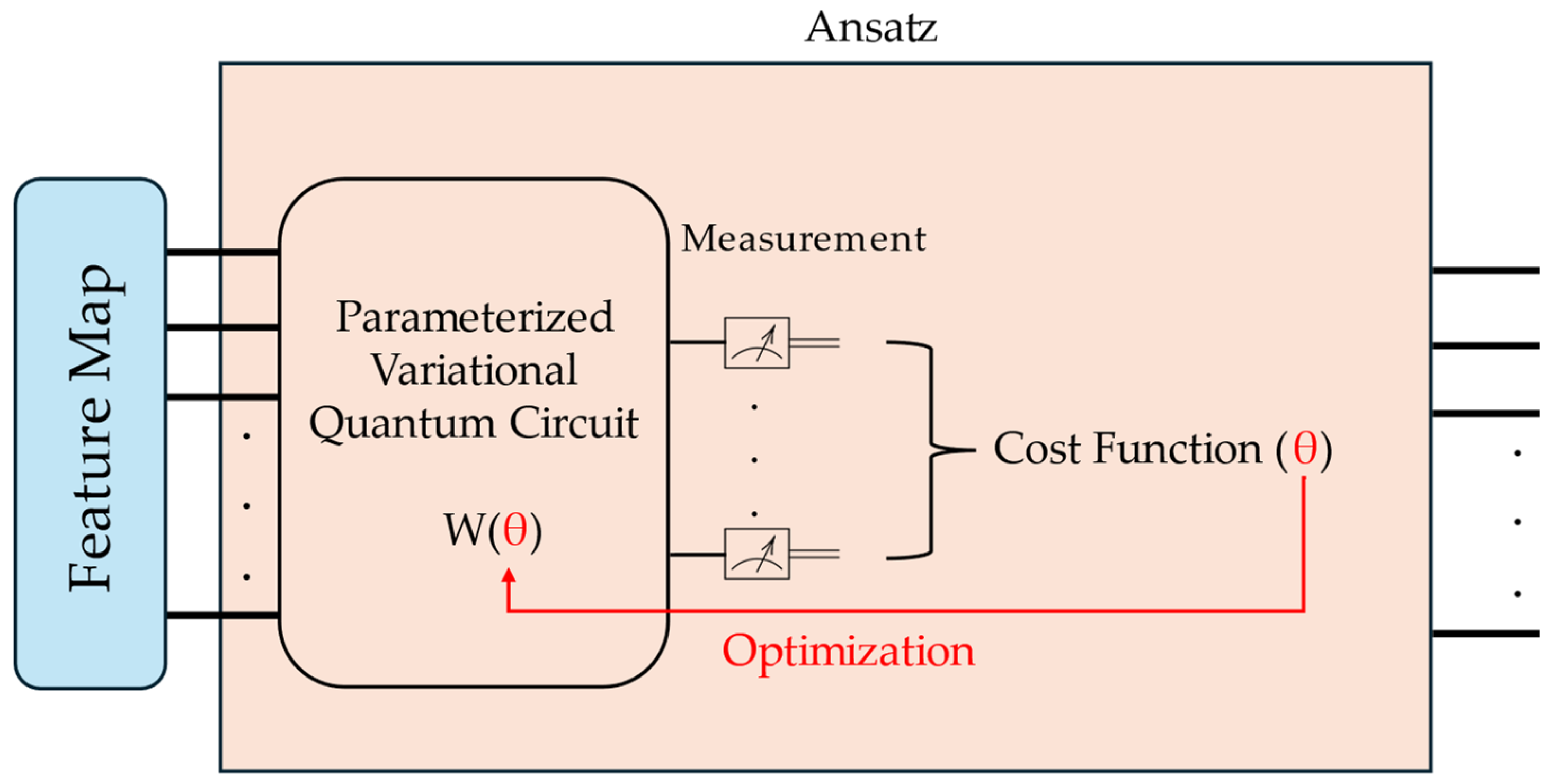

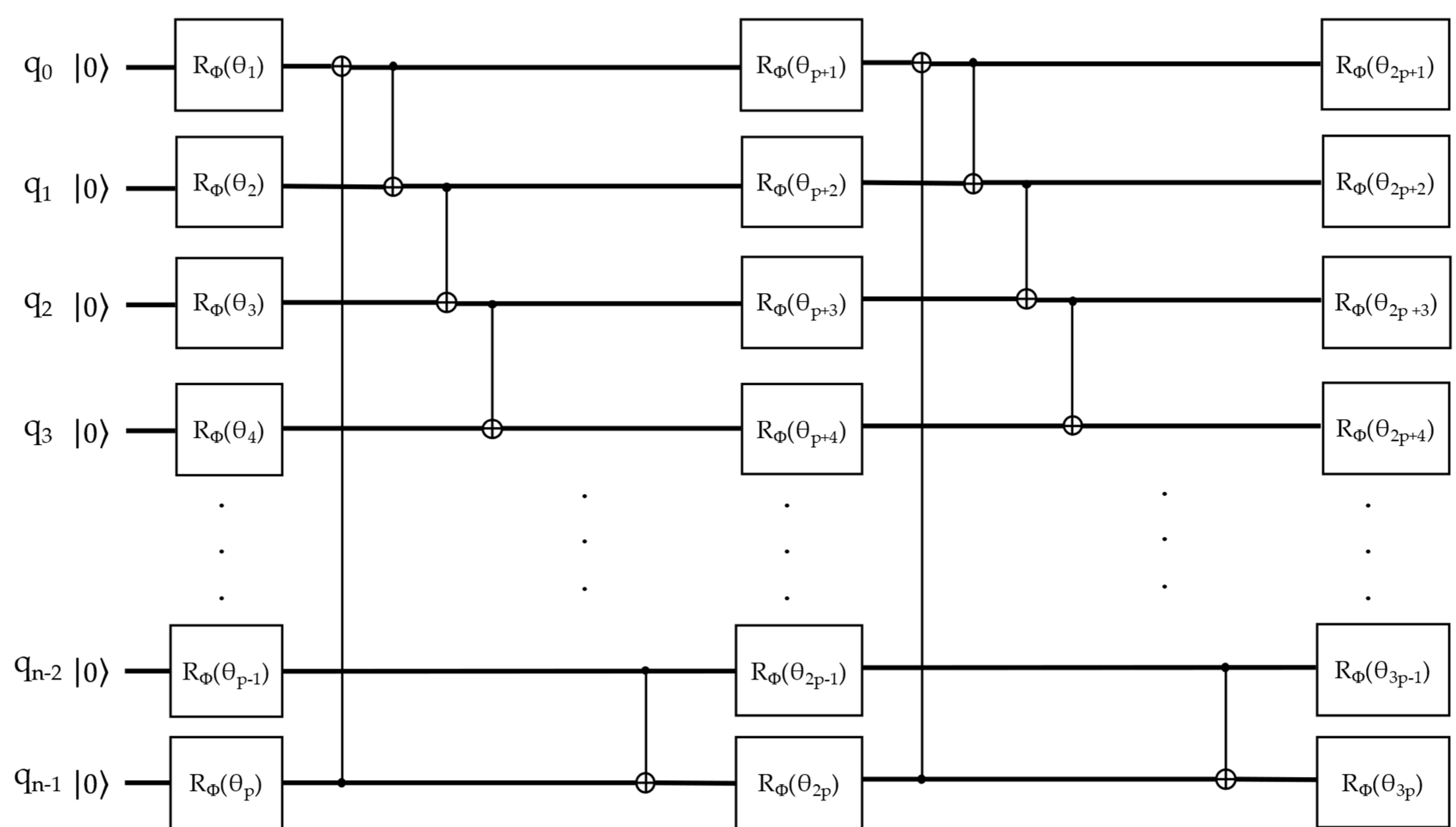

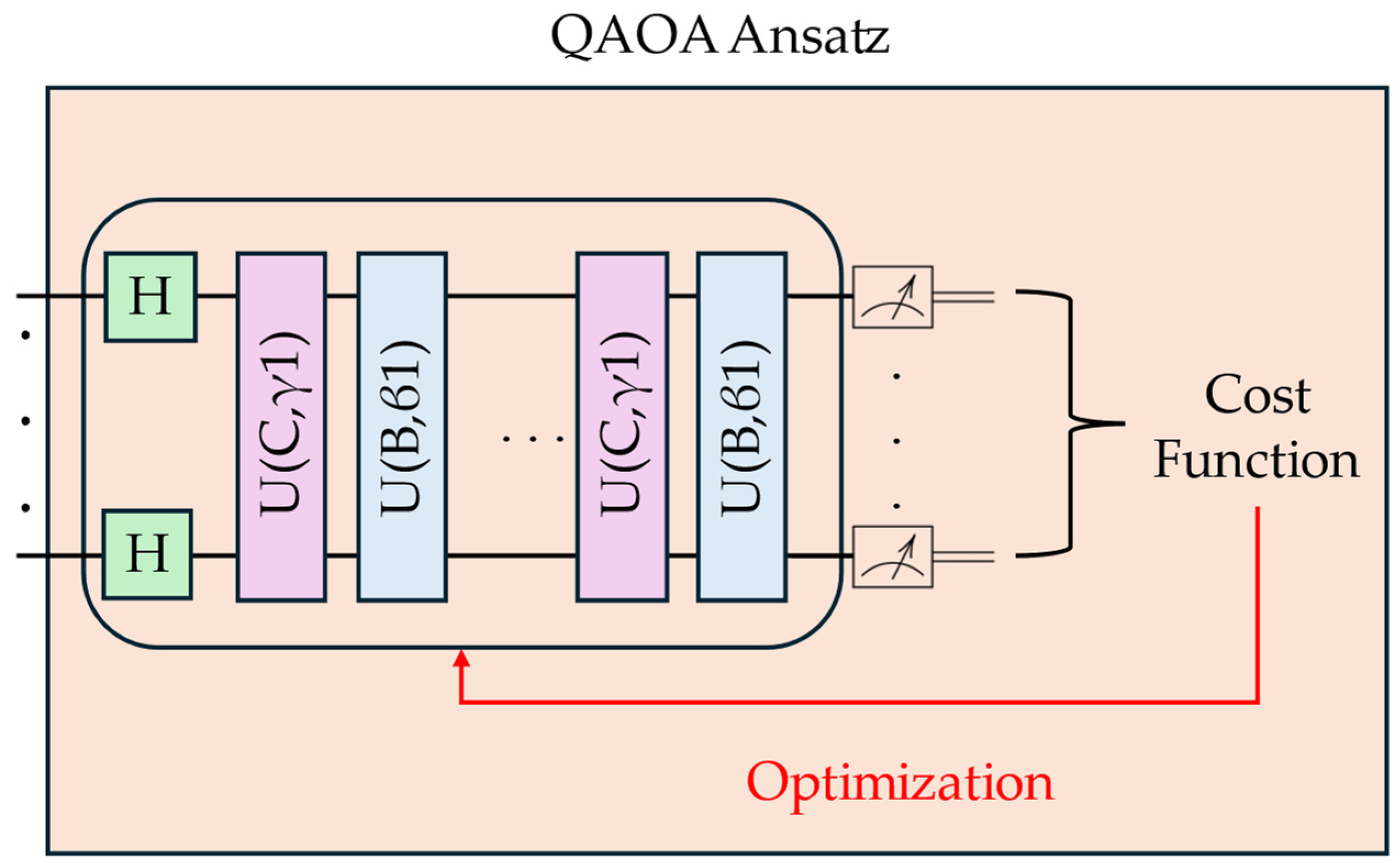
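The results tables report a Pauli Y feature map. Below is a minimal pure-Python sketch of the idea. It assumes that each of the five selected input features is loaded onto its own qubit by a Hadamard gate followed by a Y rotation RY(2x). The angle scale, the number of repetitions, and any entangling terms in the circuit actually used are not shown here and may differ. Qiskit offers this family of circuits as `PauliFeatureMap`, where the `paulis` argument selects the Pauli terms, but this sketch does not reproduce its exact conventions. The helper names are hypothetical.

```python
import math

# One qubit is stored as a pair of real amplitudes (a0, a1); H and RY keep them real.

def hadamard(state):
    """Apply the Hadamard gate H."""
    a0, a1 = state
    s = 1.0 / math.sqrt(2.0)
    return (s * (a0 + a1), s * (a0 - a1))

def ry(theta, state):
    """Apply RY(theta) = exp(-i * theta * Y / 2) = [[cos, -sin], [sin, cos]] of theta/2."""
    a0, a1 = state
    c, s = math.cos(theta / 2.0), math.sin(theta / 2.0)
    return (c * a0 - s * a1, s * a0 + c * a1)

def pauli_y_feature_map(features, scale=2.0):
    """Encode feature x_j on qubit j as RY(scale * x_j) H |0>.
    With single-qubit Y terms only there is no entanglement, so the register
    state is the tensor product of the returned single-qubit states."""
    return [ry(scale * x, hadamard((1.0, 0.0))) for x in features]

def expval_z(state):
    """Expectation value of Pauli Z: |a0|^2 - |a1|^2."""
    a0, a1 = state
    return a0 ** 2 - a1 ** 2

# Five hypothetical, already-scaled input features (one per qubit)
encoded = pauli_y_feature_map([0.2, 0.4, 0.1, 0.7, 0.5])
print([round(expval_z(q), 3) for q in encoded])
```

Because only single-qubit Y rotations are involved, each qubit's ⟨Z⟩ reduces to −sin(2x). This makes the encoding easy to check by hand.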

2.5.3. Ansatz

2.5.4. Optimization Algorithms
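The results compare COBYLA, L-BFGS-B, and QN-SPSA as classical optimizers of the circuit parameters. QN-SPSA extends simultaneous perturbation stochastic approximation (SPSA) with a quantum natural-gradient preconditioner. The sketch below shows only plain first-order SPSA, not QN-SPSA. It uses a hypothetical quadratic loss as a stand-in for the QNN training loss, and its gain constants are illustrative assumptions. The decay exponents 0.602 and 0.101 are the values commonly recommended for SPSA.

```python
import random

def spsa_minimize(loss, theta0, iterations=200, a=0.2, c=0.1, big_a=10.0, seed=0):
    """Plain SPSA (not QN-SPSA): two loss evaluations per step, whatever the number of parameters."""
    rng = random.Random(seed)
    theta = list(theta0)
    for k in range(iterations):
        a_k = a / (k + 1 + big_a) ** 0.602  # decaying step size
        c_k = c / (k + 1) ** 0.101          # decaying perturbation size
        delta = [rng.choice((-1.0, 1.0)) for _ in theta]  # random +/-1 direction for all parameters at once
        plus = [t + c_k * d for t, d in zip(theta, delta)]
        minus = [t - c_k * d for t, d in zip(theta, delta)]
        slope = (loss(plus) - loss(minus)) / (2.0 * c_k)  # directional finite difference
        # Gradient estimate g_i = slope / delta_i, followed by a gradient-descent step
        theta = [t - a_k * slope / d for t, d in zip(theta, delta)]
    return theta

# Hypothetical stand-in for the QNN training loss, with its minimum at (0.5, -1.0)
def toy_loss(params):
    return (params[0] - 0.5) ** 2 + (params[1] + 1.0) ** 2

best = spsa_minimize(toy_loss, [0.0, 0.0])
print(best, toy_loss(best))
```

SPSA needs only two loss evaluations per iteration, regardless of the number of parameters. For this reason, it and its variants are attractive when each evaluation means running a quantum circuit.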

2.5.5. Measurement
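In estimator-style QNNs, the model output is an expectation value that is estimated from repeated measurements. The following minimal sketch makes several assumptions. It uses a hypothetical one-qubit model: a Hadamard gate, the feature rotation RY(2x), and one trainable rotation RY(θ) standing in for the ansatz. Pauli Z is measured in the computational basis. The exact expectation is −sin(2x + θ), and the shot-based estimate approaches it as the number of shots grows.

```python
import math
import random

def qnn_single_qubit_state(x, theta):
    """Hypothetical one-qubit QNN: H, then RY(2x) (feature map), then RY(theta) (ansatz).
    Consecutive RY gates add their angles, so the circuit equals RY(2x + theta) H |0>."""
    angle = 2.0 * x + theta
    c, s = math.cos(angle / 2.0), math.sin(angle / 2.0)
    h = 1.0 / math.sqrt(2.0)               # H|0> = (h, h)
    return (h * (c - s), h * (s + c))      # RY(angle) applied to (h, h)

def exact_expval_z(state):
    a0, a1 = state
    return a0 ** 2 - a1 ** 2               # equals -sin(2x + theta) for this circuit

def sampled_expval_z(state, shots=20000, seed=0):
    """Estimate <Z> from simulated computational-basis measurements."""
    rng = random.Random(seed)
    p0 = state[0] ** 2                     # Born rule: probability of outcome 0
    n0 = sum(1 for _ in range(shots) if rng.random() < p0)
    return (2 * n0 - shots) / shots        # outcome 0 counts as +1, outcome 1 as -1

state = qnn_single_qubit_state(x=0.4, theta=0.3)
print(exact_expval_z(state), sampled_expval_z(state))
```

The statistical error of the estimate is √((1 − ⟨Z⟩²)/shots), so halving it costs four times as many shots.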

3. Results

3.1. Parameter Selection

- To retain both the statistical features, which carry temporal information, and the sky-image features, which carry spatial information.

- To keep the problem complexity low enough for quantum machine learning simulation.

3.2. Feature Map Quantum Circuit Configuration Selection

3.3. Ansatz Quantum Circuit Configuration Selection

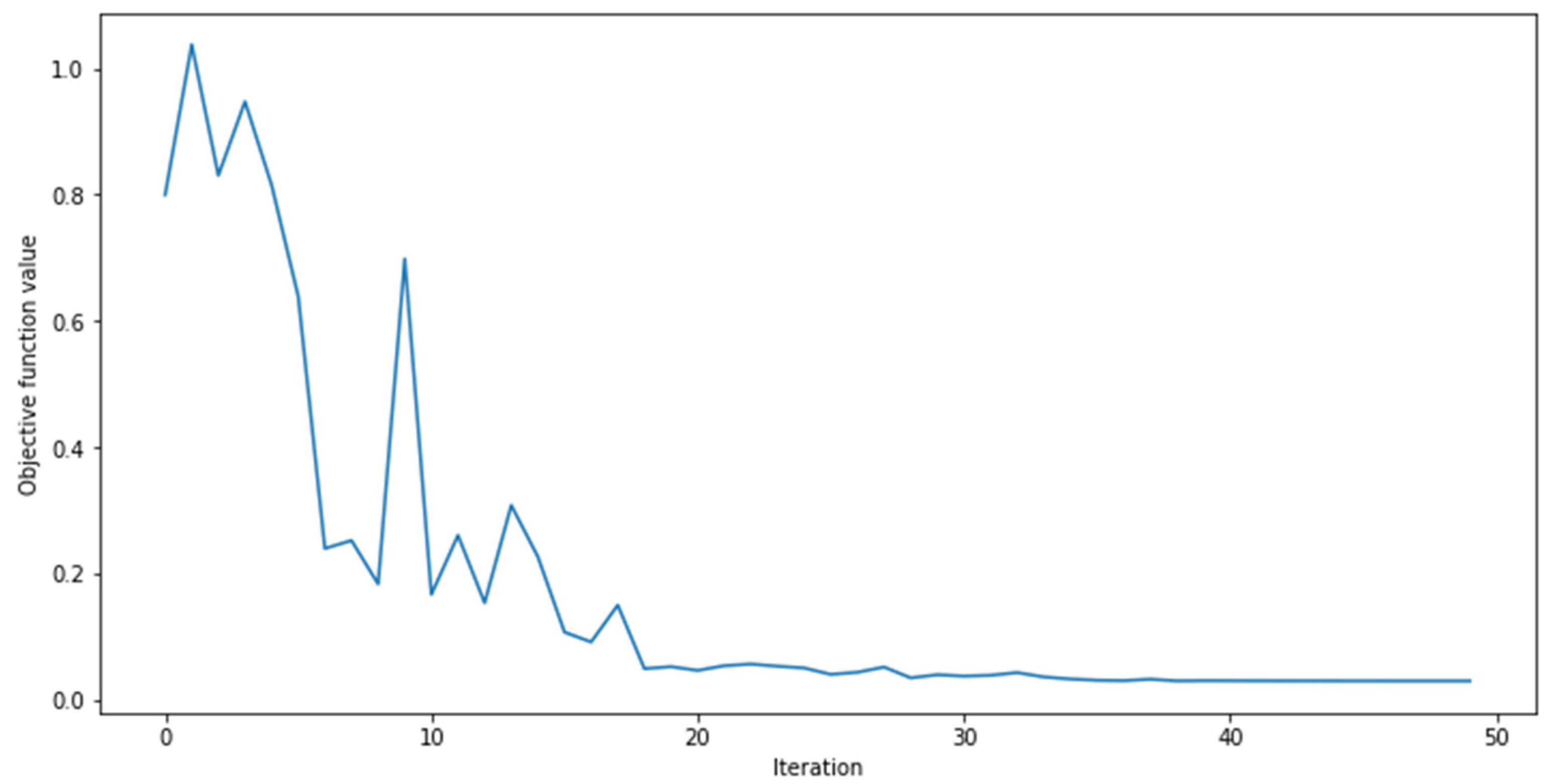

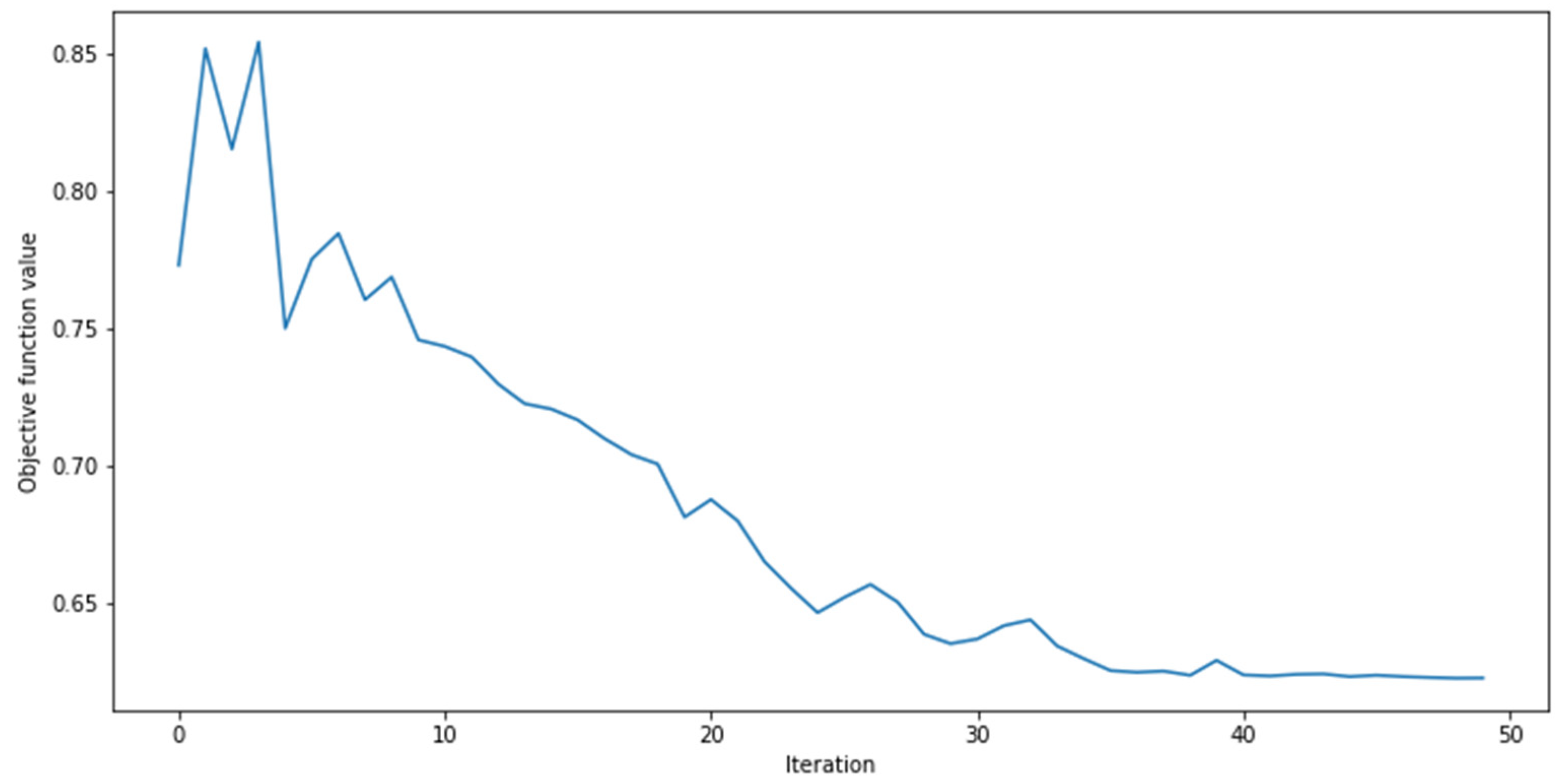

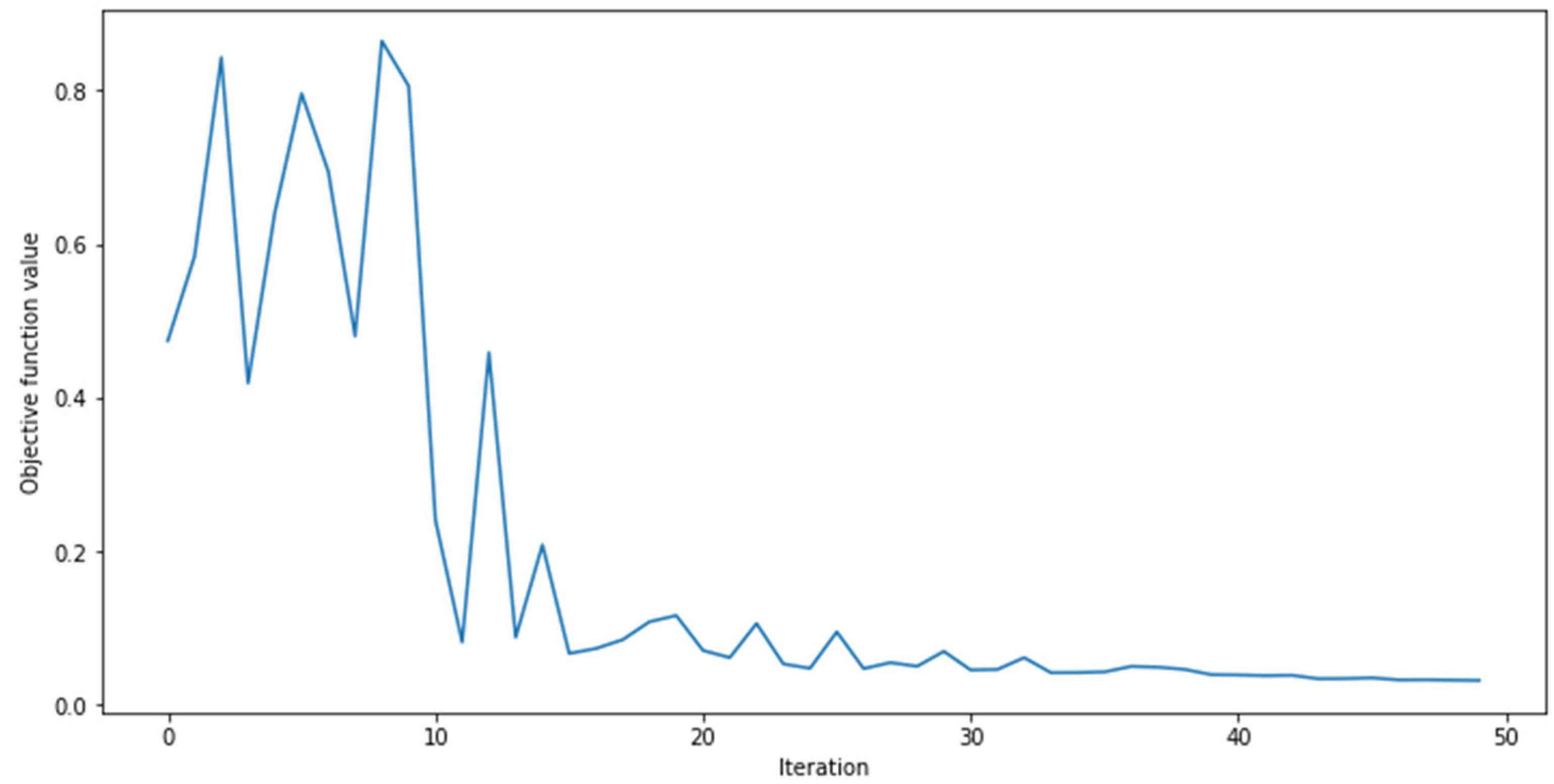

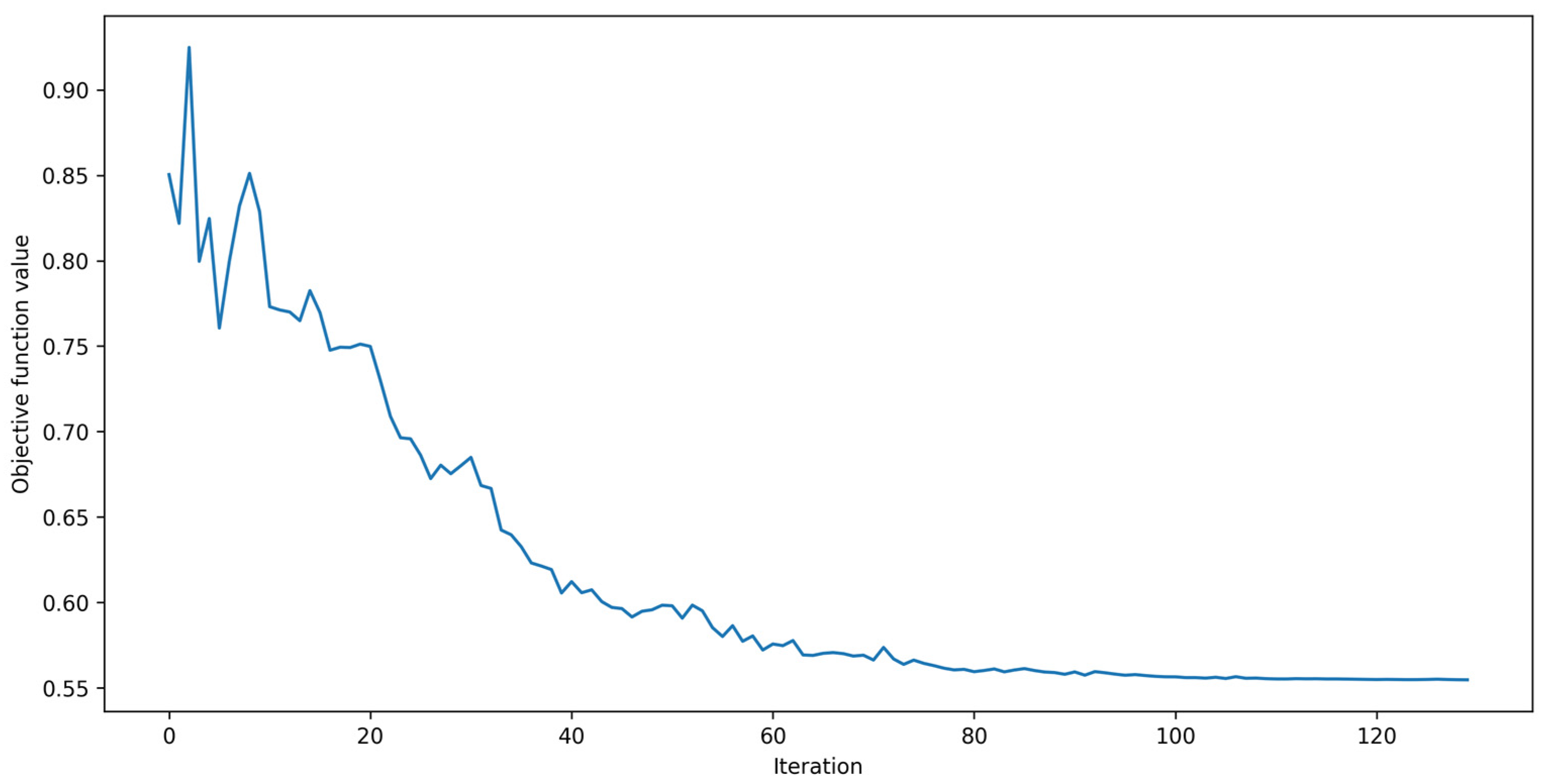

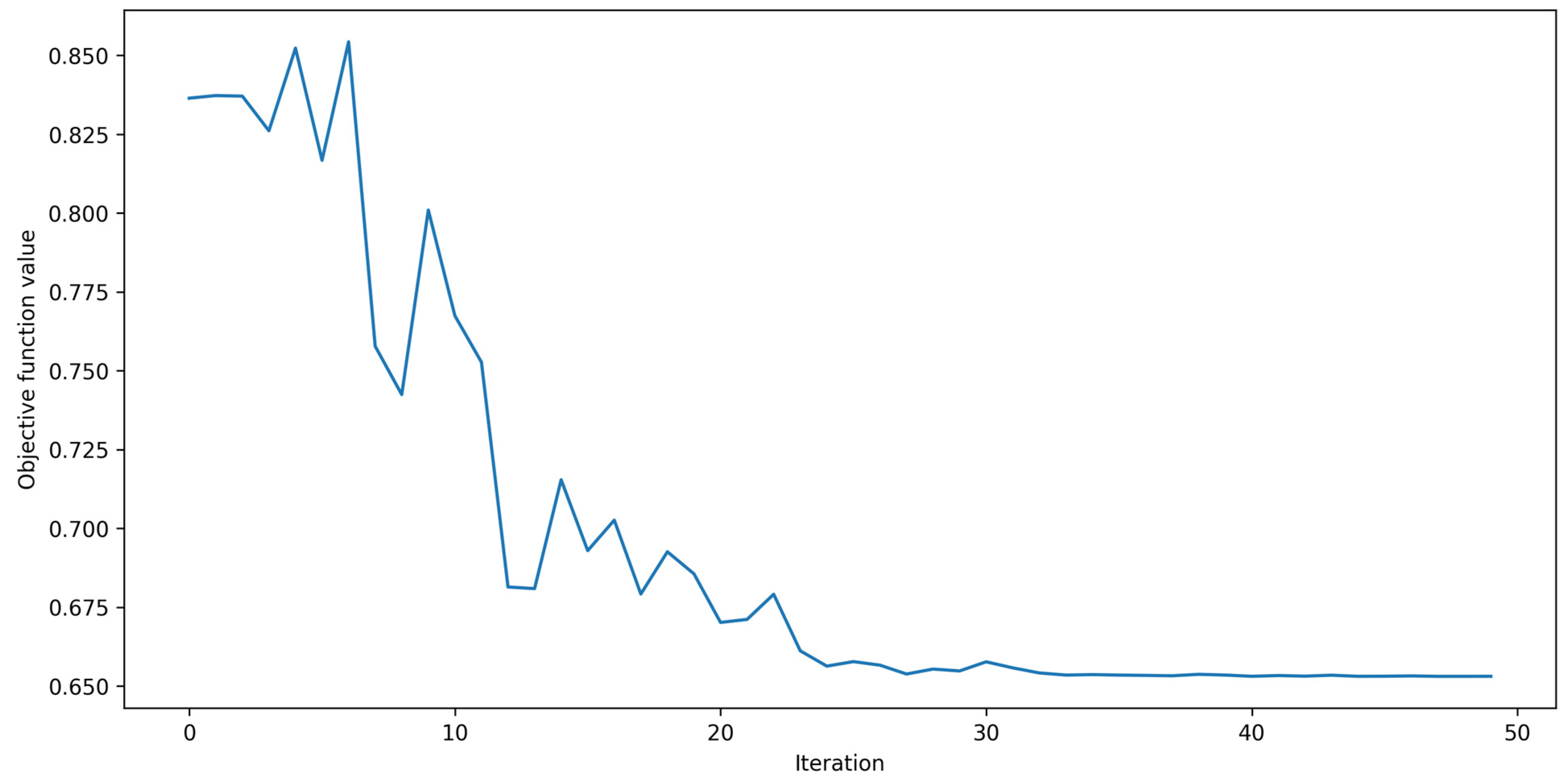

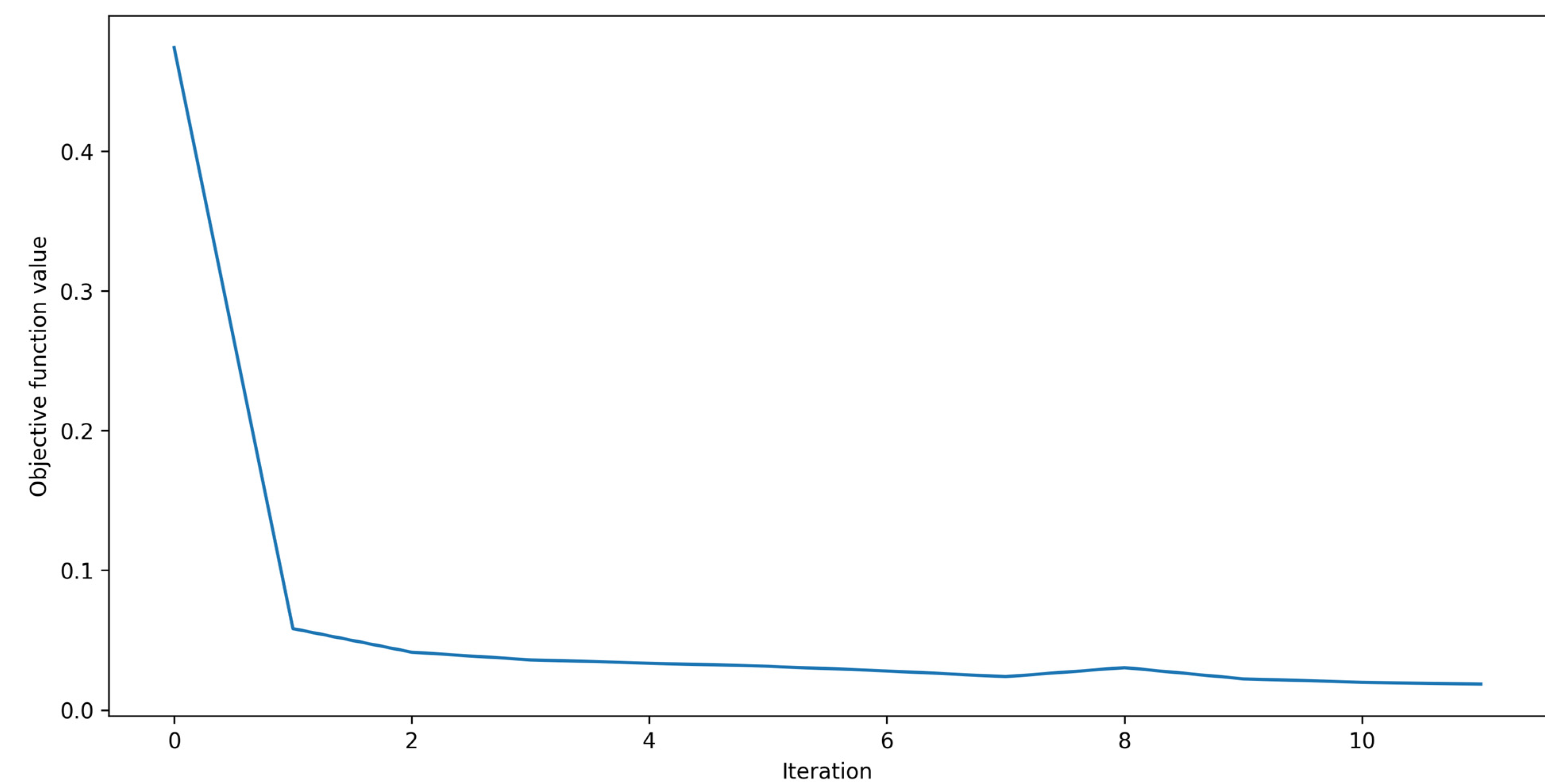

3.4. Optimization Algorithm

3.5. Number of Repetitions for the Ansatz

3.6. Solar Irradiance Forecasting Results

4. Discussion

4.1. QML Models Found in the Literature

4.2. GHI and DNI Forecasting Results

- The parameter selection, that is, the choice of input features, was performed by a random forest, a tree-based model; this may have biased the comparison in favor of XGBoost, which is also tree-based.

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Attribute Name | Attribute Symbol |
|---|---|
| Backward Average | Bi |
| Lagged Average | Li |
| Clear-sky Variability | Vi |
| Red Channel | R |
| Green Channel | G |
| Blue Channel | B |
| Red-to-Blue Ratio | RB |
| Normalized Red-to-Blue Ratio | NRB |
| Average | AVG |
| Standard Deviation | STD |
| Entropy | ENT |
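The image attributes in the table above are statistics computed over per-pixel color channels. The minimal sketch below makes the following assumptions:

- The image is supplied as a list of 8-bit (R, G, B) tuples.
- RB = R/B and NRB = (B − R)/(B + R).
- STD is the population standard deviation.
- ENT is the Shannon entropy, in bits, of the histogram of channel values.

The two-decimal binning of the ratio channels and the guard against B = 0 are hypothetical choices, and the dataset's own definitions may differ. The time-series attributes (Bi, Li, Vi) are not covered.

```python
import math
from collections import Counter

def channel_stats(values, decimals=None):
    """AVG, STD (population) and ENT (Shannon entropy in bits) of one image channel.
    Continuous channels are rounded to `decimals` places before histogramming (hypothetical binning)."""
    n = len(values)
    avg = sum(values) / n
    std = math.sqrt(sum((v - avg) ** 2 for v in values) / n)
    keys = values if decimals is None else [round(v, decimals) for v in values]
    counts = Counter(keys)
    ent = -sum((k / n) * math.log2(k / n) for k in counts.values())
    return {"AVG": avg, "STD": std, "ENT": ent}

def sky_image_features(pixels):
    """pixels: list of (R, G, B) tuples with 8-bit values (assumed input format)."""
    r = [p[0] for p in pixels]
    g = [p[1] for p in pixels]
    b = [p[2] for p in pixels]
    rb = [ri / max(bi, 1) for ri, bi in zip(r, b)]  # red-to-blue ratio; guard against B = 0
    nrb = [(bi - ri) / (bi + ri) if bi + ri else 0.0 for ri, bi in zip(r, b)]  # normalized ratio
    return {
        "R": channel_stats(r), "G": channel_stats(g), "B": channel_stats(b),
        "RB": channel_stats(rb, decimals=2), "NRB": channel_stats(nrb, decimals=2),
    }

demo = sky_image_features([(100, 120, 200), (50, 60, 100), (100, 120, 200), (255, 255, 255)])
print(demo["RB"])
```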

| Forecasting Horizon (min) | Selected Features (GHI) | Selected Features (DNI) |
|---|---|---|
| 5 | Bi, Vi, Li, ENT (R), ENT (RB) | Bi, Vi, Li, ENT (G), ENT (RB) |
| 10 | Bi, Vi, Li, ENT (G), ENT (RB) | Bi, Vi, Li, ENT (G), ENT (RB) |
| 15 | Bi, Vi, AVG (G), ENT (B), ENT (RB) | Bi, Vi, ENT (R), AVG (G), ENT (RB) |
| 20 | Bi, Vi, AVG (G), ENT (G), ENT (RB) | Bi, Vi, ENT (R), AVG (G), ENT (RB) |
| 25 | Bi, Vi, ENT (R), AVG (G), ENT (RB) | Bi, Vi, ENT (R), AVG (G), ENT (RB) |
| 30 | Bi, Vi, ENT (R), AVG (G), ENT (RB) | Bi, Vi, ENT (R), AVG (G), ENT (RB) |
| 60 | Bi, Vi, AVG (R), ENT (G), ENT (RB) | Bi, Vi, AVG (G), ENT (G), ENT (RB) |
| 120 | Bi, Vi, AVG (G), ENT (G), ENT (RB) | Vi, ENT (R), AVG (G), AVG (B), ENT (RB) |
| 180 | Bi, Li, AVG (G), ENT (B), ENT (RB) | Vi, Li, AVG (R), ENT (R), ENT (RB) |

Pauli Y Feature Map (ansatz and optimizer comparison)

| Ansatz, Optimizer | RMSE (W/m²) | R² (%) |
|---|---|---|
| Ry, COBYLA | 56.91 | 95.99 |
| Ry, L-BFGS-B | 56.73 | 96.01 |
| Ry, QN-SPSA | 56.55 | 96.04 |
| Two Local, COBYLA | 58.08 | 95.82 |
| Two Local, L-BFGS-B | 48.98 | 97.03 |
| Two Local, QN-SPSA | 63.90 | 94.94 |
| QAOA, COBYLA | 498.86 | −208 |
| QAOA, L-BFGS-B | 498.85 | −208 |
| QAOA, QN-SPSA | 501.21 | −211 |
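On a fixed test set, R² = 1 − RMSE²/Var(y), so the RMSE and R² columns are linked through the standard deviation of the observations. The following short check assumes that every row was scored on the same test set. The Ry/COBYLA row implies a standard deviation of about 284 W/m². An RMSE of 498.86 W/m² then gives R² ≈ −2.08, or −208%, which matches the QAOA rows. A negative R² means that those models perform worse than always predicting the test-set mean.

```python
import math

def implied_target_std(rmse, r2):
    """Standard deviation of the observations implied by R^2 = 1 - RMSE^2 / Var(y)."""
    return rmse / math.sqrt(1.0 - r2)

def r2_from_rmse(rmse, target_std):
    """R^2 of a model with the given RMSE on a test set with the given standard deviation."""
    return 1.0 - (rmse / target_std) ** 2

# Rows from the Pauli Y feature map table, with R^2 written as a fraction
sigma = implied_target_std(56.91, 0.9599)   # Ry, COBYLA
qaoa_r2 = r2_from_rmse(498.86, sigma)       # QAOA, COBYLA
print(f"implied std: {sigma:.1f} W/m2, QAOA R2: {qaoa_r2:.2f}")
```

The Two Local, L-BFGS-B row (48.98 W/m², 97.03%) gives the same figures that the forecasting results report for the QNN at the 5 min GHI horizon.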

GHI forecasting results

| Forecasting Horizon (min) | Algorithm | RMSE (W/m²) | R² (%) | Forecast Skill (%) |
|---|---|---|---|---|
| 5 | SVR | 35.94 | 98.40 | 92.33 |
| | XGBoost | 28.74 | 98.98 | 93.87 |
| | GMDH | 34.82 | 98.50 | 92.58 |
| | QNN | 48.98 | 97.03 | 89.55 |
| 10 | SVR | 43.00 | 97.71 | 90.82 |
| | XGBoost | 32.20 | 98.72 | 93.13 |
| | GMDH | 45.56 | 97.43 | 90.28 |
| | QNN | 54.48 | 96.33 | 88.38 |
| 15 | SVR | 50.17 | 96.90 | 89.29 |
| | XGBoost | 40.68 | 97.96 | 91.31 |
| | GMDH | 51.62 | 96.72 | 88.98 |
| | QNN | 65.56 | 94.71 | 86.00 |
| 20 | SVR | 52.69 | 96.60 | 88.74 |
| | XGBoost | 42.69 | 97.77 | 90.88 |
| | GMDH | 54.15 | 96.41 | 88.42 |
| | QNN | 65.40 | 94.76 | 86.02 |
| 25 | SVR | 55.11 | 96.30 | 88.20 |
| | XGBoost | 44.23 | 97.62 | 90.53 |
| | GMDH | 57.57 | 95.97 | 87.68 |
| | QNN | 63.09 | 95.16 | 86.50 |
| 30 | SVR | 57.00 | 96.08 | 87.80 |
| | XGBoost | 45.70 | 97.48 | 90.20 |
| | GMDH | 58.30 | 95.90 | 87.50 |
| | QNN | 63.97 | 95.06 | 86.30 |
| 60 | SVR | 55.63 | 96.32 | 88.00 |
| | XGBoost | 34.76 | 98.56 | 92.50 |
| | GMDH | 61.08 | 95.56 | 86.82 |
| | QNN | 61.20 | 95.54 | 86.80 |
| 120 | SVR | 69.91 | 94.99 | 84.18 |
| | XGBoost | 54.67 | 96.93 | 87.63 |
| | GMDH | 74.91 | 94.26 | 83.05 |
| | QNN | 66.24 | 95.50 | 85.01 |
| 180 | SVR | 81.94 | 94.00 | 79.84 |
| | XGBoost | 58.93 | 96.90 | 85.50 |
| | GMDH | 81.90 | 94.01 | 79.85 |
| | QNN | 77.55 | 94.63 | 80.92 |
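The three metrics in the table can be computed as shown below. RMSE and R² follow their standard definitions. Forecast skill is assumed to take the common form FS = 1 − RMSE_model/RMSE_ref, because its reference forecast is not specified here. Under that assumption, all algorithms at a given horizon should imply the same RMSE_ref. This holds for the 5 min GHI rows, which all point to about 469 W/m² up to rounding.

```python
import math

def rmse(y_true, y_pred):
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def r2(y_true, y_pred):
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

def forecast_skill(rmse_model, rmse_reference):
    """Assumed form: FS = 1 - RMSE_model / RMSE_reference."""
    return 1.0 - rmse_model / rmse_reference

# 5 min GHI rows (RMSE in W/m2, FS as a fraction) and the reference RMSE each one implies
rows_5min_ghi = {"SVR": (35.94, 0.9233), "XGBoost": (28.74, 0.9387),
                 "GMDH": (34.82, 0.9258), "QNN": (48.98, 0.8955)}
for name, (model_rmse, fs) in rows_5min_ghi.items():
    print(name, round(model_rmse / (1.0 - fs), 1))
```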

DNI forecasting results

| Forecasting Horizon (min) | Algorithm | RMSE (W/m²) | R² (%) | Forecast Skill (%) |
|---|---|---|---|---|
| 5 | SVR | 80.31 | 93.33 | 87.16 |
| | XGBoost | 61.96 | 96.03 | 90.09 |
| | GMDH | 72.08 | 94.63 | 88.47 |
| | QNN | 103.03 | 89.02 | 83.52 |
| 10 | SVR | 95.17 | 90.70 | 84.75 |
| | XGBoost | 77.08 | 93.90 | 87.65 |
| | GMDH | 92.49 | 91.22 | 85.18 |
| | QNN | 117.21 | 85.89 | 81.22 |
| 15 | SVR | 105.25 | 88.77 | 83.08 |
| | XGBoost | 87.76 | 92.19 | 85.90 |
| | GMDH | 105.86 | 88.64 | 82.99 |
| | QNN | 127.15 | 83.61 | 79.57 |
| 20 | SVR | 112.31 | 87.43 | 81.88 |
| | XGBoost | 93.69 | 91.25 | 84.89 |
| | GMDH | 114.72 | 86.88 | 81.49 |
| | QNN | 130.76 | 82.96 | 78.91 |
| 25 | SVR | 117.58 | 86.49 | 80.95 |
| | XGBoost | 98.19 | 90.58 | 84.09 |
| | GMDH | 121.78 | 85.51 | 80.27 |
| | QNN | 133.70 | 82.53 | 78.34 |
| 30 | SVR | 122.84 | 85.54 | 80.00 |
| | XGBoost | 102.33 | 89.97 | 83.34 |
| | GMDH | 127.79 | 84.36 | 79.20 |
| | QNN | 136.34 | 82.19 | 77.81 |
| 60 | SVR | 123.77 | 85.69 | 79.53 |
| | XGBoost | 84.33 | 93.35 | 86.05 |
| | GMDH | 134.96 | 82.98 | 77.68 |
| | QNN | 157.23 | 76.91 | 73.99 |
| 120 | SVR | 172.05 | 77.31 | 69.11 |
| | XGBoost | 107.24 | 91.19 | 80.74 |
| | GMDH | 168.16 | 78.33 | 69.80 |
| | QNN | 243.06 | 56.35 | 54.72 |
| 180 | SVR | 192.58 | 74.81 | 61.46 |
| | XGBoost | 145.54 | 85.61 | 70.87 |
| | GMDH | 177.88 | 78.51 | 64.40 |
| | QNN | 168.81 | 80.64 | 66.22 |
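Ranking the algorithms by RMSE at each horizon makes the results tables easier to compare. The sketch below hard-codes the DNI RMSE column from the table above and reports where the QNN places. For DNI, it shows that XGBoost ranks first at every horizon and that the QNN ranks last up to 120 min but second at 180 min. Applying the same procedure to the GHI table places the QNN second, behind XGBoost, at 120 and 180 min. The dictionary and function names are hypothetical.

```python
# DNI RMSE (W/m2) per forecasting horizon (min), copied from the table above
ALGORITHMS = ("SVR", "XGBoost", "GMDH", "QNN")
DNI_RMSE = {
    5: (80.31, 61.96, 72.08, 103.03),
    10: (95.17, 77.08, 92.49, 117.21),
    15: (105.25, 87.76, 105.86, 127.15),
    20: (112.31, 93.69, 114.72, 130.76),
    25: (117.58, 98.19, 121.78, 133.70),
    30: (122.84, 102.33, 127.79, 136.34),
    60: (123.77, 84.33, 134.96, 157.23),
    120: (172.05, 107.24, 168.16, 243.06),
    180: (192.58, 145.54, 177.88, 168.81),
}

def rank_by_rmse(rmses):
    """Return algorithm names ordered from lowest (best) to highest RMSE."""
    return [name for _, name in sorted(zip(rmses, ALGORITHMS))]

for horizon, rmses in DNI_RMSE.items():
    ranking = rank_by_rmse(rmses)
    print(horizon, ranking, "QNN rank:", ranking.index("QNN") + 1)
```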

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Oliveira Santos, V.; Marinho, F.P.; Costa Rocha, P.A.; Thé, J.V.G.; Gharabaghi, B. Application of Quantum Neural Network for Solar Irradiance Forecasting: A Case Study Using the Folsom Dataset, California. Energies 2024, 17, 3580. https://doi.org/10.3390/en17143580