Combinatorial Component Day-Ahead Load Forecasting through Unanchored Time Series Chain Evaluation

Abstract

1. Introduction

2. Materials and Methods

2.1. Time Series Decomposition Methods

2.1.1. Seasonal-Trend Decomposition using Locally Estimated Scatterplot Smoothing

2.1.2. Singular Spectrum Analysis

2.1.3. Empirical Mode Decomposition

2.2. Forecasting Models

2.2.1. Linear Regression

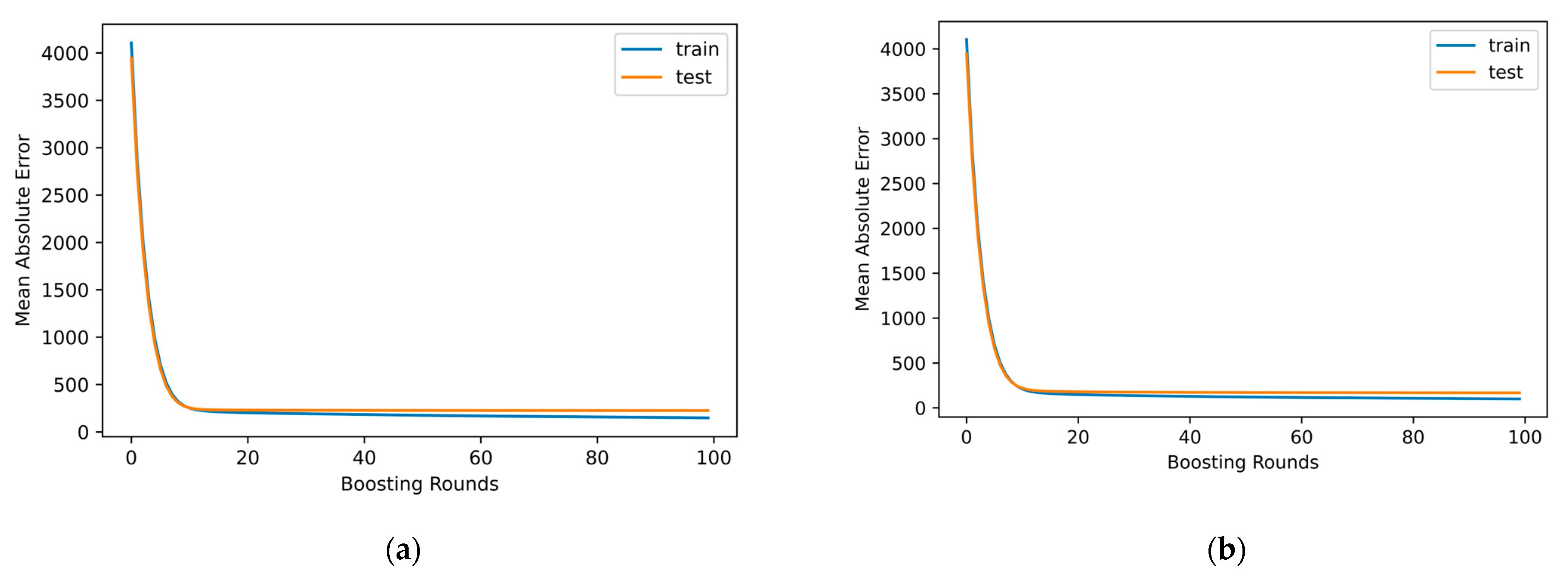

2.2.2. Extreme Gradient Boosting

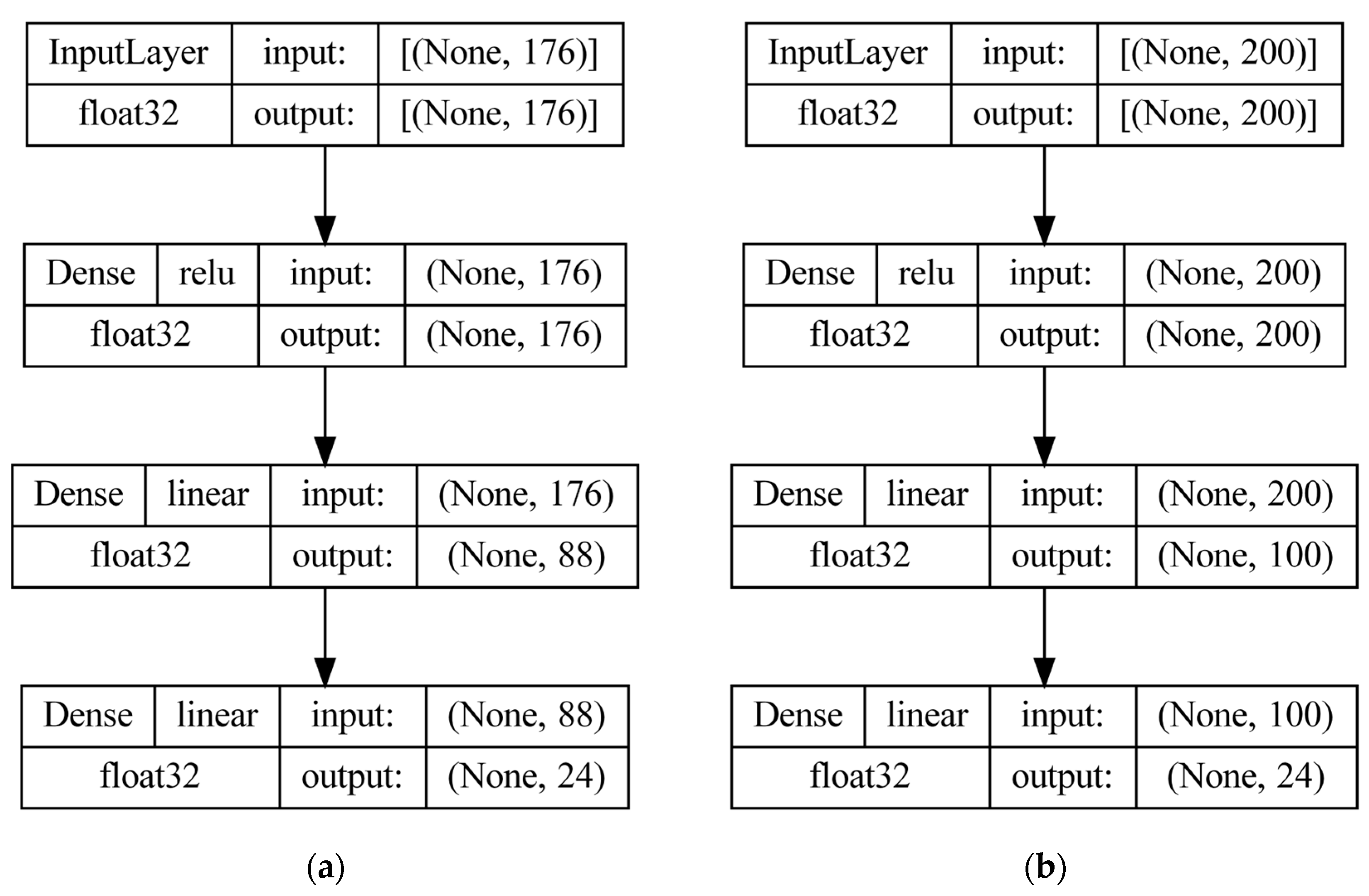

2.2.3. Multi-Layer Perceptron

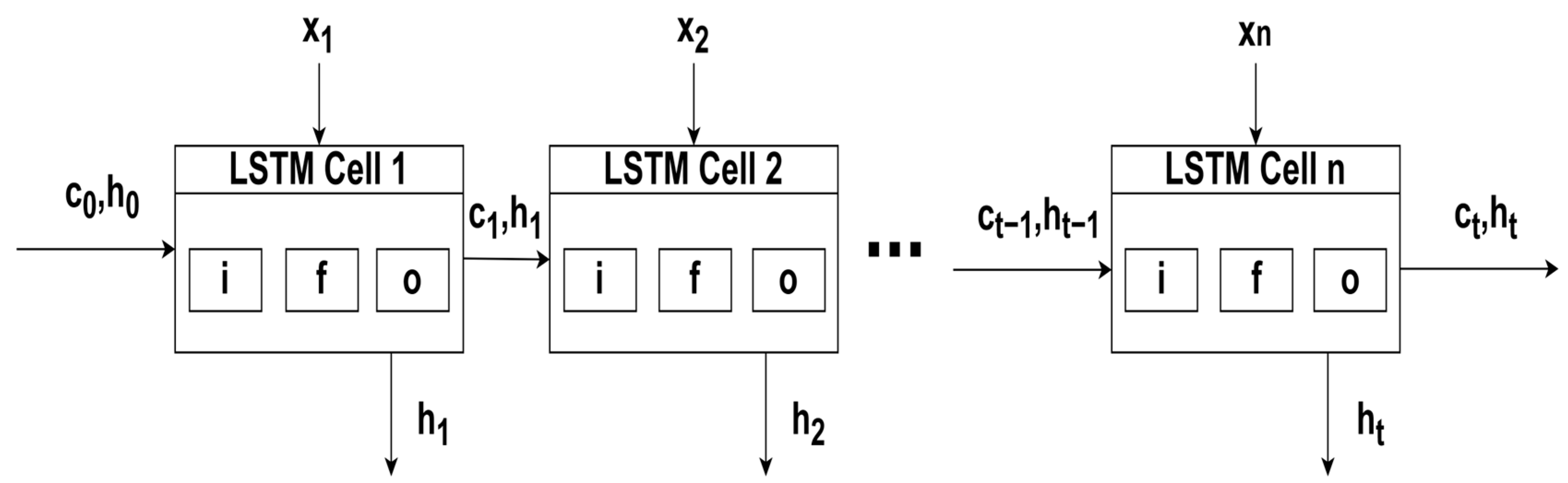

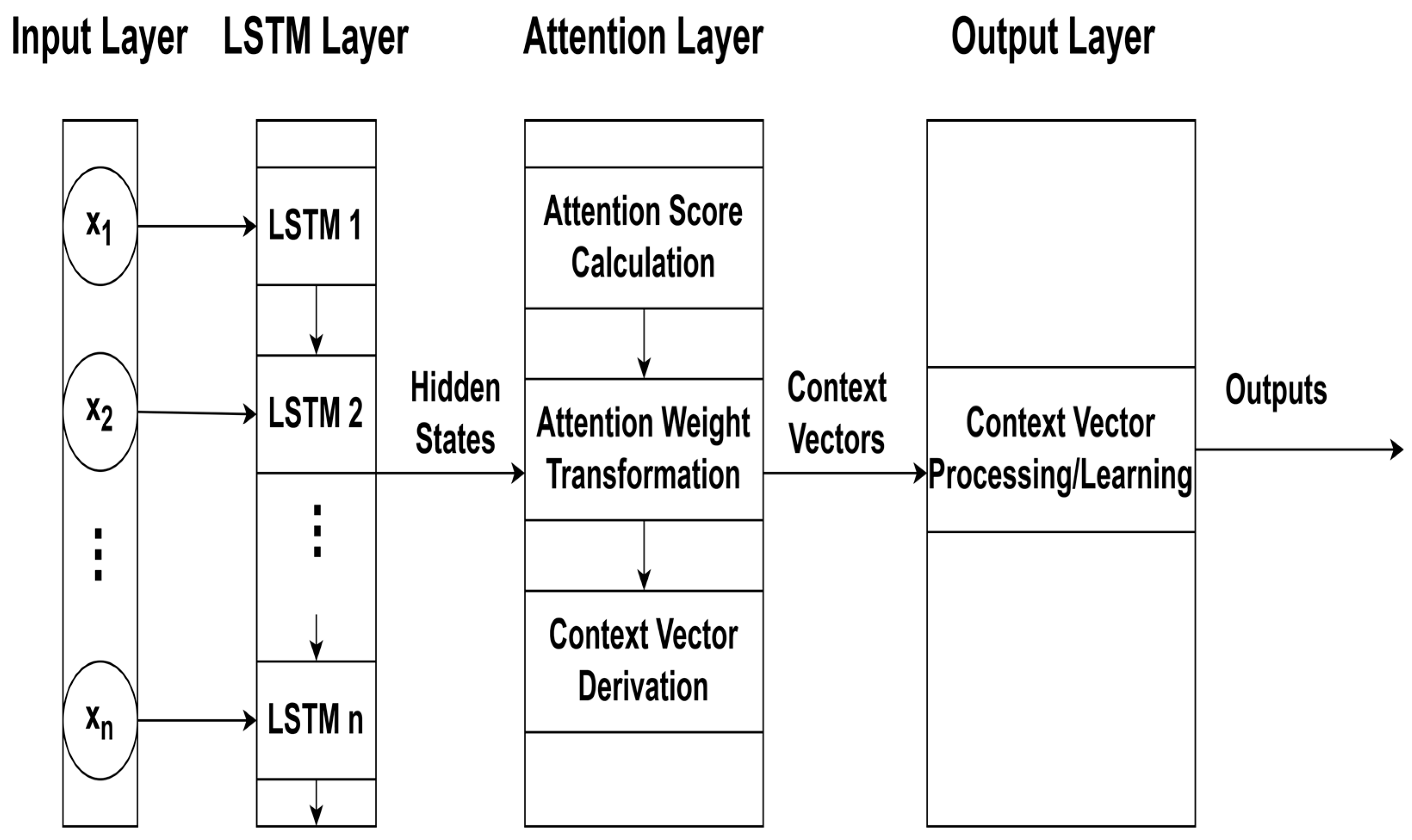

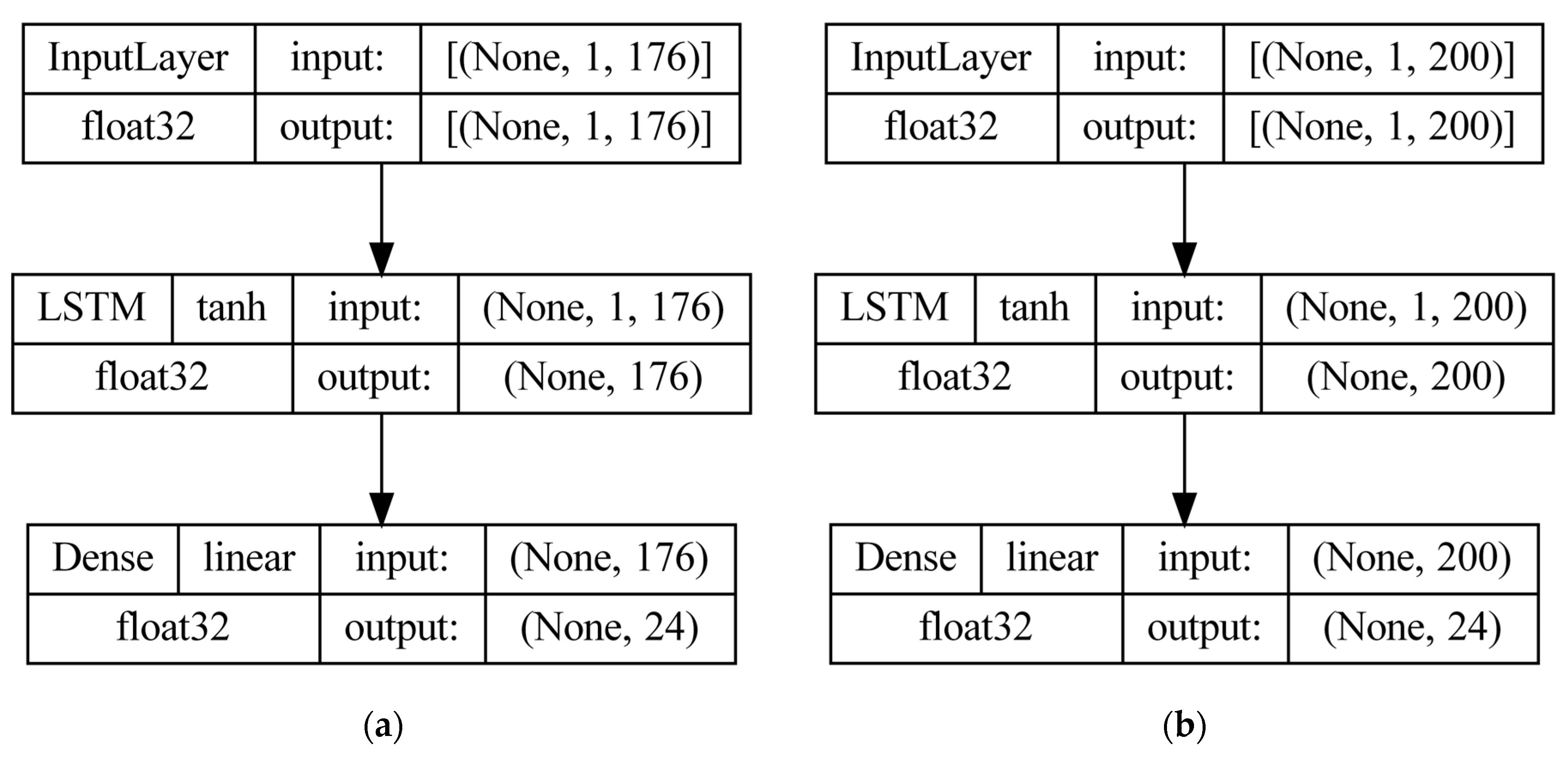

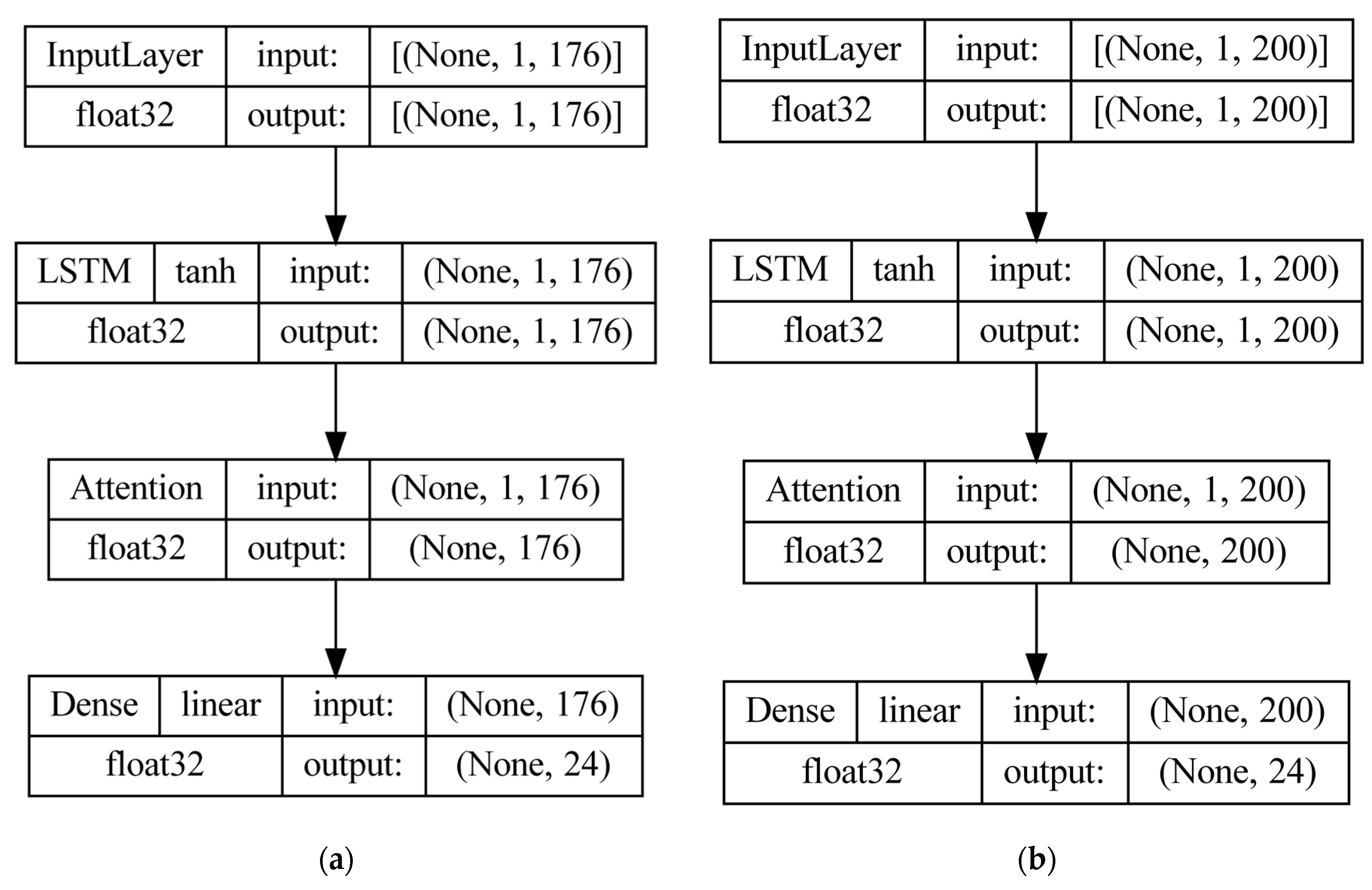

2.2.4. Long Short-Term Memory Networks

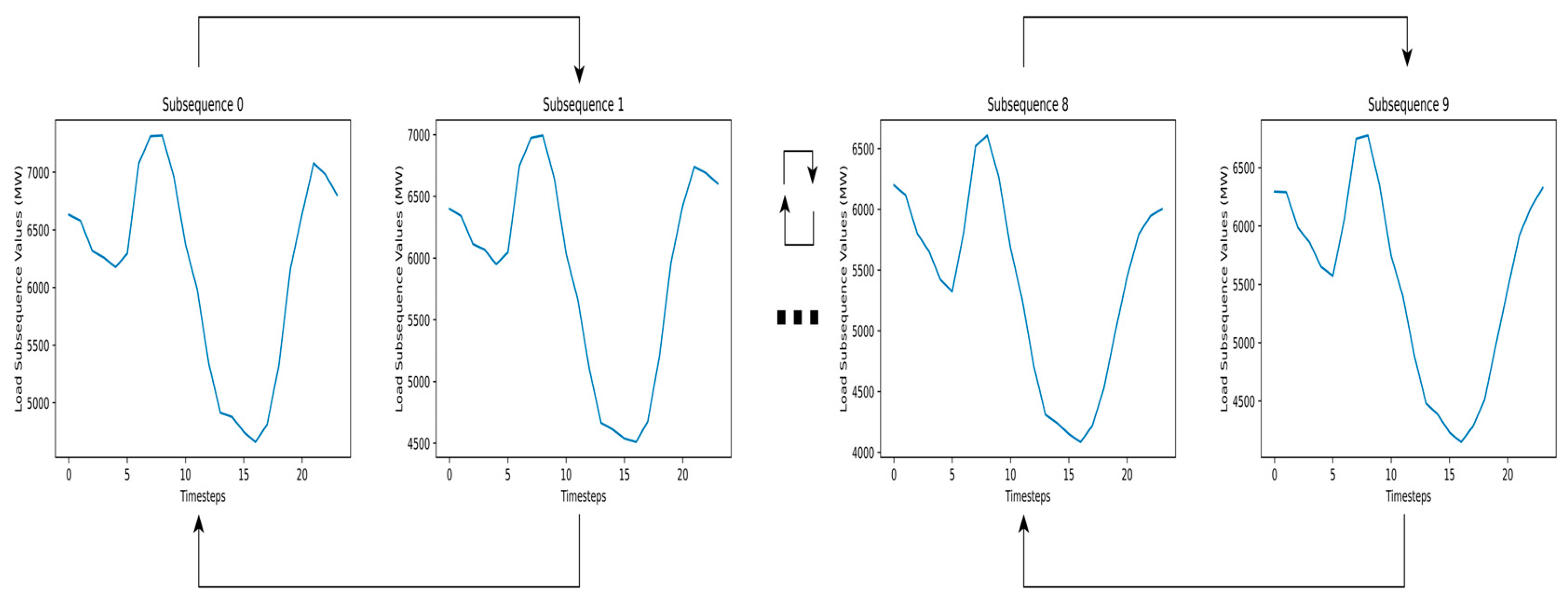

2.3. Time Series Chains

2.4. Problem Framing and Proposed Methodology

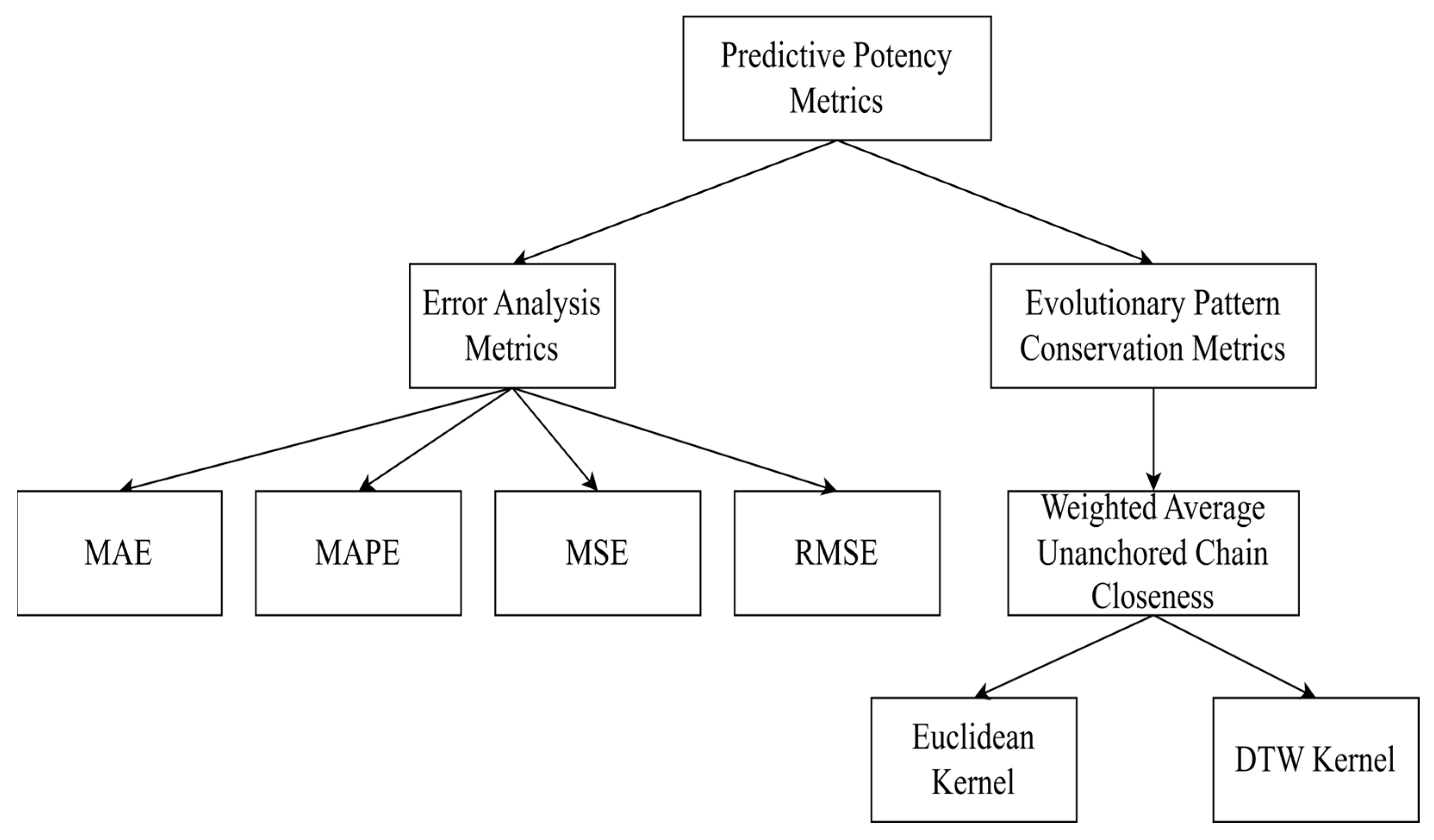

2.5. Performance Metrics

2.5.1. Error Analysis

2.5.2. Evolutionary Pattern Conservation Quality

2.6. Case Study and Experiments

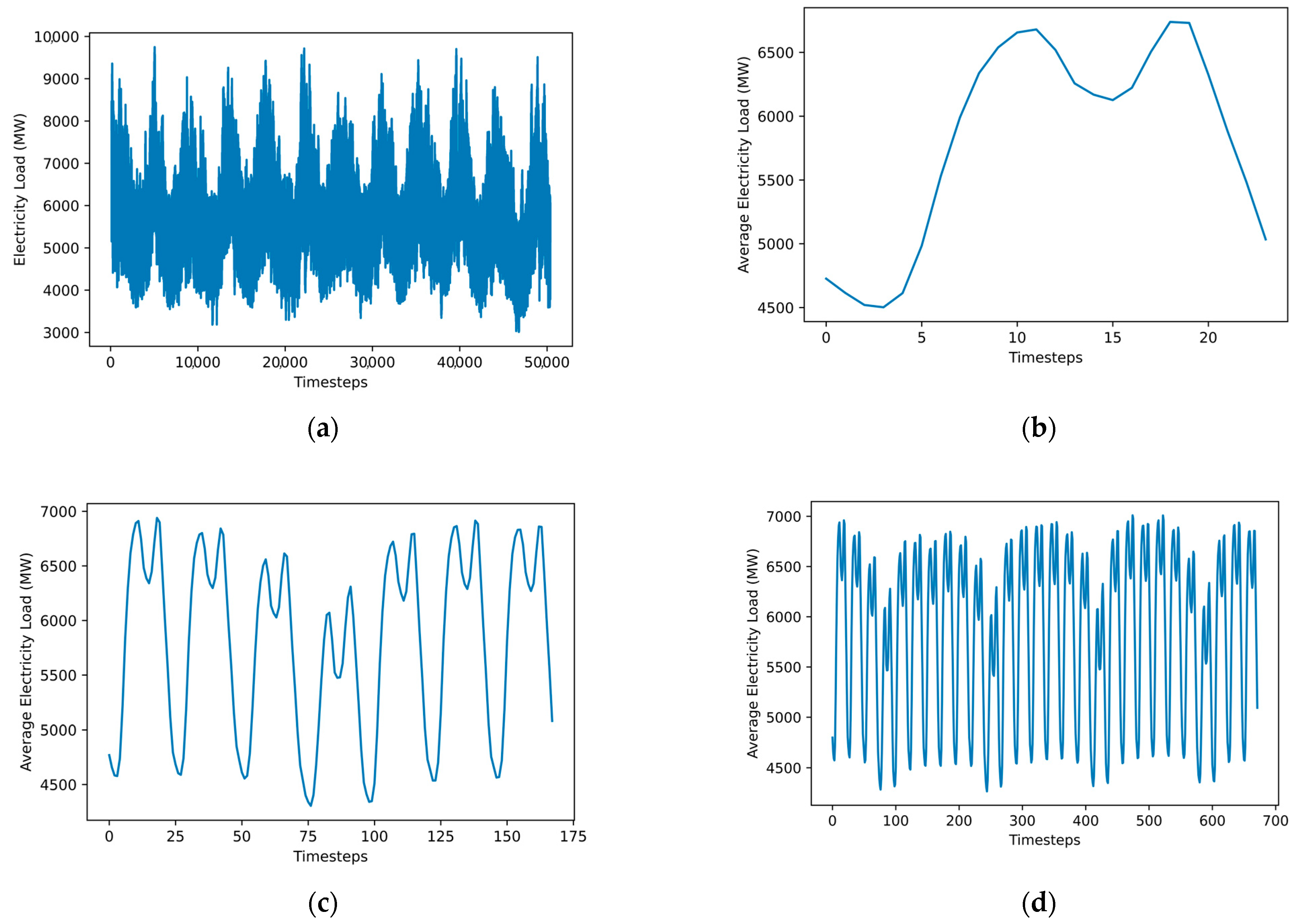

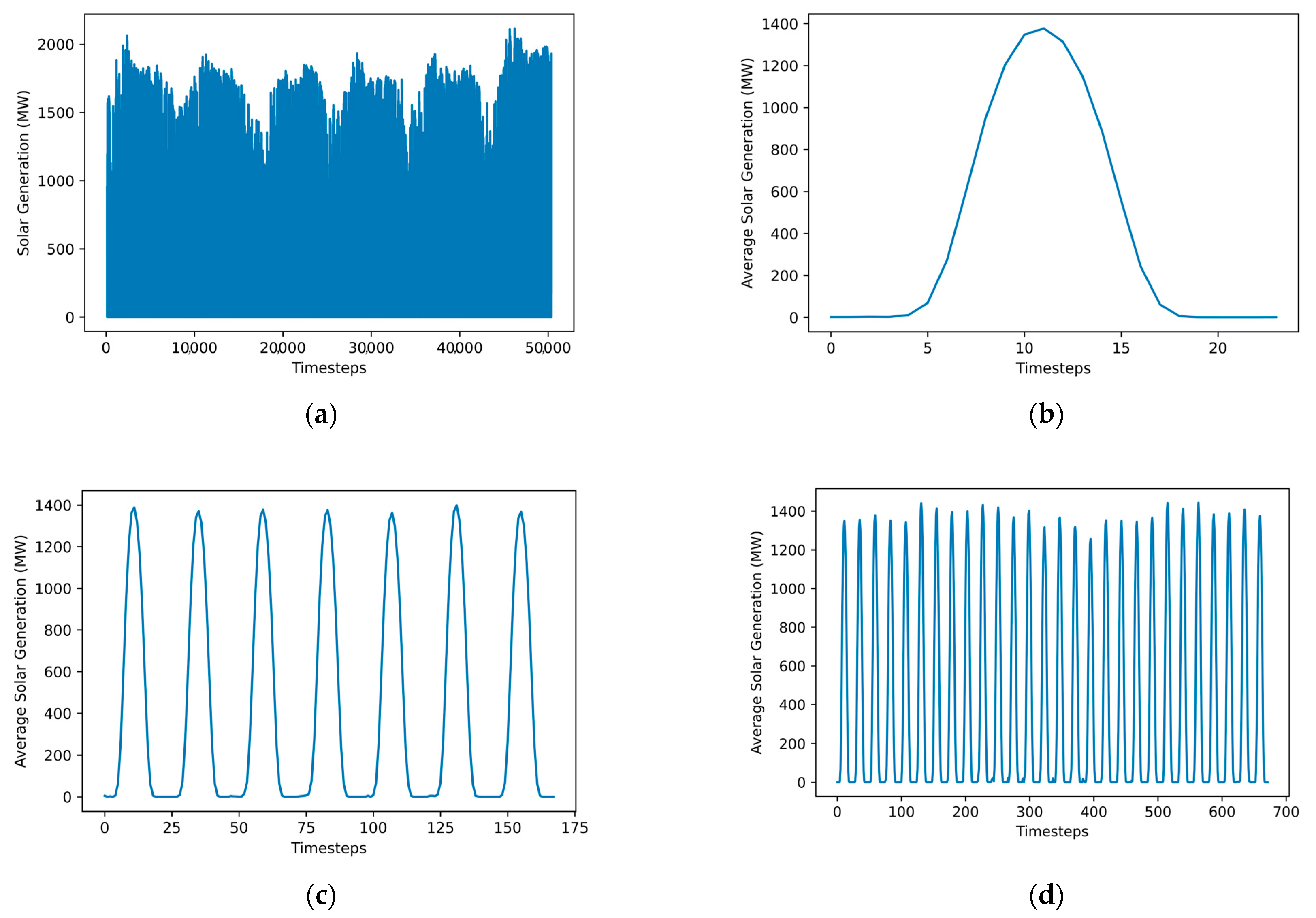

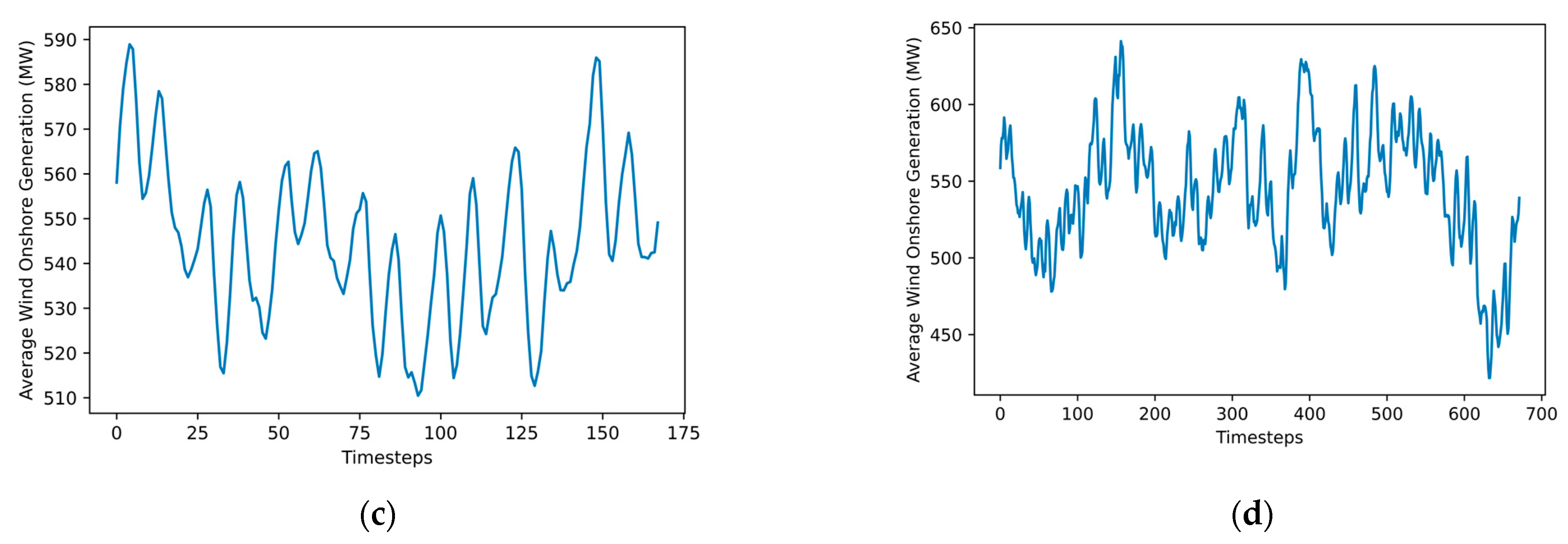

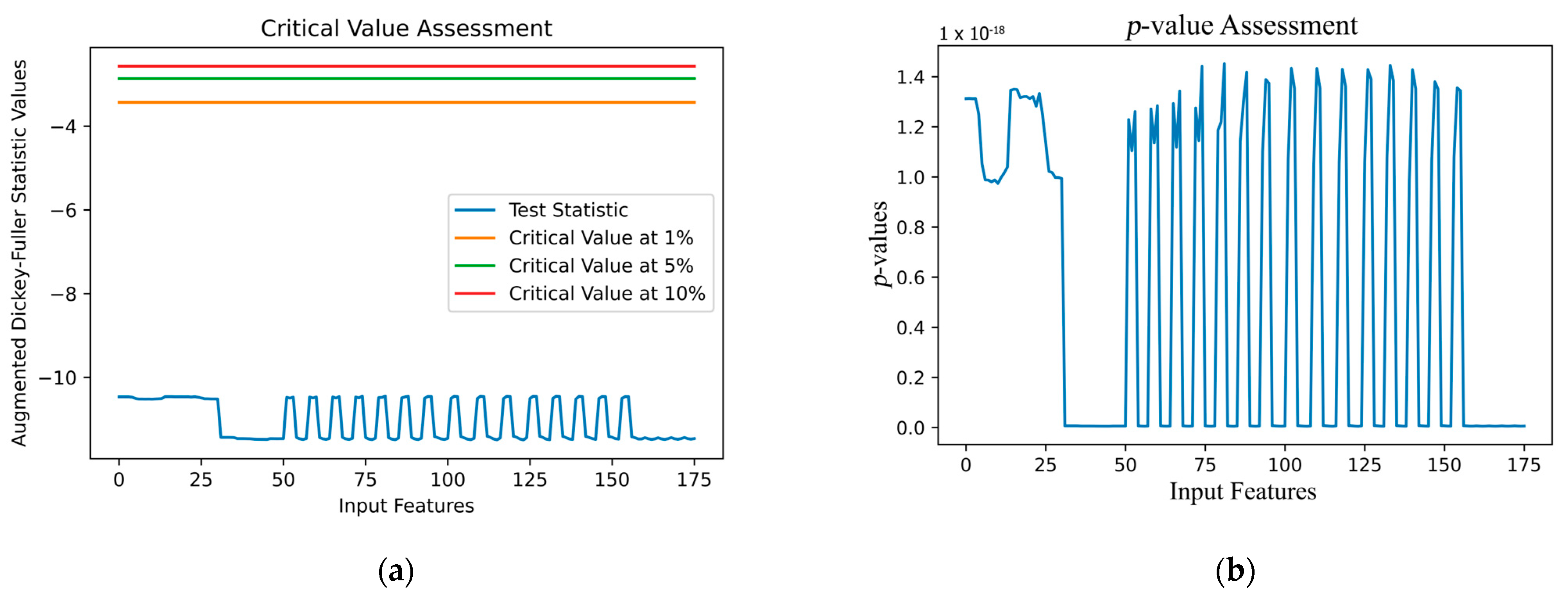

2.6.1. Dataset Overview and Preprocessing

2.6.2. Decomposition and Estimator Configuration

2.6.3. Experiments and Evaluation Strategy

3. Results

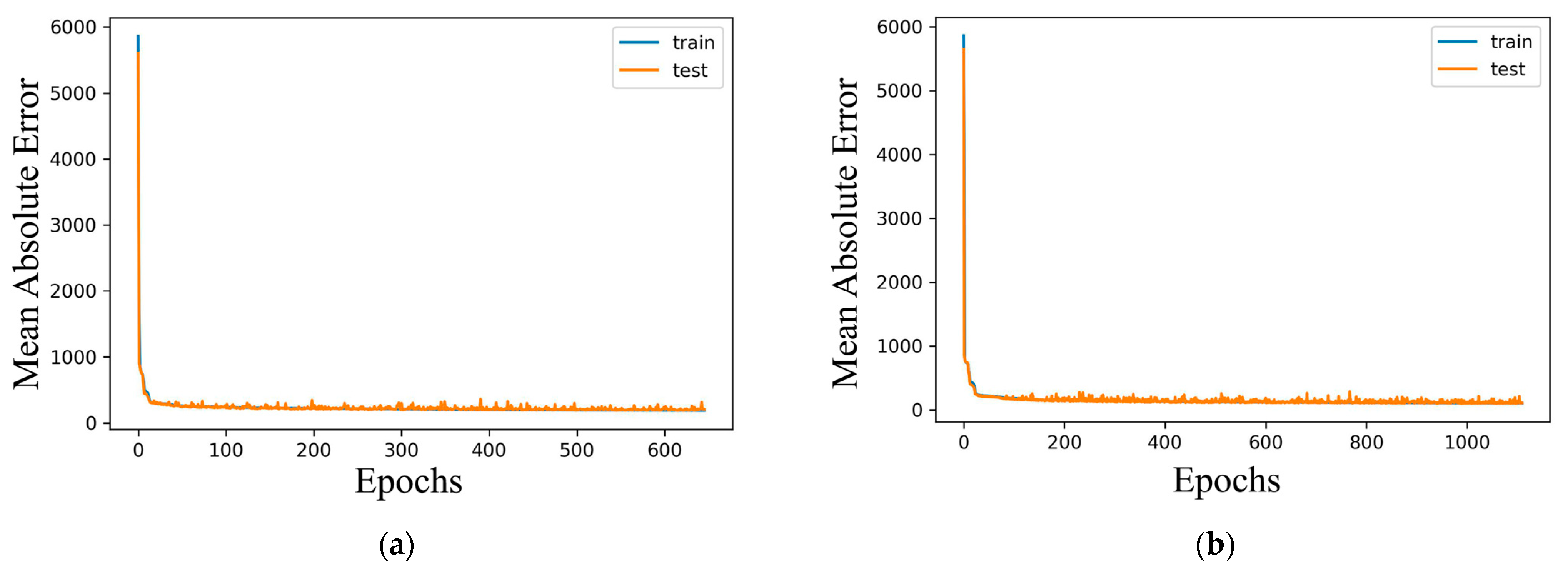

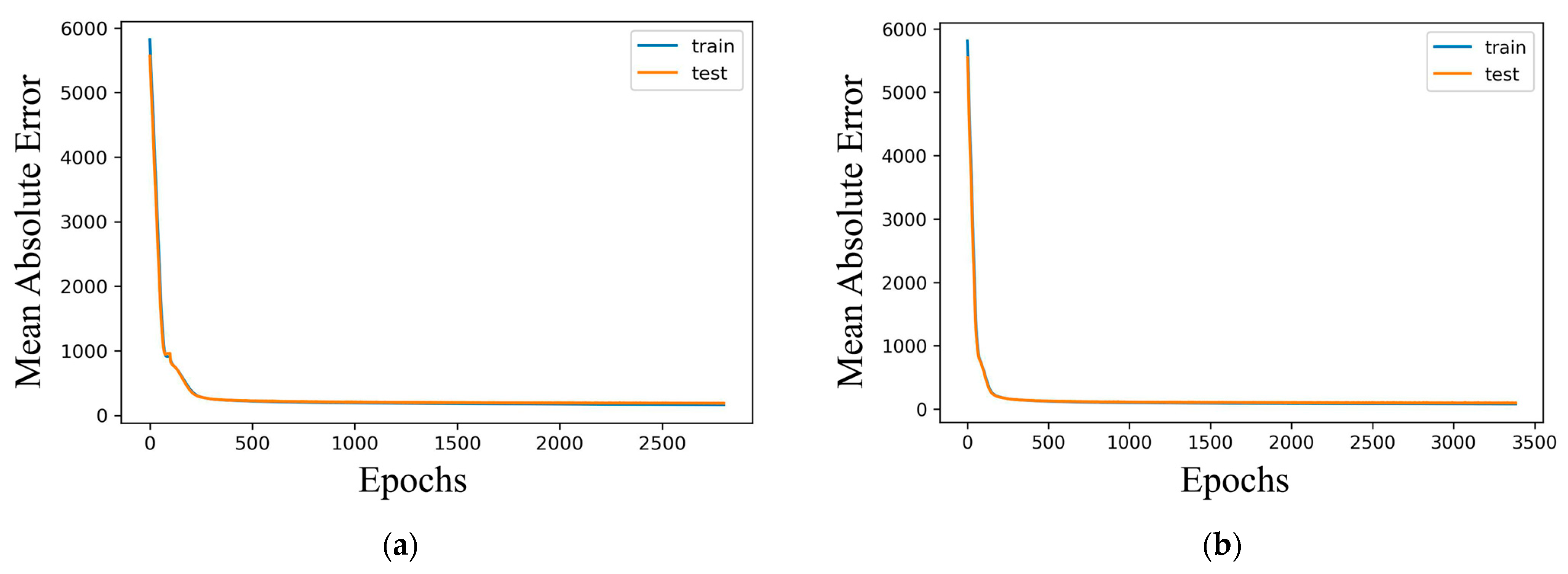

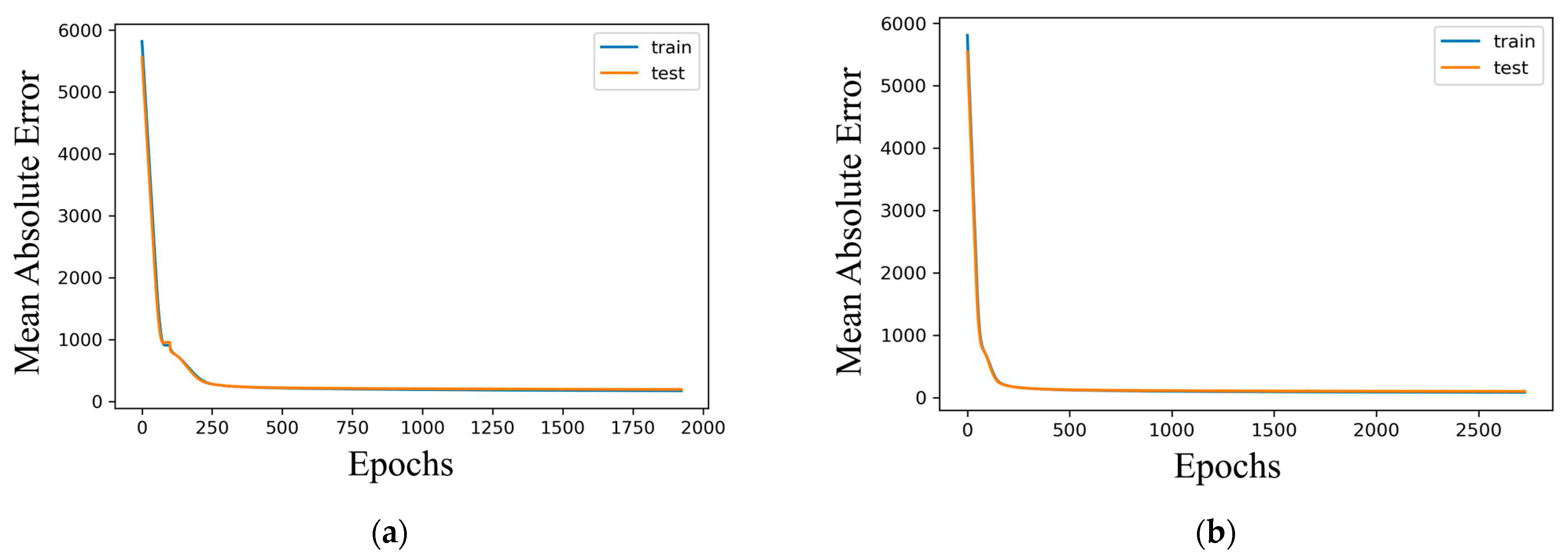

3.1. Learning Curve Examination

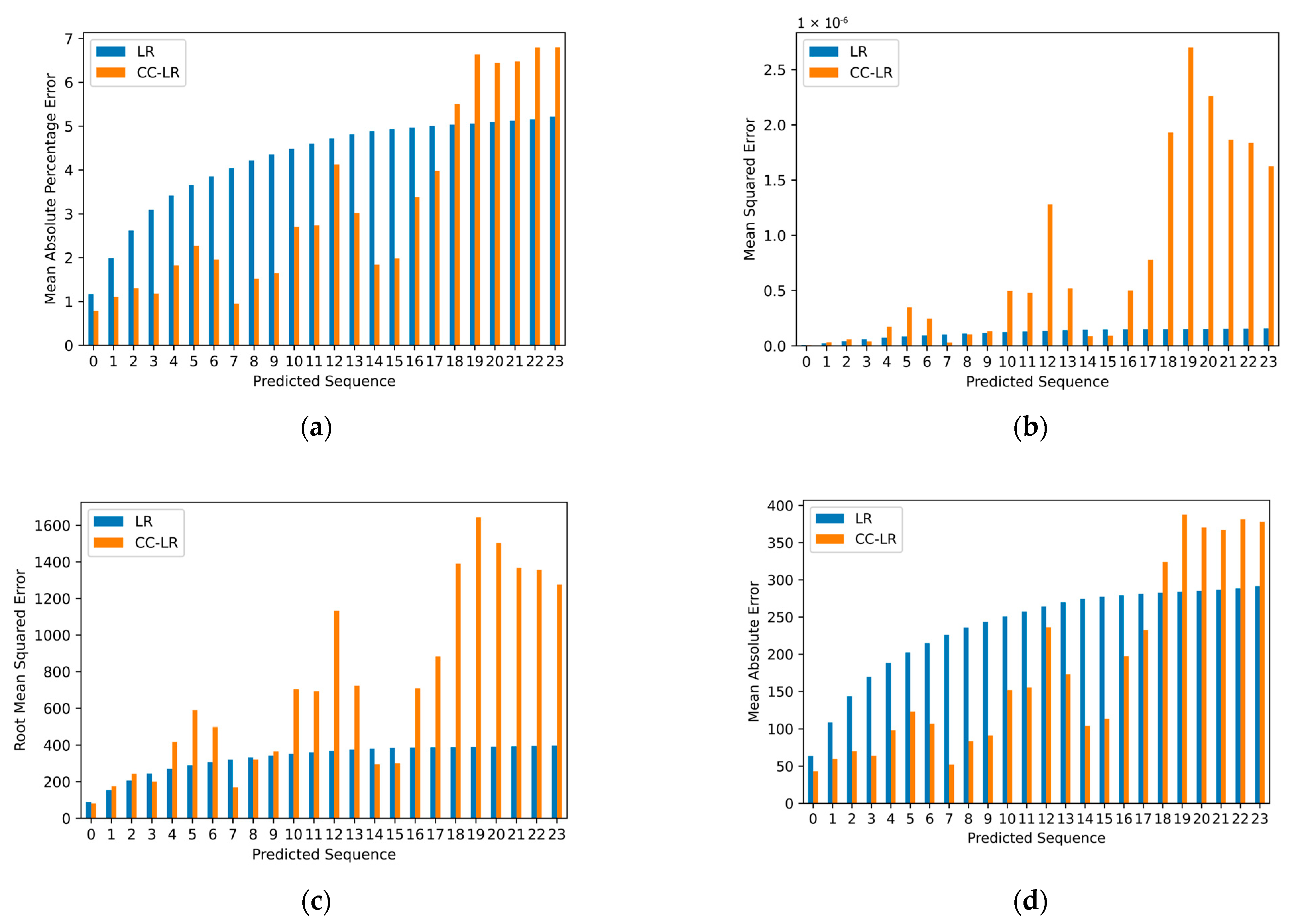

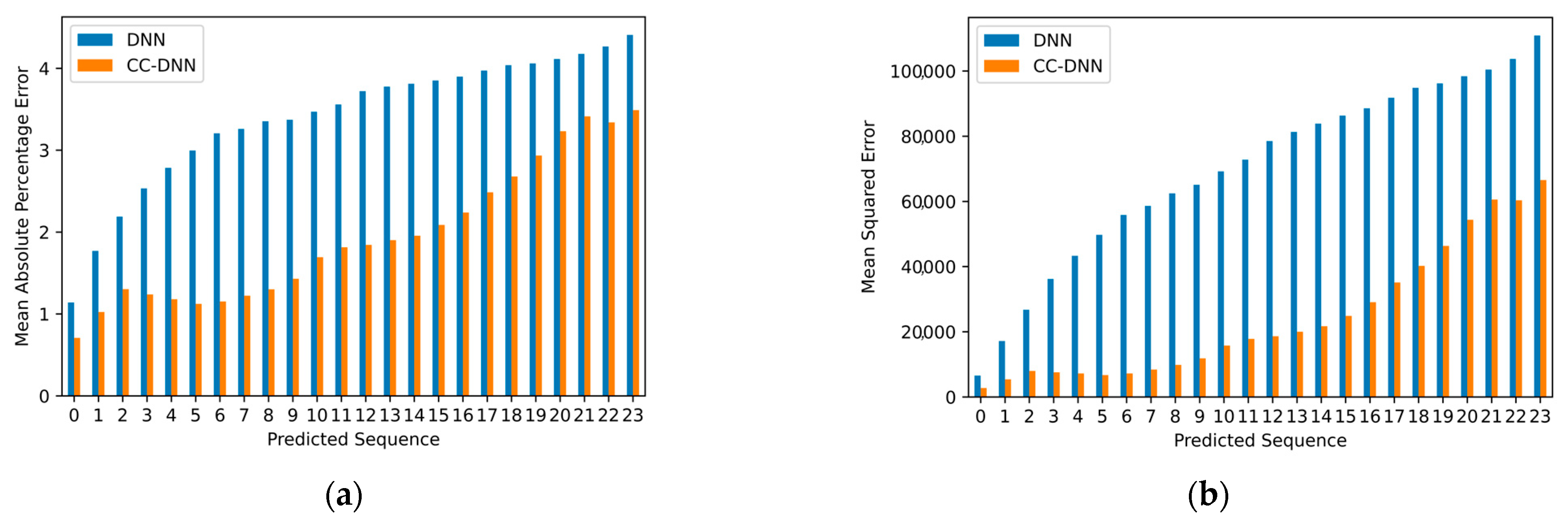

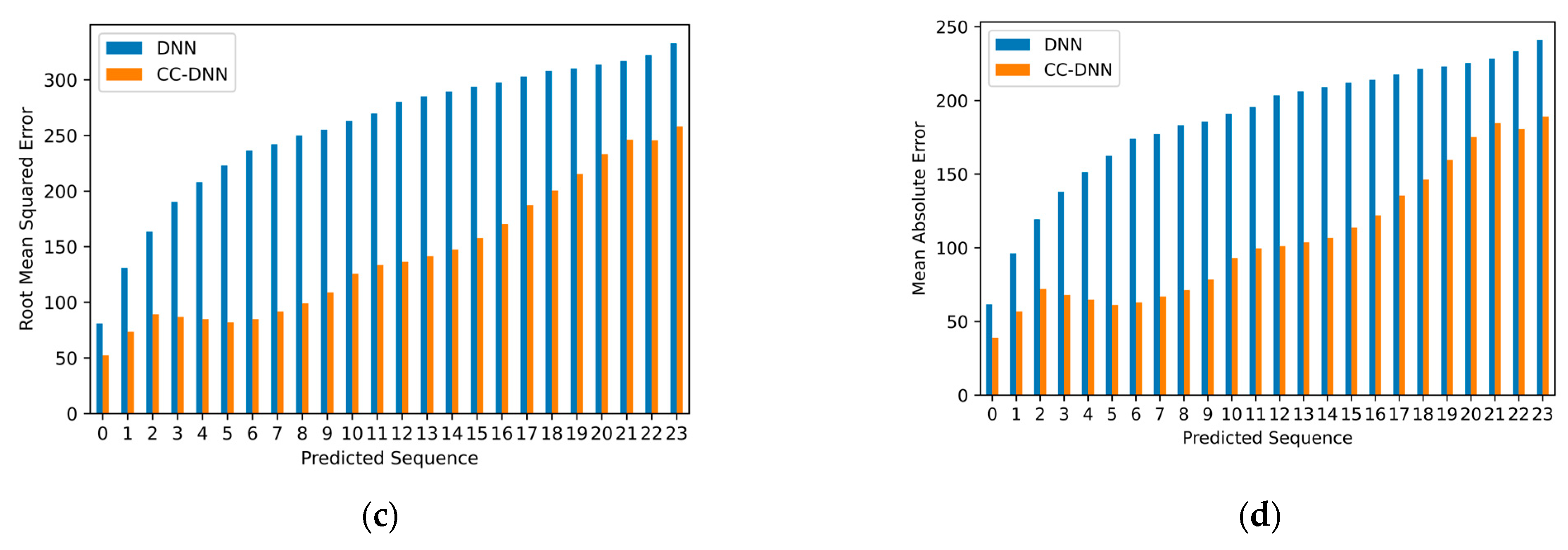

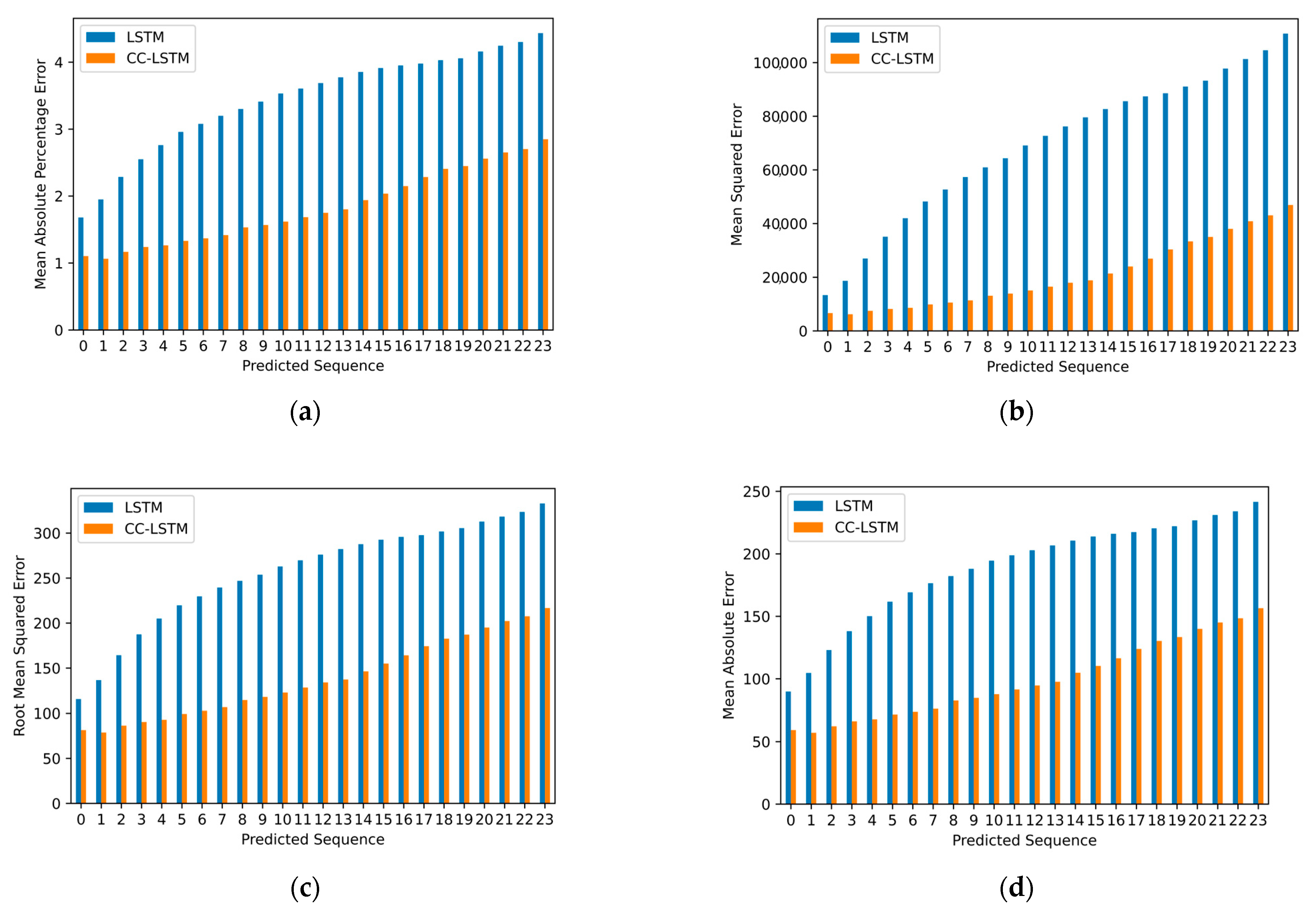

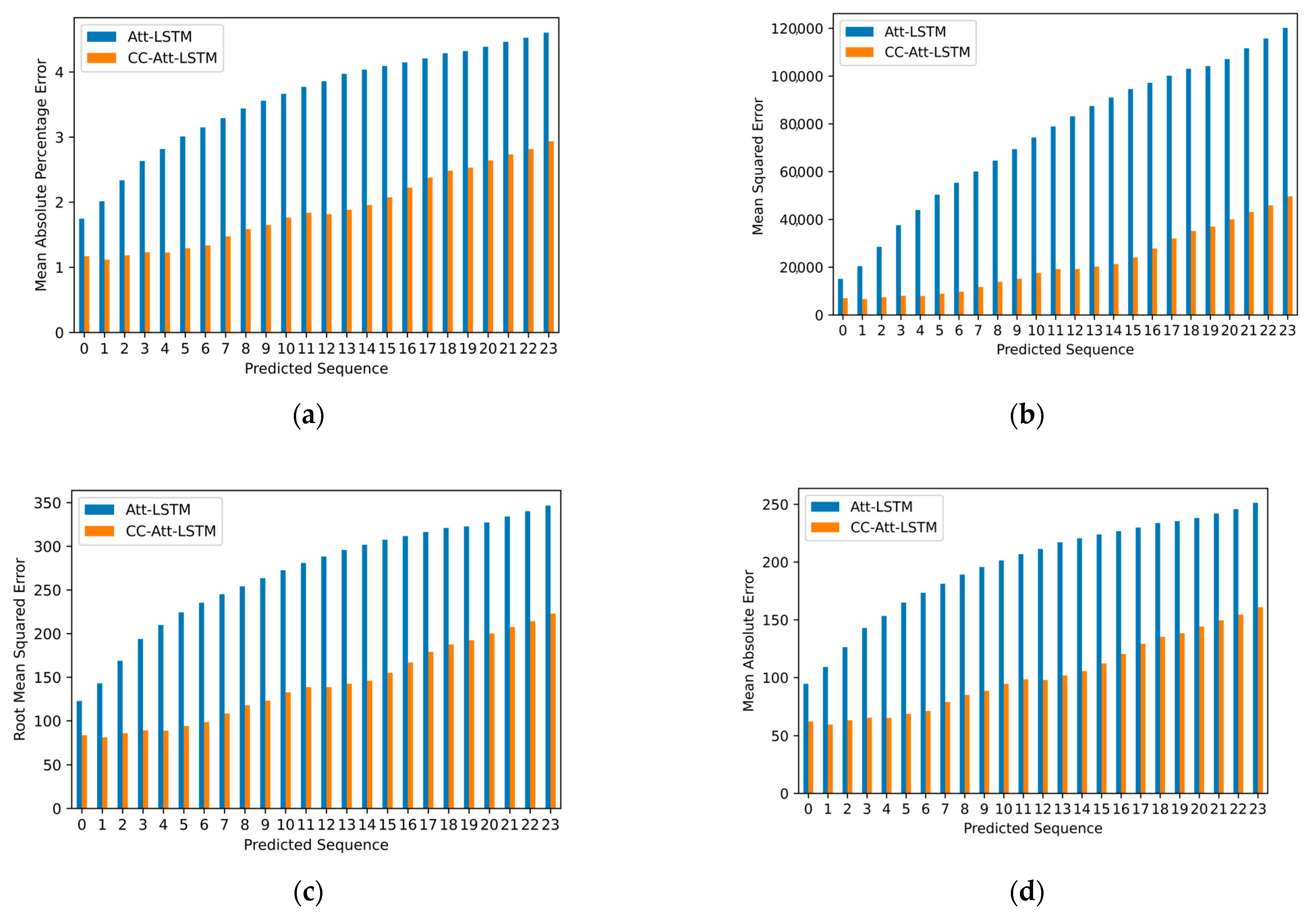

3.2. Error Analysis

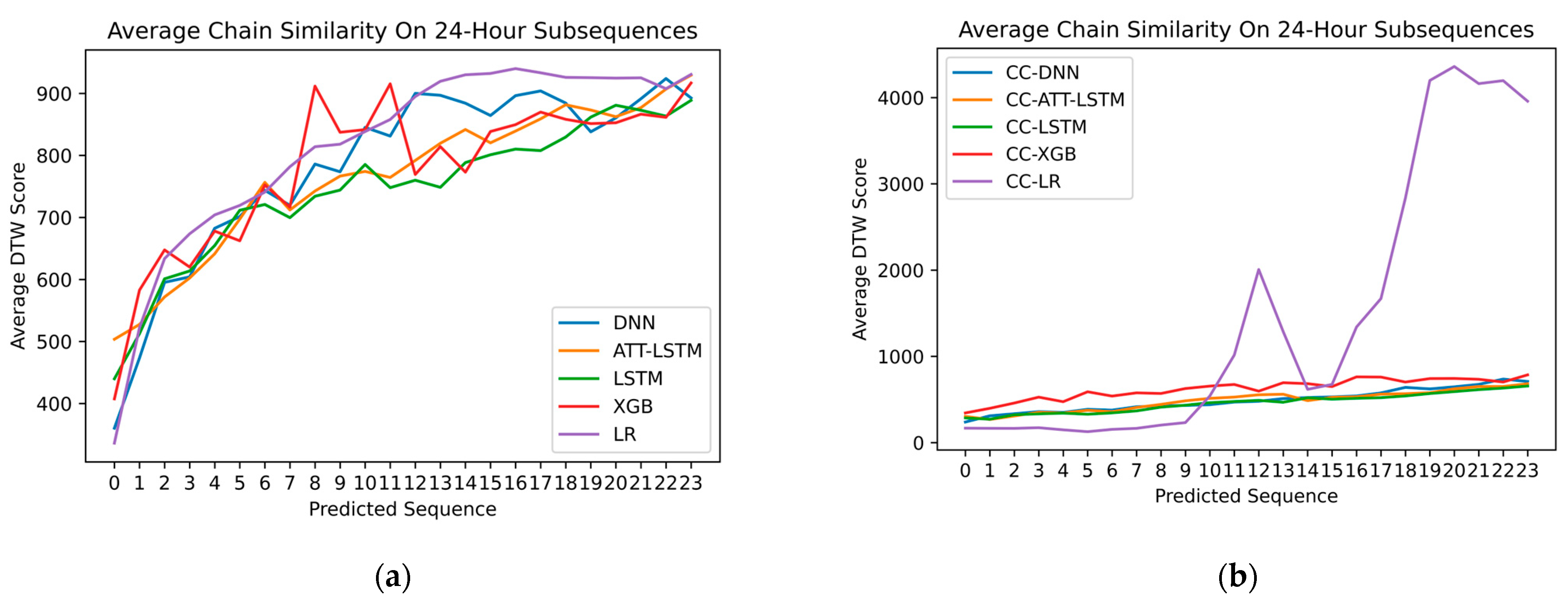

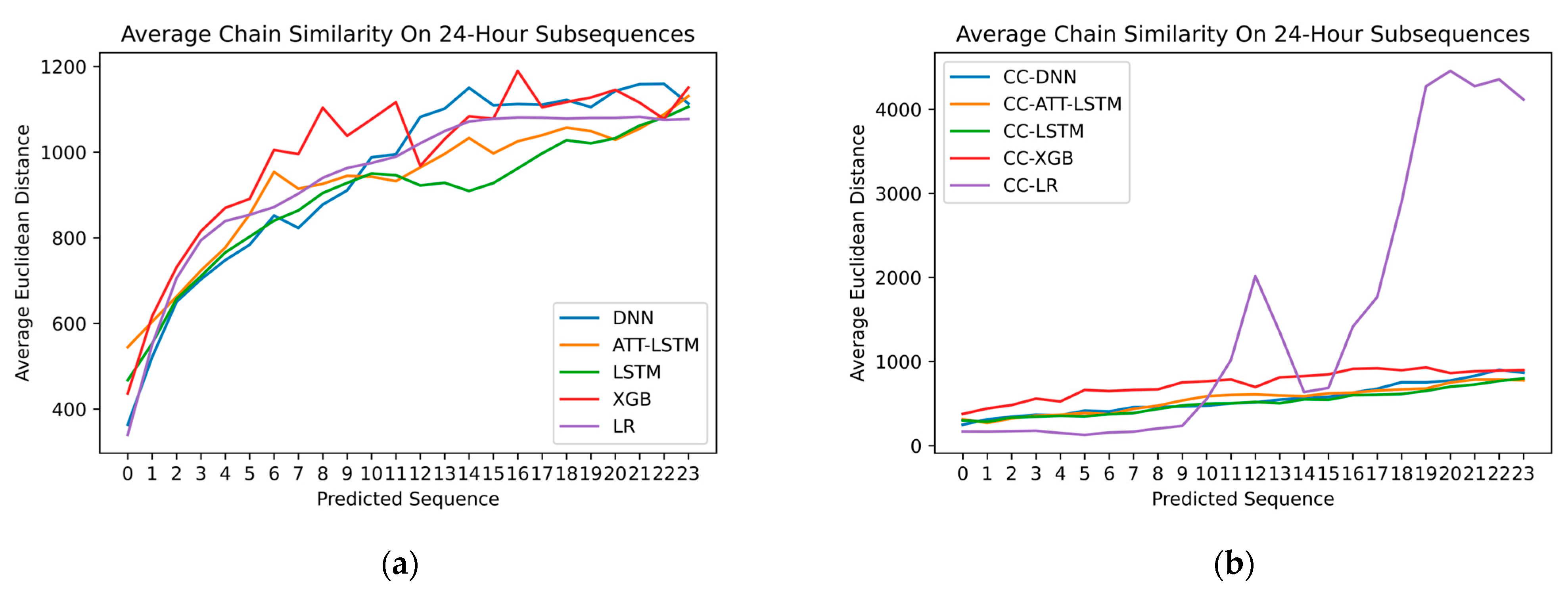

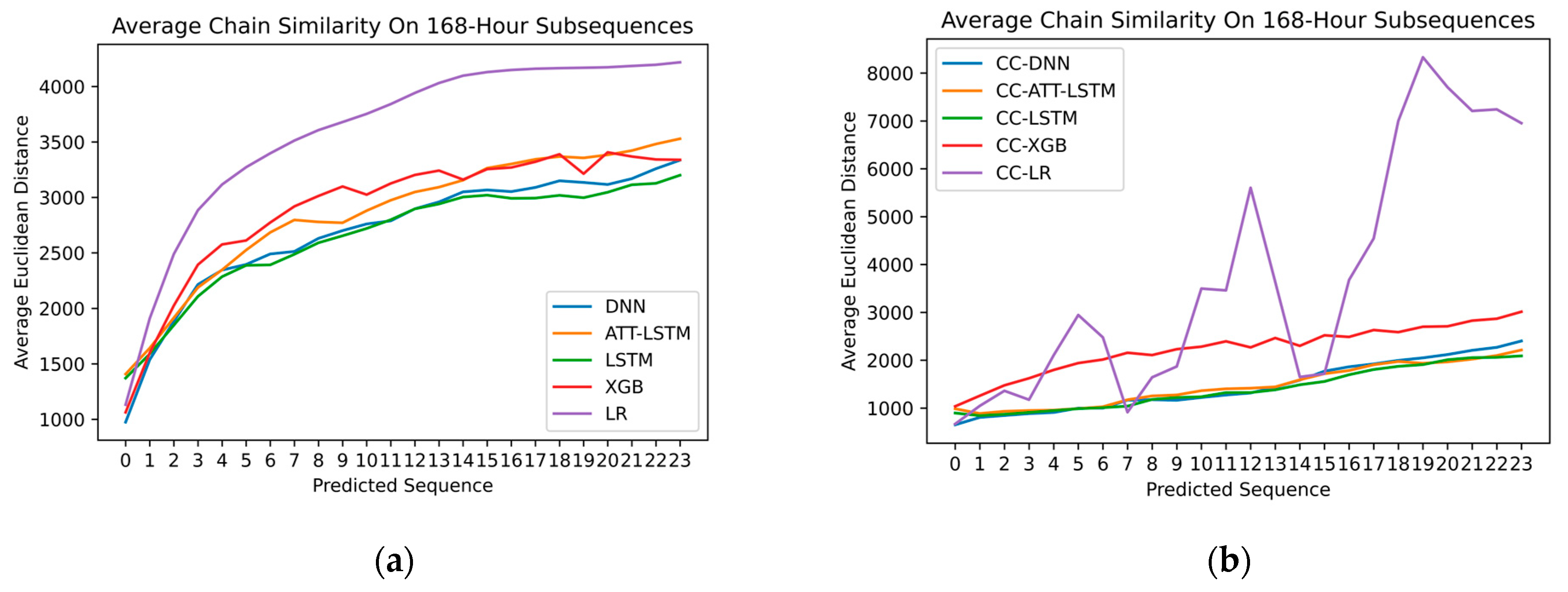

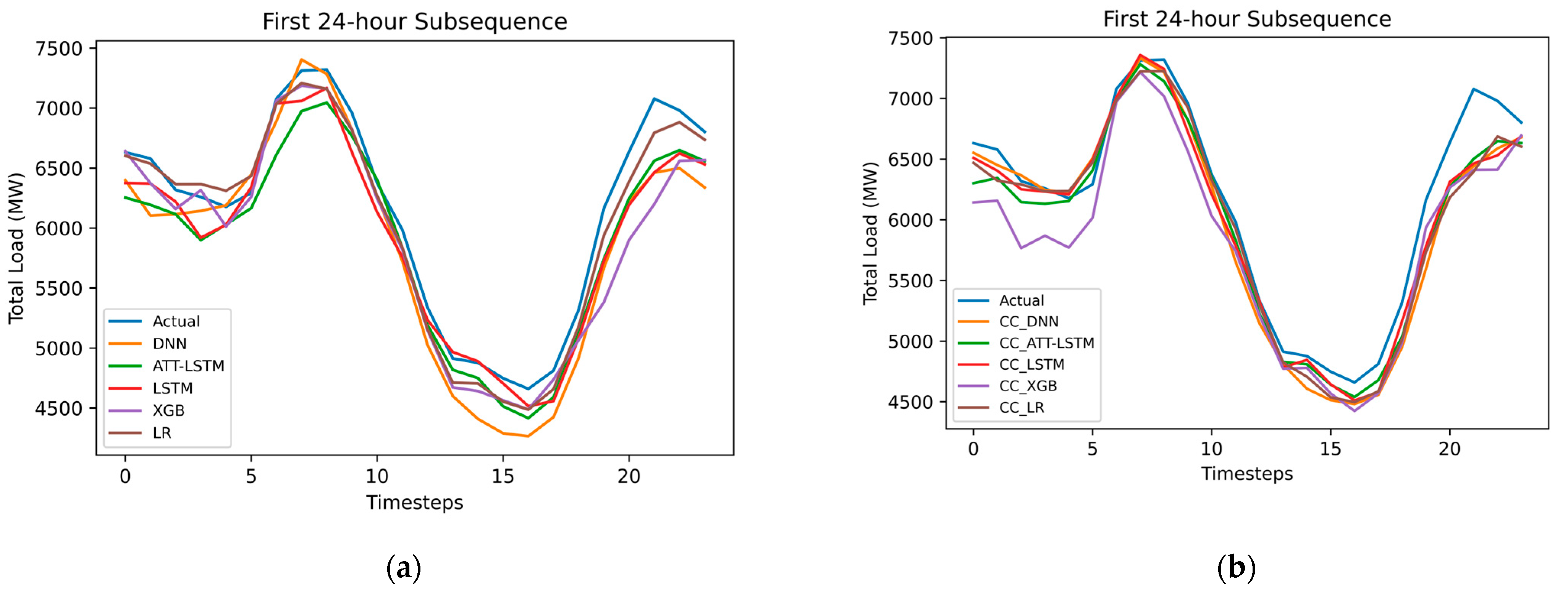

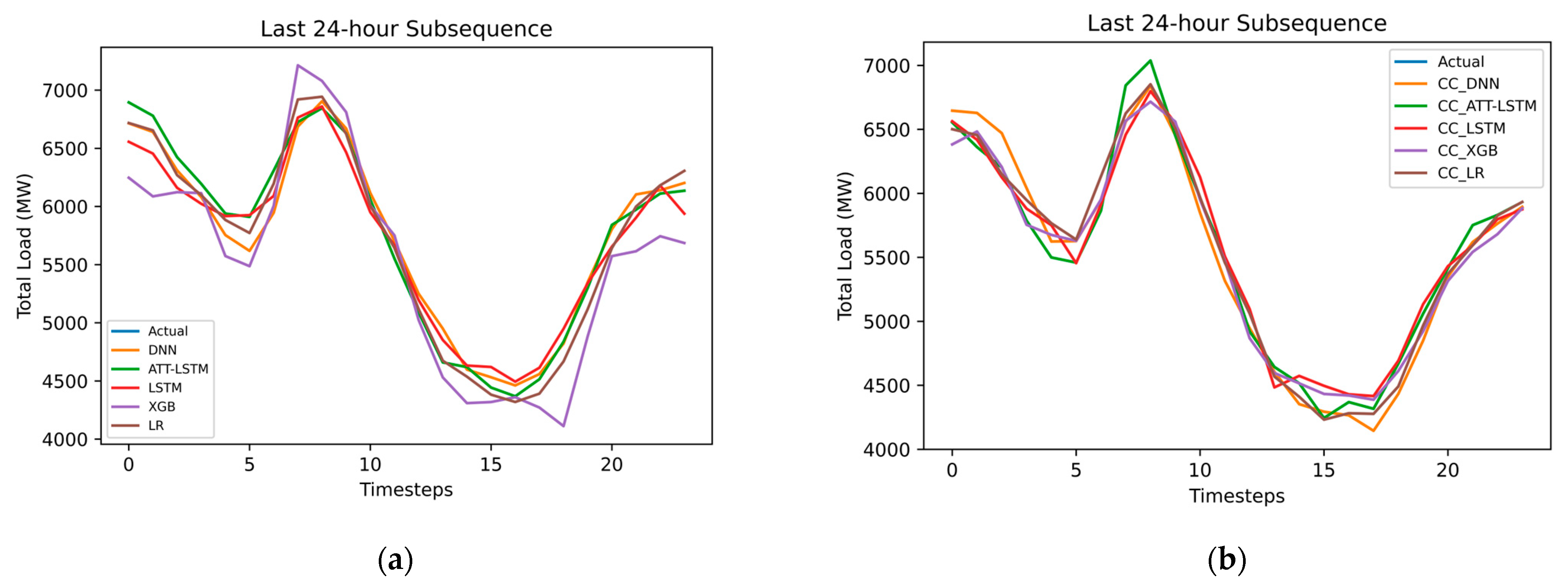

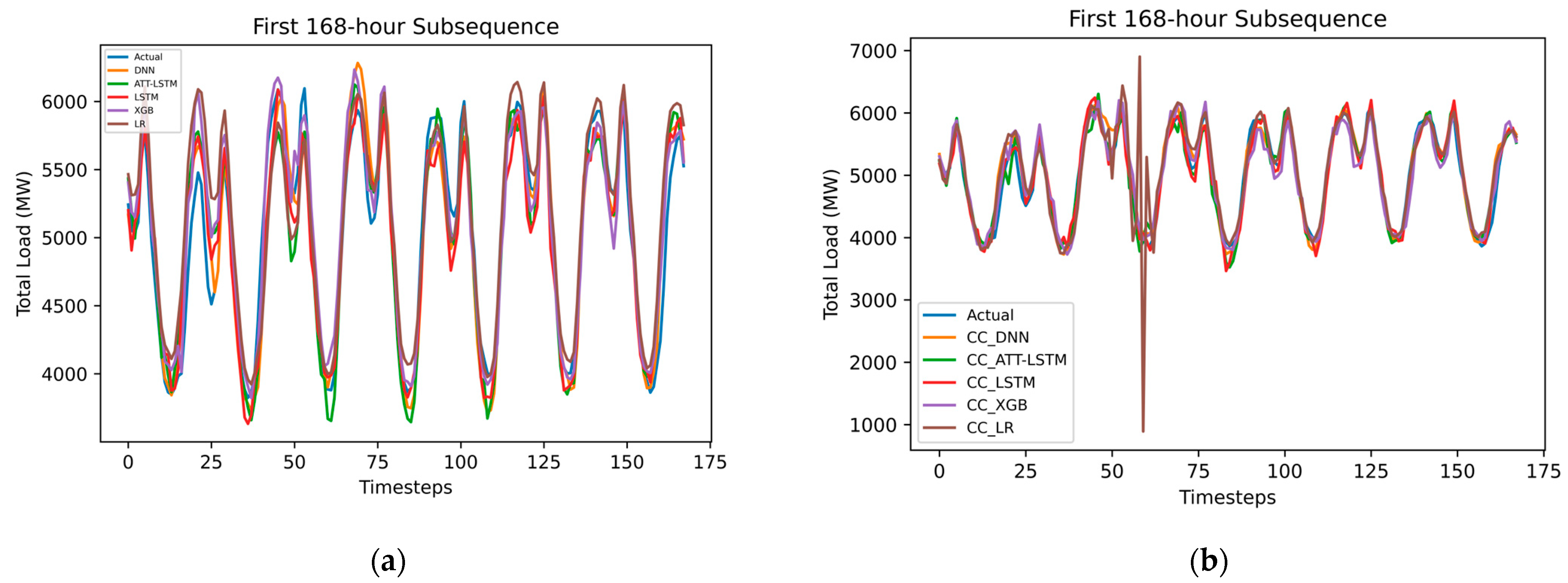

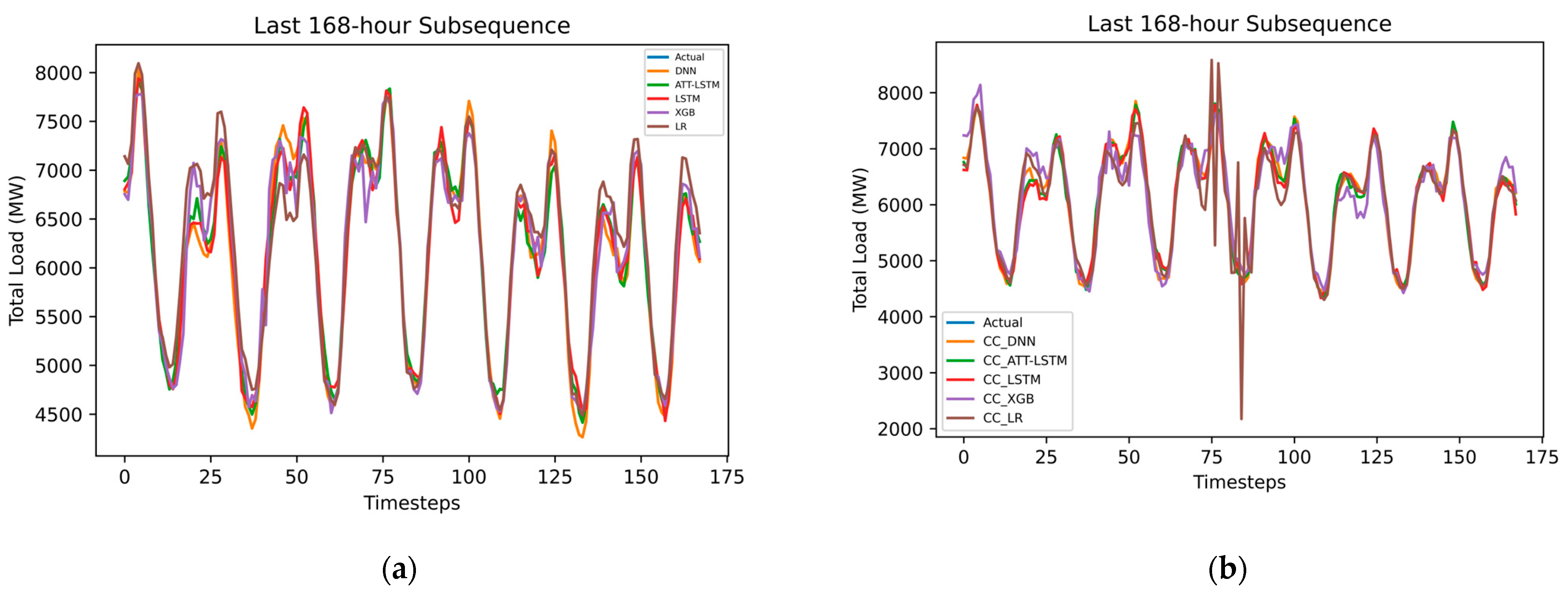

3.3. Pattern Conservation Quality

4. Discussion

5. Conclusions

- The important spectrum of nonlinear components enhanced the generalization capabilities of tree-based and neural network architectures since the diverse fine-grained representation of the input resulted in lower and more stable error profiles.

- The LSTM and DNN architectures benefited the most from this combinatorial method since they were able to capture the exposed nonlinearities more efficiently. The CC-LSTM model exhibited reduced MAPE by 46.87% when compared to the baseline. Similarly, the CC-DNN yielded a MAPE improvement of 42.76% over the baseline, reducing its MAPE measurement to 1.949. The studied models achieved a greater performance boost when compared to baseline improvements observed in relevant literature.

- Simpler linear kernels such as the LR model exhibited distinct instabilities due to their inability to handle the intrinsic nonlinearities of the decomposed input.

- The introduction of an intuitive and simple evaluation method based on the concept of time-series chains enabled the enhancement of the traditional error-focused framework in a direction that is aligned with the goals of modern decomposition methods regarding pattern evolution. This method provided an evaluation perspective that was unexplored by the literature of decomposition-based short-term load estimators.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| ADF | Augmented Dickey-Fuller |

| Att-LSTM | Attention Long Short-Term Memory |

| CC-Att-LSTM | Combinatorial Component Attention Long Short-Term Memory |

| CC-DNN | Combinatorial Component Deep Neural Network |

| CC-LR | Combinatorial Component Linear Regression |

| CC-LSTM | Combinatorial Component Long Short-Term Memory |

| CC-XGB | Combinatorial Component Extreme Gradient Boosting |

| DNN | Deep Neural Network |

| DTW | Dynamic Time Warping |

| EMD | Empirical Mode Decomposition |

| LR | Linear Regression |

| LSTM | Long Short-Term Memory |

| MAE | Mean Absolute Error |

| MAPE | Mean Absolute Percentage Error |

| MLP | Multilayer Perceptron |

| MSE | Mean Squared Error |

| ReLU | Rectified Linear Unit |

| RMSE | Root Mean Squared Error |

| RNN | Recurrent Neural Network |

| SHAP | Shapley Additive Explanations |

| SSA | Singular Spectrum Analysis |

| STL | Seasonal-Trend decomposition using Locally estimated scatterplot smoothing |

| SVD | Singular Value Decomposition |

| SVR | Support Vector Regression |

| VMD | Variational Mode Decomposition |

| WAUCD | Weighted Average Unanchored Chain Divergence |

| XGBoost | Extreme Gradient Boosting model |

References

- Khan, S. Short-Term Electricity Load Forecasting Using a New Intelligence-Based Application. Sustainability 2023, 15, 12311. [Google Scholar] [CrossRef]

- Feinberg, E.A.; Genethliou, D. Load Forecasting. In Applied Mathematics for Restructured Electric Power Systems, 2nd ed.; Chow, J.H., Wu, F.F., Momoh, J., Eds.; Springer: Boston, MA, USA, 2005; pp. 269–285. [Google Scholar] [CrossRef]

- Möbius, T.; Watermeyer, M.; Grothe, O.; Müsgens, F. Enhancing energy system models using better load forecasts. Energy Syst. 2023. [Google Scholar] [CrossRef]

- Kozak, D.; Holladay, S.; Fasshauer, G.E. Intraday Load Forecasts with Uncertainty. Energies 2019, 12, 1833. [Google Scholar] [CrossRef]

- Kavanagh, K.; Barrett, M.; Conlon, M. Short-term electricity load forecasting for the Integrated Single Electricity Market (I-SEM). In Proceedings of the 2017 52nd International Universities Power Engineering Conference (UPEC), Crete, Greece, 28–31 August 2017. [Google Scholar] [CrossRef]

- Kazmi, H.; Zhenmin Tao, Z. How good are TSO load and renewable generation forecasts: Learning curves, challenges, and the road ahead. Appl. Energy 2022, 323, 119565. [Google Scholar] [CrossRef]

- Melo, J.V.J.; Lira, G.R.S.; Costa, E.G.; Leite Neto, A.F.; Oliveira, I.B. Short-Term Load Forecasting on Individual Consumers. Energies 2022, 15, 5856. [Google Scholar] [CrossRef]

- Erdiwansyah; Mahidin; Husin, H.; Nasaruddin; Zaki, M. A critical review of the integration of renewable energy sources with various technologies. Prot. Control. Mod. Power Syst. 2021, 6, 3. [Google Scholar] [CrossRef]

- Kolkowska, N. Challenges in Renewable Energy. Available online: https://sustainablereview.com/challenges-in-renewable-energy/ (accessed on 18 February 2024).

- Moura, P.; de Almeida, A. Methodologies and Technologies for the Integration of Renewable Resources in Portugal. Renew. Energy World Eur. 2009, 9, 55–60. [Google Scholar]

- Cai, C.; Tao, Y.; Zhu, T.; Deng, Z. Short-Term Load Forecasting Based on Deep Learning Bidirectional LSTM Neural Network. Appl. Sci. 2021, 11, 8129. [Google Scholar] [CrossRef]

- Ackerman, S.; Farchi, E.; Raz, O.; Zalmanovici, M.; Dube, P. Detection of data drift and outliers affecting machine learning model performance over time. arXiv 2021, arXiv:2012.09258. [Google Scholar] [CrossRef]

- Lu, J.; Liu, A.; Dong, F.; Gu, F.; Gama, J.; Zhang, G. Learning under Concept Drift: A Review. IEEE Trans. Knowl. Data Eng. 2018, 31, 2346–2363. [Google Scholar] [CrossRef]

- Cordeiro-Costas, M.; Villanueva, D.; Eguía-Oller, P.; Martínez-Comesaña, M.; Ramos, S. Load Forecasting with Machine Learning and Deep Learning Methods. Appl. Sci. 2023, 13, 7933. [Google Scholar] [CrossRef]

- Dai Haleema, S. Short-term load forecasting using statistical methods: A case study on Load Data. Int. J. Eng. Res. Technol. 2020, 9, 516–520. [Google Scholar] [CrossRef]

- Kontogiannis, D.; Bargiotas, D.; Daskalopulu, A.; Tsoukalas, L.H. Explainability analysis of weather variables in short-term load forecasting. In Proceedings of the 2023 14th International Conference on Information, Intelligence, Systems & Applications (IISA), Volos, Greece, 10–12 July 2023. [Google Scholar] [CrossRef]

- Mbuli, N.; Mathonsi, M.; Seitshiro, M.; Pretorius, J.-H.C. Decomposition forecasting methods: A review of applications in Power Systems. Energy Rep. 2020, 6, 298–306. [Google Scholar] [CrossRef]

- Amral, N.; Ozveren, C.S.; King, D. Short term load forecasting using multiple linear regression. In Proceedings of the 2007 42nd International Universities Power Engineering Conference, Brighton, UK, 4–6 September 2007. [Google Scholar] [CrossRef]

- Ashraf, A.; Haroon, S.S. Short-term load forecasting based on Bayesian ridge regression coupled with an optimal feature selection technique. Int. J. Adv. Nat. Sci. Eng. Res. 2023, 7, 435–441. [Google Scholar] [CrossRef]

- Ziel, F. Modelling and forecasting electricity load using Lasso methods. In Proceedings of the 2015 Modern Electric Power Systems (MEPS), Wroclaw, Poland, 6–9 July 2015. [Google Scholar] [CrossRef]

- Srivastava, A.K. Short term load forecasting using regression trees: Random Forest, bagging and m5p. Int. J. Adv. Trends Comput. Sci. Eng. 2020, 9, 1898–1902. [Google Scholar] [CrossRef]

- Abbasi, R.A.; Javaid, N.; Ghuman, M.N.J.; Khan, Z.A.; Ur Rehman, S.; Amanullah. Short Term Load Forecasting Using XGBoost. In Web, Artificial Intelligence and Network Applications WAINA 2019. Advances in Intelligent Systems and Computing; Barolli, L., Takizawa, M., Xhafa, F., Enokido, T., Eds.; Springer: Cham, Germany, 2019; Volume 927. [Google Scholar] [CrossRef]

- He, W. Load forecasting via Deep Neural Networks. Procedia Comput. Sci. 2017, 122, 308–314. [Google Scholar] [CrossRef]

- Kontogiannis, D.; Bargiotas, D.; Daskalopulu, A. Minutely Active Power Forecasting Models Using Neural Networks. Sustainability 2020, 12, 3177. [Google Scholar] [CrossRef]

- Ali, A.; Jasmin, E.A. Deep Learning Networks for short term load forecasting. In Proceedings of the 2023 International Conference on Control, Communication and Computing (ICCC), Thiruvananthapuram, India, 19–21 May 2023. [Google Scholar] [CrossRef]

- Kontogiannis, D.; Bargiotas, D.; Daskalopulu, A.; Tsoukalas, L.H. A Meta-Modeling Power Consumption Forecasting Approach Combining Client Similarity and Causality. Energies 2021, 14, 6088. [Google Scholar] [CrossRef]

- Kontogiannis, D.; Bargiotas, D.; Daskalopulu, A.; Arvanitidis, A.I.; Tsoukalas, L.H. Error Compensation Enhanced Day-Ahead Electricity Price Forecasting. Energies 2022, 15, 1466. [Google Scholar] [CrossRef]

- Laitsos, V.; Vontzos, G.; Bargiotas, D.; Daskalopulu, A.; Tsoukalas, L.H. Enhanced Automated Deep Learning Application for Short-Term Load Forecasting. Mathematics 2023, 11, 2912. [Google Scholar] [CrossRef]

- Laitsos, V.; Vontzos, G.; Bargiotas, D.; Daskalopulu, A.; Tsoukalas, L.H. Data-Driven Techniques for Short-Term Electricity Price Forecasting through Novel Deep Learning Approaches with Attention Mechanisms. Energies 2024, 17, 1625. [Google Scholar] [CrossRef]

- Zahid, M.; Ahmed, F.; Javaid, N.; Abbasi, R.; Zainab Kazmi, H.; Javaid, A.; Bilal, M.; Akbar, M.; Ilahi, M. Electricity price and load forecasting using enhanced convolutional neural network and enhanced support vector regression in smart grids. Electronics 2019, 8, 122. [Google Scholar] [CrossRef]

- Peng, Y.; Wang, Y.; Lu, X.; Li, H.; Shi, D.; Wang, Z.; Li, J. Short-term load forecasting at different aggregation levels with predictability analysis. In Proceedings of the 2019 IEEE Innovative Smart Grid Technologies—Asia (ISGT Asia), Chengdu, China, 21–24 May 2019. [Google Scholar] [CrossRef]

- Dong, Y.; Ma, X.; Ma, C.; Wang, J. Research and Application of a Hybrid Forecasting Model Based on Data Decomposition for Electrical Load Forecasting. Energies 2016, 9, 1050. [Google Scholar] [CrossRef]

- Qiuyu, L.; Qiuna, C.; Sijie, L.; Yun, Y.; Binjie, Y.; Yang, W.; Xinsheng, Z. Short-term load forecasting based on load decomposition and numerical weather forecast. In Proceedings of the 2017 IEEE Conference on Energy Internet and Energy System Integration (EI2), Beijing, China, 26–28 November 2017. [Google Scholar] [CrossRef]

- Cheng, L.; Bao, Y.; Tang, L.; Di, H. Very-short-term load forecasting based on empirical mode decomposition and deep neural network. IEEJ Trans. Electr. Electron. Eng. 2019, 15, 252–258. [Google Scholar] [CrossRef]

- Bedi, J.; Toshniwal, D. Energy load time-series forecast using decomposition and autoencoder integrated memory network. Appl. Soft Comput. 2020, 93, 106390. [Google Scholar] [CrossRef]

- Safari, N.; Price, G.C.D.; Chung, C.Y. Analysis of empirical mode decomposition-based load and renewable time series forecasting. In Proceedings of the 2020 IEEE Electric Power and Energy Conference (EPEC), Edmonton, AB, Canada, 9–10 November 2020. [Google Scholar] [CrossRef]

- Langenberg, J. Improving Short-Term Load Forecasting Accuracy with Novel Hybrid Models after Multiple Seasonal and Trend Decomposition. Bachelor’s Thesis, Erasmus School of Economics, Rotterdam, The Netherlands, 2020. [Google Scholar]

- Taheri, S.; Talebjedi, B.; Laukkanen, T. Electricity demand time series forecasting based on empirical mode decomposition and long short-term memory. Energy Eng. 2021, 118, 1577–1594. [Google Scholar] [CrossRef]

- Stratigakos, A.; Bachoumis, A.; Vita, V.; Zafiropoulos, E. Short-Term Net Load Forecasting with Singular Spectrum Analysis and LSTM Neural Networks. Energies 2021, 14, 4107. [Google Scholar] [CrossRef]

- Pham, M.-H.; Nguyen, M.-N.; Wu, Y.-K. A novel short-term load forecasting method by combining the deep learning with singular spectrum analysis. IEEE Access 2021, 9, 73736–73746. [Google Scholar] [CrossRef]

- Zhang, Q.; Wu, J.; Ma, Y.; Li, G.; Ma, J.; Wang, C. Short-term load forecasting method with variational mode decomposition and stacking model fusion. Sustain. Energy Grids Netw. 2022, 30, 100622. [Google Scholar] [CrossRef]

- Liu, H.; Xiong, X.; Yang, B.; Cheng, Z.; Shao, K.; Tolba, A. A Power Load Forecasting Method Based on Intelligent Data Analysis. Electronics 2023, 12, 3441. [Google Scholar] [CrossRef]

- Sun, L.; Lin, Y.; Pan, N.; Fu, Q.; Chen, L.; Yang, J. Demand-Side Electricity Load Forecasting Based on Time-Series Decomposition Combined with Kernel Extreme Learning Machine Improved by Sparrow Algorithm. Energies 2023, 16, 7714. [Google Scholar] [CrossRef]

- Duong, N.-H.; Nguyen, M.-T.; Nguyen, T.-H.; Tran, T.-P. Application of seasonal trend decomposition using loess and long short-term memory in peak load forecasting model in Tien Giang. Eng. Technol. Appl. Sci. Res. 2023, 13, 11628–11634. [Google Scholar] [CrossRef]

- Huang, W.; Song, Q.; Huang, Y. Two-Stage Short-Term Power Load Forecasting Based on SSA–VMD and Feature Selection. Appl. Sci. 2023, 13, 6845. [Google Scholar] [CrossRef]

- Wood, M.; Ogliari, E.; Nespoli, A.; Simpkins, T.; Leva, S. Day Ahead Electric Load Forecast: A Comprehensive LSTM-EMD Methodology and Several Diverse Case Studies. Forecasting 2023, 5, 297–314. [Google Scholar] [CrossRef]

- Sohrabbeig, A.; Ardakanian, O.; Musilek, P. Decompose and Conquer: Time Series Forecasting with Multiseasonal Trend Decomposition Using Loess. Forecasting 2023, 5, 684–696. [Google Scholar] [CrossRef]

- Yin, C.; Wei, N.; Wu, J.; Ruan, C.; Luo, X.; Zeng, F. An Empirical Mode Decomposition-Based Hybrid Model for Sub-Hourly Load Forecasting. Energies 2024, 17, 307. [Google Scholar] [CrossRef]

- Filho, M. How to Measure Time Series Similarity in Python. Available online: https://forecastegy.com/posts/how-to-measure-time-series-similarity-in-python/ (accessed on 19 February 2024).

- Müller, M. Dynamic time warping. In Information Retrieval for Music and Motion; Springer: Berlin/Heidelberg, Germany, 2007; pp. 69–84. [Google Scholar] [CrossRef]

- Time Series Components. Available online: https://otexts.com/fpp2/components.html (accessed on 19 February 2024).

- Cleveland, R.B.; Cleveland, W.S.; McRae, J.E.; Terpenning, I. STL: A Seasonal-Trend Decomposition Procedure Based on Loess (with Discussion). J. Off. Stat. 1990, 6, 3–73. [Google Scholar]

- Hassani, H. Singular Spectrum Analysis: Methodology and comparison. J. Data Sci. 2021, 5, 239–257. [Google Scholar] [CrossRef]

- Huang, N.E.; Shen, Z.; Long, S.R.; Wu, M.C.; Shih, H.H.; Zheng, Q.; Yen, N.-C.; Tung, C.C.; Liu, H.H. The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 1998, 454, 903–995. [Google Scholar] [CrossRef]

- Uyanık, G.K.; Güler, N. A study on multiple linear regression analysis. Procedia-Soc. Behav. Sci. 2013, 106, 234–240. [Google Scholar] [CrossRef]

- Deng, X.; Ye, A.; Zhong, J.; Xu, D.; Yang, W.; Song, Z.; Zhang, Z.; Guo, J.; Wang, T.; Tian, Y.; et al. Bagging–XGBoost algorithm based extreme weather Identification and short-term load forecasting model. Energy Rep. 2022, 8, 8661–8674. [Google Scholar] [CrossRef]

- Perceptron Learning Algorithm: A Graphical Explanation of Why It Works, Medium. 2018. Available online: https://towardsdatascience.com/perceptron-learning-algorithm-d5db0deab975 (accessed on 25 May 2024).

- Christensen, B.K.; Matrix representation of a Neural Network. Technical University of Denmark [Preprint]. Available online: https://orbit.dtu.dk/en/publications/matrix-representation-of-a-neural-network (accessed on 25 May 2024).

- Ramchoun, H.; Idrissi, M.A.J.; Ghanou, Y.; Ettaouil, M. Multilayer Perceptron. In Proceedings of the 2nd international Conference on Big Data, Cloud and Applications, New York, NY, USA, 29–30 March 2017. [Google Scholar] [CrossRef]

- Understanding LSTM Networks-Colah’s Blog, Colah.Github.io. 2021. Available online: https://colah.github.io/posts/2015-08-Understanding-LSTMs/ (accessed on 10 July 2021).

- Kang, Q.; Chen, E.J.; Li, Z.-C.; Luo, H.-B.; Liu, Y. Attention-based LSTM predictive model for the attitude and position of shield machine in tunneling. Undergr. Space 2023, 13, 335–350. [Google Scholar] [CrossRef]

- Time Series Chains. Available online: https://stumpy.readthedocs.io/en/latest/Tutorial_Time_Series_Chains.html (accessed on 19 February 2024).

- Zhu, Y.; Imamura, M.; Nikovski, D.; Keogh, E. Matrix profile VII: Time Series Chains: A new primitive for time series Data Mining (Best Student Paper Award). In Proceedings of the 2017 IEEE International Conference on Data Mining (ICDM), New Orleans, LA, USA, 18–21 November 2017. [Google Scholar] [CrossRef]

- Fürnkranz, J.; Chan, P.; Craw, S.; Sammut, C.; Uther, W.; Ratnaparkhi, A.; Jin, X.; Han, J.; Yang, Y.; Morik, K.; et al. Mean Absolute Error. In Encyclopedia of Machine Learning; Springer: Boston, MA, USA, 2011; p. 652. [Google Scholar] [CrossRef]

- de Myttenaere, A.; Golden, B.; Le Grand, B.; Rossi, F. Mean Absolute Percentage Error for regression models. Neurocomputing 2016, 192, 38–48. [Google Scholar] [CrossRef]

- Wang, Z.; Bovik, A. Mean squared error: Love it or leave it? A new look at Signal Fidelity Measures. IEEE Signal Process. Mag. 2009, 26, 98–117. [Google Scholar] [CrossRef]

- Hodson, T. Root mean square error (RMSE) or mean absolute error (MAE): When to use them or not. Geosci. Model Dev. Discuss. 2022, 15, 5481–5487. [Google Scholar] [CrossRef]

- Open Power System Data. 2020. Data Package Time Series. Version 2020-10-06. Available online: https://data.open-power-system-data.org/time_series/2020-10-06 (accessed on 19 February 2024). [CrossRef]

- Feature Engineering with Sliding Windows and Lagged Inputs. Available online: https://www.bryanshalloway.com/2020/10/12/window-functions-for-resampling/ (accessed on 19 February 2024).

- Devi, K. Understanding Hold-Out Methods for Training Machine Learning Models. Available online: https://www.comet.com/site/blog/understanding-hold-out-methods-for-training-machine-learning-models/ (accessed on 19 February 2024).

- Patro, S.G.K.; Sahu, K.K. Normalization: A preprocessing stage. arXiv 2015, arXiv:1503.06462. [Google Scholar] [CrossRef]

- Schober, P.; Boer, C.; Schwarte, L.A. Correlation coefficients: Appropriate use and interpretation. Anesth. Analg. 2018, 126, 1763–1768. [Google Scholar] [CrossRef]

- Dickey, D.A.; Fuller, W.A. Distribution of the estimators for autoregressive time series with a unit root. J. Am. Stat. Assoc. 1979, 74, 427. [Google Scholar] [CrossRef]

- Activation Functions in Neural Networks [12 Types & Use Cases]. Available online: https://www.v7labs.com/blog/neural-networks-activation-functions (accessed on 19 February 2024).

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473. [Google Scholar]

- Prechelt, L. Early stopping—But when? In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2012; pp. 53–67. [Google Scholar] [CrossRef]

- Fatima, N. Enhancing performance of a Deep Neural Network: A comparative analysis of optimization algorithms. ADCAIJ Adv. Distrib. Comput. Artif. Intell. J. 2020, 9, 79–90. [Google Scholar] [CrossRef]

- GitHub-Dimkonto/Combinatorial_Decomposition: Day-Ahead Load Forecasting Model Introducing a Combinatorial Decomposition Method and a Pattern Conservation Quality Evaluation Method. Available online: https://github.com/dimkonto/Combinatorial_Decomposition (accessed on 19 February 2024).

| Correlation Threshold | MAPE (%) | () | RMSE (MW) | MAE (MW) | Features |

|---|---|---|---|---|---|

| 0.6 | 4.387 | 138,804.030 | 365.158 | 254.618 | 176 |

| 0.8 | 4.431 | 143,084.162 | 371.018 | 257.459 | 76 |

| 0.9 | 4.679 | 157,491.127 | 391.781 | 271.264 | 24 |

| Estimator | MAPE (%) | () | RMSE (MW) | MAE (MW) |

|---|---|---|---|---|

| DNN | 3.404 | 69,935.336 | 256.917 | 186.294 |

| Att-LSTM | 3.596 | 75,568.823 | 267.788 | 196.423 |

| LSTM | 3.444 | 69,168.608 | 256.490 | 188.313 |

| XGB | 4.126 | 101,673.878 | 311.678 | 225.034 |

| LR | 4.229 | 115,121.543 | 329.446 | 236.201 |

| CC-DNN | 1.949 | 24,424.991 | 143.828 | 106.340 |

| CC-Att-LSTM | 1.889 | 22,025.744 | 141.471 | 102.045 |

| CC-LSTM | 1.830 | 21,006.097 | 138.484 | 99.247 |

| CC-XGB | 3.043 | 56,562.717 | 231.141 | 166.753 |

| CC-LR | 3.207 | 734,619.059 | 709.685 | 181.810 |

| Estimator | Daily WAUCD (MW) | Weekly WAUCD (MW) |

|---|---|---|

| DNN | 849.828 | 1991.259 |

| Att-LSTM | 827.099 | 2017.401 |

| LSTM | 802.560 | 1955.416 |

| XGB | 832.679 | 2141.502 |

| LR | 884.997 | 2413.976 |

| CC-DNN | 560.374 | 1305.394 |

| CC-Att-LSTM | 548.654 | 1347.603 |

| CC-LSTM | 519.105 | 1316.265 |

| CC-XGB | 684.187 | 1824.870 |

| CC-LR | 2190.861 | 4564.749 |

| Estimator | Daily WAUCD (MW) | Weekly WAUCD (MW) |

|---|---|---|

| DNN | 1055.510 | 2969.447 |

| Att-LSTM | 1003.591 | 3161.184 |

| LSTM | 970.596 | 2899.150 |

| XGB | 1075.868 | 3177.605 |

| LR | 1027.712 | 3960.668 |

| CC-DNN | 648.328 | 1745.038 |

| CC-Att-LSTM | 634.231 | 1701.846 |

| CC-LSTM | 590.069 | 1636.850 |

| CC-XGB | 821.412 | 2501.816 |

| CC-LR | 2255.940 | 4838.714 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kontogiannis, D.; Bargiotas, D.; Fevgas, A.; Daskalopulu, A.; Tsoukalas, L.H. Combinatorial Component Day-Ahead Load Forecasting through Unanchored Time Series Chain Evaluation. Energies 2024, 17, 2844. https://doi.org/10.3390/en17122844

Kontogiannis D, Bargiotas D, Fevgas A, Daskalopulu A, Tsoukalas LH. Combinatorial Component Day-Ahead Load Forecasting through Unanchored Time Series Chain Evaluation. Energies. 2024; 17(12):2844. https://doi.org/10.3390/en17122844

Chicago/Turabian StyleKontogiannis, Dimitrios, Dimitrios Bargiotas, Athanasios Fevgas, Aspassia Daskalopulu, and Lefteri H. Tsoukalas. 2024. "Combinatorial Component Day-Ahead Load Forecasting through Unanchored Time Series Chain Evaluation" Energies 17, no. 12: 2844. https://doi.org/10.3390/en17122844

APA StyleKontogiannis, D., Bargiotas, D., Fevgas, A., Daskalopulu, A., & Tsoukalas, L. H. (2024). Combinatorial Component Day-Ahead Load Forecasting through Unanchored Time Series Chain Evaluation. Energies, 17(12), 2844. https://doi.org/10.3390/en17122844