Abstract

System health monitoring (SHM) of a ball screw laboratory system using an embedded real-time platform based on Field-Programmable Gate Array (FPGA) technology was developed. The ball screw condition assessment algorithms based on machine learning approaches implemented on multiple platforms were compared and evaluated. Studies on electric power consumption during the processing of the proposed structure of a neural network, implementing SHM, were carried out for three hardware platforms: computer, Raspberry Pi 4B, and Kria KV260. It was found that the average electrical power consumed during calculations is the lowest for the Kria platform using the FPGA system. However, the best ratio of the average power consumption to the accuracy of the neural network was obtained for the Raspberry Pi 4B. The concept of an efficient and energy-saving hardware platform that enables monitoring and analysis of the operation of the selected dynamic system was proposed. It allows for easy integration of many software environments (e.g., MATLAB and Python) with the System-on-a-Chip (SoC) platform containing an FPGA and a CPU.

1. Introduction

System health monitoring (SHM) is a hardware and software solution that enables the measurement, acquisition, and analysis of the operating parameters of a selected system to maintain it in operational condition [1]. Importantly, data analysis should take place in real time, which allows for an earlier response to emerging irregularities/anomaly detection. This type of approach is called predictive maintenance. The effect of the monitoring system is to minimize maintenance and repair costs, as well as the probability of failure. Hence, there is a need to continuously assess the condition of the machine/process and compare its current health condition with its history, which allows for future behaviour to be predicted while minimizing the risk of unplanned downtime. The practical implementation of SHM, depending on the target application, requires solving many problems regarding the selection of the target hardware platform, a set of sensors and algorithms enabling the assessment of the structural integrity of the tested system for the purpose of managing its operating conditions. The ball screw system considered in this paper is an important part of the machine tool, and its operation has a direct impact on the quality of its machining and productivity. However, the ball screw system often operates in harsh conditions and degrades. Therefore, it is extremely important to estimate the technical condition of the ball screw system and predict its remaining useful life, which can improve the reliability and efficiency of the machine tool and reduce the maintenance costs. In the literature, many methods related to the selection and location of sensors for analysing the condition of ball screws used in drives can be found. A set of semiconductor strain gauge bridge circuits has been successfully used to measure the variation in preload force on the ball screw. The arrangement of these sensors on the ball screw nut is also known. The strain gauges are mounted axially and circumferentially on the ball screw nut; they measure the strains on the nut either when it is compressed by the preload or any external force acting upon it [2]. Won Gi et al. [3], Guo-Hua Feng, and Yi-Lu Pan [4] proposed an MEMS sensor (accelerometer) mounted on nuts to measure the vibration signals of the ball screw (as a result of the interaction with the ball screw and the ball nut). This cost-effective sensing system based on MEMS allows for the installation of a sensor on each ball screw to further check the operation of the machine and monitor its health. An interesting approach is to measure the torque acting on the screw, which, according to [5], allows for better results in the early diagnosis of ball screw degradation than using MEMS sensors.

Energy efficiency is a key issue in the implementation of artificial intelligence. FPGAs, with their ability to perform parallel computations at low power consumption, excel in energy-efficient AI inference, making them the preferred choice for embedded AI and battery-powered devices. The combination of artificial intelligence and machine learning with FPGA design has opened up unprecedented opportunities for the development of various applications. The use of real-time AI inference methods, including FPGA systems, enables the full potential of machine learning to be used in solutions requiring high computing power while maintaining acceptable electrical energy consumption. As these technologies continue to develop, the synergy between AI, machine learning (ML) and FPGA has the potential to realize advanced, intelligent, and flexible measurement and control systems, including system health monitoring. FPGAs’ real-time processing capabilities and AI’s inference speed make them valuable assets in predictive maintenance applications. By analysing the sensor data and running AI algorithms on the FPGA accelerators, it is possible to detect anomalies in dynamic systems and predict the maintenance requirements proactively, minimizing the downtime and reducing the operational costs. Moreover, the reconfigurability of FPGAs allows designers to dynamically adjust the neural network architecture to optimise performance for specific tasks or adapt to changing requirements.

Shawahna et al. [6] discussed the challenges of implementing deep neural networks on FPGA in detail. Particular attention was paid to the possibility of the full use of the hardware resources of FPGA systems, including the possibility of performing fast calculations and cooperation with external memories. The specificity of deep neural networks (DNNs) and convolution neural networks (CNNs), in particular, requires large computing power and RAM [7]. Compression methods play an important role in the implementation of DNNs in FPGA systems. They significantly reduce the hardware requirements with a minimal impact on the accuracy [8,9]. An interesting, practical use of FPGA systems to implement CNN is presented in the works [10,11,12,13]. Several algorithmic optimization strategies used in FPGA hardware for CNNs are discussed, and a few of neural network FPGA-based accelerators architectures are presented.

Many data analysis methods are also considered to assess the current and future condition of ball screws. Some of them are presented in Section 3.

There is a lack of research on using modern FPGA SoCs for the real-time analysis of industrial automation systems, including ball screw drives, and understanding the energy demand based on the type of FPGA platform. There is also a lack of recommendations for choosing a type of hardware platform that provides both energy efficiency and enough performance to process SHM algorithms based on DNN or CNN neural networks. Of course, the literature contains developments of analytical models and techniques that can be used to estimate the power consumption of FPGA systems. Loubach [14] described the analytical power consumption and performance models applicable to the runtime partial reconfigurable FPGA platform. Anderson [15] proposed a capacitance prediction model that can be useful for FPGA early-power estimation and power-aware layout. Power estimation in the pre-design and pre-implementation phases of a FPGA project is also possible by using the Xilinx Power Estimator (XPE) [16]. XPE assists with architecture evaluation, device selection, appropriate power supply components, and thermal management components specific for user applications. The tool considers design resource usage, toggle rates, I/O loading, and many other factors which it combines with the device models to calculate the estimated power distribution.

In this paper, we focus on estimating the power consumed by an FPGA system performing ball screw condition assessment algorithms, based on the machine learning approach. We propose a CNN structure for predictive maintenance of a ball screw drive used for linear load transmission, employing the AMD Kria KV260 commercial Zynq UltraScale+ SoC. Research offers experimental studies on the energy efficiency of FPGA hardware. FPGA experimental results related to power consumption and classification accuracy are compare to two hardware platforms: PC and Raspberry Pi 4B, performing the same SHM algorithm. We envision that the proposed FPGA accelerator can be used in implementing various deep neural network structures with the lowest energy consumption compared to other considered hardware platforms.

In this paper, Section 2 presents the ball screw laboratory stand, including the hardware and software environment used. Section 3 describes the proposed system health monitoring CNN structure implemented on FPGA hardware platform. Section 4 shows the experimental results and discussion of these results with comparison of the evaluated hardware platforms. Section 5 concludes the proposed work and presents future directions of study.

2. Laboratory Stand

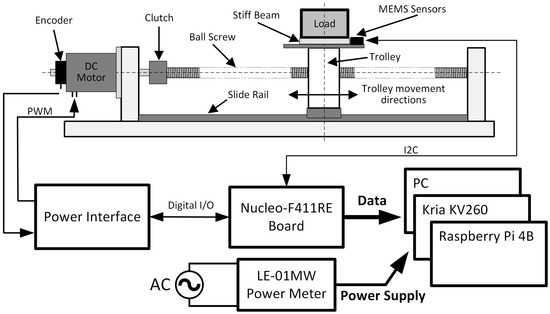

The mechanical unit of the ball screw is a basic element of machine tools, and is used for precise positioning. The ball screw is the main part of the ball screw system, and is mounted to the machine base with bearings. The laboratory stand with ball screw used in the experiments is presented in Figure 1. The DC servo motor of the Nisca NF5475E type driven ball screw converts the rotary motion into linear motion. Then, the carriage attached to the ball screw with the ball nut can move becomes linear. The direction of the trolley movement is marked in the figure. An incremental encoder is installed on the motor axis, which was used in experiments to analyse the position of the trolley relative to the ends of the slide rail. The DC motor is controlled by the PWM signal. The correct supply voltage (+12VDC) and current are provided by the power interface. Acceleration of the carriage on three axes was measured using an LSM6DSO MEMS sensor. The embedded system with a STM32F411RE processor (Nucleo-F411RE board) is responsible for controlling the operation of the sensor and preprocessing the measurement data. The prepared data can be saved to a file for later use, or sent directly to the tested hardware platform (PC, Kria KV260 or Raspberry Pi 4B). Only one platform is active during a given experiment. The active power consumed by the tested hardware platform was measured using the LE-01MW module, on the 230VAC supply voltage side [17]. Measurements were made during the processing of the SHM algorithms and before they were launched. This made it possible to filter out the background power consumed by the devices and take into account the power lost in the supply.

Figure 1.

Block diagram of laboratory stand.

Due to the time required to conduct the measurements, a significant problem was simulating the incorrect operation of the ball screw. This problem was solved by assembling a stiff beam on the trolley, transverse to its movement, on which the load mass was placed. It is also possible to adjust the position of the mass relative to the centre of the trolley. The central load position was assumed to be the one that causes the ball screw to function correctly. Changing the position of the mass introduces an asymmetrical preload on the carriage and simulates ball screw nut/bearing wear.

It is not necessary to prepare the entire pipeline (from the ball screw to the health monitoring system) to measure the highest possible performance of laboratory stands. Breaking the connection between data acquisition and processing will even be helpful to isolate the supervisory system. This allows precise measurement of the processing time and the amount of electricity consumed during this task. Therefore, the ball screw with the controller and acquisition system is separated from the data processing system. As a software environment, we used Python 3.8.10 with TensorFlow 2.10.1 to prepare the neural network model. Its architecture is presented in Table A1.

To perform experiments on a high-end environment, we have prepared the evaluation script on a Lenovo ThinkPad P15 Gen1 laptop, with Intel Core i7-10750H CPU with six cores (12 threads) up to 5 GHz (CPU frequency was 4 GHz during processing neural network on all threads). The computer worked under the control of a Windows 11 64-bit operating system. The PyCharm Community development environment was installed for software development in Python. The installed AMD Vitis Unified IDE provided an environment for comprehensive Kria KV260 application development. As a standard edge platform, we chose Raspberry Pi 4B with 4 GB RAM memory (1.5 GHz quad-core ARM Cortex-A72), as it is readily available and very popular among users. The system for performance validation was Kria KV260. As this evaluation board contains FPGA resources, special acceleration is possible. That is why this board is evaluated twice, with and without FPGA acceleration for NN data processing. Kria KV260 is Zynq UltraScale+ MPSoC (APU: dual-core Arm® Cortex®-A53 MPCore™ up to 1.3 GHz and RTPU: dual-core Arm Cortex-R5F MPCore up to 533 MHz) with 4GB DDR memory, 256 K logic cells, 144 BRAM blocks, 64 URAM blocks and 1.2 K DSP slices [18]. This provides sufficient resources for the B4096 deep learning processor unit (DPU), which is the largest one available. There are other possible choices for heterogeneous devices, such as ZCU102, but the price is much higher than that of Kria ($3234.00) [19]. Cheaper devices such as MicroZed are not supported via Vitis AI, due to the lack of FPGA resources required to perform the DPU implementation [20].

3. Proposed Algorithms/Methodology

Currently, SHM systems use many different approaches. The most commonly used is based on the Fourier transform, mathematical or statistical models, and machine learning approach, among others. Li et al. [21] proposed a Bayesian rigid regression approach based on natural frequencies. Zhang et al. [22] used deep belief networks to recognise degradation conditions. Zhang et al. [23] employed mixed deep auto-encoders with the Gaussian mixture model to create a performance degradation prognostic method. Bertolino et al. [24] created a virtual model in order to inject faults to support model-based health monitoring. Schlagenhauf et al. [25] used image data to monitor the progression of visually detectable wear progression on the ball screw. Yang et al. [26] used Bayesian dynamic programming to improve SHM in long-period structures.

In our research, we were focused only on ML-based (NN) SHM systems, because NNs are very suitable for FPGA acceleration due to their construction. Also, it has already been shown that FPGA implementation of NNs allows us to improve the efficiency of the algorithm via different types of quantisation/binarisation [27,28]. Our goal is to prove that, with the development of tools such as Vitis AI (Xilinx, San Jose, CA, USA), creating such systems is becoming cheap and easy. This may directly reduce the costs involved.

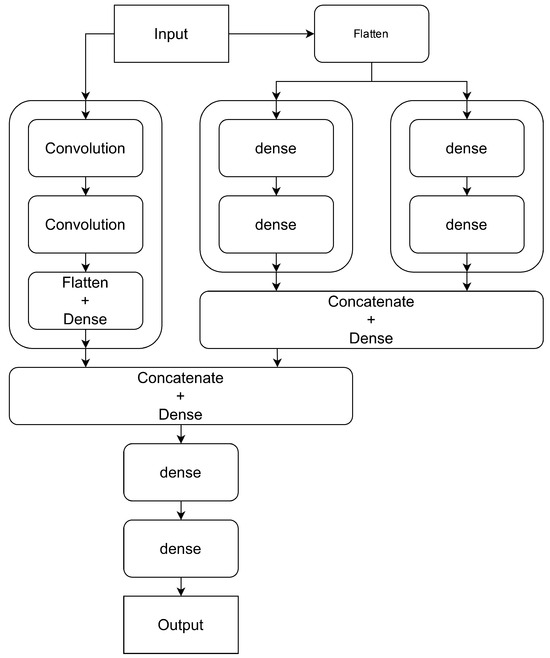

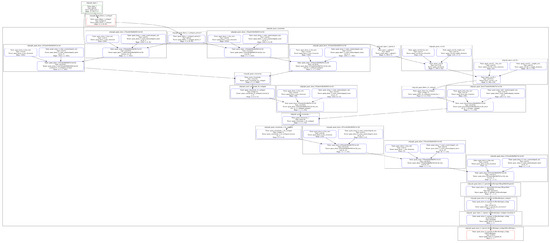

During the research, we tested a few neural networks with dense linear, dense nonlinear, or convolutional layers, but the most promising results were achieved by using all of them in parallel instead of a sequential shape. As presented in Table A1, and as can be seen in Figure 2 and Figure A1, we used three processing blocks (a convolutional one and two dense ones, with linear and rectified linear unit (ReLU) activation). The number of layers must be the same to avoid timing problems. However, the concatenate layer can process only two inputs to be able to implement it on FPGA using Vitis AI. Therefore, the convolutional block has an additional layer to compensate for time delays with the dense block. This is illustrated in Figure 2. Preparing NN in parallel has a further advantage from the FPGA point of view, as calculations performed on the CPU, regardless of the architecture, must be executed sequentially. It is worth adding that our goal was not to create the best implementation of NN for such a task, but to validate if it is possible and reasonable from a performance, accuracy, and energy efficiency point of view, without having to spend huge amounts of time on the implementation.

Figure 2.

Block diagram of the proposed NN.

To be able to implement a NN on hardware, the NN has to be quantised and compiled in the form understandable via programmable logic (PL), which is called the xmodel format. Xilinx has provided the Vitis AI tool (v3.5) to speed up and simplify this process. To introduce the whole process of accelerating NNs using FPGA resources, a step-by-step description of the activities we performed is presented below.

- Prepare and train the NN in the preferred supported environment, such as Keras v2.12 or PyTorch v1.13.1

- Save the trained NN in h5 format.

- Import the NN along with the data sample (necessary in the quantisation process) into the Vitis AI container.

- Quantise the NN using the Vitis AI environment (with an optional additional learning phase after quantisation and pruning).

- Compile the NN to xmodel (vai_c_tensorflow2 command or similar for other environments); the frozen graph on the compiled model is presented in Figure A1. A frozen graph is a Tensorflow graph that desribes the structure of the model and the trained variables relating to the weights and biases learned during the training process [29]. The “freezing” means an optimization technique that simplifies the graph by removing unnecessary nodes and operations, and converting the variables into constants. This makes the graph more suitable for deployment on production systems.

- Move the compiled model and the application script to the Zynq device.

- Load the DPU kernel on the target system.

- Run the application.

It is worth adding that there are a few restrictions for implementing the NN on FPGA using Vitis AI tools. First, in the case of Keras implementation, the model has to be created in a functional format, to be able to compile it into xmodel. Additionally, there are layer size limits which, if exceeded, will result in implementation in the processor.

Unfortunately, there are layers which are not yet supported. Therefore, it is worth confirming whether the model is compiled to appropriate resources. A full list of the supported layers can be found in the Xilinx documentation [29]. As can be seen in Figure A1, there are a few types of boundary in the frozen graph. The green one, called “subgraph_input_1”, refers to a data block, typically the input data layer of the model. Blocks with blue boundaries are our desired ones, as they are compiled into FPGA resources. The outer black boundary of block “subgraph_quant_concatenate” encloses the block that will be implemented as a whole on the FPGA resources. The red ones refer to the CPU resources and, for now, have to be implemented manually by the programmer. From the perspective of this experiment, the description of specific blocks is irrelevant. What matters is that the designed architecture is fully implementable on FPGA (blue blocks). We want to avoid the situation observed in Figure A2, in which a sequence of blue blocks is separated by red ones. This situation will require the use of shared memory, through which data will have to be transferred to the CPU and then again to the FPGA, which will significantly increase the operating time of the algorithm and reduce its energy efficiency. In this case (as shown in Figure A2), there is a problem with exceeded size of the supported layer, but a similar problem will occur in the case of using unsupported layers.

4. Experiments and Results

It is worth mentioning that our acquisition system was able to collect data in 1 kHz, but our MEMS sensor was collecting data in only 50 Hz. Due to that, it seemed most reasonable to take every 20th sample to feed the NN. It allowed us to speed up the learning process without losing the accuracy of the NN and reduce the computing power necessary to train the network.

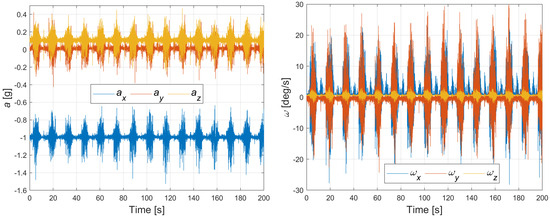

The data acquisition procedure consisted of starting the DC motor driving the ball screw with the initial position of the trolley on the left limit of the rail. After the trolley reached the right limit of the rail, the direction of rotation of the DC motor changed. This direction was changed again after reaching the left limit, creating a single cycle of movement. The duration of the experiment and the related number of cycles were selected according to the given stage of the research (NN training, ball-screw defect simulation). An example of the raw data obtained from the accelerometer and gyroscope is shown in Figure 3. Before using the collected data for training the neural networks and the subsequent tests, they were processed in such a way that we obtained a list of moving windows from the sample list (Figure 4). This is a typical approach that reduces the data and accompanying noise, and reorganises the data set. This last operation is particularly important due to the adopted method of feeding the NN with input data.

Figure 3.

Raw data from accelerometer and gyroscope.

Figure 4.

Visualisation of sequencing data.

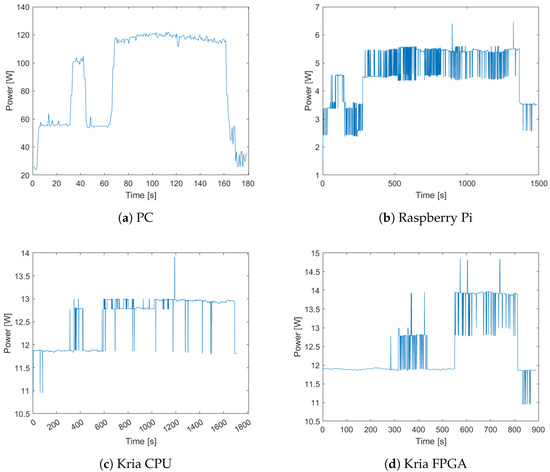

To compare the power consumption of the different devices available to process SHM, we wanted to isolate different steps of the calculations. At the beginning, we loaded the data from the disk into the RAM memory, then sequenced them, and finally calculated the neural network output. Each step can be clearly seen on the power consumption graph (Figure 5) as different power demands for the system.

Figure 5.

Power consumption during experiments.

The power used for the calculations during the processing of the data (seventh row of Table 1) is calculated by taking the average power consumed by the system without processing (just OS processes) and then subtracting it from the power consumed during processing data (Equation (1)). Finally, it only includes the increase in power consumption while sequencing the data and calculating the NN output. In the case of PC measurements, the calculations also contain the power rise while reading the data from disk. Referring to Figure 5, it can be clearly seen that only the PC power consumption (Figure 5a) reacts to the starting scripts, beginning with reading the data from the disk. Other devices react only when there is a higher demand for computational power. It can also be seen by comparing the total power with the power used for the calculations. Edge devices have a similar energy demand, regardless of whether the system is fully used or only carrying out basic operations related to the operating system. In the case of the Raspberry Pi, power consumption without calculation was too low to perform correct measurements, so we added a 20.3958 W light bulb. Figure 5b shows the power consumption with the power consumption of the light bulb already subtracted.

Table 1.

Results of the experiments.

Taking into account all differences between the compared systems, the most reasonable way to compare them objectively is to limit ourselves only to the period of increased processing during calculations related to neural networks. As they have different computational powers, the FPGA implementation of the NN can process over four times more data frames each second than the edge CPUs (Raspberry PI and Kria CPU), and it is still over three times slower than the high-end CPU in the PC implementation. We compared them in terms of power consumed in relation to processed frames (last row of Table 1).

Regarding Table 2, prices of the Raspberry Pi and Kria KV260 Vision AI starter kit were taken from the Newark (Avnet) distribution site (Phoenix, AZ, USA). The Lenovo ThinkPad price is taken from an Amazon offer, and is illustrative only, as the laptop has since been withdrawn from sale. The cost per day of work was chosen by comparing the computational capabilities of the weakest set of devices (Kria FPGA, in this case), and assuming that the rest of the day the devices are turned off. The electricity price is based on the bls.gov site for data published for October 2023. Even if we take into account that we had to buy a new edge device and already had a workstation, it is reasonable to buy an edge device, as such an investment, in the case of Kria KV260 with implemented FPGA acceleration, will return in years, which can be even faster as energy prices are increasing. We should also take into account the fact that the edge device can be closer to the monitored system, which will reduce the amount of energy necessary to transmit the data between the system and the supervisory device. A Raspberry Pi is worth considering, as its energy consumption is higher than Kria by 48%, but its precision is also higher by 12%, which may be crucial during the supervision of some systems. It is also worth remembering that the NN implementation is not optimised for the health monitoring task, so it is possible to achieve higher accuracy in FPGA and CPU implementations. The SHM model should be adjusted to a specific environment, which was not in the scope of this research. In addition to the financial aspect, it is also worth mentioning that reducing energy demand by approximately 2.7 kWh per day has a positive impact on the environment.

Table 2.

Price comparison of the evaluated solutions.

A 2.7 kWh per day reduction in energy demand is not staggering, but if we take into consideration that three Kria FPGA devices is enough to cover the computing power requirement for 23 ball screws (assuming that 50 Hz sampling is enough, which was proven by our experiments), and there are dozens of them in factories (let us assume 100), then energy reduction in scale of just one factory will cover the energy demand for two family houses with a family of two or single family house with a family of five. We can approach other systems that need supervision in the same way, which only increases the scale of energy demand reduction.

During this research, we also wanted to include an STMicroelectronics Company (STM) (Geneva, Switzerland) STM32F767ZI processor type, but our experiments showed that the proposed NN was too large to implement it in the above-mentioned device. The impossibility of using NN is due to the limitation of program (flash) and data (SRAM) memory size.

5. Summary and Discussion

In this research, we wanted to find out if it is reasonable, from an economical and environmental point of view, to invest in FPGA acceleration of SHM systems, which was proven during research. We have described what SHM systems are and why we need them. We have also presented the current approaches to SHM systems. Later, we presented our laboratory stand for research on energy consumption, efficiency, and accuracy of proposed solutions. The prepared NN was then presented and described, with an indication of the advantages of its parallel architecture. In Section 3, we have shown that rapid prototyping and implementing of such algorithms on FPGA devices is cheap and easy. We have also provided necessarily steps for this process taking into account typical problems that may arise when creating the system. Finally, we have presented our experiments, and we have found out that if we can accept the decline (11%) in the accuracy of the algorithm, the most reasonable is to select Kria KV260 with FPGA acceleration from an economical and environmental point of view, as it reduces energy demand by 67% compared to a PC with a high end processor. If we take into consideration the current energy prices, such an investment should return in approximately 4.7 years, with the assumption that we already have a high-end system and we do not have an edge device. During the experiment, we did not use techniques such as pruning or binarisation of weights to optimise the NN size, which can even increase its power efficiency, as presented in [27,28]. Additionally, we discovered that, if we can accept the low performance of the Raspberry Pi 4B, it is also reasonable to use it, as its accuracy is higher than that of Kria and its energy consumption is lower by 51% in comparison to a PC CPU. The calculations did not take into account the fact that edge devices can be closer to the monitored system, which reduces the need to transmit data over long distances and reduces the power demand. On the other hand, edge devices can be too small for some kinds of networks, which occurred when we tried to implement our NN on an STM device, as it has 13,875,629 parameters.

As the direction in which the research was conducted looks promising, the next steps that arise for further research are the impact of the network size on the differences in energy efficiency between individual systems, along with network size reduction methods, such as pruning and binarisation. In this context, it is important to optimise the FPGA architecture to increase the overall system performance, reduce program memory and data size when implementing neural networks, and analyse the impact of the FPGA system architecture on reducing electricity consumption. Additionally, the question arises as to how large cards with reconfigurable logic, such as Alveo, will compare to edge devices. Also, it can be worth comparing results of the currently used edge devices with GPU-capable ones, such as Nvidia Jetson Nano which, unfortunately, we did not have access to while conducting our research. In future research, the authors plan to use formal techniques, described, e.g., in papers [30,31], for the verification and validation of SHM system, treating the device described in the article as an IoT edge device.

Author Contributions

Conceptualization, W.K.; Methodology, M.R. and W.K.; Validation, M.R. and W.K.; Investigation, M.R.; Writing—original draft, M.R. and W.K.; Supervision, M.R. All authors have read and agreed to th epublished version of the manuscript.

Funding

This research work was sponsored by AGH UST project no 16.16.120.773.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| AI | Artificial intelligence |

| CNN | Convolution neural network |

| CPU | Central processing unit |

| DNN | Deep neural network |

| DPU | Deep learning processor unit |

| FPGA | Field-programmable gate array |

| MEMS | Microelectromechanical system |

| ML | Machine learning |

| NN | Neural network |

| OS | Operating system |

| PWM | Pulse width modulation |

| RTPU | Real-time processing unit |

| SRAM | Static random-access memory |

| SHM | System health monitoring |

| SoC | System-on-a-Chip |

Appendix A

Table A1.

Summary of the NN model.

Table A1.

Summary of the NN model.

| Layer (Type) | Output Shape | Param # | Connected to |

|---|---|---|---|

| input_1 (InputLayer) | [(None, 250, 8, 1)] | 0 | [] |

| flatten_1 (Flatten) | (None, 2000) | 0 | [‘input_1[0][0]’] |

| dense_1 (Dense) | (None, 1024) | 2,049,024 | [‘flatten_1[0][0]’] |

| dense_3 (Dense) | (None, 1024) | 2,049,024 | [‘flatten_1[0][0]’] |

| dropout (Dropout) | (None, 1024) | 0 | [‘dense_1[0][0]’] |

| dropout_2 (Dropout) | (None, 1024) | 0 | [‘dense_3[0][0]’] |

| conv2d (Conv2D) | (None, 248, 6, 128) | 1280 | [‘input_1[0][0]’] |

| dense_2 (Dense) | (None, 1024) | 1,049,600 | [‘dropout[0][0]’] |

| dense_4 (Dense) | (None, 1024) | 1,049,600 | [‘dropout_2[0][0]’] |

| conv2d_1 (Conv2D) | (None, 246, 4, 28) | 32,284 | [‘conv2d[0][0]’] |

| dropout_1 (Dropout) | (None, 1024) | 0 | [‘dense_2[0][0]’] |

| dropout_3 (Dropout) | (None, 1024) | 0 | [‘dense_4[0][0]’] |

| flatten (Flatten) | (None, 27,552) | 0 | [‘conv2d_1[0][0]’] |

| concatenate (Concatenate) | (None, 2048) | 0 | [‘dropout_1[0][0]’] |

| [‘dropout_3[0][0]’] | |||

| dense (Dense) | (None, 256) | 7,053,568 | [‘flatten[0][0]’] |

| dense_5 (Dense) | (None, 256) | 524,544 | [‘concatenate[0][0]’] |

| concatenate_1 (Concatenate) | (None, 512) | 0 | [‘dense[0][0]’] |

| [‘dense_5[0][0]’] | |||

| dense_6 (Dense) | (None, 128) | 65,664 | [‘concatenate_1[0][0]’] |

| dropout_4 (Dropout) | (None, 128) | 0 | [‘dense_6[0][0]’] |

| dense_7 (Dense) | (None, 8) | 1032 | [‘dropout_4[0][0]’] |

| dropout_5 (Dropout) | (None, 8) | 0 | [‘dense_7[0][0]’] |

| dense_8 (Dense) | (None, 1) | 9 | [‘dropout_5[0][0]’] |

| Total params: 13,875,629 | |||

| Trainable params: 13,875,629 | |||

| Non-trainable params: 0 |

Figure A1.

Frozen graph of the compiled CNN used in experiments.

Figure A2.

Frozen graph of the compiled model with exceeded layer size limit.

References

- Farrar, C.R.; Keith, W. An introduction to structural health monitoring. Phil. Trans. R. Soc. A 2007, 365, 303–315. [Google Scholar] [CrossRef] [PubMed]

- Hoh, S.; Thorpe, P.; Johnston, K.; Martin, K. Sensor Based Machine Tool Condition Monitoring System. IFAC Proc. Vol. 1988, 21, 103–110. [Google Scholar] [CrossRef]

- Lee, W.G.; Lee, J.W.; Hong, M.S.; Nam, S.H.; Jeon, Y.; Lee, M.G. Failure Diagnosis System for a Ball-Screw by Using Vibration Signals. Shock Vib. 2015, 2015, 435870. [Google Scholar] [CrossRef]

- Feng, G.H.; Pan, Y.L. Establishing a cost-effective sensing system and signal processing method to diagnose preload levels of ball screws. Mech. Syst. Signal Process. 2012, 28, 78–88. [Google Scholar] [CrossRef]

- Li, P.; Jia, X.; Feng, J.; Davari, H.; Qiao, G.; Hwang, Y.; Lee, J. Prognosability study of ball screw degradation using systematic methodology. Mech. Syst. Signal Process. 2018, 109, 45–57. [Google Scholar] [CrossRef]

- Shawahna, A.; Sait, S.M.; El-Maleh, A. FPGA-Based Accelerators of Deep Learning Networks for Learning and Classification: A Review. IEEE Access 2019, 7, 7823–7859. [Google Scholar] [CrossRef]

- Qiu, J.; Wang, J.; Yao, S.; Guo, K.; Li, B.; Zhou, E.; Yu, J.; Tang, T.; Xu, N.; Song, S.; et al. Going Deeper with Embedded FPGA Platform for Convolutional Neural Network. In Proceedings of the 2016 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, New York, NY, USA, 21–23 February 2016; pp. 26–35. [Google Scholar] [CrossRef]

- Denton, E.L.; Zaremba, W.; Bruna, J.; LeCun, Y.; Fergus, R. Exploiting Linear Structure within Convolutional Networks for Efficient Evaluation. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., Weinberger, K., Eds.; Curran Associates, Inc.: New York, NY, USA, 2014; Volume 27. [Google Scholar]

- Han, S.; Mao, H.; Dally, W.J. Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding. arXiv 2015, arXiv:1510.00149. [Google Scholar] [CrossRef]

- Mittal, S. A survey of FPGA-based accelerators for convolutional neural networks. Neural Comput. Appl. 2020, 32, 1109–1139. [Google Scholar] [CrossRef]

- Li, S.; Luo, Y.; Sun, K.; Yadav, N.; Choi, K.K. A Novel FPGA Accelerator Design for Real-Time and Ultra-Low Power Deep Convolutional Neural Networks Compared with Titan X GPU. IEEE Access 2020, 8, 105455–105471. [Google Scholar] [CrossRef]

- Zhang, L.; Tang, X.; Hu, X.; Zhou, T.; Peng, Y. FPGA-Based BNN Architecture in Time Domain with Low Storage and Power Consumption. Electronics 2022, 11, 1421. [Google Scholar] [CrossRef]

- Abd El-Maksoud, A.J.; Ebbed, M.; Khalil, A.H.; Mostafa, H. Power Efficient Design of High-Performance Convolutional Neural Networks Hardware Accelerator on FPGA: A Case Study with GoogLeNet. IEEE Access 2021, 9, 151897–151911. [Google Scholar] [CrossRef]

- Loubach, D.S. An analysis on power consumption and performance in runtime hardware reconfiguration. Int. J. Embed. Syst. 2021, 14, 277–288. [Google Scholar] [CrossRef]

- Anderson, J.H. Power Estimation Techniques for FPGAs. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2004, 12, 1015–1027. [Google Scholar] [CrossRef]

- Advanced Micro Devices, I. Xilinx Power Estimator User Guide, 19 October 2023. Available online: https://docs.amd.com/viewer/book-attachment/3s_2Q8bH4og97QGoW1~sXQ/W~Z2C7A8Sb4COkrh_oF_0w (accessed on 4 January 2024).

- F&F Filipowski sp. z.o.o. LE-01MW v2 Electric Energy Meter. 2014. Available online: https://www.fif.com.pl/en/index.php?controller=attachment&id_attachment=1603 (accessed on 15 October 2023).

- Advanced Micro Devices, I. Kria KV260 Vision AI Starter Kit Data Sheet (DS986). 2024. Available online: https://docs.xilinx.com/r/en-US/ds986-kv260-starter-kit/Summary (accessed on 8 February 2024).

- Advanced Micro Devices, I. Zynq UltraScale+ MPSoC ZCU102 Evaluation Kit. 2024. Available online: https://www.xilinx.com/products/boards-and-kits/ek-u1-zcu102-g.html (accessed on 4 February 2024).

- Advanced Micro Devices, I. Avnet MicroZed 7010 SOM. 2024. Available online: https://www.xilinx.com/products/boards-and-kits/1-1g7jkrb.html (accessed on 4 February 2024).

- Li, K.; Qiu, C.; Li, C.; He, S.; Li, B.; Luo, B.; Liu, H. Vibration-based health monitoring of ball screw in changing operational conditions. J. Manuf. Process. 2020, 53, 55–68. [Google Scholar] [CrossRef]

- Zhang, L.; Gao, H.; Wen, J.; Li, S.; Liu, Q. A deep learning-based recognition method for degradation monitoring of ball screw with multi-sensor data fusion. Microelectron. Reliab. 2017, 75, 215–222. [Google Scholar] [CrossRef]

- Zhang, X.; Luo, T.; Han, T.; Gao, H. A novel performance degradation prognostics approach and its application on ball screw. Measurement 2022, 195, 111184. [Google Scholar] [CrossRef]

- Bertolino, A.C.; Sorli, M.; Jacazio, G.; Mauro, S. Lumped parameters modelling of the EMAs’ ball screw drive with special consideration to ball/grooves interactions to support model-based health monitoring. Mech. Mach. Theory 2019, 137, 188–210. [Google Scholar] [CrossRef]

- Schlagenhauf, T.; Scheurenbrand, T.; Hofmann, D.; Krasnikow, O. Analysis of the visually detectable wear progress on ball screws. CIRP J. Manuf. Sci. Technol. 2023, 40, 1–9. [Google Scholar] [CrossRef]

- Yang, Y.; Zhu, Z.; Au, S.K. Bayesian dynamic programming approach for tracking time-varying model properties in SHM. Mech. Syst. Signal Process. 2023, 185, 109735. [Google Scholar] [CrossRef]

- Zhan, J.Y.; Yu, A.T.; Jiang, W.; Yang, Y.J.; Xie, X.N.; Chang, Z.W.; Yang, J.H. FPGA-based acceleration for binary neural networks in edge computing. J. Electron. Sci. Technol. 2023, 21, 100204. [Google Scholar] [CrossRef]

- Liang, S.; Yin, S.; Liu, L.; Luk, W.; Wei, S. FP-BNN: Binarized neural network on FPGA. Neurocomputing 2018, 275, 1072–1086. [Google Scholar] [CrossRef]

- Advanced Micro Devices, I. Vitis AI User Guide (UG1414). 2024. Available online: https://docs.amd.com/r/en-US/ug1414-vitis-ai/vai_q_tensorflow2-Supported-Operations-and-APIs (accessed on 18 March 2024).

- Krichen, M. A Survey on Formal Verification and Validation Techniques for Internet of Things. Appl. Sci. 2023, 13, 8122. [Google Scholar] [CrossRef]

- Saidi, A.; Hadj Kacem, M.; Tounsi, I.; Hadj Kacem, A. A formal approach to specify and verify Internet of Things architecture. Internet Things 2023, 24, 100972. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).