2.2. African Vulture Optimization Algorithm (AVOA)

Like most metaheuristic algorithms, the AVOA mimics the behavior of living organisms. It is based on the lifestyle and foraging behavior of Old World African vultures, whose habitats span Africa, Europe, and Asia, as described in [42]. The AVOA optimization process is divided into four steps, presented as follows:

Step One: (Population Initialization) The population size is set and the fitness of every member is calculated. The best solution is chosen as the best vulture of the first group, and the second-best solution becomes the best vulture of the second group; the other solutions then move toward one of these two leaders, selected using Equation (15), where $L_1$ and $L_2$ are parameters set before the search operation. Both lie in the range $[0, 1]$, and their sum is $1$.

Roulette wheel selection is used to determine the probability of choosing the best solution of each group, as expressed in Equation (16), where $F_i$ denotes the satisfaction rate of the vultures and $p_i$ is the probability of selecting the best solution of each group. If $L_1$ is close to $1$ and $L_2$ is close to $0$, intensification increases, whereas if $L_1$ is close to $0$ and $L_2$ is close to $1$, AVOA diversity increases.
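The leader selection of Step One can be sketched in Python as follows. This is a minimal sketch, assuming minimisation, solutions stored as lists of floats, and illustrative defaults $L_1 = 0.8$ and $L_2 = 0.2$ (an assumption; this section does not give values). The helper names `two_best`, `roulette_wheel`, and `select_leader` are hypothetical.

```python
import random

def two_best(population, fitness):
    """Return the best and second-best solutions (minimisation assumed)."""
    order = sorted(range(len(population)), key=lambda k: fitness[k])
    return population[order[0]], population[order[1]]

def roulette_wheel(weights, rng=random):
    """Return index k with probability weights[k] / sum(weights)."""
    pick = rng.random() * sum(weights)
    cumulative = 0.0
    for k, weight in enumerate(weights):
        cumulative += weight
        if pick < cumulative:
            return k
    return len(weights) - 1  # guard against floating-point round-off

def select_leader(best1, best2, L1=0.8, L2=0.2, rng=random):
    """Pick R(i): the first group's leader with probability L1, else the second's."""
    if not (0.0 <= L1 <= 1.0 and 0.0 <= L2 <= 1.0) or abs(L1 + L2 - 1.0) > 1e-9:
        raise ValueError("L1 and L2 must lie in [0, 1] and sum to 1")
    return best1 if roulette_wheel([L1, L2], rng) == 0 else best2
```

Each vulture would call `select_leader` once per iteration to obtain the leader $R(i)$ that the later update rules refer to.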

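Step Two: (Vulture starvation rate) The starvation rate of the vultures determines how far they travel in search of food, that is, how widely they cover the search space. Equation (17) models this behavior, where $F$ denotes the satisfaction rate, $maxiterations$ represents the total number of iterations, $iteration_i$ indicates the current iteration number, and $z$ is a random number in the range $[-1, 1]$ that changes at each iteration. A value of $z$ below $0$ means the vulture is starving, while a value greater than or equal to $0$ means the vulture is satisfied. When $|F| \ge 1$, the vultures search for food in different areas (exploration, Step Three); when $|F| < 1$, they search in the neighborhood of the current solutions (exploitation, Step Four).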
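A sketch of the starvation-rate computation follows. The section does not reproduce Equation (17), so the formula below is our reading of the original AVOA paper [42]: $t = h\,(\sin^{w}(\tfrac{\pi}{2}\,\tfrac{iteration_i}{maxiterations}) + \cos(\tfrac{\pi}{2}\,\tfrac{iteration_i}{maxiterations}) - 1)$ and $F = (2\,rand_1 + 1)\,z\,(1 - \tfrac{iteration_i}{maxiterations}) + t$, with $h \in [-2, 2]$, $z \in [-1, 1]$, and an assumed exponent $w = 2.5$. The helper `phase` (hypothetical) maps $|F|$ to the step that handles it.

```python
import math
import random

def starvation_rate(iteration, max_iterations, w=2.5, rng=random):
    """Satisfaction rate F of one vulture (our reading of the AVOA paper)."""
    frac = iteration / max_iterations
    h = rng.uniform(-2.0, 2.0)
    z = rng.uniform(-1.0, 1.0)  # z < 0: starving, z >= 0: satisfied
    rand1 = rng.random()
    angle = 0.5 * math.pi * frac
    # t: small iteration-dependent perturbation added to F
    t = h * (math.sin(angle) ** w + math.cos(angle) - 1.0)
    return (2.0 * rand1 + 1.0) * z * (1.0 - frac) + t

def phase(F):
    """Map |F| to the AVOA step that handles it."""
    if abs(F) >= 1.0:
        return "exploration"
    if abs(F) >= 0.5:
        return "exploitation, stage one"
    return "exploitation, stage two"
```

Because the $(1 - iteration_i/maxiterations)$ factor shrinks to zero, $|F|$ tends to fall below 1 as the run progresses, shifting the search from exploration to exploitation.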

Step Three: (Exploration) Vultures can have difficulty finding food; hence, they scan their habitat for long periods and travel long distances in search of it. Two strategies are adopted in this search process, and a parameter $P_1$, set before the optimization and lying in the range $[0, 1]$, decides which strategy is chosen.

To choose a strategy in the exploration phase, a random number $rand_{P_1}$ in the range $[0, 1]$ is generated: if $P_1 < rand_{P_1}$, Equation (22) is used; otherwise, Equation (21) is adopted. In this scenario, each vulture randomly searches the environment for food. This behavior is modeled in Equation (19).

In Equation (21), each vulture searches randomly for food in its surroundings, at a random distance from one of the best vultures of the two groups. $P(i+1)$ denotes the vulture position vector in the next iteration, and $F$ is the satisfaction rate of the vulture in the current iteration, computed using Equation (18). $R(i)$ in Equation (21) is one of the best vultures, selected via Equation (15). $X$ is a random number in the range $[0, 2]$ that acts as a random coefficient on the search scale, increasing the variety of search-space regions visited. Equation (22) gives the distance $D(i)$ between the vulture and the current optimal one. $rand_2$ is a randomly generated value in the range $[0, 1]$, and $ub$ and $lb$ denote the upper and lower bounds of the variables.
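The two exploration strategies can be sketched as below. Because the equations are not reproduced here, this is a minimal sketch following our reading of the original AVOA paper [42]: with probability $P_1$ the vulture moves to $R(i) - D(i)\,F$, where $D(i) = |X\,R(i) - P(i)|$ and $X = 2\,rand$; otherwise it is placed at $R(i) - F + rand_2\,((ub - lb)\,rand_3 + lb)$. The default $P_1 = 0.6$ and the final clipping to the bounds are assumptions.

```python
import random

def explore(P, R, F, lb, ub, P1=0.6, rng=random):
    """One AVOA exploration move for position P toward leader R (lists of floats)."""
    if rng.random() <= P1:  # P1 >= rand_P1: search around the chosen leader
        X = 2.0 * rng.random()  # random coefficient in [0, 2)
        new = [r - abs(X * r - p) * F for p, r in zip(P, R)]
    else:  # random relocation scaled by the search bounds
        rand2, rand3 = rng.random(), rng.random()
        new = [r - F + rand2 * ((u - l) * rand3 + l) for r, l, u in zip(R, lb, ub)]
    # Keep the new position inside [lb, ub].
    return [min(max(x, l), u) for x, l, u in zip(new, lb, ub)]
```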

Step Four: (Exploitation) This step is further divided into two stages.

Stage one: Stage one investigates the efficiency of the AVOA. The AVOA enters this stage when $0.5 \le |F| < 1$. A parameter $P_2$ in the range $[0, 1]$, set before the search, decides which strategy is selected: a slow siege-fight strategy is applied if $P_2 \ge rand_{P_2}$, where $rand_{P_2}$ is a random number in $[0, 1]$; otherwise, a rotational flight strategy is adopted. This behavior is modeled in Equation (23).

$rand_4$ is a random value in the range $[0, 1]$, and $d(t)$, calculated using Equation (26), is the distance between the vulture and one of the best vultures of the two groups. $S_1$ and $S_2$ are computed using Equations (27) and (28), in which $rand_5$ and $rand_6$ are random numbers in the range $[0, 1]$.
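Stage one of the exploitation step can be sketched as follows, as our reading of [42] rather than a reproduction of Equations (23) to (28): the siege-fight move is $D(i)\,(F + rand_4) - d(t)$ with $d(t) = R(i) - P(i)$, and the rotational flight is $R(i) - (S_1 + S_2)$ with $S_1 = R(i)\,\frac{rand_5\,P(i)}{2\pi}\cos P(i)$ and $S_2 = R(i)\,\frac{rand_6\,P(i)}{2\pi}\sin P(i)$. The default $P_2 = 0.4$ is an assumption, and clipping to the bounds is left to the caller.

```python
import math
import random

def exploit_stage_one(P, R, F, P2=0.4, rng=random):
    """AVOA exploitation, stage one (0.5 <= |F| < 1); P and R are lists of floats."""
    if rng.random() <= P2:  # P2 >= rand_P2: siege-fight
        X = 2.0 * rng.random()
        rand4 = rng.random()
        return [abs(X * r - p) * (F + rand4) - (r - p) for p, r in zip(P, R)]
    # Rotational flight: spiral-like move around the leader R.
    rand5, rand6 = rng.random(), rng.random()
    new = []
    for p, r in zip(P, R):
        s1 = r * (rand5 * p / (2.0 * math.pi)) * math.cos(p)
        s2 = r * (rand6 * p / (2.0 * math.pi)) * math.sin(p)
        new.append(r - (s1 + s2))
    return new
```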

Stage two: Stage two of the AVOA is employed if $|F| < 0.5$. At the start, a random number $rand_{P_3}$ is generated in the range $[0, 1]$. If $P_3 \ge rand_{P_3}$, where $P_3 \in [0, 1]$ is a parameter set before the search, a strategy known as “competition for food” is initiated, which attracts various vultures to the food source. The vulture positions are then updated via Equation (29), and Equations (30) and (31) compute $A_1$ and $A_2$.

Otherwise, when $P_3 < rand_{P_3}$, the vultures gather toward the best vulture to feast on the leftover food, and the vulture's position is updated using Equation (32), where $d$ is the problem's dimension. The Lévy flight (LF) term in Equation (32) enhances the efficiency of the AVOA; it is computed from $u$ and $v$, which are randomly generated numbers in the range $[0, 1]$.
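Stage two and the Lévy flight can be sketched as below, under these assumptions: the accumulation move averages $A_1 = BestVulture_1 - \frac{BestVulture_1\,P(i)}{BestVulture_1 - P(i)^2}\,F$ and the analogous $A_2$, the aggressive move is $R(i) - |d(t)|\,F\,\mathrm{Levy}(d)$, and the Lévy step uses Mantegna's algorithm with $\beta = 1.5$ and a 0.01 scale. This sketch draws $u$ and $v$ from a standard normal distribution, as Mantegna's method does, which differs from the text's description of $u$ and $v$ as random numbers within a range. The default $P_3 = 0.6$ and the small `eps` guarding the division are also our assumptions.

```python
import math
import random

def levy_step(dim, beta=1.5, rng=random):
    """Mantegna's algorithm for Levy-distributed steps, scaled by 0.01."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    steps = []
    for _ in range(dim):
        u = rng.gauss(0.0, 1.0) * sigma
        v = rng.gauss(0.0, 1.0)
        steps.append(0.01 * u / abs(v) ** (1 / beta))
    return steps

def exploit_stage_two(P, best1, best2, R, F, P3=0.6, rng=random, eps=1e-12):
    """AVOA exploitation, stage two (|F| < 0.5); all vectors are lists of floats."""
    if rng.random() <= P3:  # P3 >= rand_P3: vultures accumulate around both leaders
        new = []
        for p, b1, b2 in zip(P, best1, best2):
            a1 = b1 - (b1 * p) / (b1 - p * p + eps) * F
            a2 = b2 - (b2 * p) / (b2 - p * p + eps) * F
            new.append((a1 + a2) / 2.0)
        return new
    # Aggressive competition: Levy-flight jump around the leader R.
    levy = levy_step(len(P), rng=rng)
    return [r - abs(r - p) * F * lf for p, r, lf in zip(P, R, levy)]
```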

For more details about the AVOA, see [42].

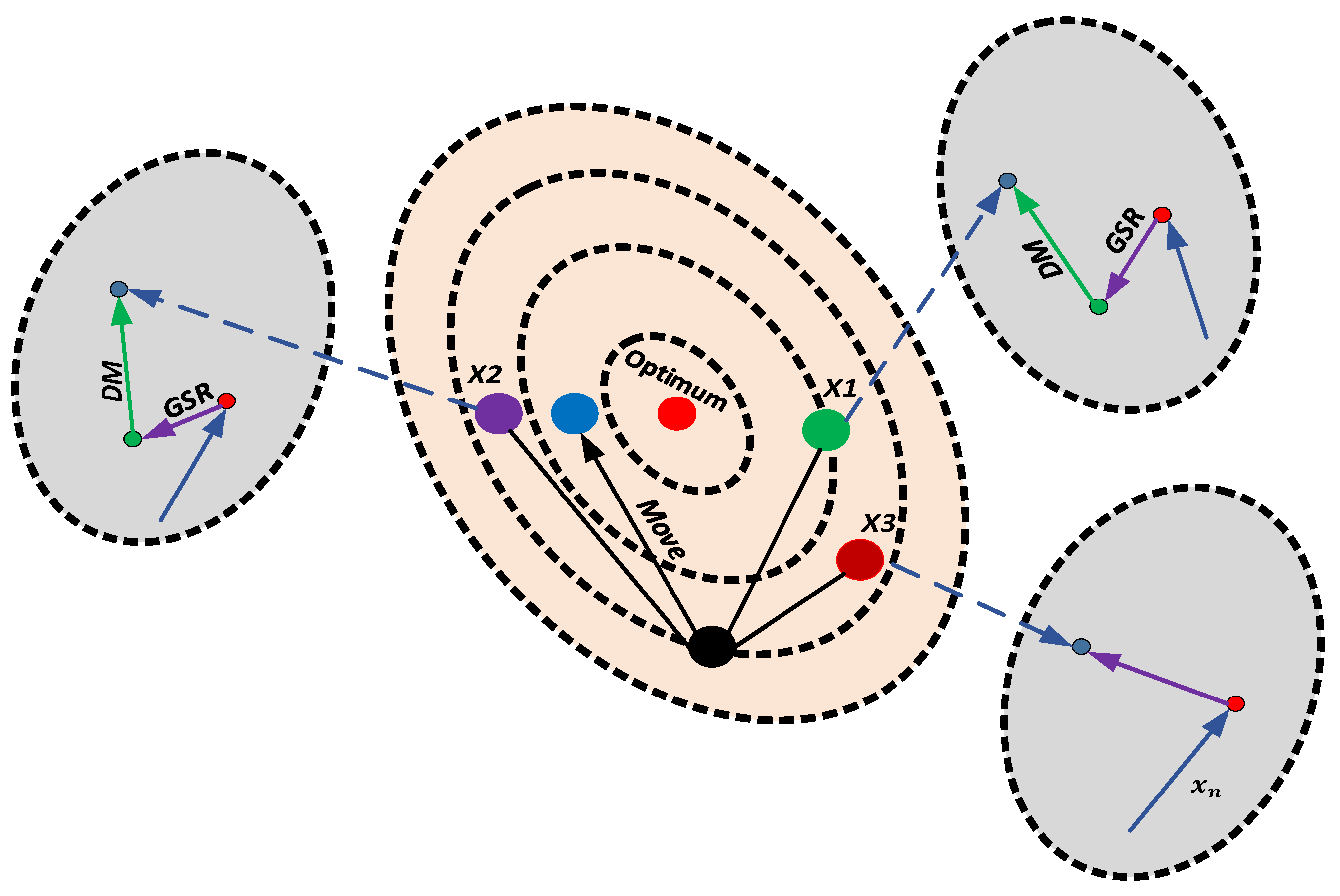

2.3. Gorilla Troop Optimization (GTO)

A new metaheuristic algorithm, developed by [43] and inspired by the group behavior of gorilla troops, mathematically models the two important phases of optimization: exploration and exploitation. The GTO algorithm uses five different operators for these two phases:

Exploitation: Follow the silverback; competition for adult females

Exploration: Migrate to unknown places, migrate around known places, and move to other gorilla groups.

2.3.1. Exploration Process

This subsection describes the exploration phase. Gorillas live in groups led and dominated by a silverback; however, gorillas sometimes move away from their group. This movement can take three forms, selected using a parameter $p$ in the range $[0, 1]$ and a random number $rand$: gorillas can move to unknown places (when $rand < p$); otherwise, they move either to known places (when a new $rand < 0.5$) or to other gorilla groups (when $rand \ge 0.5$). The first movement type allows the algorithm to survey the entire search space, the second improves the algorithm's exploration performance, and the third enhances its ability to escape local optima. Equation (35) models this behavior.

$GX(t+1)$ is the candidate position vector of a gorilla in the next iteration, and $X(t)$ is the current position vector of the gorilla. $r_1$, $r_2$, $r_3$, and $rand$ are random values in the range $[0, 1]$, updated in each iteration. $UB$ and $LB$ denote the upper and lower bounds of the variables. $X_r$ is a gorilla randomly selected from the entire population, and $GX_r$ is a randomly selected candidate gorilla position vector, which includes the positions updated in each phase.

$C$, $F$, and $L$ are computed using Equations (36)–(38). $It$ in Equation (36) is the current iteration, $MaxIt$ is the total number of iterations, $\cos$ in Equation (37) denotes the cosine function, and $r_4$ is a random value in the range $[0, 1]$, updated in each iteration. $l$ in Equation (38) is a random value in the range $[-1, 1]$; Equation (38) models the dominance of the silverback. In Equation (35), $H$ is computed using Equation (39), and $Z$ is computed using Equation (40), where $Z$ is a random value in the range $[-C, C]$ for each dimension of the problem.
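The exploration move of Equation (35), together with the coefficients from Equations (36) to (40), can be sketched as follows. As Equation (35) is not reproduced here, the three branches follow our reading of the original GTO paper [43]: $(UB - LB)\,r_1 + LB$; $(r_2 - C)\,X_r(t) + L\,H$ with $H = Z\,X(t)$; and $X(t) - L\,(L\,(X(t) - GX_r(t)) + r_3\,(X(t) - GX_r(t)))$. The default $p = 0.03$ and the per-dimension random draws are assumptions.

```python
import math
import random

def gto_coefficients(it, max_it, rng=random):
    """Return C (Eqs. 36-37) and L (Eq. 38) for the current iteration."""
    F = math.cos(2.0 * rng.random()) + 1.0   # r4 in [0, 1)
    C = F * (1.0 - it / max_it)              # shrinks to 0 as the run ends
    L = C * rng.uniform(-1.0, 1.0)           # l in [-1, 1]
    return C, L

def gto_explore(X, X_r, GX_r, lb, ub, it, max_it, p=0.03, rng=random):
    """Candidate position GX(t+1) for gorilla X; vectors are lists of floats."""
    C, L = gto_coefficients(it, max_it, rng)
    if rng.random() < p:  # migrate to an unknown place
        return [(u - l) * rng.random() + l for l, u in zip(lb, ub)]
    if rng.random() >= 0.5:  # move toward another gorilla X_r
        r2 = rng.random()
        H = [rng.uniform(-C, C) * x for x in X]  # H = Z * X(t), Z in [-C, C]
        return [(r2 - C) * xr + L * h for xr, h in zip(X_r, H)]
    r3 = rng.random()  # migrate toward a known place GX_r
    return [x - L * (L * (x - g) + r3 * (x - g)) for x, g in zip(X, GX_r)]
```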

2.3.2. Exploitation Process

The two behaviors applied here are following the silverback and competition for adult females. The silverback dominates the group, makes decisions for it, leads the gorillas to food sources, and determines their movements. Silverbacks eventually grow old and die, letting blackbacks (young male gorillas) become the leader, or other male gorillas may fight the silverback and take over the group. One of the two exploitation behaviors is selected using $C$ from Equation (36): if $C \ge W$, the follow-the-silverback behavior is selected, while if $C < W$, competition for adult females is selected. $W$ is a parameter set before optimization.

Equation (41) simulates following the silverback, where $X_{silverback}$ is the position vector of the silverback gorilla, $X(t)$ is the position vector of the gorilla, $L$ is computed using Equation (38), and $M$ is computed using Equation (42). In Equation (42), $GX_i(t)$ is the position vector of each candidate gorilla in iteration $t$, $N$ is the total number of gorillas, and $g$ is estimated via Equation (43), in which $L$ is again computed using Equation (38).
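Following the silverback can be sketched per dimension as below, assuming the forms $GX(t+1) = L\,M\,(X(t) - X_{silverback}) + X(t)$, $M = \left(\left|\frac{1}{N}\sum_{i=1}^{N} GX_i(t)\right|^{g}\right)^{1/g}$, and $g = 2^{L}$, as we read them in [43]. For $g > 0$, $M$ reduces algebraically to the absolute mean candidate position; the sketch keeps the power form to mirror the equation.

```python
def follow_silverback(X, X_silverback, GX_all, L):
    """Move gorilla X relative to the silverback (vectors are lists of floats)."""
    N = len(GX_all)
    g = 2.0 ** L  # Eq. (43)
    new = []
    for d, (x, xs) in enumerate(zip(X, X_silverback)):
        mean_d = sum(gx[d] for gx in GX_all) / N      # mean candidate position
        M = (abs(mean_d) ** g) ** (1.0 / g)           # Eq. (42)
        new.append(L * M * (x - xs) + x)              # Eq. (41)
    return new
```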

When competition for adult females is selected, the gorilla's position is updated relative to the silverback's position. In this update, $r_5$ is a random value in the range $[0, 1]$, $A$ in Equation (45) is a coefficient vector that determines the degree of violence in conflicts, $\beta$ is a parameter set before the optimization, and $E$ in Equation (45) models the effect of violence on the dimensions of the solution. If $rand \ge 0.5$, $E$ is a vector of normally distributed random values with the problem's dimensions, while if $rand < 0.5$, $E$ is a single normally distributed random value. For more details about GTO, see [43].
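Competition for adult females can be sketched as follows, assuming the update $GX(i) = X_{silverback} - (X_{silverback}\,Q - X(t)\,Q)\,A$ with $Q = 2\,r_5 - 1$ and $A = \beta\,E$, as we read it in [43]; the default $\beta = 3$ is also an assumption. The two branches for $E$ mirror the description above: a vector of normal draws, or one normal draw shared by all dimensions.

```python
import random

def compete_for_females(X, X_silverback, beta=3.0, rng=random):
    """Position of gorilla X after competing with the silverback (lists of floats)."""
    Q = 2.0 * rng.random() - 1.0  # r5 in [0, 1) mapped to [-1, 1)
    if rng.random() >= 0.5:
        E = [rng.gauss(0.0, 1.0) for _ in X]   # one normal draw per dimension
    else:
        E = [rng.gauss(0.0, 1.0)] * len(X)     # a single normal draw for all dimensions
    A = [beta * e for e in E]                  # degree of violence in the conflict
    return [xs - (xs * Q - x * Q) * a for x, xs, a in zip(X, X_silverback, A)]
```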

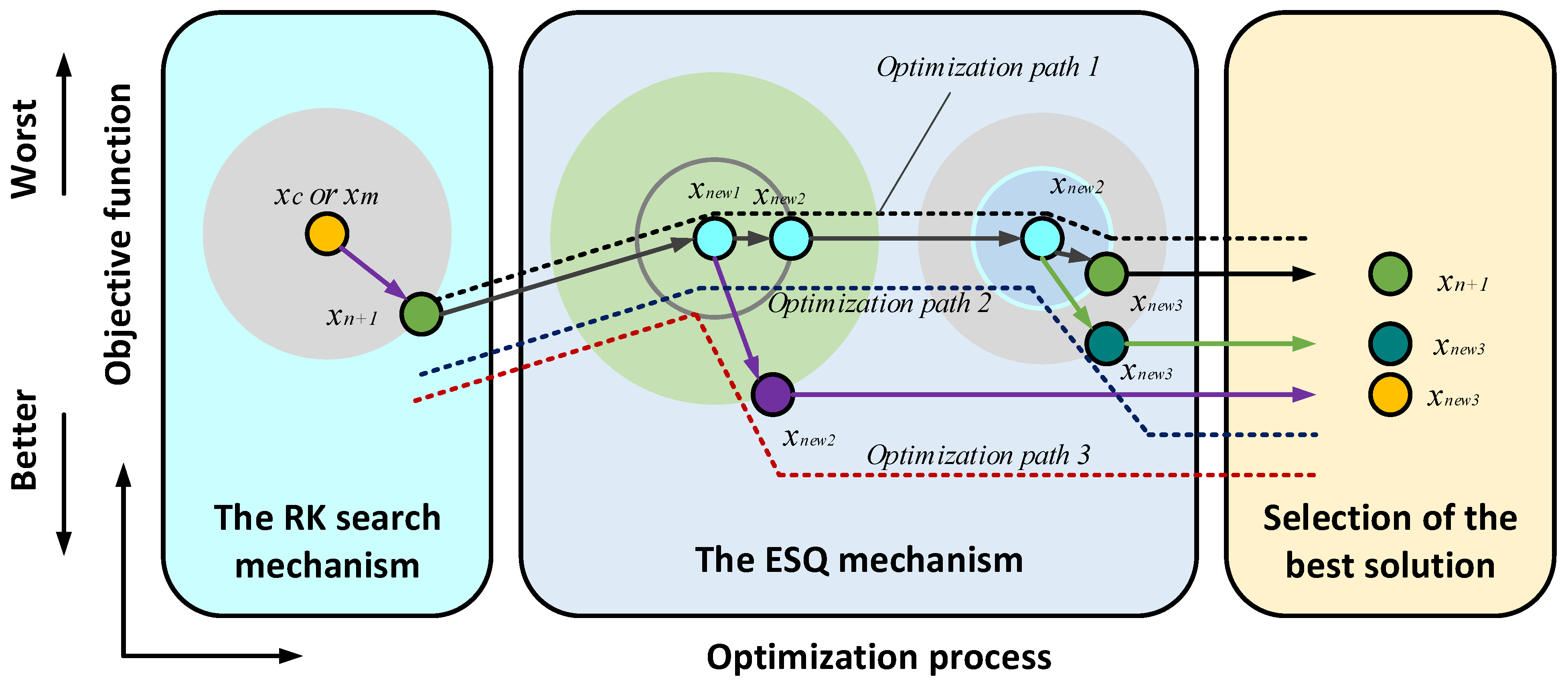

2.5. Runge-Kutta Optimization (RUN)

The RUN algorithm, developed by [45], is derived from the Runge-Kutta method for numerically solving ordinary differential equations. It has two stages: the first is based on Runge-Kutta theory, and the second is known as enhanced solution quality (ESQ). The RUN algorithm is explained as follows:

Solution Updating: RUN adopts a search mechanism (SM) based on the Runge-Kutta method to update the position of the current solution in each iteration; this is outlined in Stage One below.

Stage One: Search mechanism for updating the present solution position in RUN.

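Step 1: If $rand < 0.5$ then

$$x_{n+1} = (x_c + r \times SF \times g \times x_c) + SF \times SM + \mu \times x_s$$

Else

$$x_{n+1} = (x_m + r \times SF \times g \times x_m) + SF \times SM + \mu \times x_s'$$

End if.

Here, $\mu = 0.5 + 0.1 \times randn$, $x_s = randn \times (x_m - x_c)$, and $x_s' = randn \times (x_{r1} - x_{r2})$, where $randn$ is a normally distributed random number and $x_{r1}$ and $x_{r2}$ are randomly selected solutions.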

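$r$ is an integer equal to $1$ or $-1$; it changes the search direction and helps enhance the diversity of RUN. $g$ is a randomly generated value in the range $[0, 2]$. $SM$ is computed as in the study [30]. $SF$ is an adaptive factor computed as

$$SF = 2 \times (0.5 - rand) \times f, \qquad f = a \times \exp\left(-b \times rand \times \frac{i}{Maxi}\right),$$

where $a$ and $b$ are constants, $i$ is the current iteration, and $Maxi$ is the maximum number of iterations. The parameters $x_c$ and $x_m$ are computed as

$$x_c = \varphi \times x_n + (1 - \varphi) \times x_{r1}, \qquad x_m = \varphi \times x_b + (1 - \varphi) \times x_{lbest},$$

where $x_n$ is the current solution, $x_{r1}$ is a randomly selected solution, $\varphi$ is a randomly generated number in the range $[0, 1]$, $x_b$ is the best solution found so far, and $x_{lbest}$ is the position of the best solution found in each iteration.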
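Stage One can be sketched in Python as below. This is a minimal sketch, not the reference implementation of [45]: the two update branches follow our reading of the RUN paper, the search mechanism `SM` is passed in precomputed (its Runge-Kutta derivation is outside this section), the constants $a = 20$ and $b = 12$ in the adaptive factor are assumed values, and one $\varphi$ is drawn per call.

```python
import math
import random

def adaptive_factor(i, max_i, a=20.0, b=12.0, rng=random):
    """SF = 2 (0.5 - rand) f, with f = a exp(-b rand i / max_i)."""
    f = a * math.exp(-b * rng.random() * (i / max_i))
    return 2.0 * (0.5 - rng.random()) * f

def run_stage_one(x_n, x_r1, x_r2, x_b, x_lbest, SM, SF, rng=random):
    """Candidate x_{n+1} from the RUN search mechanism (vectors are lists of floats)."""
    phi = rng.random()
    g = 2.0 * rng.random()           # g in [0, 2)
    r = rng.choice((1, -1))          # direction flag
    mu = 0.5 + 0.1 * rng.gauss(0.0, 1.0)
    x_c = [phi * p + (1 - phi) * q for p, q in zip(x_n, x_r1)]
    x_m = [phi * p + (1 - phi) * q for p, q in zip(x_b, x_lbest)]
    if rng.random() < 0.5:           # update around x_c
        base = x_c
        x_s = [rng.gauss(0.0, 1.0) * (m - c) for m, c in zip(x_m, x_c)]
    else:                            # update around x_m
        base = x_m
        x_s = [rng.gauss(0.0, 1.0) * (p - q) for p, q in zip(x_r1, x_r2)]
    return [(v + r * SF * g * v) + SF * sm + mu * s
            for v, sm, s in zip(base, SM, x_s)]
```

Since $f$ decays with the iteration count, $|SF|$ tends to shrink over the run, which gradually reduces the step size.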

Stage two: Enhanced solution quality (ESQ).

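This process is adopted to increase solution quality and avoid being trapped in local optima during the iterations. The process is outlined below:

Step 2: ESQ is applied in RUN to compute the solution $x_{new2}$.

If $rand < 0.5$ then

If $w < 1$ then

$$x_{new2} = x_{new1} + r \times w \times \left|(x_{new1} - x_{avg}) + randn\right|$$

Else

$$x_{new2} = (x_{new1} - x_{avg}) + r \times w \times \left|(u \times x_{new1} - x_{avg}) + randn\right|$$

End if

End if.

Here, $u = 2 \times rand$ and $randn$ is a standard normal random number.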

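In Step 2, $w$, $x_{avg}$, and $x_{new1}$ are calculated as

$$w = rand(0, 2) \times \exp\left(-c \times \frac{i}{Maxi}\right), \qquad x_{avg} = \frac{x_{r1} + x_{r2} + x_{r3}}{3}, \qquad x_{new1} = \beta \times x_{avg} + (1 - \beta) \times x_b,$$

where $rand(0, 2)$ is a uniform random number in $[0, 2]$, $\beta$ is a randomly generated number in the range $[0, 1]$, the parameter $c$ is random, $x_{r1}$, $x_{r2}$, and $x_{r3}$ are randomly selected solutions, and $r$ is an integer equal to $1$, $0$, or $-1$. $x_b$ is the best solution found. The computed solution $x_{new2}$ does not always have better fitness than the best solution; thus, the RUN algorithm computes a further solution $x_{new3}$ in Step 3 to improve fitness.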
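Step 2 can be sketched as below. This follows our reading of [45]: $w = rand(0, 2)\,\exp(-c\,i/Maxi)$ with $c = 5\,rand$ (an assumed form of the random parameter $c$), $x_{avg}$ the mean of three random solutions, $x_{new1} = \beta\,x_{avg} + (1 - \beta)\,x_b$, and $u = 2\,rand$. Drawing a single $w$ per call rather than one per dimension is our simplification, and the hypothetical function `esq` returns `None` in place of $x_{new2}$ when ESQ is skipped.

```python
import math
import random

def esq(x_r1, x_r2, x_r3, x_b, i, max_i, rng=random):
    """Return (x_new2, w); x_new2 is None when ESQ is skipped this iteration."""
    c = 5.0 * rng.random()
    w = rng.uniform(0.0, 2.0) * math.exp(-c * (i / max_i))
    if rng.random() >= 0.5:
        return None, w
    x_avg = [(p + q + s) / 3.0 for p, q, s in zip(x_r1, x_r2, x_r3)]
    beta = rng.random()
    x_new1 = [beta * xa + (1.0 - beta) * xb for xa, xb in zip(x_avg, x_b)]
    r = rng.choice((1, 0, -1))
    u = 2.0 * rng.random()
    if w < 1.0:
        x_new2 = [n1 + r * w * abs((n1 - xa) + rng.gauss(0.0, 1.0))
                  for n1, xa in zip(x_new1, x_avg)]
    else:
        x_new2 = [(n1 - xa) + r * w * abs((u * n1 - xa) + rng.gauss(0.0, 1.0))
                  for n1, xa in zip(x_new1, x_avg)]
    return x_new2, w
```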

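Step 3: Improving the new solution $x_{new2}$.

If $rand < w$ then

$$x_{new3} = (x_{new2} - rand \times x_{new2}) + SF \times \left(rand \times x_{RK} + (v \times x_b - x_{new2})\right)$$

End If.

$v$ is a random number equal to $2 \times rand$, and $x_{RK}$ is a Runge-Kutta-based term computed as in [45].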
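Step 3 can be sketched as follows, assuming the update $x_{new3} = (x_{new2} - rand\,x_{new2}) + SF\,(rand\,x_{RK} + (v\,x_b - x_{new2}))$ with $v = 2\,rand$, as we read it in [45]. The Runge-Kutta term `x_rk` is passed in precomputed, and the hypothetical function `improve_solution` returns `None` when the condition $rand < w$ fails.

```python
import random

def improve_solution(x_new2, x_b, x_rk, SF, w, rng=random):
    """Step 3 of RUN: return x_new3, or None if the step is not triggered."""
    if rng.random() >= w:  # the step runs only when rand < w
        return None
    rand_a, rand_b = rng.random(), rng.random()
    v = 2.0 * rng.random()
    return [(x2 - rand_a * x2) + SF * (rand_b * rk + (v * xb - x2))
            for x2, xb, rk in zip(x_new2, x_b, x_rk)]
```

Because $w$ usually decreases over the run, this extra improvement step is attempted less often in later iterations.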

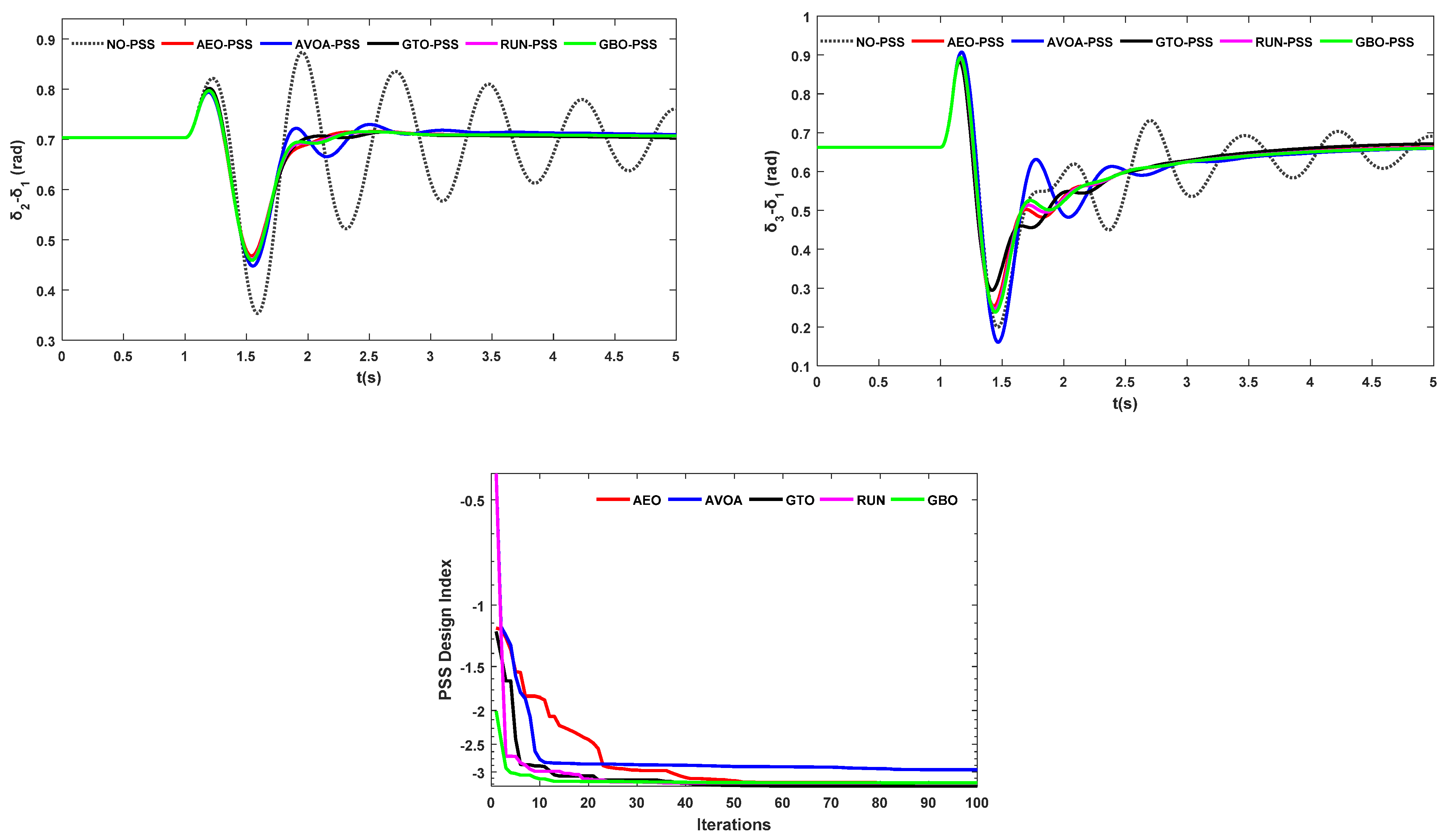

Figure 3 shows the Runge-Kutta optimization process. For more details about RUN, see [45].