1. Introduction

The operational meteorological forecast (OMF) model is used primarily for weather forecasting and solar power prediction. It is essential for day-to-day solar resource assessment and also for the long-term climate outlook [1]. Variables taken from the OMF, such as surface temperature, pressure, and solar irradiance, are used to estimate the amount of solar power that will be generated at a specific location over a defined period. The OMF provides reliable wind and solar forecasts up to three days ahead, although uncertainties remain [2]. The accuracy of the OMF matters for both electricity generation and electricity trading. For example, in a hybrid system, forecasting is important for determining the energy mix and for preventing disruption by scheduling traditional energy sources such as hydrothermal, gas, or coal as back-up [3,4]. In some countries, power producers are penalized if they do not deliver the committed amount of energy. The OMF has also been used to assess the overall performance of renewable power plants.

Solar energy forecasting is vital not only for the stable and optimal operation of solar power plants but also for the diverse energy landscape of residential buildings. Residential buildings account for approximately 25% of total final energy consumption [5], and their energy needs span a broader range than those of commercial and industrial buildings. According to the 2021 Global Status Report for Buildings and Construction [6], the overall building sector accounted for 37% of total energy consumption, encompassing both operational and process-related aspects [7]. Solar forecasting, traditionally associated with energy producers, is also relevant to various building types, including autonomous buildings, that is, smart structures capable of operating independently of external infrastructural support such as electric power [8]. For autonomous buildings, renewable energy is the priority, and solar forecasting is a crucial tool for mitigating the fluctuating energy demand that occupancy patterns cause over the consumption period. Despite these benefits, solar forecasting has inherent limitations.

One limitation of solar forecasting is the uncertainty of the weather forecast itself [9]. Solar forecasting relies heavily on weather prediction models, which can be complex, especially for long-term forecasts. The uncertainty arises from rapid changes in atmospheric conditions and other weather phenomena that affect radiation levels. Spatial variability is another factor [10]: irradiance can vary significantly within a relatively small area because of topography, shadowing, and other local effects. A further limitation is the forecast horizon [11], since predictability decreases as the horizon lengthens. Finally, a solar forecasting model may struggle to account for sudden operational changes or unexpected events that affect solar energy generation [12].

Despite these limitations, solar forecasting has contributed significantly to energy resource harvesting and allocation. To improve its reliability and dependability, post-processing techniques have been introduced [13,14,15]. Whereas solar forecasting predicts the amount of solar energy that will be generated, post-processing increases the accuracy of the forecast [16] by refining the raw forecast data, which is vital for integrating solar energy into the grid efficiently and for optimizing grid operation [17]. One such technique is ensemble forecasting, which generates multiple forecasts using different models or variations in the input data. The individual forecasts are then combined into an ensemble forecast, which can provide a more reliable prediction. The analog ensemble is one of the ensemble techniques used in solar forecasting [18].

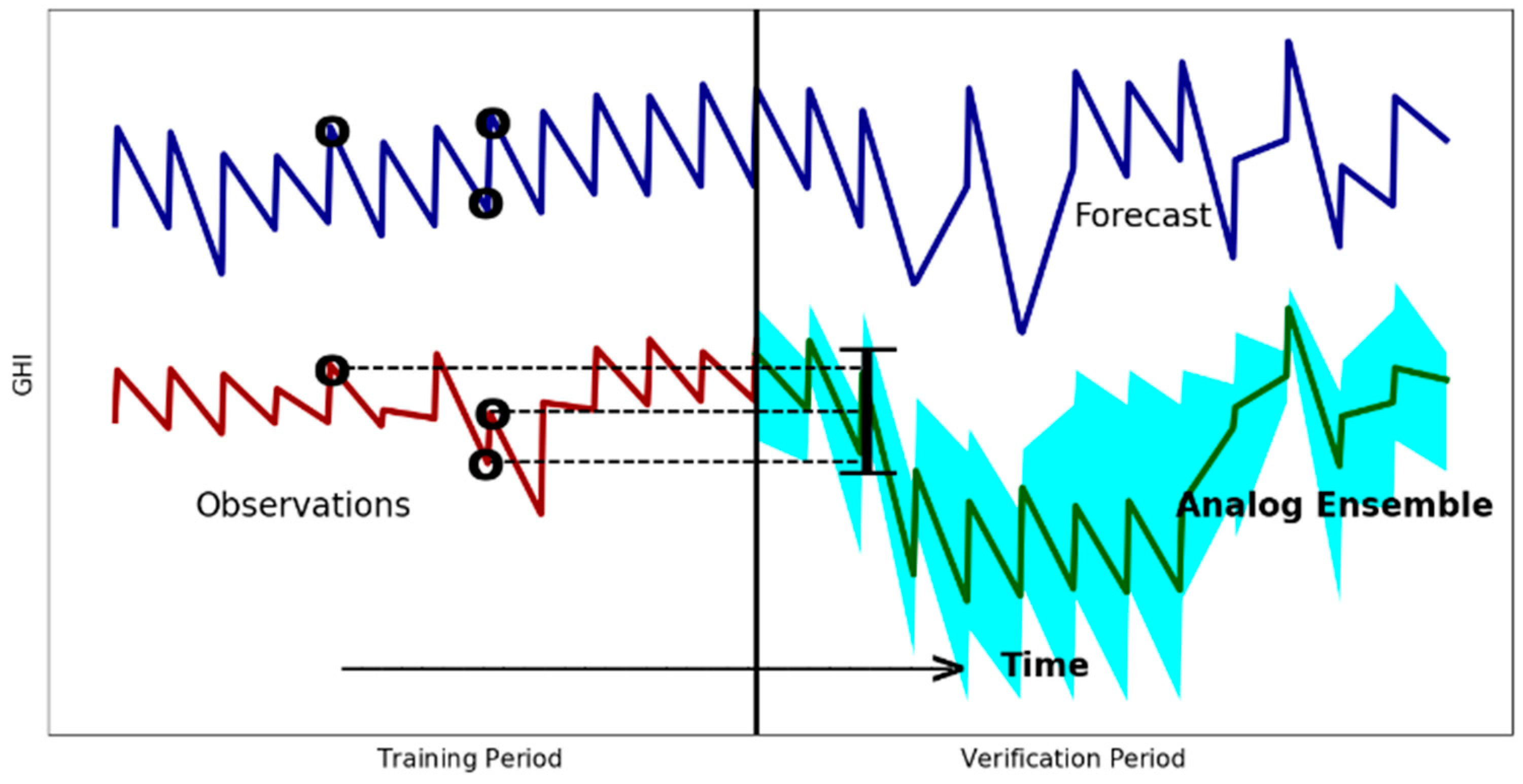

In solar forecasting, the analog ensemble refers to techniques that use historical analogs, that is, similar past patterns in solar radiation data, to improve the accuracy and reliability of solar energy generation predictions [19,20]. The technique rests on the idea that similar atmospheric patterns tend to produce similar weather outcomes. By identifying historical situations that closely resemble the current conditions, it aims to capture the likely range of outcomes for the forecast variable, such as solar irradiance.
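The analog search described above can be sketched in a few lines. The following is a minimal illustration, not the implementation used in this study: the function name `analog_ensemble`, its arguments, the standard-deviation-normalized Euclidean distance, and the equal predictor weights are all assumptions made for the example. It ranks past forecasts by their similarity to the current forecast and returns the observations that accompanied the closest ones.

```python
import numpy as np

def analog_ensemble(hist_forecasts, hist_obs, current_forecast,
                    n_members=20, weights=None):
    """Return (members, ensemble_mean) for one forecast time.

    hist_forecasts   : array (n_times, n_predictors), past model forecasts
    hist_obs         : array (n_times,), observations matching those forecasts
    current_forecast : array (n_predictors,), the forecast to post-process
    All names and the distance metric are illustrative assumptions.
    """
    hist_forecasts = np.asarray(hist_forecasts, dtype=float)
    hist_obs = np.asarray(hist_obs, dtype=float)
    current_forecast = np.asarray(current_forecast, dtype=float)
    if weights is None:
        # Assumption: every predictor contributes equally.
        weights = np.ones(hist_forecasts.shape[1])

    # Scale each predictor by its historical spread so that units
    # (e.g. W/m^2 vs. hPa) do not dominate the distance.
    sigma = hist_forecasts.std(axis=0)
    sigma[sigma == 0] = 1.0
    diff = (hist_forecasts - current_forecast) / sigma
    dist = np.sqrt((weights * diff ** 2).sum(axis=1))

    # The analogs are the past cases with the smallest distance.
    k = min(n_members, len(hist_obs))
    nearest = np.argsort(dist)[:k]
    members = hist_obs[nearest]
    return members, float(members.mean())


# Toy usage with a single predictor (forecast irradiance, W/m^2).
hist_fc = np.array([[100.0], [200.0], [300.0], [400.0]])
hist_ob = np.array([90.0, 180.0, 270.0, 360.0])
members, mean = analog_ensemble(hist_fc, hist_ob, np.array([210.0]), n_members=2)
print(members, mean)  # members are 180 and 270, mean is 225.0
```

Operational analog ensembles typically compare forecasts over a short time window around the target hour rather than at a single time; that refinement is omitted here for brevity.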

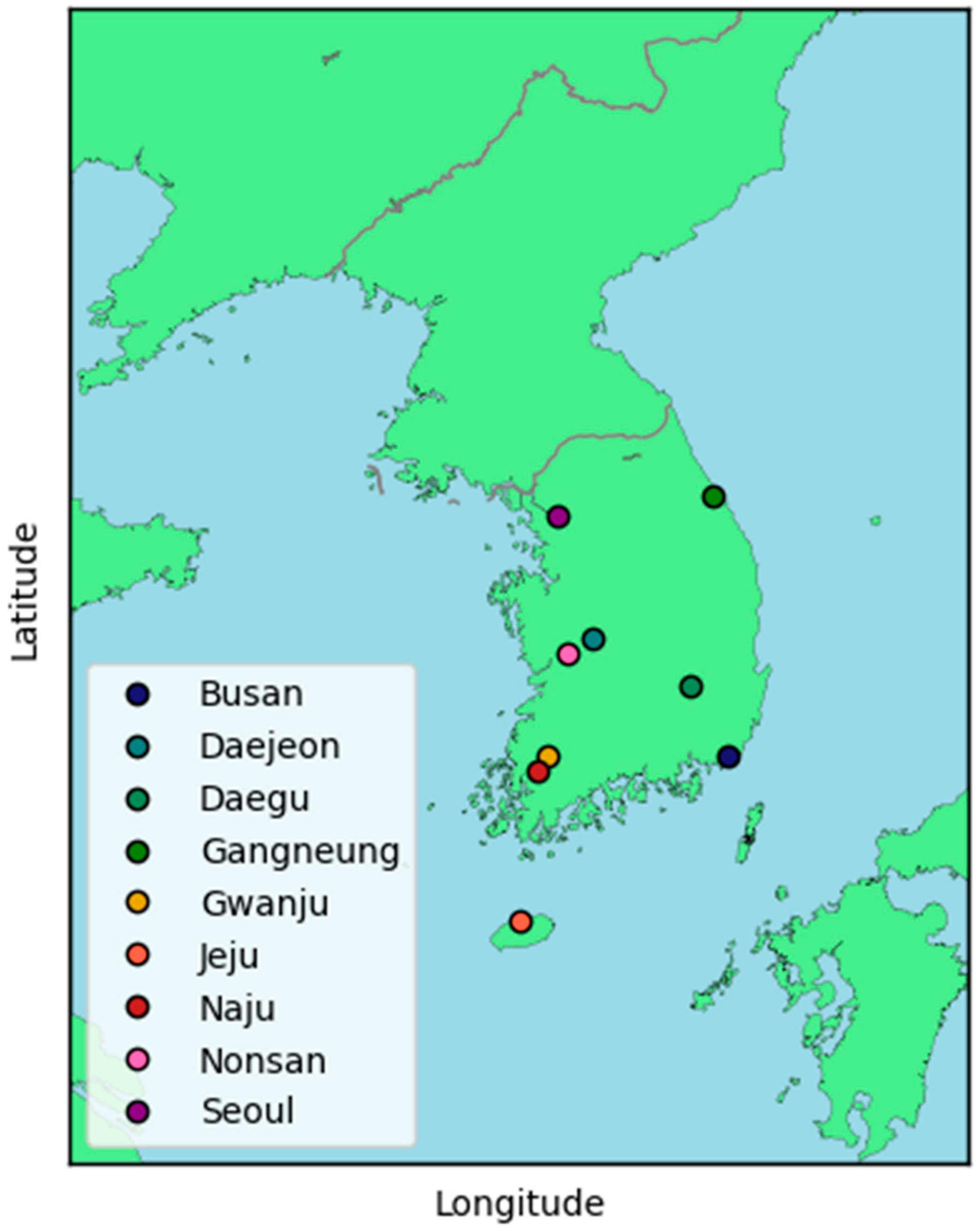

The goal of this paper is to develop and implement an analog ensemble prediction system suited to a weather forecast model operated by the Korea Meteorological Administration (KMA). In addition, the historical period is optimized to make the most of the analog ensemble’s capabilities. By identifying the most suitable dynamic period of observations through careful evaluation and cross-validation, analog ensemble forecasts can support decision-making across domains ranging from weather and climate prediction to resource management and risk assessment. Drawing on data from past occurrences in this way is expected to increase the precision and dependability of future forecasts. The calibration of the analog ensemble forecasts applied to the operational meteorological forecast model is examined through statistical validation.

3. Results

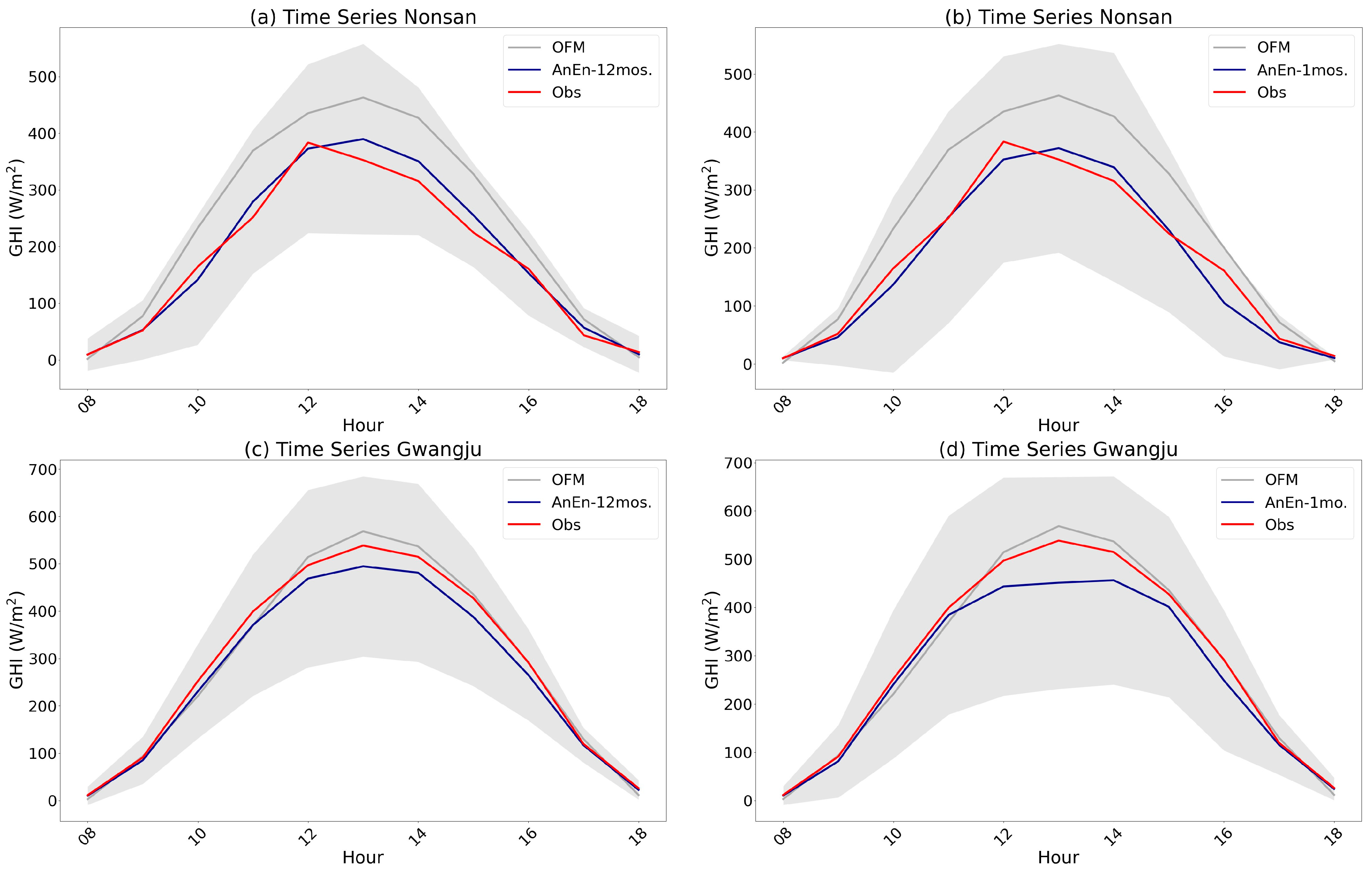

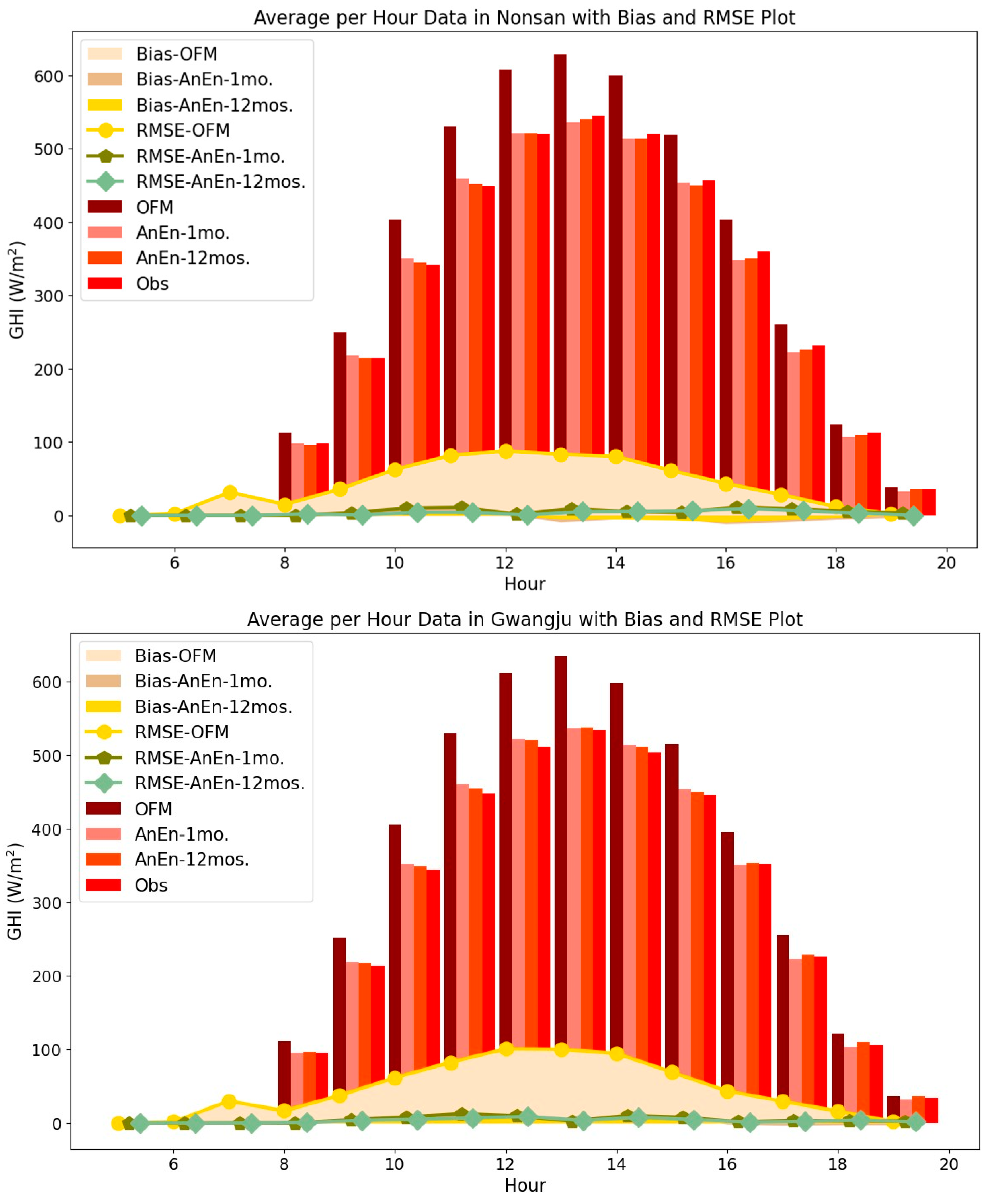

Two examples are given in Figure 3, which shows time series of the solar irradiance forecasts from the OMF and from the AnEn built on a 12-month dataset and on a 1-month dataset, together with the observations at the Nonsan and Gwangju stations. Because the AnEn is a probabilistic forecast, its 95% prediction interval is also shown in Figure 3. At Nonsan, for both the 1-month and the 12-month historical periods, the OMF tends to deviate from the observations between 12 and 16 KST. The analog ensemble, which draws on historical analogs, largely corrects this deviation, which suggests that it is effective at compensating for OMF errors from midday to late afternoon at this station. With 12 months of historical data, the AnEn prediction interval is narrower than with 1 month of data; as the dynamic period of observation data was extended from 1 to 12 months, the interval narrowed with each one-month increment. A narrower interval indicates smaller deviations from the observed data and higher confidence in the predictions. The AnEn tracks the observations in a similar way at the Gwangju station (see Figure 3c,d). There, too, the AnEn with 12 months of historical data gave a narrower prediction interval than the AnEn with 1 month, indicating lower uncertainty and close agreement with the observed values.
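One common way to obtain a prediction interval from an ensemble is to take empirical quantiles of its members. The sketch below assumes that approach; the text does not state how the interval in Figure 3 was computed, and the function name `prediction_interval` and the sample values are hypothetical.

```python
import numpy as np

def prediction_interval(members, level=0.95):
    """Empirical central prediction interval from ensemble members.

    Assumption: the interval is bounded by the (1 - level)/2 and
    1 - (1 - level)/2 quantiles of the analog members.
    """
    members = np.asarray(members, dtype=float)
    alpha = (1.0 - level) / 2.0
    lower, upper = np.quantile(members, [alpha, 1.0 - alpha])
    return float(lower), float(upper)


# Hypothetical irradiance members (W/m^2) for one forecast hour.
tight = [480, 490, 495, 500, 505, 510, 520]
loose = [300, 380, 450, 500, 560, 640, 700]
print(prediction_interval(tight))
print(prediction_interval(loose))
```

Members that cluster tightly, as is more likely when a longer history offers closer analogs, yield a narrower interval than widely scattered members.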

Table 1 compares the OMF and the AnEn run on the 12-month dataset against the observations. Although the OMF already performed well, with an average correlation of 0.9348 indicating a high degree of linear association, the correlation rose to 0.9811 on average when the analog ensemble was applied. The OMF has a strong positive bias, ranging from 48 to 67 W/m² per station, which shows that it routinely overestimates the observed values; the analog ensemble greatly reduces this bias. The mean absolute error (MAE) and root mean square error (RMSE) confirm the analog ensemble’s advantage. The OMF’s MAE and RMSE are around 47.04 and 103.06 W/m², respectively, and both are roughly halved by the analog ensemble, a considerable reduction in prediction error on average. The skill score reflects the same reduction, with an average improvement of more than 50% when the analog ensemble is used. These findings point to more precise and dependable predictions for a wide range of applications. The highlighted stations are examined further in the following paragraph.
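For reference, the verification statistics named above can be computed as in the following sketch. The function names and sample numbers are hypothetical, and the skill score shown is the common RMSE-based form, 1 − RMSE_forecast / RMSE_reference, which is an assumption because the text does not give the exact definition used.

```python
import numpy as np

def verification_metrics(forecast, observed):
    """Correlation, mean bias, MAE, and RMSE of a forecast series."""
    f = np.asarray(forecast, dtype=float)
    o = np.asarray(observed, dtype=float)
    err = f - o  # positive error means the forecast overestimates
    return {
        "corr": float(np.corrcoef(f, o)[0, 1]),
        "bias": float(err.mean()),
        "mae": float(np.abs(err).mean()),
        "rmse": float(np.sqrt((err ** 2).mean())),
    }

def skill_score(rmse_forecast, rmse_reference):
    """Assumed form: fractional RMSE reduction relative to a reference."""
    return 1.0 - rmse_forecast / rmse_reference


# Hypothetical hourly irradiance values (W/m^2).
obs = [100.0, 200.0, 300.0]
raw = [110.0, 220.0, 330.0]   # stands in for the raw model forecast
post = [102.0, 198.0, 305.0]  # stands in for the post-processed forecast
m_raw = verification_metrics(raw, obs)
m_post = verification_metrics(post, obs)
print(m_raw)
print(m_post)
print(skill_score(m_post["rmse"], m_raw["rmse"]))
```

Under this definition, a skill score of 0.5 corresponds to a post-processed RMSE that is half that of the reference forecast.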

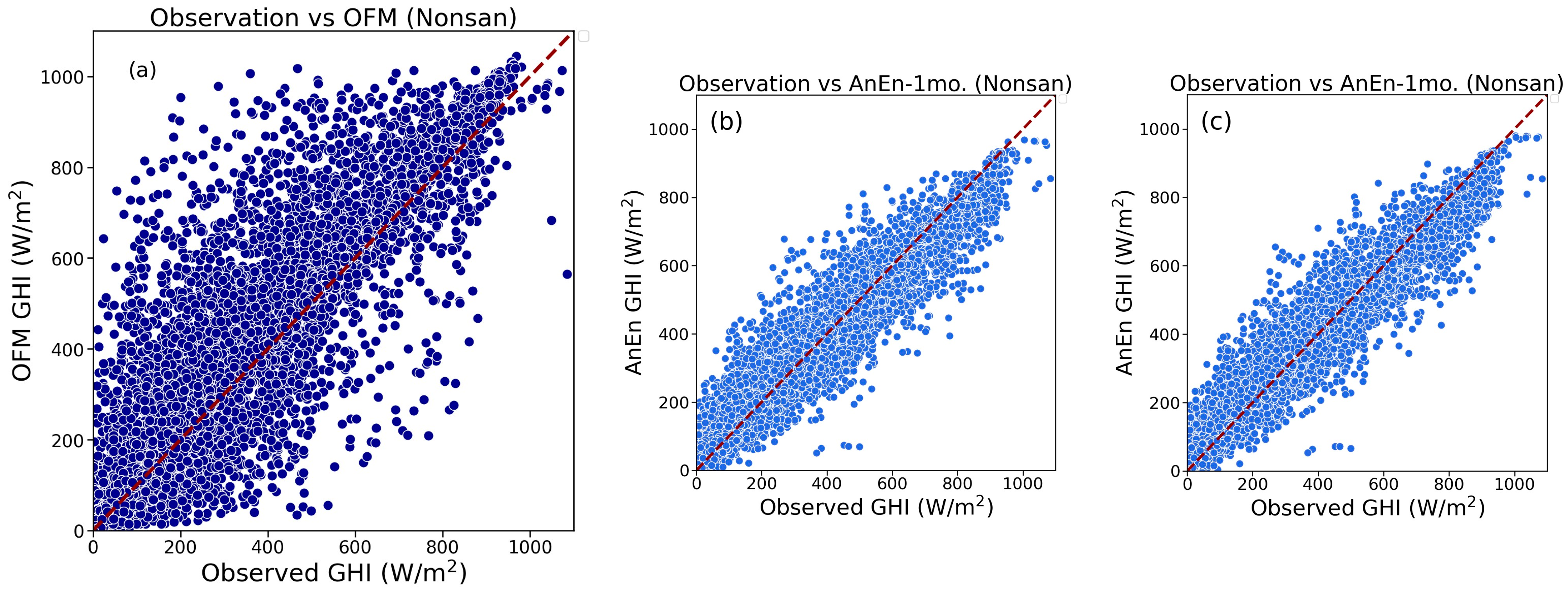

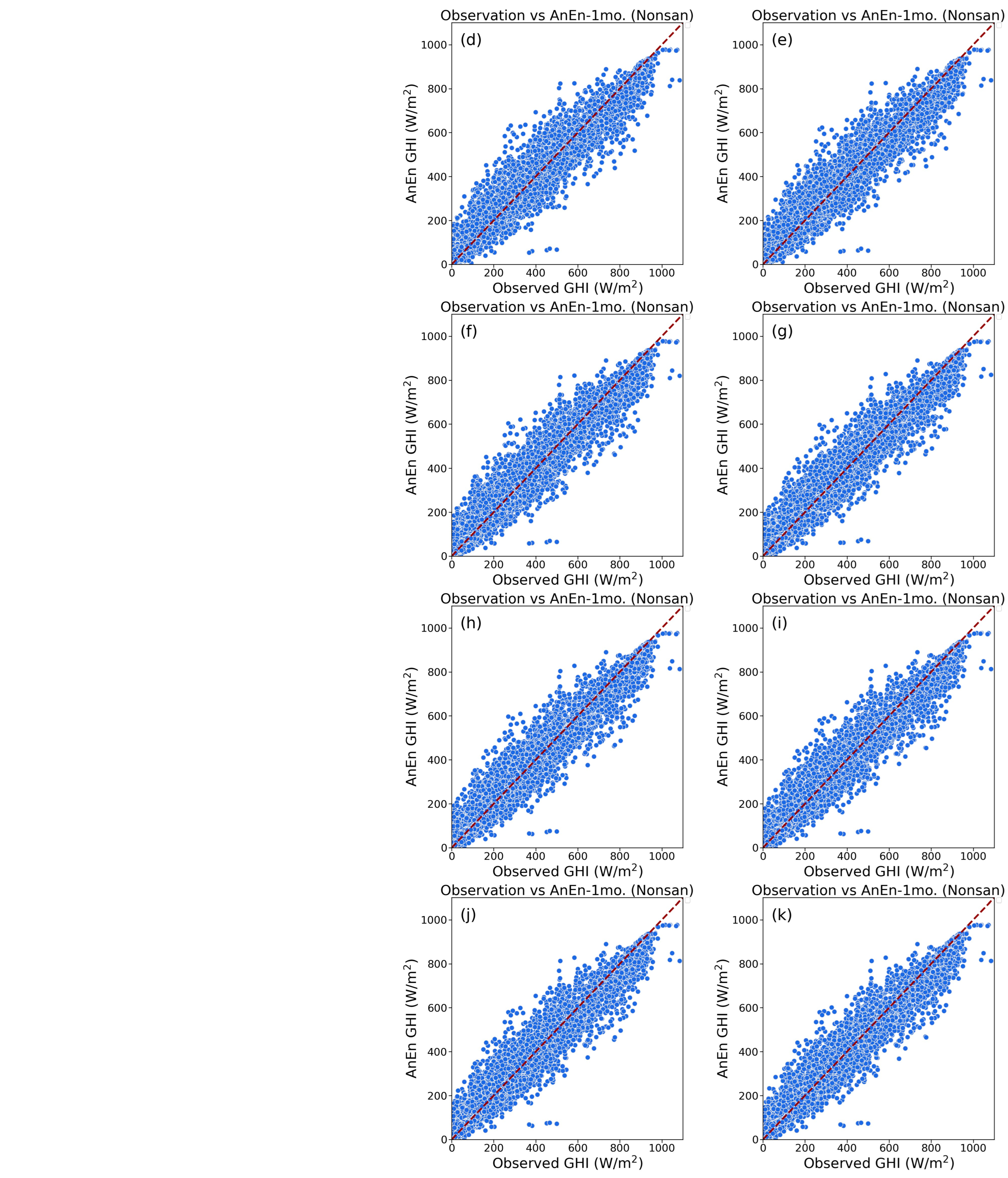

To evaluate the results further, scatter diagrams are used to assess the analog ensemble’s overall performance. The OMF scatter plot (see Figure 4a) shows that the OMF overestimates irradiance, since the points are shifted upward relative to the reference line. In the AnEn vs. observation plots, the agreement improves even with a 1-month historical period. The points lie close to the diagonal, which implies a strong positive linear correlation between the AnEn forecasts and the observations. The OMF points, by contrast, are widely dispersed about the reference line, indicating considerable discrepancies and lower accuracy, whereas the analog ensemble points are densely packed around it, demonstrating agreement between the ensemble’s predictions and the observations (see Figure 4). This indicates that applying the analog ensemble as a post-processing technique improved the forecasts at all stations examined. Because the AnEn vs. observation scatter plots show little visible difference between historical periods, the results must be verified statistically.

4. Discussion

This discussion assesses forecast accuracy using correlation, MAE, RMSE, and bias. The correlation between predicted and observed values measures their degree of association, MAE and RMSE measure the magnitude of the errors, and bias reveals systematic over- or underestimation. Together, these metrics give an overall picture of the analog ensemble’s performance and of the appropriate historical period.

The correlation for all stations using the analog ensemble is shown in Figure 5. The analog ensemble benefits from a long historical period: the correlation is higher with the 1-year historical dataset than with the 1-month period, and a longer historical period improves the correlation across all stations by as much as 4%. Even with a short historical period, however, the correlation is noticeably higher than that of the OMF forecast. In Table 1, for instance, the correlation between the OMF and the observations at the Nonsan station is 94.14%; with the analog ensemble, even with a 1-month historical period, it improves to roughly 98.4%, reducing uncertainty. The margin between the 12-month and 1-month results is narrow.

In terms of correlation, then, a 12-month historical period is about as effective as a 1-month period, as the close correlation values in the figure show. Because correlation alone cannot determine the ideal historical period for an analog ensemble, the other statistics are examined next.

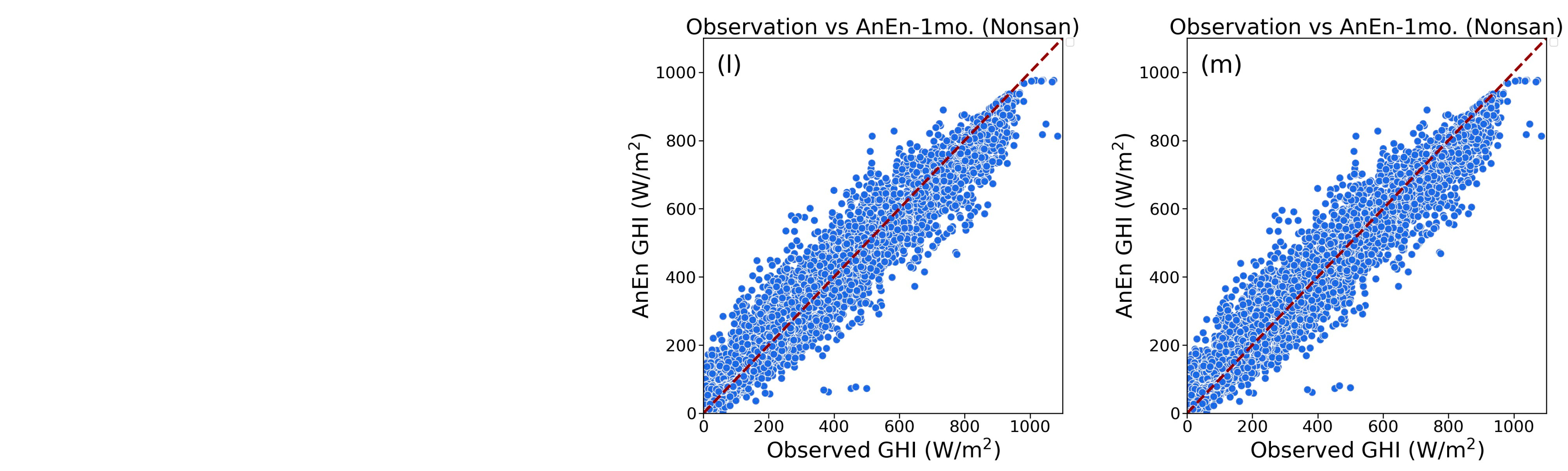

As seen in Figure 6, the distribution in the bias plot is clearly skewed to the right. Except for the Gangneung station, the trend is essentially the same at all stations. Compared with the OMF, whose bias ranges from 25.98 to 34.77 W/m², the bias is reduced for all historical periods, to a range of roughly −2 to 3 W/m². At the other stations, including Busan, Jeju, Naju, and Seoul, the bias is lowest when the historical period is 3 months; with a 1-year historical period, however, the bias is not significantly different. Overall, the analog ensemble has a smaller bias than the OMF. Where its bias is negative, the observed values are slightly underestimated, which can be useful for solar PV plants where conservative estimates are needed to avoid overestimating output and overcommitting resources.

With respect to the historical period, the bias is notably lower for periods of 2 to 4 months at certain stations, such as Busan, Jeju, Nonsan, and Seoul, meaning that forecasts derived from these windows align more closely with the observations. At other stations the trend is reversed, and better results are obtained with a longer historical period. The choice of historical period therefore significantly influences forecast precision, and the optimal window is station-specific. The interaction between historical period and station characteristics is complex and requires careful study for precise forecasts.
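A station-specific search for the historical period can be illustrated as follows. This is a simplified sketch under stated assumptions, not the procedure used in the study: it uses a single predictor, measures window length in samples rather than months, and scores each window by RMSE alone. The function names `anen_mean` and `rmse_by_history_length` are hypothetical.

```python
import numpy as np

def anen_mean(hist_fc, hist_obs, target_fc, n_members=20):
    """Mean observation of the past cases closest to the target forecast."""
    dist = np.abs(hist_fc - target_fc)
    nearest = np.argsort(dist)[:min(n_members, len(hist_obs))]
    return hist_obs[nearest].mean()

def rmse_by_history_length(fc, obs, n_test, window_lengths, n_members=20):
    """RMSE over the last n_test samples for each look-back window.

    For every test time t, analogs are searched only within the
    `w` samples immediately before t (w must be at least 1).
    """
    fc = np.asarray(fc, dtype=float)
    obs = np.asarray(obs, dtype=float)
    start = len(fc) - n_test
    scores = {}
    for w in window_lengths:
        errors = []
        for t in range(start, len(fc)):
            lo = max(0, t - w)  # oldest sample allowed in the search
            pred = anen_mean(fc[lo:t], obs[lo:t], fc[t], n_members)
            errors.append(pred - obs[t])
        scores[w] = float(np.sqrt(np.mean(np.square(errors))))
    return scores


# Toy series: the only good analog for the last forecast (5) is the
# oldest sample, so the short window misses it.
fc = [5.0, 1.0, 2.0, 3.0, 5.0]
obs = [10.0, 2.0, 4.0, 6.0, 10.0]
scores = rmse_by_history_length(fc, obs, n_test=1,
                                window_lengths=[2, 4], n_members=1)
print(scores)                       # {2: 4.0, 4: 0.0}
print(min(scores, key=scores.get))  # 4
```

Running such a comparison separately for each station and for each metric (bias, MAE, RMSE) would expose cases where a shorter window scores better on one metric while a longer window scores better on another.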

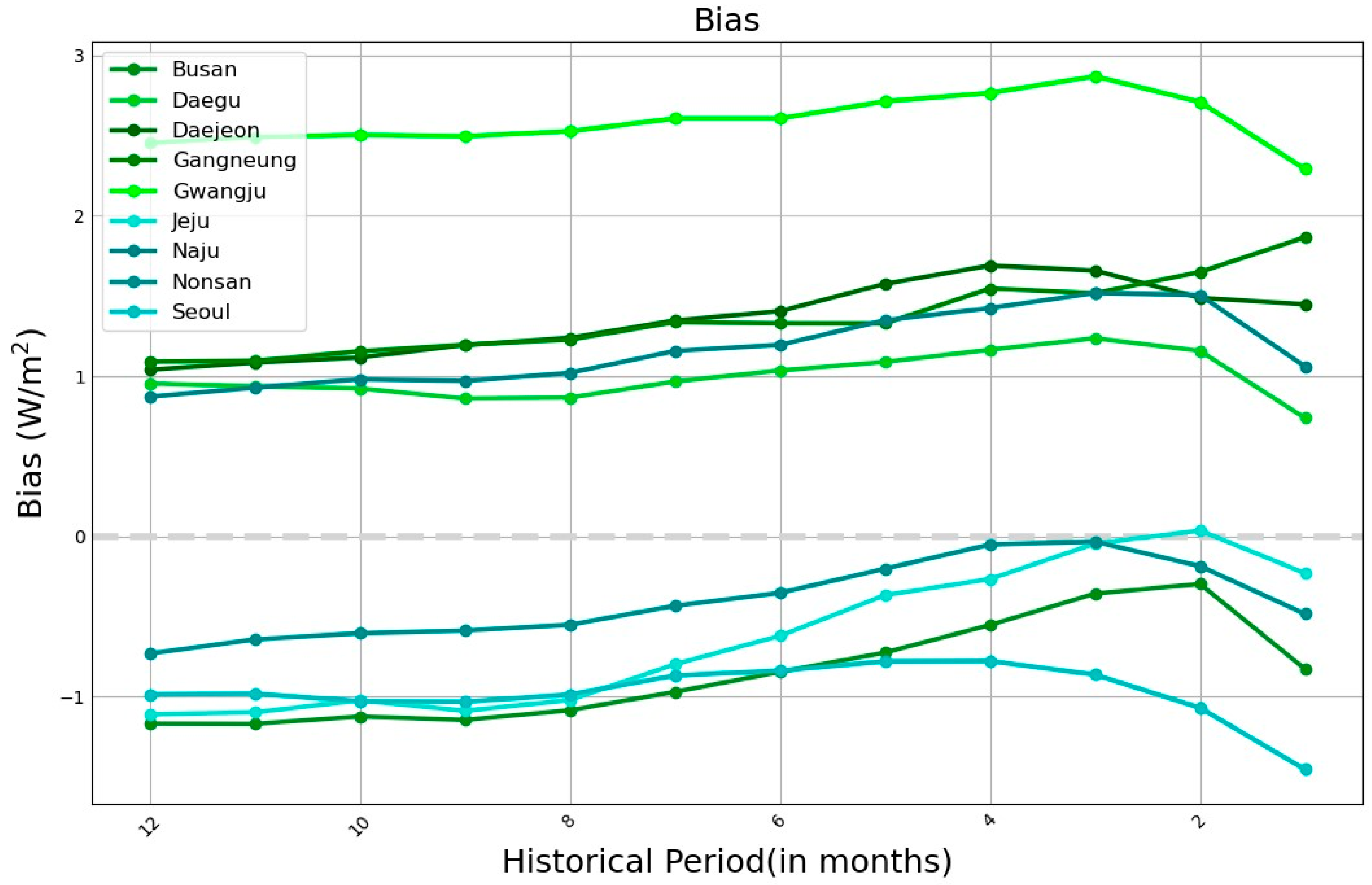

The MAE is cut in half when the analog ensemble is applied to the OMF’s global horizontal irradiance (GHI) data. Figure 7 illustrates the considerable decrease in MAE at all locations compared with the OMF; this reduction considerably increases the accuracy and dependability of the forecasts. Across historical periods, the analog ensemble curves follow a similar trend: the AnEn with the 1-year historical period has the lowest MAE, whereas the AnEn with the 1-month historical period has the highest. On average, the analog ensemble results with a 1-year historical period are quite close to the observed values.

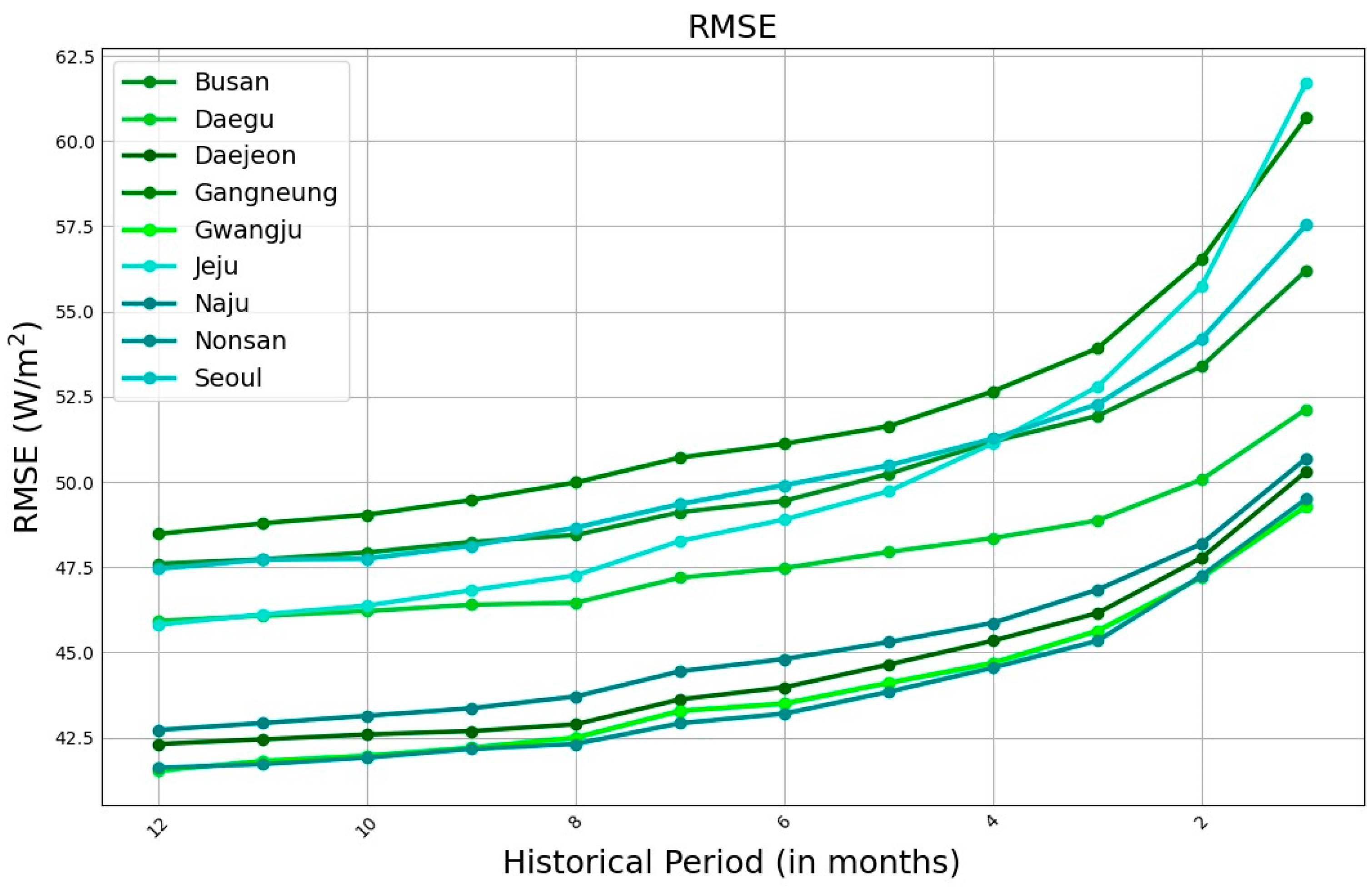

The RMSE plot in Figure 8 shows the same trend as the MAE plot. The RMSE of the AnEn is likewise reduced at all historical periods: whereas the OMF has RMSE values between 90 and 100 W/m², the AnEn values range between 40 and 55 W/m². This is a reduction of about 55% relative to the OMF. Among the historical periods, the 12-month period again has the lowest RMSE and the greatest dependability.

In Figure 9, the analog ensemble forecast is built from twenty analog members, a number assumed by the researchers. These members are the historical patterns with the lowest distance to the current forecast. The analog members, shown as dashed lines, define the mean of the analog ensemble. The test dataset from the UASIBS-KIER is used to verify the analog ensemble results. In the first 24 h shown in the figure, for example, the analog ensemble follows a trajectory that closely mirrors the observations.

A further observation concerns the RMSE. For the OMF, the RMSE values are proportional to the bias, and this pattern is significantly reduced when the analog ensemble is applied. The analog ensemble therefore not only reduces bias but also limits the overall magnitude of the errors, improving the predictive capability of the model. In short, the selection of analog members and their combined contribution to the forecast explain the rationale and potential of the approach, and its close agreement with the observations, together with lower bias and error, makes it practical for improving forecast accuracy in a range of scenarios. The approach combines historical patterns with modern computational methods into a predictive framework that holds promise for a variety of forecasting applications.

The RMSE measures the average error between the observed values and the OMF or analog ensemble forecasts; the lower the RMSE, the more consistent the forecast is with the observations. On average, the overall RMSE was reduced by 74%, demonstrating that the analog ensemble improves forecast accuracy (see Figure 9).

While our study provides valuable insights into the application of AnEn in solar irradiance forecasting, it has certain limitations. The effectiveness of the analog ensemble method depends on the availability and quality of historical data. Variations in its performance across stations highlight the sensitivity of the approach to regional differences and microclimates. Additionally, the study focuses on specific regions in South Korea, and the findings may not carry over directly to other geographical locations with distinct weather patterns. Future research could explore the optimization of the analog ensemble method for different climatic conditions and diverse datasets, as well as the optimal number of analog ensemble members for a one-year historical period. Moreover, integrating advanced machine learning techniques with the analog ensemble could enhance the accuracy of solar irradiance predictions. As the field of renewable energy forecasting continues to evolve, our study lays the groundwork for a broader understanding of forecasting methodologies, emphasizing the need for adaptive approaches tailored to specific contexts.

5. Conclusions

This study presents a comprehensive analysis of solar irradiance forecasts from the Operational Meteorological Forecast (OMF) and the analog ensemble (AnEn), which reveals valuable insights into the accuracy and reliability of these forecasting methods. The study considered a range of factors, including historical periods, MBE, MAE, RMSE, correlation, and the overall performance of the analog ensemble in comparison to the OMF.

Using the analog ensemble methodology to reduce uncertainty is a complex process that depends on several elements. The quality of the observation data is one important factor, since it underpins the precision and dependability of the whole prediction process. Even the most complex model can only perform as well as the data it is given, so the importance of carefully curated, high-quality observation data cannot be overstated. The dependence of the analog ensemble’s predictive capacity on the reliability of its input data is a reminder of the interconnection inherent in forecasting techniques. The temporal analysis, focusing on Nonsan station’s historical data, highlighted a notable tendency of the OMF to deviate from actual observations during specific hours. The analog ensemble, which leverages historical precedents, effectively compensated for these discrepancies, particularly from midday to late afternoon. This underscores the efficacy of the analog ensemble in correcting model deviations and enhancing the overall accuracy of operational meteorological forecasts.

Across the historical periods compared, the analog ensemble, particularly with a 12-month historical dataset, significantly reduced errors and bias and improved correlation relative to the OMF. The scatter plots and bias analysis demonstrated that the analog ensemble consistently outperformed the OMF, providing more accurate and dependable predictions. The correlation analysis for all stations indicated that a longer historical period, such as 12 months, had a positive impact, with correlation values improving by as much as 4%. The bias analysis revealed that the analog ensemble generally exhibited a smaller bias than the OMF, indicating more conservative estimations. The error statistics, MAE and RMSE, confirmed the analog ensemble’s superiority, with a substantial reduction in prediction errors. The skill score also validated the improvement achieved by the analog ensemble, promising more precise and reliable predictions for various applications.

Nonetheless, it is essential to acknowledge that this study does not encompass all potential factors that could influence observation data quality. A prime example of this omission is the intricate interplay of climate change, which could introduce significant alterations to observational patterns. The absence of an assessment of climate change’s impact on the data is a limitation within the scope of this research. However, it serves as an avenue for future inquiries, underlining the dynamic nature of the scientific process. Subsequent studies can delve into the intricate relationship between climate change and observational data, enriching the understanding of their collective influence on forecasting precision.

In essence, the analog ensemble approach, with its strategic selection of analog members and consideration of historical patterns, emerged as a robust and promising methodology for improving solar irradiance forecasts. The study’s findings have significant implications for enhancing the accuracy of renewable energy forecasts, contributing to more reliable and efficient energy planning and utilization.