Prediction of TOC in Lishui–Jiaojiang Sag Using Geochemical Analysis, Well Logs, and Machine Learning

Abstract

1. Introduction

2. Geological Setting and Stratigraphy

2.1. Tectonic Setting

2.2. Stratigraphy

3. Materials & Methods

3.1. Geochemical Analysis

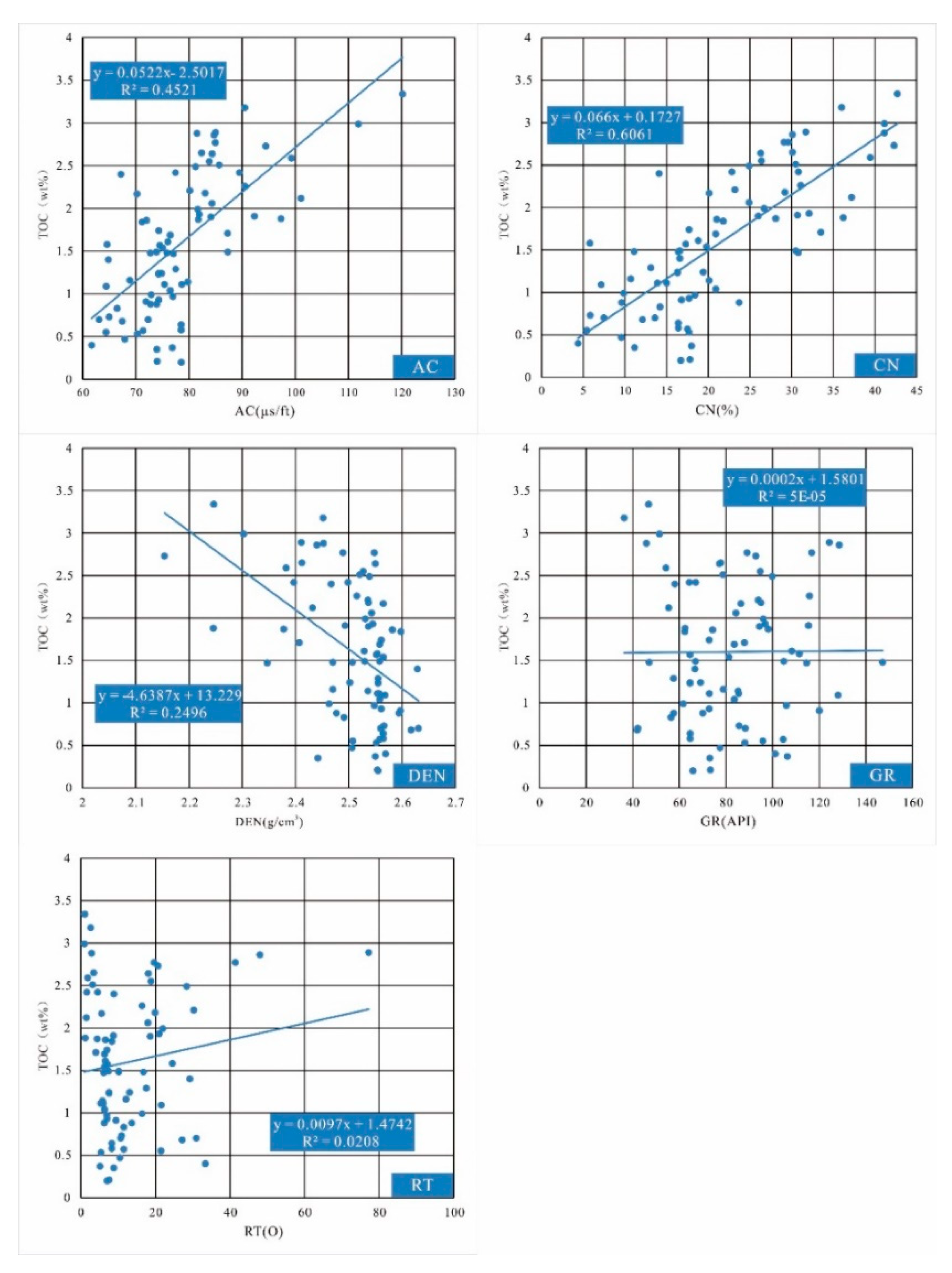

3.2. Log Series Selection and Well Log Models

3.3. ML Methods

3.3.1. Method of BPNN

3.3.2. Method of ELM

3.3.3. Method of RF

3.4. Evaluation Criteria

4. Results and Discussion

4.1. TOC and Source Rock Study from Geochemical Analyses

4.2. TOC Quantification from Multiple Regression Models

4.3. TOC Quantification Using ML Methods

4.4. Comparison between Multiple Regression and ML

5. Conclusions

- The TOC of the Yueguifeng Formation source rocks is relatively high, ranging from 0.2% to 3.34%. The hydrocarbon generation potential (S1 + S2) generally ranges from 0.5 to 12.51 mg HC/g rock. The kerogen is mainly type II. Overall, the Yueguifeng Formation source rocks have good hydrocarbon generation potential.

- The correlation between each log and TOC was evaluated using linear regression and Pearson correlation analysis. TOC in the Yueguifeng Formation source rocks shows the strongest response on the density (DEN), acoustic transit time (AC, also referred to as DT), and neutron (CN) logs. For every model, using all well logs as input gave better performance than using only the selected well logs.

- In terms of accuracy, the error analysis shows that each ML model with all well logs as input performed better than the multiple regression models, and that the BPNN model outperformed the other ML models. In terms of run time, the RF model was much faster than the BPNN model, so RF is the better choice when processing speed is a priority. This study demonstrates that ML models can provide an efficient way to estimate TOC from readily available borehole log data in the study area.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| R | AC | CN | DEN | GR | RT | TOC |
|---|---|---|---|---|---|---|
| AC | 1 | 0.85133 | −0.68255 | −0.09409 | −0.16529 | 0.67236 |
| CN | | 1 | −0.61136 | −0.06937 | −0.08279 | 0.77855 |
| DEN | | | 1 | 0.04474 | 0.1027 | −0.49961 |
| GR | | | | 1 | 0.42611 | 0.00713 |
| RT | | | | | 1 | 0.1442 |
| TOC | | | | | | 1 |
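The log screening summarized in the conclusions rests on Pearson coefficients like those in the correlation matrix above. The sketch below illustrates that screening and is not the authors' code.

- `pearson_r` computes the sample Pearson coefficient from raw log and TOC values, if those were available.
- `rank_logs_by_abs_r` (a hypothetical helper) orders the logs by |R| using the TOC column of the matrix.

```python
"""Pearson correlation and |R|-based ranking of well logs against TOC (illustrative sketch)."""
import math


def pearson_r(x, y):
    """Return the sample Pearson correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    denom = math.sqrt(sxx * syy)
    if denom == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / denom


def rank_logs_by_abs_r(r_with_toc):
    """Sort log mnemonics by the magnitude of their correlation with TOC, strongest first."""
    return sorted(r_with_toc, key=lambda name: abs(r_with_toc[name]), reverse=True)


# TOC column of the correlation matrix above.
R_WITH_TOC = {"AC": 0.67236, "CN": 0.77855, "DEN": -0.49961, "GR": 0.00713, "RT": 0.1442}

if __name__ == "__main__":
    print(rank_logs_by_abs_r(R_WITH_TOC))  # ['CN', 'AC', 'DEN', 'RT', 'GR']
```

The three highest-ranked logs are CN, AC, and DEN. This matches the logs identified in the conclusions as having the strongest TOC response.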

| Well | Depth (m) | TOC (%) | S1 (mg HC/g rock) | S2 (mg HC/g rock) | Pg = S1 + S2 (mg HC/g rock) | HI = 100 × S2/TOC (mg HC/g TOC) | Tmax (°C) |
|---|---|---|---|---|---|---|---|
| A | 2701 | 0.55 | 0.12 | 0.88 | 1 | 159.42 | 438 |
| | 2707 | 1.09 | 0.22 | 1.19 | 1.41 | 109.17 | 444 |
| | 2720 | 1.58 | 0.27 | 2.11 | 2.38 | 133.54 | 434 |
| | 2721 | 0.4 | 0.12 | 0.99 | 1.11 | 247.5 | 442 |
| | 2916.6 | 0.99 | 0.02 | 0.48 | 0.5 | 48 | 454 |
| | 2917 | 0.88 | 0.02 | 0.3 | 0.32 | 34 | 452 |
| | 2940 | 0.73 | 0.89 | 0.77 | 1.66 | 105.48 | 430 |
| | 2960 | 0.7 | 0.23 | 0.96 | 1.19 | 137.14 | 428 |
| | 3079 | 0.35 | 0.02 | 0.18 | 0.2 | 34 | 451 |
| | 3092 | 0.47 | 0.02 | 0.31 | 0.33 | 65.96 | 451 |
| | 3099 | 0.37 | 0.02 | 0.21 | 0.23 | 56.76 | 453 |
| B | 2356.5 | 3.34 | 0.13 | 11.72 | 11.85 | 350.9 | 438 |
| | 2362.5 | 2.99 | 0.33 | 11.68 | 12.01 | 390.64 | 441 |
| | 2395.5 | 1.88 | 0.22 | 2.98 | 3.2 | 158.51 | 438 |
| | 2401.5 | 2.42 | 0.23 | 7.21 | 7.44 | 297.93 | 440 |
| | 2422.5 | 2.59 | 0.42 | 8.57 | 8.99 | 330.89 | 443 |
| | 2425.5 | 2.12 | 0.37 | 7.21 | 7.58 | 340.09 | 440 |
| | 2434.5 | 3.18 | 0.15 | 12.36 | 12.51 | 388.68 | 440 |
| | 2488.5 | 2.88 | 0.14 | 7.48 | 7.62 | 259.72 | 440 |
| | 2495 | 2.42 | 0.22 | 9.2 | 9.42 | 380.17 | 438 |
| | 2506.5 | 2.65 | 0.34 | 9.12 | 9.46 | 344.15 | 441 |
| | 2518.5 | 2.51 | 0.51 | 8 | 8.51 | 318.73 | 440 |
| C | 3576 | 0.97 | 0.21 | 0.45 | 0.66 | 46.39 | 451 |
| | 3577.5 | 1.61 | 0.55 | 1.28 | 1.83 | 79.5 | 458 |
| | 3586.5 | 1.24 | 0.33 | 0.66 | 0.99 | 53.23 | 452 |
| | 3595.5 | 1.71 | 0.36 | 0.98 | 1.34 | 57.31 | 461 |
| | 3604.5 | 1.86 | 0.21 | 1.16 | 1.37 | 62.37 | 458 |
| | 3607.5 | 1.87 | 0.38 | 1.24 | 1.62 | 66.31 | 459 |
| | 3630.5 | 1.48 | 0.17 | 0.61 | 0.78 | 41.22 | 460 |
| | 3640 | 0.68 | 0.02 | 0.19 | 0.21 | 27.94 | 470 |
| | 3640.05 | 0.7 | 0.01 | 0.12 | 0.13 | 17.14 | 479 |
| | 3640.8 | 1.29 | 0.13 | 0.83 | 0.96 | 64.34 | 466 |
| | 3641.6 | 2.4 | 1.08 | 1.98 | 3.06 | 82.5 | 470 |
| | 3641.7 | 0.83 | 0.05 | 0.21 | 0.26 | 25.3 | 478 |
| | 3642.7 | 2.17 | 0.11 | 1.08 | 1.19 | 49.77 | 466 |
| | 3643 | 0.53 | 0.03 | 0.21 | 0.24 | 39.55 | 472 |
| | 3643.6 | 0.2 | 0.01 | 0.04 | 0.05 | 20 | 471 |
| | 3643.7 | 0.64 | 0.03 | 0.16 | 0.19 | 25 | 476 |
| | 3643.79 | 0.58 | 0.03 | 0.18 | 0.21 | 31.03 | 452 |
| | 3645.8 | 1.14 | 0.17 | 0.6 | 0.77 | 52.63 | 465 |
| | 3646 | 1.11 | 0.1 | 0.62 | 0.72 | 55.86 | 469 |
| | 3646.4 | 1.11 | 0.11 | 0.6 | 0.71 | 54.05 | 466 |
| | 3646.95 | 1.69 | 0.14 | 0.55 | 0.69 | 32.54 | 463 |
| | 3647 | 1.04 | 0.13 | 0.6 | 0.73 | 57.69 | 462 |
| | 3647.09 | 1.54 | 0.38 | 0.83 | 1.21 | 53.9 | 461 |
| | 3647.2 | 0.93 | 0.1 | 0.36 | 0.46 | 38.71 | 471 |
| | 3647.25 | 1.74 | 0.37 | 0.71 | 1.08 | 40.8 | 470 |
| | 3647.42 | 1.49 | 0.12 | 0.6 | 0.72 | 40.27 | 468 |
| | 3647.5 | 1.24 | 0.1 | 0.62 | 0.72 | 50 | 462 |
| | 3647.5 | 1.23 | 0.11 | 0.74 | 0.85 | 60.16 | 463 |
| | 3647.81 | 1.57 | 0.16 | 0.67 | 0.83 | 42.68 | 465 |
| | 3648.16 | 0.21 | 0.03 | 0.21 | 0.24 | 100 | 455 |
| | 3688.5 | 1.84 | 0.29 | 1.54 | 1.83 | 83.7 | 448 |
| | 3702 | 0.88 | 0.16 | 0.32 | 0.48 | 36.36 | 458 |
| | 3712.5 | 1.16 | 0.15 | 0.6 | 0.75 | 51.72 | 471 |
| | 3724.5 | 0.57 | 0.07 | 0.26 | 0.33 | 45.61 | 461 |
| | 3739.5 | 1.91 | 0.88 | 1.47 | 2.35 | 76.96 | 455 |
| | 3745 | 1.49 | 0.52 | 1.21 | 1.73 | 81.21 | 456 |
| | 3751.5 | 2.26 | 1.27 | 1.87 | 3.14 | 82.74 | 457 |
| | 3755 | 2.77 | 1.32 | 2.01 | 3.33 | 72.56 | 457 |
| | 3769.5 | 1.99 | 1.3 | 1.3 | 2.6 | 65.33 | 455 |
| | 3772.5 | 2.49 | 1.38 | 2.43 | 3.81 | 97.59 | 454 |
| | 3775.5 | 2.21 | 2.05 | 1.93 | 3.98 | 87.33 | 453 |
| | 3784.5 | 2.77 | 1.08 | 1.68 | 2.76 | 60.65 | 446 |
| | 3793.5 | 2.06 | 1.25 | 1.25 | 2.5 | 60.68 | 457 |
| | 3793.5 | 2.64 | 1.03 | 1.61 | 2.64 | 60.98 | 451 |
| | 3795 | 1.93 | 0.99 | 1.22 | 2.21 | 63.21 | 453 |
| | 3795 | 2.18 | 1.01 | 1.25 | 2.26 | 57.34 | 455 |
| | 3796.5 | 1.9 | 0.94 | 1.15 | 2.09 | 60.53 | 459 |
| | 3796.5 | 2.55 | 1.21 | 2.4 | 3.61 | 94.12 | 458 |
| | 3813 | 1.4 | 0.2 | 0.68 | 0.88 | 48.57 | 457 |
| | 3852 | 2.73 | 0.89 | 1.61 | 2.5 | 58.97 | 464 |
| | 3883 | 2.86 | 1.27 | 1.83 | 3.1 | 63.99 | 447 |
| | 3888 | 2.89 | 1.52 | 2.15 | 3.67 | 74.39 | 456 |
| | 3903 | 1.47 | 0.13 | 0.58 | 0.71 | 39.46 | 455 |
| | 3910.5 | 1.48 | 0.37 | 0.61 | 0.98 | 41.22 | 453 |
| | 3913.5 | 0.91 | 0.28 | 0.43 | 0.71 | 47.25 | 455 |
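Two columns in the geochemical table are derived from the Rock-Eval measurements: Pg = S1 + S2 and HI = 100 × S2/TOC. The sketch below recomputes both for three rows copied from the table.

It assumes the standard Rock-Eval units: S1 and S2 in mg HC/g rock, and TOC in wt%. The function names are illustrative, not taken from the study.

```python
"""Rock-Eval derived parameters (sketch; assumes S1, S2 in mg HC/g rock and TOC in wt%)."""


def generation_potential(s1, s2):
    """Hydrocarbon generation potential Pg = S1 + S2, in mg HC/g rock."""
    return s1 + s2


def hydrogen_index(s2, toc_wt_pct):
    """Hydrogen index HI = 100 * S2 / TOC, in mg HC/g TOC."""
    if toc_wt_pct <= 0:
        raise ValueError("TOC must be positive")
    return 100.0 * s2 / toc_wt_pct


# (well, depth in m, TOC %, S1, S2) copied from three rows of the table above.
SAMPLES = [
    ("A", 2707.0, 1.09, 0.22, 1.19),
    ("B", 2356.5, 3.34, 0.13, 11.72),
    ("C", 3755.0, 2.77, 1.32, 2.01),
]

if __name__ == "__main__":
    for well, depth, toc, s1, s2 in SAMPLES:
        pg = generation_potential(s1, s2)
        hi = hydrogen_index(s2, toc)
        print(f"Well {well}, {depth:.1f} m: Pg = {pg:.2f}, HI = {hi:.2f}")
```

For these three rows the recomputed Pg and HI agree with the tabulated values to two decimals.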

| Well | Depth (m) | TOC (%) | Predicted TOC, Multiple Regression (HXC) | Predicted TOC, Multiple Regression (HX) |
|---|---|---|---|---|
| A | 2701 | 0.55 | 0.77 | 0.55 |
| | 2707 | 1.09 | 0.92 | 0.68 |
| | 2720 | 1.58 | 0.89 | 0.59 |
| | 2721 | 0.4 | 0.93 | 0.51 |
| | 2916.6 | 0.99 | 0.96 | 0.82 |
| | 2917 | 0.88 | 0.91 | 0.81 |
| | 2940 | 0.73 | 0.61 | 0.6 |
| | 2960 | 0.7 | 0.8 | 0.71 |
| | 3079 | 0.35 | 0.91 | 0.9 |
| | 3092 | 0.47 | 0.8 | 0.81 |
| | 3099 | 0.37 | 1.3 | 1.36 |
| B | 2356.5 | 3.34 | 3.16 | 3.11 |
| | 2362.5 | 2.99 | 2.91 | 2.96 |
| | 2395.5 | 1.88 | 2.44 | 2.62 |
| | 2401.5 | 2.42 | 1.59 | 1.68 |
| | 2422.5 | 2.59 | 2.61 | 2.79 |
| | 2425.5 | 2.12 | 2.5 | 2.62 |
| | 2434.5 | 3.18 | 2.27 | 2.52 |
| | 2488.5 | 2.88 | 2.4 | 2.84 |
| | 2495 | 2.42 | 1.87 | 2.16 |
| | 2506.5 | 2.65 | 1.91 | 2.14 |
| | 2518.5 | 2.51 | 1.97 | 2.15 |
| C | 3576 | 0.97 | 1.35 | 1.39 |
| | 3577.5 | 1.61 | 1.35 | 1.41 |
| | 3586.5 | 1.24 | 1.43 | 1.44 |
| | 3595.5 | 1.71 | 2.19 | 2.37 |
| | 3604.5 | 1.86 | 1.37 | 1.54 |
| | 3607.5 | 1.87 | 1.84 | 2.01 |
| | 3630.5 | 1.48 | 1.16 | 1.25 |
| | 3640 | 0.68 | 1.21 | 1.01 |
| | 3640.05 | 0.7 | 1.29 | 1.09 |
| | 3640.8 | 1.29 | 1.24 | 1.06 |
| | 3641.6 | 2.4 | 0.94 | 1.09 |
| | 3641.7 | 0.83 | 0.99 | 1.1 |
| | 3642.7 | 2.17 | 1.29 | 1.48 |
| | 3643 | 0.53 | 1.17 | 1.34 |
| | 3643.6 | 0.2 | 1.25 | 1.29 |
| | 3643.7 | 0.64 | 1.26 | 1.27 |
| | 3643.79 | 0.58 | 1.26 | 1.27 |
| | 3645.8 | 1.14 | 1.43 | 1.5 |
| | 3646 | 1.11 | 1.16 | 1.18 |
| | 3646.4 | 1.11 | 1.05 | 1.11 |
| | 3646.95 | 1.69 | 1.43 | 1.54 |
| | 3647 | 1.04 | 1.43 | 1.54 |
| | 3647.09 | 1.54 | 1.35 | 1.47 |
| | 3647.2 | 0.93 | 1.24 | 1.34 |
| | 3647.25 | 1.74 | 1.24 | 1.34 |
| | 3647.42 | 1.49 | 1.18 | 1.27 |
| | 3647.5 | 1.24 | 1.17 | 1.26 |
| | 3647.5 | 1.23 | 1.17 | 1.26 |
| | 3647.81 | 1.57 | 1.21 | 1.32 |
| | 3648.16 | 0.21 | 1.25 | 1.35 |
| | 3688.5 | 1.84 | 1.41 | 1.59 |
| | 3702 | 0.88 | 1.5 | 1.71 |
| | 3712.5 | 1.16 | 0.89 | 0.87 |
| | 3724.5 | 0.57 | 1.31 | 1.33 |
| | 3739.5 | 1.91 | 2.24 | 2.18 |
| | 3745 | 1.49 | 2.16 | 2.15 |
| | 3751.5 | 2.26 | 2.37 | 2.2 |
| | 3755 | 2.77 | 2.7 | 2.1 |
| | 3769.5 | 1.99 | 2.1 | 1.91 |
| | 3772.5 | 2.49 | 2.13 | 1.8 |
| | 3775.5 | 2.21 | 2.06 | 1.69 |
| | 3784.5 | 2.77 | 2.21 | 2.06 |
| | 3793.5 | 2.06 | 1.96 | 1.8 |
| | 3793.5 | 2.64 | 2.02 | 1.89 |
| | 3795 | 1.93 | 2.35 | 2.24 |
| | 3795 | 2.18 | 2.2 | 2.06 |
| | 3796.5 | 1.9 | 2.04 | 1.87 |
| | 3796.5 | 2.55 | 2.06 | 1.89 |
| | 3813 | 1.4 | 1.46 | 1.27 |
| | 3852 | 2.73 | 3.24 | 3.1 |
| | 3883 | 2.86 | 2.88 | 2.14 |
| | 3888 | 2.89 | 3.55 | 2.25 |
| | 3903 | 1.47 | 1.97 | 2.19 |
| | 3910.5 | 1.48 | 1.19 | 0.91 |
| | 3913.5 | 0.91 | 1.27 | 1.29 |
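
To illustrate how the error measures of Section 3.4 apply to this table, the sketch below computes the mean absolute error (MAE) and root-mean-square error (RMSE) of the multiple-regression predictions for well B only, once for the HXC input set and once for the HX input set. It uses only the Python standard library; the choice of well B is arbitrary, and the resulting single-well numbers are not the tested-set statistics reported in the paper.

```python
import math

# Well B rows from the table above: (depth m, measured TOC %, HXC prediction %, HX prediction %)
WELL_B = [
    (2356.5, 3.34, 3.16, 3.11),
    (2362.5, 2.99, 2.91, 2.96),
    (2395.5, 1.88, 2.44, 2.62),
    (2401.5, 2.42, 1.59, 1.68),
    (2422.5, 2.59, 2.61, 2.79),
    (2425.5, 2.12, 2.50, 2.62),
    (2434.5, 3.18, 2.27, 2.52),
    (2488.5, 2.88, 2.40, 2.84),
    (2495.0, 2.42, 1.87, 2.16),
    (2506.5, 2.65, 1.91, 2.14),
    (2518.5, 2.51, 1.97, 2.15),
]


def mae(y_true, y_pred):
    """Mean absolute error between measured and predicted TOC."""
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root-mean-square error between measured and predicted TOC."""
    return math.sqrt(sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


measured = [row[1] for row in WELL_B]
for label, column in (("HXC", 2), ("HX", 3)):
    predicted = [row[column] for row in WELL_B]
    print(f"Well B, {label}: MAE = {mae(measured, predicted):.3f}, RMSE = {rmse(measured, predicted):.3f}")
```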

| Method | Dataset | Input | R² | MAE | MSE | RMSE |
|---|---|---|---|---|---|---|
| RF | Training | HXC | 0.97 | 0.03 | 0.02 | 0.15 |
| RF | Training | HX | 0.97 | 0.03 | 0.02 | 0.15 |
| RF | Tested | HXC | 0.65 | 0.39 | 0.26 | 0.51 |
| RF | Tested | HX | 0.49 | 0.56 | 0.45 | 0.67 |
| BPNN | Training | HXC | 0.91 | 0.22 | 0.07 | 0.26 |
| BPNN | Training | HX | 0.84 | 0.26 | 0.26 | 0.51 |
| BPNN | Tested | HXC | 0.70 | 0.37 | 0.23 | 0.48 |
| BPNN | Tested | HX | 0.53 | 0.47 | 0.43 | 0.66 |
| ELM | Training | HXC | 0.88 | 0.23 | 0.10 | 0.31 |
| ELM | Training | HX | 0.82 | 0.28 | 0.12 | 0.35 |
| ELM | Tested | HXC | 0.66 | 0.42 | 0.27 | 0.52 |
| ELM | Tested | HX | 0.57 | 0.45 | 0.33 | 0.57 |
| Multiple Regression | Tested | HXC | 0.63 | 0.39 | 0.24 | 0.49 |
| Multiple Regression | Tested | HX | 0.60 | 0.41 | 0.26 | 0.51 |
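
Because RMSE is the square root of MSE, each MSE/RMSE pair in the table above can be cross-checked even though both are rounded to two decimals: the pair is consistent if some unrounded MSE rounds to the reported MSE while its square root rounds to the reported RMSE. The sketch below applies that interval test to all fourteen pairs. It is a reader-side sanity check, not part of the authors' workflow, and `rounding_consistent` is a hypothetical helper.

```python
import math

# (method, dataset, input set, MSE, RMSE) as reported in the error-analysis table above.
REPORTED = [
    ("RF", "training", "HXC", 0.02, 0.15),
    ("RF", "training", "HX", 0.02, 0.15),
    ("RF", "tested", "HXC", 0.26, 0.51),
    ("RF", "tested", "HX", 0.45, 0.67),
    ("BPNN", "training", "HXC", 0.07, 0.26),
    ("BPNN", "training", "HX", 0.26, 0.51),
    ("BPNN", "tested", "HXC", 0.23, 0.48),
    ("BPNN", "tested", "HX", 0.43, 0.66),
    ("ELM", "training", "HXC", 0.10, 0.31),
    ("ELM", "training", "HX", 0.12, 0.35),
    ("ELM", "tested", "HXC", 0.27, 0.52),
    ("ELM", "tested", "HX", 0.33, 0.57),
    ("Multiple Regression", "tested", "HXC", 0.24, 0.49),
    ("Multiple Regression", "tested", "HX", 0.26, 0.51),
]


def rounding_consistent(mse: float, rmse: float, half_step: float = 0.005) -> bool:
    """Hypothetical helper: can one unrounded MSE explain both rounded values?

    The true MSE lies in [mse - h, mse + h]. The true RMSE lies in
    [rmse - h, rmse + h], so the true MSE also lies in that interval squared.
    The pair is consistent when the two MSE intervals overlap.
    """
    low = max(mse - half_step, max(rmse - half_step, 0.0) ** 2)
    high = min(mse + half_step, (rmse + half_step) ** 2)
    return low <= high


for method, dataset, inputs, mse, rmse in REPORTED:
    status = "ok" if rounding_consistent(mse, rmse) else "CHECK"
    print(f"{method:20s} {dataset:8s} {inputs:3s}  sqrt(MSE)={math.sqrt(mse):.3f}  RMSE={rmse:.2f}  {status}")
```

A flagged pair would point to a transcription or rounding slip in the table rather than to a modelling issue.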

| Core data, training (%) | RF HXC (training) | RF HX (training) | BPNN HXC (training) | BPNN HX (training) | ELM HXC (training) | ELM HX (training) | Core data, tested (%) | RF HXC (tested) | RF HX (tested) | BPNN HXC (tested) | BPNN HX (tested) | ELM HXC (tested) | ELM HX (tested) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.99 | 0.99 | 0.99 | 0.71 | 1.11 | 0.75 | 0.82 | 1.40 | 0.70 | 0.70 | 1.30 | 1.22 | 1.02 | 1.07 |
| 0.88 | 0.88 | 0.88 | 0.75 | 0.92 | 0.74 | 0.78 | 2.73 | 2.77 | 1.88 | 2.72 | 2.77 | 2.73 | 2.81 |
| 0.47 | 0.47 | 0.47 | 0.65 | 0.92 | 0.66 | 0.42 | 2.86 | 2.77 | 2.77 | 2.84 | 2.82 | 2.73 | 2.42 |
| 3.34 | 3.34 | 3.34 | 3.24 | 3.22 | 3.26 | 2.79 | 2.89 | 2.77 | 2.65 | 3.32 | 2.86 | 3.02 | 2.56 |
| 2.99 | 2.99 | 2.99 | 2.83 | 2.96 | 3.01 | 2.90 | 1.47 | 1.91 | 1.87 | 1.27 | 2.94 | 2.12 | 2.53 |
| 1.88 | 1.88 | 1.88 | 1.64 | 2.50 | 1.90 | 2.68 | 1.48 | 1.61 | 1.16 | 0.93 | 1.00 | 1.02 | 0.92 |
| 2.59 | 2.59 | 2.59 | 2.76 | 3.07 | 2.46 | 3.01 | 0.91 | 0.57 | 1.84 | 0.52 | 1.48 | 1.05 | 1.13 |
| 3.18 | 3.18 | 3.18 | 3.12 | 3.13 | 2.73 | 2.84 | 0.73 | 0.47 | 0.47 | 0.41 | 0.72 | 0.36 | 0.44 |
| 2.88 | 2.88 | 2.88 | 3.16 | 2.87 | 2.80 | 3.15 | 0.55 | 0.47 | 0.47 | 0.60 | 0.75 | 0.22 | 0.44 |
| 2.65 | 2.65 | 2.65 | 2.30 | 2.72 | 2.29 | 2.44 | 1.09 | 0.47 | 0.47 | 0.81 | 0.79 | 0.58 | 0.52 |
| 2.51 | 2.51 | 2.51 | 2.24 | 2.58 | 2.03 | 2.36 | 1.58 | 0.47 | 0.47 | 0.70 | 0.71 | 0.36 | 0.44 |
| 0.97 | 0.97 | 0.97 | 0.80 | 1.11 | 1.05 | 1.34 | 0.35 | 0.99 | 0.99 | 0.55 | 1.47 | 1.01 | 0.96 |
| 1.61 | 1.61 | 1.61 | 1.51 | 1.51 | 1.08 | 1.39 | 0.37 | 0.97 | 0.97 | 0.70 | 1.48 | 0.90 | 1.30 |
| 1.24 | 1.24 | 1.24 | 1.34 | 1.64 | 1.61 | 1.47 | 2.12 | 2.59 | 2.59 | 3.03 | 3.04 | 2.77 | 2.92 |
| 1.86 | 1.86 | 1.86 | 1.36 | 1.41 | 1.43 | 1.44 | 2.42 | 2.65 | 1.24 | 2.05 | 2.50 | 2.29 | 2.35 |
| 1.87 | 1.87 | 1.87 | 1.53 | 2.42 | 1.89 | 2.33 | 2.42 | 2.59 | 3.18 | 1.78 | 2.11 | 1.62 | 1.99 |
| 0.68 | 0.68 | 0.68 | 0.55 | 0.68 | 0.59 | 0.81 | 1.71 | 2.51 | 3.18 | 2.72 | 2.97 | 2.30 | 2.68 |
| 0.7 | 0.7 | 0.70 | 0.76 | 1.20 | 0.88 | 0.89 | 1.48 | 0.83 | 0.88 | 0.88 | 1.27 | 1.49 | 1.22 |
| 2.4 | 2.4 | 2.40 | 2.23 | 1.74 | 1.51 | 2.33 | 1.29 | 1.23 | 1.11 | 0.86 | 1.10 | 0.80 | 0.95 |
| 0.83 | 0.83 | 0.83 | 0.98 | 1.40 | 1.36 | 1.05 | 0.40 | 0.70 | 0.47 | 0.50 | 0.76 | 0.08 | 0.36 |
| 2.17 | 2.17 | 2.17 | 2.07 | 2.31 | 1.33 | 1.39 | 0.70 | 0.47 | 0.47 | 0.61 | 0.69 | 0.21 | 0.54 |
| 0.531 | 0.531 | 0.53 | 0.87 | 0.53 | 0.53 | 0.53 | 1.49 | 1.91 | 2.51 | 1.99 | 2.58 | 2.18 | 2.36 |
| 0.2 | 0.2 | 0.20 | 0.21 | 0.20 | 0.21 | 0.22 | 2.26 | 1.91 | 1.91 | 2.17 | 2.82 | 2.44 | 2.45 |
| 0.64 | 0.58 | 0.64 | 0.63 | 0.63 | 0.62 | 1.18 | 1.11 | 1.11 | 0.20 | 0.95 | 1.31 | 0.80 | 1.10 |
| 0.58 | 0.58 | 0.64 | 0.53 | 0.53 | 0.56 | 1.18 | 1.54 | 1.04 | 2.17 | 1.26 | 1.55 | 1.32 | 1.40 |
| 1.14 | 1.14 | 1.14 | 1.26 | 1.77 | 1.30 | 1.51 | 1.24 | 1.23 | 1.23 | 1.11 | 1.27 | 1.21 | 1.16 |
| 1.11 | 1.11 | 1.11 | 0.99 | 1.12 | 0.89 | 0.99 | 0.21 | 1.49 | 0.53 | 1.23 | 1.36 | 1.28 | 1.27 |
| 1.69 | 1.04 | 1.04 | 1.30 | 1.67 | 1.41 | 1.50 | | | | | | | |
| 1.04 | 1.04 | 1.04 | 1.30 | 1.67 | 1.01 | 1.50 | | | | | | | |
| 0.93 | 0.93 | 0.93 | 1.22 | 1.39 | 1.24 | 0.91 | | | | | | | |
| 1.74 | 0.93 | 0.93 | 1.22 | 1.39 | 1.24 | 1.25 | | | | | | | |
| 1.49 | 1.49 | 1.49 | 1.15 | 1.29 | 1.21 | 1.17 | | | | | | | |
| 1.23 | 1.23 | 1.23 | 1.11 | 1.27 | 1.21 | 1.16 | | | | | | | |
| 1.57 | 1.57 | 1.57 | 1.13 | 1.34 | 1.30 | 1.24 | | | | | | | |
| 1.84 | 1.84 | 1.84 | 1.39 | 1.31 | 1.45 | 1.47 | | | | | | | |
| 0.88 | 0.88 | 0.88 | 0.96 | 1.40 | 0.88 | 0.90 | | | | | | | |
| 1.16 | 1.16 | 1.16 | 0.69 | 1.36 | 0.89 | 0.85 | | | | | | | |
| 0.57 | 0.57 | 0.57 | 0.77 | 1.24 | 0.56 | 0.58 | | | | | | | |
| 1.91 | 1.91 | 1.91 | 1.81 | 1.95 | 1.81 | 1.90 | | | | | | | |
| 2.77 | 2.77 | 2.77 | 2.83 | 2.77 | 2.62 | 2.33 | | | | | | | |
| 1.99 | 1.99 | 1.99 | 2.09 | 2.32 | 1.99 | 2.03 | | | | | | | |
| 2.49 | 2.49 | 2.49 | 2.15 | 2.17 | 2.23 | 1.87 | | | | | | | |
| 2.21 | 2.21 | 2.21 | 2.08 | 2.05 | 2.04 | 1.74 | | | | | | | |
| 2.77 | 2.77 | 2.77 | 2.13 | 2.32 | 2.39 | 2.21 | | | | | | | |
| 2.06 | 2.06 | 2.06 | 1.91 | 2.29 | 2.00 | 1.90 | | | | | | | |
| 2.64 | 2.64 | 2.64 | 2.36 | 2.29 | 2.04 | 2.46 | | | | | | | |
| 1.93 | 1.93 | 1.93 | 2.16 | 1.98 | 2.02 | 2.41 | | | | | | | |
| 2.18 | 2.18 | 2.18 | 2.14 | 2.32 | 2.49 | 2.22 | | | | | | | |
| 1.9 | 1.9 | 1.90 | 2.05 | 2.36 | 2.18 | 1.99 | | | | | | | |
| 2.55 | 2.55 | 2.55 | 2.09 | 2.44 | 2.25 | 2.03 | | | | | | | |
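
The error measures can be recomputed directly from the tested-set columns above. This sketch takes the 27 tested samples and the RF predictions made with the HXC input, and computes MAE, MSE, and RMSE with the Python standard library; R² is omitted here. Rounded to two decimals it should give MAE 0.39, MSE 0.26, and RMSE 0.51, in line with the RF tested HXC entries of the error-analysis table. `error_metrics` is an illustrative helper, not code from the study.

```python
import math

# Tested-set columns from the table above: core TOC (%) and RF predictions with the HXC input.
CORE_TESTED = [1.40, 2.73, 2.86, 2.89, 1.47, 1.48, 0.91, 0.73, 0.55, 1.09, 1.58, 0.35, 0.37,
               2.12, 2.42, 2.42, 1.71, 1.48, 1.29, 0.40, 0.70, 1.49, 2.26, 1.11, 1.54, 1.24, 0.21]
RF_HXC_TESTED = [0.70, 2.77, 2.77, 2.77, 1.91, 1.61, 0.57, 0.47, 0.47, 0.47, 0.47, 0.99, 0.97,
                 2.59, 2.65, 2.59, 2.51, 0.83, 1.23, 0.70, 0.47, 1.91, 1.91, 1.11, 1.04, 1.23, 1.49]


def error_metrics(y_true, y_pred):
    """Return MAE, MSE, and RMSE for paired measured/predicted values."""
    if len(y_true) != len(y_pred):
        raise ValueError("measured and predicted lists must have the same length")
    residuals = [p - t for t, p in zip(y_true, y_pred)]
    n = len(residuals)
    mae = sum(abs(r) for r in residuals) / n
    mse = sum(r * r for r in residuals) / n
    return {"MAE": mae, "MSE": mse, "RMSE": math.sqrt(mse)}


metrics = error_metrics(CORE_TESTED, RF_HXC_TESTED)
print({name: round(value, 2) for name, value in metrics.items()})
```

Swapping `RF_HXC_TESTED` for any other tested-prediction column gives the corresponding errors for that model and input set.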


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Han, X.; Hou, D.; Cheng, X.; Li, Y.; Niu, C.; Chen, S. Prediction of TOC in Lishui–Jiaojiang Sag Using Geochemical Analysis, Well Logs, and Machine Learning. Energies 2022, 15, 9480. https://doi.org/10.3390/en15249480
