Eliminate Time Dispersion of Seismic Wavefield Simulation with Semi-Supervised Deep Learning

Abstract

1. Introduction

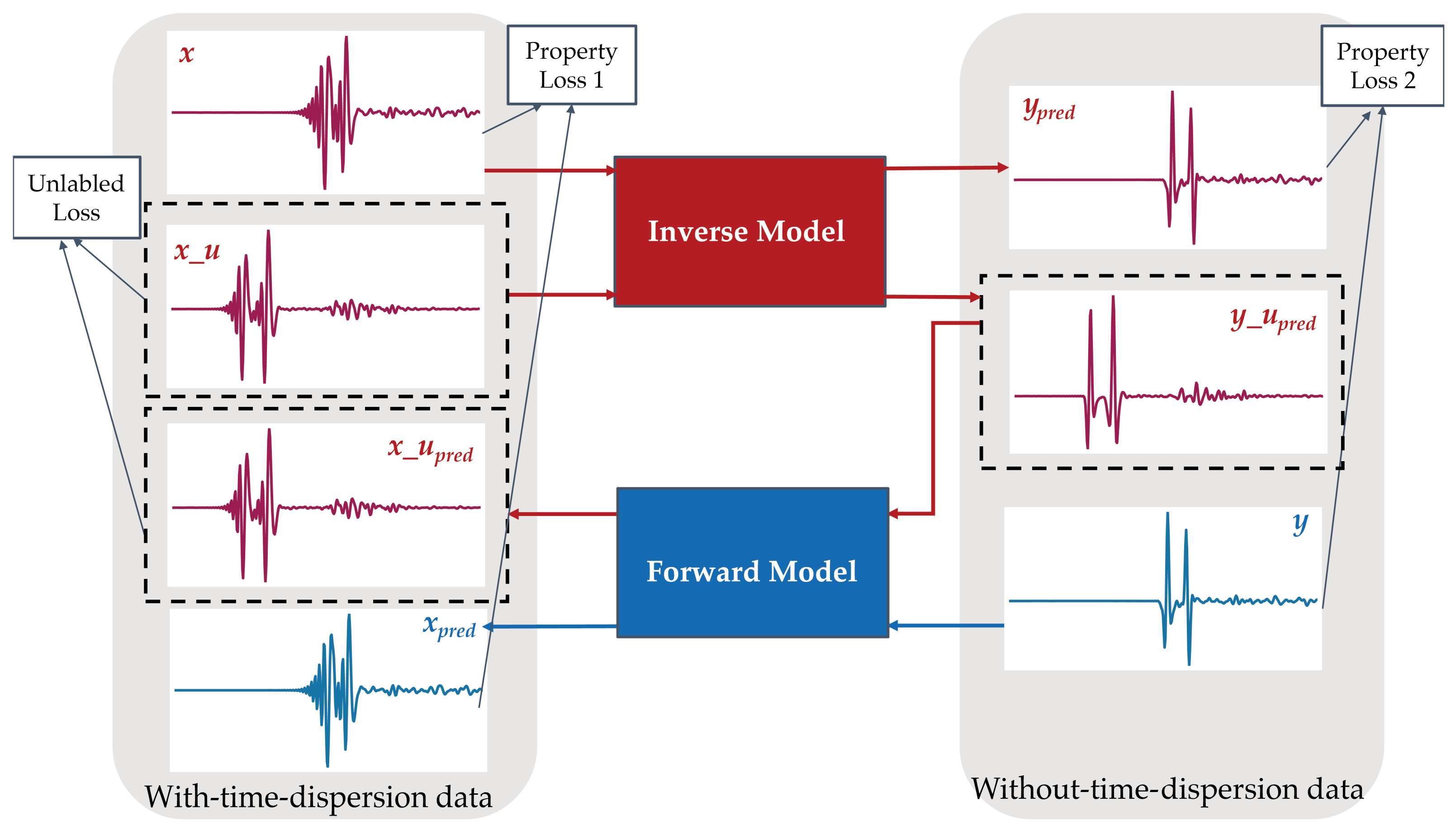

2. Methods

2.1. Theory

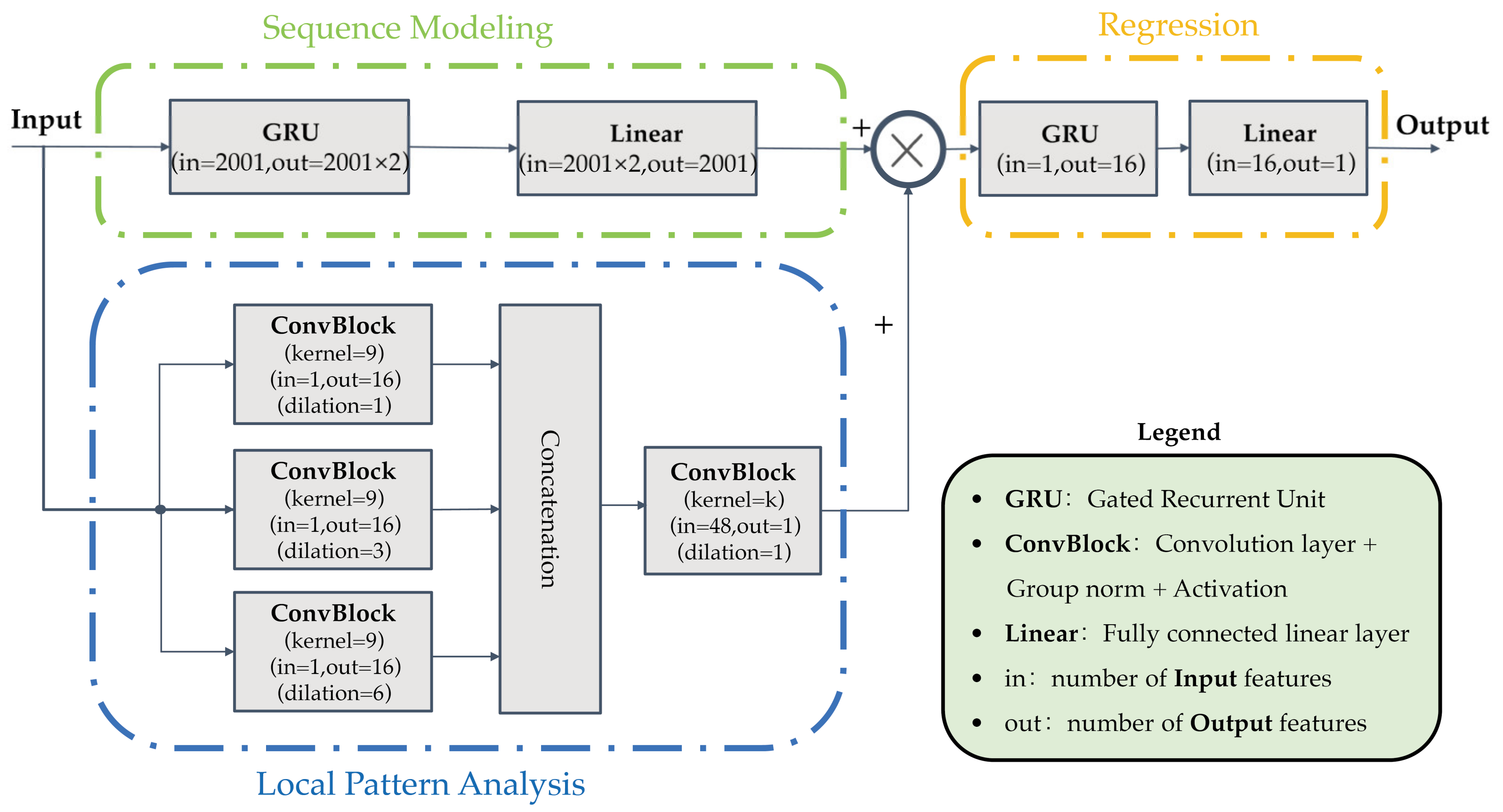

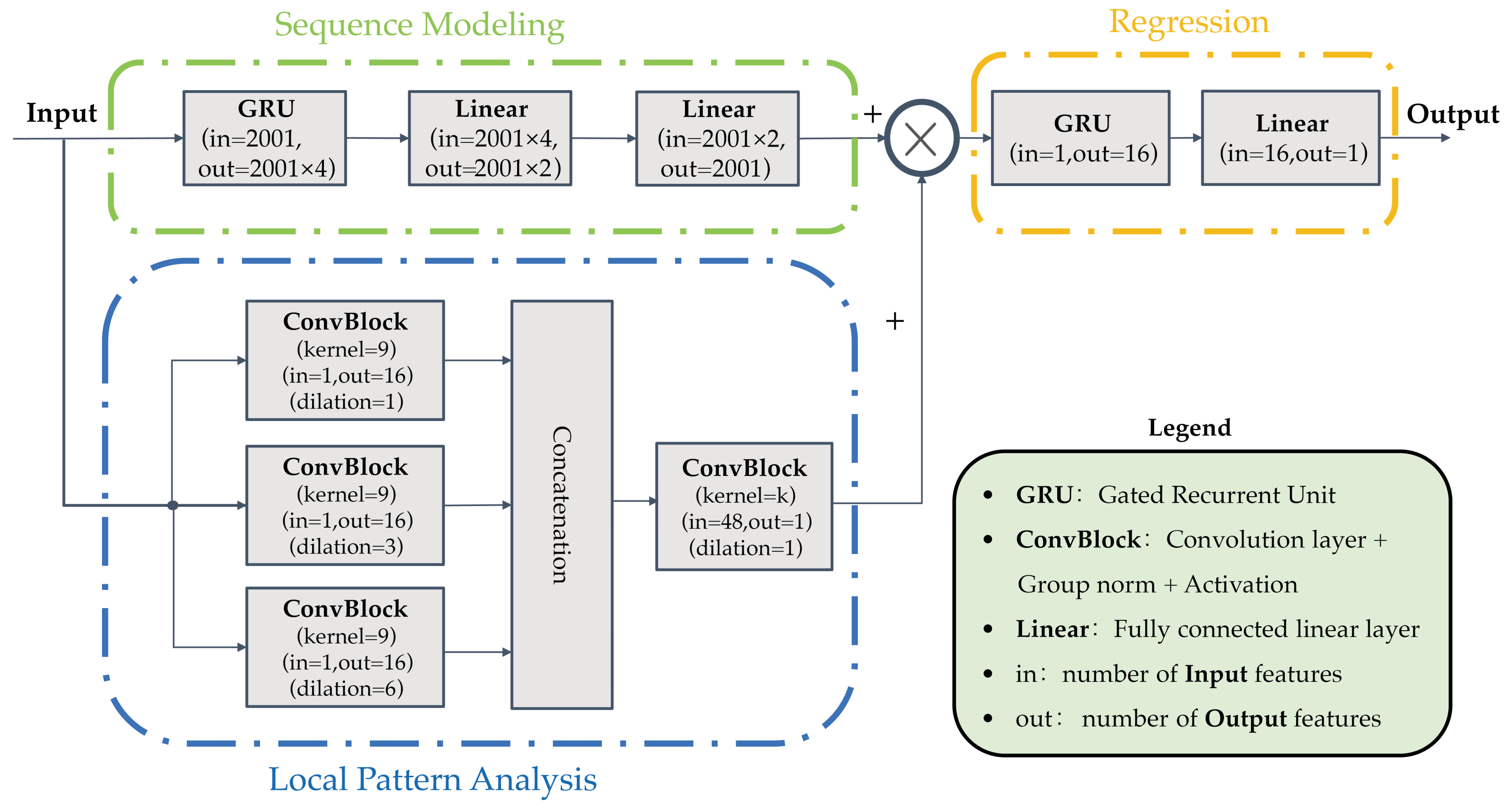

2.2. Network Structures

2.3. Loss Function

2.4. Training Procedure

| Algorithm 1 Algorithm for updating weights and . |
|---|
| Input: time-dispersed data sets X, X_U, time-dispersion-free data sets Y |
| Output: and |
| 1: Randomly initialize parameters and |
| 2: epoch = 500, = 0.2, and = 1 |
| 3: for epoch steps do |
| 4: for all of the labeled data sampled do |
| 5: |
| 6: Calculate the Property Loss in Equation (1) |
| 7: Randomly sample the unlabeled data |
| 8: |
| 9: Calculate the Unlabeled Loss in Equation (2) |
| 10: Calculate the Loss in Equation (3) using property loss, unlabeled loss, and |
| 11: end for |
| 12: Update and in order to minimize Loss |
| 13: end for |
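The symbols in Algorithm 1 were lost in extraction, but its control flow survives. The NumPy sketch below mirrors that flow under loudly stated assumptions, and is not the authors' implementation. The two parameter sets are stood in for by two hypothetical linear maps: `A` (an inverse model, time-dispersed to dispersion-free) and `B` (a forward model). Because Equations (1) to (3) are not reproduced here, the property loss is assumed to be an MSE on labeled pairs and the unlabeled loss a reconstruction MSE through both maps. The listing gives the values 0.2 and 1 for two hyperparameters whose symbols are missing; assigning them to the loss weights `w_unlab` and `w_prop` is a guess. The network architectures of Section 2.2 are not modeled.

```python
import numpy as np


def train_semi_supervised(X, Y, X_U, epochs=500, w_prop=1.0, w_unlab=0.2,
                          lr=0.1, batch_size=16, seed=0):
    """Toy semi-supervised loop following the control flow of Algorithm 1.

    Hypothetical stand-ins (not the paper's networks or losses):
      inverse model G(x) = x @ A   maps time-dispersed -> dispersion-free
      forward model F(y) = y @ B   maps dispersion-free -> time-dispersed
    Returns both parameter sets and the per-epoch average loss.
    """
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    # Step 1: randomly initialize both parameter sets
    A = 0.1 * rng.standard_normal((d, d))
    B = 0.1 * rng.standard_normal((d, d))
    history = []
    n_batches = max(1, len(X) // batch_size)
    for _ in range(epochs):                                   # Step 3
        grad_A = np.zeros_like(A)
        grad_B = np.zeros_like(B)
        epoch_loss = 0.0
        # Step 4: visit every labeled sample once, in shuffled mini-batches
        for idx in np.array_split(rng.permutation(len(X)), n_batches):
            xb, yb = X[idx], Y[idx]
            # Steps 5-6: assumed property loss, MSE between G(x) and label y
            P = xb @ A - yb
            prop_loss = np.mean(P ** 2)
            # Step 7: randomly sample an unlabeled batch of the same size
            ub = X_U[rng.choice(len(X_U), size=len(idx), replace=True)]
            # Steps 8-9: assumed unlabeled loss, reconstruction MSE of F(G(x_u))
            Z = ub @ A
            R = Z @ B - ub
            unlab_loss = np.mean(R ** 2)
            # Step 10: weighted sum of the two losses (weights are assumptions)
            epoch_loss += w_prop * prop_loss + w_unlab * unlab_loss
            # Analytic gradients of that weighted sum, accumulated over batches
            grad_A += (w_prop * 2 * xb.T @ P / P.size
                       + w_unlab * 2 * ub.T @ (R @ B.T) / R.size)
            grad_B += w_unlab * 2 * Z.T @ R / R.size
        # Step 12: the listing places the update after the inner loop,
        # so both parameter sets move once per epoch
        A -= lr * grad_A / n_batches
        B -= lr * grad_B / n_batches
        history.append(epoch_loss / n_batches)
    return A, B, history


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    d = 8
    # Toy linear distortion standing in for time dispersion (hypothetical)
    D = 0.8 * np.eye(d) + 0.2 * np.eye(d, k=1)
    Y = rng.standard_normal((64, d))          # "dispersion-free" labels
    X = Y @ D                                  # paired "time-dispersed" inputs
    X_U = rng.standard_normal((200, d)) @ D    # unpaired time-dispersed inputs
    A, B, hist = train_semi_supervised(X, Y, X_U)
    print(f"loss: first epoch {hist[0]:.3f}, last epoch {hist[-1]:.3f}")
```

Following the listing literally, the parameters are updated once per epoch, after the loop over labeled batches. Many training codes instead update after every mini-batch; in this sketch that would mean moving the two update lines inside the inner loop.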

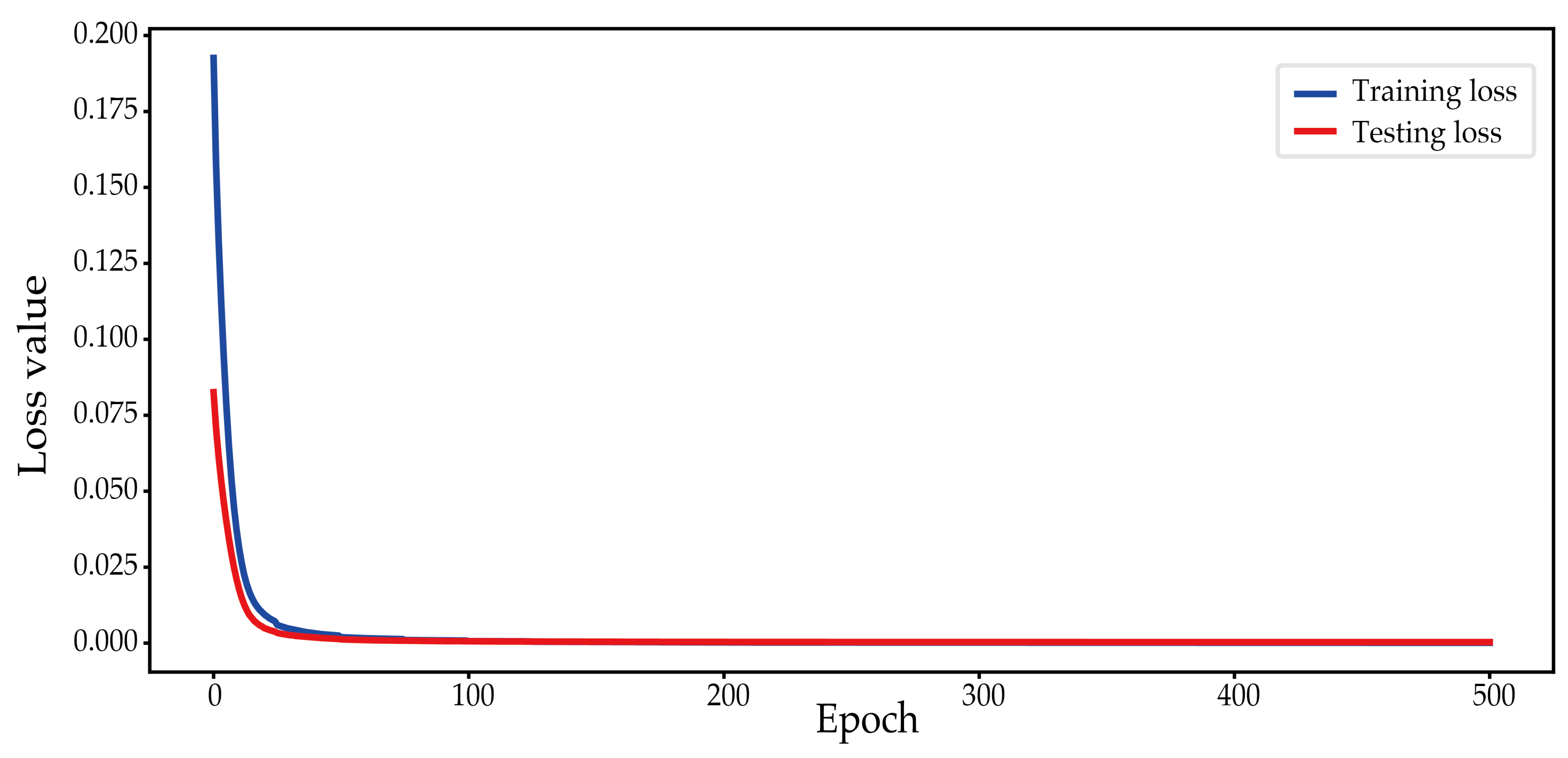

3. Results

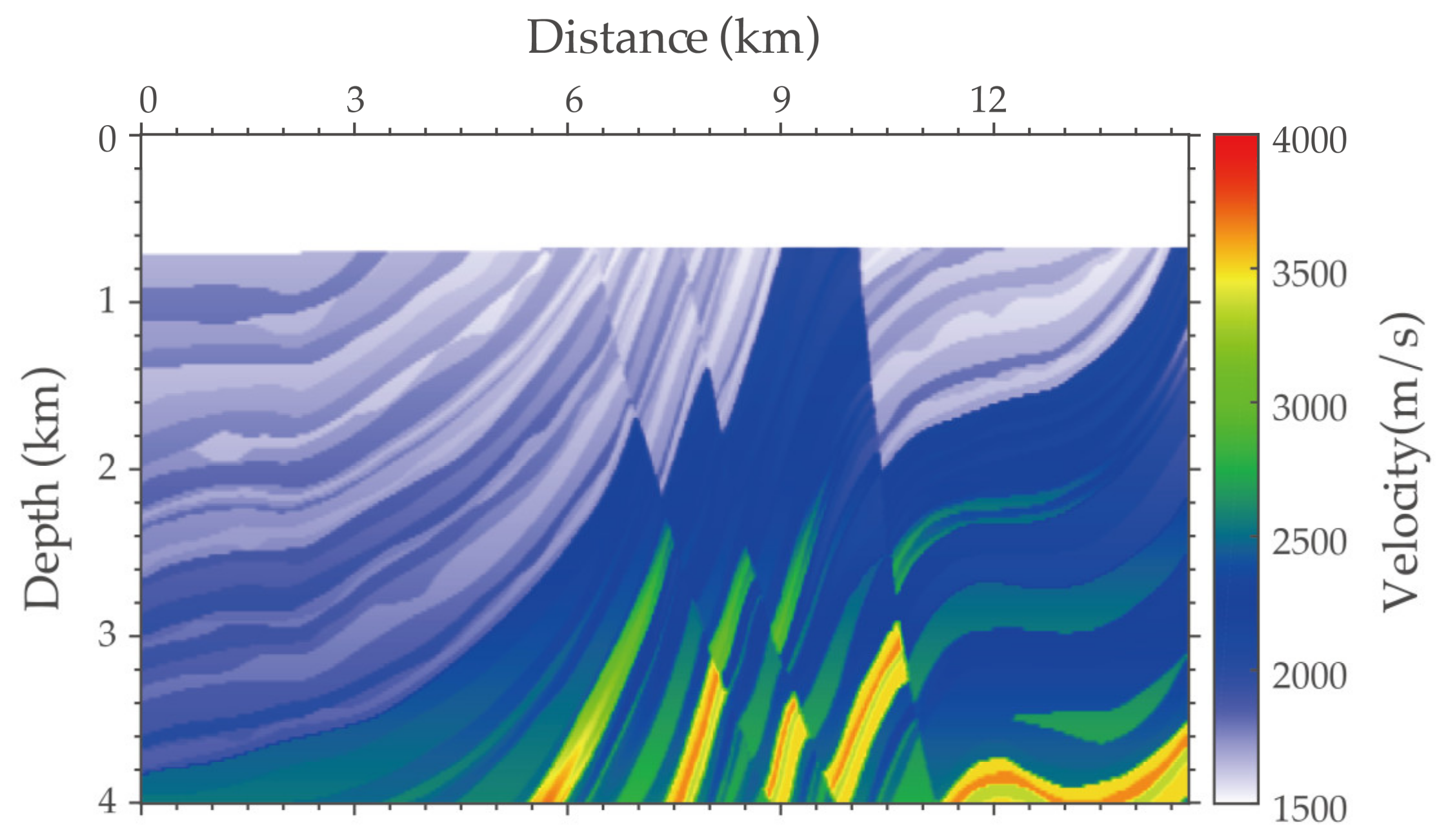

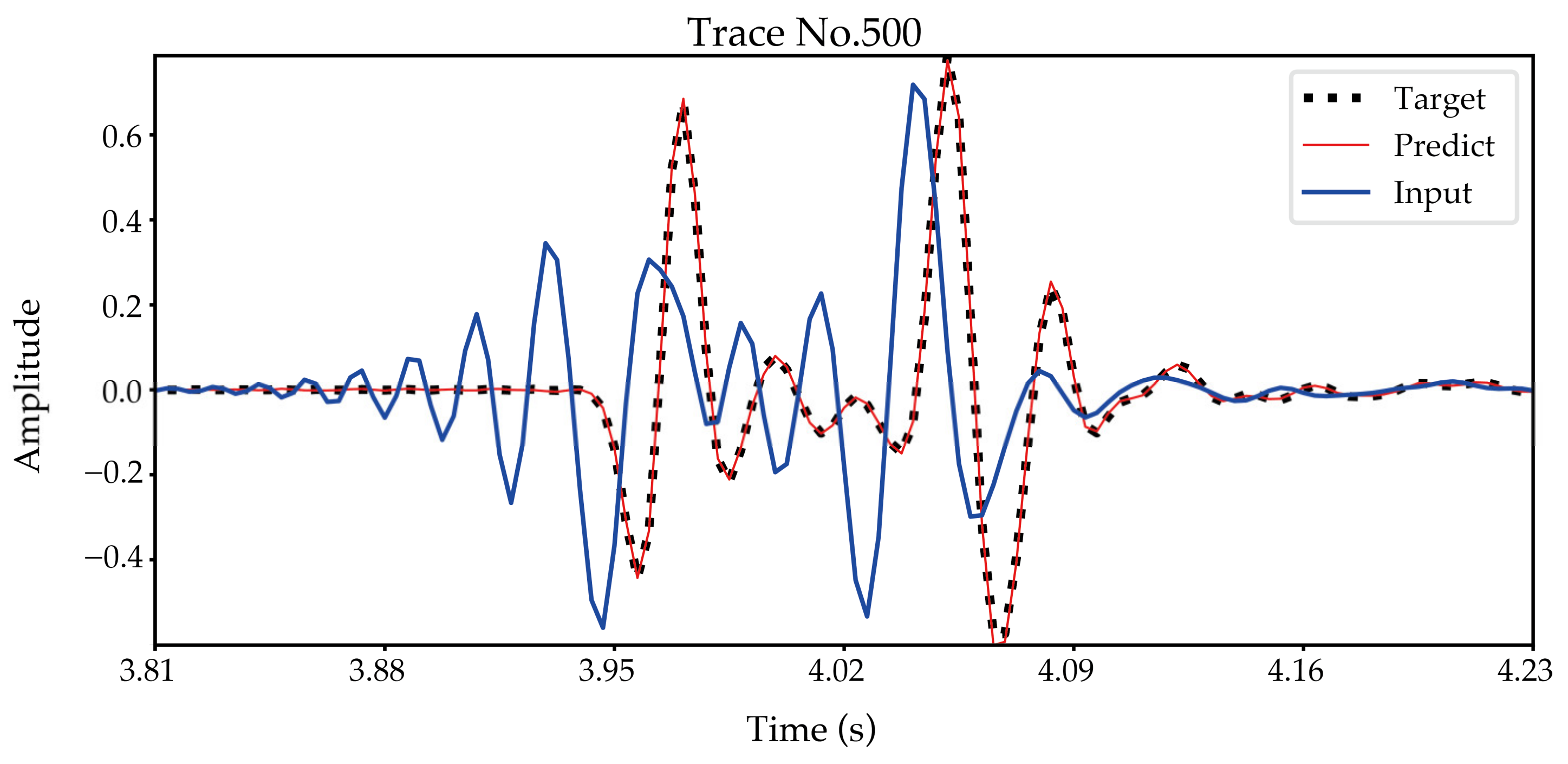

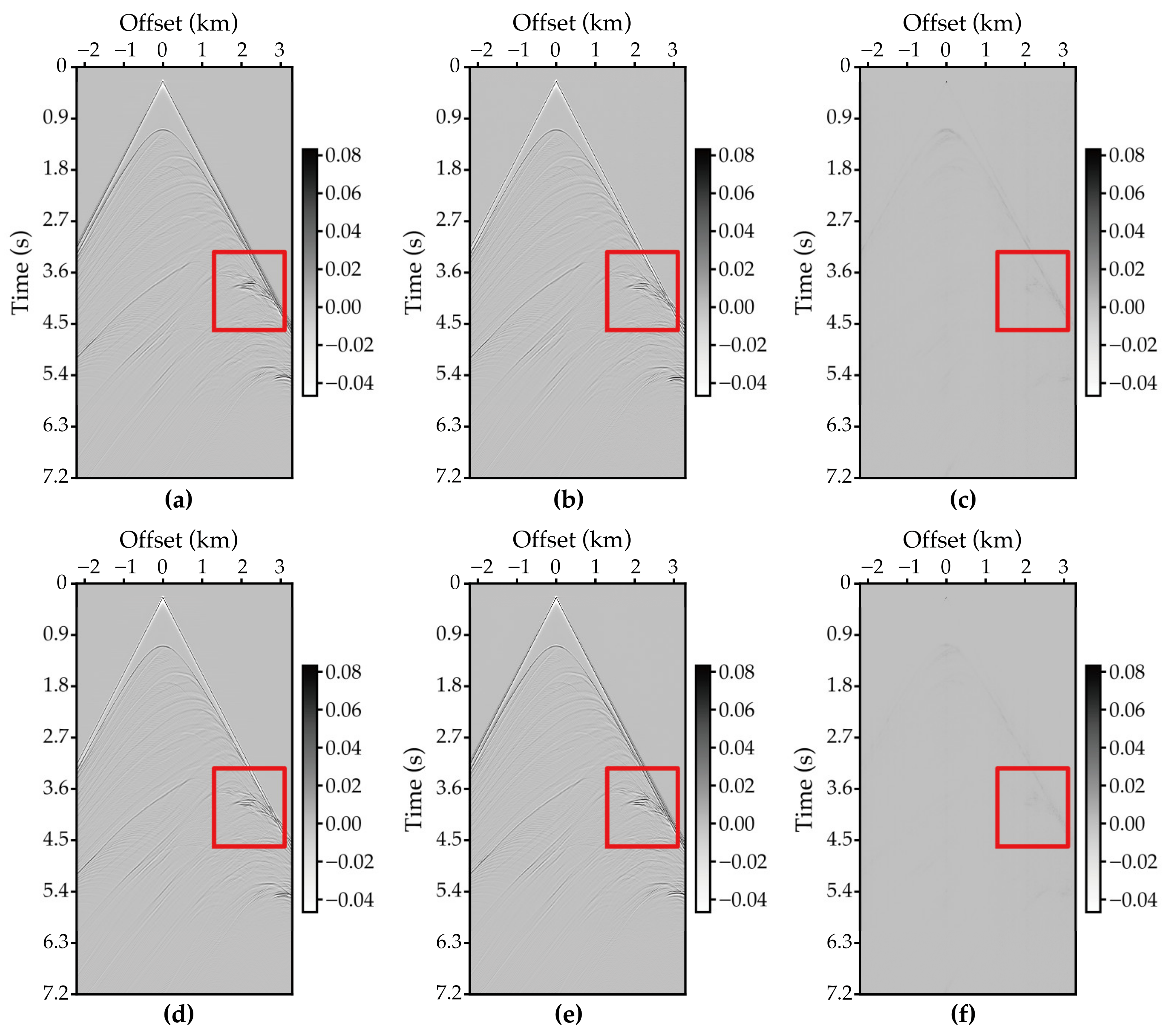

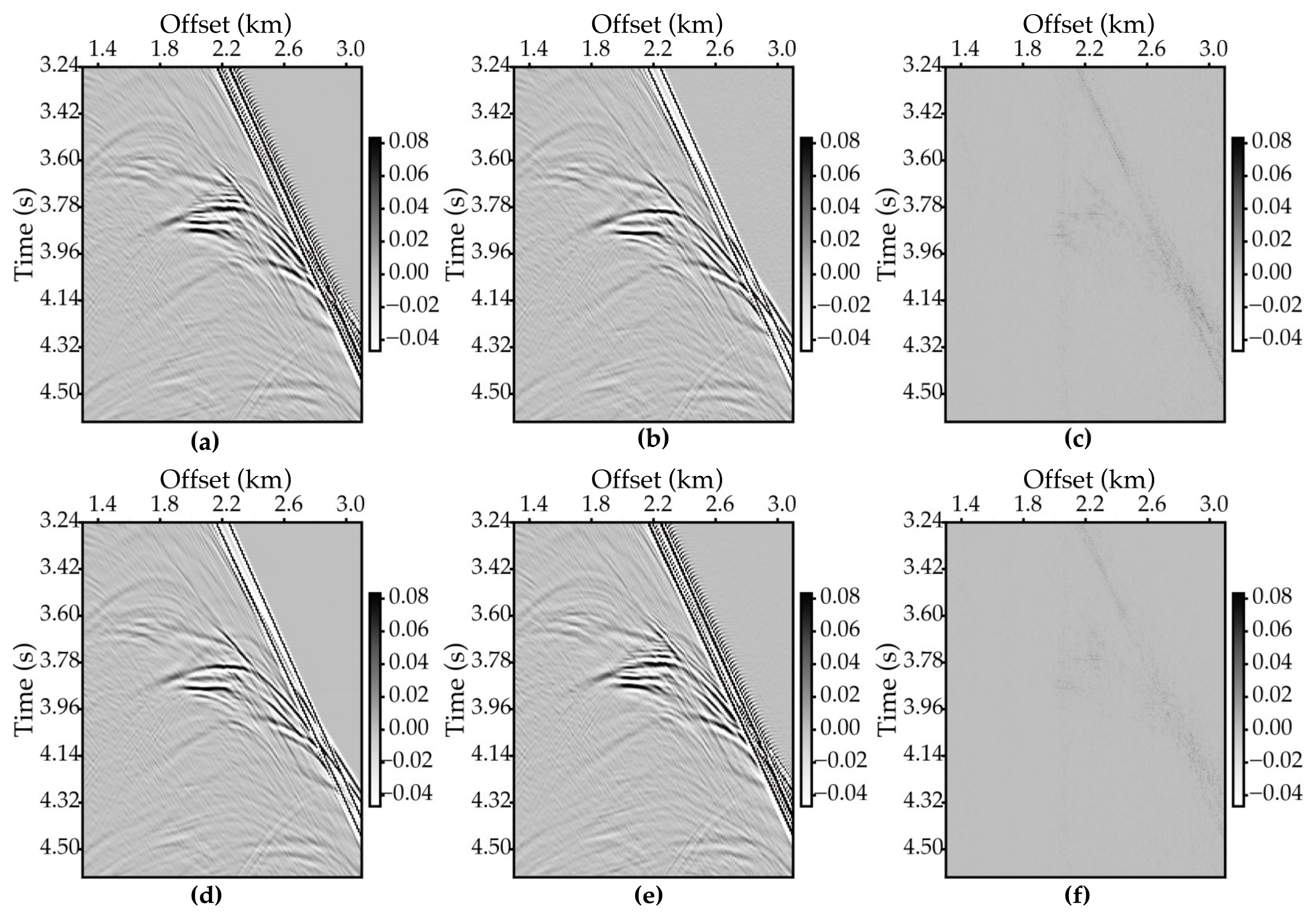

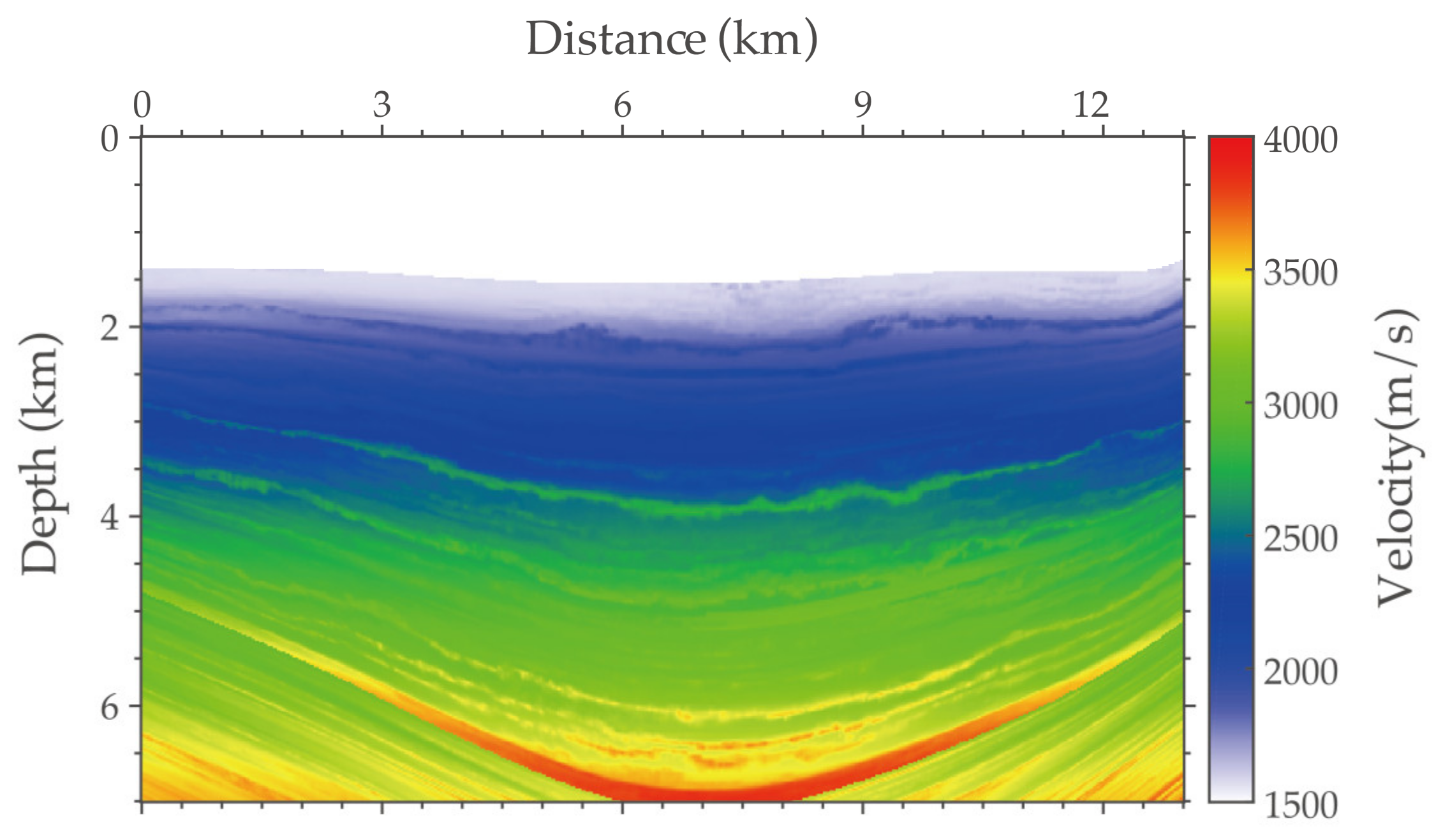

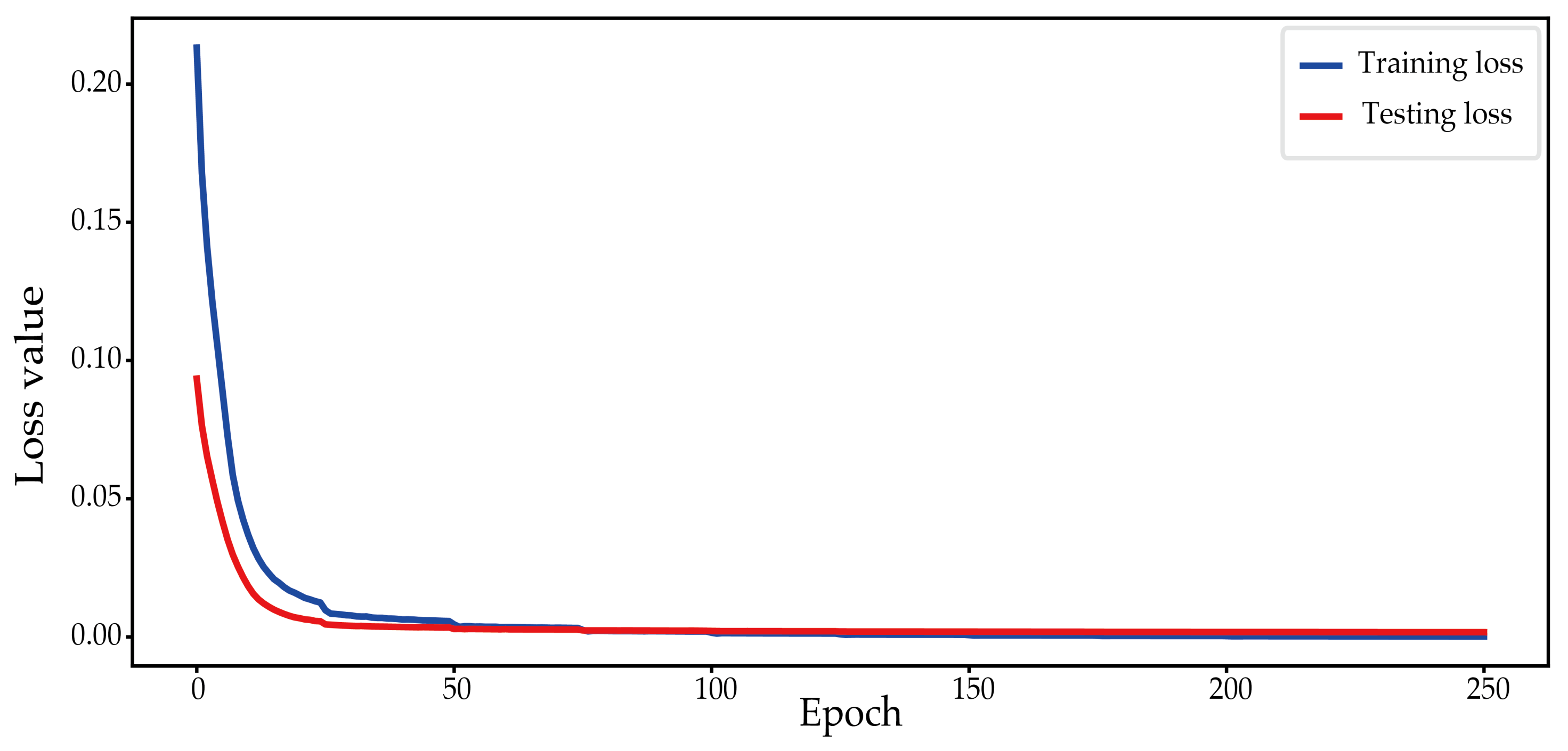

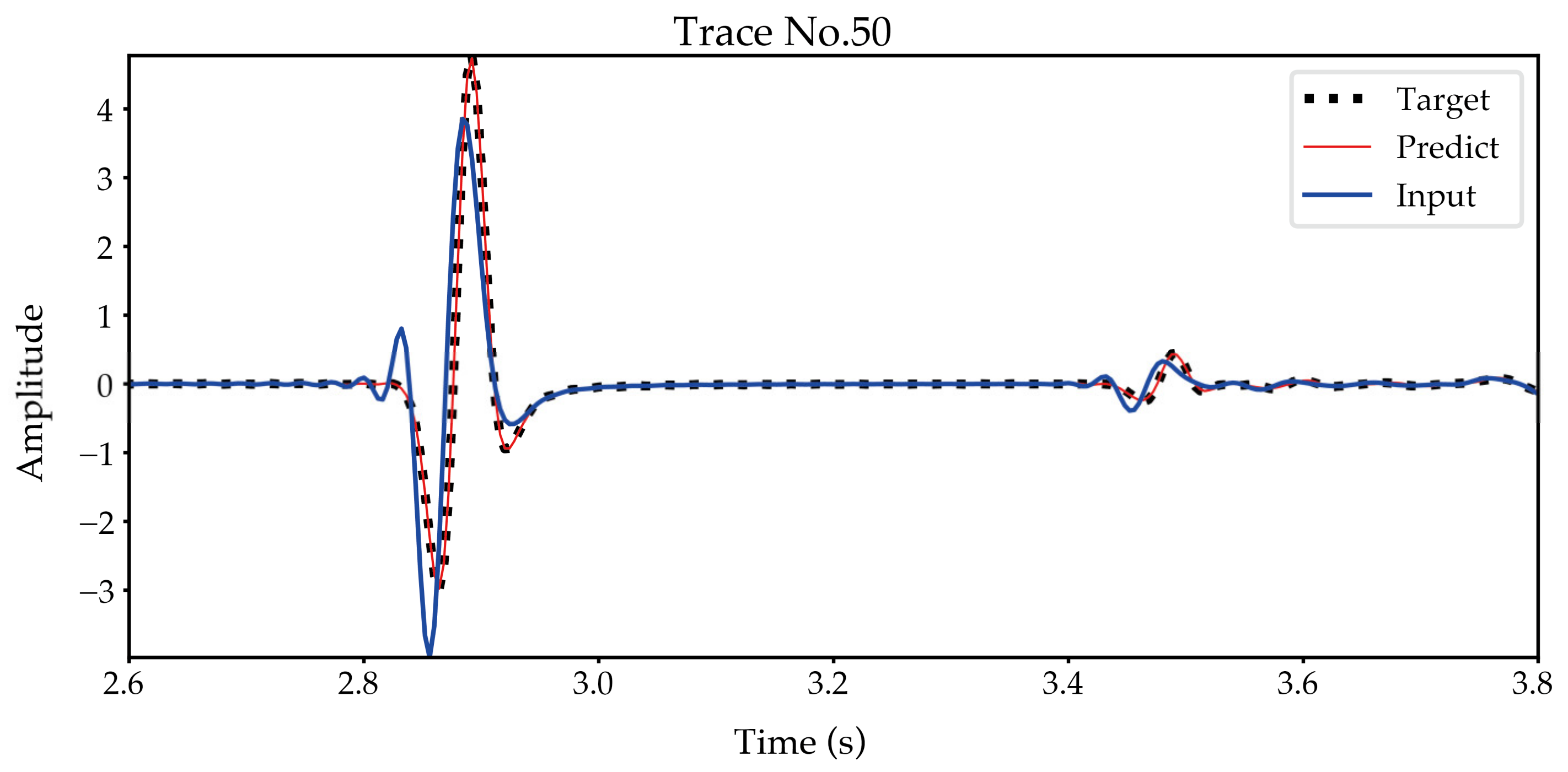

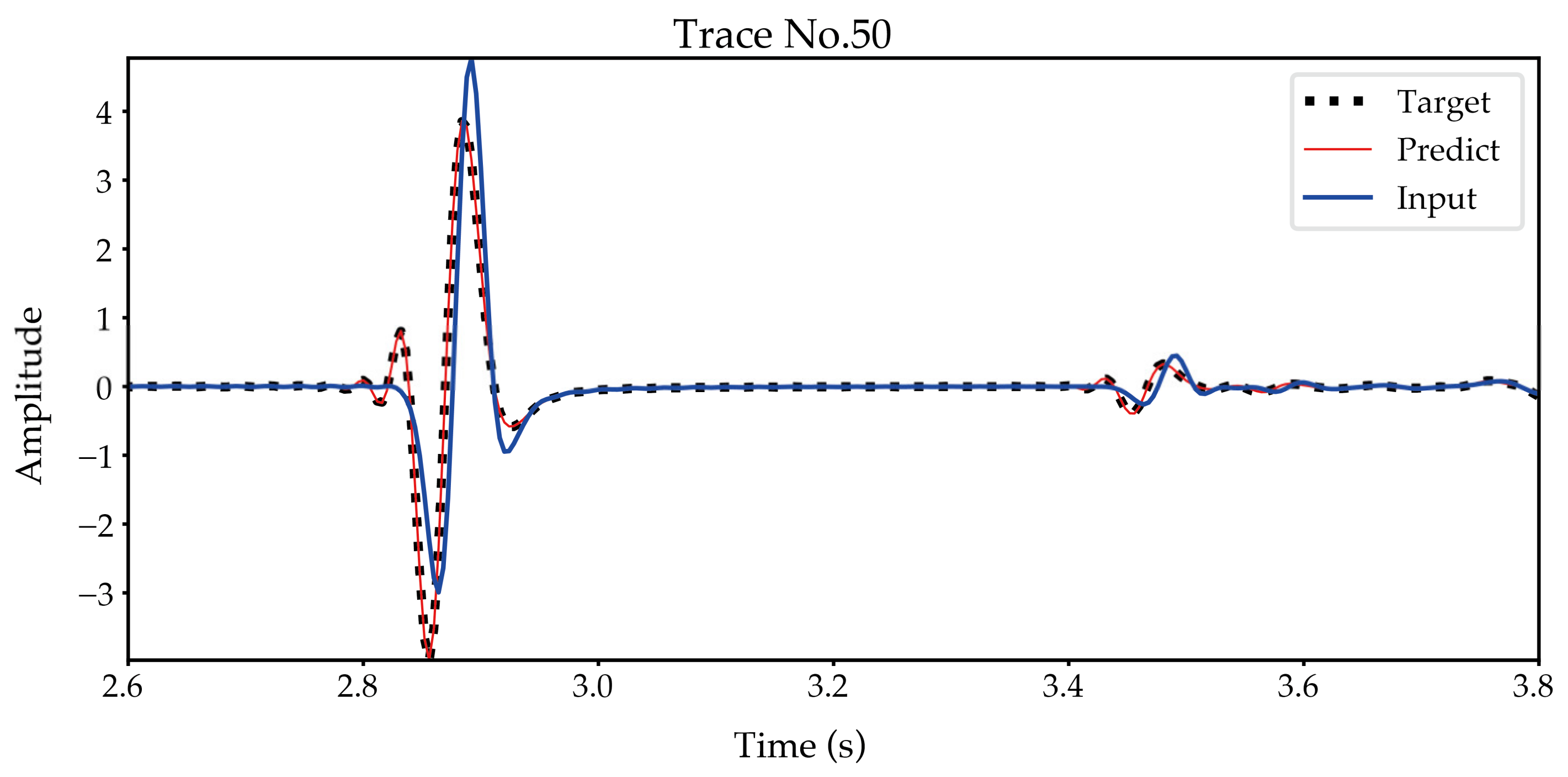

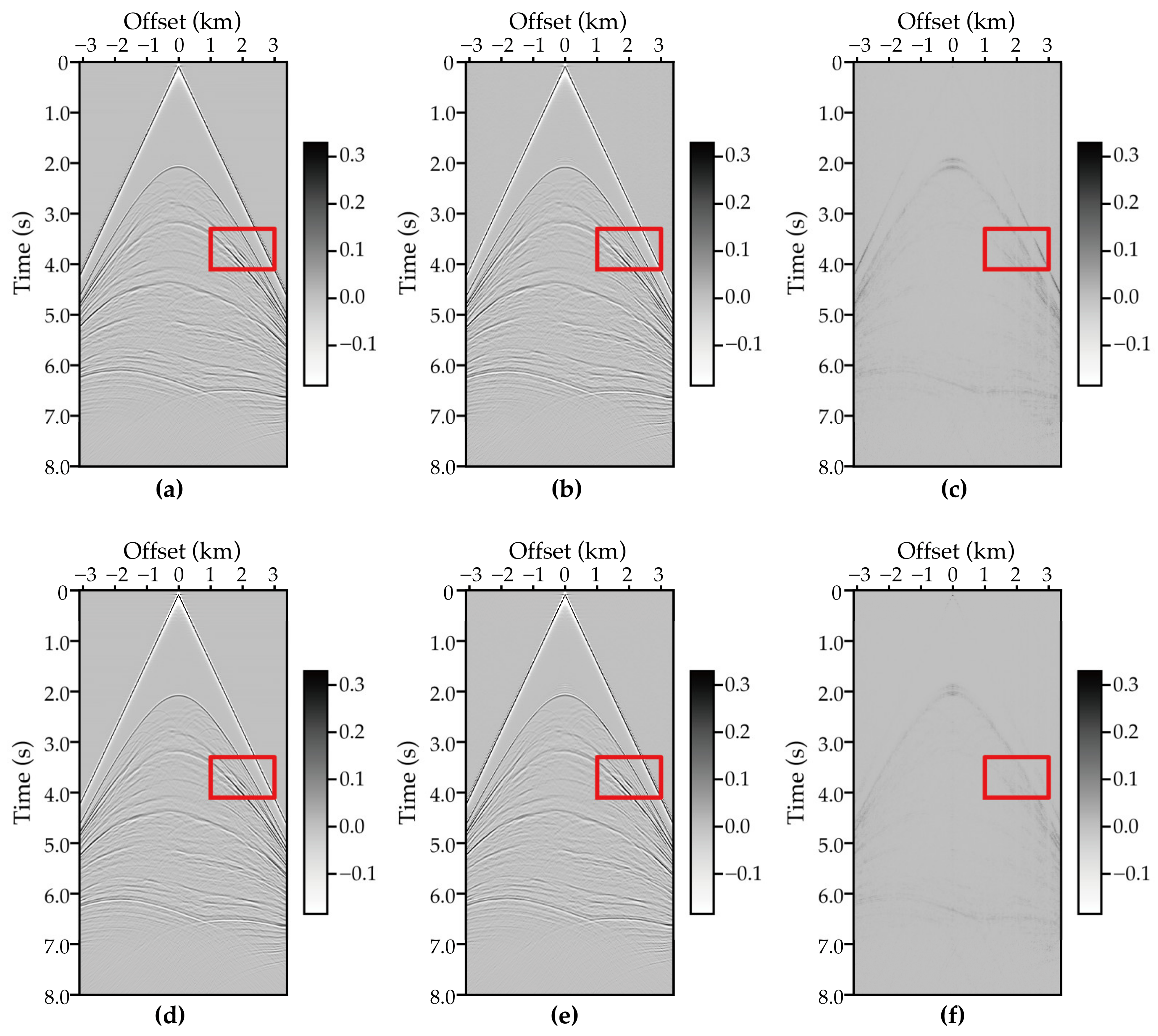

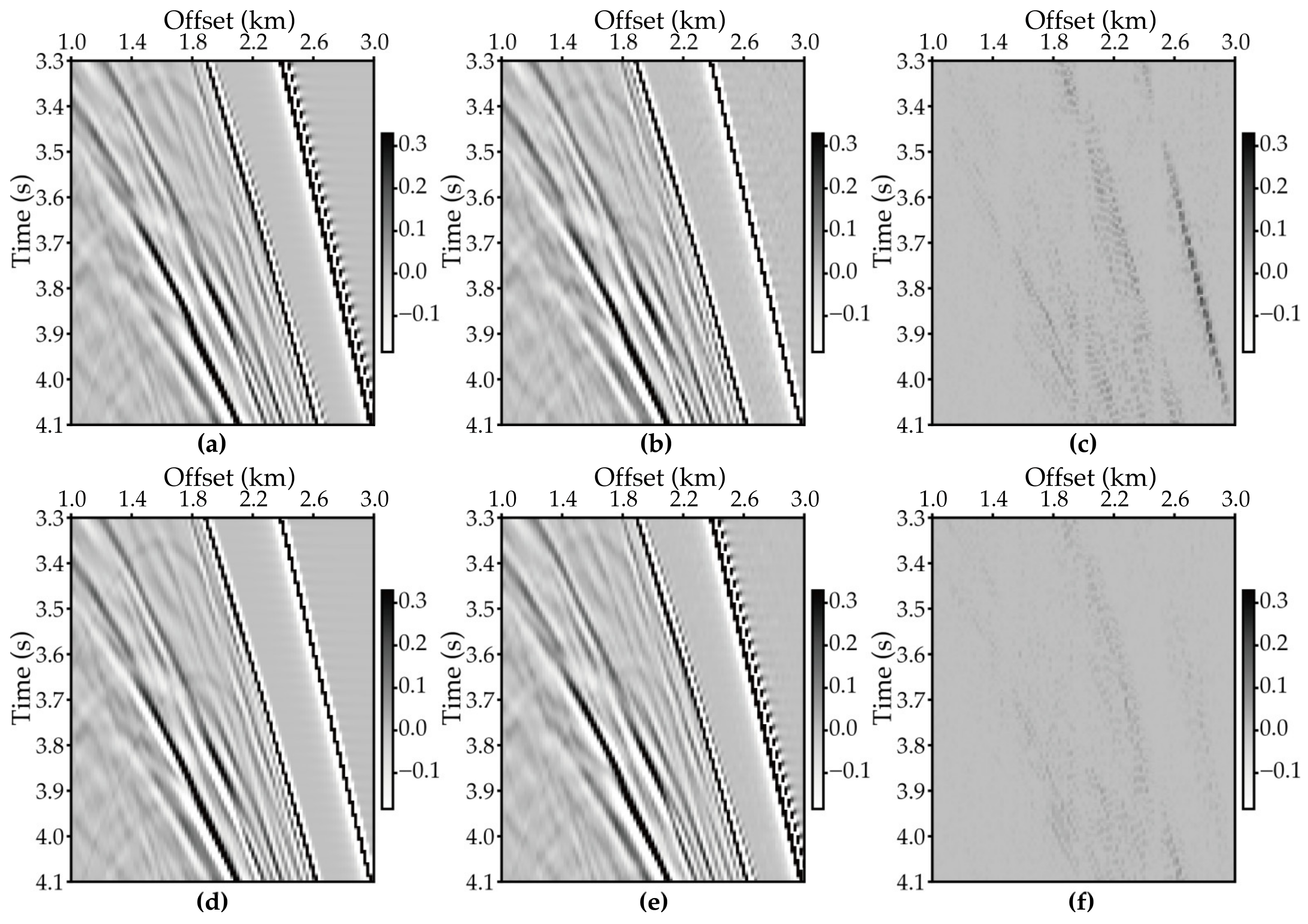

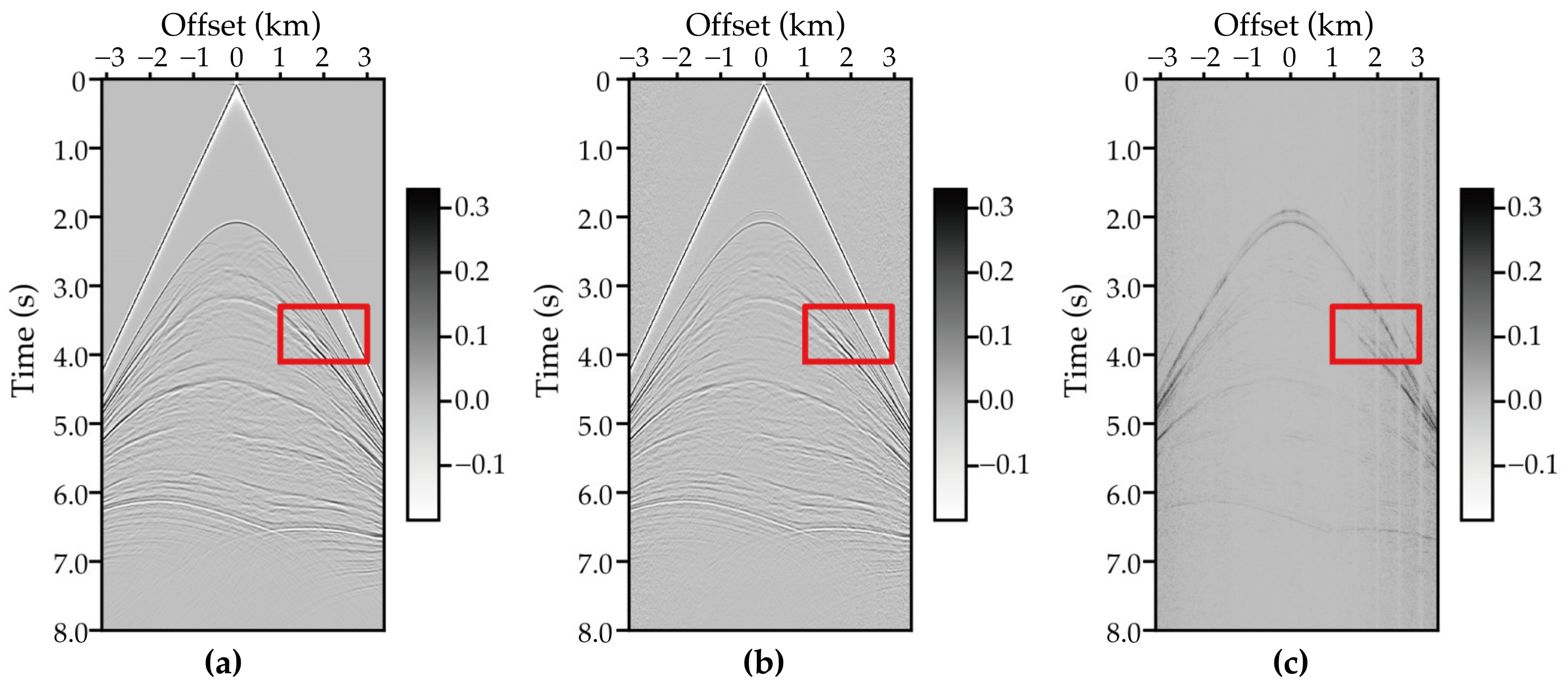

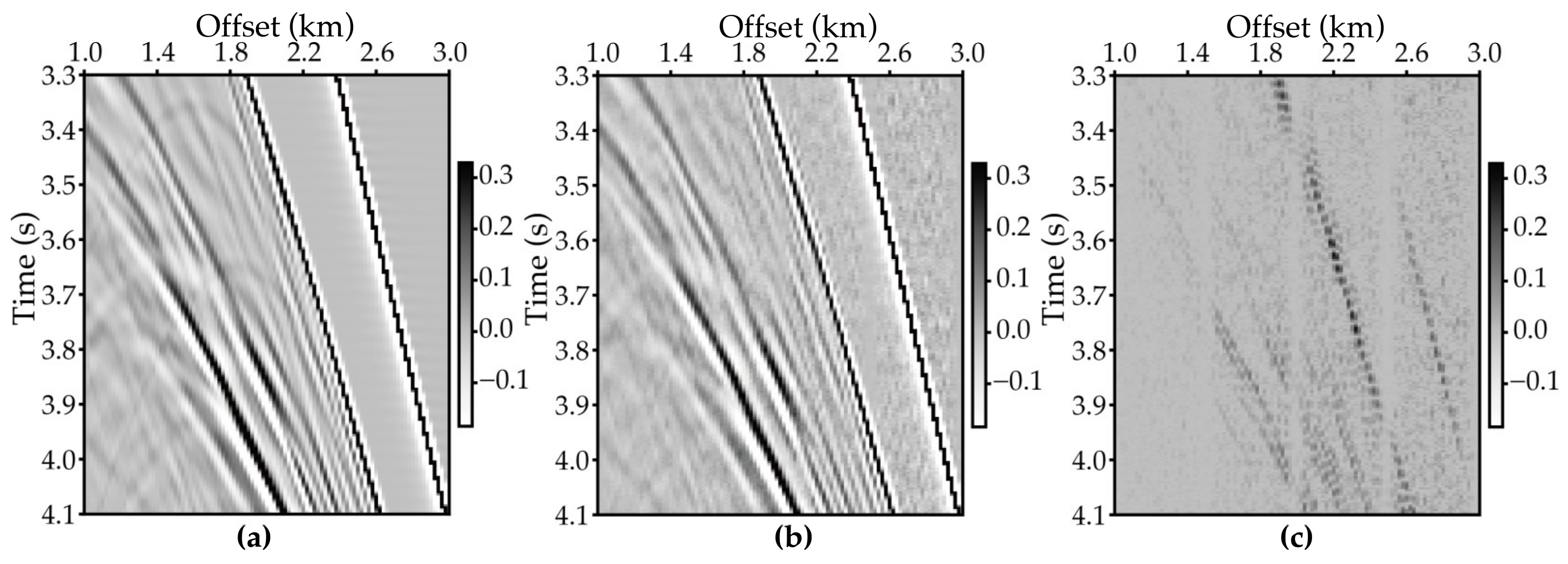

3.1. The Data Test with the Marmousi Model

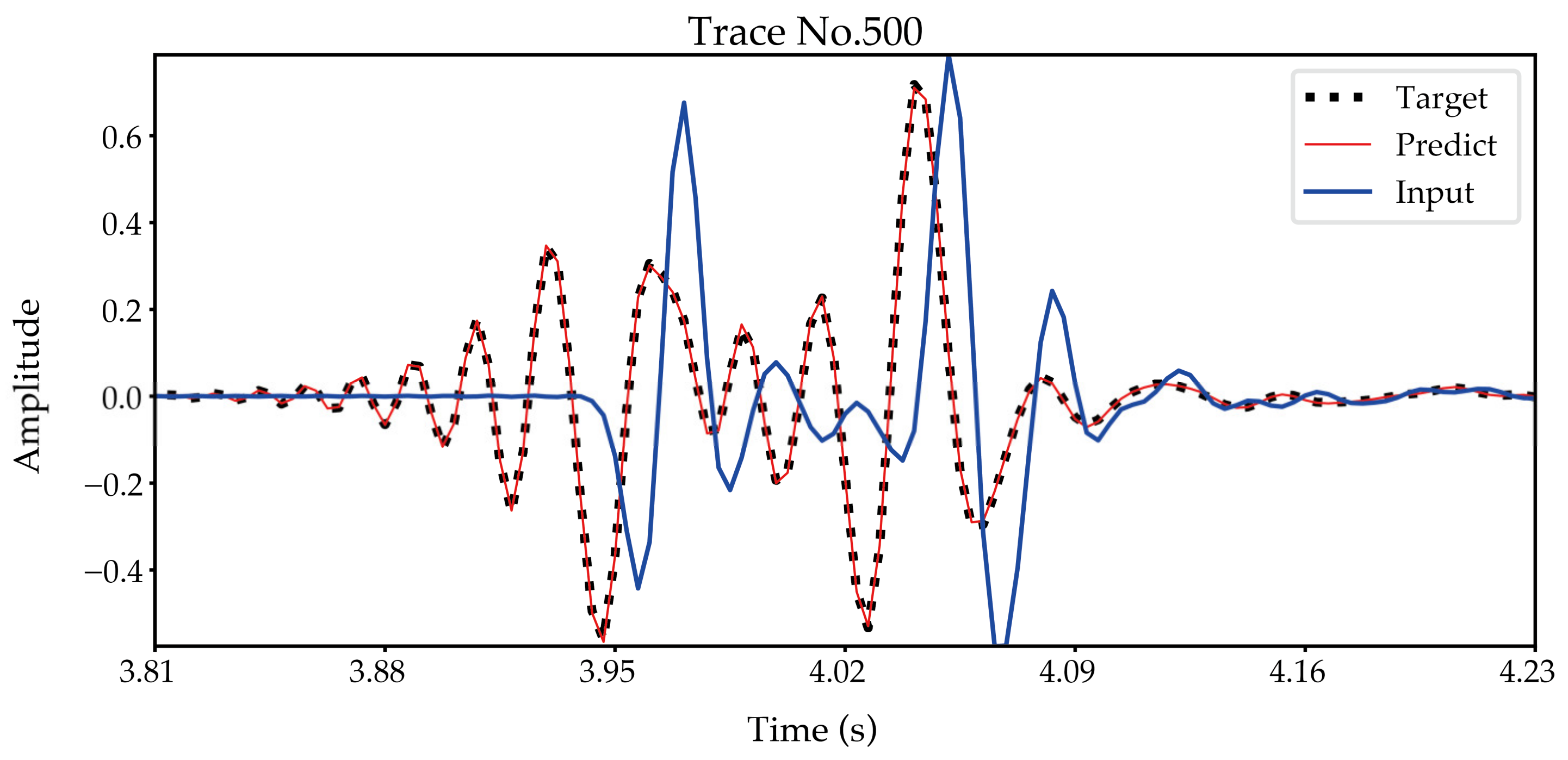

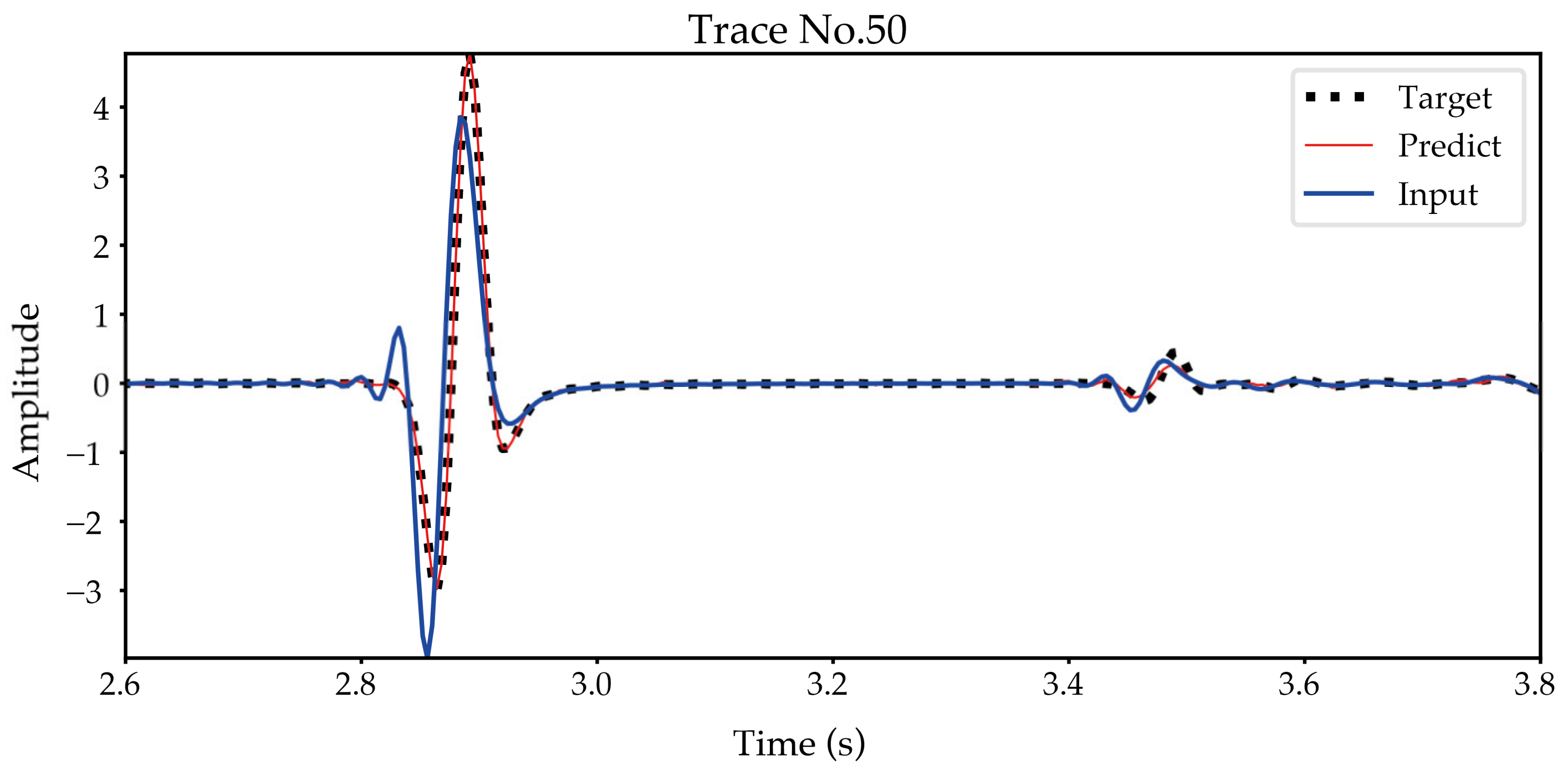

3.2. The Data Test with the SEAM Model

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Metric | Training | Validation |
|---|---|---|
| PCC | 0.9999 | 0.9997 |
| r² | 0.9999 | 0.9995 |
| L | 7.19 × 10⁻⁵ | 2.67 × 10⁻⁴ |
| PSNR (dB) | 91.2205 | 86.7756 |
| SSIM | 1.0000 | 1.0000 |

| Metric | Training | Validation |
|---|---|---|
| PCC | 1.0000 | 0.9993 |
| r² | 1.0000 | 0.9984 |
| L | 1.70 × 10⁻⁵ | 8.09 × 10⁻⁴ |
| PSNR (dB) | 94.6501 | 79.9299 |
| SSIM | 1.0000 | 1.0000 |
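The tables report PCC, r², PSNR, and SSIM without giving formulas. For readers who want comparable scores on their own wavefield snapshots, here is a minimal NumPy sketch of the standard definitions; the helper names are illustrative. Assumptions: `data_range` defaults to the reference's peak-to-peak amplitude, and `global_ssim` is a single-window simplification of SSIM (the usual form averages over local windows) using the customary constants K1 = 0.01 and K2 = 0.03. The paper's exact conventions are not stated here, so these functions are not expected to reproduce the tabulated values.

```python
import numpy as np


def pcc(pred, ref):
    """Pearson correlation coefficient between the flattened arrays."""
    return float(np.corrcoef(pred.ravel(), ref.ravel())[0, 1])


def r2(pred, ref):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    ss_res = np.sum((ref - pred) ** 2)
    ss_tot = np.sum((ref - ref.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


def psnr(pred, ref, data_range=None):
    """Peak signal-to-noise ratio in dB (assumed peak: reference peak-to-peak span)."""
    if data_range is None:
        data_range = ref.max() - ref.min()
    mse = np.mean((ref - pred) ** 2)
    if mse == 0:
        return float("inf")  # identical arrays
    return float(10.0 * np.log10(data_range ** 2 / mse))


def global_ssim(pred, ref, data_range=None):
    """Single-window SSIM over the whole array (simplification of windowed SSIM)."""
    if data_range is None:
        data_range = ref.max() - ref.min()
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = pred.mean(), ref.mean()
    vx, vy = pred.var(), ref.var()
    cov = np.mean((pred - mx) * (ref - my))
    return float(((2 * mx * my + c1) * (2 * cov + c2))
                 / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))
```

PSNR depends strongly on the chosen peak value, which is one reason the absolute PSNR figures in the tables should only be compared with results computed under the same convention.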

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Han, Y.; Wu, B.; Yao, G.; Ma, X.; Wu, D. Eliminate Time Dispersion of Seismic Wavefield Simulation with Semi-Supervised Deep Learning. Energies 2022, 15, 7701. https://doi.org/10.3390/en15207701