Performance Prediction of Induction Motor Due to Rotor Slot Shape Change Using Convolution Neural Network

Abstract

1. Introduction

2. Convolution Neural Network (CNN)
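A CNN stacks convolution, nonlinear activation (typically ReLU) and pooling layers. The minimal pure-Python sketch below shows one pass of each on a small 2D array, for instance a rasterized rotor-slot image. It is an illustration only: the image-input framing, the function names and the kernel values are assumptions, not the network architecture used in this study.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation, which deep-learning libraries call convolution."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(ih - kh + 1):
        row = []
        for j in range(iw - kw + 1):
            acc = 0.0
            # Slide the kernel over the image and sum the element-wise products
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def relu(x: Matrix) -> Matrix:
    """Rectified linear unit applied element-wise: max(0, v)."""
    return [[max(0.0, v) for v in row] for row in x]


def max_pool2x2(x: Matrix) -> Matrix:
    """Non-overlapping 2x2 max pooling; a trailing odd row/column is dropped."""
    return [
        [max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1])
         for j in range(0, len(x[0]) - 1, 2)]
        for i in range(0, len(x) - 1, 2)
    ]


# Hypothetical 4x4 binary "slot" image and a kernel that responds to 0 -> 1 edges
slot = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
edge = [[-1, 1], [-1, 1]]

feature = conv2d_valid(slot, edge)
print(feature)                     # [[2.0, 0.0, -2.0], [2.0, 0.0, -2.0], [1.0, 0.0, -1.0]]
print(max_pool2x2(relu(feature)))  # [[2.0]]
```

In a trained CNN the kernel values are learned by backpropagation rather than chosen by hand, and many kernels run in parallel to produce several feature maps per layer.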

3. Induction Motor Performance Prediction Procedure and Results

- Step 1: Collect induction motor analysis learning data;

- Step 2: Construct and train a deep learning model;

- Step 3: Validate the prediction accuracy of performance variables according to shape change;

- Step 4: Validate the prediction accuracy of the performance parameters for the optimized shape.

3.1. Collect Induction Motor Analysis Learning Data

3.2. Construct and Train a Deep Learning Model

3.3. Validate the Prediction Accuracy of Performance Variables According to Shape Change

3.4. Validate the Prediction Accuracy of the Performance Parameters for the Optimized Shape

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Category | Parameter | Value |
|---|---|---|
| General data | Given output power | 2.2 kW |
| | Rated voltage | 380 V |
| | Number of poles | 4 |
| | Frequency | 60 Hz |
| | Length of shaft | 120 mm |
| Winding | Turns per coil | 16 |
| | Winding layers | 2 |
| | Winding connection | Star connection |
| | Filling factor | 41.25% |
| Stator | Number of slots | 36 |
| | Outer diameter | 180 mm |
| | Inner diameter | 110 mm |
| | Material | 50PN470 |
| Rotor | Number of slots | 28 |
| | Air gap | 0.35 mm |
| | Inner diameter | 32 mm |
| | Material | 50PN470 |
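A few standard machine quantities follow directly from the specification table. The sketch below computes them in Python; it assumes a three-phase machine (the table gives a star-connected winding but does not state the phase count), and the constant and function names are illustrative.

```python
import math

# Values taken from the specification table
OUTPUT_POWER_W = 2200.0
RATED_LINE_VOLTAGE_V = 380.0
POLES = 4
FREQUENCY_HZ = 60.0
STATOR_SLOTS = 36
PHASES = 3  # assumption: three-phase, star-connected winding


def synchronous_speed_rpm(frequency_hz: float, poles: int) -> float:
    """n_s = 120 f / p."""
    return 120.0 * frequency_hz / poles


def phase_voltage_star(line_voltage: float) -> float:
    """In a star (wye) connection, V_phase = V_line / sqrt(3)."""
    return line_voltage / math.sqrt(3.0)


def slots_per_pole_per_phase(slots: int, poles: int, phases: int) -> float:
    """q = Q_s / (p * m)."""
    return slots / (poles * phases)


def torque_at_speed(power_w: float, speed_rpm: float) -> float:
    """T = P / omega with omega = 2*pi*n/60."""
    return power_w / (2.0 * math.pi * speed_rpm / 60.0)


n_s = synchronous_speed_rpm(FREQUENCY_HZ, POLES)
print(f"Synchronous speed: {n_s:.0f} rpm")                                                   # 1800 rpm
print(f"Phase voltage: {phase_voltage_star(RATED_LINE_VOLTAGE_V):.1f} V")                    # 219.4 V
print(f"Slots per pole per phase: {slots_per_pole_per_phase(STATOR_SLOTS, POLES, PHASES):.0f}")  # 3
print(f"Torque at synchronous speed: {torque_at_speed(OUTPUT_POWER_W, n_s):.2f} Nm")         # 11.67 Nm
```

Because an induction motor always runs with some slip below synchronous speed, the last figure is a lower bound on the torque needed to deliver 2.2 kW.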

| Type 1 | Type 2 | Type 3 |
|---|---|---|
| Type 1-1 (1500) | Type 2-1 (500) | Type 3-1 (500) |
| Type 1-2 (1500) | Type 2-2 (500) | Type 3-2 (500) |
| | Type 2-3 (500) | Type 3-3 (500) |
| | | Type 3-4 (500) |
| | | Type 3-5 (500) |
| | | Type 3-6 (500) |

Values in parentheses are the number of data points for each sub-type.

| Case | Data Type | Number of Training Data Points |
|---|---|---|
| Case 1 | Type 1 | 3000 |
| Case 2 | Type 1, Type 2 | 4500 |
| Case 3 | Type 1, Type 2, Type 3 | 7500 |
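The sub-type counts and the case totals are consistent: each training case pools every sub-type of the shape types it includes (3000 = 2 × 1500, 4500 = 3000 + 3 × 500, 7500 = 4500 + 6 × 500). A short Python check of that arithmetic, where the dictionary layout is just an illustrative encoding of the two tables:

```python
# Number of analysis samples per shape sub-type, as listed in the data-type table
TYPE_COUNTS = {
    "Type 1": {"Type 1-1": 1500, "Type 1-2": 1500},
    "Type 2": {f"Type 2-{k}": 500 for k in range(1, 4)},
    "Type 3": {f"Type 3-{k}": 500 for k in range(1, 7)},
}

# Each successive training case adds one more shape type to the pool
CASES = {
    "Case 1": ["Type 1"],
    "Case 2": ["Type 1", "Type 2"],
    "Case 3": ["Type 1", "Type 2", "Type 3"],
}


def samples_in_case(case: str) -> int:
    """Total training samples for a case: sum over its types and their sub-types."""
    return sum(sum(TYPE_COUNTS[t].values()) for t in CASES[case])


for case in CASES:
    print(case, samples_in_case(case))  # Case 1 3000, Case 2 4500, Case 3 7500
```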

| | | |
|---|---|---|
| Test data 1 (50) | Test data 2 (50) | Test data 3 (50) |
| Test data 4 (50) | Test data 5 (50) | Test data 6 (50) |
| Test data 7 (50) | Test data 8 (50) | Test data 9 (50) |

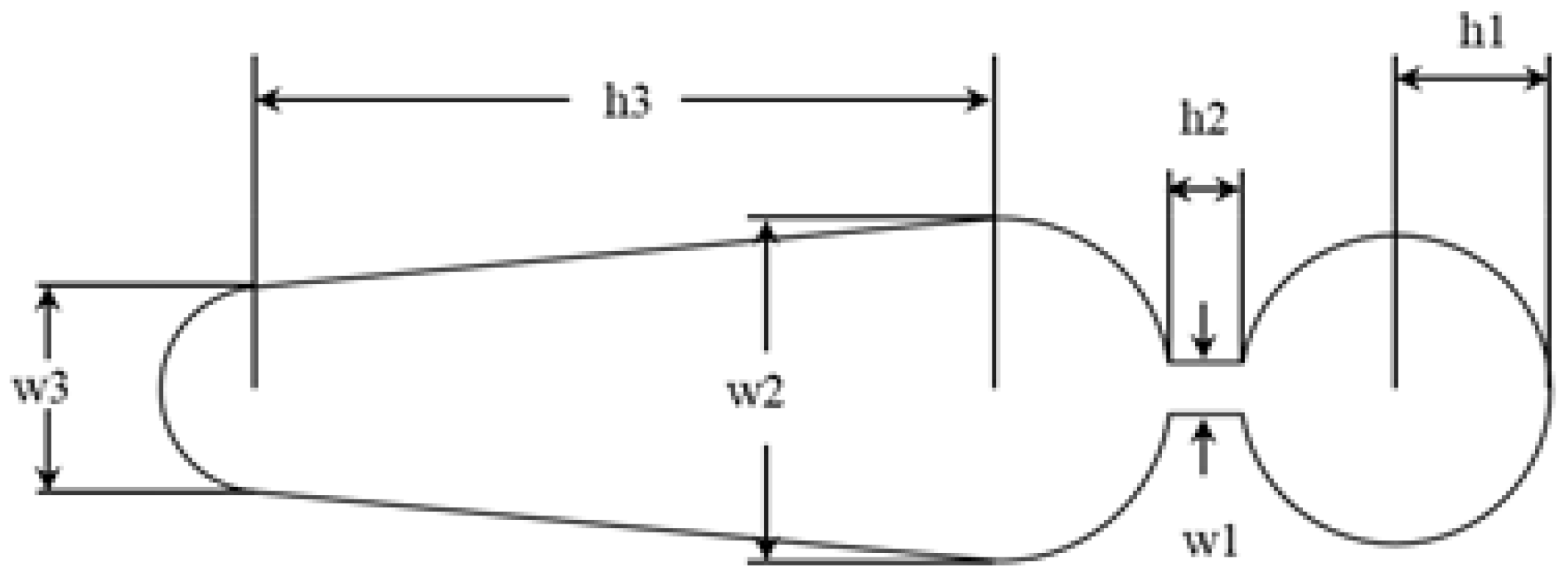

| Objective Function | Design Variables (mm) |
|---|---|
| Efficiency | 1.5 ≤ h1 ≤ 3.0 |
| | 1.4 ≤ h2 ≤ 7.0 |
| | 1.0 ≤ h3 ≤ 18.0 |
| | 0.8 ≤ w1 ≤ 1.0 |
| | 2.0 ≤ w2 ≤ 3.0 |
| | 0.5 ≤ w3 ≤ 2.5 |
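The tables do not describe how candidate shapes are generated inside these bounds. As one hypothetical scheme, for example to feed candidate designs to a trained surrogate during optimization, the sketch below samples each variable uniformly within its box bound. Uniform sampling and the helper names are assumptions, and the sketch ignores any geometric coupling between variables (such as the slot having to fit inside the rotor).

```python
import random

# Lower/upper bounds (mm) of the six rotor-slot design variables, from the table
BOUNDS = {
    "h1": (1.5, 3.0),
    "h2": (1.4, 7.0),
    "h3": (1.0, 18.0),
    "w1": (0.8, 1.0),
    "w2": (2.0, 3.0),
    "w3": (0.5, 2.5),
}


def sample_design(rng: random.Random) -> dict:
    """Draw one candidate shape uniformly within the box bounds (hypothetical scheme)."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in BOUNDS.items()}


def in_bounds(design: dict) -> bool:
    """True if every design variable lies inside its [lower, upper] range."""
    return all(lo <= design[name] <= hi for name, (lo, hi) in BOUNDS.items())


rng = random.Random(42)  # fixed seed so the candidates are reproducible
candidates = [sample_design(rng) for _ in range(5)]
for c in candidates:
    print({k: round(v, 3) for k, v in c.items()})
```

A space-filling design such as Latin hypercube sampling would cover the six-dimensional box more evenly for the same number of samples; plain uniform sampling is used here only because it is the simplest correct choice.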

| Design Variables (mm) | Efficiency (%) |
|---|---|
| h1 = 2.997 | 89.62 |
| h2 = 1.881 | |
| h3 = 12.59 | |
| w1 = 0.973 | |
| w2 = 2.589 | |
| w3 = 1.319 | |
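A quick way to read the optimal design is to express each variable as a fraction of its allowed range. The sketch below does this in Python using the bounds from the design-variable table; the 2% margin used to flag a nearly active bound is an arbitrary illustrative threshold.

```python
# Bounds (mm) from the design-variable table and the optimal design reported above
BOUNDS = {"h1": (1.5, 3.0), "h2": (1.4, 7.0), "h3": (1.0, 18.0),
          "w1": (0.8, 1.0), "w2": (2.0, 3.0), "w3": (0.5, 2.5)}
OPTIMUM = {"h1": 2.997, "h2": 1.881, "h3": 12.59,
           "w1": 0.973, "w2": 2.589, "w3": 1.319}


def normalized_position(design: dict, bounds: dict) -> dict:
    """Map each variable to [0, 1]: 0 = lower bound, 1 = upper bound."""
    return {k: (design[k] - lo) / (hi - lo) for k, (lo, hi) in bounds.items()}


pos = normalized_position(OPTIMUM, BOUNDS)
for name, p in pos.items():
    # Flag variables within 2% of either end of their range (arbitrary threshold)
    note = "  <- close to a bound" if min(p, 1.0 - p) < 0.02 else ""
    print(f"{name}: {p:.3f}{note}")
```

Only h1 is flagged: at 2.997 mm it sits at about 99.8% of its range, so the 3.0 mm upper limit is essentially active, and relaxing that limit could move the optimum.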

| Performance Parameter | Optimal Shape | Case 1 | Case 2 | Case 3 |
|---|---|---|---|---|
| Efficiency (%) | 89.62 | 88.85 | 89.38 | 89.60 |
| Power factor (%) | 80.69 | 78.48 | 80.35 | 80.62 |
| Starting torque (Nm) | 12.66 | 12.72 | 12.57 | 12.60 |
| Average torque (Nm) | 12.87 | 13.00 | 12.89 | 12.81 |
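Taking the optimal-shape column as the reference, the relative error of each case's prediction can be computed directly from the table. This single-point comparison is a sketch for reading the table and is distinct from the test-set error metrics reported separately; the variable names are illustrative.

```python
# Optimal-shape performance and the predictions of each training case, from the table
PARAMS = ["Efficiency (%)", "Power factor (%)", "Starting torque (Nm)", "Average torque (Nm)"]
REFERENCE = [89.62, 80.69, 12.66, 12.87]
PREDICTED = {
    "Case 1": [88.85, 78.48, 12.72, 13.00],
    "Case 2": [89.38, 80.35, 12.57, 12.89],
    "Case 3": [89.60, 80.62, 12.60, 12.81],
}


def percent_error(pred: float, ref: float) -> float:
    """Absolute relative error in percent with respect to the reference value."""
    return abs(pred - ref) / abs(ref) * 100.0


errors = {
    case: {p: percent_error(v, r) for p, v, r in zip(PARAMS, values, REFERENCE)}
    for case, values in PREDICTED.items()
}

for case, errs in errors.items():
    print(case, ", ".join(f"{p}: {e:.2f}%" for p, e in errs.items()))
```

By this measure Case 3 is closest for efficiency and power factor, Case 2 is closest for average torque (0.02 Nm off versus 0.06 Nm for Case 3), and Cases 1 and 3 tie on starting torque (both 0.06 Nm off).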

| Performance Parameter | Metric | Case 1 | Case 2 | Case 3 |
|---|---|---|---|---|
| Efficiency | RMSE | 0.544 | 0.170 | 0.014 |
| | MAPE | 0.430 | 0.134 | 0.011 |
| | MAE | 0.385 | 0.120 | 0.010 |
| Power factor | RMSE | 1.563 | 0.064 | 0.049 |
| | MAPE | 1.369 | 0.355 | 0.043 |
| | MAE | 1.105 | 0.042 | 0.035 |
| Starting torque | RMSE | 0.042 | 0.064 | 0.042 |
| | MAPE | 0.237 | 0.355 | 0.237 |
| | MAE | 0.030 | 0.045 | 0.030 |
| Average torque | RMSE | 0.092 | 0.028 | 0.042 |
| | MAPE | 0.505 | 0.155 | 0.233 |
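The table does not state how the three error measures are defined or in which units they are reported. Assuming the standard definitions, with MAPE expressed in percent, they can be computed as below. A useful consistency check is that MAE ≤ RMSE always holds, and every RMSE/MAE pair in the table satisfies it. The example data are hypothetical.

```python
import math
from typing import Sequence


def _check(y_true: Sequence[float], y_pred: Sequence[float]) -> int:
    """Validate inputs and return the number of samples."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error: sqrt(mean((y - y_hat)^2))."""
    n = _check(y_true, y_pred)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error: mean(|y - y_hat|)."""
    n = _check(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error in percent; undefined if any true value is 0."""
    n = _check(y_true, y_pred)
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / n


# Hypothetical example: analysed vs predicted efficiency (%) for three test shapes
y_true = [90.0, 88.0, 89.0]
y_pred = [89.5, 88.4, 89.0]
print(f"RMSE={rmse(y_true, y_pred):.3f}  MAE={mae(y_true, y_pred):.3f}  MAPE={mape(y_true, y_pred):.3f}")
# RMSE=0.370  MAE=0.300  MAPE=0.337
```

RMSE weights large errors more heavily than MAE, so a gap between the two indicates a few outlying predictions; MAPE normalizes by the true value, which makes it comparable across parameters with different units.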


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Koh, D.-Y.; Jeon, S.-J.; Han, S.-Y. Performance Prediction of Induction Motor Due to Rotor Slot Shape Change Using Convolution Neural Network. Energies 2022, 15, 4129. https://doi.org/10.3390/en15114129


Chicago/Turabian style: Koh, Dong-Young, Sung-Jun Jeon, and Seog-Young Han. 2022. "Performance Prediction of Induction Motor Due to Rotor Slot Shape Change Using Convolution Neural Network." Energies 15, no. 11: 4129. https://doi.org/10.3390/en15114129
