1. Introduction

As a linear mathematical model, the state space model (SSM) is widely used in multivariable system analysis and controller design, especially where modern control theory is applied [1]. Furthermore, in model-predictive control, performance-seeking control, and health evaluation, using an SSM can greatly improve the real-time performance of the control system and simplify the optimization and estimation processes [2,3,4].

SSM also plays an important role in the design of aero-engine control systems. Therefore, it is of great significance to research its modeling method, especially the method that can accurately capture the individual aero-engine dynamics in real time.

Because an aero-engine is strongly nonlinear, its mechanism model (the component-level model, CLM) is very complex [5], and an analytical SSM cannot be derived from it. At present, there are two main modeling methods for the aero-engine SSM, namely, the fitting method and the partial derivative method [6,7,8]. Both are small-disturbance methods: the parameters of the SSM are obtained from the responses of the CLM to separate small disturbances of the SSM inputs (and of the states, for the partial derivative method). The fitting method follows the principle that the linear system output should be consistent with that of the nonlinear system under the same separate small-disturbance inputs, and its model parameters are determined by minimizing the errors between the SSM outputs and the CLM outputs. Therefore, optimization methods are widely used in the parameter identification process of the fitting method. For example, the SSM of certain turbofan engines is established by least-squares optimization in [9], and the model parameters are optimized by a genetic algorithm in [10]. The partial derivative method applies disturbances to the control variables and state variables of the CLM separately, and the partial derivatives in the SSM are approximated by the resulting output differences [11,12].

However, both methods have defects. The fitting method needs to collect the engine dynamic response data offline, and the partial derivative method needs to call the engine CLM repeatedly for the difference calculation. Neither can be realized in real time, and both depend on the CLM. The aero-engine CLM is a complex model composed of multiple component models, co-working equations, and an equation-solving process [13,14,15]. Because of the assumptions made in modeling, the accuracy of the CLM is difficult to ensure, and the CLM is mostly established from the component characteristics at the rated condition, which makes it hard to reflect the individual differences and performance degradation of an aero-engine. The fitting method and the partial derivative method based on rated CLMs cannot accurately characterize a specific engine. Although the data used in the fitting method can be obtained from engine tests, for multivariable control systems the test data are a set of responses to simultaneously varying input variables, whereas the data obtained from the CLM are several sets of responses to separately varying input variables. Consequently, the test data cannot clearly reflect the relationship between the inputs and the outputs, and a model built on the test data lacks reliability even if it fits the output curve at the selected test point. In addition, the aero-engine works in a wide flight envelope, and its working state spans from start to maximum reheat rating. The traditional methods can only be applied at a limited number of steady-state points. For other working points, the SSMs depend on interpolation or parameter scheduling methods, such as the TS fuzzy model [16,17] and the equilibrium manifold expansion (EME) model [18,19]. Compared with SSMs established directly at the corresponding points, models obtained by interpolation or similar methods are less accurate.

Pang et al. presented an online exact partial derivative calculation method to solve this problem. It provides a component-level derivative model of the engine, and the partial derivatives are calculated by numerical solution of that model [20,21]. This method can obtain more accurate SSMs over the whole envelope at any working state of the engine, and the SSM can adapt to engine degradation when an adaptive CLM is used. However, this modeling method is also based on the CLM, the calculation process is complex, and the model accuracy is still affected by the accuracy of the CLM.

With the development of artificial intelligence technology, data-driven methods have developed rapidly in recent years. In the aero-engine field, data-driven methods have been used in degradation prediction, mathematical model establishment, and so on [22,23]. Compared with traditional modeling methods that rely on CLMs, data-driven modeling avoids the complex component-level modeling process and removes the influence of CLM modeling accuracy on the SSM. Following the data-driven idea, some scholars have used an MGD neural network modeling method to establish an adaptive dynamic model of a turbofan engine [24,25]. The improved MGD neural network based on batch training is used to establish the onboard model of the turbofan engine, but the training process is time-consuming and must be carried out offline, which means the model cannot adapt to engine degradation. Data-driven modeling combined with intelligent algorithms is more efficient, but there is still little research on aero-engine state space modeling. Only in [23] is a linear parameter-varying (LPV) modeling method for a turboshaft engine proposed, using a specially constructed neural network.

This paper proposes an aero-engine modeling method based on the adaptive forgetting factor online sequential extreme learning machine (AFOS-ELM), which aims to establish the state space equation online in a data-driven way. The main contributions of this paper are as follows: (1) An online state space modeling method based on AFOS-ELM is proposed, which can be carried out in real time with data gathered online. The inputs and outputs of the neural network are determined according to the form of the SSM. Using the online training ability of the online sequential extreme learning machine (OS-ELM), the parameters of the network are updated in real time, and the analytical model of the AFOS-ELM is obtained. The SSM is then derived by applying the partial derivative method to this analytical model. (2) The inverse-free extreme learning machine (ELM) is used to initialize the neural network model offline. The number of hidden nodes and the initial weights of the ELM are determined by the inverse-free method to improve the efficiency and accuracy of the offline training. (3) The neural network is trained by the AFOS-ELM method, which can extract the characteristics of the current working state of the engine and improve the accuracy of the SSM. (4) Compared with the traditional linearization modeling methods, the proposed method can obtain the SSM from the aero-engine test data at each sampling time, which better reflects the individual characteristics of the engine.

The paper is organized as follows. Section 2 introduces the inverse-free ELM used in this paper. Section 3 introduces the AFOS-ELM method used in the modeling process. Section 4 introduces the neural network structure for state space modeling and the derivation of the SSM. Section 5 gives the simulation results of the modeling. Section 6 concludes this paper.

2. Inverse-Free ELM

Extreme learning machines are feedforward neural networks first proposed by Professor Huang of Nanyang Technological University in Singapore in 2006 [26]. Compared with traditional machine learning algorithms such as the backpropagation (BP) neural network, the ELM has a faster learning speed and similar generalization performance [27]. In a traditional neural network, the number of hidden nodes has to be determined by trial and error. The inverse-free ELM addresses this problem and has attracted considerable attention [28,29]. It adds hidden nodes iteratively, which saves trial time and improves computational efficiency.

In the ELM algorithm, the training set is given as $\{(x_i, t_i)\}_{i=1}^{N}$, where $x_i$ is the input and $t_i$ is the target output.

In an ELM with $l$ hidden nodes, the output corresponding to the $i$th input can be described as

$$o_i = \sum_{j=1}^{l} \beta_j f(w_j \cdot x_i + b_j), \quad i = 1, \ldots, N$$

where $w_j$ are the weights connecting the hidden layer node $j$ and the input layer nodes, $b_j$ is the bias of the hidden layer node $j$, $\beta_j$ are the weights connecting the hidden layer node $j$ and the output layer nodes, and $f(x)$ is the hidden layer activation function.

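The learning target of the neural network is to minimize the output error, so the cost function of the ELM can be described as

$$\min_{\beta} \lVert H\beta - T \rVert$$

where $H$ is the hidden layer output matrix, whose entry in row $i$ and column $j$ is the output of hidden node $j$ for the $i$th input, and $T$ is the matrix of target outputs.

The output weight $\beta$ can be obtained from the following formula

$$\beta = H^{\dagger} T \tag{4}$$

where $H^{\dagger}$ is the generalized inverse of $H$.

To avoid a singular matrix or overfitting, the Tikhonov regularization method is used, so Equation (4) becomes

$$\beta = (H^{\top} H + \lambda I)^{-1} H^{\top} T \tag{5}$$

where $\lambda$ is the regularization factor. Equation (4) can be regarded as a special form of Equation (5) where $\lambda = 0$.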
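The regularized least-squares solution for the output weights can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' implementation: the `tanh` activation, the uniform random initialization, the toy data, and the names `train_elm`, `predict_elm`, and `reg` are all assumptions made for the example.

```python
import numpy as np

def train_elm(X, T, n_hidden, reg=1e-3, seed=0):
    """Fit a single-hidden-layer ELM with Tikhonov-regularized output weights."""
    rng = np.random.default_rng(seed)
    # Input weights and biases are drawn at random and never trained.
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))
    b = rng.uniform(-1.0, 1.0, size=n_hidden)
    H = np.tanh(X @ W + b)  # hidden layer output matrix, shape (N, n_hidden)
    # beta = (H^T H + reg * I)^(-1) H^T T, solved without forming the inverse.
    beta = np.linalg.solve(H.T @ H + reg * np.eye(n_hidden), H.T @ T)
    return W, b, beta

def predict_elm(X, W, b, beta):
    """Linear output layer applied to the random hidden features."""
    return np.tanh(X @ W + b) @ beta

# Toy regression problem (hypothetical data, not engine data).
rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, size=(200, 2))
T = np.sin(X[:, :1]) + 0.5 * X[:, 1:]

W, b, beta = train_elm(X, T, n_hidden=30)
rmse = float(np.sqrt(np.mean((predict_elm(X, W, b, beta) - T) ** 2)))
print(f"training RMSE: {rmse:.4f}")
```

Only `beta` is learned, which is why training reduces to one linear solve; setting `reg` to zero recovers the unregularized pseudo-inverse solution when `H` has full column rank.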

If an additional hidden node is added to the ELM with $l$ hidden nodes, the hidden layer output matrix of all $l+1$ nodes becomes

$$H_{l+1} = \begin{bmatrix} H_l & h_{l+1} \end{bmatrix}$$

where $h_{l+1}$ is the output of the $(l+1)$th hidden node. The input weight vector and bias corresponding to the newly added node are randomly generated.

With $l+1$ hidden layer nodes, the weights of the ELM output layer can be calculated as

where

.

We rewrite $O_{l+1}$ as [30].

where

According to Equation (7),

where

Then, the process of updating the output weights can be described as

The above calculation is repeated, gradually adding hidden layer nodes until the network training error satisfies the requirement or the number of hidden nodes reaches the maximum value. This method greatly reduces the trial time and the workload of repeatedly retraining the ELM when adjusting the number of hidden layer nodes.
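The node-growing procedure can be illustrated with the standard block-matrix (Schur complement) inverse, which extends the inverse of the regularized Gram matrix by one row and column instead of recomputing it. This is a sketch under assumptions and not necessarily the exact formulation of [30]: the function name `add_hidden_node`, the `tanh` activation, the toy data, and the stopping thresholds are all hypothetical.

```python
import numpy as np

def add_hidden_node(O, beta, H, h, T, reg):
    """Grow an ELM by one hidden node without re-inverting the full matrix.

    O    : (l, l) current inverse (H^T H + reg * I)^(-1)
    beta : (l, m) current output weights
    H    : (N, l) current hidden layer output matrix
    h    : (N,)   output of the new hidden node on the training inputs
    T    : (N, m) target outputs
    """
    g = H.T @ h                     # coupling between old nodes and the new node
    Og = O @ g
    s = h @ h + reg - g @ Og        # scalar Schur complement, positive for reg > 0
    e = (h @ (T - H @ beta)) / s    # output weights of the new node, shape (m,)
    beta_new = np.vstack([beta - np.outer(Og, e), e])
    O_new = np.block([[O + np.outer(Og, Og) / s, -Og[:, None] / s],
                      [-Og[None, :] / s, np.array([[1.0 / s]])]])
    return O_new, beta_new, np.column_stack([H, h])

def random_node_output(X, rng):
    """Output of one randomly generated tanh hidden node on all inputs."""
    w = rng.uniform(-1.0, 1.0, size=X.shape[1])
    b = rng.uniform(-1.0, 1.0)
    return np.tanh(X @ w + b)

# Toy data and thresholds (hypothetical values chosen for the example).
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(300, 2))
T = np.sin(2.0 * X[:, :1]) * X[:, 1:]
reg, max_nodes, target_rmse = 1e-2, 20, 0.05

# Start from a single node, then add nodes until the error target or the cap is hit.
H = random_node_output(X, rng)[:, None]
O = np.linalg.inv(H.T @ H + reg * np.eye(1))
beta = O @ H.T @ T
while H.shape[1] < max_nodes:
    rmse = np.sqrt(np.mean((H @ beta - T) ** 2))
    if rmse < target_rmse:
        break
    O, beta, H = add_hidden_node(O, beta, H, random_node_output(X, rng), T, reg)

print(H.shape[1], "hidden nodes")
```

Each step costs only matrix-vector products on the existing quantities, so the network size can be tuned in a single pass rather than by retraining from scratch for every candidate size.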

4. Online State Space Modeling Based on AFOS-ELM

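The nonlinear state space model of the aero-engine can be described as follows:

$$\begin{aligned} x(k+1) &= \phi(x(k), u(k)) \\ y(k) &= \psi(x(k), u(k)) \end{aligned} \tag{25}$$

where $x$ is the $n$-dimensional state vector, $y$ is the $m$-dimensional output vector, and $u$ is the $r$-dimensional input vector.

The linearized state space model of the system at the working point $(x_t, u_t, y_t)$ at time $t$ can be expressed as

$$\begin{aligned} \Delta x(k+1) &= A_t \Delta x(k) + B_t \Delta u(k) \\ \Delta y(k) &= C_t \Delta x(k) + D_t \Delta u(k) \end{aligned} \tag{26}$$

where ∆ is the increment symbol, $\Delta x(k) = x(k) - x_t$, $\Delta u(k) = u(k) - u_t$, $\Delta y(k) = y(k) - y_t$, $t$ represents the engine operation time when the state space model is established, and $k$ is the variable used to express a discrete time system.

The parameters of the state space matrices in Equation (26) can be expressed in the form of partial derivatives, and the matrices $A_t$, $B_t$, $C_t$, $D_t$ are also called Jacobian matrices.

$$A_t = \left.\frac{\partial \phi}{\partial x}\right|_{(x_t, u_t)},\quad B_t = \left.\frac{\partial \phi}{\partial u}\right|_{(x_t, u_t)},\quad C_t = \left.\frac{\partial \psi}{\partial x}\right|_{(x_t, u_t)},\quad D_t = \left.\frac{\partial \psi}{\partial u}\right|_{(x_t, u_t)} \tag{27}$$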

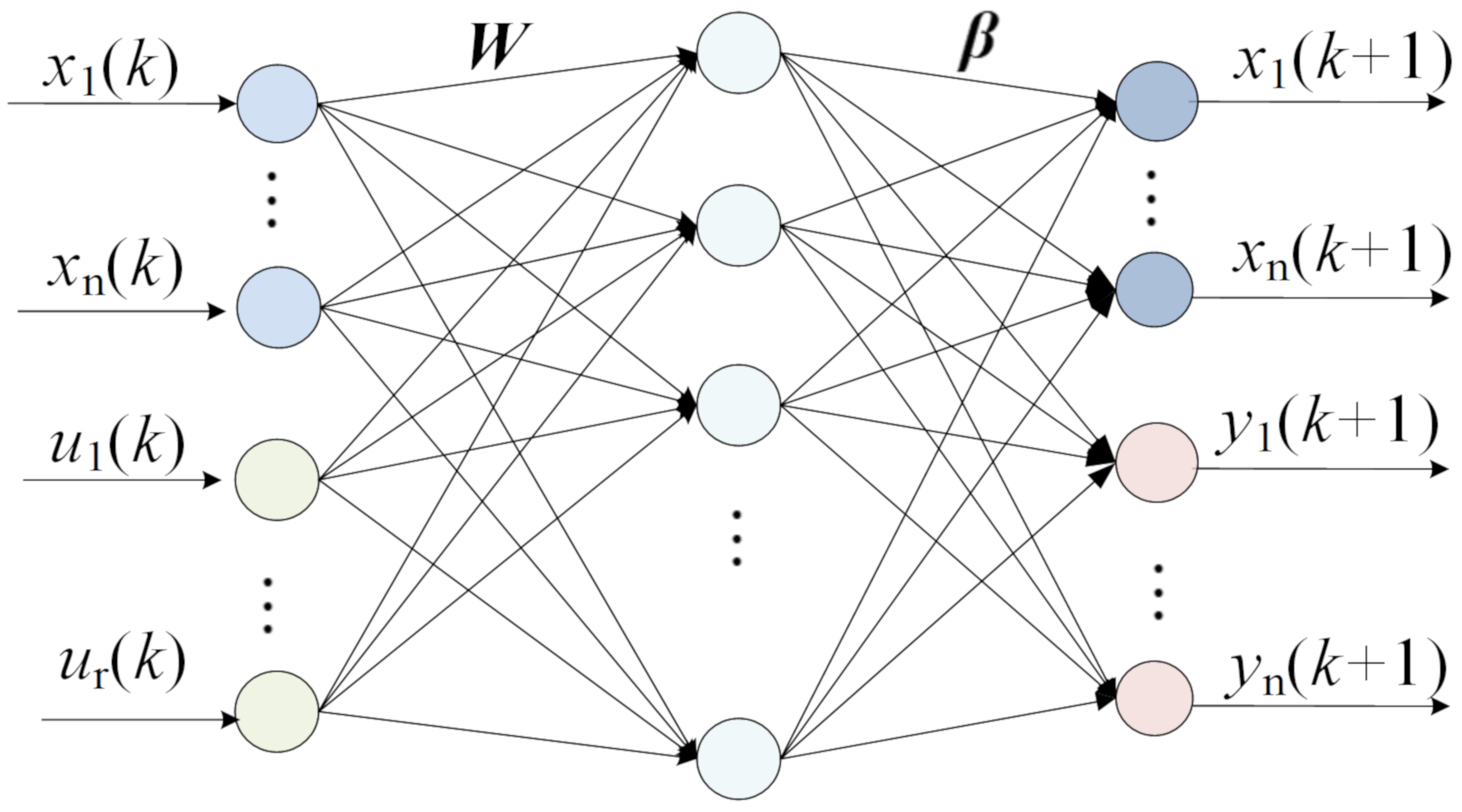

To obtain the linear state space model with AFOS-ELM, the data used to train the ELM are normalized as

The inverse-free ELM neural network described in Section 2 is used for the ELM initialization. The inputs of the AFOS-ELM are the input variables u(k) and the state variables x(k) of the state space model in Equation (25). The outputs of the ELM are the state variables x(k + 1) and the output variables y(k) of the SSM. The construction of the AFOS-ELM is shown in Figure 1.

The OS-ELM adopts linear output nodes, and the analytical expression of network output can be described as

where , are the neural network output vector and input vector, respectively.

The partial derivative of the network output with respect to the network input is calculated by

Therefore, the parameters in Equation (27) can be calculated according to Equations (30) and (31), and then the SSM in Equation (26) is obtained at time k.
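To make the step from the network to the state space matrices concrete, the following sketch differentiates a single-hidden-layer network with linear output nodes analytically and slices the resulting Jacobian into the four matrices. Several things here are assumptions rather than details from the paper: the `tanh` activation, the ordering of the network input as [u(k); x(k)] and of the output as [x(k+1); y(k)], the random weights standing in for a trained network, and the function names. Any rescaling required by the data normalization is omitted, so the matrices are expressed in normalized variables.

```python
import numpy as np

def elm_output(z, W, b, beta):
    """Network output for one input z. W: (n_in, l), b: (l,), beta: (l, n_out)."""
    return np.tanh(z @ W + b) @ beta

def elm_jacobian(z, W, b, beta):
    """Analytical d(output)/d(input) at z, shape (n_out, n_in)."""
    a = z @ W + b
    d = 1.0 - np.tanh(a) ** 2            # derivative of tanh at each hidden node
    return beta.T @ (d[:, None] * W.T)   # sum_j beta_j f'(a_j) w_j^T

# Dimensions matching two inputs, two states, and four outputs.
r, n, m, l = 2, 2, 4, 10
rng = np.random.default_rng(0)
W = rng.normal(size=(r + n, l))          # placeholder weights, not trained values
b = rng.normal(size=l)
beta = rng.normal(size=(l, n + m))

z = rng.normal(size=r + n)               # current [u(k); x(k)] in normalized units
J = elm_jacobian(z, W, b, beta)

# Rows: [x(k+1); y(k)]. Columns: [u(k); x(k)].
A, B = J[:n, r:], J[:n, :r]
C, D = J[n:, r:], J[n:, :r]
print(A.shape, B.shape, C.shape, D.shape)
```

Because the Jacobian is available in closed form, evaluating it at each sampling instant requires no perturbation runs of an engine model, which is what makes a per-sample SSM feasible.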

5. Simulation and Discussion

5.1. Data Process

To verify the proposed method of establishing the SSM online based on the neural network, the test data of a twin-spool mixed-exhaust afterburning turbofan aero-engine are used for modeling and validation. The research is carried out on Windows 11 with an Intel Core i5-12600KF CPU and 32 GB of RAM.

Since the data come from the engine test, measurement noise is inevitable. To avoid the impact of outliers on modeling accuracy, the local singularity estimation capability of wavelets and the residuals of the signal wavelet transform can be used to determine the residual threshold for outlier discrimination [35,36].

The residual of the original signal S after L-layer wavelet decomposition can be described by

where SS is the low-frequency reconstructed signal after L-layer decomposition.

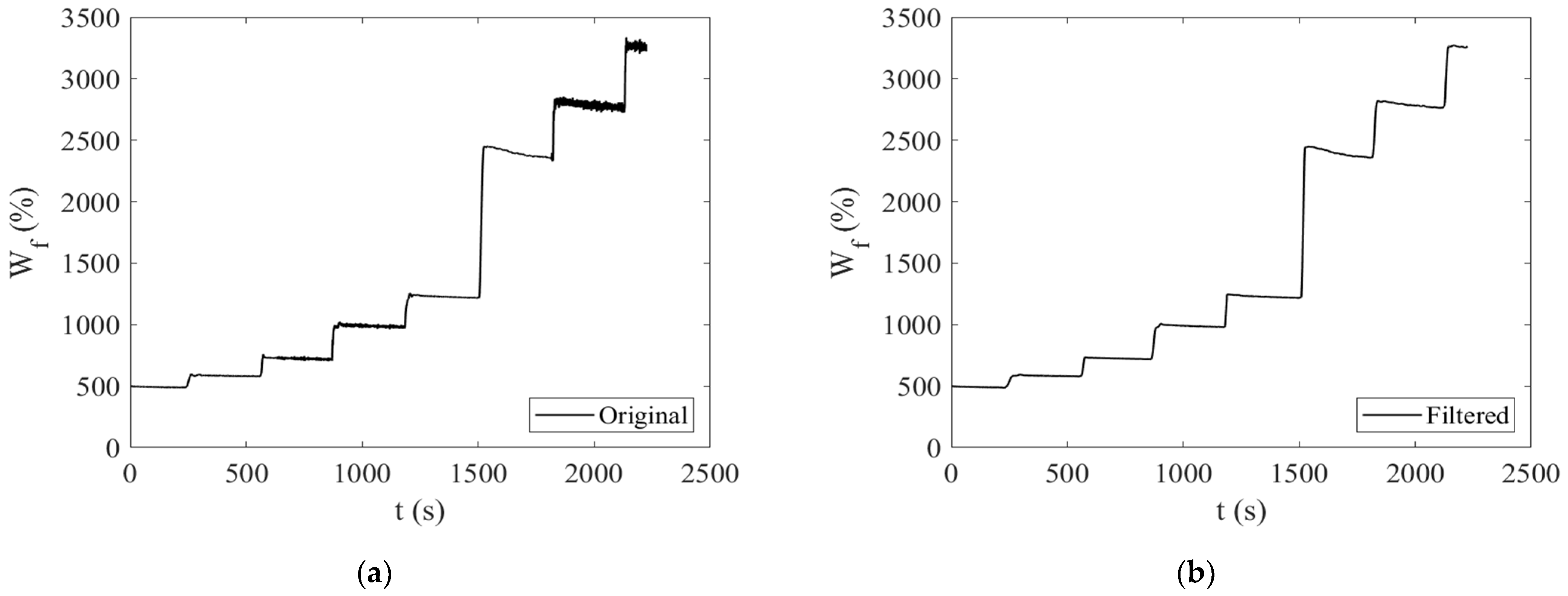

When the residual is small, the low-frequency reconstructed signal approaches the original signal. When the residual is large, a relatively large-amplitude high-frequency noise is superimposed on the measured signal, and the point can be regarded as an outlier. Taking the fuel data of an engine test as an example, the “db8” wavelet with scale 7 is used to detect the outliers. A total of 111,406 data points were collected in this test, and the residual distribution of the fuel flow Wf is shown in Table 1.

It can be seen from Table 1 that the data with residuals in the range [−0.721%, 1.39%] account for 98.54% of the total, and the data with residuals in the range [−2.83%, 3.49%] account for 99.94% of the total. For residuals greater than 3.49% or less than −2.83%, the number of data points no longer tends to decrease as the residual increases. Therefore, the thresholds for outlier identification are set to −2.83% and 3.49%. Data with residuals beyond this range are considered outliers and are replaced by the average of the data before and after them. Consecutive outliers are replaced by linear interpolation of the valid data before and after them. After the outliers are replaced, the “db8” wavelet with scale 7 is adopted to filter the signal. Taking the fuel data as an example, the comparison of the data before and after filtering is shown in Figure 2a,b.
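The replacement rule for outliers can be sketched as follows. The sketch assumes the residuals (in percent) have already been computed by the wavelet step, which is not reproduced here, and that the samples are uniformly spaced, so that linear interpolation across an isolated outlier equals the average of its two neighbors. The function name `replace_outliers` and the sample values are hypothetical; only the thresholds come from the text.

```python
import numpy as np

def replace_outliers(signal, residual_pct, low=-2.83, high=3.49):
    """Replace samples whose residual lies outside [low, high] percent.

    Isolated and consecutive outliers are both filled by linear interpolation
    between the nearest valid samples on either side.
    """
    signal = np.asarray(signal, dtype=float)
    residual_pct = np.asarray(residual_pct, dtype=float)
    bad = (residual_pct < low) | (residual_pct > high)
    idx = np.arange(signal.size)
    cleaned = signal.copy()
    cleaned[bad] = np.interp(idx[bad], idx[~bad], signal[~bad])
    return cleaned, bad

# Hypothetical fuel-flow samples with one isolated and two consecutive outliers.
signal = [10.0, 10.2, 15.0, 10.6, 10.8, 20.0, 21.0, 11.4]
residual_pct = [0.1, -0.2, 30.0, 0.3, 0.0, 40.0, 45.0, -0.1]

cleaned, bad = replace_outliers(signal, residual_pct)
print(cleaned)
```

Note that `np.interp` holds the nearest valid value when an outlier sits at the very start or end of the record, which is a design choice of this sketch rather than something the text specifies.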

It can be seen from Figure 2 that the noise in the filtered data is significantly suppressed, which is helpful to establish the SSM in a data-driven way. Then, the data are normalized with Equation (27).

5.2. AFOS-ELM Validation

The input vector for a twin-spool turbofan engine control system is $u = [W_f, A_8]^{\top}$, and $A_8$ is the nozzle throat area. The measured output vector besides the rotor speeds is $y = [P_3, T_6, P_6, F]^{\top}$, where $P_3$ is the total pressure at the compressor outlet, $T_6$ and $P_6$ are the total temperature and total pressure at the low-pressure turbine outlet, and $F$ is the thrust. The state vector is $x = [n_1, n_2]^{\top}$; $n_1$ and $n_2$ are the low-pressure rotor speed and the high-pressure rotor speed.

According to the construction of the AFOS-ELM in Figure 1, the input vector of the ELM is $[u(k)^{\top}, x(k)^{\top}]^{\top}$. The output vector of the ELM is $[x(k+1)^{\top}, y(k)^{\top}]^{\top}$.

The first 30% of the engine test data sequence are adopted to initialize the ELM based on the inverse-free ELM offline. When the root mean square error is less than 6.5% or the number of hidden layer nodes increases to more than 100, the offline training stops. The number of hidden layer nodes is automatically set to 86 in this research.

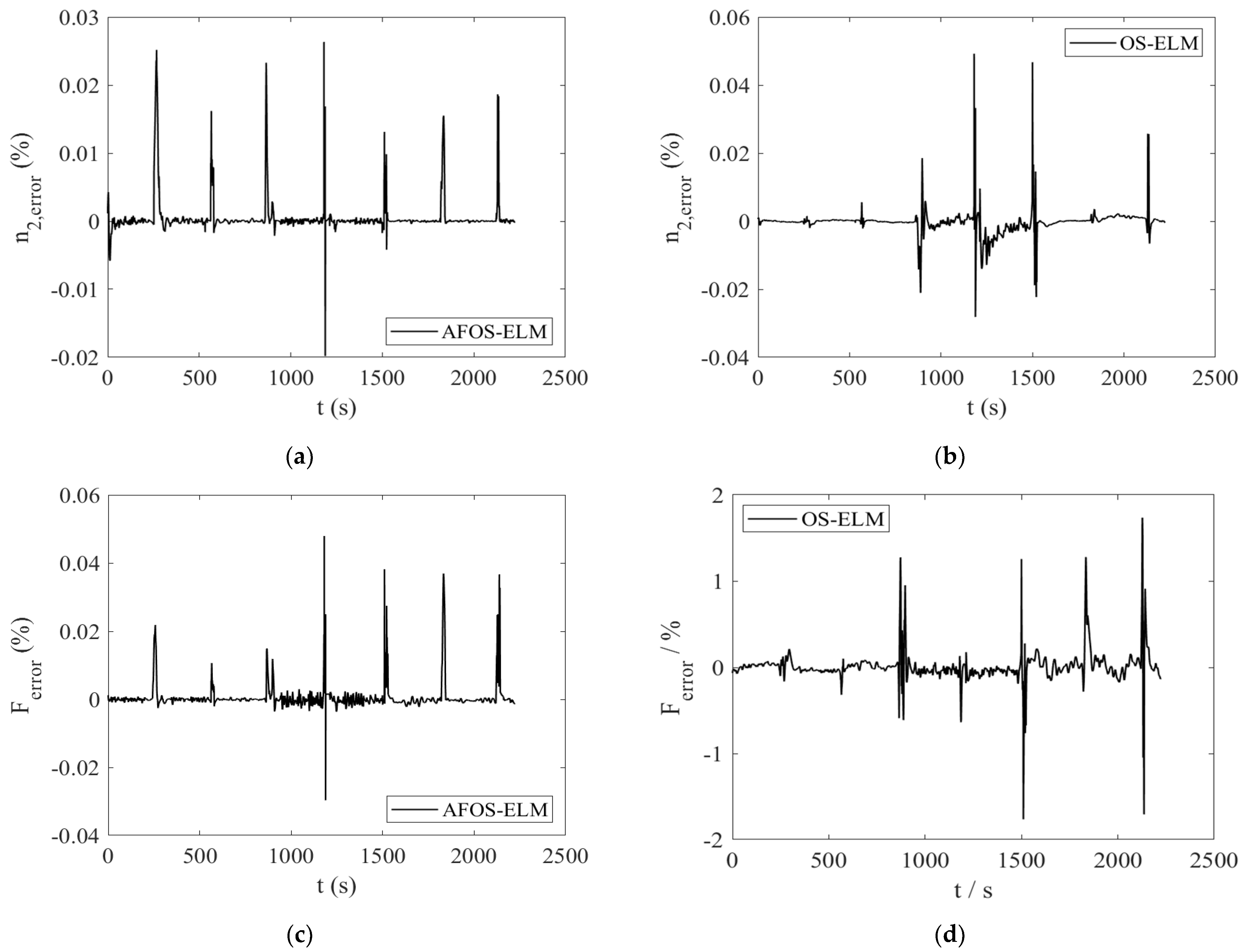

The AFOS-ELM is used to update the output weights online for all the engine test data. The n2 output of the AFOS-ELM is compared with the engine test data in Figure 3. The relative output errors of n2 and F are compared with those of the traditional OS-ELM without the forgetting factor in Figure 4. The detailed errors of the ELM outputs are listed in Table 2.

From Figure 3 we can see that the n2(k + 1) outputs of both the AFOS-ELM and the OS-ELM track the engine test data well in the acceleration process. From Figure 4 we can see that the relative errors of n2(k + 1) and F(k) are both smaller than 0.05% with the AFOS-ELM method, which is much smaller than with the OS-ELM method, and the adaptive forgetting factor enhances the modeling accuracy both in the dynamic state and in the steady state.

It can be seen from Table 2 that the errors of the two rotor speeds are very small for both AFOS-ELM and OS-ELM. The maximum error occurs at n1 with the OS-ELM method and is 0.1419%, and the average error is less than 0.002%, which is much better than that of the component-level model [37]. For the other output variables, the AFOS-ELM method shows a greater advantage over the OS-ELM method. The maximum error of AFOS-ELM occurs at T6 and is 0.2747%, and the largest average error also occurs at T6 and is 0.002%. For OS-ELM, the maximum error and the largest average error also occur at T6 and are 16.52% and 0.5854%, respectively, far larger than those of the AFOS-ELM. The AFOS-ELM can model the nonlinear model in state space configuration well. It can process the engine failure data and ensure the accuracy of the current output. The adaptive forgetting factor can also cope with the rapid changes caused by large dynamics and maintain high accuracy.

5.3. State Space Model Validation

Based on the analytical expression of the AFOS-ELM model established above, the SSM can be derived by partial derivative calculation. Taking the calculation of parameter

as an example, according to Equations (3) and (27), we have

Applying the same calculation process to the other parameters of the SSM, all state space matrix parameters at time k can be calculated, and the SSM at time k is established.

In order to validate the effectiveness of the SSM established in this paper, the models established at different times are selected to demonstrate their output prediction ability over future time, which is essential for model-predictive control and performance-seeking control of aero-engines.

The prediction output of SSM at future time can be obtained through the response calculation of a discrete-time system.
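The multi-step prediction of a discrete-time linear model amounts to iterating the state equation and then evaluating the output equation. The sketch below shows this for increments around the working point. The matrices, the constant input increment, and the function name `predict_output` are hypothetical values chosen only to make the example runnable, and the future input increments are assumed to be known.

```python
import numpy as np

def predict_output(A, B, C, D, dx0, du_seq):
    """Return the output increment at step k+p.

    dx0    : state increment at step k
    du_seq : input increments at steps k, k+1, ..., k+p (p+1 rows)
    """
    dx = np.asarray(dx0, dtype=float)
    for du in du_seq[:-1]:               # propagate the state p steps forward
        dx = A @ dx + B @ du
    return C @ dx + D @ du_seq[-1]       # output equation at step k+p

# Hypothetical two-state, one-input, one-output model.
A = np.array([[0.9, 0.0],
              [0.1, 0.8]])
B = np.array([[0.1],
              [0.0]])
C = np.array([[1.0, 1.0]])
D = np.array([[0.0]])

p = 5
dx0 = np.zeros(2)                        # model established at the current state
du_seq = np.ones((p + 1, 1))             # constant unit input increment

dy = predict_output(A, B, C, D, dx0, du_seq)
print(dy)
```

In a model-predictive setting, `du_seq` would hold the candidate control moves, and the actual predicted output is recovered by adding the working-point output to `dy`.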

Taking p = 5 as an example, the error between the SSM prediction output and the engine output is compared. The results of prediction are shown in Figure 5a–l, where the “SSM Prediction” represents the prediction output of the SSM proposed in this paper, and “Engine Output” represents the engine test data at k + p.

As can be seen from Figure 5, the maximum prediction error at time k + 5 occurs at around 1500 s, where a sudden acceleration occurs and the thrust F increases by about 40.6%. Except for F, the other variables all reach their largest prediction error at this moment. Under such a large transient, the maximum prediction error occurred at

and is about 1.1%. The maximum prediction error of the low-pressure rotor speed is less than 0.3% when the low-pressure rotor speed n1 increases by about 22.9%, whereas the error is more than 0.4% for the component-level-model-based online state space modeling method in [21] for the time k + 5 output prediction when the low-pressure rotor speed decreases by about 12% (no other variable in [21] is available for comparison). The SSM established by the method described in this paper shows a clear advantage in prediction accuracy, and it predicts the variation of the engine test data well.

To validate the real-time performance of the proposed SSM, the time consumption of the AFOS-ELM online modeling process and the SSM modeling process is evaluated. The simulation platform is as described above, and the modeling process is carried out three times. The neural network is established online based on 111,406 groups of engine test data, and 111,405 SSMs are obtained, one at each sample from the first to the second-to-last. The average time for modeling the 111,405 SSMs based on AFOS-ELM is 183.958 s, so the online network weight update and online SSM calculation of each step take about 1.7 ms on average. This is lower than the sampling step of the aero-engine, which is about 20 ms. Therefore, this modeling method can meet the real-time requirements of the aero-engine.