Designing Control and Protection Systems with Regard to Integrated Functional Safety and Cybersecurity Aspects

Abstract

1. Introduction

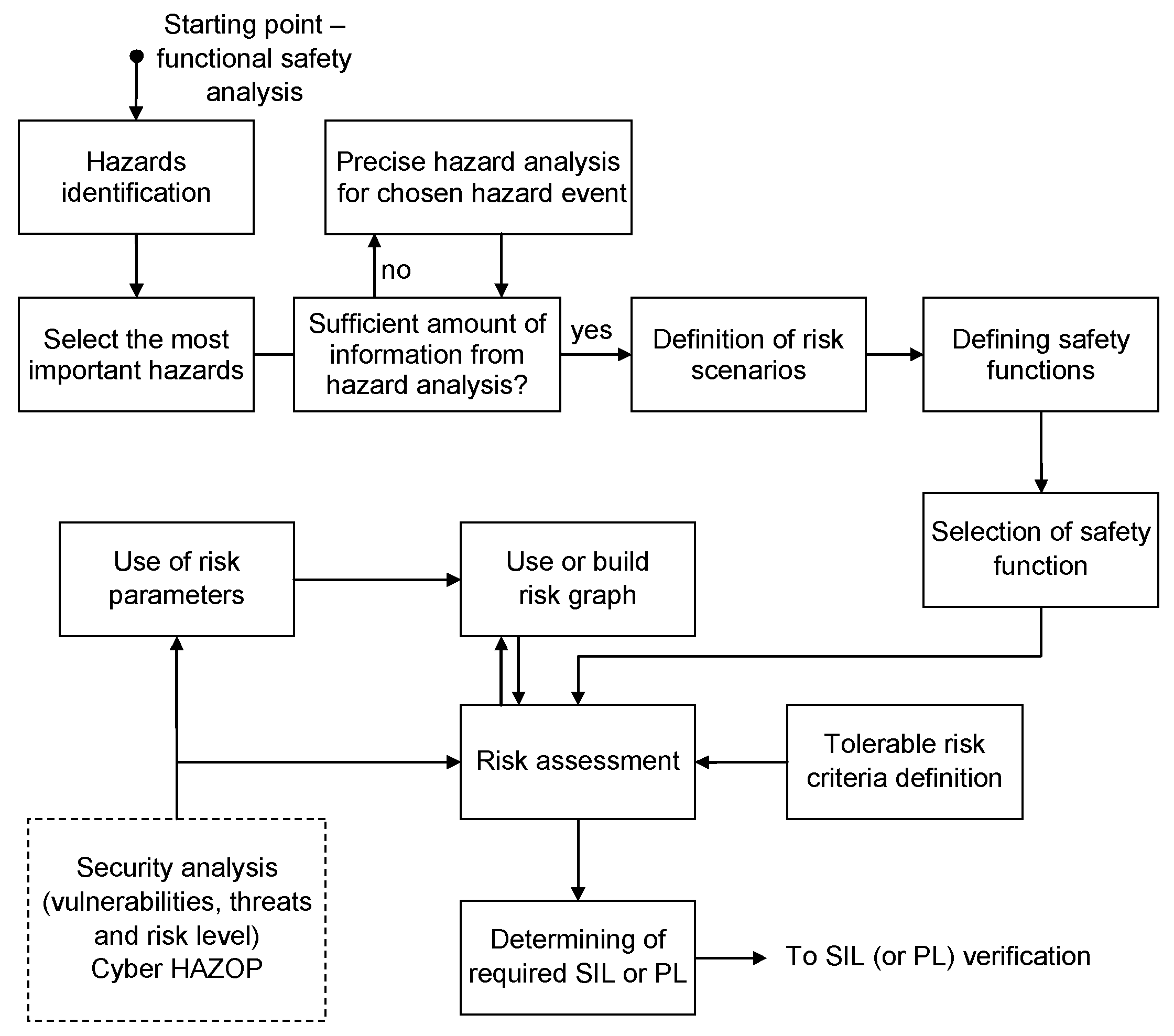

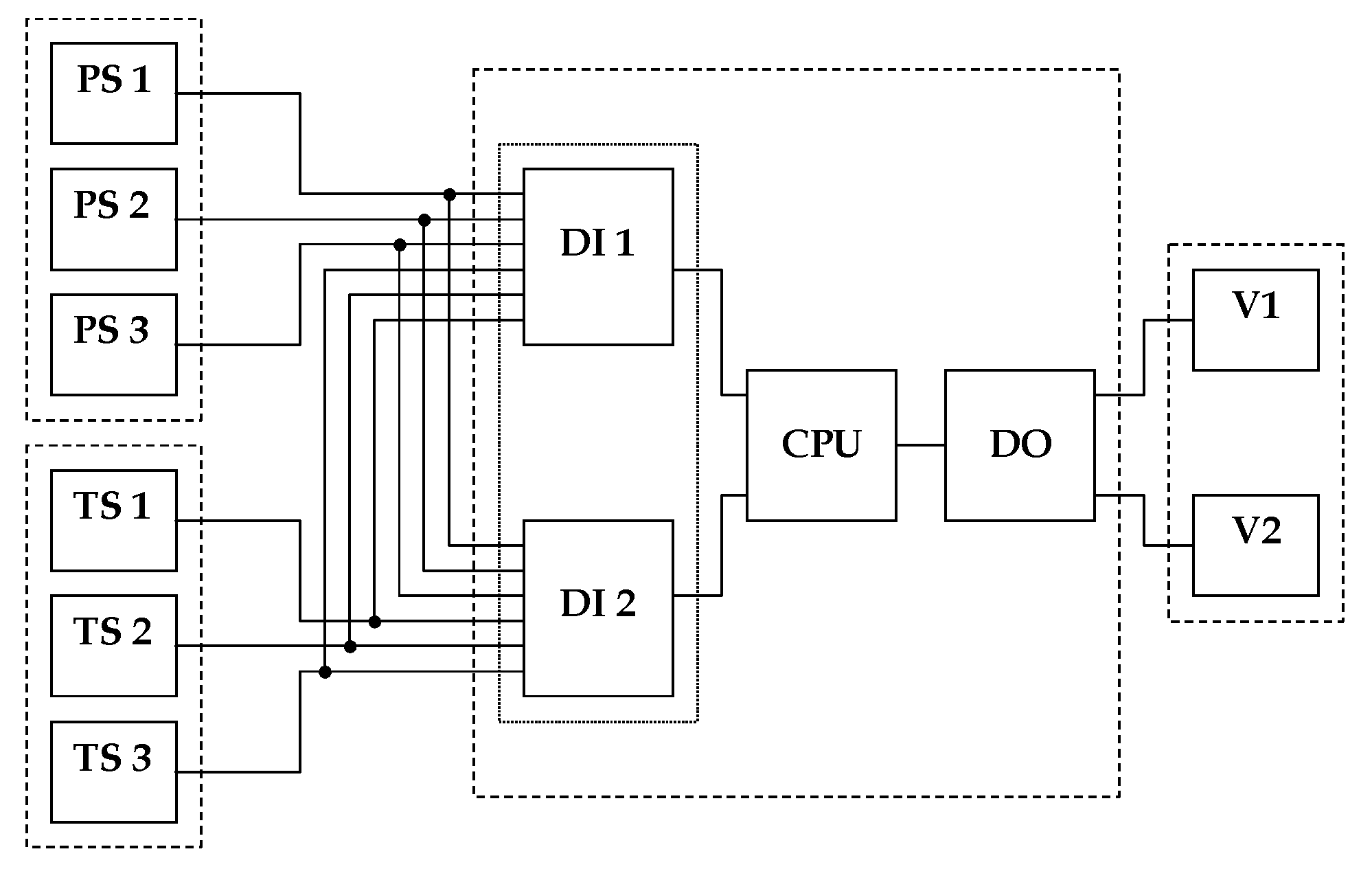

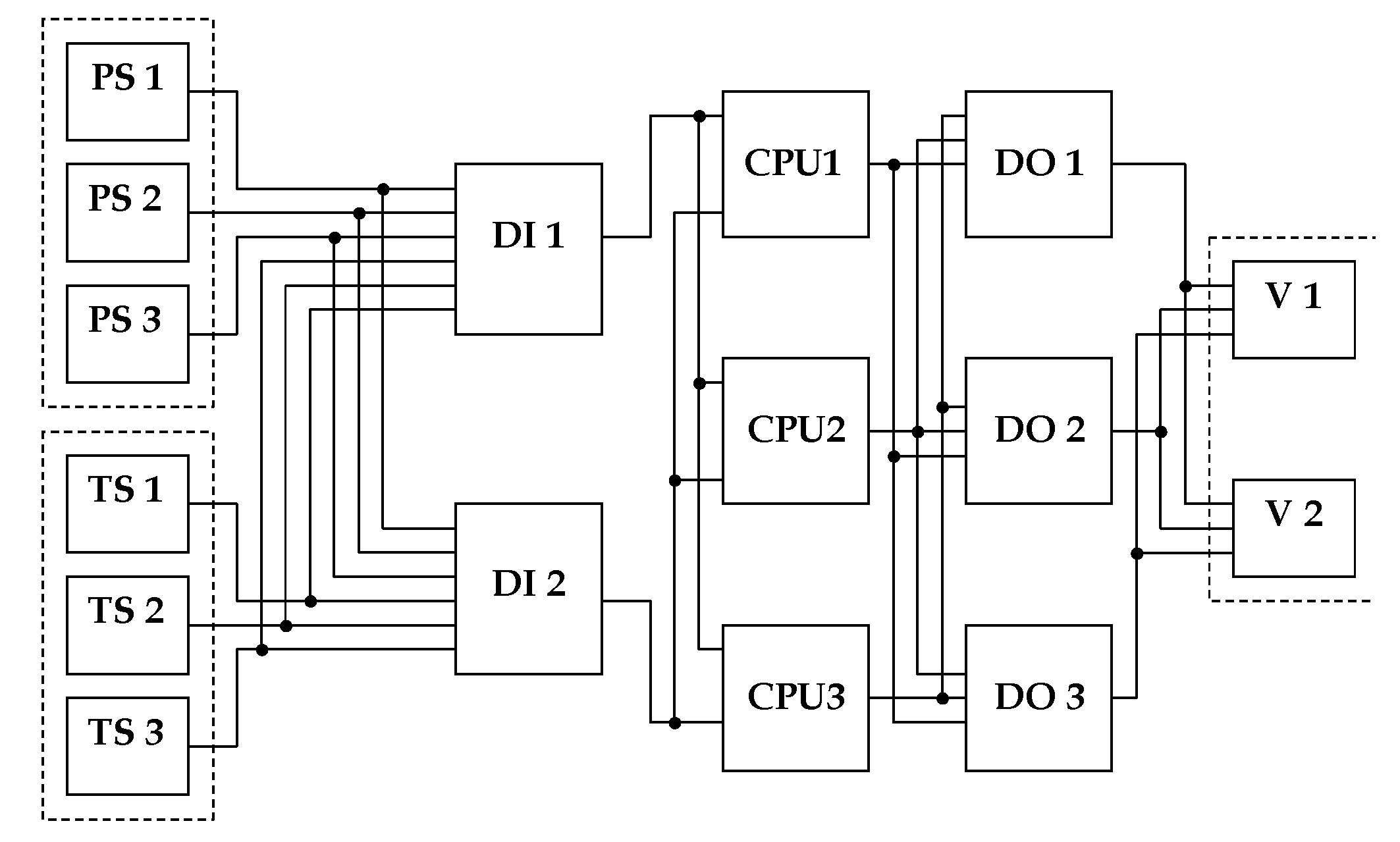

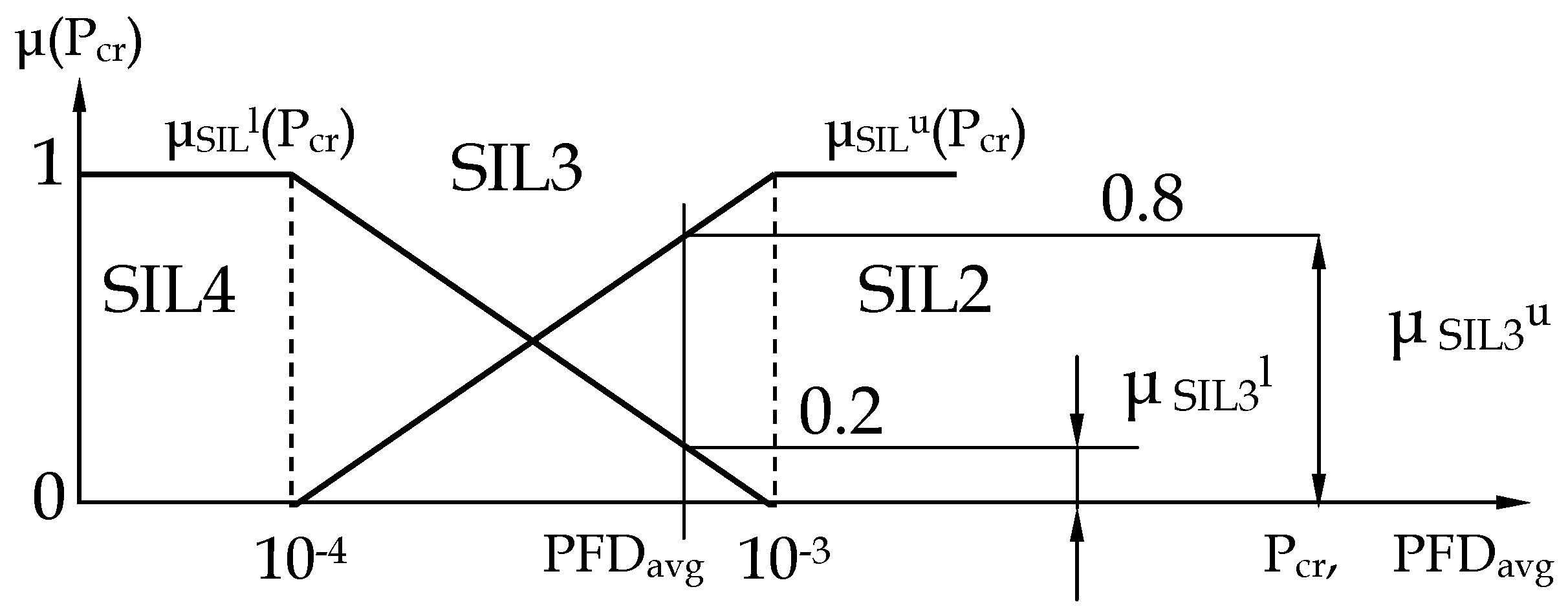

2. Issues of Determining the Required Safety Integrity Level of Safety Functions

2.1. Functional Safety Requirements

- the average probability of a dangerous failure on demand, PFDavg, for a safety function operating in low-demand mode; or

- the average frequency of a dangerous failure per hour, PFH [h⁻¹], for a safety function operating in high-demand or continuous mode.
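For orientation, the usual simplified low-demand result for a single channel can be derived as below. This assumes dangerous undetected failures occur at a constant rate λDU, are revealed only by a perfect proof test every TI, and that repair time is negligible; it is the textbook approximation, not necessarily the exact model used in the paper.

```latex
\mathrm{PFD}_{avg}
  = \frac{1}{T_I}\int_{0}^{T_I}\left(1 - e^{-\lambda_{DU} t}\right)dt
  = 1 - \frac{1 - e^{-\lambda_{DU} T_I}}{\lambda_{DU} T_I}
  \approx \frac{\lambda_{DU} T_I}{2},
  \qquad \lambda_{DU} T_I \ll 1
```

For example, λDU = 1 × 10⁻⁷ h⁻¹ with TI = 1 year (8760 h) gives 4.38 × 10⁻⁴, which is the SDV value in the subsystem results table.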

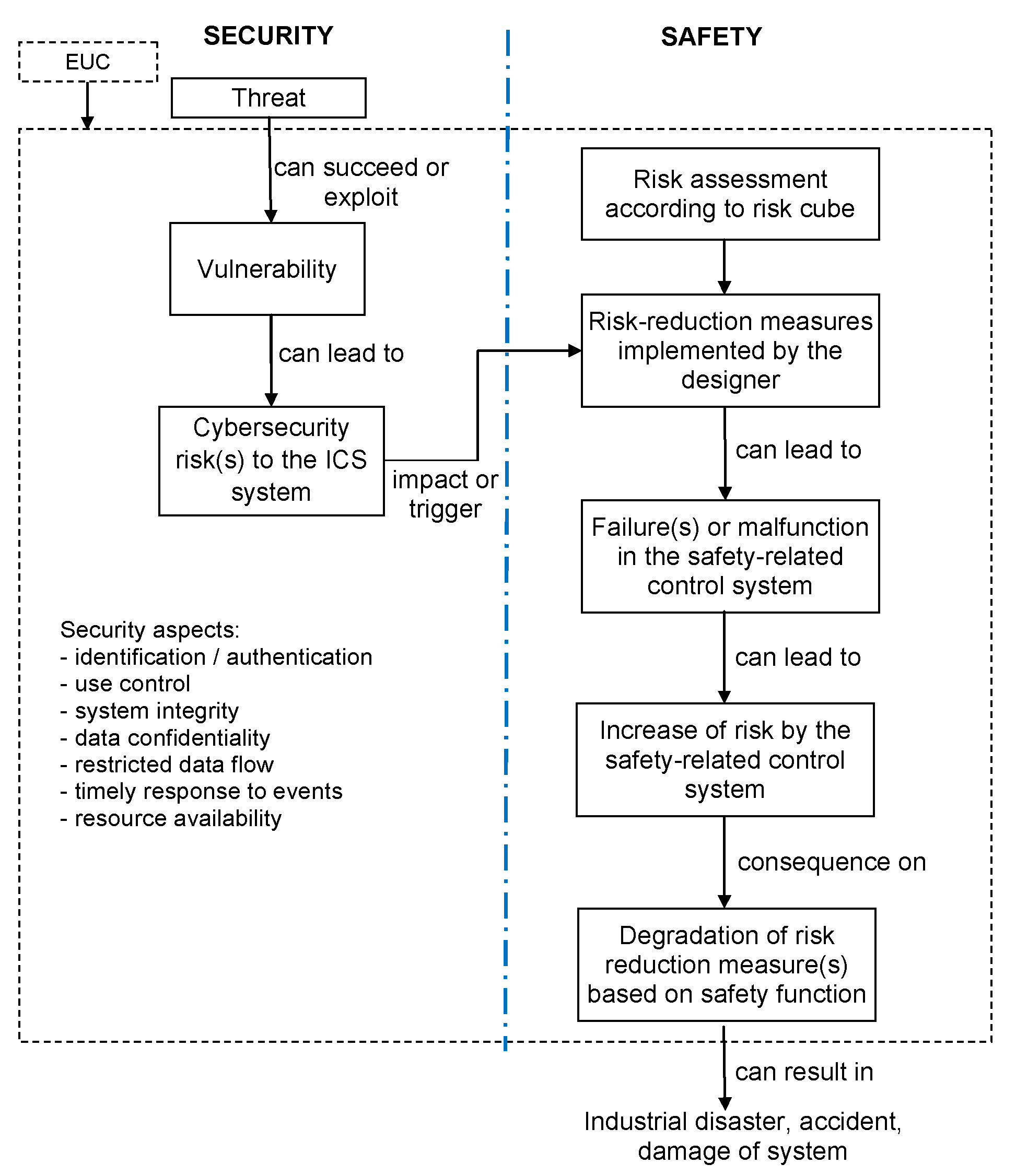

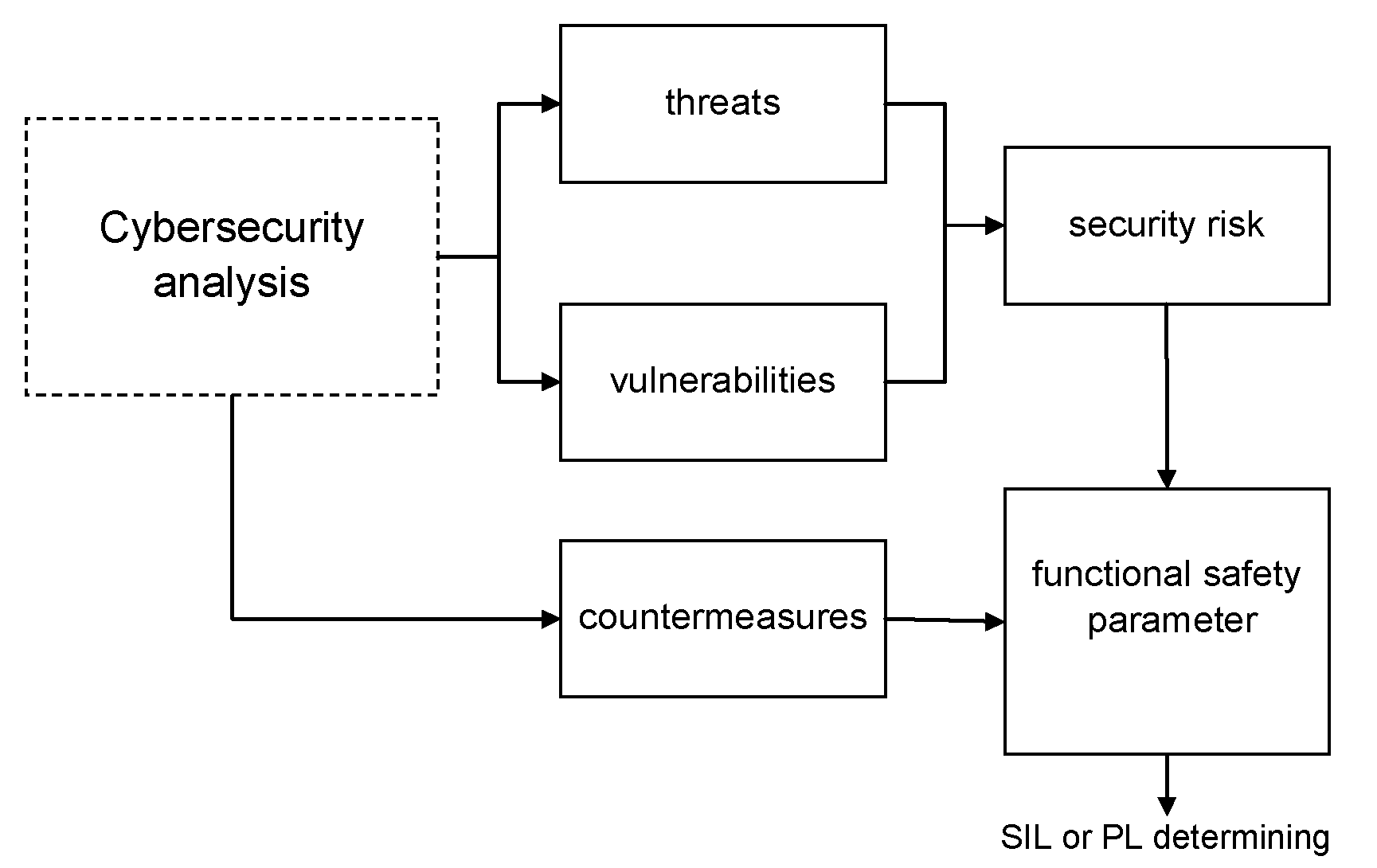

2.2. Cybersecurity Approach

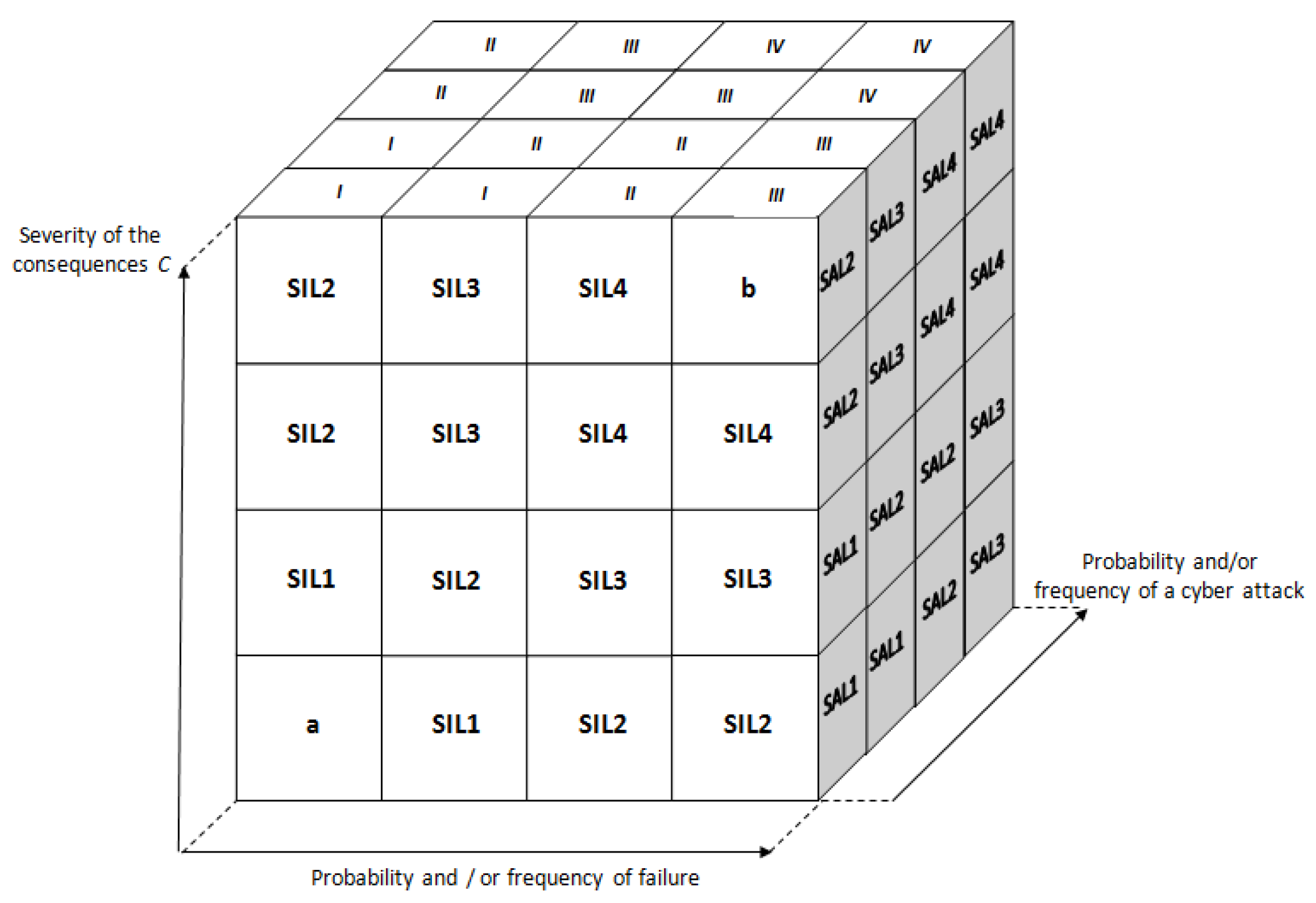

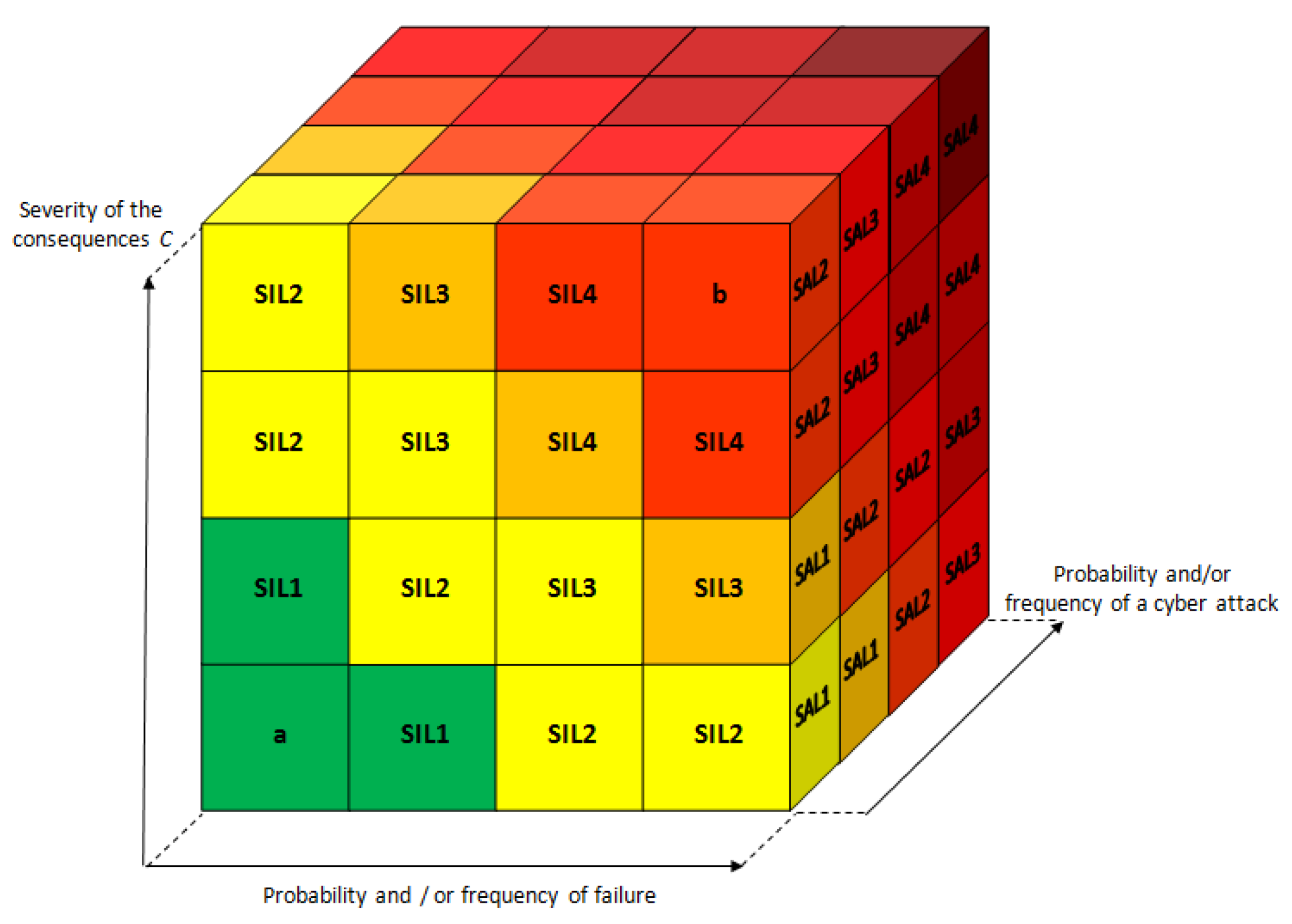

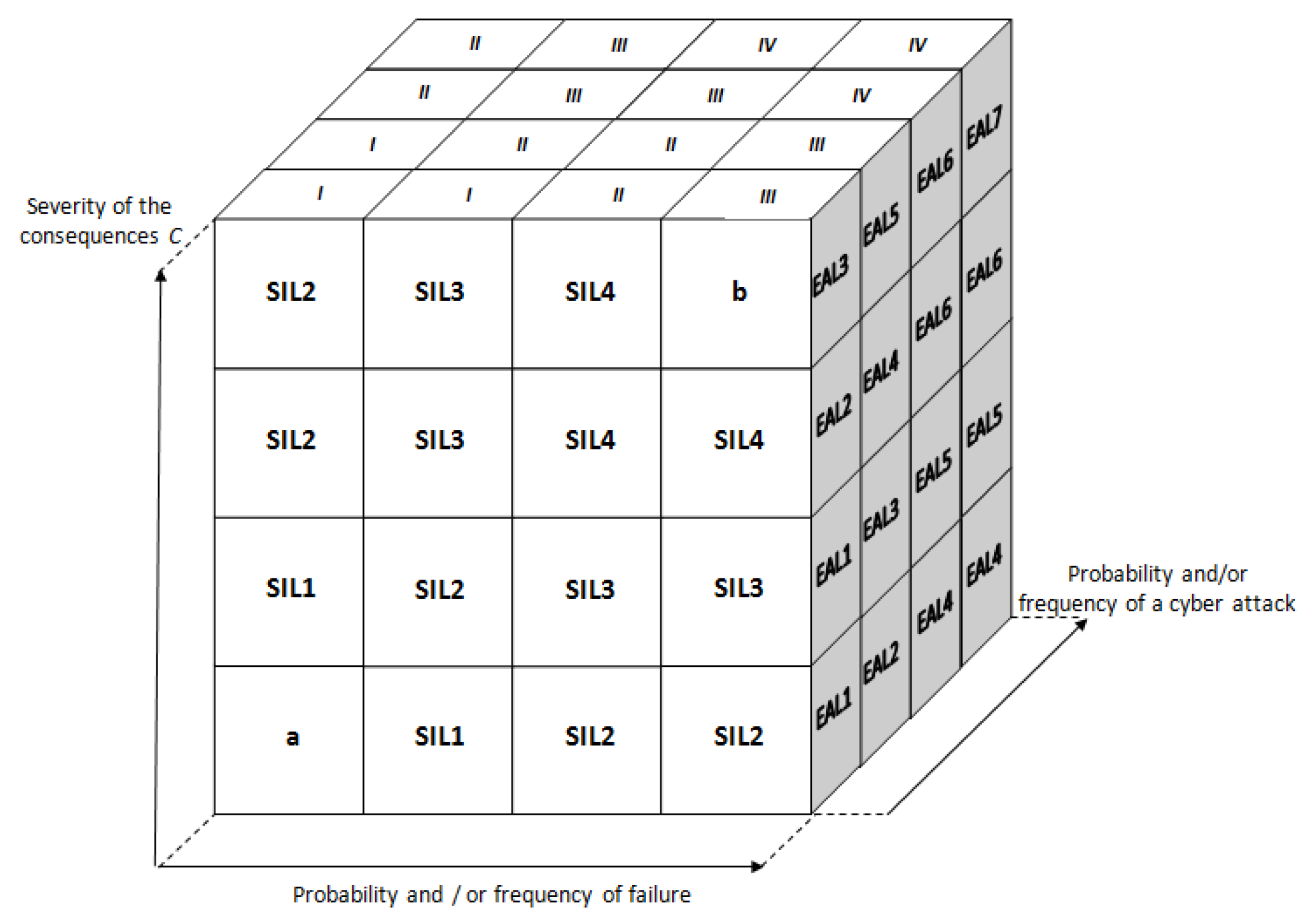

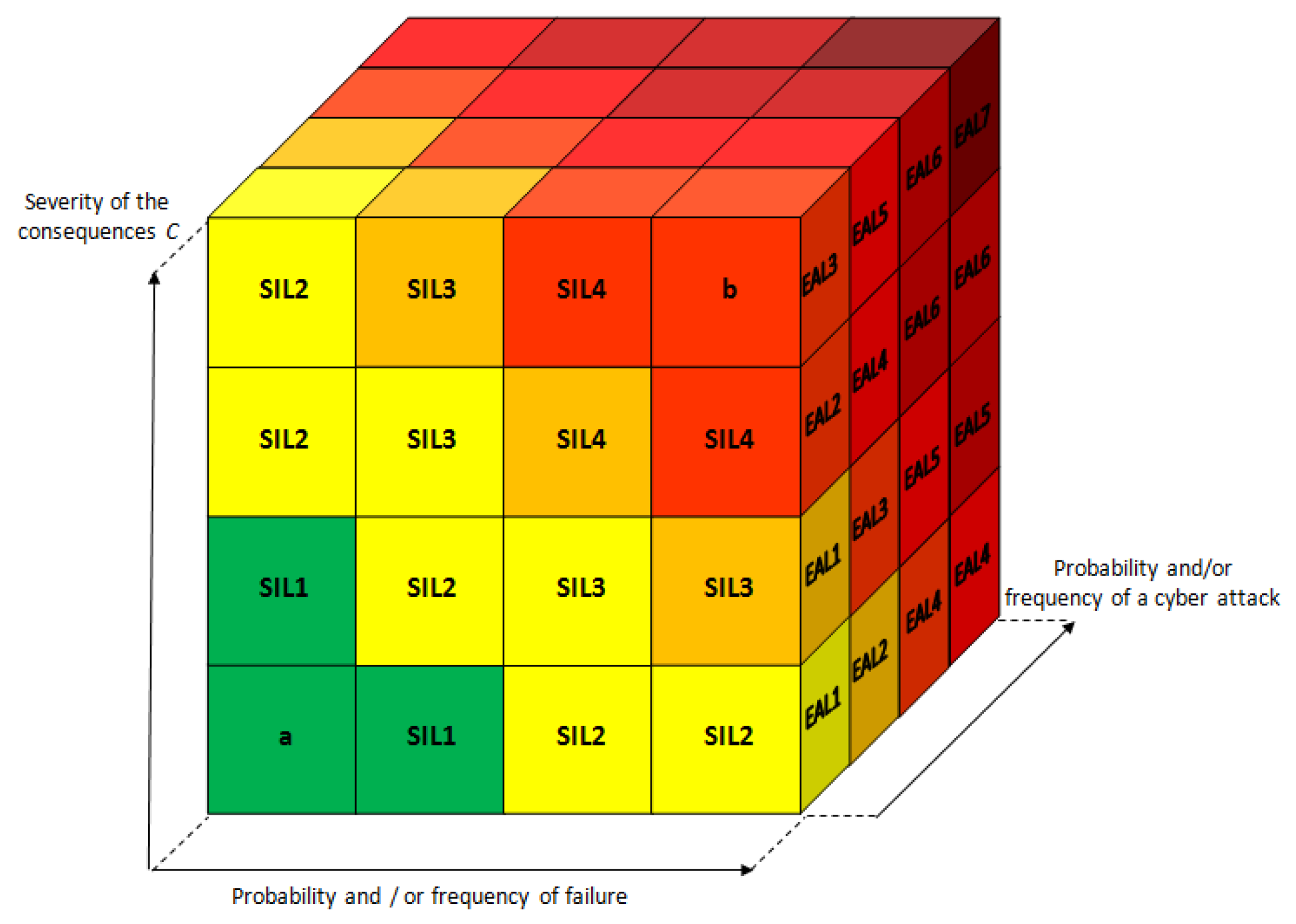

2.3. The Risk Cube Methodology

2.4. SIL Determining with Cybersecurity Aspects

3. Safety Integrity Level Calculation

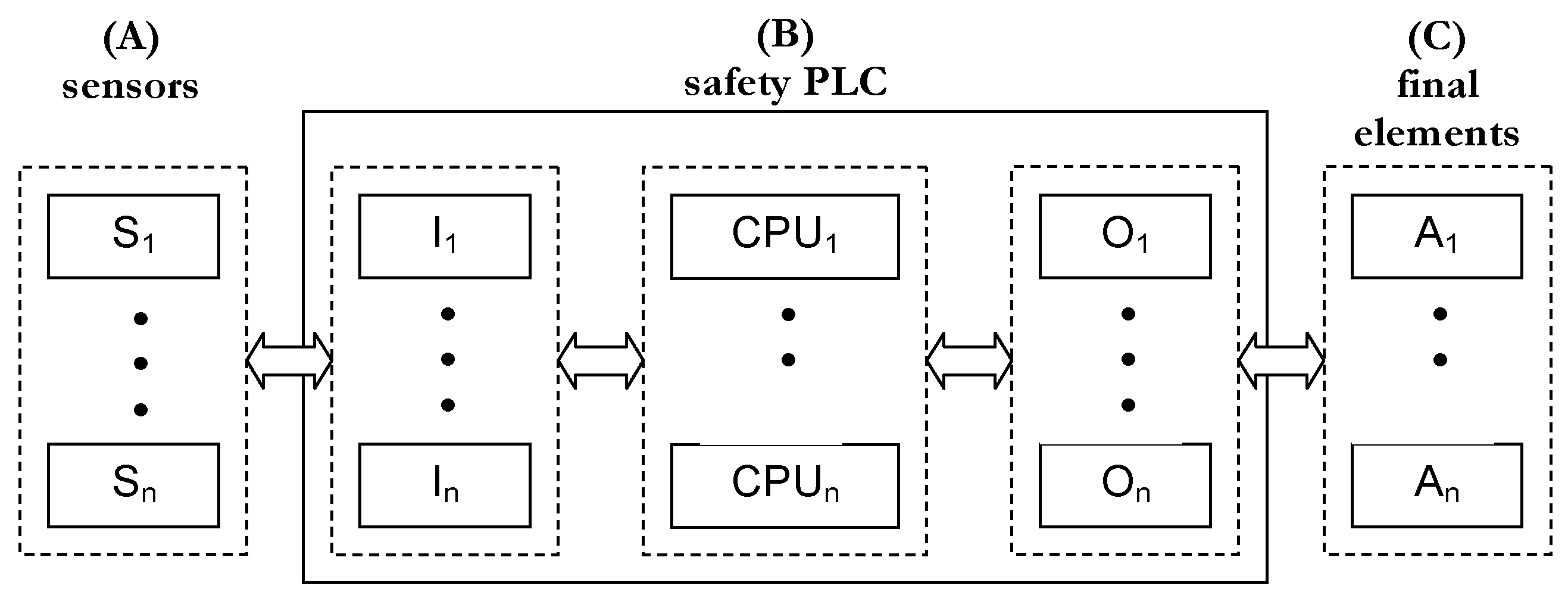

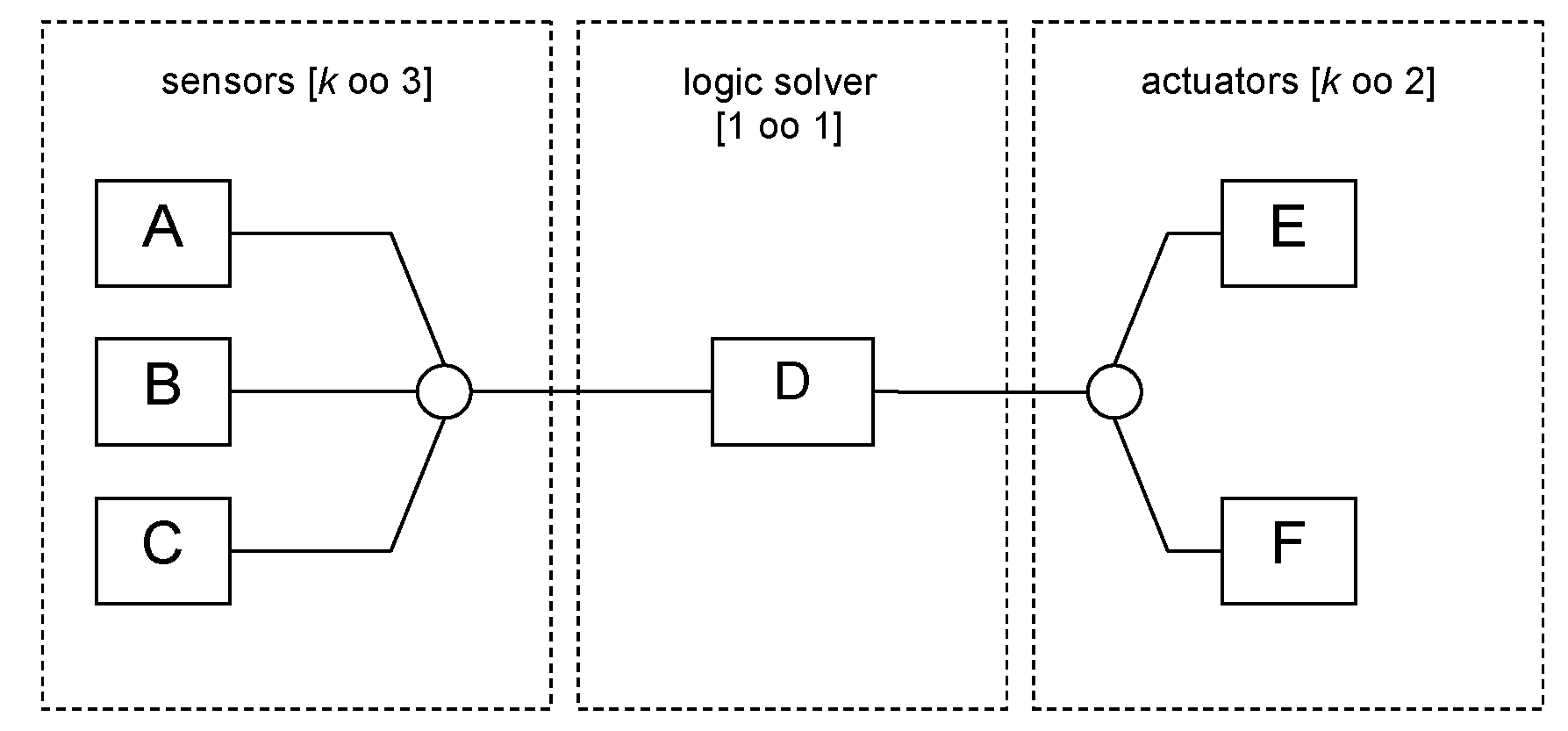

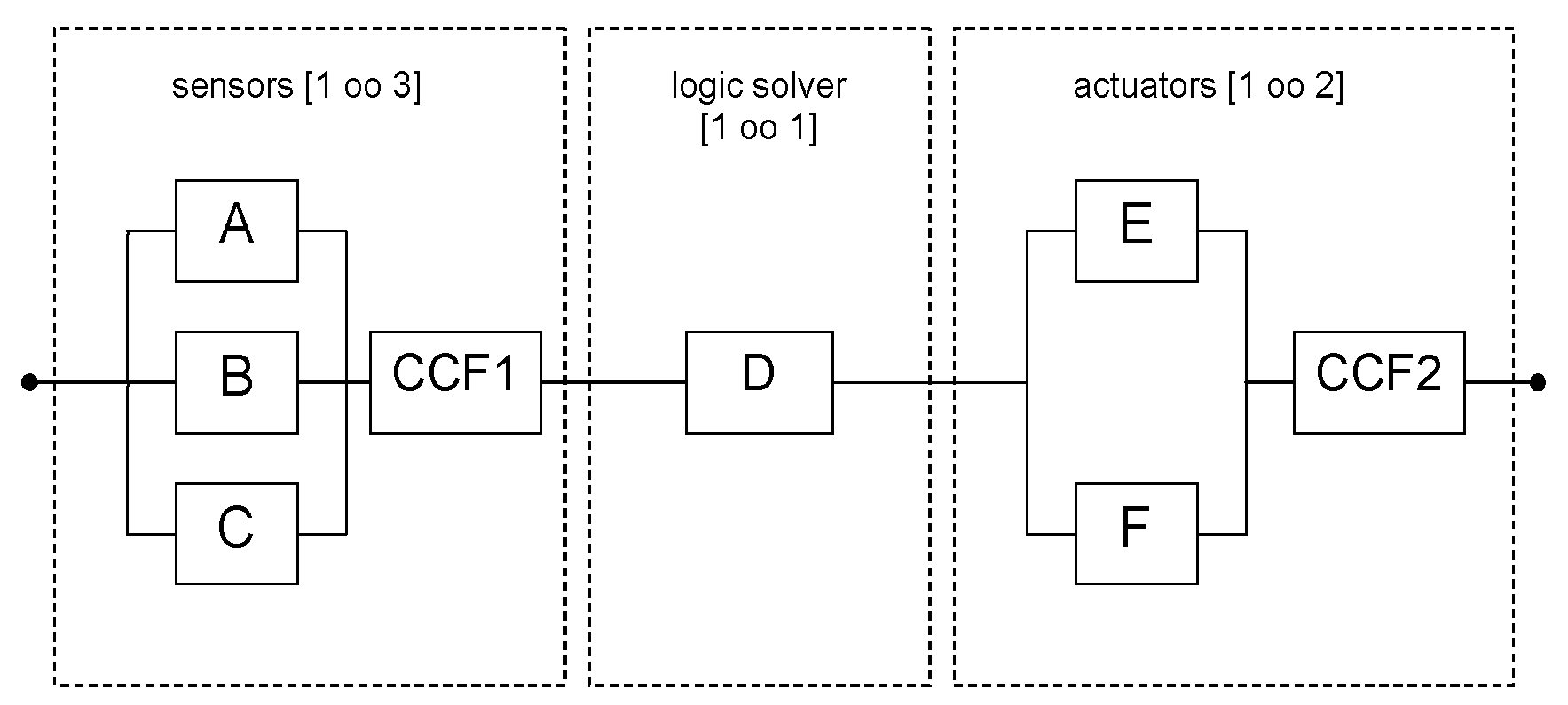

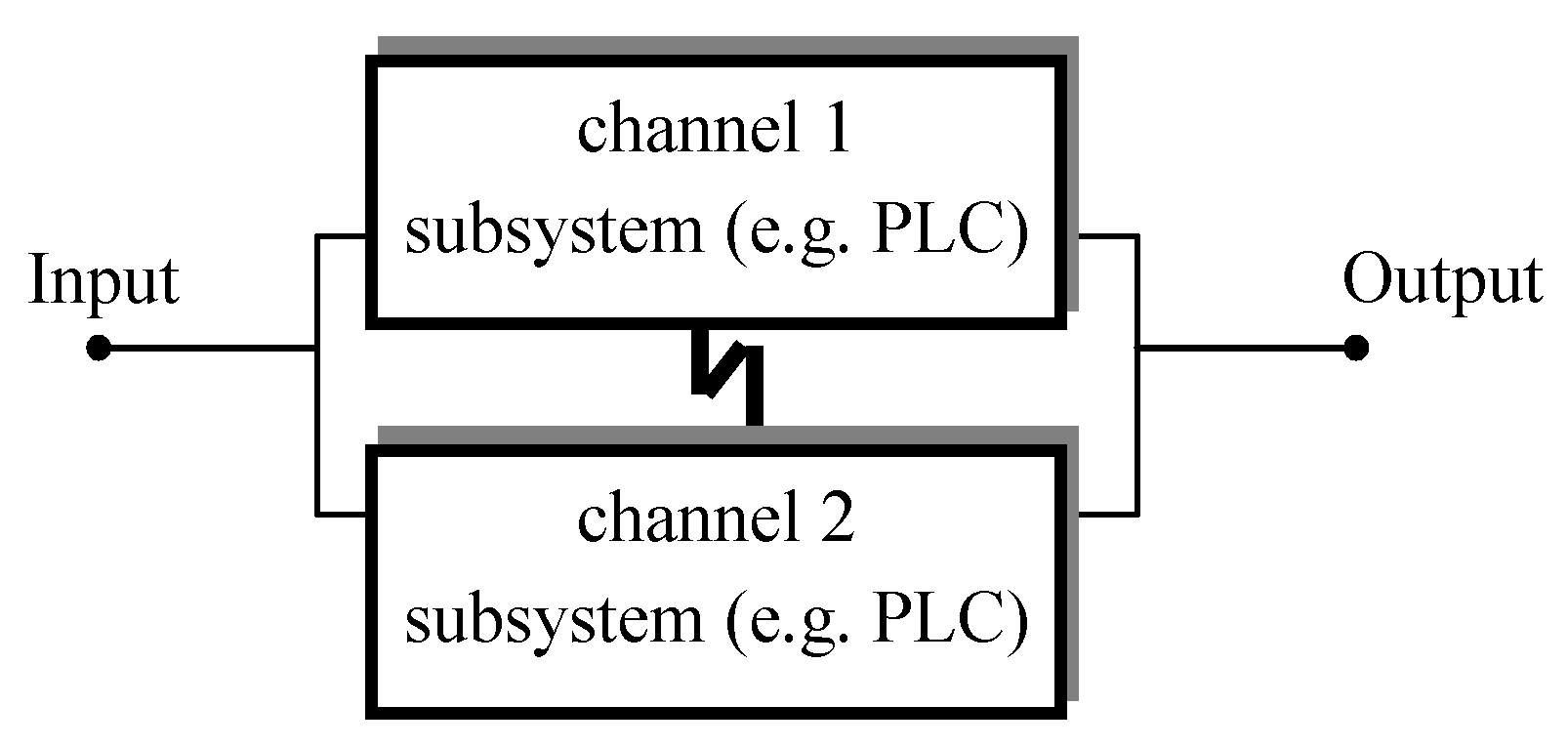

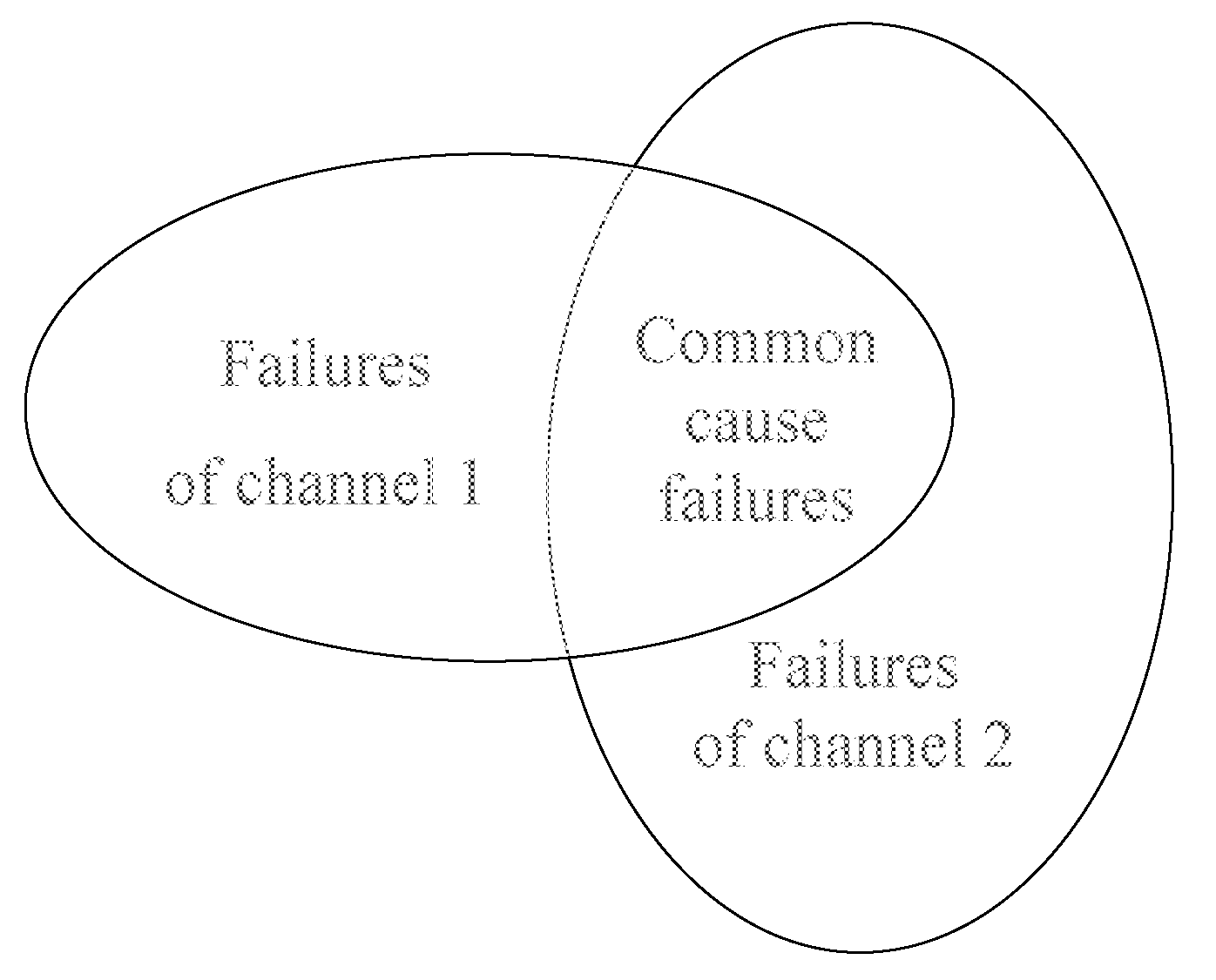

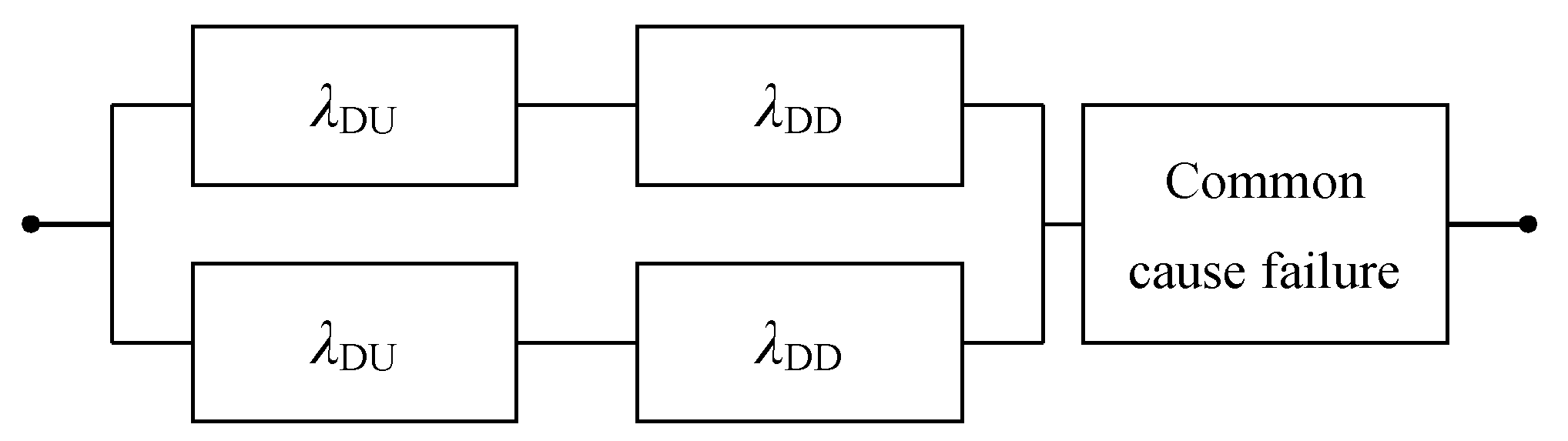

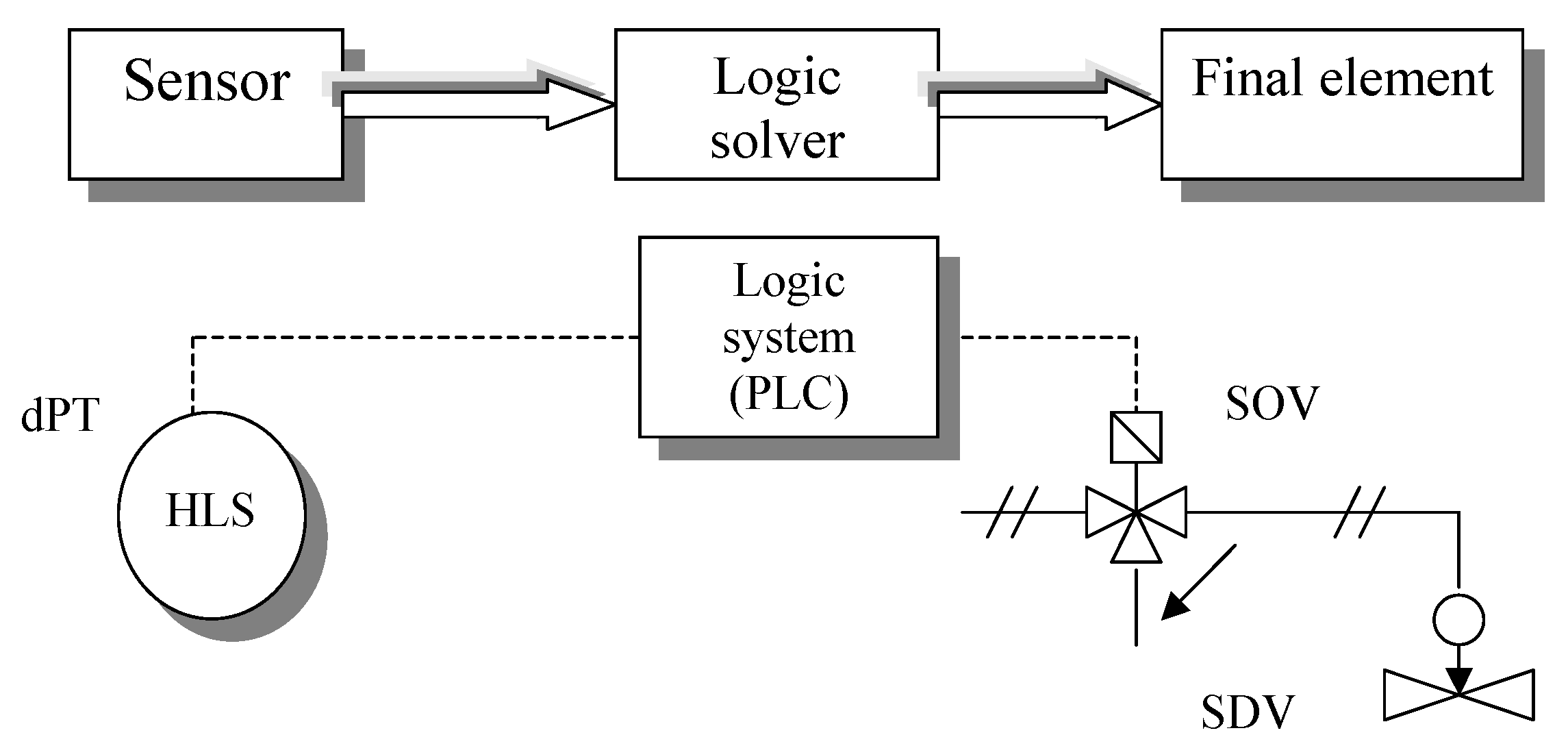

3.1. Probabilistic Modelling of Safety-Related Subsystems

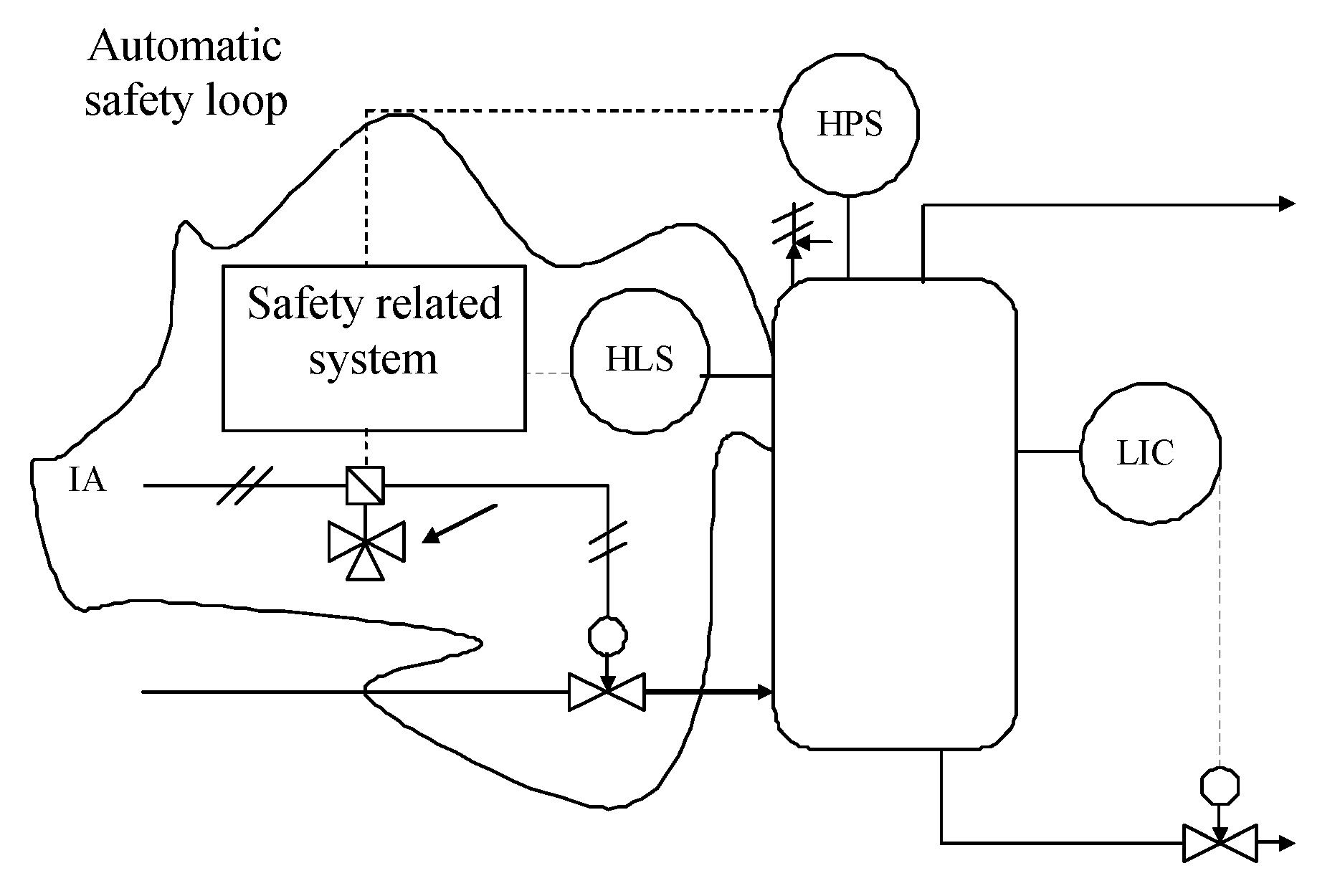

3.2. Examples of Functional Safety Analysis with Cybersecurity

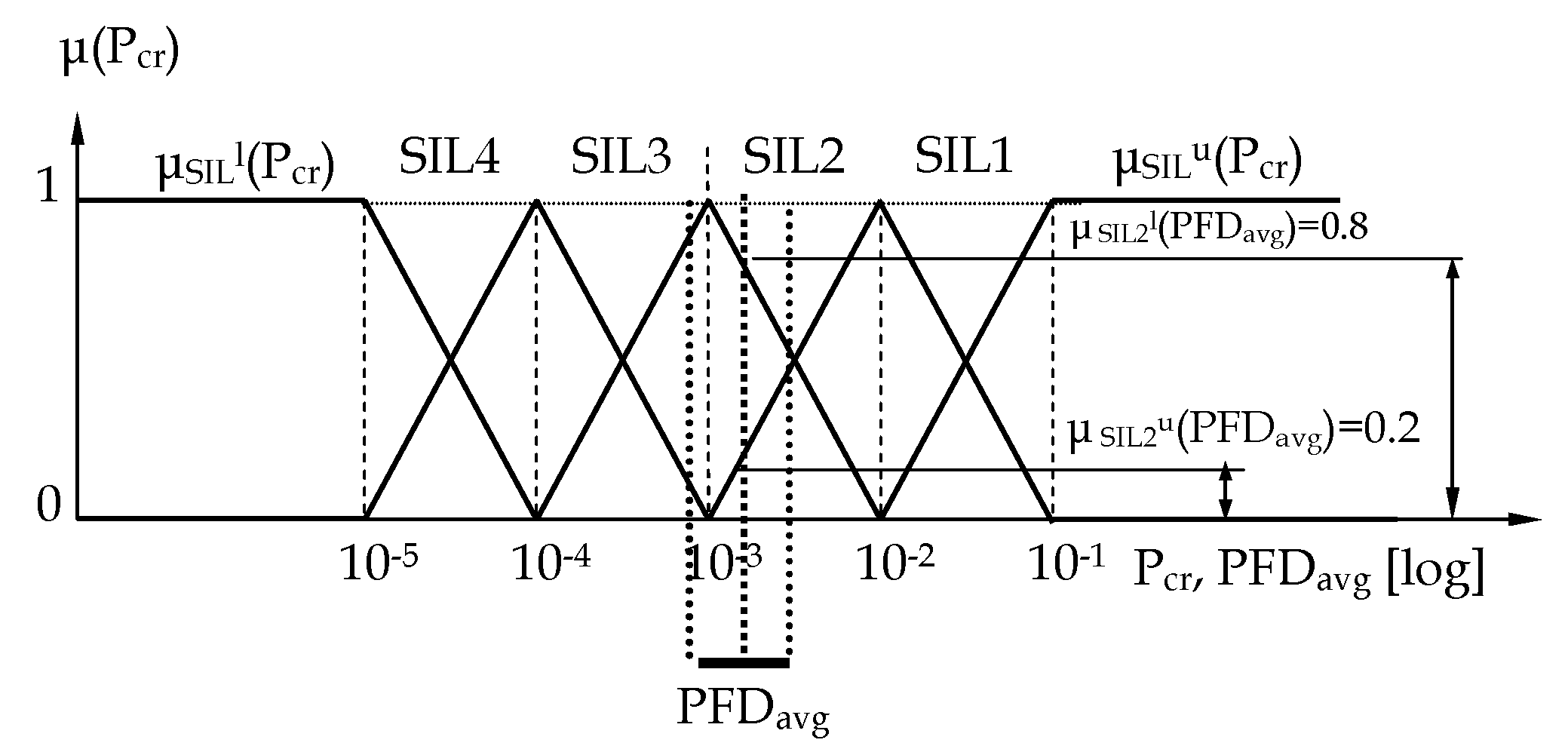

4. Verification of SIL under Uncertainty

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| SIL | PFDavg | PFH [h⁻¹] |
|---|---|---|
| 4 | [10⁻⁵, 10⁻⁴) | [10⁻⁹, 10⁻⁸) |
| 3 | [10⁻⁴, 10⁻³) | [10⁻⁸, 10⁻⁷) |
| 2 | [10⁻³, 10⁻²) | [10⁻⁷, 10⁻⁶) |
| 1 | [10⁻², 10⁻¹) | [10⁻⁶, 10⁻⁵) |
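The bands above can be applied mechanically. The sketch below is a minimal lookup, assuming the half-open intervals exactly as tabulated; the function names are illustrative, and values outside the SIL 1 to SIL 4 bands return `None` rather than being clipped, which is a design choice of this example.

```python
"""Map a PFDavg or PFH value to a SIL using the tabulated bands (sketch)."""
from typing import List, Optional, Tuple

# (SIL, lower bound inclusive, upper bound exclusive)
Band = Tuple[int, float, float]
PFD_BANDS: List[Band] = [(4, 1e-5, 1e-4), (3, 1e-4, 1e-3), (2, 1e-3, 1e-2), (1, 1e-2, 1e-1)]
PFH_BANDS: List[Band] = [(4, 1e-9, 1e-8), (3, 1e-8, 1e-7), (2, 1e-7, 1e-6), (1, 1e-6, 1e-5)]


def _classify(value: float, bands: List[Band]) -> Optional[int]:
    """Return the SIL whose [low, high) band contains value, else None."""
    for sil, low, high in bands:
        if low <= value < high:
            return sil
    return None


def sil_from_pfd(pfd_avg: float) -> Optional[int]:
    """SIL for a low-demand safety function from its PFDavg."""
    return _classify(pfd_avg, PFD_BANDS)


def sil_from_pfh(pfh: float) -> Optional[int]:
    """SIL for a high-demand or continuous safety function from its PFH [1/h]."""
    return _classify(pfh, PFH_BANDS)


if __name__ == "__main__":
    print(sil_from_pfd(6.36e-4))  # 3
    print(sil_from_pfh(5e-9))     # 4
```

Comparing against explicit bounds, rather than taking a logarithm, keeps values that sit exactly on a boundary (such as 10⁻⁴) in the band the table assigns them to.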

| Categories: Fatality → / Frequency ↓ | NA (10⁻³, 10⁻²] Injury | NB (10⁻², 10⁻¹] More Injuries | NC (10⁻¹, 1] Single Fatality | ND (1, 10] Several Fatalities | NE (10, 10²] Many Fatalities |
|---|---|---|---|---|---|
| W3 [a⁻¹], Fd (1, 10] Frequent | a | SIL3x SIL2y; ↓10⁻³ SIL1x | SIL4z SIL3y; ↓10⁻⁴ SIL2x | bz SIL4y; ↓10⁻⁵ SIL3x | bz by bx |
| W2 [a⁻¹], Fc (10⁻¹, 1] Probable | SIL2z SIL1y; ↓10⁻² ax | SIL3z SIL2y; ↓10⁻³ SIL1x | SIL4z SIL3y; ↓10⁻⁴ SIL2x | bz SIL4y; ↓10⁻⁵ SIL3x | |
| W1 [a⁻¹], Fb (10⁻², 10⁻¹] Occasional | SIL1z ay; ↓10⁻¹ | SIL2z SIL1y; ↓10⁻² ax | SIL3x SIL2y; ↓10⁻³ SIL1x | SIL4z SIL3y; ↓10⁻⁴ SIL2x | |
| W0 [a⁻¹], Fa (10⁻³, 10⁻²] Seldom | SIL1z ay; ↓10⁻¹ | SIL2z SIL1y; ↓10⁻² ax | SIL3x SIL2y; ↓10⁻³ SIL1x | | |

| SAL | Level of protection |
|---|---|
| SAL1 | Protection against casual or coincidental violation |
| SAL2 | Protection against intentional violation using simple means with low resources, generic skills, and low motivation |
| SAL3 | Protection against intentional violation using sophisticated means with moderate resources, system-specific skills, and moderate motivation |
| SAL4 | Protection against intentional violation using sophisticated means with extended resources, system-specific skills, and high motivation |

| Evaluation Assurance Level | Security Assurance Level | Cybersecurity |
|---|---|---|
| EAL1 | SAL1 | Low |
| EAL2 | SAL1 | Low |
| EAL3 | SAL2 | Medium |
| EAL4 | SAL2 | Medium |
| EAL5 | SAL3 | High |
| EAL6 | SAL4 | High |
| EAL7 | SAL4 | High |

The degree of risk Rcs and the associated security assurance level SAL

| Severity of the consequences C ↓ / Probability and/or frequency of a cyber-attack → | Low | Medium | High | Very High |
|---|---|---|---|---|
| Catastrophic | medium Rcs, SAL2 | high Rcs, SAL3 | very high Rcs, SAL4 | very high Rcs, SAL4 |
| Critical | medium Rcs, SAL2 | high Rcs, SAL3 | very high Rcs, SAL4 | very high Rcs, SAL4 |
| Marginal | low Rcs, SAL1 | medium Rcs, SAL2 | medium Rcs, SAL2 | high Rcs, SAL3 |
| Minor | low Rcs, SAL1 | low Rcs, SAL1 | medium Rcs, SAL2 | high Rcs, SAL3 |
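A risk matrix of this kind reduces to a two-key lookup. The sketch below transcribes the Rcs/SAL matrix into a dictionary; the lowercase keys and the function name `required_sal` are illustrative assumptions, not terms defined by the paper or by IEC 62443.

```python
"""Risk-matrix lookup for the cybersecurity risk Rcs and the required SAL (sketch)."""
from typing import Tuple

# Column order: probability and/or frequency of a cyber-attack.
LIKELIHOODS = ("low", "medium", "high", "very high")

# Rows: severity of consequences C. Each cell: (degree of risk Rcs, SAL level).
SAL_MATRIX = {
    "catastrophic": [("medium", 2), ("high", 3), ("very high", 4), ("very high", 4)],
    "critical": [("medium", 2), ("high", 3), ("very high", 4), ("very high", 4)],
    "marginal": [("low", 1), ("medium", 2), ("medium", 2), ("high", 3)],
    "minor": [("low", 1), ("low", 1), ("medium", 2), ("high", 3)],
}


def required_sal(severity: str, attack_likelihood: str) -> Tuple[str, str]:
    """Return (degree of risk Rcs, 'SALn') for one cell of the matrix."""
    row = SAL_MATRIX[severity.lower()]
    risk, level = row[LIKELIHOODS.index(attack_likelihood.lower())]
    return risk, f"SAL{level}"


if __name__ == "__main__":
    print(required_sal("critical", "medium"))  # ('high', 'SAL3')
```

Unknown severity or likelihood labels raise `KeyError` or `ValueError`, which is usually preferable to silently defaulting to a low SAL.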

The degree of risk Rsec and the associated evaluation assurance level EAL

| Severity of the consequences C ↓ / Probability and/or frequency of a cyber-attack → | Low | Medium | High | Very High |
|---|---|---|---|---|
| Catastrophic | medium Rsec, EAL3 | high Rsec, EAL5 | very high Rsec, EAL6 | very high Rsec, EAL7 |
| Critical | medium Rsec, EAL2 | medium Rsec, EAL4 | very high Rsec, EAL6 | very high Rsec, EAL6 |
| Marginal | low Rsec, EAL1 | medium Rsec, EAL3 | high Rsec, EAL5 | high Rsec, EAL5 |
| Minor | low Rsec, EAL1 | low Rsec, EAL2 | medium Rsec, EAL4 | medium Rsec, EAL4 |
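The Rsec/EAL matrix can be queried the same way. In this sketch the matrix is transcribed as rows of (risk, EAL number) pairs; the key strings and `required_eal` are hypothetical names chosen for illustration.

```python
"""Risk-matrix lookup for the security risk Rsec and the target EAL (sketch)."""
from typing import Tuple

COLUMNS = ("low", "medium", "high", "very high")  # cyber-attack likelihood

# Rows: severity of consequences C. Each cell: (degree of risk Rsec, EAL number).
EAL_MATRIX = {
    "catastrophic": [("medium", 3), ("high", 5), ("very high", 6), ("very high", 7)],
    "critical": [("medium", 2), ("medium", 4), ("very high", 6), ("very high", 6)],
    "marginal": [("low", 1), ("medium", 3), ("high", 5), ("high", 5)],
    "minor": [("low", 1), ("low", 2), ("medium", 4), ("medium", 4)],
}


def required_eal(severity: str, attack_likelihood: str) -> Tuple[str, str]:
    """Return (degree of risk Rsec, 'EALn') for one cell of the matrix."""
    risk, level = EAL_MATRIX[severity.lower()][COLUMNS.index(attack_likelihood.lower())]
    return risk, f"EAL{level}"


if __name__ == "__main__":
    print(required_eal("catastrophic", "very high"))  # ('very high', 'EAL7')
```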

The degree of risk Rfs and the associated safety integrity level SIL

| Severity of the consequences C ↓ / Probability and/or frequency of failure → | Low | Medium | High | Very High |
|---|---|---|---|---|
| Catastrophic | medium Rfs, SIL2 | high Rfs, SIL3 | very high Rfs, SIL4 | very high Rfs, b |
| Critical | medium Rfs, SIL2 | high Rfs, SIL3 | very high Rfs, SIL4 | very high Rfs, SIL4 |
| Marginal | low Rfs, SIL1 | medium Rfs, SIL2 | high Rfs, SIL3 | high Rfs, SIL3 |
| Minor | very low Rfs, a | low Rfs, SIL1 | medium Rfs, SIL2 | medium Rfs, SIL2 |
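The functional-safety matrix differs from the security ones in that two cells carry the labels "a" and "b" instead of a SIL. The sketch keeps them as opaque labels; reading them as in IEC 61511-style risk graphs ("a": no special safety requirement, "b": a single SIF is not sufficient) is an assumption. `required_sil` and `is_sil_target` are hypothetical helper names.

```python
"""Risk-matrix lookup for the functional-safety risk Rfs and its target (sketch)."""
from typing import Dict, Tuple

# severity -> failure likelihood -> (degree of risk Rfs, target label)
RFS_MATRIX: Dict[str, Dict[str, Tuple[str, str]]] = {
    "catastrophic": {"low": ("medium", "SIL2"), "medium": ("high", "SIL3"),
                     "high": ("very high", "SIL4"), "very high": ("very high", "b")},
    "critical": {"low": ("medium", "SIL2"), "medium": ("high", "SIL3"),
                 "high": ("very high", "SIL4"), "very high": ("very high", "SIL4")},
    "marginal": {"low": ("low", "SIL1"), "medium": ("medium", "SIL2"),
                 "high": ("high", "SIL3"), "very high": ("high", "SIL3")},
    "minor": {"low": ("very low", "a"), "medium": ("low", "SIL1"),
              "high": ("medium", "SIL2"), "very high": ("medium", "SIL2")},
}


def required_sil(severity: str, failure_likelihood: str) -> Tuple[str, str]:
    """Return (degree of risk Rfs, target) for one cell of the matrix."""
    return RFS_MATRIX[severity.lower()][failure_likelihood.lower()]


def is_sil_target(target: str) -> bool:
    """True when the cell assigns a SIL1..SIL4 target rather than 'a' or 'b'."""
    return target.startswith("SIL")


if __name__ == "__main__":
    print(required_sil("marginal", "high"))  # ('high', 'SIL3')
```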

| Subsystem | dPT | I/I | PLC | SDV | SOV |
|---|---|---|---|---|---|
| λDU [h⁻¹] | 2.24 × 10⁻⁷ | 1.1 × 10⁻⁷ | 5.2 × 10⁻¹¹ | 1 × 10⁻⁷ | 1.14 × 10⁻⁸ |
| TI [y] | 1 | 1 | 1 | 1 | 1 |

| Subsystem | PFDavg (β = 0) | PFDavg (β = 0.05) | PFDavg (β = 0.1) |
|---|---|---|---|
| dPT (1oo2) | 1.28 × 10⁻⁶ | 5.02 × 10⁻⁵ | 9.92 × 10⁻⁵ |
| I/I (1oo2) | 3.09 × 10⁻⁷ | 2.44 × 10⁻⁵ | 4.84 × 10⁻⁵ |
| PLC | 2.28 × 10⁻⁷ | 2.28 × 10⁻⁷ | 2.28 × 10⁻⁷ |
| SDV | 4.38 × 10⁻⁴ | 4.38 × 10⁻⁴ | 4.38 × 10⁻⁴ |
| SOV | 4.99 × 10⁻⁵ | 4.99 × 10⁻⁵ | 4.99 × 10⁻⁵ |
| System | 4.90 × 10⁻⁴ | 5.63 × 10⁻⁴ | 6.36 × 10⁻⁴ |
| SIL | 3 | 3 | 3 |
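The subsystem values above are consistent with the standard simplified low-demand formulas and a beta-factor common cause model. The sketch below recomputes them, assuming TI = 1 year = 8760 h, negligible repair time, PFDavg = λDU·TI/2 for single channels, PFDavg = ((1 − β)·λDU·TI)²/3 + β·λDU·TI/2 for the 1oo2 subsystems, and a series system whose PFDavg is the sum of the subsystem values (rare-event approximation). The dictionary layout and function names are illustrative.

```python
"""Recompute subsystem and system PFDavg for several beta values (sketch)."""
from typing import Dict

HOURS_PER_YEAR = 8760.0

# Subsystem -> (lambda_DU [1/h], architecture), from the input data table.
SUBSYSTEMS = {
    "dPT": (2.24e-7, "1oo2"),
    "I/I": (1.1e-7, "1oo2"),
    "PLC": (5.2e-11, "1oo1"),
    "SDV": (1e-7, "1oo1"),
    "SOV": (1.14e-8, "1oo1"),
}


def pfd_1oo1(lam_du: float, ti_h: float) -> float:
    """Single channel: average unavailability over the proof-test interval."""
    return lam_du * ti_h / 2.0


def pfd_1oo2(lam_du: float, ti_h: float, beta: float) -> float:
    """Redundant pair: independent double failure plus beta-factor CCF term."""
    independent = ((1.0 - beta) * lam_du * ti_h) ** 2 / 3.0
    common_cause = beta * lam_du * ti_h / 2.0  # CCF disables both channels like a 1oo1
    return independent + common_cause


def system_pfd(beta: float, ti_years: float = 1.0) -> Dict[str, float]:
    """PFDavg per subsystem plus their series sum under key 'System'."""
    ti_h = ti_years * HOURS_PER_YEAR
    result: Dict[str, float] = {}
    for name, (lam_du, arch) in SUBSYSTEMS.items():
        if arch == "1oo2":
            result[name] = pfd_1oo2(lam_du, ti_h, beta)
        else:
            result[name] = pfd_1oo1(lam_du, ti_h)
    result["System"] = sum(result.values())  # summed before 'System' is added
    return result


if __name__ == "__main__":
    for beta in (0.0, 0.05, 0.1):
        row = system_pfd(beta)
        print(f"beta={beta}: " + ", ".join(f"{k}={v:.2e}" for k, v in row.items()))
```

The output makes the sensitivity to β visible: the 1oo2 subsystems are dominated by the common cause term as soon as β > 0, while the single-channel SDV remains the largest contributor in every column.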

| Parameter | PS | TS | DI | CPU | DO | V |
|---|---|---|---|---|---|---|
| λ [h⁻¹] | 4 × 10⁻⁶ | 2 × 10⁻⁶ | 1.2 × 10⁻⁶ | 2.2 × 10⁻⁶ | 6.5 × 10⁻⁷ | 1.6 × 10⁻⁶ |
| FS [%] | 50 | 50 | 50 | 50 | 50 | 50 |
| λD [h⁻¹] | 2 × 10⁻⁶ | 1 × 10⁻⁶ | 5.46 × 10⁻⁷ | 1.04 × 10⁻⁶ | 3.1 × 10⁻⁷ | 6.5 × 10⁻⁷ |
| λS [h⁻¹] | 2 × 10⁻⁶ | 1 × 10⁻⁶ | 5.46 × 10⁻⁷ | 1.04 × 10⁻⁶ | 3.1 × 10⁻⁷ | 6.5 × 10⁻⁷ |
| DC [%] | 90 | 90 | 90 | 90 | 90 | 90 |
| λDD [h⁻¹] | 1.8 × 10⁻⁶ | 9 × 10⁻⁷ | 4.91 × 10⁻⁷ | 9.38 × 10⁻⁷ | 2.79 × 10⁻⁷ | 5.85 × 10⁻⁷ |
| λDU [h⁻¹] | 2 × 10⁻⁷ | 1 × 10⁻⁷ | 5.46 × 10⁻⁸ | 1.04 × 10⁻⁷ | 3.1 × 10⁻⁸ | 6.5 × 10⁻⁸ |
| λSD [h⁻¹] | 1.8 × 10⁻⁶ | 9 × 10⁻⁷ | 4.91 × 10⁻⁷ | 9.38 × 10⁻⁷ | 2.79 × 10⁻⁷ | 5.85 × 10⁻⁷ |
| λSU [h⁻¹] | 2 × 10⁻⁷ | 1 × 10⁻⁷ | 5.46 × 10⁻⁸ | 1.04 × 10⁻⁷ | 3.1 × 10⁻⁸ | 6.5 × 10⁻⁸ |
| MTTR [h] | 8 | 8 | 8 | 8 | 8 | 8 |
| TI [y] | 1 | 1 | 1 | 1 | 1 | 1 |
| β | 0.02 | 0.02 | 0.02 | 0.02 | 0.02 | 0.02 |
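The detected and undetected rates in the table follow from the diagnostic coverage: with DC = 90 %, λDD = DC·λD and λDU = (1 − DC)·λD, and the safe rate is split the same way. The sketch below performs that split starting from the tabulated λD and λS; the `FailureRates` dataclass and `split_rates` function are hypothetical names for illustration.

```python
"""Split dangerous and safe failure rates by diagnostic coverage (sketch)."""
from dataclasses import dataclass


@dataclass
class FailureRates:
    lam_dd: float  # dangerous detected [1/h]
    lam_du: float  # dangerous undetected [1/h]
    lam_sd: float  # safe detected [1/h]
    lam_su: float  # safe undetected [1/h]


def split_rates(lam_d: float, lam_s: float, dc: float) -> FailureRates:
    """Apply diagnostic coverage dc (0..1) to the dangerous and safe rates."""
    if not 0.0 <= dc <= 1.0:
        raise ValueError("diagnostic coverage must be in [0, 1]")
    return FailureRates(
        lam_dd=dc * lam_d,
        lam_du=(1.0 - dc) * lam_d,
        lam_sd=dc * lam_s,
        lam_su=(1.0 - dc) * lam_s,
    )


if __name__ == "__main__":
    ps = split_rates(lam_d=2e-6, lam_s=2e-6, dc=0.90)  # PS column
    print(ps)
```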

| Parameter | PS | TS | DI | CPU | DO | V |
|---|---|---|---|---|---|---|
| PFDavg 1oo1 | 8.78 × 10⁻³ | 4.39 × 10⁻³ | 2.39 × 10⁻³ | 4.57 × 10⁻³ | 1.36 × 10⁻³ | 2.85 × 10⁻³ |
| PFDavg 1oo2 | 2.76 × 10⁻⁴ | 1.13 × 10⁻⁴ | 5.53 × 10⁻⁵ | 1.19 × 10⁻⁴ | 2.96 × 10⁻⁵ | 6.76 × 10⁻⁵ |
| PFDavg 2oo3 | 4.77 × 10⁻⁴ | 1.63 × 10⁻⁴ | 7.03 × 10⁻⁵ | 1.73 × 10⁻⁴ | 3.45 × 10⁻⁵ | 8.88 × 10⁻⁵ |
| SIL (element) | 3 | 3 | 4 | 3 | 4 | 4 |
| PFDavg (system) | 9.7 × 10⁻⁴ | | | | | |
| SIL (system) | 3 | | | | | |
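Two rows of this table can be cross-checked with a few lines of arithmetic. The tabulated PFDavg 1oo1 values agree, to within rounding, with λD·(TI/2 + MTTR), i.e. treating every dangerous failure as persisting until the proof test and then adding the repair time; this relation is inferred from the numbers and is assumed here, not stated in the excerpt. Classifying the tabulated 1oo2 values with the SIL bands reproduces the SIL (element) row. Names below are illustrative.

```python
"""Cross-check the element table: 1oo1 PFDavg and element SIL bands (sketch)."""
from typing import Dict

TI_H = 8760.0  # proof-test interval: 1 year in hours
MTTR_H = 8.0   # mean time to restoration [h]

LAMBDA_D: Dict[str, float] = {
    "PS": 2e-6, "TS": 1e-6, "DI": 5.46e-7, "CPU": 1.04e-6, "DO": 3.1e-7, "V": 6.5e-7,
}
PFD_1OO2: Dict[str, float] = {
    "PS": 2.76e-4, "TS": 1.13e-4, "DI": 5.53e-5, "CPU": 1.19e-4, "DO": 2.96e-5, "V": 6.76e-5,
}

# Low-demand PFDavg bands: (SIL, lower bound inclusive, upper bound exclusive)
BANDS = [(4, 1e-5, 1e-4), (3, 1e-4, 1e-3), (2, 1e-3, 1e-2), (1, 1e-2, 1e-1)]


def pfd_1oo1(lam_d: float) -> float:
    """Assumed relation: all dangerous failures undetected until the proof test."""
    return lam_d * (TI_H / 2.0 + MTTR_H)


def sil_from_pfd(pfd: float) -> int:
    """SIL band of pfd; 0 if outside the SIL 1..4 bands (convention of this sketch)."""
    for sil, low, high in BANDS:
        if low <= pfd < high:
            return sil
    return 0


if __name__ == "__main__":
    for name, lam_d in LAMBDA_D.items():
        print(f"{name}: PFDavg_1oo1={pfd_1oo1(lam_d):.2e}, SIL(1oo2)={sil_from_pfd(PFD_1OO2[name])}")
```

Because the dangerous detected share is 90 % here, a model that credits diagnostics (repairing detected failures within MTTR) would give 1oo1 values roughly an order of magnitude lower; the simpler relation above is the conservative reading.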


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Śliwiński, M.; Piesik, E. Designing Control and Protection Systems with Regard to Integrated Functional Safety and Cybersecurity Aspects. Energies 2021, 14, 2227. https://doi.org/10.3390/en14082227
