Leveraging Label Information in a Knowledge-Driven Approach for Rolling-Element Bearings Remaining Useful Life Prediction

Abstract

1. Introduction

- A new autoencoder (AE) is designed that can seed label information into its hidden layers without affecting the learning inputs or the reconstruction process, while also ensuring knowledge transfer.

- This AE is stacked and strengthened with a denoising scheme to ensure more robust feature extraction and a more homogeneous mixture of labels and feature mappings.

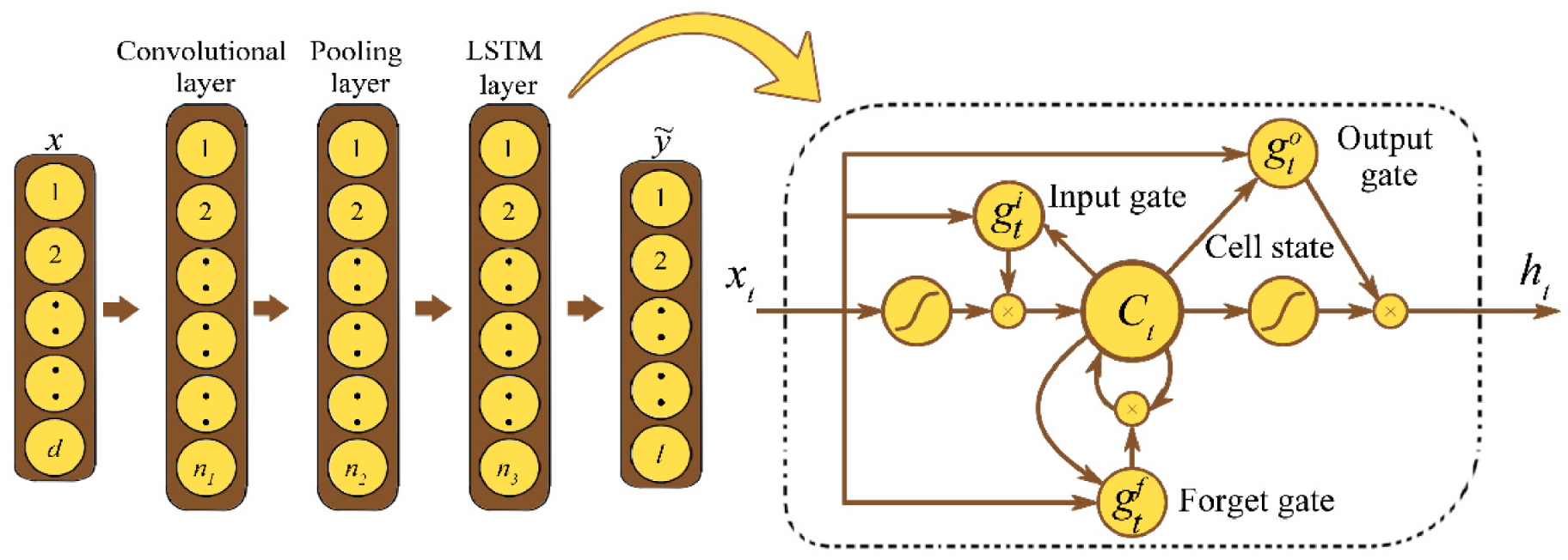

- Because vibration signals are sequential and non-stationary, a convolutional LSTM (C-LSTM) has been designed to provide both time-varying adaptive learning and accurate feature extraction, similar to the work done in [26].

- Two main datasets, IMS and WTHS, are used in an attempt to improve generalization by transferring knowledge between them within the same learning framework.

- Unlike the previously mentioned works, which mostly address a single prediction problem (either HI or HS), this work investigates both HI and HS.

- We also use an exponential HI degradation function, which we found to be more compatible with the extracted degradation features than a linear one.

- For HS splitting, we employ a Gaussian mixture model (GMM)-based silhouette coefficient.
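
The difference between an exponential and a linear HI target can be illustrated with the short NumPy sketch below. It is not the paper's definition: the 1-to-0 scaling over a normalized lifetime, the exponential form with the hypothetical shape parameter `a`, the R² compatibility check, and the synthetic feature are all assumptions chosen for illustration.

```python
import numpy as np


def linear_hi(n_samples):
    """Linear HI target: 1.0 at the first sample, 0.0 at failure."""
    return 1.0 - np.linspace(0.0, 1.0, n_samples)


def exponential_hi(n_samples, a=5.0):
    """Exponential HI target (assumed form): 1.0 at the start, 0.0 at failure.

    It stays close to 1.0 early in life and falls quickly near failure;
    a larger (hypothetical) shape parameter `a` > 0 gives a sharper knee.
    """
    t = np.linspace(0.0, 1.0, n_samples)
    return (np.exp(a) - np.exp(a * t)) / (np.exp(a) - 1.0)


def r2_linear_fit(feature, target):
    """Coefficient of determination of a least-squares line from feature to target."""
    A = np.column_stack([feature, np.ones_like(feature)])
    coef, *_ = np.linalg.lstsq(A, target, rcond=None)
    residual = target - A @ coef
    return 1.0 - residual.var() / target.var()


if __name__ == "__main__":
    n = 500
    # Hypothetical degradation feature that grows exponentially toward failure
    feature = np.exp(5.0 * np.linspace(0.0, 1.0, n))
    print("R^2 vs linear HI:     ", r2_linear_fit(feature, linear_hi(n)))
    print("R^2 vs exponential HI:", r2_linear_fit(feature, exponential_hi(n, a=5.0)))
```

The synthetic feature is built to match the exponential target exactly, so the comparison only illustrates what "compatibility" between an HI target and a feature means; it is not evidence about the IMS or WTHS data.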

2. Materials and Methods

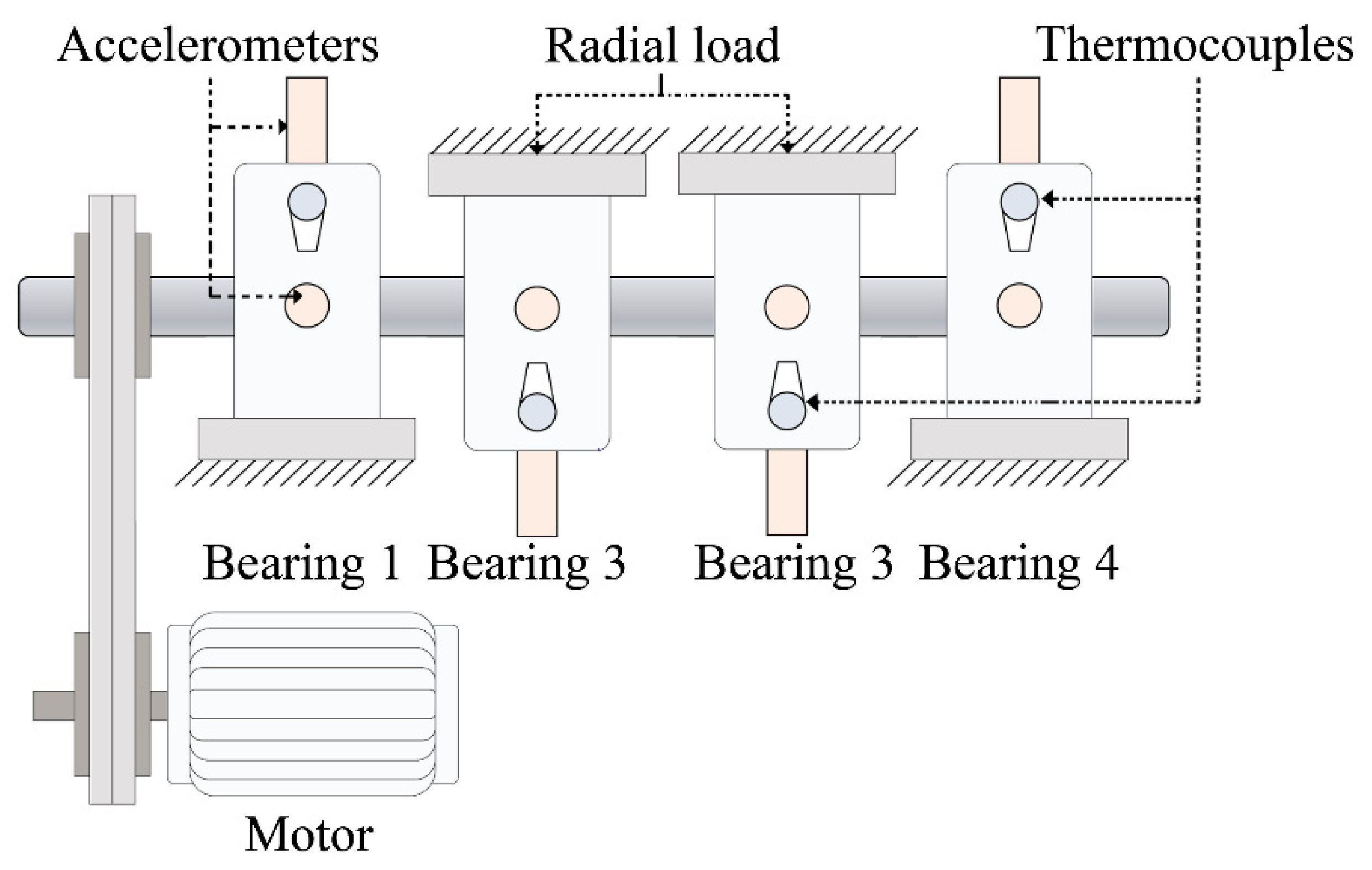

2.1. IMS Bearing Data

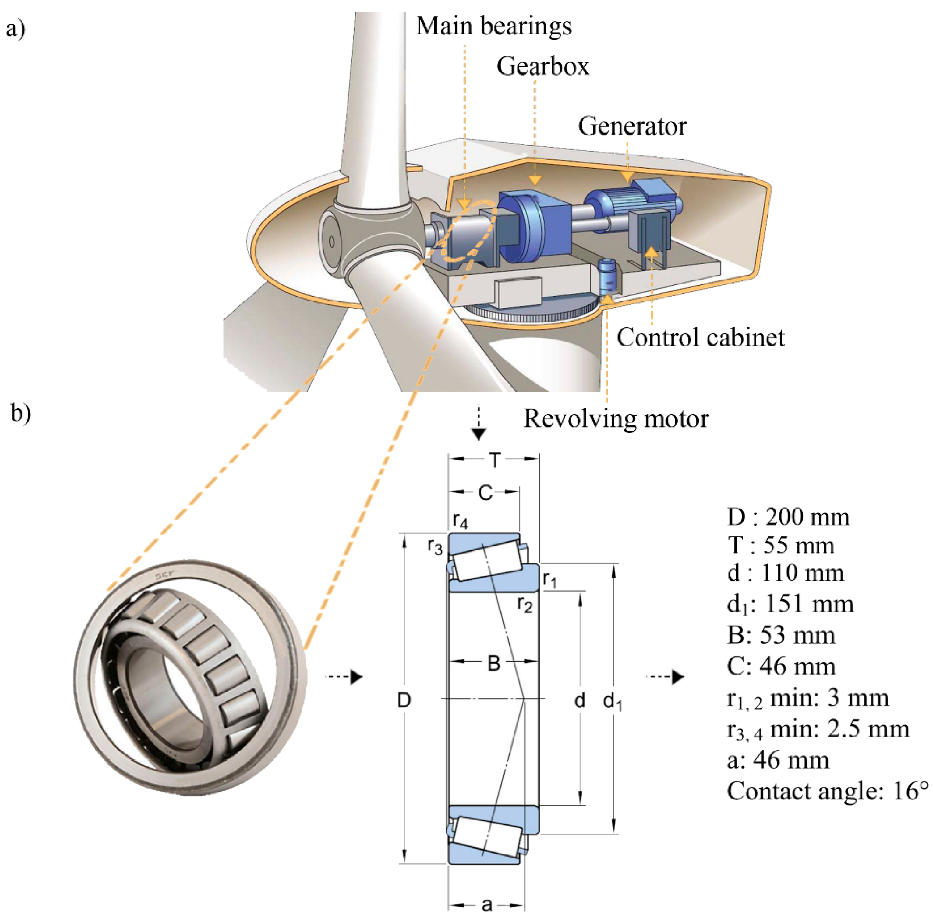

2.2. WTHS Bearing Data

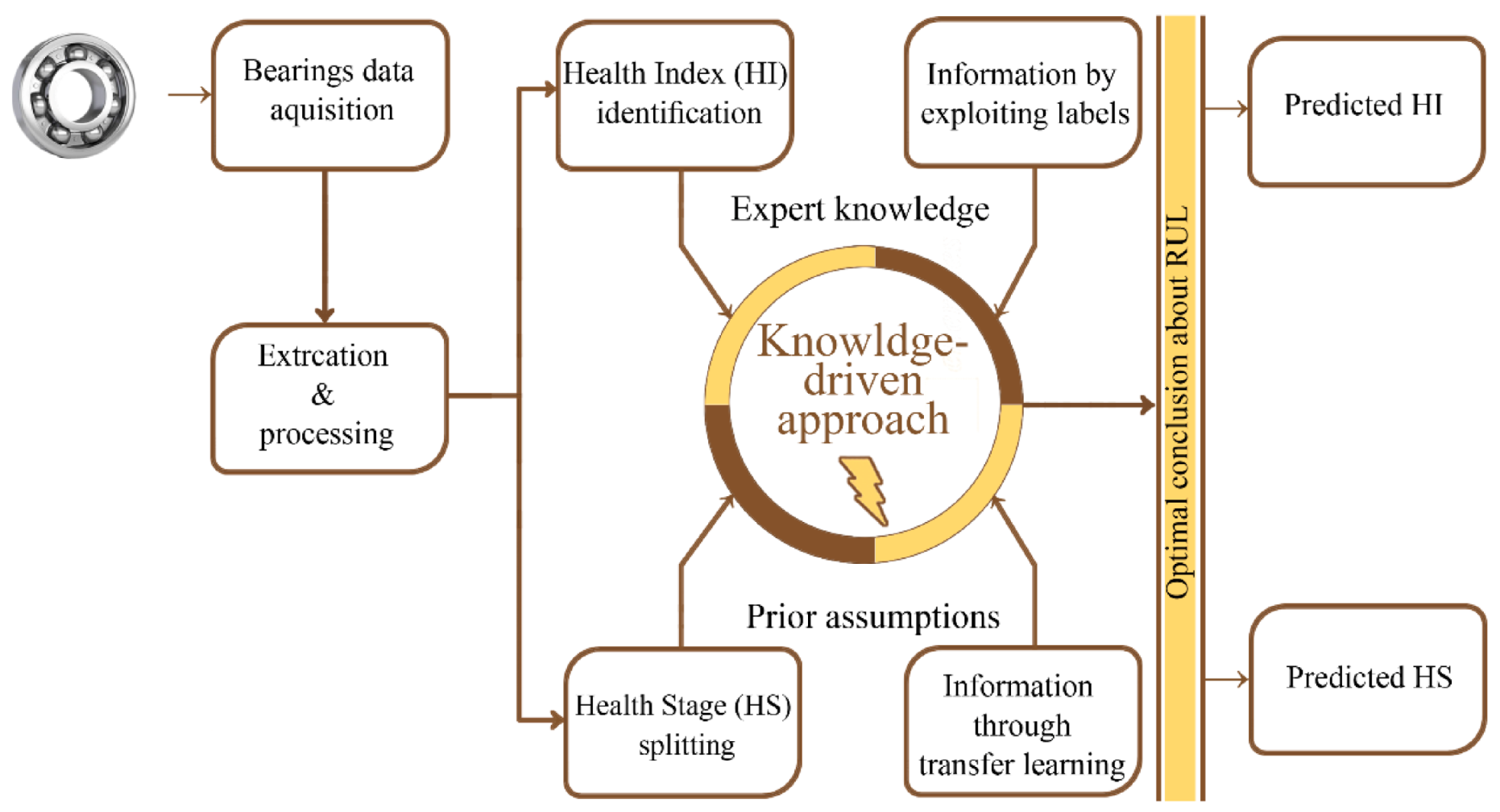

2.3. Proposed Knowledge-Driven Methodology

2.3.1. Data Preprocessing

2.3.2. HI Identification

2.3.3. HS Splitting

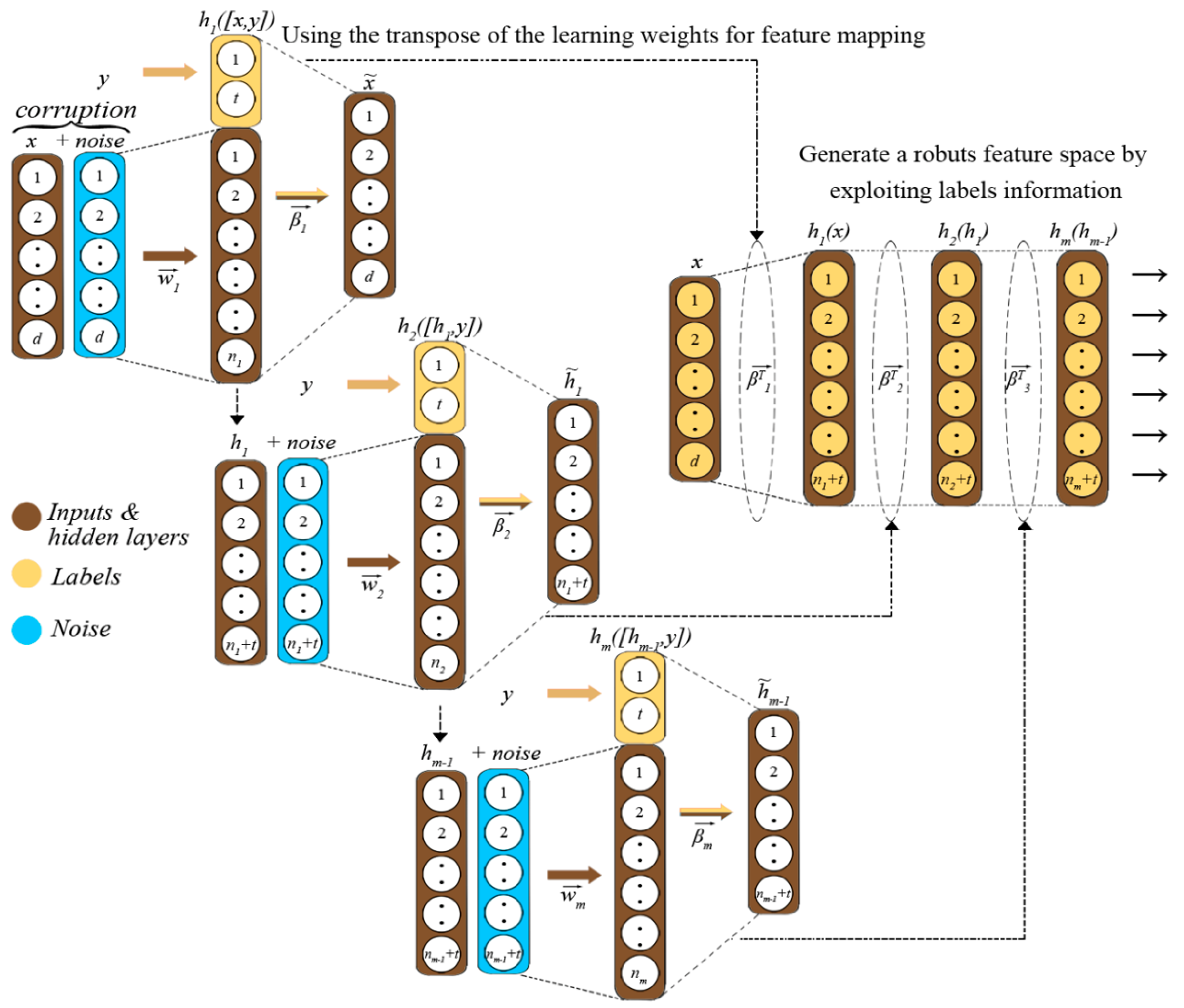
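
One possible reading of the GMM-based silhouette criterion for HS splitting is sketched below with NumPy only: a diagonal-covariance GMM is fitted by expectation-maximization for several candidate numbers of health stages, each hard clustering is scored with Rousseeuw's mean silhouette coefficient, and the best-scoring number of stages is kept. Using the silhouette to select the number of stages, the farthest-point initialization, the candidate range, and all function names are assumptions; `X` stands for whatever per-sample degradation features are being clustered.

```python
import numpy as np


def fit_gmm_diag(X, k, n_iter=200, seed=0, eps=1e-6):
    """Fit a diagonal-covariance GMM with EM and return hard cluster labels."""
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    # Farthest-point initialisation of the component means
    idx = [int(rng.integers(n))]
    for _ in range(1, k):
        d2 = ((X[:, None, :] - X[idx][None]) ** 2).sum(-1).min(axis=1)
        idx.append(int(d2.argmax()))
    means = X[idx].copy()
    variances = np.tile(X.var(axis=0) + eps, (k, 1))
    weights = np.full(k, 1.0 / k)
    for _ in range(n_iter):
        # E-step: responsibilities from per-component log-densities
        diff = X[:, None, :] - means[None]
        log_p = (np.log(weights)[None]
                 - 0.5 * (diff ** 2 / variances[None]).sum(-1)
                 - 0.5 * np.log(2.0 * np.pi * variances).sum(-1)[None])
        log_p -= log_p.max(axis=1, keepdims=True)
        resp = np.exp(log_p)
        resp /= resp.sum(axis=1, keepdims=True)
        # M-step: update mixing weights, means and diagonal variances
        nk = resp.sum(axis=0) + eps
        weights = nk / n
        means = (resp.T @ X) / nk[:, None]
        diff = X[:, None, :] - means[None]
        variances = (resp[:, :, None] * diff ** 2).sum(axis=0) / nk[:, None] + eps
    return resp.argmax(axis=1)


def silhouette(X, labels):
    """Mean silhouette coefficient (Rousseeuw, 1987) with Euclidean distances."""
    clusters = np.unique(labels)
    if len(clusters) < 2:
        return -1.0  # degenerate clustering: treated as the worst possible score
    D = np.sqrt(((X[:, None, :] - X[None]) ** 2).sum(-1))
    s = np.zeros(len(X))
    for i in range(len(X)):
        own = labels == labels[i]
        if own.sum() == 1:
            continue  # singleton cluster: s(i) = 0 by convention
        # D[i, i] = 0 adds nothing, so divide by the other members only
        a = D[i, own].sum() / (own.sum() - 1)
        b = min(D[i, labels == c].mean() for c in clusters if c != labels[i])
        denom = max(a, b)
        s[i] = (b - a) / denom if denom > 0 else 0.0
    return float(s.mean())


def select_health_stages(X, candidates=(2, 3, 4, 5), seed=0):
    """Pick the number of health stages whose GMM clustering has the best silhouette."""
    scores = {k: silhouette(X, fit_gmm_diag(X, k, seed=seed)) for k in candidates}
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Hypothetical 2-D features drawn around three well-separated stage centres
    centres = np.array([[0.0, 0.0], [5.0, 5.0], [10.0, 0.0]])
    X = np.vstack([c + 0.3 * rng.standard_normal((50, 2)) for c in centres])
    best, scores = select_health_stages(X)
    print("selected number of stages:", best)
    print(scores)
```

A full HS split would typically also keep the stages ordered in time; this sketch only shows the clustering and scoring part.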

2.3.4. Denoising Autoencoder for Label Embedding

- Generate noise from any distribution with a specified magnitude and rate, and use it to corrupt the inputs, as in Equation (5).

- Activate the hidden layer with any activation transfer function so that it holds both the ordinary full-rank mapping of the inputs and the seeded labels, as given by Equation (6), in which the input weights and biases define the ordinary mapping.

- Determine the reconstruction weights from the original inputs and the new feature mapping, as described by Equation (7).

- Repeat the learning process, taking the hidden layer of each autoencoder as the input of the next one, as given by Equation (8), in which each autoencoder in the stack is identified by its index.

- Once training is finished, a fully trained network for robust feature mapping can be constructed using the transpose of the output weights, as in Equation (9).
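
The five steps above can be sketched in NumPy as an extreme-learning-machine-style autoencoder, where the input weights are random and the reconstruction weights are solved in closed form. Equations (5) to (9) are not reproduced in this section, so the masked Gaussian corruption, the tanh activation, appending the labels as extra hidden units, the ridge-regularized least-squares solution, and all function and parameter names are assumptions.

```python
import numpy as np


def label_seeded_dae_layer(X, Y, n_hidden, noise_std=0.1, corrupt_rate=0.3,
                           reg=1e-3, rng=None):
    """Train one label-seeded denoising AE layer (assumed ELM-style form).

    X: (n_samples, n_inputs) clean inputs; Y: (n_samples, n_labels) seeded labels.
    Returns reconstruction weights beta of shape (n_hidden + n_labels, n_inputs).
    """
    rng = np.random.default_rng(rng)
    n_inputs = X.shape[1]
    # Step 1 (assumed Eq. 5): corrupt a random fraction of entries with Gaussian noise
    mask = rng.random(X.shape) < corrupt_rate
    X_noisy = X + mask * rng.normal(0.0, noise_std, size=X.shape)
    # Step 2 (assumed Eq. 6): random input weights/biases, tanh, labels appended
    W = rng.normal(size=(n_inputs, n_hidden))
    b = rng.normal(size=n_hidden)
    H = np.hstack([np.tanh(X_noisy @ W + b), Y])
    # Step 3 (assumed Eq. 7): ridge least squares so that H @ beta rebuilds the clean X
    beta = np.linalg.solve(H.T @ H + reg * np.eye(H.shape[1]), H.T @ X)
    return beta


def encode(X, beta):
    """Step 5 (assumed Eq. 9): feature mapping with the transposed output weights."""
    return np.tanh(X @ beta.T)


def train_stack(X, Y, layer_sizes, seed=0, **layer_kwargs):
    """Step 4 (assumed Eq. 8): each layer's encoded output feeds the next layer."""
    betas, inputs = [], X
    for k, n_hidden in enumerate(layer_sizes):
        beta = label_seeded_dae_layer(inputs, Y, n_hidden, rng=seed + k, **layer_kwargs)
        betas.append(beta)
        inputs = encode(inputs, beta)
    return betas


def deep_features(X, betas):
    """Map new, unlabeled samples through the trained stack."""
    for beta in betas:
        X = encode(X, beta)
    return X


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(200, 16))
    lab = (X[:, 0] > 0).astype(float)[:, None]
    Y = np.hstack([lab, 1.0 - lab])  # hypothetical two-class one-hot labels
    betas = train_stack(X, Y, layer_sizes=[32, 8])
    print(deep_features(X, betas).shape)
```

In this sketch the labels enter only through the hidden layer used to solve for `beta`, so the encoder yields one extra feature per seeded label column while requiring no labels when new data are encoded.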

2.3.5. Data-Driven Network and Transfer Learning
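
The C-LSTM named in the contributions pairs convolutional feature extraction with recurrent modeling, in the spirit of the CNN-LSTM of [26]. A minimal NumPy forward pass of such a network is sketched below, assuming a valid 1-D convolution over each time step's feature vector, one LSTM layer, and a sigmoid output for a normalized HI. The layer sizes, random initialization, and the class name `CLSTMSketch` are hypothetical, and the weights are untrained.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class CLSTMSketch:
    """Forward pass of a small CNN-LSTM regressor with random, untrained weights.

    A valid 1-D convolution (ReLU) extracts local patterns from each time step's
    feature vector; an LSTM tracks how those patterns evolve over time, and a
    sigmoid output unit maps the last hidden state to an HI estimate in (0, 1).
    """

    def __init__(self, n_features, n_filters=8, kernel=3, n_hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        self.kernel, self.n_hidden = kernel, n_hidden
        self.conv_w = rng.normal(0.0, 0.1, (n_filters, kernel))
        self.conv_b = np.zeros(n_filters)
        conv_out = n_filters * (n_features - kernel + 1)
        # Input, forget, candidate and output gate weights stacked in one matrix
        self.W = rng.normal(0.0, 0.1, (4 * n_hidden, conv_out + n_hidden))
        self.b = np.zeros(4 * n_hidden)
        self.w_out = rng.normal(0.0, 0.1, n_hidden)

    def conv(self, x):
        """Correlate one feature vector with every filter, apply ReLU, flatten."""
        windows = np.lib.stride_tricks.sliding_window_view(x, self.kernel)
        return np.maximum(0.0, windows @ self.conv_w.T + self.conv_b).ravel()

    def forward(self, seq):
        """seq: (T, n_features) array -> HI estimate after the last time step."""
        h = np.zeros(self.n_hidden)
        c = np.zeros(self.n_hidden)
        for x_t in seq:
            z = self.W @ np.concatenate([self.conv(x_t), h]) + self.b
            i, f, g, o = np.split(z, 4)
            c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
            h = sigmoid(o) * np.tanh(c)
        return float(sigmoid(self.w_out @ h))


if __name__ == "__main__":
    model = CLSTMSketch(n_features=10)
    seq = np.random.default_rng(0).normal(size=(5, 10))  # 5 time steps, 10 features
    print(model.forward(seq))
```

Training, and the knowledge transfer between the IMS and WTHS datasets, are outside the scope of this sketch, which only shows how the convolutional and recurrent parts are chained.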

3. Results and Discussion

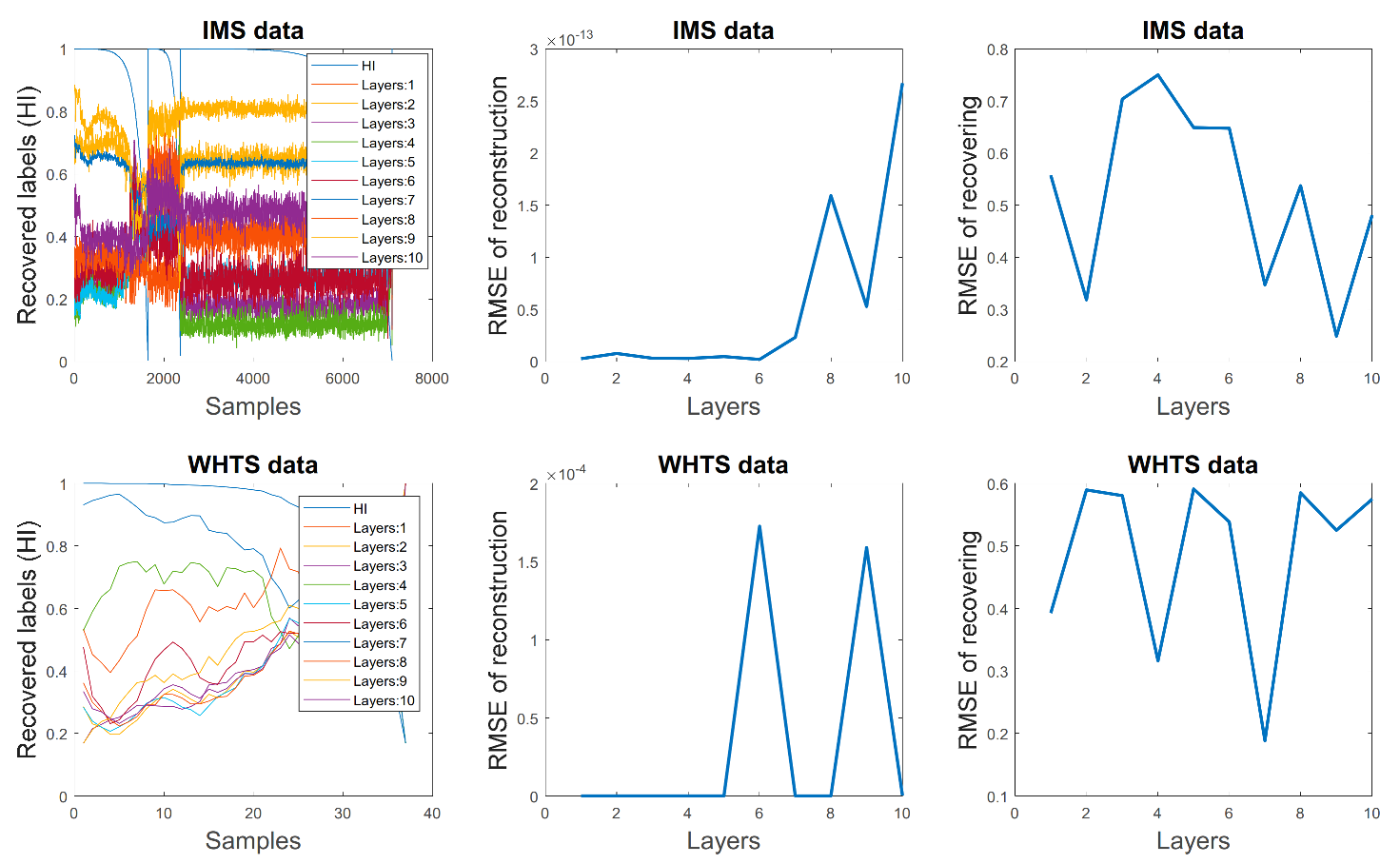

3.1. Labels Recovering Process

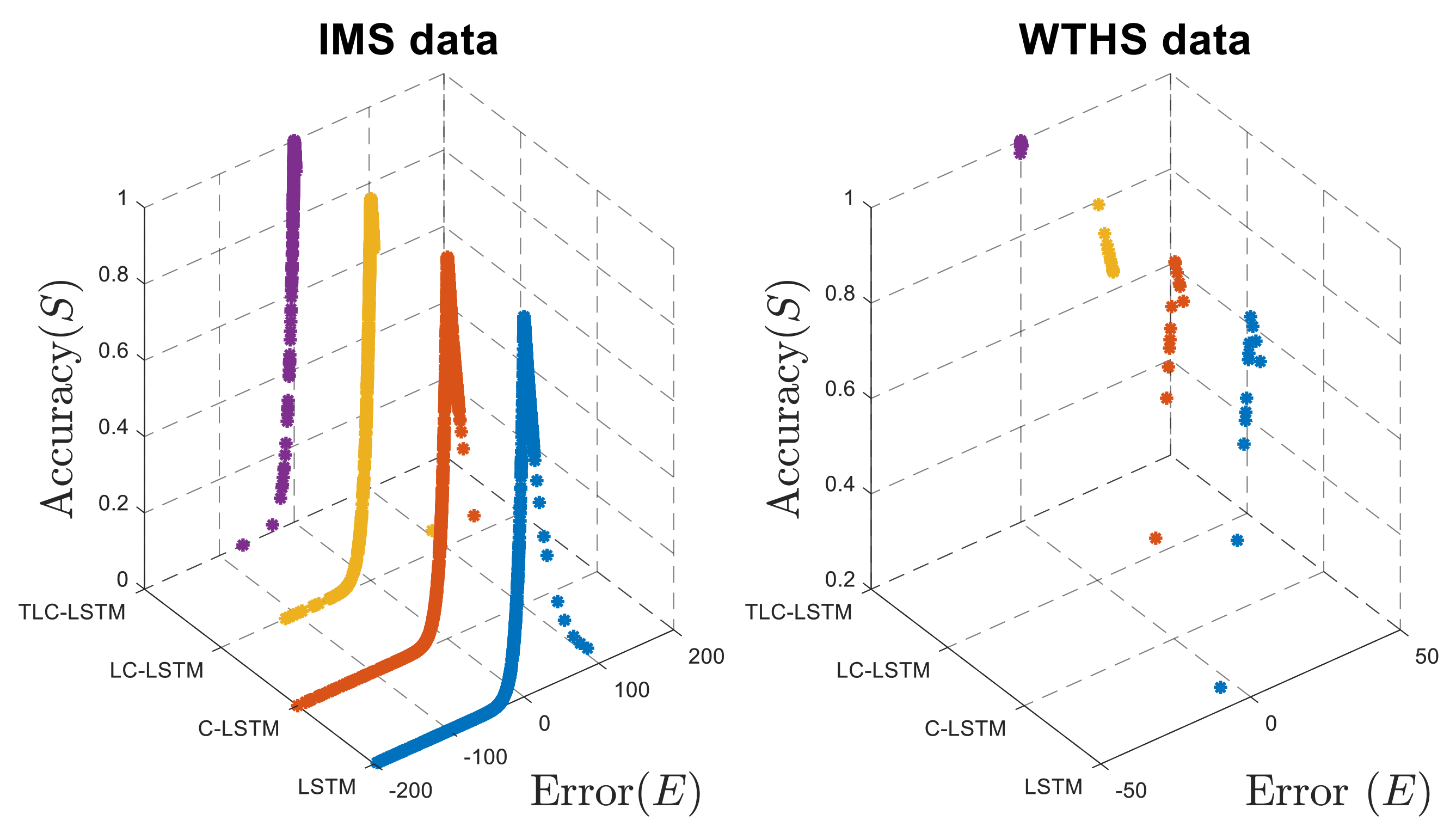

3.2. HI Prediction Results

3.3. HS Prediction Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Berghout, T.; Mouss, L.H.; Bentrcia, T.; Elbouchikhi, E.; Benbouzid, M. A deep supervised learning approach for condition-based maintenance of naval propulsion systems. Ocean. Eng. 2021, 221, 108525.

- Peng, J.; Zheng, Z.; Zhang, X.; Deng, K.; Gao, K.; Li, H.; Chen, B.; Yang, Y.; Huang, Z. A data-driven method with feature enhancement and adaptive optimization for lithium-ion battery remaining useful life prediction. Energies 2020, 13, 752.

- Lei, Y. Remaining useful life prediction. Intelligent Fault Diagnosis and Remaining Useful Life Prediction of Rotating Machinery; Elsevier BV: Oxford, UK, 2017; pp. 281–358.

- Lei, Y.; Li, N.; Guo, L.; Li, N.; Yan, T.; Lin, J. Machinery health prognostics: A systematic review from data acquisition to RUL prediction. Mech. Syst. Signal. Process. 2018, 104, 799–834.

- Khamoudj, C.E.; Benbouzid-Si Tayeb, F.; Benatchba, K.; Benbouzid, M.; Djaafri, A. A Learning Variable Neighborhood Search Approach for Induction Machines Bearing Failures Detection and Diagnosis. Energies 2020, 13, 2953.

- Yan, M.; Wang, X.; Wang, B.; Chang, M.; Muhammad, I. Bearing remaining useful life prediction using support vector machine and hybrid degradation tracking model. ISA Trans. 2020, 98, 471–482.

- Li, X. Deep learning-based remaining useful life estimation of bearings using multi-scale feature extraction. Reliab. Eng. Syst. Saf. 2019, 182, 208–218.

- Sun, S.; Przystupa, K.; Wei, M.; Yu, H.; Ye, Z.; Kochan, O. Fast bearing fault diagnosis of rolling element using Lévy Moth-Flame optimization algorithm and Naive Bayes. Eksploat. I Niezawodn. 2020, 22, 730–740.

- Ben Ali, J.; Saidi, L.; Mouelhi, A.; Chebel-Morello, B.; Fnaiech, F. Linear feature selection and classification using PNN and SFAM neural networks for a nearly online diagnosis of bearing naturally progressing degradations. Eng. Appl. Artif. Intell. 2015, 42, 67–81.

- Ben Ali, J.; Harrath, S.; Bechhoefer, E.; Benbouzid, M. Online automatic diagnosis of wind turbine bearings progressive degradations under real experimental conditions based on unsupervised machine learning. Appl. Acoust. 2018, 132, 167–181.

- Qiu, H.; Lee, J.; Lin, J.; Yu, G. Wavelet filter-based weak signature detection method and its application on rolling element bearing prognostics. J. Sound. Vib. 2006, 289, 1066–1090.

- Han, S.; Oh, S.; Jeong, J. Bearing Fault Diagnosis Based on Multiscale Convolutional Neural Network Using Data Augmentation. J. Sens. 2021, 2021, 6699637.

- Moshrefzadeh, A. Condition monitoring and intelligent diagnosis of rolling element bearings under constant/variable load and speed conditions. Mech. Syst. Signal. Process. 2021, 149, 107153.

- Zareapoor, M.; Shamsolmoali, P.; Yang, J. Oversampling adversarial network for class-imbalanced fault diagnosis. Mech. Syst. Signal. Process. 2021, 149, 107175.

- Saidi, L.; Ben Ali, J.; Bechhoefer, E.; Benbouzid, M. Wind turbine high-speed shaft bearings health prognosis through a spectral Kurtosis-derived indices and SVR. Appl. Acoust. 2017, 120, 1–8.

- Meddour, I.; Messekher, S.E.; Younes, R.; Yallese, M.A. Selection of bearing health indicator by GRA for ANFIS-based forecasting of remaining useful life. J. Braz. Soc. Mech. Sci. Eng. 2021, 43, 144.

- Li, X.; Zhang, W.; Ma, H.; Luo, Z.; Li, X. Data alignments in machinery remaining useful life prediction using deep adversarial neural networks. Knowl.-Based Syst. 2020, 197, 105843.

- Wang, J.; Liang, Y.; Zheng, Y.; Gao, R.X.; Zhang, F. An integrated fault diagnosis and prognosis approach for predictive maintenance of wind turbine bearing with limited samples. Renew. Energy 2020, 145, 642–650.

- Huang, G.; Zhang, Y.; Ou, J. Transfer remaining useful life estimation of bearing using depth-wise separable convolution recurrent network. Measurement 2021, 176, 109090.

- Kim, M.; Uk, J.; Lee, J.; Youn, B.D.; Ha, J. A Domain Adaptation with Semantic Clustering (DASC) method for fault diagnosis of rotating machinery. ISA Trans. 2021, 1.

- Cheng, H.; Kong, X.; Chen, G.; Wang, Q.; Wang, R. Transferable convolutional neural network based remaining useful life prediction of bearing under multiple failure behaviors. Meas. J. Int. Meas. Confed. 2021, 168, 108286.

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 109, 43–76.

- Sánchez-Morales, A.; Sancho-Gómez, J.-L.; Figueiras-Vidal, A.R. Exploiting label information to improve auto-encoding based classifiers. Neurocomputing 2019, 370, 104–108.

- Sánchez-Morales, A.; Sancho-Gómez, J.L.; Figueiras-Vidal, A.R. Complete autoencoders for classification with missing values. Neural Comput. Appl. 2020, 33, 1951–1957.

- Berghout, T. A New Health Assessment Prediction Approach: Multi-Scale Ensemble Extreme Learning Machine. Preprints 2020.

- Tovar, M.; Robles, M.; Rashid, F. PV Power Prediction, Using CNN-LSTM Hybrid Neural Network Model. Case of Study: Temixco-Morelos, México. Energies 2020, 13, 6512.

- NASA Prognostics Center of Excellence. Available online: https://ti.arc.nasa.gov/tech/dash/groups/pcoe/prognostic-data-repository/#turbofan (accessed on 20 March 2021).

- Mathworks Wind Turbine High-Speed Bearing Prognosis. Available online: https://www.mathworks.com/help/predmaint/ug/wind-turbine-high-speed-bearing-prognosis.html (accessed on 21 March 2021).

- Panić, B.; Klemenc, J.; Nagode, M. Gaussian Mixture Model Based Classification Revisited: Application to the Bearing Fault Classification. Stroj. Vestn.-J. Mech. Eng. 2020, 66, 215–226.

- He, Z.; Zhang, X.; Liu, C.; Han, T. Fault Prognostics for Photovoltaic Inverter Based on Fast Clustering Algorithm and Gaussian Mixture Model. Energies 2020, 13, 4901.

- Rousseeuw, P.J. Silhouettes: A graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 1987, 20, 53–65.

- Berghout, T.; Mouss, L.; Kadri, O.; Saïdi, L.; Benbouzid, M. Aircraft Engines Remaining Useful Life Prediction with an Improved Online Sequential Extreme Learning Machine. Appl. Sci. 2020, 10, 1062.

- Schmidhuber, J. Deep Learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

- Yoo, Y.; Baek, J.G. A novel image feature for the remaining useful lifetime prediction of bearings based on continuous wavelet transform and convolutional neural network. Appl. Sci. 2018, 8, 1102.

- Elforjani, M.; Shanbr, S.; Bechhoefer, E. Detection of faulty high speed wind turbine bearing using signal intensity estimator technique. Wind. Energy 2018, 21, 53–69.

- Elasha, F.; Shanbr, S.; Li, X.; Mba, D. Prognosis of a wind turbine gearbox bearing using supervised machine learning. Sensors 2019, 19, 3092.

- Mao, W.; Feng, W.; Liu, Y.; Zhang, D.; Liang, X. A new deep auto-encoder method with fusing discriminant information for bearing fault diagnosis. Mech. Syst. Signal. Process. 2021, 150, 107233.

- Glowacz, A. Acoustic fault analysis of three commutator motors. Mech. Syst. Signal. Process. 2019, 133, 106226.

| Algorithm | RMSE | |
|---|---|---|
| WTHS data | | |
| LSTM | 0.0305 | 0.8094 |
| C-LSTM | 0.0217 | 0.8477 |
| LC-LSTM | 0.0132 | 0.8679 |
| TLC-LSTM | 0.0017 | 0.9921 |
| IMS data | | |
| LSTM | 0.2141 | 0.5883 |
| C-LSTM | 0.1010 | 0.7259 |
| LC-LSTM | 0.0185 | 0.8844 |
| TLC-LSTM | 0.0065 | 0.9636 |

| Algorithm | IMS dataset | WTHS dataset |
|---|---|---|
| LSTM | 0.84363 | 0.90000 |
| C-LSTM | 0.96196 | 0.95000 |
| LC-LSTM | 0.96117 | 0.95000 |
| TLC-LSTM | 0.96856 | 0.95000 |

| Approach | HS | HI | HI Metrics | HS Metrics |
|---|---|---|---|---|
| WTHS data | | | | |
| Saidi, L. et al. [15] | ✗ | ✓ | Curve fitting only | ✗ |
| Meddour, I. et al. [16] | ✗ | ✓ | Average percentage error (%) and curve fitting | ✗ |
| Elforjani, M. et al. [35] | ✗ | ✓ | Average percentage error (%) and curve fitting | ✗ |
| Elasha, F. et al. [36] | ✓ | ✓ | Sum of squared errors | ✗ |
| Ben Ali, J. et al. [10] | ✓ | ✗ | ✗ | Classification accuracy only |
| TLC-LSTM | ✓ | ✓ | RMSE, accuracy formula (Equation (16)) and curve fitting | Classification accuracy, data scatter plots, ROC curves, AUC and silhouette coefficient |
| IMS data | | | | |
| Ben Ali, J. et al. [9] | ✓ | ✗ | ✗ | Classification accuracy and ROC curves |
| Han, S. et al. [12] | ✓ | ✗ | ✗ | F1 score and confusion matrix |
| Moshrefzadeh, A. et al. [13] | ✓ | ✗ | ✗ | Classification accuracy and confusion matrix |
| Zareapoor, M. et al. [14] | ✓ | ✗ | ✗ | Precision, recall, FAM (average of AUC, MCC, and F1-measure) |
| Mao, W. et al. [37] | ✓ | ✗ | ✗ | Classification accuracy and confusion matrix |
| TLC-LSTM | ✓ | ✓ | RMSE, accuracy formula (Equation (16)) and curve fitting | Classification accuracy, data scatter plots, ROC curves, AUC and silhouette coefficient |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Berghout, T.; Benbouzid, M.; Mouss, L.-H. Leveraging Label Information in a Knowledge-Driven Approach for Rolling-Element Bearings Remaining Useful Life Prediction. Energies 2021, 14, 2163. https://doi.org/10.3390/en14082163
