Data-Driven Online Energy Scheduling of a Microgrid Based on Deep Reinforcement Learning

Abstract

1. Introduction

- To reduce the dependency on accurate forecasts or an explicit physical model, we propose a data-driven formulation of online microgrid energy scheduling as a Markov decision process (MDP). This formulation lets us optimize scheduling decisions without accurate forecasts of the uncertainty or a precise system model of the microgrid.

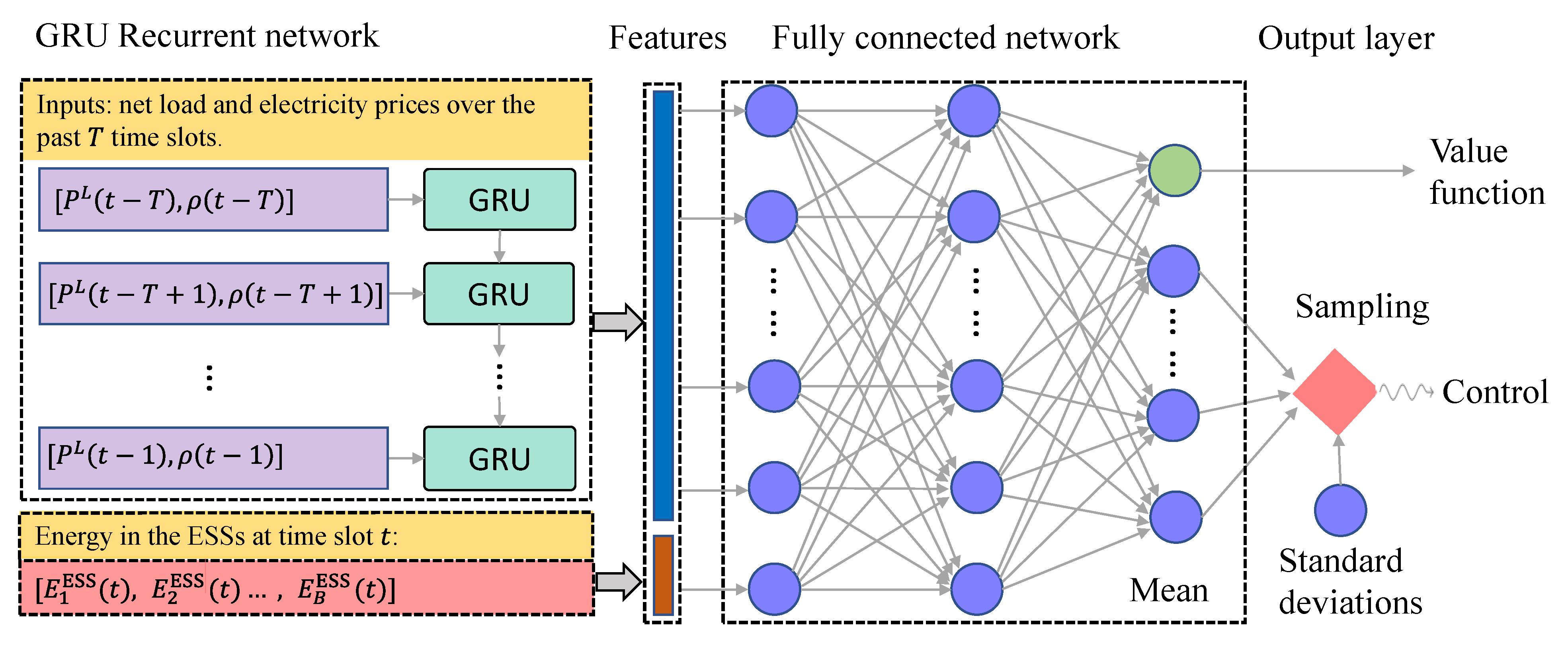

- To avoid solving complex optimization problems during online scheduling, we design a GRU-based neural network that learns the optimal policy end to end. During online execution, the network produces scheduling decisions directly from historical data and the current state, without predicting the uncertainty or solving an optimization model.

- To learn the optimal scheduling policy for a problem with continuously controlled devices, we train the GRU-based policy network with the PPO algorithm. PPO is effective for optimizing high-dimensional continuous-control actions and practical for real-world microgrid environments.
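As a rough illustration of the PPO training signal mentioned in the last contribution, the sketch below computes the clipped surrogate objective from the original PPO paper in plain Python. It is a minimal sketch, not the authors' implementation: the function name `clipped_surrogate` and the clip range `clip_eps=0.2` are assumptions (0.2 is a common default, not a value reported in this article), and this outline does not state which PPO variant the article uses.

```python
import math


def clipped_surrogate(logp_new, logp_old, advantages, clip_eps=0.2):
    """Mean PPO clipped surrogate objective (a quantity to maximize).

    logp_new / logp_old: log-probabilities of the taken actions under the
    updated policy and the data-collecting policy.
    clip_eps: hypothetical clip range; not taken from the article.
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_new(a|s) / pi_old(a|s)
        clipped = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

With a positive advantage, the objective stops rewarding the update once the probability ratio exceeds `1 + clip_eps`; with a negative advantage, the unclipped (more negative) term is kept, so moving probability toward a bad action is always penalized in full.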

2. MDP Formulation of Online Energy Scheduling in Microgrids

2.1. States

2.2. Actions

2.3. Rewards

2.4. Objective Function

3. Deep Reinforcement Learning Solution Based on Proximal Policy Optimization

3.1. Proximal Policy Optimization Algorithm

3.2. Design of the Policy and Value Network

3.3. Practical Implementation

Algorithm 1. The PPO algorithm for microgrid real-time scheduling

4. Case Studies

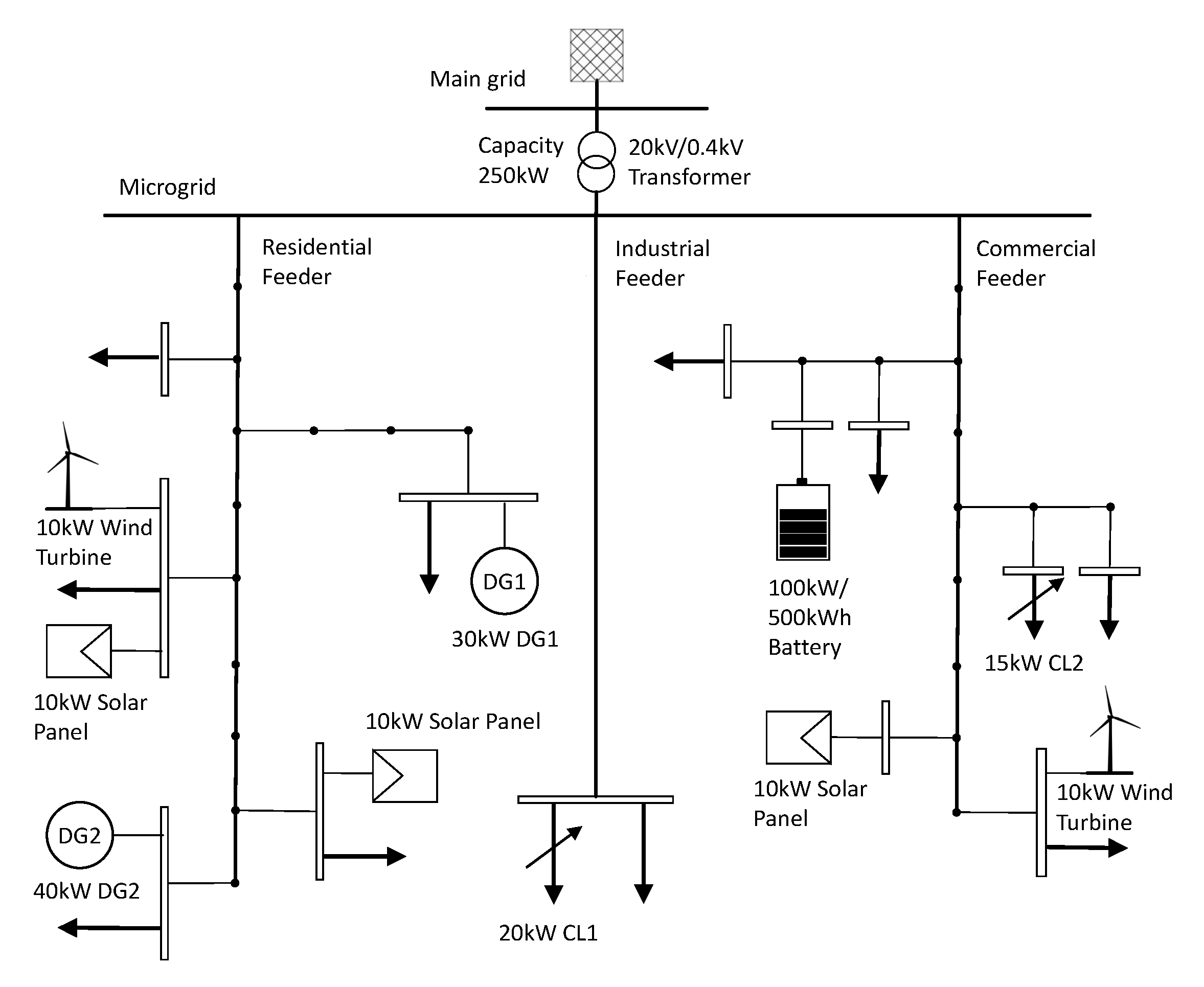

4.1. Experimental Setup

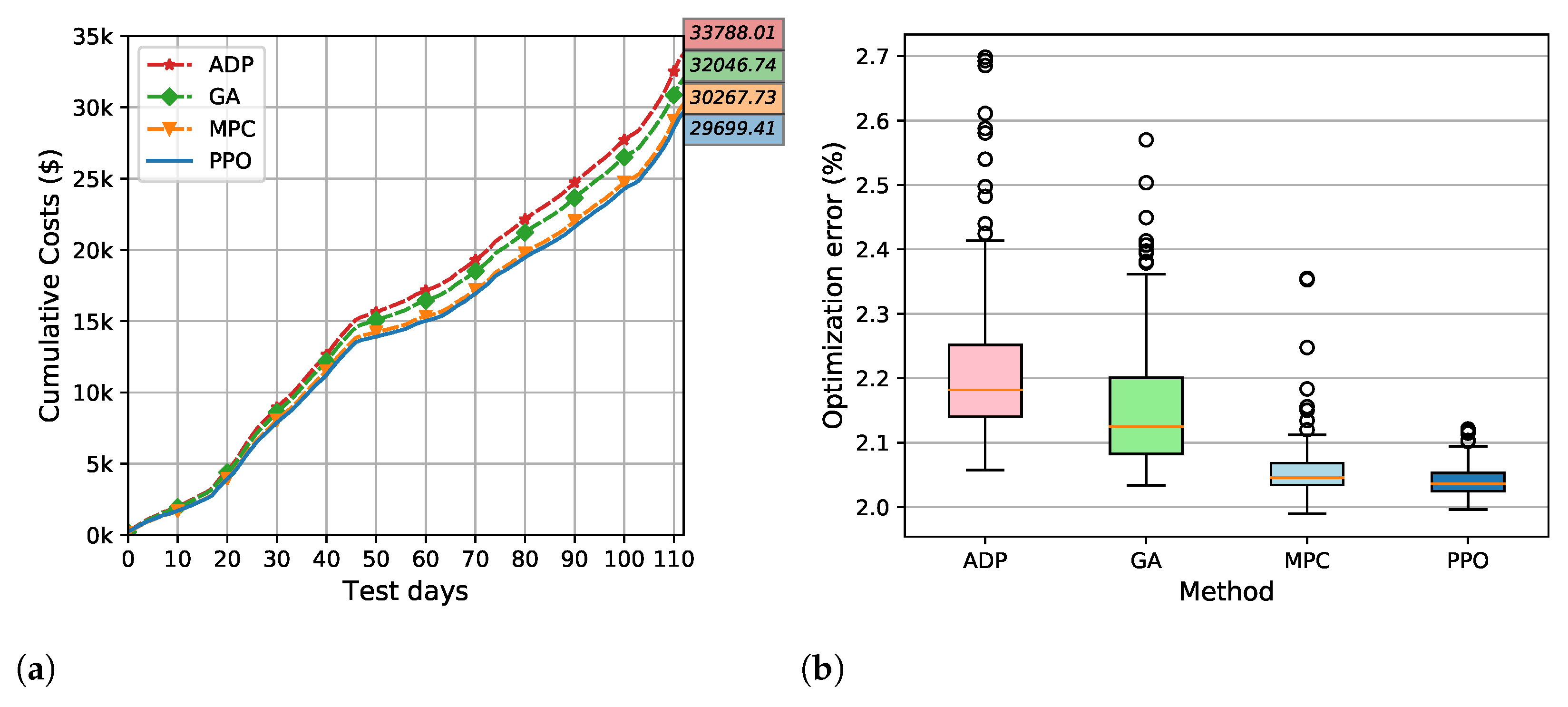

4.2. Comparison with Commonly Used Online Scheduling Methods

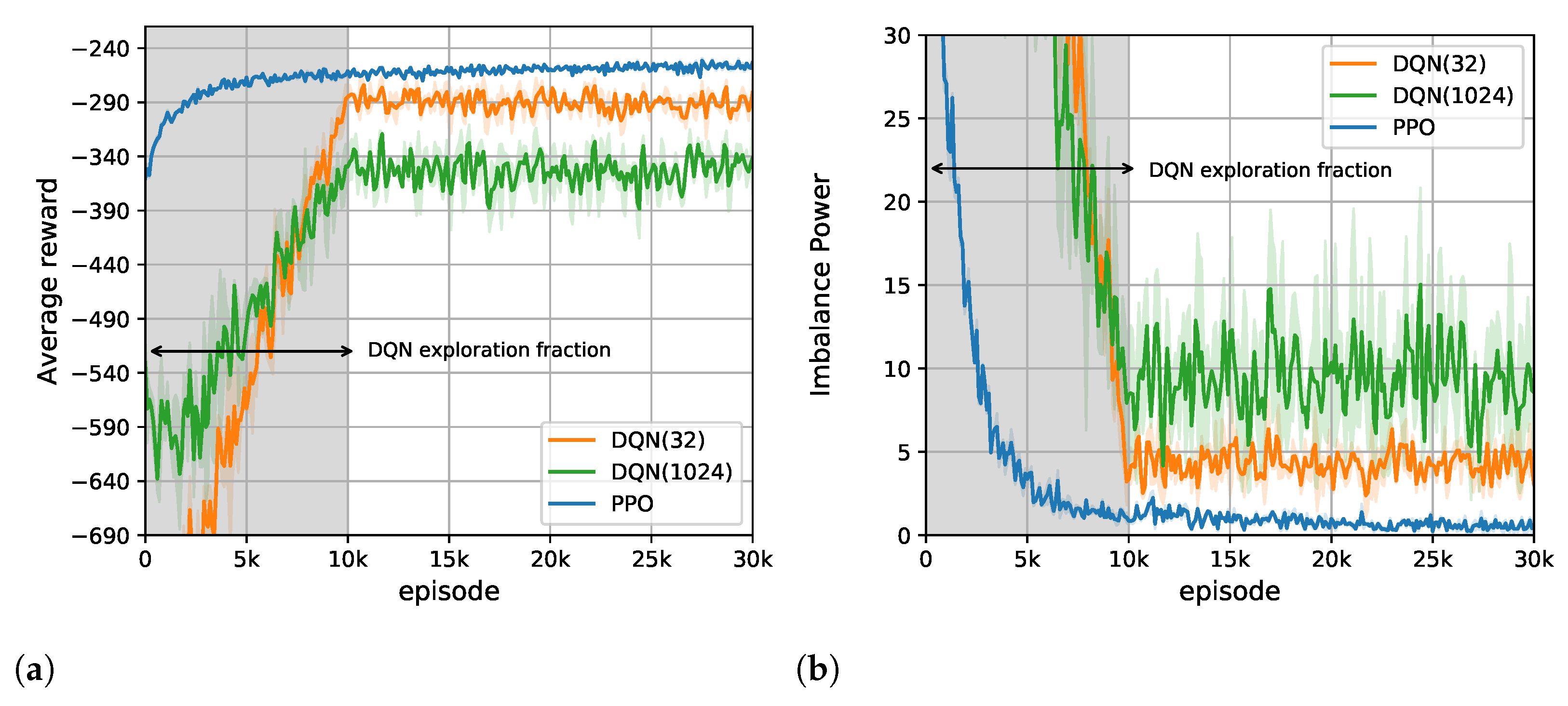

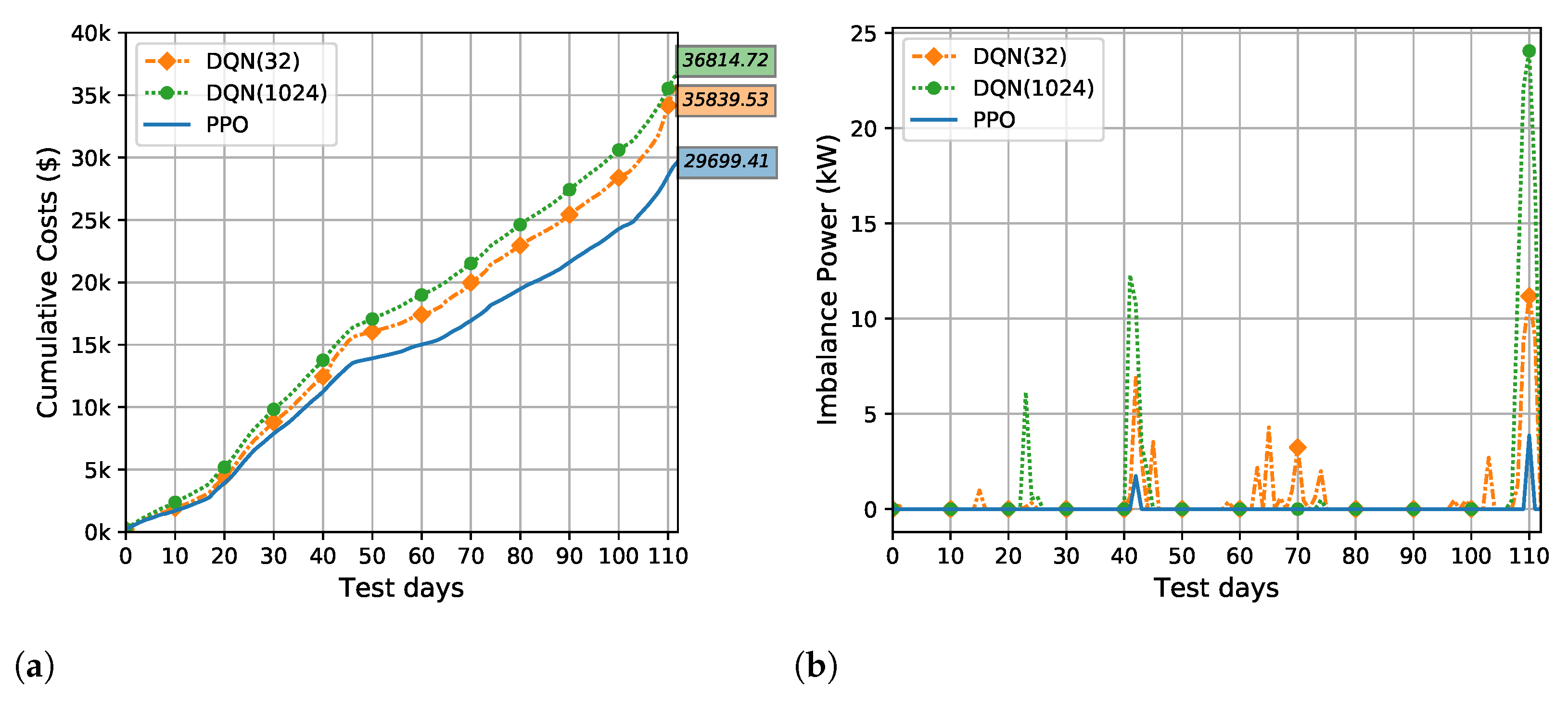

4.3. Comparison with DQN

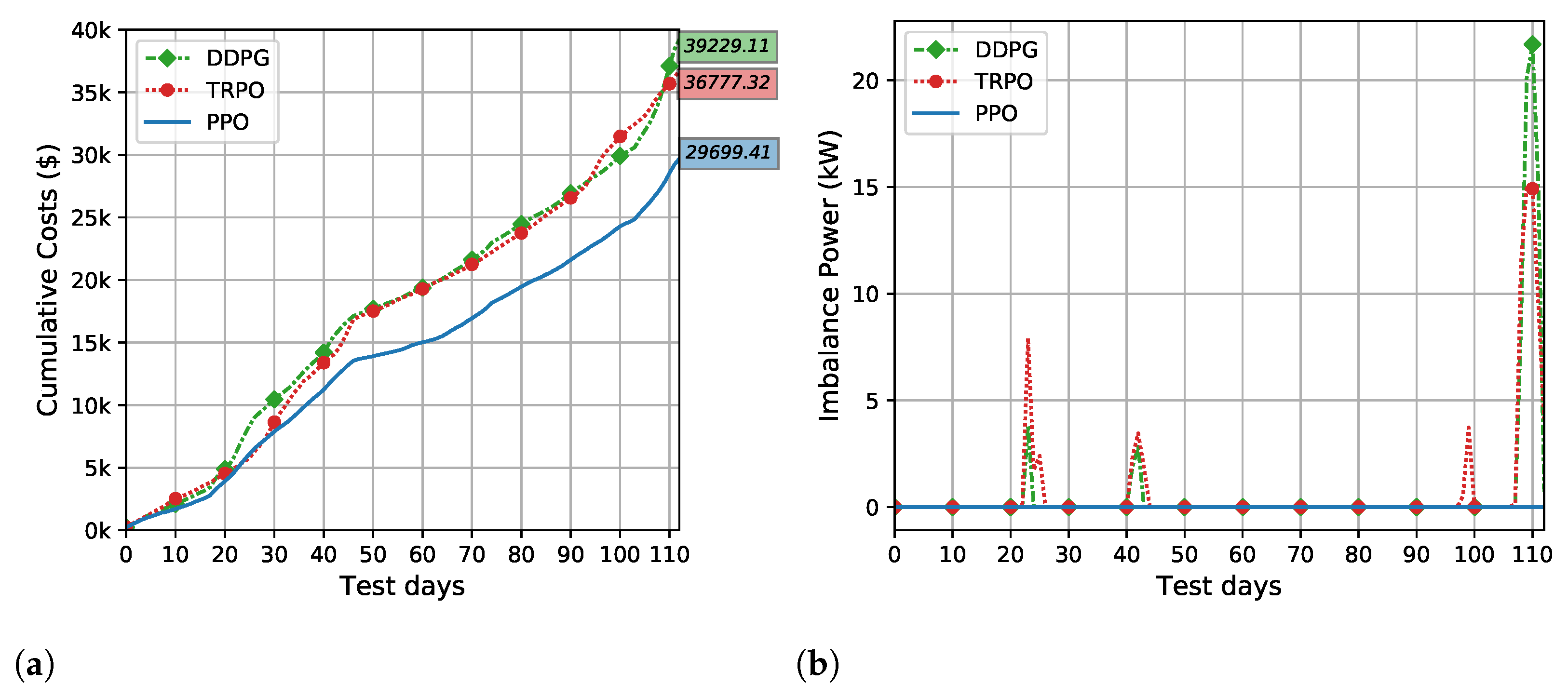

4.4. Comparison with Other Continuously-Controlled DRL Methods

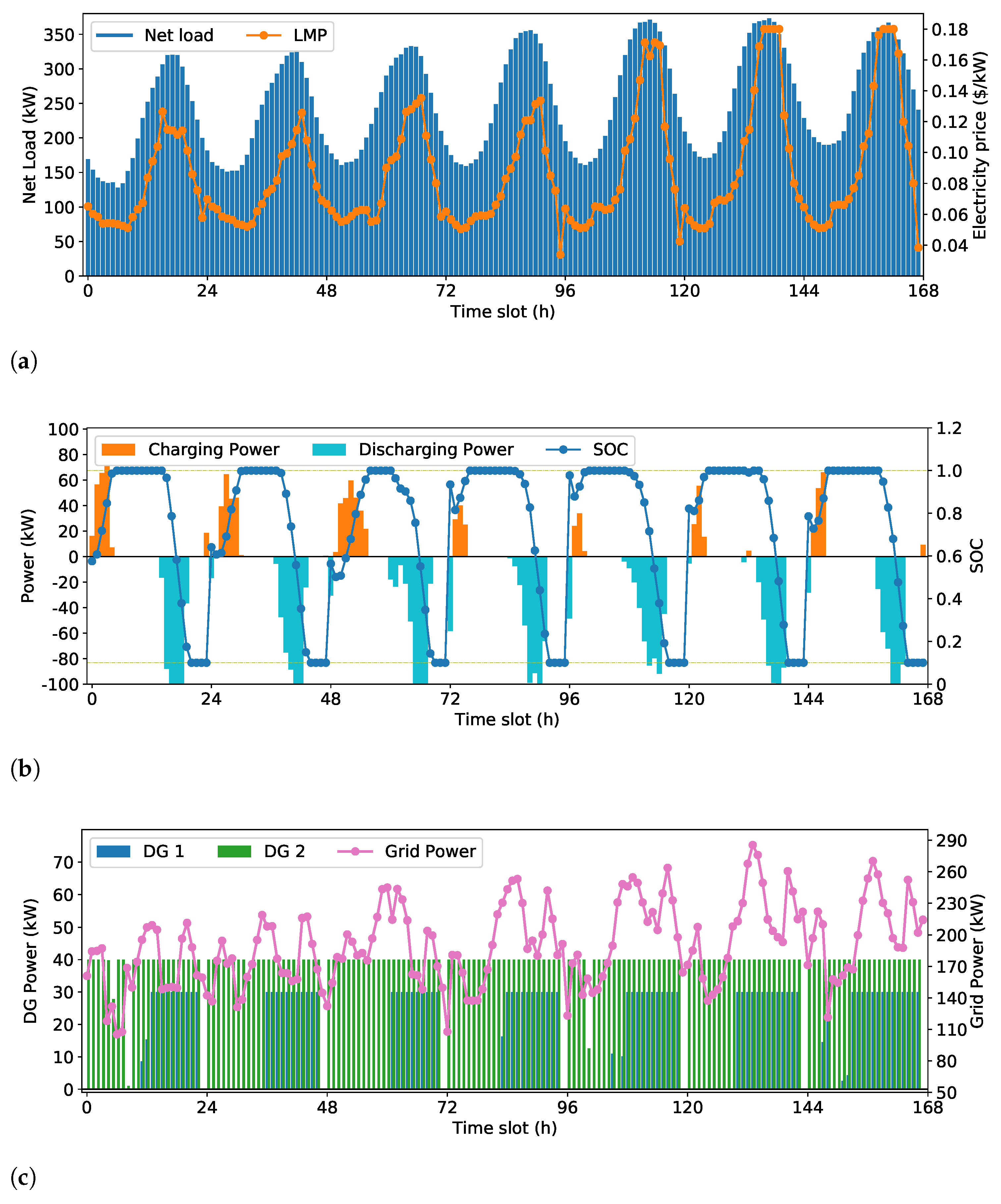

4.5. Scheduling Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADP | Approximate dynamic programming |
| CAISO | California Independent System Operator |
| CL | Controllable load |
| DQN | Deep Q-network |
| DDPG | Deep deterministic policy gradient |
| DRL | Deep reinforcement learning |
| DG | Distributed generator |
| ESS | Energy storage system |
| GA | Genetic algorithm |
| GRU | Gated recurrent unit |
| LMP | Locational marginal price |
| MDP | Markov decision process |
| MIQP | Mixed-integer quadratic programming |
| MPC | Model predictive control |
| PPO | Proximal policy optimization |
| SOC | State of charge |
| TRPO | Trust region policy optimization |
| RES | Renewable energy resources |
| ReLU | Rectified linear unit |

| Device | | | | | |
|---|---|---|---|---|---|
| DG1 | 0 kW | 30 kW | $/kWh | $/kWh | $/h |
| DG2 | 0 kW | 40 kW | $/kWh | $/kWh | $/h |
| ESS | 50 kWh | 500 kWh | 100 kW | | |
| CL1 | 0 kW | 20 kW | $/kWh | – | – |
| CL2 | 0 kW | 15 kW | $/kWh | – | – |

| Hyperparameter | Value |
|---|---|
| Number of steps in one episode (T) | 24 |
| Number of episodes in each iteration (N) | 100 |
| Number of iterations (I) | 1000 |
| Number of epochs (K) | 10 |
| Discount factor | |
| Adam step size | |
| Minibatch size (M) | 64 |
| GAE parameter | 0.95 |
| Penalty coefficient | 5 |
| Value function (Vf) coefficient | 0.5 |
| Entropy coefficient | 0.01 |
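To make the role of the GAE parameter in the hyperparameter table concrete, the following sketch computes generalized advantage estimates for one 24-step episode in plain Python. It is an illustration under stated assumptions rather than the article's code: the function name `gae_advantages` is hypothetical, the discount factor `GAMMA = 0.99` is a placeholder because the table's value did not survive, and the rewards and critic values are dummy numbers, not data from the case study.

```python
def gae_advantages(rewards, values, gamma, lam):
    """Generalized advantage estimates for one finite episode.

    values must hold len(rewards) + 1 entries; the last entry is the
    bootstrap value after the final step (0.0 for a finished episode).
    """
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        # One-step temporal-difference residual at step t.
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        # Exponentially weighted sum of current and future residuals.
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages


T = 24        # steps per episode, from the hyperparameter table
LAM = 0.95    # GAE parameter, from the hyperparameter table
GAMMA = 0.99  # hypothetical placeholder; the table's value is missing

rewards = [-1.0] * T      # dummy per-step rewards (negative cost)
values = [0.0] * (T + 1)  # dummy critic outputs
adv = gae_advantages(rewards, values, GAMMA, LAM)
```

Setting `lam` to 0 reduces each estimate to the one-step residual (low variance, more bias), while `lam` equal to 1 recovers the discounted return minus the value baseline (less bias, more variance); 0.95 sits close to the latter.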

| Method | ADP | GA | MPC | PPO |
|---|---|---|---|---|
| Time | 57.71 ± 13.85 ms | 9317.29 ± 1912.83 ms | 228.68 ± 116.20 ms | 0.50 ± 0.11 ms |
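The gap between the methods in the timing table is easier to read as ratios. The short sketch below divides each method's mean time by the mean PPO time; the helper name `speedup_over` is hypothetical, and the comparison uses the reported means only, ignoring the standard deviations.

```python
# Mean per-decision computation times in milliseconds, copied from the table.
mean_time_ms = {"ADP": 57.71, "GA": 9317.29, "MPC": 228.68, "PPO": 0.50}


def speedup_over(baseline, times=mean_time_ms, reference="PPO"):
    """Ratio of the baseline's mean time to the reference's mean time."""
    return times[baseline] / times[reference]


for method in ("ADP", "GA", "MPC"):
    print(f"{method}: {speedup_over(method):.0f}x the mean PPO decision time")
```

By mean time, a PPO decision is roughly 115 times faster than ADP, 457 times faster than MPC, and about 18,600 times faster than GA.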

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ji, Y.; Wang, J.; Xu, J.; Li, D. Data-Driven Online Energy Scheduling of a Microgrid Based on Deep Reinforcement Learning. Energies 2021, 14, 2120. https://doi.org/10.3390/en14082120
