2. Review of Known Algorithms

To our knowledge, the idea of calculating the inverse cube root of floating-point numbers using a magic constant was first presented by Professor W. Kahan [5]. To compute the inverse cube root, the function was written in the following form:

$$y = \frac{1}{\sqrt[3]{x}}. \tag{1}$$

If the argument $x$ is given as a floating-point number, it can be read as an integer $I_x$ corresponding to the binary representation of $x$ in the IEEE-754 standard. This integer is then divided by 3, its sign is changed, and a fixed integer (known as a magic constant) is added. The result, read back as a floating-point number, turns out to be an initial (albeit inaccurate) approximation, which can be refined using iterative formulas. Such an algorithm for calculating the cube root and the inverse cube root, based on a magic constant, is presented in [6].
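The integer steps just described can be sketched in portable C as follows. This is our illustration rather than code from [5] or [6]: it assumes a 32-bit IEEE-754 `float` and a positive normalized argument, it uses `memcpy` instead of a pointer cast or a union to reinterpret the bits, and the function name `inv_cbrt_seed` is hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Initial approximation of 1/cbrt(x) obtained by integer operations on the
   bit pattern of x (assumes a positive, normalized 32-bit IEEE-754 float). */
static float inv_cbrt_seed(float x, uint32_t magic)
{
    uint32_t ix;
    float y;

    assert(x > 0.0f);
    memcpy(&ix, &x, sizeof ix);   /* read the bits of x as the integer I_x */
    ix = magic - ix / 3u;         /* divide by 3, change sign, add the magic constant */
    memcpy(&y, &ix, sizeof y);    /* read the result back as a float */
    return y;
}
```

For example, `inv_cbrt_seed(1.0f, 0x54a21d2aU)` returns about 0.9666 instead of the exact value 1, an error of roughly 3% that the subsequent iterations have to remove.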

Algorithm 1.

```c
/*  1 */ float InvCbrt1(float x) {
/*  2 */     const float onethird = 0.33333333;
/*  3 */     const float fourthirds = 1.3333333;
/*  4 */     float thirdx = x * onethird;
/*  5 */     union {  // get bits from floating-point number
/*  6 */         int ix;
/*  7 */         float fx;
/*  8 */     } z;
/*  9 */     z.fx = x;
/* 10 */     z.ix = 0x54a21d2a - z.ix / 3;  // initial guess for inverse cube root
/* 11 */     float y = z.fx;  // convert integer type back to floating-point type
/* 12 */     y = y * (fourthirds - thirdx * y * y * y);  // 1st Newton's iteration
/* 13 */     y = y * (fourthirds - thirdx * y * y * y);  // 2nd Newton's iteration
/* 14 */     return y;
/* 15 */ }
```

The above algorithm can be briefly described as follows. The input argument $x$ is a floating-point number of type float (line 1). It is stored in the buffer z.fx (line 9), whose content can be read as the integer z.ix. Subtracting the integer $I_x/3$ from the magic constant $R$ = 0x54a21d2a yields the integer $I_{y_0}$ (z.ix, line 10). Line 10 contains a division by 3, which can be replaced by multiplying the numerator by the binary expansion 0.010101010101… of 1/3 in fixed-point arithmetic. For 32-bit numerators, we multiply by the hexadecimal constant 0x55555556 and shift the (64-bit) result down by 32 binary places. Therefore, line 10 of the code can be written in the form [6]: `z.ix = 0x54a21d2a - (int)((z.ix * (int64_t)0x55555556) >> 32);`
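The equivalence of the two forms of the division can be checked with a short test. In this sketch (helper names are ours) the product is formed in 64-bit arithmetic as in the line above. The identity holds for all non-negative 32-bit values, which covers the bit patterns of positive floats, because 0x55555556 = (2^32 + 2)/3, so the product overshoots ix/3 by 2·ix/(3·2^32), which stays below 1/3 and never crosses the next integer.

```c
#include <assert.h>
#include <stdint.h>

/* Division by 3 as a fixed-point multiplication by 0x55555556 ~ 2^32/3;
   valid for 0 <= ix <= INT32_MAX (all bit patterns of positive floats). */
static int32_t div3_mul(int32_t ix)
{
    assert(ix >= 0);
    return (int32_t)(((int64_t)ix * (int64_t)0x55555556) >> 32);
}

/* Counts mismatches between div3_mul and ordinary integer division
   on every step-th value of [0, INT32_MAX]. */
static long div3_mismatches(int32_t step)
{
    long bad = 0;
    assert(step > 0);
    for (int64_t v = 0; v <= INT32_MAX; v += step) {
        int32_t ix = (int32_t)v;
        if (div3_mul(ix) != ix / 3)
            ++bad;
    }
    return bad;
}
```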

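The content of the z.ix buffer, read as a float number (line 11), is the initial approximation $y_0$ of the function $y = 1/\sqrt[3]{x}$. Then, the value of the function is refined by the classical Newton–Raphson formula (lines 12 and 13):

$$y_1 = y_0\left(\frac{4}{3} - \frac{1}{3}\,x\,y_0^3\right). \tag{2}$$

In the general case:

$$y_{n+1} = y_n\left(\frac{4}{3} - \frac{1}{3}\,x\,y_n^3\right), \quad n = 0, 1, 2, \ldots \tag{3}$$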

The relative error of the results after two Newton iterations is approximately $\delta_{\max} = 1.09 \cdot 10^{-5}$, or $-\log_2 \delta_{\max} = 16.47$ correct bits; see [6]. Moreover, according to the author of [6], this algorithm works 20 times faster than the C library function powf(x, −1.0f/3.0f).
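The general-case iteration can be sketched as a loop over the Newton–Raphson step, starting from the magic-constant seed of Algorithm 1. The helper names are hypothetical, and, to avoid depending on the math library, the relative error y·∛x − 1 is estimated from u = x·y³ − 1 by the truncated series (1 + u)^{1/3} − 1 ≈ u/3 − u²/9 + 5u³/81, which is accurate when |u| is small (our assumption; a double-precision library cube root would serve equally well).

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Seed from the magic constant, as in Algorithm 1 (x > 0, normalized). */
static float seed(float x, uint32_t magic)
{
    uint32_t ix;
    float y;
    memcpy(&ix, &x, sizeof ix);
    ix = magic - ix / 3u;
    memcpy(&y, &ix, sizeof y);
    return y;
}

/* n classical Newton-Raphson steps y <- y * (4/3 - (x/3) * y^3). */
static float inv_cbrt_newton(float x, uint32_t magic, int n)
{
    assert(x > 0.0f && n >= 0);
    const float thirdx = x * (1.0f / 3.0f);
    float y = seed(x, magic);
    for (int i = 0; i < n; ++i)
        y = y * (4.0f / 3.0f - thirdx * y * y * y);
    return y;
}

/* Relative error y * cbrt(x) - 1, estimated from u = x*y^3 - 1
   by a truncated binomial series (accurate when |u| is small). */
static double rel_err(float x, float y)
{
    double u = (double)x * y * y * y - 1.0;
    return u / 3.0 - u * u / 9.0 + 5.0 * u * u * u / 81.0;
}
```

For x = 1, the error drops from about −3.3·10^{−2} for the seed to about −2.2·10^{−3} after one step and to the order of 10^{−5} after two steps; it roughly squares at each step, as expected for Newton's method.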

The disadvantage of Algorithm 1 is its relatively low accuracy (only 16.47 correct bits). The lack of an analytical description of the transformations that take place in this algorithm has hindered progress in increasing the accuracy of both the inverse cube root and the cube root calculations. This article addresses this shortcoming: it gives a brief analytical description of the transformations that occur in algorithms of this type, specifies ways to increase their accuracy, and presents the corresponding working code.

3. The Theory of the Fast Inverse Cube Root Method and Proposed Algorithms

The main challenge in the theory of the fast inverse cube root method is obtaining an analytical description of the initial approximations. We obtain these approximations through integer operations on the floating-point argument $x$ converted to an integer $I_x$. These operations involve a magic constant, and their final result is converted back into floating-point form (see, for example, lines 10 and 11 of Algorithm 1). To describe them, we can use the basics of the theory of the fast inverse square root method described in [4].

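To apply this theory correctly, it is sufficient to replace the integer division $I_x/2$ with $I_x/3$ and to expand the range of values of the input argument from $x \in [1, 4)$ to $x \in [1, 8)$. It is known that the behaviour of the relative error of $y_0$ over the whole range of normalized floating-point numbers can be described by its behaviour for $x \in [1, 8)$ [6, 17]: multiplying $x$ by 8 changes $I_x/3$ by exactly $2^{23}$ and therefore halves $y_0$ exactly, just as it halves $1/\sqrt[3]{x}$. According to [4], for the inverse cube root there are four piecewise linear approximations $y_{0i}$ of the function $y = 1/\sqrt[3]{x}$ in this range:

$$y_{01} = -\frac{x}{6} + \frac{5}{6} + \frac{t}{24}, \quad x \in [1, 2), \tag{4}$$

$$y_{02} = -\frac{x}{12} + \frac{2}{3} + \frac{t}{24}, \quad x \in [2, 4), \tag{5}$$

$$y_{03} = -\frac{x}{24} + \frac{1}{2} + \frac{t}{24}, \quad x \in [4, t), \tag{6}$$

$$y_{04} = -\frac{x}{48} + \frac{1}{2} + \frac{t}{48}, \quad x \in [t, 8), \tag{7}$$

where $t = 4 + 12\,m_R + 6\,N_m^{-1}$, $m_R$ is the fractional part of the mantissa of the magic constant $R$, and $N_m = 2^{23}$. The maximum relative error of such analytical approximations is bounded as shown in [4, 20]. To verify their correctness, we compared the values of $y_0$ obtained in line 11 of Algorithm 1 with the values given by Equations (4)–(7) in the corresponding ranges. The maximum relative difference does not exceed $5.96 \cdot 10^{-8}$ for any normalized number of type float.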
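The comparison described above can be reproduced with the following sketch. It assumes (our derivation, valid for constants whose exponent field equals 169, as for all magic constants in this paper, and with m_R < 1/3) the four pieces y01 = 5/6 − x/6 + t/24 on [1, 2), y02 = 2/3 − x/12 + t/24 on [2, 4), y03 = 1/2 − x/24 + t/24 on [4, t) and y04 = 1/2 − x/48 + t/48 on [t, 8), with t = 4 + 12 m_R + 6·2^{−23}. Function names are hypothetical, and the scan samples every `step`-th float rather than all of them.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Bit-level seed: y0 = float(R - I_x / 3). */
static float seed(float x, uint32_t magic)
{
    uint32_t ix;
    float y;
    memcpy(&ix, &x, sizeof ix);
    ix = magic - ix / 3u;
    memcpy(&y, &ix, sizeof y);
    return y;
}

/* t = 4 + 12*m_R + 6/2^23, m_R = fractional mantissa of the magic constant.
   Assumes exponent field 169 (0x54800000 <= R < 0x55000000) and m_R < 1/3. */
static double t_of(uint32_t magic)
{
    assert(((magic >> 23) & 0xFFu) == 169u);
    double mR = (double)(magic & 0x7FFFFFu) / 8388608.0;
    assert(mR < 1.0 / 3.0);
    return 4.0 + 12.0 * mR + 6.0 / 8388608.0;
}

/* Piecewise-linear model of the seed on [1, 8). */
static double seed_model(double x, double t)
{
    if (x < 2.0) return 5.0 / 6.0 - x / 6.0 + t / 24.0;
    if (x < 4.0) return 2.0 / 3.0 - x / 12.0 + t / 24.0;
    if (x < t)   return 0.5 - x / 24.0 + t / 24.0;
    return 0.5 - x / 48.0 + t / 48.0;
}

/* Largest relative difference between the seed and the model,
   sampling every step-th float in [1, 8). */
static double max_model_gap(uint32_t magic, uint32_t step)
{
    const double t = t_of(magic);
    double worst = 0.0;
    assert(step > 0u);
    for (uint32_t b = 0x3F800000u; b < 0x41000000u; b += step) { /* 1.0f .. 8.0f */
        float x;
        memcpy(&x, &b, sizeof x);
        double m = seed_model(x, t);
        double gap = ((double)seed(x, magic) - m) / m;
        if (gap < 0.0) gap = -gap;
        if (gap > worst) worst = gap;
    }
    return worst;
}
```

With a step of 1, every float in [1, 8) is visited; larger steps trade completeness for speed.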

To further improve the accuracy of Algorithm 1, we can apply additive corrections to the parameters of each iteration, as proposed in [10, 20, 21]. In other words, the first iteration should be performed according to the modified Newton–Raphson formula

$$y_1 = y_0\,(k_2 - k_1\,x\,y_0\,y_0\,y_0), \tag{8}$$

where the product $y_0 y_0 y_0$ is used instead of the power $y_0^3$, and we assume $k_1 = 1/3$. The optimal values of $t$ and $k_2$ (which give minimal relative errors of both signs) have to be found. To find them, we use the algorithm proposed in [10]. We write the expressions of the relative errors of the first iteration in the range $x \in [1, 8)$ for the four piecewise linear approximations:

$$\delta_{1i}(x) = y_{0i}\,(k_2 - k_1\,x\,y_{0i}^3)\,\sqrt[3]{x} - 1, \quad i = 1, 2, 3, 4, \tag{9}$$

where $y_{0i}$ are given by Equations (4)–(7). Next, we find the values of $x$ at which the functions $\delta_{1i}$ reach their extreme values (both positive, $\delta^{+}$, and negative, $\delta^{-}$). To do this, we solve the four equations $d\delta_{1i}/dx = 0$ with respect to $x$. Their solutions ($x^{+}_{ij}$ and $x^{-}_{ij}$) have two indices; the first index denotes the interval to which the solution belongs: $i = 1$ for [1, 2), $i = 2$ for [2, 4), $i = 3$ for [4, $t$) and $i = 4$ for [$t$, 8). The second index numbers the solutions within the corresponding interval (in this paper we consider cases with at most $j = 1$). Note that the boundary points of the intervals must also be considered as possible positive or negative maxima. Then, applying the alignment algorithm proposed in [10], we form two linear combinations of selected positive and negative extreme values $\delta_{1i}(x^{+}_{ij})$ and $\delta_{1i}(x^{-}_{ij})$ (including suitable boundary values) and equate them to zero. It is important that each equation contains the same number of positive and negative terms. Finally, we solve this system of two equations and obtain new values of $t$ and $k_2$, which yield a more accurate algorithm. To increase the accuracy further, one can repeat this procedure starting from the updated values of $t$ and $k_2$.
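The extremes δ⁺ and δ⁻ of the first-iteration error can also be located numerically, which is a convenient cross-check of a candidate set (t, k1, k2). The sketch below is a brute-force scan (our substitute for solving dδ/dx = 0 analytically, not the alignment algorithm of [10]) over every `step`-th float in [1, 8), using the bit-level seed and the modified step y1 = y0 (k2 − k1 x y0 y0 y0). The relative error is estimated from u = x·y1³ − 1 with a truncated series, and all names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

static float seed(float x, uint32_t magic)
{
    uint32_t ix;
    float y;
    memcpy(&ix, &x, sizeof ix);
    ix = magic - ix / 3u;
    memcpy(&y, &ix, sizeof y);
    return y;
}

/* Relative error y*cbrt(x) - 1 from u = x*y^3 - 1 (truncated series). */
static double rel_err(float x, float y)
{
    double u = (double)x * y * y * y - 1.0;
    return u / 3.0 - u * u / 9.0 + 5.0 * u * u * u / 81.0;
}

/* Extreme positive and negative relative errors of one modified
   Newton-Raphson step y1 = y0 * (k2 - k1 * x * y0 * y0 * y0). */
static void first_iter_extremes(uint32_t magic, float k1, float k2,
                                uint32_t step, double *pos, double *neg)
{
    assert(step > 0u && pos != NULL && neg != NULL);
    *pos = 0.0;
    *neg = 0.0;
    for (uint32_t b = 0x3F800000u; b < 0x41000000u; b += step) {
        float x, y;
        memcpy(&x, &b, sizeof x);
        y = seed(x, magic);
        y = y * (k2 - k1 * x * y * y * y);
        double e = rel_err(x, y);
        if (e > *pos) *pos = e;
        if (e < *neg) *neg = e;
    }
}
```

Run with the parameters of Algorithm 1 (R = 0x54a21d2a, k1 = 1/3, k2 = 4/3), the scan gives errors that are essentially all negative, down to about −2.3·10^{−3}; with the constants of Algorithm 2 the errors become approximately symmetric, at roughly ±1.2·10^{−3}.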

Now we start from the code of Algorithm 1; that is, we set the following zero approximations of the parameters: $t$ = 7.19856286048889160156250, corresponding to the magic constant $R$ = 0x54a21d2a (see [2]), $k_1$ = 0.33333333333333 and $k_2$ = 1.334.

We selected five maximum points: one in each of the first three intervals, [1, 2), [2, 4) and [4, $t$), and two boundary points, one of which is $x = 4$. At these points, we form five expressions for the relative errors according to formulas (9). Next, we form two equations from different combinations of positive and negative errors (each equation must contain the same number of positive and negative errors) [10]. We do not give the analytical expressions for these equations because they are quite cumbersome. The best option found, which gives the lowest maxima of positive and negative errors, is $t$ = 7.2086406427967456 (corresponding to the magic constant $R$ = 0x54a239b9) and $k_2$ = 1.3345156576807351.

Next, we perform one more iteration of this procedure. The second approximation gives $t$ = 7.2005756147691136 (corresponding to the magic constant $R$ = 0x54a223b4) and $k_2$ = 1.3345157539616962.
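The correspondence between $t$ and the magic constant $R$ used throughout this section can be reproduced with two small helpers. They assume (our reading, checked against several of the ($t$, $R$) pairs quoted in this paper) t = 4 + 12 m_R + 6·2^{−23}, where m_R is the fractional part of the mantissa of R, and an exponent field of 169 (R between 0x54800000 and 0x54ffffff); when converting back, the mantissa is truncated. The function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define N_M 8388608.0              /* 2^23 */
#define R_EXP_BITS 0x54800000u     /* sign 0, exponent field 169 */

/* t as a function of the magic constant R. */
static double t_from_R(uint32_t R)
{
    assert((R & 0xFF800000u) == R_EXP_BITS);
    double mR = (double)(R & 0x7FFFFFu) / N_M;
    return 4.0 + 12.0 * mR + 6.0 / N_M;
}

/* Magic constant for a given t in (4, 8); the mantissa is truncated. */
static uint32_t R_from_t(double t)
{
    assert(t > 4.0 + 6.0 / N_M && t < 8.0);
    double m = ((t - 4.0) * N_M - 6.0) / 12.0;
    return R_EXP_BITS + (uint32_t)m;
}
```

For example, `R_from_t(7.2005756147691136)` returns 0x54a223b4, and `t_from_R(0x548aae60)` returns exactly 5.0013587474822998046875.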

Similarly, analytical equations can be written for two modified Newton–Raphson iterations, and the corresponding optimal coefficients of the second iteration can be found.

The code of the resulting algorithm is as follows:

Algorithm 2.

```c
float InvCbrt10(float x) {
    float xh = x * 0.33333333;
    int i = *(int*)&x;          // read the bits of x as a 32-bit integer
    i = 0x54a223b4 - i / 3;
    float y = *(float*)&i;
    y = y * (1.33451575396f - xh * y * y * y);
    y = y * (1.333334485f - xh * y * y * y);
    return y;
}
```

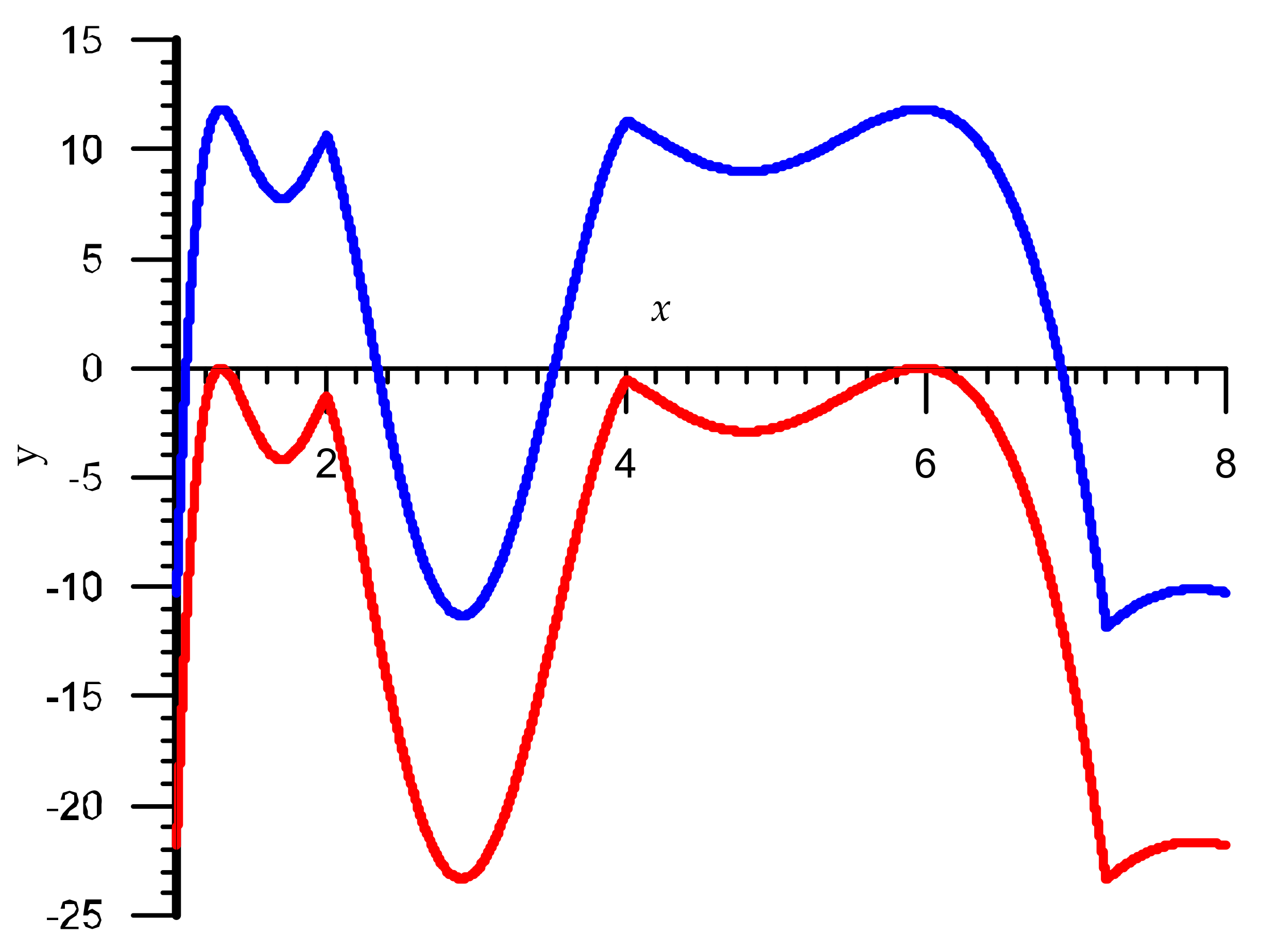

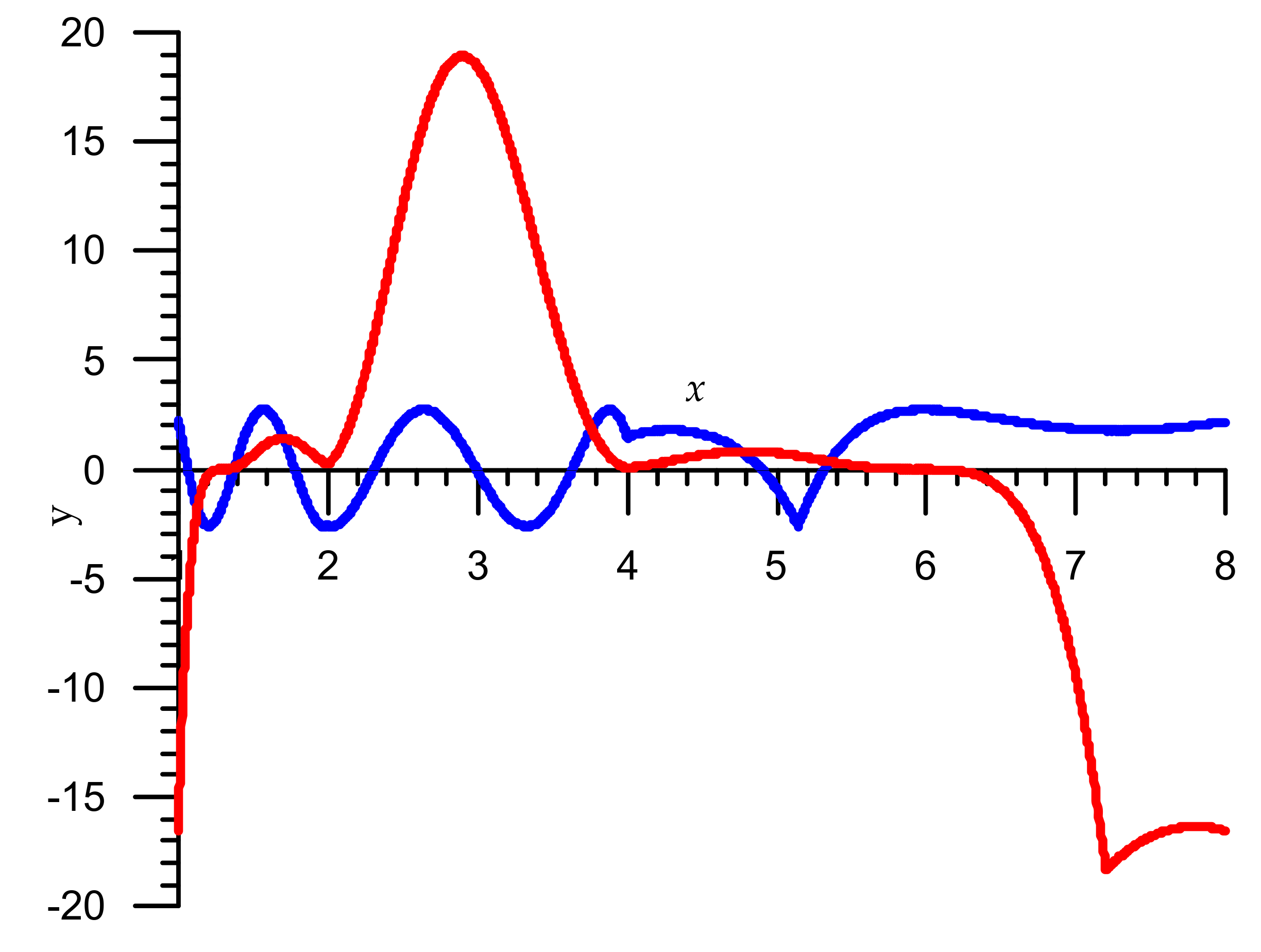

Comparative graphs of the relative errors of Algorithm 1 and our Algorithm 2 for the first iteration are shown in Figure 1, where the plotted values $y$ correspond to errors of $y \cdot 10^{-4}$. Note that the maximum relative errors for the first iteration are as follows:

The maximum relative errors for the second iteration are

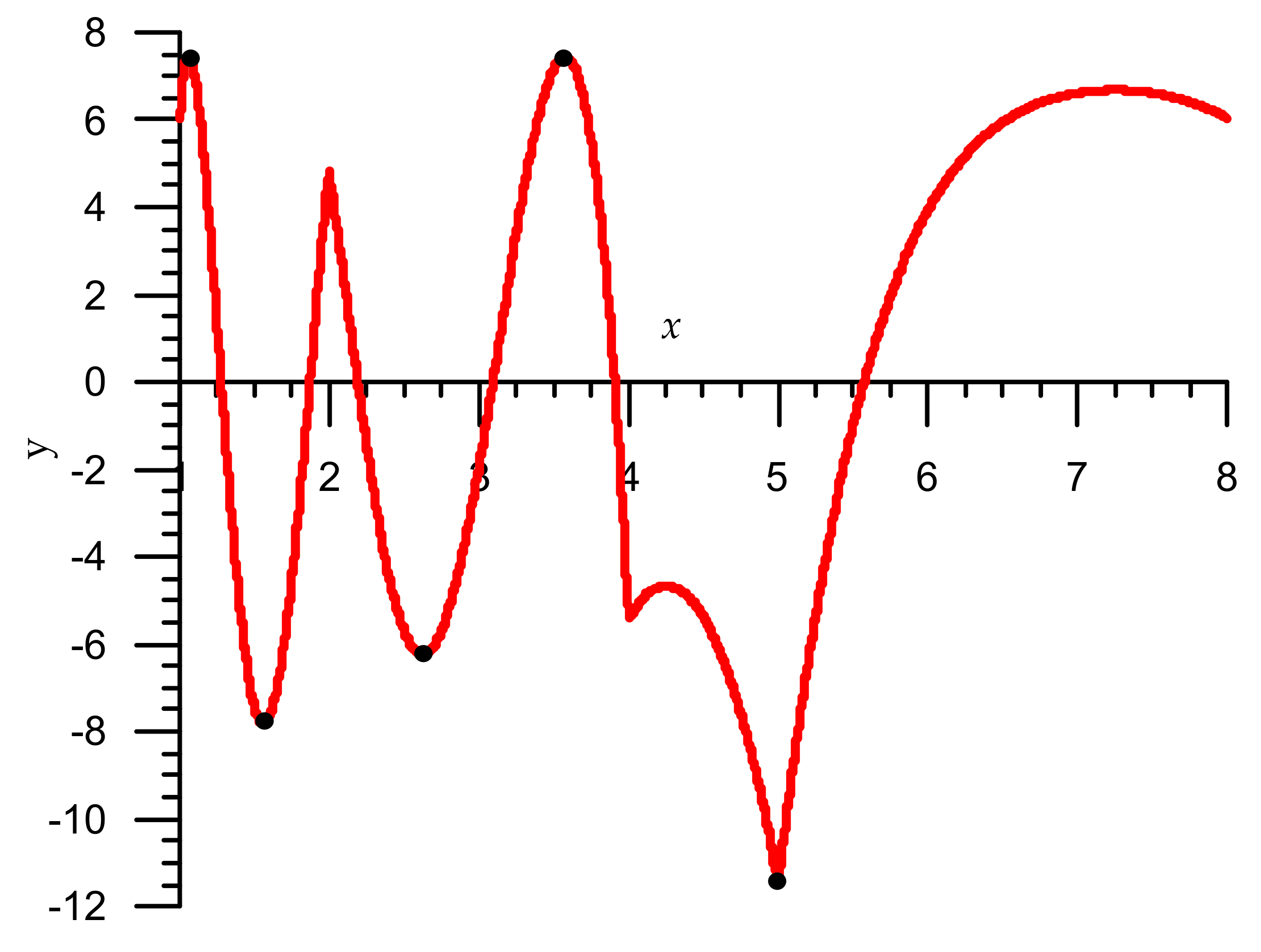

Among the Newton–Raphson-based variants, the best results are given by Algorithm 3, in which $k_1$ is also a free parameter (it is no longer fixed at 1/3). The five points of maxima on the interval [1, 8) are also controlled here, but they are located differently than for Algorithm 2 (Figure 2, where the plotted values $y$ correspond to errors of $y \cdot 10^{-4}$).

The algorithm for finding the unknown parameters is similar to the one described above. At the maximum points of the relative errors, we form five expressions for these errors according to Equation (8). Then, we form not two but three equations (to find the three unknown parameters $t$, $k_1$ and $k_2$) from different combinations of positive and negative errors (as before, each equation must contain the same number of positive and negative errors). Solving the resulting system of equations, we obtain the first approximation of the parameters. We then use the values found as initial approximations and repeat the procedure, and so on.

As an example, we set the initial approximations of the parameters $t$ = 5.0013587474822998046875 (corresponding to the magic constant $R$ = 0x548aae60), $k_1$ = …, and $k_2$ = 1.512. We select the following five maximum points (see Figure 2). For the first interval [1, 2): 1.0643720067216318545117069225172418 and 1.5625849217176437377929687500000016; for the second interval [2, 4): 3.5748550880488707008059220665584755 and 2.6426068842411041259765624999999972; and one boundary point. The first approximation then gives the following values:

$t$ = 5.1234715101106982878054945337942215 ($R$ = 0x548bfbd3),

$k_1$ = 0.5384362738855964773578619540917762,

$k_2$ = 1.5040684526500142722173589084203817.

The second approximation gives these values:

$t$ = 5.1412469466865047480874277903326086 ($R$ = 0x548c2c5c),

$k_1$ = 0.53548262076207845495640510408749138,

$k_2$ = 1.5019929400707382448275120237832709.

At the third approximation, we obtained the final values:

$t$ = 5.1461652964315795696493228918438855 ($R$ = 0x548c39cb),

$k_1$ = 0.53485024938484233191364545015941228,

$k_2$ = 1.5015480449170468503060851266551834.

The code of the resulting algorithm is as follows:

Algorithm 3.

```c
float InvCbrt11(float x) {
    int i = *(int*)&x;          // read the bits of x as a 32-bit integer
    i = 0x548c39cb - i / 3;
    float y = *(float*)&i;
    y = y * (1.5015480449f - 0.534850249f * x * y * y * y);
    y = y * (1.333333985f - 0.33333333f * x * y * y * y);
    return y;
}
```

The graph of the relative error for the first iteration of this code is shown in Figure 3, where the plotted values $y$ correspond to errors of $y \cdot 10^{-4}$. As can be seen, at the five selected points the relative errors are (approximately) equal in absolute value. The maximum relative errors of Algorithm 3 for the first iteration are:

$\delta_{\max} = 8.0837 \cdot 10^{-4}$, or $-\log_2 \delta_{\max} = 10.27$ correct bits; $\delta^{+}_{\max} = 8.0523 \cdot 10^{-4}$, $\delta^{-}_{\max} = -8.0837 \cdot 10^{-4}$.

The maximum relative errors for the second iteration are as follows:

$\delta_{\max} = 8.0803 \cdot 10^{-7}$, or $-\log_2 \delta_{\max} = 20.23$ correct bits; $\delta^{+}_{\max} = 7.698 \cdot 10^{-7}$, $\delta^{-}_{\max} = -8.0803 \cdot 10^{-7}$.

However, even more accurate results are given by algorithms using formulas with a higher order of convergence, for example, the Householder method of order 2, which has cubic convergence [1, 10]. For the inverse cube root, the classical Householder method of order 2 has the following form:

$$y_1 = y_0\left(1 + \frac{1}{3}\,r + \frac{2}{9}\,r^2\right), \quad r = 1 - x\,y_0^3,$$

or:

$$y_1 = y_0\left(\frac{14}{9} - c\left(\frac{7}{9} - \frac{2}{9}\,c\right)\right), \quad c = x\,y_0^3.$$

Here is a simplified Algorithm 4 with the classical Householder method of order 2 and the magic constant from Algorithm 1:

Algorithm 4.

```c
float InvCbrt20(float x) {
    float k1 = 1.5555555555f;   // 14/9
    float k2 = 0.7777777777f;   // 7/9
    float k3 = 0.222222222f;    // 2/9
    int i = *(int*)&x;          // read the bits of x as a 32-bit integer
    i = 0x54a21d2a - i / 3;
    float y = *(float*)&i;
    float c = x * y * y * y;
    y = y * (k1 - c * (k2 - k3 * c));    // Householder method of order 2
    c = 1.0f - x * y * y * y;//fmaf
    y = y * (1.0f + 0.3333333333f * c);//fmaf
    return y;
}
```

Here and in the subsequent listings, the comment “fmaf” marks lines where the fused multiply-add function fmaf() can be applied to reduce the calculation error. This function is implemented in many modern processors as a fast hardware instruction.

The maximum relative errors for the first and second iterations of this algorithm are:

$\delta_{\max} = 1.8922 \cdot 10^{-4}$, or $-\log_2 \delta_{\max} = 12.36$ correct bits;

$\delta_{\max} = 2.0021 \cdot 10^{-7}$, or $-\log_2 \delta_{\max} = 22.25$ correct bits.
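The constants of Algorithm 4 and its cubic convergence can be traced to a short expansion (our derivation, added for convenience). With the residual $r = 1 - x y_0^3$ and $c = x y_0^3 = 1 - r$, the exact inverse cube root equals $y_0 (1 - r)^{-1/3}$; truncating its binomial series after the $r^2$ term gives the Householder step, and the first omitted term gives the leading error:

$$
\begin{aligned}
\frac{1}{\sqrt[3]{x}} &= y_0\,(1-r)^{-1/3}
  = y_0\left(1 + \tfrac{1}{3}r + \tfrac{2}{9}r^2 + \tfrac{14}{81}r^3 + \dots\right),\\
y_1 &= y_0\left(1 + \tfrac{1}{3}r + \tfrac{2}{9}r^2\right)
     = y_0\left(\tfrac{14}{9} - c\left(\tfrac{7}{9} - \tfrac{2}{9}c\right)\right),\\
\delta_1 &\approx -\tfrac{14}{81}\,r^3 \approx \tfrac{14}{3}\,\delta_0^3,
  \qquad r \approx -3\,\delta_0 .
\end{aligned}
$$

Here $\delta_0$ and $\delta_1$ denote the relative errors before and after the step. A seed error of a few percent, typical of the magic-constant seeds, therefore leaves a first-iteration error of the order of $10^{-4}$, consistent with the value $1.8922 \cdot 10^{-4}$ reported for Algorithm 4.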

However, Algorithm 5, based on the modified Householder method of order 2, has a much higher accuracy. The modification consists of choosing optimal values of the coefficients $k_1$, $k_2$, $k_3$ of the iterative formula, which gives much smaller errors. For example, the first iteration (as in Algorithm 4) has the form:

$$y_1 = y_0\left(k_1 - c\,(k_2 - k_3\,c)\right), \quad c = x\,y_0^3,$$

and contains six multiplications and two subtractions. The algorithm for finding the unknown parameters $t$, $k_1$, $k_2$, $k_3$ is similar to the one above. First, we set the zero approximations of the parameters: $t$ = 5.0680007152557373046875 (corresponding to the magic constant $R$ = 0x548b645a), $k_1$ = 1.75183, $k_2$ = 1.25, $k_3$ = 0.509.

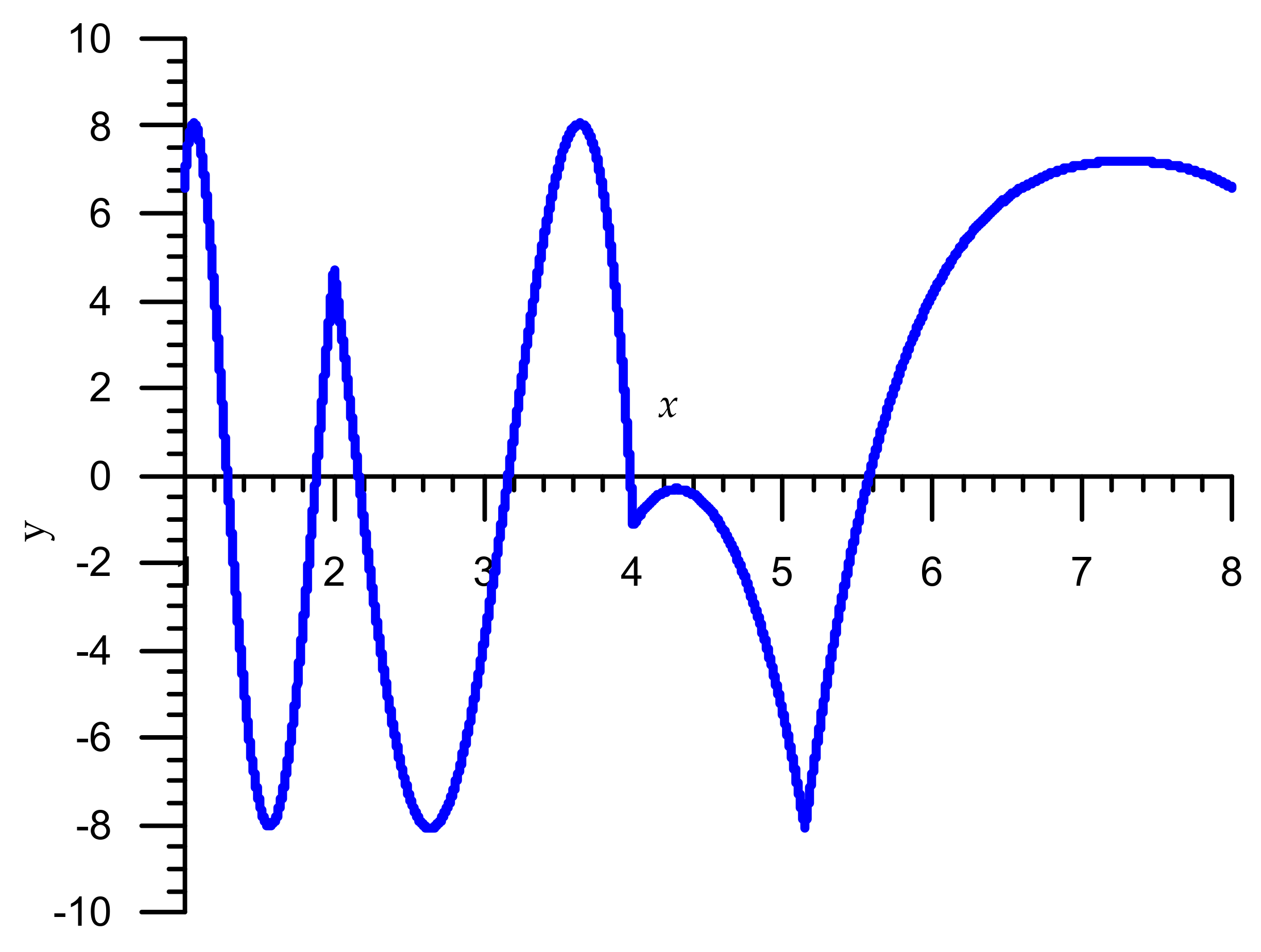

In this case, the graph of the relative error for the first iteration has the form shown in Figure 4, where the plotted values $y$ correspond to errors of $y \cdot 10^{-5}$. Then, a system of four equations is constructed for nine points of maxima on the interval [1, 8) (see Figure 4), and the values of the parameters $t$, $k_1$, $k_2$, $k_3$ are refined, starting from the zero approximations given above. After two approximations, we obtain the following values:

$t$ = 5.1408540319298113766739657997215219 (corresponding to the magic constant $R$ = 0x548c2b4a),

$k_1$ = 1.7523196763699390234751750023038468,

$k_2$ = 1.2509524245066599988510127816507199,

$k_3$ = 0.50938182920440939104272244099570921.

The code of the algorithm is as follows:

Algorithm 5.

```c
float InvCbrt21(float x) {
    float k1 = 1.752319676f;
    float k2 = 1.2509524245f;
    float k3 = 0.5093818292f;
    int i = *(int*)&x;          // read the bits of x as a 32-bit integer
    i = 0x548c2b4b - i / 3;
    float y = *(float*)&i;
    float c = x * y * y * y;
    y = y * (k1 - c * (k2 - k3 * c));    // modified Householder method of order 2
    c = 1.0f - x * y * y * y;//fmaf
    y = y * (1.0f + 0.333333333333f * c);//fmaf
    return y;
}
```

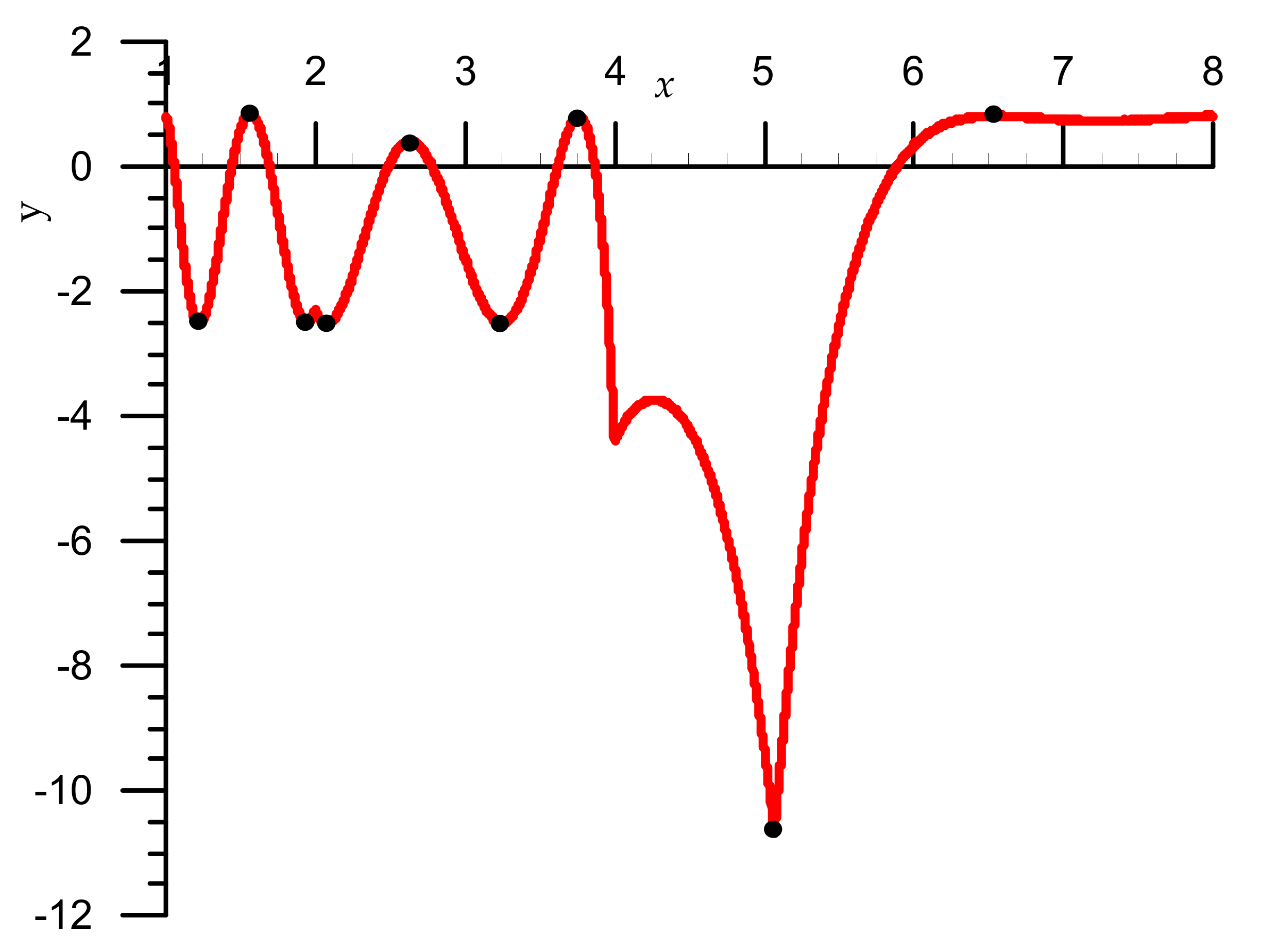

Figure 5 shows comparative graphs of the relative errors of Algorithm 4 (red) and Algorithm 5 (blue) after the first iteration, where the plotted values $y$ correspond to errors of $y \cdot 10^{-5}$. Figure 5 shows that Algorithm 5 reduces the error of the first iteration significantly (about 7 times), which also improves the second iteration (about 1.5 times). The maximum relative errors of Algorithm 5 for the first iteration are:

$\delta_{\max} = 2.686 \cdot 10^{-5}$, or $-\log_2 \delta_{\max} = 15.18$ correct bits.

The maximum relative errors for the second iteration are:

$\delta_{\max} = 1.3301 \cdot 10^{-7}$, or $-\log_2 \delta_{\max} = 22.84$ correct bits [1].

To calculate the cube root (Algorithm 6), the last lines of the code related to the second iteration (in Algorithm 5, as well as in any of the above algorithms)

```c
c = 1.0f - x*y*y*y;
y = y*(1.0f + 0.333333333333f*c);//fmaf
return y;
```

should be written as follows:

Algorithm 6.

```c
float d = x*y*y;                        // d approximates cbrt(x)
c = 1.0f - d*y;//fmaf                   // residual c = 1 - x*y^3
y = d*(1.0f + 0.666666666667f*c);//fmaf // (1 - c)^(-2/3) ~ 1 + (2/3)c
return y;
```
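A self-contained sketch of the cube-root variant, built on the first iteration of Algorithm 5 (its magic constant and coefficients are copied from the listing; the names `cbrt21` and `max_cbrt_err` and the sampling scan are ours). In the last step, d = x·y² approximates ∛x and the exact value is d·(1 − c)^{−2/3} with c = 1 − d·y, so the first-order correction factor is 1 + (2/3)c. The scan compares this coefficient with 1/3, for which the correction removes only half of the error of d and the result stays at the accuracy of the first iteration.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Cube root: one step of Algorithm 5 for y ~ x^(-1/3), then a correction of
   d = x*y*y ~ x^(1/3) with the residual c = 1 - d*y and coefficient coef. */
static float cbrt21(float x, float coef)
{
    assert(x > 0.0f);
    const float k1 = 1.752319676f, k2 = 1.2509524245f, k3 = 0.5093818292f;
    uint32_t i;
    float y;
    memcpy(&i, &x, sizeof i);
    i = 0x548c2b4bu - i / 3u;
    memcpy(&y, &i, sizeof y);
    float c = x * y * y * y;
    y = y * (k1 - c * (k2 - k3 * c));   /* first iteration */
    float d = x * y * y;                /* d ~ cbrt(x) */
    c = 1.0f - d * y;                   /* c = 1 - x*y^3 */
    return d * (1.0f + coef * c);
}

/* Largest |z/cbrt(x) - 1| over every step-th float in [1, 8), estimated
   from u = z^3/x - 1 via (1+u)^(1/3) - 1 ~ u/3 - u^2/9. */
static double max_cbrt_err(float coef, uint32_t step)
{
    double worst = 0.0;
    assert(step > 0u);
    for (uint32_t b = 0x3F800000u; b < 0x41000000u; b += step) {
        float x;
        memcpy(&x, &b, sizeof x);
        double z = cbrt21(x, coef);
        double u = z * z * z / x - 1.0;
        double e = u / 3.0 - u * u / 9.0;
        if (e < 0.0) e = -e;
        if (e > worst) worst = e;
    }
    return worst;
}
```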

4. Evaluation of Speed and Accuracy for Different Types of Microcontrollers

We investigated the relative error of the aforementioned algorithms on an STM32F767ZIT6 microcontroller (manufacturer: STMicroelectronics) [22]. We determined the maximum negative and positive errors of two iterations for the different algorithms (Table 1). These errors were calculated according to the formula `InvCbrtK(x)*pow((double)x,1./3.)-1.` for all float numbers in [1, 8], where K ∈ {1, 10, 11, 20, 21}. The maximum error was determined by the formula $\delta_{\max} = \max(|\delta^{-}_{\max}|, |\delta^{+}_{\max}|)$. Table 1 shows that Algorithm 5 gives the most accurate results in both iterations. The last column contains the errors of the standard library expression 1.f/cbrtf(x). According to Table 1, the error of our Algorithm 5 ($1.3301 \cdot 10^{-7}$) is less than half of the error of the library function ($2.7947 \cdot 10^{-7}$).
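The error measurement described above can be sketched as follows. To keep the example free of the math library, the relative error InvCbrtK(x)·∛x − 1 is estimated from u = x·y³ − 1 via (1 + u)^{1/3} − 1 ≈ u/3 − u²/9 (accurate to far better than 10^{−12} at these error levels) instead of `pow()`. The bits are reinterpreted with `memcpy` rather than pointer casts, every `step`-th float in [1, 8] is visited, and all names are hypothetical. Results on a desktop compiler may differ slightly from the microcontroller figures, for example when the compiler contracts expressions into fused multiply-adds.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

static float from_bits(uint32_t u) { float f; memcpy(&f, &u, sizeof f); return f; }
static uint32_t to_bits(float f) { uint32_t u; memcpy(&u, &f, sizeof u); return u; }

/* Algorithm 3 (InvCbrt11). */
static float inv_cbrt11(float x)
{
    float y = from_bits(0x548c39cbu - to_bits(x) / 3u);
    y = y * (1.5015480449f - 0.534850249f * x * y * y * y);
    y = y * (1.333333985f - 0.33333333f * x * y * y * y);
    return y;
}

/* Algorithm 5 (InvCbrt21). */
static float inv_cbrt21(float x)
{
    const float k1 = 1.752319676f, k2 = 1.2509524245f, k3 = 0.5093818292f;
    float y = from_bits(0x548c2b4bu - to_bits(x) / 3u);
    float c = x * y * y * y;
    y = y * (k1 - c * (k2 - k3 * c));
    c = 1.0f - x * y * y * y;
    y = y * (1.0f + 0.333333333333f * c);
    return y;
}

typedef struct { double neg, pos, max; } err_stats;

/* Signed extreme relative errors f(x)*cbrt(x) - 1 over every step-th
   float in [1, 8], and max(|neg|, |pos|). */
static err_stats measure(float (*f)(float), uint32_t step)
{
    err_stats s = {0.0, 0.0, 0.0};
    assert(step > 0u);
    for (uint32_t b = 0x3F800000u; b <= 0x41000000u; b += step) {
        float x = from_bits(b);
        double y = f(x);
        double u = x * y * y * y - 1.0;
        double e = u / 3.0 - u * u / 9.0;
        if (e < s.neg) s.neg = e;
        if (e > s.pos) s.pos = e;
    }
    s.max = (-s.neg > s.pos) ? -s.neg : s.pos;
    return s;
}
```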

We also measured the execution time of the algorithms. Testing was performed on four 32-bit microcontrollers with an FPU:

TM4C123GH6PM (manufacturer: Texas Instruments Incorporated, Austin, TX, USA): microcontroller with an 80 MHz clock frequency; the compilers used were arm-none-eabi-gcc and arm-none-eabi-g++ (version 8.3.1).

STM32L432KC (manufacturer: STMicroelectronics, Geneva, Switzerland): microcontroller with an 80 MHz clock frequency; the compilers used were arm-none-eabi-gcc and arm-none-eabi-g++ (version 9.2.1).

STM32F767ZIT6 (manufacturer: STMicroelectronics): microcontroller with a 216 MHz clock frequency; the compilers used were arm-none-eabi-gcc and arm-none-eabi-g++ (version 9.2.1).

ESP32-D0WDQ6 (manufacturer: Espressif Systems, Shanghai, China): microcontroller with a 240 MHz clock frequency; the compilers used were xtensa-esp32-elf-gcc and xtensa-esp32-elf-g++ [23].

We compiled the code with two optimization levels: -Os (smallest code) and -O3 (fastest code). In all cases, -O3 gave the best results; therefore, we report only the -O3 results.

Table 2 shows the average calculation times of InvCbrtK(x) for Iteration I, obtained from 10,000,000 tests. The results show that Algorithm 3 has the shortest calculation time on all types of microcontrollers. Similar results are shown in Table 3 for Iteration II. This table has an additional column with the results of the library function 1.f/cbrtf(). On the first three microcontrollers, Algorithm 5 is more than twice as fast as the library function, and on the ESP32-D0WDQ6 it is nine times as fast.

If we compare microcontrollers, we can see that the STM32F767ZIT6 is the fastest.

Table 4 shows the performance of the algorithms on the different microcontrollers for Iteration I, expressed in clock cycles, i.e., as the product of the inverse cube root calculation time and the clock frequency of the microcontroller.
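On a desktop host, the timing procedure (average time per call over many calls, then conversion to cycles as time × clock frequency) can be sketched with the standard `clock()` function from `<time.h>`. This is only an illustration under our assumptions: on the microcontrollers a hardware cycle counter would normally be read instead, which we do not show; the measured time also includes the loop and the input computation; the volatile sink merely keeps the compiler from discarding the calls; and all names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <time.h>

/* Algorithm 3 (InvCbrt11) as the function under test. */
static float inv_cbrt11(float x)
{
    uint32_t i;
    float y;
    memcpy(&i, &x, sizeof i);
    i = 0x548c39cbu - i / 3u;
    memcpy(&y, &i, sizeof y);
    y = y * (1.5015480449f - 0.534850249f * x * y * y * y);
    y = y * (1.333333985f - 0.33333333f * x * y * y * y);
    return y;
}

static volatile float sink;   /* keeps the calls from being optimized away */

/* Average time of one call in nanoseconds over n calls with inputs in [1, 8). */
static double avg_ns(float (*f)(float), long n)
{
    assert(n > 0);
    clock_t start = clock();
    for (long i = 0; i < n; ++i)
        sink = f(1.0f + 7.0f * (float)(i % 1000) / 1000.0f);
    clock_t stop = clock();
    return 1e9 * (double)(stop - start) / CLOCKS_PER_SEC / (double)n;
}

/* Cycles per call = time per call * clock frequency. */
static double ns_to_cycles(double ns, double f_hz)
{
    return ns * 1e-9 * f_hz;
}
```

For instance, a hypothetical 100 ns per call at the 216 MHz clock of the STM32F767ZIT6 corresponds to 100·10^{−9}·216·10^6 = 21.6 cycles.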

Table 5 shows the results for Iteration II; it also has an additional column for the library function 1.f/cbrtf(). According to this table, Algorithm 5 needs about half as many cycles as the library function, and on the ESP32-D0WDQ6 about one ninth as many.