1. Introduction

Low energy consumption is a key requirement in the design of modern embedded systems, affecting the size, cost, user experience, and the capability to integrate more high-level features. Single Instruction-Set Architecture (Single-ISA) Heterogeneous Multi-Processors (HMP) are known for delivering a significantly higher performance-power ratio than their counterparts. There exist many commercially available designs in the embedded and mobile world [

1,

2,

3]. Nevertheless, in this type of architecture it is increasingly more complicated to find the number of cores, operating frequency, and voltage that optimize performance and energy consumption to meet the requirements of a given application [

4,

5,

6]. This complexity increases when application characteristics need to be extracted at runtime to increase performance or save energy at different phases during the execution of an application [

7].

One of the commercial widely used heterogeneous architectures is the ARM big.LITTLE [

2]. It consists of two clusters with two types of processing cores, each one containing one or more cores. Cluster type

big is composed of higher performance larger cores that are also more power-hungry. Cluster type

LITTLE consists of smaller cores that are slower and more energy-efficient. The increased sophistication of this type of architecture delivers a challenging task to develop better energy management solutions. Running a multi-threaded application only on big cores may not justify the performance gain related to energy consumption. Also, using only small cores may not be the best choice for reducing energy consumption, as the application may suffer a significant increase in execution time. Besides, it is possible to scale up or scale down the core frequencies to improve the performance or save energy, respectively. Each arrangement, or configuration, of operating frequency and the number of available cores of each cluster type to execute a given application may produce a different performance-energy trade-off.

As simple approach to obtaining one or more configurations in HMPs that provide most beneficial performance-energy trade-offs is to collect the performance and energy consumption of a given application in all available configurations. However, in current heterogeneous systems, this would require a high cost to be performed on-line. For example, an embedded system with two clusters each with four cores and 16 clock frequency levels yields 4096 configurations to explore. Such a large number of configurations could be unfeasible for on-line approaches since, in many cases, the search process for the best configuration can outlast the execution itself. Even if executed offline, although it could be possible to evaluate all configuration, the expected overheads will make the method unfeasible.

Considering the vast diversity of performance-energy trade-offs that the large configuration space of HMP systems yields for a given application, the understanding of these trade-offs becomes critical to energy-efficient software development and operation [

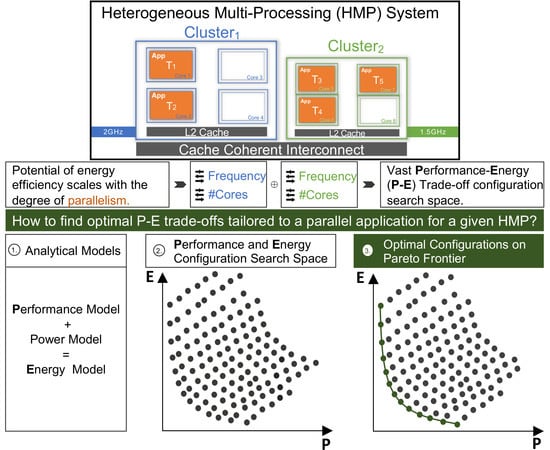

8]. We present a novel methodology to find the best performance and energy trade-off configurations of parallel applications running on (HMP) systems. It is intended to be used by the operating system to make scheduling decisions at runtime according to performance and energy consumption requirements of the given application and according to the system it is running on. For that, we propose analytical models for performance and power that are fitted offline using a few measurements of the application’s execution.

The combination of the outcomes of the performance and power models yields the energy model that can be used to estimate the energy consumption of all configurations for a given application on a specific architecture. The performance and energy models are used to assess the whole configuration space, commonly in the order of thousands of points, and the outcomes are used to select the configurations that lay on the performance and energy Pareto frontier, whose number is often in the order of tens of points. The Pareto frontier is the set of all optimal solutions from which it is not possible to improve a criterion (performance or energy) without degrading another. Hence, we propose a methodology that can estimate the best relationship between performance and energy consumption, without requiring an expensive exploration of the full search space.

We employed the methodology in an ODROID-XU3 board on eight multi-thread applications. We present the fitting of the proposed models and the trade-off Pareto frontier configurations for each application. The results show that the proposed methodology and models can successfully estimate the Pareto trade-off consistently with measured data. On average, we obtained gains in performance and energy consumption in the order of 33% and 40% respectively, when compared to the performance, ondemand and powersave standard Linux DVFS governors. The main contributions of this paper are:

Simple analytical models for HMP performance and power consumption that only require measurements of execution time and power, avoiding performance hardware counters, which may not be accessible on all architectures.

A practical methodology to sample the configuration space of a given application efficiently and select points that are estimated to be Pareto-optimal regarding trade-offs of performance and energy consumption.

Results show that the specific knowledge of the application performance and the system power embedded into analytical models can deliver significantly better results than conventional DVFS governors.

The technique is easy to use on new applications running on the same HMP system; the power model of the HMP system can be reused, and the performance model can be re-fitted based solely on the execution time of the new application.

The rest of this paper is arranged as follows.

Section 2 presents our motivation and places this paper in the context of existing research.

Section 3 introduces our modeling techniques and our methodology to find the trade-off configurations.

Section 4 describes the experimental setup used to evaluate our methodology, and compares our results to those obtained using the standard Linux DVFS governors. Our conclusions and a discussion of future work follow in

Section 5.

2. Related Work

We attempt to distinguish the main characteristics that differentiate our work from others with respect to existing approaches for finding applications’ configurations to improve energy-efficiency. The most relevant works are characterised in

Table 1.

Research can be categorized concerning the application scenario focus. Some works concentrate on concurrent execution of multiple applications [

11,

13,

17] and others exclusively on single-application scenarios [

9,

10,

12]. Tzilis et al. [

13] propose a runtime manager that estimates the performance and power of applications choosing the most efficient configuration by making use of heuristics to select candidate solutions. They do not consider multi-threaded versions, but instead, they allow multiple instances of the same application to run simultaneously. Indeed, energy efficient management of concurrent applications is harder to accomplish due to dynamically changing situations. However, guarantee an energy consumption requirement with a minimum performance level of a single application affects the energy efficiency of a whole cluster-based system.

Thus, considering this aspect important, our methodology manages energy and performance requirements of individual applications as approached by other works [

9,

11,

12,

15]. Some works are only concerned about the performance constraints [

13,

14]. Furthermore, for parallel or multi-threaded applications, some works like ours extend Gustafson’s [

18] and Amdahl’s law [

19] in order to characterize the application [

14,

16,

20]. Others attempt to identify the phases of each application thread through monitoring performance counters [

7,

11]. As shown in Loghin et al. [

14], the applications’ workloads with large sequential fractions present small energy savings regardless of the heterogeneous processing system. Therefore, it is clearly vital to exploit the energy efficiency in HPM architectures as well as the parallelism of single multi-threaded applications.

A notable number of power-management approaches target a reduction of power dissipation and a performance increase by combining three techniques. First, Dynamic Voltage and Frequency Scaling (DVFS) [

21], which selects the optimal operating frequency. Second, Dynamic Power Management (DPM) [

22], which turns off system components that are idle. Finally, application placement (allocation) [

23], which determines the number of cores or the cluster type an application executes on. Some strategies decide the application placement without taking control of the DVFS aspect [

14,

24,

25,

26]. Gupta et al. [

11] and Tzilis et al. [

13] have different approaches, yet combine DVFS, DPM, and application placement by setting the operating frequency/voltage and the type and number of active cores simultaneously. Also, there are works where the only concern is finding the best cluster frequency performances [

9,

12,

14,

16]. In this work, we find the Pareto-optimal core and frequency configurations in heterogeneous architecture that deliver the optimal performance-energy trade-off. Consequently, our method merges DVFS and DPM by choosing the operating frequency/voltage and number of active cores of each cluster type. Thus, our approach covers two of the main techniques for saving energy.

Next, we describe the points that are essential to guide the configuration choice. Generally, we distinguish: (i) offline application profiling; (ii) runtime performance or power monitoring; (iii) predictions performed using a model. Gupta et al. [

11] characterize the application by collecting power consumption, processing time and six performance counters. They use the data to train classifiers that map performance counters to optimal configurations. Then, at runtime, these classifiers and performance counters are used to select the optimal configuration concerning a specific metric, e.g. energy consumption. The major problem of this approach is the growth of the number of cores and cluster types, leading to this strategy losing reliability. Tzilis et al. [

13] use a strategy that requires a total number of runs that is linear concerning the number of cores. They use a similar number of performance counters as [

11] to profile the application. When the profiled application spawns, they match the online measurement to the closest profiled value and use it as a starting point to predict its performance in the current situation. De Sensi et al. [

10] focus on a single application and also monitor data to refine power consumption and throughput prediction models. They compared the predicted outcomes from their models against the data monitored in the current configuration. In case the prediction error is lower than a defined threshold, the calibration phase completes. The problems of online monitoring are the overhead incurred in refining models or making decisions. In contrast, our proposal characterises the application performance and power consumption of a given HMP system using only a few strategically sampled offline measurements. Further, they are used for predicting the optimal performance and energy consumption trade-off configurations.

A sort of performance and power modelling is commonly used to guide the configuration selection. Many works [

10,

11,

13,

15,

17] are based on analytical models that rely upon runtime measurements retrieved from traces or instrumentation to collect performance counters, which is often not a trivial process. Usually, performance and energy events should be recorded in different runs to prevent counter multiplexing. Otherwise, it may cause application disturbance and decrease measurement accuracy [

27]. As in other works [

9,

12,

16], our approach only relies on a minimum set of parameters, such as the number of cores of each cluster and the cluster frequency. Thus, our approach is simple to automate because it does not require any external instrumentation tools, and its utilisation across different architectures is less restricted.

Loghin and Teo [

14] derived equations to evaluate the speedup and energy savings of modern shared-memory homogeneous and heterogeneous computer systems. They introduced two parameters: the inter-core speedup (ICS), describing the execution time relation among diverse types of cores in a heterogeneous system for a given workload; and the active power fraction (APF), representing the ratio between the average active power of one core and the idle power of the whole system. They validated their work against measurements on different types of heterogeneous and homogeneous modern multicore systems. Their results show that energy savings are limited by the system’s APF, particularly on performance cores and by the workload’s sequential fraction when running on more efficient cores. We used a similar approach when devising our performance and power equations. Our performance model has a parameter that represents the median of the larger (big) cores speedup compared with the smaller (LITTLE) cores. The value of this parameter can be determined independently of any target application, i.e., it is application-agnostic. Our power model describes the dynamic and the static power consumption of the active cores of each cluster type. The aim is to capture the properties of the hardware architecture so that we do not need to fit the power model and the performance speedup parameter for each application. Ultimately, our energy model is a combination of a performance model and an application-agnostic power model.

The main concern with using heuristics, models refinement or any other strategy to make decisions at runtime is the added overhead. Thus, the most significant contribution of our work is to avoid a full exploration of the search space to find the best configurations to execute an application at runtime. A similar goal, but with a different approach, has been pursued by De Sensi [

16]. However, the architecture is not HMP, and it offers only 312 possibles configurations. Moreover, Endrei et. al [

9] and Manumachu et al. [

12] use Pareto Frontier as a strategy to minimize energy consumption without degrading application performance. Still, they do not work on ISA heterogeneous architecture as Gupta et al. [

11] and our approach. Furthermore, our approach has the advantage of not depending on performance counters, resulting in less restricted employment.

Using the Pareto frontier method, we can find optimal configurations, since it represents the optimal trade-off between energy consumption and performance for the target system [

28]. Thus, users can take advantage of configurations at the Pareto frontier to guide development toward energy-optimal computing [

29]. Also, these configurations can provide a power-constrained performance optimization to identify the optimized performance under a power budget [

30]. Moreover, the Pareto-optimal fronts offer a trade-off zone that can be used to produce the optimal performance and energy efficiency prediction of a model [

9,

31].

In summary, we propose a method to find the optimal energy-performance trade-offs on a two-cluster heterogeneous architecture of a single multi-threaded application. The energy estimation is obtained by combining the offline performance and power models. The offline performance application characterization is straightforward; it does not use performance counters. The power model involves only architecture-specific parameters, so it is sufficient to fit only once for a specific platform. The individual applications’ performance and power requirements can be achieved by the energy-performance trade-off zone provided by the Pareto frontier.

3. Estimating Pareto-Optimal HMP Configurations

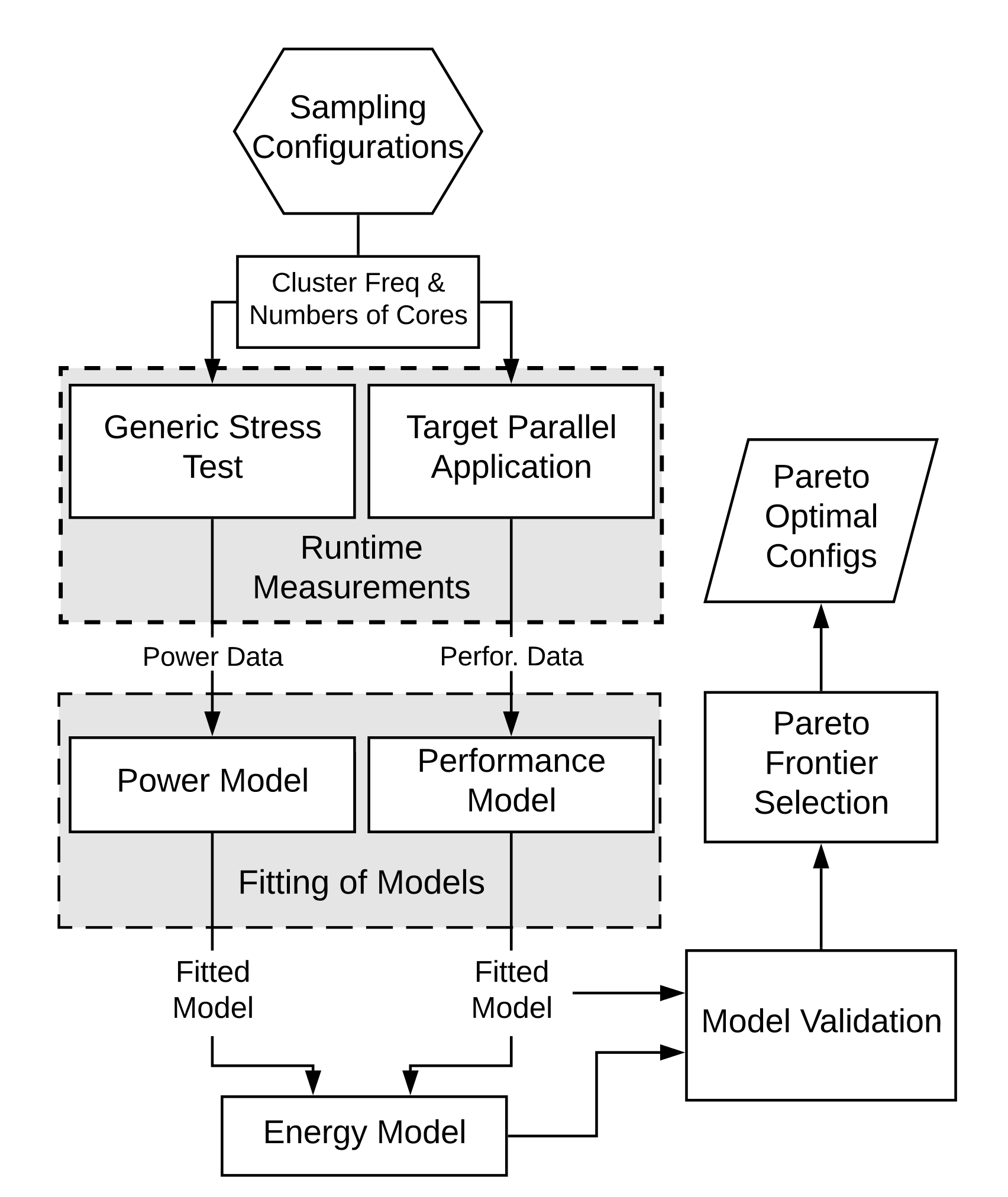

This section describes the methodology proposed to estimate the Pareto-optimal configurations for HMP systems. A configuration is defined as a point in the vast parametric space that defines parallel software operation, including the number of processing cores used in each cluster and the operating frequency of those cores. We assume that the clusters have their own voltage and frequency domain, which is shared among the cores of the cluster. Design attributes such as the number of clusters and the number of cores and frequency levels of each cluster are known parameters. The model matches a large subset of actual HMP systems, to which the proposed methodology can be applied. Five steps compose this methodology: sampling of configurations, runtime measurements, fitting of models, model validation, and the Pareto frontier selection.

Figure 1 presents an overview of these steps.

In the first step—sampling of configurations, we need to carefully choose the hardware configurations that will be used to obtain the required data to fit the parameters of the models. To obtain parameters that generate higher accuracy, data measurements should be as diverse as possible, i.e., two or more measurement points should not have a similar number of cores and operating frequency, since those points will most likely not add significant information to the modeling. For this reason, we used a Halton low discrepancy number generator to choose configurations that are not too similar to each other. Morokoff et al. [

32] describe how the low discrepancy number generator ensures that it covers the domain more evenly compared to pseudo-random number generators. Also, De Sensi [

16] shows that by picking equidistributed points, it is possible to achieve higher accuracy compared to pseudo-random selection of the points.

In the second step—runtime measurements, we evaluate the sampled configurations at runtime, measuring performance and power data to fit the models. First, for each configuration, we execute a generic stress-test to stress the processor and capture power consumption. Second, we execute the target parallel application to measure execution time for another subset of sampled configurations using the Halton number generator.

In the third step—fitting of models, we fit the measured data to the proposed architecture- and application-specific performance model and the architecture-specific, application-agnostic power model. The performance model intends to estimate the execution time of a parallel application for any point in the configuration space. We adjust the parameters of the power and performance models separately. The goal of the proposed power model is not to be accurate per se, but to capture the trend changes in power when a change of the operating frequency and number of active cores occurs, independent of the application running. The power consumption prediction accuracy is not strictly crucial if there are no power restrictions to apply on system budget. More importantly, we aim for decreasing energy consumption in HMP since it has more impact on batteries, which is essential on embedded devices. Moreover, being application-agnostic avoids the need for power measurements for every application while preserving the architecture’s signature. Thus, we do not need to fit the power model for each application. Besides, the process of acquiring these measurements also increases the difficulty of automating these models as practical tools. For each model, we use a non-linear regression minimizing the Root Mean Square Error, given by

where

is the

ith measured data of

,

is the

ith estimated value given by the model, and

n is the total number of configurations.

Section 3.1 and

Section 3.2 detail the proposed performance and power consumption models, respectively. The proposed energy model is a combination of the power consumption model—previously fitted to a specific HMP system, and the performance model—fitted to a given parallel application and the same HMP system.

Section 3.3 presents the proposed energy model.

In the fourth step—model validation, we evaluate the performance and energy consumption models for every point in the vast configuration space that defines parallel software operation.

Finally, in the fifth step—Pareto frontier selection, we select all modeled configurations whose models’ outcomes lay on the Pareto frontier, i.e., those configurations that yield the optimal performance and energy consumption trade-offs.

3.1. Application Performance Modelling

In this section, we devise a performance model for an HMP platform with two processing clusters. We assume that a parallel application coherently runs in

b big cores and

L LITTLE cores. Moreover, we do not consider or require different frequencies for each core in a cluster, i.e., all the cores in a cluster run at the same frequency. Inspired by other works [

33,

34,

35], we devised the following model that can be used to estimate the performance of a given parallel application running on a two-cluster HMP.

where

is the performance target, that is the total execution time goal for a given application.

is the application’s execution time running on a single LITTLE core with operating frequency

F, which is not necessarily the same frequency of

. The number of active big and LITTLE cores in the processor is denoted by

b and

L, respectively.

and

are the operating frequencies of the big and LITTLE cores, respectively. perf is the performance improvement when moving computation from a LITTLE to a big core, independent of an application. Note that, perf depends on the hardware design, so we assume it has a fixed value representing how fast a big core is when compared to any LITTLE core. Thus, we do not need to fit this performance speedup parameter for each application.

The value of

f represents the parallel fraction of the application. It is the only parameter that characterizes the application. Indeed, by modelling complex parallel applications using their parallel fraction only, we are neglecting the effects that a frequency change has on the performance of the memory hierarchy, on the parallel overhead, and on the distribution of load across the heterogeneous cores [

36]. Besides, as those features should limit the parallel speedup, it is expected that the sequential fraction includes them. It is our priority to keep our model straightforward to achieve low runtime overheads. We observed that, despite the many simplifications, the model still provides reasonably accurate energy consumption estimations.

We consider that the sequential fraction of the application is accelerated by using one of the big cores when such core is available. In this approach, the sequential portion expects to include the parallel overhead, i.e., communication or synchronization among the threads, which does not accelerate by making the big core run faster—as it would do for actual sequential code. Otherwise, we can extend Equation (

2) as follows:

In Equation (

3), when there is no big core available or active, the application would not take advantage of the performance improvements from the big cores, therefore the parameter perf is removed. Also, the big core operating frequency is not used by the parallel application.

This performance model assumes that the modelled parallel workload is dynamically distributed over the running threads. If that is not the case for the application, cores may run out of work and become idle while others might become overloaded and delay the end of the program’s execution. Thus, the performance model may then make incorrect estimations as idle cores still consume power. This can lead to improper power predictions, resulting in inadequate energy predictions. Although, this is a limitation of the proposed approach, the advantages in terms of performance gain and energy reduction presented in this work is an argument for pursuing this type of workload balancing scheme.

3.2. Power Consumption Modelling

In this section, we devise a power model for an HMP platform with two clusters. Assuming that the transistors used on both types of cores have the same power consumption behaviour with respect to their operating frequency and voltages, we can model the power consumption of the big and LITTLE cores, as follows:

where

is the power consumption of

b big cores running at frequency

,

is the power consumption of the

L LITTLE cores running at frequency

.

Consider the following equation to describe the power consumption of a processor running at frequency

F [

37,

38,

39].

where

a is the average activity factor,

C is the load capacitance,

V is the supply voltage, and

is the average leakage current. Also, consider the equation that defines the maximum operating frequency for a transistor [

40,

41]:

where

denotes the threshold voltage,

is a constant, and

h is a technology-dependent value often assumed to be within the range [2,3]. Since performance is proportional to the operating frequency, digital circuits often operate with voltage and frequency pairs that push frequency closer to the maximum. If maximum performance is not required, the voltage is scaled down with frequency to maintain this policy [

42]. Usually, device vendors provide a table of discrete values of supply voltages that the processing chip can operate beneath by maximum frequencies [

43]. Generally, the supply voltage

V can be perceived as a linear function of the frequency depicted such as follows:

where

is any constant. Some authors make a similar approach [

42,

44] showing that it is reasonable and common in the literature to make such approximation. Furthermore, we reiterate that we intend to capture the trend of how a change in the operating frequency-voltage would affect the power consumption of the system. For this work, our target is to save energy consumption and not accuracy in power consumption prediction. Using Equation (

7), we can rewrite Equation (

5) as follows:

Considering

and

a to be approximately constant, we can approximate Equation (

8) by

where

and

are considered constants that mainly abstract semiconductor technology attributes. These attributes should be application-independent and fixed since they rely on hardware design. Then, the power of each cluster type is modelled as follows:

where

b and

L are the numbers of active big and LITTLE cores, respectively, accounted for the dynamic power modelling component. The technical setup of our experimental platform did not permit disabling individual cores. Therefore, we consider a single term of leak current for each cluster. Then,

and

represent the total number of cores of each cluster type and account for the static power modelling component.

Note that this power model does not distinguish one application from another since the activity factor a is assumed to be constant. This is a very generic assumption, which may cause an inaccurate power consumption estimation. Nevertheless, for this approach, more critical than accurate estimations of power is to capture how a change of the operating frequency-voltage would affect power consumption. It is expected that the way in which this change affects the power is the same, regardless of the activity factor. In other words, as long as the activity factor of the target application does not change too much from one frequency configuration to another, this assumption will not adversely impact on the proposed power model.

By combining Equations (

4), (

10) and (

11), we come to the power model for the whole HMP chip parameterized by the two frequencies of each cluster as follows:

Equation (

12) represents the power consumption of a parallel fraction (

) of an parallel application. Also, we need to predict the power of the sequential part of a code that runs in one core.

When there is, at least, one big core available, we consider the dynamic power of one big core and the static power of all cores. In the case that just LITTLE cores are available, then the sequential part runs on one LITTLE core. In this case, the power consumption of the sequential portion contains the static power of all cores and the dynamic component of one LITTLE core.

3.3. Energy Modelling

In this section, we devise an energy model for a parallel application running on a two-cluster HMP platform by aggregating the sequential and parallel energy consumption as follows:

By combining the parallel part of Equation (

2) and the power model of the whole chip in Equation (

12), we have the parallel portion energy model component:

Using Equation (

13) and the sequential part of the Equations (

2) and (

3), we have the sequential portion energy model component, which relies on the number of active big cores available:

Finally, combining Equations (

15) and (

16), we come to the consolidated energy model for the whole HMP chip parameterized by the frequencies and the number of active cores in each cluster:

We can now estimate energy consumption and the performance of any configuration for a given parallel application using our energy model in Equation (

17) and performance models in Equations (

2) and (

3), respectively. Considering fitted models, we can estimate all possible performance and energy consumptions of an application. Then, these estimations are used in the Pareto method to obtain the most optimal performance-energy trade-offs. In the next section, we are going to show the values obtained after the fitting process and the Pareto approach validation on a two-cluster heterogeneous board.

4. Experimental Evaluation and Results

This section describes the experimental setup, including the specifications of the platform and the measurement methodology applied to collect data. Then, we will show the hardware design parameters and the values of the parallel fractions obtained through the non-linear regression. We validate our proposed methodology concerning the estimated Pareto frontier against direct measurements. We computed the error of the estimations by using the Mean Absolute Percentage Error (MAPE), defined as:

where

n is the number of configurations,

is the actual measurement (execution time or energy consumption) and

is the estimated execution time or energy consumption.

4.1. Experimental Setup

The proposed methodology was validated using an ODROID-XU3 [

45] board developed by Hardkernel co. with two core types of the same single-ISA, so both can execute the same compiled code. It uses a Samsung Exynos5422 System on a Chip (SoC), which utilizes ARM big.LITTLE technology and constitutes an ARM Cortex-A15 (big) quad-core cluster and a Cortex-A7 (LITTLE) quad-core cluster. The ODROID-XU3 has 19 available frequency levels ranging from 200 MHz up to 2 GHz on Cortex-A15 and 14 available frequency levels from 200 MHz to 1.5 GHz on Cortex-A7. Therefore, considering that each configuration is a combination of the number of cores in each cluster and a frequency pair that is available as a resource for an application to be executed, there are 6384 possible configurations to explore. To further clarify, consider that each cluster can select none to four cores, then

. For each combination of active numbers of LITTLE and big cores, there are

arrangements of frequencies to choose. Since the combination of 0 big cores and 0 LITTLE cores is not possible, the number of available configurations is

. The experimental platform was set up with a Ubuntu Minimal 18.04 LTS running Linux Kernel LTS 4.14.43, and it did not permit disabling individual cores. Our software project can be found in the GitLab (

https://gitlab.com/lappsufrn/XU3EM).

The ODROID-XU3 has four current and voltage sensors to measure the power dissipation of the cortex-A15 cores, cortex-A7 cores, GPUs and DRAMs individually. We sampled the clusters’ power consumption readings every 0.5 s with timestamps. Then, by integrating the power samples, we computed the energy used by a given application. We consider the average power calculated by dividing total energy by execution time. Moreover, we used

cpuset [

46] subsystem to confine all the data collection instrumentation and operating system’s processes/threads to the not active cores, when available, and assign the workload application appropriately to the cores. Thus, this mitigates the interference of all non-target application in the experiment.

4.2. Hardware Design Parameters

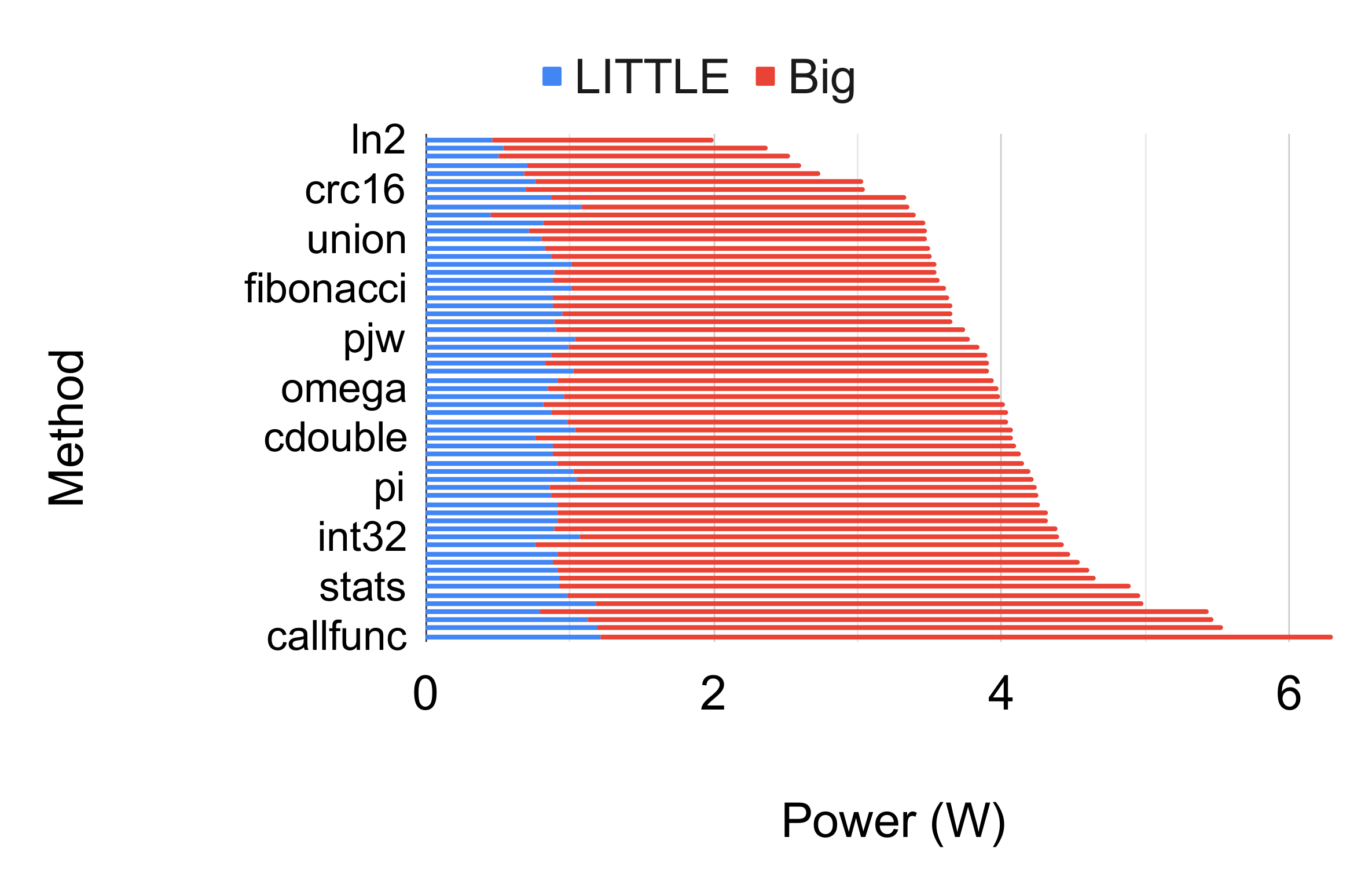

This section shows the values obtained for the parameters that represent the hardware design characteristics. As these parameters are related to the CPU design and they must be application-agnostic, we used a CPU stress tool, called stress-ng [

47]. This tool can load and stress a computer system in various selectable ways and is available in any Linux distribution. This tool has an extensive range of specific stress tests that exercise floating-point, integer, bit manipulation, and control flow operations on the CPU.

We tested each method to find the one that maximizes the stress on the ODROID-XU3, i.e., the method that led to the highest power draw as cores executed their instructions (see

Figure 2). Hence, after executing every method for 15 s on two big cores (1.9 GHz), and four LITTLE cores (1.5 GHZ), we chose the callfunc stress method. Using all four big cores at the highest frequency would have overheated the board. High CPU frequencies, especially from the big cores, produce a large amount of heat, making the system protection mechanism against high temperatures halt the device to prevent damage. Moreover, running 15 s provides 30 power reading samples from the sensors. The average standard deviations regarding all methods are 0.0792 and 0.2395 for LITTLE and big power consumption, respectively.

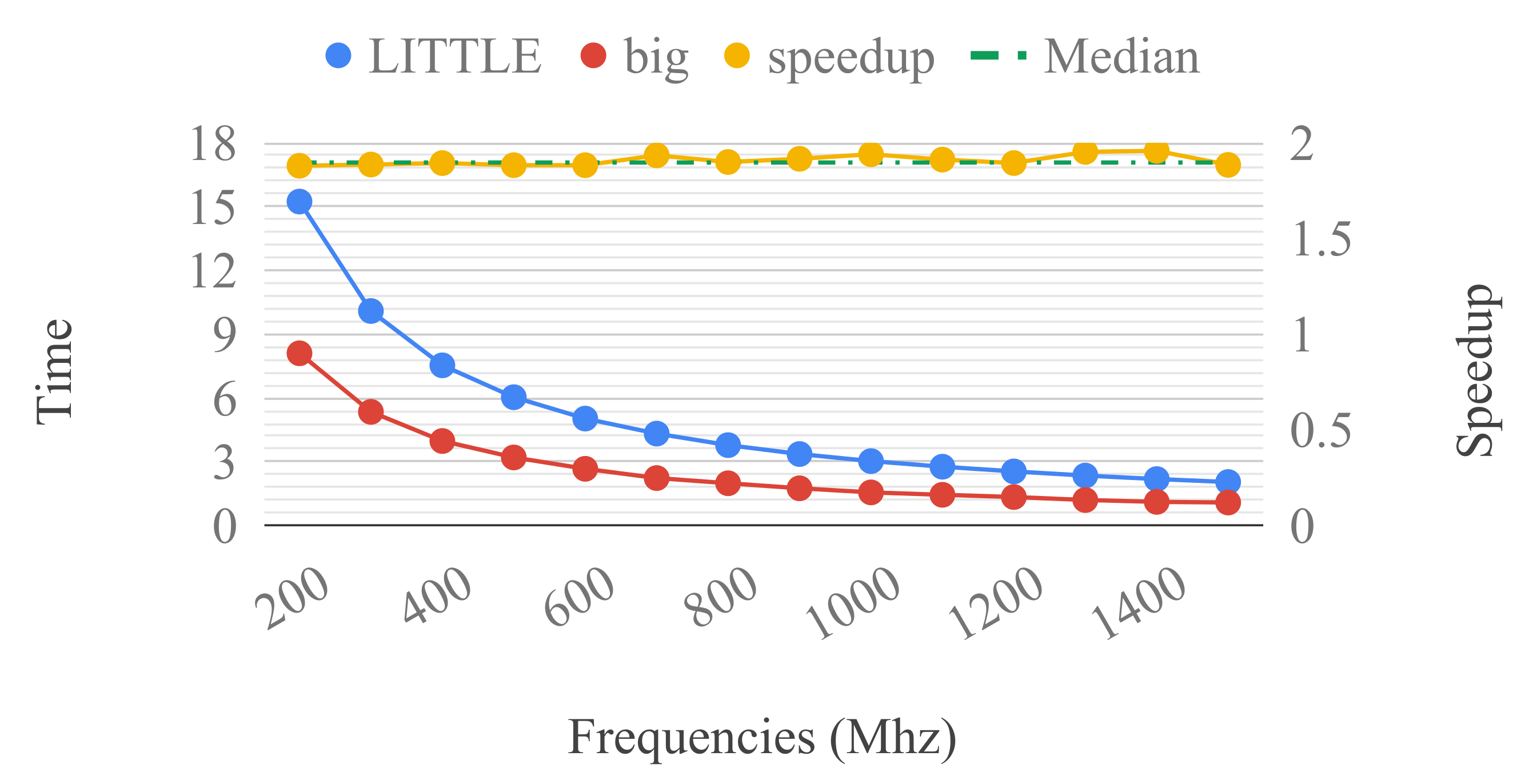

4.2.1. The Perf Parameter

The perf performance parameter represents the big core speedup in comparison with the LITTLE core. It is application-agnostic, relying only on the chip design. We executed the stress-ng with callfunc method on one core of each cluster type, fixing the number of operations at 87,555. This number of operations provides approximately one second to conclude in one big core with the highest frequency and up to 15 s for one LITTLE core with the lowest frequency.

Figure 3 shows the result of perf fitting. The left-hand side Y-axis shows the median execution time of five runs for one core of each cluster type and different frequencies. The frequency range used is 200 MHz–1.5 GHz. Higher values are not possible due to the maximum LITTLE core frequency. The right-hand side Y-axis represents the speedup of one big core when comparing to one LITTLE core for each frequency. The average standard deviations of the runs regarding all frequencies are 0.0252 and 0.0404 for LITTLE and big core, respectively. The median of every speedup is

which is the obtained perf value.

4.2.2. Power Consumption Parameters

We aim to obtain a power model that independent of the application, represents the power consumption of the two-clusters. As we want to model the total power consumption of the entire chip, we measured the whole chip to obtain . We defined the number of processes and which cores would be active to execute the stress-ng by generating 95 evenly distributed Halton configurations. Each configuration is composed of the number of active big cores b, active LITTLE cores L, big’s frequency cluster and LITTLE’s frequency cluster .

We used the stress-ng with callfunc stress method and a fixed execution time of 75 s, with the command line stress-ng –cpu N –cpu-method callfunc -t 75 –taskset t. The –cpu parameter determines the number of processes on which the same method will be executed, and the parameter –taskset can be used to set a process’s CPU affinity.

Running 75 s provides 150 power samples. The average standard deviations regarding all 95 configurations are 0.0164 and 0.1478 for LITTLE and big core power consumption, respectively. As the configuration parameters and their total power consumption measurements are known, we fit the parameters

from the Equation (

12). The fitted values are:

.

4.3. Applications

To validate our approach, we used eight applications: the Black-Scholes, Bodytrack, Freqmine applications provided by PARSEC, Smallpt and x264 by Phoronix Test Suite and kmeans, Particle Filter and LavaMD from Rodinia benchmark. PARSEC [

48] is a well-known benchmark suite of parallel applications, which focuses on emerging workloads and is designed to be representative of next-generation shared-memory programs for chip-multiprocessors. The Phoronix Test Suite [

49] is a benchmarking platform that provides an extensible framework for carrying out tests in a fully automated manner from test installation to execution and reporting. Rodinia benchmark [

50] is a set of applications designed for heterogeneous computing infrastructures with OpenMP [

51], OpenCL [

52], and CUDA [

53] implementations.

We have chosen the applications because they were implemented using OpenMP. This allowed us to modify their workloads to be dynamically balanced using dynamic OpenMP schedule clause [

54]. Moreover, the number of threads created is equal to the number of active cores available of a given application under a configuration. The thread master was bound to one big core in order to make sure that the sequential part of the code would run on the higher performance core. The other threads were bound to one core each. This mean that these applications follow the assumptions we made for the performance model.

The notable exception for the chosen criteria is the x264 encoder provided by the Phoronix test suite since it has only POSIX threads for parallelism. As higher video quality significantly depends on the encoding process, i.e., longer execution time and higher energy consumption, it is essential to improve the energy efficiency of video encoding on embedded devices. We therefore evaluate the behaviour of our methodology on this video encoder.

The characteristics of the applications which are used in this paper, according to [

48,

50,

55,

56,

57], can be seen in

Table 2. Most applications are CPU and memory intensive, so we expect a considerable use of the HMP chip and the memory system as well as the communication between them.

Nevertheless, some implicit hardware or software features, such as communication-to-computation ratio, cache sharing and off-chip traffic, are not explicitly included in our power and performance model. What we want to demonstrate is that a low-overhead, straightforward model is sufficient to estimate a Pareto frontier. Besides, as those features should limit the parallel speedup, it is expected that the sequential fraction of the application may be more significant for some applications.

We strive to capture the nuances of each configuration that will affect the way the application works on the hardware, producing different execution time. Therefore, we fit the performance model to find the specific parallel fraction f of each application by executing 50 Halton configurations (). As Halton generates configurations that cover the domain more evenly than pseudo-random algorithms, 50 configurations provide sufficient data for us to build our performance model. One more configuration is needed to find , it is 0, 1, 2 GHz, 800 MHz. We calculated the median of the execution time of five runs for each configuration.

Considering the fitted perf, and the Equations (

2) and (

3), we use non-linear regression to find the parallel fraction

f for every application, as explained in the

Section 3.

Table 3 shows the value of

f for each application. Note that Black-Scholes and kmeans have a parallel fraction of 0.7743 and 0.6381, respectively, indicating a larger sequential portion of these applications when compared to other applications. The following section will show the validation of the Pareto frontier. It provides the evidence that our low-overhead models are sufficient for achieving our goals.

4.4. Pareto Frontier Validation

In this subsection, we validate our proposed methodology. Using the energy model in Equation (

17) and the fitted performance model in Equations (

2) and (

3), we generated a pair of estimated performance and energy consumption values of all possible configurations for each application in

Table 2. Then, the Pareto frontier method selects the performance and energy pairs that offer the optimal trade-off.

For all applications, the Pareto frontier selection step resulted in configurations that use all cores of the two clusters. The frequencies of the cluster big and LITTLE were the only parameters that have changed. This probably occurred because it was not possible to disable the unused cores during the fitting. As a result, the energy savings from configurations, which are not using all available cores, are not enough to justify the performance mitigation. Therefore, the optimal configurations become those that use all cores.

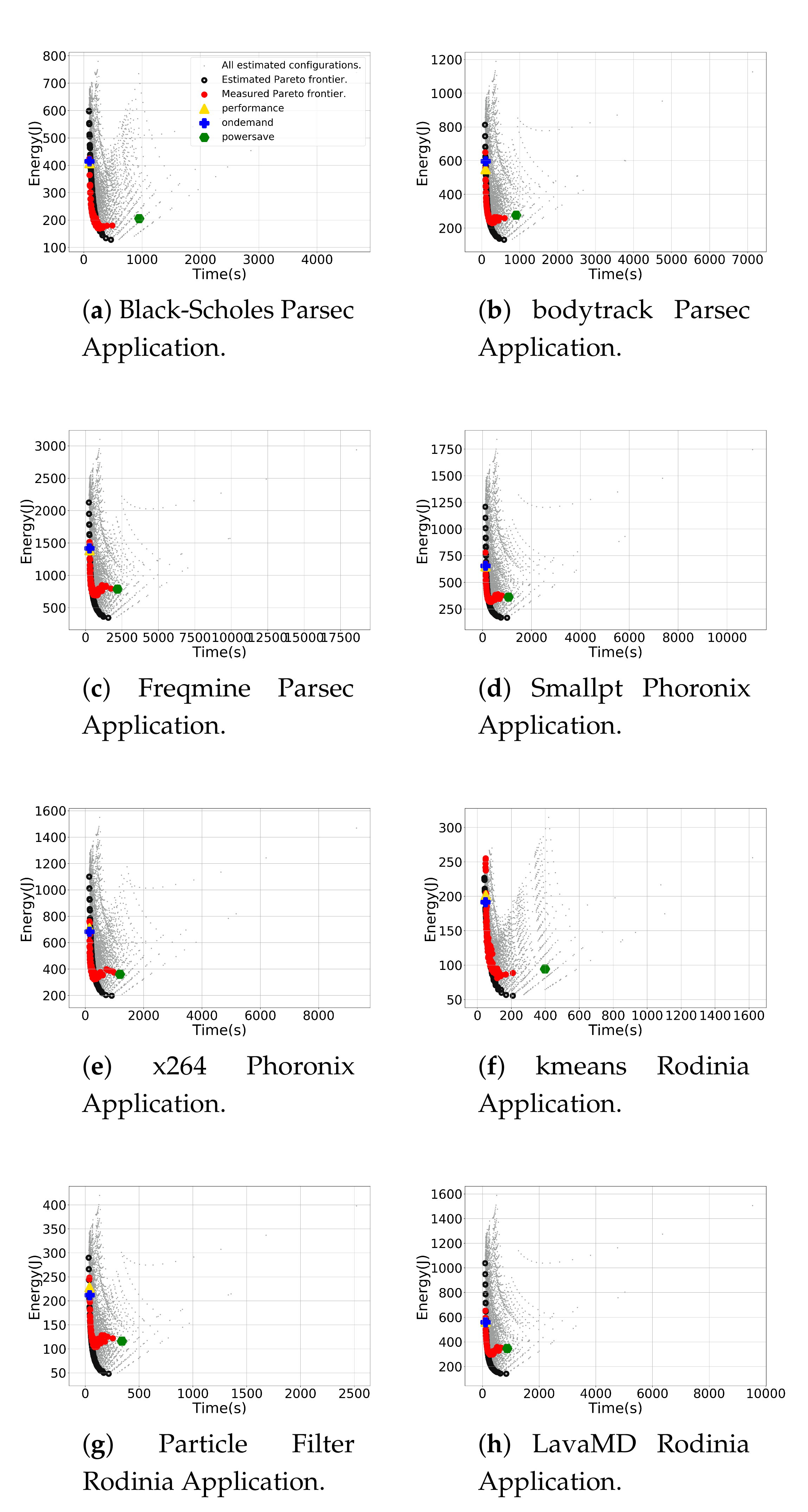

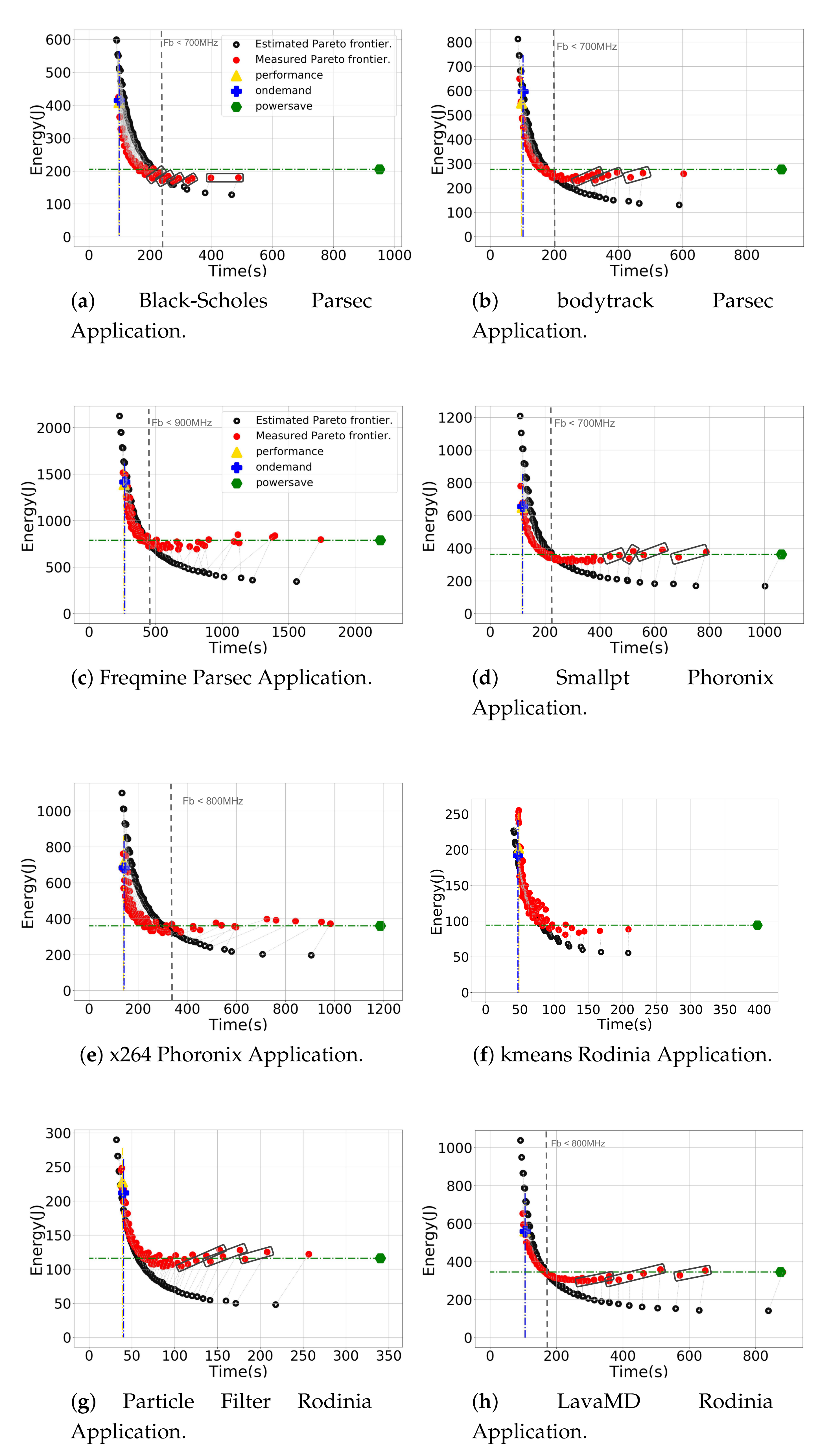

Figure 4 shows the estimated and measured Pareto Frontier for all applications w.r.t. all possible configurations. Each point describes a pair of performance and energy consumption of a configuration. The grey points are the estimated outcomes of our energy and performance models for all possible configurations. In total, there are 6384 available configurations w.r.t. the combinations of the number of cores of each cluster and pair frequency.

The black circles depict the selected Pareto configurations from the estimated energy and performance pairs. Notice that the estimated Pareto have the least energy consumption concerning different performances. Each red point represents the actual values measured from the sensors using each selected Pareto configuration. Observe that the measured and estimated values are similar to each other but, as the execution time increases, the difference between the measured and estimated energy consumption also rises. The yellow triangle, the blue plus sign and the green hexagon are the performance, ondemand and powersave Linux governors, respectively. We compare our methodology with them in the next section.

Table 4 shows the total number of configurations selected by Pareto Frontier and the measured Pareto Frontier variations of energy and performance for each application. Our approach decreased the search space of 6384 available configurations for the given platform by approximately 99%. Considering the highest and least energy consumption and performance among the measured Pareto configurations, we observe energy savings of up to 68.23% and performance gains of up to 75.62% for kmeans application. The Freqmine application presented the least variation of energy, up to 54.35%, however, the performance gains were up to 85.41%. This demonstrates that the selected configurations by Pareto frontier can provide a good range of performance improvement and energy saving options for operating systems.

Figure 5 gives a zoom-in in

Figure 4. The grey points that represent all possible estimated configurations’ outcomes are not displayed. Moreover, the measured and the related estimated Pareto configurations are linked using a dotted line, thus, showing the configurations’ results from the models against the measured data. Depending on the application, we could not execute some configurations to avoid overheating the board. As anticipated in

Section 4.2, high CPU frequencies produce high temperatures that make the system halt. For instance, the Freqmine results presented in

Figure 5c show four estimated Pareto points with the frequencies (big,LITTLE) being (1500,2000), (1500,1900), (1400,1900) and (1500,1800) without their respective measured points. Note that two of them are overlapping. The same occurred with the Smallpt, LavaMD, x264 and particle Filter applications (see

Figure 5d,e,g,h).

Notice that all applications except the Particle Filter and kmeans (see

Figure 5g,f), have a point when the measured energy consumed is higher than the estimated. A vertical line represents at which time and big cluster frequency this occurs (see

Figure 5). As the energy is also influenced by the performance modeling, the measured execution time in some cases takes longer when compared with the expected performance consuming more energy.

Notice the highlighted configurations (see

Figure 5) that have similar measured energy. Those points have the same big frequency, and, as the LITTLE frequency scales down, the execution time increases, and so does energy consumption. Further, when the big cluster frequency decreases, there is an abrupt drop in energy consumption. For instance, this can be seen in

Figure 5b of the Bodytrack application in points above 200 s. This shows that the big cores play an important role in energy consumption.

Some applications do not have a workload as well-balanced as we expected. This results in the predicted performance not being well correlated with the measured data. Even though Freqmine (see

Figure 5c) and kmeans (see

Figure 5f) have the dynamic clause included in their parallel loops, they do not present consistent performance. Additional work will be required to understand why these applications are not workload balanced. In particular, the x264 application (see

Figure 5e) requires more refined modelling since video processing is much more complex, and the application phases tends to vary according to the frames from the input video. Our approach shows reasonable energy savings, even though fixing a Pareto-optimal configuration to the whole application execution.

Table 5 shows the mean absolute percentage error (MAPE) between the measured and estimated performance and energy consumption of the Pareto Frontier, including the average standard deviation of the measured data. The highest energy and performance errors are from Black-Scholes (38.92%) and x264 (11.10%) applications, respectively. The lowest errors are observed in kmeans and LavaMD with 10.48% and 1.75% of energy and performance errors, respectively. It is important to notice that the x264 had a reasonable accuracy, considering its complexity. On average, we achieved performance and energy errors of 5.53% and 22.30%, respectively.

It is important to recall that our concern is the trend of the measured Pareto frontier. Therefore, even without an exact match between measured and estimated values, the Pareto configurations are reasonable alternatives that give each application suitable trade-off choices. In the next section, we will compare our approach against the Linux governors.

4.5. Comparison against DVFS Governors

All applications were executed under the performance, ondemand, and powersave Linux governors (see

Figure 5). The performance and powersave governors set the CPU statically to the highest and lowest frequency, respectively, within the borders of available minimum and maximum frequencies. Moreover, the ondemand governor scales the frequency dynamically according to the current load. It boosts to the highest frequency and then likely decreases as the idle time raises. We compared our approach to these three governors as they provide a consistent variety of optimization options and are implemented on numerous mobile devices, making them competitive baselines.

The green line in

Figure 5 shows the measured points that have higher performance and less energy consumption when compared with the powersave governor to all applications. Notice that there are many configurations pointed from the Pareto frontier better than the powersave governor. The blue and yellow lines show the measured points that have higher or similar performance when compared with the ondemand and performance governor, respectively. Notice that there are few configurations at the Pareto frontier better or similar to those governors.

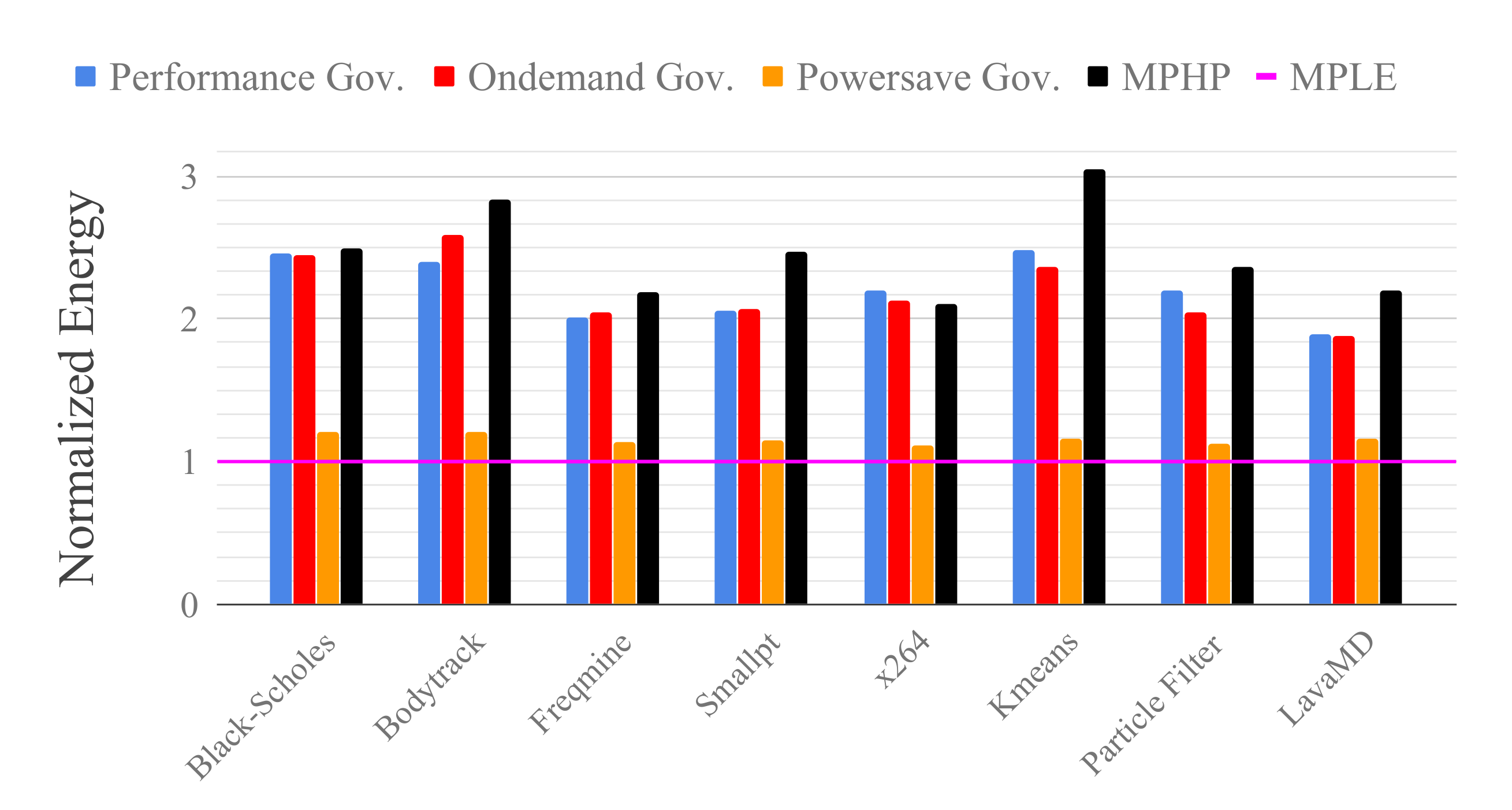

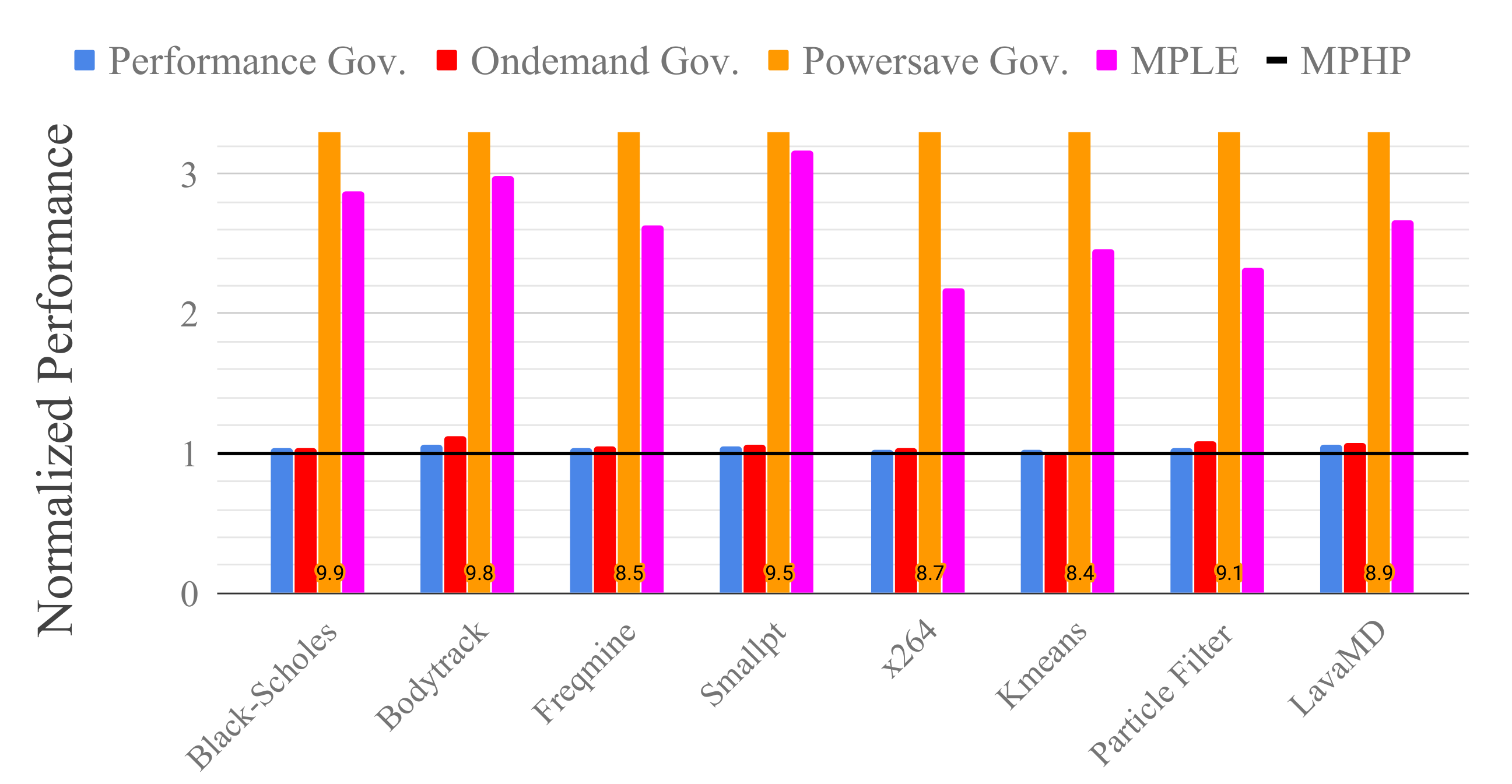

Normalized Comparison

Figure 6 and

Figure 7 show, respectively, the normalized energy consumption and the normalized performance of all the benchmarks compared with the performance, ondemand and powersave governors. We chose the measured Pareto configurations that give the least energy consumption (MPLE) and the highest performance (MPHP) to normalize the energy and the performance, respectively, for each application.

Figure 6 shows that the MPLE saved more energy when compared to all governors for every application. Also, we can observe that MPHP saved energy when compared to the performance and ondemand governors for the x264 application.

Figure 7 shows that MPHP has a small speedup when compared to performance and ondemand Linux governors. On average, notice that MPLE has 7× higher speedup when compared with the powersave governor and it is only 2× slower when compared to the performance governor.

Table 6 shows the percentage of performance gains and energy savings when compared to the MPHP and MPLE, respectively, for all applications. Our methodology saved, on average, 54.38%, 53.99% and 13.67% of energy w.r.t. the performance, ondemand, and powersave Linux governors, respectively. Also, we observed 4.23%, 5.52% and 89.03% of speedup w.r.t. the performance, ondemand, and powersave Linux governor, respectively.

5. Conclusions and Future Work

We presented a novel methodology to estimate optimal performance and energy trade-off configurations for parallel applications on Heterogeneous Multi-Processing (HMP) systems. We devised an analytical low-overhead straightforward performance model for a given multi-thread application and an analytical application-agnostic power model for a specific two-cluster HMP system. These models, when combined, generate the energy model which can assess all available configurations to predict an application’s energy consumption. The Pareto frontier uses the provided offline performance and energy models to select, from all available options, the optimal performance-energy trade-off.

We validated our methodology on an ODROID-XU3 board which we used to fit our models and to validate the Pareto frontier configurations. Moreover, we compared the measured Pareto frontier with the performance, powersave and ondemand Linux governors. Our approach achieved a reduction of the configuration search space of approximately 99%, significantly decreasing the number of options available to identify an optimal performance-energy trade-off configuration. On average, the performance and energy absolute percentage errors between the measured and the estimated Pareto frontier for all applications are 5.53% and 22.30%, respectively. The average variation of performance and energy concerning all applications are 84.25% and 59.68%, respectively. Also, we obtained 13.67% of energy savings regarding the powersave governor with higher or similar performance. These results encourage future research in performance- and energy-aware schedulers using our methodology. Furthermore, we can apply our models to predict optimal energy and performance trade-offs.

The proposed approach can be used to run parallel applications that have previously been characterized, minimizing the overhead and energy waste associated with runtime characterization. Also, offline characterization can be made as precise as necessary since resource limitation is often not an issue. As future work, we intend to reduce the performance restrictions resulting from data-content dependent workloads, the size of the problem’s input and application phase changing. Furthermore, the accuracy of the power prediction may be remodelled, considering the chip voltage as a non-linear relationship with the maximum operating frequency.