Virtualization Management Concept for Flexible and Fault-Tolerant Smart Grid Service Provision

Abstract

1. Introduction

2. State of the Art in Virtualization

2.1. Virtualization in Smart Grids

2.2. Virtualization in Other Domains

2.3. Summary

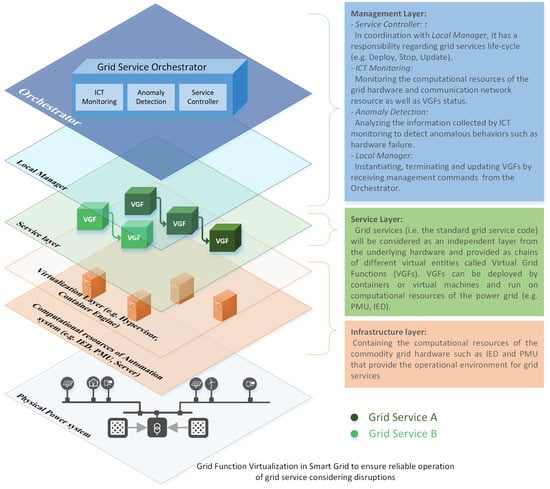

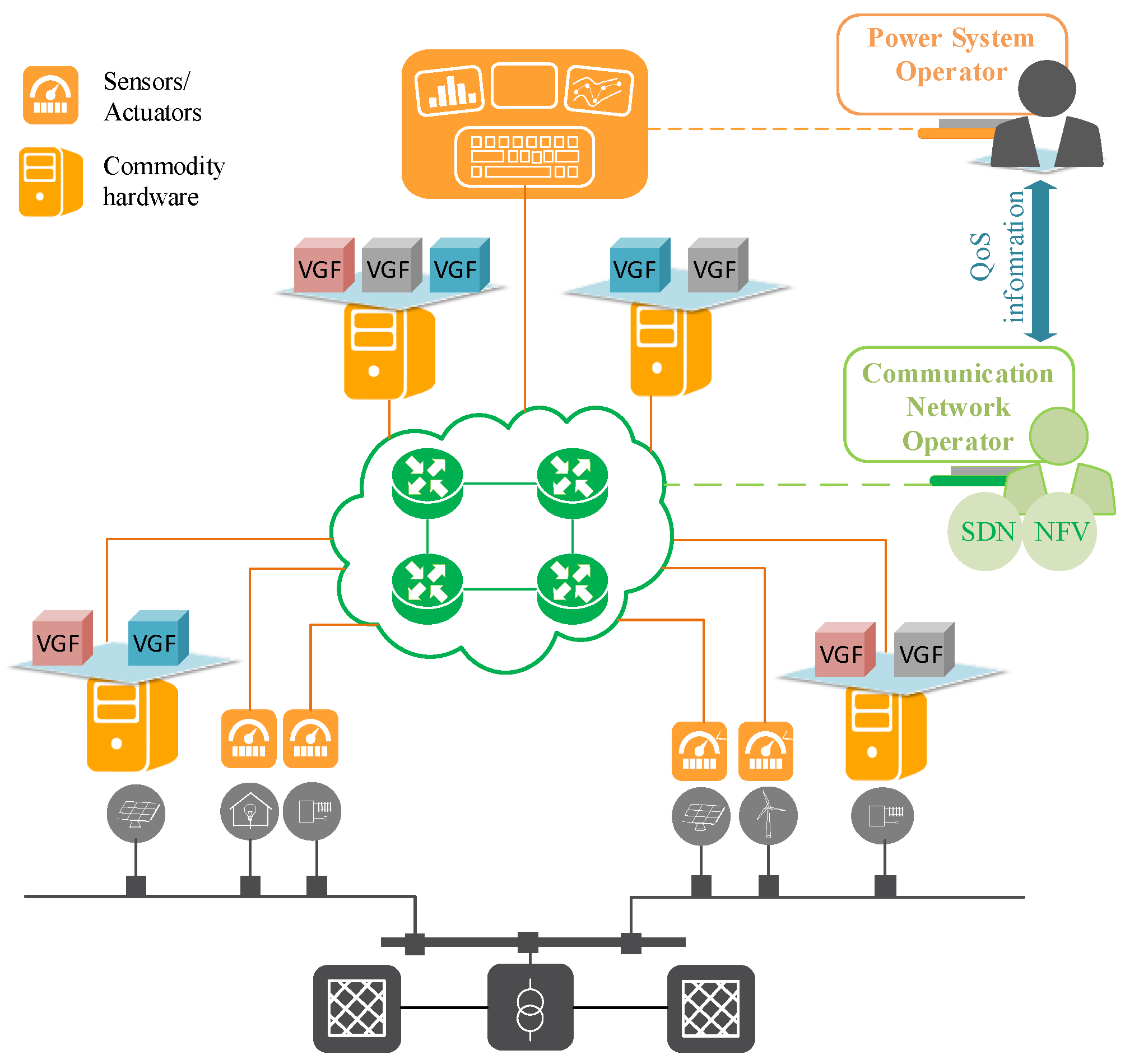

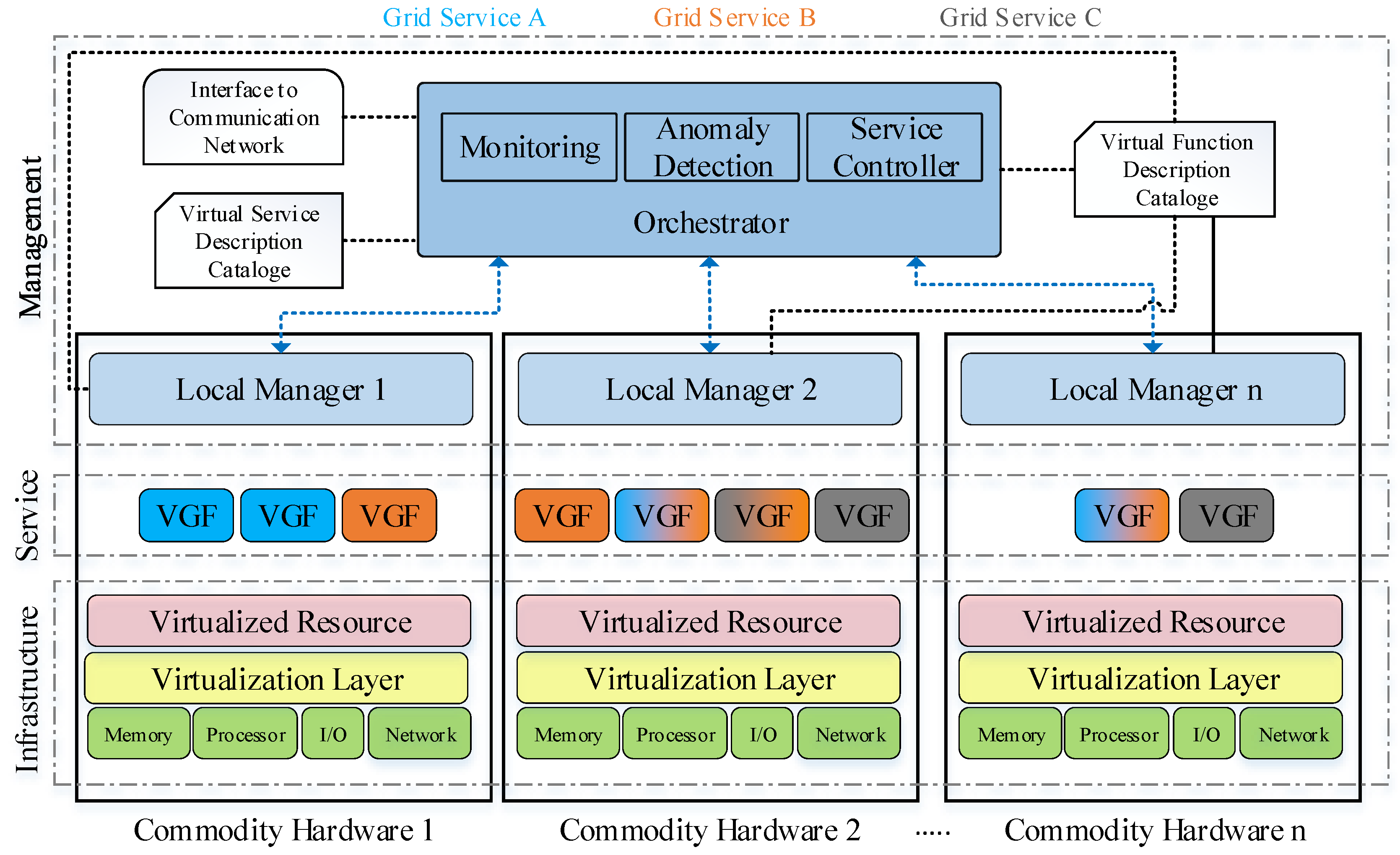

3. Grid Function Virtualization

3.1. Grid Function Virtualization Architecture

3.1.1. Infrastructure

3.1.2. Service

3.1.3. Management

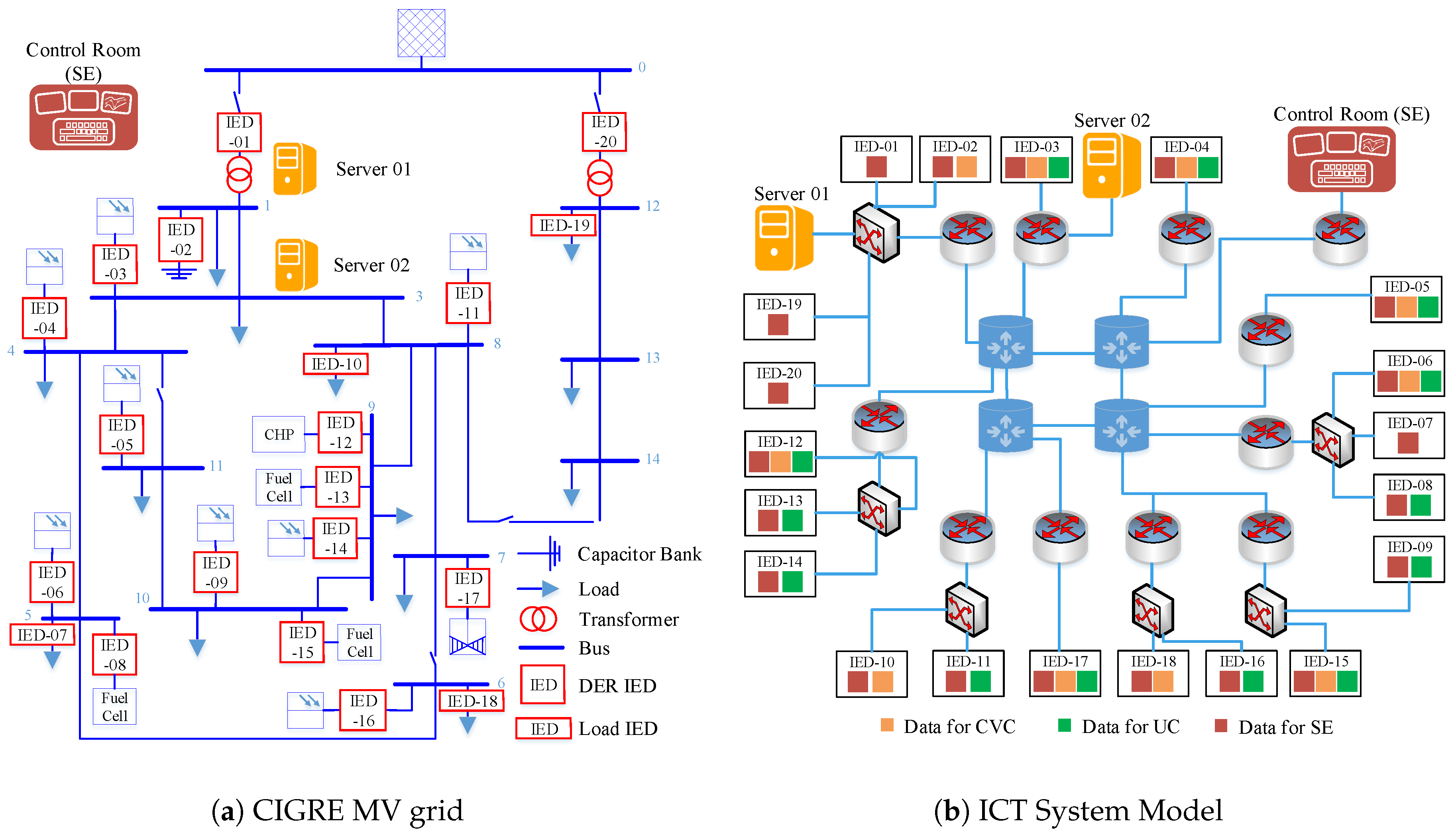

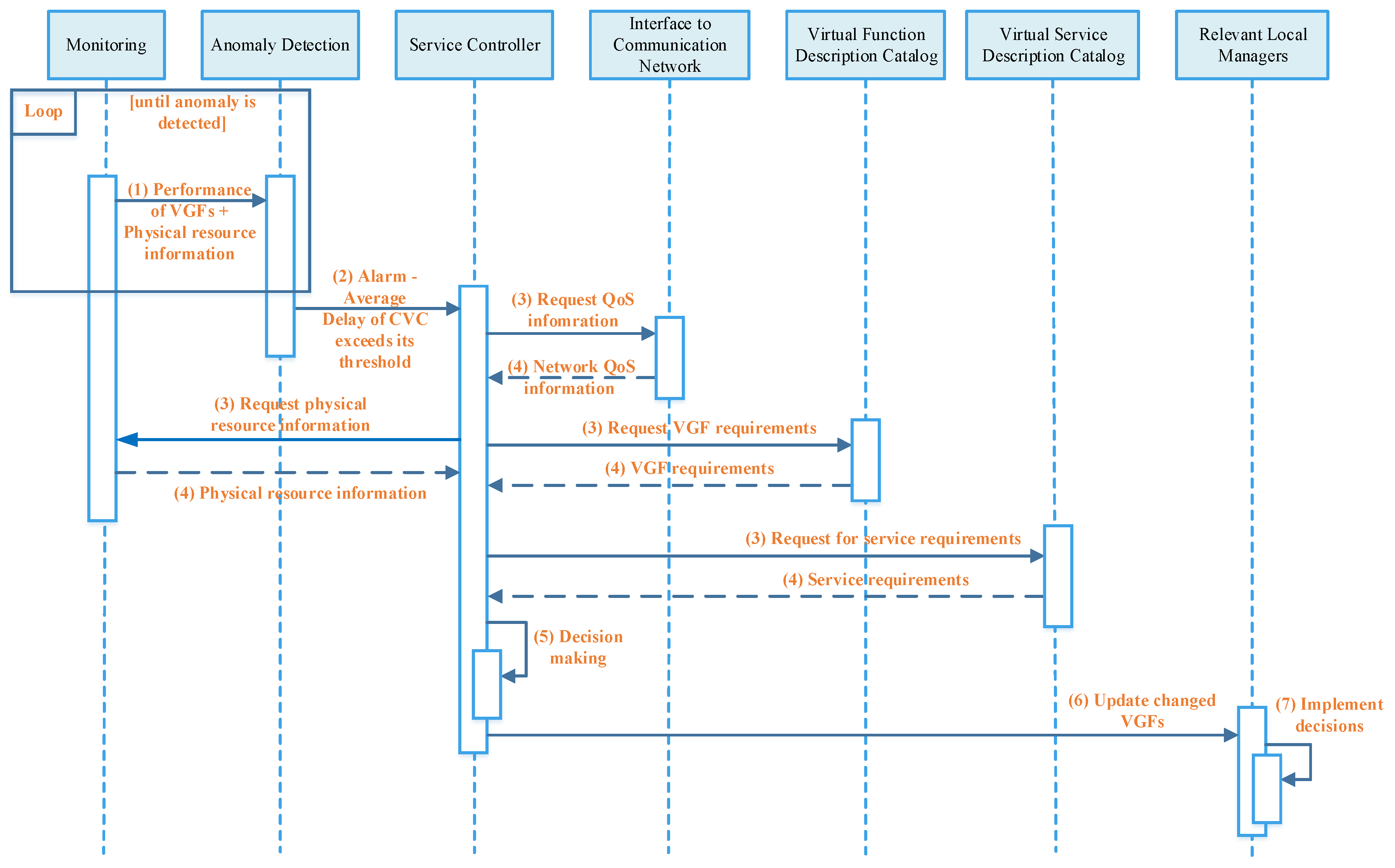

4. Proof of Concept

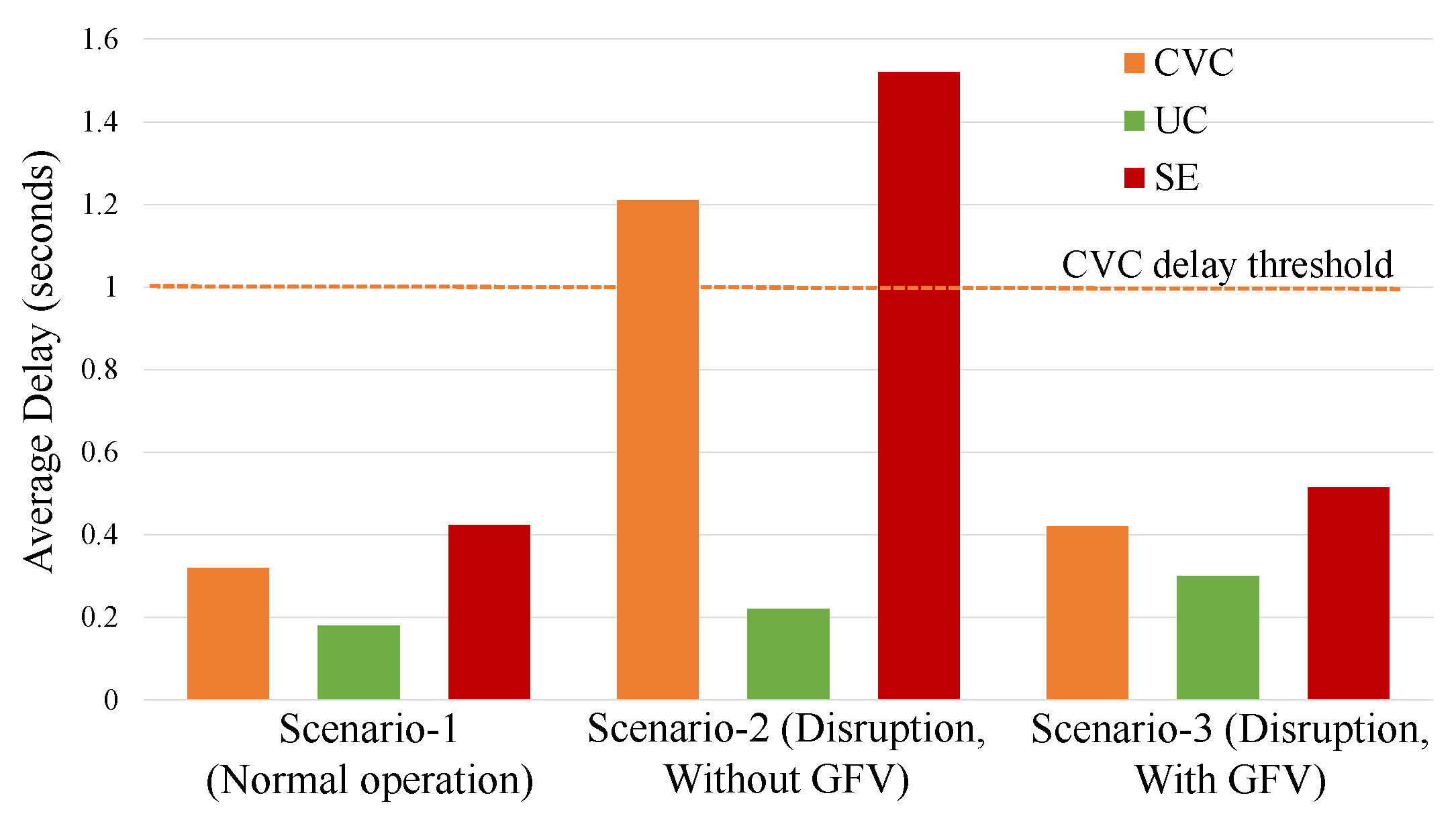

4.1. Use-Case 1

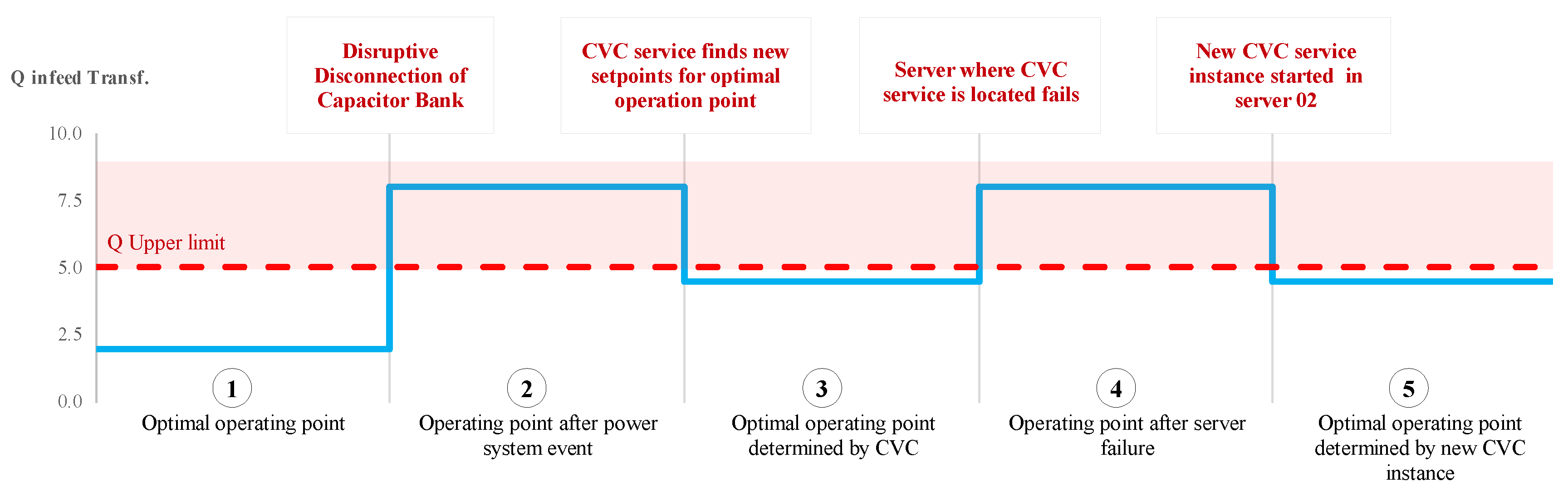

4.2. Use-Case 2

- The power system operates at an optimal operating point determined by the CVC service.

- The power system is then subjected to a disruptive disconnection of the capacitor bank. As a result, the reactive power flow through the transformer violates its acceptable operating limits of −5 MVAr to 5 MVAr.

- In response, the CVC service determines new set-points for the transformer tap position and for the reactive power of the DERs, which brings the reactive power flow through the transformer back within its acceptable operating range.

- The system is then subjected to the failure of Server 01, which interrupts the CVC service. The power system is left at a sub-optimal operating point because the controllable elements can no longer receive optimal set-points from the CVC service; their control now relies on local measurements only.

- The proposed GFV concept addresses this problem by relocating the CVC service to another server, i.e., Server 02. Once the CVC service is running again, it resumes determining set-points for the controllable elements, which can then restore the system to its optimal operating point.
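The limit check and the failover step in Use-Case 2 can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' GFV management implementation: the `Server` class, the `relocate_failed_services` function and its "first healthy server" placement policy are hypothetical. Only the −5 MVAr to 5 MVAr transformer limit and the server names come from the use case.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Acceptable reactive power flow through the transformer in Use-Case 2 (MVAr).
Q_MIN_MVAR = -5.0
Q_MAX_MVAR = 5.0


def q_within_limits(q_mvar: float) -> bool:
    """Return True if the transformer reactive power flow is inside the limits."""
    return Q_MIN_MVAR <= q_mvar <= Q_MAX_MVAR


@dataclass
class Server:
    """Hypothetical model of a host that runs grid services such as CVC."""
    name: str
    alive: bool = True
    services: set[str] = field(default_factory=set)


def relocate_failed_services(servers: list[Server]) -> dict[str, str]:
    """Move every service on a failed server to a healthy server.

    Hypothetical placement policy: pick the first healthy server in the list.
    Returns a mapping from service name to the name of its new host.
    """
    healthy = [s for s in servers if s.alive]
    moves: dict[str, str] = {}
    for server in servers:
        if server.alive or not server.services:
            continue  # nothing to relocate from this server
        if not healthy:
            raise RuntimeError("no healthy server available for relocation")
        target = healthy[0]
        for service in sorted(server.services):
            target.services.add(service)
            moves[service] = target.name
        server.services.clear()  # the failed server no longer hosts anything
    return moves


# Use-Case 2: the CVC service runs on Server 01, which then fails.
servers = [Server("Server 01", services={"CVC"}), Server("Server 02")]
servers[0].alive = False
print(relocate_failed_services(servers))  # {'CVC': 'Server 02'}
```

The first-healthy policy keeps the sketch short; a practical manager would presumably also check that the target server has enough resources (e.g., CPU) and can still meet the service's QoS requirements before placing it there.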

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CIGRE | Conseil International des Grands Réseaux Électriques |
| CPU | Central Processing Unit |
| CVC | Coordinated Voltage Control |
| DER | Distributed Energy Resource |
| ECU | Electronic Control Unit |
| GFD | Grid Function Descriptor |
| GFV | Grid Function Virtualization |
| GSD | Grid Service Descriptor |
| IaaS | Infrastructure-as-a-Service |
| ICT | Information and Communication Technology |
| IED | Intelligent Electronic Device |
| IIoT | Industrial Internet of Things |
| IMA | Integrated Modular Avionics |
| IoT | Internet of Things |
| NF | Network Function |
| NFV | Network Function Virtualization |
| PLC | Power Line Communication |
| PMU | Phasor Measurement Unit |
| QoS | Quality of Service |
| SCADA | Supervisory Control and Data Acquisition |
| SDN | Software-Defined Networking |
| SE | State Estimation |
| UC | Unit Commitment |
| VGF | Virtual Grid Function |
| VNF | Virtual Network Function |
| WSN | Wireless Sensor Network |

References

1. Mallet, P.; Granstrom, P.; Hallberg, P.; Lorenz, G.; Mandatova, P. Power to the People!: European Perspectives on the Future of Electric Distribution. IEEE Power Energy Mag. 2014, 12, 51–64.

2. Al-Rubaye, S.; Kadhum, E.; Ni, Q.; Anpalagan, A. Industrial internet of things driven by SDN platform for smart grid resiliency. IEEE Internet Things J. 2017, 6, 267–277.

3. Narayan, A.; Klaes, M.; Babazadeh, D.; Lehnhoff, S.; Rehtanz, C. First Approach for a Multi-dimensional State Classification for ICT-reliant Energy Systems. In Proceedings of the International ETG-Congress 2019, Esslingen, Germany, 8–9 May 2019; pp. 1–6.

4. Krüger, C.; Velasquez, J.; Babazadeh, D.; Lehnhoff, S. Flexible and reconfigurable data sharing for smart grid functions. Energy Inform. 2018, 1, 43.

5. Brand, M.; Ansari, S.; Castro, F.; Chakra, R.; Hage Hassan, H.; Krüger, C.; Babazadeh, D.; Lehnhoff, S. Framework for the Integration of ICT-relevant Data in Power System Applications. In Proceedings of the 2019 IEEE PES PowerTech, Milan, Italy, 23–27 June 2019; pp. 1–6.

6. Narayan, A.; Krueger, C.; Goering, A.; Babazadeh, D.; Harre, M.C.; Wortelen, B.; Luedtke, A.; Lehnhoff, S. Towards Future SCADA Systems for ICT-reliant Energy Systems. In Proceedings of the International ETG-Congress 2019; ETG Symposium, Esslingen, Germany, 8–9 May 2019; pp. 1–7.

7. Stewart, E.M.; von Meier, A. Phasor measurements for distribution system applications. In Smart Grid Handbook; Wiley: Hoboken, NJ, USA, 2016; pp. 1–10.

8. Alam, I.; Sharif, K.; Li, F.; Latif, Z.; Karim, M.M.; Nour, B.; Biswas, S.; Wang, Y. IoT Virtualization: A Survey of Software Definition & Function Virtualization Techniques for Internet of Things. arXiv 2019, arXiv:1902.10910.

9. Blenk, A.; Basta, A.; Zerwas, J.; Kellerer, W. Pairing SDN with network virtualization: The network hypervisor placement problem. In Proceedings of the 2015 IEEE Conference on Network Function Virtualization and Software Defined Network (NFV-SDN), San Francisco, CA, USA, 18–21 November 2015; pp. 198–204.

10. Plauth, M.; Feinbube, L.; Polze, A. A performance survey of lightweight virtualization techniques. In Proceedings of the European Conference on Service-Oriented and Cloud Computing, Oslo, Norway, 27–29 September 2017; pp. 34–48.

11. Dong, X.; Lin, H.; Tan, R.; Iyer, R.K.; Kalbarczyk, Z. Software-defined networking for smart grid resilience: Opportunities and challenges. In Proceedings of the 1st ACM Workshop on Cyber-Physical System Security, Singapore, 14–17 April 2015; ACM: New York, NY, USA, 2015; pp. 61–68.

12. Loveland, S.; Dow, E.M.; LeFevre, F.; Beyer, D.; Chan, P.F. Leveraging virtualization to optimize high-availability system configurations. IBM Syst. J. 2008, 47, 591–604.

13. Khan, I.; Belqasmi, F.; Glitho, R.; Crespi, N.; Morrow, M.; Polakos, P. Wireless sensor network virtualization: A survey. IEEE Commun. Surv. Tutor. 2015, 18, 553–576.

14. Lee, C.; Shin, S. Fault Tolerance for Software-Defined Networking in Smart Grid. In Proceedings of the 2018 IEEE International Conference on Big Data and Smart Computing (BigComp), Shanghai, China, 15–17 January 2018; pp. 705–708.

15. Rehmani, M.H.; Davy, A.; Jennings, B.; Assi, C. Software defined networks based smart grid communication: A comprehensive survey. IEEE Commun. Surv. Tutor. 2019, 21, 2637–2670.

16. Danzi, P.; Angjelichinoski, M.; Stefanović, Č.; Dragičević, T.; Popovski, P. Software-defined microgrid control for resilience against denial-of-service attacks. IEEE Trans. Smart Grid 2018, 10, 5258–5268.

17. Abdella, J.; Shuaib, K. Peer to peer distributed energy trading in smart grids: A survey. Energies 2018, 11, 1560.

18. Rinaldi, S.; Ferrari, P.; Brandão, D.; Sulis, S. Software defined networking applied to the heterogeneous infrastructure of smart grid. In Proceedings of the 2015 IEEE World Conference on Factory Communication Systems (WFCS), Palma de Mallorca, Spain, 27–29 May 2015; pp. 1–4.

19. Ghosh, U.; Chatterjee, P.; Shetty, S. A security framework for SDN-enabled smart power grids. In Proceedings of the 2017 IEEE 37th International Conference on Distributed Computing Systems Workshops (ICDCSW), Atlanta, GA, USA, 5–8 June 2017; pp. 113–118.

20. Hans, M.; Phad, P.; Jogi, V.; Udayakumar, P. Energy Management of Smart Grid using Cloud Computing. In Proceedings of the 2018 International Conference on Information, Communication, Engineering and Technology (ICICET), Pune, India, 29–31 August 2018; pp. 1–4.

21. Cankaya, H.C. Software Defined Networking and Virtualization for Smart Grid. In Transportation and Power Grid in Smart Cities: Communication Networks and Services; Wiley: Hoboken, NJ, USA, 2018; pp. 171–190.

22. Meloni, A.; Pegoraro, P.A.; Atzori, L.; Castello, P.; Sulis, S. IoT cloud-based distribution system state estimation: Virtual objects and context-awareness. In Proceedings of the 2016 IEEE International Conference on Communications (ICC), Kuala Lumpur, Malaysia, 22–27 May 2016; pp. 1–6.

23. Cosovic, M.; Tsitsimelis, A.; Vukobratovic, D.; Matamoros, J.; Anton-Haro, C. 5G mobile cellular networks: Enabling distributed state estimation for smart grids. IEEE Commun. Mag. 2017, 55, 62–69.

24. Mijumbi, R.; Serrat, J.; Gorricho, J.L.; Bouten, N.; De Turck, F.; Boutaba, R. Network function virtualization: State-of-the-art and research challenges. IEEE Commun. Surv. Tutor. 2015, 18, 236–262.

25. European Telecommunications Standards Institute (ETSI). GS NFV-MAN 001 V1.1.1 Network Function Virtualisation (NFV); Management and Orchestration; ETSI: Sophia Antipolis, France, 2014.

26. Yi, B.; Wang, X.; Li, K.; Huang, M. A comprehensive survey of network function virtualization. Comput. Netw. 2018, 133, 212–262.

27. Kim, H.; Feamster, N. Improving network management with software defined networking. IEEE Commun. Mag. 2013, 51, 114–119.

28. Kreutz, D.; Ramos, F.M.; Verissimo, P.; Rothenberg, C.E.; Azodolmolky, S.; Uhlig, S. Software-defined networking: A comprehensive survey. Proc. IEEE 2015, 103, 14–76.

29. Attarha, S.; Hosseiny, K.H.; Mirjalily, G.; Mizanian, K. A load balanced congestion aware routing mechanism for Software Defined Networks. In Proceedings of the 2017 Iranian Conference on Electrical Engineering (ICEE), Tehran, Iran, 2–4 May 2017; pp. 2206–2210.

30. Toyonaga, S.; Kominami, D.; Murata, M. Virtual wireless sensor networks: Adaptive brain-inspired configuration for internet of things applications. Sensors 2016, 16, 1323.

31. Liu, R.; Srivastava, M. VirtSense: Virtualize Sensing through ARM TrustZone on Internet-of-Things. In Proceedings of the 3rd Workshop on System Software for Trusted Execution; ACM: New York, NY, USA, 2018; pp. 2–7.

32. Varasteh, A.; Goudarzi, M. Server consolidation techniques in virtualized data centers: A survey. IEEE Syst. J. 2015, 11, 772–783.

33. Nishiguchi, Y.; Yano, A.; Ohtani, T.; Matsukura, R.; Kakuta, J. IoT fault management platform with device virtualization. In Proceedings of the 2018 IEEE 4th World Forum on Internet of Things (WF-IoT), Singapore, 5–8 February 2018; pp. 257–262.

34. Broy, M.; Kruger, I.H.; Pretschner, A.; Salzmann, C. Engineering automotive software. Proc. IEEE 2007, 95, 356–373.

35. Rajan, A.K.S.; Feucht, A.; Gamer, L.; Smaili, I. Hypervisor for consolidating real-time automotive control units: Its procedure, implications and hidden pitfalls. J. Syst. Archit. 2018, 82, 37–48.

36. Herber, C.; Reinhardt, D.; Richter, A.; Herkersdorf, A. HW/SW trade-offs in I/O virtualization for Controller Area Network. In Proceedings of the 2015 52nd ACM/EDAC/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 8–12 June 2015; pp. 1–6.

37. Morabito, R.; Petrolo, R.; Loscri, V.; Mitton, N.; Ruggeri, G.; Molinaro, A. Lightweight virtualization as enabling technology for future smart cars. In Proceedings of the 2017 IFIP/IEEE Symposium on Integrated Network and Service Management (IM), Lisbon, Portugal, 8–12 May 2017; pp. 1238–1245.

38. Bello, L.L.; Mariani, R.; Mubeen, S.; Saponara, S. Recent Advances and Trends in On-board Embedded and Networked Automotive Systems. IEEE Trans. Ind. Inform. 2019, 15, 1038–1051.

39. Parkinson, P.; Kinnan, L. Safety-critical software development for integrated modular avionics. Embed. Syst. Eng. 2003, 11, 40–41.

40. Kleidermacher, D.; Wolf, M. MILS virtualization for integrated modular avionics. In Proceedings of the 2008 IEEE/AIAA 27th Digital Avionics Systems Conference, St. Paul, MN, USA, 26–30 October 2008.

41. Kaiwartya, O.; Abdullah, A.H.; Cao, Y.; Lloret, J.; Kumar, S.; Shah, R.R.; Prasad, M.; Prakash, S. Virtualization in wireless sensor networks: Fault tolerant embedding for internet of things. IEEE Internet Things J. 2018, 5, 571–580.

42. Champa, H. An Extensive Review on Sensing as a Service Paradigm in IoT: Architecture, Research Challenges, Lessons Learned and Future Directions. Int. J. Appl. Eng. Res. 2019, 14, 1220–1243.

43. Yedder, H.B.; Ding, Q.; Zakia, U.; Li, Z.; Haeri, S.; Trajkovic, L. Comparison of virtualization algorithms and topologies for data center networks. In Proceedings of the 2017 26th International Conference on Computer Communication and Networks (ICCCN), Vancouver, BC, Canada, 31 July–3 August 2017; pp. 1–6.

44. Horváth, Á.; Varró, D. Model-driven development of ARINC 653 configuration tables. In Proceedings of the 29th Digital Avionics Systems Conference, Salt Lake City, UT, USA, 3–7 October 2010; pp. 6.E.3-1–6.E.3-15.

45. Magableh, B.; Almiani, M. A Self Healing Microservices Architecture: A Case Study in Docker Swarm Cluster. In Proceedings of the International Conference on Advanced Information Networking and Applications, Matsue, Japan, 27–29 March 2019; Springer: Berlin, Germany, 2019; pp. 846–858.

46. PE/PSRCC. IEEE Standard for SCADA and Automation Systems, IEEE Std C37.1 (Revision of IEEE Std C37.1-1994); IEEE: Piscataway, NJ, USA, 2008.

47. Zhu, K.; Chenine, M.; Nordstrom, L. ICT architecture impact on wide area monitoring and control systems’ reliability. IEEE Trans. Power Deliv. 2011, 26, 2801–2808.

| Domain | Literature | Goals | Methods |
|---|---|---|---|
| Communication (NFV) | [20,21,22,24,25,26] | Scalability, cost reduction, fast integration | Implementing network functions as pure software elements that can run on standardized hardware |
| Communication (SDN) | [2,11,14,15,28,29] | Resiliency, easy management | Abstracting the network control function into a logical, programmable entity |
| WSN | [13,31,41,42] | Flexibility in deployment | Virtual sensor networks, sensing-as-a-service model |
| Data Center | [32,33,43] | Performance improvement, cost reduction | Server consolidation, cloud-based operation |
| Avionics Systems | [39,40,44] | Cost reduction | Embedded hypervisor technology |

| Grid Service | Acceptable Delay (s) | Update Periodicity (s) | Data Rate (pps) |
|---|---|---|---|
| Coordinated Voltage Control (CVC) | 1 | 2 | 0.5 |
| State Estimation (SE) | 10 | 15 | 0.067 |
| Unit Commitment (UC) | 0.4 | 1.5 | 0.67 |
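In the table above, the Data Rate column is consistent with the Update Periodicity column if each update is assumed to be one packet, so the rate is the reciprocal of the period (for CVC, 1/2 s = 0.5 pps). The sketch below recomputes that column under this assumption and adds a hypothetical `meets_delay` check against the Acceptable Delay column; the class and function names are illustrative, not part of the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GridServiceRequirement:
    """Communication requirements of one grid service (values from the table)."""
    name: str
    acceptable_delay_s: float
    update_period_s: float

    @property
    def data_rate_pps(self) -> float:
        # Assumption: one packet per update period, so rate = 1 / period.
        return 1.0 / self.update_period_s


def meets_delay(req: GridServiceRequirement, measured_delay_s: float) -> bool:
    """Hypothetical check: is a measured end-to-end delay acceptable?"""
    return measured_delay_s <= req.acceptable_delay_s


REQUIREMENTS = [
    GridServiceRequirement("CVC", acceptable_delay_s=1.0, update_period_s=2.0),
    GridServiceRequirement("SE", acceptable_delay_s=10.0, update_period_s=15.0),
    GridServiceRequirement("UC", acceptable_delay_s=0.4, update_period_s=1.5),
]

for req in REQUIREMENTS:
    print(f"{req.name}: {req.data_rate_pps:.3f} pps")
# CVC: 0.500 pps
# SE: 0.067 pps
# UC: 0.667 pps
```

The table reports the UC rate rounded to two decimals (0.67); the underlying value is 1/1.5 ≈ 0.667 pps.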

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Attarha, S.; Narayan, A.; Hage Hassan, B.; Krüger, C.; Castro, F.; Babazadeh, D.; Lehnhoff, S. Virtualization Management Concept for Flexible and Fault-Tolerant Smart Grid Service Provision. Energies 2020, 13, 2196. https://doi.org/10.3390/en13092196
