AdViSED: Advanced Video SmokE Detection for Real-Time Measurements in Antifire Indoor and Outdoor Systems

Abstract

1. Introduction

2. State-of-the-Art Review of Video-Based Smoke Detection Algorithms

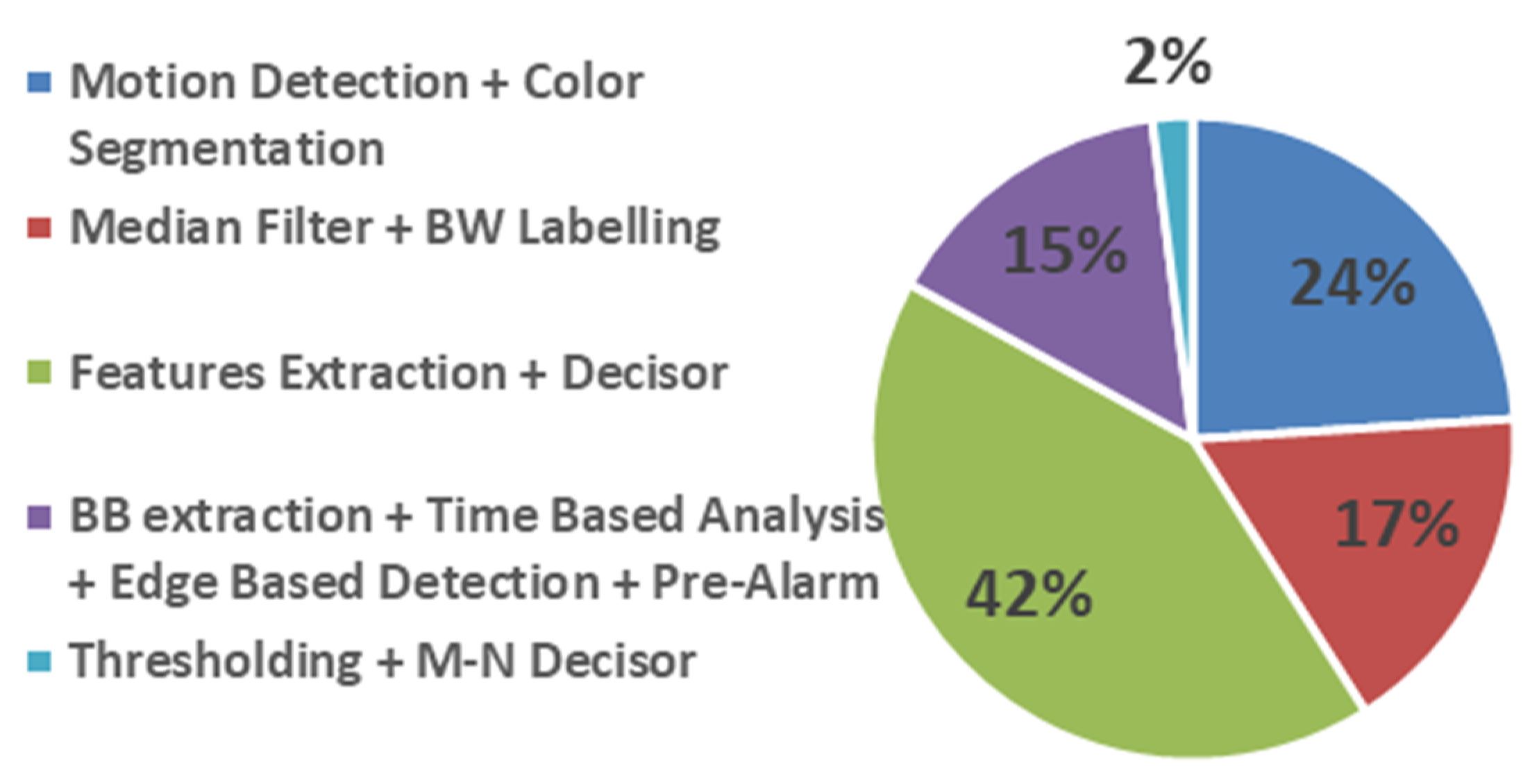

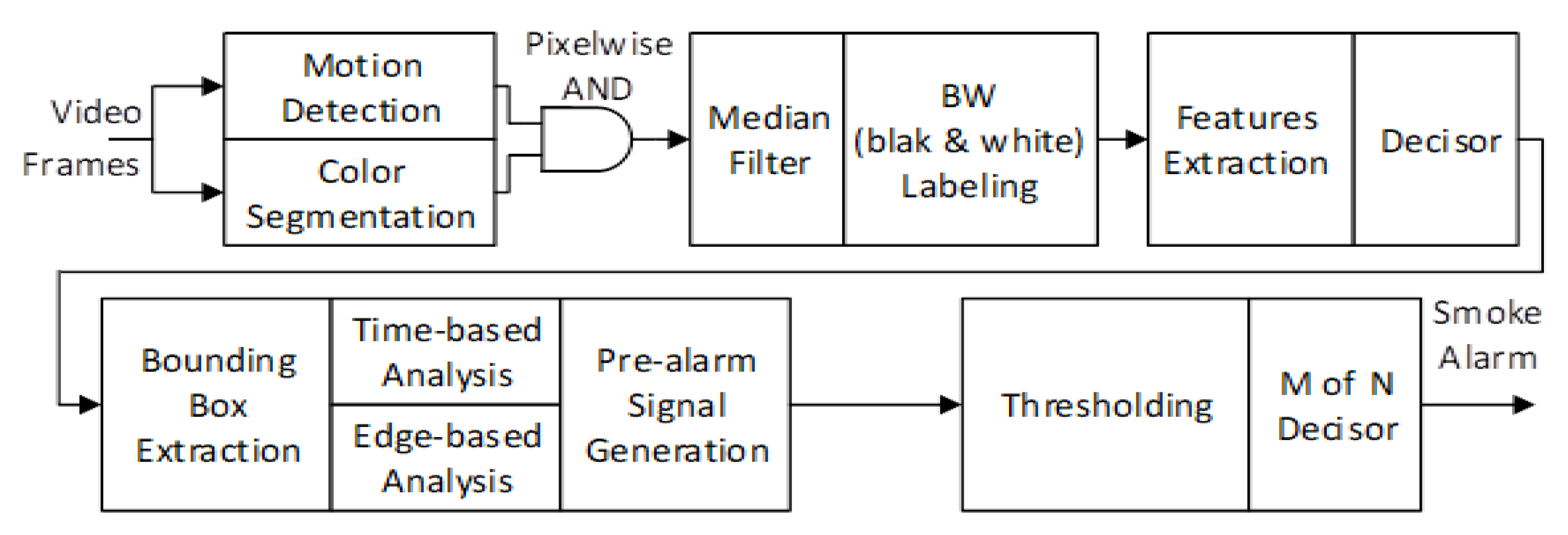

3. AdViSED Fast Measuring System

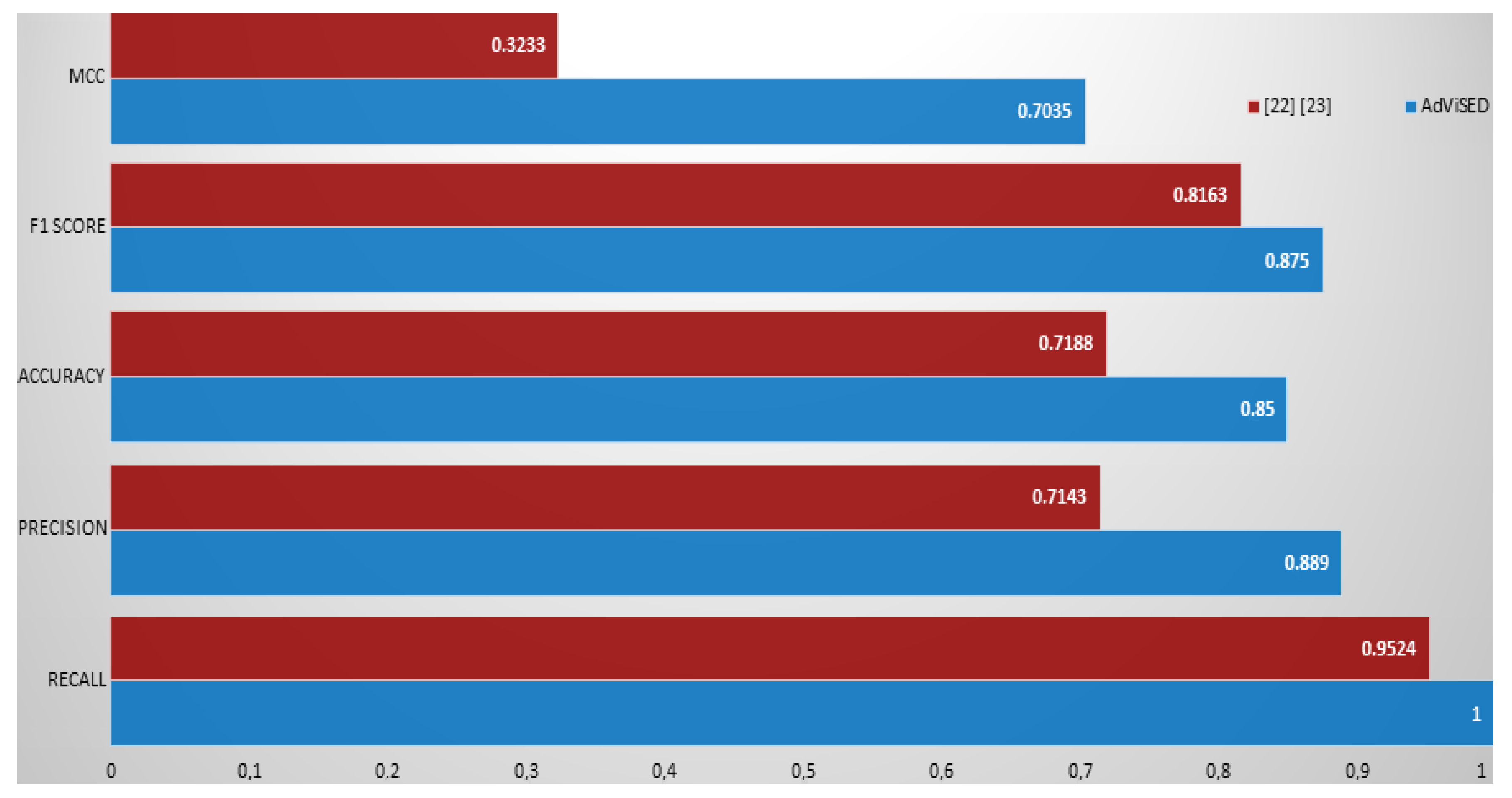

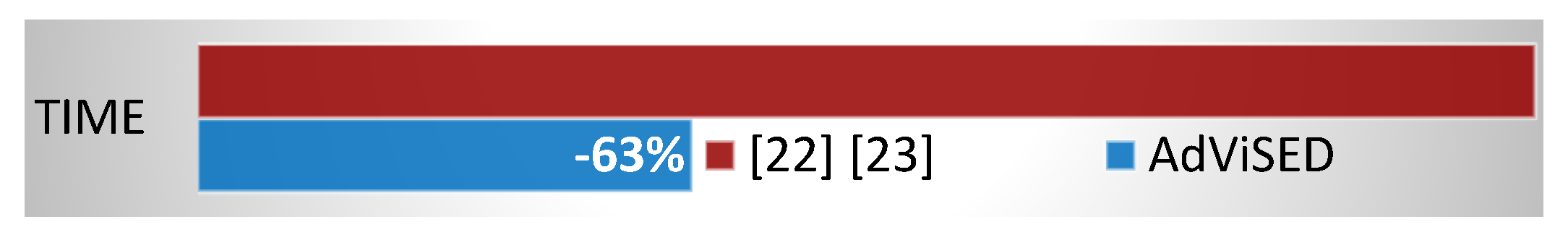

3.1. Motion Detection

3.2. Color Segmentation and Blob Labeling

3.3. Blob Feature Extraction

- Area is the number of pixels in the region studied.

- Turbulence is calculated as.

- Extent is the ratio between the number of pixels in the region and the number of pixels in its bounding box.

- Convex area is the area enclosed by the convex hull of the region, i.e., the polygon without concave angles, lying inside the bounding box, whose corners are the outermost pixels of the region.

- Eccentricity is the eccentricity of the ellipse associated with the bounding box: 0 for a circle, approaching 1 as the ellipse elongates towards a line segment.
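The region features listed above can be computed directly from a binary blob mask. The sketch below is a minimal NumPy version written under stated assumptions, not the authors' implementation: the turbulence formula is not reproduced in this version of the text, so `turbulence` here is a placeholder defined as perimeter²/area; eccentricity follows the literal reading "ellipse of the bounding box" (the ellipse inscribed in the box); convex area is the shoelace area of the convex hull (Andrew's monotone chain) taken over pixel corners; the perimeter is counted as the number of pixel edges between blob and background. The function name `blob_features` is hypothetical.

```python
import math

import numpy as np


def _cross(o, a, b):
    # z-component of (a - o) x (b - o); > 0 means a counter-clockwise turn
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def _convex_hull(points):
    # Andrew's monotone chain; returns hull vertices in counter-clockwise order
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def _polygon_area(poly):
    # Shoelace formula for a simple polygon given as a list of (x, y) vertices
    if len(poly) < 3:
        return 0.0
    s = 0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def blob_features(mask):
    """Shape features of a single binary blob (True/1 = blob pixel)."""
    mask = np.asarray(mask, dtype=bool)
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        raise ValueError("mask contains no blob pixels")

    area = int(rows.size)                          # pixels in the region
    h = int(rows.max() - rows.min()) + 1           # bounding-box height
    w = int(cols.max() - cols.min()) + 1           # bounding-box width
    extent = area / (h * w)                        # region pixels / box pixels

    # Convex hull over pixel corners, so a filled rectangle has convex_area == area
    corners = [(c + dx, r + dy)
               for r, c in zip(rows.tolist(), cols.tolist())
               for dx in (0, 1) for dy in (0, 1)]
    convex_area = _polygon_area(_convex_hull(corners))

    # Perimeter = number of pixel edges separating blob from background
    p = np.pad(mask, 1)
    perimeter = int(np.count_nonzero(p[1:, :] != p[:-1, :]) +
                    np.count_nonzero(p[:, 1:] != p[:, :-1]))

    # ASSUMPTION: placeholder turbulence = perimeter^2 / area
    turbulence = perimeter ** 2 / area

    # ASSUMPTION: ellipse inscribed in the bounding box (semi-axes w/2 and h/2)
    a, b = max(h, w) / 2.0, min(h, w) / 2.0
    eccentricity = math.sqrt(1.0 - (b / a) ** 2)

    return {"area": area, "perimeter": perimeter, "turbulence": turbulence,
            "extent": extent, "convex_area": convex_area,
            "eccentricity": eccentricity}


if __name__ == "__main__":
    frame = np.zeros((30, 40), dtype=bool)
    frame[5:15, 10:30] = True                      # a 10 x 20 rectangular blob
    print(blob_features(frame))
```

Building the hull on pixel corners rather than pixel centres keeps convex area and area on the same scale, so their ratio stays in (0, 1].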

3.4. Bounding Box Extraction and Time/Edge Analysis

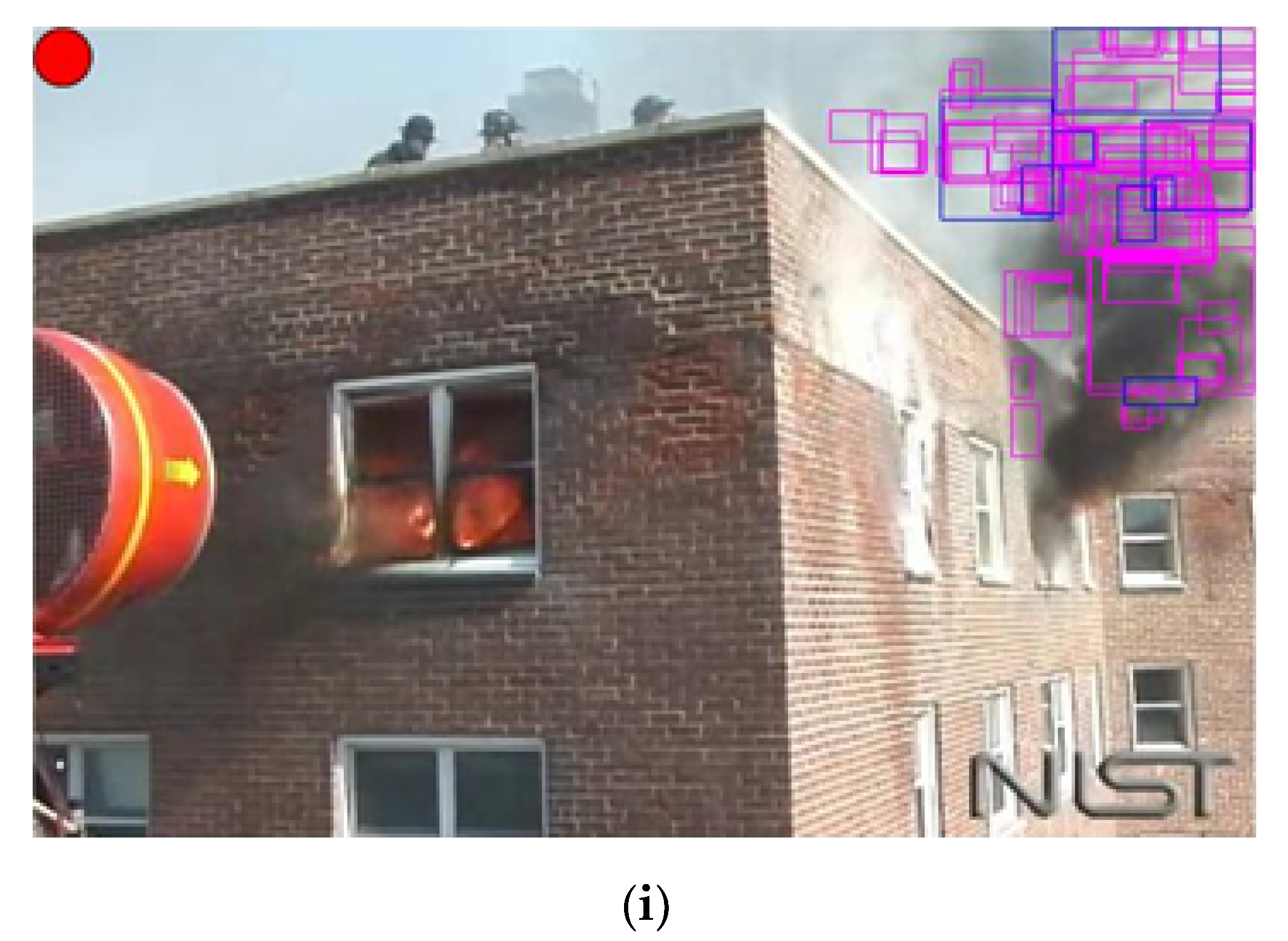

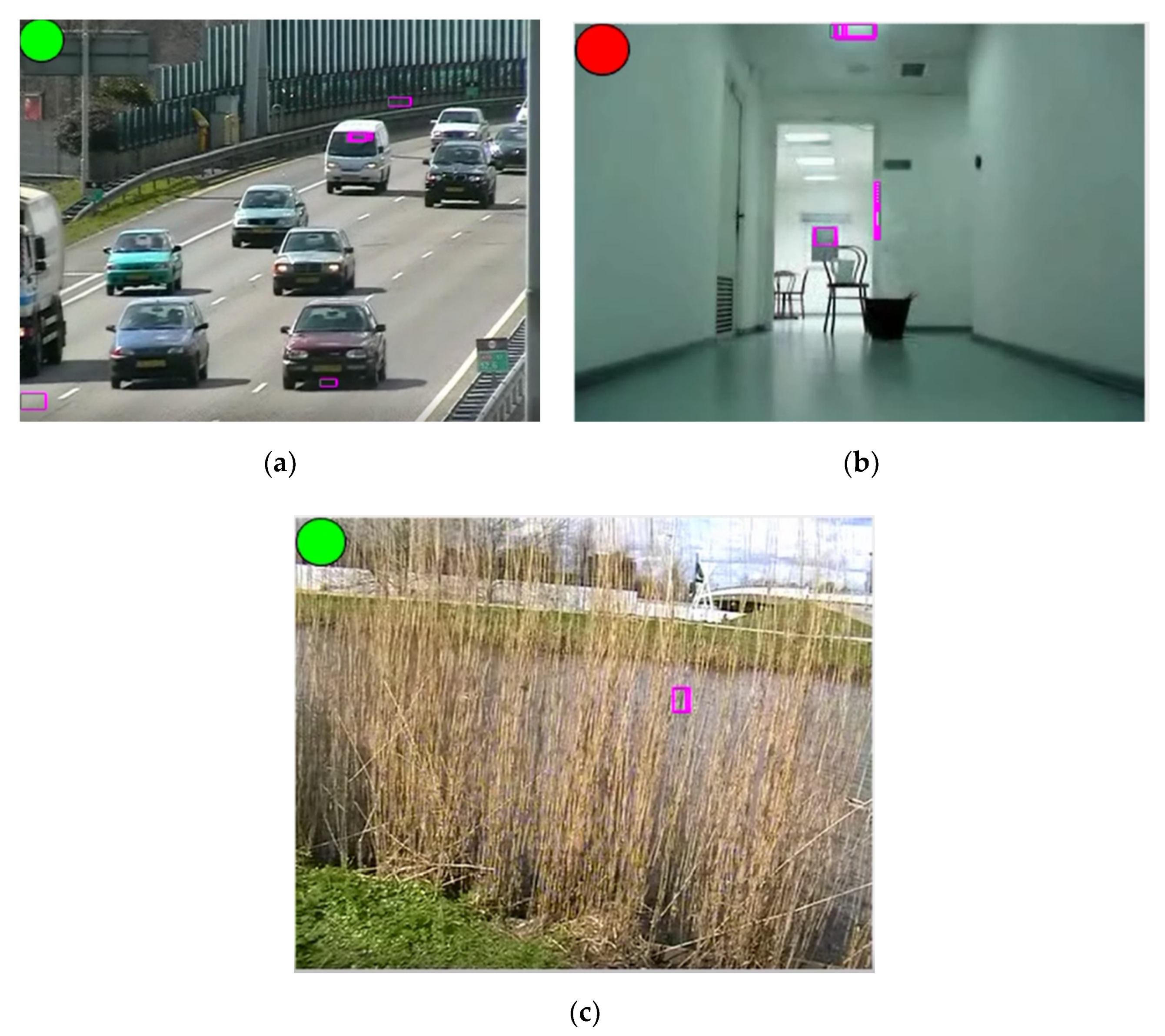

3.5. Pre-Alarm Signal and Smoke Alarm

3.6. Pre-Alarm Signal and Smoke Alarm

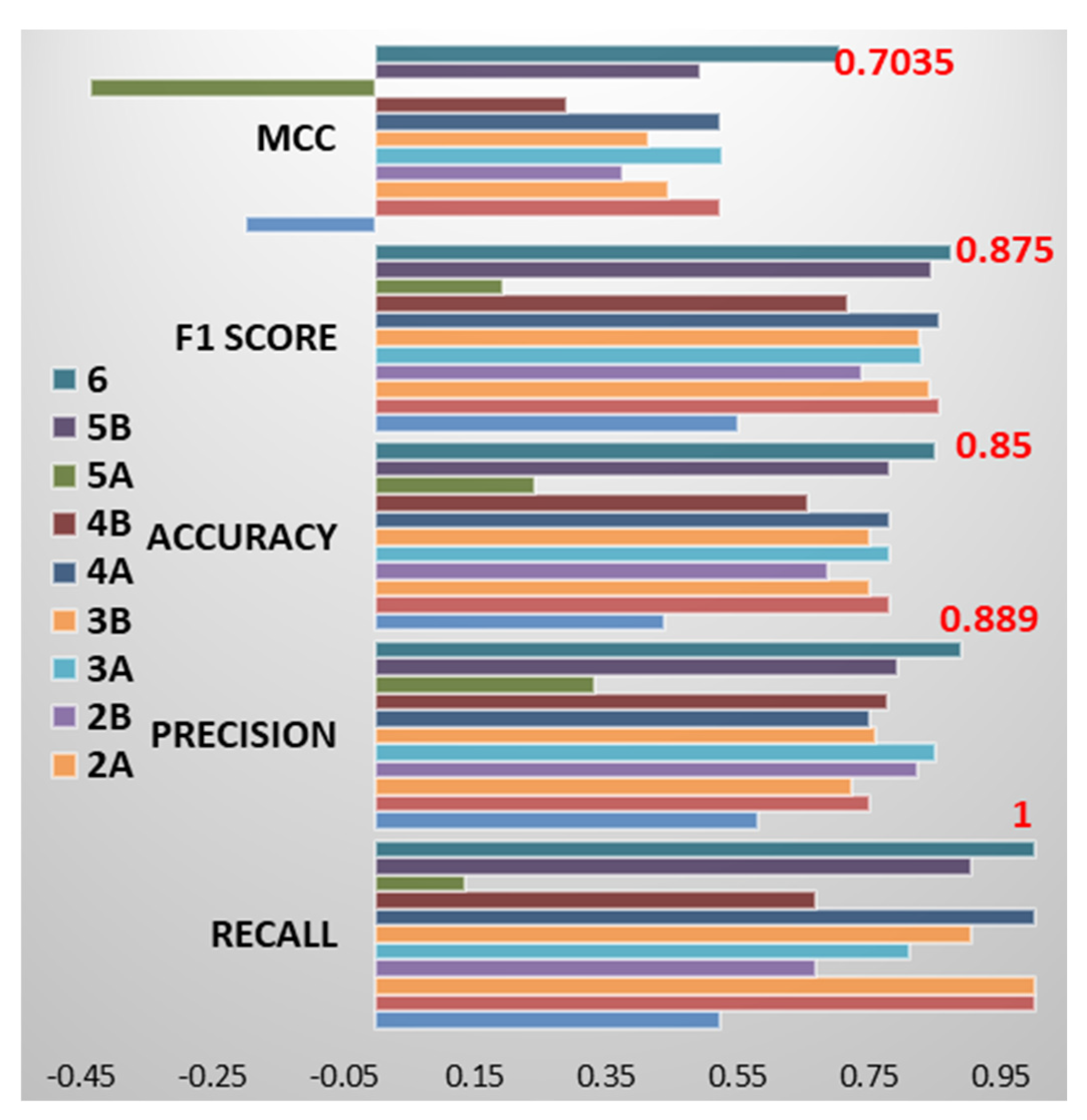

4. AdViSED Thresholds Analysis

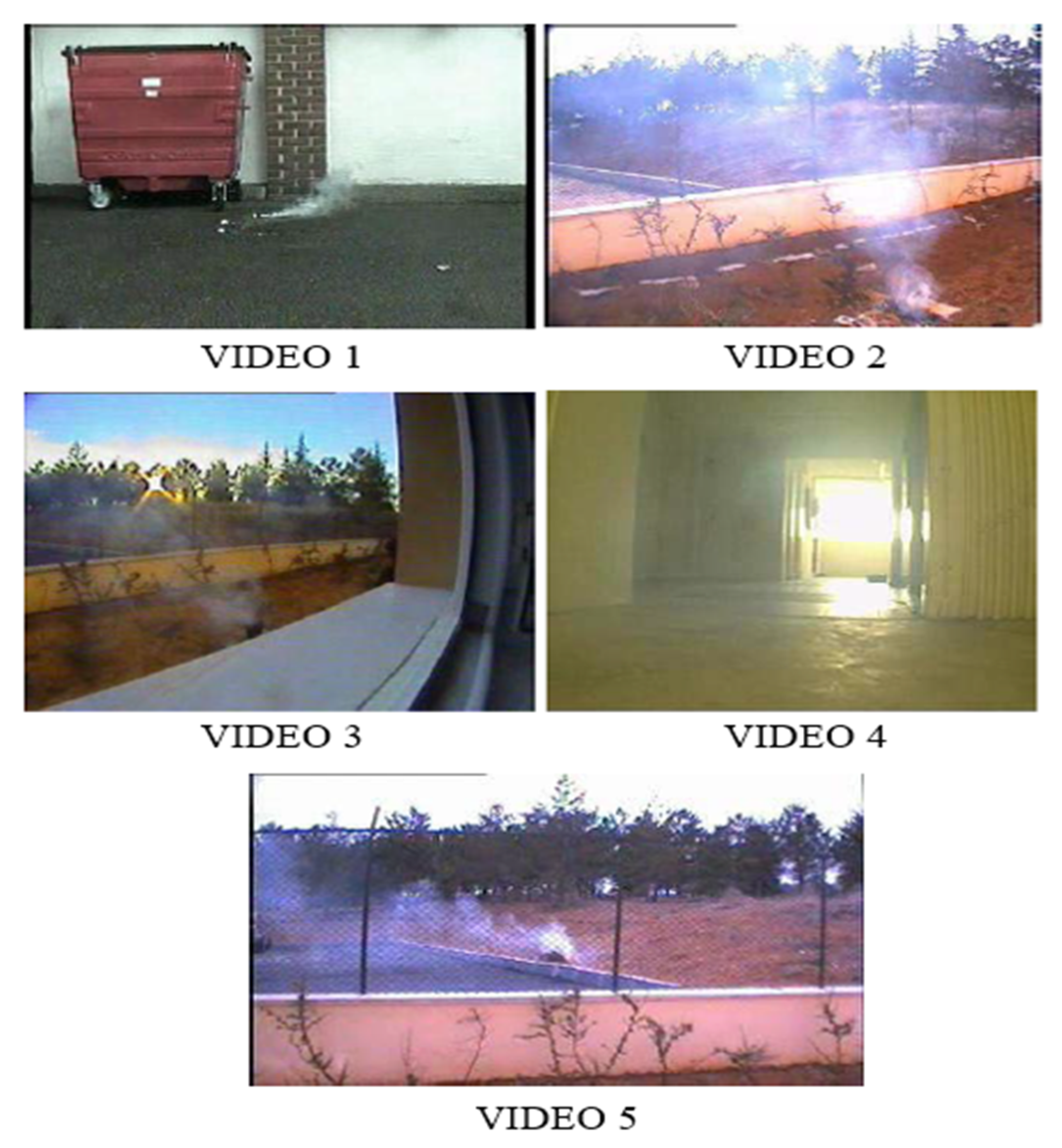

5. Performance and Complexity Results

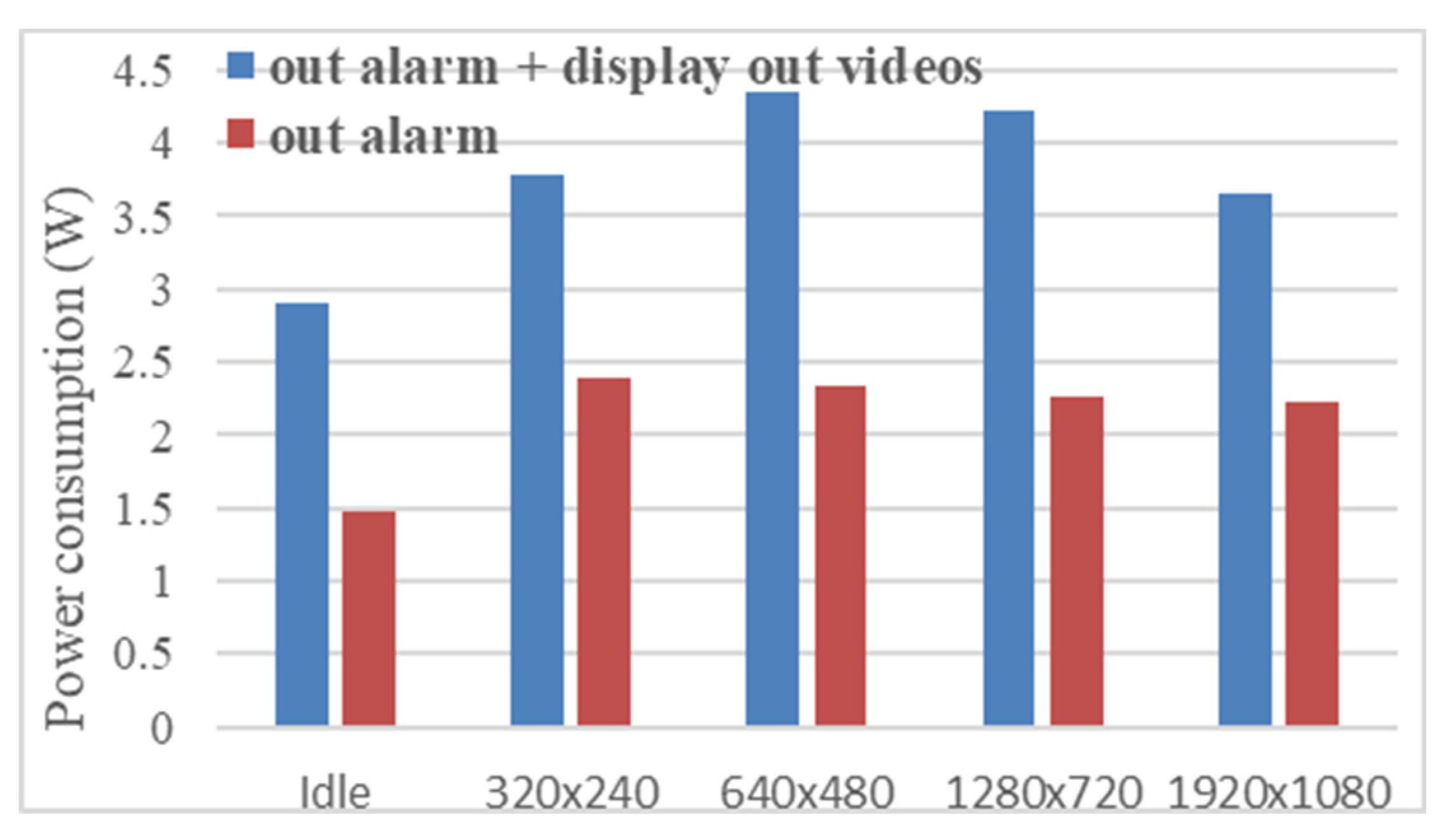

6. Real-Time Embedded Platform Implementation

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Zhuang, J.; Payyappalli, V.; Behrendt, A.; Lukasiewicz, K. Total Cost of Fire in the United States; NFPA National Fire Protection Association: Buffalo, NY, USA, 2017; Available online: https://www.nfpa.org/News-and-Research/Data-research-and-tools/US-Fire-Problem/Total-cost-of-fire-in-the-United-States (accessed on 21 April 2020).

2. Toreyin, B.U.; Soyer, E.B.; Urfalioglu, O.; Cetin, A.E. Flame detection using PIR sensors. In Proceedings of the IEEE 16th Signal Processing, Communication and Applications Conference, Aydin, Turkey, 20–22 April 2008; pp. 1–4.

3. Erden, F.; Töreyin, B.U.; Soyer, E.B.; Inac, I.; Günay, O.; Köse, K.; Çetin, A.E. Wavelet based flame detection using differential PIR sensors. In Proceedings of the IEEE 20th Signal Processing and Communications Applications Conference (SIU), Mugla, Turkey, 18–20 April 2012; pp. 1–4.

4. Ge, Q.; Wen, C.; Duan, S.A. Fire localization based on range-range-range model for limited interior space. IEEE Trans. Instrum. Meas. 2014, 63, 2223–2237.

5. Aleksic, Z.J. The analysis of the transmission-type optical smoke detector threshold sensitivity to the high rate temperature variations. IEEE Trans. Instrum. Meas. 2004, 53, 80–85.

6. Aleksic, Z.J. Minimization of the optical smoke detector false alarm probability by optimizing its frequency characteristic. IEEE Trans. Instrum. Meas. 2000, 49, 37–42.

7. Aleksic, Z.J. Evaluation of the design requirements for the electrical part of transmission-type optical smoke detector to improve its threshold stability to slowly varying influences. IEEE Trans. Instrum. Meas. 2000, 49, 1057–1062.

8. Amer, H.; Daoud, R. Fault-Secure Multidetector Fire Protection System for Trains. IEEE Trans. Instrum. Meas. 2007, 56, 770–777.

9. Amer, H.H.; Daoud, R.M. Fault-Secure Multidetector Fire Protection System for Trains. In Proceedings of the Instrumentation and Measurement Technology Conference (IEEE I2MTC), Ottawa, ON, Canada, 17–19 May 2005; pp. 1664–1668.

10. Wabtec Corp. Available online: www.wabtec.com/products/8582/smir%E2%84%A2 (accessed on 10 January 2020).

11. Wabtec Corp. Available online: www.wabtec.com/products/8579/ifds-r (accessed on 10 January 2020).

12. Gagliardi, A.; Saponara, S. Distributed video antifire surveillance system based on IoT embedded computing nodes. In Applications in Electronics Pervading Industry, Environment and Society (ApplePies 2019); Springer LNEE: Cham, Switzerland, 2020; Volume 627, pp. 405–411.

13. Gunay, O.; Toreyin, B.U.; Kose, K.; Cetin, A.E. Entropy-functional-based online adaptive decision fusion framework with application to wildfire detection in video. IEEE Trans. Image Process. 2012, 21, 2853–2865.

14. Vijayalakshmi, S.; Muruganand, S. Smoke detection in video images using background subtraction method for early fire alarm system. In Proceedings of the 2017 2nd International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 19–20 October 2017; pp. 167–171.

15. Barnich, O.; Van Droogenbroeck, M. ViBe: A powerful random technique to estimate the background in video sequences. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Taipei, Taiwan, 19–24 April 2009; pp. 945–948.

16. Zhang, Q.; Liu, F.; Li, X.; Li, B. Dissipation Function and ViBe Based Smoke Detection in Video. In Proceedings of the 2nd International Conference on Multimedia and Image Processing (ICMIP), Wuhan, China, 17–19 March 2017; pp. 325–329.

17. Yuanbin, W. Smoke Recognition Based on Machine Vision. In Proceedings of the International Symposium on Computer, Consumer and Control (IS3C), Xi’an, China, 4–6 July 2016; pp. 668–671.

18. Tao, C.; Zhang, J.; Wang, P. Smoke Detection Based on Deep Convolutional Neural Networks. In Proceedings of the 2016 International Conference on Industrial Informatics—Computing Technology, Intelligent Technology, Industrial Information Integration (ICIICII), Wuhan, China, 3–4 December 2016; pp. 150–153.

19. Ridder, C.; Munkelt, O.; Kirchner, H. Adaptive Background Estimation and Foreground Detection using Kalman-Filtering. In Proceedings of the International Conference on Recent Advances in Mechatronics (ICRAM), Istanbul, Turkey, 14–16 August 1995; pp. 193–199.

20. Millan-Garcia, L.; Sanchez-Perez, G.; Nakano, M.; Toscano-Medina, K.; Perez-Meana, H.; Rojas-Cardenas, L. An early fire detection algorithm using IP cameras. Sensors 2012, 12, 5670–5686.

21. Wang, Y.; Chua, T.W.; Chang, R.; Pham, N.T. Real-time smoke detection using texture and color features. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012; pp. 1727–1730.

22. Saponara, S.; Pilato, L.; Fanucci, L. Early Video Smoke Detection System to Improve Fire Protection in Rolling Stocks. Proc. SPIE 2014, 9139, 913903.

23. Saponara, S.; Pilato, L.; Fanucci, L. Exploiting CCTV camera system for advanced passenger services on-board trains. In Proceedings of the IEEE International Smart Cities Conference (ISC2), Trento, Italy, 12–15 September 2016; pp. 1–6.

24. Flir Corp. Available online: https://flir.netx.net/file/asset/8888/original (accessed on 10 January 2020).

25. Brisley, P.M.; Lu, G.; Yan, Y.; Cornwell, S. Three-dimensional temperature measurement of combustion flames using a single monochromatic CCD camera. IEEE Trans. Instrum. Meas. 2005, 54, 1417–1421.

26. Qiu, T.; Yan, Y.; Lu, G. An autoadaptive edge-detection algorithm for flame and fire image processing. IEEE Trans. Instrum. Meas. 2011, 61, 1486–1493.

27. Foggia, P.; Saggese, A.; Vento, M. Real-time fire detection for video surveillance applications using a combination of experts based on color, shape, and motion. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 1545–1556.

28. Muhammad, K.; Ahmad, J.; Mehmood, I.; Rho, S.; Baik, S.W. Convolutional neural networks based fire detection in surveillance videos. IEEE Access 2018, 6, 18174–18183.

29. Filonenko, A.; Hernández, D.C.; Jo, K.H. Fast smoke detection for video surveillance using CUDA. IEEE Trans. Ind. Inform. 2017, 14, 725–733.

30. Zhao, C.; Sain, A.; Qu, Y.; Ge, Y.; Hu, H. Background Subtraction based on Integration of Alternative Cues in Freely Moving Camera. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 1933–1945.

31. Wu, Y.; He, X.; Nguyen, T.Q. Moving object detection with a freely moving camera via background motion subtraction. IEEE Trans. Circuits Syst. Video Technol. 2015, 27, 236–248.

32. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

33. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.

34. Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

35. Iandola, F.; Keutzer, K. Small neural nets are beautiful: Enabling embedded systems with small deep-neural-network architectures. In Proceedings of the Twelfth IEEE/ACM/IFIP International Conference on Hardware/Software Codesign and System Synthesis Companion, Seoul, Korea, 15–20 October 2017; p. 1.

36. Çetin, A.E.; Dimitropoulos, K.; Gouverneur, B.; Grammalidis, N.; Günay, O.; Habiboǧlu, Y.H.; Verstockt, S. Video fire detection—Review. Digit. Signal Process. 2013, 23, 1827–1843.

37. Sample Smoke Video Clips. Available online: http://signal.ee.bilkent.edu.tr/VisiFire/Demo/SampleClips.html (accessed on 10 January 2020).

38. Grammalidis, N.; Dimitropoulos, K.; Cetin, E. Firesense Database of Videos for Flame and Smoke Detection. Available online: https://zenodo.org/record/836749 (accessed on 10 January 2020).

39. Dimitropoulos, K.; Barmpoutis, P.; Grammalidis, N. Spatio-temporal flame modeling and dynamic texture analysis for automatic video-based fire detection. IEEE Trans. Circuits Syst. Video Technol. 2014, 25, 339–351.

40. Toreyin, B.U.; Dedeoglu, Y.; Cetin, A.E. Contour based smoke detection in video using wavelets. In Proceedings of the 14th European Signal Processing Conference, Florence, Italy, 4–8 September 2006; pp. 1–5.

41. Töreyin, B.U.; Dedeoğlu, Y.; Cetin, A.E. Wavelet based real-time smoke detection in video. In Proceedings of the 13th European Signal Processing Conference, Antalya, Turkey, 4–8 September 2005; pp. 1–4.

42. Yu, C.; Fang, J.; Wang, J.; Zhang, Y. Video fire smoke detection using motion and color features. Fire Technol. 2010, 46, 651–663.

43. Yuan, F.; Fang, Z.; Wu, S.; Yang, Y.; Fang, Y. Real-time image smoke detection using staircase searching-based dual threshold AdaBoost and dynamic analysis. IET Image Process. 2015, 9, 849–856.

44. Celik, T.; Demirel, H. Fire detection in video sequences using a generic color model. Fire Saf. J. 2009, 44, 147–158.

45. Raspberry Pi Foundation. Available online: https://www.raspberrypi.org/ (accessed on 10 January 2020).

46. Raspbian OS. Available online: https://www.raspberrypi.org/downloads/raspbian/ (accessed on 10 January 2020).

47. RPI Camera Module v.1.3. Available online: https://www.raspberrypi.org/documentation/hardware/camera/ (accessed on 10 January 2020).

| | Smoke Presence | No Smoke Presence |
|---|---|---|
| Alarm | TP | FP |
| No Alarm | FN | TN |
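The confusion matrix above is the basis for the usual detection metrics: TP counts smoke cases that raised an alarm, FP alarms without smoke, FN smoke cases that raised no alarm, and TN cases without smoke and without alarm. The sketch below computes the standard ratios from these four counts; the class name `ConfusionCounts` and the example counts are hypothetical and are not results from this paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # smoke present, alarm raised
    fp: int  # no smoke, alarm raised (false alarm)
    fn: int  # smoke present, no alarm (missed detection)
    tn: int  # no smoke, no alarm

    @staticmethod
    def _ratio(num, den):
        # Return NaN instead of raising when a denominator is zero
        return num / den if den else float("nan")

    @property
    def precision(self):
        return self._ratio(self.tp, self.tp + self.fp)

    @property
    def recall(self):  # detection rate / sensitivity
        return self._ratio(self.tp, self.tp + self.fn)

    @property
    def false_alarm_rate(self):
        return self._ratio(self.fp, self.fp + self.tn)

    @property
    def accuracy(self):
        return self._ratio(self.tp + self.tn, self.tp + self.fp + self.fn + self.tn)

    @property
    def f1(self):
        # Harmonic mean of precision and recall, written directly in counts
        return self._ratio(2 * self.tp, 2 * self.tp + self.fp + self.fn)


if __name__ == "__main__":
    c = ConfusionCounts(tp=80, fp=5, fn=20, tn=95)  # illustrative counts only
    print(c.precision, c.recall, c.false_alarm_rate, c.accuracy, c.f1)
```

For an antifire system the false alarm rate and the recall are usually read together, since lowering one threshold typically trades missed detections for false alarms.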

| Set | Sat | Foreig. | Area | Turb. | Overlap | Ecc. | ConvArea | Ext min | Ext max |
|---|---|---|---|---|---|---|---|---|---|
| 1A | 0.2 | 0.8 | 2000 | 55 | 14 | 0.99 | 0.5 | 0.2 | 0.95 |
| 1B | 0.2 | 0.8 | 2000 | 55 | 4 | 0.99 | 0.5 | 0.2 | 0.95 |
| 2A | 0.5 | 0.8 | 3000 | 70 | 7 | 0.99 | 0.5 | 0.2 | 0.95 |
| 2B | 0.2 | 0.16 | 1000 | 40 | 7 | 0.99 | 0.5 | 0.2 | 0.95 |
| 3A | 0.2 | 0.04 | 2000 | 55 | 7 | 0.99 | 0.5 | 0.2 | 0.95 |
| 3B | 0.2 | 0.8 | 2000 | 55 | 7 | 0.99 | 0.5 | 0.2 | 0.95 |
| 4A | 0.6 | 0.8 | 2000 | 55 | 7 | 0.99 | 0.5 | 0.2 | 0.95 |
| 4B | 0.1 | 0.8 | 2000 | 55 | 7 | 0.99 | 0.5 | 0.2 | 0.95 |
| 5A | 0.2 | 0.8 | 2000 | 55 | 7 | 1 | 0.9 | 0.1 | 0.99 |
| 5B | 0.2 | 0.8 | 2000 | 55 | 7 | 0.7 | 0.1 | 0.4 | 0.80 |
| 6 | 0.2 | 0.8 | 2000 | 55 | 7 | 0.99 | 0.5 | 0.2 | 0.95 |
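The threshold sets above can be read as parameters of per-pixel and per-blob tests. The sketch below applies set 6 under assumptions that this version of the text does not confirm: "Sat" is taken as an upper bound on HSV saturation (smoke tends to be greyish), "Area" as a minimum blob size, "Ecc." as a maximum eccentricity, and "Ext min"/"Ext max" as the admissible extent range. "Foreig.", "Turb.", "Overlap" and "ConvArea" are left out because their exact role is not described here. The names `SET_6`, `low_saturation_mask` and `passes_shape_thresholds` are hypothetical.

```python
import numpy as np

# Subset of threshold set 6 from the table above (column meanings assumed)
SET_6 = {"sat": 0.2, "area": 2000, "ecc": 0.99, "ext_min": 0.2, "ext_max": 0.95}


def low_saturation_mask(rgb, sat_max):
    """Per-pixel HSV saturation S = (max - min) / max, kept where S < sat_max.

    ASSUMPTION: 'Sat' is an upper bound on saturation; rgb values in [0, 1].
    """
    rgb = np.asarray(rgb, dtype=float)
    cmax = rgb.max(axis=-1)
    cmin = rgb.min(axis=-1)
    # Black pixels (cmax == 0) get saturation 0 instead of a division by zero
    sat = np.divide(cmax - cmin, cmax, out=np.zeros_like(cmax), where=cmax > 0)
    return sat < sat_max


def passes_shape_thresholds(area, extent, eccentricity, th=SET_6):
    """ASSUMPTION: area is a lower bound, eccentricity an upper bound,
    and extent must lie within [ext_min, ext_max]."""
    return (area >= th["area"]
            and th["ext_min"] <= extent <= th["ext_max"]
            and eccentricity <= th["ecc"])
```

Keeping each set as a plain dictionary makes it straightforward to rerun the same tests with sets 1A to 5B when comparing configurations.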

| Video in Figure 8 | Total Frames | Smoke Frames | Toreyin [36,40,41] Correct Detected | Toreyin [36,40,41] False Alarm | Wang [21] Correct Detected | Wang [21] False Alarm | AdViSED Correct Detected | AdViSED False Alarm |
|---|---|---|---|---|---|---|---|---|
| n.1 | 900 | 805 | 805 | 2 | 836 | 0 | 900 | 0 |
| n.2 | 900 | 651 | 651 | 4 | 711 | 2 | 900 | 0 |
| n.3 | 483 | 408 | 235 | 0 | 337 | 0 | 483 | 0 |
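The detection table can be summarized per video by dividing each method's "Correct Detected" count by the total number of frames. The sketch below assumes that "Correct Detected" counts frames classified correctly (smoke or no smoke), a reading consistent with AdViSED reaching the full frame count but not stated explicitly in this version of the text. The data are copied from the table; `RESULTS`, `correct_ratio` and `total_false_alarms` are hypothetical names.

```python
# video -> (total_frames, {method: (correct_detected, false_alarms)})
RESULTS = {
    "n.1": (900, {"Toreyin": (805, 2), "Wang": (836, 0), "AdViSED": (900, 0)}),
    "n.2": (900, {"Toreyin": (651, 4), "Wang": (711, 2), "AdViSED": (900, 0)}),
    "n.3": (483, {"Toreyin": (235, 0), "Wang": (337, 0), "AdViSED": (483, 0)}),
}


def correct_ratio(video, method):
    """Share of frames counted as 'Correct Detected' (ASSUMED per-frame count)."""
    total, per_method = RESULTS[video]
    correct, _ = per_method[method]
    return correct / total


def total_false_alarms(method):
    """Sum of false alarms for one method over the three videos."""
    return sum(per_method[method][1] for _, per_method in RESULTS.values())


if __name__ == "__main__":
    for video in RESULTS:
        row = ", ".join(f"{m}: {correct_ratio(video, m):.1%}"
                        for m in ("Toreyin", "Wang", "AdViSED"))
        print(video, row)
```

On video n.3, for instance, 235 of 483 frames corresponds to roughly 48.7% for Toreyin [36,40,41].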

Delay in Smoke Detection (in n. frames):

| Video in Figure 8 | Total Frames | Millan-Garcia [20] | Yu [42] | Toreyin [36,40,41] | AdViSED |
|---|---|---|---|---|---|
| n.1 | 900 | 805 | 86 | 98 | 9 |
| n.2 | 900 | 651 | 121 | 127 | 19 |
| n.3 | 483 | 408 | 118 | 132 | 120 |

| Platform / Frame Size | 320 × 480 | 640 × 480 | 1280 × 720 | 1920 × 1080 | 1920 × 1440 |
|---|---|---|---|---|---|
| X86 64b PC | 19.1 fps | 9.32 fps | 4.23 fps | N/A | 1.54 fps |
| RPi 3 model B | 10.3 fps | 2.33 fps | 0.64 fps | 0.27 fps | N/A |

| Platform / Frame Size | 320 × 480 | 640 × 480 | 1280 × 720 | 1920 × 1080 | 1920 × 1440 |
|---|---|---|---|---|---|
| X86 64b PC | 29.3 fps | 17.5 fps | 6.07 fps | N/A | 1.81 fps |
| RPi 3 model B | 13.4 fps | 4.47 fps | 1.38 fps | 0.87 fps | N/A |
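Frame rates translate into per-frame processing times through t = 1000 / fps milliseconds, which is the quantity to compare against the camera frame period when judging real-time behaviour. The sketch below converts the values of the first of the two frame-rate tables; `FPS`, `per_frame_ms` and `latency_rows` are hypothetical names, and `None` stands for the N/A cells.

```python
FRAME_SIZES = ["320 × 480", "640 × 480", "1280 × 720", "1920 × 1080", "1920 × 1440"]

# Frame rates copied from the first frame-rate table; None marks an N/A cell
FPS = {
    "X86 64b PC": [19.1, 9.32, 4.23, None, 1.54],
    "RPi 3 model B": [10.3, 2.33, 0.64, 0.27, None],
}


def per_frame_ms(fps):
    """Average processing time per frame in milliseconds (1000 / fps)."""
    return None if fps is None else 1000.0 / fps


def latency_rows(table):
    """Map each platform to 'size: latency' strings, one per frame size."""
    rows = {}
    for platform, rates in table.items():
        cells = []
        for size, fps in zip(FRAME_SIZES, rates):
            ms = per_frame_ms(fps)
            cells.append(f"{size}: N/A" if ms is None else f"{size}: {ms:.0f} ms")
        rows[platform] = cells
    return rows


if __name__ == "__main__":
    for platform, cells in latency_rows(FPS).items():
        print(platform, "|", ", ".join(cells))
```

For example, 2.33 fps on the Raspberry Pi 3 at 640 × 480 corresponds to roughly 429 ms per frame.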

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gagliardi, A.; Saponara, S. AdViSED: Advanced Video SmokE Detection for Real-Time Measurements in Antifire Indoor and Outdoor Systems. Energies 2020, 13, 2098. https://doi.org/10.3390/en13082098
