Application of the Motion Capture System to Estimate the Accuracy of a Wheeled Mobile Robot Localization †

Abstract

1. Introduction

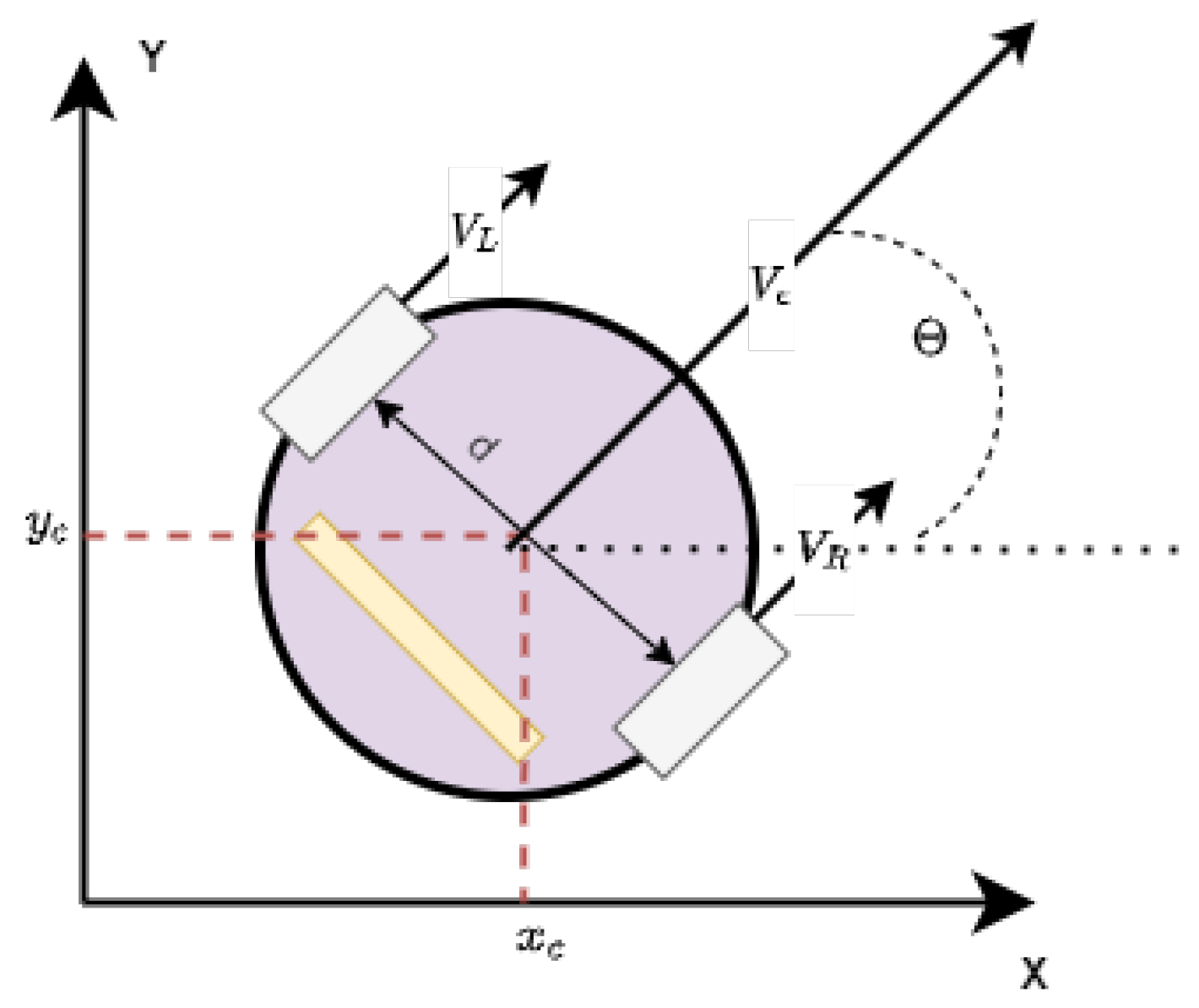

1.1. Wheeled Mobile Robots

1.2. Dead Reckoning and Pure Pursuit Controller
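Dead reckoning estimates the pose of a differential-drive robot by integrating the incremental wheel displacements measured by the encoders. Any slip or encoder error is therefore accumulated over time, and this drift is what a comparison with a motion capture reference can expose. The minimal Python sketch below shows one common form of the update (midpoint integration of the unicycle kinematics). The function `odometry_step`, its parameters and the numbers in the example are hypothetical and chosen for illustration only; this is not the odometry implementation of the robot used in the study.

```python
import math


def odometry_step(x, y, theta, d_left, d_right, wheel_base):
    """Advance a differential-drive pose estimate by one encoder sample.

    Hypothetical helper (illustration only):
    x, y        -- current position estimate (m)
    theta       -- current heading estimate (rad)
    d_left      -- distance travelled by the left wheel since the last sample (m)
    d_right     -- distance travelled by the right wheel since the last sample (m)
    wheel_base  -- distance between the two drive wheels (m)
    """
    d_center = 0.5 * (d_left + d_right)         # displacement of the axle midpoint
    d_theta = (d_right - d_left) / wheel_base   # heading change (rad)
    # Midpoint integration: move along the heading halfway through the step.
    theta_mid = theta + 0.5 * d_theta
    x += d_center * math.cos(theta_mid)
    y += d_center * math.sin(theta_mid)
    # Wrap the new heading to [-pi, pi].
    theta = math.atan2(math.sin(theta + d_theta), math.cos(theta + d_theta))
    return x, y, theta


if __name__ == "__main__":
    pose = (0.0, 0.0, 0.0)
    # Hypothetical encoder increments: the right wheel travels slightly farther,
    # so the estimate curves gently to the left.
    for _ in range(100):
        pose = odometry_step(*pose, d_left=0.0010, d_right=0.0012, wheel_base=0.25)
    print("x = %.4f m, y = %.4f m, theta = %.4f rad" % pose)
```

Because the heading estimate is integrated into the position, even a small error in the assumed wheel base or wheel radius produces a position error that grows with the distance travelled.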

1.3. Motion Capture Systems and Path Similarity Measures

2. Materials and Methods

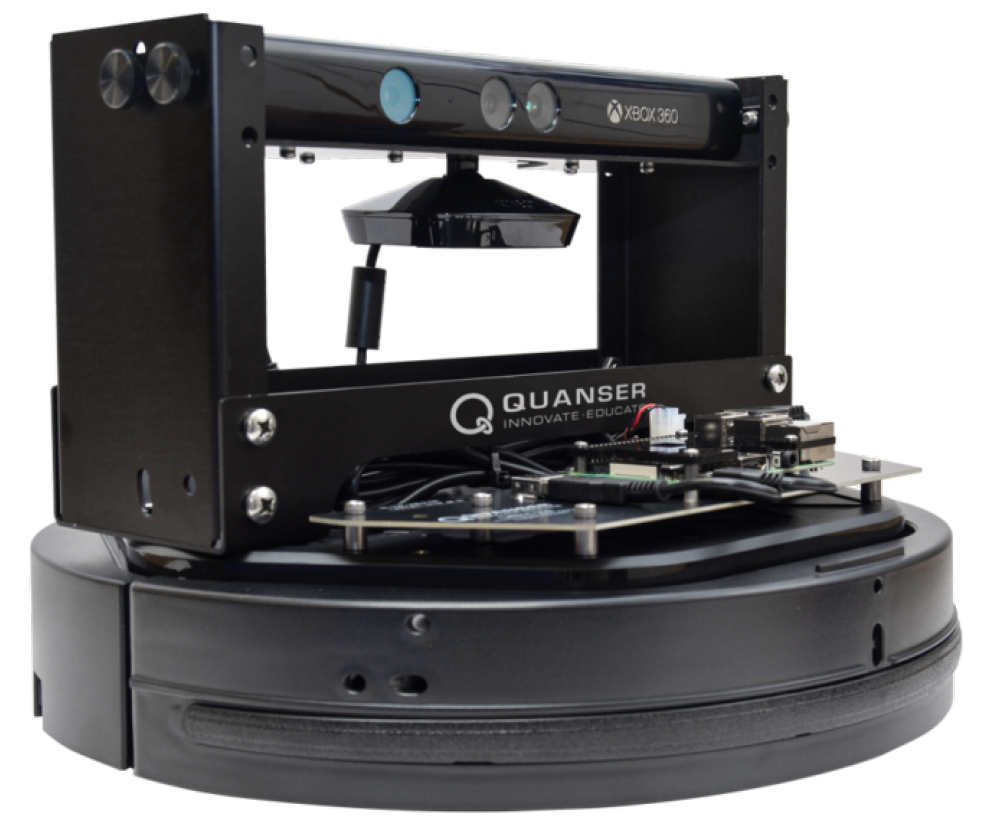

2.1. Test Stand for Evaluating the Control Algorithms for Mobile Wheeled Robots

2.2. Methodology of Research

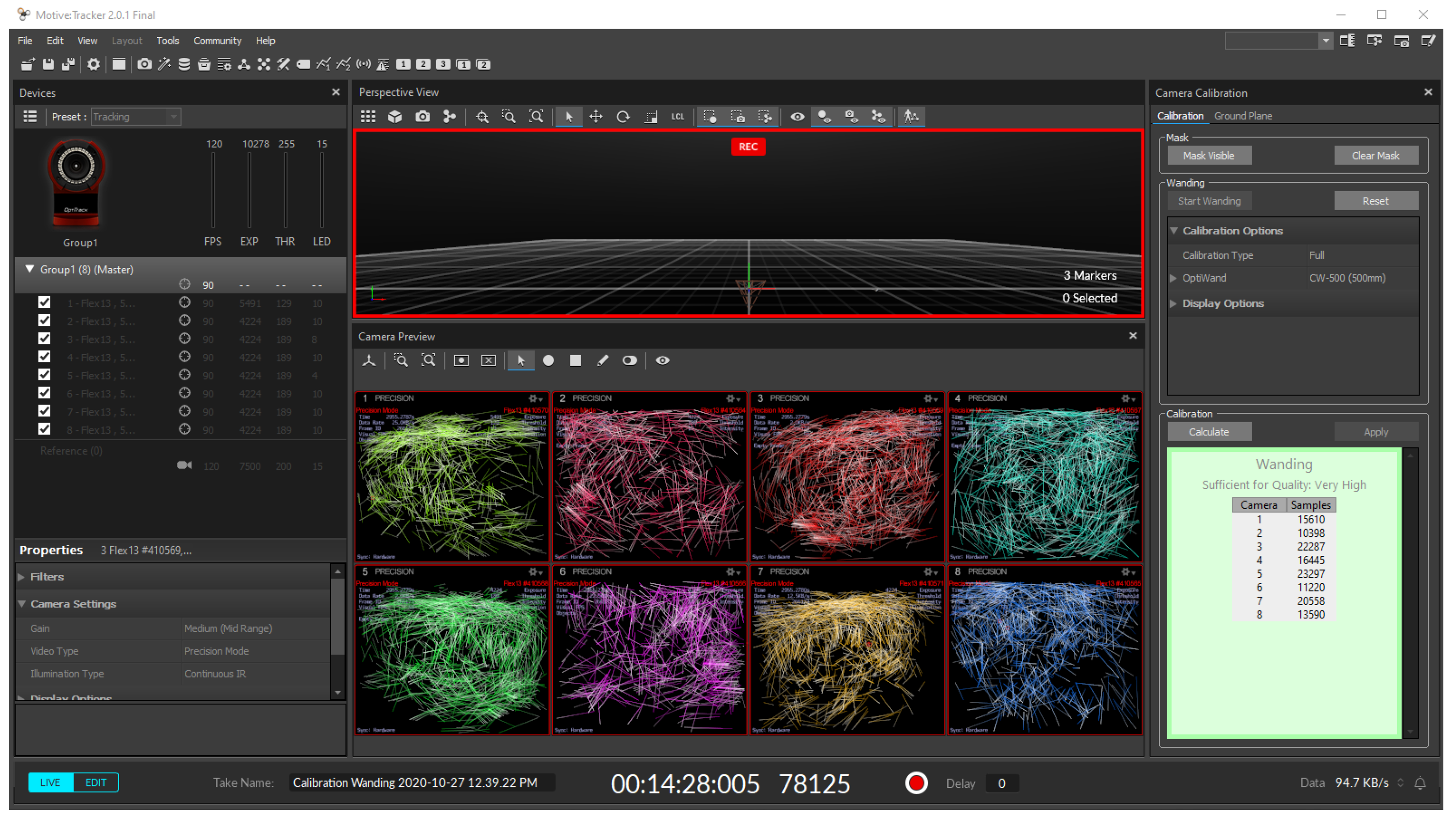

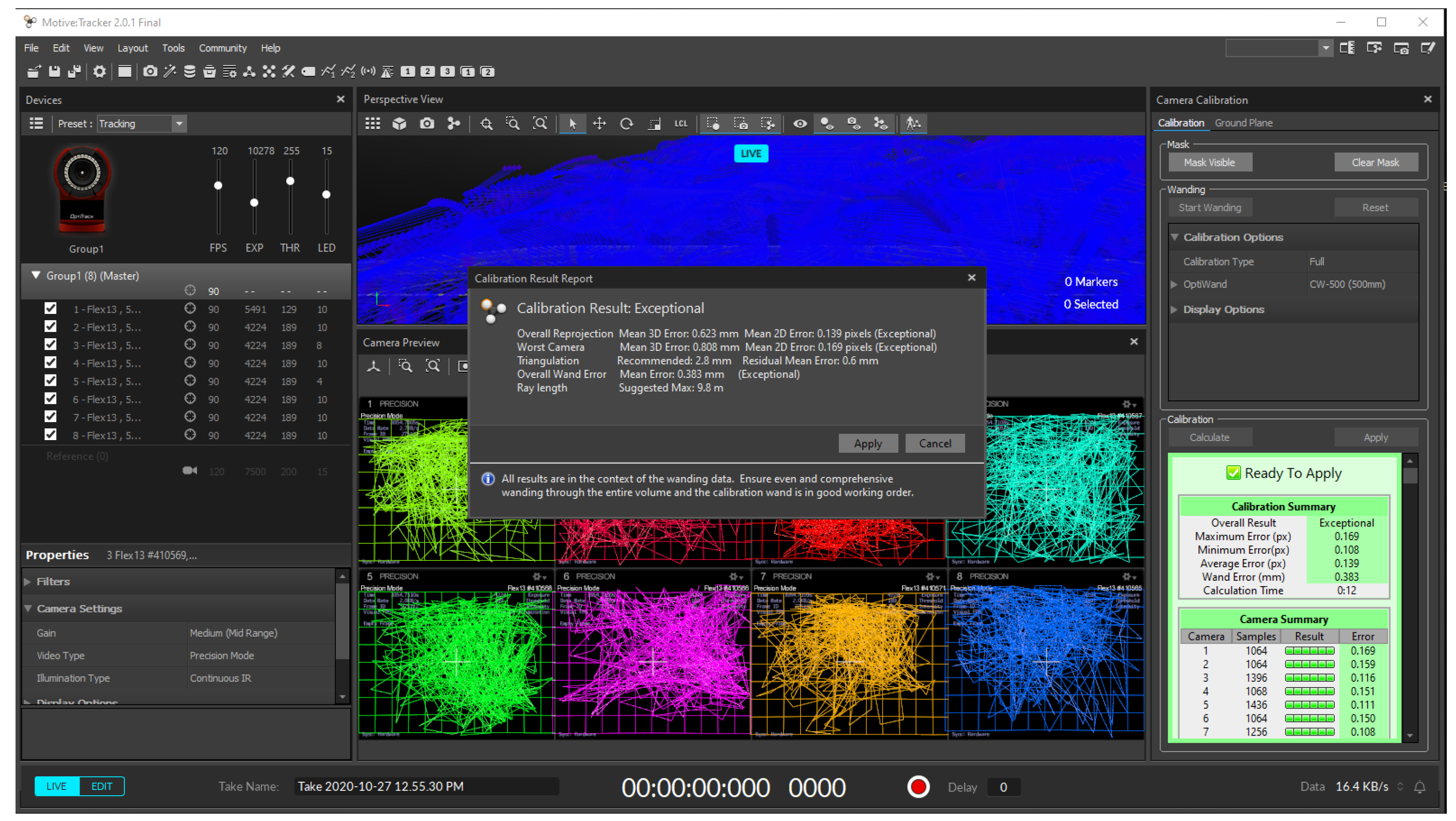

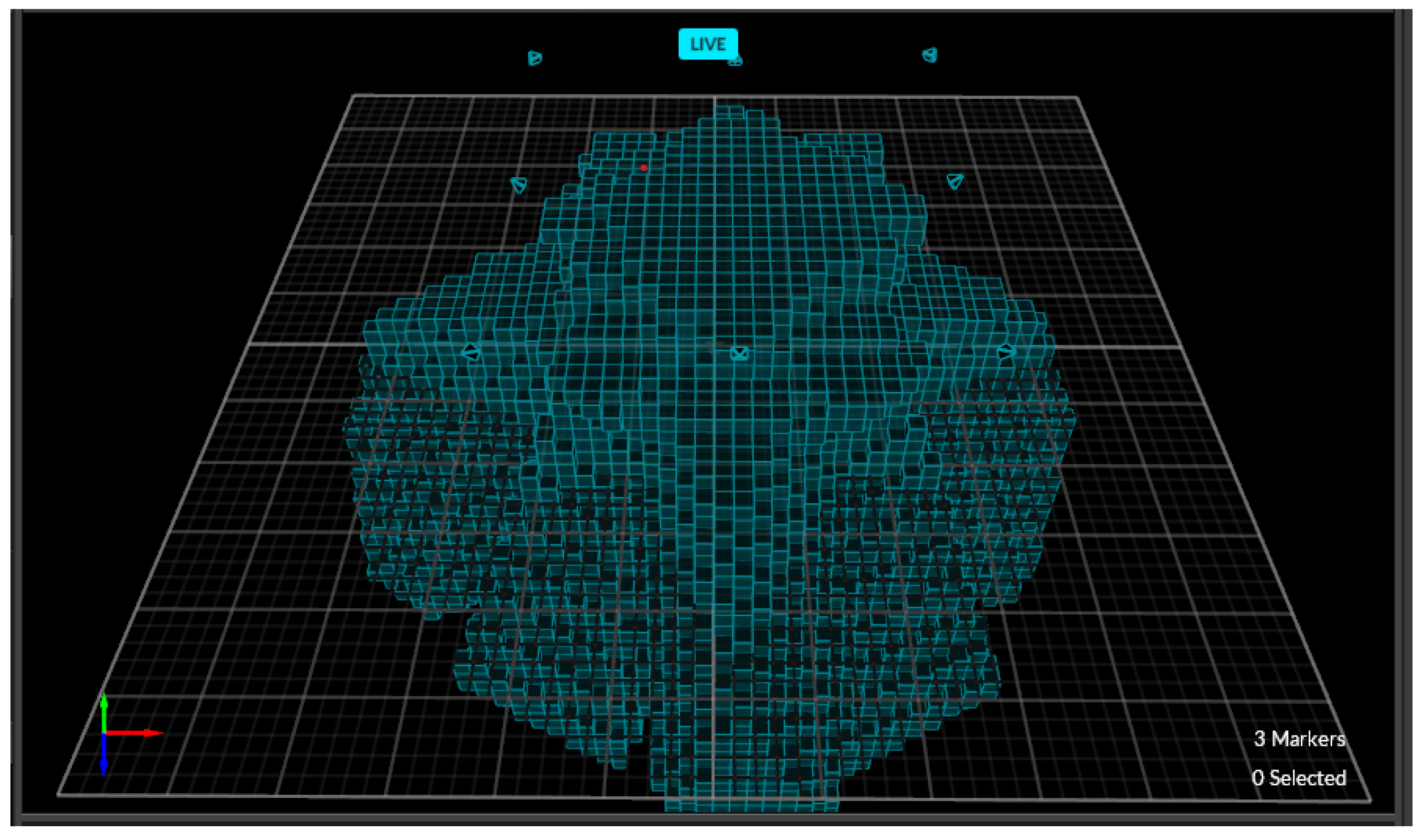

- Calibration of the OptiTRACK motion capture system;

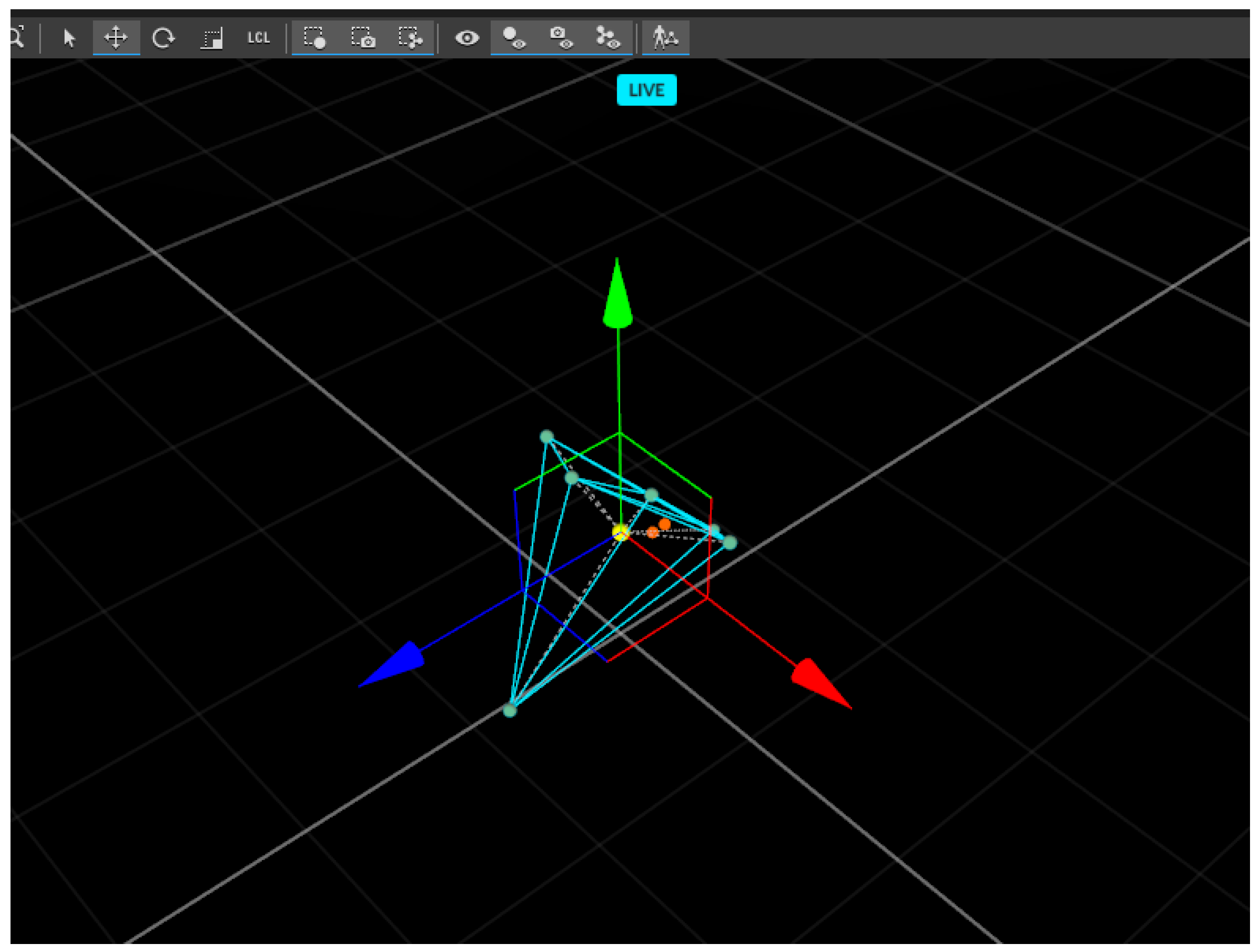

- Definition of the geometric model of the rigid body in Motive 2.0;

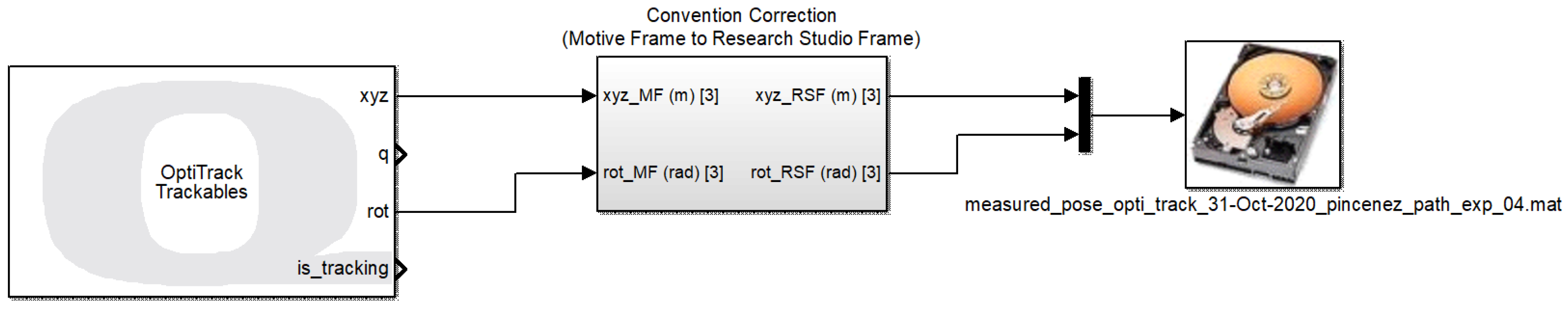

- Creation of a Simulink diagram that captures the robot’s motion with the OptiTRACK camera system;

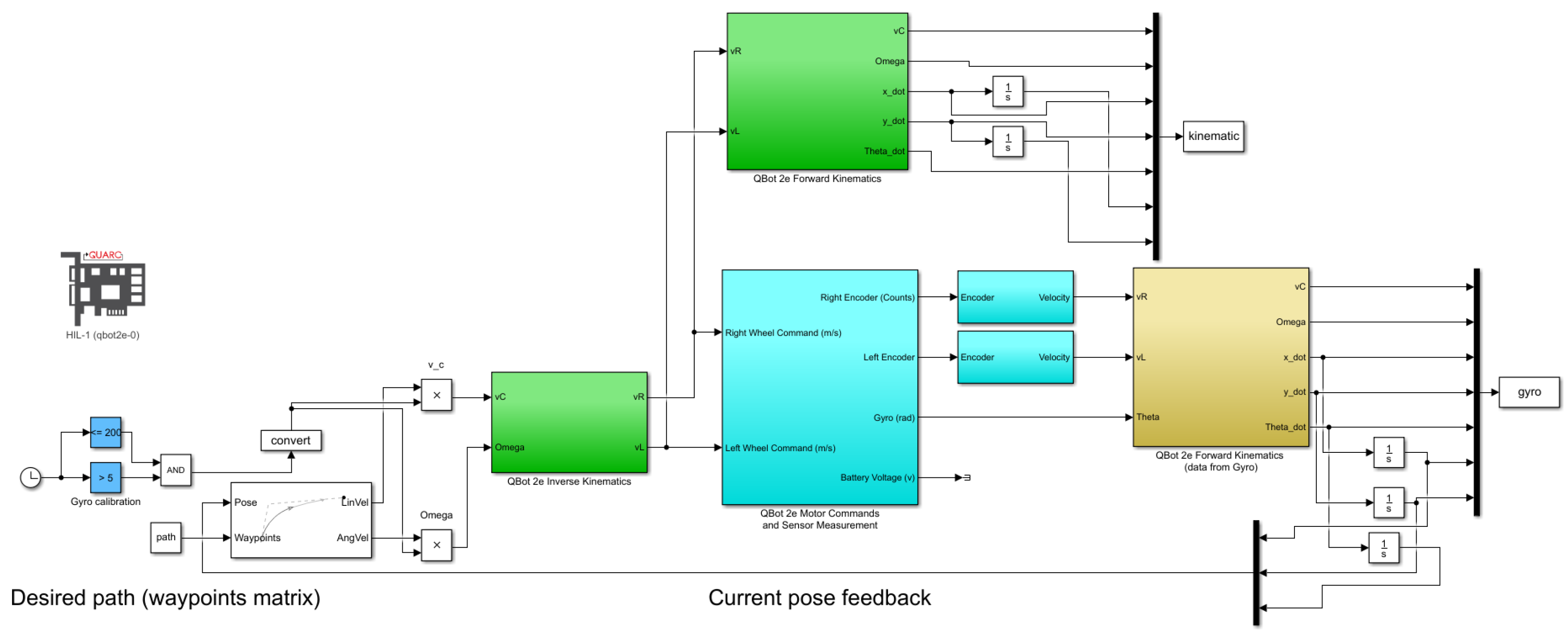

- Programming the robot’s movement according to the assumed path in the Matlab/Simulink environment;

- Compilation of the simulation model and its transmission to the target platform running the real-time target;

- Running the model on the target platform and performing the hardware-in-the-loop (HIL) simulation;

- Analysis of the HIL results, including determination of the Hausdorff distances for the recorded paths.

- Determining the relative position of the cameras inside the working space;

- Determining the position of the cameras relative to the ground (ground level calibration).
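Section 1.2 names the pure pursuit controller as the path-tracking method used when the robot's movement is programmed along the assumed path. The following is a minimal Python sketch of a single pure pursuit update, assuming the path is given as a list of waypoints, the linear velocity is constant and the angular velocity is saturated. The names `pure_pursuit_step`, `v`, `lookahead` and `max_omega` are hypothetical and are not taken from the Simulink model used in the study.

```python
import math


def pure_pursuit_step(pose, waypoints, v, lookahead, max_omega):
    """Return (v, omega) that steers a unicycle-like robot towards the path.

    pose      -- (x, y, theta) in the world frame (m, m, rad)
    waypoints -- non-empty sequence of (x, y) points of the reference path (m)
    v         -- constant linear velocity command (m/s)
    lookahead -- look-ahead distance (m)
    max_omega -- angular-velocity saturation limit (rad/s)
    """
    x, y, theta = pose
    # Start the search at the waypoint closest to the robot.
    nearest = min(range(len(waypoints)),
                  key=lambda i: math.hypot(waypoints[i][0] - x, waypoints[i][1] - y))
    # Goal point: first waypoint ahead that is at least `lookahead` away (else the last one).
    goal = waypoints[-1]
    for px, py in waypoints[nearest:]:
        if math.hypot(px - x, py - y) >= lookahead:
            goal = (px, py)
            break
    # Lateral offset of the goal point in the robot frame.
    dx, dy = goal[0] - x, goal[1] - y
    local_y = -math.sin(theta) * dx + math.cos(theta) * dy
    dist_sq = dx * dx + dy * dy
    if dist_sq < 1e-12:  # robot is already at the goal point
        return 0.0, 0.0
    # Curvature of the circular arc through the robot and the goal point.
    curvature = 2.0 * local_y / dist_sq
    omega = max(-max_omega, min(max_omega, v * curvature))
    return v, omega
```

A shorter look-ahead distance makes the robot follow the path more tightly but tends to cause oscillation, while a longer one gives smoother motion at the cost of cutting corners.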

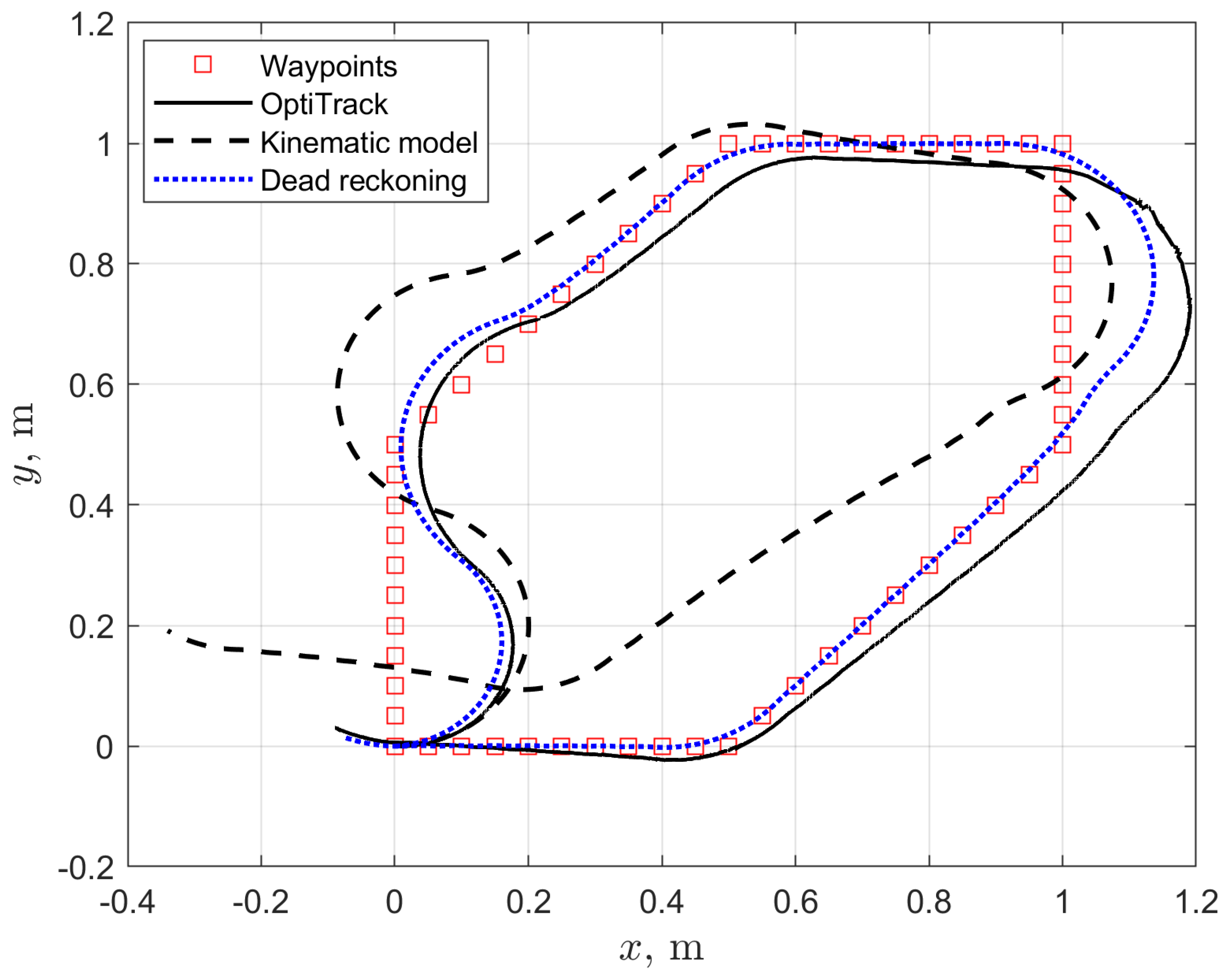

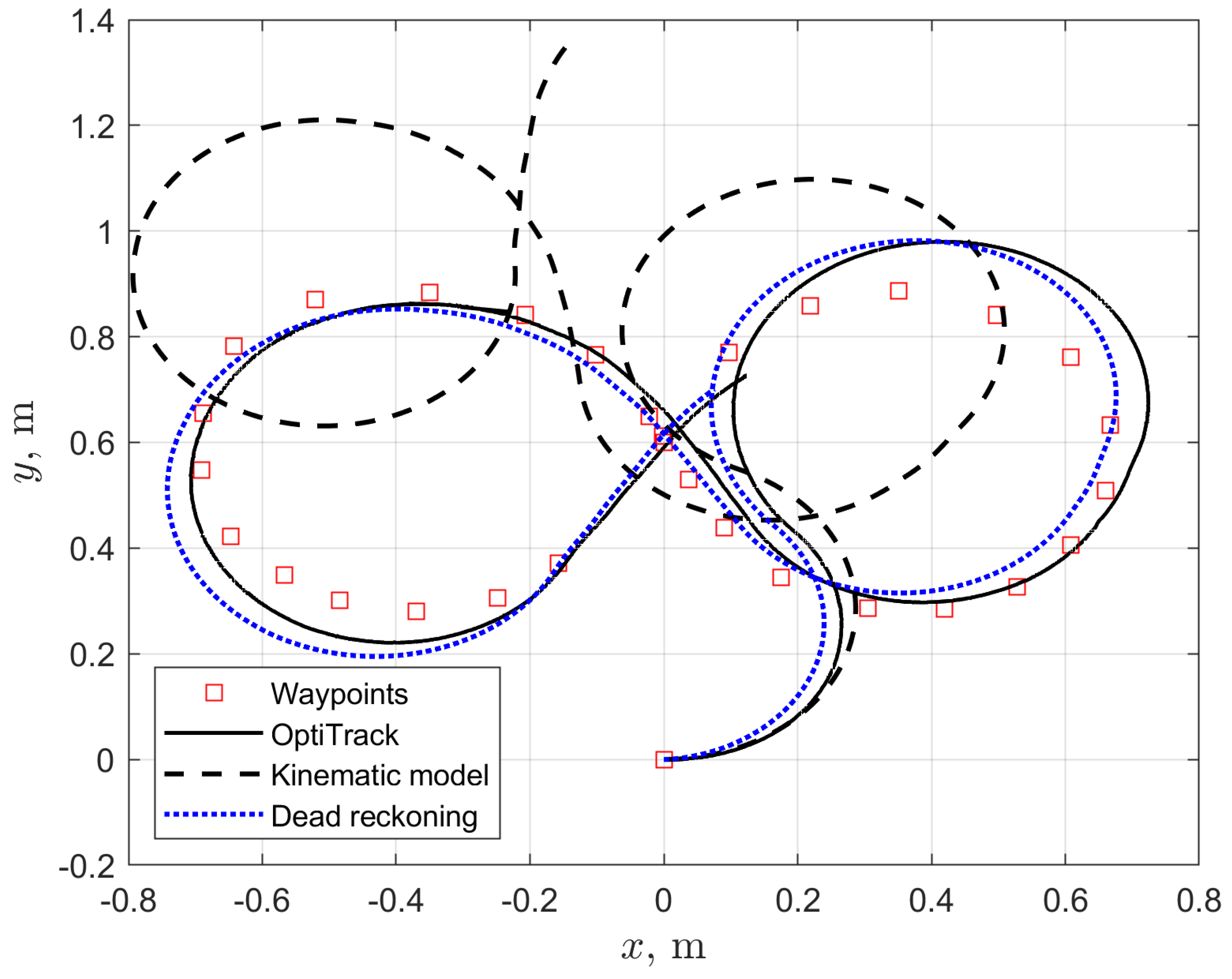

- The Hausdorff distance between the path determined by the odometric localization and the reference path recorded by the OptiTRACK system

- The Hausdorff distance between the path determined on the basis of the kinematics model and the reference path recorded by the OptiTRACK system
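The Hausdorff distance between two paths is the larger of the two directed distances: for every point of one path, find the distance to the closest point of the other path, and keep the worst case. The minimal Python sketch below evaluates this definition by brute force for paths sampled as (x, y) points; it is O(nm) and only illustrates the measure, since the algorithm used in the study is not stated here (faster implementations exist, for example `scipy.spatial.distance.directed_hausdorff`). The function names and sample values are hypothetical.

```python
import math


def directed_distance(a, b):
    """Largest distance from a point of path `a` to its nearest point of path `b`."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff_distance(a, b):
    """Symmetric Hausdorff distance between two paths given as (x, y) samples (m)."""
    return max(directed_distance(a, b), directed_distance(b, a))


if __name__ == "__main__":
    # Hypothetical samples: a straight reference path and a slightly offset estimate.
    reference = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0)]
    estimate = [(0.0, 0.02), (0.5, 0.05), (1.0, 0.03)]
    print(hausdorff_distance(reference, estimate))
```

Because only the recorded samples are compared, the result depends on the sampling density; measuring the distance to the line segments between samples would be more exact for sparsely sampled paths.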

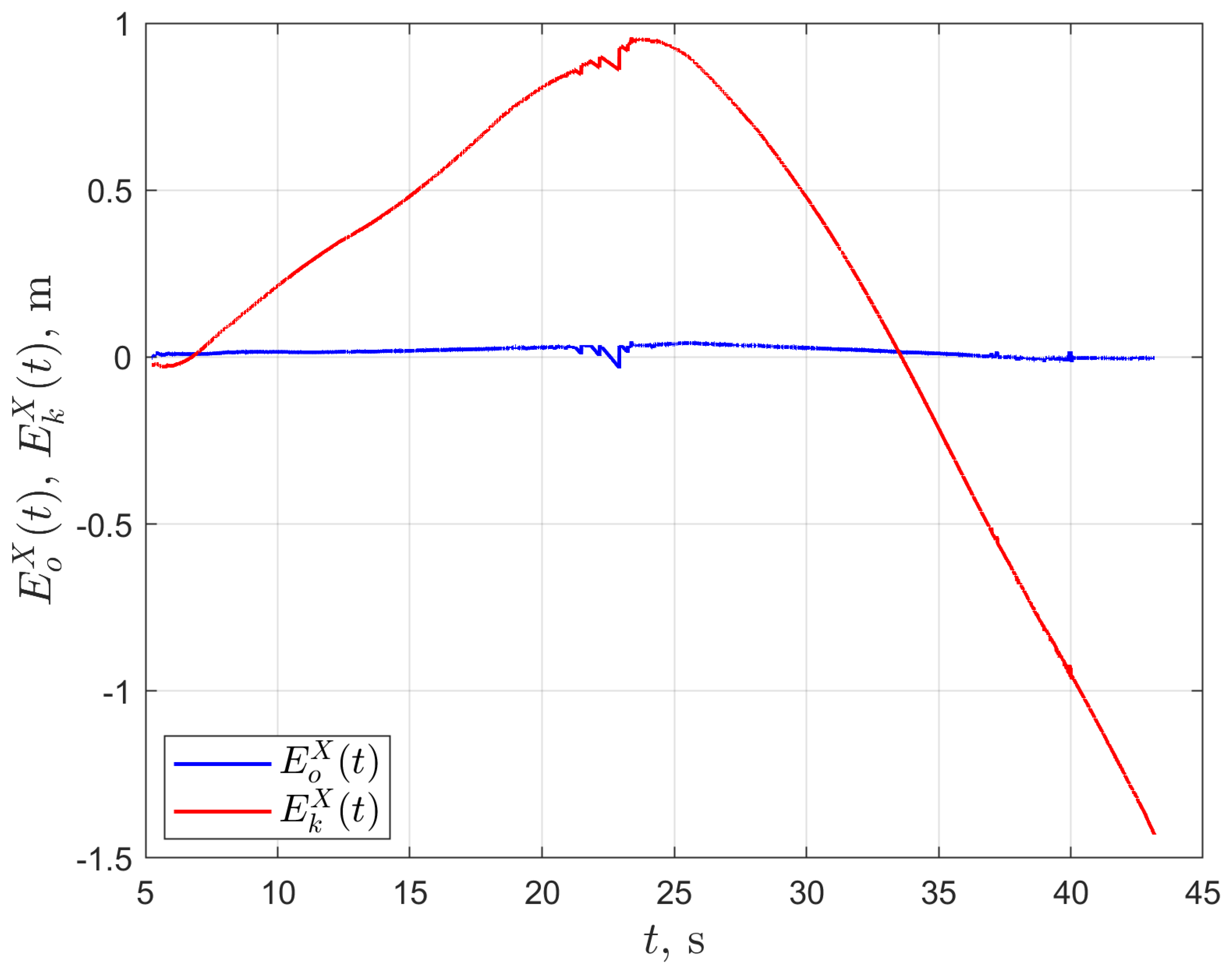

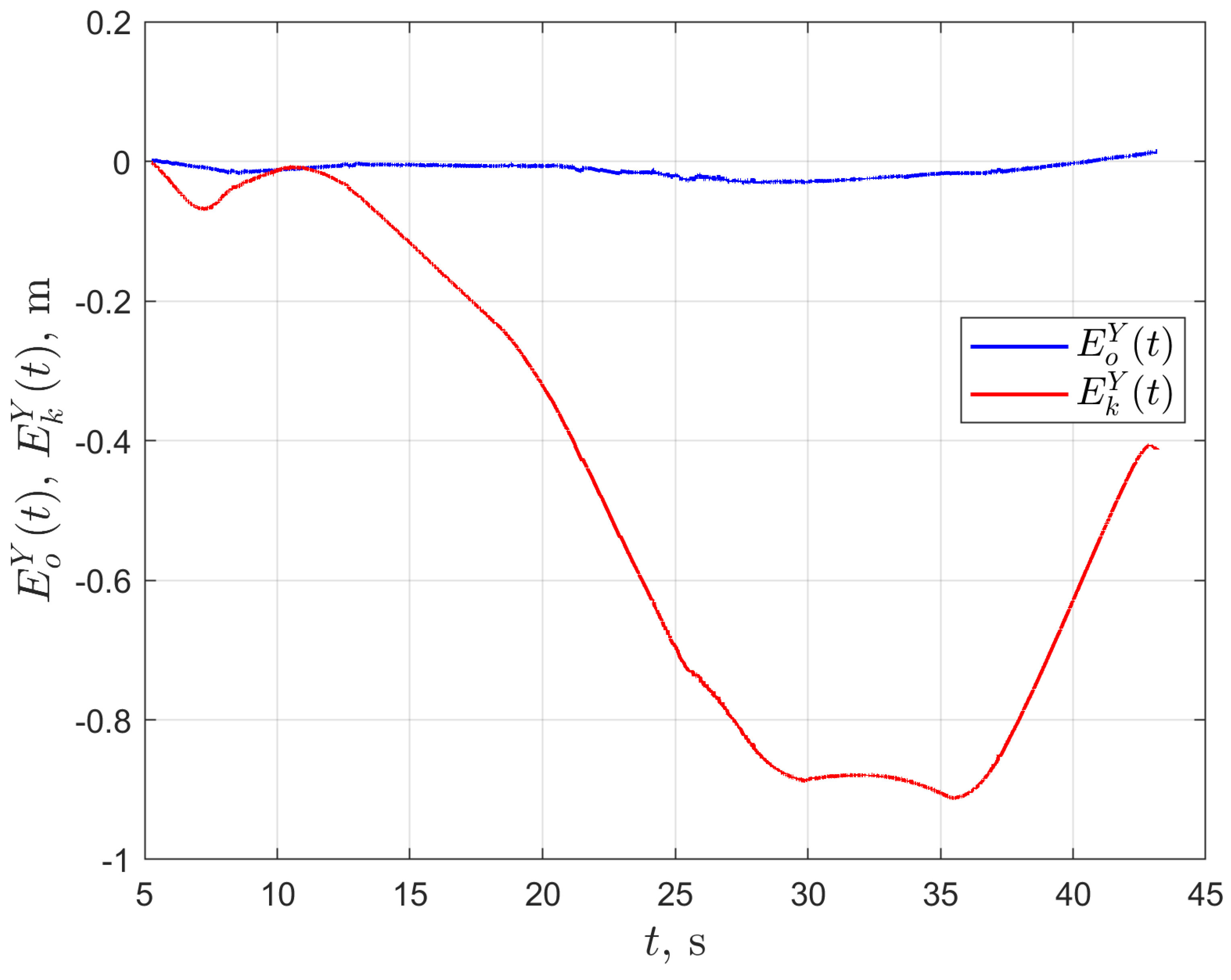

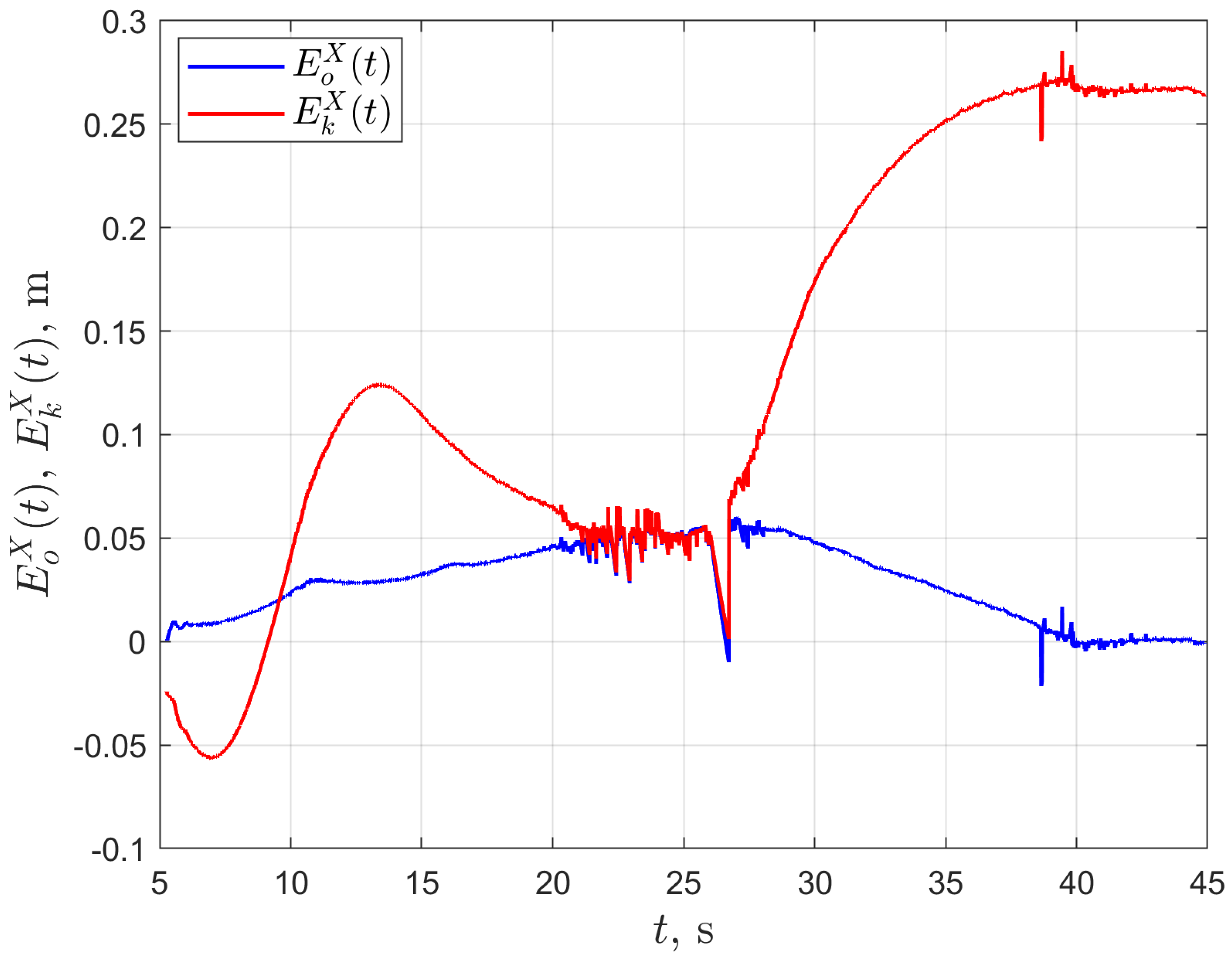

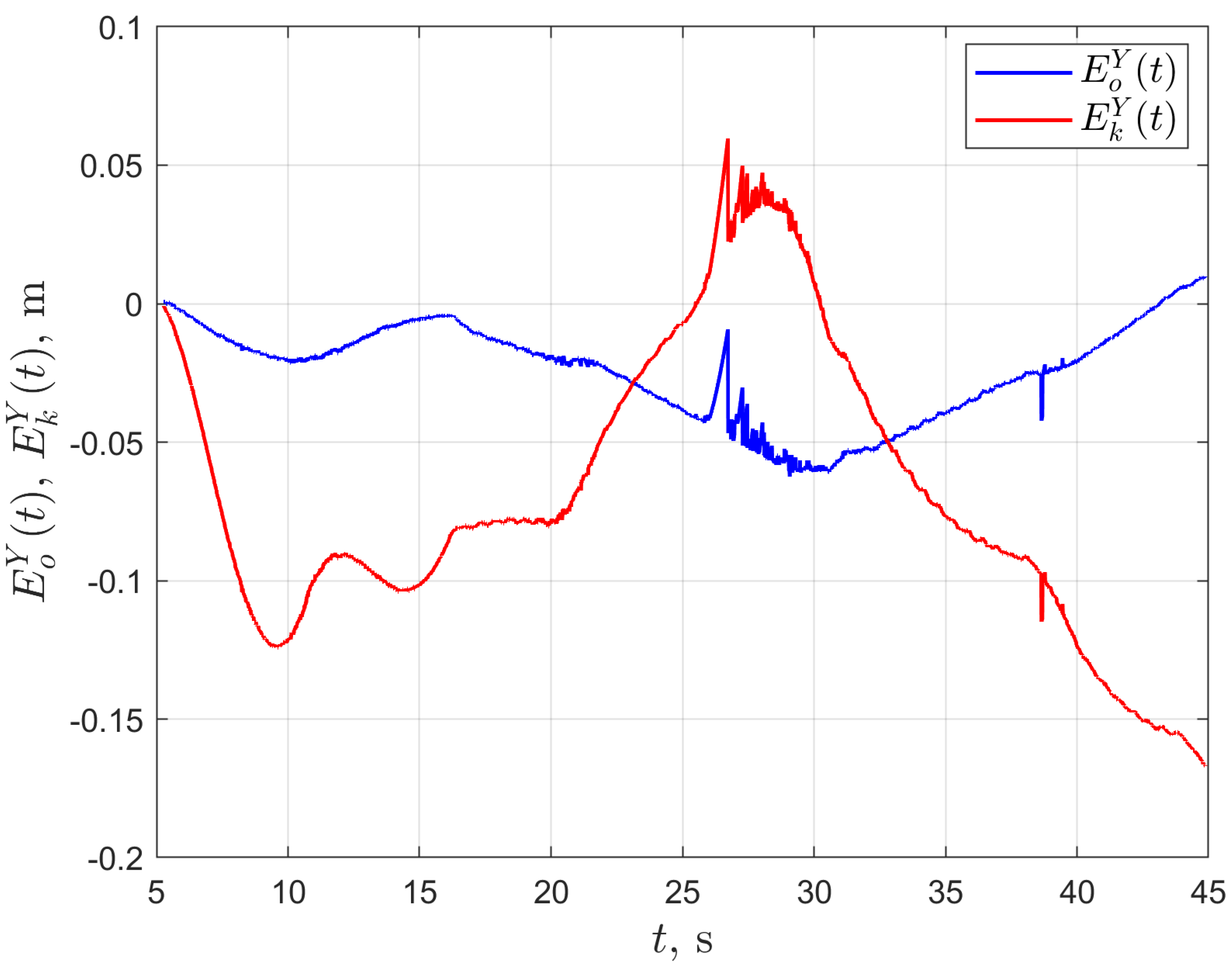

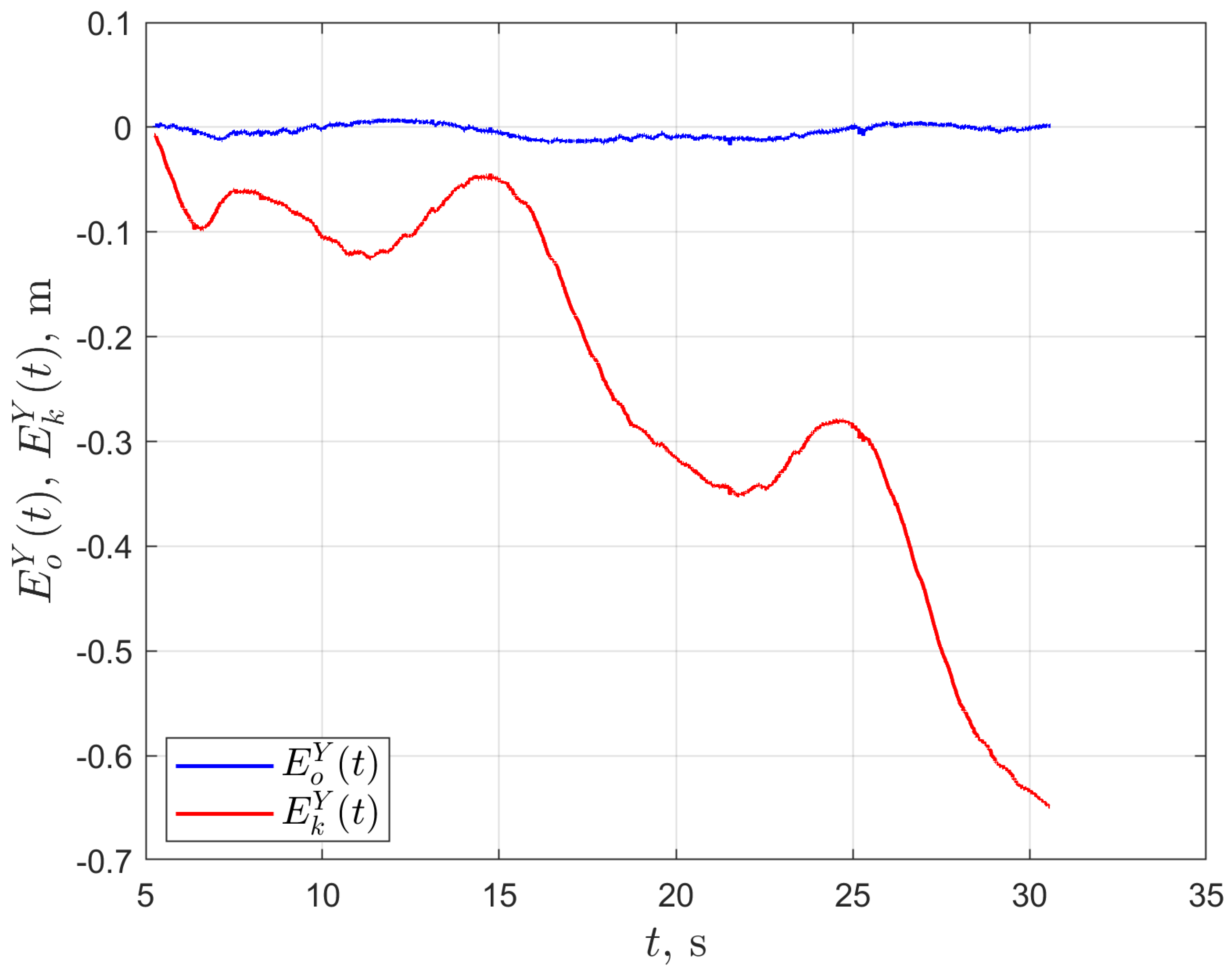

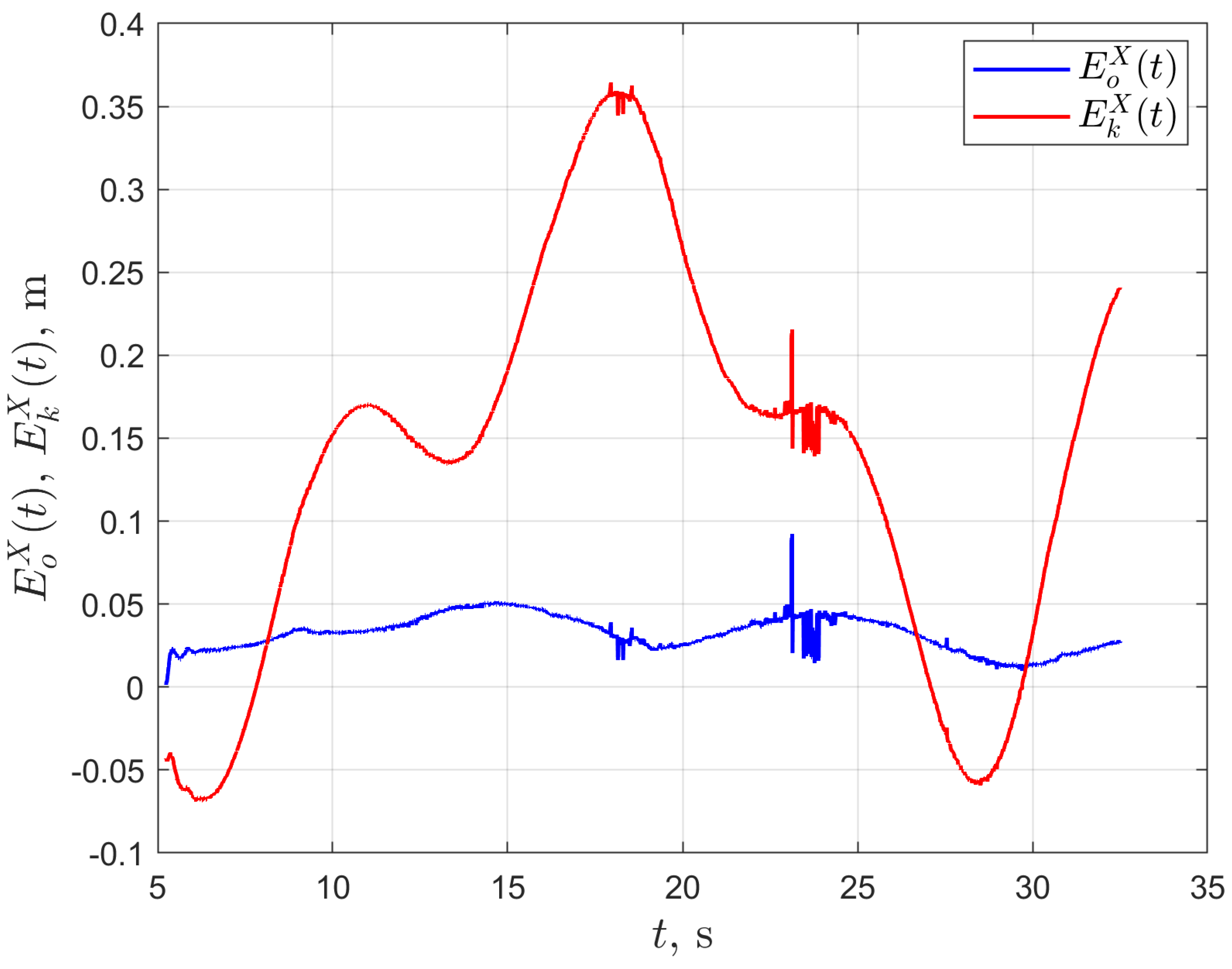

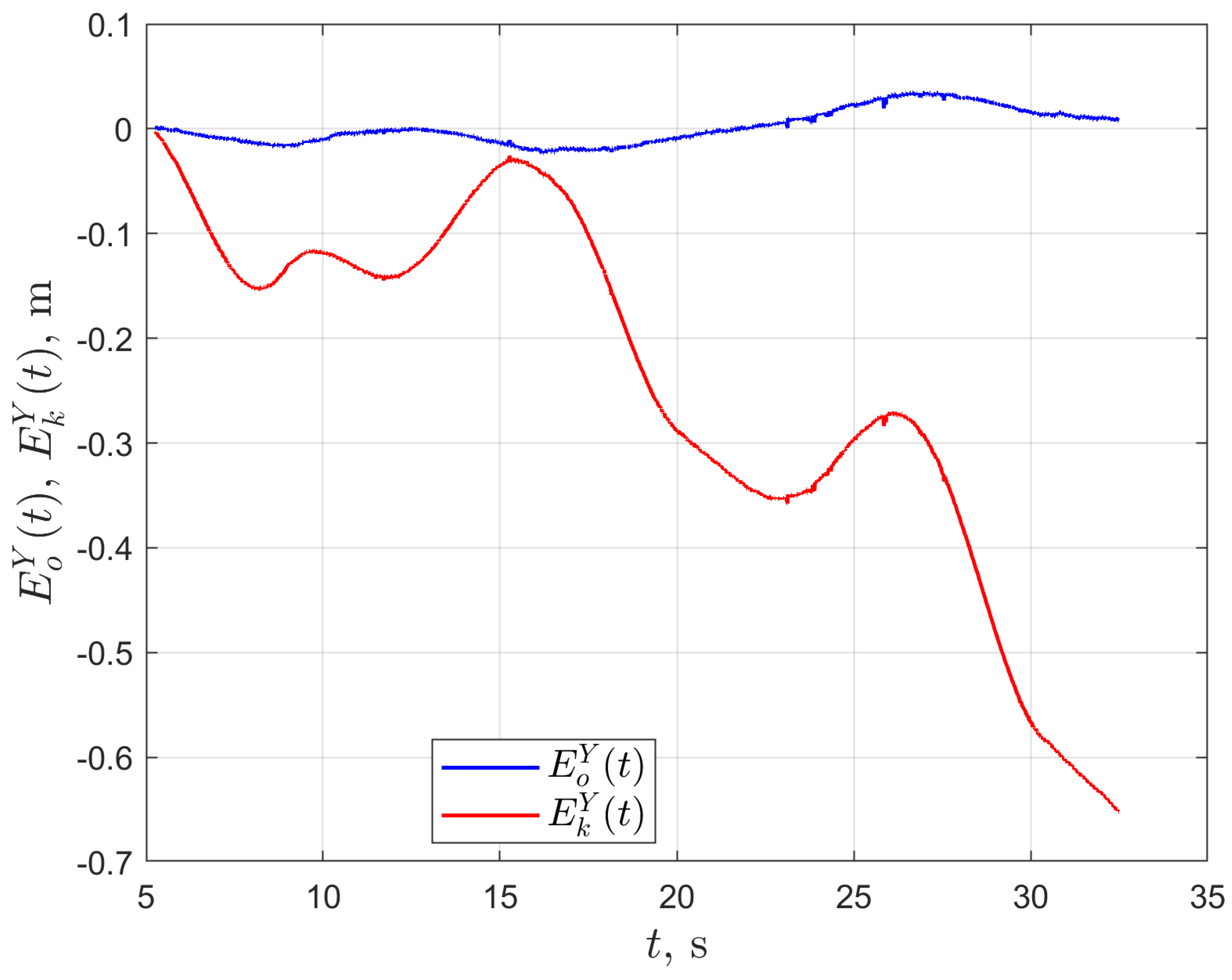

- Odometric localization errors for the x and y coordinates, defined as $e_{x,o}(t) = x_{OT}(t) - x_{o}(t)$ and $e_{y,o}(t) = y_{OT}(t) - y_{o}(t)$, where $x_{OT}(t)$ (m) and $y_{OT}(t)$ (m) are the x and y coordinates of the position of the centre of the rigid body representing the robot at time t, obtained from the OptiTRACK system, and $x_{o}(t)$ (m) and $y_{o}(t)$ (m) are the x and y coordinates of the position of the centre of the rigid body representing the robot at time t, obtained from odometric data;

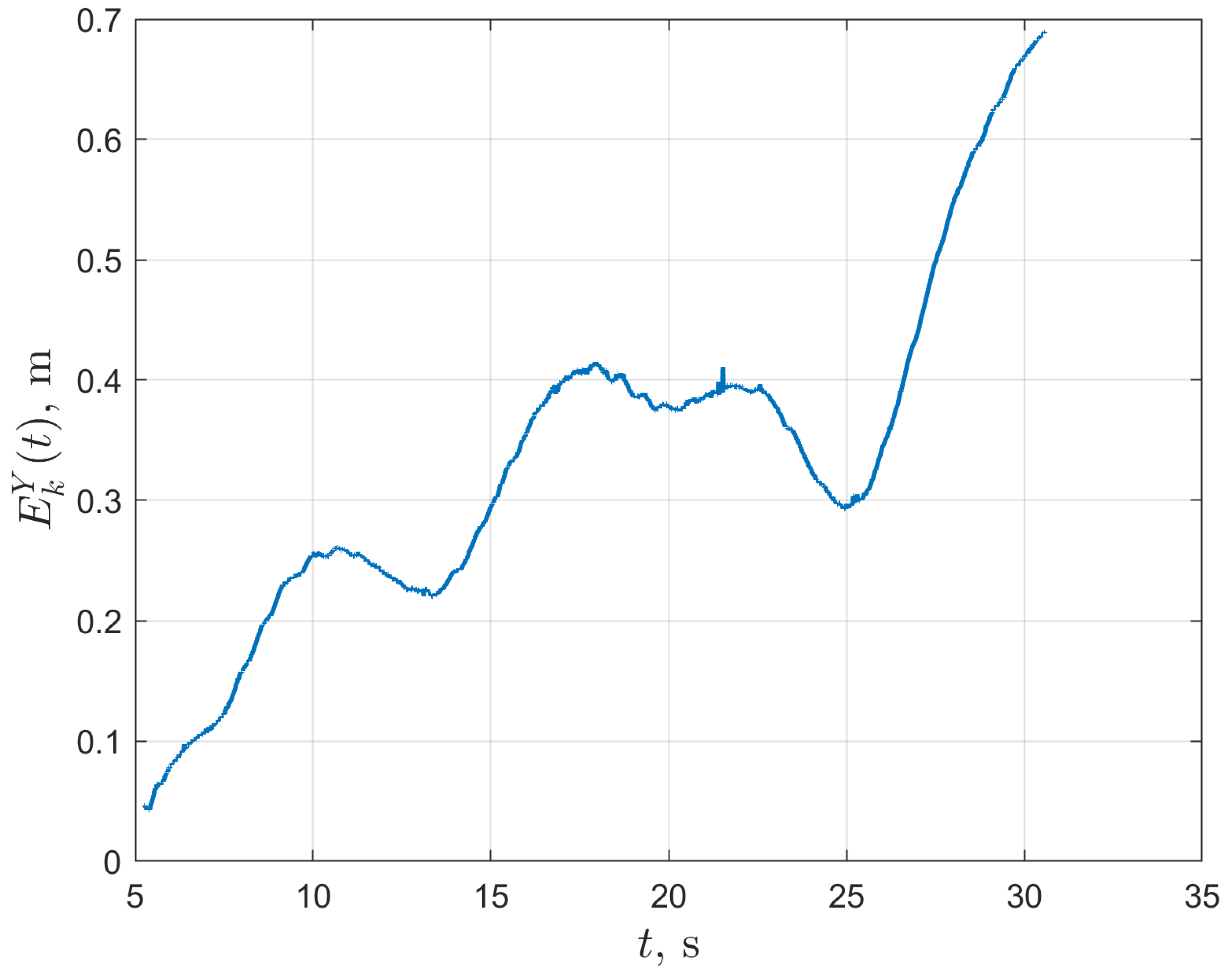

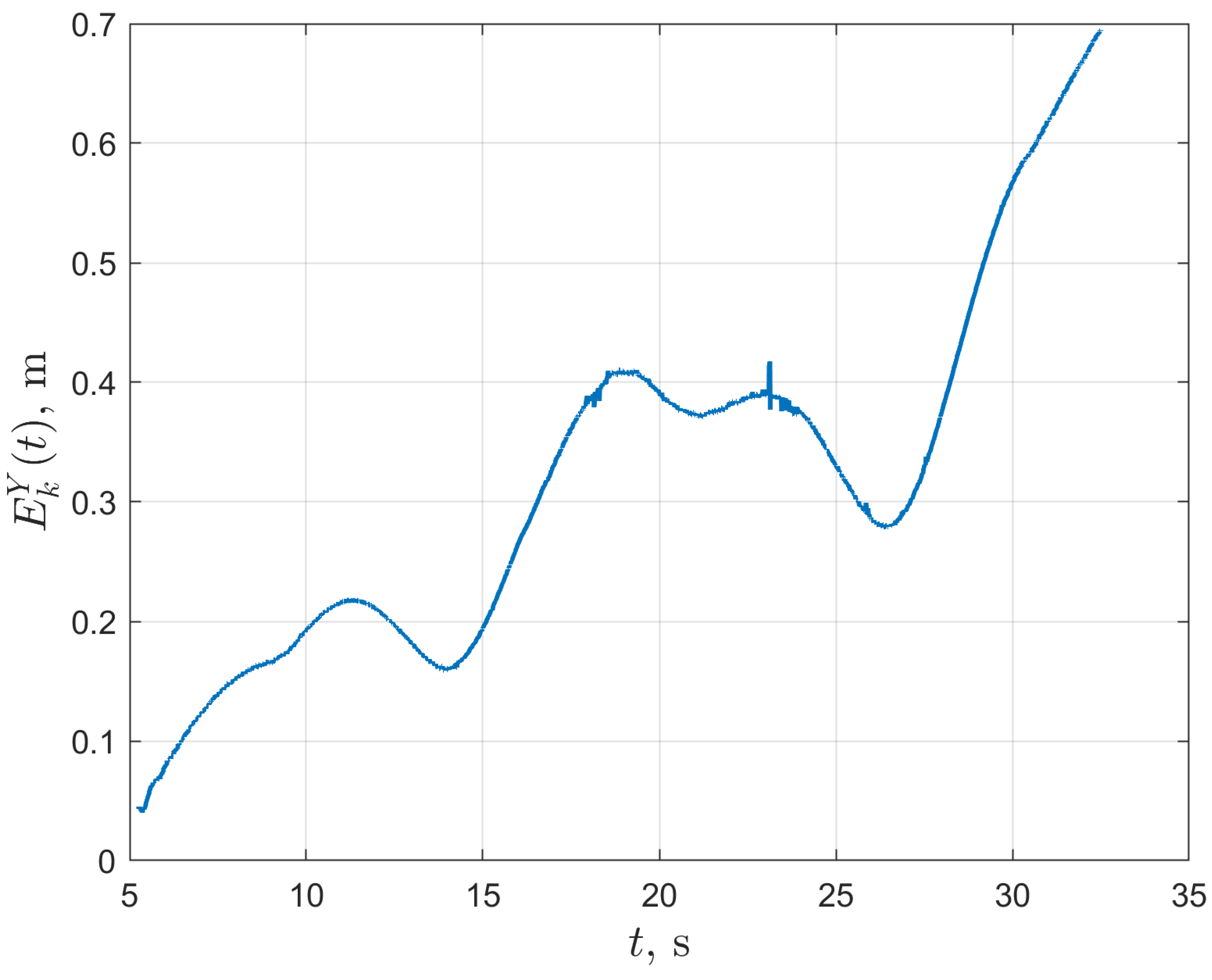

- Localization errors based on the kinematics model for the x and y coordinates, defined as $e_{x,k}(t) = x_{OT}(t) - x_{k}(t)$ and $e_{y,k}(t) = y_{OT}(t) - y_{k}(t)$, where $x_{OT}(t)$ (m) and $y_{OT}(t)$ (m) are the x and y coordinates of the position of the centre of the rigid body representing the robot at time t, obtained from the OptiTRACK system, and $x_{k}(t)$ (m) and $y_{k}(t)$ (m) are the x and y coordinates of the position of the centre of the rigid body representing the robot at time t, obtained from the kinematics model;

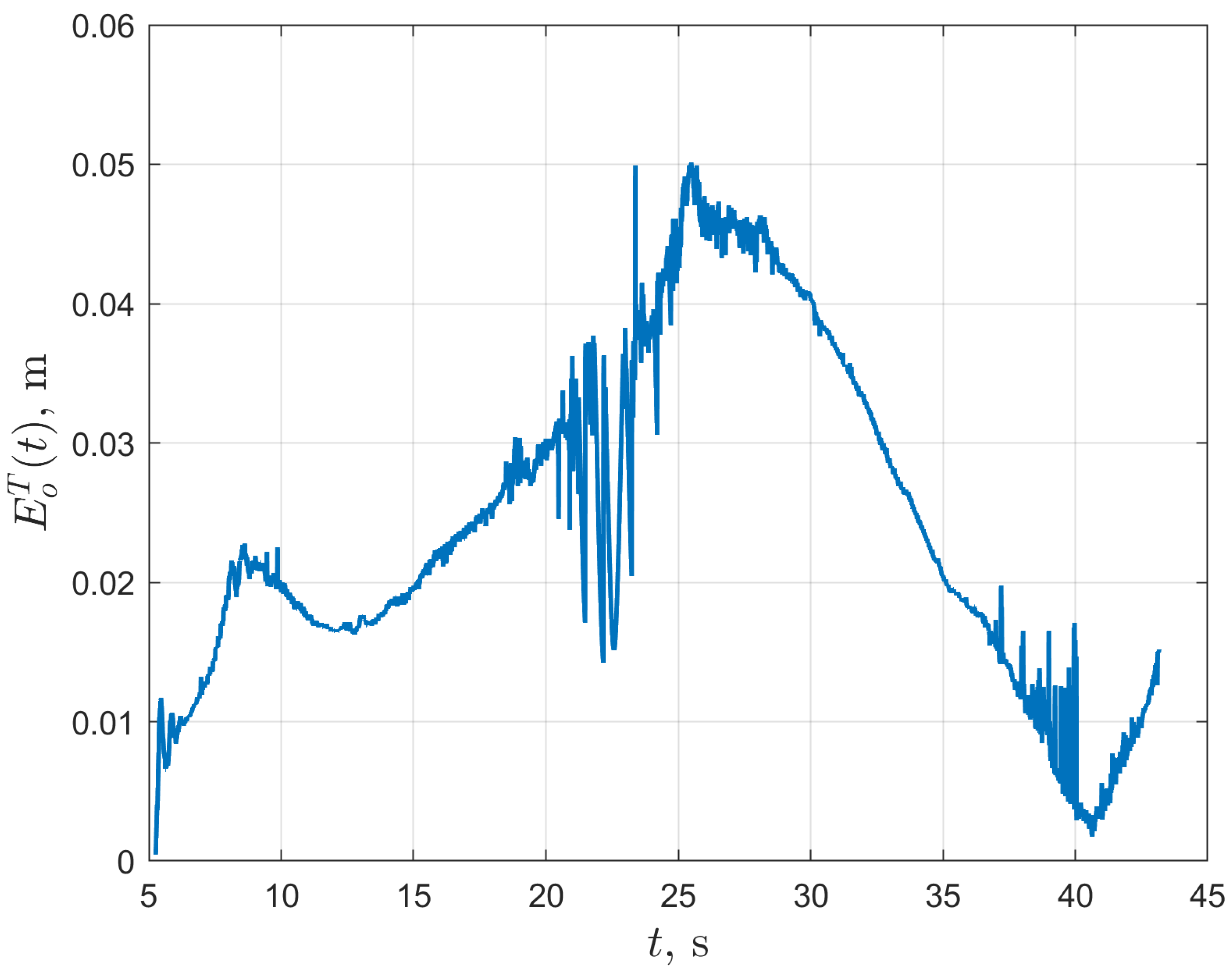

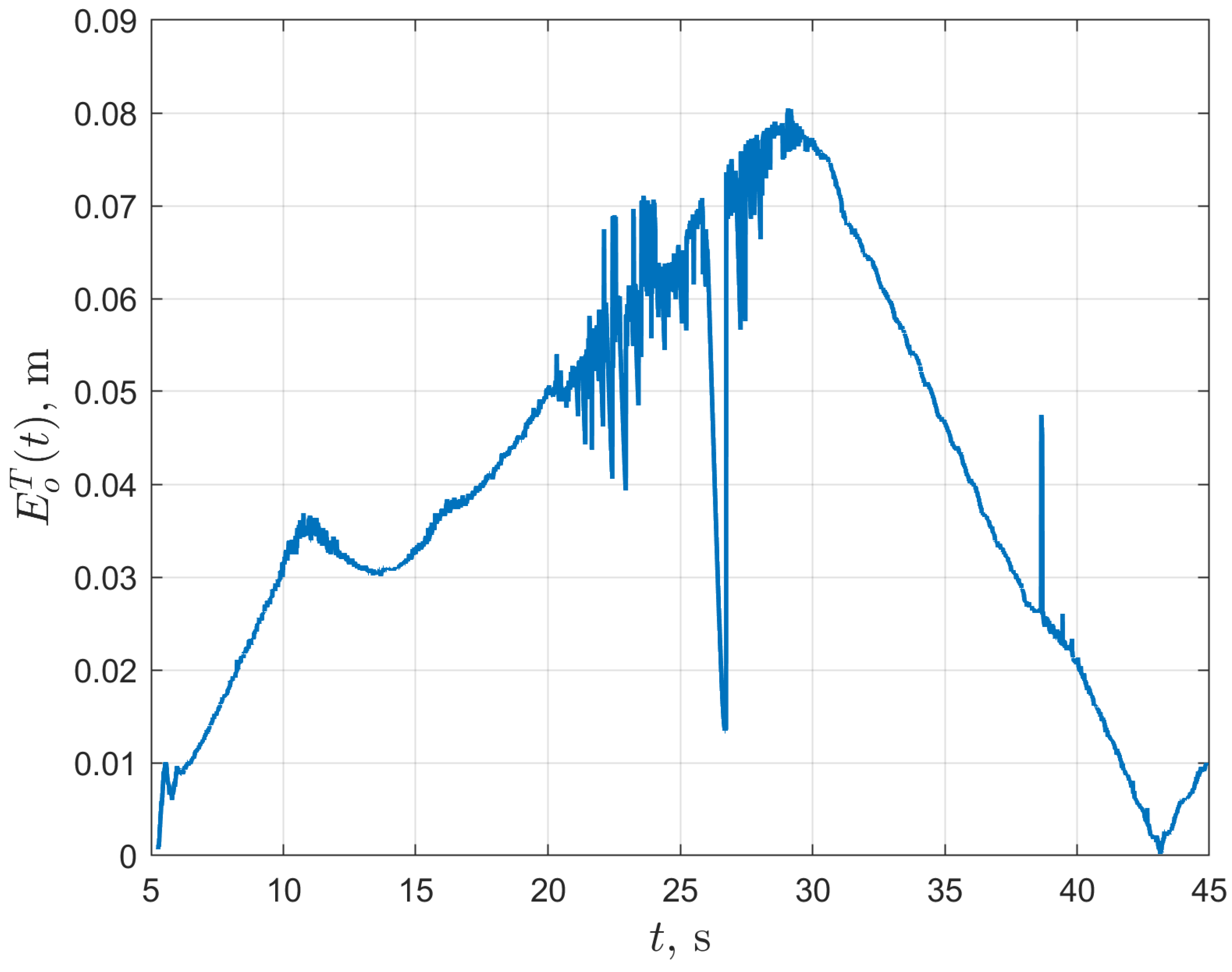

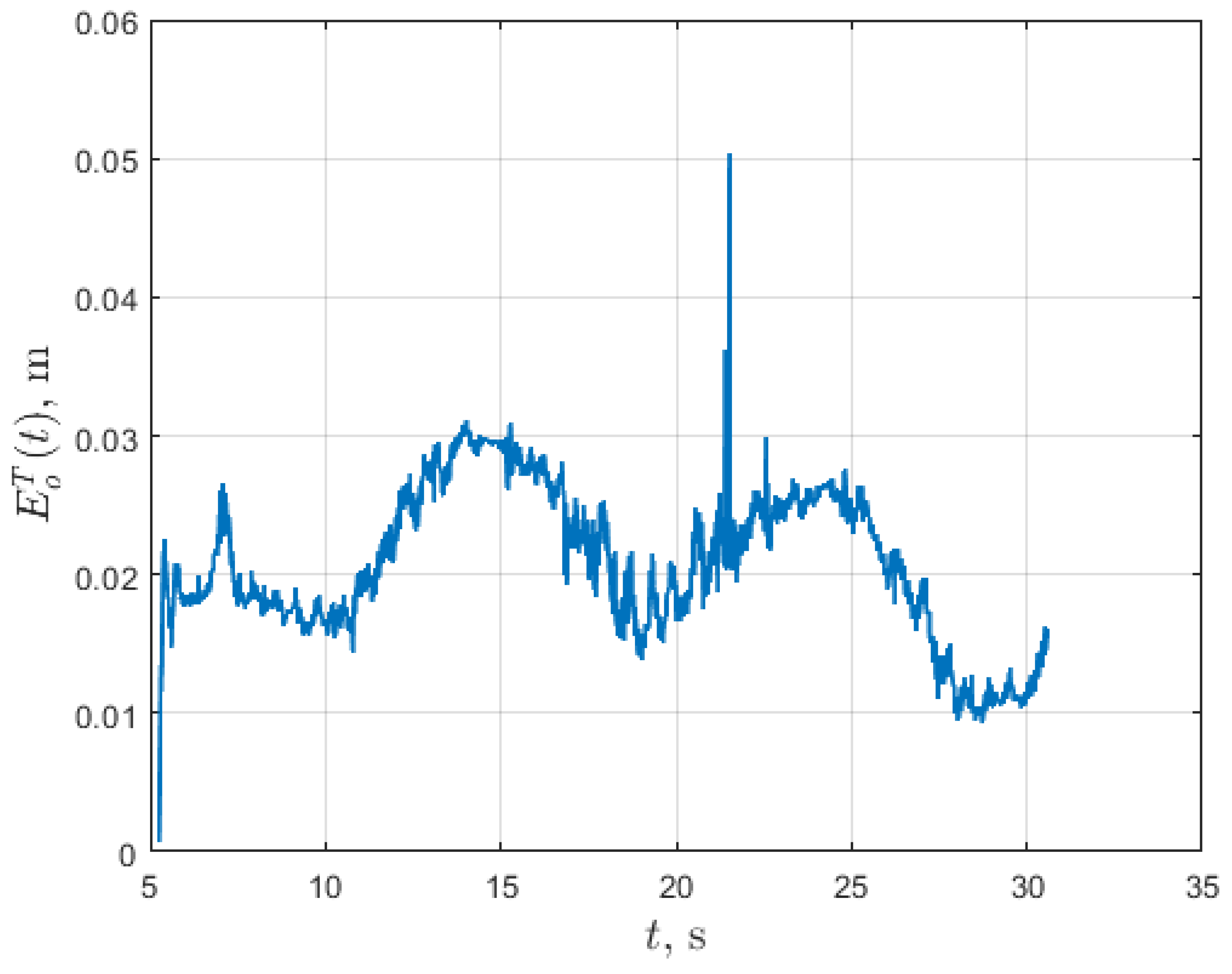

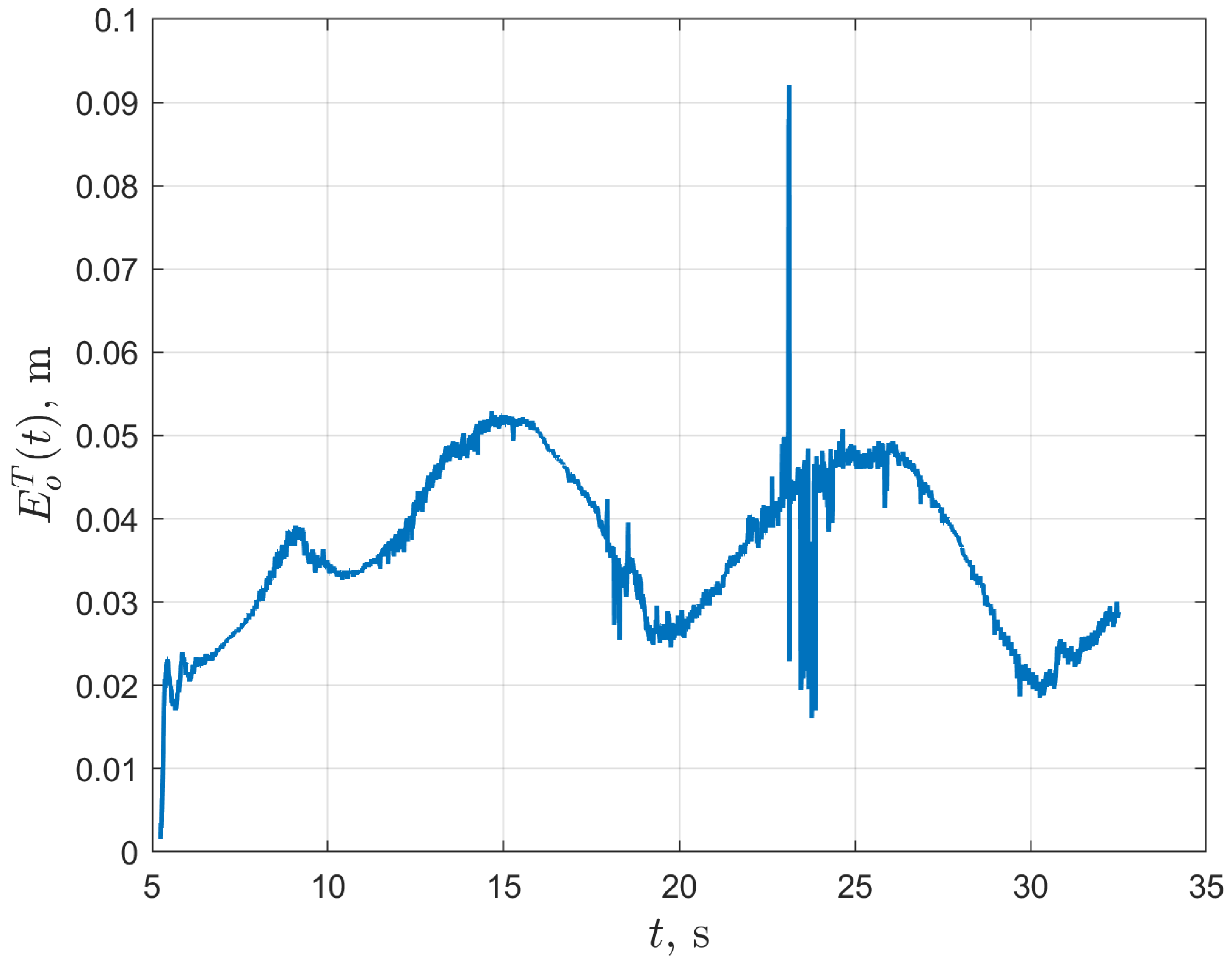

- The total odometric localization error, defined by the following equation:

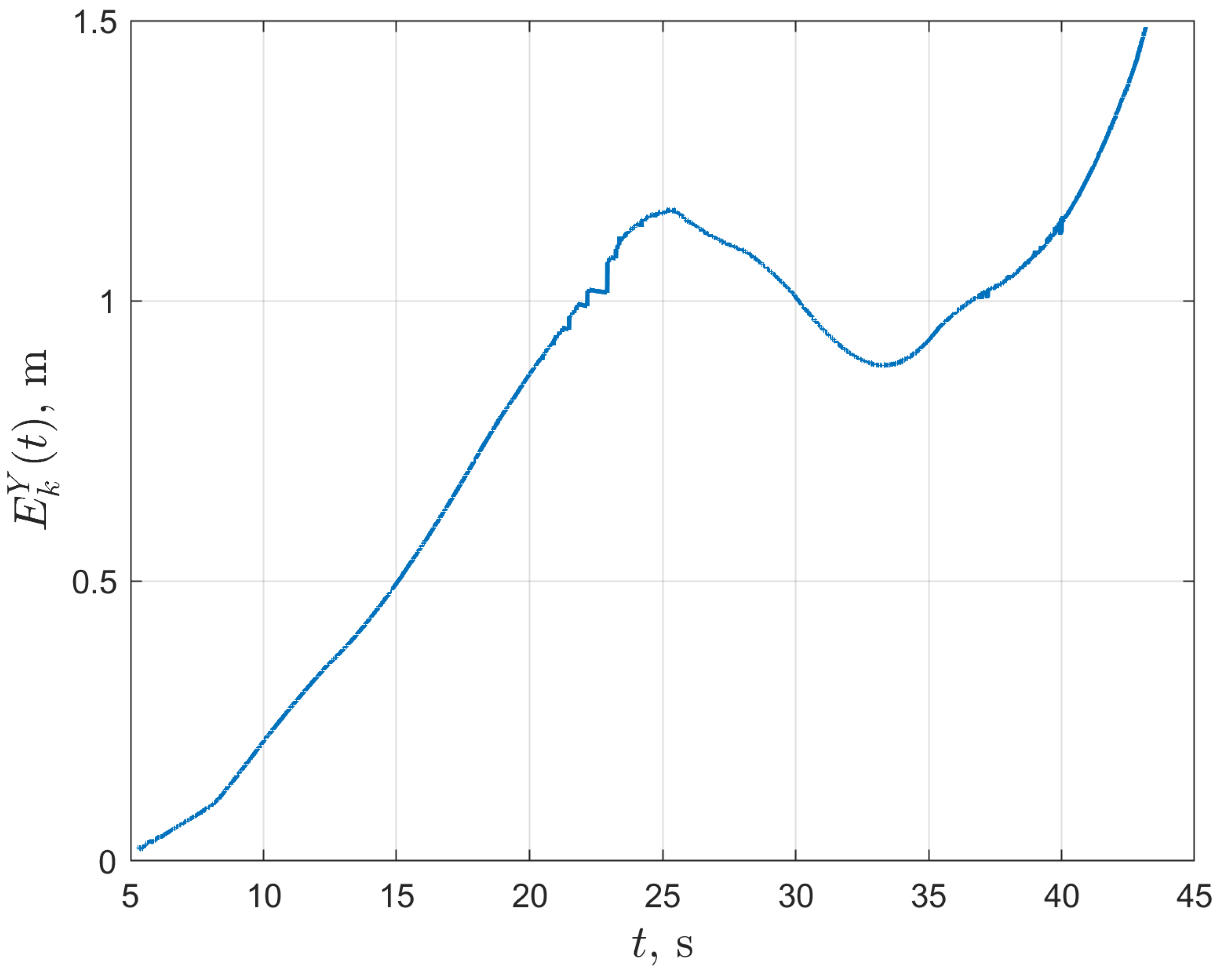

- The total localization error based on the kinematics model, defined by the following equation:
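Once the OptiTRACK reference and an estimate (odometric or kinematics-model) are available at the same time instants, the coordinate errors and a total error can be computed sample by sample. The minimal Python sketch below assumes that both signals are already on a common time base, that each coordinate error is the reference minus the estimate, and that the total error is the Euclidean norm of the two coordinate errors. The exact form of the total-error equations is not reproduced in this text, so these choices are assumptions, and the function name `localization_errors` is hypothetical.

```python
import math


def localization_errors(ref_xy, est_xy):
    """Per-sample localization errors between a reference and an estimated path.

    ref_xy -- (x, y) positions from the reference system, e.g. OptiTRACK (m)
    est_xy -- (x, y) positions from odometry or the kinematics model (m),
              sampled at the same time instants as ref_xy
    Returns lists (e_x, e_y, e_total); e_total is the Euclidean norm (assumed).
    """
    if len(ref_xy) != len(est_xy):
        raise ValueError("signals must be resampled to a common time base first")
    e_x = [xr - xe for (xr, _), (xe, _) in zip(ref_xy, est_xy)]
    e_y = [yr - ye for (_, yr), (_, ye) in zip(ref_xy, est_xy)]
    e_total = [math.hypot(ex, ey) for ex, ey in zip(e_x, e_y)]
    return e_x, e_y, e_total


if __name__ == "__main__":
    # Hypothetical samples, for illustration only.
    reference = [(0.0, 0.0), (0.5, 0.1), (1.0, 0.3)]
    odometry = [(0.0, 0.0), (0.48, 0.12), (0.95, 0.36)]
    e_x, e_y, e_total = localization_errors(reference, odometry)
    print("max total error: %.4f m" % max(e_total))
```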

3. Results

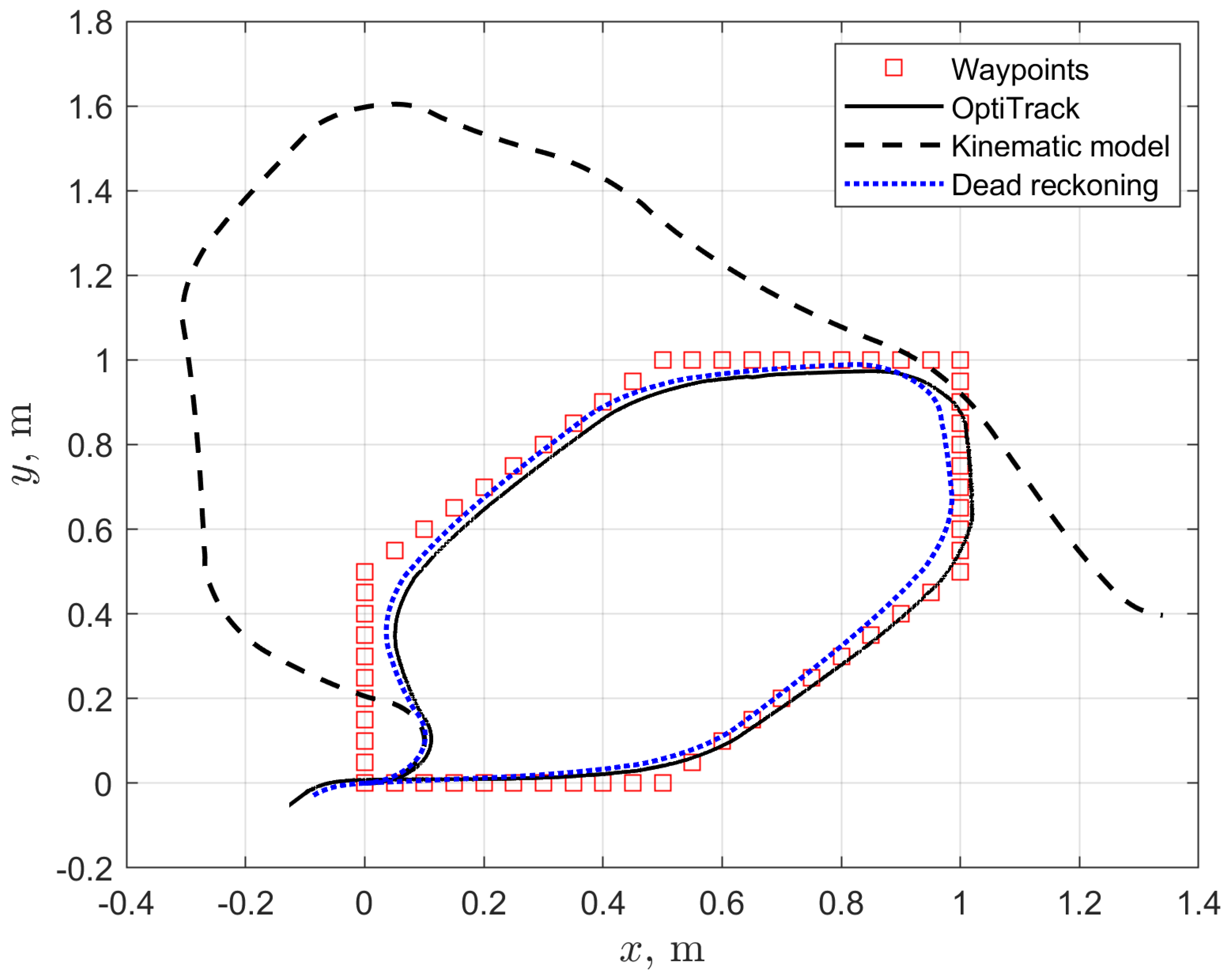

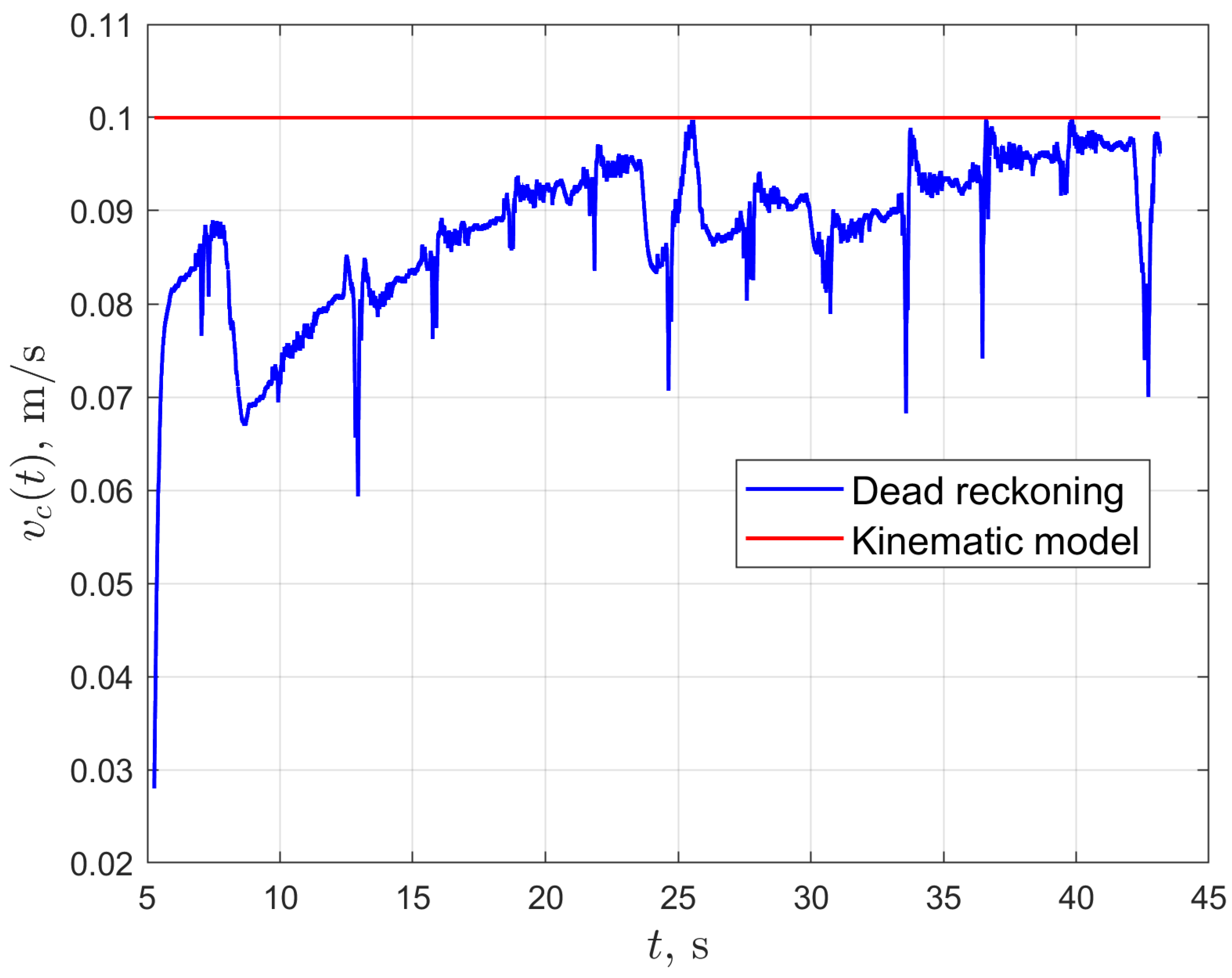

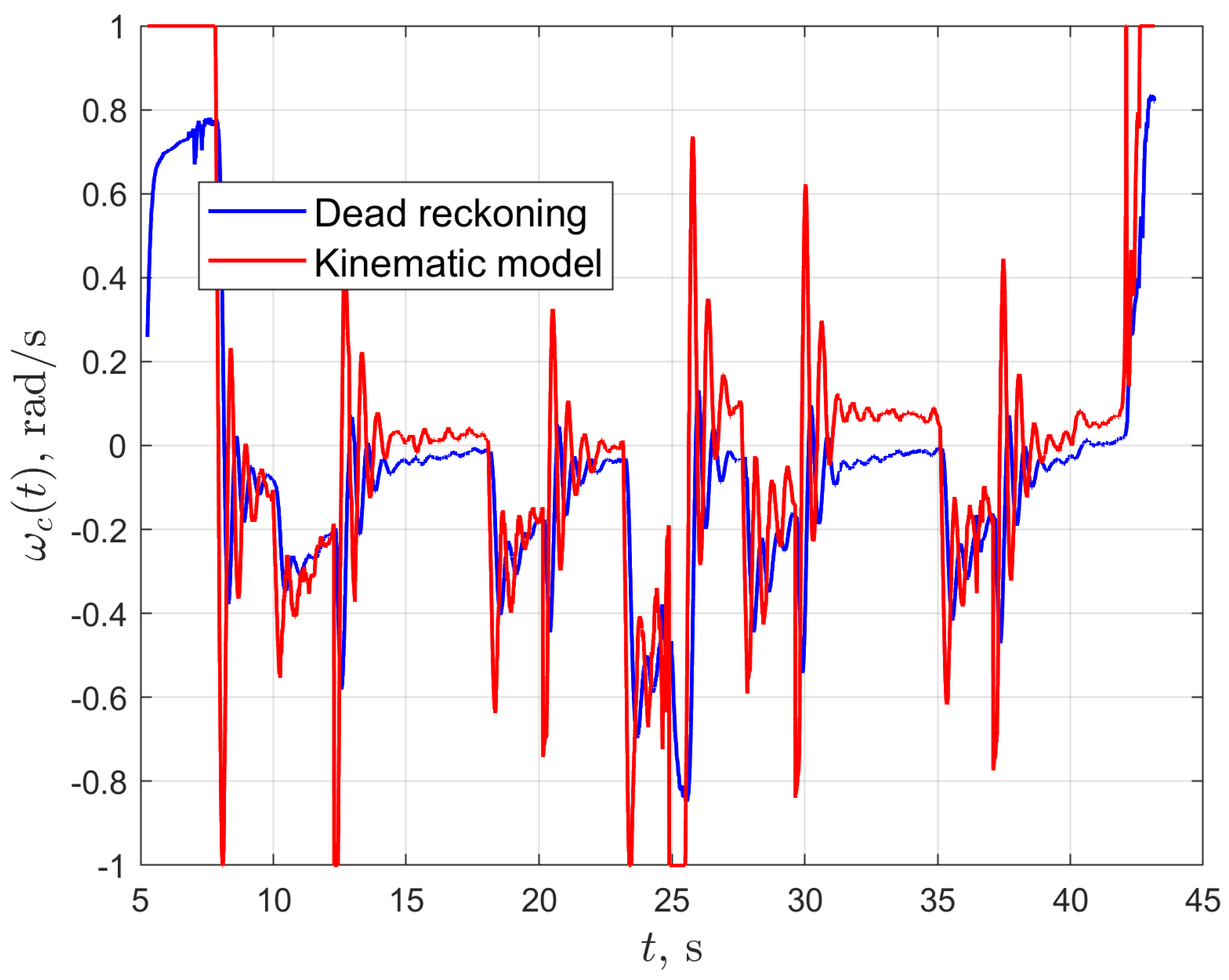

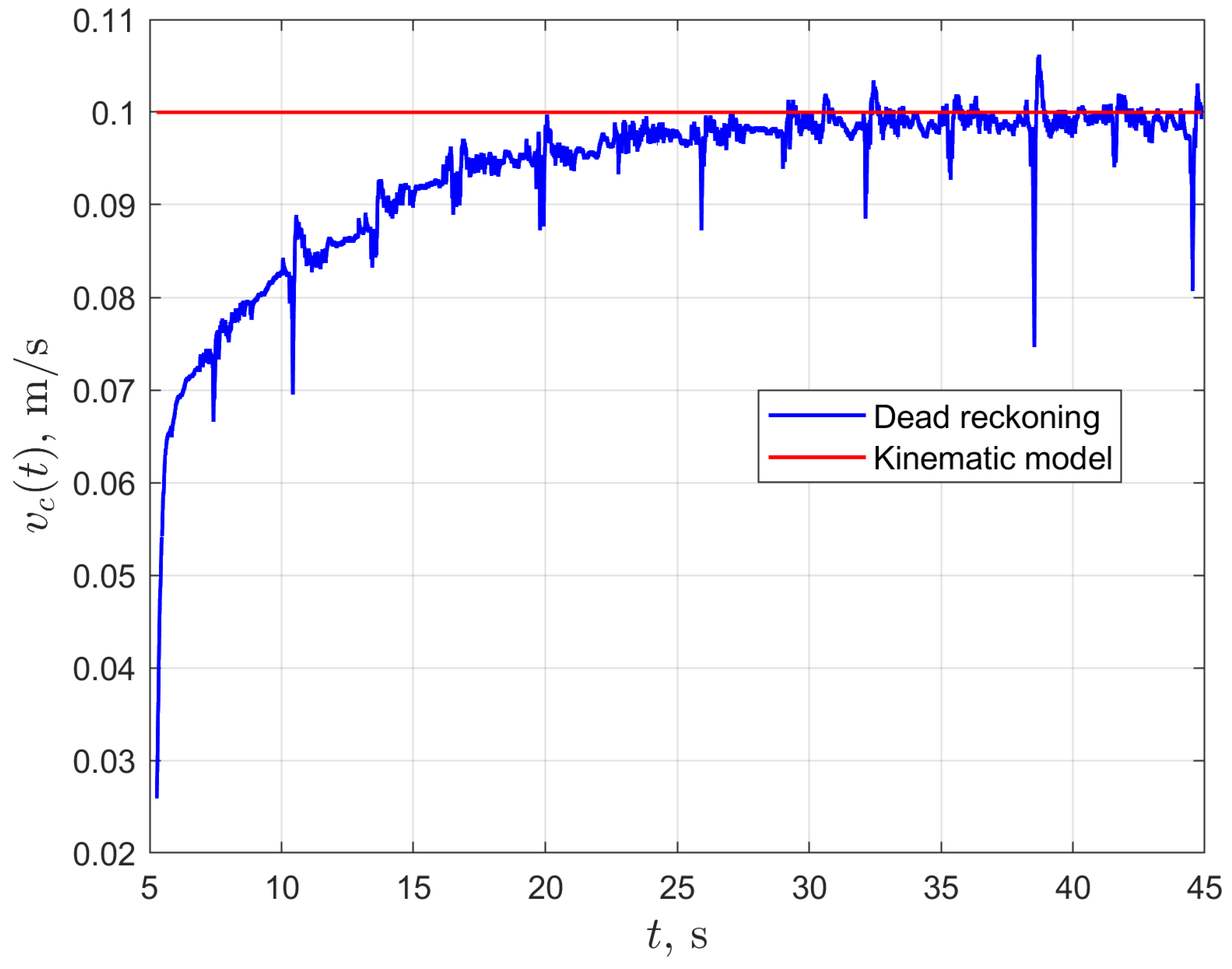

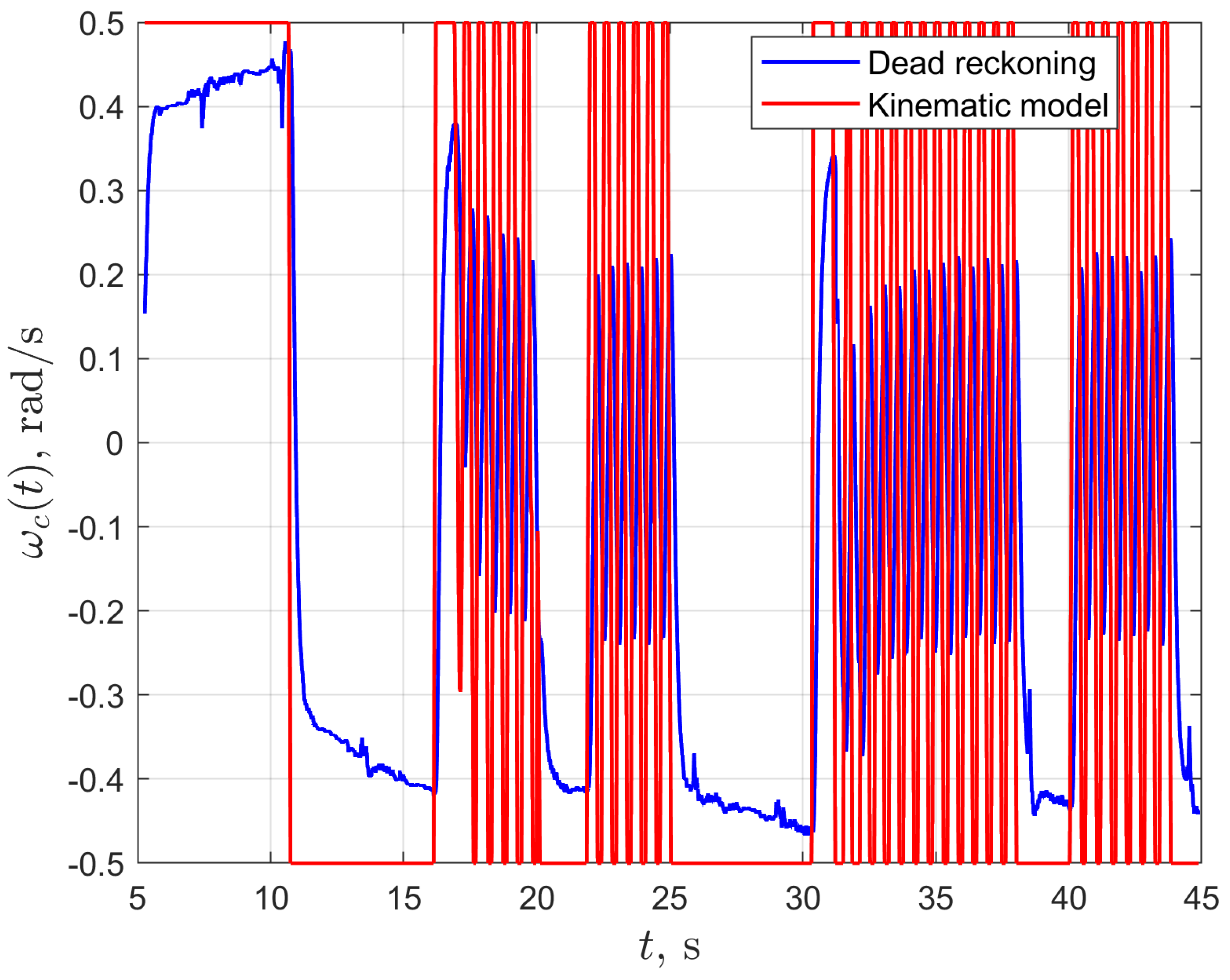

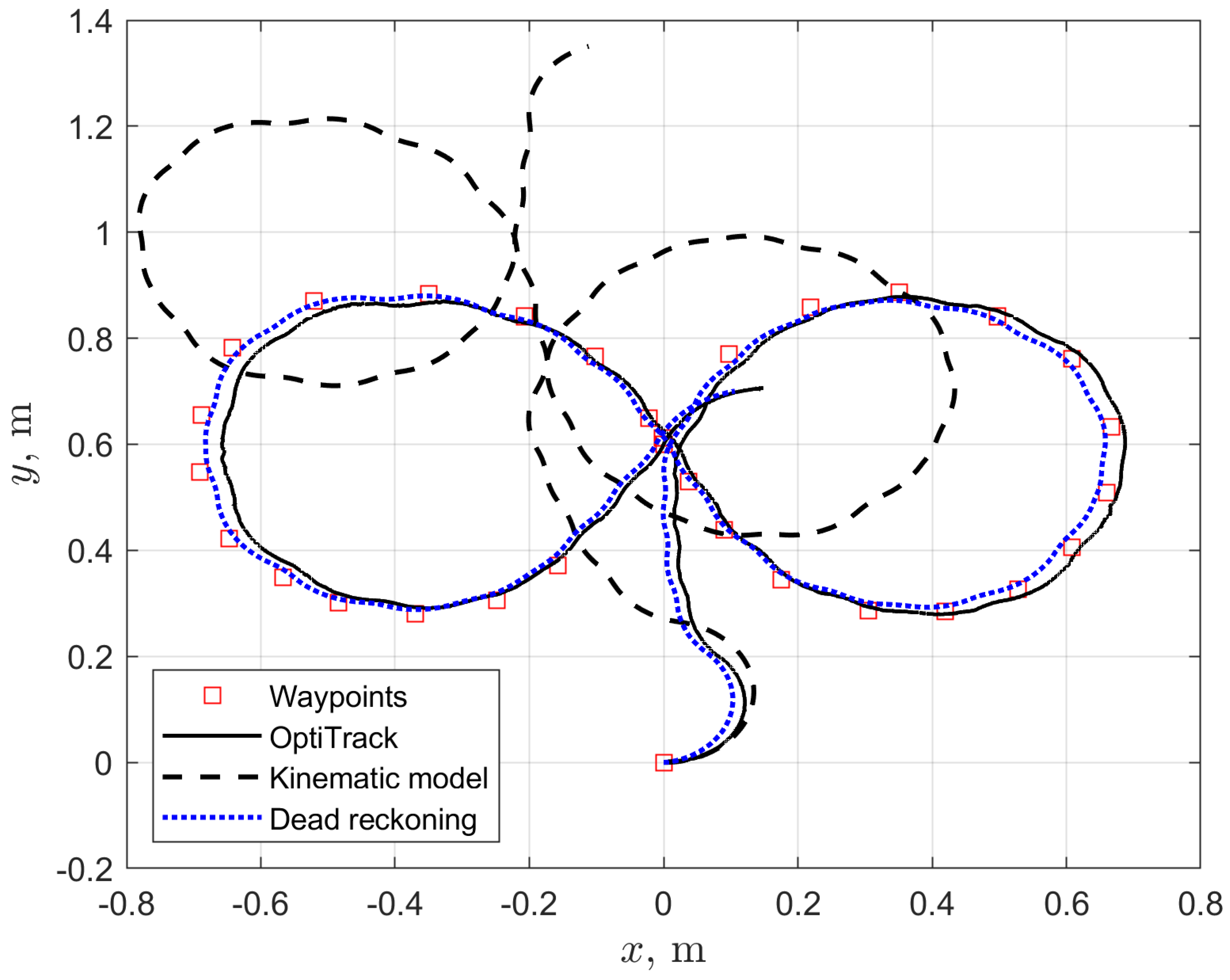

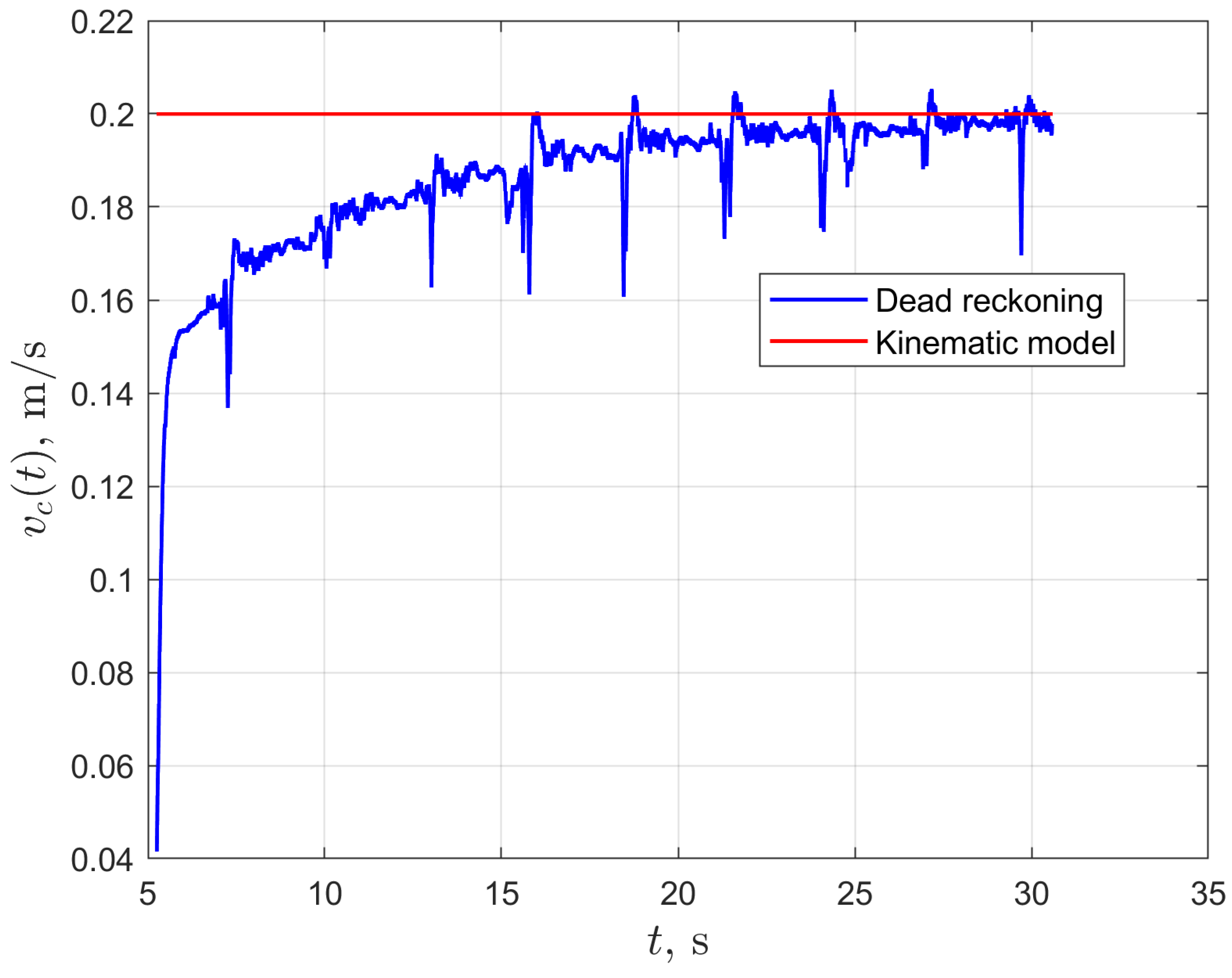

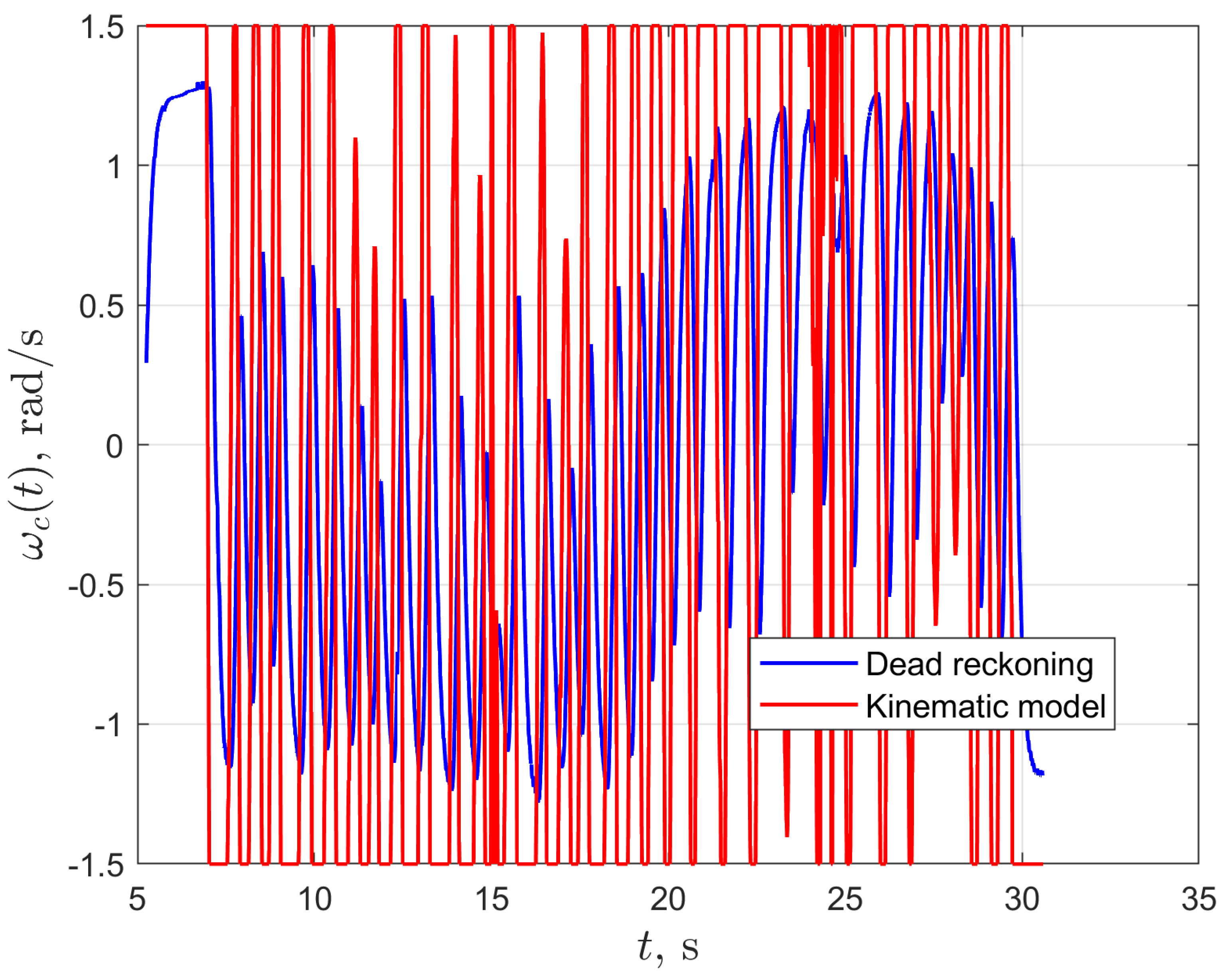

3.1. Results for Path

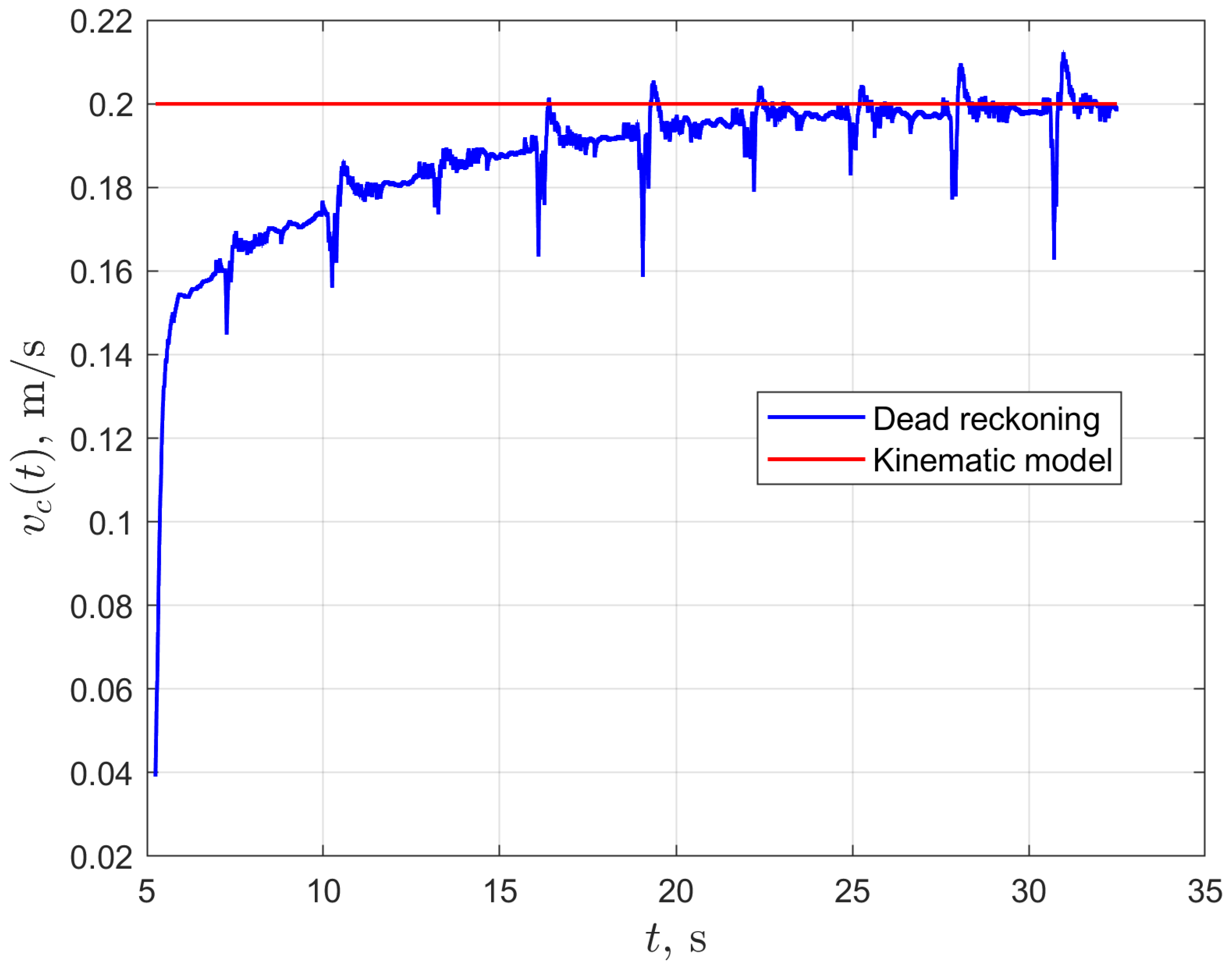

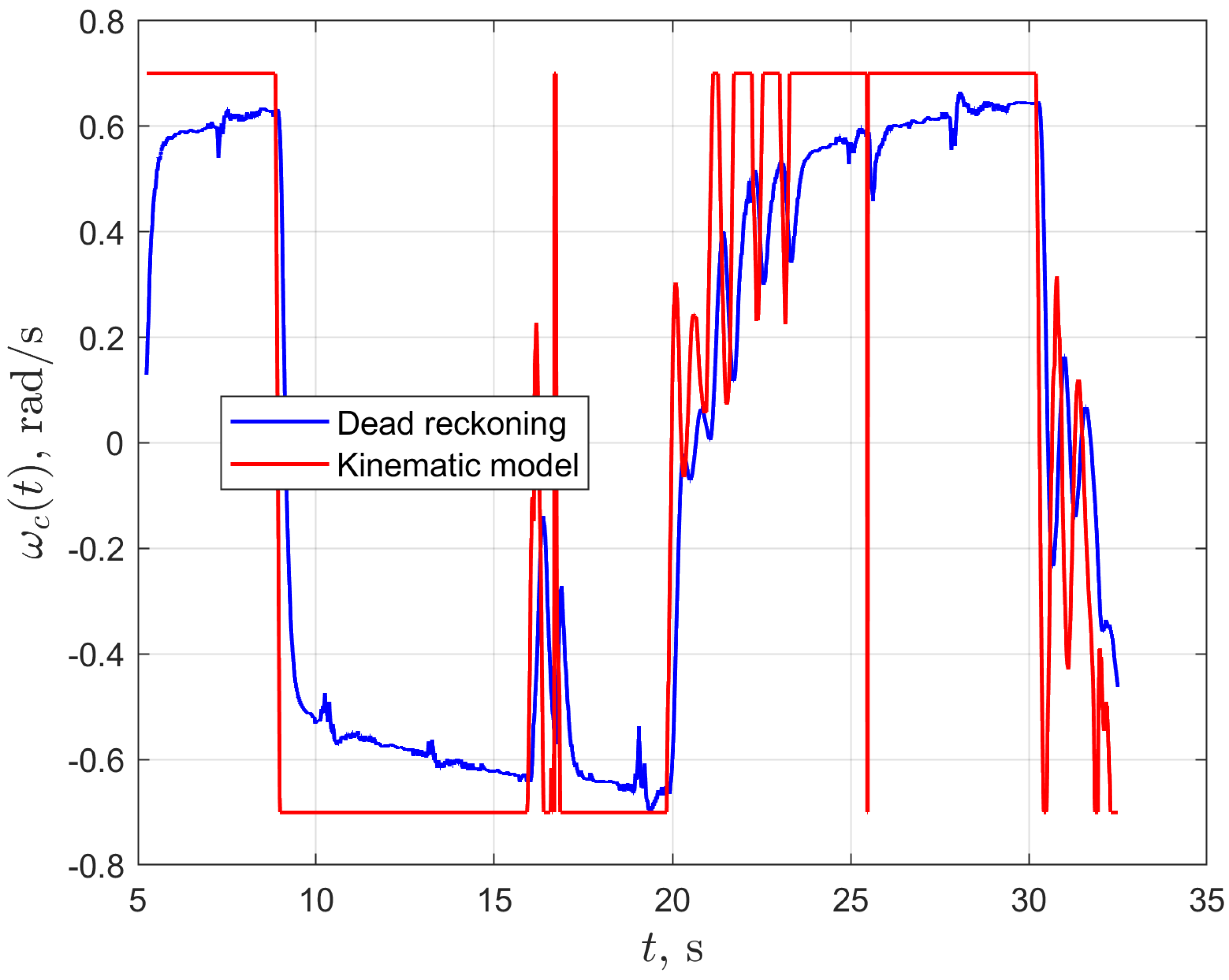

3.2. Results for Path

4. Discussion

5. Conclusions

Funding

Conflicts of Interest


| Simulation # | (m/s) | (rad/s) | (m) |
|---|---|---|---|
| 1 | 0.1 | 1.0 | 0.2 |
| 2 | 0.1 | 0.5 | 0.2 |
| 3 | 0.1 | 1.0 | 0.1 |
| 4 | 0.1 | 0.5 | 0.1 |

| Simulation # | (m/s) | (rad/s) | (m) |
|---|---|---|---|
| 1 | 0.2 | 1.5 | 0.1 |
| 2 | 0.2 | 0.7 | 0.1 |
| 3 | 0.2 | 1.5 | 0.2 |
| 4 | 0.2 | 0.7 | 0.2 |

| Simulation # | (m) | (m) |
|---|---|---|
| 1 | 0.040 | 0.610 |
| 2 | 0.054 | 0.270 |
| 3 | 0.072 | 0.646 |
| 4 | 0.074 | 0.240 |

| Simulation # | (m) | (m) |
|---|---|---|
| 1 | 0.031 | 0.381 |
| 2 | 0.041 | 0.310 |
| 3 | 0.050 | 0.351 |
| 4 | 0.050 | 0.423 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Dudzik, S. Application of the Motion Capture System to Estimate the Accuracy of a Wheeled Mobile Robot Localization. Energies 2020, 13, 6437. https://doi.org/10.3390/en13236437
