1. Introduction

In recent years, electric vehicles (EVs) have been considered a promising eco-friendly means of transportation that alleviates the environmental pollution caused by the use of traditional fossil fuel sources [1]. Although EVs aim to provide zero fossil fuel consumption and emit no greenhouse gases, the additional charging load created by the growing use of EVs may be concentrated in specific time periods, depending on users’ charging patterns, which can result in significantly high peak demand. The electricity required for EV charging will increase power losses, voltage fluctuations, and grid overloads, making the operation of power plants inefficient and negatively affecting the stability and reliability of the power grid [2]. Electric vehicle charging stations (EVCSs) play an important role in recharging EVs. These EVCSs buy power from the power grid at a lower price and then sell it to EVs at a higher price in order to make a profit [3]. Compared with home charging, charging stations can offer lower charging prices because they purchase power at the lower wholesale market rate. To support the grid integration of EVs and alleviate high peak demand, hourly rates (day-ahead pricing or real-time rates) are widely used to shift loads and stabilize the power system.

The grid integration of electric vehicles and the charging/discharging capabilities of charging stations have received much attention in different applications, including vehicle-to-home (V2H), vehicle-to-vehicle (V2V), and vehicle-to-grid (V2G). In V2H, a single electric vehicle is connected to a single home/building for charging/discharging based on the home/building control scheme. In V2V, multiple electric vehicles are able to transfer energy to a local grid or to other electric vehicles using bidirectional chargers. In V2G, a large number of electric vehicles can be connected to a grid for charging/discharging, for example at parking lots and fast charging stations [4,5].

Many studies have proposed different charging schemes for scheduling and optimizing the operation of EVs. These methods aim to mitigate power peaks using dynamic electricity prices. However, most of them determine the charging time and the charging speed for EVs that are parked at home or in parking lots for a long time. In practice, EV users may also need a charging service while driving, even over a short distance, because of the limited EV battery capacity. Therefore, an electric vehicle navigation system (EVNS) will play an important role in recommending the appropriate route and charging station, taking into account user preferences such as the driving time, the charging price, and the charging wait time [6,7,8,9,10,11].

The authors of [6] proposed an integrated EV navigation system (EVNS) based on a hierarchical game approach that considers the impact of both the transportation system and the power system. The proposed system consists of a power system operating center (PSOC), charging stations, an EVNS, and EV terminals. The competition between charging stations is modeled as a non-cooperative game. The authors of [7] proposed a charging navigation strategy and optimal EV route selection based on real-time crowdsensing using a central control center. The control center collects real-time traffic information (vehicle speed and location) from EV drivers, while the charging stations upload charging station information. The authors of [8] proposed an EVNS based on autonomic computing and a hierarchical architecture over a vehicular ad hoc network (VANET). The proposed architecture consists of EVs, charging stations, and a traffic information center (TIC). The main functions of the TIC are monitoring, analysis, planning, and execution. The authors of [9] proposed an integrated rapid charging navigation system based on an intelligent transport system (ITS) center, a power system control center (PSCC), charging stations, and EV terminals. The EV terminal determines the best route based on data broadcast by the ITS center (the status of the traffic system and the power grid) without any uplink data from the EV side, which protects driver privacy. The authors of [11] proposed a hybrid charging management framework for the optimal choice between battery charging and battery-swapping stations for urban EV taxis. The main entities are EVs, charging stations, battery-swapping stations, and a global controller. The global controller is a central entity that receives real-time information from the charging/swapping stations and determines the optimal station for charging each EV taxi. However, the above methods operate in a deterministic environment and do not consider the uncertainty caused by dynamically changing traffic conditions and waiting times at the charging stations. The randomness of the traffic conditions and the charging waiting time can have a significant impact on the performance of route and charging station selection schemes. In addition, EV charging requests that arrive dynamically according to EV users’ behavior patterns are another important factor. Therefore, dealing effectively with the uncertainty of unknown future states when selecting the appropriate route and charging station is a considerable challenge. Reinforcement learning can be applied to such complex decision-making problems because it does not rely on prior knowledge of the uncertainty.

Deep reinforcement learning (DRL) combines reinforcement learning (decision-making ability) with deep learning (perception function) and is able to address challenging sequential decision-making problems. In a stochastic environment with uncertainty, most decision-making problems can be modeled as a Markov decision process (MDP). The MDP is the basic formulation for reinforcement learning and provides a framework for optimal decision making under uncertainty. DRL methods can be divided into two categories: model-based methods and model-free methods. To evaluate the decision behavior, DRL uses a reward function [12,13,14,15]. With respect to charging navigation of electric vehicles on the move, the main challenges are the location of the electric vehicle (the selected route and charging station differ depending on the vehicle location), the charging mode (slow/fast charging), and the battery state of charge (the achievable travel distance is proportional to the remaining battery charge). Other factors include the randomness of user behaviors, traffic conditions, waiting time at the charging station, and charging prices [16,17,18,19,20,21,22].
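For reference, the MDP formulation mentioned above can be written compactly in standard textbook notation. The symbols below ($\mathcal{S}$, $\mathcal{A}$, $P$, $r$, $\gamma$, $Q^{*}$) are assumptions introduced here for illustration and are not taken from the paper's own definitions.

```latex
% Standard textbook notation; the symbols are illustrative assumptions.
\begin{align}
  \text{MDP:}\quad
    & (\mathcal{S}, \mathcal{A}, P, r, \gamma), \qquad
      P(s' \mid s, a), \quad r(s, a), \quad \gamma \in [0, 1] \\
  \text{Return:}\quad
    & G_t = \sum_{k=0}^{\infty} \gamma^{k}\, r(s_{t+k}, a_{t+k}) \\
  \text{Bellman optimality:}\quad
    & Q^{*}(s, a) = r(s, a)
      + \gamma \sum_{s' \in \mathcal{S}} P(s' \mid s, a)\, \max_{a' \in \mathcal{A}} Q^{*}(s', a')
\end{align}
```

A model-based method uses, or learns, the transition probability $P$ explicitly, whereas a model-free method estimates $Q^{*}$ (or a policy) directly from sampled transitions $(s, a, r, s')$ without access to $P$.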

Most studies that use reinforcement learning focus on energy management and cost minimization in EVs, charging stations, and smart buildings [16,17,18,19,20,21]. Few studies address charging station selection. To minimize the charging cost and time, EV charging navigation using reinforcement learning is proposed in [22]. That system selects the optimum route and charging station without prior knowledge of traffic conditions, charging price, and charging waiting time. However, it only considers the route from the starting point to the charging station, and its complexity can increase significantly in a large network because the features are extracted from inter-node movements using optimization techniques. In addition, the interaction between EVs served by the navigation system and the uncertainty of future EV charging requests were not considered.

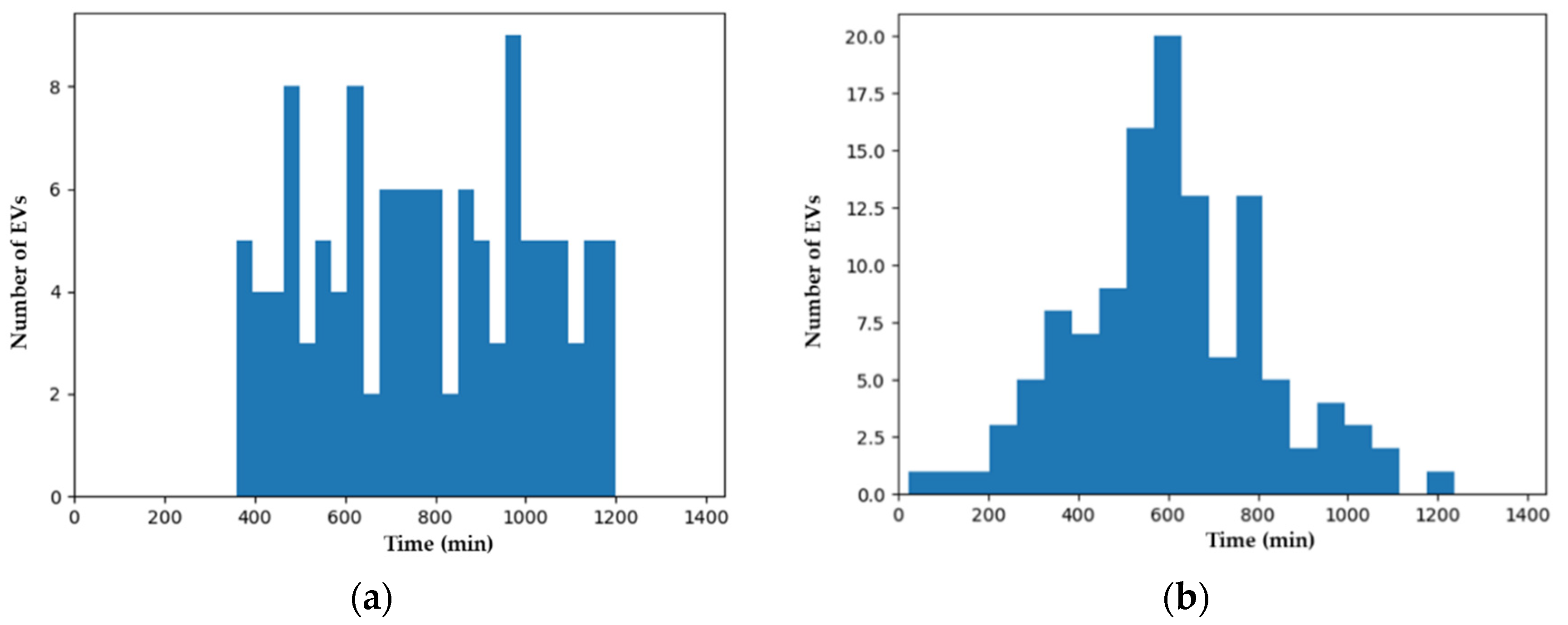

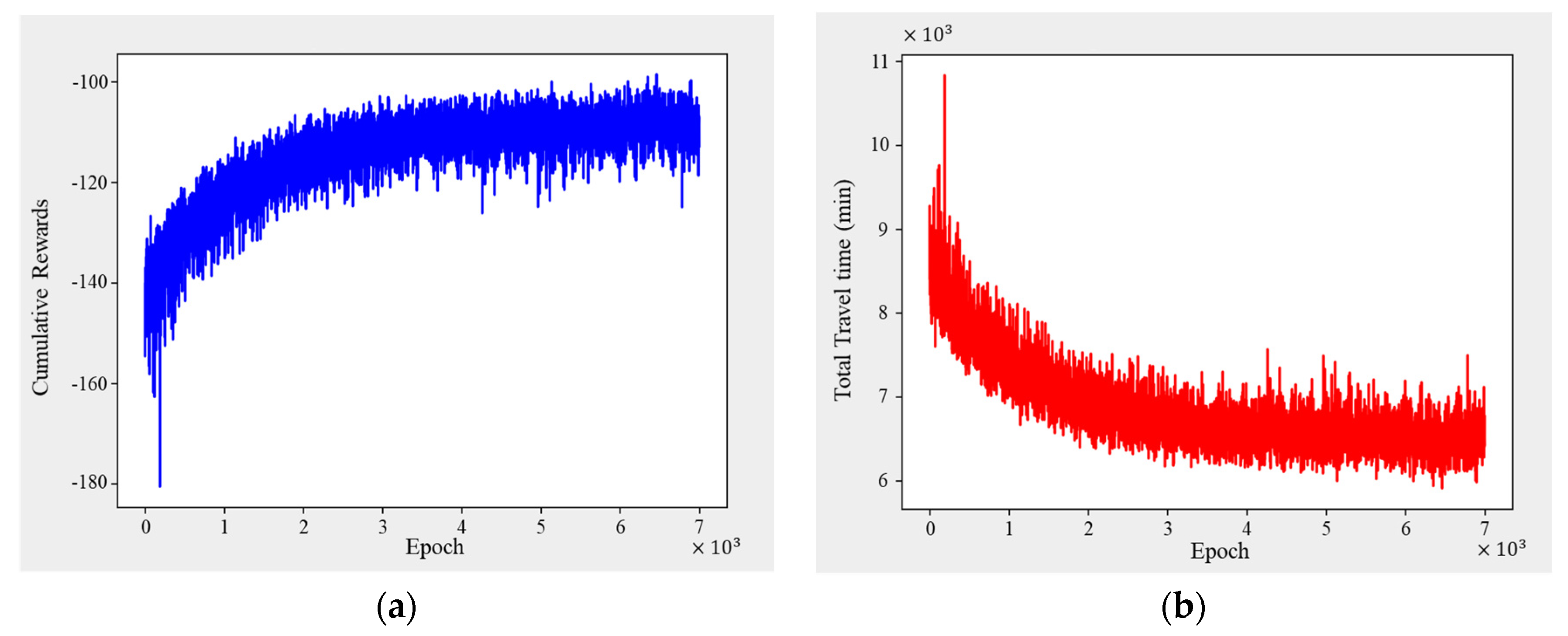

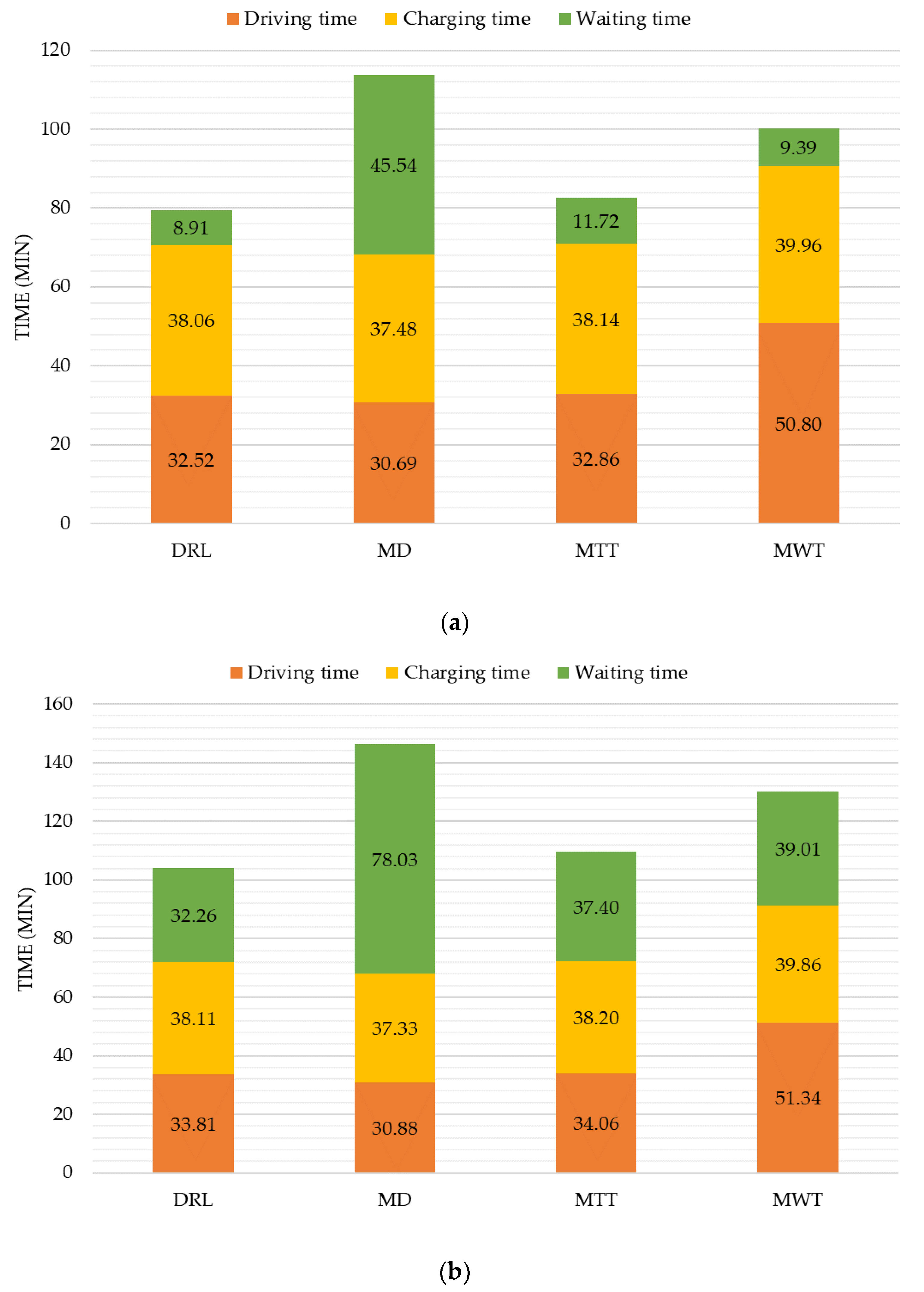

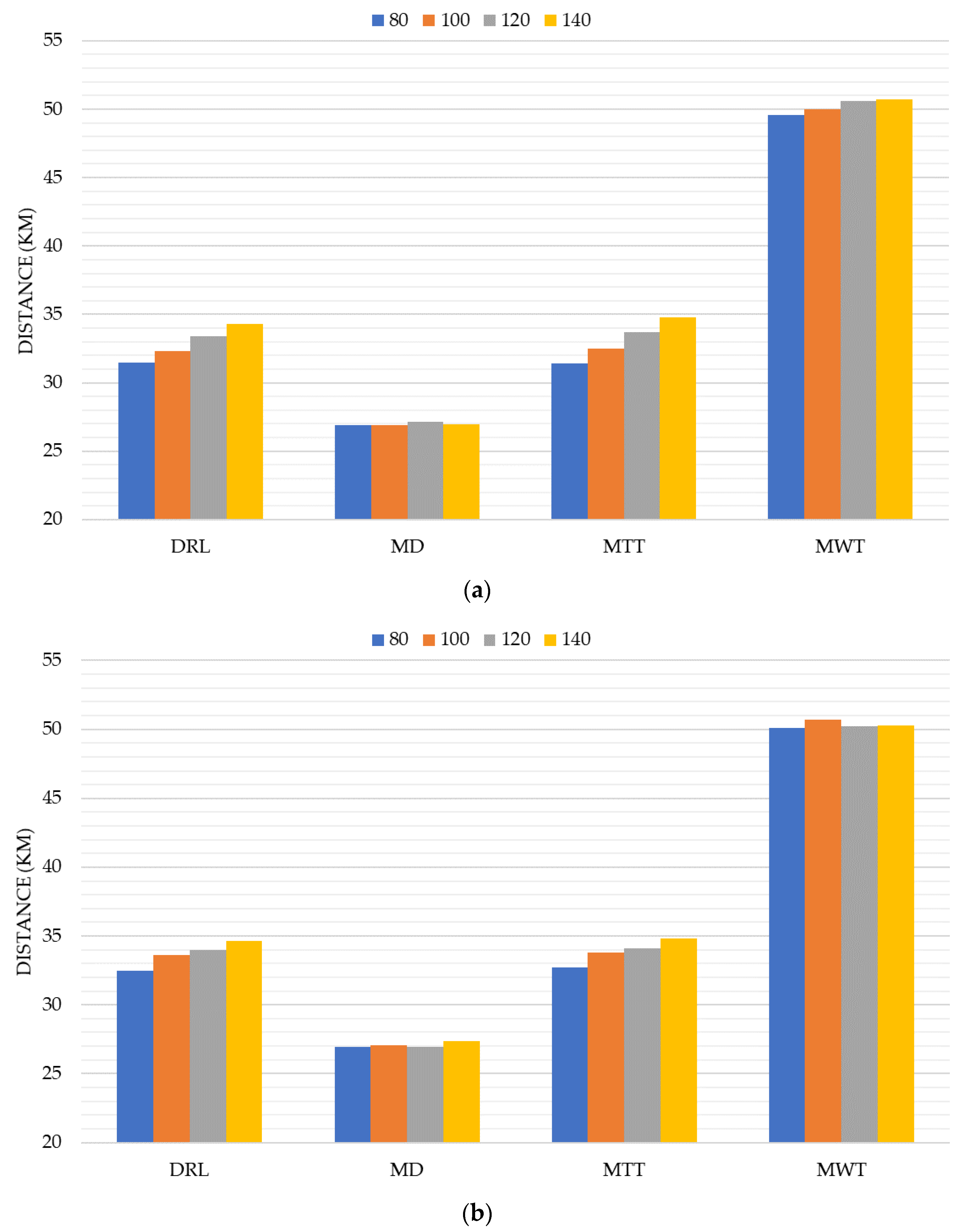

In this paper, we propose an optimal route and charging station selection (RCS) algorithm based on model-free deep reinforcement learning. The proposed RCS algorithm minimizes the total travel time under uncertain traffic conditions and dynamically arriving charging requests. We formulate the RCS problem as a Markov decision process (MDP) model with unknown transition probability. The proposed deep reinforcement learning (DRL) based RCS algorithm learns the optimal RCS policy with a deep Q-network (DQN) through repeated trial and error. To obtain the feature states for each EVCS, we present a traffic preprocess module, a charging preprocess module, and a feature extract module. The energy consumption model and the link cost function are also defined. The performance of the proposed DRL-based RCS algorithm is compared with conventional strategies in terms of travel time, waiting time, charging time, driving time, and distance under various distributions and numbers of EV charging requests. The novelty and contributions of the paper are as follows:

- A model-free deep reinforcement learning based optimal route and charging station selection (RCS) algorithm is proposed to overcome the uncertainty of traffic conditions and dynamically arriving EV charging requests.

- The RCS problem is formulated as a Markov decision process (MDP) model with unknown transition probabilities.

- The performance of the proposed DRL-based RCS algorithm is compared with conventional algorithms in terms of travel time, waiting time, charging time, driving time, and distance under various distributions and numbers of EV charging requests.
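To illustrate what learning a route and station choice by trial and error without a transition model can look like, the following is a minimal sketch using tabular Q-learning on a hypothetical five-node road network. It is not the paper's algorithm: the proposed method uses a DQN (a neural network approximating the action-value function) together with preprocessing and feature extraction modules, none of which are reproduced here. The network layout, the mean link times, the waiting times, and all function names are assumptions made up for this example.

```python
import random

# Hypothetical road network: node -> {next node: mean link travel time in minutes}.
LINKS = {
    "A": {"B": 10.0, "C": 20.0},
    "B": {"S1": 25.0, "S2": 10.0},
    "C": {"S2": 5.0},
}
# Hypothetical mean waiting time (minutes) at each charging station (terminal nodes).
WAIT = {"S1": 5.0, "S2": 10.0}


def step(node: str, nxt: str, rng: random.Random) -> float:
    """Sample the reward (negative time) for driving node -> nxt.

    Link and waiting times are perturbed by +/-20% to mimic uncertain
    traffic and queueing; the agent never sees the underlying means.
    """
    cost = LINKS[node][nxt] * rng.uniform(0.8, 1.2)
    if nxt in WAIT:  # arriving at a station adds its waiting time
        cost += WAIT[nxt] * rng.uniform(0.8, 1.2)
    return -cost


def train(episodes: int = 5000, alpha: float = 0.05, gamma: float = 1.0,
          epsilon: float = 0.2, seed: int = 0) -> dict:
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {n: {m: 0.0 for m in nbrs} for n, nbrs in LINKS.items()}
    for _ in range(episodes):
        node = "A"  # every charging request starts at node A in this toy setup
        while node not in WAIT:
            actions = list(q[node])
            if rng.random() < epsilon:
                nxt = rng.choice(actions)             # explore
            else:
                nxt = max(actions, key=q[node].get)   # exploit
            reward = step(node, nxt, rng)
            # Bootstrap from the best next action; stations are terminal.
            future = 0.0 if nxt in WAIT else max(q[nxt].values())
            q[node][nxt] += alpha * (reward + gamma * future - q[node][nxt])
            node = nxt
    return q


def greedy_route(q: dict, start: str = "A") -> list:
    """Follow the highest-valued action from start until a station is reached."""
    route = [start]
    while route[-1] not in WAIT:
        route.append(max(q[route[-1]], key=q[route[-1]].get))
    return route


if __name__ == "__main__":
    q_table = train()
    print(greedy_route(q_table))
```

Under the assumed means, the route A, B, S2 has the lowest expected total time (10 + 10 + 10 = 30 minutes), and the learned greedy route should settle on it. A table indexed by node works only for such a tiny network; replacing the table with a function approximator is what makes the approach scale to larger state spaces.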

The rest of this paper is organized as follows. In Section 2, we discuss the related work. In Section 3, we propose the EV charging navigation system architecture and the deep reinforcement learning-based RCS algorithm. Various simulations are carried out in Section 4 to demonstrate the effectiveness and benefits of the proposed approach. Finally, conclusions are drawn in Section 5.

2. Related Work

Generally, the electric vehicle system consists of two main layers: the physical infrastructure layer (electric vehicles, charging stations, transformers, electric feeders, etc.) and the cyber infrastructure layer (IoT devices, sensor nodes, meters, monitoring devices, etc.) [5]. The charging/discharging of electric vehicles poses many challenges, given the many sources of uncertainty and the interaction among different domains, including electric vehicles, charging stations, the electric power grid, communication networks, and the electricity market. Deep reinforcement learning has received much attention and is considered a promising tool for addressing these challenges.

With respect to the electric power grid, the authors of [12] introduced the applications of deep reinforcement learning in the power system, such as operational control, electricity markets, demand response, and energy management. With respect to communications and networking, the authors of [13] presented applications of deep reinforcement learning to many emerging issues such as data rate control, data offloading, dynamic network access, wireless caching, and network security. In [14], the authors presented the application of deep reinforcement learning to future IoT systems, called autonomous IoT (AIoT), where the environment is divided into three layers: the perception layer, the network layer, and the application layer. In [15], the application of deep reinforcement learning to cyber security was presented to solve security problems in the presence of threats and cyber-attacks.

A comprehensive review of the application of reinforcement learning to autonomous building energy management was presented in [16]. Reinforcement learning has been applied to different tasks such as appliance scheduling, electric vehicle charging, water heater control, HVAC control, and lighting control. In [17], the authors presented a reinforcement learning approach for scheduling the energy consumption of smart home appliances and distributed energy resources (an electric vehicle and an energy storage system). The energy consumption of the home appliances and distributed energy resources is scheduled in a continuous action space using an actor-critic deep reinforcement learning method. The authors of [18] proposed a reinforcement learning-based energy management system for a smart building with a renewable energy source, an energy storage system, and a vehicle-to-grid station to minimize the total energy cost. The energy management system was modeled as a Markov decision process by describing the state space, transition probability, action space, and reward function.

The authors of [19] proposed model-free real-time electric vehicle charging scheduling based on deep reinforcement learning. The scheduling problem of EV charging/discharging was formulated as a Markov decision process (MDP) with unknown transition probability. The proposed architecture consists of two networks: a representation network for extracting discriminative features from electricity prices and a Q network for approximating the optimal action-value function. The authors of [20] proposed model-free coordination of EV charging with reinforcement learning to coordinate a group of charging stations. The work focused on load flattening/load shaving (minimizing the load and spreading the consumption equally over time). The authors of [21] proposed a reinforcement learning approach for scheduling EV charging in a single public charging station with random arrival and departure times. The pricing and scheduling problem was formulated as a Markov decision process. The system was able to optimize the total charging rates of the electric vehicles and fulfill the charging demand before departure. The authors of [22] proposed deep reinforcement learning for EV charging navigation with the aim of minimizing the charging cost (at the charging station) and the total travel time. The proposed system adaptively learns the optimal strategy without any prior knowledge of the uncertain system data (traffic conditions, charging prices, and waiting time at charging stations).

Most of the above-mentioned studies aim at managing energy and minimizing costs in EVs, charging stations, and smart buildings [16,17,18,19,20,21]. In [22], the proposed system selects the optimum route and charging station without prior knowledge of traffic conditions, charging price, and charging waiting time. Because the features are extracted from inter-node movements using optimization techniques, computing the feature states can become significantly more complex in a large network. In addition, the uncertainty in future EV charging requests was not considered because the MDP model was designed from the perspective of a single EV.

Table 1 provides a summary of the literature review for the main entities of EVNS. It can be observed that the main entities in our system are EVs, charging stations, ITS center, and EVNS control center. Table 2 provides a comparison between our work and other related work for the main objectives and challenges of reinforcement learning.

5. Conclusions

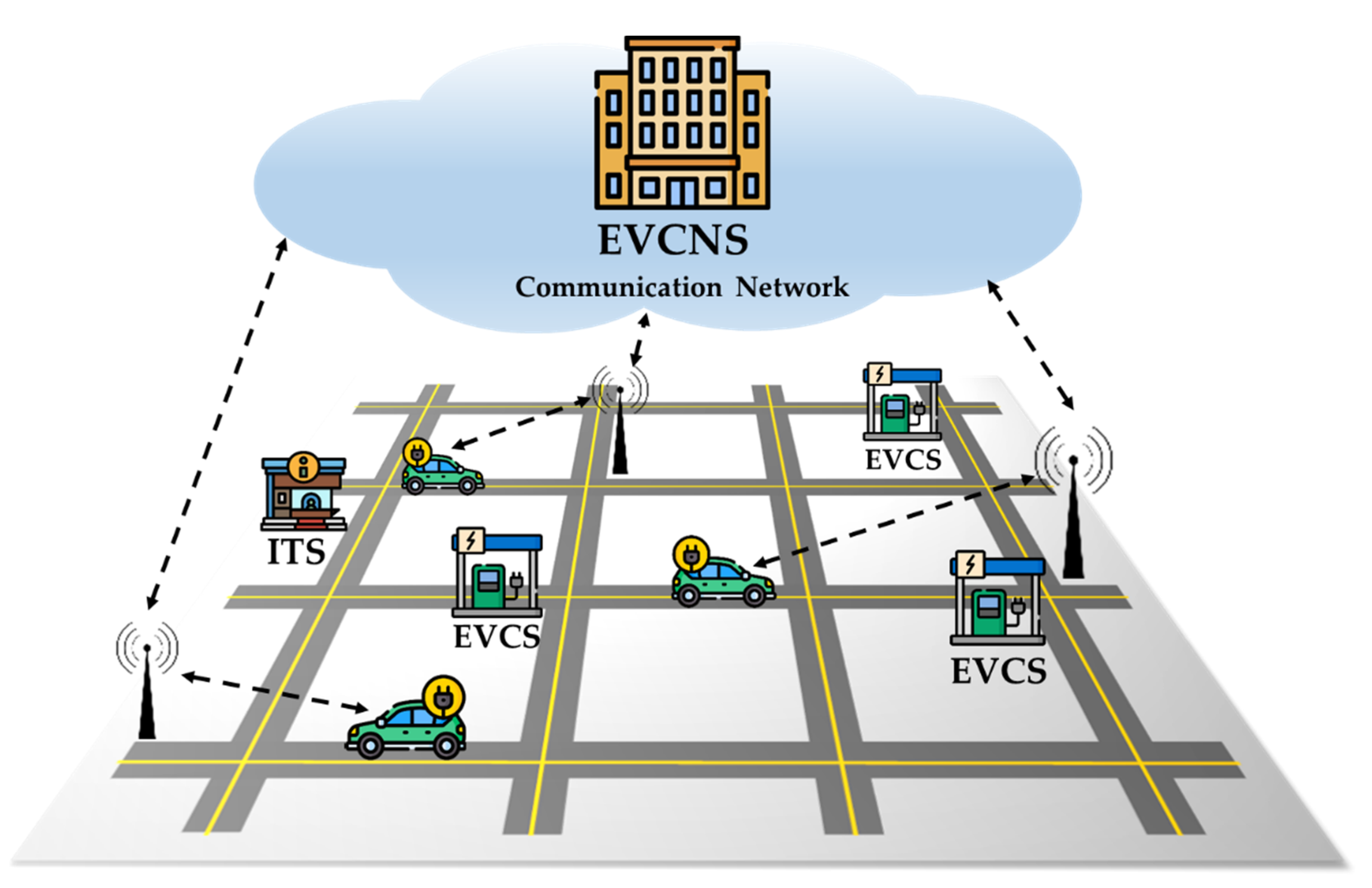

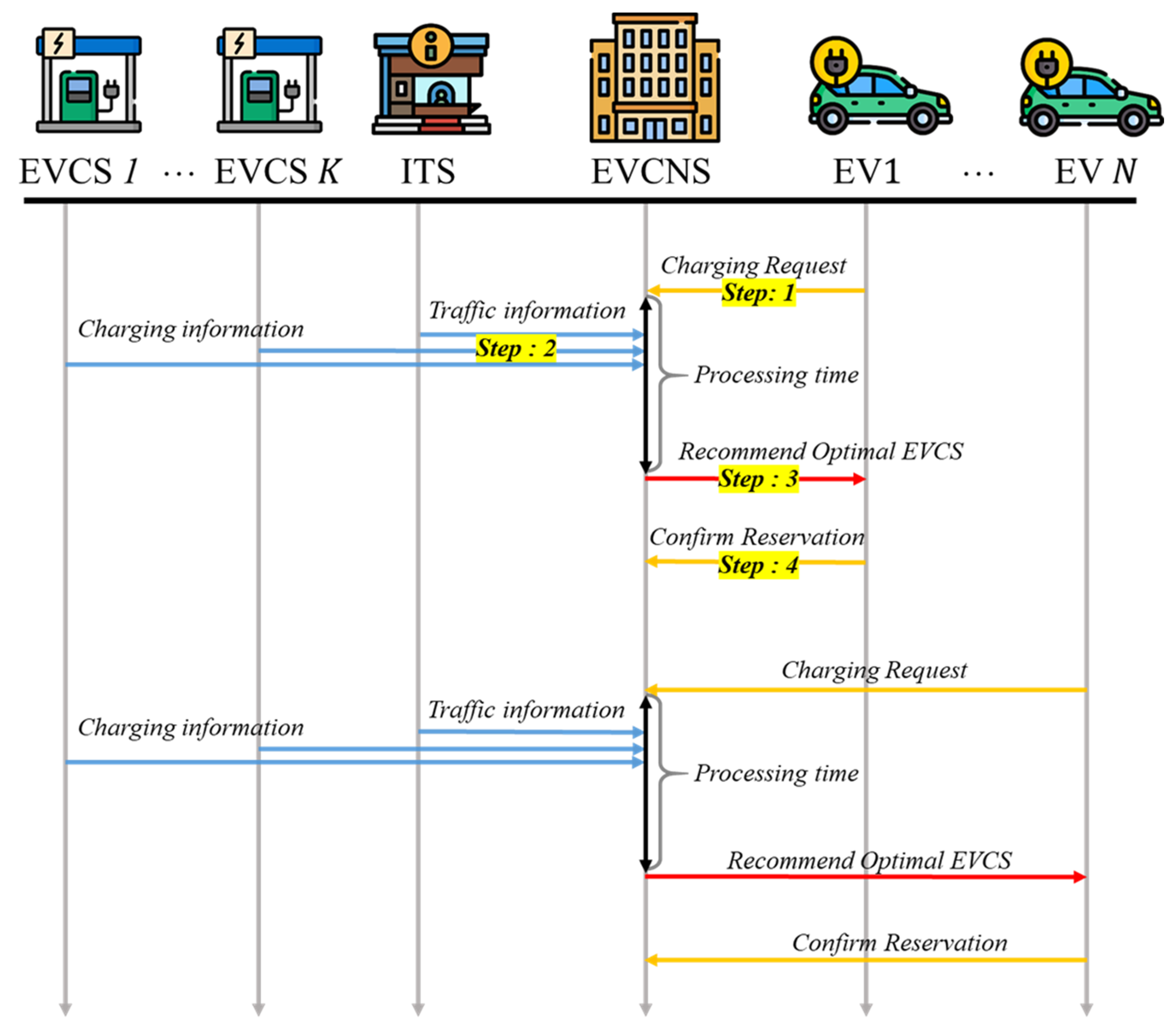

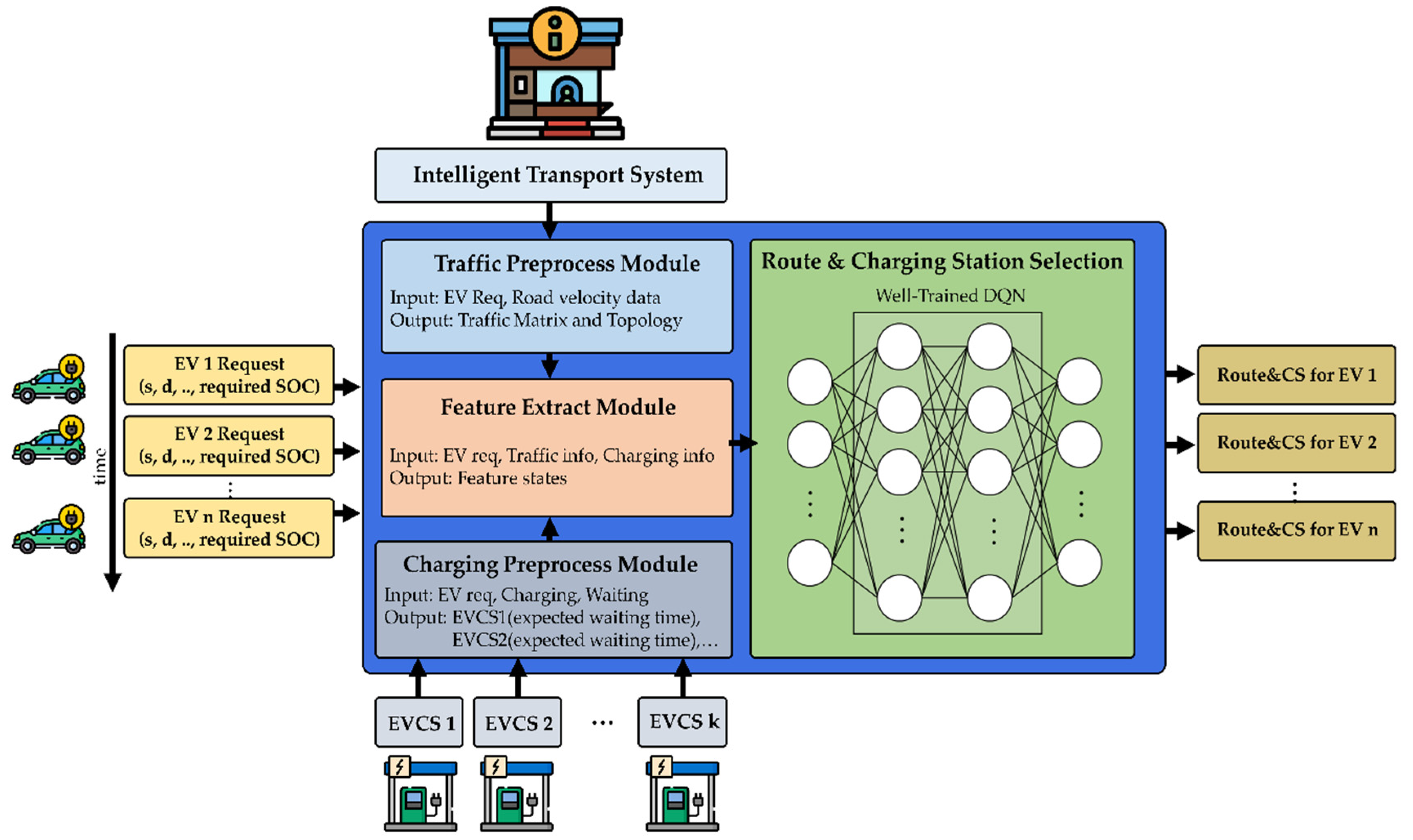

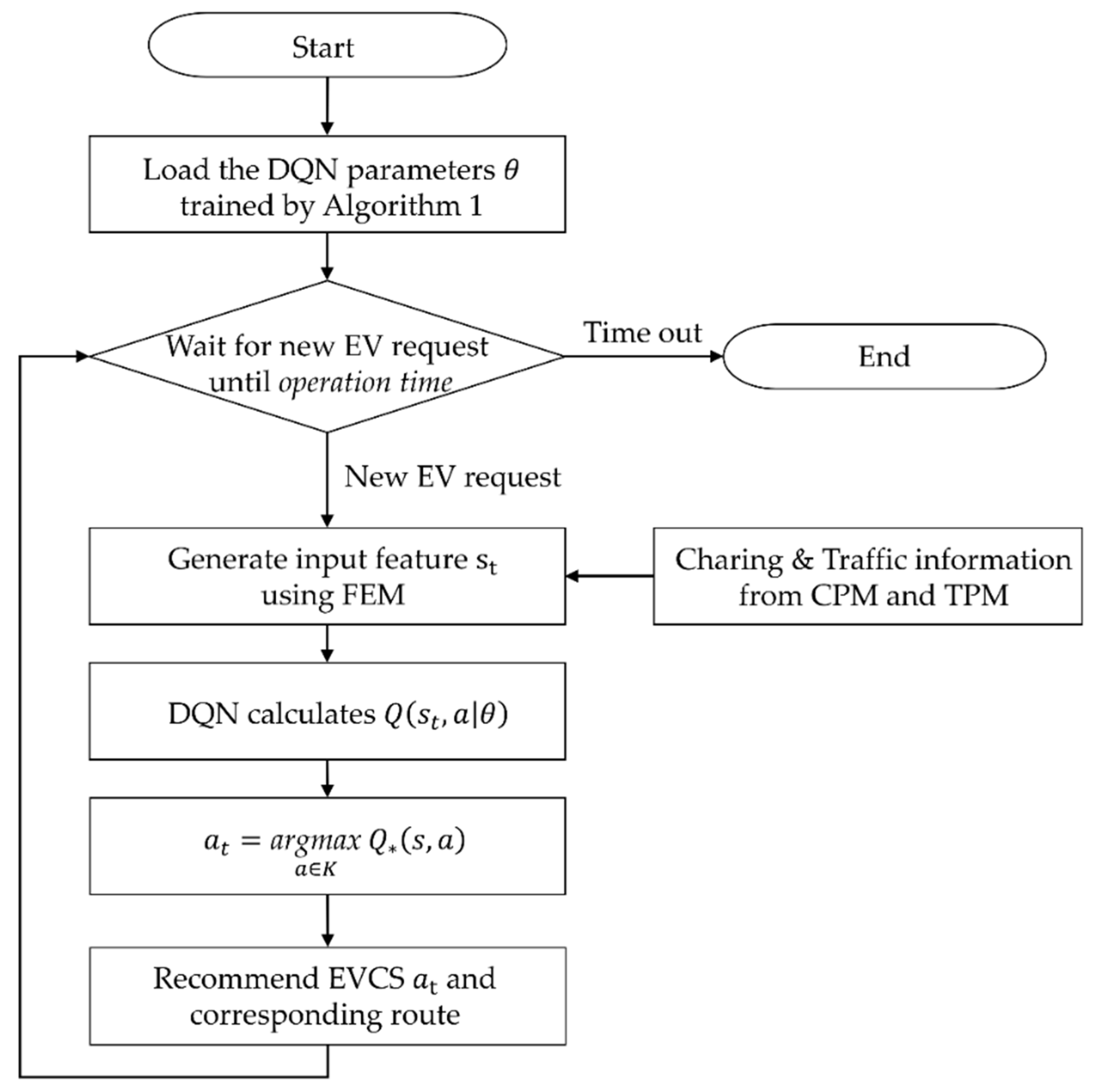

This paper proposed a framework for an electric vehicle charging navigation system (EVCNS) that aims to select the optimal route and electric vehicle charging station (EVCS). The proposed architecture consists of four main elements: EVs, EVCSs, an intelligent transport system (ITS) center, and an EVCNS center. The EVCNS includes four main modules: the traffic preprocess module (TPM), the charging preprocess module (CPM), the feature extract module (FEM), and the route & charging station selection module (RCSM). The TPM receives traffic information, such as the average road velocity, from the ITS center and processes it to define the road traffic matrix and the network topology. The CPM communicates with the EVCSs and receives information such as the number of charging vehicles and the number of waiting vehicles. The FEM extracts the feature state from its inputs (TPM, CPM, and EVs) and feeds it to the RCSM. The RCSM uses a well-trained deep Q-network to select the optimal route and charging station for each EV charging request. The performance of the proposed algorithm was compared with conventional strategies, including the minimum distance strategy, the minimum travel time strategy, and the minimum waiting time strategy, in terms of travel time, waiting time, charging time, driving time, and distance under various distributions and numbers of EV charging requests. The results showed that the proposed well-trained DRL-based route and charging station selection algorithm improved the performance by about 4% to 30% compared with the conventional strategies. The proposed algorithm showed the potential to provide optimal route and charging station selection that minimizes the total travel time in real-world environments, where the distribution and number of future EV charging requests, which depend on EV user behavior patterns, are unknown.
Future work will consider applying the proposed algorithm to a real scenario with actual EV user behavior patterns.