Abstract

In the power and energy systems area, the number of literature contributions containing applications of metaheuristic algorithms is progressively increasing. In many cases, these applications merely test an existing metaheuristic algorithm on a specific problem and claim, on the basis of weak comparisons, that the proposed method is better than other methods. This ‘rush to heuristics’ does not happen in the evolutionary computation domain, where the rules for setting up rigorous comparisons are stricter, but it is typical of the domains in which metaheuristics are applied. This paper considers applications to power and energy systems and aims to provide a comprehensive view of the main issues concerning the use of metaheuristics for global optimization problems. A set of underlying principles that characterize metaheuristic algorithms is presented. The customization of metaheuristic algorithms to fit the constraints of specific problems is discussed. Some weaknesses and pitfalls found in literature contributions are identified, and specific guidelines are provided on how to prepare sound contributions on the application of metaheuristic algorithms to specific problems.

1. Introduction

Large-scale complex optimization problems, in which the number of variables is high and the structure of the problem contains non-linearities and multiple local optima, are computationally challenging to solve, as they would require computational time beyond reasonable limits and/or excessive memory with respect to the available computing capabilities. These problems appear in many domains, with different characteristics. A number of optimization problems are characterized by non-smooth objective function surfaces, the presence of discrete variables, several local minima, and a combinatorial explosion of the number of cases to be evaluated in the search for the global optimum. The algorithms used to solve these problems have to be able to perform efficient global optimization.

In general, the solution algorithms are based on two types of methods:

- (1) deterministic methods, in which the solution strategy is driven by well-identified rules, with no random components; and,

- (2) probability-based methods, whose evolution depends on random choices carried out during the solution process.

If the nature and size of the problem enable convenient formulations (e.g., linearizing the non-linear components by means of piecewise linear representations in a convex problem structure), some exact deterministic methods can reach the global optimum under specific data representations. However, in general, the structure of the problems can be so complex as to make it impossible or impracticable to use methods that guarantee reaching the global optimum. Furthermore, in some cases, even the structure of the solution space is unknown, which requires a specific approach to obtain information from the solutions themselves. In these cases, the use of metaheuristics is a viable approach.

What is a metaheuristic? In short, the term heuristic identifies a tool that helps us discover ‘something’. The prefix meta is typically added to indicate the presence of a higher-level strategy that drives the search for solutions. Metaheuristics may also depend on the specific problem [1]. Many metaheuristics are based on translating the representation of natural phenomena or physical processes into computational tools [2].

Metaheuristic solution methods are generally based on one of the following mechanisms:

- (a) Single solution update: a succession of solutions is calculated, each time updating the solution only if the new one satisfies a predefined criterion. These methods are also called trajectory methods.

- (b) Population-based search: many entities are simultaneously sent in parallel to solve the same problem. Subsequently, the collective behavior can be modeled to link the different entities with each other, and, in general, the best solution is maintained for the next phase of the search.
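To make the two mechanisms concrete, the following Python sketch contrasts them on a toy objective. The test function `sphere`, the improvement-only acceptance rule and the ‘move towards the best’ rule used to link the individuals are illustrative assumptions, not taken from any specific metaheuristic.

```python
import random

def sphere(x):
    """Toy objective to be minimized (hypothetical test function, optimum at 0)."""
    return sum(v * v for v in x)

def trajectory_search(f, x0, iters=2000, step=0.1, seed=0):
    """(a) Single solution update: one current solution is replaced only when
    the candidate satisfies the acceptance criterion (here: strict improvement)."""
    rng = random.Random(seed)
    x, fx = list(x0), f(x0)
    for _ in range(iters):
        y = [v + rng.gauss(0.0, step) for v in x]   # neighbouring solution
        fy = f(y)
        if fy < fx:                                 # predefined acceptance criterion
            x, fx = y, fy
    return x, fx

def population_search(f, dim, pop_size=20, iters=200, step=0.1, seed=0):
    """(b) Population-based search: several individuals search in parallel and
    are linked through the best solution found so far, which is always kept."""
    rng = random.Random(seed)
    pop = [[rng.uniform(-5.0, 5.0) for _ in range(dim)] for _ in range(pop_size)]
    best = min(pop, key=f)
    for _ in range(iters):
        new_pop = []
        for p in pop:
            u = rng.random()
            # move towards the current best (collective behaviour) plus random noise
            y = [pi + u * (bi - pi) + rng.gauss(0.0, step) for pi, bi in zip(p, best)]
            new_pop.append(y if f(y) < f(p) else p)
        pop = new_pop
        cand = min(pop, key=f)
        if f(cand) < f(best):
            best = cand                             # best solution kept for the next phase
    return best, f(best)

x_traj, f_traj = trajectory_search(sphere, [3.0, 3.0])
x_pop, f_pop = population_search(sphere, dim=2)
print(f"trajectory: {f_traj:.4f}  population: {f_pop:.4f}")
```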

Detailed surveys of single solution-based and population-based metaheuristics are presented (among others) in [3,4,5].

From another point of view, when considering the number of optimization objectives, a distinction can be made among:

- (i) Single objective optimization, in which there is only one objective to be minimized or maximized.

- (ii) Multi-objective optimization, in which there are two or more objectives to be minimized or maximized. Multi-objective optimization tools are significant for assisting decision-making processes when the objectives conflict with each other. In this case, an approach based on Pareto-dominance concepts becomes useful. In this approach, a solution is non-dominated when no other solution exists that is at least as good in all of the individual objective functions and strictly better in at least one of them. The set of non-dominated solutions forms the Pareto front, which contains the compromise solutions among which the decision-maker can choose the preferred one. If the Pareto front is convex, the weighted sum of the objectives can be used to track the compromise solutions. However, in general, the Pareto front may have a non-convex shape, which calls for appropriate solvers to construct it. During the solution process, the best-known Pareto front is updated until a specific stop criterion is satisfied. The best-known Pareto front should converge to the true Pareto front (which could be unknown). The solvers need to balance convergence (i.e., approaching a stable Pareto front) with diversity (i.e., keeping the solutions spread along the Pareto front and avoiding their concentration in limited zones). Diversity is represented by estimating the density of the solutions located around a given solution. For this purpose, a dedicated parameter, called crowding distance, is defined as the average distance between the given solution and the closest solutions belonging to the Pareto front (the number of closest solutions considered is user-defined).

- (iii) Many-objective optimization, a subset of multi-objective optimization in which, conventionally, there are more than two objectives. This distinction is important, as some problems that are reasonably solvable in two dimensions, such as finding a balance between convergence and diversity, become much harder to solve in more than two dimensions. Moreover, as the number of objectives increases, it becomes intrinsically more difficult to visualize the solutions in a way that is convenient for the decision-maker. The main challenges in many-objective optimization are summarized in [6]. When the number of objectives increases, the number of non-dominated solutions increases sharply, even reaching situations in which almost all of the solutions become non-dominated [7]. This considerably slows down the solution process in methods that use Pareto dominance as a criterion to select the solutions. A large number of non-dominated solutions may also require increasing the size of the population used in the solution method, which again results in a slower solution process. Finally, the calculation of the hypervolume as a metric for comparing the effectiveness of the Pareto front construction of different methods [8] is geometrically simple in two dimensions, but it becomes progressively harder [9], with the computational burden growing exponentially as the number of objectives increases [10].
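The Pareto-dominance notions above can be sketched in a few lines of Python for minimization problems. The density measure follows the definition given in the text (average distance to the k closest front members, with k user-defined), which differs from some other crowding-distance formulations; the two-objective hypervolume function illustrates why this metric is geometrically simple in two dimensions. All names and data are hypothetical.

```python
import math

def dominates(a, b):
    """True if a Pareto-dominates b (all objectives minimized): a is no worse
    than b in every objective and strictly better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def non_dominated(points):
    """Non-dominated subset of a list of objective vectors (best-known front)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]

def density_distance(front, k=2):
    """Average Euclidean distance from each front member to its k closest
    neighbours on the front (k is user-defined)."""
    result = []
    for i, p in enumerate(front):
        dists = sorted(math.dist(p, q) for j, q in enumerate(front) if j != i)
        nearest = dists[:k]
        result.append(sum(nearest) / len(nearest) if nearest else math.inf)
    return result

def hypervolume_2d(points, ref):
    """Area dominated by the non-dominated points and bounded by the reference
    point ref (two objectives, both minimized)."""
    front = sorted(p for p in non_dominated(points) if p[0] < ref[0] and p[1] < ref[1])
    area, prev_f2 = 0.0, ref[1]
    for f1, f2 in front:          # f1 increases, so f2 decreases along the front
        area += (ref[0] - f1) * (prev_f2 - f2)   # one rectangular strip per point
        prev_f2 = f2
    return area

objectives = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0), (2.5, 2.5), (3.5, 3.5)]
front = non_dominated(objectives)
print(front)                                    # [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0)]
print(density_distance(front, k=1))             # each member is sqrt(2) from its closest neighbour
print(hypervolume_2d(objectives, (4.0, 4.0)))   # 6.0
```

In two dimensions, sorting the front by the first objective turns the hypervolume into a sum of rectangular strips; in higher dimensions the dominated region no longer decomposes so simply, which is consistent with the growing computational burden mentioned above.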

For multi-objective and many-objective problem formulations, the metaheuristic approach has gained momentum, because of the issues that exist in the application of gradient search and numerical programming methods. However, a number of issues appear, mainly concerning the characteristics of the search space (such as non-convexity and multimodality), and the presence of discrete non-uniform Pareto fronts [11].

The above indications set up the framework of analysis used in this paper. The main aims are to start from the concepts underlying the formulation of metaheuristics and to discuss a number of both correct and inappropriate practices found in the literature. Some details are provided on applications in the power and energy systems domain, in which hundreds of papers based on the use of metaheuristics for solving optimization problems have been published.

The specific contributions of this paper are:

- A systematic analysis of the state of the art regarding the main issues on the convergence of metaheuristics, and the comparisons among metaheuristic algorithms with suitable metrics for single-objective and multi-objective optimization problems.

- The identification of a set of underlying principles that explain the characteristics of the various metaheuristics and can be used to search for similarities and complementarities in the definition of metaheuristic algorithms.

- The indication of some pitfalls and inappropriate statements sometimes found in literature contributions on global optimization through metaheuristic algorithms, which partly foster the proliferation of articles on the application of metaheuristics that are not always justified by a rigorous methodological approach, leading to an almost uncontrollable ‘rush to heuristics’.

- The discussion of the characteristics of some problems in the power and energy system domain that are solved with metaheuristic optimization, highlighting some problem-related customizations of the classical versions of the metaheuristic algorithms.

- The indication of some hints for preparing sound contributions on the application of metaheuristic algorithms to power and energy system problems with one or more objective functions, using statistically significant and sufficiently strong metrics for comparing the solutions, in such a way as to mitigate the ‘rush to heuristics’.

The next sections of this paper are organized as follows. Section 2 summarizes the underlying principles that can be found in the construction of metaheuristic algorithms. Section 3 discusses the application of metaheuristic algorithms to specific problems for power and energy systems. Section 4 recalls the convergence properties of some metaheuristics. Section 5 provides a critical discussion and new insights on the comparison of metaheuristic algorithms. Section 6 deals with the hybridization of the metaheuristics. Section 7 addresses the use of metaheuristics for solving multi-objective problems. Section 8 discusses the effectiveness of metaheuristic-based optimization, pointing out a number of weak statements that should not appear in scientific contributions. The last section contains the Conclusions, which include specific guidelines for preparing sound contributions on the application of metaheuristic algorithms to power and energy system problems.

2. Materials and Methods

2.1. Evolution of the Metaheuristics

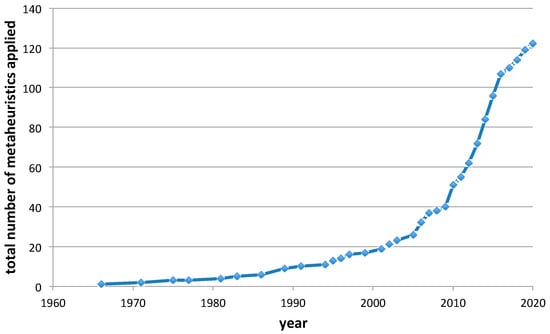

The number of metaheuristics has progressively increased over time. New algorithms appear each year, and it is not clear-cut whether these algorithms bring new content to research on evolutionary computation. Table A1 in Appendix A reports a non-exhaustive list of over one hundred metaheuristics that have been applied in the power and energy systems field, together with the corresponding references (the years indicated refer to the first date of publication of relevant articles or books). Figure 1 shows the corresponding number of metaheuristics over time. The number of metaheuristics that appeared in recent years is underestimated, as some recent metaheuristics (not indicated) have not yet found application in power and energy systems. Moreover, the list in Table A1 refers to the basic versions of the metaheuristics only, without accounting for the proposed variants and hybridizations among heuristics; including them would raise the count considerably. However, the rush to apply a new metaheuristic to all engineering problems is a vulnerable point for scientific research [12], especially when each “new” method or variant applied to a given problem is claimed to be the best method, purportedly showing its superiority with respect to any other existing method. Apparently, this ‘rush to heuristics’ is producing hundreds of articles, most of which are questionable in terms of the methodological advances they provide to the evolutionary computation field.

Figure 1.

Number of metaheuristics available (variants and hybrid versions excluded).

The need to better understand the characteristics of the various algorithms has prompted specific discussions since the early phase of the development of new algorithms. Two decades ago, the unified view proposed in [13] started from the observation that the implementations of the solvers were increasingly similar. This unified view was introduced under the name Adaptive Memory Programming (AMP), synthesizing a series of basic steps of the solution procedure valid for most metaheuristics (AMP is not applicable to single-update methods, such as Simulated Annealing [14]):

- (1) store a set of solutions;

- (2) construct a provisional solution using the available data;

- (3) improve the provisional solution with local search or another algorithm; and,

- (4) update the set of available solutions with the new solution.

These steps indicate four basic principles that are used to set up a metaheuristic algorithm, namely, memory (i.e., storage of information), a constructive mechanism, a local search strategy, and a mechanism for solution update. Taillard et al. [13] consider memory as a key principle for describing the possible similarities between the algorithmic structures of metaheuristics. Indeed, memory is fundamental in the definition of metaheuristics. However, memory is a general term with different meanings for different algorithms. As such, memory is not considered here to be sufficiently specific to serve as a detailed underlying principle, and further underlying principles are used to represent the characteristics of the various types of metaheuristics.
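As a sketch of how the four AMP steps map onto code, the Python loop below keeps a small memory of solutions, builds a provisional solution from it, improves it by local search and updates the memory. The toy bit-string objective and the helpers `crossover_construct` and `bitflip_improve` are hypothetical placeholders for problem-specific components.

```python
import random

def amp(f, construct, improve, initial, iters=200, memory_size=10, seed=0):
    """Adaptive Memory Programming skeleton (minimization of f)."""
    rng = random.Random(seed)
    memory = sorted(initial, key=f)[:memory_size]                  # (1) store a set of solutions
    for _ in range(iters):
        provisional = construct(memory, rng)                       # (2) constructive mechanism
        improved = improve(provisional, f, rng)                    # (3) local search
        memory = sorted(memory + [improved], key=f)[:memory_size]  # (4) update the stored set
    return memory[0]

# --- hypothetical problem-specific components for a toy binary problem ---
def count_zeros(x):
    """Toy objective to minimize: number of zeros in the bit string."""
    return x.count(0)

def crossover_construct(memory, rng):
    """Build a provisional solution by mixing two stored solutions bit by bit."""
    a, b = rng.sample(memory, 2)
    return [ai if rng.random() < 0.5 else bi for ai, bi in zip(a, b)]

def bitflip_improve(x, f, rng, tries=5):
    """Simple local search: keep single-bit flips that improve f."""
    x = list(x)
    for _ in range(tries):
        y = list(x)
        i = rng.randrange(len(y))
        y[i] = 1 - y[i]
        if f(y) < f(x):
            x = y
    return x

init_rng = random.Random(1)
initial = [[init_rng.randint(0, 1) for _ in range(30)] for _ in range(10)]
best = amp(count_zeros, crossover_construct, bitflip_improve, initial)
print(count_zeros(best), min(count_zeros(s) for s in initial))
```

Because the memory is sorted and truncated after each update, the best stored solution is never lost, which also shows why elitism can be regarded as a form of memory.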

2.2. Underlying Principles

Each metaheuristic algorithm applies specific mechanisms in the solution procedure. The presence of a multitude of algorithms raises a fundamental question: are all of the metaheuristic algorithms used really different from each other?

To address this issue, the solution procedures have been revisited by identifying a set of underlying principles that form a common basis for the various methods [15]. At the same time, these principles capture the structural differences among the methods.

The following list of underlying principles has been identified; their descriptions also highlight some aspects related to typical issues in the power and energy systems domain:

- parallelism;

- acceptance;

- elitism;

- selection;

- decay (or reinforcement);

- immunity;

- self-adaptation; and,

- topology.

A brief description of these principles follows.

2.3. Parallelism

The parallelism principle appears in population-based search, in which multiple entities are sent in parallel to perform the same task, and the obtained results are then compared. On the basis of this comparison, further principles are applied to determine the evolution of the individuals within the population or to create new populations.

2.4. Acceptance

The principle of acceptance appears in three ways:

- In the temporary acceptance of solutions that worsen the objective function, with the rationale of broadening the search space.

- In the treatment of the constraints. The constraints can be handled in two different ways. The first way is to discard all solutions in which any violation appears. This way is applied to algorithms that use a non-penalized objective function, in which the initial conditions have to correspond to a feasible solution (for single-update methods) or to feasible solutions only (for population-based methods). The second way is to use a penalized objective function, which makes it possible to assign a numerical value to any solution and to avoid discarding any solution. In this case, all solutions are automatically accepted, and the initial conditions could correspond to infeasible solutions. The penalty factors used in the penalized objective functions have to be sufficiently high to give high values to the solutions with violations. However, if the penalty factor is too high, very high values could appear for too many solutions, which makes it difficult to drive the search towards an efficient exploration of the search space.

- In the introduction of a threshold, so that only solutions that improve the current best solution by at least the threshold value are accepted. This could help to avoid numerical issues in the comparison between values resulting from previous calculations, e.g., when the same number is represented in different ways depending on numerical precision.
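The constraint-handling and threshold forms of acceptance can be sketched as small Python helpers. The quadratic penalty on the constraint violations, the penalty factor `rho`, the toy constraint and the threshold value are assumptions chosen for illustration; the text does not prescribe a specific penalty form.

```python
def penalized(objective, constraints, rho):
    """Penalized objective f(x) + rho * sum(max(0, g(x))**2) for constraints
    written as g(x) <= 0: every solution gets a finite value, none is discarded."""
    def fp(x):
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return objective(x) + rho * violation
    return fp

def feasible(x, constraints):
    """Non-penalized alternative: accept only solutions without any violation."""
    return all(g(x) <= 0.0 for g in constraints)

def improves_by_threshold(f_new, f_best, threshold=1e-9):
    """Accept a new best only if it improves by at least the threshold
    (minimization), so round-off differences are not taken as improvements."""
    return f_new < f_best - threshold

def objective(x):            # hypothetical objective to minimize
    return x * x

def g_lower_bound(x):        # hypothetical constraint x >= 1, written as 1 - x <= 0
    return 1.0 - x

constraints = [g_lower_bound]
fp = penalized(objective, constraints, rho=100.0)
print(fp(2.0), fp(0.5))                                        # 4.0 25.25
print(feasible(2.0, constraints), feasible(0.5, constraints))  # True False
print(0.3 < 0.1 + 0.2, improves_by_threshold(0.3, 0.1 + 0.2))  # True False
```

The last line shows the purpose of the threshold: `0.1 + 0.2` and `0.3` differ only by round-off, so a plain comparison would report `0.3` as an improvement, whereas the thresholded comparison does not.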

2.5. Elitism

In iterative population-based methods (in which multiple individuals are generated at the same iteration from probability-based criteria), if no specific action is taken, the best solution may be lost when passing from one iteration to the next. The basic versions of metaheuristics (such as simulated annealing, genetic algorithms, and others) privilege the randomness of the search and do not contain mechanisms to preserve the best solutions. To avoid this, the elitism principle is applied by storing the individual with the best objective function found so far and passing it from one iteration to the next. The best solution can be used as a reference individual to form other modified solutions, and it is immediately updated when a better solution is found. More extensively, the elitism principle can also be applied to more than one individual, passing an élite group of solutions to the next iteration. The elitism principle has proven very effective in practical applications. For the elitist versions of some metaheuristics, it has been possible to prove convergence to the global optimum under specified conditions (see Section 4).
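A minimal sketch of elitism in Python follows, assuming a mutation-only evolutionary step with uniform parent choice (a simplification for illustration; the function names and the test function are hypothetical). The `n_elite` best individuals are copied unchanged into the next population, so the best objective value recorded in `history` can never increase from one iteration to the next.

```python
import random

def next_generation(pop, f, mutate, rng, n_elite=1):
    """One generation with elitism (minimization of f)."""
    ranked = sorted(pop, key=f)
    elite = [list(x) for x in ranked[:n_elite]]        # élite group copied unchanged
    offspring = [mutate(rng.choice(ranked), rng) for _ in range(len(pop) - n_elite)]
    return elite + offspring

def gaussian_mutation(x, rng, step=0.5):
    """Hypothetical mutation operator: Gaussian perturbation of every variable."""
    return [v + rng.gauss(0.0, step) for v in x]

def sphere(x):
    """Toy objective to be minimized."""
    return sum(v * v for v in x)

rng = random.Random(0)
pop = [[rng.uniform(-5.0, 5.0) for _ in range(3)] for _ in range(10)]
history = [min(sphere(p) for p in pop)]
for _ in range(50):
    pop = next_generation(pop, sphere, gaussian_mutation, rng)
    history.append(min(sphere(p) for p in pop))
print(history[0], history[-1])
```

Setting `n_elite=0` removes the guarantee: the best value may then get worse between iterations, which is the loss of the best solution described above.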

2.6. Selection

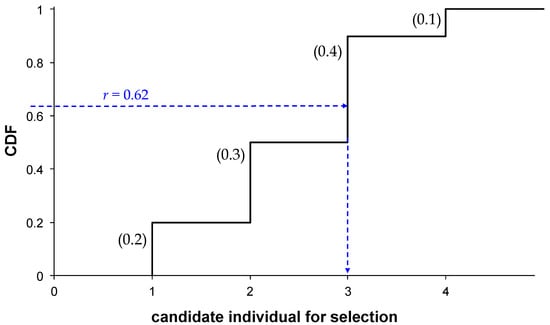

In a probability-based method, a mechanism has to be identified to extract a number of individuals at random from an available population, possibly associating weights with the probabilistic choices. In particular, for problems with variables described in a discrete way, the extraction mechanism follows the conventional way of extracting a point from a given probability distribution. The Cumulative Distribution Function (CDF) is constructed by considering a quality measure (fitness) of the solutions, expressed in a normalized way, so that the individuals corresponding to better values of the objective function have higher fitness (hence, a higher probability of being chosen). For example, let us consider a set of M individuals, whose objective function values are $f_1, \dots, f_M$, and the objective function has to be minimized [16]. The fitness of each individual is then defined in a normalized way, so that the fitness values sum to unity and lower objective function values correspond to higher fitness. Subsequently, a random number r is extracted from a uniform probability distribution in [0, 1] and is entered on the vertical axis of the CDF. The individual corresponding to the discrete position on the horizontal axis is then selected. Figure 2 exemplifies the situation, with four individuals and the related fitness values of 0.2, 0.3, 0.4, and 0.1, respectively. By extracting a random number (e.g., 0.62), individual number 3 is selected, because 0.62 falls between the cumulative values 0.5 and 0.9.

Figure 2.

Random selection from Cumulative Distribution Function (CDF).

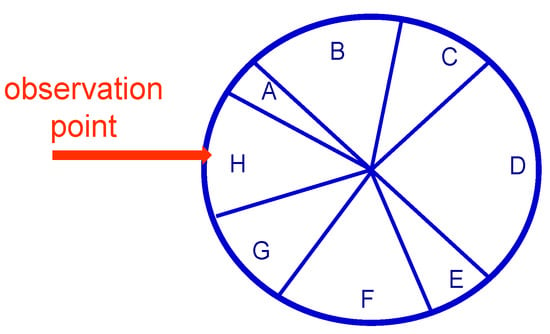

This method is equivalent to the so-called biased roulette wheel, in which the slices have different amplitudes (proportional to the fitness associated with each individual). The selected individual is the one indicated by a fixed observation point when the roulette wheel stops. In the example of Figure 3, individual D has the largest probability of being selected, but any individual can be selected (e.g., individual H is selected in Figure 3).

Figure 3.

Random selection from biased roulette wheel.
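The CDF-based extraction and the biased roulette wheel can be sketched in Python as follows. The sketch takes the fitness values as input (the example values 0.2, 0.3, 0.4 and 0.1 are those of Figure 2) and returns the 1-based position of the selected individual; the function names are illustrative.

```python
import bisect
import itertools
import random

def cdf_select(fitness, r):
    """Select an individual by inverting the discrete CDF of the fitness values.
    fitness: non-negative fitness values; r: random number in [0, 1).
    Returns the 1-based position of the selected individual."""
    cdf = list(itertools.accumulate(fitness))          # cumulative fitness, last value = total
    return bisect.bisect_right(cdf, r * cdf[-1]) + 1   # first position whose CDF exceeds r

def roulette_wheel(fitness, rng):
    """Biased roulette wheel: the same as CDF selection with a uniform random r."""
    return cdf_select(fitness, rng.random())

fitness = [0.2, 0.3, 0.4, 0.1]
print(cdf_select(fitness, 0.62))                       # 3, since 0.5 < 0.62 < 0.9

rng = random.Random(42)
draws = [roulette_wheel(fitness, rng) for _ in range(100_000)]
print([round(draws.count(k) / len(draws), 3) for k in range(1, 5)])  # close to the fitness values
```

The standard library offers the same mechanism through `random.choices(population, weights=fitness)`.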

2.7. Decay (or Reinforcement)

The decay principle may be applied to enable larger initial flexibility in the application of the method, followed by progressive restrictions of that flexibility. A direct example is the simulated annealing method, in which a decay rate (the cooling rate) is applied to the temperature parameter that drives the external cycle. Decay is typically implemented by using a multiplicative factor lower than unity, applied at successive iterations. In some cases, reinforcement is applied in a similar way by using a multiplicative factor higher than unity.
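The decay principle in simulated annealing can be sketched as follows, assuming a geometric cooling schedule, a Metropolis acceptance rule in the inner cycle and a hypothetical one-dimensional multimodal test function. The initial temperature `t0`, the cooling rate `alpha` and the cycle lengths are illustrative values, not recommended settings.

```python
import math
import random

def simulated_annealing(f, x0, neighbour, t0=10.0, alpha=0.9,
                        n_outer=60, n_inner=50, seed=0):
    """Simulated annealing with geometric decay of the temperature.
    Outer cycle: T <- alpha * T with alpha < 1 (the cooling rate).
    Inner cycle: a worse solution is accepted with probability exp(-delta / T),
    which becomes smaller as T decays."""
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best, fbest = x, fx
    t = t0
    temps = []
    for _ in range(n_outer):
        for _ in range(n_inner):
            y = neighbour(x, rng)
            fy = f(y)
            delta = fy - fx
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                x, fx = y, fy                 # possibly a temporary worsening
                if fx < fbest:
                    best, fbest = x, fx       # best solution found so far
        temps.append(t)
        t *= alpha                            # decay principle
    return best, fbest, temps

def rastrigin_1d(x):
    """Hypothetical multimodal test function, global minimum 0 at x = 0."""
    return x * x + 10.0 * (1.0 - math.cos(2.0 * math.pi * x))

def gaussian_step(x, rng):
    return x + rng.gauss(0.0, 0.5)

best_x, best_f, temps = simulated_annealing(rastrigin_1d, 4.0, gaussian_step)
print(f"best x = {best_x:.3f}, f = {best_f:.4f}, final T = {temps[-1]:.4f}")
```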

The decay principle can also reduce the strength of some search paths that are less convenient than others or have not been recently visited. This application has been introduced in the ant colony optimization algorithms [17], in which the paths can also be reinforced if they were found to be convenient. Relative decay has also been considered in the hyper-cube ant colony optimization framework [18], in which decay or reinforcement are first applied, and then the overall outcomes are normalized to fit a hyper-cube with dimensions limited inside the interval [0, 1].
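A sketch of pheromone decay and reinforcement for solutions coded as binary vectors follows. It assumes the convex-combination update usually associated with the hyper-cube framework: every pheromone value decays by the factor (1 - rho), and the reinforcement is a quality-weighted average of the solutions, normalized by the total quality, so that all values remain in [0, 1]. The evaporation rate `rho`, the solutions and the quality weights are hypothetical.

```python
def hypercube_update(tau, solutions, quality, rho=0.1):
    """Pheromone update keeping every value in [0, 1].
    tau: pheromone values, one per solution component.
    solutions: binary vectors (1 = component used by that solution).
    quality: positive weights of the solutions (larger is better)."""
    total = sum(quality)
    # normalized, quality-weighted use of each component (a value in [0, 1])
    reinforcement = [
        sum(q * s[i] for s, q in zip(solutions, quality)) / total
        for i in range(len(tau))
    ]
    # decay by (1 - rho), then reinforce by rho times the normalized contribution
    return [(1.0 - rho) * t + rho * r for t, r in zip(tau, reinforcement)]

tau = [0.5, 0.5, 0.5]
solutions = [[1, 0, 1], [1, 1, 0]]   # hypothetical solutions built by two ants
quality = [3.0, 1.0]                 # hypothetical quality weights
for _ in range(50):
    tau = hypercube_update(tau, solutions, quality)
print([round(t, 3) for t in tau])
```

Component 0, used by both solutions, moves towards 1; component 2, used by the better solution, is reinforced more than component 1, which is used only by the weaker one.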

2.8. Immunity

Immunity is applied by identifying some properties of the solutions, where such properties lead to satisfactory configurations. Immunity gives priority to the solutions that have characteristics similar to those properties.

2.9. Self-Adaptation

Self-adaptation consists of changing the parameters of the algorithms in an automatic way, depending on the evolution of the procedure.
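As one concrete instance of automatic parameter change, the Python sketch below applies the 1/5 success rule to the mutation step of a (1+1) evolution strategy. This rule is a classical adaptive scheme chosen here only for illustration (it is not discussed in the text); the window length and the factor 0.82 are assumed values.

```python
import random

def sphere(x):
    """Toy objective to be minimized."""
    return sum(v * v for v in x)

def one_plus_one_es(f, x0, sigma=1.0, iters=500, window=20, factor=0.82, seed=0):
    """(1+1) evolution strategy whose step size sigma is changed automatically:
    after each window of iterations, sigma grows if more than 1/5 of the
    mutations succeeded and shrinks if fewer did."""
    rng = random.Random(seed)
    x, fx = list(x0), f(x0)
    successes = 0
    sigmas = [sigma]
    for k in range(1, iters + 1):
        y = [v + rng.gauss(0.0, sigma) for v in x]
        fy = f(y)
        if fy < fx:
            x, fx = y, fy
            successes += 1
        if k % window == 0:
            rate = successes / window
            if rate > 0.2:
                sigma /= factor        # many successes: take larger steps
            elif rate < 0.2:
                sigma *= factor        # few successes: take smaller steps
            successes = 0
            sigmas.append(sigma)
    return x, fx, sigmas

x_best, f_best, sigmas = one_plus_one_es(sphere, [10.0] * 5)
print(f"f = {f_best:.3e}, final sigma = {sigmas[-1]:.3e}")
```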

2.10. Topology

The topology principle is applied when the problem under analysis needs to satisfy specific constraints, such as being defined on a graph or meeting connectivity requirements. A relevant example is the graph corresponding to the operational configuration of an electrical distribution system. The principle of topology is linked, for example, to the generation of radial structures during the execution of the algorithms. The representation of the topology is associated with how the information regarding the connections is coded, which can be more or less effective in ensuring that only radial structures are progressively generated. For example, for an electrical network, the information coding is typically carried out in one of these ways:

- (a) creating the list of the open branches;

- (b) forming the list of the loops and identifying the branches of each loop with a progressive number; or,

- (c) using a binary string of length equal to the number of branches, containing the status (on/off) of the branches.
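With coding (c), a candidate configuration still has to be checked for radiality. The sketch below, with a hypothetical four-node network, converts coding (a) into coding (c) and tests whether the closed branches form a spanning tree (exactly N - 1 closed branches and no loop). It assumes that every node must be supplied and ignores practical details such as multiple substations.

```python
def open_list_to_binary(open_branches, n_branches):
    """Coding (a) -> coding (c): 1 = closed (in service), 0 = open."""
    status = [1] * n_branches
    for b in open_branches:
        status[b] = 0
    return status

def is_radial(n_nodes, branches, status):
    """True if the closed branches form a spanning tree: exactly n_nodes - 1
    closed branches and no loop (checked with union-find), hence all nodes connected."""
    closed = [br for br, s in zip(branches, status) if s == 1]
    if len(closed) != n_nodes - 1:
        return False
    parent = list(range(n_nodes))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]   # path halving
            i = parent[i]
        return i

    for u, v in closed:
        ru, rv = find(u), find(v)
        if ru == rv:                        # this branch would close a loop
            return False
        parent[ru] = rv
    return True

branches = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]   # hypothetical network
config_c = open_list_to_binary([3, 4], len(branches))
print(config_c)                                                             # [1, 1, 1, 0, 0]
print(is_radial(4, branches, config_c))                                     # True
print(is_radial(4, branches, open_list_to_binary([0, 1], len(branches))))   # False
```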

2.11. Remarks on the Underlying Principles

The identification of the underlying principles has clarified that the term memory can be interpreted in different ways, e.g., the pheromone in ant colony optimization, the previous population in genetic algorithms, the list of past moves in tabu search, and so on. Elitism itself is a form of memory.

In the use of metaheuristics, a balance is generally sought between exploration and exploitation of the search space. Exploration means the ability to reach all of the points in the search space, while exploitation refers to the use of knowledge from the solutions that are already found to drive the search towards more convenient regions. The underlying principles may affect both exploration and exploitation in different ways. For example, the selection principle applied to a given population could mainly refer to exploitation [19], as it drives the search towards the choice of the best individuals [20]. However, selection may, to a given extent, also refer to exploration, by varying the width of the population involved [21].

Finally, the synthesis of the underlying principles can also be a way to generate new metaheuristic algorithms or variants. Even the automatic generation of algorithms could be considered, for which there is a wide literature referring to deterministic and other algorithms [22]. Conceptually, there are different ways to define new metaheuristic algorithms:

- (a) Taking existing algorithms and constructing new ones by changing the context and the nomenclature; this practice does not advance the state of the art and only adds entropy to the evolutionary computation domain [12].

- (b) Synthesizing the underlying principles and combining them to obtain new algorithms; also in this case, the result is only a recombination of existing principles, which in general may not add significant contributions and would just feed the ‘rush to heuristics’.

- (c) Generating new algorithms by using a set of components taken from promising approaches [23]. This line of research is also useful for identifying appropriate reusable portions of subprograms [24], and it also leads to the use of hyper-heuristics to select or generate (meta-)heuristics by exploring a search space of heuristics in order to identify the most effective ones [25,26].

Some useful variants can be found when the existing metaheuristics are customized in order to solve specific problems, incorporating specific constraints, as indicated in the next section.

3. Specific Problems for Power and Energy Systems

3.1. Main Problems Solved with Metaheuristic Algorithms

In the power and energy domain, metaheuristic optimization is widely used to solve many problems referring to operation, planning, control, forecasting, reliability, security, and demand management. A set of typical problems solved with metaheuristic optimization has been considered in [27,28,29], including unit commitment, economic dispatch, optimal power flow, distribution system reconfiguration, power system planning, distribution system planning, load forecasting, and maintenance scheduling. Table 1 shows a selection of the metaheuristics most applied to these typical problems. From this set of problems, it emerges that the genetic algorithm is the most used or mentioned method for all of the problems, followed by particle swarm optimization (or by simulated annealing in two cases, and by tabu search in another case). A further review of particle swarm optimization applications to power systems is presented in [30].

Table 1.

Metaheuristic algorithms most used to solve some power and energy systems problems [28,29].

Concerning the information coding, the most successful implementations of genetic algorithms do not use binary coding of the strings, but rather representations adapted to the application, with the crossover and mutation operators redefined accordingly [13]. However, binary coding schemes are still mainly used in power and energy system problems. Alternatively, evolutionary programming schemes, in which the binary values are replaced with integer or real numbers, are appropriate for specific problems.

Some specific examples are presented below to indicate how metaheuristics may be a viable alternative to (or a more successful option than) mathematical programming tools for the solution of large-scale optimization problems in the power and energy systems area. A general remark is that the size of the problem matters. If the size of the problem is limited, so that exhaustive search is practicable, or mathematical programming tools able to provide exact solutions can be used with a reasonable computational burden, then the use of a metaheuristic algorithm is not justified. The only exception is the case in which a metaheuristic algorithm is tested on a problem with a known global optimum to check its effectiveness in finding the global optimum before applying it to large-scale problems: if the algorithm fails to find the global optimum on a small-size problem after adequate testing (e.g., with hundreds or thousands of executions), then its implementation or parameter setting is likely to be ineffective.
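The testing practice described above can be sketched as follows: the global optimum of a small binary problem is found by exhaustive search, and a stochastic solver is run many times to estimate how often it reaches that optimum. The solver (a restarted bit-flip descent), the deceptive test function `trap` and the number of runs are hypothetical choices for illustration.

```python
import itertools
import random

def exhaustive_optimum(f, n_bits):
    """Global optimum of a small binary problem by enumerating all 2**n_bits cases."""
    return min(f(list(x)) for x in itertools.product((0, 1), repeat=n_bits))

def restarted_descent(f, n_bits, rng, restarts=3):
    """Hypothetical stochastic solver under test: first-improvement bit-flip
    descent from a few random starting points; returns the best value found."""
    best = float("inf")
    for _ in range(restarts):
        x = [rng.randint(0, 1) for _ in range(n_bits)]
        fx = f(x)
        improved = True
        while improved:
            improved = False
            for i in range(n_bits):
                y = x[:]
                y[i] = 1 - y[i]
                fy = f(y)
                if fy < fx:
                    x, fx, improved = y, fy, True
        best = min(best, fx)
    return best

def success_rate(solver, f, n_bits, runs=1000, seed=0, tol=1e-9):
    """Fraction of independent runs that reach the known global optimum."""
    f_star = exhaustive_optimum(f, n_bits)
    rng = random.Random(seed)
    hits = sum(solver(f, n_bits, rng) <= f_star + tol for _ in range(runs))
    return hits / runs

def trap(x):
    """Hypothetical deceptive function: global optimum at all ones (-n),
    strong local optimum at all zeros (-(n - 1))."""
    n, u = len(x), sum(x)
    return -n if u == n else -(n - 1 - u)

print(success_rate(restarted_descent, sum, 10))    # 1.0
print(success_rate(restarted_descent, trap, 10))
```

On the linear function `sum` (minimized at the all-zero string) the descent always succeeds, whereas on `trap` it is drawn towards the local optimum, so the estimated success rate is low: a result of this kind signals that the solver or its settings are not adequate before moving to large-scale problems.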

3.2. Unit Commitment (UC)

The UC problem consists of scheduling the generation units (typically thermal units) to serve the forecast demand in future periods (e.g., from one day to one week) while minimizing the total generation costs. The output is the start-up and shut-down schedule of these generation units. The problem has integer and continuous variables and a complex set of constraints, including time-dependent constraints for the units, such as minimum up and down times, start-up ramps, and time-dependent start-up costs. The UC problem has traditionally been solved with mathematical programming and stochastic programming tools [31]. However, these tools exhibit some drawbacks. For example, with dynamic programming, the computation time could become prohibitive for real-size systems, and time-dependent constraints are hard to implement successfully. Lagrangian relaxation has no problem with time-dependent constraints and optimizes each unit separately; thus, the dimension of the system is not an issue. The problem is solved using duality theory, maximizing the dual objective function for a given original problem. However, because of the non-convexity of the original problem, the solution of the dual problem cannot guarantee the feasibility of the primal problem, and the optimal values of the original and dual problems could be different. Robust optimization models with bi-level or three-level formulations are computationally less demanding than stochastic programming models, but they may lead to over-conservative solutions. A framework for comparing mathematical programming algorithms to solve the UC problem has been formulated in [32] and applied to three recently developed algorithms.

Metaheuristics have been successfully used to solve the UC problem to overcome these difficulties. First of all, the binary coding common to various metaheuristics is fully appropriate to represent the on/off status of the units. Accordingly, the information on the status of each unit is included in a binary string with length equal to the number of time intervals considered. This information coding naturally leads to the use of genetic algorithms [33], in which a unique string (called a chromosome) is constructed as the ordered succession of the strings referring to the individual generation units. The whole information on the scheduling is then available at each time. However, Kazarlis et al. [33] showed that the straightforward application of the basic version of the genetic algorithm does not lead to acceptable performance, and that specific problem-related operators have to be added to significantly enhance the performance of the algorithm. The need to define specific operators has also been confirmed in subsequent implementations, for example, in [34]. A further review of the application of metaheuristics to the UC problem is presented in [35].
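The chromosome construction described above can be sketched in Python: one on/off string of length equal to the number of time intervals per unit, concatenated in unit order. The schedule data are hypothetical, and the minimum up-time check is a hypothetical example of the problem-specific operators mentioned in the text; it ignores the unit status before the start of the horizon.

```python
def encode_schedule(schedule):
    """Concatenate the on/off strings of the units, in unit order, into one chromosome."""
    return [bit for unit in schedule for bit in unit]

def decode_chromosome(chromosome, n_units):
    """Split the chromosome back into one on/off string per unit."""
    horizon = len(chromosome) // n_units
    return [chromosome[u * horizon:(u + 1) * horizon] for u in range(n_units)]

def min_up_time_ok(status, min_up):
    """True if every completed 'on' run lasts at least min_up intervals
    (a run still in progress at the end of the horizon is not checked)."""
    run = 0
    for s in status:
        if s == 1:
            run += 1
        else:
            if 0 < run < min_up:
                return False
            run = 0
    return True

schedule = [                      # hypothetical 3 units x 6 time intervals
    [1, 1, 1, 1, 1, 1],
    [0, 1, 1, 1, 0, 0],
    [0, 0, 1, 0, 0, 0],
]
chromosome = encode_schedule(schedule)
print(chromosome)
print(decode_chromosome(chromosome, 3) == schedule)     # True
print([min_up_time_ok(u, 2) for u in schedule])         # [True, True, False]
```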

3.3. Economic Dispatch (ED)

Once the schedule of the generators has been fixed through UC, the share of the load supplied by each generation unit is determined through the ED, in such a way that the total generation cost is minimized. The ED problem is solved for each single time step, taking into account generation (power bounds) and transmission system (line capacities) constraints. In ED, a specific cause of non-linearity is the valve-point effect that appears in the input-output curve of the generation units [36].
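To show why the valve-point effect makes the ED cost non-smooth and non-convex, the sketch below uses a cost form widely used in the ED literature, a quadratic curve plus a rectified sinusoid, F(P) = a + b·P + c·P² + |e·sin(f·(Pmin - P))|. The unit data are hypothetical, and the exhaustive 1-MW grid search is practicable only because the example has two units.

```python
import math

def valve_point_cost(p, a, b, c, e, f, p_min):
    """Fuel cost of a unit with the valve-point effect: the rectified sinusoid
    adds ripples with kinks, so the cost is non-smooth and non-convex in p."""
    return a + b * p + c * p * p + abs(e * math.sin(f * (p_min - p)))

# Hypothetical unit data: (a, b, c, e, f, p_min, p_max), power in MW
UNITS = [
    (100.0, 2.0, 0.002, 50.0, 0.063, 100.0, 500.0),
    (120.0, 1.8, 0.003, 40.0, 0.098, 50.0, 400.0),
]

def dispatch_two_units(demand, step=1.0):
    """Exhaustive search on a grid: unit 1 produces p1, unit 2 covers the rest,
    and only combinations within both units' limits are evaluated."""
    (a1, b1, c1, e1, f1, lo1, hi1), (a2, b2, c2, e2, f2, lo2, hi2) = UNITS
    best = None
    p1 = lo1
    while p1 <= hi1:
        p2 = demand - p1                       # power balance
        if lo2 <= p2 <= hi2:
            cost = (valve_point_cost(p1, a1, b1, c1, e1, f1, lo1)
                    + valve_point_cost(p2, a2, b2, c2, e2, f2, lo2))
            if best is None or cost < best[0]:
                best = (cost, p1, p2)
        p1 += step
    return best

cost, p1, p2 = dispatch_two_units(600.0)
print(f"cost = {cost:.2f}, P1 = {p1:.0f} MW, P2 = {p2:.0f} MW")
```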

It is worth noting that in the UC problem the transmission system constraints are not considered, while in the ED problem some technical constraints (such as ramping limits) are not taken into account. The joint solution of UC and ED leads to the Network-Constrained Unit Commitment (NCUC) problem [37]. Its aims are to find (i) the time steps in which the different generators are in operation and (ii) the power output of all of these generators over the time horizon, taking into account power balance equations (equality constraints) and inequality constraints (such as minimum and maximum power, prohibited operating zones, multiple fuel options, transmission limits, etc.). The large-scale combinatorial nature of UC within the NCUC limits the possibility of using deterministic methods, due to the difficulty of incorporating the various constraints, and opens the possibility of using metaheuristic methods. Various traditional programming algorithms are used, in particular dynamic programming [38], the interior point method [39,40], and scenario-based decomposition methods applied to stochastic ED, e.g., using the asynchronous block iteration method to reduce the computational burden on multicore computational architectures [41]. Metaheuristic algorithms, mainly genetic algorithms [36,42] and particle swarm optimization [43], have been used in the last decades to solve the ED problem, addressing specific challenges due to the non-convexity of the domain of definition of the variables. Further customized solvers have been set up using hybridizations of mathematical programming and metaheuristics, e.g., interior point and differential evolution [44], or hybrid versions based on particle swarm optimization combined with other methods [45].

3.4. Optimal Power Flow (OPF)

The OPF aims to find the steady-state operating point at which the system under analysis runs optimally, using either single- or multi-objective formulations (which, beyond costs, may include environmental or network compensation aspects). The control variables (which affect voltages and active powers at specific nodes) have to be chosen to satisfy a number of constraints on the system components and operation. The OPF problem is a mixed-integer, non-linear, and non-convex problem. It can be formulated in different ways, depending on which characteristics are considered (e.g., including aspects related to reactive power). In particular, the Security Constrained Optimal Power Flow (SCOPF) is a widely used formulation, in which constraints related to contingencies are included in addition to those related to the network and the generators. Many tools have been adopted to solve the OPF problem, including a wide set of mathematical programming tools that are also used for challenging real-time OPF problems [46]. Among them, interior point methods (e.g., [47]) have emerged as some of the most efficient solvers.

However, the nature of the OPF problem makes metaheuristic algorithms suitable for providing effective solutions. Genetic algorithms, particle swarm optimization, evolutionary algorithms, and differential evolution are the most widely used metaheuristics [48]. Other methods come from well-designed hybridizations. For example, differential evolutionary particle swarm optimization (DEEPSO) [49] won the 2014 competition on the solution of OPF problems organized by the Working Group on Modern Heuristic Optimization (under the IEEE Power and Energy Society Analytic Methods in Power Systems). DEEPSO is a hybrid metaheuristic that combines the underlying principles of three heuristics (particle swarm optimization, evolutionary programming, and differential evolution) to construct an efficient tool. Its development followed well-studied criteria, which makes it an example of best practice in the design of metaheuristics.

3.5. Distribution System Reconfiguration (DSR)

The DSR problem concerns the selection, within a weakly meshed network, of the set of branches to keep open in order to (i) obtain a radial network and (ii) optimize a predefined objective (or multi-objective) function. The main constraints are the need to operate a radial network, the equality constraint on the power balance, and inequality constraints involving node voltages, branch currents, short-circuit currents, and others [50]. In this case, the discrete variables are the open/closed states of the network branches (or of the switches, with two switches per branch, located at the branch terminals). Binary coding of the information is straightforward: the length of the string equals the number of branches (or switches). Additional information, such as the branch list, can be added [51]. In real-size systems, the number of possible radial configurations is too high for all of them to be enumerated [52]. The structure of the problem makes it difficult to identify a neighborhood of the solutions and other regularities that could drive mathematical programming approaches. As such, metaheuristic algorithms are a viable approach to this problem.
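
Kirchhoff's matrix-tree theorem illustrates how fast the number of radial configurations grows. The theorem counts the spanning trees of a graph as any cofactor of its Laplacian matrix. The sketch assumes that every spanning tree of the network graph is an admissible radial configuration. Networks fed by several substations can be reduced to this case by merging the supply points into one node. The square-mesh test networks are hypothetical.

```python
from fractions import Fraction


def count_spanning_trees(n_nodes, branches):
    """Number of spanning trees (Kirchhoff's matrix-tree theorem), computed exactly.

    branches: (i, j) pairs with nodes 0..n_nodes-1; parallel branches count separately,
    self-loops are ignored.
    """
    lap = [[Fraction(0)] * n_nodes for _ in range(n_nodes)]
    for i, j in branches:
        if i != j:
            lap[i][i] += 1
            lap[j][j] += 1
            lap[i][j] -= 1
            lap[j][i] -= 1
    m = [row[1:] for row in lap[1:]]  # cofactor: drop the row and column of node 0
    size, det = n_nodes - 1, Fraction(1)
    for col in range(size):  # exact Gaussian elimination on rationals
        pivot = next((r for r in range(col, size) if m[r][col] != 0), None)
        if pivot is None:
            return 0  # singular reduced Laplacian: the network is not connected
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det
        det *= m[col][col]
        for r in range(col + 1, size):
            factor = m[r][col] / m[col][col]
            for k in range(col, size):
                m[r][k] -= factor * m[col][k]
    return int(det)


def grid_branches(n):
    """Branches of a hypothetical n-by-n meshed test network."""
    out = []
    for r in range(n):
        for c in range(n):
            if c + 1 < n:
                out.append((r * n + c, r * n + c + 1))
            if r + 1 < n:
                out.append((r * n + c, (r + 1) * n + c))
    return out


for n in range(2, 6):
    print(n * n, "nodes:", count_spanning_trees(n * n, grid_branches(n)), "radial configurations")
```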

The main issues for the DSR problem concern the implementation of the constraints. In particular, the radiality constraint is not always easy to incorporate into the metaheuristic algorithm. For some algorithms, such as simulated annealing, it is sufficient to exploit the branch-exchange mechanism. Starting from the list of open branches, an open branch is chosen at random and closed (which forms a loop). The loop is identified, and a closed branch within the loop is chosen at random and opened. In this way, the radial structure of the network is automatically preserved. For a genetic algorithm, however, the standard crossover and mutation operators do not in general preserve the radial network structure. Hence, the crossover and mutation operators, and the information coding itself [53], have to be suitably redefined to ensure that radiality is not lost during the solution process.
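
The branch-exchange move can be sketched as follows. The sketch assumes that branches are stored as (node, node) tuples without parallel branches, and that the closed branches initially form a spanning tree. The newly closed branch is excluded from the candidates to open, since opening it again would simply restore the previous configuration. The helper `is_radial` is included only to check that the move keeps the network radial.

```python
import random
from collections import defaultdict, deque


def tree_path(closed, src, dst):
    """Branches on the unique path from src to dst through the closed (radial) branches."""
    adj = defaultdict(list)
    for br in closed:
        i, j = br
        adj[i].append((j, br))
        adj[j].append((i, br))
    parent = {src: None}
    queue = deque([src])
    while queue:  # breadth-first search over the tree
        node = queue.popleft()
        if node == dst:
            break
        for nxt, br in adj[node]:
            if nxt not in parent:
                parent[nxt] = (node, br)
                queue.append(nxt)
    path, node = [], dst
    while parent[node] is not None:
        node, br = parent[node]
        path.append(br)
    return path


def branch_exchange(closed, opened, rng=random):
    """Close a random open branch, then open a random closed branch in the loop it creates."""
    closed, opened = set(closed), set(opened)
    to_close = rng.choice(sorted(opened))
    loop = tree_path(closed, *to_close)  # closed branches forming the loop with to_close
    to_open = rng.choice(sorted(loop))
    closed.remove(to_open)
    closed.add(to_close)
    opened.remove(to_close)
    opened.add(to_open)
    return closed, opened


def is_radial(n_nodes, closed):
    """True if the closed branches form a spanning tree (n-1 branches and no loop)."""
    if len(closed) != n_nodes - 1:
        return False
    root = list(range(n_nodes))

    def find(x):
        while root[x] != x:
            root[x] = root[root[x]]
            x = root[x]
        return x

    for i, j in closed:
        ri, rj = find(i), find(j)
        if ri == rj:
            return False
        root[ri] = rj
    return True


# Hypothetical 6-node weakly meshed network with two tie branches initially open.
closed = {(0, 1), (1, 2), (2, 3), (3, 4), (4, 5)}
opened = {(0, 5), (1, 4)}
closed, opened = branch_exchange(closed, opened, random.Random(1))
print(sorted(opened), is_radial(6, closed))
```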

Nowadays, the increasing share of distributed generation in distribution networks requires considering operating conditions based on time-variable load and generation profiles. This aspect also poses challenges for the creation of proper network samples for testing the algorithms [28]. In multi-objective problem formulations, more than one conflicting objective is considered, such as losses and reliability indices [54].

3.6. Transmission Network Expansion Planning (TNEP)

The general objective of the TNEP problem is the minimization of the costs related to the transmission infrastructure, sometimes combined with the planning of the generators connected to the system. Another objective is the reliability of the transmission system, considering the loss of load or the interruption costs. The actions to be taken can include the installation of new lines, the repowering of generators (or the addition of new generators), and the adoption of new technologies (for example, Flexible AC Transmission Systems, FACTS). The traditional solution was based on cost optimization over a given time period, considering a given set of fixed and variable costs. Today, solutions have to take into account many uncertainties in generation, demand, market conditions, and technology developments. Furthermore, external aspects (including vulnerability issues [55]) have an impact on transmission system reinforcement. This requires a multi-scenario analysis.

The optimal set of investments is chosen by considering the power balance and a set of inequality constraints. The power balance is an equality constraint, usually verified by calculating a DC power flow [56]. The inequality constraints usually refer to the maximum number of lines to be added, the capacity of each line, and the capability limits of the different generators. Where applicable, they also include a budget constraint on the financial resources available during the various planning stages. Further operation and security constraints can be introduced by considering electrical and natural gas networks [57], or the integration of wind systems, with a maximum wind capacity at each site and a maximum risk for wind power installation [58].
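
A DC power flow of the kind used to verify the power balance in the TNEP can be sketched as below. The reduced susceptance matrix B' is built from the line reactances, and B'θ = P is solved for the non-slack bus angles. Each line flow then follows as (θ_i − θ_j)/x_ij. The sketch assumes per-unit quantities, lossless lines, a connected network without parallel lines, and a single slack bus. The three-bus data and line ratings are hypothetical.

```python
def dc_power_flow(n_buses, lines, injections, slack=0):
    """Solve B' * theta = P for the non-slack buses and return (theta, line flows).

    lines: (from_bus, to_bus, reactance_pu); injections: net active power per bus (pu).
    The slack bus has theta = 0 and absorbs the mismatch.
    """
    b = [[0.0] * n_buses for _ in range(n_buses)]
    for i, j, x in lines:
        y = 1.0 / x
        b[i][i] += y
        b[j][j] += y
        b[i][j] -= y
        b[j][i] -= y
    keep = [k for k in range(n_buses) if k != slack]
    a = [[b[r][c] for c in keep] + [injections[r]] for r in keep]  # augmented [B' | P]
    n = len(keep)
    for col in range(n):  # Gaussian elimination with partial pivoting
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for k in range(col, n + 1):
                a[r][k] -= f * a[col][k]
    sol = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        sol[r] = (a[r][n] - sum(a[r][k] * sol[k] for k in range(r + 1, n))) / a[r][r]
    theta = [0.0] * n_buses
    for k, bus in enumerate(keep):
        theta[bus] = sol[k]
    flows = {(i, j): (theta[i] - theta[j]) / x for i, j, x in lines}
    return theta, flows


lines = [(0, 1, 0.1), (0, 2, 0.1), (1, 2, 0.1)]  # (from, to, reactance in pu)
injections = [1.5, -1.0, -0.5]                    # generation at bus 0, loads at buses 1, 2
theta, flows = dc_power_flow(3, lines, injections)
limits = {(0, 1): 0.8, (0, 2): 0.8, (1, 2): 0.8}  # hypothetical ratings (pu)
overloaded = [ln for ln, f in flows.items() if abs(f) > limits[ln]]
print({ln: round(f, 3) for ln, f in flows.items()}, overloaded)
```

In a TNEP search, a candidate plan would be evaluated by adding its lines to `lines` and re-checking the flows against the ratings. In this example, line (0, 1) carries about 0.83 pu and exceeds its hypothetical 0.8 pu rating.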

Early algorithms [59] used constructive heuristics, in which the network components are added one at a time, with sensitivity measures deciding which component to add next. These sensitivity measures are local and, as such, cannot drive the solution towards the global optimum; for large systems, the solution could reach a poor local optimum. To avoid these issues, the exploration of alternative expansion possibilities was successfully introduced when the solutions achieved were considered weak. When alternative solutions are considered, however, the number of solutions grows exponentially with the system size, and there are typically many local optima. Hence, metaheuristic algorithms become viable and effective for solving the TNEP. In particular, genetic algorithms have been widely used. For multi-objective formulations, the multi-objective versions of genetic algorithms and other metaheuristics are particularly useful in providing effective solutions.

3.7. Distribution System Planning (DSP)

The DSP problem includes expansion planning and operational planning procedures, the latter being either conventional or “active”. The difference between expansion planning and operational planning lies in the number of nodes of the system, which remains constant in operational planning, while it can change in expansion planning.

For expansion planning, different time horizons may be considered: short term (1–4 years), long term (5–20 years), and horizon-year planning (more than 20 years) [60]. Distribution expansion is a mixed-integer non-linear problem. Its binary variables represent either the installation of new devices or the upgrade of existing facilities. Its continuous variables mainly represent the time-variant quantities, e.g., the Distributed Generation (DG) profiles or the curtailed load [61]. Both expansion and operational planning aim to minimize the investment costs as well as the operational costs (usually losses and maintenance), subject to the technical and operational constraints.

The difference between conventional and active operational planning lies in the management of the DG. In the conventional case, the DG is installed and managed with a “fit and forget” approach, so it is represented with a constant power, without considering the generation profiles. Conversely, active operational planning investigates the impact of DG by considering its generation profiles (sometimes including their uncertainty) as well as several load profile scenarios. In both cases, usual investments, such as new conductors or new substation components, are also considered [62]. In expansion planning, the system operator may face an increase of the loads (or, more recently, the connection of new centralized power plants based on renewable energy sources), and thus additional electrical nodes have to be added. While the static approach determines what should be installed and where, the dynamic approach also specifies when the installation has to be made [63]. In the latter case, constraints on the time relationships between the investments should be taken into account. Another recently addressed problem is planning that includes resilience aspects, which must deal with rare events having a high impact [64,65]. In real-world applications, the optimal investment choice often requires considering a number of aspects (not only economic, but also social and environmental), which can be managed through multi-criteria approaches, as reviewed in [66].

Metaheuristic methods are easy to implement and are particularly useful for solving multi-objective problems. In fact, deterministic methods, mostly based on 0–1 linear programming, become hard to manage when the number of variables and constraints increases. Branch-and-bound techniques can reduce the computational burden at the expense of restricting the explored solution space. However, for large-scale systems, the solutions can become trapped in poor local optima. The potential success of genetic algorithms was envisioned more than two decades ago [67], and practice has confirmed the success of the metaheuristic approach. Genetic algorithms are particularly appropriate because their binary coding of the information directly represents the on/off states of the components that are candidates to be added to the distribution network.

3.8. Load and Generation Forecasting (LGF)

Electrical load forecasting is a traditional problem that has been solved with a number of methods. These range from statistical methods to approaches based on artificial intelligence, in particular neural networks and support vector machines, or, more recently, deep learning [68,69]. Today, the increase of generation from renewable energy sources has introduced further high uncertainty, depending on solar irradiance, wind speed and direction, and energy prices. Hence, approaches are also needed for generation forecasting. Load and generation forecasting are typically kept as separate problems, due to the different nature of the corresponding time series and to the different phenomena that affect their evolution.

The forecasting time horizon is essential in defining the problem. The classical view distinguishes among very short term (e.g., from a few seconds to tens of minutes), short term (from tens of minutes to one day or one week), medium term (from one week to some months), and long term (from some months to many years).

Persistence models, which replicate the past time series considered closest to the future conditions, are used as general benchmarks. Statistical approaches and methods based on neural networks and fuzzy systems have been used for many years. Hybrid methods have been constructed by adding to the neural networks an algorithm that assists parameter tuning in the training phase. Metaheuristic algorithms have been considered in these hybridizations [70]. Genetic algorithms are the most widely used, while particle swarm optimization, evolutionary algorithms, and simulated annealing have been used in various applications. Ensemble-based forecast models, which integrate different forecasting methods to reach better accuracy, are emerging as effective tools [71].
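
Persistence serves as a benchmark, and the sketch below gives a reference point for that role. It implements naive persistence (repeat the last value) and seasonal persistence (repeat the value one season earlier, e.g., the same hour of the previous day). It also computes a skill score relative to persistence. The skill-score definition, 1 − MAE(model)/MAE(persistence), is one common convention and is an assumption here. The load values are hypothetical.

```python
def persistence_forecast(history, horizon, season=1):
    """Repeat the value observed `season` steps earlier (season=1: naive persistence)."""
    if len(history) < season:
        raise ValueError("history is shorter than the seasonal lag")
    extended = list(history)
    for _ in range(horizon):
        extended.append(extended[-season])
    return extended[len(history):]


def mae(actual, forecast):
    """Mean absolute error."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def skill_score(actual, forecast, benchmark):
    """1 - MAE(model)/MAE(benchmark): positive when the model beats the benchmark."""
    return 1.0 - mae(actual, forecast) / mae(actual, benchmark)


load = [50, 42, 61, 70, 52, 44, 63, 71]          # hypothetical: two "days" of 4 steps each
actual_next = [53, 45, 62, 72]
naive = persistence_forecast(load, 4)            # [71, 71, 71, 71]
daily = persistence_forecast(load, 4, season=4)  # [52, 44, 63, 71]
print(mae(actual_next, naive), mae(actual_next, daily))
print(skill_score(actual_next, daily, naive))
```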

3.9. Maintenance Scheduling (MS)

The MS problem aims to find the optimal time intervals between maintenance interventions on generation units and network components, in order to maintain their functionality and minimize the operational costs of the system in which they are installed [72]. From the conceptual point of view, two different problems can be defined: generation unit maintenance scheduling (GMS) and transmission maintenance scheduling (TMS). In GMS, the aim is to define the out-of-service periods of the generation units in terms of timing and duration. This considers the reliability of the system in which the generators are installed, the availability of personnel, and the ramp-rate limits of the units when returning to normal operation. The maintenance periods are defined according to objective functions that may consider only the reliability of the system (including the reserve margins), only the costs (fuel, start-up costs, loss of profit), or both [72,73]. In TMS, the main goal is to verify that the maintenance of the network components does not affect the functionality of the system; thus, the constraints are generally the same as in GMS. The two problems may also be considered together, in order to account for both the security of the system and its efficiency. After the restructuring of the electricity business, the two problems may conflict. The generators prefer to schedule interventions when electricity prices are low, which can make it difficult to meet the total demand. Thus, an iterative process is required to fix the scheduling periods, taking into account the requests of the generators and of the network operator.

Regarding the solution methods, both mathematical programming approaches and metaheuristics have been used. In the first group, dynamic programming, mixed-integer programming, Lagrangian relaxation, branch-and-bound, and Benders decomposition have been exploited [72]. However, these methods are mainly suited to linear objectives and linear constraints. Thus, metaheuristics have been introduced to handle more complex objective functions and/or constraint formulations. Population-based methods (genetic algorithms and particle swarm optimization), simulated annealing, and tabu search have mostly been used, sometimes in a coordinated manner [74].

4. Convergence Aspects of Global Optimization Problems and Metaheuristics

Metaheuristic algorithms are also applied to solve global optimization problems when the problem structure is not known. To explore the search space, these algorithms generally rely on random variables, which make it possible to follow non-deterministic paths to reach a solution. The basic versions of metaheuristic algorithms are relatively simple to implement, even though their customization to engineering problems can be very challenging. Metaheuristic algorithms are counterparts of stochastic methods such as two-phase methods, random search methods, and random function methods [75]. In two-phase methods, the objective function is evaluated at a number of randomly selected points; a local search is then carried out to refine the solutions starting from these points. In random search methods, a sequence of points is generated in the search space according to some probability distributions, without a subsequent local search. In random function methods, a stochastic process consistent with the properties of the objective function has to be determined. With respect to these methods, the metaheuristic approach adds a high-level strategy that drives the solutions according to a specific rationale. However, the key point in confirming the significance of metaheuristics is the possibility of proving their convergence rigorously. From the mathematical point of view, convergence proofs are not established for all metaheuristics. Two examples are provided:
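
The two-phase scheme described above can be sketched as follows. Uniform random sampling of the search space comes first, then a local search is started from the best samples. The Rastrigin test function and the compass (coordinate pattern) search used as the local method are illustrative choices, not taken from the text.

```python
import math
import random


def rastrigin(x):
    """Multimodal test function; global minimum 0 at the origin."""
    return 10 * len(x) + sum(xi * xi - 10 * math.cos(2 * math.pi * xi) for xi in x)


def compass_search(f, x, step=0.5, min_step=1e-6):
    """Derivative-free local search: try +/- step along each coordinate, halve on failure."""
    x, fx = list(x), f(x)
    while step > min_step:
        improved = False
        for i in range(len(x)):
            for delta in (step, -step):
                y = list(x)
                y[i] += delta
                fy = f(y)
                if fy < fx:
                    x, fx, improved = y, fy, True
                    break
        if not improved:
            step *= 0.5
    return x, fx


def two_phase(f, bounds, n_samples=200, n_starts=5, seed=0):
    """Phase 1: uniform random sampling. Phase 2: local search from the best samples."""
    rng = random.Random(seed)
    samples = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_samples)]
    samples.sort(key=f)
    results = [compass_search(f, s) for s in samples[:n_starts]]
    return min(results, key=lambda r: r[1])


best_x, best_f = two_phase(rastrigin, [(-5.12, 5.12)] * 2)
print(best_x, best_f)
```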

- (1) Genetic algorithms: following the introduction of the concepts of genetic algorithms in [76], the canonical genetic algorithm presented in [77] did not preserve the best solutions during the evolution of the algorithm, namely, the elitism principle was not applied. In the homogeneous version of the canonical genetic algorithm, the crossover and mutation probabilities remain constant. For this homogeneous canonical genetic algorithm, there is no proof of convergence to the global optimum. However, better results have been obtained by ensuring the survival of the best individual with probability equal to unity (elitist selection). In this case, finite Markov chain analysis has been used to prove probabilistic convergence to the best solution [78]. A proof that the elitist homogeneous canonical genetic algorithm converges almost surely to a population containing an optimum point has been given in [79]. Subsequently, a number of conditions ensuring the asymptotic convergence of genetic algorithms to the global optimum have been given in [80]. Conceptually, at each generation, there is a non-zero probability that a new individual reaches the global optimum due to the application of the genetic operators. As such, saving the best individual at each generation (in the elitist version) and running the algorithm for an infinite number of generations guarantees that the global optimum is reached with probability one. Further indications to extend the proof of almost sure convergence to the elitist non-homogeneous canonical genetic algorithm are provided in [81]. In this algorithm, the mutation and crossover probabilities are allowed to change during the evolution of the algorithm [82].

- (2) Simulated annealing: a proof of convergence has been given in [83] for a particular class of algorithms, and asymptotic convergence has been proven for the algorithm presented in [84]. Further results in [85] show convergence to the global optimum for continuous global optimization problems under specific conditions on the cooling schedule, the function under analysis, and the feasible set.
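
The following sketch illustrates the elitism principle discussed above. It implements a homogeneous canonical GA (constant crossover and mutation probabilities) with roulette-wheel selection, one-point crossover, and bit-flip mutation. The best individual is copied unchanged into the next generation. The OneMax fitness (number of ones in the string) is a hypothetical test problem. Because of elitism, the recorded best fitness can never decrease from one generation to the next, which is the property exploited in the convergence proofs. A finite run, however, gives no guarantee of reaching the optimum.

```python
import random


def elitist_ga(fitness, n_bits, pop_size=20, generations=60, p_cross=0.9, p_mut=None, seed=0):
    """Homogeneous canonical GA with elitism (fitness is maximized and must be >= 0).

    Returns the best fitness of the population at each generation.
    """
    rng = random.Random(seed)
    if p_mut is None:
        p_mut = 1.0 / n_bits  # kept constant over the run: homogeneous GA
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        scores = [fitness(ind) for ind in pop]
        best = max(range(pop_size), key=scores.__getitem__)
        history.append(scores[best])
        total = sum(scores)

        def pick():
            # Roulette-wheel (fitness-proportional) selection; uniform if all scores are 0.
            return rng.choices(pop, weights=scores)[0] if total > 0 else rng.choice(pop)

        new_pop = [list(pop[best])]  # elitism: the best individual survives with probability 1
        while len(new_pop) < pop_size:
            parent_a, parent_b = pick(), pick()
            if rng.random() < p_cross:
                cut = rng.randint(1, n_bits - 1)  # one-point crossover
                child = parent_a[:cut] + parent_b[cut:]
            else:
                child = list(parent_a)
            child = [1 - bit if rng.random() < p_mut else bit for bit in child]  # bit-flip
            new_pop.append(child)
        pop = new_pop
    return history


history = elitist_ga(sum, n_bits=30)  # OneMax: maximize the number of ones
print(history[0], history[-1])
```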

For multi-objective optimization problems, convergence proofs have been set up by introducing elitism, following the successful practice found for single-objective functions. For some multi-objective evolutionary algorithms, proofs of convergence to the global optimum are provided in [86,87]. The asymptotic convergence analysis of Simulated Annealing, an Artificial Immune System, and a General Evolutionary Algorithm for multi-objective optimization problems is presented in [88]. The last of these covers any algorithm whose transition probabilities use a uniform mutation rule.

5. Discussion and Results on the Comparisons among Metaheuristic Algorithms

5.1. No Free Lunches?

Comparing different algorithms is a very challenging task. Unfortunately, many articles on metaheuristic applications in the power and energy systems area (as well as in other engineering fields) underestimate the importance of this task. They adopt simplistic comparison criteria and metrics, such as the best solution obtained, the evolution over time of the objective function for a single run, or related criteria.

The literature contains a wide discussion on algorithm comparison. One of the contributions that opened an interesting debate is the one that introduced the No Free Lunch (NFL) theorem(s) [89]. These theorems state that “any two optimization algorithms are equivalent when their performance is averaged across all possible problems” [90]. In essence, the NFL theorems state that no optimization algorithm yields the best solutions for all problems. In other words, if a given algorithm performs better than another on a certain number of problems, then there should be a comparable number of problems on which the other algorithm outperforms the first one. However, for a given problem, with its objective functions and constraints, some algorithms can perform better than others. This is especially true when they are able to incorporate specific knowledge of the problem at hand. The debate includes contributions arguing that the NFL theorems are of little relevance to machine learning research [91], in which meta-learning can be used to gain experience on the performance of a number of applications of a learning system.

5.2. Comparisons among Metaheuristics

A recent contribution [92] has addressed comparison strategies and their mathematical properties systematically. The numerical comparison between optimization algorithms consists of several steps. These are selecting a set of algorithms and problems, testing the algorithms on the problems, identifying the comparison strategy, methods, and metrics, analyzing the outcomes obtained by applying the metrics, and finally determining the results.

One of the main issues in setting up the comparisons is the definition of the overall scenario in which the comparison is carried out. Work on benchmarking methodologies, such as the Black-Box Optimization Benchmarking (BBOB) discussed in [93], has pointed out a crucial issue: reaching consensus on ranking the results from evaluations of individual problems. It is then hard to answer the question “which is the best algorithm to solve a given problem?” [94]. However, in some cases, an answer must be given, as in competitions launched among algorithms.

When testing a single (existing or new) algorithm, a set of algorithms that provide good results on similar problems is typically selected for the comparison. This is one of the weak points encountered in the literature, especially when the benchmark problems are chosen by the authors without a clear and convincing criterion. A number of mathematical functions that can be used as standard benchmarks are available [2]. Some test problems have been defined in different contexts [95,96,97]. However, a systematic guide on how to select the set of problems is still missing. The hint given in [92] is to select the whole set of optimization problems in a given domain, and not only a partial set.

Moreover, in global optimization there is no known mathematical optimality condition that can be checked to stop the search for all problems. Therefore, the computation time is generally taken as the common limit for stopping the algorithms. For deterministic algorithms, a typical comparison metric is the performance ratio [98]. This is the ratio between the computation time of the algorithm and the minimum computation time of all algorithms applied to the same problem. The performance profile is then obtained as the CDF of the performance ratio. Furthermore, the data profile [99] is based on the CDF of the problems that can be solved (by reaching at least a certain target in the solution) with a number of function evaluations not higher than a given limit. For non-deterministic algorithms, the concepts used in the definition of performance profiles and data profiles can also be exploited. Comparisons can be carried out by implementing all of the algorithms on the same computer and running them for the same computational time. The quality of the algorithms can then be determined by calculating the percentage of best solutions, averaged over a given number of executions of each algorithm [13]. A performance metric is typically applied to the series of best solutions obtained during the execution of the algorithm in the given time [29,92].
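
The performance profile defined above can be computed in a few lines. In this sketch, times are assumed strictly positive, a failed run is marked with `math.inf`, and the timing data are hypothetical. The value ρ_s(τ) is the fraction of problems on which solver s is within a factor τ of the fastest solver.

```python
import math


def performance_profile(times, taus):
    """Fraction of problems on which each solver is within a factor tau of the best.

    times: dict solver -> list of positive computation times, one per problem
    (math.inf marks a failure); returns dict solver -> [rho(tau) for tau in taus].
    """
    solvers = list(times)
    n_problems = len(times[solvers[0]])
    ratios = {s: [] for s in solvers}
    for p in range(n_problems):
        best = min(times[s][p] for s in solvers)
        for s in solvers:
            # Performance ratio r_{p,s}; inf/inf gives nan, which never counts as solved.
            ratios[s].append(times[s][p] / best)
    return {s: [sum(r <= tau for r in ratios[s]) / n_problems for tau in taus]
            for s in solvers}


# Hypothetical computation times (s) of three solvers on three problems.
times = {"A": [1.0, 2.0, 4.0], "B": [2.0, 1.0, 2.0], "C": [1.5, math.inf, 2.0]}
for solver, rho in performance_profile(times, [1.0, 1.5, 2.0]).items():
    print(solver, [round(v, 2) for v in rho])
```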

When the constraints are directly imposed, unfeasible solutions may be generated during the calculations. These solutions have to be skipped or eliminated from the search. In this case, fewer useful solutions are found when running the solver under analysis a given number of times, which worsens the performance indicator. Similar considerations apply when the solutions to be compared are subject to further conditions. An example is the N-1 security conditions required in [100] for a transmission expansion planning problem.

Indications on comparison strategies are provided in [92], which identifies two main approaches, both used in many contexts. The first is pairwise comparison between algorithms, with the variants one-plays-all (generally used to check a new algorithm) and all-play-all (“round-robin”). The second is multi-algorithm comparison, for which statistical aggregations such as the cumulative distribution function are often used.

Comparison methods can be partitioned into three groups. Static methods evaluate the best solution, mean, standard deviation, or other statistical outcomes. Dynamic ranking considers the succession of the best values or of static rankings over time. Cumulative distribution functions are considered at different times during the solution process. The latter type of comparison has attracted increasing interest, also because it can represent confidence intervals [101,102].

Liu et al. [92] defined the problem of finding the best algorithm as a voting system, in which the algorithms are the candidates and the problems are the votes. An algorithm performs better than the others if it exhibits better performance on more problems. However, they showed the existence of the so-called “cycle ranking” or Condorcet paradox: different algorithms may be winners on different problems, and it may not be possible to conclude which algorithm is better overall. In practice, taking three algorithms A, B, and C, it may happen that, over different problems, A is better than B, B is better than C, and C is better than A. The same concept is shown in [29] by indicating that the relation between solvers is non-transitive. Namely, if algorithm A is better than algorithm B for some problems, and algorithm B is better than algorithm C, this does not imply that algorithm A is better than algorithm C. Another paradox shown in [92] is the so-called “survival of the fittest”, in which the winner can differ depending on the comparison strategy used. The probability of occurrence of the two paradoxes is calculated under the NFL assumption.
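
The cycle ranking can be reproduced with three hypothetical problems treated as votes and a pairwise majority rule. Each algorithm beats one opponent on two of the three problems and loses to the other, so no overall winner exists.

```python
from itertools import permutations


def pairwise_wins(results):
    """Count, for each ordered pair (x, y), the problems on which x beats y (minimization)."""
    algorithms = sorted(next(iter(results.values())))
    return {(x, y): sum(r[x] < r[y] for r in results.values())
            for x, y in permutations(algorithms, 2)}


def majority_winner(results, x, y):
    """Winner of the head-to-head comparison between x and y, or None on a tie."""
    wins = pairwise_wins(results)
    if wins[(x, y)] == wins[(y, x)]:
        return None
    return x if wins[(x, y)] > wins[(y, x)] else y


# Hypothetical best objective values (minimized) of three algorithms on three problems.
results = {
    "problem 1": {"A": 1.0, "B": 2.0, "C": 3.0},
    "problem 2": {"A": 3.0, "B": 1.0, "C": 2.0},
    "problem 3": {"A": 2.0, "B": 3.0, "C": 1.0},
}
for x, y in (("A", "B"), ("B", "C"), ("C", "A")):
    print(f"{x} vs {y}: winner {majority_winner(results, x, y)}")
```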

5.3. Which Superiority?

Superiority is the term widely used to indicate that a given algorithm performs better than others. However, superiority is often assessed in a trivial and misleading way. In particular, the use of simple performance indicators, such as the best solution, the average value of the solutions, or the standard deviation of the solutions, can exacerbate the paradoxes indicated in the previous section. The main reason is the lack of robustness of these indicators. This applies especially to those based on a single occurrence (such as the best value), which could be found occasionally during the execution of the algorithm, or even thanks to a “lucky” choice of the initial population. The continuous production of articles claiming that the algorithm used is superior to a selected set of other algorithms is mostly due to the use of these simple performance indicators. A synthesis of the mechanism that leads to this continuous production of articles has been provided in [29] through a perpetual motion conceptual scheme. This scheme makes it clear that no formal and rigorous way exists to stop the production of articles.

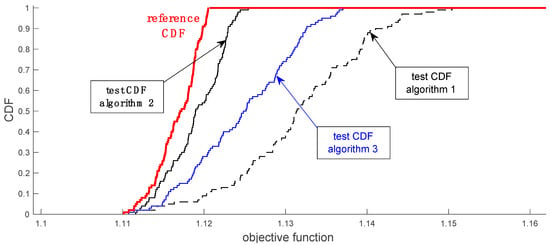

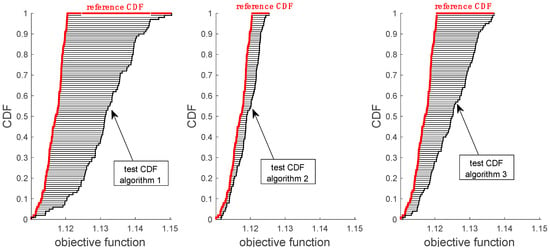

The only way to reduce the number of articles with questionable superiority claims is to introduce more robust, statistics-based indicators for comparing the algorithms with each other. A number of non-parametric statistical tests are summarized in [103]. Another example is the Optimization Performance Indicator based on Stochastic Dominance (OPISD) provided in [29], which applies first-order stochastic dominance concepts [104] with the approach indicated in [105]. The starting point is the set of CDFs of the solutions obtained from a set of algorithms run on the same problem (a qualitative example with three algorithms is shown in Figure 4). The OPISD indicator is formulated by considering a reference CDF together with the CDFs obtained from the given algorithms. For each algorithm, the area A between its CDF and the reference CDF is calculated (Figure 5). The indicator is then defined as OPISD = 1/(1 + A). In this way, the algorithm with the smallest area, and hence the highest OPISD, exhibits the best performance. From Figure 5, algorithm 2 is the one that exhibits the best performance.

Figure 4.

Determination of the reference CDF for the calculation of the Optimization Performance Indicator based on Stochastic Dominance (OPISD) without knowing the global optimum.

Figure 5.

Determination of the areas A for the calculation of the OPISD indicator.

The reference CDF is constructed in different ways, depending on whether the global optimum is known. If the global optimum is known, the reference CDF is equal to zero for values lower than the global optimum and jumps to unity at the global optimum. This enables absolute comparisons among the algorithms. However, the global optimum can only be known in a few cases of relatively small systems, and “good” algorithms should always reach the global optimum for these small systems. If the global optimum is not known, the reference CDF is determined from a given number of best solutions obtained by any of the algorithms used for the comparison. In this case, only a relative comparison within the set of algorithms under analysis is possible, as the reference CDF changes whenever the set of algorithms changes.
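
A minimal sketch of the OPISD computation follows. It uses empirical CDFs (step functions), so the area A between each CDF and the reference CDF can be computed exactly over the intervals between breakpoints. With a known global optimum f*, the reference is a single step at f*, and A reduces to the mean gap to f*. When the optimum is unknown, the reference is built here from the k best values pooled over all runs. This is one possible reading of the construction described above and is an assumption. The run data are hypothetical.

```python
from bisect import bisect_right


def ecdf(samples):
    """Right-continuous empirical CDF of a list of objective values."""
    data = sorted(samples)
    return lambda x: bisect_right(data, x) / len(data)


def cdf_area(samples, reference):
    """Exact area between two empirical CDFs: integral of |F(x) - F_ref(x)| dx."""
    f, f_ref = ecdf(samples), ecdf(reference)
    points = sorted(set(samples) | set(reference))
    # Both CDFs are constant on each [points[k], points[k+1]) and coincide
    # (0 or 1) outside [min, max], so the integral reduces to a finite sum.
    return sum(abs(f(a) - f_ref(a)) * (b - a) for a, b in zip(points, points[1:]))


def opisd(samples, reference):
    """OPISD = 1 / (1 + A), where A is the area to the reference CDF."""
    return 1.0 / (1.0 + cdf_area(samples, reference))


# Known global optimum f* = 1.0: the reference CDF is a single step at f*.
print(opisd([1.0, 2.0, 3.0], [1.0]))

# Unknown optimum: reference from the k best values pooled over all runs (assumption).
runs = {  # hypothetical final objective values (minimization), 5 runs per algorithm
    "alg1": [10.4, 10.9, 11.3, 10.6, 12.0],
    "alg2": [10.1, 10.2, 10.5, 10.3, 10.2],
    "alg3": [10.0, 11.8, 12.5, 10.9, 11.1],
}
k = 5
reference = sorted(v for values in runs.values() for v in values)[:k]
for name, values in runs.items():
    print(name, round(opisd(values, reference), 3))
```

In this example, alg3 finds the single best value (10.0) but obtains the lowest OPISD, because most of its runs end far from the reference. This is the situation in which the best-value indicator would be misleading.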

6. Hybridization of the Metaheuristics

The various metaheuristics have advantages and disadvantages, usually analyzed in terms of their exploration and exploitation characteristics [106] and of their contribution to improving the local search. One way to enhance the performance of the algorithms has been the formulation of hybrid optimization methods. The main types of hybridization can be summarized as follows:

- (a) combinations of different heuristics; and
- (b) combinations of metaheuristics with exact methods.

Successful strategies have been found by combining a heuristic that carries out an extensive search of the solution space with a method suitable for local search. A practical example is Evolutionary Particle Swarm Optimization (EPSO), in which an evolutionary model is used together with a particle movement operator to formulate a self-adaptive algorithm [107]. Another useful tool is Lévy flights [108], which are used to mitigate the premature convergence of metaheuristics [109,110] and to obtain a better balance between exploration and exploitation. A further example of hybridization is the Differential Evolutionary Particle Swarm Optimization Algorithm [111], the winner of the smart grid competition at the IEEE Congress on Evolutionary Computation/The Genetic and Evolutionary Computation Conference in 2019.
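
Lévy flights are commonly generated with Mantegna's algorithm, which produces heavy-tailed steps s = u/|v|^(1/β) from two Gaussian variables. The sketch below assumes β = 1.5, a frequent choice in the literature. The function `levy_move` is a hypothetical perturbation rule in the style of cuckoo search, not a method from the text. Most steps are small, which supports exploitation, while occasional very long jumps support exploration.

```python
import math
import random


def mantegna_sigma(beta):
    """Standard deviation of the numerator Gaussian in Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(beta=1.5, rng=random):
    """Heavy-tailed step s = u / |v|**(1/beta), with u ~ N(0, sigma^2) and v ~ N(0, 1)."""
    u = rng.gauss(0.0, mantegna_sigma(beta))
    v = 0.0
    while v == 0.0:  # guard against a (practically impossible) division by zero
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def levy_move(x, x_best, alpha=0.01, beta=1.5, rng=random):
    """Hypothetical rule: perturb x with Levy steps scaled by its distance to x_best."""
    return [xi + alpha * levy_step(beta, rng) * (xi - bi) for xi, bi in zip(x, x_best)]


rng = random.Random(42)
steps = [levy_step(1.5, rng) for _ in range(10_000)]
print(round(mantegna_sigma(1.5), 4), max(abs(s) for s in steps))
```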

Depending on the problem under analysis, a useful practice can be to combine a metaheuristic aimed at contributing to the global search with an exact method of proven effectiveness to perform the local search. In many other cases, hybridizations have no special meaning and may only be aimed at producing further articles that contribute to the ‘rush to heuristics’.
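The global/local split described above can be sketched as a two-stage procedure on a smooth multimodal test function (Rastrigin). The text does not prescribe a specific pair of methods. Uniform random sampling is therefore an assumed stand-in for the metaheuristic, and fixed-step gradient descent is an assumed stand-in for the exact local method. All function names are hypothetical.

```python
import math
import random

def rastrigin(x):
    """Multimodal test function; global minimum 0 at the origin."""
    return 10 * len(x) + sum(xi * xi - 10 * math.cos(2 * math.pi * xi) for xi in x)

def rastrigin_grad(x):
    return [2 * xi + 20 * math.pi * math.sin(2 * math.pi * xi) for xi in x]

def global_stage(f, dim, n_samples, bound, rng):
    """Stand-in for the metaheuristic: keep the best of uniform random samples."""
    best, best_val = None, math.inf
    for _ in range(n_samples):
        x = [rng.uniform(-bound, bound) for _ in range(dim)]
        val = f(x)
        if val < best_val:
            best, best_val = x, val
    return best

def local_stage(grad, x, lr=0.002, iters=2000):
    """Stand-in for the local method: fixed-step gradient descent.

    lr is below 1/L, where L = 2 + 40*pi**2 bounds the curvature of Rastrigin,
    so every step decreases the objective.
    """
    for _ in range(iters):
        x = [xi - lr * gi for xi, gi in zip(x, grad(x))]
    return x

rng = random.Random(1)
x_global = global_stage(rastrigin, dim=2, n_samples=200, bound=5.12, rng=rng)
x_hybrid = local_stage(rastrigin_grad, x_global)
```

The global stage only has to land in a promising basin. The local stage then refines the point to the bottom of that basin, which random sampling alone would reach only slowly.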

7. Multi-Objective Formulations

Multi-objective or many-objective optimization (see Section 1) considers more than one objective. When the objectives conflict, the solutions that optimize the individual objectives differ from each other, and none of them is optimal for all objectives. Optimization with conflicting objectives therefore does not only search for the optimal values of the individual objectives, but also identifies the compromise solutions as feasible alternatives for decision-making.

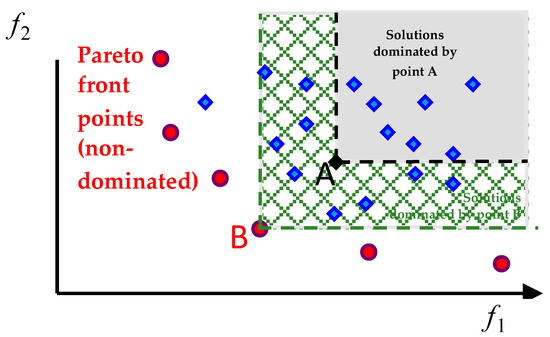

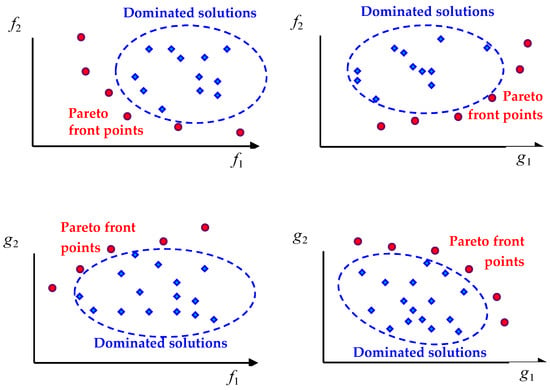

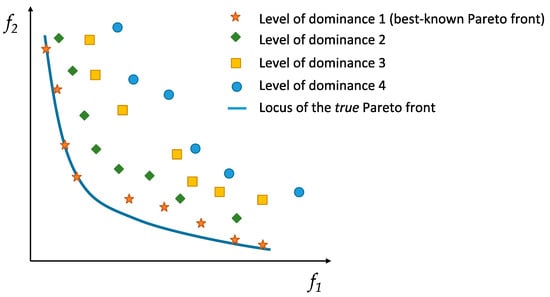

Figure 6 shows the concept of a dominated solution for a case with two objective functions f1 and f2 to be minimized. More generally, Figure 7 shows qualitative examples of the locations of the dominated solutions when the objective functions have to be maximized or minimized. Moreover, the dominated solutions can be assigned different levels of dominance, to assist their ranking when they are used within solution algorithms. Figure 8 shows an example with four levels of dominance (where the first level is the best-known Pareto front, some points of which could be located on the true Pareto front). Fuzzy-based dominance degrees have also been defined in [112].

Figure 6.

Concept of Pareto dominance. The functions f1 and f2 are minimized.

Figure 7.

Pareto front points and dominated solutions for objective functions minimized (f1 and f2), or maximized (g1 and g2).

Figure 8.

Levels of dominance. The functions f1 and f2 are minimized.
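The dominance test of Figure 6 and the levels of dominance of Figure 8 can be computed with a simple, quadratic-cost form of non-dominated sorting. This is a minimal sketch that assumes all objectives are minimized; maximized objectives can be negated first. The sample points are hypothetical.

```python
def dominates(a, b):
    """True if a Pareto-dominates b: no worse in every objective, better in one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def dominance_levels(points):
    """Return lists of point indices: level 1 (non-dominated), level 2, ..."""
    remaining = list(range(len(points)))
    levels = []
    while remaining:
        # Points not dominated by any other remaining point form the next level.
        front = [i for i in remaining
                 if not any(dominates(points[j], points[i]) for j in remaining if j != i)]
        levels.append(front)
        remaining = [i for i in remaining if i not in front]
    return levels

points = [(1, 4), (2, 2), (4, 1), (3, 3), (4, 4), (5, 5)]
levels = dominance_levels(points)
```

For these points the result has four levels, as in Figure 8: the first three points form the best-known front, and each of the remaining points forms its own level.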

7.1. Techniques for Pareto Front Calculation

Some deterministic approaches are available. If the Pareto front is convex, the weighted sum of the individual objectives can be used to track the points of the Pareto front. Otherwise, other methods have to be exploited. One possibility is the ε-constraint method [113]. It optimizes a single objective while constraining each of the other objectives not to exceed a threshold ε, then progressively reduces the thresholds and updates the set of non-dominated solutions. Reduction to a single objective function is also carried out in the goal programming approach [114]. Fuzzy logic-based approaches are also available [115].
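The weighted sum's limitation on non-convex fronts, and how the ε-constraint sweep overcomes it, can be illustrated on a finite set of candidate points. This toy sketch assumes two minimized objectives and a precomputed candidate set. In practice each step solves an optimization problem. The point (0.6, 0.6) is a hypothetical Pareto-optimal point that lies above the segment joining the two extreme points, so the front is not convex there.

```python
def weighted_sum_pick(points, w):
    """Minimize w*f1 + (1-w)*f2 over a finite candidate set."""
    return min(points, key=lambda p: w * p[0] + (1 - w) * p[1])

def eps_constraint_sweep(points, delta=1e-6):
    """Minimize f1 subject to f2 <= eps, tightening eps after each solution."""
    eps = max(p[1] for p in points)
    front = []
    while True:
        feasible = [p for p in points if p[1] <= eps]
        if not feasible:
            return front
        best = min(feasible, key=lambda p: (p[0], p[1]))  # tie-break on f2
        front.append(best)
        eps = best[1] - delta  # demand a strictly better f2 next time

points = [(0.0, 1.0), (0.6, 0.6), (1.0, 0.0)]
ws_found = {weighted_sum_pick(points, k / 100) for k in range(101)}
eps_found = eps_constraint_sweep(points)
```

No weight recovers (0.6, 0.6): its weighted score is always 0.6, while one of the extreme points always scores at most 0.5. The ε-constraint sweep returns all three Pareto-optimal points.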

However, multi-objective optimization with Pareto front construction and assessment is a successful field of application of metaheuristics. In particular, direct construction through metaheuristic approaches is an iterative process. At each iteration, multiple solutions are generated, and the solution set is then reduced to retain only the non-dominated solutions. The dominated solutions are arranged into levels of dominance to enable wider comparisons.
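The iterative construction described above, which generates candidates and then keeps only the non-dominated ones, can be sketched as an archive update. This is a minimal sketch: uniform random sampling stands in for the variation operators of an actual metaheuristic, and the test problem is Schaffer's classic bi-objective function f1 = x², f2 = (x - 2)². The function names are hypothetical.

```python
import random

def dominates(a, b):
    """True if a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def update_archive(archive, candidates):
    """Merge new candidates into the archive and keep only non-dominated points."""
    pool = list(dict.fromkeys(archive + candidates))  # drop exact duplicates
    return [p for p in pool if not any(dominates(q, p) for q in pool)]

def toy_objectives(x):
    """Schaffer's bi-objective test problem; Pareto-optimal for 0 <= x <= 2."""
    return (x * x, (x - 2.0) ** 2)

rng = random.Random(7)
archive = []
for _ in range(50):
    # Random sampling stands in for the variation operators of a metaheuristic.
    candidates = [toy_objectives(rng.uniform(-4.0, 4.0)) for _ in range(5)]
    archive = update_archive(archive, candidates)
```

After every iteration the archive holds a mutually non-dominated set, which plays the role of the current best-known Pareto front.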

In practice, most of the many metaheuristic algorithms formulated for single-objective optimization problems also have a corresponding multi-objective version. For this reason, a list of metaheuristics for multi-objective optimization is not provided here. Only a few algorithms are mentioned because of their key historical and practical relevance: the Strength Pareto Evolutionary Algorithm (SPEA2 [116]), the Pareto Archived Evolution Strategy (PAES [117]), and two versions of the Non-dominated Sorting Genetic Algorithm, namely NSGA-II [118] and NSGA-III [119].

7.2. No Free Lunches and Comparisons among Algorithms

The discussion about the NFL theorem(s) is also valid for multi-objective optimization. According to the NFL theorem(s), when performance is averaged over all possible problems, no algorithm outperforms the others: each algorithm outperforms the others on some problems and is outperformed on others. However, for multi-objective optimization, Corne & Knowles [120] showed that the NFL does not generally apply when absolute performance metrics are used. This means that some multi-objective approaches can be better than others at constructing the Pareto front. In fact, the best-known Pareto front should be sufficiently wide to contain a number of points relatively far from each other. An approach that only finds points concentrated in a limited region can be considered less effective than one that provides more dispersed compromise solutions.

On this basis, developing comparison metrics or quality indicators for multi-objective optimization algorithms is a challenging but worthwhile task. Some principles indicated in [11] for the construction of effective multi-objective comparison metrics include:

- (a) The minimization of the distance between the best-known Pareto front and the true optimal Pareto front (when the latter is known).

- (b) The presence of a distribution of the solutions as uniform as possible.

- (c) For each objective, the presence of a wide range of values in the best-known Pareto front.
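Principles (b) and (c) can be quantified with simple indicators. This sketch assumes one common choice for uniformity, Schott's spacing, which is the standard deviation of the nearest-neighbour L1 distances and equals zero for a perfectly even spread. It measures the range of each objective for principle (c). These are not necessarily the indicators used in [11], and the sample fronts are hypothetical.

```python
import math

def spacing(front):
    """Schott's spacing: spread of nearest-neighbour L1 distances (0 = uniform)."""
    n = len(front)  # requires at least two points
    d = []
    for i, p in enumerate(front):
        d.append(min(sum(abs(a - b) for a, b in zip(p, q))
                     for j, q in enumerate(front) if j != i))
    mean = sum(d) / n
    return math.sqrt(sum((mean - di) ** 2 for di in d) / (n - 1))

def extent(front):
    """Range of values covered by the front for each objective."""
    return [max(col) - min(col) for col in zip(*front)]

uniform = [(0.0, 3.0), (1.0, 2.0), (2.0, 1.0), (3.0, 0.0)]
clustered = [(0.0, 3.0), (0.5, 2.5), (3.0, 0.0)]
```

Both sample fronts span the same range of each objective, but only the clustered one has a non-zero spacing value. This shows why uniformity and extent need separate indicators.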

For comparison purposes, it is convenient to represent the quality of each Pareto front obtained by a multi-objective optimization algorithm with a scalar value (a real number). This number can be the average distance between the points of the Pareto front under analysis and the closest points of the best-known Pareto front. Surveys of the indicators proposed in the literature are provided in [121,122]. Other indicators use a chi-square-like deviation measure to assess the diversity of the Pareto front [121,123]. An Inverted Generational Distance indicator has been proposed in [124] to deal with the tradeoff between proximity and diversity preservation of the solutions in multi-objective optimization problems.
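The average-distance indicator described above and the basic form of the Inverted Generational Distance (IGD) differ only in the direction in which distances are measured. This sketch assumes Euclidean distances in objective space. It shows the basic IGD (mean distance from each reference point to the closest point of the front), not necessarily the variant proposed in [124]. The sample fronts are hypothetical.

```python
import math

def mean_min_distance(from_pts, to_pts):
    """Average, over from_pts, of the distance to the closest point in to_pts."""
    return sum(min(math.dist(p, q) for q in to_pts) for p in from_pts) / len(from_pts)

def front_to_reference_distance(front, reference):
    """Average distance of the front's points to the best-known (reference) front."""
    return mean_min_distance(front, reference)

def inverted_generational_distance(front, reference):
    """Basic IGD: average distance of reference points to the front."""
    return mean_min_distance(reference, front)

reference = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0)]
front = [(1.0, 3.0), (3.0, 1.0)]  # accurate, but misses the middle region
```

Here the front-to-reference distance is zero because every point of `front` lies on the reference front. The IGD is positive (√2/3) because the point (2.0, 2.0) is not covered. This illustrates how IGD also reflects diversity and coverage.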

Furthermore, the hyper-volume indicator [8,10,121] has been used both for performance assessment and for guiding the search in various hyper-volume-based evolutionary optimizers [125]. Consider a problem in which all of the objectives are minimized and all of the points that form the Pareto front are positive. The hyper-volume can then be calculated by setting a maximum value for each objective, which defines a hyper-rectangle (box) with one corner at the origin. Then, given the Pareto front, the hyper-volume is determined as the volume that starts from the origin of the axes and is limited by the Pareto front or by the surfaces of the box. If the objective functions have negative values, the same concepts apply after a translation that places the Pareto front inside the box.
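For two objectives, the hyper-volume can be computed exactly with a sweep over the points sorted by the first objective. This sketch assumes two minimized, non-negative objectives and a user-chosen reference point made of the maximum values. `hypervolume_2d` returns the usual dominated hyper-volume, that is, the region between the front and the reference point. The volume between the origin and the front, as described above, is the box volume minus that quantity. The function names and data are hypothetical.

```python
def hypervolume_2d(front, ref):
    """Area dominated by `front` and bounded by `ref` (both objectives minimized)."""
    pts = sorted(p for p in front if p[0] < ref[0] and p[1] < ref[1])
    hv, best_f2 = 0.0, ref[1]
    for f1, f2 in pts:          # sweep in increasing f1
        if f2 < best_f2:        # skip points dominated by earlier ones
            hv += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return hv

def origin_side_volume(front, ref):
    """Area between the origin and the front inside the box [0, ref1] x [0, ref2]."""
    return ref[0] * ref[1] - hypervolume_2d(front, ref)

front = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0)]
ref = (4.0, 4.0)
```

For this front the dominated area is 6.0 and the origin-side area is 10.0, which together fill the 4 × 4 box. For three or more objectives the sweep no longer suffices, which is where the dedicated algorithms cited above come in.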

A weighted hyper-volume indicator has been introduced in [126], including weight functions to express user preferences. The selection of the weight functions and the transformation of user preferences into weight functions have been addressed in [127]. Exploiting the hyper-volume is an interesting idea based on geometric considerations. However, developing efficient algorithms to compute the hyper-volume as the number of dimensions increases is still an open research field. For many-objective problems, a diversity metric has been proposed in [128] that sums up the dissimilarity of each solution to the rest of the population.

For power and energy systems, many multi-objective problems are defined with two or three objectives. In these cases, the hyper-volume can be calculated with the available methods [9,129], and previous results regarding the comparison among metaheuristics can also be extended. Let us consider a number of objectives to be minimized. Following the concepts introduced in [29], suppose that multiple metaheuristic algorithms have to be compared on a given multi-objective optimization problem. It is then possible to determine the best-known Pareto front resulting from all of the executions of the algorithms, each run for a given time. Comparing each Pareto front obtained by the various methods with the best-known Pareto front provides a quality indicator. This indicator is the hyper-volume included between the Pareto front under analysis and the best-known Pareto front. In this way, the outcome of each execution is represented by a scalar value, and lower values mean better quality of the result. The CDF of these scalar values can be constructed, and it is considered as the reference CDF for OPISD calculation. The comparison between the CDFs of the individual metaheuristic algorithms and the reference CDF provides the area A to be used for OPISD calculation.
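The first steps of this procedure can be sketched for two objectives: merge all runs into the best-known Pareto front, then reduce each run to one scalar. This sketch assumes a fixed, user-chosen reference point. It takes the scalar to be the hyper-volume difference between the best-known front and the run's front. That difference equals the region between the two fronts, because every point of a run is weakly dominated by the best-known front. The run data and helper names are hypothetical, and the subsequent CDF and OPISD steps are not reproduced here.

```python
def dominates(a, b):
    """True if a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def nondominated(points):
    pts = set(points)
    return sorted(p for p in pts if not any(dominates(q, p) for q in pts))

def hypervolume_2d(front, ref):
    """Area dominated by `front` and bounded by `ref`."""
    pts = sorted(p for p in front if p[0] < ref[0] and p[1] < ref[1])
    hv, best_f2 = 0.0, ref[1]
    for f1, f2 in pts:
        if f2 < best_f2:
            hv += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return hv

# Hypothetical final fronts: one list of (f1, f2) points per run, per algorithm.
runs = {
    "alg_A": [[(1.0, 3.0), (3.0, 1.0)], [(2.0, 2.5), (3.0, 1.5)]],
    "alg_B": [[(1.0, 3.0), (2.0, 2.0), (3.0, 1.0)]],
}
ref_point = (4.0, 4.0)  # assumed upper bound on both objectives

all_points = [p for fronts in runs.values() for front in fronts for p in front]
best_known = nondominated(all_points)
hv_best = hypervolume_2d(best_known, ref_point)

# Scalar per run: hyper-volume between its front and the best-known front
# (0 means the run reproduced the best-known front).
gaps = {name: [hv_best - hypervolume_2d(f, ref_point) for f in fronts]
        for name, fronts in runs.items()}
```

Each algorithm is thus described by a list of non-negative scalars, one per run, whose empirical CDF can then enter the CDF-based comparison.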

For comparing multi-objective metaheuristic algorithms, a suitable set of test functions is needed as a benchmark. For this purpose, classical test functions have been introduced in [11]. These are the ZDT functions, which have two objectives and were chosen to represent different cases with specific features. Further test functions have been introduced with the nine DTLZ functions in [130], as well as in [131]. However, for power and energy systems, these benchmarks do not take into account the typical constraints that appear in specific problems. As such, an algorithm that performs well on these mathematical benchmarks could perform poorly on these problems. The lack of dedicated benchmarks covering a wide set of power and energy problems prevents scientists in the power and energy domain from presenting sufficiently broad results on the performance of metaheuristic algorithms.
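As an example of such a benchmark, ZDT1 can be written in a few lines. This sketch follows the commonly used definition with n = 30 decision variables in [0, 1]. Its Pareto-optimal front, f2 = 1 - √f1, is reached when x2 = ... = xn = 0. Note that it has simple box bounds and none of the network constraints typical of power and energy problems.

```python
import math

def zdt1(x):
    """ZDT1 test function: two objectives to be minimized, x in [0, 1]^n."""
    n = len(x)
    f1 = x[0]
    g = 1 + 9 * sum(x[1:]) / (n - 1)   # g = 1 on the Pareto-optimal front
    f2 = g * (1 - math.sqrt(f1 / g))
    return f1, f2

on_front = zdt1([0.25] + [0.0] * 29)   # lies on f2 = 1 - sqrt(f1)
far_away = zdt1([0.0] + [1.0] * 29)    # worst case of the distance function g
```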

7.3. Multi-Objective Solution Ranking

Last, but not least, an important aspect concerning the outcomes of multi-objective optimization is the possible ranking of the solutions determined by numerical calculations, to assist the decision-maker in identifying the preferable solution. The methods available for this task require, in some way, the opinion of an expert to express preferences about the objectives considered. These methods belong to multi-criteria decision-making, where the criteria coincide with the objectives considered here. Widely adopted tools include the Analytic Hierarchy Process (AHP) [132], in which a nine-point scale quantifies the relative preferences between pairs of objectives. In the AHP, the overall feasibility of the process is confirmed if an appropriately defined consistency criterion is satisfied. Furthermore, in the Ordered Weighted Averaging approach [133], the weights are ordered according to their relative importance. A procedure driven by a single parameter is then set up by using a transformation function that modifies the weighted values of the objectives. The Technique for Order of Preference by Similarity to Ideal Solution (TOPSIS) evaluates the solutions according to their distances to reference (ideal) points [134,135]. Further methods, such as ELECTRE [136] and PROMETHEE [137], are based on pairwise comparisons of the alternatives. Other methods have been formulated using fuzzy logic-based tools [112,130]. For example, for a transmission expansion planning problem, fuzzy logic-based tools are used in [138,139], and in [58], where the rank of each solution is directly established in each Pareto front.
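TOPSIS can be applied directly to the points of a Pareto front. This sketch assumes vector normalization of each objective, user-given weights, and Euclidean distances to the ideal and anti-ideal points, which are common choices. It does not reproduce the specific variants of [134,135]. The (cost, losses) data and the equal weights are hypothetical.

```python
import math

def topsis(matrix, weights, minimize):
    """Closeness coefficient in [0, 1] for each alternative (higher is better)."""
    n = len(matrix[0])
    # Vector normalization of each criterion column, then weighting.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n)]
    v = [[weights[j] * row[j] / norms[j] for j in range(n)] for row in matrix]
    cols = list(zip(*v))
    ideal = [min(c) if minimize[j] else max(c) for j, c in enumerate(cols)]
    anti = [max(c) if minimize[j] else min(c) for j, c in enumerate(cols)]
    scores = []
    for row in v:
        d_plus, d_minus = math.dist(row, ideal), math.dist(row, anti)
        scores.append(d_minus / (d_plus + d_minus))
    return scores

# Hypothetical Pareto-front solutions: (cost, losses), both minimized.
front = [(1.0, 9.0), (3.0, 4.0), (6.0, 2.0), (9.0, 1.0)]
scores = topsis(front, weights=[0.5, 0.5], minimize=[True, True])
ranking = sorted(range(len(front)), key=lambda i: scores[i], reverse=True)
```

The expert's preferences enter only through `weights`. Changing them reorders `ranking` without recomputing the Pareto front.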

Reducing the role of the decision-maker's personal judgment is sometimes desirable, especially when the problem is highly technical and the decision-maker has a different background. Moreover, in some cases automatic procedures are needed to determine the relative importance of the objectives. This is the case in particular when the judgment is embedded in an iterative process and the relative importance has to be established many times as the objective function values vary.

Such cases typically occur when dealing with technical aspects in the power and energy systems domain. For example, a criterion for comparing non-dominated solutions based on power system concepts has been introduced in [140]. There, the Incremental Cost Benefit ratio is defined as the ratio between the congestion cost reduction with respect to the base case and the investment for the solution considered. The automatic creation of the entries of the pairwise comparison matrices for the AHP approach has been introduced in [54] for a distribution system reconfiguration problem, using an affine function that maps the objective function values onto the Saaty interval from 1 to 9.
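Whether the pairwise comparison matrix is filled by an expert or automatically, the AHP weights and the consistency check are computed in the same way. This sketch approximates the principal eigenvector by power iteration and computes the consistency ratio CR = CI / RI, with CI = (λmax - n) / (n - 1). It assumes Saaty's random index values and the usual acceptance threshold CR < 0.1. It does not reproduce the automatic matrix construction of [54], and the matrices are hypothetical.

```python
# Saaty's random consistency index for matrix sizes 1..9.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}

def ahp_priorities(A, iters=100):
    """Return (weights, lambda_max, consistency_ratio) for a reciprocal matrix A."""
    n = len(A)
    w = [1.0 / n] * n
    for _ in range(iters):  # power iteration towards the principal eigenvector
        v = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(v)
        w = [x / total for x in v]
    Aw = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
    lam = sum(Aw[i] / w[i] for i in range(n)) / n
    ci = (lam - n) / (n - 1) if n > 1 else 0.0
    ri = RANDOM_INDEX[n]
    return w, lam, (ci / ri if ri > 0 else 0.0)

# Perfectly consistent judgments: objective 1 is twice as important as 2, etc.
consistent = [[1, 2, 4], [1 / 2, 1, 2], [1 / 4, 1 / 2, 1]]
w, lam, cr = ahp_priorities(consistent)

# Cyclic judgments (1 > 2 > 3 > 1) that should fail the consistency check.
cyclic = [[1, 3, 1 / 5], [1 / 3, 1, 5], [5, 1 / 5, 1]]
_, _, cr_bad = ahp_priorities(cyclic)
```

The consistent matrix yields weights 4/7, 2/7, 1/7 with CR = 0. The cyclic one gives CR well above 0.1 and would be rejected.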

8. Discussion on the Effectiveness of Metaheuristic-Based Optimization: Pitfalls and Inappropriate Statements

In articles that apply metaheuristic optimization methods, various authors include inappropriate statements on the effectiveness of the methods used. These statements are also one of the main reasons for the rejection of many papers submitted to scientific journals or conferences. The most significant (and sometimes common) situations are recalled in this section, together with a discussion of whether more appropriate solutions could be adopted.