Multi-Labeled Recognition of Distribution System Conditions by a Waveform Feature Learning Model

Abstract

1. Introduction

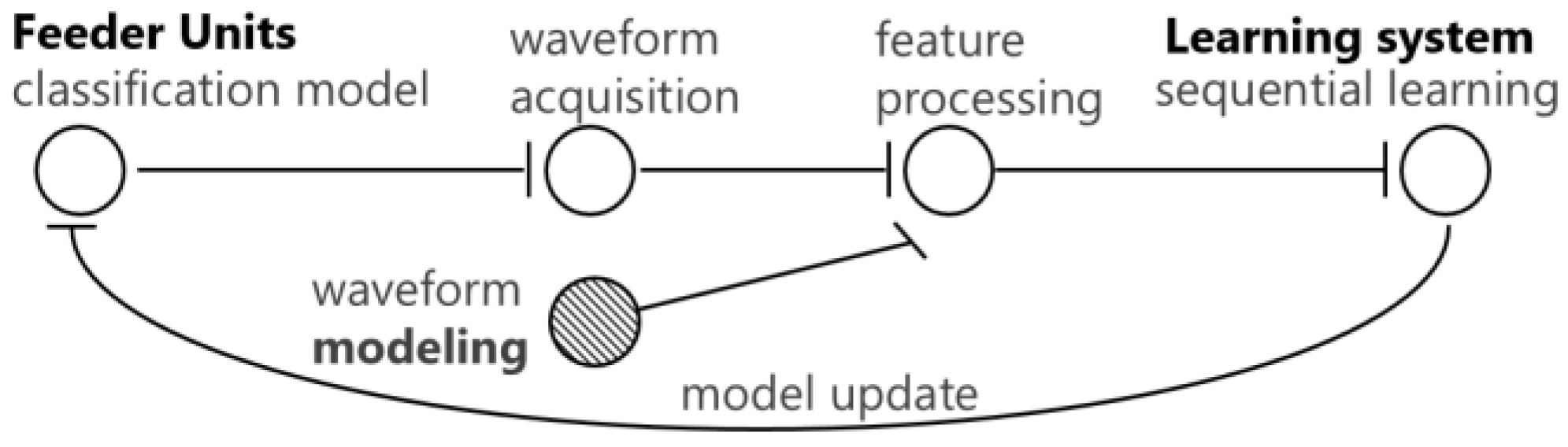

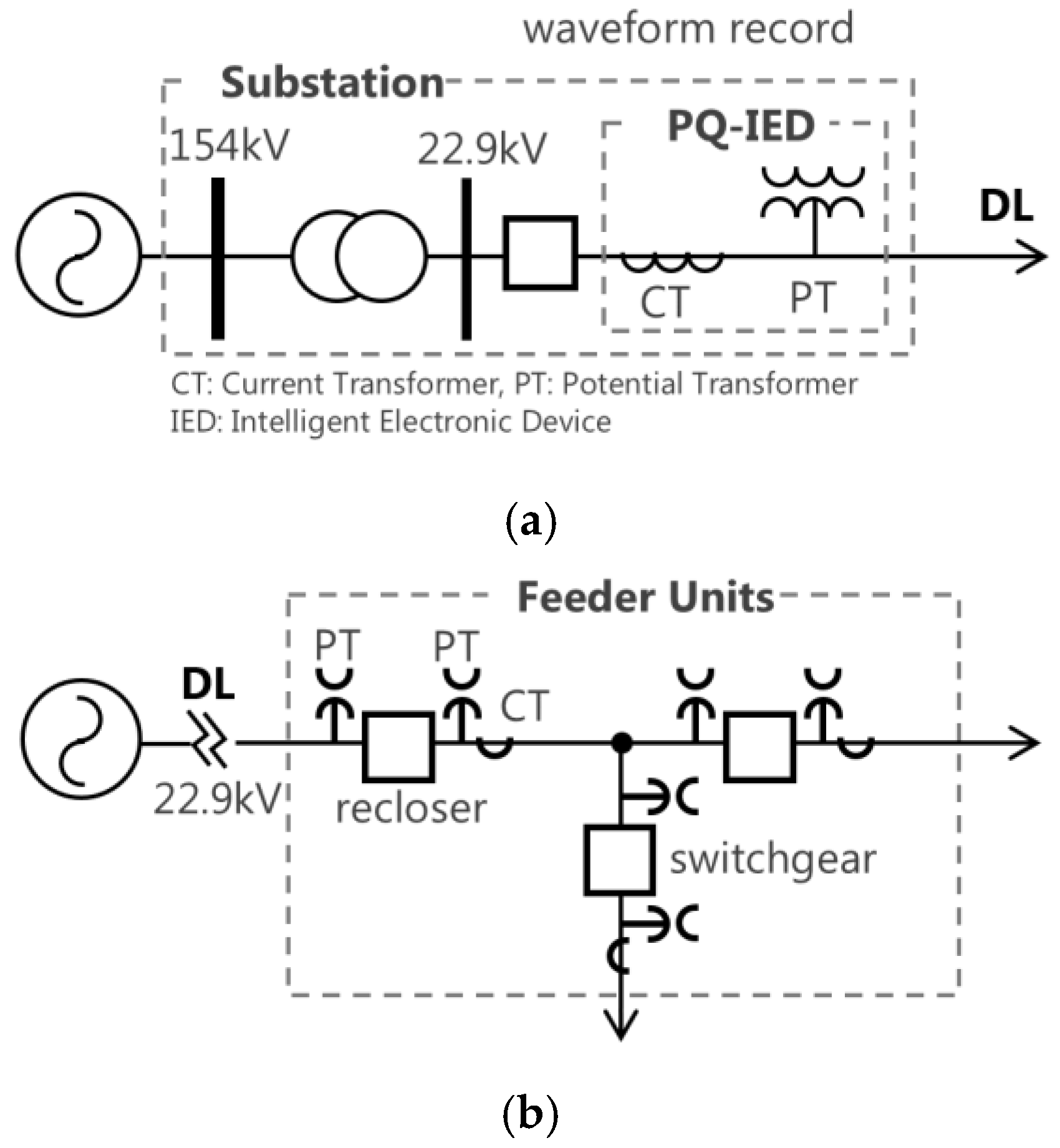

2. Modeling the Power Signals and Acquisition Processes

2.1. Field-Measured Waveform Data Processing

2.2. Modeling Disturbance Waveforms

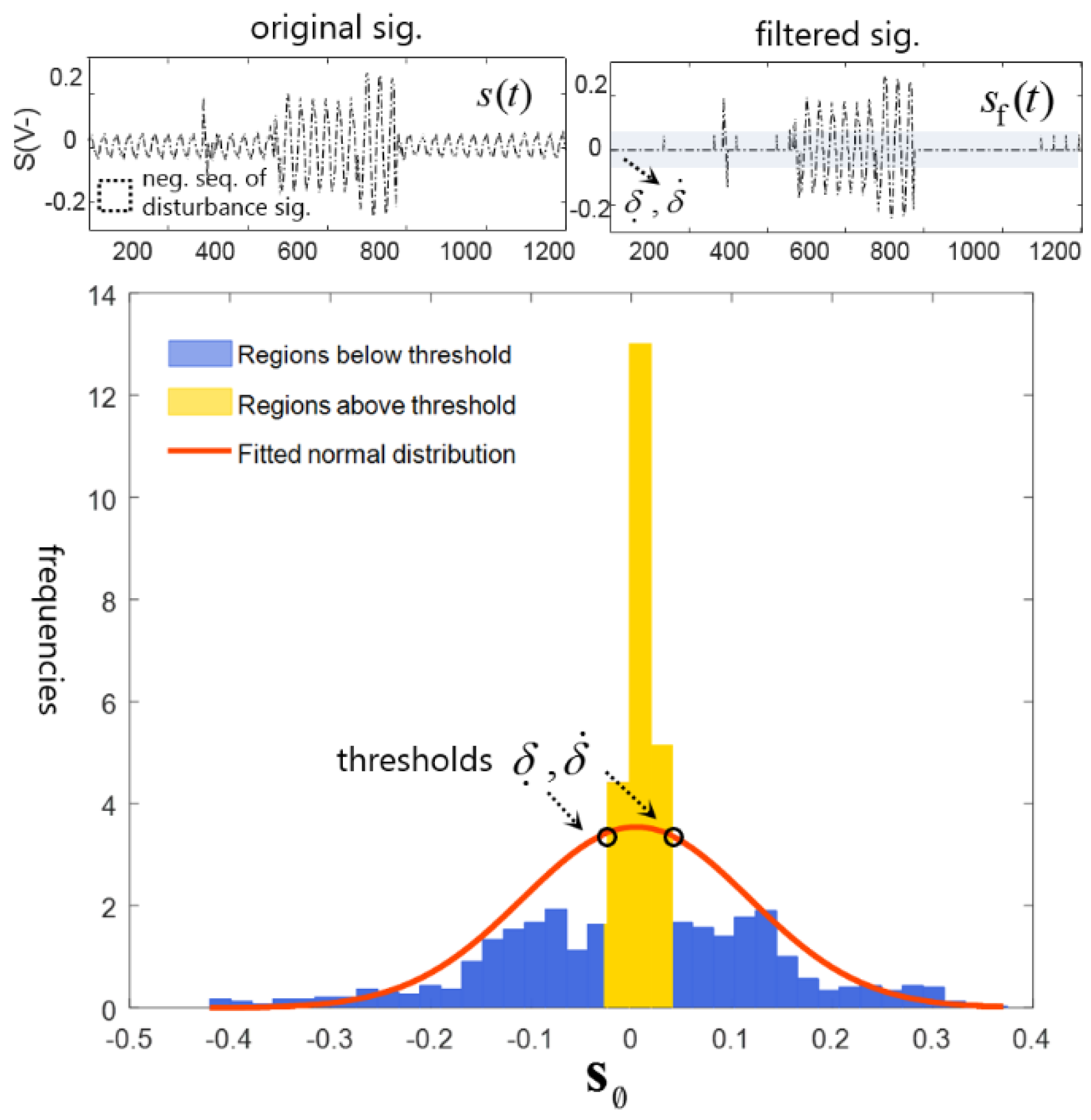

2.3. Symmetrical Component Processing

2.4. Signal Filtering for Feature Extraction

2.5. Waveform Representation with Variable Windows

2.6. Feature Modeling and Extraction

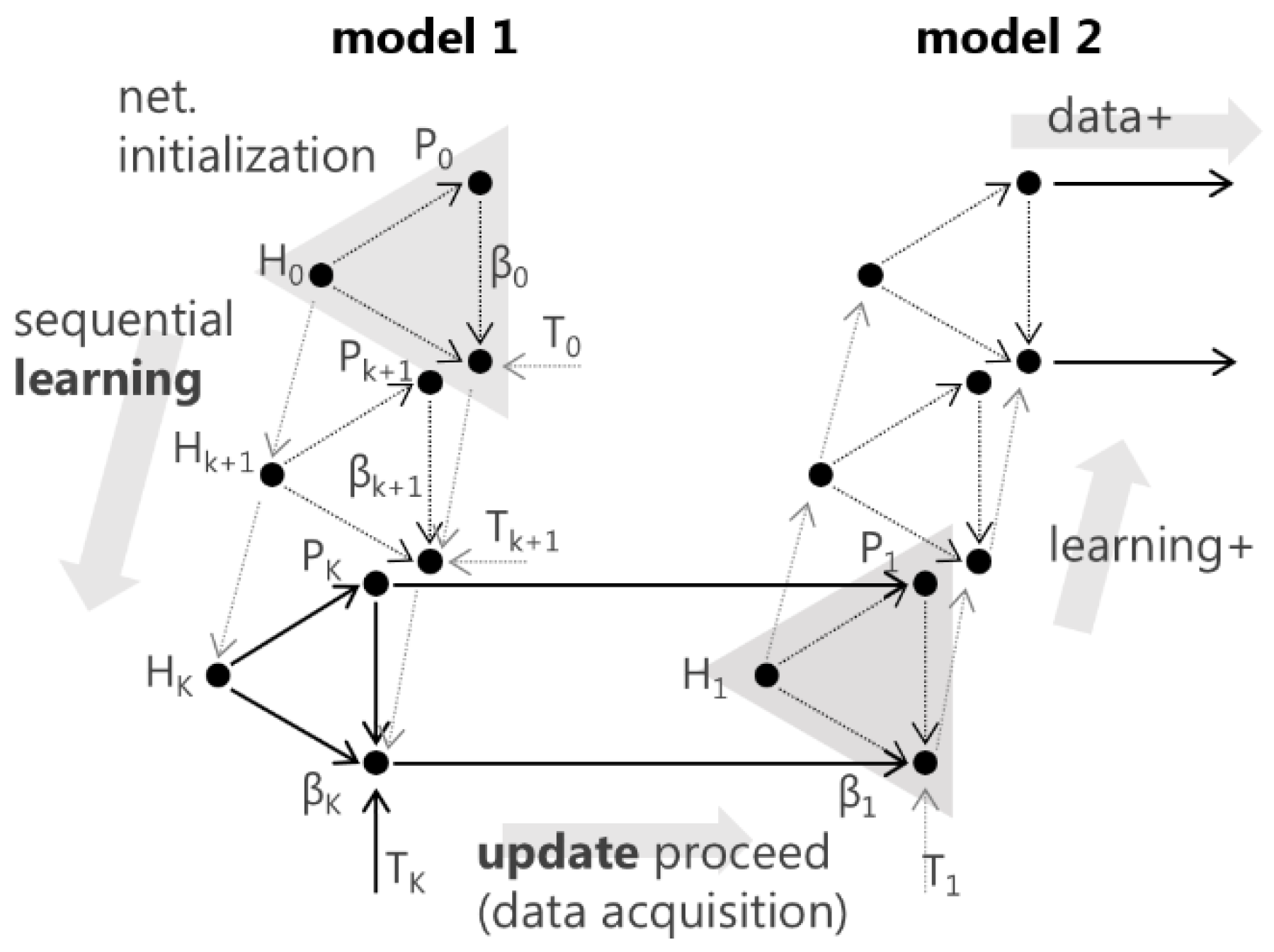

3. Learning for Waveform Pattern Recognition

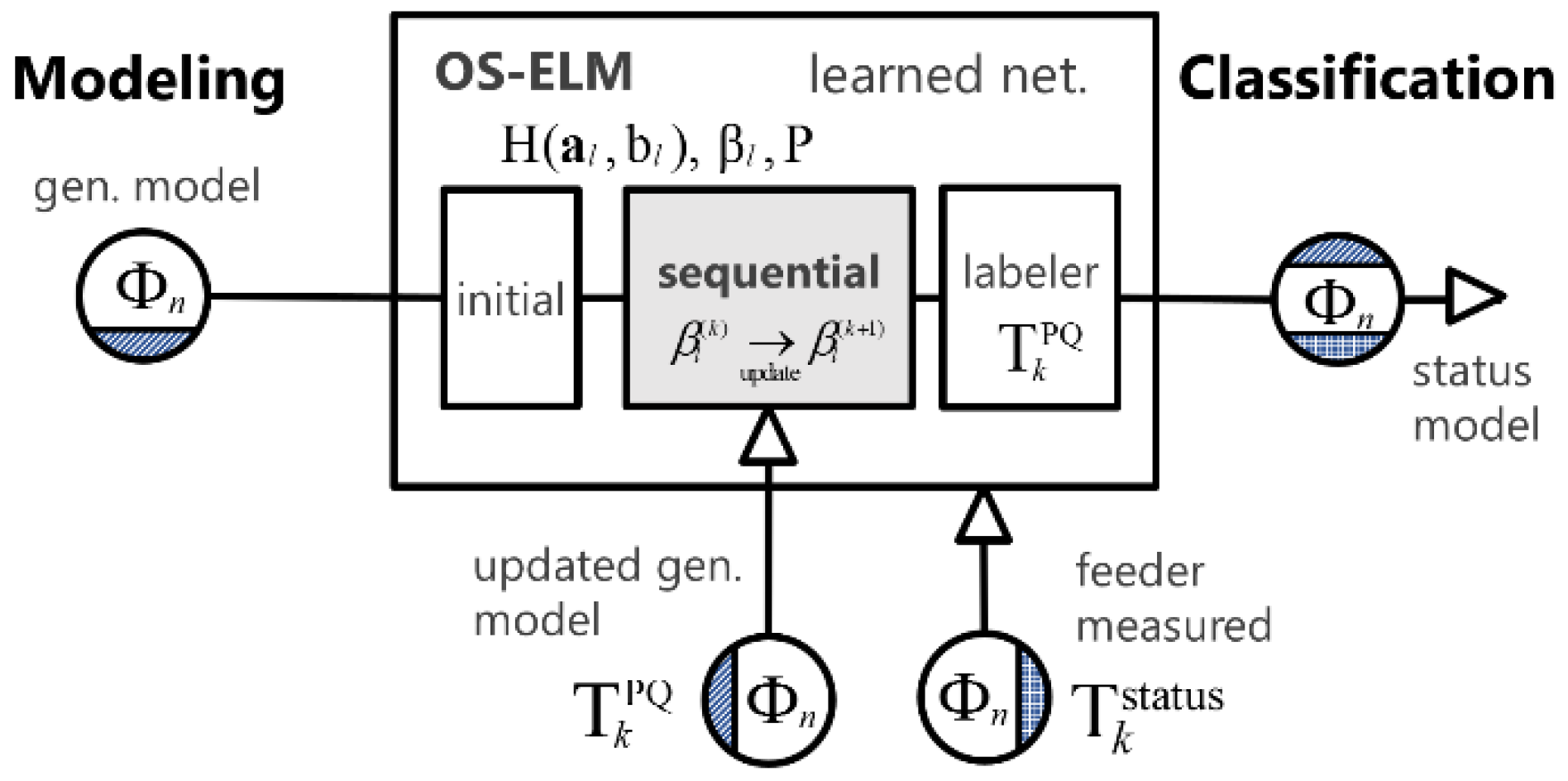

3.1. Using OS-ELM with Condition Signals

3.2. Condition Classification Model Process

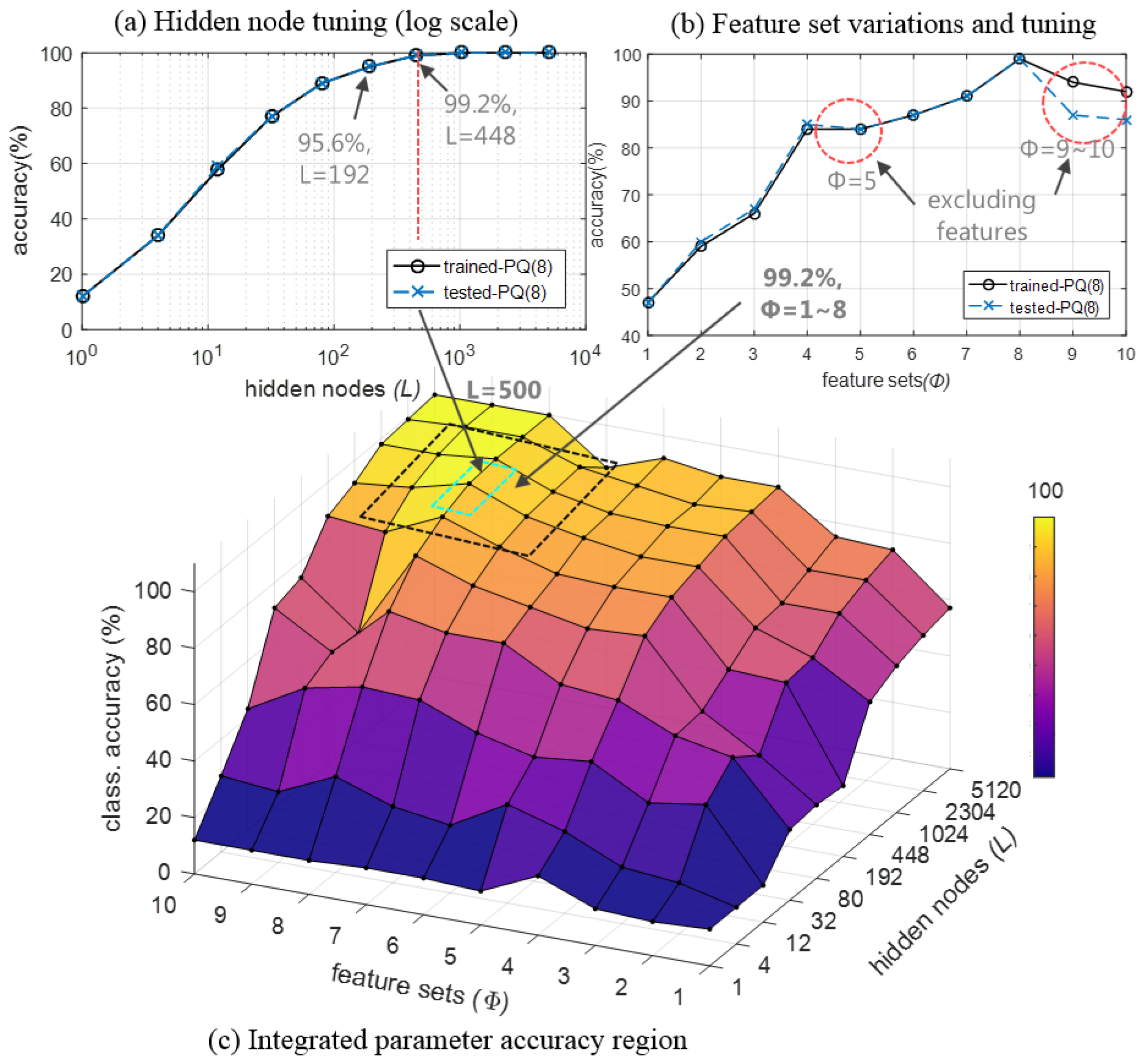

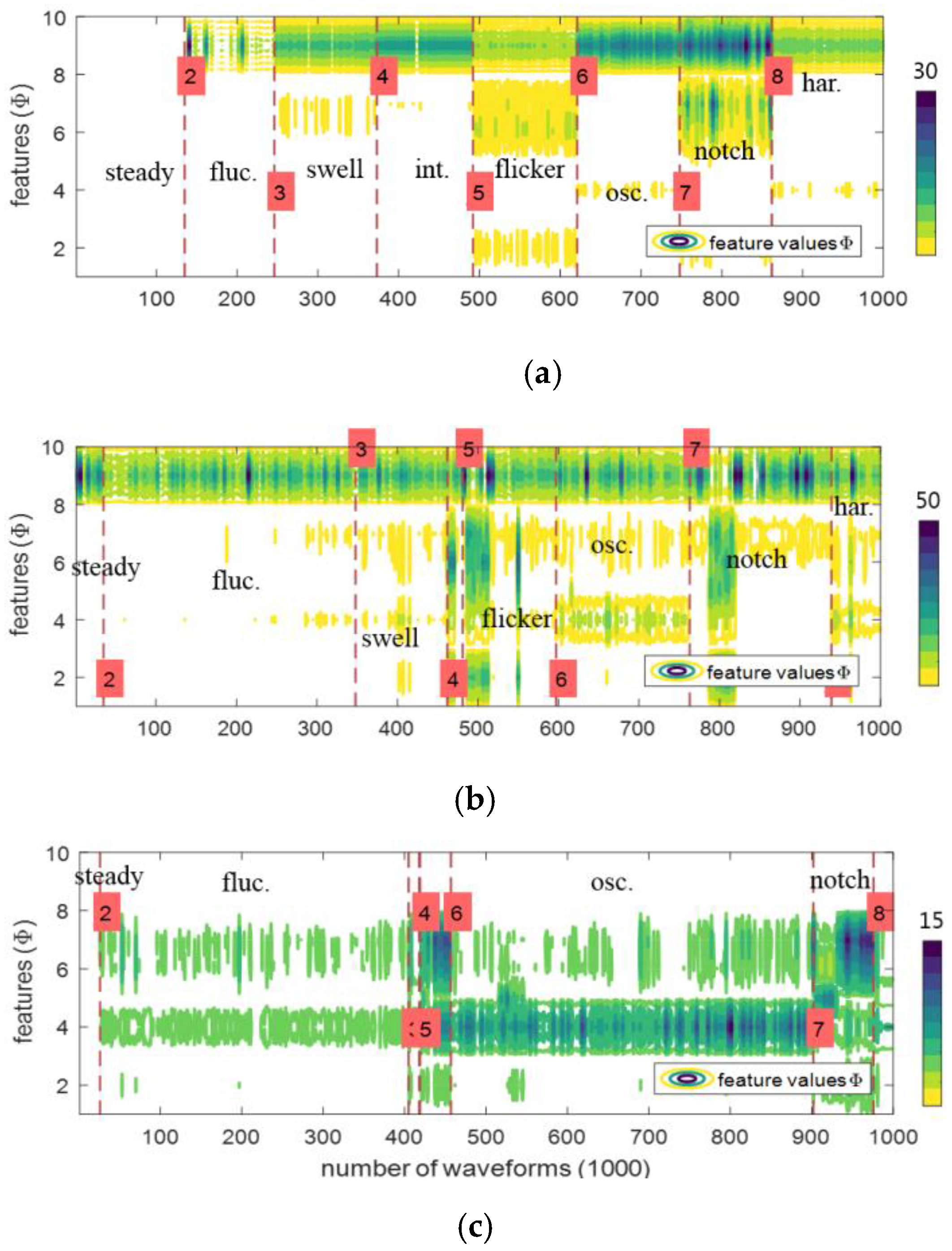

4. Model Validation

5. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest

| Feature type | Model |
|---|---|
| Magnitude (1-norm) | |
| Signal deviation | |
| Disturbance duration | |
| Zero crossing counts | |
| Window average | |
| Window peak values | |
| Window differential | |
| Cycle RMSᵃ deviation | |
| Peak to RMS | |

ᵃ Amplitude of waveforms.
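The Model column of the feature table did not survive extraction, so the authors' feature equations are not available here. As a hedged illustration only, the sketch below computes one plausible scalar for each listed feature type from a sampled waveform. The function name `waveform_features`, the fixed one-cycle windows (Section 2.5 describes variable windows), the 10 % RMS tolerance used to mark a disturbed cycle, and every formula are assumptions for illustration, not the paper's models.

```python
import math
from typing import Dict, List, Sequence


def _windows(x: Sequence[float], size: int) -> List[Sequence[float]]:
    """Split x into non-overlapping windows of `size` samples; drop a partial tail."""
    return [x[k * size:(k + 1) * size] for k in range(len(x) // size)]


def _rms(w: Sequence[float]) -> float:
    """Root-mean-square value of a block of samples."""
    return math.sqrt(sum(v * v for v in w) / len(w))


def waveform_features(x: Sequence[float], samples_per_cycle: int, fs: float,
                      nominal_rms: float, tol: float = 0.1) -> Dict[str, float]:
    """Hypothetical scalar features named after the feature types in the table.

    Assumptions: one window per power-frequency cycle, at least two full
    cycles in `x`, `fs` in Hz, and `tol` as the relative RMS deviation that
    marks a disturbed cycle. None of these formulas is taken from the paper.
    """
    n = len(x)
    mean = sum(x) / n
    cycles = _windows(x, samples_per_cycle)
    cycle_avg = [sum(abs(v) for v in w) / len(w) for w in cycles]
    cycle_peak = [max(abs(v) for v in w) for w in cycles]
    # Relative deviation of each cycle RMS from the nominal RMS
    rel_dev = [abs(_rms(w) - nominal_rms) / nominal_rms for w in cycles]
    disturbed_cycles = sum(1 for d in rel_dev if d > tol)
    return {
        # 1-norm of the sampled waveform
        "magnitude_1norm": sum(abs(v) for v in x),
        # Population standard deviation of the samples
        "signal_deviation": math.sqrt(sum((v - mean) ** 2 for v in x) / n),
        # Time spent in cycles whose RMS deviates from nominal by more than tol
        "disturbance_duration_s": disturbed_cycles * samples_per_cycle / fs,
        # Sign changes between consecutive samples (0 counts as positive)
        "zero_crossings": float(sum(1 for a, b in zip(x, x[1:]) if (a >= 0) != (b >= 0))),
        # Largest per-cycle mean absolute value
        "window_average": max(cycle_avg),
        # Largest per-cycle peak absolute value
        "window_peak": max(cycle_peak),
        # Largest jump in per-cycle peak between consecutive cycles
        "window_differential": max(abs(b - a) for a, b in zip(cycle_peak, cycle_peak[1:])),
        # Largest relative cycle-RMS deviation from nominal
        "cycle_rms_deviation": max(rel_dev),
        # Crest factor: peak absolute value over the RMS of the whole record
        "peak_to_rms": max(abs(v) for v in x) / _rms(x),
    }


# Example: 10 cycles of a 60 Hz sine with RMS 1.0 at 64 samples per cycle,
# and the same record with cycles 4 to 6 (0-based) scaled to half amplitude.
# The 0.1 rad phase offset keeps samples away from exact zero crossings.
SPC, FS = 64, 3840.0
pure = [math.sqrt(2) * math.sin(2 * math.pi * i / SPC + 0.1) for i in range(10 * SPC)]
sag = [v * (0.5 if 4 * SPC <= i < 7 * SPC else 1.0) for i, v in enumerate(pure)]
f_pure = waveform_features(pure, SPC, FS, nominal_rms=1.0)
f_sag = waveform_features(sag, SPC, FS, nominal_rms=1.0)
print(f_sag["disturbance_duration_s"], f_sag["cycle_rms_deviation"])
```

For the halved cycles, the sketch reports a disturbance duration of three cycles (0.05 s at 60 Hz) and a cycle-RMS deviation of about 0.5, while the zero-crossing count is unchanged because scaling does not alter the sign of any sample. Replacing `_windows` with a variable-window segmentation would be the natural next step toward the representation named in Section 2.5.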

| Type | Signal | 1 (Steady) | 2 (Fluc.) | 3 (Swell) | 4 (Int.) | 5 (Flicker) | 6 (Osc.) | 7 (Notch) | 8 (Har.) | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| Voltage changes | V | 57 | 620 | 221 | 30 | 164 | 456 | 316 | 115 | 1979 |
| | I | 48 | 732 | 27 | 4 | 65 | 953 | 117 | 33 | 1979 |
| Fault currents | V | 15 | 79 | 40 | 13 | 35 | 190 | 41 | 124 | 537 |
| | I | 5 | 15 | 24 | 68 | 133 | 79 | 169 | 44 | 537 |
| Abnormal triggers | V | 972 | 2450 | 140 | 26 | 50 | 548 | 96 | 111 | 4393 |
| | I | 123 | 1658 | 36 | 11 | 125 | 2339 | 66 | 35 | 4393 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Moon, S.-K.; Kim, J.-O.; Kim, C. Multi-Labeled Recognition of Distribution System Conditions by a Waveform Feature Learning Model. Energies 2019, 12, 1115. https://doi.org/10.3390/en12061115
