1. Introduction

ICT industries were projected to account for 3.5% of global carbon emissions by 2020, a share predicted to grow to as much as 14% by 2040 [1]. Data centers are becoming a predominant ICT industry due to the rapid growth of Big Data applications, the Internet of Things (IoT), 5G, autonomous systems, Blockchain, and artificial intelligence (AI) [2,3]. In addition, demand for data centers has been predicted to rise exponentially by 2025, which would make data centers consume 33% of total global ICT electricity consumption [4]. It is also predicted that data centers will use 30% of the world's total energy yet produce only 5.5% of the global carbon footprint, owing to the adoption of efficient energy sources and technologies. In addition, data centers are expected to produce 340 metric megatons of CO2 per year by 2030 [5]. These statistics indicate an alarming growth in the power usage and Greenhouse Gas (GHG) emissions of data centers in the coming decades, which has motivated researchers to increase power-usage efficiency and lower the environmental impact of data centers. Recent research conducted by large IT companies in the sector reveals that adopting predictive modeling in the capacity management of data centers is key to unlocking stranded capacity and identifying practices for higher efficiency and reliability [6,7,8].

Gao [8] used a Neural Network to predict the Power Usage Effectiveness (PUE) [9] of a Google data center from the data of different sensors, with the aim of increasing the energy efficiency of the data center. However, this research does not address the different types of uncertainty caused by sensors [10]. Hossain et al. [7] used a trained Belief Rule-Based Expert System (BRBES) to predict PUE under sensor-data uncertainty in a data center. A BRBES uses belief rules as its knowledge base and evidential reasoning as its inference engine, and it is capable of addressing different types of uncertainty, such as incompleteness, ignorance, vagueness, imprecision, and ambiguity. The parameters of belief rules, such as attribute weights, rule weights, and belief degrees, are usually determined by domain experts. However, the values set by experts are not always accurate. Therefore, Hossain et al. [7] used randomly generated rules to learn these parameters from the dataset. However, this method was not suitable because its results were not reproducible. Yang et al. [11] proposed a learning mechanism for BRBES based on sequential quadratic programming, using the fmincon function of the MATLAB Optimization Toolbox. This learning mechanism suffers from the local optimum problem: the algorithm converges to a solution that is best only within a limited neighborhood of the search space rather than to the global optimum. Therefore, a learning mechanism is needed that can address this problem and provide better predictions.

The Differential Evolution (DE) algorithm is less prone to the local optimum problem because of its stochastic search [12,13]. However, the control parameters of DE, such as the crossover (CR) and mutation (F) factors, play an important role in its success. The BRBES-based adaptive DE algorithm, named BRBaDE [14], helps to identify proper values of CR and F for DE. Furthermore, learning mechanisms for BRBES can be divided into two types: parameter optimization and structure optimization. In parameter optimization, the BRBES parameters are optimized, while in structure optimization, the structure of the belief rules of the BRBES is optimized. Yang et al. [15] proposed parameter and structure optimization for BRBES using DE. However, their method has the inherent problem of determining the optimal values of F and CR for DE. Therefore, BRBES accuracy can be improved by employing parameter and structure optimization with BRBaDE as the learning technique.

In our previous work [7], two parameters, indoor and outdoor temperature, were used for predicting PUE. To improve prediction accuracy, this research work also includes wind speed and direction, as these parameters also influence the environment. Accurate prediction of PUE helps data-center operators take the steps necessary to make their data centers more energy-efficient. This paper aims to demonstrate the use of parameter and structure optimization, with BRBaDE as the learning technique for BRBES, to predict the energy-efficiency metric PUE from existing data generated within a data center. The raw data used for the experiments were sourced from a Facebook data center in Luleå. The collected data were used to provide trends and predict data-center energy efficiency.

The article is organized as follows: Section 2 reviews related work, and Section 3 and Section 4 cover the methodology, followed by the experimental part. Subsequently, Section 5 contains the implementation of the predictive models that forecast PUE, followed by Section 6, which presents the results and their analysis. Lastly, Section 7 outlines the conclusion and indicates our future work.

2. Related Work

Data centers are becoming a more integral part of our daily life. All major services, such as telecommunications, transport, public health, and urban traffic, are now using data centers to deploy IT services. Due to the importance of the above-mentioned facilities and increasing demand, the power consumption and operating cost of data centers are rapidly rising. Therefore, researchers are now primarily focusing on optimizing data centers.

In recent years, significant research has been devoted to developing appropriate metrics for measuring data-center energy efficiency. In general, the energy efficiency of a system is measured as the ratio of the useful work done by the system to the total energy delivered to it. For a data center, useful work can be considered as the work performed by its different subsystems. According to the Green Grid Association [16], PUE and Data Center Infrastructure Efficiency (DCiE), shown in Equations (1) and (2), can help to better understand and improve the energy efficiency of existing data centers. They also support smarter managerial decision making for improving data-center efficiency.

$$\mathrm{PUE} = \frac{\text{Total Facility Power}}{\text{IT Equipment Power}} \tag{1}$$

$$\mathrm{DCiE} = \frac{1}{\mathrm{PUE}} \times 100\% = \frac{\text{IT Equipment Power}}{\text{Total Facility Power}} \times 100\% \tag{2}$$

IT Equipment Power includes the load associated with all IT equipment, such as computing, storage, and network devices.

Total Facility Power includes everything that supports the processing of IT equipment load (e.g., mechanical and cooling systems).
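Both Green Grid metrics follow directly from two power readings. The minimal sketch below computes them; the function names and the 1200 kW / 1000 kW readings are hypothetical values chosen only for illustration.

```python
def pue(total_facility_power_kw: float, it_equipment_power_kw: float) -> float:
    """PUE = total facility power / IT equipment power (1.0 is the ideal)."""
    if it_equipment_power_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_power_kw / it_equipment_power_kw


def dcie(total_facility_power_kw: float, it_equipment_power_kw: float) -> float:
    """DCiE = IT equipment power / total facility power, as a percentage (= 100 / PUE)."""
    return 100.0 / pue(total_facility_power_kw, it_equipment_power_kw)


# Hypothetical readings: 1200 kW at the facility meter, 1000 kW drawn by IT equipment.
print(pue(1200.0, 1000.0))   # 1.2
print(dcie(1200.0, 1000.0))  # roughly 83.3 (%)
```

Because DCiE is simply the reciprocal of PUE expressed as a percentage, the two metrics always carry the same information; PUE is the form used for prediction in the rest of this article.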

The Green Grid Association has also proposed metrics such as Carbon Usage Effectiveness (CUE) [17], Water Usage Effectiveness (WUE) [18], and Electronics Disposal Efficiency (EDE) [19] to measure the CO2 footprint, water consumption per year, and disposal efficiency of data centers, respectively. Of all these metrics, PUE and DCiE are considered the de facto industry standards for measuring power efficiency.

Nowadays, data centers contain numerous sensors that generate millions of data points every day. These large volumes of data are usually used for monitoring purposes. However, machine-learning algorithms can exploit these monitoring data to improve the energy efficiency of data centers. In addition, machine-learning algorithms are capable of predicting PUE from these data while accounting for the complexity of data-center components. According to Belden Inc. [20], one of the largest US-based manufacturers of networking, connectivity, and cable products: “It won’t be long before Data Center Infrastructure Management (DCIM) systems will routinely contain an AI tool that not only optimizes critical mechanical- and electrical-equipment performance, but also optimizes compute and storage needs. AI will affect how data-center operations teams work and change what’s involved with day-to-day tasks like fulfilling normal maintenance needs and monitoring networks. They’ll become ‘automation engineers’, using the AI engine to optimize data centers.”

Furthermore, Vigilent [21] is another IT company that has succeeded in reducing data-center cooling capacity by implementing real-time monitoring and machine learning to match cooling needs with the exact cooling capacity. This frees up stranded capacity and makes it possible to determine when cooling infrastructure is at risk of failure, which improves uptime and prevents unexpected downtime and revenue loss.

Moreover, Rego [22] developed a set of software tools named Prognose for the predictive modeling of energy and capacity planning within a data center. Their model analyzes the different metrics that go into building a data center and is intended to perform predictive modeling throughout the life of the data center, not just during planning.

Shoukourian et al. [23] used a neural-network-based machine-learning approach to model the coefficient of performance of a high-performance data center. Balanici et al. [24] used server traffic flow to improve the power usage of a data center, predicting the traffic flow with auto-regressive neural networks. Furthermore, the power usage of a data center can be improved by optimizing the control policy of its cooling system. Li et al. [25] proposed a Reinforcement Learning-based control policy for the cooling system of a data center, which reduced cooling cost by 11% on a simulation platform. Moreover, Haghshenas et al. [26] used a multi-agent Reinforcement Learning algorithm to minimize the energy consumption of a large-scale data center.

Gao [8] conducted extensive work to predict the PUE metric of a Google data center. This work aims to demonstrate that machine learning is an effective tool for leveraging existing sensor data to model data-center performance and improve energy efficiency. The model was tested and validated at Google’s data centers. In this work, a neural network was selected as the mathematical framework for training data-center energy-efficiency models. The training dataset contained 19 normalized input variables and one normalized output, the data-center PUE, each spanning 182,435 samples (two years of operational data). This custom AI DCIM solution reduced overall data-center power consumption by 15% and cooling power by 40%. However, the data coming from sensors contain different types of uncertainty, such as ignorance, incompleteness, ambiguity, vagueness, and imprecision, which arise from sensor malfunctions and faulty or duplicate sensor measurements [10]. A neural network uses forward propagation as its inference procedure, which has no mechanism for addressing data uncertainty. Therefore, BRBES can be used to address these uncertainties by using a Belief Rule Base (BRB) as the knowledge base and Evidential Reasoning (ER) as the inference engine.

Hossain et al. [7] used a trained BRBES to predict the PUE of a data center. BRBES is capable of addressing the uncertainties of sensor data [10]. Furthermore, Yang et al. [11] used the MATLAB optimization function fmincon as a learning methodology for training BRBES. However, this gradient-based method does not always perform well, because it can become trapped in local optima. Furthermore, the above-mentioned research work used a conjunctive BRB, which becomes computationally costly as the number of rules grows with the number of referential values and antecedent attributes. Therefore, a better learning mechanism is needed for training the BRBES, together with an effective BRB that is not computationally costly.

Chang et al. [27] proposed an optimization model for disjunctive BRB in which lower and upper bounds are set for the utility values of the referential values of the antecedent attributes. These strict constraints can cause the optimization to become stuck in local optima instead of finding a globally optimal solution. However, the size of a disjunctive BRB does not grow exponentially with the number of referential values, so it is computationally less costly.
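The difference in rule-base size can be made concrete with a small calculation. The sketch below assumes that a conjunctive BRB contains one rule per combination of referential values, and that a disjunctive BRB, in which every antecedent attribute has the same number of referential values, contains one rule per referential value. The configuration of four attributes with four referential values each is used only as an illustration.

```python
from math import prod


def conjunctive_rule_count(referential_counts):
    """Conjunctive (AND) BRB: one rule per combination of referential values."""
    return prod(referential_counts)


def disjunctive_rule_count(referential_counts):
    """Disjunctive (OR) BRB, assumed here to pair the n-th referential value of
    every attribute in rule n, so all attributes need the same count."""
    if len(set(referential_counts)) != 1:
        raise ValueError("this sketch assumes equal referential-value counts")
    return referential_counts[0]


# Illustration: four antecedent attributes with four referential values each.
counts = [4, 4, 4, 4]
print(conjunctive_rule_count(counts))  # 256
print(disjunctive_rule_count(counts))  # 4
```

Adding a fifth attribute with four referential values would multiply the conjunctive rule count by four, while leaving the disjunctive count unchanged.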

Yang et al. [15] proposed a joint optimization model for BRBES that consists of parameter and structure optimization. A heuristic strategy is used to optimize the structure of the BRB, while a DE algorithm is used to perform parameter optimization. Furthermore, this research work demonstrates the generalization capability of BRBES and illustrates the efficiency of DE for BRBES parameter optimization. However, it does not determine optimal values for the control parameters of DE, which could lead to better results.

In summary, among the optimization techniques mentioned above, the joint optimization of the parameters and structure of BRBES has shown the best results. Among evolutionary algorithms, DE is preferable for the joint optimization of BRBES, as it is better suited to problems with multiple local minima. However, optimal values for the DE control parameters remain to be determined. Furthermore, when DE is used to find the optimal solution, exploration and exploitation of the search space should be balanced. Therefore, a hyperoptimized algorithm is required to find the optimal values of the DE control parameters while ensuring balanced exploration and exploitation of the search space. In the next sections, BRBES and its learning mechanism are discussed in detail.

3. BRBES

This section briefly describes BRBES. BRBES is an integrated expert-system framework for handling different types of uncertainty, with support for both qualitative and quantitative data [28]. BRBES consists of a knowledge base and an inference mechanism. Expert knowledge is elicited and represented in the knowledge base, called the BRB, using belief structures incorporated into IF-THEN rules. The inference mechanism uses ER to process the input and generate the output based on the BRB [29].

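Each belief rule of a BRB consists of an antecedent and a consequent. The antecedent consists of antecedent attributes with referential values that represent the inputs of the system. The consequent has the consequent attribute with associated belief degrees that represent the output of the system. Rules can be prioritized using rule weights. An example of a belief rule is given in Equation (3).

$$R_k: \text{IF } (P_1 \text{ is } A_1^k) \wedge (P_2 \text{ is } A_2^k) \wedge \cdots \wedge (P_{T_k} \text{ is } A_{T_k}^k) \text{ THEN } \{(C_1, \beta_{1k}), (C_2, \beta_{2k}), \ldots, (C_N, \beta_{Nk})\}, \quad \beta_{jk} \ge 0, \ \sum_{j=1}^{N} \beta_{jk} \le 1 \tag{3}$$

with rule weight $\theta_k$ and attribute weights $\delta_{k1}, \delta_{k2}, \ldots, \delta_{kT_k}$, where $P_1, P_2, \ldots, P_{T_k}$ are the antecedent attributes of the $k$th rule, $A_i^k$ is the referential value of the $i$th antecedent attribute, $C_j$ is the $j$th referential value of the consequent attribute, and $\beta_{jk}$ is the degree of belief for the consequent referential value $C_j$. If $\sum_{j=1}^{N} \beta_{jk} = 1$, the $k$th rule is considered complete; otherwise, it is incomplete.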

A belief rule can also be expressed with linguistic terms, as shown in the following example.

In the above rule, External Temperature and Room Temperature are the antecedent attributes, with the referential values “Medium” and “High”, while PUE is the consequent attribute, with the referential values “Critical”, “Moderate”, and “Low”. Because the belief degrees sum to one, the rule is considered complete.
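A belief rule maps naturally onto a small data structure. The sketch below is a hypothetical representation, not the authors' implementation: the `BeliefRule` class and its field names are assumptions, and the belief degrees 0.7, 0.2, and 0.1 are invented so that the completeness check can be shown.

```python
from dataclasses import dataclass, field


@dataclass
class BeliefRule:
    """Hypothetical container for one belief rule of a BRB."""
    antecedent: dict   # antecedent attribute -> referential value
    consequent: dict   # consequent referential value -> belief degree
    rule_weight: float = 1.0                                # theta_k
    attribute_weights: dict = field(default_factory=dict)   # delta per attribute

    def is_complete(self, tol: float = 1e-9) -> bool:
        """A rule is complete when its belief degrees sum to one."""
        return abs(sum(self.consequent.values()) - 1.0) <= tol


rule = BeliefRule(
    antecedent={"External Temperature": "Medium", "Room Temperature": "High"},
    consequent={"Critical": 0.7, "Moderate": 0.2, "Low": 0.1},  # hypothetical degrees
)
print(rule.is_complete())  # True
```

The tolerance in `is_complete` absorbs floating-point rounding; an incomplete rule, whose belief degrees sum to less than one, would return `False`.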

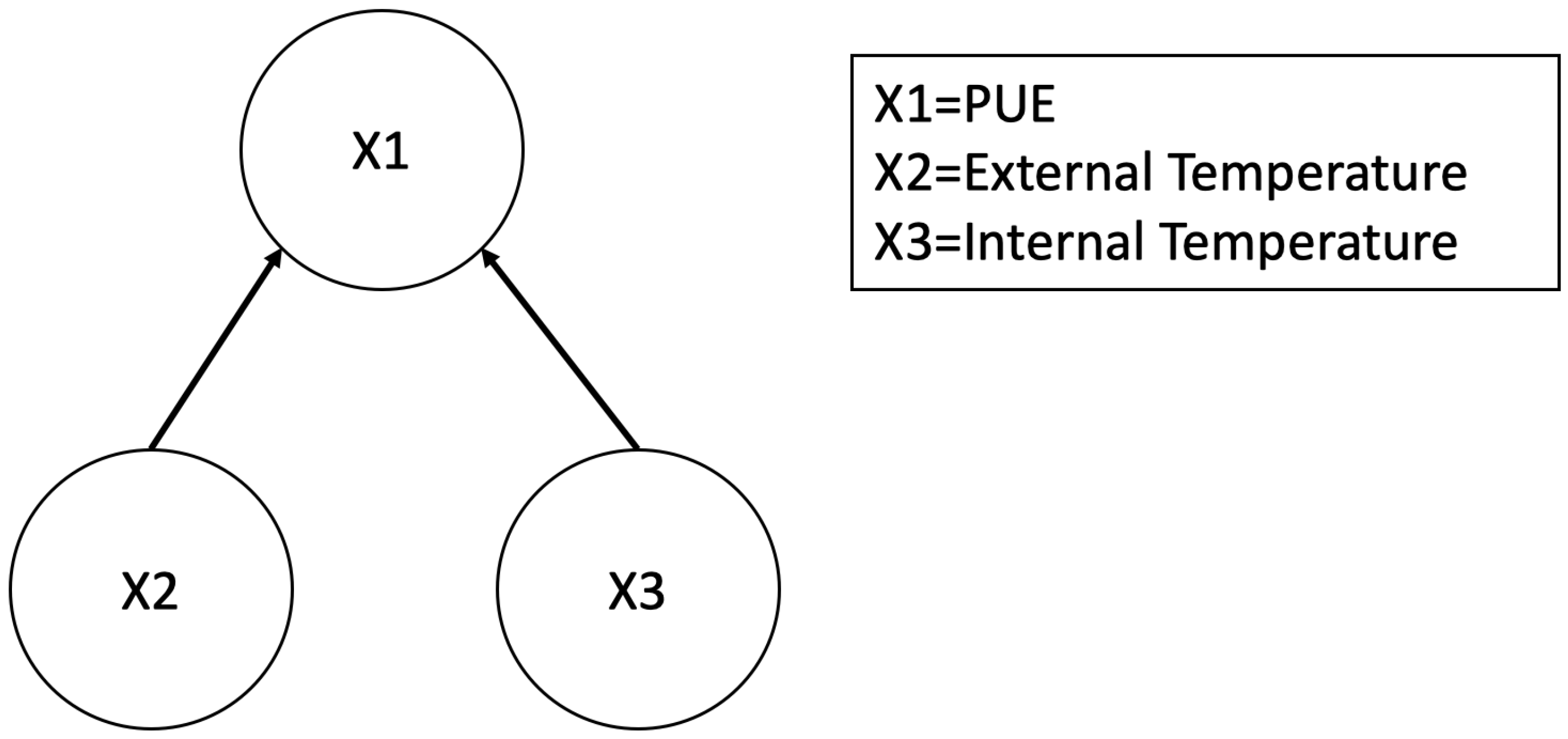

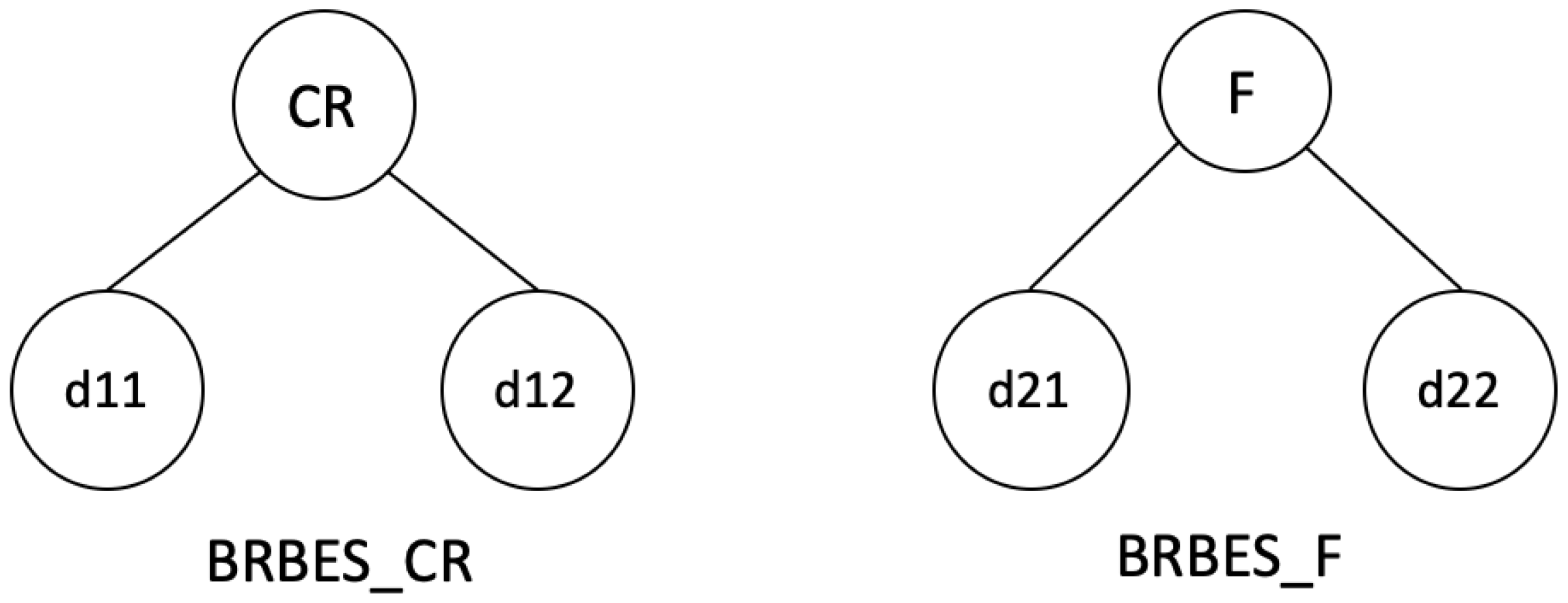

Furthermore, a belief rule can also be represented as a tree structure with two leaf nodes and one parent node, as shown in Figure 1. The antecedent attributes in a belief rule can be connected with either AND or OR, which represent the conjunctive and disjunctive assumptions of the rule, respectively. Depending on the logical connective used, a BRB is called either a conjunctive or a disjunctive BRB.

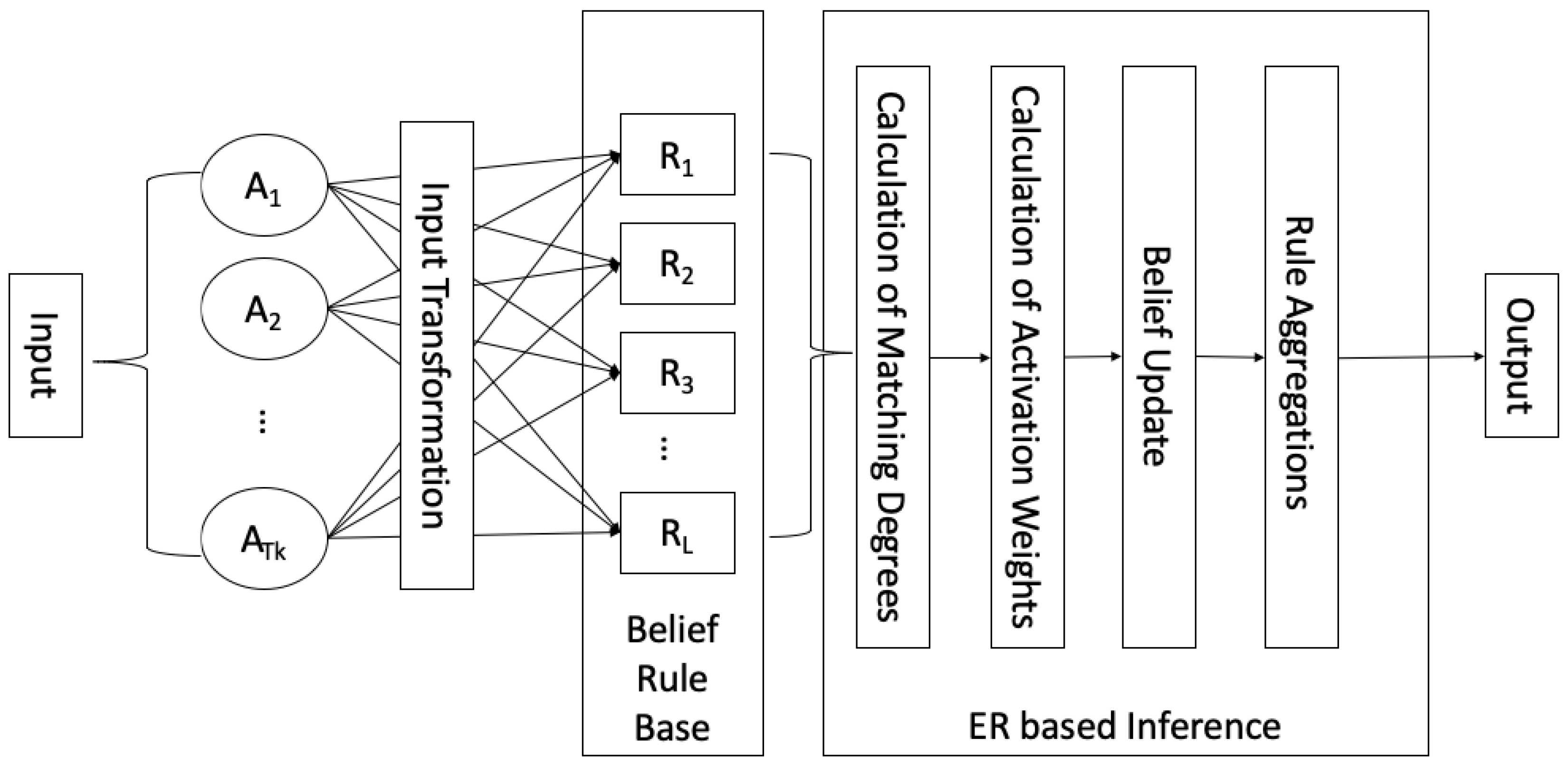

The inference procedure consists of four steps: input transformation, rule activation, belief update, and rule aggregation using the evidential-reasoning approach. During input transformation, each input value is distributed over the referential values of its antecedent attribute; the degree to which the input matches each referential value is called the matching degree. The belief rules at this stage are called packet antecedents and are stored in short-term memory. The activation weights of the rules are calculated from the matching degrees.
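One common way to perform the input transformation in BRBES, assumed here because this section does not spell it out, is to distribute an input between the two adjacent referential values whose utility values bracket it, in proportion to its distance from each. The minimal sketch below implements that scheme; clamping out-of-range inputs and the 10/20/30 °C utilities are illustrative assumptions.

```python
from bisect import bisect_right


def matching_degrees(x, utilities):
    """Distribute input x over referential values with increasing utilities.

    At most two adjacent referential values receive non-zero matching
    degrees, and the degrees always sum to one.
    """
    if any(b <= a for a, b in zip(utilities, utilities[1:])):
        raise ValueError("utilities must be strictly increasing")
    x = min(max(x, utilities[0]), utilities[-1])  # assumed: clamp to the covered range
    degrees = [0.0] * len(utilities)
    n = bisect_right(utilities, x) - 1            # index of the lower bracketing value
    if n == len(utilities) - 1:                   # x equals the largest utility
        degrees[n] = 1.0
        return degrees
    low, high = utilities[n], utilities[n + 1]
    degrees[n] = (high - x) / (high - low)
    degrees[n + 1] = 1.0 - degrees[n]
    return degrees


# Hypothetical temperature attribute: Low, Medium, High at 10, 20, 30 degrees C.
print(matching_degrees(25.0, [10.0, 20.0, 30.0]))  # [0.0, 0.5, 0.5]
```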

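The activation weight $w_k$ of the $k$th rule under the conjunctive assumption can be calculated using the following equation:

$$w_k = \frac{\theta_k \prod_{i=1}^{T_k} \left(\alpha_i^k\right)^{\bar{\delta}_{ki}}}{\sum_{l=1}^{L} \theta_l \prod_{i=1}^{T_l} \left(\alpha_i^l\right)^{\bar{\delta}_{li}}}, \qquad \bar{\delta}_{ki} = \frac{\delta_{ki}}{\max_{i=1,\ldots,T_k} \delta_{ki}}$$

Here, $\theta_k$ is the rule weight of the $k$th rule, $\alpha_i^k$ is the matching degree of its $i$th antecedent attribute, $\bar{\delta}_{ki}$ is the normalized attribute weight, and $L$ is the number of rules. Under the conjunctive assumption, the matching degrees are multiplied.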

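For the disjunctive assumption, however, the activation weight $w_k$ of the $k$th rule can be calculated using the following equation:

$$w_k = \frac{\theta_k \sum_{i=1}^{T_k} \left(\alpha_i^k\right)^{\bar{\delta}_{ki}}}{\sum_{l=1}^{L} \theta_l \sum_{i=1}^{T_l} \left(\alpha_i^l\right)^{\bar{\delta}_{li}}}$$

Here, $\theta_k$ is the rule weight of the $k$th rule, $\alpha_i^k$ is the matching degree of its $i$th antecedent attribute, and $\bar{\delta}_{ki}$ is the normalized attribute weight. Under the disjunctive assumption, the matching degrees are summed.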
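The two activation-weight rules differ only in how the weighted matching degrees of a rule are combined: multiplied for AND, summed for OR. The minimal sketch below shows both, assuming for simplicity that every rule shares the same attribute weights, normalized by their maximum; the function name and the two-rule example are hypothetical.

```python
from math import prod


def activation_weights(matching, rule_weights, attribute_weights, conjunctive=True):
    """Normalized activation weight w_k of every rule.

    matching[k][i]       : matching degree of antecedent attribute i in rule k
    rule_weights[k]      : rule weight theta_k
    attribute_weights[i] : attribute weight delta_i (assumed shared by all rules)
    conjunctive          : True multiplies the weighted matching degrees (AND);
                           False sums them (OR, disjunctive BRB)
    """
    max_delta = max(attribute_weights)
    normalized = [d / max_delta for d in attribute_weights]
    combine = prod if conjunctive else sum
    raw = [
        theta * combine(alpha ** delta for alpha, delta in zip(alphas, normalized))
        for alphas, theta in zip(matching, rule_weights)
    ]
    total = sum(raw)
    return [r / total for r in raw] if total > 0 else [0.0] * len(raw)


# Hypothetical two-rule, two-attribute case with equal weights.
matching = [[0.5, 1.0], [0.5, 0.0]]
print(activation_weights(matching, [1.0, 1.0], [1.0, 1.0], conjunctive=True))   # [1.0, 0.0]
print(activation_weights(matching, [1.0, 1.0], [1.0, 1.0], conjunctive=False))  # [0.75, 0.25]
```

The example shows why the disjunctive form is more permissive: the second rule is silenced under AND because one of its attributes does not match at all, but still receives weight under OR.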

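Moreover, the belief degrees associated with each belief rule in the rule base should be updated when the input data for any of the antecedent attributes are missing (ignorance). The belief-degree update is calculated using the method presented in [28]. Subsequently, rule aggregation is performed using the recursive reasoning algorithm [30], because of its low computational cost, as shown in Equation (7).

$$\beta_j = \frac{\mu \left[ \prod_{k=1}^{L} \left( w_k \beta_{jk} + 1 - w_k \sum_{j=1}^{N} \beta_{jk} \right) - \prod_{k=1}^{L} \left( 1 - w_k \sum_{j=1}^{N} \beta_{jk} \right) \right]}{1 - \mu \prod_{k=1}^{L} \left( 1 - w_k \right)} \tag{7}$$

$$\mu = \left[ \sum_{j=1}^{N} \prod_{k=1}^{L} \left( w_k \beta_{jk} + 1 - w_k \sum_{j=1}^{N} \beta_{jk} \right) - (N-1) \prod_{k=1}^{L} \left( 1 - w_k \sum_{j=1}^{N} \beta_{jk} \right) \right]^{-1}$$

Here, $w_k$ is the activation weight of the $k$th rule, $\beta_{jk}$ is the belief degree of the $k$th rule for the consequent referential value $C_j$, and $\beta_j$ is the aggregated belief degree for $C_j$.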

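The output of the rule-aggregation procedure, a belief distribution over the consequent referential values, is converted into a crisp value using the utility values of the consequent attribute, as shown in Equation (8); this crisp value is considered the final result.

$$y = \sum_{j=1}^{N} u(C_j)\, \beta_j \tag{8}$$

where $u(C_j)$ is the utility value of the $j$th consequent referential value. The BRBES execution procedure described above is shown in Figure 2.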
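The aggregation and crisp-conversion steps can be sketched in a few lines. The code below assumes the analytical form of the ER algorithm, which yields the same result as combining the rules recursively, and a utility-weighted sum for the crisp output; the function names and the two-rule example are hypothetical.

```python
from math import prod


def er_aggregate(weights, beliefs):
    """Analytical evidential-reasoning aggregation of activated rules.

    weights[k]    : activation weight w_k of rule k
    beliefs[k][j] : belief degree beta_jk of rule k for consequent value j
    Returns the aggregated belief degree beta_j for every consequent value.
    """
    n = len(beliefs[0])
    sums = [sum(b) for b in beliefs]  # sum over j of beta_jk, per rule
    # For each consequent value j: product over rules of (w_k beta_jk + 1 - w_k sum_j beta_jk)
    per_value = [
        prod(w * b[j] + 1 - w * s for w, b, s in zip(weights, beliefs, sums))
        for j in range(n)
    ]
    residual = prod(1 - w * s for w, s in zip(weights, sums))
    mu = 1.0 / (sum(per_value) - (n - 1) * residual)
    denominator = 1 - mu * prod(1 - w for w in weights)
    return [mu * (p - residual) / denominator for p in per_value]


def crisp_output(aggregated, utilities):
    """Crisp BRBES output: utility-weighted sum of the aggregated belief degrees."""
    return sum(u * b for u, b in zip(utilities, aggregated))


# Hypothetical case: two complete rules pointing at opposite consequent values.
beta = er_aggregate([0.8, 0.2], [[1.0, 0.0], [0.0, 1.0]])
print(beta)                            # about [0.941, 0.059]
print(crisp_output(beta, [0.0, 1.0]))  # about 0.059
```

When all rules are complete, the aggregated belief degrees also sum to one, so the crisp output stays within the range of the utility values.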

4. Learning in BRBES Based on BRBaDE

Different parameters of BRBES, such as attribute weights, rule weights, and belief degrees ($\delta$, $\theta$, and $\beta$), play an important role in result accuracy. These parameters, usually known as learning parameters, are generally assigned by domain experts or selected randomly. The antecedent attributes and belief rules are prioritized using the attribute weights and rule weights, respectively. The belief degrees of the consequent attribute represent the uncertainty of the output. Hence, the learning parameters are important for a BRBES, and a suitable method is needed to find their optimal values. By training the BRBES with data, the optimal values of the learning parameters can be discovered [11]. Different optimization techniques have been proposed for this purpose [11,31,32,33,34,35].

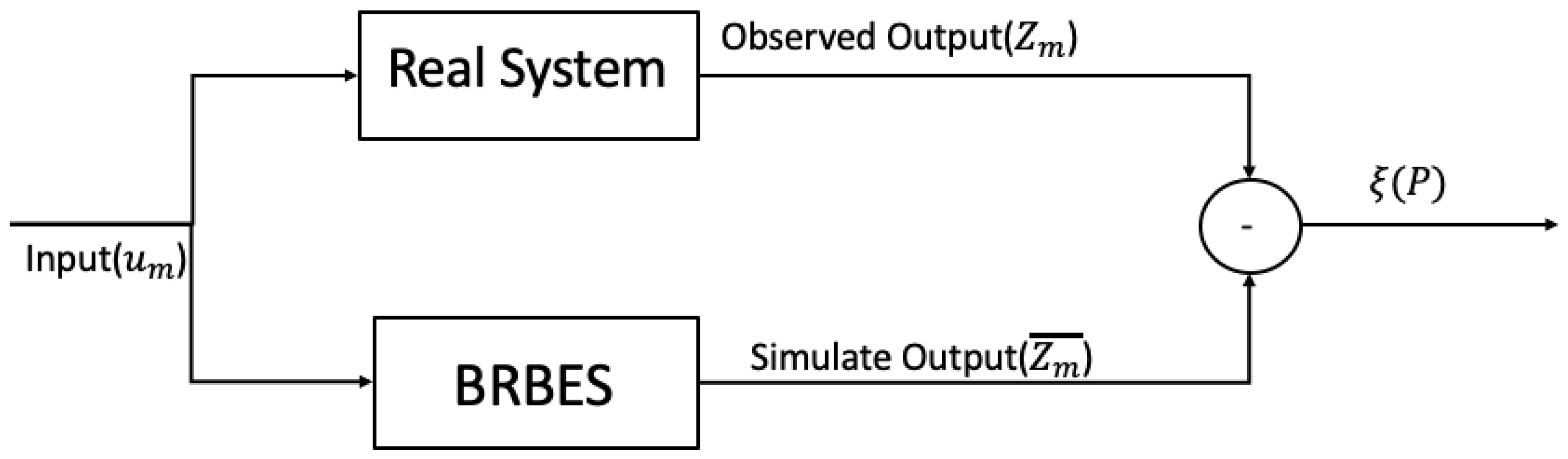

The learning parameters are trained to their optimal values by minimizing an objective function subject to linear equality and inequality constraints. The output of the BRBES is considered the simulated output ($y$), and the output of the real system is called the observed output ($\hat{y}$). The difference $\xi(P)$ between the simulated and observed outputs, where $P$ denotes the set of learning parameters, is minimized by the optimization process, as shown in Figure 3. The training sample contained $M$ data points, where the input for BRBES was $x_m$, the observed output was $\hat{y}_m$, and the simulated output was $y_m$ ($m = 1, \ldots, M$). The error $\xi(P)$ was measured by Equation (9).

$$\xi(P) = \frac{1}{M} \sum_{m=1}^{M} \left( y_m - \hat{y}_m \right)^2 \tag{9}$$

The learning parameters were optimized using the following equation:

$$\min_{P} \ \xi(P)$$

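The objective function for training the BRBES is evaluated through Equations (7) and (8), which produce the simulated output. Additionally, the values of the attribute weights, rule weights, and belief degrees range between zero and one. To enforce these criteria, the following constraints were considered, where $N$ is the number of consequent referential values, $L$ the number of rules, and $T$ the number of antecedent attributes:

Utility values of the consequent attributes: $0 \le u(C_j) \le 1$, $j = 1, \ldots, N$

Rule weights: $0 \le \theta_k \le 1$, $k = 1, \ldots, L$

Antecedent attribute weights: $0 \le \delta_i \le 1$, $i = 1, \ldots, T$

Consequent belief degrees for the $k$th rule: $0 \le \beta_{jk} \le 1$ and $\sum_{j=1}^{N} \beta_{jk} = 1$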
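A population-based optimizer such as DE needs the error measure and some way of keeping candidate solutions feasible. The sketch below pairs the mean squared error with a simple repair step that clips every parameter to [0, 1] and rescales each rule's belief degrees to sum to one. The repair strategy is an assumption made for illustration; this section does not state how the constraints are enforced, and penalty terms would be an equally valid choice.

```python
def mse(simulated, observed):
    """Mean squared error between simulated (BRBES) and observed outputs."""
    if len(simulated) != len(observed) or not simulated:
        raise ValueError("need two non-empty sequences of equal length")
    return sum((y - o) ** 2 for y, o in zip(simulated, observed)) / len(simulated)


def _clip(value):
    """Clamp a parameter to the closed interval [0, 1]."""
    return min(max(value, 0.0), 1.0)


def repair(utilities, rule_weights, attribute_weights, beliefs):
    """Project a candidate solution onto the constraints (assumed strategy).

    Utilities and weights are clipped to [0, 1]; each rule's belief degrees
    are clipped to [0, 1] and rescaled so that they sum to one.
    """
    repaired_beliefs = []
    for row in beliefs:
        row = [_clip(b) for b in row]
        total = sum(row)
        if total > 0:
            repaired_beliefs.append([b / total for b in row])
        else:  # degenerate row: fall back to a uniform distribution
            repaired_beliefs.append([1.0 / len(row)] * len(row))
    return (
        [_clip(u) for u in utilities],
        [_clip(t) for t in rule_weights],
        [_clip(d) for d in attribute_weights],
        repaired_beliefs,
    )


print(mse([1.0, 2.0], [1.0, 4.0]))  # 2.0
```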

DE is highly influenced by its mutation and crossover factors [36]. The mutation factor (F) and the crossover factor (CR) can be adapted to improve DE performance [37]. Because F and CR may change during each iteration of DE, adapting them facilitates a more efficient search for optimal values. Most research on DE parameter adaptation varies the parameter values based on the fitness values of the optimization function. However, previous researchers [38,39] have not considered the different types of uncertainty related to DE approaches. Therefore, we propose a BRBES-based DE parameter-adaptation algorithm, BRBaDE, which addresses different types of uncertainty.
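To show where F and CR enter the algorithm, the sketch below implements one generation of the classic DE/rand/1/bin scheme of Storn and Price with fixed F and CR. It is not BRBaDE itself: in BRBaDE, the two BRBESs would supply new F and CR values before each generation. Clamping trial genes to a box and the sphere test function are illustrative assumptions.

```python
import random


def de_generation(population, objective, F, CR, bounds, rng=random):
    """Advance a population by one generation of DE/rand/1/bin (minimization).

    population : list of equal-length lists of floats (at least 4 individuals)
    F          : mutation (scaling) factor
    CR         : crossover probability in [0, 1]
    bounds     : (low, high) box applied to every gene of the trial vector
    """
    dim = len(population[0])
    low, high = bounds
    next_population = []
    for i, target in enumerate(population):
        # Mutation: combine three distinct individuals other than the target.
        others = [p for j, p in enumerate(population) if j != i]
        a, b, c = rng.sample(others, 3)
        mutant = [a[d] + F * (b[d] - c[d]) for d in range(dim)]
        # Binomial crossover: each gene comes from the mutant with probability CR,
        # and one randomly chosen gene always does.
        forced = rng.randrange(dim)
        trial = [
            min(max(mutant[d], low), high) if (d == forced or rng.random() < CR) else target[d]
            for d in range(dim)
        ]
        # Greedy selection: the trial replaces the target if it is no worse.
        if objective(trial) <= objective(target):
            next_population.append(trial)
        else:
            next_population.append(target)
    return next_population


def sphere(x):
    """Toy objective with its global minimum 0 at the origin."""
    return sum(v * v for v in x)


rng = random.Random(0)
population = [[rng.uniform(-5.0, 5.0) for _ in range(3)] for _ in range(20)]
for _ in range(200):
    population = de_generation(population, sphere, F=0.5, CR=0.9, bounds=(-5.0, 5.0), rng=rng)
best = min(population, key=sphere)
```

A large F widens the steps taken by mutants (exploration), while a small F refines around existing solutions (exploitation); CR controls how many genes of the target are replaced, which is why adapting both per generation can balance the search.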

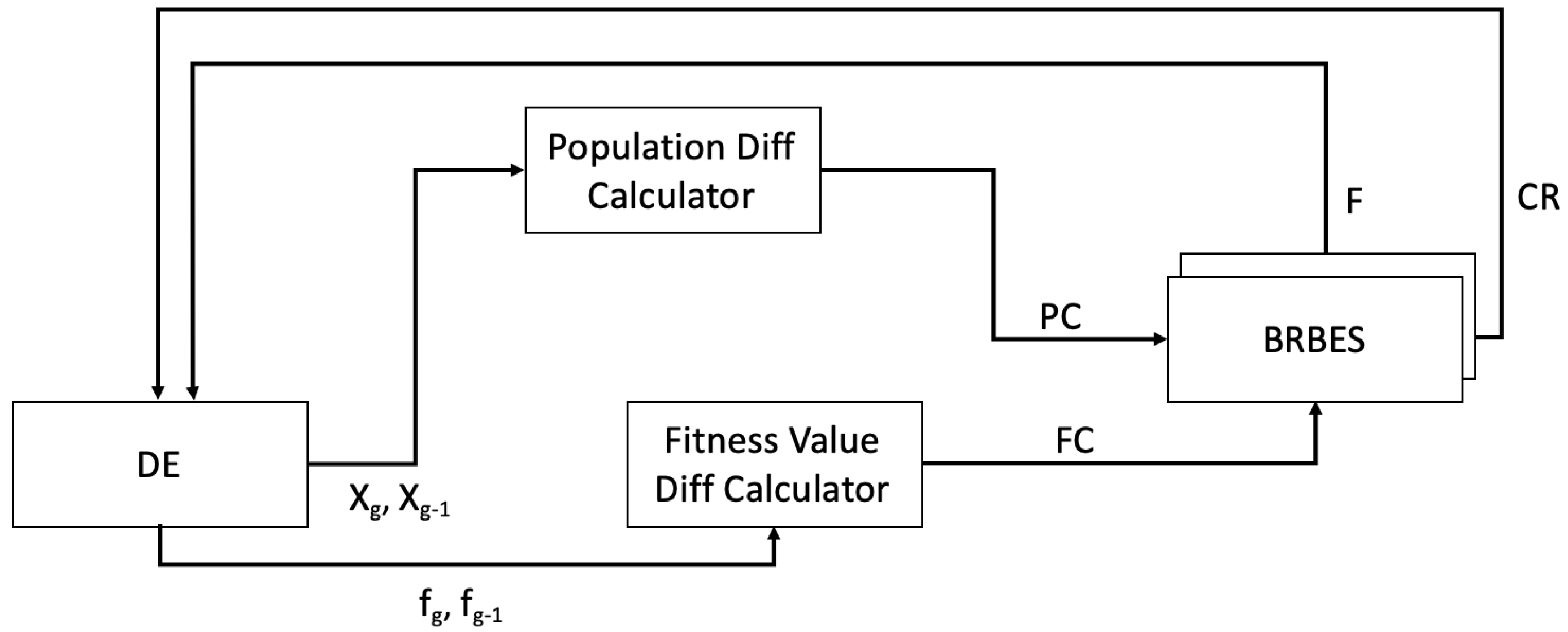

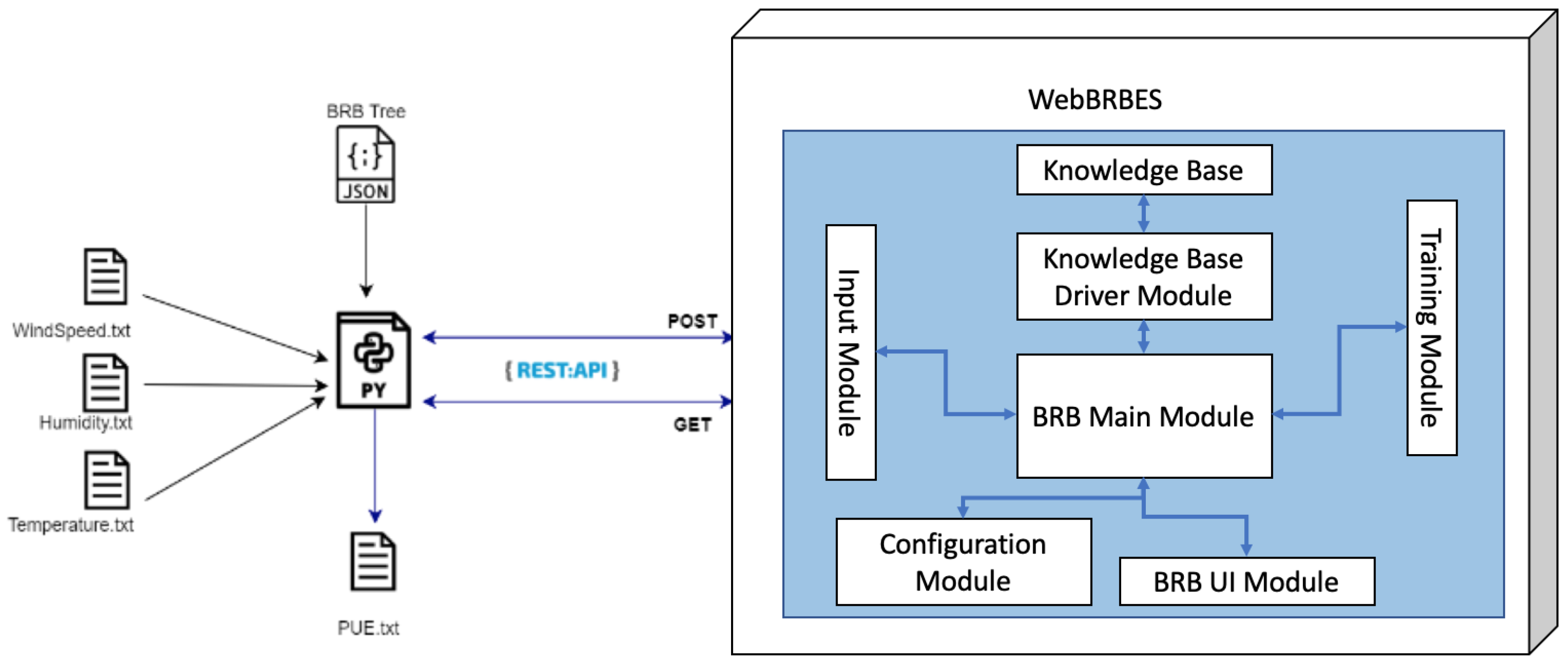

Figure 4 depicts the system diagram of BRBaDE.

In BRBaDE, the changes in the population and in the objective-function values in each generation are supplied as input to two BRBESs. Then, based on the belief rule base and using the evidential-reasoning approach, new F and CR values are selected for the next generation, as shown in Figure 4. The BRBESs help to achieve optimal exploration and exploitation of the search space by considering these changes in each generation.

The first input is the change in magnitude of the population vector during the last two generations, computed from the population vectors $X_g$ and $X_{g-1}$ of the $g$th and $(g-1)$th generations, respectively. The second input is the change in magnitude of the objective function during the last two generations, computed from the function values $f_i^{g}$ and $f_i^{g-1}$ of the $i$th member of the population in the $g$th and $(g-1)$th generations, respectively. Both changes were rescaled to the range 0 to 1 using Equations (17) and (18). Similarly, using Equations (19) and (20), the values were rescaled to the range 0 to 2 and subsequently used as inputs for the BRBESs that determine the new values of F and CR.
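The sketch below illustrates how the two BRBES inputs could be assembled from consecutive generations. The concrete definitions are assumptions chosen for illustration, not the paper's Equations (17) to (20): the population change is taken as the mean Euclidean distance moved by each individual, the objective change as the mean absolute change in objective value, and rescaling as a clamped linear min-max map with hypothetical bounds.

```python
import math


def population_change(prev_population, curr_population):
    """Mean Euclidean distance moved by each individual between two generations
    (hypothetical stand-in for the population-change input)."""
    moves = [math.dist(p, c) for p, c in zip(prev_population, curr_population)]
    return sum(moves) / len(moves)


def objective_change(prev_values, curr_values):
    """Mean absolute change of each individual's objective value between two
    generations (hypothetical stand-in for the objective-change input)."""
    deltas = [abs(c - p) for p, c in zip(prev_values, curr_values)]
    return sum(deltas) / len(deltas)


def rescale(value, low, high, upper):
    """Linearly map value from [low, high] onto [0, upper], clamping at the ends."""
    if high <= low:
        return 0.0
    fraction = (value - low) / (high - low)
    return upper * min(max(fraction, 0.0), 1.0)


prev_pop, curr_pop = [[0.0, 0.0], [1.0, 1.0]], [[3.0, 4.0], [1.0, 1.0]]
d_pop = population_change(prev_pop, curr_pop)       # (5 + 0) / 2 = 2.5
d_obj = objective_change([4.0, 2.0], [1.0, 2.0])     # (3 + 0) / 2 = 1.5
inputs_0_to_1 = (rescale(d_pop, 0.0, 5.0, 1.0), rescale(d_obj, 0.0, 3.0, 1.0))
inputs_0_to_2 = (rescale(d_pop, 0.0, 5.0, 2.0), rescale(d_obj, 0.0, 3.0, 2.0))
```

Large changes between generations suggest the search is still exploring, while changes close to zero suggest it has settled; the BRBESs translate these two signals into new F and CR values.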

Table 1, Table 2, and Figure 5 present the details of the BRBESs used to predict the values of F and CR.

Therefore, the proposed BRBaDE provides a solution for addressing uncertainty in objective functions by incorporating BRBES with DE. Furthermore, it facilitates optimal exploration and exploitation of the search space, which leads to finding the optimal solution with fewer iterations.

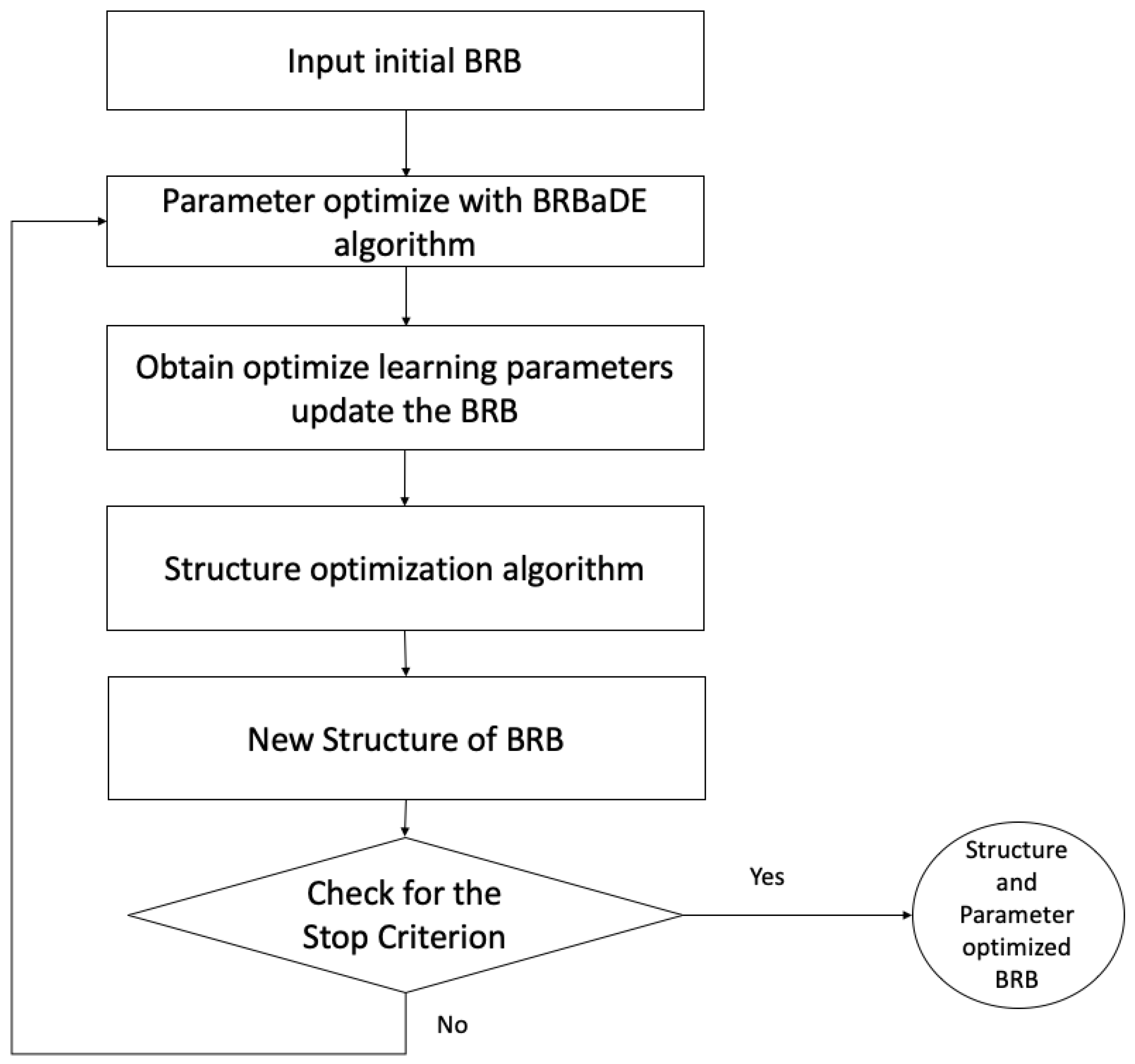

Subsequently, structure optimization of the initial BRB was performed using the Structure Optimisation based on Heuristic Strategy (SOHS) algorithm described in [15]. These iterations continue until the structure of the BRB remains unchanged for a certain number of iterations. The BRBaDE-based parameter and structure-optimization process described above is presented in Figure 6.

In summary, parameter optimization (PO) using BRBaDE is performed on the initial BRB, while structure optimization (SO) is performed using the SOHS algorithm. When the number of iterations reaches the threshold value, the stop criterion is met and the resulting BRB is taken as the optimized BRB; otherwise, the loop continues.
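The alternation between PO and SO can be written as a small driver loop. In the sketch below, `optimize_parameters`, `optimize_structure`, and `structure_of` are hypothetical hooks standing in for a BRBaDE run, an SOHS step, and a summary of the rule-base structure; the `patience` and `max_iterations` defaults are illustrative.

```python
def joint_optimize(brb, optimize_parameters, optimize_structure, structure_of,
                   patience=3, max_iterations=100):
    """Alternate PO and SO until the BRB structure stops changing.

    optimize_parameters(brb) -> brb   # hypothetical hook, e.g. a BRBaDE run
    optimize_structure(brb)  -> brb   # hypothetical hook, e.g. an SOHS step
    structure_of(brb)        -> hashable summary of the rule-base structure
    """
    unchanged = 0
    for _ in range(max_iterations):
        previous = structure_of(brb)
        brb = optimize_parameters(brb)
        brb = optimize_structure(brb)
        # Count consecutive rounds in which the structure stayed the same.
        unchanged = unchanged + 1 if structure_of(brb) == previous else 0
        if unchanged >= patience:
            break
    return brb


# Toy stand-ins: PO counts its calls; SO prunes referential values down to four.
def toy_po(brb):
    return {**brb, "po_runs": brb["po_runs"] + 1}


def toy_so(brb):
    return {**brb, "n_ref": max(4, brb["n_ref"] - 1)}


result = joint_optimize({"n_ref": 6, "po_runs": 0}, toy_po, toy_so, lambda b: b["n_ref"])
print(result)  # {'n_ref': 4, 'po_runs': 5}
```

With `patience=3`, the toy run stops after the structure has stayed at four referential values for three consecutive rounds, which takes five PO calls in total.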

By incorporating BRBaDE as the parameter-optimization technique and performing structure optimization of the BRB using SOHS, a better-optimized BRB can be generated, which in turn helps produce more accurate results. The next section presents the implementation of BRBES for predicting the PUE of the Facebook data center.

6. Results

An accurate PUE prediction model is very useful for efficiently managing data centers. It allows data-center operators to evaluate the sensitivity of data-center PUE to its operational parameters. Furthermore, a comparison of actual versus predicted PUE values provides invaluable insight into real-time plant efficiency and can be used to generate performance alerts. Additionally, a data-center efficiency model allows operators to simulate data-center operating configurations without making physical changes; this is otherwise a challenging task due to the complexity of modern data centers and the interactions among multiple control systems. Therefore, it is very important to verify the accuracy of the predicted PUE. We used the Mean Square Error (MSE), a metric very commonly used for measuring the error of predicted PUE. The PO and SO using BRBaDE were implemented in MATLAB 2018b. All experiments were conducted on a MacBook Pro with a 2.2 GHz Intel Core i7 processor and 16 GB RAM. The dataset was partitioned in an 80:20 ratio for training and testing, with fivefold cross-validation. The results of training and testing are shown in Table 5 and Table 6. The second, third, and fourth columns of Table 5 and Table 6 represent the MSE values for fmincon-based learning and for PO and SO using BRBaDE for the Conjunctive and Disjunctive BRBs, respectively. From Table 5, it can be observed that PO and SO using BRBaDE for the Disjunctive BRB performed better than the other methods, with a best value of 0.000230 and an average value of 0.000302 for the training dataset. Similar results were observed for the test datasets in Table 6. The best MSE obtained by the BRBES for the training dataset after training by PO and SO using BRBaDE for a disjunctive BRB was 0.000230, which is shown in the last row of the fourth column of Table 5. On the other hand, the best MSE obtained by the BRBES trained with the fmincon-based learning mechanism was 0.000320, which can be seen in the last row of the second column of Table 5. The fmincon-based learning mechanism performed only parameter optimization. Therefore, it can be concluded that the accuracy of BRBES can be improved by employing parameter and structure optimization with BRBaDE as the learning technique.
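Fivefold cross-validation naturally yields the 80:20 training/testing ratio described above, since each fold holds out one fifth of the data. The minimal sketch below shows the splitting and the averaged held-out MSE; the shuffling seed, the interleaved fold assignment, and the mean-predictor placeholder model are assumptions, and the 298 samples correspond to the size of the Facebook dataset.

```python
import random


def kfold_splits(n_samples, k=5, seed=0):
    """Yield (train_indices, test_indices) for each of k shuffled folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[f::k] for f in range(k)]
    for f in range(k):
        train = [i for g in range(k) if g != f for i in folds[g]]
        yield train, folds[f]


def cross_validated_mse(xs, ys, fit, k=5, seed=0):
    """Average held-out MSE over k folds; fit(train_x, train_y) returns a predictor."""
    fold_mse = []
    for train, test in kfold_splits(len(xs), k, seed):
        predict = fit([xs[i] for i in train], [ys[i] for i in train])
        errors = [(predict(xs[i]) - ys[i]) ** 2 for i in test]
        fold_mse.append(sum(errors) / len(errors))
    return sum(fold_mse) / len(fold_mse)


def fit_mean(train_x, train_y):
    """Placeholder model that always predicts the mean training PUE."""
    mean_pue = sum(train_y) / len(train_y)
    return lambda x: mean_pue


splits = list(kfold_splits(298))
print(sorted(len(test) for _, test in splits))  # [59, 59, 60, 60, 60]
```

Replacing `fit_mean` with a routine that trains a BRBES (or an ANN or ANFIS model) on the training indices gives the per-fold MSE values that are averaged in Table 5 and Table 6.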

In addition, the BRBES is compared with two other machine-learning techniques, namely, the Artificial Neural Network (ANN) and the Adaptive Neuro-Fuzzy Inference System (ANFIS) [44]. The ANN was implemented in MATLAB, with one input layer, one hidden layer with three neurons, and one output layer. Levenberg–Marquardt was used as the training algorithm for the ANN. The ANFIS model was also developed in MATLAB. The MATLAB function "gaussmf" was used as the membership function for the inputs, and the hybrid learning method was used to train the fuzzy inference system. The results are presented in the fifth and sixth columns of Table 5 and Table 6 for training and testing, respectively. For the training dataset, the average MSE over all cross-validation folds for PO and SO using BRBaDE for the Disjunctive BRB was 0.000302, while ANN and ANFIS had 0.001727 and 0.00346, respectively. This clearly shows that PO and SO using BRBaDE for the Disjunctive BRB performed better than ANN and ANFIS on the training dataset. For the testing dataset, PO and SO using BRBaDE for the Disjunctive BRB also achieved a lower average MSE than ANFIS and ANN, as well as the minimum MSE value among the three methods.

For a more detailed analysis of the results, the root mean square error (RMSE), mean absolute percentage error (MAPE), and mean absolute error (MAE) were calculated on the test dataset, as shown in Table 7. From the table, it can be observed that PO and SO using BRBaDE for the Disjunctive BRB achieved a lower RMSE than fmincon, PO and SO using the conjunctive BRB, ANN, and ANFIS. Similar behavior can be seen in the MAE and MAPE values.
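The three additional error metrics can be computed from the same predicted and observed PUE series. In the sketch below, MAPE is returned as a fraction rather than a percentage, an assumption based on the magnitude of the reported values; the four sample values are hypothetical.

```python
import math


def rmse(predicted, observed):
    """Root mean square error."""
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed))


def mae(predicted, observed):
    """Mean absolute error."""
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(observed)


def mape(predicted, observed):
    """Mean absolute percentage error as a fraction (multiply by 100 for %).

    Observed values must be non-zero, which holds for PUE because it is at least 1.
    """
    return sum(abs((o - p) / o) for p, o in zip(predicted, observed)) / len(observed)


# Hypothetical observed and predicted PUE values.
observed = [1.10, 1.12, 1.08, 1.15]
predicted = [1.11, 1.10, 1.09, 1.14]
print(rmse(predicted, observed), mae(predicted, observed), mape(predicted, observed))
```

RMSE weights large errors more heavily than MAE, which is why the two can rank methods differently when one method occasionally makes larger mistakes.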

Furthermore, the receiver operating characteristic (ROC) curve provides a detailed visualization and comparative assessment of different methods [45]. It is therefore used in various domains, such as clinical applications [46], atmospheric science, and many other fields [47]. Here, the ROC curve is used to assess the accuracy of the trained disjunctive and conjunctive BRBs, ANN, and ANFIS for the prediction of PUE. The area under the curve (AUC) of the ROC curve measures the accuracy of a result, with one being the highest value. Usually, ROC curves with a larger area, and hence higher AUC values, are considered better in terms of performance.
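ROC analysis needs binary labels, whereas PUE is continuous, and this section does not state how the observed values were binarized. The sketch below therefore assumes a hypothetical threshold on the observed PUE and computes the AUC with the Mann–Whitney formulation: the probability that a randomly chosen positive sample receives a higher predicted score than a randomly chosen negative one, with ties counting one half.

```python
def roc_auc(labels, scores):
    """AUC via the Mann-Whitney statistic (ties count one half)."""
    positives = [s for lab, s in zip(labels, scores) if lab]
    negatives = [s for lab, s in zip(labels, scores) if not lab]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


# Hypothetical binarization: a sample is positive when its observed PUE exceeds 1.13.
observed = [1.08, 1.12, 1.15, 1.20]
predicted = [1.09, 1.10, 1.16, 1.18]
labels = [o > 1.13 for o in observed]
print(roc_auc(labels, predicted))  # 1.0
```

An AUC of 0.5 corresponds to random ranking, which is why values near 0.5, or confidence intervals that include it, indicate little discriminative ability.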

Figure 9 illustrates the ROC curves of fmincon, Disjunctive BRB, Conjunctive BRB, ANN, and ANFIS for predicting the PUE of the Facebook data center. The AUC and confidence-interval (CI) values of these methods are shown in Table 8. The AUC for fmincon, Disjunctive BRB, Conjunctive BRB, ANN, and ANFIS was 0.50, 0.68, 0.29, 0.57, and 0.53, respectively. Taking into account the 95% CI, the lower and upper limits of the AUC for fmincon, Disjunctive BRB, Conjunctive BRB, ANN, and ANFIS were 0.31–0.69, 0.46–0.90, 0.12–0.45, 0.36–0.79, and 0.32–0.75, respectively. Hence, it can be argued that the Disjunctive BRB trained by PO and SO using BRBaDE performed better than the other machine-learning methods, such as ANN and ANFIS, and the fmincon-based optimization method. The Disjunctive BRB performed better than the other methods not only in terms of AUC but also with respect to the lower and upper limits of the 95% CI. PO and SO using BRBaDE help to uncover the optimal values of the learning parameters and the optimal BRB structure based on the training dataset. PO is enhanced by BRBaDE because the BRBES helps to find optimal values of F and CR during each DE iteration while ensuring balanced exploration and exploitation of the search space of the learning parameters. The Disjunctive BRB performed better than the Conjunctive BRB because of the OR logical operator in its belief rules, which helped to capture the relationship between the attributes more accurately for this use case. Due to the strictness of the AND logical operator, the Conjunctive BRB failed to capture the relationship between the attributes and performed poorly. ANFIS inherits a limitation of fuzzy systems, which cannot address all types of uncertainty; as a result, ANFIS did not perform as well as the Disjunctive BRB. ANN performed better than ANFIS but not better than the Disjunctive BRB. ANN had only one type of learning parameter, the weights, whereas BRBES had multiple learning parameters, such as attribute weights, rule weights, and belief degrees. Hence, the limited set of learning parameters hindered the performance of ANN.

Furthermore, the complexity of a model influences its predictions. The Akaike Information Criterion (AIC) [48] and the Bayesian Information Criterion (BIC) [49] are commonly used to compare the complexity of different models. AIC takes into account the loss function (sum of squared errors) and the number of parameters used to calibrate the model. BIC is closely related to AIC and is also based on the likelihood function; however, BIC penalizes the number of parameters more heavily. Therefore, AIC and BIC are used to compare the complexity of fmincon-based BRBES optimization, PO and SO using BRBaDE for the disjunctive and conjunctive BRBs, ANN, and ANFIS.

Table 9 shows the results of the AIC and BIC comparisons among these methods. Among the different methods, the Disjunctive BRB was preferable, as it had the lowest AIC and BIC values. Thus, the model demonstrates its reliability compared with the other models.
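For models fitted by least squares, AIC and BIC are often computed from the sum of squared errors (SSE), the number of samples n, and the number of parameters k, assuming Gaussian errors and dropping constant terms. The sketch below uses that common form; this section does not state which variant produced Table 9, and the numbers in the example are hypothetical.

```python
import math


def aic(sse, n, k):
    """Least-squares form of AIC: n * ln(SSE / n) + 2k (constant terms dropped)."""
    return n * math.log(sse / n) + 2 * k


def bic(sse, n, k):
    """Least-squares form of BIC: n * ln(SSE / n) + k * ln(n)."""
    return n * math.log(sse / n) + k * math.log(n)


# Hypothetical comparison: identical fit quality, 10 versus 20 parameters, n = 100.
extra_aic = aic(0.5, 100, 20) - aic(0.5, 100, 10)   # 2 * 10 = 20
extra_bic = bic(0.5, 100, 20) - bic(0.5, 100, 10)   # 10 * ln(100), about 46.05
```

Each extra parameter costs 2 under AIC but ln(n) under BIC, so BIC penalizes complexity more heavily whenever n exceeds about 7, which applies to both datasets used here.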

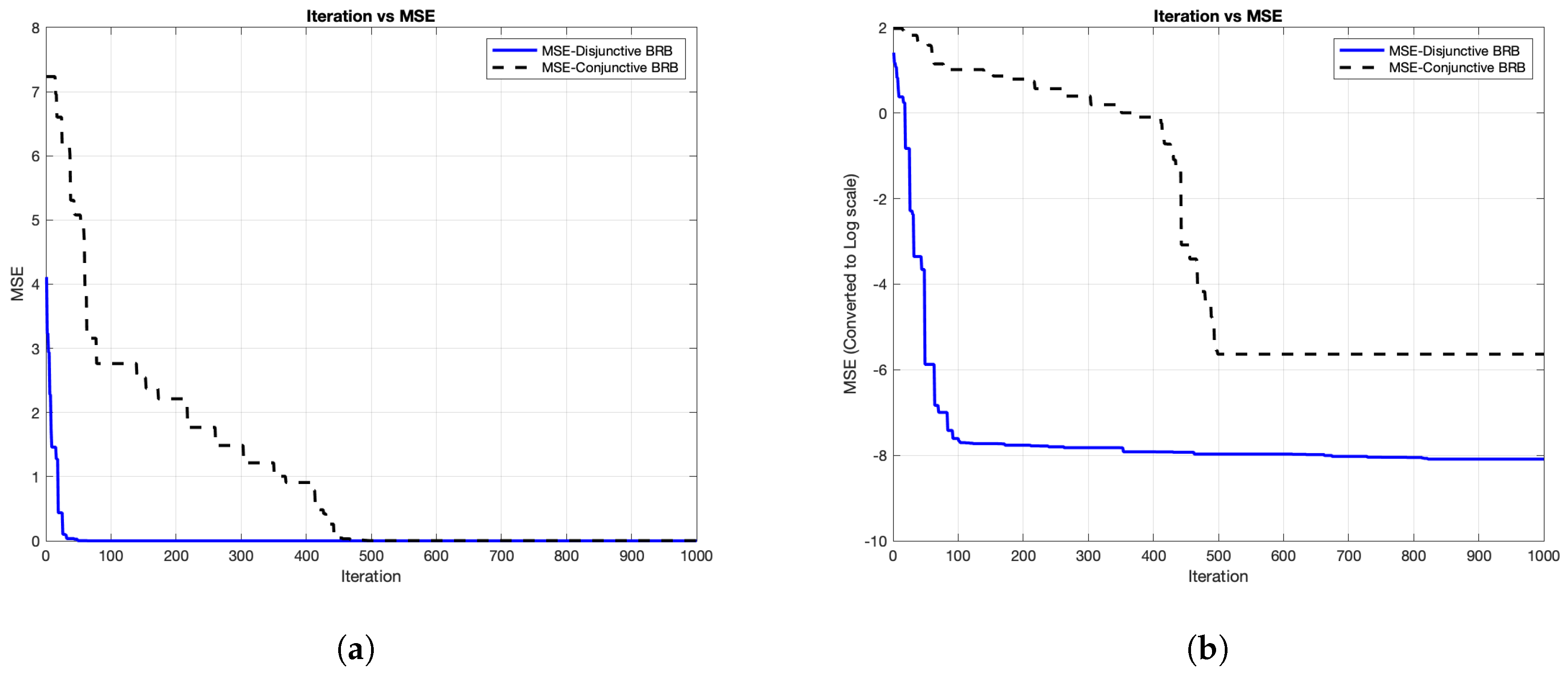

The convergence of PO and SO using BRBaDE for the Conjunctive and Disjunctive BRBs is depicted in Figure 10. The solid blue line illustrates the decrease in MSE for the Disjunctive BRB at each iteration. The initial MSE was 4.111466, which decreased to 0.000281 after the 1000th iteration. The dashed line represents the convergence for the Conjunctive BRB. The initial MSE for the Conjunctive BRB was 7.235699, which decreased to 0.003566 around the 499th iteration, after which it remained constant. Even though BRBaDE reached a steady state for the Conjunctive BRB in fewer iterations, it produced a more accurate result for the Disjunctive BRB. For better visualization of the convergence of BRBaDE, the MSE values were converted to a logarithmic scale, as shown in Figure 10b. From Figure 10, it can be concluded that PO and SO using BRBaDE performed better for the Disjunctive BRB than for the Conjunctive BRB.

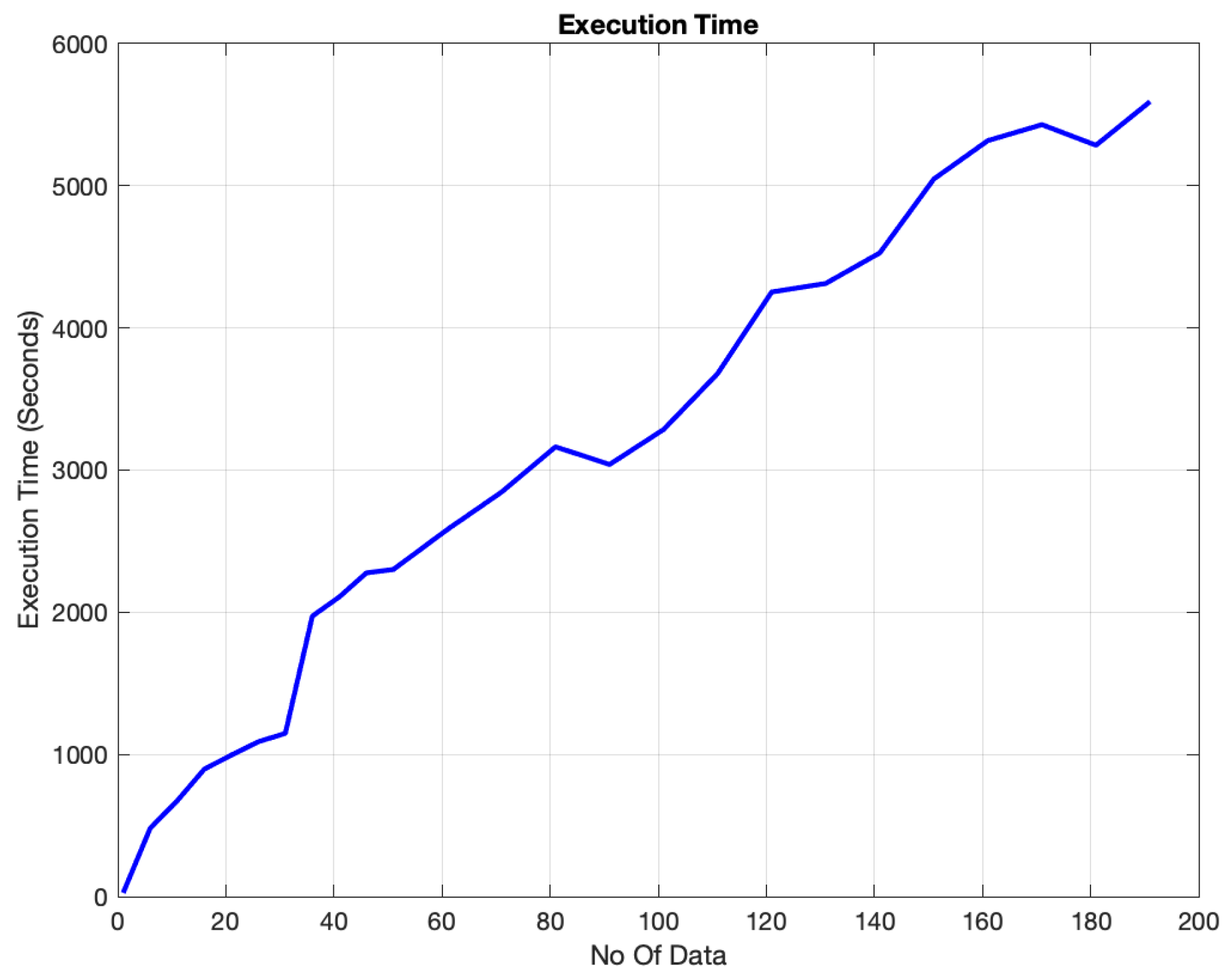

Figure 11 illustrates the learning time of PO and SO using BRBaDE for different data sizes, where it can be observed that the learning time grew linearly with the data size.

To further investigate the impact of BRBES PO and SO using BRBaDE, the initial and trained structures of the disjunctive BRB are presented in Table 10 and Table 11, respectively. As is evident from Table 11, the trained structure of the disjunctive BRB has four referential values for each antecedent attribute, with optimized utility values that improve the accuracy of predicting PUE. Furthermore, the attribute weights of the antecedent attributes were also optimized based on the training dataset; higher attribute weights indicate more important attributes. Similarly, the utility values of the consequent attribute were also optimized, as also shown in Table 11. The trained disjunctive BRB is presented in Table 12. It can be observed that the rule weights and belief degrees changed with respect to the initial rule base (Table 3), improving prediction accuracy.

Furthermore, to evaluate the robustness of the proposed learning mechanism, we used another dataset from the Joint Information Systems Committee (JISC)-funded project named Measuring Data Center Efficiency [50,51]. The dataset contained outside temperature, server-room temperature, IT-equipment energy consumption, and PUE from 26 October 2011 to 15 December 2011, with a sampling interval of 30 min. The dataset contained a total of 2400 data points, whereas the Facebook dataset contained 298 data points, as mentioned in Section 5.1. Therefore, this dataset is significantly larger than the previous one. Outside temperature, server-room temperature, and IT-equipment energy consumption were considered as inputs and PUE as the output. The dataset was partitioned in an 80:20 ratio for training and testing. The Disjunctive BRB with our proposed learning algorithm, BRBaDE (Section 4), ANN, and ANFIS were used for predicting PUE.
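The stated dataset size follows from the collection window and the sampling interval. The short check below assumes that the window starts at 00:00 on 26 October 2011 and ends at 00:00 on 15 December 2011, which gives 50 days of 48 half-hourly samples each, and also derives the resulting 80:20 split.

```python
from datetime import datetime, timedelta

start = datetime(2011, 10, 26)   # assumed start: 00:00 on 26 October 2011
end = datetime(2011, 12, 15)     # assumed end: 00:00 on 15 December 2011
interval = timedelta(minutes=30)

n_samples = (end - start) // interval   # 50 days * 48 samples per day
n_train = n_samples * 80 // 100         # 80% for training
n_test = n_samples - n_train            # remaining 20% for testing
print(n_samples, n_train, n_test)       # 2400 1920 480
```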

Table 13 presents the results of predicting PUE with the Disjunctive BRB trained by PO and SO using BRBaDE, ANN, and ANFIS for different evaluation metrics (RMSE, MAPE, and MAE) on the testing dataset. The RMSE values for PO and SO using BRBaDE, ANN, and ANFIS are 0.0139, 0.01418, and 0.0138, respectively. The MAPE values for these algorithms are 0.0035, 0.0197, and 0.0074, respectively, and their MAE values are 0.0111, 0.0115, and 0.0112, respectively. PO and SO using BRBaDE therefore achieves the lowest MAPE and MAE of the three methods, while its RMSE is lower than that of ANN and nearly identical to that of ANFIS. Overall, the Disjunctive BRB with PO and SO using BRBaDE performs better than ANN and ANFIS on most of the evaluation metrics.

Thus, the above discussion shows that prediction using the new learning algorithm BRBaDE performed better than ANN and ANFIS, which we attribute to its capability of addressing different kinds of uncertainty in data. Furthermore, BRBaDE finds better optimal values than the gradient-based fmincon algorithm in MATLAB.