Towards a New Generation of Building Envelope Calibration

Abstract

1. Introduction

- Initialization problems also produce a great variability in building energy performance [22].

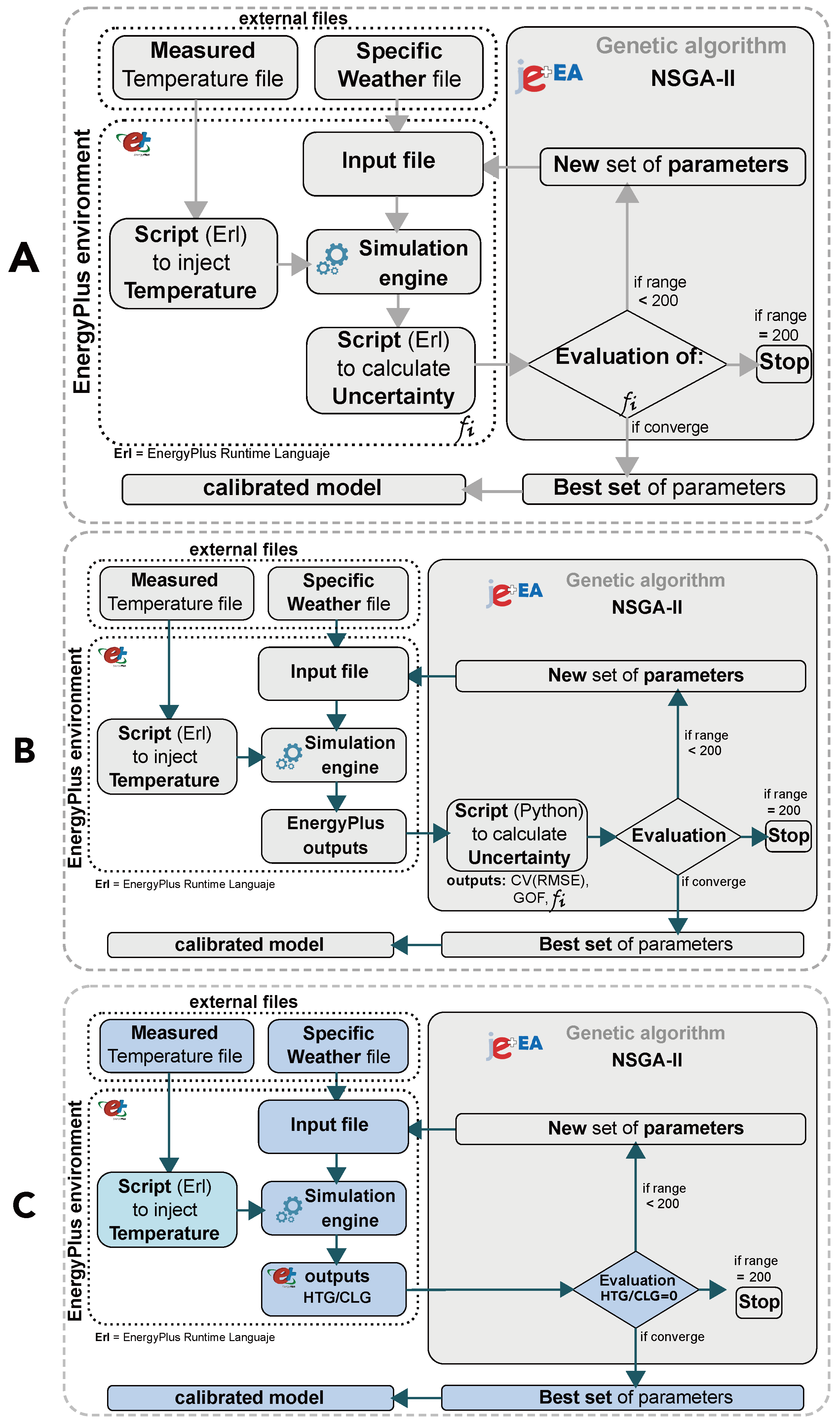

2. The New Approach: The Law-Data-Driven Model

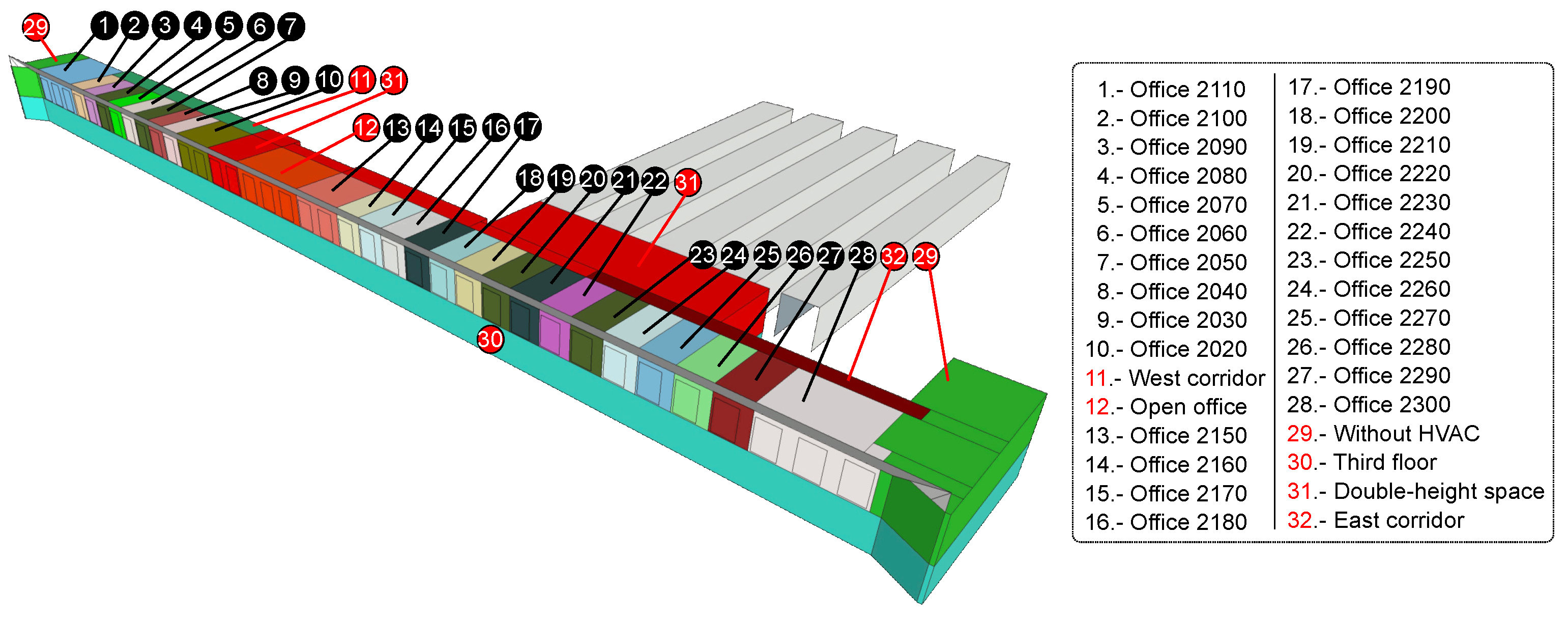

3. The Design of the Experiment

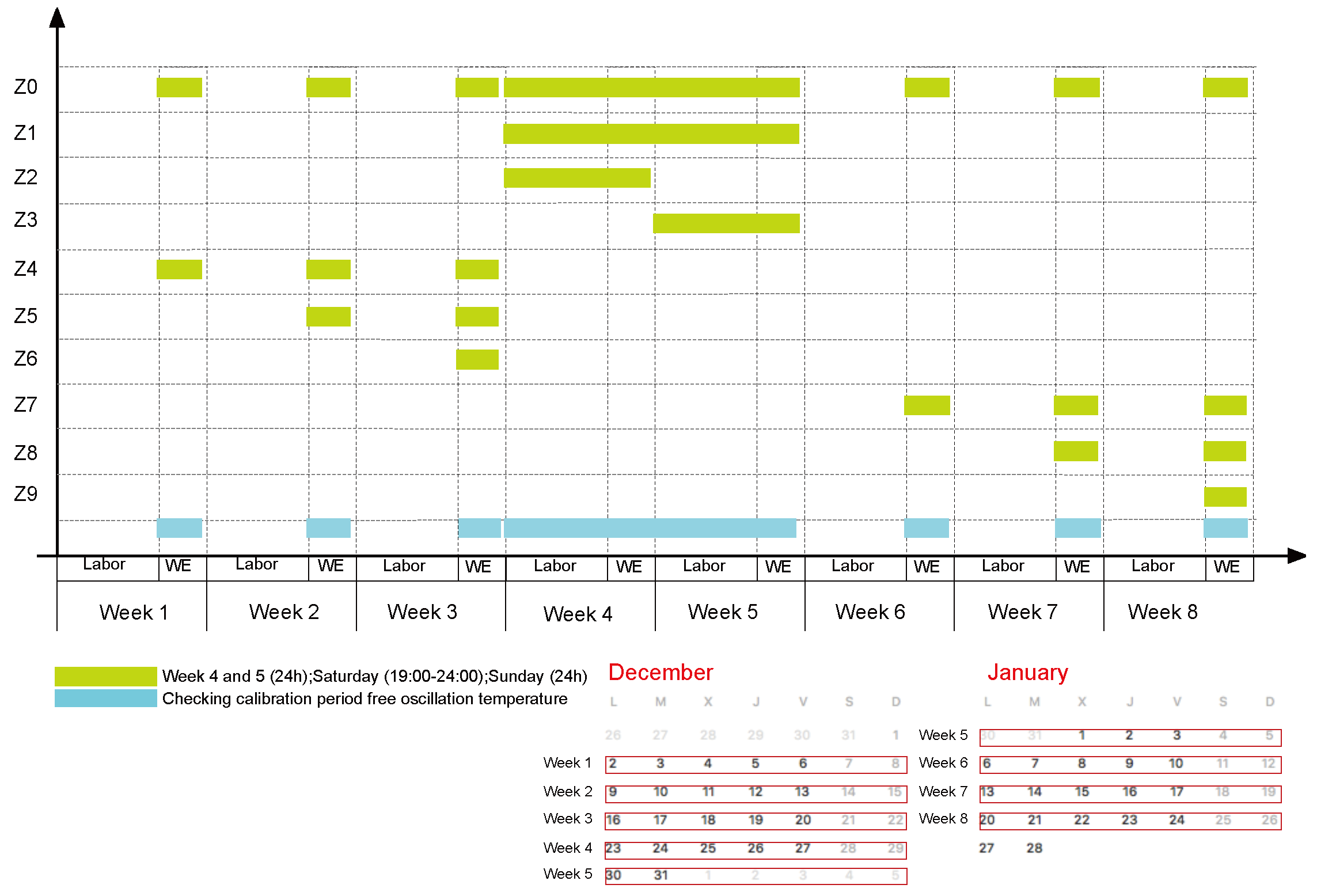

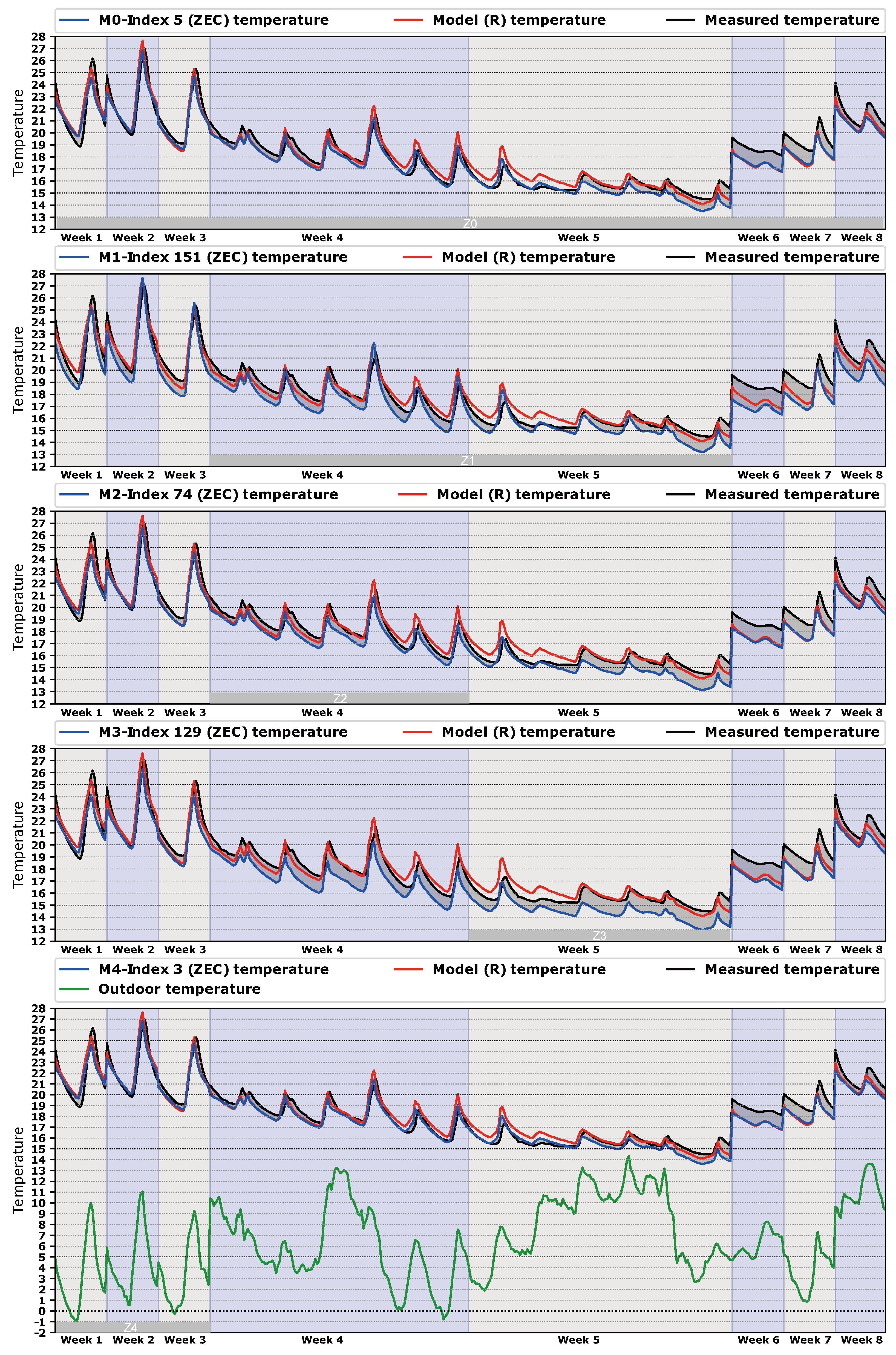

- First stage: Select a variety of periods with temperature data available in free oscillation mode. Free oscillation periods are well suited to calibration when the goal is to find good parameters for the building envelope. Figure 4 shows the schema and the names proposed for the different periods. Each scenario has been named from to . The idea is to check whether the methodology we propose offers reliable results in different environments. Each scenario () will produce a class of models (). The calibration process is guided by the genetic algorithm (NSGA-II) and, since it is a stochastic approach, it yields several solutions. For each class, we have chosen the first 20 models (). In order to compare this new methodology with the former one [26], the results of our past calibration study have been included as an extra class named “R” (). Thus, the total number of models to be checked is 220.

  Regarding the different periods, is the longest scenario. This scenario has a double function: it is a calibration space and, at the same time, a checking space for the rest of the models. This means that all the models will be evaluated in this scenario, independently of where they were generated. This scenario was used in our previous articles [26,27]. In this way, we can compare all the models under the same conditions.

  We can divide the rest of the scenarios into three types. The first three (, , and ) are related to the long period of the Christmas season (weeks 4 and 5), when the building was unoccupied and out of operation. The first type covers the whole period, and the other two are the first and second halves of this period. The second type corresponds to the previous weekends. In particular, is a very challenging scenario where we use data from a single weekend; note that the weekend comprises 30 h of temperature data sampled at ten-minute time-steps. The third type is similar to the previous one, with the difference that the building structure is cold after the unoccupied period, and therefore a transient state of heat storage is generated.

- Second stage: In this phase we prepare the EnergyPlus models to output the heating (HTG) and cooling (CLG) energy for those periods. This information is passed to the GA as the objective function (Figure 1C), and the goal is to obtain the least possible amount of energy (ideally zero). Our approach is that the model that provides the best fit to the measured temperature curve with the least amount of energy is the one closest to the real model.

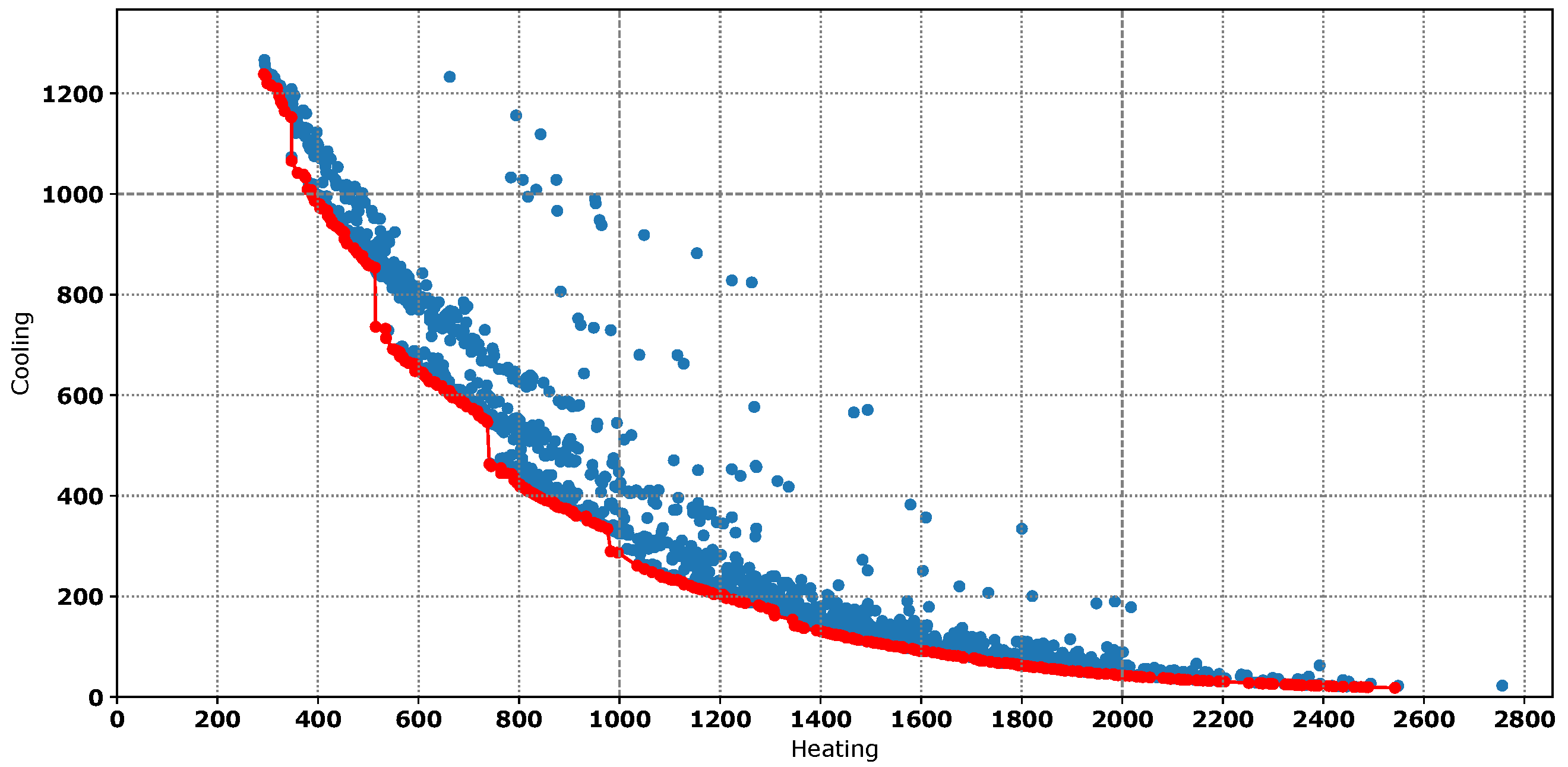

- Third stage: We run the genetic algorithm in order to determine the parameters that produce lower energy. As can be seen in Figure 5, the objective function follows the classical pattern of a Pareto front (red dots), because we are working with a pair of values (heating and cooling) that are opposed. The algorithm used to perform the thermal zone energy balance in EnergyPlus is the conduction transfer function (CTF), which offers a very fast and elegant way to find the temperature of the thermal zone. However, zero energy calibration (ZEC) is a technique based on the thermal zone energy balance, and for that reason CTF sometimes introduces an energy penalty. Because of this extra energy consumption, some models with slightly higher energy consumption have better uncertainty results than the best models ranked by energy. Therefore, the best way of solving this problem is to select the 20 best energy models, in the same way as other similar works [47].
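The selection described in the third stage can be illustrated with a minimal Python sketch. It is not the authors' implementation: the model identifiers and energy values are invented, and ranking by the sum of heating and cooling energy is an assumption about how "best energy models" are ordered.

```python
from typing import List, Tuple

# (model id, heating energy, cooling energy); ids, values and units are hypothetical
Model = Tuple[str, float, float]


def pareto_front(models: List[Model]) -> List[Model]:
    """Return the models that no other model dominates when both
    heating and cooling energy are minimized."""
    front = []
    for name, h, c in models:
        dominated = any(
            (h2 <= h and c2 <= c) and (h2 < h or c2 < c)
            for _, h2, c2 in models
        )
        if not dominated:
            front.append((name, h, c))
    return front


def best_by_energy(models: List[Model], n: int = 20) -> List[Model]:
    """Keep the n models with the lowest total energy.
    Assumption: total energy = heating + cooling."""
    return sorted(models, key=lambda m: m[1] + m[2])[:n]


candidates = [
    ("m1", 1.0, 9.0),
    ("m2", 2.0, 5.0),
    ("m3", 4.0, 4.5),
    ("m4", 6.0, 1.0),
    ("m5", 5.0, 6.0),  # dominated by m2 and m3
]
front = pareto_front(candidates)
top2 = best_by_energy(candidates, n=2)
```

With the default `n=20`, `best_by_energy` would return the 20 lowest-energy models of a class, which are then passed on to the uncertainty analysis.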

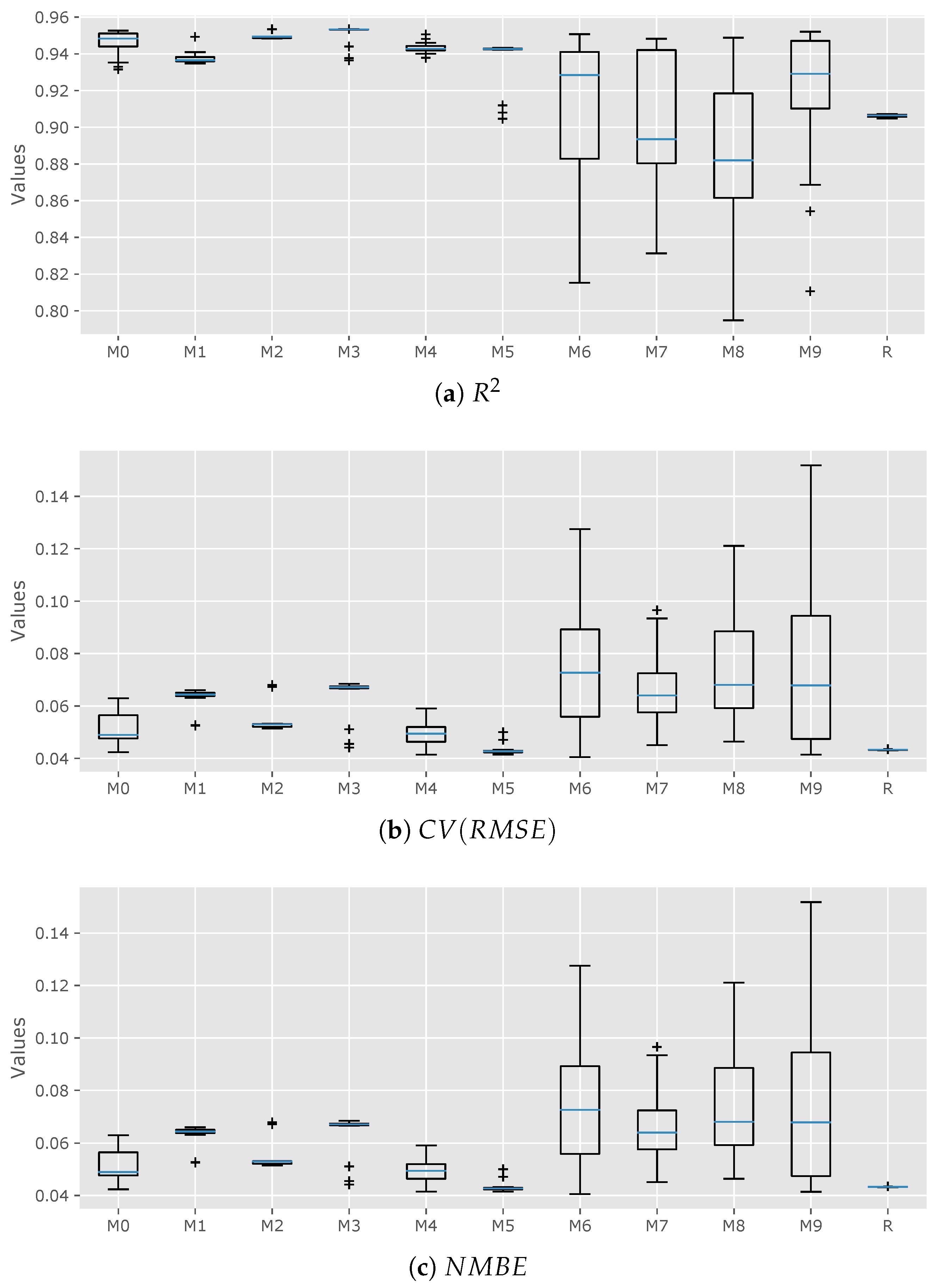

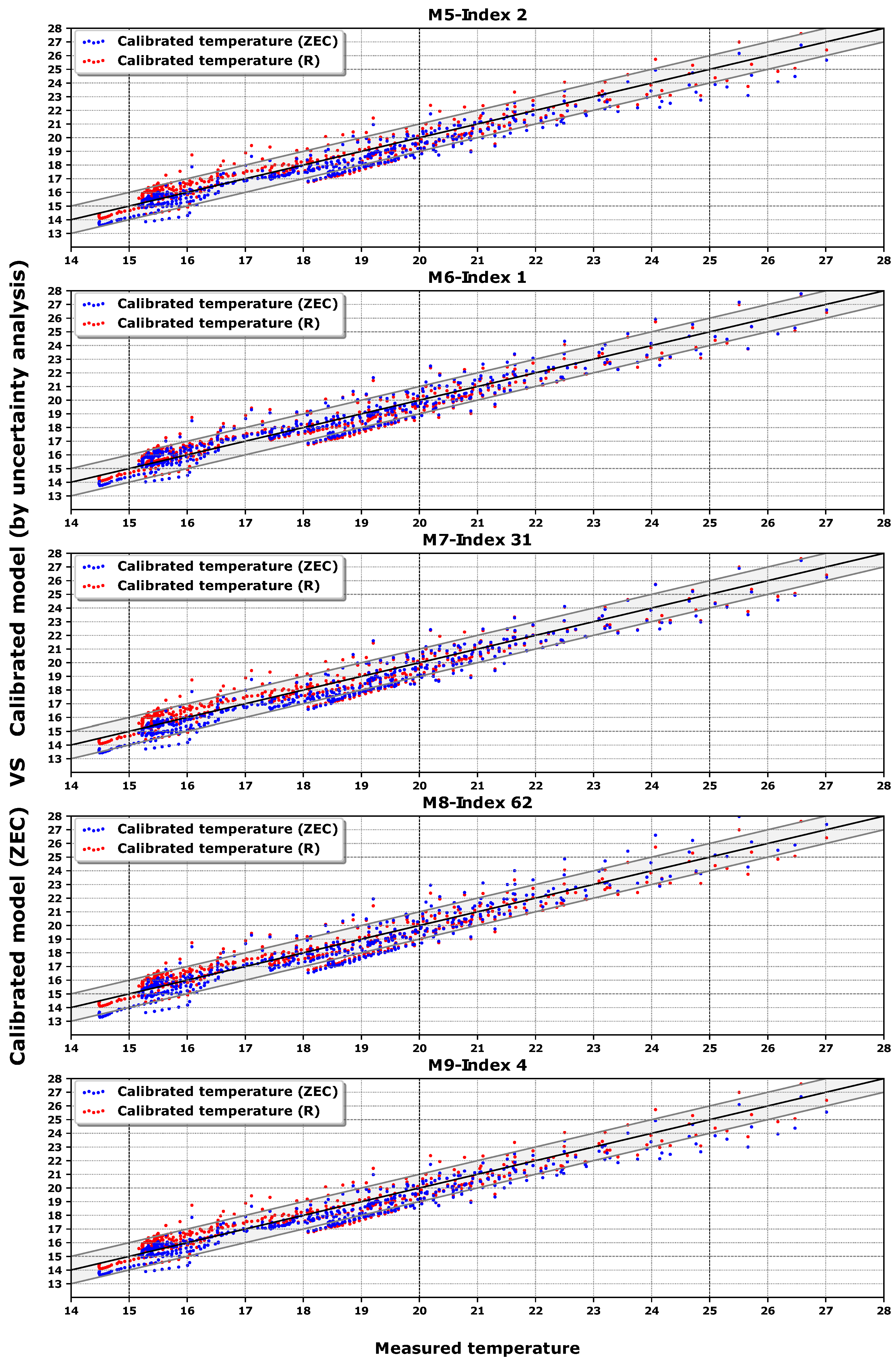

- Fourth stage: Once the 20 best models of each class () have been selected, we perform an uncertainty analysis to check whether the results of the calibration process are within the margins recommended by ASHRAE Guideline 14, FEMP 3.0, and IPMVP (see Table 2). We have used box plots (see Figure 6) as a way of measuring the dispersion or compactness of the models. In general, we can state that when a class of models offers compact values, the calibration process is clear in that zone, and when there is dispersion, more attention should be paid. This could mean () that the algorithm has insufficient data to offer a compact solution. The last statement does not mean that good results cannot be achieved, as will be seen later in this article.
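As an illustration of the uncertainty check in the fourth stage, the sketch below computes NMBE, CV(RMSE), and R² for a measured and a simulated temperature series. Several points are assumptions rather than statements from the article: the formulas follow the common ASHRAE Guideline 14 form with `p = 1`, the sign convention is measured minus simulated, R² is taken as the squared Pearson correlation, and the hourly limits (±10% for NMBE, 30% for CV(RMSE), R² above 0.75) are read from Table 2. The sample data are invented.

```python
import math
from typing import Sequence


def nmbe(measured: Sequence[float], simulated: Sequence[float], p: int = 1) -> float:
    """Normalized mean bias error in percent (measured minus simulated)."""
    n = len(measured)
    mean_m = sum(measured) / n
    bias = sum(m - s for m, s in zip(measured, simulated))
    return 100.0 * bias / ((n - p) * mean_m)


def cv_rmse(measured: Sequence[float], simulated: Sequence[float], p: int = 1) -> float:
    """Coefficient of variation of the root mean square error in percent."""
    n = len(measured)
    mean_m = sum(measured) / n
    sse = sum((m - s) ** 2 for m, s in zip(measured, simulated))
    return 100.0 * math.sqrt(sse / (n - p)) / mean_m


def r_squared(measured: Sequence[float], simulated: Sequence[float]) -> float:
    """Squared Pearson correlation between the two series (assumed definition)."""
    n = len(measured)
    mean_m = sum(measured) / n
    mean_s = sum(simulated) / n
    cov = sum((m - mean_m) * (s - mean_s) for m, s in zip(measured, simulated))
    var_m = sum((m - mean_m) ** 2 for m in measured)
    var_s = sum((s - mean_s) ** 2 for s in simulated)
    return cov ** 2 / (var_m * var_s)


def meets_hourly_criteria(measured, simulated,
                          nmbe_limit=10.0, cv_rmse_limit=30.0, r2_min=0.75) -> bool:
    """Check a model against the assumed hourly limits."""
    return (abs(nmbe(measured, simulated)) <= nmbe_limit
            and cv_rmse(measured, simulated) <= cv_rmse_limit
            and r_squared(measured, simulated) > r2_min)


# Invented indoor temperatures in degrees Celsius
measured = [20.0, 21.0, 22.0, 21.0, 20.0]
simulated = [20.5, 20.5, 22.5, 21.5, 19.5]
ok = meets_hourly_criteria(measured, simulated)
```

Applying the three functions to each of the 20 models of a class gives the per-class distributions that a box plot would summarize.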

4. Analysis of the Results

- Models that perform better than the best R model (, , , , ). If we look at them from the point of view of the time taken for calibration, three types can be considered: long calibration spaces like with 466 h of free oscillation, medium spaces like and with 90 h and 60 h, respectively, and short calibration spaces like and with 30 h. The common characteristic of these spaces is that the indoor temperature is generally over 20 °C, and therefore they are the warmer free oscillation hours of the process. Accordingly, we can state that during these free oscillation hours the building is thermally in a steady-state condition.

- Models that perform similarly to the R model (, , ). In this case, the spaces , , and have 143, 90, and 60 h of calibration, respectively. They have in common a mixture of warm and cool indoor temperatures, due to their proximity to week 5. As we have said before, this week has a negative influence on the calibration process that reduces the quality of the models. Hence, we can say that in these zones the building is thermally in a transient state from a warm to a cool period () and from a cool to a warm period (, ).

- Models that perform worse than the best R model (, ). It seems clear that the reason is that week 5 is part of the calibration space. As we have said before, in this week the conjunction of two phenomena (low indoor temperature and high outdoor temperature) generated a low thermal jump, which produces poor results.
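The role of the thermal jump can be made concrete with a small Python sketch. The article does not give a quantitative definition, so the mean absolute indoor-outdoor temperature difference used here is an assumption, and the two temperature series are invented for illustration only.

```python
from typing import Sequence


def mean_thermal_jump(indoor: Sequence[float], outdoor: Sequence[float]) -> float:
    """Mean absolute indoor-outdoor temperature difference over a period.
    Assumed proxy for the 'thermal jump'; not a definition from the article."""
    if len(indoor) != len(outdoor) or len(indoor) == 0:
        raise ValueError("series must be non-empty and of equal length")
    return sum(abs(ti - to) for ti, to in zip(indoor, outdoor)) / len(indoor)


# Invented samples in degrees Celsius
warm_period = mean_thermal_jump([21.0, 21.5, 22.0], [8.0, 9.0, 7.0])
cool_period = mean_thermal_jump([14.0, 14.5, 15.0], [12.0, 13.0, 13.5])
```

Under this proxy, a period with a cool building and mild outdoor air gives a small value, which is the situation the text associates with poor calibration results.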

5. Conclusions

- The simplicity of implementing new calibration spaces with a reduced amount of data (temperature).

- There is no need to have long free oscillation periods in order to produce good results in terms of , , and .

- A dramatic reduction in the expertise and the amount of code needed to implement a reliable model is realized. It means that the code to connect the simulation environment with the optimization software has disappeared.

- In this new approach, the temperatures measured in each thermal zone are the guide for the algorithm to find a suitable set of parameters. Therefore, the new methodology takes advantage of a complete thermal characterization of the model.

- The proposed methodology has the limitation that the measured data should be gathered from an unoccupied building.

6. Future Works

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AS | Asset Rating |
| ASHRAE | American Society of Heating, Refrigerating, and Air-Conditioning Engineers |
| BEM | Building Energy Model |
| BEP | Building Energy Performance |
| BMS | Building Management Systems |
| CLG | Cooling |
| CV(RMSE) | Coefficient of Variation of the Root Mean Square Error |
| EMS | Energy Management System |
| EPC | Energy Performance Certificate |
| FEMP | Federal Energy Management Program |
| GA | Genetic Algorithm |
| HTG | Heating |
| HVAC | Heating, Ventilation, and Air Conditioning |
| IPMVP | International Performance Measurement and Verification Protocol |
| NMBE | Normalized Mean Bias Error |
| NSGA | Non-dominated Sorting Genetic Algorithm |
| OR | Operational Rating |
| ZEC | Zero Energy Calibration |

References

1. Fraunhofer, I. How Energy Efficiency Cuts Costs for a 2-Degree Future; Fraunhofer Institute for Systems and Innovation Research ISI: Karlsruhe, Germany, 2015.

2. Lewry, A.J.; Ortiz, J.; Nabil, A.; Schofield, N.; Vaid, R.; Hussain, S.; Davidson, P. Bridging the Gap between Operational and Asset Ratings—The UK Experience and the Green Deal Tool; BRE Group: Watford, UK, 2013.

3. IPMVP Committee. International Performance Measurement and Verification Protocol: Concepts and Options for Determining Energy and Water Savings; Technical Report; Efficiency Valuation Organization: Washington, DC, USA, 2012; Volume I. Available online: www.evo-world.org (accessed on 8 December 2017).

4. Cipriano, J.; Mor, G.; Chemisana, D.; Pérez, D.; Gamboa, G.; Cipriano, X. Evaluation of a multi-stage guided search approach for the calibration of building energy simulation models. Energy Build. 2015, 87, 370–385.

5. American Society of Heating, Refrigerating and Air Conditioning Engineers (ASHRAE). Handbook Fundamentals; American Society of Heating, Refrigerating and Air Conditioning Engineers: Atlanta, GA, USA, 2013; Volume 111, pp. 19.1–19.42.

6. Crawley, D.B.; Lawrie, L.K.; Winkelmann, F.C.; Buhl, W.F.; Huang, Y.J.; Pedersen, C.O.; Strand, R.K.; Liesen, R.J.; Fisher, D.E.; Witte, M.J.; et al. EnergyPlus: Creating a new-generation building energy simulation program. Energy Build. 2001, 33, 319–331.

7. Trnsys, A. Transient System Simulation Program; University of Wisconsin: Madison, WI, USA, 2000.

8. Kalamees, T. IDA ICE: The simulation tool for making the whole building energy and HAM analysis. Annex 2004, 41, 12–14.

9. Saltelli, A.; Ratto, M.; Andres, T.; Campolongo, F.; Cariboni, J.; Gatelli, D.; Saisana, M.; Tarantola, S. Global Sensitivity Analysis: The Primer; John Wiley & Sons: Hoboken, NJ, USA, 2008.

10. Mustafaraj, G.; Marini, D.; Costa, A.; Keane, M. Model calibration for building energy efficiency simulation. Appl. Energy 2014, 130, 72–85.

11. Karlsson, F.; Rohdin, P.; Persson, M.L. Measured and Predicted Energy Demand of a Low Energy Building: Important Aspects When Using Building Energy Simulation; American Society of Heating, Refrigerating and Air Conditioning Engineers: Atlanta, GA, USA, 2007; Volume 28, pp. 223–235.

12. Turner, C.; Frankel, M. Energy performance of LEED for new construction buildings. New Build. Inst. 2008, 4, 1–42.

13. Scofield, J.H. Do LEED-certified buildings save energy? Not really…. Energy Build. 2009, 41, 1386–1390.

14. Ahmad, M.; Culp, C.H. Uncalibrated building energy simulation modeling results. HVAC&R Res. 2006, 12, 1141–1155.

15. Zhang, Y.; O’Neill, Z.; Dong, B.; Augenbroe, G. Comparisons of inverse modeling approaches for predicting building energy performance. Build. Environ. 2015, 86, 177–190.

16. Subbarao, K. PSTAR: Primary and Secondary Terms Analysis and Renormalization: A Unified Approach to Building Energy Simulations and Short-Term Monitoring: A Summary; Technical Report; Solar Energy Research Institute: Golden, CO, USA, 1988.

17. Tahmasebi, F.; Mahdavi, A. Monitoring-based optimization-assisted calibration of the thermal performance model of an office building. In Proceedings of the International Conference on Architecture and Urban Design, Tirana, Albania, 19–21 April 2012; Volume 18, pp. 2012–2021.

18. Coakley, D.; Raftery, P.; Keane, M. A review of methods to match building energy simulation models to measured data. Renew. Sustain. Energy Rev. 2014, 37, 123–141.

19. Reddy, T.A.; Maor, I.; Jian, S.; Panjapornporn, C. Procedures for Reconciling Computer-Calculated Results With Measured Energy Data; Technical Report; ASHRAE Research Project 1051-RP; ASHRAE: Atlanta, GA, USA, 2006.

20. Magnier, L.; Haghighat, F. Multiobjective optimization of building design using TRNSYS simulations, genetic algorithm, and Artificial Neural Network. Build. Environ. 2010, 45, 739–746.

21. Manfren, M.; Aste, N.; Moshksar, R. Calibration and uncertainty analysis for computer models—A meta-model based approach for integrated building energy simulation. Appl. Energy 2013, 103, 627–641.

22. Zakula, T.; Armstrong, P.R.; Norford, L. Modeling environment for model predictive control of buildings. Energy Build. 2014, 85, 549–559.

23. Sonderegger, R. Diagnostic Tests Determining the Thermal Response of a House; Technical Report; California University: Berkeley, CA, USA, 1977.

24. Coakley, D.; Raftery, P.; Molloy, P.; White, G. Calibration of a detailed BES model to measured data using an evidence-based analytical optimisation approach. In Proceedings of the 12th International IBPSA Conference, Sydney, Australia, 14–16 November 2011.

25. Attia, S. Computational Optimisation for Zero Energy Building Design, Interviews with Twenty Eight International Experts; Technical Report; Architecture et Climat: Louvain La Neuve, Belgium, 2012.

26. Ruiz, G.R.; Bandera, C.F. Analysis of uncertainty indices used for building envelope calibration. Appl. Energy 2017, 185, 82–94.

27. Ruiz, G.R.; Bandera, C.F.; Temes, T.G.A.; Gutierrez, A.S.O. Genetic algorithm for building envelope calibration. Appl. Energy 2016, 168, 691–705.

28. Spitler, J.D.; Fisher, D.E.; Pedersen, C.O. The Radiant Time Series Cooling Load Calculation Procedure; Transactions-American Society of Heating Refrigerating and Air Conditioning Engineers: Atlanta, GA, USA, 1997; Volume 103, pp. 503–518.

29. Strand, R.K. Modularization and simulation techniques for heat balance-based energy and load calculation programs: The experience. In Proceedings of the ASHRAE Loads Toolkits and EnergyPlus, Building Simulation, Rio de Janeiro, Brazil, 13–15 August 2001.

30. Fisher, D. Experimental Validation of Design Cooling Load Procedures: The Heat Balance Method. ASHRAE Trans. 2003, 109, 160–173.

31. Eldridge, D.S., Jr. Design of an Experimental Facility for the Validation of Cooling Load Calculation Procedures; Oklahoma State University: Stillwater, OK, USA, 2007.

32. Yang, T.; Pan, Y.; Mao, J.; Wang, Y.; Huang, Z. An automated optimization method for calibrating building energy simulation models with measured data: Orientation and a case study. Appl. Energy 2016, 179, 1220–1231.

33. Song, S.; Haberl, J.S. Analysis of the impact of using synthetic data correlated with measured data on the calibrated as-built simulation of a commercial building. Energy Build. 2013, 67, 97–107.

34. Robertson, J.; Polly, B.; Collis, J. Evaluation of Automated Model Calibration Techniques for Residential Building Energy Simulation; National Renewable Energy Laboratory: Golden, CO, USA, 2013.

35. Royapoor, M.; Roskilly, T. Building model calibration using energy and environmental data. Energy Build. 2015, 94, 109–120.

36. Raftery, P.; Keane, M.; O’Donnell, J. Calibrating whole building energy models: An evidence-based methodology. Energy Build. 2011, 43, 2356–2364.

37. Coakley, D.; Raftery, P.; Molloy, P. Calibration of whole building energy simulation models: Detailed case study of a naturally ventilated building using hourly measured data. In Proceedings of the First Building Simulation and Optimization Conference, Loughborough, UK, 10–11 September 2012; pp. 10–11.

38. Thermal Performance of Buildings—Determination of Air Permeability of Buildings—Fan Pressurization Method; ISO 9972:1996, Modified; UNE-EN 13829:2002; CTN 92—AISLAMIENTO TÉRMICO: Madrid, Spain, 2002.

39. Zhang, Y. Use jEPlus as an efficient building design optimisation tool. In Proceedings of the CIBSE ASHRAE Technical Symposium, London, UK, 3–4 April 2012; pp. 18–19.

40. Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197.

41. Evins, R. A review of computational optimisation methods applied to sustainable building design. Renew. Sustain. Energy Rev. 2013, 22, 230–245.

42. Ruiz, G.R.; Bandera, C.F. Validation of Calibrated Energy Models: Common Errors. Energies 2017, 10, 1587.

43. ASHRAE. Guideline 14-2002, Measurement of Energy and Demand Savings; Technical Report; American Society of Heating, Refrigerating and Air Conditioning Engineers: Atlanta, GA, USA, 2002.

44. ASHRAE. Guideline 14-2014, Measurement of Energy and Demand Savings; Technical Report; American Society of Heating, Refrigerating and Air Conditioning Engineers: Atlanta, GA, USA, 2014.

45. Webster, L.; Bradford, J. M&V Guidelines: Measurement and Verification for Federal Energy Projects; version 3.0; Technical Report; U.S. Department of Energy Federal Energy Management Program: Washington, DC, USA, 2008.

46. Webster, L.; Bradford, J.; Sartor, D.; Shonder, J.; Atkin, E.; Dunnivant, S.; Frank, D.; Franconi, E.; Jump, D.; Schiller, S.; et al. M&V Guidelines: Measurement and Verification for Performance-Based Contracts; version 4.0; Technical Report; U.S. Department of Energy Federal Energy Management Program: Washington, DC, USA, 2015.

47. Chaudhary, G.; New, J.; Sanyal, J.; Im, P.; O’Neill, Z.; Garg, V. Evaluation of “Autotune” calibration against manual calibration of building energy models. Appl. Energy 2016, 182, 115–134.

48. Zhang, Y.; Korolija, I. Performing complex parametric simulations with jEPlus. In Proceedings of the 9th SET Conference Proceedings, Shanghai, China, 24 August 2010.

49. Sang, Y.; Zhao, J.R.; Sun, J.; Chen, B.; Liu, S. Experimental investigation and EnergyPlus-based model prediction of thermal behavior of building containing phase change material. J. Build. Eng. 2017, 12, 259–266.

50. Seifhashem, M.; Capra, B.; Miller, W.; Bell, J. The potential for cool roofs to improve the energy efficiency of single storey warehouse-type retail buildings in Australia: A simulation case study. Energy Build. 2017, 158, 1393–1403.

| ID | Construction | Type of Parametrized Value | Baseline Model (Value 1) | Value 2 | Value 3 | Value 4 | Value 5 | Value 6 | Value 7 | Value 8 | Value 9 | Value 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A | Façade CE04c | Thickness (m) | 0 | 0.05 | 0.06 | 0.07 | 0.08 | 0.09 | 0.1 | 0.11 | - | - |
| B | Façade CE04c | Brick density (kg/m³) | 1150 | 1250 | 1350 | 1450 | 1550 | - | - | - | - | - |
| C | Façade CE06a | Thickness (m) | 0 | 0.01 | 0.02 | 0.03 | 0.04 | 0.05 | 0.6 | 0.07 | - | - |
| D | Façade CE06b | Thickness (m) | 0 | 0.05 | 0.06 | 0.07 | 0.08 | 0.09 | 0.1 | 0.11 | - | - |
| E | Façade CE07 | Thickness (m) | 0 | 0.05 | 0.06 | 0.07 | 0.08 | 0.09 | 0.1 | 0.11 | - | - |
| F | Roof | Insulation thickness (m) | 0.10 | 0.15 | 0.20 | 0.25 | 0.30 | 0.35 | 0.40 | 0.45 | 0.50 | 0.55 |
| G | Roof | Gravel thickness (m) | 0.10 | 0.025 | 0.05 | 0.075 | 0.125 | 0.15 | 0.175 | 0.20 | - | - |
| H | Top façade | Thickness (m) | 0 | 0.01 | 0.02 | 0.03 | 0.04 | 0.05 | 0.6 | 0.07 | - | - |
| I | Slab | Specific heat (J/kg·K) | 1000 | 850 | 900 | 950 | 1050 | 1100 | - | - | - | - |
| J | Slab | Thickness (m) | 0.35 | 0.25 | 0.30 | 0.40 | 0.45 | 0.50 | - | - | - | - |
| K | Partition walls | Density (kg/m³) | 1 | 1000 | 1100 | 1200 | 1300 | 1400 | 1500 | 1600 | 1700 | - |
| L | U-Factor | W/m²K | 1.4 | 0.8 | 0.9 | 1.0 | 1.1 | 1.2 | 1.3 | - | - | - |
| M | Solar Heat Gain Coefficient | Non-dimensional | 0.6 | 0.4 | 0.5 | 0.7 | 0.8 | 0.9 | 1.0 | - | - | - |

| Data Type | Index | FEMP Criteria [45,46] | ASHRAE Guideline 14 [43,44] | IPMVP [3] |
|---|---|---|---|---|
| Calibration criteria | | | | |
| Monthly criteria % | NMBE | ±5 | ±5 | ±20 |
| | CV(RMSE) | 15 | 15 | - |
| Hourly criteria % | NMBE | ±10 | ±10 | ±5 |
| | CV(RMSE) | 30 | 30 | 20 |
| Model recommendation | | | | |
| | R² | - | >0.75 | >0.75 |

| Class | Best Rank | Rank-25 | Rank-50 | Rank-75 | Rank-100 |
|---|---|---|---|---|---|
| | 5 | 2 | 13 | 14 | 14 |
| | 151 | 0 | 0 | 0 | 0 |
| | 74 | 0 | 0 | 2 | 18 |
| | 129 | 0 | 0 | 0 | 0 |
| | 3 | 1 | 7 | 10 | 14 |
| | 2 | 20 | 20 | 20 | 20 |
| | 1 | 1 | 5 | 5 | 6 |
| | 31 | 0 | 1 | 2 | 3 |
| | 62 | 0 | 0 | 2 | 2 |
| | 4 | 1 | 4 | 5 | 6 |
| R | 53 | 0 | 0 | 15 | 17 |
| Total | | 25 | 50 | 75 | 100 |
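Each Rank-N column counts how many models of a class appear among the first N positions of the overall ranking, which is why every column total equals N. A minimal Python sketch of that count follows; the class labels "A", "B", and "C" and the ranking itself are hypothetical, because the scenario names are not recoverable here.

```python
from collections import Counter
from typing import Sequence


def count_in_top(ranked_classes: Sequence[str], n: int) -> Counter:
    """Count how many models of each class are among the first n positions.
    ranked_classes[i] is the class of the model ranked i + 1."""
    return Counter(ranked_classes[:n])


# Hypothetical overall ranking, best model first
ranking = ["A", "B", "B", "A", "C", "B"]
top4 = count_in_top(ranking, 4)
```

A class that is absent from the first N positions simply reports zero, since `Counter` returns 0 for missing keys.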

| Rank | Class | Index | | | |
|---|---|---|---|---|---|
| 1 | | 92.281% | 0.436% | 4.053% | 0.12203 |
| 2 | | 94.223% | 2.311% | 4.152% | 0.12235 |
| 3 | | 94.084% | 2.238% | 4.146% | 0.12296 |
| 4 | | 94.027% | 2.198% | 4.143% | 0.12310 |
| 5 | | 94.399% | 2.531% | 4.237% | 0.12365 |
| 6 | | 94.273% | 2.439% | 4.212% | 0.12373 |
| 7 | | 94.219% | 2.397% | 4.202% | 0.12375 |
| 8 | | 94.246% | 2.420% | 4.208% | 0.12377 |
| 9 | | 94.256% | 2.438% | 4.216% | 0.12393 |

| Class | Best Rank | Index | | | |
|---|---|---|---|---|---|
| | 5 | 94.399% | 2.531% | 4.237% | 0.12365 |
| | 151 | 93.154% | 4.763% | 6.061% | 0.17670 |
| | 74 | 94.839% | 3.951% | 5.140% | 0.14252 |
| | 129 | 95.321% | 5.835% | 6.652% | 0.17166 |
| | 3 | 94.084% | 2.238% | 4.146% | 0.12296 |
| | 2 | 94.223% | 2.311% | 4.152% | 0.12235 |
| | 1 | 92.281% | 0.436% | 4.053% | 0.12203 |
| | 31 | 93.631% | 2.403% | 4.456% | 0.13227 |
| | 62 | 91.855% | 1.208% | 4.677% | 0.14030 |
| | 4 | 94.027% | 2.198% | 4.143% | 0.12310 |
| R | 53 | 90.463% | 0.065% | 4.360% | 0.13962 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fernández Bandera, C.; Ramos Ruiz, G. Towards a New Generation of Building Envelope Calibration. Energies 2017, 10, 2102. https://doi.org/10.3390/en10122102
