1. Introduction

Hedging a derivative involves dynamically trading the underlying instruments to offset the risk associated with the derivative’s price movements. Traditionally, this process has been guided by theoretical models such as Black–Scholes, which provides a closed-form solution for the hedge ratios of European call and put options under frictionless market assumptions (Black & Scholes, 1973). However, in realistic markets, hedging decisions are further complicated by transaction costs, execution slippage, and permanent market impact when trading the underlying security, all of which distort the price trajectory of the underlying asset and increase the cumulative cost of rebalancing hedge positions (Leland, 1985). These frictions are particularly relevant in inefficient or illiquid markets, where large trades can substantially affect the price of the underlying security (Almgren & Li, 2016).

Market impact is defined as the difference between an asset’s price trajectory before an order is sent to the market and its trajectory after the order is completed, measured relative to the expected value (Harvey et al., 2022). Any difference between the market price and the expected execution price is known as execution slippage (Chriss, 2001). Optimal order execution algorithms that minimize total impact and trading costs have been studied extensively. These studies focus primarily on intraday order scheduling and on predicting the total accumulated impact of different trading patterns in a single-security, equity-focused setting (Chriss, 2001). In addition to slippage, market impact can accumulate and affect the price path of an underlying security for extended periods, especially when trades are executed in succession (Harvey et al., 2022).

In theoretical hedging strategies such as the use of the Black Scholes model, the “optimal” hedging position is achieved by having net zero delta exposure in a portfolio (

Black & Scholes, 1973). When short a call option, a trader would purchase

shares of the underlying security to achieve a neutral delta. This buying pressure can inadvertently create a feedback loop that increases the option’s value over time, especially in inefficient markets (

Gatheral, 2010;

Rogers & Singh, 2010). As the stock price rises, the call option’s delta increases, requiring the trader to buy additional shares to maintain a delta-neutral position. This buying pressure can introduce market impact, pushing the stock price even higher and further increasing the call option’s value, especially in illiquid environments (

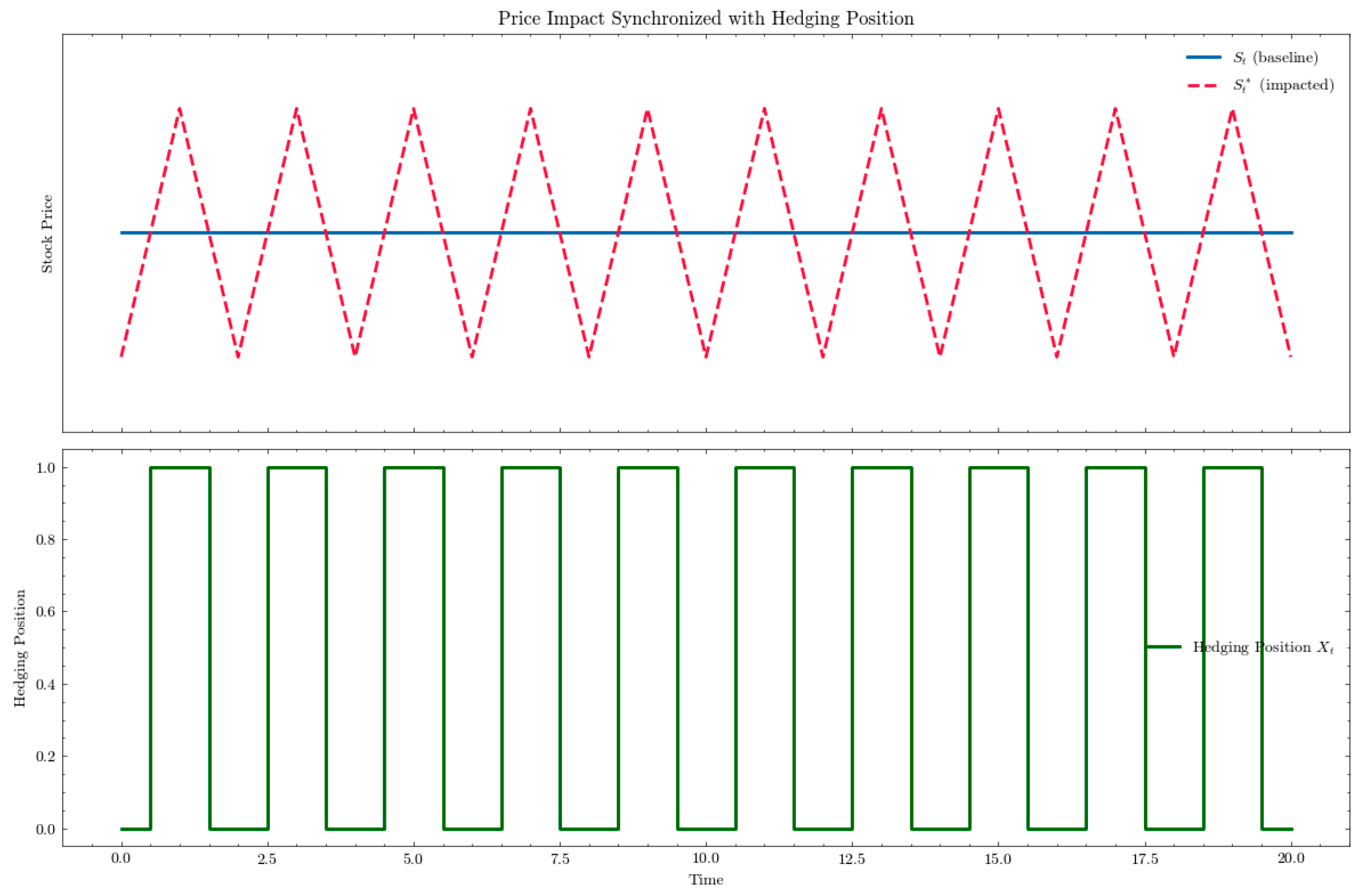

Figure 1). This cyclical behavior, driven by the interaction between market frictions and hedging adjustments, can lead to repeated oscillations in both underlying and derivative asset prices, further increasing costs to attain a delta neutral position (

Anderegg et al., 2022). Such dynamics underscore the limitations of traditional hedging strategies, where the cumulative effects of slippage and permanent impact can significantly increase both hedging costs and risk exposure.

Reinforcement learning (RL) has emerged as a powerful tool for decision-making in complex, stochastic environments, offering significant advantages over rules-based or model-driven approaches (Pickard & Lawryshyn, 2023). In financial applications, RL can learn optimal strategies directly from simulated or historical market data, bypassing the need for explicit modeling of market dynamics. Recent advances in hedging research, such as Buehler’s Deep Hedging framework, have enabled additional complexity when modelling hedging environments, demonstrating superiority over traditional models in the presence of transaction costs and stochastic volatility (Buehler et al., 2018). However, these frameworks often simplify market dynamics, neglecting critical frictions such as slippage and permanent impact. This paper extends the capabilities of RL-based hedging by integrating realistic cost models that reflect the challenges of trading in illiquid markets.

To overcome these challenges, we propose a deep reinforcement learning (RL) framework that determines hedging actions across discrete time steps in a dynamic trading environment with transaction costs, slippage, and market impact. We illustrate how incorporating stochastic temporary and permanent market impact feedback in the RL environment reflects real trading conditions better than simplified frictionless assumptions. In this framework, the “agent” is a trader who hedges a European call option position and is allowed to rebalance the hedge with no underlying knowledge of the impact models. Our approach considers short-term, “transaction cost”-like impacts as well as lasting effects from excess trading by the agent, creating a more realistic framework in which the true cost of hedging the security deviates from the cost anticipated when hedging decisions are made. This deviation between the optimal hedging environment and environments with frictions becomes more apparent as execution frequency and liquidity constraints increase.

We first design a hedging environment incorporating market frictions, liquidity constraints and transaction costs. The agent can make rebalancing decisions at every discrete timestep until expiry, with the caveat that each trade will impact the future price path of the underlying security. When the option expires, the seller liquidates their underlying position at the market price and pays the option payoff $\max(S_T - K, 0)$, where $K$ is the strike price and $S_T$ is the underlying security price at expiry.

We train and compare Deep Deterministic Policy Gradient (DDPG), Twin Delayed DDPG (TD3) and Soft Actor-Critic (SAC) models to hedge optimally in these environments across various degrees of impact, with a reward function that minimizes risk-adjusted hedging costs at expiry. We benchmark our results against the Black–Scholes model. Like existing deep hedging research, we first benchmark our reinforcement learning models in an environment with only transaction costs, where there is ample liquidity to assume zero market impact. Then, we introduce liquidity constraints on the underlying asset along with market impact as a function of trade size.

The remainder of this paper is organized as follows:

Section 2 reviews the existing literature on market impact, slippage, and reinforcement learning in financial applications.

Section 3 details the methodology, including the RL framework, agents tested and market simulation environment.

Section 4 presents the experimental results, and

Section 5 concludes with a discussion of the findings and future research directions.

3. Methodology

In this section, we introduce (1) our market friction modelling, including temporary and permanent market impact, (2) our reinforcement learning (RL) framework, models and hyperparameters, and (3) our synthetic data generation. This methodology integrates an RL agent into a synthetic market environment, where slippage and permanent market impact are explicitly modeled as dynamic cost factors. The current framework does not consider limit order book dynamics, but relies on empirical assumptions from prior works on market impact, specifically the square root law. The RL agent is trained to optimize hedging strategies by minimizing total hedging costs while balancing the immediate and residual effects of its trades on the underlying asset’s price path.

3.1. Market Impact Model

We use the square root approximation for market impact to estimate the total impact, at a daily trading frequency, as a function of the liquidity traded. Bucci et al. (2019) demonstrated that the square root law, which is a function of the total trade size (in shares) as a percentage of average volume traded, provides a robust empirical approximation for market impact, effectively capturing the sublinear relationship between trade size and price deviation. This approach has been validated across various trading datasets and is commonly used in discrete-time market simulations. Given the agent’s current holdings at any time $t$ and its trade at time $t$ (which is equivalent to the agent’s action), we assume that the market impact $M(\cdot)$ at each time step for a change in position size is stochastic but strictly in the direction of each trade (i.e., if the trade is positive, the impact is positive as well).

Under these assumptions, the total market impact cost of a trade at execution time $t$, with the trade size measured relative to the security’s average daily trading volume (ADV) of $V$, can be modelled as:

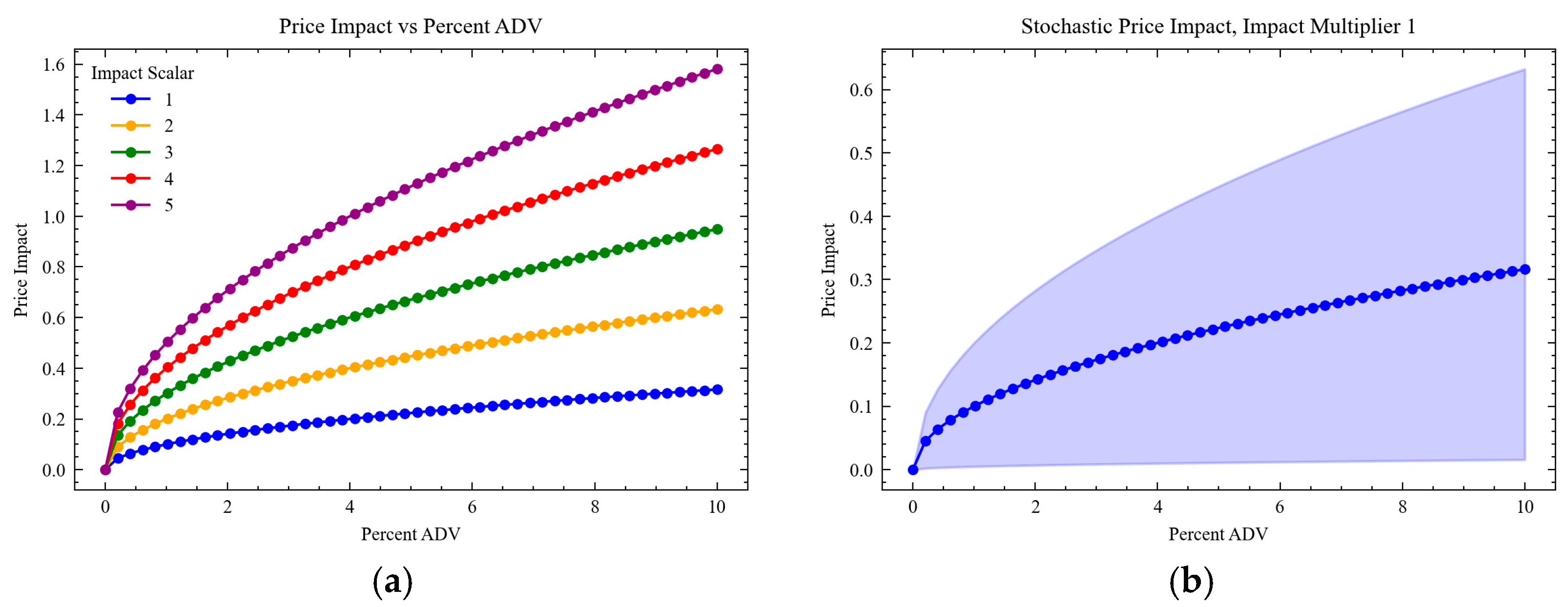

where the expression includes a constant impact scalar that controls the magnitude of the impact, a normally distributed stochastic scalar, and a term that ensures the impact is in the same direction as the trade (Figure 2b). We clip the stochastic scalar and include it to better simulate the effects of market impact, given that real-life trading impact can vary with other factors, including market participants, news and latent variables not modelled by our environment. Without loss of generality, we assume that the trade size is scaled and that the average daily volume $V$ is 10 times the number of shares in the options contract (i.e., $V = 1000$ if we are hedging 1 contract with 100 shares).

Figure 2a illustrates the impact distributions as a function of the trade size relative to average daily volume, specifically the scaling effects on the impact magnitude as our impact scalar increases. Figure 2b illustrates the effect of the stochastic impact scalar, specifically the additional stochasticity that it introduces and its amplified effect as the trade size increases.
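The square-root impact described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper’s exact equation: the function name `sqrt_impact`, the parameter names, the unit mean and standard deviation of the noise term, and the clipping bounds are all hypothetical choices made for the example.

```python
import math
import random


def sqrt_impact(trade_shares, adv, impact_scalar=1.0, noise_sd=0.25, rng=random):
    """Signed square-root-law impact of one trade (illustrative form only).

    trade_shares  : signed trade size in shares (positive = buy)
    adv           : average daily volume in shares
    impact_scalar : constant that scales the impact magnitude (assumed name)
    noise_sd      : std. dev. of the stochastic scalar (assumed value)
    """
    if trade_shares == 0:
        return 0.0
    # Stochastic scalar: normal draw around 1, clipped to [0, 2] (assumed
    # bounds) so that noise can never flip the sign of the impact.
    eps = min(max(rng.gauss(1.0, noise_sd), 0.0), 2.0)
    direction = math.copysign(1.0, trade_shares)
    # Sublinear (square-root) dependence on trade size relative to ADV.
    return direction * impact_scalar * eps * math.sqrt(abs(trade_shares) / adv)


# Buying 100 shares against an ADV of 1000 shares, with the noise switched off.
print(sqrt_impact(100, 1000, noise_sd=0.0))
```

Clipping the noise at zero is one simple way to keep the impact strictly in the direction of the trade; other truncation schemes would serve the same purpose.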

As demonstrated by Chriss (2001) and Gatheral (2010), the total market impact can be decomposed into two separate components: temporary impact costs and permanent market impact. We assume that there is a reversion constant which controls the percentage of impact that is temporary and the percentage that is permanent, i.e., the amount that persists in the security price following the execution of the trade. When we have both temporary and permanent market impact, we assume that this reversion constant is 50%, meaning that immediately following a trade at time $t$, half of the trade’s total impact remains in the price of the underlying security; the resulting series is the price path following impact. The impact reversion constant therefore controls the amount of impact that is realized during trade execution at time $t$ (temporary) and the residual impact that persists following the trade (permanent). We can further deconstruct our total impact cost into temporary and permanent components using the reversion constant:

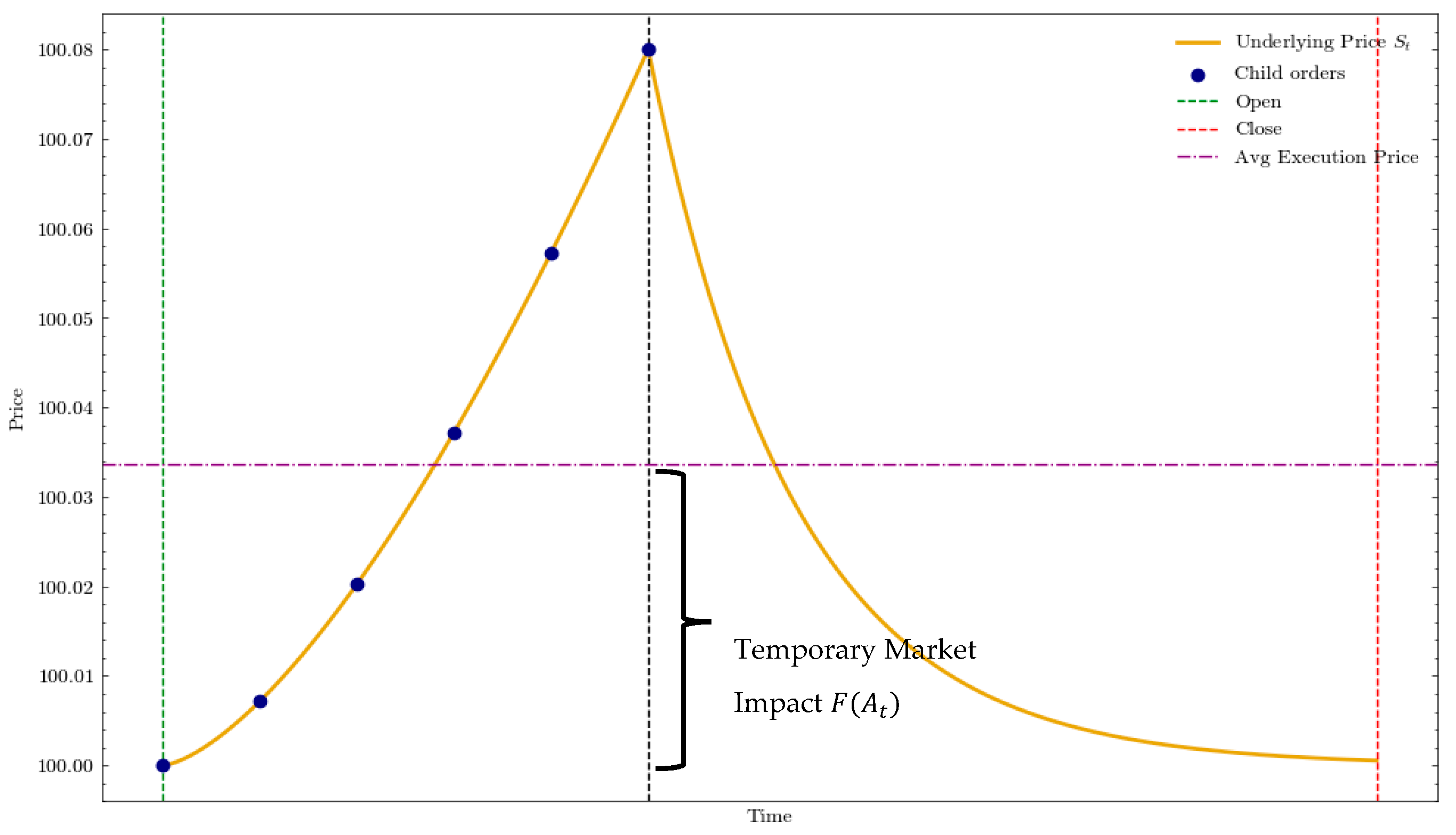

In a state of only temporary impact, the price of the underlying immediately reverts to the original price path following execution (Figure 3). The temporary impact component is treated as an additional execution cost, like commissions and fees. This assumption follows standard models in the market impact literature, which describe temporary impacts as short-term deviations due to liquidity effects (Bucci et al., 2019).

At any time $t$, the agent can choose to execute a trade, resulting in an execution price that includes the trade’s impact. The final transaction cost realized at time $t$ is the sum of the total temporary impact and a transaction cost per share of $c$:

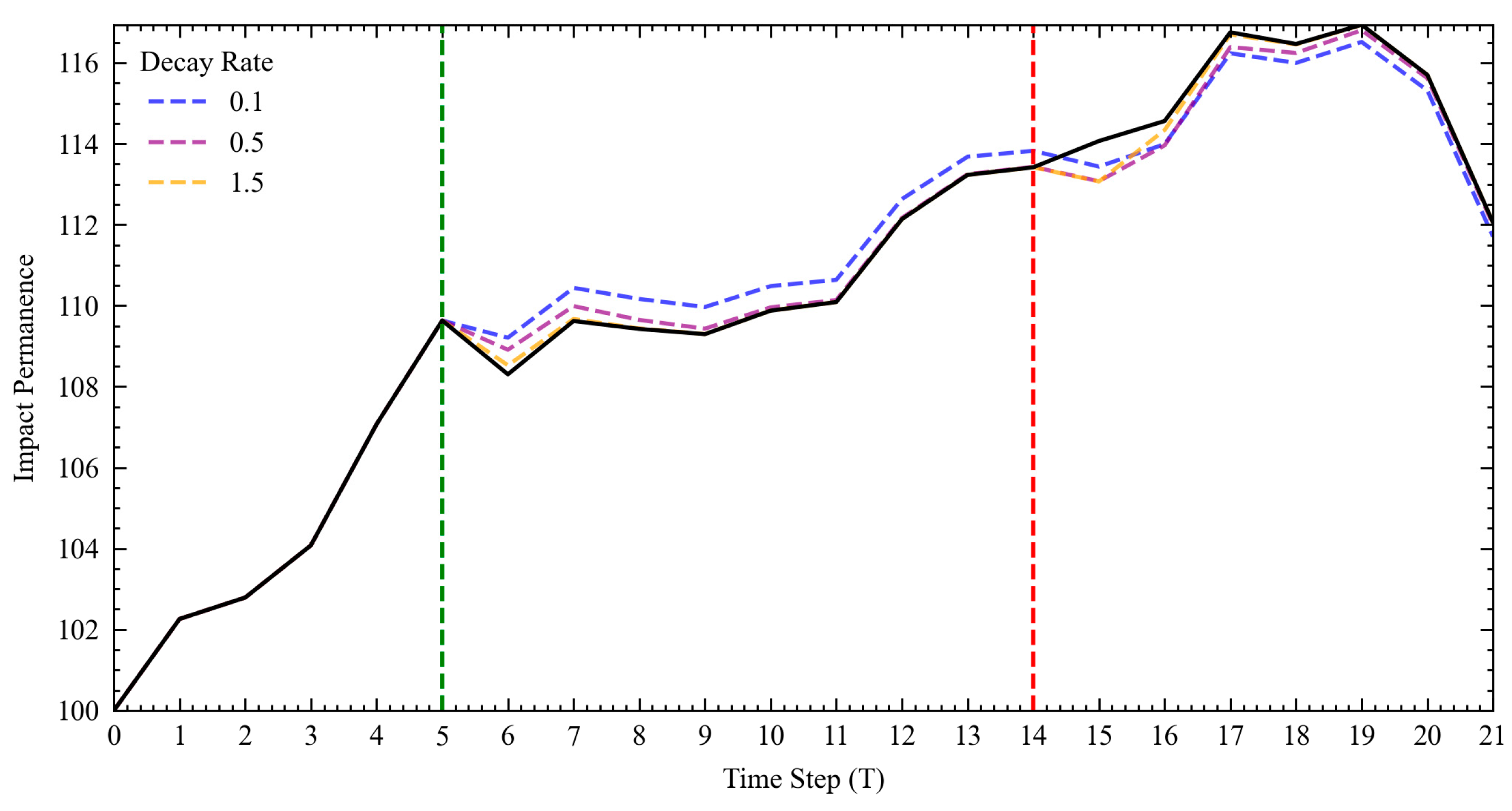

In the permanent-impact-only experiment, the permanent impact (Figure 4) of a trade alters the future price path through the residual effects of the market impact. An exponential decay function, inspired by Gatheral (2010) and Rogers and Singh (2010), is applied to model the gradual reversion of a trade’s initial impact over time at a given decay rate. The impact at time $t$ from a trade executed at an earlier time $s$ (where $s < t$) is the initial permanent impact of that trade scaled by the exponential decay factor over the elapsed time $t - s$. The total accumulated impact at time $t$ is therefore the sum of these decayed impacts over all trades occurring before $t$, and the future price path is shifted by this accumulated impact.
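The split into temporary and permanent impact and the exponentially decaying accumulation can be sketched as follows. This is an illustration under assumptions rather than the paper’s implementation: the names `split_impact` and `accumulated_impact`, the convention that the reversion constant is the temporary fraction, and the decay form `exp(-decay_rate * (t - s))` are hypothetical.

```python
import math


def split_impact(total_impact, reversion=0.5):
    """Split a trade's total impact into (temporary, permanent) parts.

    Assumes `reversion` is the fraction that reverts immediately (temporary);
    the remainder persists in the price (permanent).
    """
    temporary = reversion * total_impact
    permanent = (1.0 - reversion) * total_impact
    return temporary, permanent


def accumulated_impact(permanent_impacts, t, decay_rate=1.0):
    """Residual impact at step t from all trades executed before t.

    permanent_impacts maps execution step s -> permanent impact of that trade.
    Each contribution decays as exp(-decay_rate * (t - s)) (assumed form).
    """
    return sum(
        impact * math.exp(-decay_rate * (t - s))
        for s, impact in permanent_impacts.items()
        if s < t
    )


# Two earlier trades, observed at step 2.
temporary, permanent = split_impact(0.4, reversion=0.5)
residual = accumulated_impact({0: permanent, 1: permanent}, t=2, decay_rate=1.0)
print(temporary, permanent, residual)
```

Under these assumptions, the impacted price at step `t` would be the unimpacted price plus `accumulated_impact(...)`; whether the shift is additive or multiplicative is a modelling choice not fixed by this sketch.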

Note that the proposed market impact model does not factor in order book dynamics, as we assume that hedging decisions are daily metaorders with a total impact rather than smaller intraday orders. Future studies could integrate order book simulations, which can serve as additional inputs for an intraday hedging agent.

3.2. Reinforcement Learning Framework

Our environment reflects a discrete-time trading world with daily trading and a continuous action space. In this environment, the trader (agent) is short one unit of an at-the-money European call option, with the strike set equal to the initial underlying price, a fixed number of days to maturity and a constant annual lognormal price volatility. At every timestep, the trader is given the option to reoptimize their position until the option expires. The agent’s primary goal is to minimize the cumulative cost of hedging one short call option position across various degrees of market impact and transaction costs.

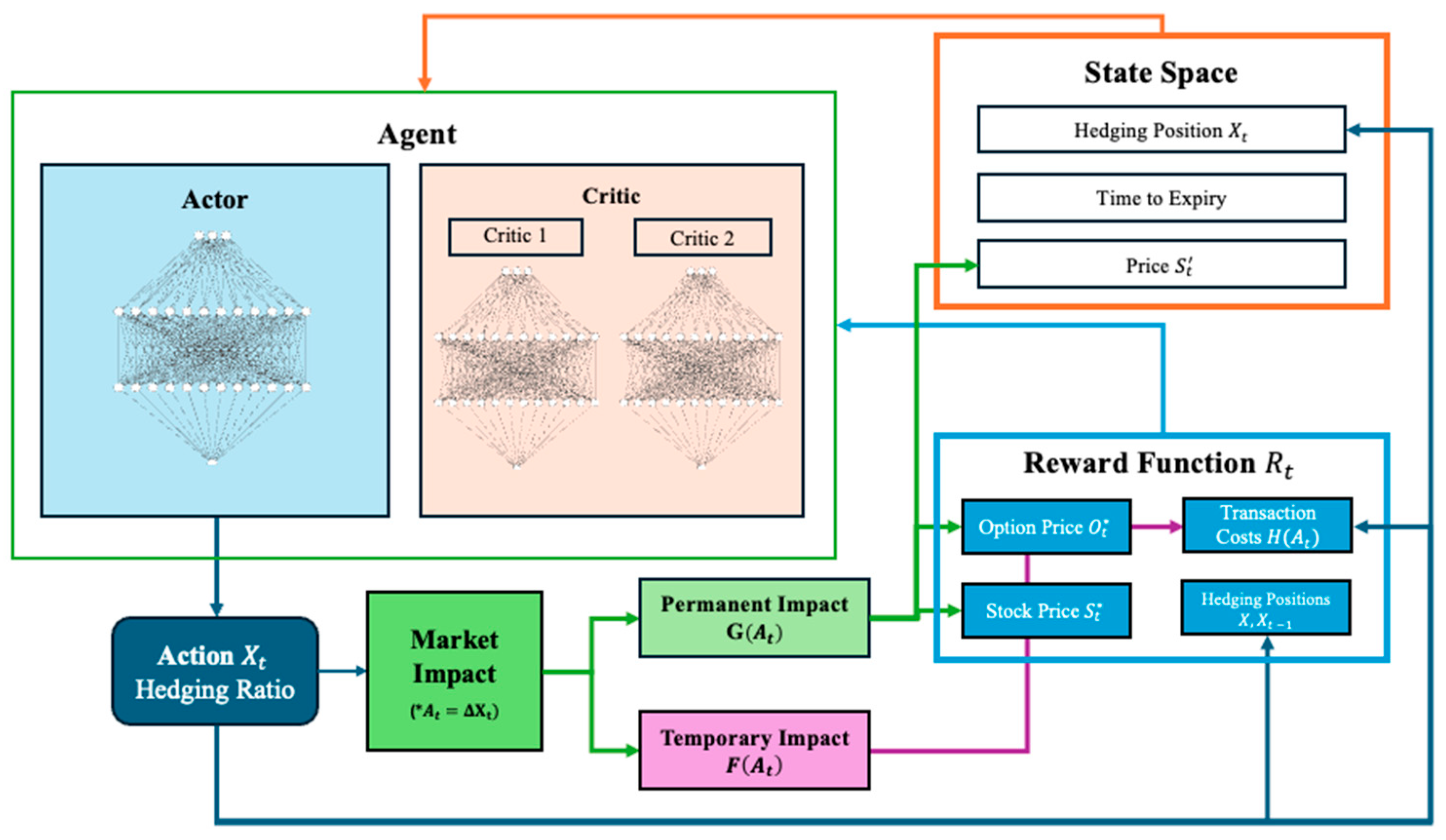

We integrate the market impact models into a discrete-time reinforcement learning environment for delta hedging, where the agent hedges a short position on a European call option. The agent (Figure 5) takes actions (the same as the hedging position size in Section 3.1) to rebalance its hedging position at a daily frequency. Each action represents a ratio of the total number of hedged securities owned by the agent, bounded between zero and one, meaning that the agent cannot short the underlying security. A hedge position of one is equivalent to owning the 100 shares underlying the option, assuming we are hedging 1 options contract. Each trading decision at time $t$ results in both a fixed cost (broker and exchange fees) and subsequent market impact at the following time step.

The agent’s observation space consists of:

Underlying security price (the impacted price if permanent market impact is enabled): If there is permanent market impact, the agent only sees the price following the cumulative residual impacts, and cannot see the original underlying price path.

Current hedging position: This represents how hedged the agent currently is.

Time to expiry: This indicates the amount of time the agent has until the option payout occurs, i.e., the time left in the training episode.

The agent itself has no knowledge of option Greeks, and uses only market information to decide its next hedging position.

3.2.1. Reward Function

The deep hedging problem can also be framed as a PnL-maximizing utility function in the form of a mean-variance optimization problem, treating our holdings as a portfolio at each timestep $t$ with a risk aversion parameter $\kappa$ to penalize high PnL variance, given by

$$\max \; \mathbb{E}[w_T] - \frac{\kappa}{2}\,\mathbb{V}[w_T],$$

where portfolio wealth $w_T$ is the sum of wealth increments $\delta w_t$,

$$w_T = w_0 + \sum_{t=1}^{T} \delta w_t,$$

resulting in

$$\mathbb{E}[w_T] = w_0 + \sum_{t=1}^{T} \mathbb{E}[\delta w_t], \qquad \mathbb{V}[w_T] = \sum_{t=1}^{T} \mathbb{V}[\delta w_t].$$

Similarly to Kolm and Ritter’s derivation, we assume that the wealth increments $\delta w_t$ and $\delta w_s$ are uncorrelated for $t \neq s$ (Kolm & Ritter, 2019).

We restructure the optimization function into a simpler objective for our hedging agent,

$$\min \; \sum_{t=1}^{T} \left( \mathbb{E}[-\delta w_t] + \frac{\kappa}{2}\,\mathbb{V}[\delta w_t] \right),$$

where $\delta w_t$ is the change in portfolio value between each discrete time step and $T$ is the total number of days until expiry when the option is first sold. The original implementation of Equation (8) by Almgren and Chriss was presented to optimize for a trading execution strategy that minimized total losses. As shown in the derivation by Kolm and Ritter (2019), this can be simplified into a reward function, $R_t$, by observing the changes in $w_t$ after each rebalance period,

$$R_t = \delta w_t - \frac{\kappa}{2}\,(\delta w_t)^2,$$

recasting the objective function into a reward after each action in our reinforcement learning setting.

The PnL (profit and loss) has 3 components: the value of the options position, the value of the underlying security holdings, and total transaction costs. At expiry, the reward function reflects the cost of liquidating the hedged position and the option payoff. Unlike in other studies, however, the deviations in PnL under this approach do not come exclusively from transaction costs, but are further complicated by slippage and market impact. We use a higher risk aversion parameter of 1.5 to discourage excess trading and subsequent price manipulation by the agent.
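The per-step reward can be illustrated with a short Python sketch. It assumes the mean-variance reward of Kolm and Ritter (2019), in which each wealth increment is penalized by half the risk aversion times its square; the function names `step_reward` and `episode_return` are hypothetical, and the paper’s exact reward may differ in detail.

```python
def step_reward(wealth_increment, risk_aversion=1.5):
    """Mean-variance style reward for one rebalance period.

    Assumed form: R_t = dw_t - (risk_aversion / 2) * dw_t**2, so large
    swings in PnL are penalized whether they are gains or losses.
    """
    return wealth_increment - 0.5 * risk_aversion * wealth_increment ** 2


def episode_return(wealth_increments, risk_aversion=1.5):
    """Undiscounted sum of per-step rewards over one hedging episode."""
    return sum(step_reward(dw, risk_aversion) for dw in wealth_increments)


# A gain of 1.0 followed by a loss of 1.0 nets zero PnL but a negative
# episode return, because both swings are penalized.
print(step_reward(1.0), step_reward(-1.0), episode_return([1.0, -1.0]))
```

The quadratic term is what makes a well-hedged path with small increments preferable to a volatile path with the same total PnL.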

We define the total portfolio value at time $t$ in terms of four components: the value of the underlying security holdings, the value of the cash account, the value of the option given its time to expiry, and the transaction cost of all rebalancing trades prior to time $t$ (which includes temporary market impact). The value of the call option is calculated at each time step using the Black–Scholes equation. The cash account tracks the value of the financing required to purchase the hedged positions; we also assume that the agent exists within a zero-interest-rate environment, which applies both to positive cash (from liquidated shares) and to negative funds resulting from borrowing. We assume that the maximum borrowing capacity of the agent is limited to the number of shares required to fully hedge the options position (i.e., achieve a hedge ratio of 1).

Because the portfolio is self-financing, the wealth increment over each time step can be expressed in terms of the holdings at the start of the step and the change in security value of the underlying position. In practice, the cash account reflects the cash needed to finance our hedge. However, as we assume an interest-free environment and that cash flows are used only to adjust the hedged position, the cash account behaves as a passive balancing term rather than an active wealth component.

Permanent market impact adds an additional layer of complexity to both the value of the underlying and the value of the derivative. In this scenario, the subsequent price paths are redefined following each action, yielding new underlying and option price paths. Each is recalculated after finding the cumulative residual impact. The price impact of the trade is immediately reflected in both the impacted underlying price and the impacted option price in the reward calculation. The PnL under both slippage and price impact is then computed from these impacted price paths.

3.2.2. Model Parameters

We compare three different continuous off-policy deep reinforcement learning algorithms:

DDPG introduces a deterministic actor and critic pair to learn a continuous hedge ratio from replay-buffer data, but its single-critic design and heuristic noise exploration can suffer from value over-estimation and unstable convergence in volatile markets. TD3 tackles these weaknesses with three tweaks: (i) twin critics and a “min” target to damp over-optimism, (ii) delayed policy updates so the actor trains on more reliable Q-values, and (iii) target-policy smoothing that averages Q-targets over a noisy action neighborhood. SAC goes a step further by learning a stochastic, entropy-regularized policy. Maximizing expected return plus an entropy bonus keeps the agent exploring a spectrum of hedge sizes rather than locking into one deterministic action, a property that helps it navigate the multi-modal, noisy reward landscape created by liquidity shocks. Like TD3, SAC uses twin critics to curb over-estimation (J. Cao et al., 2020, 2023; G. Cao et al., 2024), but its entropy term automatically tempers policy shifts and improves sample efficiency.

Empirical studies show SAC and TD3 generally outperform vanilla DDPG on continuous-control and trading tasks, with SAC excelling when market dynamics are highly stochastic or non-stationary. Together, these algorithms form a progression of increasing robustness for deep hedging: DDPG for baseline continuous control, TD3 for bias-corrected stability, and SAC for entropy-driven adaptability in the face of transaction costs and market impact.

We train the agents using the Stable-Baselines3 implementation of each algorithm. We use similar hyperparameters in performance evaluations across all three models. The parameters were originally calibrated on a no-friction hedging environment using DDPG, and were then equalized across algorithms to (1) maximize training efficiency, (2) provide a comparable benchmark between the three algorithms, and (3) prevent overfitting across different market conditions.

The hyperparameters for our simulation environment are:

Policy Updates: we update the policy at every 4 timesteps. This frequency was chosen to find a balance between update frequency and training time; we found that more frequent updates did not drastically improve learning speed but did significantly increase training time per agent. Our update frequency corresponds to a policy update 5 times per episode;

Replay Buffer Size: A buffer of size 100,000, enabling the agent to learn from a diverse set of past experiences, improving sample efficiency and avoiding overfitting to recent market conditions. Other buffer sizes, including $10^7$, were also tested;

Soft Update Parameter (τ): A target network soft update coefficient of 0.0001, which ensures stable convergence by gradually updating the target network weights to prevent sudden shifts in learning;

Optimizer: The Adam optimizer is employed, with relatively low learning rates for the actor and critic networks. This implementation is the same as presented in Mikkilä and Kanniainen (2022).

The hyperparameters were chosen after running no-transaction-cost simulations of the DDPG algorithm, prioritizing time to convergence as well as total training time, unless otherwise stated. For the neural network hyperparameters, we use the default implementation with two fully connected layers for both the actor and critic networks, each with 256 units. Furthermore, we apply ReLU activation functions within our fully connected networks, consistent with the approach of J. Cao et al. (2020).

3.2.3. Data

We use Geometric Brownian Motion (GBM) to simulate the underlying price path without market impact until expiry. The GBM model assumes that the price path $S_t$ follows the stochastic differential equation:

$$dS_t = \mu S_t\,dt + \sigma S_t\,dW_t.$$

We assume that the agent rebalances its hedging position discretely rather than continuously. In this setting, the GBM model is discretized as:

$$S_{t+\Delta t} = S_t \exp\left( \left(\mu - \tfrac{1}{2}\sigma^2\right)\Delta t + \sigma \sqrt{\Delta t}\, Z_t \right),$$

where $\mu$ is the stock’s annualized expected return, $\sigma$ is the annualized volatility, $Z_t$ is a standard normal random variable and $\Delta t$ is a discrete time step, specifically 1 day. The simulation parameters are held fixed across paths, with a time to expiry of 1 month (21 trading days). We generate 20,000 independent price paths, which are reused across different training environments.
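A daily GBM path generator can be sketched with the standard library alone. The sketch assumes the exact log-normal discretization and 252 trading days per year; the function name `simulate_gbm_path` and the example values for the initial price, drift and volatility are hypothetical and are not the paper’s settings.

```python
import math
import random


def simulate_gbm_path(s0, mu, sigma, n_steps=21, dt=1.0 / 252.0, rng=random):
    """Simulate one discretized GBM price path with n_steps daily steps.

    Uses S_{t+dt} = S_t * exp((mu - sigma^2 / 2) * dt + sigma * sqrt(dt) * Z),
    with Z drawn from a standard normal distribution.
    """
    path = [s0]
    for _ in range(n_steps):
        z = rng.gauss(0.0, 1.0)
        growth = (mu - 0.5 * sigma ** 2) * dt + sigma * math.sqrt(dt) * z
        path.append(path[-1] * math.exp(growth))
    return path


# Hypothetical parameters for illustration only.
rng = random.Random(42)
paths = [simulate_gbm_path(100.0, 0.05, 0.2, rng=rng) for _ in range(5)]
print(len(paths), len(paths[0]))
```

Seeding a dedicated `random.Random` instance makes the set of paths reproducible, which matters when the same paths are reused across training environments.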

We price the environment’s option using the Black–Scholes model, which provides a closed-form solution for pricing our European call option. In frictionless environments with continuous rebalancing, the Black–Scholes delta hedge provides a perfect hedge and risk neutralization.

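The Black–Scholes model for pricing call options is used as a benchmark to calculate the European call option price and its respective delta across $t \in [0, T]$, where the call option price $C_t$ is:

$$C_t = S_t\,N(d_1) - K e^{-r(T-t)}\,N(d_2),$$

where

$$d_1 = \frac{\ln(S_t / K) + \left(r + \tfrac{1}{2}\sigma^2\right)(T - t)}{\sigma\sqrt{T - t}}, \qquad d_2 = d_1 - \sigma\sqrt{T - t},$$

and $T$ is the time to expiry at the start of the episode, $K$ is the strike price, $r$ is the risk-free interest rate, $\sigma$ is the underlying volatility, and $N(\cdot)$ denotes the cumulative distribution function of the standard normal distribution. The delta, $\Delta_t$, used in a standard Black–Scholes hedge is

$$\Delta_t = N(d_1).$$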
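The Black–Scholes benchmark can be written compactly in Python using the standard library’s error function. This is a minimal sketch; the function names `norm_cdf` and `bs_call`, the treatment of expiry as intrinsic value, and the numerical inputs in the usage line are illustrative assumptions rather than the paper’s settings.

```python
import math


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_call(spot, strike, rate, sigma, tau):
    """Black-Scholes European call price and delta.

    tau is the remaining time to expiry in years. At expiry (tau <= 0) the
    price is the intrinsic value and the delta is 1 if in the money, else 0.
    """
    if tau <= 0.0:
        return max(spot - strike, 0.0), (1.0 if spot > strike else 0.0)
    vol_sqrt_tau = sigma * math.sqrt(tau)
    d1 = (math.log(spot / strike) + (rate + 0.5 * sigma ** 2) * tau) / vol_sqrt_tau
    d2 = d1 - vol_sqrt_tau
    price = spot * norm_cdf(d1) - strike * math.exp(-rate * tau) * norm_cdf(d2)
    return price, norm_cdf(d1)


# Textbook example: at-the-money call, 5% rate, 20% volatility, one year.
price, delta = bs_call(100.0, 100.0, 0.05, 0.2, 1.0)
print(round(price, 4), round(delta, 4))  # 10.4506 0.6368
```

A delta-hedging benchmark would call `bs_call` at every timestep with the current price and remaining time, and hold `delta` times the contract size in shares.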

3.2.4. Volatility Estimation Under Permanent Market Impact

To account for fluctuations in price when permanent market impact is present, we introduce an adaptive volatility model to price the options rather than using a constant volatility. This new measure does not affect the future price path, but instead is an episode-specific volatility estimate that is meant to reflect the implied volatility of the impacted price path.

We employ a GARCH(1,1)-type model that updates volatility estimates in response to the agent’s trading activity. In this formulation, the variance at timestep $t$, $\sigma_t^2$, is determined by a constant term $\omega$, the most recent squared return $\epsilon_{t-1}^2$ and the previous variance $\sigma_{t-1}^2$. Mathematically, the model is expressed as $\sigma_t^2 = \omega + \alpha\,\epsilon_{t-1}^2 + \beta\,\sigma_{t-1}^2$, with weights $\alpha$ and $\beta$. Here, $\epsilon_t$ represents the shock term at time $t$, or in our case the last observed return of the underlying security over the latest interval, which includes the permanent market impact.

Prior to agent intervention, we simulate a rolling window of daily returns based on a standard geometric Brownian motion with constant volatility. This forms the basis for initializing the variance estimate. Once the agent begins trading, the returns are recalculated at each timestep using the updated, impacted price, and the process continues to update accordingly. By aligning the derivative’s volatility with the returns influenced by the agent’s trades, any strategic distortion of the price by the agent, particularly under permanent impact, is captured in option pricing and reflected in the reward structure.

Following each action, the environment recalculates the volatility of the underlying to include the latest underlying returns. Large or aggressive trades result in more significant price moves, which subsequently inflate the variance estimate via the squared-return term of the update. Because this volatility is then used to value the option (e.g., via Black–Scholes or numerical methods), a higher volatility estimate increases the cost of hedging and penalizes the behavior in the reward function. The volatility adjustment is intended to increase the price of the option if the agent trades aggressively (Figure 6), consequently decreasing rewards.
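The variance recursion described in this section has the structure of a GARCH(1,1) update, which can be sketched as follows. The function names `garch_update` and `annualized_vol`, the coefficient names `omega`, `alpha` and `beta`, the 252-day annualization, and the numbers in the usage lines are assumptions for illustration only.

```python
import math


def garch_update(prev_variance, last_return, omega, alpha, beta):
    """One step of a GARCH(1,1)-style variance recursion.

    new_variance = omega + alpha * last_return**2 + beta * prev_variance
    where last_return is the most recent (impact-inclusive) daily return.
    """
    return omega + alpha * last_return ** 2 + beta * prev_variance


def annualized_vol(daily_variance, trading_days=252):
    """Convert a daily variance into an annualized volatility."""
    return math.sqrt(trading_days * daily_variance)


# A larger absolute return (e.g., after an aggressive trade) raises the
# variance estimate, and therefore the volatility used to price the option.
calm = garch_update(1e-4, 0.005, omega=1e-5, alpha=0.1, beta=0.8)
shocked = garch_update(1e-4, 0.03, omega=1e-5, alpha=0.1, beta=0.8)
print(annualized_vol(calm), annualized_vol(shocked))
```

Feeding the annualized volatility into the option pricer at each step is what links the agent’s own price distortion back to the option value in the reward.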

4. Results

4.1. Comparison of DDPG, TD3 and SAC

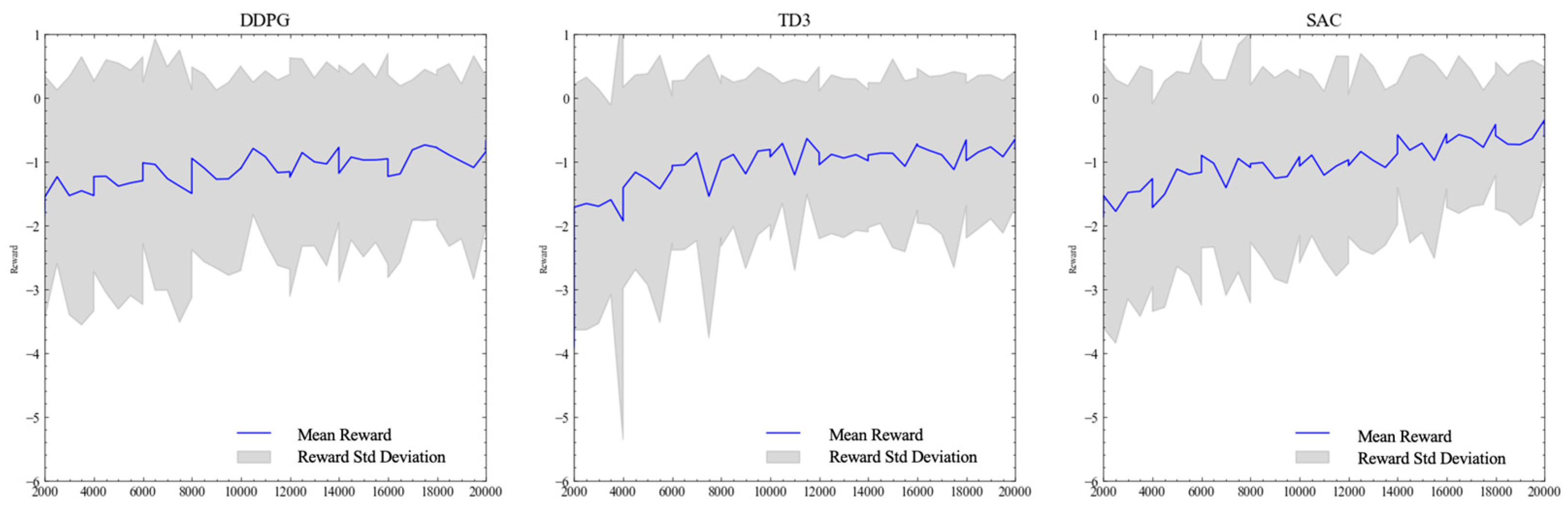

In this section, we present numerical results comparing in-sample and out-of-sample training performance for 3 continuous, off-policy models: DDPG, TD3, and SAC. We analyze each model’s ability to maximize the total objective and converge throughout the 20,000 episodes.

First, we train all 3 models in our simplest experimental setup, the transaction-cost-only environment with a transaction cost of 5 cents per share. Table 1 compares the model outcomes when trained in a transaction-cost-only environment, providing a benchmark for RL agent performance in more complex market scenarios. In Figure 7, we see that the DDPG and TD3 algorithms exhibit very similar learning patterns, with relatively quick convergence and stable out-of-sample performance, and both converge at approximately the same point. SAC, on the other hand, continues to learn past 20,000 episodes, with its out-of-sample reward increasing in an almost linear fashion. SAC also took much longer to train per episode, highlighting the added computation needed for entropy-based learning. We continued to train the SAC model in this environment until 30,000 episodes, by which point its out-of-sample reward had converged.

We then initiate a new comparison environment with both permanent and temporary market impact. Here we use lower-magnitude parameters to serve as a benchmark case when lighter market frictions are introduced: a transaction cost $c$ of 1 cent/share, an impact multiplier of 1 and an impact decay rate of 1. In Figure 8, we illustrate the average reward per episode achieved on both the training and testing data, where model performance is evaluated on the testing data every 500 episodes. DDPG and TD3 both demonstrate strong convergence on the training data, reaching similar and stable levels past 2000 episodes. However, DDPG fails to generalize as well as TD3 when exposed to new price paths generated with the same parameters, showing much higher volatility between evaluation periods. TD3, on the other hand, maintains similar in-sample and out-of-sample performance, with a deviation of only 0.5. Both TD3 and DDPG converge relatively quickly in-sample past episode 3000 and maintain a stable upward learning path thereafter, although little of this later improvement translates to out-of-sample performance. SAC, by contrast, shows a large divergence between in-sample and out-of-sample performance throughout training, even in its later stages. Although the SAC algorithm appears to continue learning on the training dataset, this does not translate well to its performance on the validation dataset, especially in the more complex environment.

The differences between TD3 and SAC can be better visualized in Figure 7, where we directly compare the relative performance (out-of-sample reward minus in-sample reward) of the two algorithms. Although TD3 is able to sustain relatively high performance metrics, it exhibits higher volatility across evaluation episodes, whereas the SAC agents perform better out-of-sample. This is likely due to SAC’s added exploration and stochasticity during training, whereas our evaluation process for both models relies on deterministic predictions. TD3’s in-sample metrics rarely surpassed its out-of-sample metrics by a large margin at any evaluation point, and it appears to maintain a relatively stable transfer of its learned parameters when tested out-of-sample.

In Table 2, we see that DDPG also underperforms both SAC and TD3 in the more complex environment, albeit slightly. TD3 and SAC achieve lower overall variance in both PnL and total rewards, as well as a lower conditional value at risk. Furthermore, SAC achieves a very low relative standard deviation compared to both other agents, especially as training continued, whereas DDPG and TD3 showed limited improvement past 10,000 episodes (Figure 8). Counter to our expectations, this is achieved while SAC trades more (~17% more than DDPG and ~10% more than TD3) in the environment with both sets of market impact, implying that the agent learns to trade more than the deterministic algorithms while achieving a higher PnL.

Following our comparative analysis of SAC, TD3, and DDPG, we have chosen to focus our subsequent experiments exclusively on SAC and TD3, each for distinct reasons. TD3 demonstrates notably faster training times coupled with solid overall performance; however, it does not achieve SAC’s consistently higher average rewards and lower reward variance, even after extended training periods. Both SAC and TD3 exhibit rapid convergence within a relatively small number of training episodes, with marginal improvements beyond approximately 15,000 episodes, whereas DDPG shows a markedly slower learning rate. The following sections will provide an in-depth analysis of the hedging strategies derived by each algorithm under varying test scenarios and complexity levels, as well as specific case studies of robustness among each model’s respective policies.

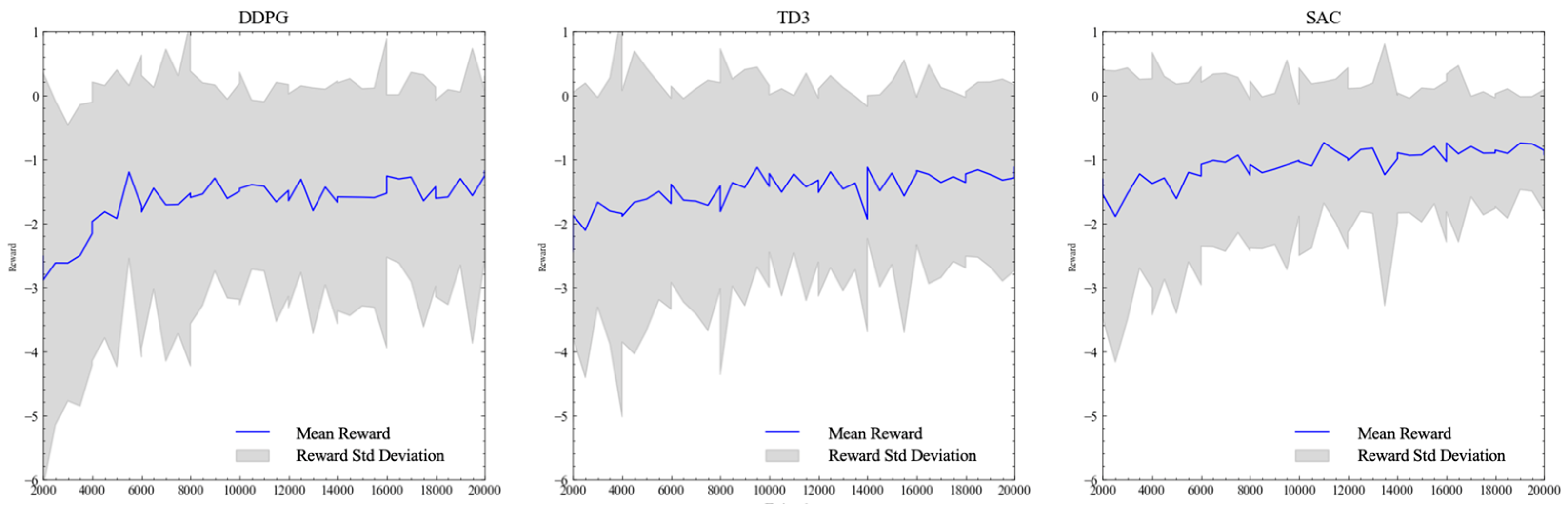

4.2. Transaction Cost Only Experiments

This section serves as a baseline to evaluate the performance of reinforcement learning (RL) agents under conditions with transaction costs but no market impact. The objective is to compare the hedging efficiency of our RL agents (TD3 and SAC) against traditional Black–Scholes delta hedging under varying transaction costs ($c$) and to verify the results of existing literature. In the subsequent sections, we add further frictions to the environment to assess trading efficacy. We train independent agents in 5 different environments of varying transaction costs per share, ranging from 1 cent per share to 20 cents per share. Table 3 reports the rewards, conditional value at risk, and total transaction costs per episode across the 5 experiments.

The results demonstrate that our experimental setup can replicate the relative results of existing deep hedging research, with TD3 and SAC slightly outperforming the delta hedging benchmark across most metrics and environments. As expected, the delta hedge exhibits lower PnL and episodic reward, in addition to a much higher standard deviation on both metrics. Both agents trade less than the delta hedging agent (with transaction costs being proportional to total shares traded) and lower the standard deviation of P&L and rewards in addition to minimizing conditional value at risk. SAC surpasses TD3 in lowering volatility, with lower reward and P&L volatility in addition to a lower value at risk across all environments. Counter to our original expectations, SAC achieves both a smaller total risk and a high relative P&L with higher turnover than TD3. TD3, on the other hand, learns to reduce total costs by trading less frequently, consistently having the lowest transaction cost of the three.

In

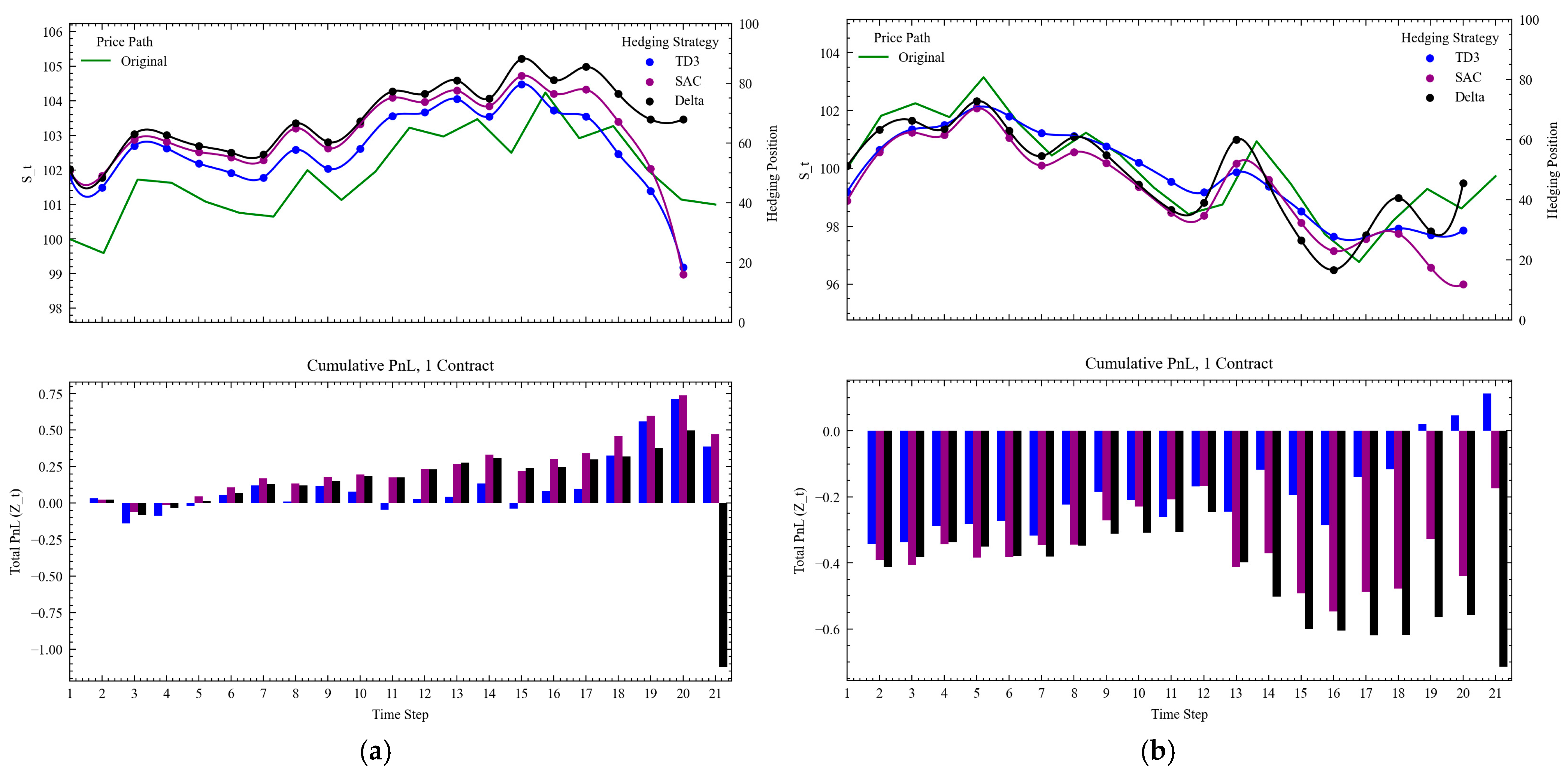

Figure 9 we illustrate two examples of hedging patterns when the cost to trade is higher at

We see that when the option expires in-the-money (left), the agents hold onto the shares as long as possible, but begin to liquidate their hedging positions slowly once they are confident the option is going to expire in-the-money, with SAC liquidating slightly faster than TD3. Overall, the agents remain underhedged compared to the benchmark, but can earn small profits from their trades as the underlying prices move. The delta hedging strategy on the other hand holds onto the positions, resulting in a large liquidation cost at expiry. When the option expires at the money (right), we see that the agents maintain a similar trading pattern in the presence of high hedging costs, closely emulating the delta hedge until the close. SAC is more careful in its approach, maintaining a closer hedge to the delta hedge relative to TD3, which begins liquidation consistently with 3 days to expiry.

4.3. Temporary Impact Only

Across this set of results, we illustrate the RL agents’ behavior when the environment has transaction costs and temporary impact costs following each transaction. This scenario emphasizes the agent’s ability to adapt to cost dynamics that are proportional to trade size, offering a robust test of hedging strategies under transient market frictions. In our reward function, the temporary impact is immediately reflected in the reward at the time step of the trade. The total slippage cost is proportional to the magnitude of the total shares traded relative to the average daily volume (ADV). For simplicity, all environments hedge a single option contract. We set the environment ADV to 1000 shares and assume a constant trading cost of 5 cents per share.

The temporary market impact effectively introduces an additional cost (slippage) on every trade. Unlike a fixed transaction cost, however, the slippage cost per share scales with the agent’s trade size through the stochastic impact scalar Y.
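To make the difference between the two cost types concrete, the sketch below contrasts a fixed per-share fee with a slippage term whose per-share cost grows with trade size relative to ADV. The linear functional form, the parameter name `beta`, and the way the scalar `y` enters are assumptions made for illustration only; the actual impact function is the one defined in Section 3.1. The ADV of 1000 shares and the 5-cent fee follow the setup described above.

```python
def trade_cost(
    shares_traded: float,
    adv: float = 1000.0,          # average daily volume, as set in this section
    fee_per_share: float = 0.05,  # constant trading cost of 5 cents per share
    beta: float = 1.0,            # hypothetical impact magnitude
    y: float = 1.0,               # one draw of the stochastic impact scalar Y
) -> float:
    """Fee plus temporary-impact slippage for a single trade (assumed linear form)."""
    size = abs(shares_traded)
    participation = size / adv                       # trade size relative to ADV
    slippage_per_share = beta * y * participation    # grows with trade size
    return size * (fee_per_share + slippage_per_share)


# One 100-share trade versus two 50-share trades under the same draw of Y.
single = trade_cost(100.0)
split = trade_cost(50.0) + trade_cost(50.0)
print(single, split)
```

Under this assumed form the fee component is identical in both cases, while the slippage component is smaller when the trade is split, so trade size itself becomes a decision variable for the agent.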

Table 4 reports the results across various levels of impact magnitude. Overall, the agents outperform the benchmark at both lower and higher constant transaction costs, with superior risk-adjusted performance and total PnL (one agent being the sole outlier).

The agents consistently achieve a lower CVaR relative to the delta hedging agent, along with lower overall transaction costs; this difference becomes more extreme as the level of

increases. We can visualize the difference in

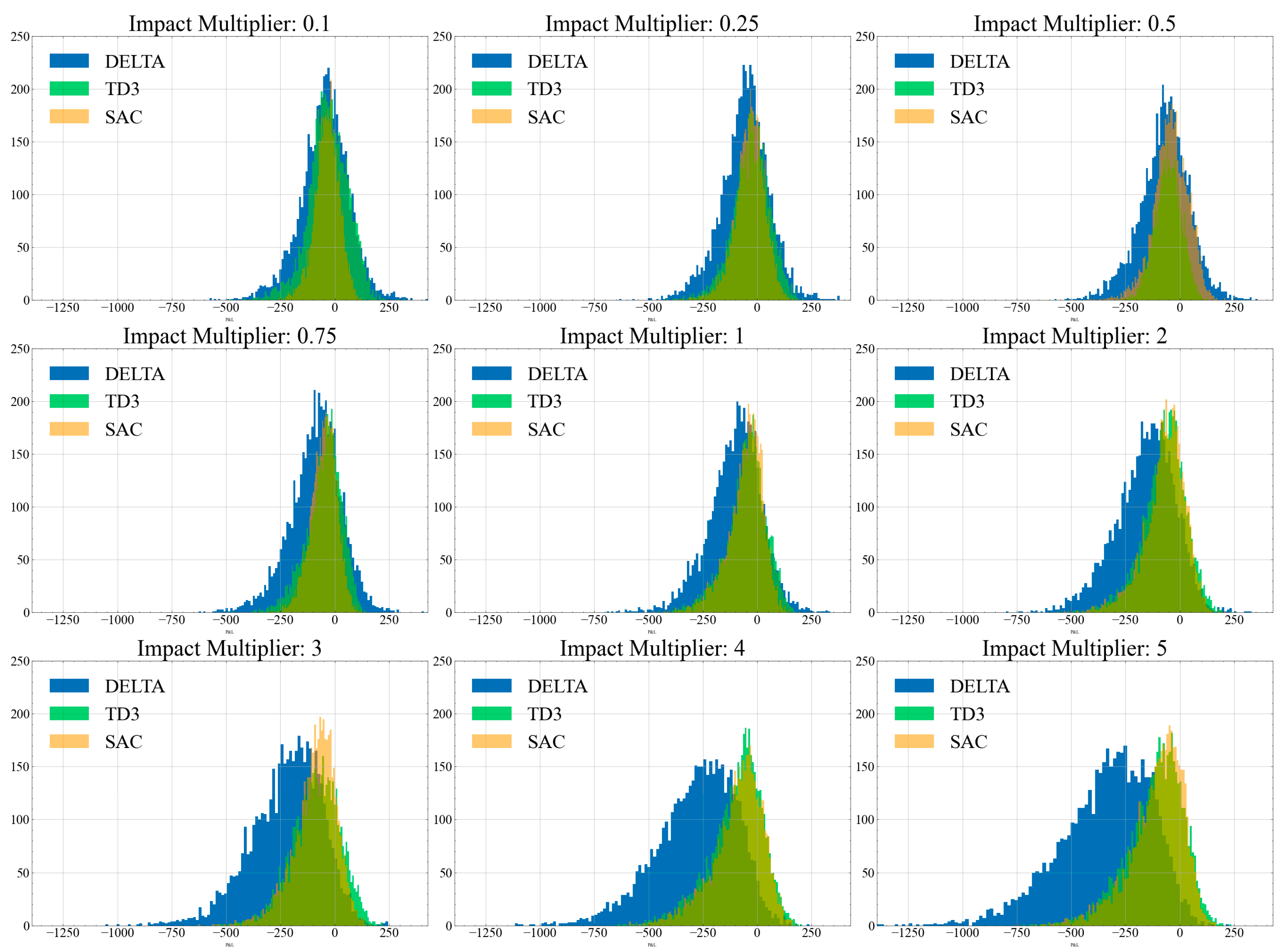

Figure 10, which illustrates a histogram of the total PnL across various levels of

for the Delta, TD3 and SAC evaluations.

In Figure 11, we can better analyze the agents’ learned behaviors in dealing with higher, stochastic slippage costs. Closer to expiry, the agent does not divest its hedged positions as quickly as the benchmark when the option is in-the-money (Figure 11b). However, when the option is out of the money (Figure 11a), we see that the agent liquidates faster than the benchmark strategy; as designed, the temporary impact function encourages faster liquidation to avoid larger total transaction costs, unlike the delta agent. Although the option expires close to the strike price near the end, the agent decides to liquidate much earlier.

4.4. Temporary and Permanent Market Impact

This section evaluates the performance of reinforcement learning (RL) agents under the most realistic trading conditions, incorporating both temporary market impact (F) and permanent market impact (G). These dual frictions create a challenging environment where agents must optimize their hedging strategies to manage short-term deviations in execution price and long-term impacts on the underlying asset’s price path. The results shown below indicate that the RL agents outperform the traditional delta hedging benchmark across all evaluation metrics in these scenarios.

We initialize an environment with both permanent and temporary market impact. As outlined in Section 3.1, the total impact M(X″) is split evenly between a permanent and a temporary component, i.e., F(X″) = G(X″) = αM(X″), where α = 0.5 (as defined in Section 3.1). The temporary impact is reflected in the execution price, seen by the agent through the subsequent reward, while the permanent impact is reflected in the future price path and option value. We analyze the results across various impact magnitudes (β) and decay rates (γ), with a higher constant transaction cost of 5 cents per share. Note that, compared to the experiments in Section 4.2 and Section 4.3, we increase the range of impact multipliers in our environments to account for impact being allocated to both F(X″) and G(X″). As in Section 4.3, we also apply the dynamic volatility model to our environment to account for any large changes in price volatility caused by the market impact.
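The even split between the two impact components can be sketched as follows. This is a simplified illustration under stated assumptions rather than the environment's actual code: the function names are hypothetical, impact is expressed directly in price units, and the decay of impact governed by γ is deliberately left out.

```python
ALPHA = 0.5  # share of total impact assigned to each component, as in Section 3.1


def split_impact(total_impact: float) -> tuple[float, float]:
    """Return (F, G): temporary and permanent impact, each equal to ALPHA * M."""
    temporary = ALPHA * total_impact  # F: affects only this trade's execution price
    permanent = ALPHA * total_impact  # G: shifts the price path going forward
    return temporary, permanent


def apply_trade(mid_price: float, side: int, total_impact: float) -> tuple[float, float]:
    """Return (execution_price, next_mid_price) for a buy (side=+1) or sell (side=-1).

    Assumption: the trader pays the temporary impact on execution, while the
    permanent impact moves the mid price seen in later steps.
    """
    temporary, permanent = split_impact(total_impact)
    execution_price = mid_price + side * temporary
    next_mid_price = mid_price + side * permanent
    return execution_price, next_mid_price


# Hypothetical buy with a total impact of 0.20 in price units.
print(apply_trade(mid_price=100.0, side=+1, total_impact=0.20))
```

In this reading, a purchase made to hedge a short call is filled above the mid price and also raises the price at which the option is subsequently valued, which is why the agent has to weigh both effects when sizing a trade.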

As per Table 4, the RL agents demonstrated robust adaptability by tailoring their trading strategies to account for both temporary and permanent market impacts simultaneously. Unlike prior experiments where the agents focused on a single type of friction, the combined impact environment required the agents to balance competing trade-offs: reducing turnover to mitigate slippage while strategically managing position sizes to minimize the residual effects of permanent impact.

As seen in Table 5, the RL agents achieved superior performance relative to the benchmark in terms of total hedging PnL and turnover, with minimal drawdown risk across varying levels of slippage magnitude and impact persistence. SAC achieves the highest mean episodic reward along with the lowest standard deviation in every experiment, which is also reflected in its smaller value at risk compared to the benchmark and TD3. Although TD3 achieves a slightly higher PnL in most experiments, SAC exhibits superior risk management abilities despite having higher transaction costs. This reflects SAC’s optimization for stochastic tasks (such as trading with market impact); the agents likely learned additional patterns in the state space to temper risks given the algorithm’s entropy maximization.

The agents’ trading behavior reflected a nuanced understanding of the interaction between temporary and permanent impacts. When impact magnitude was substantial, agents adopted a conservative trading approach, limiting large rebalancing trades to avoid compounding costs. By prioritizing minimal turnover, the agents reduced the total impact and slippage costs while maintaining an effective hedge across the episode. As the impact increased, we also see a marked decrease in transaction costs amongst the RL agents relative to Delta, with TD3 trading the least.

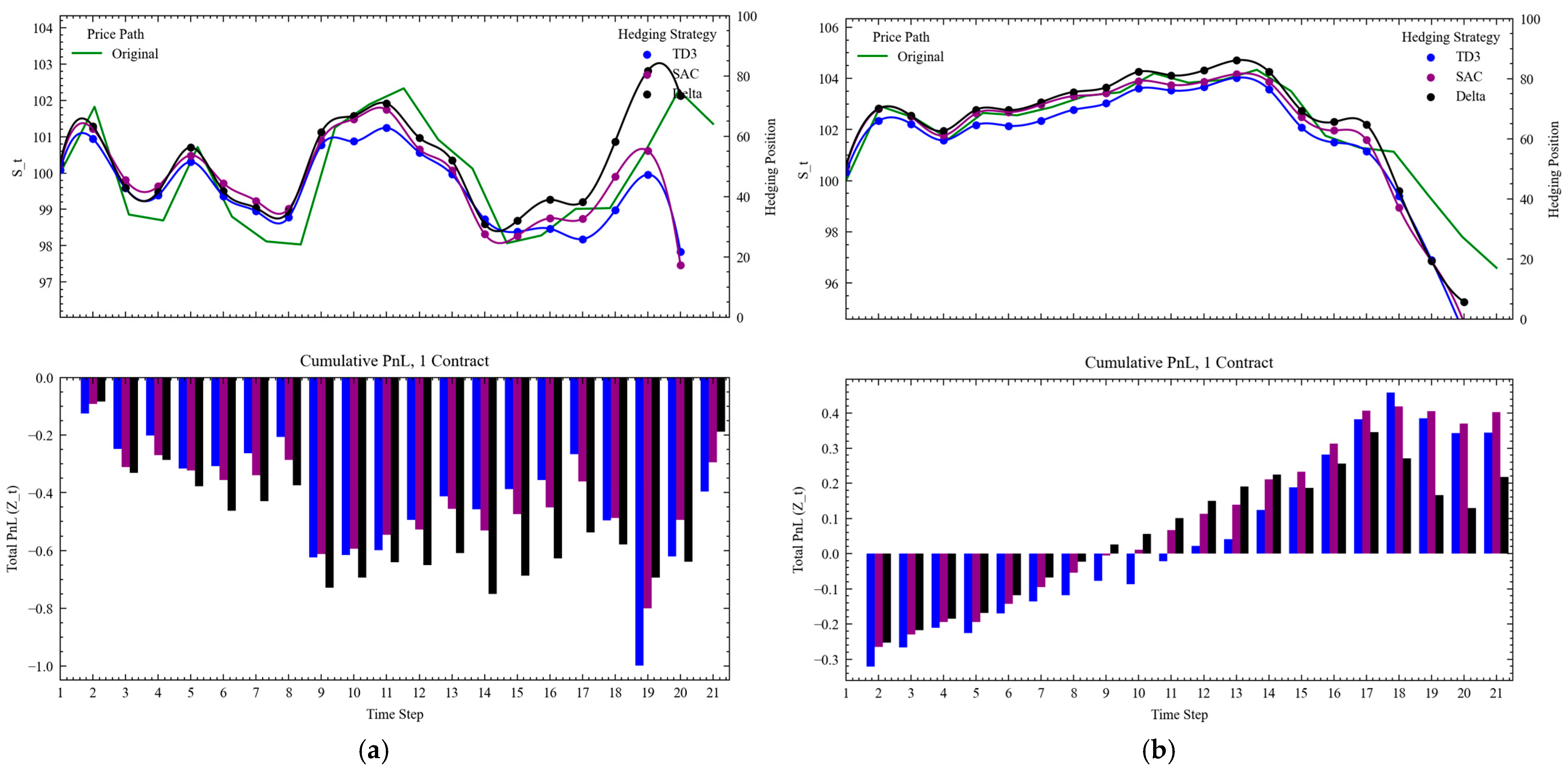

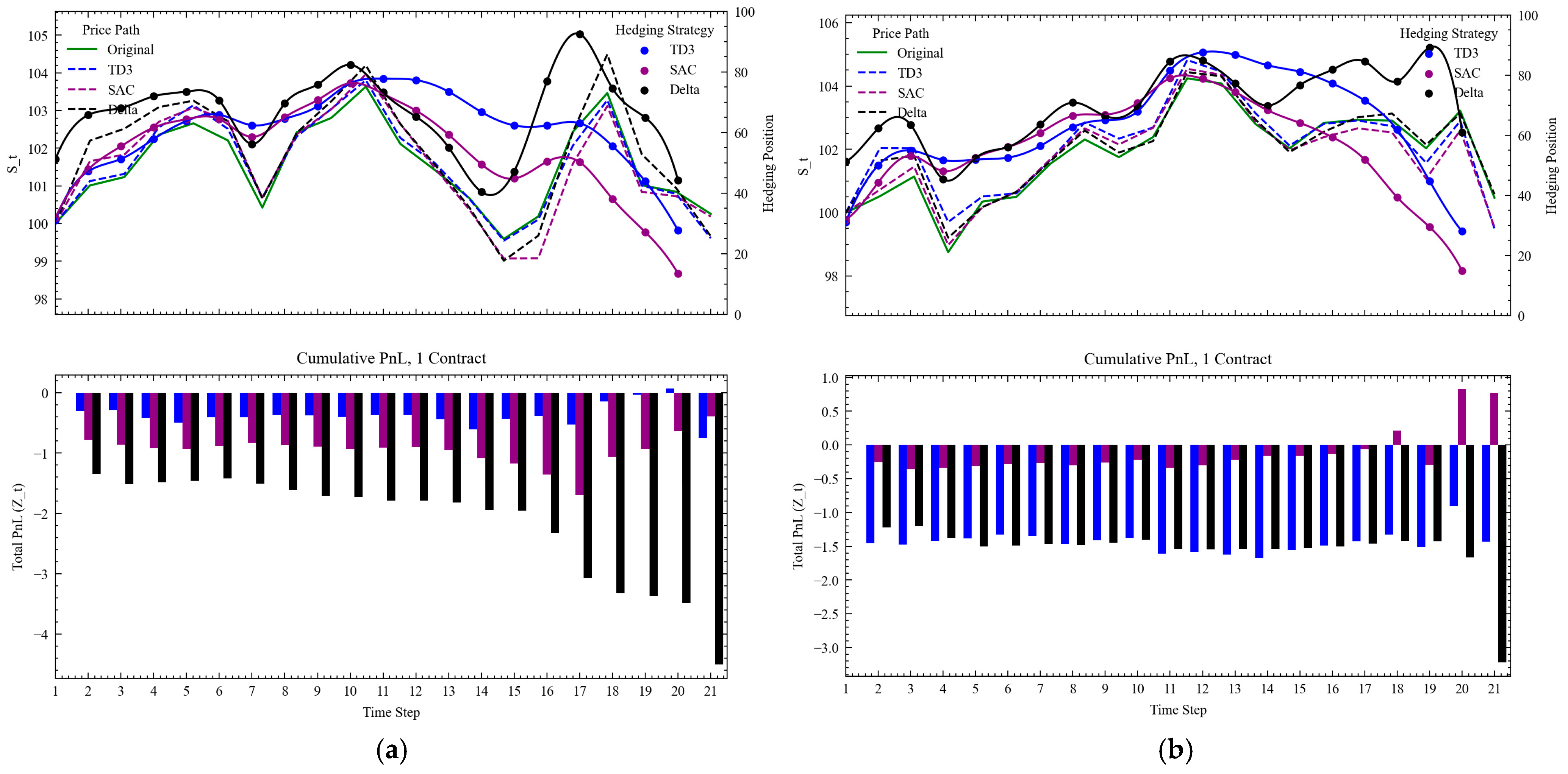

In Figure 12, we can see two specific examples of the agents hedging an option that expires in the money and out of the money. First, across both panels we see that the agents now start at a lower hedging position relative to the delta strategy to reduce the total upward impact on the underlying security. When the option expires in the money (left), we see that the agents learn to decrease their positions substantially prior to expiry to mitigate the volatility spike from a single large trade. We also see that SAC rapidly sells at t = 20, likely to impact the underlying’s price prior to expiry as much as possible to maximize its PnL at the end of the episode. The delta hedging strategy, on the other hand, holds onto its underlying position and is forced to liquidate at t = 21, resulting in a large PnL drop. Overall, it is interesting to see that the agents are willing to sacrifice early rewards to maximize their rewards at expiry, despite being trained across time steps (rather than episodes). Next, we see that when the trading is against an in-the-money security, the agents slowly liquidate their positions to mitigate against a sudden spike in price (and the costs of overcorrecting their positions).

We conclude that the SAC algorithm is the most suitable for complex hedging environments. These findings highlight the practical applicability of RL-based hedging strategies for institutional traders operating in illiquid or high-cost markets. By optimizing for both immediate and residual costs, the RL agents offer a robust framework for managing derivative portfolios under realistic trading conditions. This capability is particularly valuable for minimizing the combined effects of execution delays and market impact, ensuring cost-efficient and risk-aware portfolio management.

5. Discussion

Our results offer empirical support to the growing body of literature advocating the use of deep reinforcement learning (DRL) for derivative hedging in frictional markets. Similar to the findings of Buehler et al. (2018) and Mikkilä and Kanniainen (2022), we observe that DRL models not only outperform traditional delta hedging in environments with transaction costs, but also show robust adaptability in the presence of execution slippage and permanent market impact. By constructing an environment where both types of market impact are explicitly modeled, and validated across thousands of simulated paths, we provide a more nuanced and empirically grounded understanding of hedging performance under real-world trading constraints.

Our side-by-side comparison of DDPG, TD3, and SAC across multiple experimental environments distinguishes our work from previous studies, which often focus on a single algorithm. For example, while Francois et al. (2025) incorporated market frictions in deep hedging, they primarily evaluated a single agent, making it difficult to generalize findings across algorithm classes. Our comparative analysis shows that TD3 and SAC not only outperform Black–Scholes delta hedging across all experiments but also maintain superior risk-adjusted performance across environments with varying slippage, impact, and transaction costs. DDPG, in contrast, exhibits instability in out-of-sample performance, reinforcing earlier concerns about its reliability in high-variance settings.

SAC’s stochastic policy proves particularly effective in environments with significant stochasticity, as demonstrated in both the temporary and combined impact experiments. It consistently achieves higher average rewards and lower CVaR compared to TD3 and DDPG, despite trading more frequently. This challenges the assumption, commonly seen in friction-based literature (e.g., Leland, 1985), that minimizing trades necessarily leads to improved hedging efficiency. Instead, SAC learns to identify and exploit state-dependent opportunities where trading, even with higher transaction costs, yields net performance benefits.

Moreover, our findings expand on the insights from Shi et al. (2024) and Cheridito and Weiss (2025), who explored DRL applications to market making and execution optimization under impact. By contextualizing our results in the broader scope of DRL-based trade execution, we illustrate that our RL agents’ behavior mirrors that of tactical execution agents, strategically delaying or reducing trades in response to liquidity constraints. Our use of a dynamic volatility model further enhances realism by penalizing agent-induced volatility appropriately, so that learned policies do not exploit arbitrage-like behaviors.

Our experiments also emphasize the importance of environment complexity on model selection. While TD3 performs admirably under simpler transaction cost-only scenarios due to its deterministic policy and bias-reduction mechanisms, it falls short in capturing the adaptive behaviors required under complex frictions. SAC’s entropy-maximizing formulation appears better suited to navigate the multi-modal, stochastic reward landscape introduced by our market impact model, especially under high decay and impact magnitudes.

6. Conclusions

This paper contributes to the literature on deep hedging by presenting a reinforcement learning-based framework capable of adapting to both temporary and permanent market impact, slippage, and transaction costs. Furthermore, we present a comparison of various DRL models across different market impact settings, highlighting the drawbacks and benefits of DDPG, TD3 and SAC in these settings. Our results demonstrate that DRL agents, particularly those utilizing entropy-regularized exploration like SAC, can outperform traditional hedging strategies in both expected return and risk-adjusted terms.

We see that in the most complex experiments, with both permanent and temporary market impact, TD3 and SAC reduce the expected losses incurred by delta hedging by over two-thirds (Table 4). SAC in particular minimized value at risk across most experiment parameters, achieving a 50% reduction in CVaR, compared with a more limited reduction of roughly 20% for TD3. The algorithms’ adaptability across a wide range of environments highlights their potential utility in real-world hedging applications, especially where liquidity constraints and execution costs dominate. TD3, for its part, demonstrates reliable performance with faster convergence and efficient trading, making it suitable for lower-friction environments or where training time is a key constraint.

By incorporating realistic cost models and analyzing behavior across frictions, our framework provides a comprehensive testbed for evaluating hedging strategies in markets where trading decisions influence asset prices. The nuanced behaviors observed, such as strategic underhedging, early liquidation, and impact-aware trade timing, illustrate the depth of policy complexity that DRL agents can internalize.

We conclude that actor-critic DRL models, and SAC in particular, offer promising tools for institutional hedging strategies. Their ability to balance risk reduction and cost minimization makes them suitable for illiquid and friction-heavy markets. These findings reinforce the argument for integrating DRL into the risk management toolkits of institutional investors, particularly in portfolios with significant option exposure. Future extensions could include applications to real-world market data, multi-asset portfolios, and integration with execution algorithms for end-to-end portfolio optimization under market impact. Our research contributes to the evolving field of financial risk management by introducing a robust and adaptive DRL-based risk management framework for hedging derivatives under liquidity constraints. The results highlight practical applications of reinforcement learning to improve existing hedging strategies, especially for illiquid instruments sensitive to trade pressure.