Forecasting Financial Volatility Under Structural Breaks: A Comparative Study of GARCH Models and Deep Learning Techniques

Abstract

1. Introduction

2. Literature Review

2.1. Structural Breaks and Regime Dynamics

2.2. Deep Learning and GARCH Hybridization

2.3. Textual Information, Big Data, and News

2.4. Reviews, Model Selection, and Robustness

2.5. Study Objective and Hypotheses

- Ignoring structural breaks reduces the predictive accuracy of GARCH models, whereas explicitly modeling them improves performance.

- Deep learning models, thanks to their flexibility, maintain or enhance predictive accuracy in the presence of structural instability.

3. Materials and Methods

3.1. Data

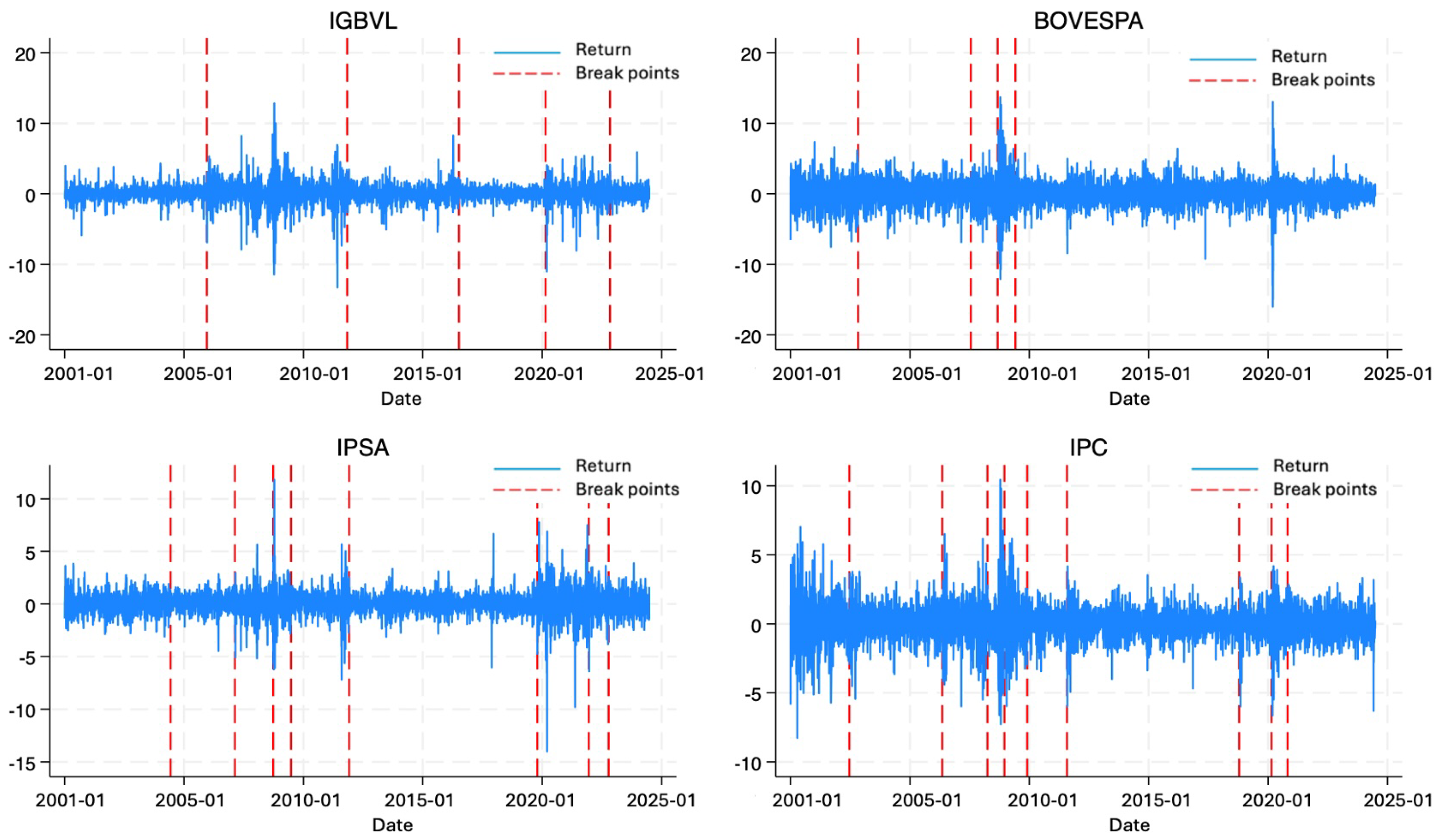

3.2. Modified ICSS Algorithm
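The core of a modified ICSS procedure is a single-break test for a change in unconditional variance. The sketch below computes the κ2 statistic of Sansó, Aragó and Carrion (2004). That statistic modifies the Inclán and Tiao (1994) centred cumulative sum of squares by scaling with a Bartlett-kernel long-run variance, which makes it robust to fat tails and conditional heteroskedasticity. It is a minimal NumPy sketch with several assumptions: returns are demeaned first, the bandwidth follows a Newey-West rule of thumb, and the function name `kappa2_statistic` is hypothetical. The iterative ICSS search over subsegments is not implemented here.

```python
import numpy as np


def kappa2_statistic(returns, bandwidth=None):
    """Sanso-Arago-Carrion kappa_2 test for one change in unconditional variance.

    Returns (statistic, k), where k is the number of observations in the first regime.
    """
    a = np.asarray(returns, dtype=float)
    a = a - a.mean()                      # assumption: work with demeaned returns
    T = a.size
    sq = a ** 2
    C = np.cumsum(sq)                     # C_k: cumulative sum of squares up to k
    k = np.arange(1, T + 1)
    G = C - (k / T) * C[-1]               # centred cumulative sum of squares G_k
    if bandwidth is None:                 # hypothetical Newey-West rule of thumb
        bandwidth = int(4 * (T / 100) ** (2 / 9))
    e = sq - sq.mean()                    # a_t^2 minus its sample mean
    omega4 = e @ e / T                    # Bartlett-kernel long-run variance of e
    for lag in range(1, bandwidth + 1):
        weight = 1.0 - lag / (bandwidth + 1)
        omega4 += 2.0 * weight * (e[lag:] @ e[:-lag]) / T
    stat = np.abs(G) / np.sqrt(T * omega4)
    k_star = int(np.argmax(stat))
    return float(stat[k_star]), k_star + 1


# Illustration on simulated returns whose volatility triples after observation 500.
rng = np.random.default_rng(0)
r = np.concatenate([rng.normal(0, 0.01, 500), rng.normal(0, 0.03, 500)])
stat, k = kappa2_statistic(r)
print(f"kappa_2 = {stat:.2f}, candidate break after observation {k}")
```

A statistic above about 1.358, the asymptotic 5% critical value, signals a variance break at position `k`. The ICSS logic then repeats the search on the segments on either side of the candidate break until no further breaks are found.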

3.3. GARCH Model
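For reference, the GARCH(1, 1) model of Bollerslev (1986) sets the conditional variance to $h_t = \omega + \alpha r_{t-1}^2 + \beta h_{t-1}$. The following NumPy sketch shows the variance recursion, the Gaussian negative log-likelihood usually minimised in (quasi-)maximum likelihood estimation, and the sum of the 1- to S-step-ahead variance forecasts. The function names and the choice to start the recursion at the sample variance are illustrative assumptions, not necessarily the estimation settings used in this study.

```python
import numpy as np


def garch11_variance(r, omega, alpha, beta):
    """GARCH(1, 1) recursion h_t = omega + alpha * r_{t-1}^2 + beta * h_{t-1}.

    Returns h[0..T]; h[T] is the one-step-ahead forecast made after the last return.
    """
    r = np.asarray(r, dtype=float)
    h = np.empty(r.size + 1)
    h[0] = r.var()                        # assumption: start at the sample variance
    for t in range(r.size):
        h[t + 1] = omega + alpha * r[t] ** 2 + beta * h[t]
    return h


def gaussian_neg_loglik(params, r):
    """Negative Gaussian log-likelihood, the objective minimised in (Q)ML estimation."""
    omega, alpha, beta = params
    r = np.asarray(r, dtype=float)
    h = garch11_variance(r, omega, alpha, beta)[:-1]
    return 0.5 * np.sum(np.log(2 * np.pi) + np.log(h) + r ** 2 / h)


def aggregated_variance_forecast(h_next, omega, alpha, beta, S):
    """Sum of the s-step-ahead variance forecasts for s = 1..S (requires alpha + beta < 1)."""
    persistence = alpha + beta
    sigma2_bar = omega / (1.0 - persistence)       # unconditional variance
    s = np.arange(S)                               # exponent s - 1 for s = 1..S
    return float(np.sum(sigma2_bar + persistence ** s * (h_next - sigma2_bar)))
```

In practice, the three parameters would be found by numerically minimising `gaussian_neg_loglik` (for example with `scipy.optimize.minimize`) subject to $\omega > 0$, $\alpha, \beta \ge 0$ and $\alpha + \beta < 1$. The forecast function relies on $E_t[h_{t+s}] = \bar{\sigma}^2 + (\alpha + \beta)^{s-1}(h_{t+1} - \bar{\sigma}^2)$, so shocks decay at a rate set by the persistence $\alpha + \beta$.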

3.4. Deep Learning

3.4.1. Long Short-Term Memory (LSTM)

- Forget Gate ($f_t$): Regulates which information from the previous cell state $C_{t-1}$ should be removed. It applies a sigmoid activation $\sigma$ to the concatenation of the prior hidden state $h_{t-1}$ and the current input $x_t$, producing a weight between 0 and 1 that controls the degree of forgetting, as follows:

  $$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right)$$

- Input Gate ($i_t$): Determines the portion of new information to be incorporated into the memory cell. Through a sigmoid activation, it selects the relevant signals to be updated, as follows:

  $$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right)$$

- Candidate Vector ($\tilde{C}_t$): Generates a vector of candidate values that may be added to the cell state. It is formed by applying a hyperbolic tangent to the weighted concatenation of the previous hidden state and the current input, as follows:

  $$\tilde{C}_t = \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right)$$

- Cell State Update ($C_t$): Combines information retained from the previous state with the newly generated candidate values to update the cell memory. The previous state $C_{t-1}$ is scaled by the forget gate $f_t$, the candidate vector $\tilde{C}_t$ is scaled by the input gate $i_t$, and the two terms are summed. Both scalings use the Hadamard product $\odot$, i.e., element-wise multiplication (Magnus & Neudecker, 2019), as follows:

  $$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$$

- Output Gate ($o_t$): Selects which parts of the updated memory influence the current output. A sigmoid activation provides the weighting, as follows:

  $$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right)$$

- Hidden State ($h_t$): Produces the output of the LSTM at time $t$. It is obtained by applying a hyperbolic tangent to the updated cell state and filtering the result through the output gate, as follows:

  $$h_t = o_t \odot \tanh(C_t)$$
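The gate equations above can be traced in a single forward step. The NumPy sketch below applies them literally: each gate receives the concatenation $[h_{t-1}, x_t]$, and `*` implements the Hadamard product. Weight shapes, the dictionary keys `"f"`, `"i"`, `"c"`, `"o"` and the random initialisation are illustrative assumptions. In practice a deep learning library learns these weights by backpropagation through time.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step. W[g]: (n_hidden, n_hidden + n_input); b[g]: (n_hidden,)."""
    z = np.concatenate([h_prev, x_t])        # [h_{t-1}, x_t]
    f_t = sigmoid(W["f"] @ z + b["f"])       # forget gate
    i_t = sigmoid(W["i"] @ z + b["i"])       # input gate
    c_tilde = np.tanh(W["c"] @ z + b["c"])   # candidate vector
    c_t = f_t * c_prev + i_t * c_tilde       # cell state update (Hadamard products, then sum)
    o_t = sigmoid(W["o"] @ z + b["o"])       # output gate
    h_t = o_t * np.tanh(c_t)                 # hidden state
    return h_t, c_t


# Run a hypothetical 4-unit cell over a window of 20 scalar inputs.
rng = np.random.default_rng(0)
n_in, n_hid = 1, 4
W = {g: rng.normal(scale=0.1, size=(n_hid, n_hid + n_in)) for g in "fico"}
b = {g: np.zeros(n_hid) for g in "fico"}
h, c = np.zeros(n_hid), np.zeros(n_hid)
for x in rng.normal(size=(20, n_in)):
    h, c = lstm_step(x, h, c, W, b)
```

Because $h_t$ is an output gate value in $(0, 1)$ multiplied by a $\tanh$ value, every hidden-state component stays strictly between -1 and 1. A dense output layer therefore typically maps $h_t$ to the volatility forecast.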

3.4.2. Convolutional Neural Networks (CNNs)

- Convolutional Layers: Extract local features by applying filters that slide across the input series, generating feature maps.

- Pooling Layers: Downsample these feature maps, commonly through max pooling, to reduce dimensionality while preserving the most salient features.

- Flattening: Converts the multidimensional feature maps into a one-dimensional vector suitable for the fully connected layers.

- Fully Connected Layers: Map the extracted features to the final output, allowing the network to combine information across different filters and learn complex relationships.
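The four layer types listed above can be chained in a few lines. The NumPy sketch below shows one forward pass: a 1-D convolution with ReLU, max pooling of width 2, flattening, and a dense output layer. The window length, the number and width of filters, and the ReLU activation are assumptions for illustration, not the architecture used in the study. As in most deep learning libraries, "convolution" here is a cross-correlation.

```python
import numpy as np


def conv1d(x, kernels, bias):
    """Valid 1-D convolution (cross-correlation) followed by ReLU.

    x: (T,), kernels: (n_filters, k), bias: (n_filters,) -> (n_filters, T - k + 1)
    """
    _, k = kernels.shape
    windows = np.lib.stride_tricks.sliding_window_view(x, k)   # (T - k + 1, k)
    return np.maximum(windows @ kernels.T + bias, 0.0).T


def max_pool1d(fmap, size):
    """Non-overlapping max pooling; an incomplete trailing window is dropped."""
    n_filters, length = fmap.shape
    n_out = length // size
    return fmap[:, :n_out * size].reshape(n_filters, n_out, size).max(axis=2)


def cnn_forward(x, kernels, bias, W_dense, b_dense):
    fmap = conv1d(x, kernels, bias)      # convolutional layer
    pooled = max_pool1d(fmap, 2)         # pooling layer
    flat = pooled.ravel()                # flattening
    return W_dense @ flat + b_dense      # fully connected output layer


# Hypothetical sizes: a 22-day input window, 8 filters of width 3.
rng = np.random.default_rng(0)
window = rng.normal(scale=0.01, size=22)
kernels = rng.normal(size=(8, 3))
pooled_len = (22 - 3 + 1) // 2                    # 20 conv outputs -> 10 after pooling
W_dense = rng.normal(size=(1, 8 * pooled_len))
y_hat = cnn_forward(window, kernels, np.zeros(8), W_dense, np.zeros(1))
```

Each filter sees only three consecutive observations, which is why CNNs are described as capturing local patterns. The dense layer then combines the pooled responses of all filters.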

3.5. Forecast Performance Metrics

3.5.1. Aggregated Mean Squared Forecast Error (MSFE)
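As an illustration of an aggregated MSFE, the sketch below assumes that the target for a horizon of S days is the sum of squared returns over the S days following each forecast origin. The forecast being compared is the matching S-day variance forecast. This definition and the helper names are assumptions for illustration. The ratio divides a model's MSFE by the benchmark's, so values below 1 favour the model.

```python
import numpy as np


def realized_aggregate(returns, S):
    """Sum of squared returns over each window of S consecutive days.

    Element j is the variance proxy for the forecast whose horizon covers returns[j:j+S].
    """
    r2 = np.asarray(returns, dtype=float) ** 2
    return np.convolve(r2, np.ones(S), mode="valid")


def msfe(proxy, forecast):
    """Mean squared forecast error between a volatility proxy and forecasts."""
    proxy = np.asarray(proxy, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    return float(np.mean((proxy - forecast) ** 2))


def msfe_ratio(proxy, model_forecast, benchmark_forecast):
    """MSFE of a model relative to the benchmark; values below 1 favour the model."""
    return msfe(proxy, model_forecast) / msfe(proxy, benchmark_forecast)
```

Under this convention, the ratio of 0.350 reported for the LSTM on the IGBVL at S = 20 is simply 172.7 / 493.1, the LSTM's MSFE divided by that of the expanding-window GARCH(1, 1) benchmark.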

3.5.2. Quasi-Likelihood Loss (QLIKE)
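A common form of the QLIKE loss, following Patton (2009), is $L(\hat{\sigma}^2, h) = \hat{\sigma}^2/h - \log(\hat{\sigma}^2/h) - 1$, where $\hat{\sigma}^2$ is the volatility proxy and $h$ the forecast. The minimal NumPy sketch below assumes this normalised form. It equals zero only when the forecast matches the proxy and requires both to be strictly positive, so a zero squared return would have to be handled separately.

```python
import numpy as np


def qlike(proxy, forecast):
    """Average QLIKE loss in the normalised form p/h - log(p/h) - 1.

    Zero when forecast equals proxy; requires strictly positive proxy and forecast.
    """
    ratio = np.asarray(proxy, dtype=float) / np.asarray(forecast, dtype=float)
    return float(np.mean(ratio - np.log(ratio) - 1.0))
```

Unlike MSFE, QLIKE is asymmetric. Forecasting half the realized variance costs about 0.307, while forecasting twice the realized variance costs about 0.193, so under-prediction of risk is penalised more heavily. Patton (2009) shows that this loss, like MSFE, ranks forecasts consistently when a noisy but unbiased proxy such as squared returns is used.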

3.5.3. White and Hansen Tests
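White's (2000) Reality Check asks whether the best of several competing models beats a benchmark once the search over models is accounted for. The sketch below is a simplified NumPy version of that test. It uses the Politis-Romano stationary bootstrap with an assumed mean block length of 10, and all function names and defaults are hypothetical. Hansen's (2005) SPA test modifies this statistic by studentizing the loss differentials and recentering poorly performing models; that refinement is not implemented here.

```python
import numpy as np


def stationary_bootstrap_indices(n, mean_block, rng):
    """Politis-Romano stationary bootstrap: blocks of geometric length, wrapping circularly."""
    new_block = rng.random(n) < 1.0 / mean_block
    new_block[0] = True
    starts = rng.integers(0, n, size=n)
    idx = np.empty(n, dtype=int)
    for t in range(n):
        idx[t] = starts[t] if new_block[t] else (idx[t - 1] + 1) % n
    return idx


def reality_check_pvalue(bench_loss, model_losses, n_boot=999, mean_block=10, seed=0):
    """White's (2000) Reality Check p-value for H0: no model beats the benchmark.

    bench_loss: (n,) benchmark losses; model_losses: (n, k) losses of k competitors.
    """
    rng = np.random.default_rng(seed)
    bench = np.asarray(bench_loss, dtype=float)
    d = bench[:, None] - np.asarray(model_losses, dtype=float)  # > 0: model beats benchmark
    n = d.shape[0]
    d_bar = d.mean(axis=0)
    v = np.sqrt(n) * d_bar.max()                                 # observed statistic
    v_star = np.empty(n_boot)
    for b in range(n_boot):
        idx = stationary_bootstrap_indices(n, mean_block, rng)
        v_star[b] = np.sqrt(n) * (d[idx].mean(axis=0) - d_bar).max()  # recentred draw
    return float(np.mean(v_star >= v))
```

The loss series would be per-period MSFE or QLIKE contributions. A small p-value rejects the hypothesis that no competitor outperforms the benchmark. For example, the competitors could be the rolling, break-adjusted, or deep learning models, tested against the expanding-window GARCH(1, 1).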

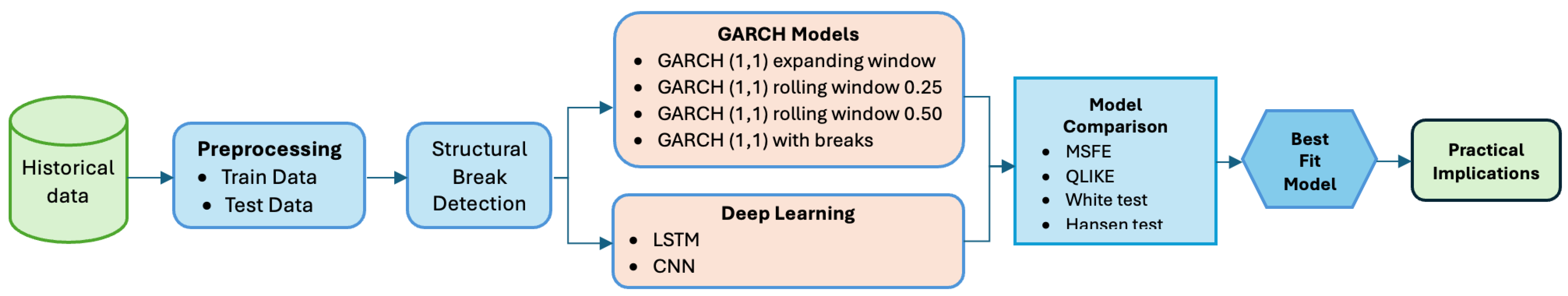

3.6. Methodology

3.6.1. In-Sample Evaluation

- Stage 1: Identification of structural breaks

- Stage 2: Estimation of models in subsamples
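Stage 2 re-estimates the models within each subsample delimited by the breaks found in Stage 1. The minimal sketch below shows only the splitting step. It assumes each break is given as the integer position where a new regime starts, and the helper names are hypothetical. Each resulting segment would then be passed to a GARCH(1, 1) estimator.

```python
import numpy as np


def split_at_breaks(returns, break_points):
    """Split a return series into regimes; each break point is the index where a new regime starts."""
    return np.split(np.asarray(returns, dtype=float), sorted(break_points))


def regime_variances(returns, break_points):
    """Sample variance of each regime, a quick check that the breaks shift volatility."""
    return [float(seg.var()) for seg in split_at_breaks(returns, break_points)]
```

Comparing subsample estimates with full-sample ones addresses the argument of Lamoureux and Lastrapes (1990) that unmodeled shifts in unconditional variance inflate estimated GARCH persistence.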

3.6.2. Out-of-Sample Evaluation

- Stage 3: Forecasting design

- GARCH(1, 1) with Expanding Window: This model served as our benchmark. The estimation sample was expanded recursively: starting with the observations from $t = 1$ to $t = R$, the model was estimated and a one-step forecast was generated. At each subsequent iteration, one new observation was added and the model was re-estimated to issue the next forecast. This approach implicitly assumes that the parameters are stable over time.

- GARCH(1, 1) with Rolling Window 0.25 and 0.5: These models used a fixed-size estimation window equivalent to 25% or 50% of the training sample. The window slid forward one step at a time, and the model was re-estimated for each new forecast, allowing parameters to be dynamically updated with the most recent information.

- GARCH(1, 1) with Structural Breaks: Under this approach, the modified ICSS algorithm was applied to the observations available up to time R. If at least one significant variance break was detected, the GARCH(1, 1) model was estimated using only the observations after the most recent break point; if no break was detected, it was estimated on the entire sample up to time R. The procedure was repeated at every forecast origin throughout the out-of-sample period, using only data available at that origin, so no look-ahead bias was introduced. Although this approach is designed to adapt to regime changes, a break detected very close to the forecast date leaves few observations for estimation, which can make the parameter estimates unstable.

- LSTM and CNN Neural Networks: Both deep learning models were trained on the training sample. Each out-of-sample forecast was generated by feeding the trained network an updated sliding window of the most recent data. These networks were not retrained during the out-of-sample period; instead, they relied on the long-term dependencies (LSTM) and local patterns (CNN) learned during training to respond to new market information.

- Stage 4: Predictive performance evaluation
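The four GARCH estimation schemes differ only in which observations are used at each forecast origin. The sketch below expresses that choice as a small function returning the estimation window. The scheme labels, the function name and the convention that a break index marks the first observation of a new regime are illustrative assumptions. Under the design described above, the LSTM and CNN models skip this step because they are not re-estimated.

```python
def estimation_window(t, scheme, n_train, breaks=()):
    """(start, end) of the estimation sample for a forecast made at origin t.

    Observations 0..t-1 are available; n_train is the size of the initial training sample.
    For scheme "breaks", `breaks` must come from break detection run on the first t observations only.
    """
    if scheme == "expanding":
        return 0, t
    if scheme in ("rolling_0.25", "rolling_0.50"):
        width = int(float(scheme.split("_")[1]) * n_train)   # 25% or 50% of the training sample
        return max(0, t - width), t
    if scheme == "breaks":
        past = [b for b in breaks if b < t]                  # guard against look-ahead
        return (max(past) if past else 0), t
    raise ValueError(f"unknown scheme: {scheme}")
```

A forecasting loop would call this at each origin `t` from `n_train` to the end of the sample, fit GARCH(1, 1) on `r[start:end]`, and store the forecast. With the "breaks" scheme, a break detected just before `t` makes `end - start` small, which is the estimation risk noted above.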

4. Results

4.1. Descriptive Statistics

4.2. In-Sample Results

4.3. Out-of-Sample Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ahelegbey, D. F., & Giudici, P. (2022). NetVIX—A network volatility index of financial markets. Physica A: Statistical Mechanics and Its Applications, 594, 127017.

- Alfeus, D. (2025). Improving realised volatility forecasts for emerging markets: Evidence from South Africa. Review of Quantitative Finance and Accounting, 65(3), 699–725.

- Amin, M. D., Badruddoza, S., & Sarasty, O. (2024). Comparing the great recession and COVID-19 using Long Short-Term Memory: A close look into agricultural commodity prices. Applied Economic Perspectives and Policy, 46(4), 1406–1428.

- Andrews, D. W. K. (1993). Tests for parameter instability and structural change with unknown change point. Econometrica, 61(4), 821–856.

- Araya, H. T., Aduda, J., & Berhane, T. (2024). A hybrid GARCH and deep learning method for volatility prediction. Journal of Applied Mathematics, 2024, 1–15.

- Babikir, A., Gupta, R., & Mwamba, J. W. (2012). Structural breaks and GARCH models of stock return volatility: The case of South Africa. Economic Modelling, 29(6), 2435–2443.

- Bai, J., & Perron, P. (1998). Estimating and testing linear models with multiple structural changes. Econometrica, 66(1), 47–78.

- Bao, W., Yue, J., & Rao, Y. (2017). A deep learning framework for financial time series using stacked autoencoders and Long-Short Term Memory. PLoS ONE, 12(7), e0180944.

- Bhambu, A., Bera, K., Natarajan, S., & Suganthan, P. N. (2025). High frequency volatility forecasting and risk assessment using neural networks-based heteroscedasticity model. Engineering Applications of Artificial Intelligence, 149, 110397.

- Bildirici, M., Bayazit, N. G., & Ucan, Y. (2020). Analyzing crude oil prices under the impact of COVID-19 by using LSTARGARCHLSTM. Energies, 13(11), 2980.

- Blasques, F., Gorgi, P., Koopman, S. J., & Wintenberger, O. (2018). Feasible invertibility conditions and maximum likelihood estimation for observation-driven models. Electronic Journal of Statistics, 12(1), 1019–1052.

- Bodilsen, S. T., & Lunde, A. (2025). Exploiting news analytics for volatility forecasting. Journal of Applied Econometrics, 40(1), 18–36.

- Bollerslev, T. (1986). Generalized autoregressive conditional heteroskedasticity. Journal of Econometrics, 31(3), 307–327.

- Boubaker, S., Liu, Z., & Zhai, L. (2021). Big data, news diversity and financial market crash. Technological Forecasting and Social Change, 168, 120755.

- Castle, J., Doornik, J., & Hendry, D. (2022). Detecting structural breaks and outliers for volatility data via impulse indicator saturation. In Contributions in economics (pp. 679–687). Springer.

- Chung, V., Espinoza, J., & Mansilla, A. (2024). Analysis of financial contagion and prediction of dynamic correlations during the COVID-19 pandemic: A combined DCC-GARCH and deep learning approach. Journal of Risk and Financial Management, 17(12), 567.

- Daboh, F., Kur, K. K., & Knox-Goba, T. L. (2025). Exchange rate volatility and macroeconomic stability in Sierra Leone: Using EGARCH and Markov switching regression. SN Business and Economics, 5(9), 111.

- Dauphin, Y. N., Fan, A., Auli, M., & Grangier, D. (2017, August 6–11). Language modeling with gated convolutional networks. International Conference on Machine Learning (pp. 933–941), Sydney, Australia.

- De Gaetano, D. (2018). Forecast combinations for structural breaks in volatility: Evidence from BRICS countries. Journal of Risk and Financial Management, 11(4), 64.

- de Oliveira, A. M. B., Mandal, A., & Power, G. J. (2024). Impact of COVID-19 on stock indices volatility: Long-memory persistence, structural breaks, or both? Annals of Data Science, 11(2), 619–646.

- Diebold, F. X., & Yilmaz, K. (2012). Better to give than to receive: Predictive directional measurement of volatility spillovers. International Journal of Forecasting, 28(1), 57–66.

- Di-Giorgi, G., Salas, R., Avaria, R., Ubal, C., Rosas, H., & Torres, R. (2025). Volatility forecasting using deep recurrent neural networks as GARCH models. Computational Statistics, 40(6), 3229–3255.

- Engle, R. F. (1982). Autoregressive conditional heteroscedasticity with estimates of the variance of United Kingdom inflation. Econometrica, 50(4), 987–1007.

- Fang, Y., & Yang, Y. (2025). Dynamic linkage between stock and forex markets: Mechanisms and evidence from China. Emerging Markets Finance and Trade, 20, 2039–2060.

- Fischer, T., & Krauss, C. (2018). Deep learning with long short-term memory networks for financial market predictions. European Journal of Operational Research, 270(2), 654–669.

- García-Medina, A., & Aguayo-Moreno, E. (2024). LSTM–GARCH hybrid model for the prediction of volatility in cryptocurrency portfolios. Computational Economics, 63(4), 1511–1542.

- Giudici, P., & Pagnottoni, P. (2019). High frequency price change spillovers in bitcoin markets. Risks, 7(4), 111.

- Gong, X., & Lin, B. (2018). Structural breaks and volatility forecasting in the copper futures market. Journal of Futures Markets, 38(3), 290–339.

- Hansen, P. R. (2005). A test for superior predictive ability. Journal of Business & Economic Statistics, 23(4), 365–380.

- Hassanniakalager, A., Baker, P. L., & Platanakis, E. (2024). A false discovery rate approach to optimal volatility forecasting model selection. International Journal of Forecasting, 40(3), 881–902.

- Hillebrand, E. (2005). Neglecting parameter changes in GARCH models. Journal of Econometrics, 129(1), 121–138.

- Hochreiter, S., & Schmidhuber, J. (1997). Long short-term memory. Neural Computation, 9(8), 1735–1780.

- Hortúa, H. J., & Mora-Valencia, A. (2025). Forecasting VIX using Bayesian deep learning. International Journal of Data Science and Analytics, 20, 2039–2060.

- Inclán, C., & Tiao, G. C. (1994). Use of cumulative sums of squares for retrospective detection of changes of variance. Journal of the American Statistical Association, 89(427), 913–923.

- Khan, F. U., Khan, F., & Shaikh, P. A. (2023). Forecasting returns volatility of cryptocurrency by applying various deep learning algorithms. Future Business Journal, 9(1), 25.

- Kim, H. Y., & Won, C. H. (2018). Forecasting the volatility of stock price index: A hybrid model integrating LSTM with multiple GARCH-type models. Expert Systems with Applications, 103, 25–37.

- Kristjanpoller, W., & Minutolo, M. (2014). Volatility forecast using hybrid neural network models: Evidence from Latin American stock markets. Expert Systems with Applications, 41(15), 6717–6726.

- Kumar, D. (2018). Volatility prediction: A study with structural breaks. Theoretical Economics Letters, 8(6), 1218–1231.

- Lamoureux, C. G., & Lastrapes, W. D. (1990). Persistence in variance, structural change, and the GARCH model. Journal of Business & Economic Statistics, 8(2), 225–234.

- LeCun, Y., Bottou, L., Bengio, Y., & Haffner, P. (1998). Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11), 2278–2324.

- Luo, J., Chen, Z., & Cheng, M. (2025). Forecasting realized betas using predictors indicating structural breaks and asymmetric risk effects. Journal of Empirical Finance, 80, 101575.

- Magnus, J. R., & Neudecker, H. (2019). Matrix differential calculus with applications in statistics and econometrics. John Wiley & Sons.

- Mikosch, T., & Stărică, C. (2004). Nonstationarities in financial time series, the long-range dependence, and the IGARCH effects. Review of Economics and Statistics, 86(1), 378–390.

- Molina Muñoz, J. E., & Castañeda, R. (2023). The use of machine learning in volatility: A review using K-means. Universidad & Empresa, 25(44), 1–28.

- Moreno-Pino, F., & Zohren, S. (2024). DeepVol: Volatility forecasting from high-frequency data with dilated causal convolutions. Quantitative Finance, 24(8), 1105–1127.

- Nelson, D. M., Pereira, A., & de Oliveira, R. A. (2017, May 14–19). Stock market’s price movement prediction with LSTM neural networks. Proceedings of the International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA.

- Ni, J., Wohar, M. E., & Wang, B. (2016). Structural breaks in volatility: The case of Chinese stock returns. The Chinese Economy, 49(2), 81–93.

- Ni, J., & Xu, Y. (2023). Forecasting the dynamic correlation of stock indices based on deep learning method. Computational Economics, 61(1), 35–55.

- Patra, S., & Malik, K. (2025). Return and volatility connectedness among US and Latin American markets: A quantile VAR approach with implications for hedging and portfolio diversification. Global Finance Journal, 65, 101094.

- Patton, A. J. (2009). Volatility forecast comparison using imperfect volatility proxies. Journal of Econometrics, 146(1), 147–156.

- Pesämaa, O., Zwikael, O., Hair, J. F., & Huemann, M. (2021). Publishing quantitative papers with rigor and transparency. International Journal of Project Management, 39(3), 217–222.

- Petrozziello, A., Troiano, L., Serra, A., Jordanov, I., Storti, G., Tagliaferri, R., & La Rocca, M. (2022). Deep learning for volatility forecasting in asset management. Soft Computing, 26, 8553–8574.

- Qiu, Z., Kownatzki, C., Scalzo, F., & Cha, E. S. (2025). Historical perspectives in volatility forecasting methods with machine learning. Risks, 13(5), 98.

- Quandt, R. E. (1960). Tests of the hypothesis that a linear regression system obeys two separate regimes. Journal of the American Statistical Association, 55(290), 324–330.

- Rapach, D. E., & Strauss, J. K. (2008). Structural breaks and GARCH models of exchange rate volatility. Journal of Applied Econometrics, 23(1), 65–90.

- Sanso, A., Aragó, V., & Carrion, J. L. (2004). Testing for change in the unconditional variance of financial time series. Revista de Economía Financiera, 4, 32–53.

- Stărică, C., & Granger, C. (2005). Nonstationarities in stock returns. The Review of Economics and Statistics, 87(3), 503–522.

- Sun, Y., Du, X., & Zhang, Y. (2025). Inference for a multiplicative volatility model allowing for structural breaks. Communications in Statistics: Simulation and Computation.

- Tsuji, C. (2025). Dual asymmetries in Bitcoin. Finance Research Letters, 82, 107450.

- White, H. (2000). A reality check for data snooping. Econometrica, 68(5), 1097–1126.

- Zhang, Z., Yuan, J., & Gupta, A. (2024). Let the laser beam connect the dots: Forecasting and narrating stock market volatility. INFORMS Journal on Computing, 36(6), 1400–1416.

- Zitti, M. (2024). Forecasting salmon market volatility using long short-term memory (LSTM). Aquaculture Economics and Management, 28(1), 143–175.

| | IGBVL | BOVESPA | IPSA | IPC |
|---|---|---|---|---|
| Return | | | | |
| Minimum | −0.11001 | −0.1599 | −0.1401 | −0.0664 |
| Maximum | 0.0826 | 0.1302 | 0.0749 | 0.0634 |
| Mean | 0.0002 | 0.0003 | 0.0002 | 0.0001 |
| Standard Deviation | 0.0113 | 0.0159 | 0.0118 | 0.0102 |
| Skewness | −0.4906 | −0.7165 | −1.2829 | −0.3592 |
| Kurtosis | 12.6790 | 14.3077 | 21.9460 | 7.0105 |
| Shapiro–Wilk | 12.3240 (0.000) | 12.1120 (0.000) | 13.1060 (0.000) | 10.1400 (0.000) |
| Squared return | | | | |
| Ljung–Box (r = 20) | 91.51 (0.000) | 45.82 (0.001) | 58.02 (0.000) | 25.74 (0.174) |
| ARCH LM (q = 2) | 111.04 (0.000) | 726.03 (0.000) | 453.71 (0.000) | 82.15 (0.000) |
| ARCH LM (q = 10) | 235.89 (0.000) | 941.86 (0.000) | 466.94 (0.000) | 334.49 (0.000) |

| IGBVL | BOVESPA | IPSA | IPC |
|---|---|---|---|
| 14 December 2005 | 30 October 2002 | 9 June 2004 | 19 June 2002 |
| 31 October 2011 | 23 July 2007 | 19 February 2007 | 10 May 2006 |
| 8 July 2016 | 3 September 2008 | 26 September 2008 | 1 April 2008 |
| 21 February 2020 | 4 June 2009 | 26 June 2009 | 17 December 2008 |
| 4 November 2022 | 30 November 2011 | 1 December 2009 | |
| 18 October 2019 | 1 August 2011 | | |
| 13 December 2021 | 17 October 2018 | | |
| 14 October 2022 | 21 February 2020 | | |
| 27 October 2020 | | | |

| | IGBVL | | BOVESPA | | IPSA | | IPC | |
|---|---|---|---|---|---|---|---|---|
| Full sample | 0.980 | 2.179 | 0.982 | 2.763 | 0.984 | 1.509 | 0.987 | 1.472 |
| Subsample 1 | 0.793 | 0.776 | 0.912 | 4.385 | 0.946 | 0.896 | 0.997 | 0.000 |
| Subsample 2 | 0.975 | 7.760 | 0.955 | 2.466 | 0.951 | 0.599 | 0.895 | 1.123 |
| Subsample 3 | 0.976 | 1.152 | 0.730 | 3.969 | 0.943 | 2.170 | 0.946 | 2.450 |
| Subsample 4 | 0.812 | 0.467 | 0.982 | 5.275 | 0.937 | 1.550 | 1.000 | - |
| Subsample 5 | 0.932 | 2.916 | 0.964 | 2.028 | 0.973 | 1.233 | 0.990 | 2.199 |
| Subsample 6 | 0.475 | 0.789 | 0.941 | 0.589 | 0.948 | 0.695 | | |
| Subsample 7 | 0.903 | 4.009 | 0.978 | 0.686 | | | | |
| Subsample 8 | 0.944 | 1.579 | 0.949 | 0.772 | | | | |
| Subsample 9 | 0.709 | 0.917 | 0.967 | 1.686 | | | | |
| Subsample 10 | 0.973 | 0.918 | | | | | | |

| Model | S = 1 | | | S = 20 | | | S = 60 | | | S = 120 | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | MSFE | Ratio | p-Value | MSFE | Ratio | p-Value | MSFE | Ratio | p-Value | MSFE | Ratio | p-Value |
| IGBVL | | | | | | | | | | | | |
| GARCH(1, 1) expanding window | 9.24 | 1.000 | | 493.1 | 1.000 | | 3577.6 | 1.000 | | 16,730.6 | 1.000 | |
| GARCH Model | | | 0.031 [0.045] | | | 0.988 [0.667] | | | 0.882 [0.600] | | | 0.765 [0.590] |
| GARCH(1, 1) 0.25 rolling window | 9.25 | 1.001 | | 533.2 | 1.081 | | 4457.8 | 1.246 | | 19,139.0 | 1.144 | |
| GARCH(1, 1) 0.50 rolling window | 9.04 | 0.979 | | 356.7 | 0.723 | | 2163.7 | 0.605 | | 7225.6 | 0.432 | |
| GARCH(1, 1) with breaks | 3.96 | 0.428 | | 354.8 | 0.720 | | 3898.7 | 1.090 | | 19,014.6 | 1.137 | |
| Deep Learning | | | 0.536 [0.428] | | | 0.000 [0.001] | | | 0.000 [0.000] | | | 0.000 [0.000] |
| LSTM | 9.17 | 0.993 | | 172.7 | 0.350 | | 967.2 | 0.270 | | 3106.2 | 0.186 | |
| CNN | 9.28 | 1.005 | | 210.7 | 0.427 | | 1167.7 | 0.326 | | 3838.4 | 0.229 | |
| BOVESPA | | | | | | | | | | | | |
| GARCH(1, 1) expanding window | 5.02 | 1.000 | | 260.7 | 1.000 | | 3964.8 | 1.000 | | 23,883.6 | 1.000 | |
| GARCH Model | | | 0.017 [0.010] | | | 0.102 [0.108] | | | 0.217 [0.119] | | | 0.118 [0.109] |
| GARCH(1, 1) 0.25 rolling window | 5.01 | 0.999 | | 209.0 | 0.802 | | 2454.4 | 0.619 | | 11,382.1 | 0.477 | |
| GARCH(1, 1) 0.50 rolling window | 4.98 | 0.993 | | 215.2 | 0.825 | | 2521.0 | 0.636 | | 11,787.9 | 0.494 | |
| GARCH(1, 1) with breaks | 6.48 | 1.291 | | 357.7 | 1.372 | | 4571.6 | 1.153 | | 25,312.4 | 1.060 | |
| Deep Learning | | | 0.390 [0.281] | | | 0.000 [0.000] | | | 0.000 [0.000] | | | 0.000 [0.000] |
| LSTM | 4.92 | 0.981 | | 104.5 | 0.401 | | 661.3 | 0.167 | | 1671.5 | 0.070 | |
| CNN | 4.95 | 0.985 | | 103.6 | 0.397 | | 629.1 | 0.159 | | 1482.8 | 0.062 | |
| IPSA | | | | | | | | | | | | |
| GARCH(1, 1) expanding window | 3.36 | 1.000 | | 172.8 | 1.000 | | 1075.0 | 1.000 | | 3507.0 | 1.000 | |
| GARCH Model | | | 0.988 [0.556] | | | 0.960 [0.753] | | | 0.893 [0.553] | | | 0.803 [0.664] |
| GARCH(1, 1) 0.25 rolling window | 3.40 | 1.014 | | 260.4 | 1.507 | | 3997.2 | 3.718 | | 37,376.1 | 10.658 | |
| GARCH(1, 1) 0.50 rolling window | 3.36 | 1.000 | | 197.7 | 1.144 | | 1856.6 | 1.727 | | 10,136.9 | 2.890 | |
| GARCH(1, 1) with breaks | 2.32 | 0.692 | | 297.7 | 1.722 | | 4666.8 | 4.341 | | 27,528.3 | 7.849 | |
| Deep Learning | | | 0.757 [0.482] | | | 0.004 [0.006] | | | 0.001 [0.002] | | | 0.000 [0.000] |
| LSTM | 3.35 | 0.998 | | 99.3 | 0.575 | | 582.6 | 0.542 | | 1958.7 | 0.559 | |
| CNN | 3.55 | 1.057 | | 145.0 | 0.839 | | 870.9 | 0.810 | | 3004.7 | 0.857 | |
| IPC | | | | | | | | | | | | |
| GARCH(1, 1) expanding window | 5.14 | 1.000 | | 144.7 | 1.000 | | 550.0 | 1.000 | | 2827.5 | 1.000 | |
| GARCH Model | | | 0.788 [0.665] | | | 1.000 [0.890] | | | 0.975 [0.639] | | | 0.929 [0.718] |
| GARCH(1, 1) 0.25 rolling window | 5.11 | 0.994 | | 144.2 | 0.996 | | 413.3 | 0.751 | | 1352.1 | 0.478 | |
| GARCH(1, 1) 0.50 rolling window | 5.11 | 0.994 | | 139.0 | 0.961 | | 405.5 | 0.737 | | 488.0 | 0.173 | |
| GARCH(1, 1) with breaks | 5.68 | 1.105 | | 156.9 | 1.085 | | 702.0 | 1.277 | | 1437.1 | 0.508 | |
| Deep Learning | | | 0.867 [0.601] | | | 0.001 [0.002] | | | 0.008 [0.009] | | | 0.002 [0.001] |
| LSTM | 5.17 | 1.006 | | 128.0 | 0.884 | | 354.9 | 0.645 | | 965.1 | 0.341 | |
| CNN | 5.17 | 1.006 | | 128.3 | 0.887 | | 344.1 | 0.626 | | 870.7 | 0.308 | |

| Model | S = 1 | | | S = 20 | | | S = 60 | | | S = 120 | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | QLIKE | Ratio | p-Value | QLIKE | Ratio | p-Value | QLIKE | Ratio | p-Value | QLIKE | Ratio | p-Value |
| IGBVL | | | | | | | | | | | | |
| GARCH(1, 1) expanding window | 1.581 | 1.000 | | 0.192 | 1.000 | | 0.196 | 1.000 | | 0.252 | 1.000 | |
| GARCH Model | | | 0.027 [0.021] | | | 0.976 [0.641] | | | 0.864 [0.580] | | | 0.743 [0.571] |
| GARCH(1, 1) 0.25 rolling window | 1.586 | 1.003 | | 0.190 | 0.985 | | 0.174 | 0.888 | | 0.223 | 0.884 | |
| GARCH(1, 1) 0.50 rolling window | 1.572 | 0.995 | | 0.171 | 0.888 | | 0.120 | 1.000 | | 0.119 | 0.471 | |
| GARCH(1, 1) with breaks | 1.537 | 0.973 | | 0.331 | 1.718 | | 0.306 | 1.562 | | 0.316 | 1.256 | |
| Deep Learning | | | 0.501 [0.398] | | | 0.002 [0.005] | | | 0.003 [0.005] | | | 0.000 [0.000] |
| LSTM | 1.853 | 1.172 | | 0.201 | 1.043 | | 0.191 | 0.976 | | 0.193 | 0.766 | |
| CNN | 2.446 | 1.548 | | 0.328 | 1.703 | | 0.289 | 1.477 | | 0.286 | 1.134 | |
| BOVESPA | | | | | | | | | | | | |
| GARCH(1, 1) expanding window | 1.294 | 1.000 | | 0.164 | 1.000 | | 0.220 | 1.000 | | 0.260 | 1.000 | |
| GARCH Model | | | 0.021 [0.014] | | | 0.117 [0.112] | | | 0.122 [0.115] | | | 0.124 [0.112] |
| GARCH(1, 1) 0.25 rolling window | 1.287 | 0.995 | | 0.143 | 0.872 | | 0.171 | 0.775 | | 0.174 | 0.669 | |
| GARCH(1, 1) 0.50 rolling window | 1.291 | 0.997 | | 0.149 | 0.913 | | 0.174 | 0.790 | | 0.177 | 0.681 | |
| GARCH(1, 1) with breaks | 1.449 | 1.119 | | 0.226 | 1.382 | | 0.267 | 1.211 | | 0.321 | 1.234 | |
| Deep Learning | | | 0.001 [0.003] | | | 0.000 [0.000] | | | 0.000 [0.000] | | | 0.000 [0.000] |
| LSTM | 1.281 | 0.990 | | 0.072 | 0.440 | | 0.054 | 0.243 | | 0.037 | 0.142 | |
| CNN | 1.289 | 0.996 | | 0.065 | 0.400 | | 0.047 | 0.212 | | 0.031 | 0.118 | |
| IPSA | | | | | | | | | | | | |
| GARCH(1, 1) expanding window | 1.386 | 1.000 | | 0.135 | 1.000 | | 0.078 | 1.000 | | 0.064 | 1.000 | |
| GARCH Model | | | 0.972 [0.534] | | | 0.944 [0.739] | | | 0.875 [0.561] | | | 0.799 [0.641] |
| GARCH(1, 1) 0.25 rolling window | 1.385 | 1.000 | | 0.161 | 1.196 | | 0.185 | 2.378 | | 0.323 | 5.049 | |
| GARCH(1, 1) 0.50 rolling window | 1.385 | 1.000 | | 0.144 | 1.064 | | 0.102 | 1.315 | | 0.117 | 1.828 | |
| GARCH(1, 1) with breaks | 1.484 | 1.071 | | 0.198 | 1.468 | | 0.209 | 2.689 | | 0.254 | 3.967 | |
| Deep Learning | | | 0.791 [0.455] | | | 0.007 [0.006] | | | 0.007 [0.008] | | | 0.000 [0.000] |
| LSTM | 1.483 | 1.070 | | 0.109 | 0.810 | | 0.063 | 0.805 | | 0.050 | 0.782 | |
| CNN | 1.812 | 1.308 | | 0.118 | 0.871 | | 0.071 | 0.913 | | 0.056 | 0.876 | |
| IPC | | | | | | | | | | | | |
| GARCH(1, 1) expanding window | 1.549 | 1.000 | | 0.141 | 1.000 | | 0.075 | 1.000 | | 0.081 | 1.000 | |
| GARCH Model | | | 0.781 [0.659] | | | 0.995 [0.879] | | | 0.969 [0.618] | | | 0.913 [0.701] |
| GARCH(1, 1) 0.25 rolling window | 1.545 | 0.997 | | 0.139 | 0.983 | | 0.064 | 0.848 | | 0.049 | 0.601 | |
| GARCH(1, 1) 0.50 rolling window | 1.564 | 1.009 | | 0.144 | 1.021 | | 0.062 | 0.823 | | 0.022 | 0.273 | |
| GARCH(1, 1) with breaks | 1.514 | 0.977 | | 0.142 | 1.006 | | 0.095 | 1.257 | | 0.051 | 0.625 | |
| Deep Learning | | | 0.912 [0.624] | | | 0.006 [0.007] | | | 0.004 [0.006] | | | 0.004 [0.009] |
| LSTM | 1.707 | 1.102 | | 0.140 | 0.991 | | 0.057 | 0.761 | | 0.061 | 0.754 | |
| CNN | 1.672 | 1.006 | | 0.133 | 0.941 | | 0.052 | 0.688 | | 0.012 | 0.153 | |

| Index | Model | MSFE | | QLIKE | |
|---|---|---|---|---|---|
| | | Value | Ratio | Value | Ratio |
| IGBVL | GARCH(1, 1) expanding window | 3577.6 | 1.000 | 0.196 | 1.000 |
| | GJR-GARCH(1, 1) expanding window | 3935.4 | 1.100 | 0.216 | 1.110 |
| | MS-GARCH(1, 1) expanding window | 4650.9 | 1.300 | 0.245 | 1.250 |
| BOVESPA | GARCH(1, 1) expanding window | 3964.8 | 1.000 | 0.220 | 1.000 |
| | GJR-GARCH(1, 1) expanding window | 4361.3 | 1.100 | 0.242 | 1.100 |
| | MS-GARCH(1, 1) expanding window | 4956.0 | 1.250 | 0.282 | 1.282 |
| IPSA | GARCH(1, 1) expanding window | 1075.0 | 1.000 | 0.078 | 1.000 |
| | GJR-GARCH(1, 1) expanding window | 1290.0 | 1.200 | 0.094 | 1.200 |
| | MS-GARCH(1, 1) expanding window | 1451.3 | 1.350 | 0.105 | 1.350 |
| IPC | GARCH(1, 1) expanding window | 550.0 | 1.000 | 0.075 | 1.000 |
| | GJR-GARCH(1, 1) expanding window | 605.0 | 1.100 | 0.083 | 1.100 |
| | MS-GARCH(1, 1) expanding window | 687.5 | 1.250 | 0.096 | 1.280 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chung, V.; Espinoza, J.; Quispe, R. Forecasting Financial Volatility Under Structural Breaks: A Comparative Study of GARCH Models and Deep Learning Techniques. J. Risk Financial Manag. 2025, 18, 494. https://doi.org/10.3390/jrfm18090494
