1. Introduction

The interplay between information flow and market behavior has long intrigued economists and behavioral scholars. In today’s digital era, news spreads instantly, making sentiment analysis more relevant than ever. Understanding how sentiment in text influences market dynamics is a growing area of interest. Traditional stock price models, grounded in the Efficient Market Hypothesis (EMH), argue prices reflect all known data (

Fama, 1970). This leaves little theoretical room for emotions or tone to influence asset prices. Yet growing empirical evidence shows investor sentiment can move prices beyond fundamentals. News reactions, whether optimistic, pessimistic, or neutral, can sometimes shape short-term market swings. This study asks whether sentiment scores from TextBlob, VADER, and FinBERT predict such movements. In doing so, it aims to link qualitative narratives in financial news to real, measurable market behavior.

The motivation for this investigation stems from the rise of unstructured textual data and NLP advancements. Earlier studies showed that earnings announcements and macro news impact returns, often with delayed effects (

Ball & Brown, 1968). These early works were mainly event-based, overlooking the steady stream of sentiment in daily news cycles. Recent research shifted to sentiment analysis to bridge this gap in market reaction studies. For example,

Bollen et al. (

2019) showed Twitter sentiment predicts cryptocurrency prices. Similarly,

Tetlock and Saadi (

2020) linked news sentiment indices with short-term equity volatility. Still, the literature remains divided on whether sentiment offers real signals or just reflects noise (

Garcia & Norli, 2021). This paper uses TextBlob, VADER, and FinBERT to rigorously test sentiment’s predictive value in a replicable framework, especially in the context of any exploitable trading signal.

Beyond academic curiosity, decoding news sentiment holds significant practical value. Portfolio managers, traders, and policymakers increasingly seek real-time signals during turbulent periods. The pandemic-driven market swings of 2020 revealed how “animal spirits” can override fundamentals (

Shiller, 2020).

Chen et al. (

2022) showed sentiment shaped trading volumes during COVID-19, while

Li et al. (

2023) found it predicted tech stock returns. TextBlob, VADER, and FinBERT offer distinct yet complementary tools to analyze sentiment’s role. Their potential in market forecasting remains underexplored, especially compared to deep learning methods (

Kim et al., 2021). Furthermore, prior research links sentiment to contemporaneous market moves, but the timing of its predictive power is less clear. The optimal horizon, whether hours, days, or weeks, remains an open question (

Huang et al., 2020). Thus, this paper serves as both a scientific inquiry and a practical test of whether interpretable sentiment metrics can inform market predictions and support actionable trading strategies.

At its core, this research is driven by the hypothesis that news sentiment serves as an important indicator of stock market dynamics. We posit that sentiment captures shifts in collective investor perception before they materialize in price or volume data, raising the possibility of systematically outperforming the market. More formally, the study tests whether the semi-strong form of the Efficient Market Hypothesis (EMH) holds under varying model configurations. Using a momentum-based baseline, we also assess the weak form of EMH by examining whether past returns contain predictive information. This research agenda is further refined by asking whether market behavior is primarily anticipatory (forward-looking) or reactive (backward-looking). We also investigate whether a structural bias exists in financial markets by examining asymmetries between bullish and bearish market states. This research framework becomes even more crucial as financial markets become increasingly intertwined with the digital information ecosystem. Addressing these questions and dilemmas may illuminate the mechanisms through which language (news), amplified by volatility and trading intensity, shapes the process of market-driven wealth creation.

This study fills a critical gap in the literature by systematically analyzing sentiment dynamics using a combination of sentiment tools—TextBlob, VADER, and FinBERT. We contribute further by evaluating the predictive power of news sentiment through a multi-model ensemble learning framework, applied across multiple stock market indices, and sectoral subindices. While prior studies have explored individual algorithms (

Wang & Liu, 2022;

Xu & Zhang, 2021;

Zhang & Chen, 2023), few offer a comparative analysis that jointly considers varied sentiment measures. Much of the existing literature prioritizes computational complexity over interpretability (

Kim et al., 2021) or focuses on niche markets such as cryptocurrencies (

Bollen et al., 2019). By integrating several ensemble models, this paper capitalizes on their distinct strengths, outlined as follows: gradient-based optimization (XGBoost), adaptive reweighting (AdaBoost), variance reduction (Bagging), feature resilience (Random Forest), stage-wise boosting (GBM), and meta-learning (Stacking). The key contribution lies in bridging lexicon-based and transformer-based sentiment analysis with robust machine learning techniques to detect subtle, finance-specific emotional signals. We also introduce daily aggregated sentiment indices and topic-refined subindices (“firm” vs. “non-firm”) based on stock market-related news flows. Finally, the research provides a structured, multifaceted framework for testing the Efficient Market Hypothesis (EMH), including out-of-sample validation and walk-forward trading experiments.

Our preliminary findings reveal that sentiment scores and market returns reflect heterogeneous responses to FOMC and BLS news releases. FinBERT sentiment consistently captures more persistent and smoother shifts, while subsequent rebounds vary by index. Compared to sentiment-only models (around 50% balanced accuracy), models that include implied volatility and trading intensity elevate classification performance to approximately 70–72% accuracy. FinBERT sentiment can enhance market state predictions in some contexts, its benefit is uneven across models and sectors. Ensemble classifiers exhibit persistently higher recall and F1-score for Bullish sentiment compared to Bearish sentiment each year. The regression tasks reveal a substantial improvement in predictive performance 1026 after including FinBERT sentiment and control variables. The algorithm-specific average gains range from 0.26 (AdaBoost) to 0.33 (Stacking). Past returns and anticipated market volatility as signals cannot be translated into a meaningful and economically viable trading strategy, especially after accounting for transactional costs. Refines sentiment scores (firm vs. non-firm) boost overall predictive performance of the ML algorithms; however, their marginal contribution, as proxied by permutation feature importance, remains negligible.

2. Literature Review

The relationship between news sentiment and stock market dynamics has garnered significant attention over the past decade, propelled by the proliferation of digital media and advancements in natural language processing (NLP). Early work by

Tetlock (

2007) established that negative sentiment in news articles, particularly from financial columns, correlates with downward pressure on stock prices, introducing sentiment as a quantifiable factor in asset pricing. This seminal study relied on dictionary-based sentiment scoring, paving the way for subsequent research to explore computational methods. The advent of social media and real-time news platforms further expanded this domain, with

Bollen et al. (

2011) demonstrating that Twitter sentiment could predict daily movements in the Dow Jones Industrial Average with up to 87% accuracy. These foundational studies underscored the potential of textual data as a market signal, shifting focus from structured financial metrics to unstructured narratives.

Recent research has refined these insights by leveraging more sophisticated NLP techniques and broader data sources.

Soo (

2019) applied Latent Dirichlet Allocation (LDA) to news articles, finding that topic-specific sentiment (e.g., earnings, mergers) outperforms aggregate sentiment in predicting stock returns. Similarly,

Huang et al. (

2020) used a vector autoregression model to show that news sentiment Granger-causes stock volatility over a one-week horizon, highlighting temporal dynamics. The COVID-19 pandemic provided a natural experiment for sentiment studies, with

Chen et al. (

2022) documenting that negative news sentiment amplified trading volumes during market downturns in 2020. Meanwhile,

Li et al. (

2023) employed lexicon-based tools like VADER to extract sentiment from tech-sector news, reporting a 5% improvement in return predictions for NASDAQ stocks over baseline models. These studies collectively affirm sentiment’s role but vary in their predictive horizons and methodological rigor.

Machine learning has increasingly dominated this field, offering tools to handle the nonlinearity and complexity of sentiment-market relationships.

Kim et al. (

2021) compared deep learning models (e.g., LSTM) with traditional regressions, finding that neural networks better capture sentiment-driven volatility in intraday trading data.

Xu and Zhang (

2021) extended this by integrating news and Twitter sentiment into a Random Forest model, achieving a 12% increase in accuracy for S&P 500 returns over a sentiment-only baseline. The following ensemble methods have also gained traction:

Wang and Liu (

2022) used XGBoost to predict cryptocurrency price swings from news sentiment, reporting an

of 0.65, while

Zhang and Chen (

2023) applied Gradient Boosting Machines (GBMs) to equity indices, noting superior performance in volatile markets. However, simpler ensemble techniques like Bagging and AdaBoost remain underexplored, as do hybrid approaches like Stacking, which combine multiple learners for robustness.

Methodologically, these studies typically follow a pipeline of data collection, sentiment extraction, and predictive modeling. News sources range from Reuters and Bloomberg (

Garcia & Norli, 2021) to aggregated feeds via APIs (

Chen et al., 2022), with sentiment scored using lexicons (e.g., Loughran-McDonald, VADER) or pretrained models (e.g., BERT). Time-series models (

Huang et al., 2020), regressions (

Soo, 2019), and machine learning classifiers (

Xu & Zhang, 2021) dominate estimation, often benchmarked against fundamentals like earnings or macroeconomic indicators. Key findings include short-term predictive power (hours to days) for returns and volatility (

Li et al., 2023;

Tetlock & Saadi, 2020), though longer horizons weaken (

Garcia & Norli, 2021). Sentiment’s interaction with market conditions also varies as follows:

Shiller (

2020) linked narrative-driven sentiment to speculative bubbles, while

Baker and Bloom (

2021) tied it to uncertainty proxies like the VIX.

Connections to the broader finance literature reveal both synergies and tensions. Behavioral finance, exemplified by

Shleifer (

2000), supports sentiment as a driver of mispricing, aligning with

Tetlock (

2007) and

Bollen et al. (

2011). Event-study approaches (

Ball & Brown, 1968) complement sentiment’s role in information shocks, yet efficient market proponents challenge its persistence (

Fama, 1970), a debate that is also reflected in noise critique by

Garcia and Norli (

2021). Recent interdisciplinary work integrates sentiment with network analysis (

Yang & Zhou, 2022) or macroeconomic signals (

Zhou & Wu, 2023), suggesting a holistic approach to market dynamics. Still, gaps remain: most studies focus on single algorithms (e.g., LSTM, XGBoost) rather than ensemble combinations, and few compare lightweight tools like TextBlob and VADER against advanced NLP in a predictive context.

Recent contributions underscore the growing importance of sentiment in modeling financial volatility and crises.

Bai et al. (

2024) employ a multi-country GJR-GARCH-MIDAS framework to examine how market sentiment spills over across global equity markets. Their results reveal that sentiment significantly influences volatility, especially in interconnected economies. Spillover intensity varies by country, with stronger effects observed in developed markets.

Chari et al. (

2023) study the Indian stock market and document that aggregate news sentiment can predict short-term fluctuations in NIFTY returns. The impact is particularly pronounced during periods of economic or political uncertainty.

Naeem et al. (

2024) apply machine learning to forecast financial crises in African markets. They find that sentiment variables improve predictive accuracy, although market-based features like price and exchange rate movements remain primary drivers. Collectively, these studies support the view that sentiment is a valuable input in financial forecasting. However, its utility depends on the broader market regime, model complexity, and geographic focus.

Overall, the literature consistently recognizes sentiment as a relevant market signal, though its predictive strength varies across models, horizons, and market states. Early work based on lexicon methods has evolved into more advanced NLP and machine learning pipelines, with greater emphasis on topic-specific sentiment and temporal effects. Recent studies highlight the growing role of deep learning and tree-based algorithms, yet few explore ensemble combinations or compare lightweight tools like VADER to transformer-based models. A recurring theme is sentiment’s short-term predictive power and heightened effectiveness during periods of uncertainty or narrative-driven market behavior. However, concerns remain about its economic significance, generalizability across regimes, and value-added beyond traditional signals.

3. Data and Methodology

The current study employs sentiment analysis tools, in particular, TextBlob, Valence Aware Dictionary and sEntiment Reasoner (VADER), and FinBERT, to analyze daily news sentiment scores. It is followed by the classification and prediction tasks to grasp whether or not news-driven sentiment is correlated with stock market trends. Initially, we use a Kaggle dataset encompassing over 6000 stocks and approximately 1.85 million headlines, spanning from 3 February 2010 to 4 June 2020. Then, we calculate initial sentiment scores for individual headlines using TextBlob, VADER, and FinBert. To ensure daily variations, we subsequently average these scores to derive date-specific sentiment measures. This process results in 3731 daily sentiment scores, which reflects the prevailing investment mood in the stock market. After merging these scores with daily stock returns to align the dates, we obtain 2588 daily observations. The reduction in matched observations arises primarily because stock market data only includes trading days, excluding weekends, public holidays, and occasional unscheduled closures. Consequently, the dataset reflects approximately 252 trading days per year rather than all calendar days, which explains the discrepancy between the number of sentiment scores and stock return records.

TextBlob analyzes text sentiment by providing the following two primary metrics: a polarity score and a subjectivity score. The polarity score ranges from −1 (most negative) to 1 (most positive) and quantifies the overall sentiment orientation of the text. The subjectivity score ranges from 0 (completely objective) to 1 (completely subjective), indicating the degree to which the text expresses personal opinions versus factual information. TextBlob uses a lexicon-based approach, assigning predefined polarity scores to individual words. The polarity of the entire text is calculated by averaging these individual polarity scores as follows:

where

n is the number of words, and

is the polarity score of the

i-th word, ranging from −1 to +1. The subjectivity score similarly aggregates the degree of subjectivity across words, providing insight into how opinionated or neutral the text is. The polarity score captures the text’s sentiment direction (negative, neutral, or positive), while the subjectivity score reflects whether the text conveys objective facts or subjective viewpoints. Together, these subscores offer a nuanced understanding of sentiment and content nature, despite TextBlob’s simpler approach which does not adjust for linguistic nuances such as negations or intensifiers.

VADER (Valence Aware Dictionary and Sentiment Reasoner) is a rule-based sentiment analysis tool explicitly designed to capture the nuances of social media text. It combines a lexicon-based approach with heuristic rules that adjust sentiment intensity based on factors such as punctuation, capitalization, degree modifiers, and negations. VADER employs a pre-compiled lexicon in which each word is assigned a valence score ranging from −4 (most negative) to +4 (most positive). The overall sentiment score of a text is computed by summing the valence scores of its constituent words:

where

n is the number of words and

represents the valence score of the

i-th word. Beyond this aggregate, VADER provides three subscores that quantify the proportions of positive, negative, and neutral sentiment within the text, denoted as

positive,

negative, and

neutral subscores, respectively. These scores reflect the relative intensity and presence of sentiment types: the

positive score measures the proportion of strongly positive words, the

negative score captures the intensity of negative words, and the

neutral score indicates the share of words that carry no clear sentiment or are sentimentally neutral. The

compound score synthesizes the overall sentiment by applying a normalization function to the sum of valence scores (including heuristic adjustments), resulting in a metric bounded between −1 (extreme negativity) and +1 (extreme positivity):

where

is a normalization constant ensuring the score remains within the defined range. This compound score offers a comprehensive sentiment summary, while the subscores provide a detailed breakdown of sentiment composition.

FinBERT (Financial Bidirectional Encoder Representations from Transformers) is a domain-adapted variant of the BERT transformer model used to generate sentiment scores from financial news text. FinBERT processes input text

and outputs a probability distribution over sentiment classes

. Formally, the model estimates

where

denotes the probability that the text expresses sentiment class

s. To derive a continuous sentiment score

for each document, we compute the weighted sum of the class probabilities as follows:

This scalar score reflects the overall sentiment intensity and direction, ranging from

(entirely negative) to

(entirely positive). Aggregating these document-level polarity scores on a daily basis produces daily sentiment indicators that capture the prevailing market sentiment dynamics.

In the next phase of the empirical investigation, we utilize a range of ensemble learning algorithms (see

Table 1) to assess whether these daily sentiment scores alone, or enhanced by trading volume and implied volatility, have predictive power regarding stock market dynamics, specifically for the S&P 500, Dow Jones, and Russell 2000. Specifically, XGBoost and Gradient Boosting Machines (GBM) aim to minimize a loss function using gradient descent and iterative updates. AdaBoost modifies weights based on misclassified instances to concentrate on more challenging examples. Bagging and Random Forest decrease variance by aggregating predictions from multiple models, with Random Forest introducing additional feature randomness. Stacking improves prediction performance by combining multiple models through a meta-learner.

The comparative outline of the objective function and update rules for each algorithm, is presented in

Appendix A (see

Table A1). More formally, both XGBoost and Gradient Boosting Machines (GBM) focus on minimizing a loss function by iteratively adding new models to refine predictions. GBM enhances its predictions by incrementally incorporating the output of new trees into the existing model, aiming to progressively reduce prediction error. This method involves fitting each new tree to the residuals of the previous predictions. In contrast, XGBoost improves upon this approach by integrating regularization terms into its objective function, which helps manage model complexity and mitigate overfitting. Moreover, XGBoost employs a gradient-based optimization technique for updating predictions, facilitating more accurate adjustments and potentially delivering superior overall performance and generalization compared to GBM.

On the other hand, AdaBoost and Bagging use distinct strategies to enhance model accuracy. AdaBoost adjusts the weights of training examples based on misclassifications, concentrating on harder-to-classify instances by modifying model weights in each iteration. This process ensures that subsequent models focus more on examples that were misclassified previously, aiming to minimize overall error. In contrast, Bagging reduces variance and improves model stability by aggregating predictions from multiple models, each trained on different subsets of the data. This is achieved through methods such as averaging or voting across various models. Random Forest builds on Bagging by employing a collection of decision trees and introducing additional randomness in feature selection, which further diversifies the model set and increases robustness. Stacking improves predictive performance by combining the predictions of multiple models through a meta-learner. The meta-learner synthesizes these predictions to enhance overall accuracy, leveraging the strengths of different base models and learning the optimal way to integrate their outputs. This approach often leads to improved performance compared to individual models.

In selecting appropriate machine learning models, we prioritized methods that balance predictive performance with interpretability. We focused on ensemble tree-based methods due to their proven effectiveness in financial prediction, especially with heterogeneous numerical inputs like market indicators and aggregated sentiment scores. These models generalize well, handle multicollinearity, and perform implicit feature selection. This enhances interpretability and clarifies sentiment’s marginal impact. Neural networks, SVMs, and LSTMs excel at modeling unstructured data and temporal dependencies. However, our study uses pre-aggregated sentiment metrics instead of raw text sequences, reducing the need for sequence modeling. Given the low dimensionality and limited sample size, ensemble tree models provide a more efficient and interpretable approach. They also generalize better than neural networks or LSTMs in such contexts, requiring less data and simpler tuning.

3.1. Classification and Regression Tasks

During the pre-estimation stage, the analysis begins by computing daily log returns for each index and sector. Each return is then discretized into a binary indicator as follows: 1 for a positive return (bullish state) and 0 for a negative return (bearish state), producing a directional sequence of market states. To assess temporal dependence in these series, the Ljung–Box test (lag 10) evaluates autocorrelation, while the Augmented Dickey-Fuller (ADF) test verifies stationarity. The classification task aims to identify features that distinguish bullish from bearish states. The modeling proceeds in the following stages: it starts with a sentiment-only specification, followed by a restricted model incorporating controls such as implied volatility (VIX) as a proxy for market uncertainty, trading volume as a measure of liquidity and trend strength, and the 1-year Treasury yield as a benchmark for the risk-free rate. The final specification, the unrestricted model, integrates these controls with news sentiment to assess incremental predictive power.

(b) Restricted Model:

(c) Unrestricted Model:

Each control captures a distinct market mechanism. The VIX reflects perceived uncertainty; volume signals ease of trading and conviction; and the Treasury yield, by anchoring opportunity cost, subtly shapes momentum. Higher yields may temper equity demand, muting trend persistence, whereas lower yields often support risk-taking, though they act less directly than lagged returns in sustaining momentum. We also test lagged sentiment scores (1 to 3 days) to explore potential delayed information-driven market adjustments.

In the post-classification stage, we evaluate recall, F1-score, and balanced accuracy to assess model effectiveness in predicting U.S. market regimes (Bullish vs. Bearish). We compare the augmented (unrestricted) model against the controls-only specification to isolate the incremental value of sentiment-based predictors. Higher F1 or balanced accuracy in the augmented model suggests sentiment captures unique signals (such as investor mood or behavioral shifts) beyond traditional factors. For example, a surge in bullish sentiment may precede observable market rallies, improving recall by detecting more true bullish cases. If performance gains are negligible, sentiment may be redundant or noisy, offering little beyond controls like volume, volatility, or yields. The difference in classification metrics provides a practical measure of sentiment’s predictive utility over standard market indicators.

In the regression tasks, we take a sequential approach to determine what are the primary drivers behind stock market fluctuations. Initially, we estimate a one-factor (or sentiment-only, Model 1) model to explore whether emotional dynamics derived from news headlines is correlated with stock market dynamics. Then, the study proceeds by estimating the momentum baseline model (Model 2) to test for short-term momentum or autocorrelation. There are two interconnected goals here, outlined as follows: (1) we aim to detect a possible trading signal that might (not) be exploitable; (2) it sets a benchmark to assess the predictive potential of more sophisticated model specifications (i.e., sentiment plus control variables). In the following step, we specify a controls-only model (i.e., restricted model) by including the following control variables: implied volatility (VIX), trading intensity (Volume), and 1Y Treasury Yield (to capture short-term interest rate effects). The restricted model sets another benchmark to be able to estimate explanatory power of the market sentiment itself (primarily VADER and FinBERT). This will be carried out by comparing the predictive power of the unrestricted model (controls plus sentiment, VADER and FinBERT) with the restricted one. To explore gradual rather than immediate effects, we estimate models using sentiment scores lagged by one to three days to capture delayed market adjustments.

(d) Unrestricted Model (Model 4):

The momentum-baseline model assesses the viability of momentum-based strategies that exploit directional persistence. The controls-only model captures market-timing signals based on price patterns, sentiment proxies like VIX, trading activity, and interest rates. The unrestricted model extends this framework by adding forward-looking sentiment signals derived from textual data. These models serve several interconnected goals. One is to quantify the marginal effect of sentiment, alone or alongside controls, on stock market returns. Another is to compare the explanatory power of VADER and FinBERT sentiment scores, given their distinct computational approaches. The models may also help identify trading signals that could or could not be translated into meaningful and economically viable performance. As a word of caution, while evidence of return predictability may be tempting to interpret as a violation of the weak or semi-strong forms of the EMH, such conclusions require careful scrutiny. The results must demonstrate not only statistical significance but also a systematic and economically exploitable pattern of portfolio returns, net of real-world trading frictions. Without this, the EMH remains intact despite any apparent signs of predictability.

3.2. Model Tuning and Validation

To optimize the outcomes from the classification and regression tasks, we apply model-level tuning via hyperparameter optimization. In this study, model-level tuning involves adjusting critical hyperparameters that shape how each algorithm learns, regularizes, or aggregates predictions. Given the diversity of algorithms in our study, we tailor the tuning strategy accordingly. Random Forest and Bagging are relatively robust to overfitting due to their averaging nature, so we employ randomized hyperparameter search with cross-validation for efficiency. In contrast, boosting algorithms such as XGBoost, GBM, and AdaBoost are highly sensitive to the interaction of hyperparameters like learning rate, tree depth, and regularization terms. For these, we use Bayesian optimization, which models the performance surface probabilistically (as a Gaussian Process) and strategically selects promising hyperparameter configurations. The stacking ensemble combines Random Forest and XGBoost as base learners, with Logistic Regression as the meta-learner. Accordingly, we apply randomized search to tune the Random Forest and Bayesian optimization to fine-tune XGBoost. Finally, the meta-model is tuned using elastic net regularization (a mix of L1 and L2 penalties), optimized via the ’saga’ solver (a stochastic gradient-based algorithm).

The data-driven tuning pipeline applies objective-level tuning (data-level adjustments) followed by probability calibration (post-training adjustment) to enhance classification performance. Empirically, since stock markets exhibit a long-term upward drift, positive returns (bullish periods) are more frequent; in contrast, sharp declines such as crashes or corrections are less common but typically more severe. In our classification setup, bearish market states (Class 0) are also underrepresented (see

Figure 1). To avoid neglecting important patterns in the minority class and to prevent misleading evaluation metrics, we employ SMOTE (Synthetic Minority Over-sampling Technique). This method generates synthetic samples by interpolating between a minority class observation and one of its

nearest neighbors. These new samples are added only to the training dataset to augment the representation of the minority class. In the second step, we apply Platt scaling to calibrate predicted probabilities and ensure they reflect the true likelihood of each class. This calibration is performed using a separate hold-out calibration set to avoid data leakage and to maintain out-of-sample generalizability. If class bias emerges consistently, we perform year-by-year cross-validation to determine whether it stems from overfitting or a structural bias in the market.

In the regression tasks, we use a time-aware holdout split to preserve the temporal structure of the data. This approach is preferred over traditional k-fold cross-validation for the following two key reasons: (a) k-fold splits mix past and future observations, violating the time order inherent in financial time series; (b) training on “future” data before validating on earlier observations can artificially inflate performance due to data leakage. Time-based holdout, by contrast, ensures that no information from the validation or test sets contaminates the training process. This setup allows for a more realistic, chronological assessment of model performance. To further strengthen robustness, we implement walk-forward (rolling window) cross-validation. This enhanced strategy offers several advantages: (a) it slides forward through time, always training on past data and testing on unseen future periods; (b) it evaluates performance across multiple time slices, helping detect regime-dependent behavior; and (c) it avoids the bias of relying on a single validation set and better reveals model stability over time. In the final stage, we compute permutation-based feature importance to obtain a more robust, model-agnostic estimate of each feature’s true contribution to predictive performance.

3.3. Backtesting

In the next stage of the empirical investigation, we implement a set of backtesting strategies to test the validity of the EMH in a two-stage procedure. In the first stage, we implement a sentiment-driven trading strategy by first predicting the FinBERT-derived sentiment polarity (positive or negative) for each asset using a hybrid stacking algorithm. Given the distribution of FinBERT scores is strongly skewed toward positive values, we binarize the sentiment by using the median score (≈0.466) as the classification threshold. This ensures a balanced training set and distinguishes between relatively strong and weak sentiment days. Alternative thresholds were considered, but the median cutoff offered the best balance between class representation and model learnability. The stacking model combines XGBoost, Random Forest, and Gradient Boosting classifiers as base learners to predict binary FinBERT sentiment labels. A logistic regression model serves as the meta-learner, aggregating both original features and base-level predictions. This ensemble approach captures nonlinear patterns and reduces overfitting by leveraging diverse learning algorithms. A time-aware split ensures the model respects the sequential structure of financial data.

For each of the eight target assets, we construct an asset-specific model. The dependent variable is the daily FinBERT sentiment label (binary: above median, positive, vs. below median, negative), obtained by aggregating FinBERT sentiment scores across relevant news headlines. Predictor variables include lagged returns of the specific asset, the VIX index, trading volume, and 1Y Treasury yields, capturing both asset-specific and macro-financial drivers of sentiment. Once the model is trained, its predicted sentiment output serves as the basis for trading decisions. For a given asset, a predicted positive sentiment triggers a long position, while a predicted negative sentiment triggers a short position. Positions are entered at the close of day t and held until the close of day , with daily rebalancing. We evaluate each sentiment-based strategy using performance metrics such as annualized Sharpe ratio, cumulative return, and hit rate, and benchmark them against a long-only baseline. This approach quantifies the trading relevance of predicted sentiment across different asset classes using only observable market signals as inputs.

In the second stage, we are focused on past returns, and the most significant feature(s) for predicting returns, measured by the permuted feature importance scores. First, we assess serial dependence in stock and sectoral returns. Next, we apply the Wald–Wolfowitz Runs Test. This test checks whether the sequence of positive and negative returns is random. Formally, the null hypothesis is as follows: return signs are randomly distributed, implying a random walk in directionality. This detects non-random clustering of return signs, signaling potential autocorrelation or structural bias. Next, we employ the Variance Ratio (VR) Test, which tests whether returns are independently and identically distributed (i.i.d.). The null is as follows: the return series follows a random walk. If , we infer mean reversion; if , we observe signs of momentum. Both cases violate the i.i.d. assumption. Rejecting either null suggests that returns exhibit predictable patterns, thereby formally challenging the weak-form EMH. Yet, statistical significance alone is insufficient. To refute EMH, these patterns must also yield economically exploitable strategies with robust, out-of-sample profitability. Thus, randomness rejection is a necessary but not sufficient condition for market inefficiency.

Based upon estimates from the unrestricted model, we compute feature-specific permutation-based feature importance scores. These scores are then used as a selection criterion to discriminate between strong and weak feature(s). Features with the highest permutation importance are used as trading signals to evaluate whether they are already priced in. Each potential publicly available signal is examined for autocorrelation using the Ljung–Box test to infer the appropriate backtesting strategy (momentum or mean-reversion). We also run Granger causality test to examine whether past values of signals improve return forecasts beyond what is explained by lagged stock returns alone. Rejecting the null implies that changes in that signal tend to precede stock return movements. This points to a predictive relationship, though it does not establish a direct causal effect.

To verify the economic relevance of any deviations from randomness, we construct two out-of-sample trading strategies as follows: a momentum-based strategy and a mean-reversion strategy. Both are implemented using one-day (1D) and five-day (5D) lookback windows (k). As an additional robustness check, we implement a walk-forward backtesting strategy with a rolling window. At each step, the best-performing lookback period is selected based on past data, and the strategy is then evaluated out-of-sample. In the momentum strategy, the trading signal is straightforward, and it outlined as follows: if the past k-day return is positive, we take a long position; if negative, we go short. The mean-reversion rule flips this logic is as follows: we short after a positive return and go long after a negative one. As a benchmark, we use a passive buy-and-hold strategy to assess whether active trading rules offer meaningful improvements.

These trading strategies serve as formal tests of the weak-form Efficient Market Hypothesis (EMH), i.e., whether historical prices contain predictive content. To evaluate the semi-strong form of the EMH, we assess whether publicly available variables (e.g., news-driven sentiment, implied volatility, trading volume, etc.) enhance returns. We assess the economic relevance of these strategies by constructing bootstrapped confidence intervals around mean returns. Performance is also measured using average returns, Sharpe ratios (risk-adjusted performance), and hit rates (directional accuracy). All results are computed both before and after accounting for transaction costs, set at (i.e., 0.1%). This ensures that the strategies remain viable under realistic market conditions.

3.4. Sophisticated Feature Innovation(s)

In our baseline framework, sentiment scores are derived from a broad set of raw news headlines to match daily stock market returns, and without topic-specific filtering. Standard approaches typically discard news published on non-business days when matching daily sentiment to returns. This exclusion overlooks potentially valuable signals, especially when sentiment builds during weekends or holidays. To address this, we propose the first feature innovation, outlined as follows: reassigning non-business day news to the next available trading day. This adjustment preserves continuity in sentiment flow and captures investor reactions to weekend developments at market open. Such temporal reallocation accounts for the delayed impact of information released outside trading hours. It also mitigates the risk of omitting sentiment-driven catalysts that influence early-morning trading behavior. Overall, this enhancement allows the sentiment features to reflect the full information set available to market participants. It thereby strengthens the predictive relevance of sentiment inputs forecasting models.

There is another feature innovation worth considering that enhances the informative capacity of sentiment scores. More specifically, we develop more focused sentiment measures by distinguishing between firm-related and non-firm-related news content. Specifically, we identify firm-specific topics (for instance, revenue, earnings, acquisitions, etc.), based on keyword filtering and topic modeling. To achieve this, we first apply a pretrained language model (FinBERT) to embed headline texts, and then extract interpretable topic clusters using BERTopic. These clusters are labeled as “firm” or “non-firm” (macro and general market) based on the presence of signal words. Sentiment scores are then computed using FinBERT for each topic class. This feature engineering step enhances the granularity of the sentiment signal and allows us to refine the specifications of the sentiment-only and unrestricted models. By distinguishing topic-based sentiment, we aim to assess whether filtered sentiment scores offer greater predictive power than unfiltered ones. Specifically, we test whether firm- and non-firm-specific sentiment signals can better capture shifts in market regimes (bearish vs. bullish) and continuous daily market trends. This distinction is explored using both classification metrics (recall, F1 scores, balanced accuracy, and feature importance) and regression measures (validation and test , as well as feature importance).

To align with stock market returns, firm and non-firm sentiment scores are averaged over matching dates. When sentiment scores are unavailable for certain days, we address missingness in a tailored manner. Specifically, gaps in firm-specific sentiment arise structurally, i.e., due to the absence of firm-related news, rather than from data quality issues. We treat these missing values as informative, not missing at random. Using imputation techniques such as linear interpolation or MICE (Multiple Imputation by Chained Equations) would incorrectly imply a smooth or continuous sentiment process. Instead, we preserve these gaps to reflect informational inactivity as follows: no news implies no update in sentiment, which we interpret as a zero signal. Moreover, we model firm and non-firm sentiment within the same model, given their distinct informational dynamics, and expected low correlation. Given the breadth of the dataset (multiple market and sectoral indices), we report only the most relevant results and offer generalized comparative conclusions.

4. Results

We begin by providing a statistical overview of news sentiment scores and stock market returns, highlighting key distributional properties and patterns in the data. The analysis then shifts to a predictive framework that evaluates the forecasting power of selected features while implicitly testing the informational efficiency of financial markets. Specifically, we examine whether asset prices fully reflect all available information, as posited by the Efficient Market Hypothesis (EMH), or if certain features provide statistically significant signals that can be leveraged to construct a trading strategy. This strategy is empirically tested against a simple buy-and-hold benchmark to assess its performance relative to EMH expectations.

4.1. Exploratory Data Analysis (EDA)

The summary statistics in

Table A2 offer a detailed view of return behavior and control variables across major indices and sectors. Mean daily returns for the SP500, DJ, and Russell indices are near zero. Given the large sample size and modest volatility, formal

t-tests would likely fail to reject the null hypothesis that these means equal zero. This, in turn, suggests no persistent directionality in returns and no exploitability of past return patterns for forecasting future gains. The price series tends to evolve like a martingale, with changes that are essentially random and uncorrelated over time. The Russell index exhibits slightly higher volatility, reflecting its greater exposure to small-cap risk. The VIX displays the widest dispersion, capturing episodic spikes in investor uncertainty. Yield shows positive skewness, with rare surges above the median. Trading volume appears stable on average, but varies sufficiently to reflect changes in trading intensity. Sectoral indices broadly mirror the behavior of the aggregate market, though Energy and Tech exhibit more pronounced extremes. Overall, the data point to stable daily return profiles, accompanied by occasional episodes of heightened dispersion and risk.

The sentiment-centered descriptive statistics are summarized in

Table A3. Most sentiment signals exhibit a central tendency toward neutrality or mild positivity. Both polarity and compound scores are slightly positive on average (0.042 and 0.073). Their narrow standard deviations suggest a restrained sentiment tone in daily news headlines. VADER scores also reflect neutrality, with a high mean for neutral score (0.842), while average negative and positive scores remain low (0.054 and 0.104). Subjectivity and objectivity scores confirm that most headlines are objective in nature. The mean objectivity score is 0.810, aligning with expectations for financial journalism. FinBERT sentiment is moderately positive (mean = 0.460), while

and

center near zero. These subindices show much higher variance, indicating directional ambiguity and greater dispersion in targeted sentiment classification.

The pairwise correlation matrix in

Figure A1 (left panel) displays correlations between sentiment indicators and market controls. Polarity and Compound scores are moderately correlated (

), indicating partial overlap between textual sentiment measures. FinBERT shows weak correlation with both, suggesting it captures distinct sentiment signals. All sentiment variables exhibit low correlations with VIX returns, volume, and the 1-year yield. These range from −0.13 to 0.28, indicating minimal shared variance. The low alignment implies that sentiment contains information not embedded in standard control variables. This supports its potential as an independent input in predictive modeling. The right panel shows correlations among log returns for major indices and sectors. SP500 and DJ exhibit near-perfect correlation (

), reflecting their shared large-cap composition. Sector returns are also strongly correlated, especially between Industrials, Materials, and Technology. Energy shows weaker ties, particularly with Financials (

), consistent with its exposure to commodity-specific risks. High correlations across sectors suggest common market-wide shocks dominate return variation. These patterns imply limited short-term diversification benefits within equity sectors.

To support classification tasks, we first discretized market conditions into binary states (

,

) and examined the distribution of these classes across indices and sectors. As illustrated in

Figure 1, the class distribution is relatively balanced, with Bullish states slightly prevailing in all cases. The SP500, DJ, and Russell indices exhibit Bullish shares ranging from 52.9% to 53.6%, suggesting modest asymmetry at the aggregate level. Sectoral indices show similar patterns, with the Energy and Tech sectors displaying the most balanced distributions (approximately 51–49%). Financials and Materials lean marginally more toward Bullish states (around 54%). The near-equal division between Bullish and Bearish periods reflects a structurally oscillating market environment, rather than one dominated by persistent directional trends.

Figure 1.

Distribution of market states (bearish vs. bullish).

Figure 1.

Distribution of market states (bearish vs. bullish).

This balance indicates that shifts in return regimes are frequent and that markets alternate between upward and downward states with comparable frequency. However, even modest asymmetries in class distribution can affect classification performance by biasing predictions toward the dominant regime. To mitigate this, we employed class-weight balancing to ensure that both market states are adequately represented during model training and evaluation.

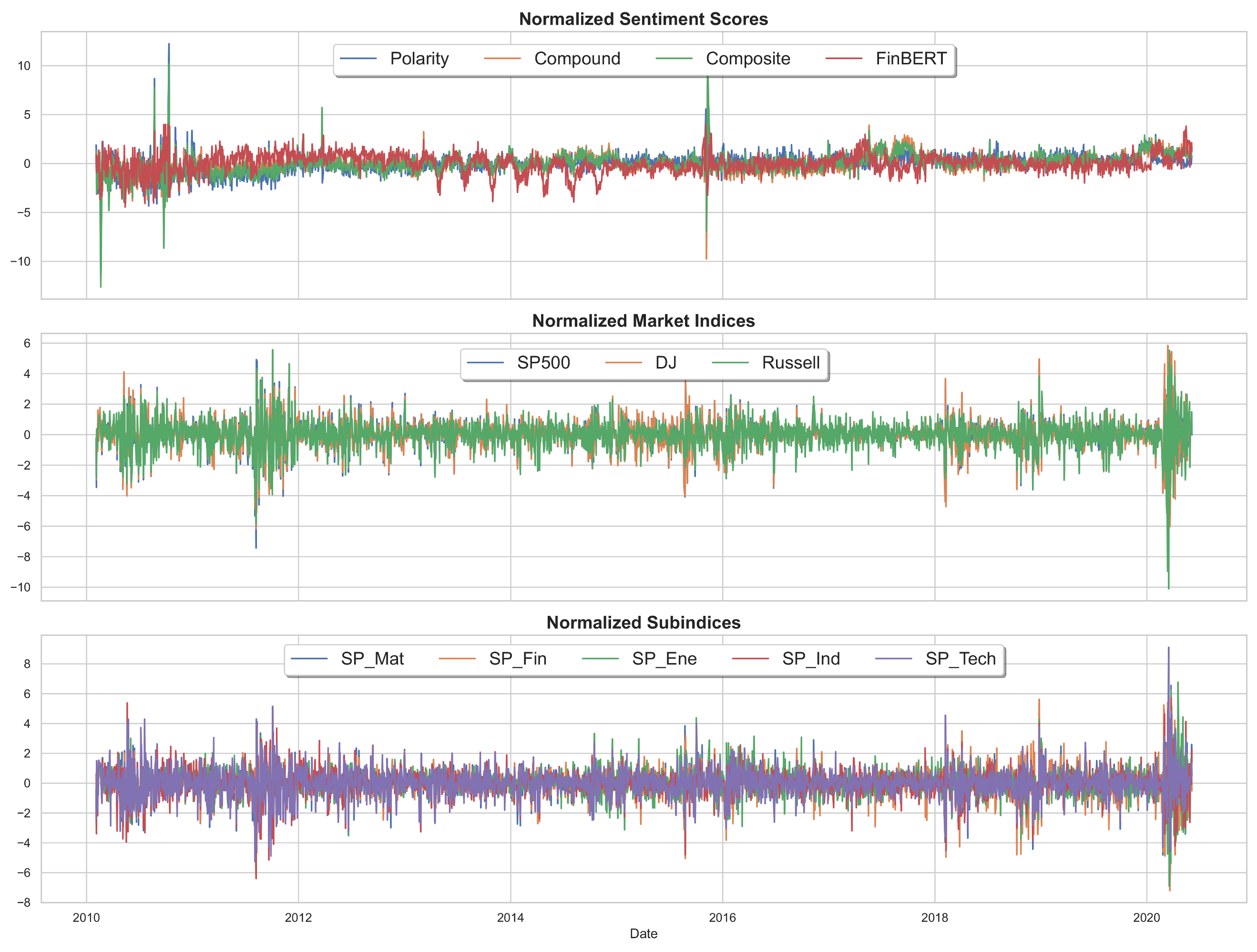

Normalized trends in sentiment scores, broad market indices, and S&P 500 sectoral subindices are shown in

Figure A2. Sentiment scores exhibit episodic shifts, with sharp deteriorations during major market stress periods, including the 2011–2012 sovereign debt crisis, the 2015–2016 global growth slowdown, and the COVID-19 shock in early 2020. These sentiment declines broadly align with sharp drawdowns in major equity indices, suggesting that textual sentiment captures investor reactions during periods of heightened uncertainty. Among market indices, the Russell consistently displays larger fluctuations than the SP500 and DJ, reflecting its greater sensitivity to small-cap and high-beta exposures. Sectoral subindices follow similar directional movements but differ in magnitude. Energy and Financials show deeper drawdowns during downturns, consistent with their cyclical and credit-driven risk profiles. In contrast, Technology and Industrials recover more strongly post-crisis, indicating higher sectoral resilience. However, there are also notable decoupling episodes (for instance, in 2013–2014 and parts of 2018) where asset price volatility increased without corresponding shifts in sentiment. These patterns suggest that sentiment scores, while informative, do not fully account for all drivers of return variation. This is especially true when volatility arises from technical shifts, abrupt policy moves, or events that are weakly expressed in headline tone or underrepresented in textual data.

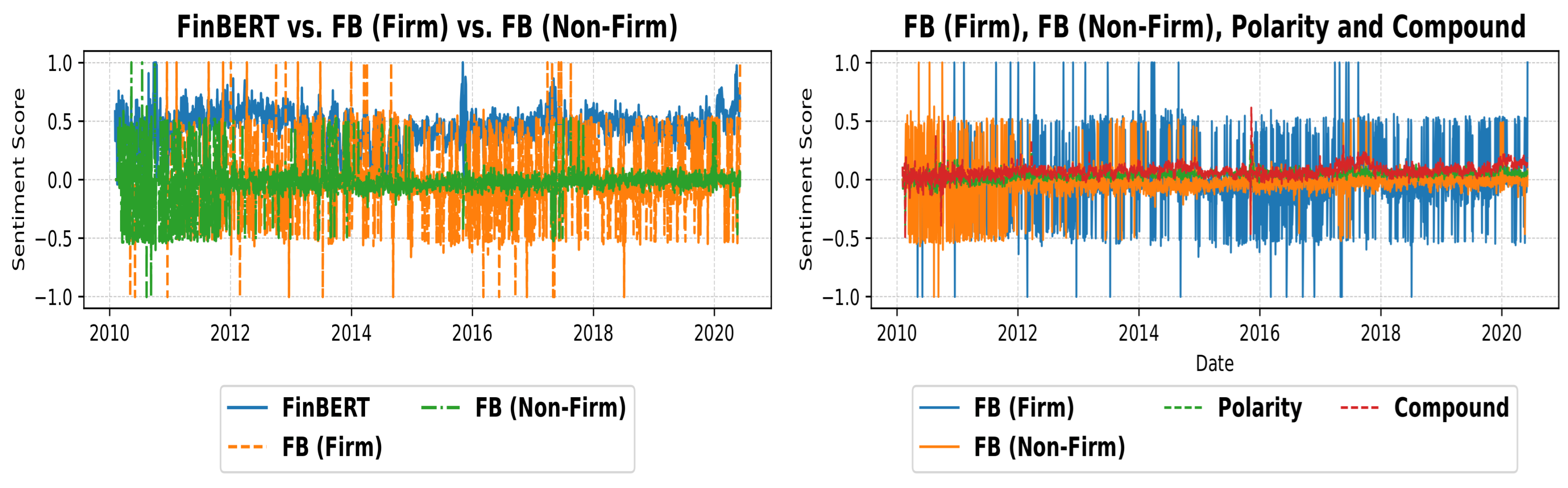

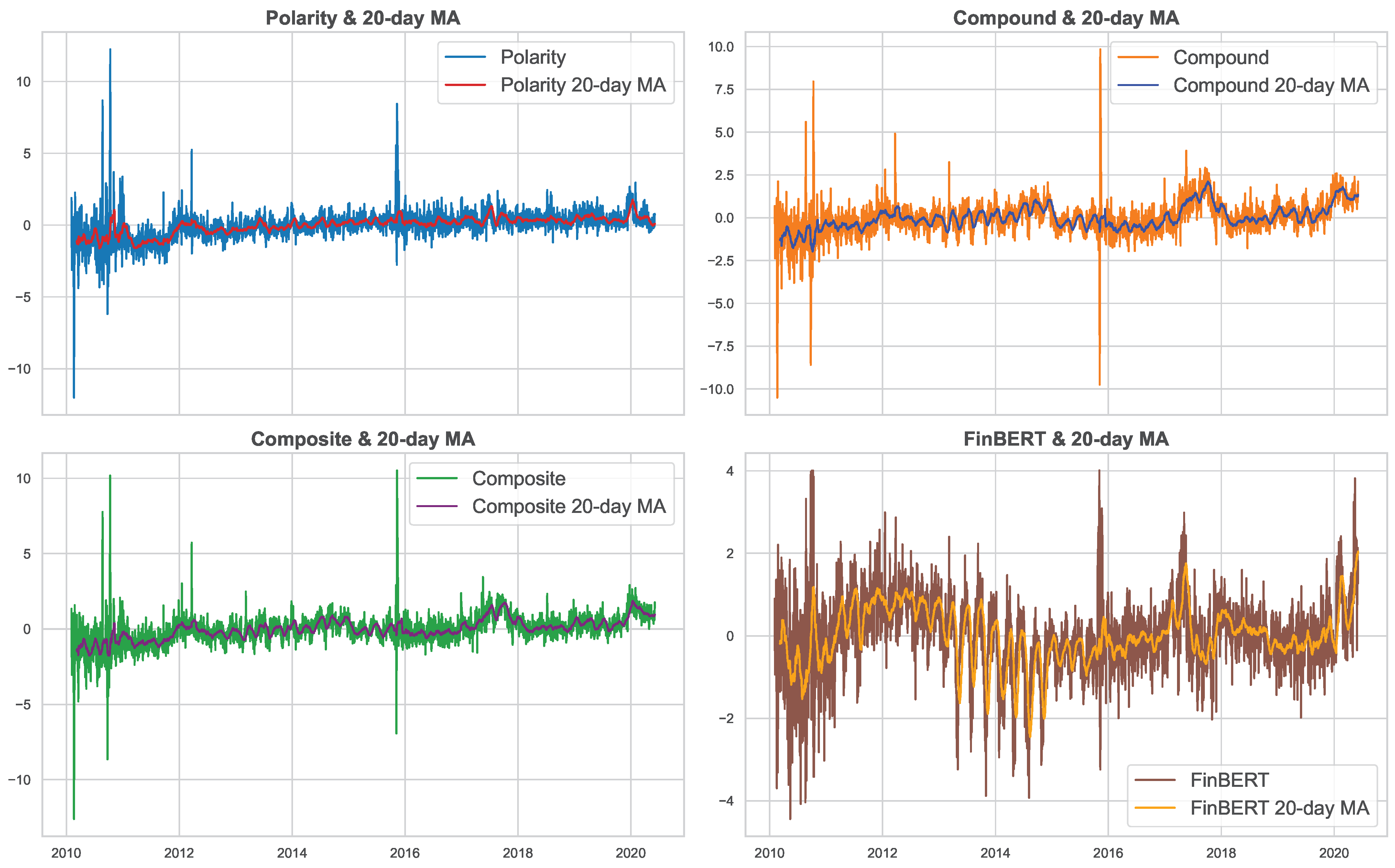

Polarity, Compound, and their Composite sentiment score exhibit broadly aligned trends, marked by noticeable dips during major risk episodes, as shown in

Figure 2. These sentiment metrics exhibit smooth cyclical fluctuations, suggesting that they capture gradual changes in sentiment momentum. In contrast, FinBERT displays considerably higher short-term variability and more erratic swings around its moving average. This divergence likely stems from FinBERT’s deeper contextual parsing, which incorporates domain-specific nuances in financial text. Unlike rule-based methods, FinBERT assigns sharper sentiment shifts in response to subtle changes in language, resulting in a higher-frequency signal that is more reactive but also more volatile. Overall, the pronounced spikes in sentiment scores during 2010–2011 correspond to heightened market sensitivity amid pivotal monetary and fiscal developments.

The Federal Reserve’s announcement of QE2 in November 2010 signaled sustained support for economic recovery, fostering optimism. Throughout 2011, Fed communications navigated the tension between accommodative policy and inflationary pressures, further impacting sentiment. Concurrently, the 2011 U.S. debt ceiling crisis and subsequent S&P credit rating downgrade exacerbated uncertainty and risk aversion. The more substantial spike in late 2016 aligns with critical events including the U.S. presidential election, Brexit negotiations, and evolving global trade dynamics, all contributing to elevated market uncertainty and investor sentiment fluctuations.

Sentiment scores also surged markedly in 2020, reflecting the profound macroeconomic disruptions triggered by the COVID-19 pandemic. The rapid escalation of health crises, coupled with extensive fiscal and monetary interventions, heightened market uncertainty and volatility. Compound is notably more responsive to economy-wide shocks, with fluctuations resembling FinBERT, while Polarity remains comparatively muted. The differences between Polarity (TextBlob) and Compound (VADER) arise from their distinct methodologies. VADER’s valence-aware design captures more nuanced and intense sentiment, especially in emotionally charged or informal language. In contrast, TextBlob applies a simpler, rule-based approach that produces more tempered scores. These differences underscore the tools’ complementary strengths and raise the following key question: do such methodological divergences enhance predictive power, or do they merely reflect alternative sentiment constructions?

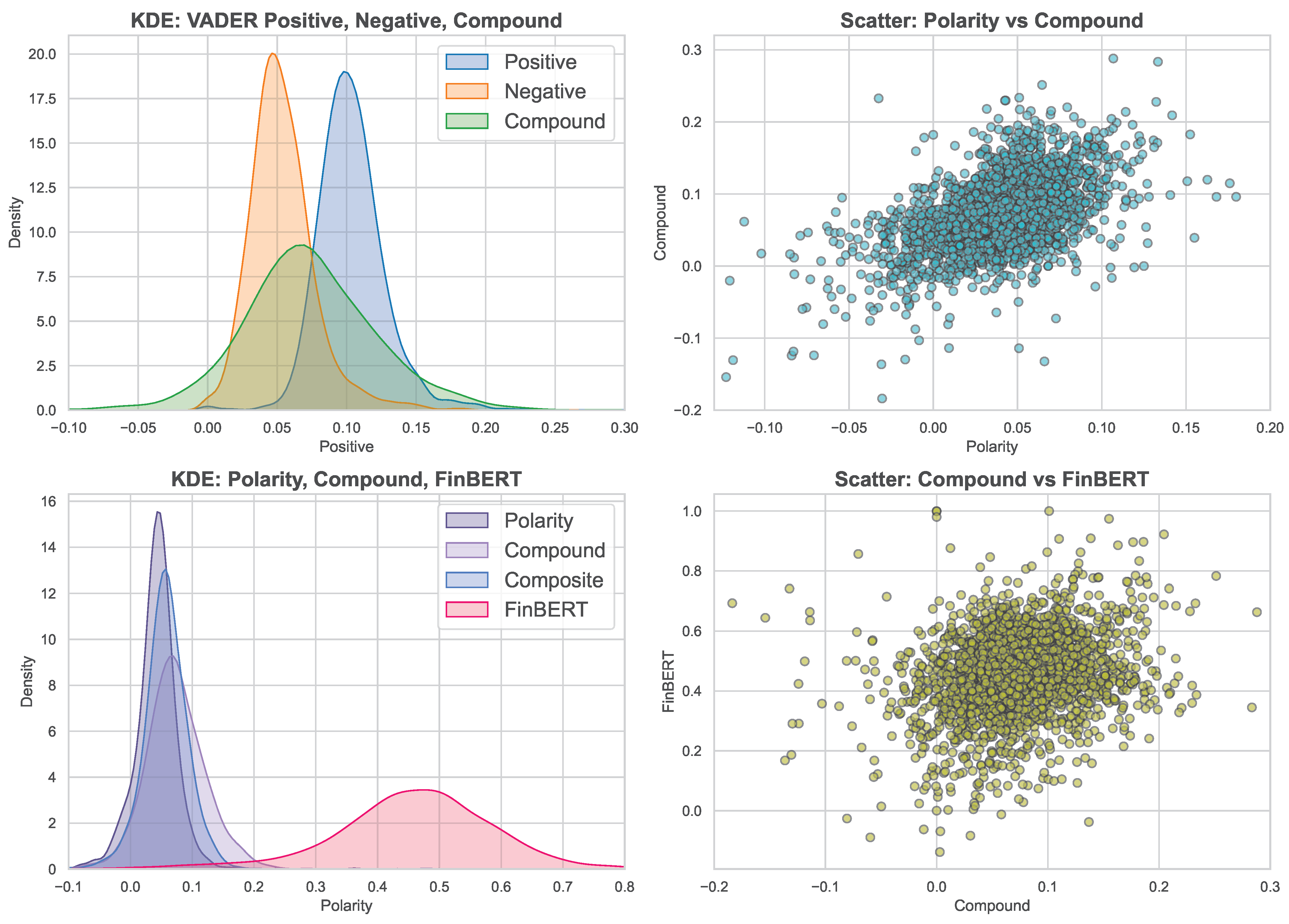

We further explore the relationship between alternative sentiment metrics, and their distributional features, as presented in

Figure 3. The first plot, comparing Polarity and Compound, shows a moderately positive but nonlinear association, with higher Polarity scores generally aligning with stronger Compound values. However, the spread suggests that the two metrics are not interchangeable, likely due to their differing sentiment extraction methodologies. The second scatterplot, comparing Compound and FinBERT, shows a broader and more dispersed pattern, indicating weaker alignment. FinBERT values remain concentrated in a narrow range despite wider variation in Compound, reflecting FinBERT’s distinct calibration and contextual sensitivity. These visual patterns highlight methodological divergence across sentiment tools and imply that aggregation or ensemble use of scores may enhance robustness.

The KDE plots in

Figure 3 provide smooth estimates of sentiment score distributions across models. The VADER density shows a bimodal shape. One peak near zero corresponds to frequent neutral to mildly negative sentiment. Another peak around 0.10–0.15 indicates moderate positive sentiment. The compound score has a broader, flatter distribution centered near zero with a slight positive skew. This pattern highlights mixed sentiment in financial narratives, typically balanced or mildly optimistic but occasionally marked by extremes. Comparing all sentiment scores, VADER-centric measures cluster tightly around zero with little skew. This points to a conservative sentiment response. In contrast, FinBERT exhibits a sharper, right-skewed distribution; so it detects stronger positive sentiment more frequently. Polarity and Compound scores appear more symmetric and bounded, whereas the Composite score closely mirrors their combined behavior. These differences reflect methodological variation and thereby support the complementarity of these models in capturing nuanced market sentiment.

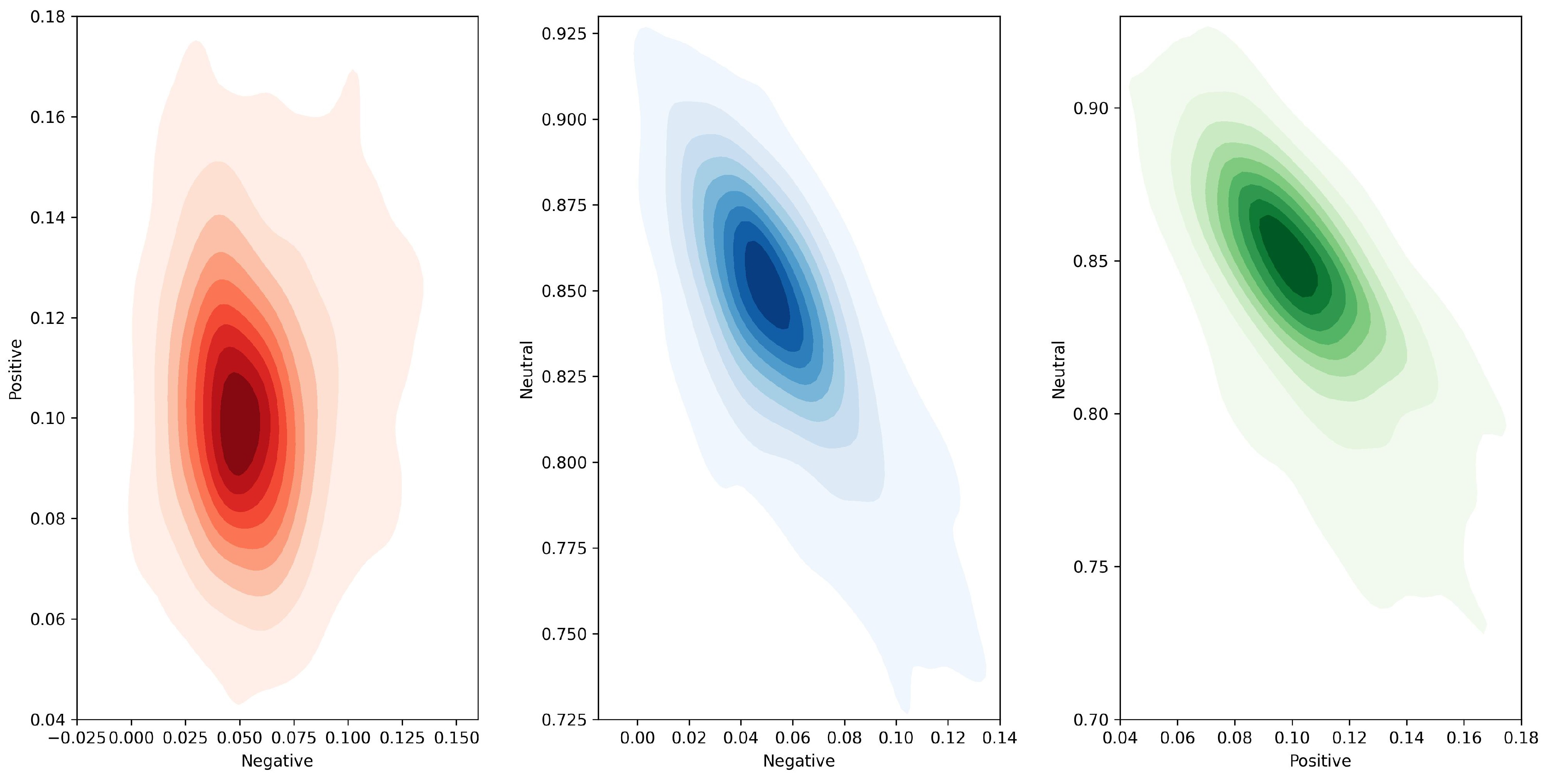

The contour plots of VADER sentiment components (see

Figure A3) depict their joint distributions and densities within stock market news. The Positive versus Negative sentiment plot shows a concentrated elliptical distribution centered near a Positive score of 0.08–0.12 and a Negative score of 0.00–0.02. The highest density region indicates most observations have moderate positive sentiment with minimal negativity, reflecting an overall optimistic tone. The Neutral versus Negative plot reveals a dense cluster around Neutral values of 0.82–0.85 and Negative values near 0.00–0.02. This pattern highlights the dominance of neutral sentiment alongside low negative sentiment, suggesting largely balanced or factual narratives. The Neutral versus Positive plot also centers near Neutral 0.82–0.85 and Positive 0.08–0.12. This confirms frequent coexistence of neutral and moderate positive sentiments. Collectively, these plots illustrate VADER’s ability to capture a sentiment landscape dominated by neutral and positive tones, with negative sentiment largely marginal.

A complimentary set of contour plots (see

Figure A4) illustrates the joint distributions of TextBlob sentiment scores. The left plot shows Polarity versus Subjectivity with an elliptical concentration near Polarity 0.025–0.050 and Subjectivity 0.175–0.200. The highest density indicates most observations combine slightly positive sentiment with moderate subjectivity, suggesting a balanced but mildly emotional narrative. The right plot depicts Polarity against Objectivity, clustered around Polarity 0.025–0.050 and Objectivity 0.80–0.825. This reflects a predominance of slightly positive sentiment accompanied by high objectivity, indicative of a largely factual yet subtly optimistic tone. Together, these plots reveal TextBlob’s tendency to characterize sentiment with neutral to positive polarity, low subjectivity, and strong objectivity.

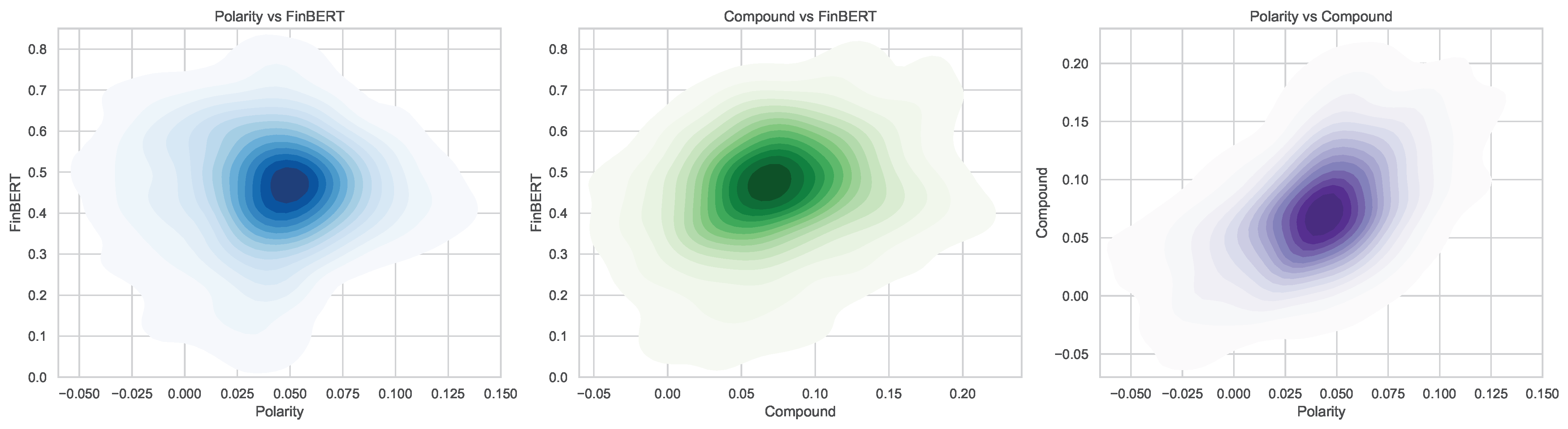

The joint distributions of key sentiment metrics across models are presented in

Figure 4. The Polarity versus FinBERT plot reveals a concentrated elliptical distribution spanning Polarity values from approximately 0.00 to 0.15 and FinBERT scores from 0.2 to 0.8. The highest density is centered near moderate Polarity (0.05–0.10) and mid-to-high FinBERT values (0.4–0.6), indicating that moderate positive polarity often coincides with substantial FinBERT sentiment scores.

The Compound versus FinBERT plot shows a similar elliptical pattern, with densities peaking around Compound scores of 0.05–0.15 and FinBERT values between 0.3 and 0.7. This reflects a positive but dispersed association, suggesting that although both metrics capture overlapping sentiment signals, FinBERT exhibits a broader dynamic range. The Polarity versus Compound plot exhibits a moderately strong positive correlation, with a Pearson coefficient of approximately 0.54. This indicates substantial but not perfect alignment between these two sentiment metrics, reflecting differences in their methodologies and sensitivity. The clustering along the diagonal suggests consistent directional agreement, yet the moderate correlation signals that each captures unique sentiment nuances. Lastly, the wider spread in comparisons involving FinBERT underscores its distinct modeling approach and sensitivity to context.

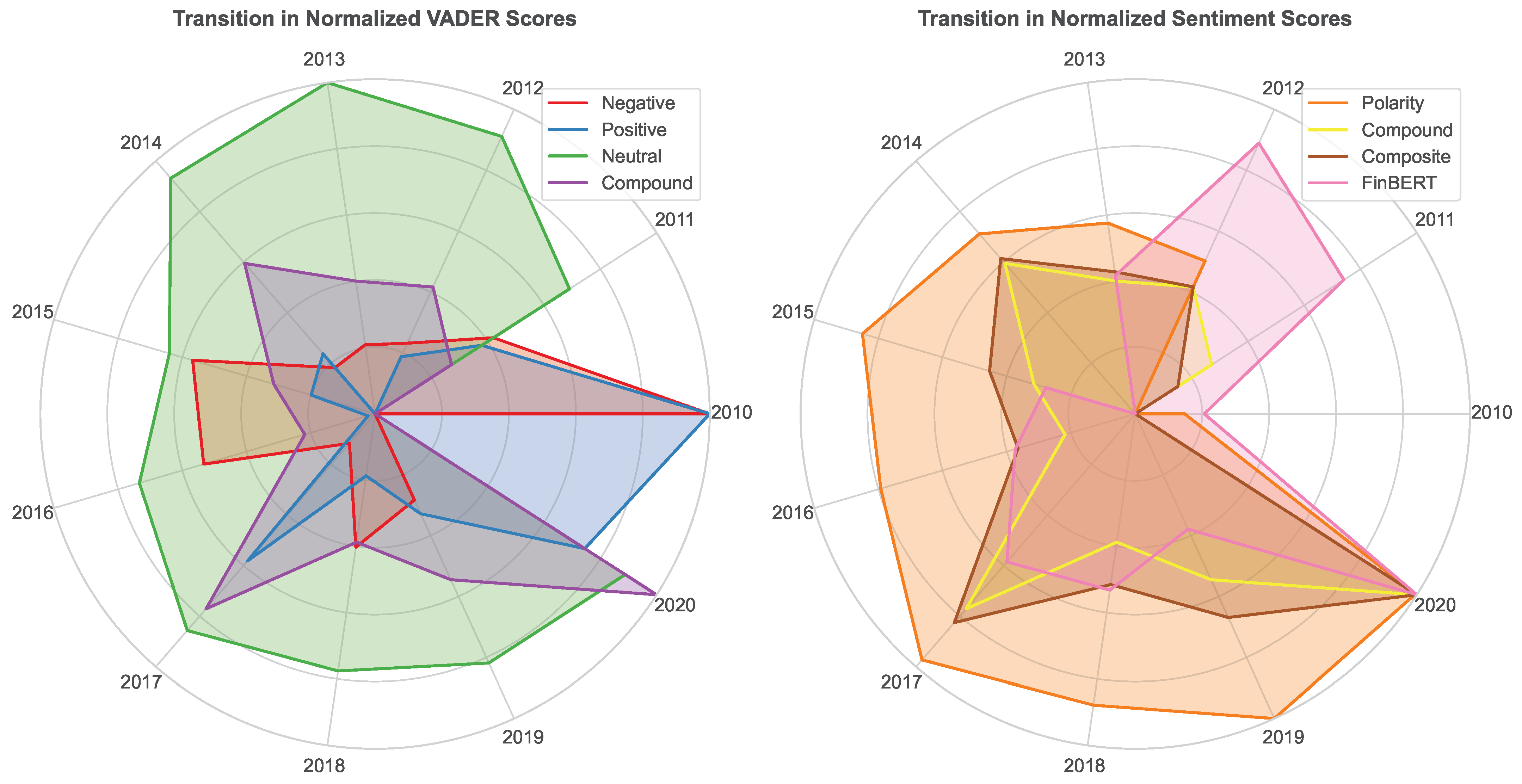

Finally, it is also interesting to look at the temporal evolution of the average annual stock market sentiment over the observed period (see

Figure 5). The first radar chart (left) illustrates the annual transitions in normalized VADER sentiment subscores (2010–2020). Over this period, Neutral sentiment consistently dominates, indicating that financial news generally maintains a balanced tone with limited emotional extremes. Positive sentiment maintains a steady presence but shows marked fluctuations, including a subtle decline during the 2011–2012 stress period and a pronounced spike in 2020 driven by optimistic market trends.

Negative sentiment peaks notably in 2010 and 2016–2017, reflecting periods of heightened pessimism aligned with adverse economic conditions. The Compound score shows moderate variation and trends toward neutrality, as it balances market optimism and pessimism. Peaks in 2014, 2017, and 2020 correspond primarily to increases in neutral and mildly positive sentiment and mark episodic shifts in market mood.

The second radar chart (right) depicts annual normalized trends for Polarity, Compound, Composite, and FinBERT scores over the decade. Polarity and Composite scores follow similar trajectories, with incremental shifts in sentiment tone and generally moderate values. Compound scores present a comparable but somewhat more volatile pattern, due to the combination of positive and negative elements. FinBERT differs with more pronounced fluctuations, particularly during stress periods like 2011–2012 and 2020. This indicates greater contextual sensitivity and finer granularity in capturing nuanced sentiment changes. Together, these measures reveal a complex sentiment landscape, where models emphasize distinct facets of market mood. This finding supports the earlier argument on the essential role of multi-dimensional sentiment analysis for capturing financial market emotions accurately.

4.2. Event-Driven Sentiment Dynamics

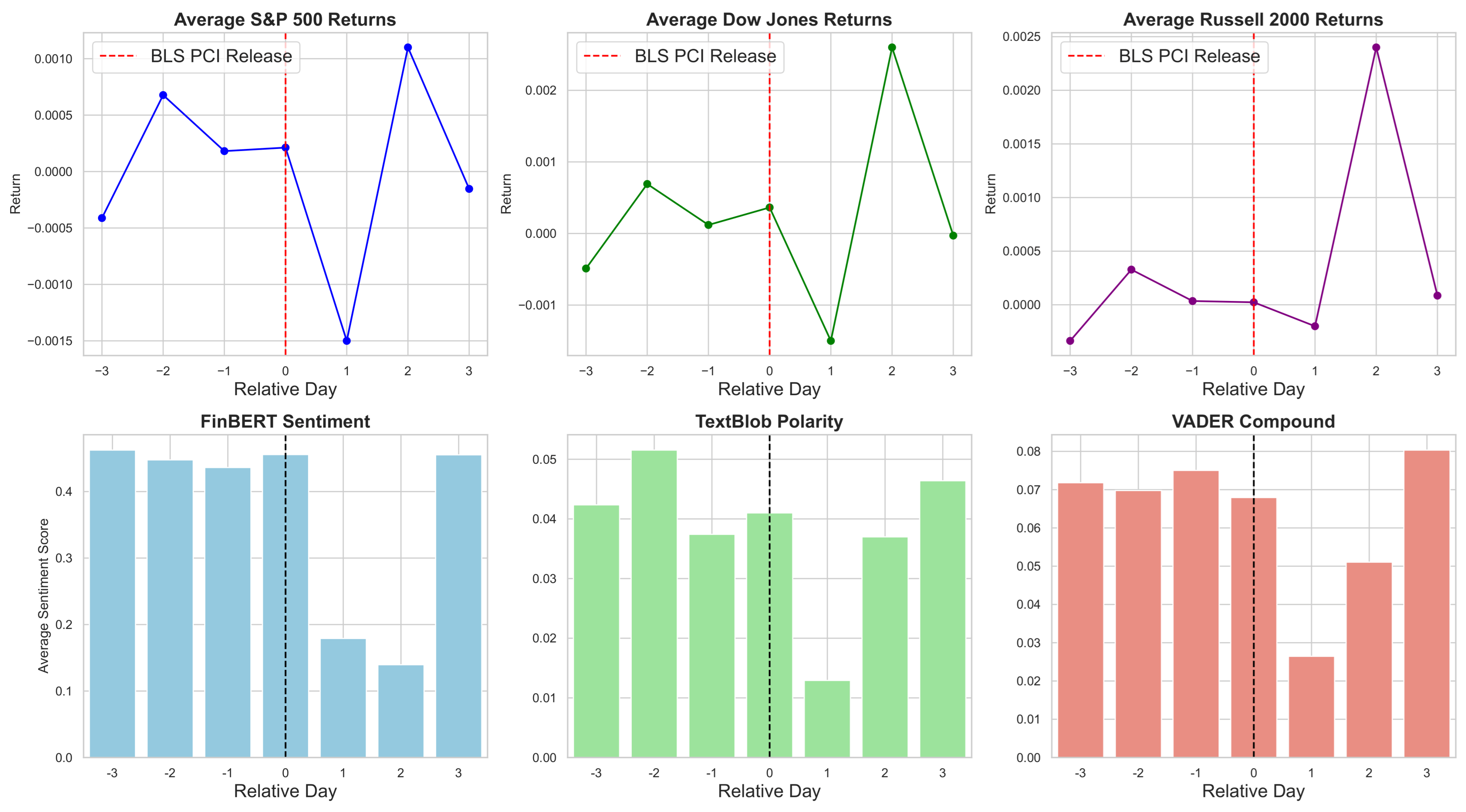

This section summarizes key sentiment trends occurring on and around (typically days) major event-driven news over the period from February 2010 to June 2020, based upon day-centered summary statistics. The sentiment scores around FOMC release dates (day 0) show clear dynamics within a day window. FinBERT scores start relatively high at day with a mean near 0.49. They gradually decline through the event day and following days, reaching a minimum on day 1 (mean ). Scores recover on days 2 and 3, with a marked spike at day 3 (mean ). This may reflect delayed market reactions. Polarity scores remain low and fairly stable, fluctuating between approximately 0.05 and 0.04 until day 3. At day 3, there is a pronounced drop to negative values, suggesting transient negative sentiment post-event. Compound scores show moderate variation, declining slightly from day to day 1, then recovering. However, by day 3, they shift into negative territory, possibly indicating market uncertainty or mixed interpretations. These patterns imply that sentiment responds to FOMC announcements with initial caution. The tone partially reverses in subsequent days, while late reactions or volatility may drive sharper sentiment swings beyond the event window.

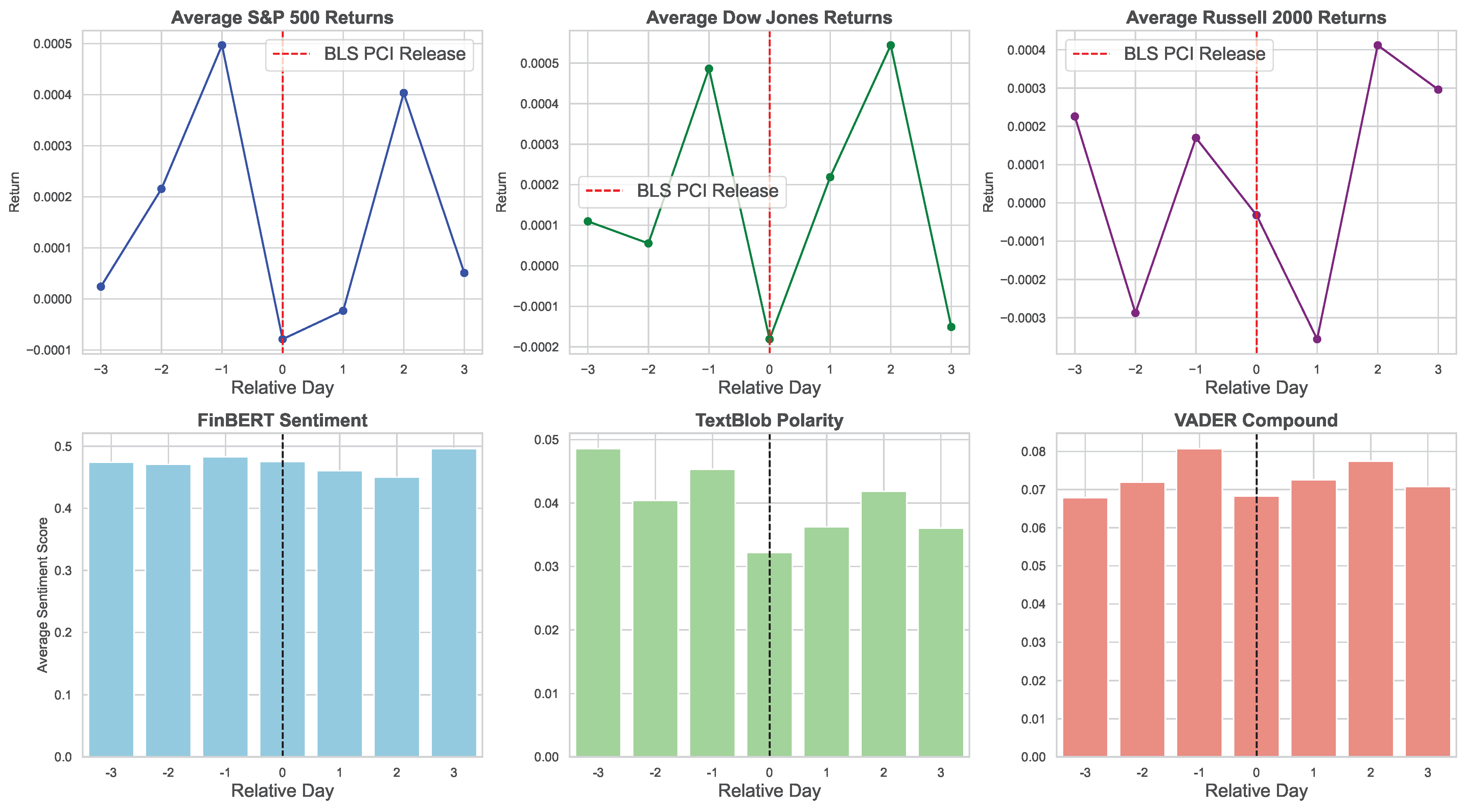

The sentiment scores around BLS PCI release dates (day 0) exhibit relatively stable dynamics within the day window. FinBERT scores fluctuate moderately, beginning near 0.47 at day , peaking at day around 0.48, and dipping slightly around the event day and following days, with a recovery to approximately 0.50 by day 3. Polarity scores remain low throughout, generally ranging between 0.03 and 0.05, with a slight dip on the event day to about 0.03, suggesting limited shifts in overt sentiment polarity. Compound scores display moderate variation, peaking slightly before the release at 0.08 and decreasing slightly at day 0, then stabilizing around 0.07 thereafter. Overall, these patterns suggest that sentiment responses to BLS PCI news releases are subtle and mostly balanced. Comparatively, FinBERT captures slightly more nuanced fluctuations compared to Polarity and Compound scores. Its ability to interpret financial jargon and complex sentence structures likely contributes to capturing these finer variations in market sentiment.

The sentiment scores surrounding BLS Employment Situation release dates (day 0) show moderate stability within the day window. FinBERT scores start near 0.46 at day , gradually decline to about 0.44 at day , then rise slightly on the event day to approximately 0.46. Scores dip sharply on days 1 and 2, though sample sizes there are limited, and recover by day 3 to 0.46. Polarity remains low and relatively stable, fluctuating around 0.04–0.05, with no major deviations on event days. Compound scores vary modestly, peaking at 0.075 just before the event and decreasing slightly on day 0, before rising again by day 3. Similar to the BLS PCI release analysis, these patterns indicate a generally balanced sentiment response to employment news. Nevertheless, FinBERT appears more effective at detecting subtle fluctuations absent in Polarity and Compound scores.

We can also connect the fluctuations in sentiment scores with those of major market indices around these events, as presented in

Figure 6,

Figure 7 and

Figure 8. The FinBERT sentiment score exhibits a distinct U-shaped pattern around FOMC announcements (see

Figure 6), decreasing gradually from three days before to the event day, followed by a sharp recovery in the subsequent days. In contrast, both TextBlob Polarity and VADER Compound scores display a subtle W-shaped trajectory, with mild rises and falls prior to the event and a pronounced decline on the third day after the announcement. Regarding market returns, all three indices experience a sharp increase in the days leading up to the announcement. This is followed by a marked decline immediately after the event. Subsequently, the S&P 500 and Dow Jones recover rapidly, whereas the Russell 2000 shows a more volatile pattern with an initial rebound followed by a renewed decline. These dynamics reflect the evolving investor sentiment and market uncertainty around monetary policy releases, highlighting heterogeneous responses across sentiment models and market segments.

Sentiment and returns exhibit markedly different patterns around the BLS PCI release date (see

Figure 7). FinBERT sentiment remains relatively stable with a slight upward trend, while other sentiment metrics reach their lowest average values on the release day, followed by a volatile recovery. The S&P 500 returns increase significantly up to one day before the announcement, then decline sharply on day 0, before mounting a strong rebound in subsequent days. In contrast, the Dow Jones shows a fragile pre-announcement trend and a sharp drop on the release day, while the Russell 2000 experiences an extended decline on day 1. Both indices display erratic, day-specific recovery patterns thereafter. Accordingly, event-driven impacts vary across market segments and call for careful consideration of index-specific dynamics in event studies.

The sentiment response and corresponding stock market adjustment around the BLS Employment Situation release exhibit distinctive dynamics (see

Figure 8). FinBERT sentiment shows relative stability in the days leading up to the release.

Following the announcement, it experiences a marked two-day decline before rebounding sharply, a pattern mirrored across all sentiment metrics. The other two sentiment scores display a similar pattern, with recovery commencing on the second day after the release. Market returns mirror these trends, differing mainly in the magnitude of changes across indices. The release day triggers a sharp decline in the S&P 500 and Dow Jones, and a more modest drop in the Russell 2000, followed by a pronounced rebound that reverses on the third day post-announcement. These findings highlight the temporal complexity of sentiment and market reactions. They underscore the importance of capturing both immediate and lagged effects in event studies.

Overall, the analysis of sentiment scores and market returns around FOMC, BLS PCI, and BLS Employment releases reveals distinct yet interrelated dynamics. FinBERT sentiment consistently captures more persistent and smoother shifts. This is shown by its U-shaped decline and rebound around FOMC announcements and steady increase near BLS PCI. These patterns contrast with more volatile, short-term fluctuations detected by lexicon-based metrics like TextBlob and VADER. Lexicon scores exhibit pronounced drops on release days, especially for BLS PCI, indicating sensitivity to immediate event-driven noise. FinBERT moderates this through contextual understanding. Market returns reflect heterogeneous responses. Pre-announcement rallies followed by sharp declines on release days are common. Subsequent rebounds vary by index. The Russell 2000 shows greater volatility and delayed recoveries compared to the S&P 500 and Dow Jones. This highlights size- and sector-specific sensitivities.

4.3. Supervised Learning Outcomes: Classification Task

As a preliminary step preceding the classification tasks, we conducted tests for serial autocorrelation and stationarity on the market-state-centered binary target variables. The results, summarized in

Table A4, show that the Ljung–Box test fails to reject the null hypothesis, indicating no significant autocorrelation up to lag 10. Concurrently, the Augmented Dickey-Fuller (ADF) test rejects the null hypothesis of a unit root, confirming that these binary series are stationary. The classification performance using the one-factor model with VADER Compound Score as the sole feature shows moderate predictive power (see

Table A5). The F1 scores for Class 1 (Bullish Market) consistently outperform those for Class 0 (bearish market) across all methods, indicating a relative ease in predicting positive return days. Accuracy values remain close to the 50–53% range, reflecting the challenging nature of the binary market direction prediction task with only a sentiment feature. Notably, ensemble methods such as Stacking and GBM outperform simpler methods like AdaBoost and Bagging, yielding higher F1 scores and slightly better accuracy, particularly for bullish market days. The Russell index generally shows marginally higher accuracy and F1 values, potentially reflecting more discernible sentiment patterns in that index relative to the others.

When replacing VADER with FinBERT sentiment score, classification results improve notably, especially for Class 1 (see

Table A6). F1 scores for bullish market predictions increase consistently across all models and indices, with Stacking and AdaBoost again providing the highest performance. Accuracy is also slightly enhanced, ranging mostly between 49% and 53%. The difference between F1 scores of bullish and bearish classes is narrower here compared to the VADER-based model, suggesting FinBERT’s sentiment captures more balanced signals across market directions. Interestingly, the S&P 500 shows a slight uptick in accuracy compared to VADER, implying FinBERT’s domain-specific NLP capabilities better align with financial text nuances relevant to the broader market. The DJ and Russell indices maintain similar improvements, underscoring FinBERT’s robustness in capturing sentiment features.

Comparatively speaking, FinBERT sentiment features improve classification performance notably compared to VADER’s compound scores, raising accuracy on average by approximately 1.5 to 2 percentage points. Additionally, FinBERT improves bullish class F1 scores by approximately 2 to 7 percentage points, depending on the model and asset. It also reduces the imbalance between bullish and bearish class F1 scores by about 2 to 5 percentage points. Ensemble methods like Stacking and GBM achieve the highest gains in both sentiment feature sets, but their improvements with FinBERT are more pronounced (3–5 percentage points higher in F1 scores). Accuracy values generally hover around 50–53%, which is only slightly better than the 50% baseline expected from random classification in a balanced binary setting. Sentiment alone does not fully explain the complexity of market movements, so the models struggle to achieve high predictive power.

The one-factor model leveraging VADER compound scores as the predictor demonstrates limited but measurable classification ability across the S&P 500 sector subindices (see

Table A7). For all sectors and methods, F1 scores for the bullish market class surpass those of the bearish class, suggesting that upward market movements are relatively easier to predict from VADER sentiment alone. Accuracy scores hover around 50–52%, marginally exceeding random classification, reflecting the difficulty of relying solely on a single sentiment feature. Among the algorithms tested, ensemble approaches such as Stacking and GBM consistently deliver superior results, especially for positive return days. Notably, Materials and Financial sectors tend to yield slightly better results, while Technology lags behind, possibly due to more complex or less directly sentiment-driven price behaviors.

Substituting VADER with FinBERT sentiment scores yields clearer gains in predictive performance across all sectors and classifiers (see

Table A8). The improvements are most marked in the F1 scores for bullish market days, which rise steadily for all models. Stacking and AdaBoost continue to lead in performance, while accuracy metrics, although still moderate (approximately 49–54%), slightly improve in sectors such as Financials and Industrials, indicating FinBERT’s enhanced ability to capture sector-specific sentiment signals. Furthermore, the gap between bullish and bearish class F1 scores narrows, signifying a more balanced prediction capability across classes when using FinBERT, with notable increases in bearish class performance for Energy and Industrials sectors.

In summary, FinBERT-based sentiment consistently enhances classification effectiveness compared to VADER across S&P 500 subindices. Bullish class F1 scores increase by roughly 3 to 7 percentage points depending on the sector and model, while the imbalance between class performances decreases by about 2 to 6 points, indicating a more equitable model output. Ensemble methods again provide the best predictive power, and their advantage is more prominent when paired with FinBERT features. However, overall accuracy remains close to the random baseline, underscoring the challenge of predicting market direction with single-factor sentiment models. These findings emphasize the need for more comprehensive feature sets to capture the multifaceted drivers of market movements.

In the light of our previous findings, we explore the predictive accuracy based on the FinBERT unrestricted model (see

Table 2). This classification report reveals consistently strong performance across all major market indices and subindices. Specifically, F1 scores generally range from the mid-0.60 s to low 0.70 s and accuracy levels consistently around 62 to 72 percentage points. Ensemble methods like Stacking and GBM outperform individual models such as XGBoost and Random Forest. This shows that combining predictions helps capture complex relationships among VIX, volume, Treasury yield, and FinBERT sentiment. The bullish market class (Class 1) has slightly higher F1 scores than the bearish class (Class 0), indicating the model detects positive returns more effectively. This aligns with earlier findings that bullish signals are often more identifiable.

Performance across sector subindices is fairly consistent, with minor differences likely due to sector-specific sentiment strength and market behavior. Balanced accuracy values above 60% demonstrate predictive power well beyond random chance. The average gap in F1 scores between the bullish (Class 1) and bearish (Class 0) classes across models and assets is roughly 3 to 4 percentage points. This indicates a moderate imbalance, where the model predicts bullish market days somewhat better than bearish ones.

Comparing the FinBERT-based unrestricted model to the VADER-based model (see

Table A9) reveals modest yet consistent improvements. FinBERT generally produces higher F1 scores for the bullish market class, exceeding VADER by approximately 1 to 3 percentage points. Balanced accuracy values for both models range between 62% and 72%, indicating solid but not flawless predictive capability. The narrower gap between bullish and bearish F1 scores in the FinBERT model suggests improved balance in classification performance. Sector-level results show stable patterns with minor variations reflecting differences in sentiment strength and market volatility. Both models deliver classification accuracy well above the 50% random baseline, yet neither achieves near-perfect prediction. This highlights the complexity and inherent noise of forecasting financial markets using sentiment as the primary feature.

To explore the marginal contribution of sentiment inthese classification tasks, we have derived the difference in F1 scores and balanced accuracy between unrestricted models (VADER and FinBERT-centered, respectively) and the restricted ones (excluding news sentiment as a feature). The estimated results indicate that the inclusion of VADER sentiment (see

Table A10) yields small but generally positive improvements in F1 scores and accuracy across several assets and models, with the most notable gains observed for XGBoost and Random Forest in indices like S&P 500, Dow Jones, and Russell. However, improvements are inconsistent for other methods such as AdaBoost, GBM, and Stacking, where some differences are negative or negligible, particularly across certain sectors like Energy and Industrials. The mixed performance suggests that while VADER sentiment adds some predictive value, its contribution is modest and model-dependent. This underscores the limited incremental power of generic sentiment features in complex market state prediction tasks.

The classification results when sentiment is proxied by FinBERT score (see

Table A11) also reveal mixed effects: certain models like XGBoost show consistent improvements in F1 scores and accuracy across several indices, particularly S&P 500, Dow Jones, and Russell, with gains often between 1 and 5 percentage points. However, other models, including AdaBoost, GBM, and Stacking, exhibit mostly marginal or even negative differences, especially in sectors such as S&P Financial, Energy, and Industrials. These findings suggest that while FinBERT sentiment can enhance market state predictions in some contexts, its benefit is uneven across models and sectors. Comparatively, VADER generally provides more consistent positive improvements across models and sectors, with several gains exceeding 2–4 percentage points in F1 and accuracy metrics. In contrast, FinBERT’s impact is more variable, showing strong improvements mainly for XGBoost but frequent marginal or negative effects for other ensemble methods. Thus, while FinBERT’s domain-specific embeddings offer richer sentiment information, their integration into complex models may require further tuning. We observe consistent results when lagged sentiment scores are included as predictive features.

These findings offer actionable insights for portfolio managers and financial analysts aiming to enhance predictive models. FinBERT-based sentiment improves classification performance, especially for bullish market states, aiding short-term positioning and tactical trades. Ensemble models like GBM and Stacking benefit the most, suggesting that advanced algorithms better capture subtle sentiment patterns. Sector-specific gains, particularly in Financials and Materials sectors, indicate that sentiment tools may be more effective in news-sensitive industries. However, accuracy levels remain modest, and sentiment signals alone do not suffice for reliable forecasting. Thus, analysts should treat sentiment as a complementary input, best used alongside structural indicators like VIX, volume, and interest rates, etc.

4.4. Demystifying Classification Bias

This section initially addresses the classification bias expressed through constantly higher F1 score for Class 1 (Bullish state), despite the application of smoothing and model calibration. A closer look at the one-factor (FinBERT) model (see

Table A12) indicate still consistently higher predictive performance for bullish market days compared to bearish ones, which indicates a clear classification bias. More formally, the models favor identifying bullish market conditions with roughly 6 to 7 percentage points better F1 scores on average. This bias is most pronounced in certain sectoral assets (such as Financials, Industrials, and Tech sectors), where the F1 gap reaches up to 0.09 for certain models like Bagging and RandomForest. Conversely, lower differences in the Russell and Materials indices (around 0.00 to 0.03 for some models) imply more balanced class predictions or perhaps less distinctive sentiment signals for bearish states in those indices.