High-Definition Map Representation Techniques for Automated Vehicles

Abstract

1. Introduction

- This paper describes and compares different map representation approaches and their applications, such as highly/moderately simplified map representations, which are primarily used in the robotics domain.

- We provide a detailed literature review of HD maps for automated vehicles, as well as the structure of their various layers and the information contained within them, based on different companies’ definitions of an HD map.

- We discuss the current limitations and challenges of HD maps, such as data storage and map update routines, as well as future research directions.

2. Real-Time (Online) Mapping

Simultaneous Localization and Mapping (SLAM)

3. Highly/Moderately Simplified Map Representations

3.1. Topological Maps

3.2. Metric Maps

3.2.1. Landmark-Based Maps

3.2.2. Occupancy Grid Maps

- Octree: The octree encoding [107] is a hierarchical 3D tree structure in which each node is recursively subdivided into eight children (octants), so that objects of arbitrary shape can be represented at any resolution. Because the memory required for representation and manipulation is proportional to the surface area of the object rather than its volume, the octree is commonly employed in systems that must store 3D data efficiently [71,72,73,75,76,77,108,109,110].

- Costmap: A costmap represents how difficult it is to traverse each area of the map. Its values are computed by combining the static map, local obstacle information, and an inflation layer that spreads cost outward from obstacles. It takes the form of an occupancy grid whose values are abstract costs rather than measurements of the environment. Costmaps are mostly used in path planning [111,112,113,114].
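The octree bullet above attributes the structure's efficiency to memory that grows with an object's surface area rather than its volume. The following is a minimal sketch of that idea, not OctoMap's or Meagher's actual encoding: a pointer-based octree that creates children lazily and marks only occupied leaf cells at a fixed maximum depth. The class and method names are hypothetical, and unlike OctoMap it stores no occupancy probabilities.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


@dataclass
class OctreeNode:
    center: Point
    half_size: float
    # Children are created lazily, only for octants that actually receive data.
    children: List[Optional["OctreeNode"]] = field(default_factory=lambda: [None] * 8)
    occupied: bool = False


class Octree:
    """Hypothetical octree that marks occupied leaf cells at a fixed maximum depth."""

    def __init__(self, center: Point, half_size: float, max_depth: int):
        self.root = OctreeNode(center, half_size)
        self.max_depth = max_depth  # leaf edge length = 2 * half_size / 2**max_depth

    @staticmethod
    def _octant(node: OctreeNode, p: Point) -> int:
        # Bit i is set when p lies on the positive side of the node center along axis i.
        return sum(1 << i for i in range(3) if p[i] >= node.center[i])

    @staticmethod
    def _child(node: OctreeNode, idx: int) -> OctreeNode:
        q = node.half_size / 2
        center = tuple(node.center[i] + (q if (idx >> i) & 1 else -q) for i in range(3))
        return OctreeNode(center, q)

    def _inside(self, p: Point) -> bool:
        r = self.root
        return all(abs(p[i] - r.center[i]) <= r.half_size for i in range(3))

    def insert(self, p: Point) -> None:
        if not self._inside(p):
            raise ValueError("point lies outside the octree bounds")
        node = self.root
        for _ in range(self.max_depth):
            idx = self._octant(node, p)
            if node.children[idx] is None:
                node.children[idx] = self._child(node, idx)
            node = node.children[idx]
        node.occupied = True

    def is_occupied(self, p: Point) -> bool:
        if not self._inside(p):
            return False
        node = self.root
        for _ in range(self.max_depth):
            node = node.children[self._octant(node, p)]
            if node is None:  # no point was ever inserted into this octant
                return False
        return node.occupied

    def node_count(self) -> int:
        count, stack = 0, [self.root]
        while stack:
            node = stack.pop()
            count += 1
            stack.extend(c for c in node.children if c is not None)
        return count


# A flat 16 m x 16 m "road surface" stored with 1 m leaves inside a 16 m cube.
tree = Octree((0.0, 0.0, 0.0), half_size=8.0, max_depth=4)
for x in range(-8, 8):
    for y in range(-8, 8):
        tree.insert((x + 0.5, y + 0.5, 0.5))
print(tree.node_count())  # 341 nodes, versus 16**3 = 4096 cells in a dense grid
```

Only the cells intersecting the surface (and their ancestors) are ever allocated, which is the surface-versus-volume behavior the bullet describes.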
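The costmap bullet above describes merging a static map with local obstacle information and then applying an inflation layer. A minimal sketch under stated assumptions: the cost constants and the exponential decay are modelled loosely on the conventions of ROS costmap_2d's inflation layer, while the function names, parameters, and brute-force distance search are hypothetical simplifications chosen for readability.

```python
import math

# Cost conventions borrowed from ROS costmap_2d (0 = free, 253 = inscribed, 254 = lethal).
FREE, INSCRIBED, LETHAL = 0, 253, 254


def combine_obstacles(static_map, local_obstacles):
    """A cell is an obstacle if either the static layer or the live sensor layer marks it."""
    return [[s or o for s, o in zip(srow, orow)]
            for srow, orow in zip(static_map, local_obstacles)]


def inflate(obstacles, resolution, inscribed_radius, inflation_radius, scaling=3.0):
    """Turn a boolean obstacle grid into a cost grid.

    Cost decays exponentially with the metric distance to the nearest obstacle.
    Distances are found by brute force here; a real implementation would use a
    distance transform or a wavefront expansion from the obstacle cells.
    """
    rows, cols = len(obstacles), len(obstacles[0])
    lethal = [(r, c) for r in range(rows) for c in range(cols) if obstacles[r][c]]
    cost = [[FREE] * cols for _ in range(rows)]
    if not lethal:
        return cost
    for r in range(rows):
        for c in range(cols):
            d = resolution * min(math.hypot(r - lr, c - lc) for lr, lc in lethal)
            if d == 0:
                cost[r][c] = LETHAL
            elif d <= inscribed_radius:
                # The vehicle footprint would overlap an obstacle here.
                cost[r][c] = INSCRIBED
            elif d <= inflation_radius:
                cost[r][c] = int((INSCRIBED - 1) * math.exp(-scaling * (d - inscribed_radius)))
    return cost


static_layer = [[True, False, False, False, False, False, False]]
sensor_layer = [[False] * 7]
grid = inflate(combine_obstacles(static_layer, sensor_layer), 0.1, 0.1, 0.4)
print(grid)
```

With a 0.1 m grid, a 0.1 m inscribed radius, and a 0.4 m inflation radius, the cost falls from lethal at the obstacle to zero within a few cells. The values are abstract costs used by the planner, not measurements, which matches the distinction drawn in the bullet.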

3.3. Geometric Maps

4. High-Accuracy Map Representations

4.1. Digital Maps

4.2. Enhanced Digital Maps

- Road curvature;

- Gradient (slope) of the roads;

- Curvature (sharpness) at junctions;

- Lane markings at junctions;

- Traffic signs;

- Speed restrictions (necessary for adaptive cruise control).
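Several of the attributes listed above, such as road curvature and gradient, are geometric quantities that can be derived from the road centerline. A minimal sketch, assuming the centerline is available as a polyline of metric (x, y, z) vertices: curvature at each interior vertex is estimated with the three-point (Menger) curvature, and the gradient is rise over horizontal run between consecutive vertices. This is one common approximation chosen for illustration; the source does not say how enhanced digital maps compute these attributes, and all function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

XY = Tuple[float, float]
XYZ = Tuple[float, float, float]


def menger_curvature(p1: XY, p2: XY, p3: XY) -> float:
    """Curvature (1/m) of the circle through three points; 0.0 if they are collinear."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    cross = (x2 - x1) * (y3 - y1) - (y2 - y1) * (x3 - x1)  # twice the triangle's signed area
    a, b, c = math.dist(p1, p2), math.dist(p2, p3), math.dist(p1, p3)
    if a * b * c == 0.0:
        return 0.0  # repeated vertices: curvature undefined, treated as straight
    return 2.0 * abs(cross) / (a * b * c)


def polyline_curvatures(points: Sequence[XY]) -> List[float]:
    """Curvature at every interior vertex of a centerline polyline."""
    return [menger_curvature(p, q, r) for p, q, r in zip(points, points[1:], points[2:])]


def polyline_gradients(points: Sequence[XYZ]) -> List[float]:
    """Longitudinal gradient (slope) in percent between consecutive 3D vertices.

    Assumes consecutive vertices have distinct horizontal positions.
    """
    grades = []
    for (x1, y1, z1), (x2, y2, z2) in zip(points, points[1:]):
        run = math.hypot(x2 - x1, y2 - y1)  # horizontal distance
        grades.append(100.0 * (z2 - z1) / run)
    return grades
```

For three vertices sampled from a circular bend of radius 100 m, `menger_curvature` returns approximately 0.01 m⁻¹, and a 5 m climb over 100 m of horizontal distance gives a 5% gradient.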

4.3. High-Definition (HD) Maps

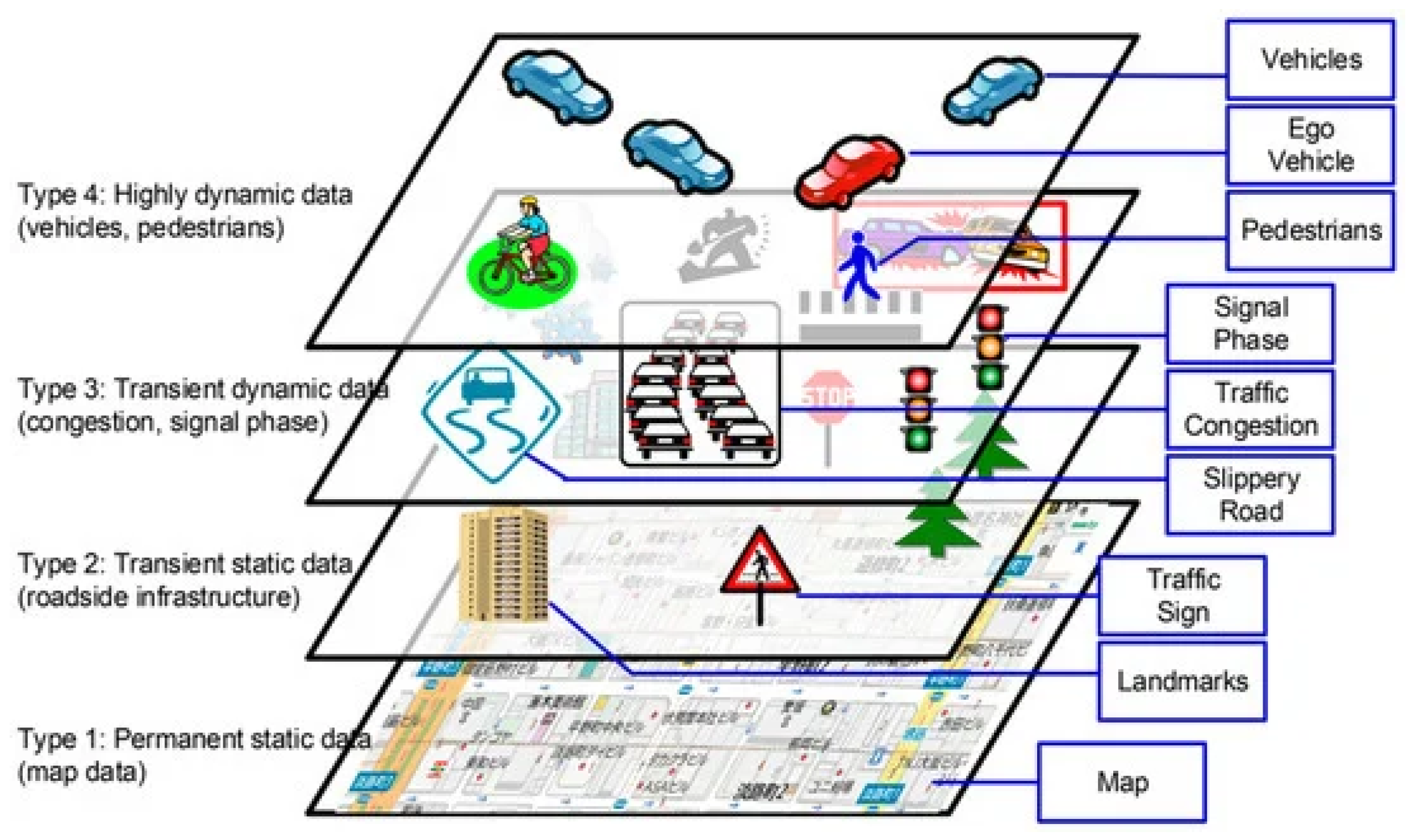

- Base map layer: The entire HD map is layered on top of a standard street map.

- Geometric map layer: The geometric layer in Lyft’s maps contains a 3D representation of the surrounding road network. This representation is a voxel map with 5 cm × 5 cm × 5 cm voxels, built from LiDAR and camera data. Voxels are a cheaper alternative to point clouds in terms of required storage.

- Semantic map layer: The semantic map layer contains all semantic data, such as lane marker placements, travel directions, and traffic sign locations [23,134,135]. Within the semantic layer, there are three major sublayers:

  - Road-graph layer;

  - Lane-geometry layer;

  - Semantic features, which include all objects relevant to the driving task, such as traffic lights, pedestrian crossings, and road signs.

- Map priors layer: This layer complements the semantic layer with data learned from experience (crowd-sourced data), such as the average time a traffic light takes to turn green or the likelihood of encountering parked vehicles along a narrow road. These priors allow the AV to raise its “caution” while driving.

- Real-time knowledge layer: This is the only layer designed to be updated in real time, to reflect changing conditions such as traffic congestion, accidents, and road work.

- Lane positions and widths: The 2D positions of lane markings, along with the marking type (solid line, dashed line, etc.); lane widths follow from the spacing between adjacent markings. Lane markings may also indicate intersections, road edges, and off-ramps.

- Road sign positions: The 3D positions of road signage, including stop signs, traffic lights, give-way signs, one-way signs, and other traffic signs. Mapping signage is especially challenging because signage conventions and road rules vary by country.

- Special road features: Features such as pedestrian crossings, school zones, speed bumps, bicycle lanes, and bus lanes.

- Occupancy map: A spatial 3D representation of the road and all physical objects around it. This representation can be stored as a mesh, a point cloud, or voxels. The 3D model is essential for localizing the AV on the map with centimeter-level accuracy.
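The geometric map layer and the occupancy map described above both store 3D structure as voxels, which is where the storage saving over raw point clouds comes from: every point inside a voxel is replaced by a single representative. A minimal voxel-grid downsampling sketch follows, assuming the 5 cm edge length quoted for Lyft's geometric layer; keeping the centroid as the representative, the function names, and the synthetic scan are all assumptions, since the source does not describe the actual encoding.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float]
VoxelKey = Tuple[int, int, int]

VOXEL_SIZE_M = 0.05  # 5 cm edge length, as quoted for Lyft's geometric layer


def voxel_key(p: Point, size: float = VOXEL_SIZE_M) -> VoxelKey:
    """Integer index of the cubic voxel that contains p."""
    return (math.floor(p[0] / size), math.floor(p[1] / size), math.floor(p[2] / size))


def voxelize(points: Iterable[Point], size: float = VOXEL_SIZE_M) -> Dict[VoxelKey, Point]:
    """Collapse all points that fall in the same voxel into their centroid."""
    acc = defaultdict(lambda: [0.0, 0.0, 0.0, 0])  # running x, y, z sums and point count
    for x, y, z in points:
        s = acc[voxel_key((x, y, z), size)]
        s[0] += x
        s[1] += y
        s[2] += z
        s[3] += 1
    return {k: (sx / n, sy / n, sz / n) for k, (sx, sy, sz, n) in acc.items()}


# Hypothetical scan: a flat 1 m x 1 m patch sampled every 1 cm (10,000 points).
scan = [((i + 0.5) * 0.01, (j + 0.5) * 0.01, 0.02) for i in range(100) for j in range(100)]
voxels = voxelize(scan)
print(len(scan), "points ->", len(voxels), "voxels")  # 10000 points -> 400 voxels
```

Here each 5 cm voxel absorbs 25 points, so the map keeps 1/25 of the entries while preserving the surface at the chosen resolution; denser scans give proportionally larger savings.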
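The map priors layer described above holds statistics learned from fleet experience, such as how long a traffic light usually stays red or how often parked vehicles appear on a narrow road. A minimal aggregation sketch under loudly stated assumptions: the schema, the identifiers, and the Beta-prior smoothing of the parked-vehicle frequency are hypothetical illustrations, since the source only says that this layer contains crowd-sourced data learned via experience.

```python
from collections import defaultdict
from typing import Dict, Optional


class MapPriors:
    """Aggregates crowd-sourced fleet observations into per-element priors (hypothetical schema)."""

    def __init__(self) -> None:
        self._wait_sum: Dict[str, float] = defaultdict(float)
        self._wait_count: Dict[str, int] = defaultdict(int)
        self._parked_seen: Dict[str, int] = defaultdict(int)
        self._passes: Dict[str, int] = defaultdict(int)

    def observe_light(self, light_id: str, red_to_green_s: float) -> None:
        """Record how long one vehicle waited at a red light before it turned green."""
        self._wait_sum[light_id] += red_to_green_s
        self._wait_count[light_id] += 1

    def observe_segment(self, segment_id: str, parked_vehicle_seen: bool) -> None:
        """Record one pass along a road segment and whether a parked vehicle was seen."""
        self._passes[segment_id] += 1
        self._parked_seen[segment_id] += int(parked_vehicle_seen)

    def mean_wait(self, light_id: str) -> Optional[float]:
        """Average red-to-green wait in seconds, or None if the light was never observed."""
        n = self._wait_count.get(light_id, 0)
        return self._wait_sum[light_id] / n if n else None

    def parked_probability(self, segment_id: str, alpha: float = 1.0, beta: float = 1.0) -> float:
        """Posterior mean of P(parked vehicle) under a Beta(alpha, beta) prior.

        With no observations this falls back to alpha / (alpha + beta), i.e. 0.5 by default.
        """
        hits = self._parked_seen.get(segment_id, 0)
        passes = self._passes.get(segment_id, 0)
        return (hits + alpha) / (passes + alpha + beta)
```

A planner could, for example, lower its target speed on segments whose parked-vehicle probability is high; how the caution level is actually raised is outside the scope of this sketch.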

5. Localization in HD Maps

6. Limitations and Challenges

7. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Badue, C.; Guidolini, R.; Carneiro, R.V.; Azevedo, P.; Cardoso, V.B.; Forechi, A.; Jesus, L.; Berriel, R.; Paixao, T.M.; Mutz, F.; et al. Self-driving cars: A survey. Expert Syst. Appl. 2021, 165, 113816. [Google Scholar] [CrossRef]

- Phan-Minh, T.; Grigore, E.C.; Boulton, F.A.; Beijbom, O.; Wolff, E.M. Covernet: Multimodal behavior prediction using trajectory sets. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 14074–14083. [Google Scholar]

- Rhinehart, N.; McAllister, R.; Kitani, K.; Levine, S. Precog: Prediction conditioned on goals in visual multi-agent settings. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 2821–2830. [Google Scholar]

- Toghi, B.; Grover, D.; Razzaghpour, M.; Jain, R.; Valiente, R.; Zaman, M.; Shah, G.; Fallah, Y.P. A Maneuver-based Urban Driving Dataset and Model for Cooperative Vehicle Applications. In Proceedings of the 2020 IEEE 3rd Connected and Automated Vehicles Symposium (CAVS), Victoria, BC, Canada, 18 November–16 December 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Mahjoub, H.N.; Raftari, A.; Valiente, R.; Fallah, Y.P.; Mahmud, S.K. Representing Realistic Human Driver Behaviors using a Finite Size Gaussian Process Kernel Bank. In Proceedings of the 2019 IEEE Vehicular Networking Conference (VNC), Los Angeles, CA, USA, 4–6 December 2019; pp. 1–8. [Google Scholar]

- Ma, Y.; Zhu, X.; Zhang, S.; Yang, R.; Wang, W.; Manocha, D. TrafficPredict: Trajectory Prediction for Heterogeneous Traffic-Agents. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 6120–6127. [Google Scholar] [CrossRef]

- Laconte, J.; Kasmi, A.; Aufrère, R.; Vaidis, M.; Chapuis, R. A Survey of Localization Methods for Autonomous Vehicles in Highway Scenarios. Sensors 2022, 22, 247. [Google Scholar] [CrossRef] [PubMed]

- Wang, L.; Zhang, Y.; Wang, J. Map-based localization method for autonomous vehicles using 3D-LIDAR. IFAC-PapersOnLine 2017, 50, 276–281. [Google Scholar] [CrossRef]

- Javanmardi, E.; Javanmardi, M.; Gu, Y.; Kamijo, S. Factors to Evaluate Capability of Map for Vehicle Localization. IEEE Access 2018, 6, 49850–49867. [Google Scholar] [CrossRef]

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A Multimodal Dataset for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020. [Google Scholar]

- Liu, L.; Wu, T.; Fang, Y.; Hu, T.; Song, J. A smart map representation for autonomous vehicle navigation. In Proceedings of the 2015 12th International Conference on Fuzzy Systems and Knowledge Discovery (FSKD), Zhangjiajie, China, 15–17 August 2015; pp. 2308–2313. [Google Scholar] [CrossRef]

- Razzaghpour, M.; Mosharafian, S.; Raftari, A.; Velni, J.M.; Fallah, Y.P. Impact of Information Flow Topology on Safety of Tightly-coupled Connected and Automated Vehicle Platoons Utilizing Stochastic Control. In Proceedings of the 2022 European Control Conference (ECC), London, UK, 11–14 July 2022; pp. 27–33. [Google Scholar] [CrossRef]

- Valiente, R.; Raftari, A.; Zaman, M.; Fallah, Y.P.; Mahmud, S. Dynamic Object Map Based Architecture for Robust CVS Systems. Technical Report, SAE Technical Paper. 2020. Available online: https://www.sae.org/publications/technical-papers/content/2020-01-0084/ (accessed on 10 August 2022). [CrossRef]

- Leurent, E. A Survey of State-Action Representations for Autonomous Driving. Working Paper or Preprint. 2018. Available online: https://hal.archives-ouvertes.fr/hal-01908175/document (accessed on 10 August 2022).

- Jami, A.; Razzaghpour, M.; Alnuweiri, H.; Fallah, Y.P. Augmented Driver Behavior Models for High-Fidelity Simulation Study of Crash Detection Algorithms. arXiv 2022, arXiv:2208.05540. [Google Scholar] [CrossRef]

- Pannen, D.; Liebner, M.; Hempel, W.; Burgard, W. How to Keep HD Maps for Automated Driving Up To Date. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 2288–2294. [Google Scholar] [CrossRef]

- Petrovskaya, A.; Thrun, S. Model based vehicle detection and tracking for autonomous urban driving. Auton. Robot. 2009, 26, 123–139. [Google Scholar] [CrossRef]

- Ilci, V.; Toth, C. High Definition 3D Map Creation Using GNSS/IMU/LiDAR Sensor Integration to Support Autonomous Vehicle Navigation. Sensors 2020, 20, 899. [Google Scholar] [CrossRef]

- Zheng, L.; Li, B.; Zhang, H.; Shan, Y.; Zhou, J. A High-Definition Road-Network Model for Self-Driving Vehicles. ISPRS Int. J.-Geo-Inf. 2018, 7, 417. [Google Scholar] [CrossRef]

- Bauer, S.; Alkhorshid, Y.; Wanielik, G. Using High-Definition maps for precise urban vehicle localization. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 492–497. [Google Scholar] [CrossRef]

- Casas, S.; Sadat, A.; Urtasun, R. MP3: A Unified Model To Map, Perceive, Predict and Plan. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 14403–14412. [Google Scholar]

- Phillips, J.; Martinez, J.; Barsan, I.A.; Casas, S.; Sadat, A.; Urtasun, R. Deep Multi-Task Learning for Joint Localization, Perception, and Prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 4679–4689. [Google Scholar]

- Sadat, A.; Casas, S.; Ren, M.; Wu, X.; Dhawan, P.; Urtasun, R. Perceive, Predict, and Plan: Safe Motion Planning Through Interpretable Semantic Representations. In Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 414–430. [Google Scholar]

- Luo, W.; Yang, B.; Urtasun, R. Fast and Furious: Real Time End-to-End 3D Detection, Tracking and Motion Forecasting With a Single Convolutional Net. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Liang, M.; Yang, B.; Zeng, W.; Chen, Y.; Hu, R.; Casas, S.; Urtasun, R. PnPNet: End-to-End Perception and Prediction With Tracking in the Loop. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020. [Google Scholar]

- Zhang, J.; Ohn-Bar, E. Learning by watching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 12711–12721. [Google Scholar]

- Valiente, R.; Zaman, M.; Ozer, S.; Fallah, Y.P. Controlling steering angle for cooperative self-driving vehicles utilizing cnn and lstm-based deep networks. In Proceedings of the 2019 IEEE Intelligent Vehicles symposium (IV), Paris, France, 9–12 June 2019; pp. 2423–2428. [Google Scholar]

- Natan, O.; Miura, J. Fully End-to-end Autonomous Driving with Semantic Depth Cloud Mapping and Multi-Agent. arXiv 2022, arXiv:2204.05513. [Google Scholar]

- Valiente, R.; Toghi, B.; Pedarsani, R.; Fallah, Y.P. Robustness and Adaptability of Reinforcement Learning-Based Cooperative Autonomous Driving in Mixed-Autonomy Traffic. IEEE Open J. Intell. Transp. Syst. 2022, 3, 397–410. [Google Scholar] [CrossRef]

- Pomerleau, D.A. Alvinn: An Autonomous Land Vehicle in a Neural Network. In Advances in Neural Information Processing Systems; Morgan Kaufmann: Denver, CO, USA, 1989. [Google Scholar]

- Eraqi, H.M.; Moustafa, M.N.; Honer, J. End-to-End Deep Learning for Steering Autonomous Vehicles Considering Temporal Dependencies. arXiv 2017, arXiv:1710.03804. [Google Scholar]

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef] [PubMed]

- Du, S.; Guo, H.; Simpson, A. Self-Driving Car Steering Angle Prediction Based on Image Recognition. arXiv 2019, arXiv:1912.05440. [Google Scholar]

- Dirdal, J. End-to-End Learning and Sensor Fusion with Deep Convolutional Networks for Steering an Off-Road Unmanned Ground Vehicle. Ph.D. Thesis, NTNU, Trondheim, Norway, 2018. [Google Scholar]

- Yu, H.; Yang, S.; Gu, W.; Zhang, S. Baidu driving dataset and end-To-end reactive control model. In Proceedings of the IEEE Intelligent Vehicles Symposium, Los Angeles, CA, USA, 11–14 June 2017. [Google Scholar] [CrossRef]

- Cui, A.; Casas, S.; Sadat, A.; Liao, R.; Urtasun, R. LookOut: Diverse Multi-Future Prediction and Planning for Self-Driving. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 16107–16116. [Google Scholar]

- Casas, S.; Luo, W.; Urtasun, R. Intentnet: Learning to predict intention from raw sensor data. In Proceedings of the Conference on Robot Learning, PMLR, Zürich, Switzerland, 29–31 October 2018; pp. 947–956. [Google Scholar]

- Toghi, B.; Valiente, R.; Pedarsani, R.; Fallah, Y.P. Towards Learning Generalizable Driving Policies from Restricted Latent Representations. arXiv 2021, arXiv:2111.03688. [Google Scholar]

- Yang, B.; Liang, M.; Urtasun, R. HDNET: Exploiting HD Maps for 3D Object Detection. In Proceedings of the Conference on Robot Learning, Zürich, Switzerland, 29–31 October 2018; Volume 87, pp. 146–155. [Google Scholar]

- Bansal, M.; Krizhevsky, A.; Ogale, A. Chauffeurnet: Learning to drive by imitating the best and synthesizing the worst. arXiv 2018, arXiv:1812.03079. [Google Scholar]

- Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-Time Single Camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067. [Google Scholar] [CrossRef]

- Xu, Z.; Deng, D.; Shimada, K. Autonomous UAV exploration of dynamic environments via incremental sampling and probabilistic roadmap. IEEE Robot. Autom. Lett. 2021, 6, 2729–2736. [Google Scholar] [CrossRef]

- Biswas, R.; Limketkai, B.; Sanner, S.; Thrun, S. Towards object mapping in non-stationary environments with mobile robots. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Lausanne, Switzerland, 30 September–4 October 2002; Volume 1, pp. 1014–1019. [Google Scholar]

- Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110. [Google Scholar] [CrossRef]

- Bailey, T.; Durrant-Whyte, H. Simultaneous localization and mapping (SLAM): Part II. IEEE Robot. Autom. Mag. 2006, 13, 108–117. [Google Scholar] [CrossRef]

- Bresson, G.; Alsayed, Z.; Yu, L.; Glaser, S. Simultaneous Localization and Mapping: A Survey of Current Trends in Autonomous Driving. IEEE Trans. Intell. Veh. 2017, 2, 194–220. [Google Scholar] [CrossRef]

- Lamon, P.; Stachniss, C.; Triebel, R.; Pfaff, P.; Plagemann, C.; Grisetti, G.; Kolski, S.; Burgard, W.; Siegwart, R. Mapping with an autonomous car. In Proceedings of the Workshop on Safe Navigation in Open and Dynamic Environments (IROS), Zürich, Switzerland, 2006. [Google Scholar]

- Ort, T.; Paull, L.; Rus, D. Autonomous Vehicle Navigation in Rural Environments Without Detailed Prior Maps. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 2040–2047. [Google Scholar] [CrossRef]

- Aldibaja, M.; Suganuma, N. Graph SLAM-Based 2.5 D LIDAR Mapping Module for Autonomous Vehicles. Remote Sens. 2021, 13, 5066. [Google Scholar] [CrossRef]

- Lluvia, I.; Lazkano, E.; Ansuategi, A. Active mapping and robot exploration: A survey. Sensors 2021, 21, 2445. [Google Scholar] [CrossRef] [PubMed]

- Cartographer. Available online: https://github.com/cartographer-project/cartographer (accessed on 10 August 2022).

- Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-Time Loop Closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278. [Google Scholar]

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163. [Google Scholar] [CrossRef]

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262. [Google Scholar] [CrossRef]

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890. [Google Scholar] [CrossRef]

- Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22. [Google Scholar] [CrossRef]

- Sucar, E.; Liu, S.; Ortiz, J.; Davison, A.J. iMAP: Implicit Mapping and Positioning in Real-Time. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 6229–6238. [Google Scholar]

- Yamauchi, B. A frontier-based approach for autonomous exploration. In Proceedings of the 1997 IEEE International Symposium on Computational Intelligence in Robotics and Automation CIRA’97 ‘Towards New Computational Principles for Robotics and Automation’, Monterey, CA, USA, 10–11 July 1997; pp. 146–151. [Google Scholar]

- Stachniss, C.; Hahnel, D.; Burgard, W. Exploration with active loop-closing for FastSLAM. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE Cat. No. 04CH37566), Sendai, Japan, 28 September–2 October 2004; Volume 2, pp. 1505–1510. [Google Scholar] [CrossRef]

- Stachniss, C.; Grisetti, G.; Burgard, W. Information Gain-based Exploration Using Rao-Blackwellized Particle Filters. In Robotics: Science and Systems; MIT Press: Cambridge, MA, USA, 2005; Volume 2, pp. 65–72. [Google Scholar]

- Maurović, I.; Đakulović, M.; Petrović, I. Autonomous exploration of large unknown indoor environments for dense 3D model building. IFAC Proc. Vol. 2014, 47, 10188–10193. [Google Scholar] [CrossRef]

- Carlone, L.; Du, J.; Kaouk Ng, M.; Bona, B.; Indri, M. Active SLAM and exploration with particle filters using Kullback–Leibler divergence. J. Intell. Robot. Syst. 2014, 75, 291–311. [Google Scholar] [CrossRef]

- Trivun, D.; Šalaka, E.; Osmanković, D.; Velagić, J.; Osmić, N. Active SLAM-based algorithm for autonomous exploration with mobile robot. In Proceedings of the 2015 IEEE International Conference on Industrial Technology (ICIT), Seville, Spain, 17–19 March 2015; pp. 74–79. [Google Scholar]

- Umari, H.; Mukhopadhyay, S. Autonomous robotic exploration based on multiple rapidly-exploring randomized trees. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1396–1402. [Google Scholar] [CrossRef]

- Valencia, R.; Andrade-Cetto, J. Active pose SLAM. In Mapping, Planning and Exploration with Pose SLAM; Springer: Cham, Switzerland, 2018; pp. 89–108. [Google Scholar]

- Sodhi, P.; Ho, B.J.; Kaess, M. Online and consistent occupancy grid mapping for planning in unknown environments. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 7879–7886. [Google Scholar]

- Davison, A.J. Real-time simultaneous localisation and mapping with a single camera. In Proceedings of the Ninth IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; Volume 3, p. 1403. [Google Scholar]

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 834–849. [Google Scholar]

- Bourgault, F.; Makarenko, A.A.; Williams, S.B.; Grocholsky, B.; Durrant-Whyte, H.F. Information based adaptive robotic exploration. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Lausanne, Switzerland, 30 September–4 October 2002; Volume 1, pp. 540–545. [Google Scholar] [CrossRef]

- Bircher, A.; Kamel, M.; Alexis, K.; Oleynikova, H.; Siegwart, R. Receding horizon “next-best-view” planner for 3d exploration. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1462–1468. [Google Scholar]

- Zhu, C.; Ding, R.; Lin, M.; Wu, Y. A 3d frontier-based exploration tool for mavs. In Proceedings of the 2015 IEEE 27th International Conference on Tools with Artificial Intelligence (ICTAI), Vietri sul Mare, Italy, 9–11 November 2015; pp. 348–352. [Google Scholar] [CrossRef]

- Bircher, A.; Kamel, M.; Alexis, K.; Oleynikova, H.; Siegwart, R. Receding horizon path planning for 3D exploration and surface inspection. Auton. Robot. 2018, 42, 291–306. [Google Scholar] [CrossRef]

- Selin, M.; Tiger, M.; Duberg, D.; Heintz, F.; Jensfelt, P. Efficient Autonomous Exploration Planning of Large-Scale 3-D Environments. IEEE Robot. Autom. Lett. 2019, 4, 1699–1706. [Google Scholar] [CrossRef]

- Senarathne, P.; Wang, D. Towards autonomous 3D exploration using surface frontiers. In Proceedings of the 2016 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Lausanne, Switzerland, 23–27 October 2016; pp. 34–41. [Google Scholar]

- Papachristos, C.; Khattak, S.; Alexis, K. Uncertainty-aware receding horizon exploration and mapping using aerial robots. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 4568–4575. [Google Scholar] [CrossRef]

- Faria, M.; Maza, I.; Viguria, A. Applying frontier cells based exploration and Lazy Theta* path planning over single grid-based world representation for autonomous inspection of large 3D structures with an UAS. J. Intell. Robot. Syst. 2019, 93, 113–133. [Google Scholar] [CrossRef]

- Dai, A.; Papatheodorou, S.; Funk, N.; Tzoumanikas, D.; Leutenegger, S. Fast frontier-based information-driven autonomous exploration with an mav. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 9570–9576. [Google Scholar] [CrossRef]

- The List of Vision-Based SLAM. Available online: https://github.com/tzutalin/awesome-visual-slam (accessed on 10 August 2022).

- Taketomi, T.; Uchiyama, H.; Ikeda, S. Visual SLAM algorithms: A survey from 2010 to 2016. IPSJ Trans. Comput. Vis. Appl. 2017, 9, 16. [Google Scholar] [CrossRef]

- Casas, S.; Gulino, C.; Liao, R.; Urtasun, R. Spagnn: Spatially-aware graph neural networks for relational behavior forecasting from sensor data. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 9491–9497. [Google Scholar]

- Shi, W.; Rajkumar, R. Point-gnn: Graph neural network for 3d object detection in a point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1711–1719. [Google Scholar]

- Ivanovic, B.; Pavone, M. The trajectron: Probabilistic multi-agent trajectory modeling with dynamic spatiotemporal graphs. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 2375–2384. [Google Scholar]

- Fraundorfer, F.; Engels, C.; Nistér, D. Topological mapping, localization and navigation using image collections. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 3872–3877. [Google Scholar]

- Grisetti, G.; Kümmerle, R.; Stachniss, C.; Burgard, W. A tutorial on graph-based SLAM. IEEE Intell. Transp. Syst. Mag. 2010, 2, 31–43. [Google Scholar] [CrossRef]

- Liang, M.; Yang, B.; Hu, R.; Chen, Y.; Liao, R.; Feng, S.; Urtasun, R. Learning Lane Graph Representations for Motion Forecasting. In Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 541–556. [Google Scholar]

- Gao, J.; Sun, C.; Zhao, H.; Shen, Y.; Anguelov, D.; Li, C.; Schmid, C. VectorNet: Encoding HD Maps and Agent Dynamics From Vectorized Representation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020. [Google Scholar]

- Bender, P.; Ziegler, J.; Stiller, C. Lanelets: Efficient map representation for autonomous driving. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium Proceedings, Dearborn, MI, USA, 8–11 June 2014; pp. 420–425. [Google Scholar] [CrossRef]

- Poggenhans, F.; Pauls, J.H.; Janosovits, J.; Orf, S.; Naumann, M.; Kuhnt, F.; Mayr, M. Lanelet2: A high-definition map framework for the future of automated driving. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 1672–1679. [Google Scholar] [CrossRef]

- Yang, L.; Cui, M. Lane Network Construction Using High Definition Maps for Autonomous Vehicles. U.S. Patent 10,545,029, 28 January 2020. [Google Scholar]

- Chen, Y.; Huang, S.; Fitch, R.; Zhao, L.; Yu, H.; Yang, D. On-line 3D active pose-graph SLAM based on key poses using graph topology and sub-maps. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 169–175. [Google Scholar]

- Feder, H.J.S.; Leonard, J.J.; Smith, C.M. Adaptive mobile robot navigation and mapping. Int. J. Robot. Res. 1999, 18, 650–668. [Google Scholar] [CrossRef]

- Lazanas, A.; Latombe, J.C. Landmark-based robot navigation. Algorithmica 1995, 13, 472–501. [Google Scholar] [CrossRef]

- Dailey, M.N.; Parnichkun, M. Landmark-based simultaneous localization and mapping with stereo vision. In Proceedings of the Asian Conference on Industrial Automation and Robotics, Bangkok, Thailand, 11–13 May 2005; Volume 2. [Google Scholar]

- Schuster, F.; Keller, C.G.; Rapp, M.; Haueis, M.; Curio, C. Landmark based radar SLAM using graph optimization. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 2559–2564. [Google Scholar]

- Engel, N.; Hoermann, S.; Horn, M.; Belagiannis, V.; Dietmayer, K. Deeplocalization: Landmark-based self-localization with deep neural networks. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 926–933. [Google Scholar]

- Zhou, B.; Li, Q.; Mao, Q.; Tu, W.; Zhang, X.; Chen, L. ALIMC: Activity landmark-based indoor mapping via crowdsourcing. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2774–2785. [Google Scholar] [CrossRef]

- Ghrist, R.; Lipsky, D.; Derenick, J.; Speranzon, A. Topological Landmark-Based Navigation and Mapping; Technical Report; University of Pennsylvania, Department of Mathematics: Philadelphia, PA, USA, 2012; Volume 8. [Google Scholar]

- Yoo, H.; Oh, S. Localizability-based Topological Local Object Occupancy Map for Homing Navigation. In Proceedings of the 2021 18th International Conference on Ubiquitous Robots (UR), Gangneung, Korea, 12–14 July 2021; pp. 22–25. [Google Scholar]

- Kim, B.; Kang, C.M.; Kim, J.; Lee, S.H.; Chung, C.C.; Choi, J.W. Probabilistic vehicle trajectory prediction over occupancy grid map via recurrent neural network. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; pp. 399–404. [Google Scholar]

- Li, H.; Tsukada, M.; Nashashibi, F.; Parent, M. Multivehicle cooperative local mapping: A methodology based on occupancy grid map merging. IEEE Trans. Intell. Transp. Syst. 2014, 15, 2089–2100. [Google Scholar] [CrossRef]

- Meyer-Delius, D.; Beinhofer, M.; Burgard, W. Occupancy grid models for robot mapping in changing environments. In Proceedings of the Twenty-Sixth AAAI Conference on Artificial Intelligence, Toronto, ON, Canada, 22–26 July 2012. [Google Scholar]

- Tsardoulias, E.G.; Iliakopoulou, A.; Kargakos, A.; Petrou, L. A review of global path planning methods for occupancy grid maps regardless of obstacle density. J. Intell. Robot. Syst. 2016, 84, 829–858. [Google Scholar] [CrossRef]

- Wirges, S.; Stiller, C.; Hartenbach, F. Evidential occupancy grid map augmentation using deep learning. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 668–673. [Google Scholar]

- Xue, H.; Fu, H.; Ren, R.; Wu, T.; Dai, B. Real-time 3D Grid Map Building for Autonomous Driving in Dynamic Environment. In Proceedings of the 2019 IEEE International Conference on Unmanned Systems (ICUS), Beijing, China, 17–19 October 2019; pp. 40–45. [Google Scholar] [CrossRef]

- Han, S.J.; Kim, J.; Choi, J. Effective height-grid map building using inverse perspective image. In Proceedings of the 2015 IEEE Intelligent Vehicles Symposium (IV), Seoul, Korea, 28 June–1 July 2015; pp. 549–554. [Google Scholar]

- Luo, K.; Casas, S.; Liao, R.; Yan, X.; Xiong, Y.; Zeng, W.; Urtasun, R. Safety-Oriented Pedestrian Motion and Scene Occupancy Forecasting. arXiv 2021, arXiv:2101.02385. [Google Scholar] [CrossRef]

- Meagher, D. Geometric modeling using octree encoding. Comput. Graph. Image Process. 1982, 19, 129–147. [Google Scholar] [CrossRef]

- Hornung, A.; Wurm, K.M.; Bennewitz, M.; Stachniss, C.; Burgard, W. OctoMap: An efficient probabilistic 3D mapping framework based on octrees. Auton. Robot. 2013, 34, 189–206. [Google Scholar] [CrossRef]

- Papachristos, C.; Kamel, M.; Popović, M.; Khattak, S.; Bircher, A.; Oleynikova, H.; Dang, T.; Mascarich, F.; Alexis, K.; Siegwart, R. Autonomous exploration and inspection path planning for aerial robots using the robot operating system. In Robot Operating System (ROS); Springer: Cham, Switzerland, 2019; pp. 67–111. [Google Scholar]

- Suresh, S.; Sodhi, P.; Mangelson, J.G.; Wettergreen, D.; Kaess, M. Active SLAM using 3D Submap Saliency for Underwater Volumetric Exploration. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 3132–3138. [Google Scholar] [CrossRef]

- Moravec, H.P. Sensor fusion in certainty grids for mobile robots. In Sensor Devices and Systems for Robotics; Springer: Berlin/Heidelberg, Germany, 1989; pp. 253–276. [Google Scholar]

- Lu, D.V.; Hershberger, D.; Smart, W.D. Layered costmaps for context-sensitive navigation. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 709–715. [Google Scholar]

- Toghi, B.; Valiente, R.; Sadigh, D.; Pedarsani, R.; Fallah, Y.P. Cooperative autonomous vehicles that sympathize with human drivers. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021. [Google Scholar]

- Newcombe, R.A.; Lovegrove, S.J.; Davison, A.J. DTAM: Dense tracking and mapping in real-time. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2320–2327. [Google Scholar]

- González-Banos, H.H.; Latombe, J.C. Navigation strategies for exploring indoor environments. Int. J. Robot. Res. 2002, 21, 829–848. [Google Scholar] [CrossRef]

- Jiang, R.; Yang, S.; Ge, S.S.; Wang, H.; Lee, T.H. Geometric map-assisted localization for mobile robots based on uniform-Gaussian distribution. IEEE Robot. Autom. Lett. 2017, 2, 789–795. [Google Scholar] [CrossRef]

- Maturana, D.; Chou, P.W.; Uenoyama, M.; Scherer, S. Real-time semantic mapping for autonomous off-road navigation. In Field and Service Robotics; Springer: Cham, Switzerland, 2018; pp. 335–350. [Google Scholar]

- Liu, R.; Wang, J.; Zhang, B. High definition map for automated driving: Overview and analysis. J. Navig. 2020, 73, 324–341. [Google Scholar] [CrossRef]

- Kim, C.; Cho, S.; Sunwoo, M.; Jo, K. Crowd-Sourced Mapping of New Feature Layer for High-Definition Map. Sensors 2018, 18, 4172. [Google Scholar] [CrossRef] [PubMed]

- Armand, A.; Ibanez-Guzman, J.; Zinoune, C. Digital maps for driving. In Automated Driving; Springer: Berlin/Heidelberg, Germany, 2017; pp. 201–244. [Google Scholar]

- Bétaille, D.; Toledo-Moreo, R. Creating enhanced maps for lane-level vehicle navigation. IEEE Trans. Intell. Transp. Syst. 2010, 11, 786–798. [Google Scholar] [CrossRef]

- Peyret, F.; Laneurit, J.; Betaille, D. A novel system using enhanced digital maps and WAAS for a lane level positioning. In Proceedings of the 15th World Congress on Intelligent Transport Systems and ITS America’s 2008 Annual Meeting (ITS America, ERTICO, ITS Japan, TransCore), New York, NY, USA, 16–20 November 2008. [Google Scholar]

- Adas Map. Available online: https://www.tomtom.com/products/adas-map (accessed on 10 August 2022).

- Zhang, R.; Chen, C.; Di, Z.; Wheeler, M.D. Visual Odometry and Pairwise Alignment for High Definition Map Creation. U.S. Patent 10,598,489, 24 March 2020. [Google Scholar]

- Shimada, H.; Yamaguchi, A.; Takada, H.; Sato, K. Implementation and evaluation of local dynamic map in safety driving systems. J. Transp. Technol. 2015, 5, 102. [Google Scholar] [CrossRef]

- Lee, J.; Lee, K.; Yoo, A.; Moon, C. Design and Implementation of Edge-Fog-Cloud System through HD Map Generation from LiDAR Data of Autonomous Vehicles. Electronics 2020, 9, 2084. [Google Scholar] [CrossRef]

- Kent, L. HERE Introduces HD Maps for Highly Automated Vehicle Testing; HERE: Amsterdam, The Netherlands, 2015; Available online: http://360.here.com/2015/07/20/here-introduces-hd-maps-for-highlyautomated-vehicle-testing/ (accessed on 16 April 2018).

- Bao, Z.; Hossain, S.; Lang, H.; Lin, X. High-Definition Map Generation Technologies For Autonomous Driving. arXiv 2022, arXiv:2206.05400. [Google Scholar] [CrossRef]

- LiDAR Boosts Brain Power for Self-Driving Cars. Available online: https://eijournal.com/resources/lidar-solutions-showcase/lidar-boosts-brain-power-for-self-driving-cars (accessed on 10 August 2022).

- García, M.; Urbieta, I.; Nieto, M.; González de Mendibil, J.; Otaegui, O. iLDM: An Interoperable Graph-Based Local Dynamic Map. Vehicles 2022, 4, 42–59. [Google Scholar] [CrossRef]

- HERE HD Live Map, Technical Paper, a Self-Healing Map for Reliable Autonomous Driving. Available online: https://engage.here.com/hubfs/Downloads/Tech%20Briefs/HERE%20Technologies%20Self-healing%20Map%20Tech%20Brief.pdf?t=1537438054632 (accessed on 10 August 2022).

- Semantic Maps for Autonomous Vehicles by Kris Efland and Holger Rapp, Engineering Managers, Lyft Level 5. Available online: https://medium.com/wovenplanetlevel5/semantic-maps-for-autonomous-vehicles-470830ee28b6 (accessed on 10 August 2022).

- Rethinking Maps for Self-Driving By Kumar Chellapilla, Director of Engineering, Lyft Level 5. Available online: https://medium.com/wovenplanetlevel5/https-medium-com-lyftlevel5-rethinking-maps-for-self-driving-a147c24758d6 (accessed on 10 August 2022).

- Qin, T.; Zheng, Y.; Chen, T.; Chen, Y.; Su, Q. A Light-Weight Semantic Map for Visual Localization towards Autonomous Driving. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 11248–11254. [Google Scholar] [CrossRef]

- Guo, C.; Lin, M.; Guo, H.; Liang, P.; Cheng, E. Coarse-to-fine Semantic Localization with HD Map for Autonomous Driving in Structural Scenes. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 1146–1153. [Google Scholar] [CrossRef]

- HD Map and Localization. Available online: https://developer.apollo.auto/developer.html (accessed on 10 August 2022).

- Wheeler, M.D. High Definition Map and Route Storage Management System for Autonomous Vehicles. U.S. Patent 10,353,931, 16 July 2019. [Google Scholar]

- TomTom. Available online: https://www.tomtom.com/products/hd-map (accessed on 10 August 2022).

- Han, S.J.; Kang, J.; Jo, Y.; Lee, D.; Choi, J. Robust ego-motion estimation and map matching technique for autonomous vehicle localization with high definition digital map. In Proceedings of the 2018 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Korea, 17–19 October 2018; pp. 630–635. [Google Scholar]

- Sobreira, H.; Costa, C.M.; Sousa, I.; Rocha, L.; Lima, J.; Farias, P.; Costa, P.; Moreira, A.P. Map-matching algorithms for robot self-localization: A comparison between perfect match, iterative closest point and normal distributions transform. J. Intell. Robot. Syst. 2019, 93, 533–546. [Google Scholar] [CrossRef]

- Hausler, S.; Milford, M. Map Creation, Monitoring and Maintenance for Automated Driving—Literature Review. 2020. Available online: https://imoveaustralia.com/wp-content/uploads/2021/01/P1%E2%80%90021-Map-creation-monitoring-and-maintenance-for-automated-driving.pdf (accessed on 10 August 2022).

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A Survey of Autonomous Driving: Common Practices and Emerging Technologies. IEEE Access 2020, 8, 58443–58469. [Google Scholar] [CrossRef]

- Luo, Q.; Cao, Y.; Liu, J.; Benslimane, A. Localization and navigation in autonomous driving: Threats and countermeasures. IEEE Wirel. Commun. 2019, 26, 38–45. [Google Scholar] [CrossRef]

- Waymo. Available online: https://digital.hbs.edu/platform-digit/submission/way-mo-miles-way-mo-data/ (accessed on 10 August 2022).

- Seif, H.G.; Hu, X. Autonomous driving in the iCity—HD maps as a key challenge of the automotive industry. Engineering 2016, 2, 159–162. [Google Scholar] [CrossRef]

- Ahmad, F.; Qiu, H.; Eells, R.; Bai, F.; Govindan, R. CarMap: Fast 3D Feature Map Updates for Automobiles. In Proceedings of the 17th USENIX Symposium on Networked Systems Design and Implementation (NSDI 20), Santa Clara, CA, USA, 26–27 February 2020; USENIX Association: Santa Clara, CA, USA, 2020; pp. 1063–1081. [Google Scholar]

Different Categories of Robotic Maps

| Category | Pros | Cons | Details | Related Papers |
|---|---|---|---|---|
| Topological | Easier map extension | Lack of a sense of proximity; lack of explicit information | Graph-based; deals with places and their interactions | [80,81,82,83,84,85,86,87,88,89,90] |
| Metric | Precise coordinates of objects | Computationally expensive in vast areas | Contains all the information required by mapping or navigation algorithms | [58,59,60,64,69,71,72,73,75,76,77,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,108,109,110,111,112,113,114,116,117] |
| Geometric | Efficient data storage with little information loss | Difficult trajectory calculation and data management | Data are represented with discrete geometric shapes | [115,116,117] |
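The trade-offs summarized in the table can be illustrated with a minimal Python sketch. It is not taken from any of the cited works: the place names, the 0.5 m cell size, the grid contents, the wall segments, and every function name (`topo_route`, `cell_centre`, `is_free`, `segment_length`) are hypothetical, and breadth-first search is used only as a simple example of routing over a topological graph.

```python
import math
from collections import deque

# Topological map: places (nodes) and the connections between them (edges).
# Place names are hypothetical; no coordinates are stored.
TOPO_MAP = {
    "depot": ["junction_a"],
    "junction_a": ["depot", "junction_b", "parking"],
    "junction_b": ["junction_a", "exit"],
    "parking": ["junction_a"],
    "exit": ["junction_b"],
}


def topo_route(graph, start, goal):
    """Breadth-first search: returns the path with the fewest place-to-place hops.

    Hop count is all the graph knows; it says nothing about metric distance.
    """
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for neighbour in graph[node]:
            if neighbour not in parents:
                parents[neighbour] = node
                queue.append(neighbour)
    return None  # goal not reachable


# Metric map: an occupancy grid in which every cell has explicit coordinates.
RESOLUTION_M = 0.5  # assumed cell size in metres
GRID = [
    [0, 0, 0, 1],
    [0, 1, 0, 1],
    [0, 0, 0, 0],
]  # 1 = occupied, 0 = free; cell count grows with area / RESOLUTION_M**2


def cell_centre(row, col, resolution=RESOLUTION_M):
    """(x, y) of a cell centre in metres, origin at the outer corner of cell (0, 0)."""
    return ((col + 0.5) * resolution, (row + 0.5) * resolution)


def is_free(grid, row, col):
    """True if the cell is marked free (0) in the occupancy grid."""
    return grid[row][col] == 0


# Geometric map: obstacles kept as a few discrete shapes (here, line segments).
WALLS = [((0.0, 0.0), (2.0, 0.0)), ((2.0, 0.0), (2.0, 1.5))]


def segment_length(segment):
    """Euclidean length of a segment given as ((x1, y1), (x2, y2))."""
    (x1, y1), (x2, y2) = segment
    return math.hypot(x2 - x1, y2 - y1)


if __name__ == "__main__":
    print(topo_route(TOPO_MAP, "depot", "exit"))
    print(cell_centre(2, 3), is_free(GRID, 1, 1))
    print(sum(segment_length(w) for w in WALLS))
```

The topological route counts hops only, which reflects the "lack of a sense of proximity" listed above; the occupancy grid gives explicit coordinates for every cell, but its size grows with the mapped area; the geometric map stores its obstacles as two segments rather than as many cells.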

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ebrahimi Soorchaei, B.; Razzaghpour, M.; Valiente, R.; Raftari, A.; Fallah, Y.P. High-Definition Map Representation Techniques for Automated Vehicles. Electronics 2022, 11, 3374. https://doi.org/10.3390/electronics11203374

Ebrahimi Soorchaei B, Razzaghpour M, Valiente R, Raftari A, Fallah YP. High-Definition Map Representation Techniques for Automated Vehicles. Electronics. 2022; 11(20):3374. https://doi.org/10.3390/electronics11203374

Chicago/Turabian Style: Ebrahimi Soorchaei, Babak, Mahdi Razzaghpour, Rodolfo Valiente, Arash Raftari, and Yaser Pourmohammadi Fallah. 2022. "High-Definition Map Representation Techniques for Automated Vehicles" Electronics 11, no. 20: 3374. https://doi.org/10.3390/electronics11203374

APA Style: Ebrahimi Soorchaei, B., Razzaghpour, M., Valiente, R., Raftari, A., & Fallah, Y. P. (2022). High-Definition Map Representation Techniques for Automated Vehicles. Electronics, 11(20), 3374. https://doi.org/10.3390/electronics11203374