Artificial Intelligence-Based Diabetes Diagnosis with Belief Functions Theory

Abstract

1. Introduction

2. Classification Methods Based on ML

2.1. Supervised Classification

2.1.1. KNN Classifier

- Description of the algorithm

- Strengths and drawbacks

2.1.2. SVM Classifier

- Description of the algorithm

- Strengths and drawbacks

2.1.3. Decision Tree

- Presentation of the method

- Strengths and drawbacks

2.2. Comparison and Conclusion

3. Classification Methods Based on DL

- Automotive (car ignition, automatic guidance).

- Aerospace (automatic piloting, flight simulation).

- Defense (missile guidance, target tracking, radar, sonar).

- Character, face, speech and handwriting recognition.

- Forecasting of water consumption, electricity, road traffic.

- Finance (forecasts on the money markets).

- Identification of industrial processes (e.g., Air Liquide, Elf Atochem, Lafarge cements).

- Electronics.

- Medical diagnosis (EEG and ECG analysis).

- Robotics.

- Telecommunication (data compression).

- Business and management.

- Strengths and drawbacks

- Features are deduced automatically; no data labeling is required.

- The same neural network can be applied to a wide variety of applications.

- Unstructured data can be processed.

- The drawbacks are:

- Developing the learning algorithms takes a relatively long time.

- A large database is required.

- Training is expensive because of the complex data models.

3.1. Neural Network

3.2. CNN

- Presentation of the Network

- Operating Mode of CNN

3.3. RNN

- Presentation of the Network

- Operating Mode of RNN

3.4. LSTM

- Presentation of the Algorithm

- Operating Mode of the LSTM

3.5. GRU

- Presentation of the Algorithm

- Operating Mode of the GRU

3.6. Comparison and Conclusions

4. Belief Functions

5. Application: Diagnosis of Diabetes Disease

5.1. Description of Database and Process

- True Positives (TP): The number of positive instances classified as positive.

- True Negatives (TN): The number of negative instances classified as negative.

- False Positives (FP): The number of negative instances classified as positive.

- False Negatives (FN): The number of positive instances classified as negative.
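The four counts defined above can be turned into summary metrics in a few lines. The sketch below is only an illustration: the function names, the `ConfusionCounts` container, the toy labels, and the choice of `1` as the positive (diabetic) label are assumptions, and the error rate is taken in its usual sense, (FP + FN) divided by the total number of instances, which may differ from the exact formulas used in the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Hypothetical container for the four confusion-matrix counts."""
    tp: int  # positive instances classified as positive
    tn: int  # negative instances classified as negative
    fp: int  # negative instances classified as positive
    fn: int  # positive instances classified as negative

    @property
    def total(self) -> int:
        return self.tp + self.tn + self.fp + self.fn


def confusion_counts(y_true, y_pred, positive=1) -> ConfusionCounts:
    """Count TP, TN, FP and FN for a binary problem."""
    tp = tn = fp = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive:
            if pred == positive:
                tp += 1
            else:
                fn += 1
        else:
            if pred == positive:
                fp += 1
            else:
                tn += 1
    return ConfusionCounts(tp, tn, fp, fn)


def error_rate(c: ConfusionCounts) -> float:
    # Assumed definition: share of misclassified instances.
    return (c.fp + c.fn) / c.total


def accuracy(c: ConfusionCounts) -> float:
    return (c.tp + c.tn) / c.total


def sensitivity(c: ConfusionCounts) -> float:
    # True positive rate; undefined if there are no positive instances.
    return c.tp / (c.tp + c.fn)


def specificity(c: ConfusionCounts) -> float:
    # True negative rate; undefined if there are no negative instances.
    return c.tn / (c.tn + c.fp)


# Toy labels, invented for illustration only (1 = diabetic, 0 = healthy).
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
counts = confusion_counts(y_true, y_pred)
print(counts, error_rate(counts), accuracy(counts))
```

On these toy labels there is one false negative and one false positive out of eight instances, so the error rate is 0.25 and the accuracy is 0.75.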

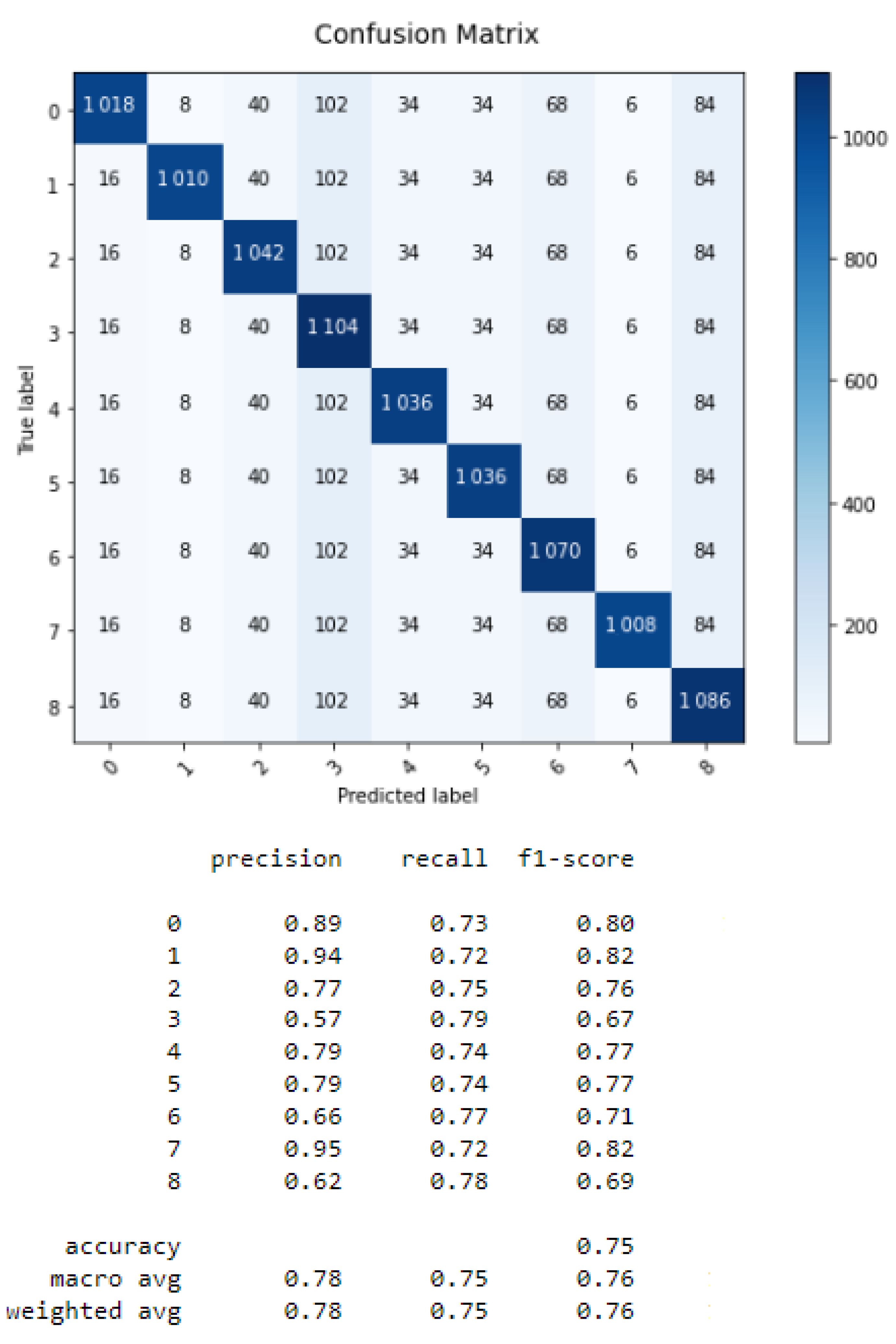

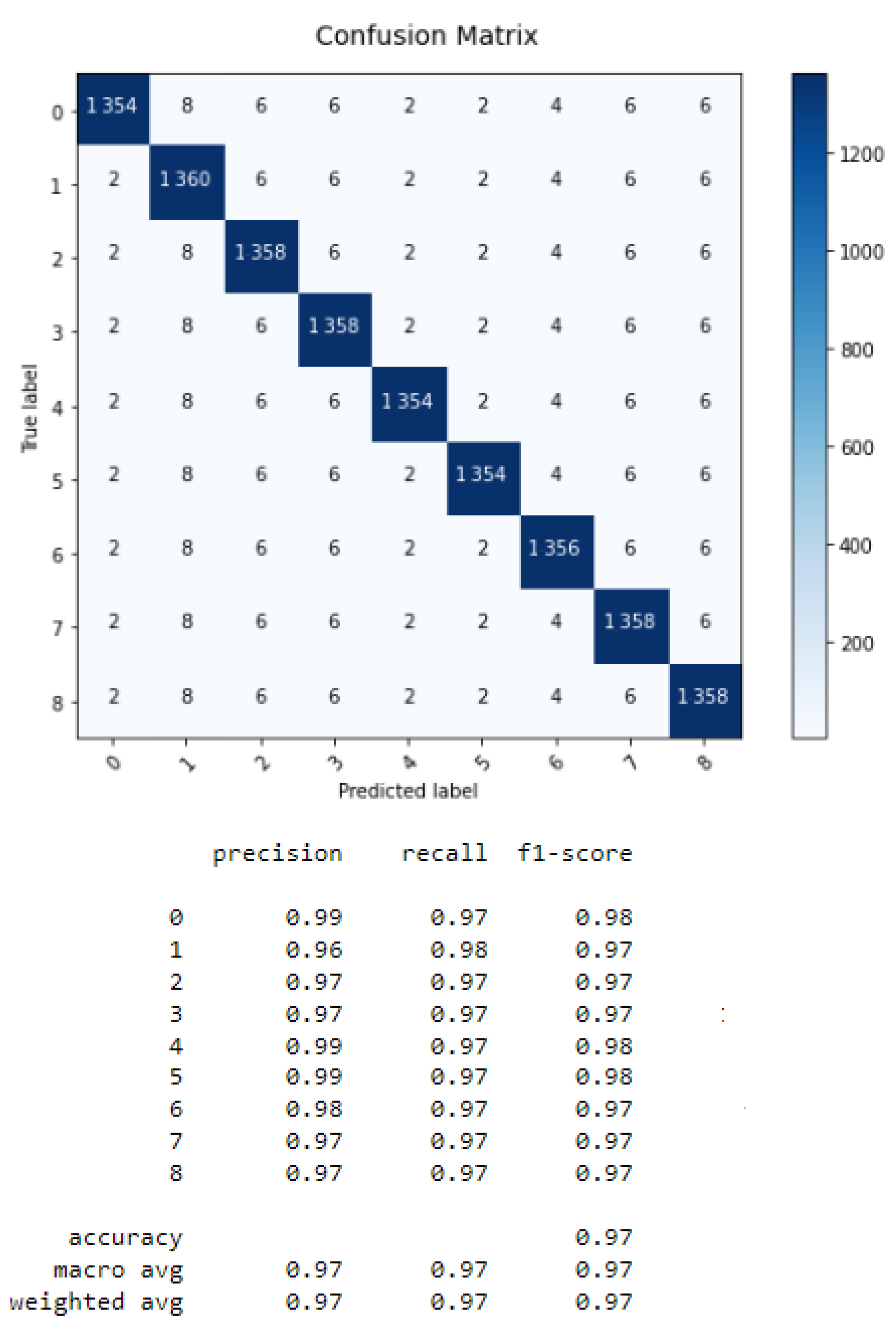

5.2. Results and Discussions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| ML Classifiers | AUC-ROC |
|---|---|
| SVM | 0.87 |
| KNN | 0.76 |
| DT | 0.79 |

| ML Classifiers | Classifier | Error Rate |
|---|---|---|
| Individual classifier error rates | C1 | 0.23 |
| | C2 | 0.2 |
| | C3 | 0.25 |
| Fused classifiers error rates | C1 and C2 (m1 ⊕ m2) | 0.13 |
| | C1 and C3 (m1 ⊕ m3) | 0.19 |
| | C2 and C3 (m2 ⊕ m3) | 0.17 |

| DL Classifiers | Error Rates |
|---|---|
| C4 | 0.07 |
| C5 | 0.1 |
| C6 | 0.05 |
| C7 | 0.03 |

| DL Classifiers | AUC-ROC |
|---|---|
| CNN | 0.92 |
| GRU | 0.97 |
| LSTM | 0.99 |
| RNN | 0.95 |

| Fused DL Classifiers | Fusion Error Rate |
|---|---|
| C4 and C6 (m4 ⊕ m6) | 0.04 |
| C4 and C7 (m4 ⊕ m7) | 0.03 |
| C5 and C4 (m5 ⊕ m4) | 0.09 |
| C5 and C6 (m5 ⊕ m6) | 0.08 |
| C5 and C7 (m5 ⊕ m7) | 0.06 |
| C6 and C7 (m6 ⊕ m7) | 0.02 |
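The ⊕ symbol in the fusion tables denotes the combination of two mass functions. A minimal sketch of Dempster's rule of combination on a two-class frame is given here for orientation. The frame labels, the example masses, and the function name `dempster_combine` are hypothetical, and how each classifier's output is converted into a mass function in the study is not shown, so this should be read as an illustration of the rule itself rather than of the authors' pipeline.

```python
from itertools import product


def dempster_combine(m1, m2, tol=1e-12):
    """Combine two mass functions with Dempster's rule.

    Each mass function is a dict mapping a frozenset (a subset of the
    frame of discernment) to its mass; the masses must sum to 1.
    """
    combined = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        intersection = a & b
        if intersection:
            # Agreeing evidence supports the intersection of the two sets.
            combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
        else:
            # Disjoint focal sets contribute to the conflict mass K.
            conflict += mass_a * mass_b
    if 1.0 - conflict <= tol:
        raise ValueError("Total conflict: the sources cannot be combined.")
    # Normalise by 1 - K so the combined masses sum to 1 again.
    return {subset: mass / (1.0 - conflict) for subset, mass in combined.items()}


# Hypothetical frame and masses, invented for illustration only.
DIABETIC = frozenset({"diabetic"})
HEALTHY = frozenset({"healthy"})
OMEGA = DIABETIC | HEALTHY  # total ignorance

m1 = {DIABETIC: 0.6, HEALTHY: 0.1, OMEGA: 0.3}
m2 = {DIABETIC: 0.5, HEALTHY: 0.2, OMEGA: 0.3}

fused = dempster_combine(m1, m2)
# Simple decision rule (an assumption): pick the singleton with the largest mass.
decision = max((DIABETIC, HEALTHY), key=lambda s: fused.get(s, 0.0))
print(fused, decision)
```

In this example the conflict mass is 0.17, and after normalisation the fused mass on the diabetic hypothesis is higher than in either source, which is the reinforcing behaviour expected when two sources agree.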

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ellouze, A.; Kahouli, O.; Ksantini, M.; Alsaif, H.; Aloui, A.; Kahouli, B. Artificial Intelligence-Based Diabetes Diagnosis with Belief Functions Theory. Symmetry 2022, 14, 2197. https://doi.org/10.3390/sym14102197
