On the Quantification of Visual Texture Complexity

Abstract

1. Introduction

- To investigate visual texture complexity and establish how strongly it correlates with homogeneity, randomness, repetitiveness, regularity, color variation, and strongness, in order to better understand how it is perceived. Visual texture complexity is introduced and defined in detail in Section 4.1.

- To investigate the possible relationships between visual texture complexity and some of the most popular and effective image texture measures.

- To find the most suitable color space(s) for the quantitative evaluation of visual texture complexity.

2. Quantitative Color Texture

2.1. First-Order Statistical Texture Features—Image Descriptors

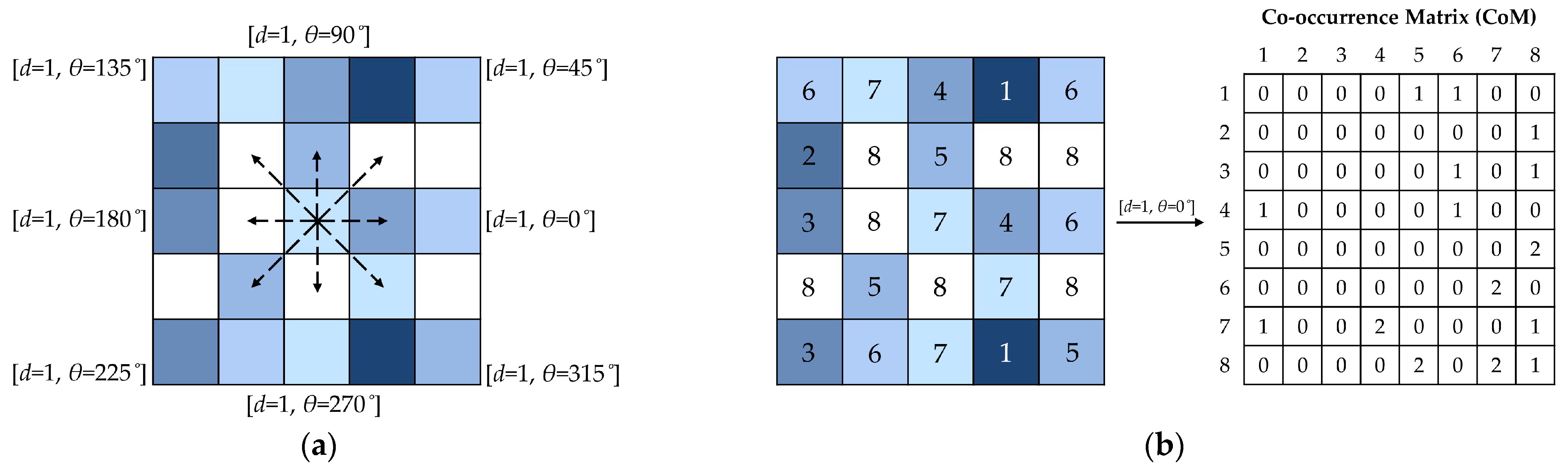

2.2. Second-Order Statistical Texture Features—Co-Occurrence Matrix (CoM) Features

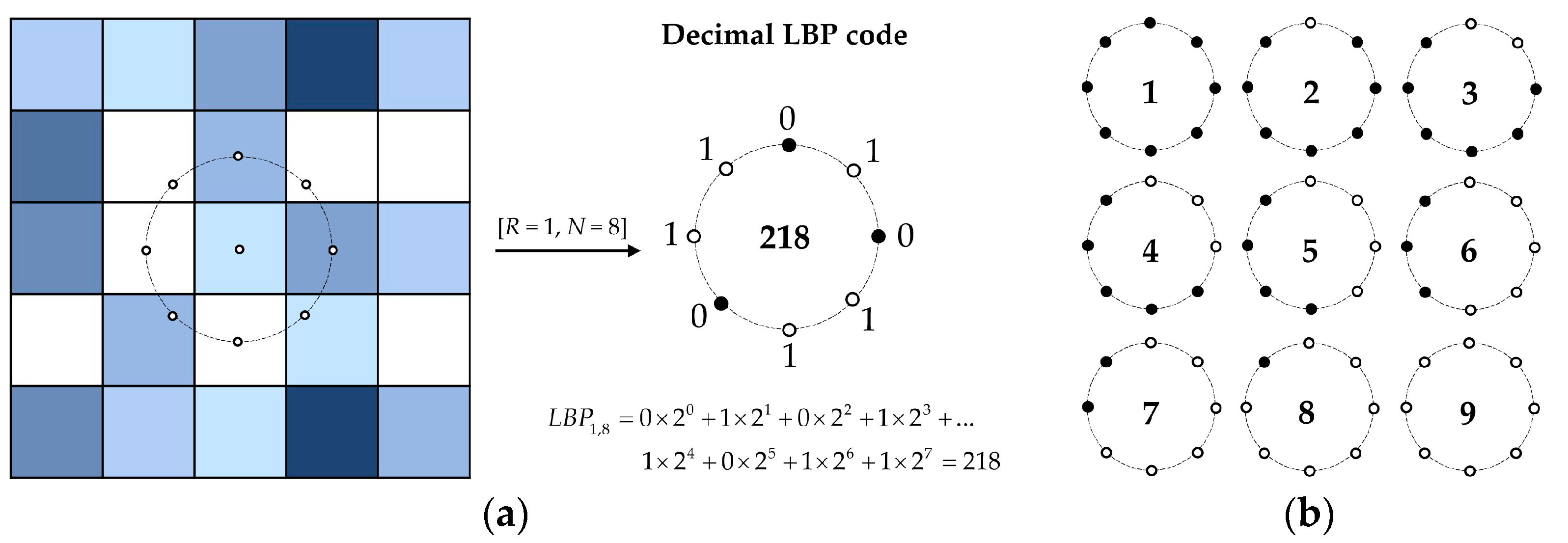

2.3. Local Binary Pattern (LBP) Features

2.4. Gabor Filtering

3. Materials and Methods

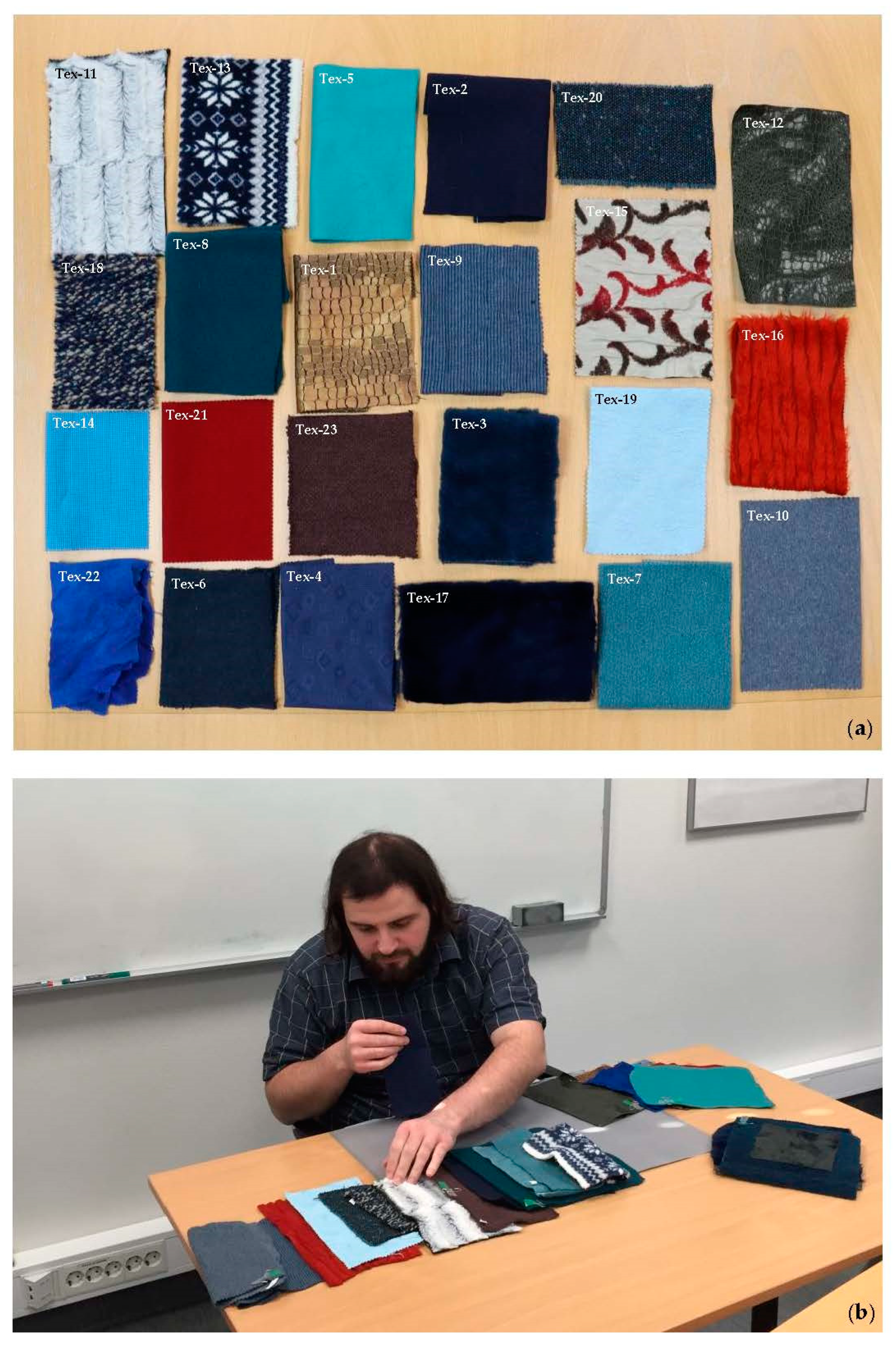

3.1. Visual Assessment of Texture of the Textiles

3.2. Image Acquisition Procedure

3.3. Computing the Image Descriptors and Texture Features of the Textile Images

4. Results

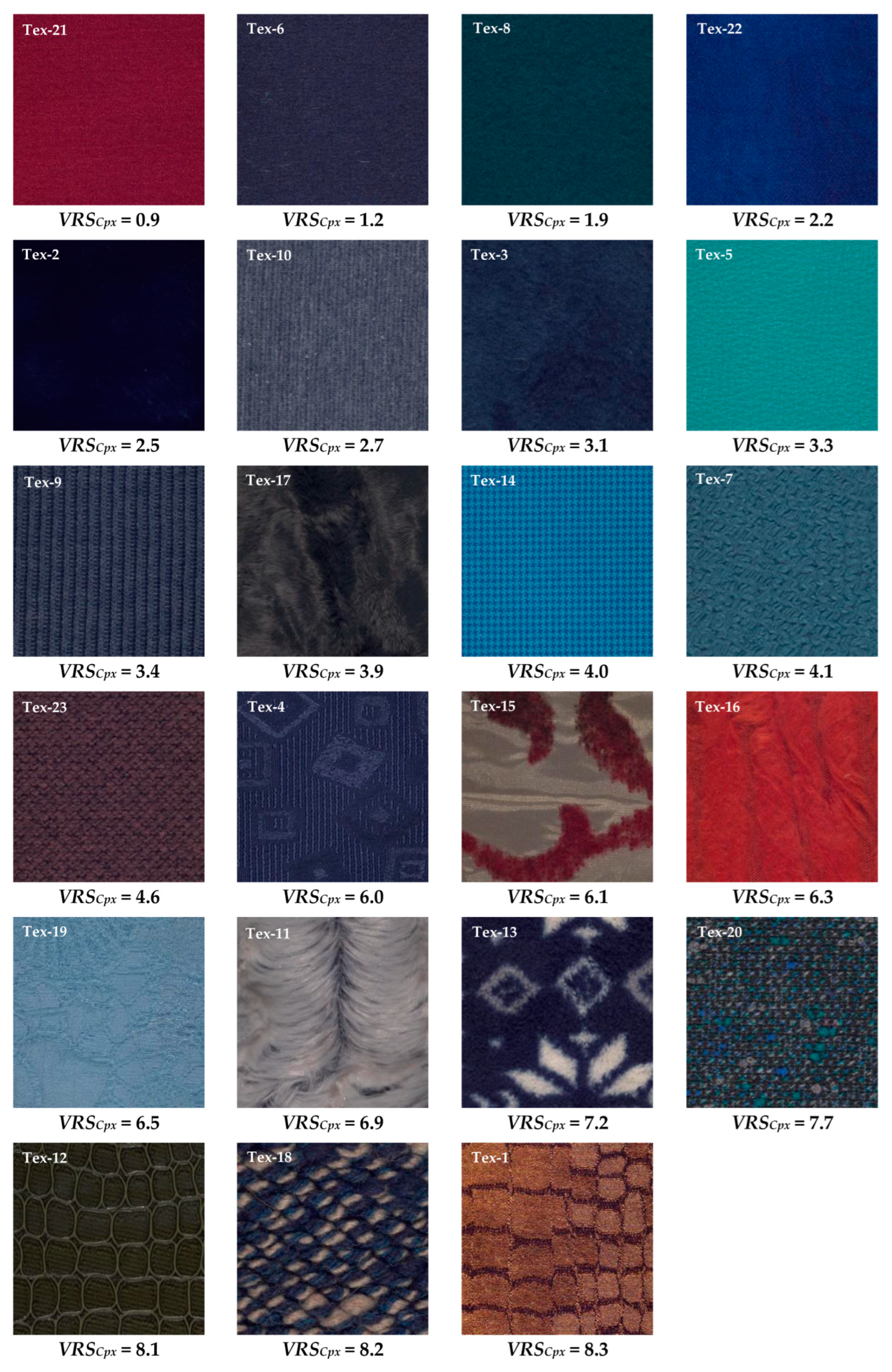

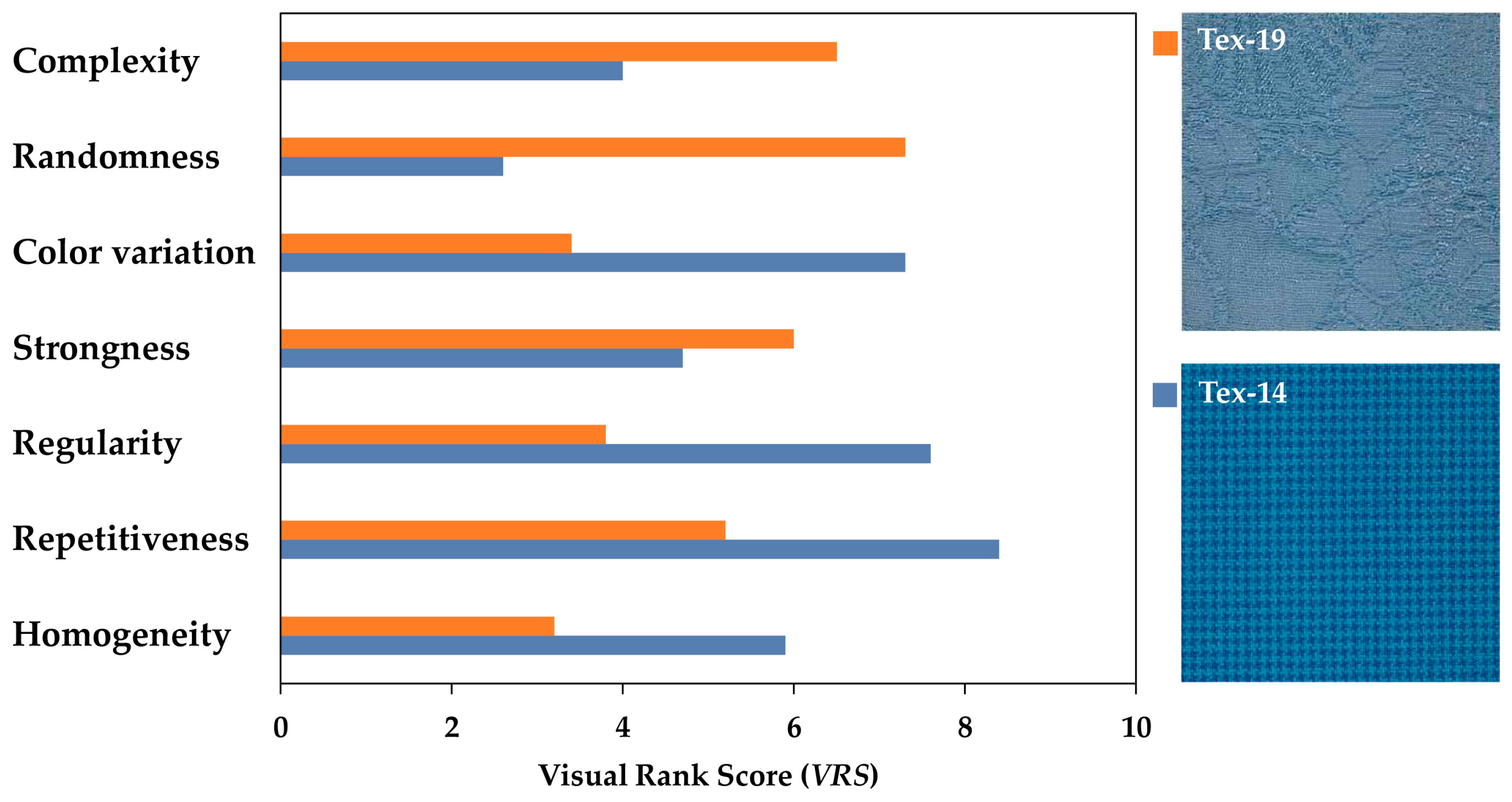

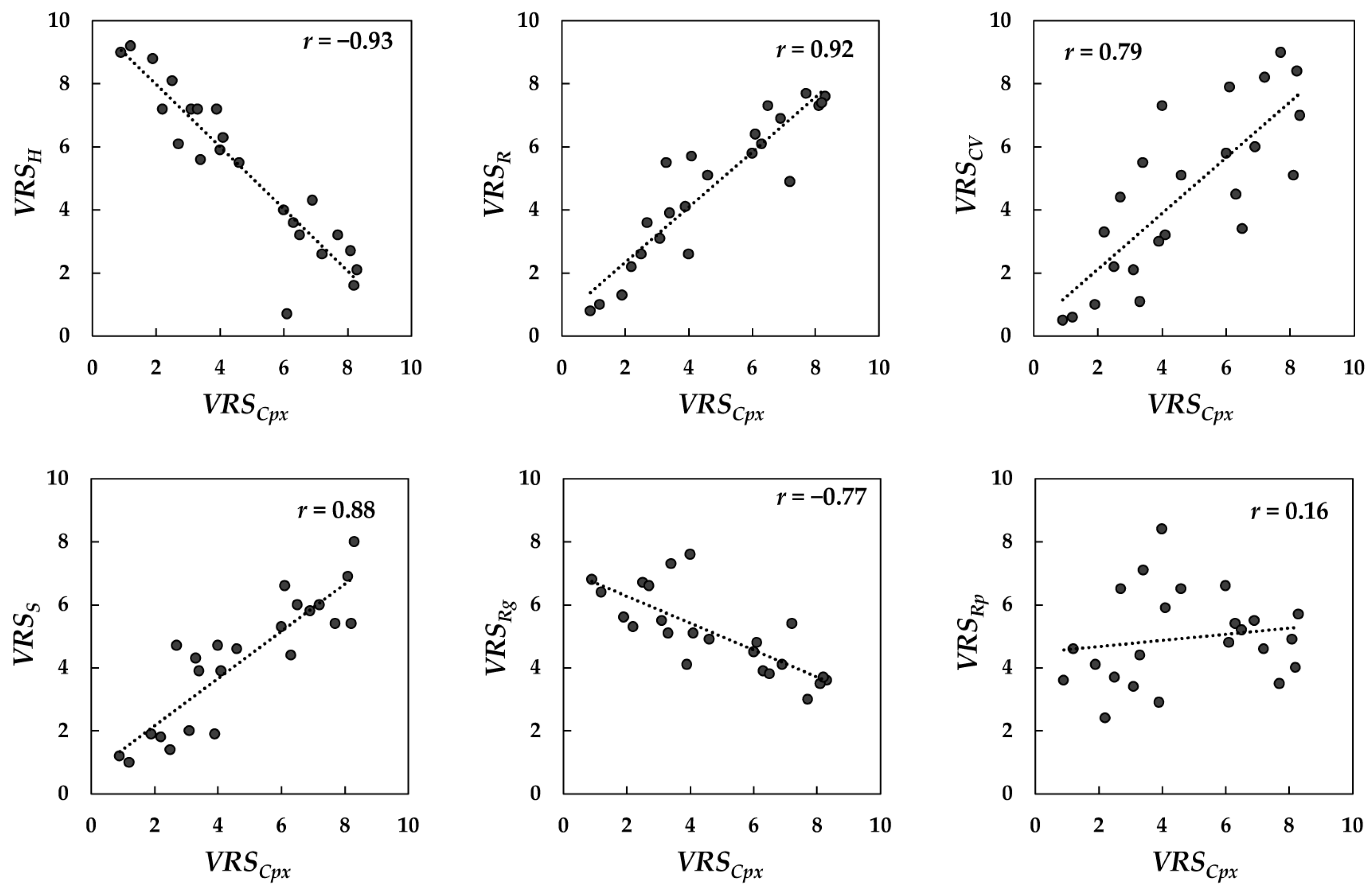

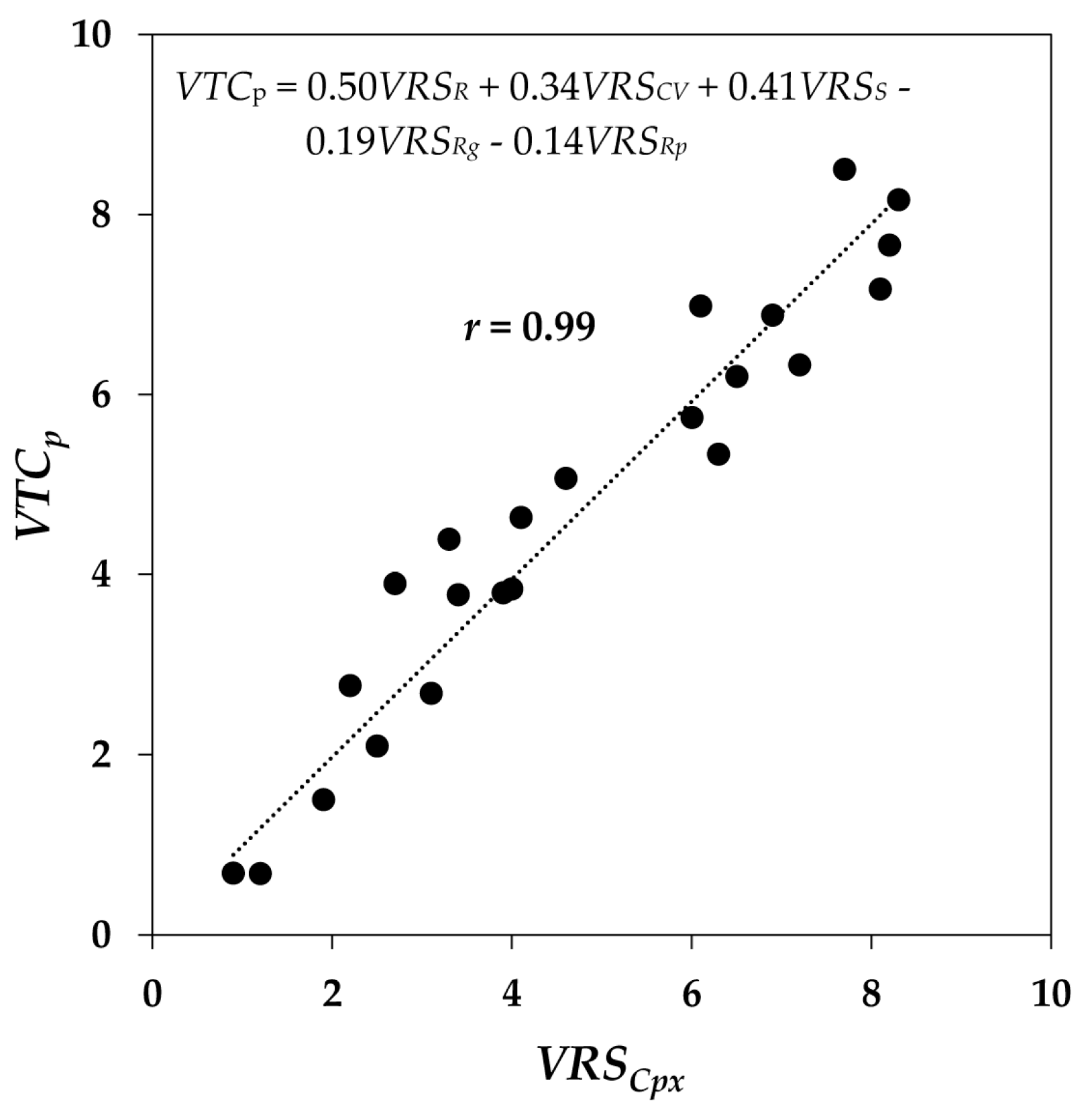

4.1. Visual Texture Complexity

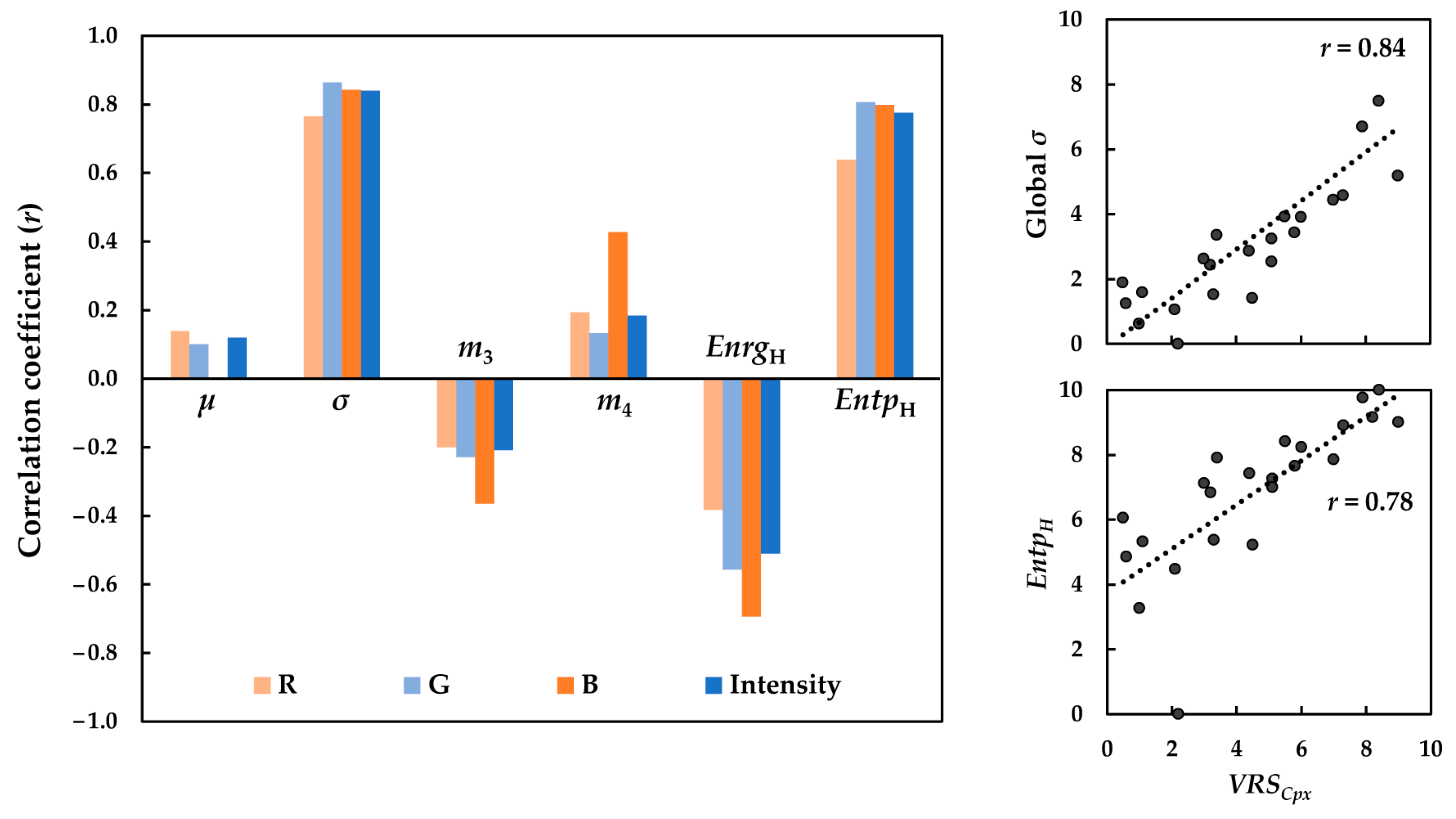

4.2. Correlation between Visual Texture Complexity and Image Descriptors

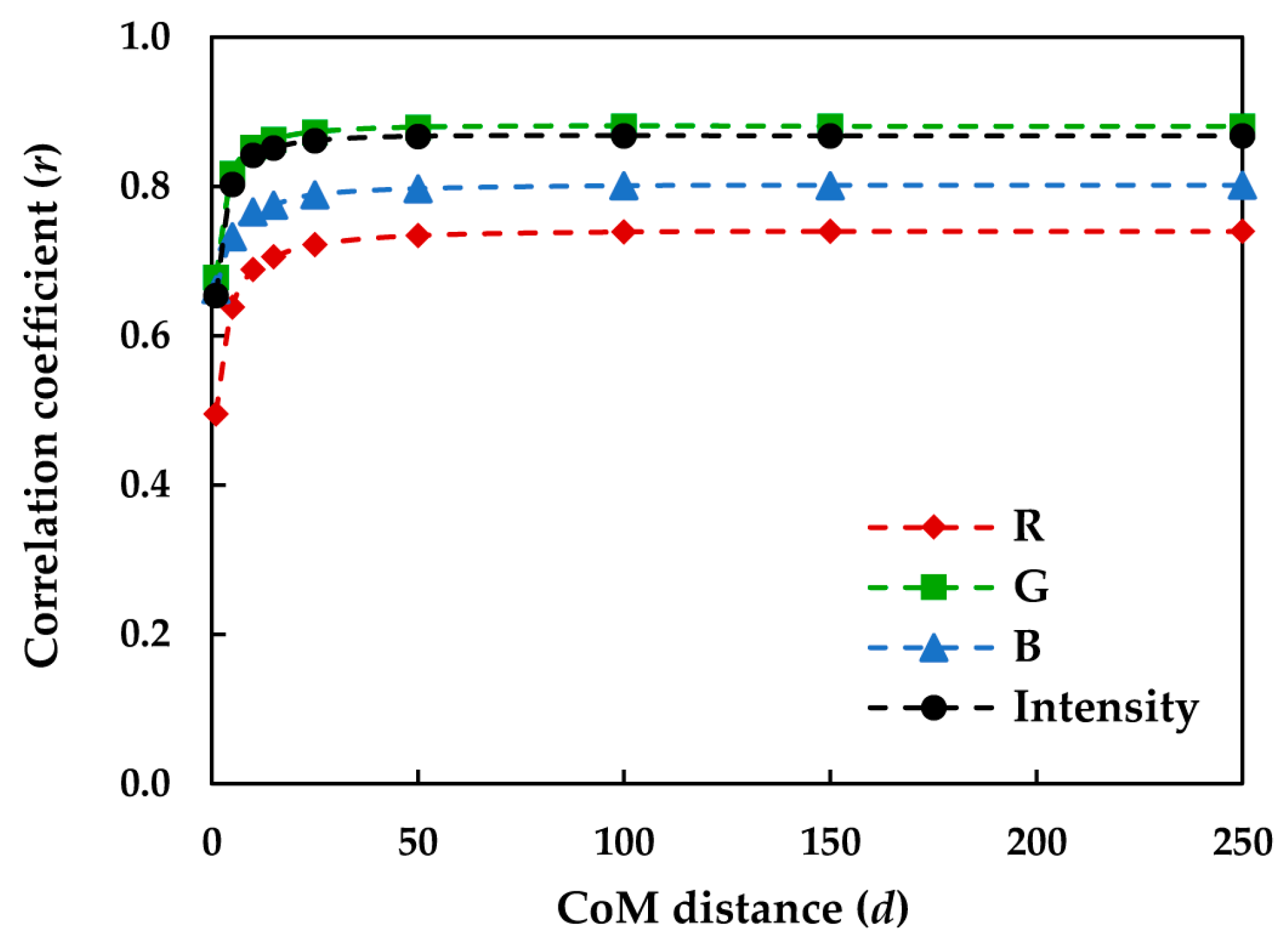

4.2.1. sRGB Space

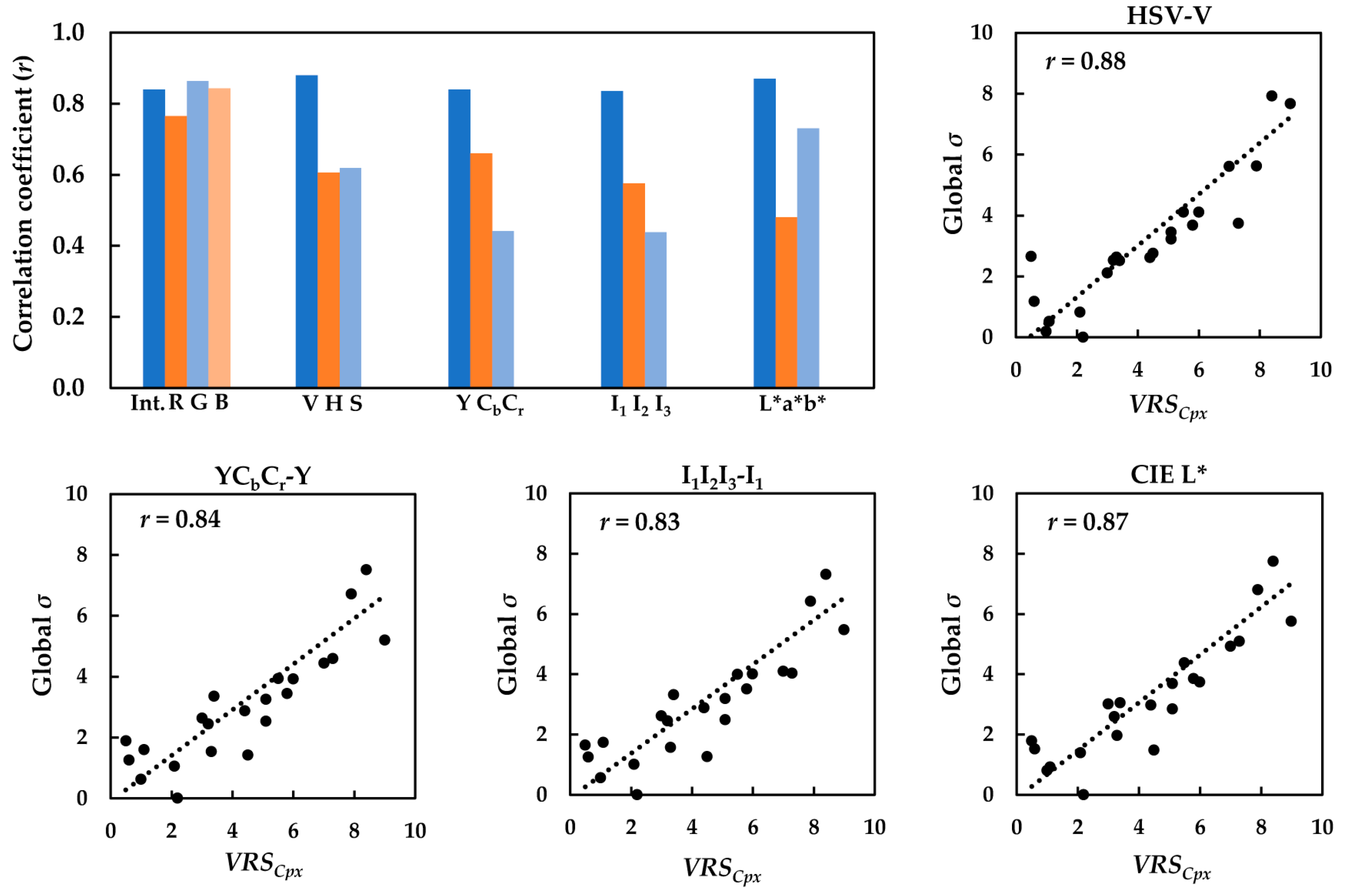

4.2.2. Luminance–Chrominance Color Spaces

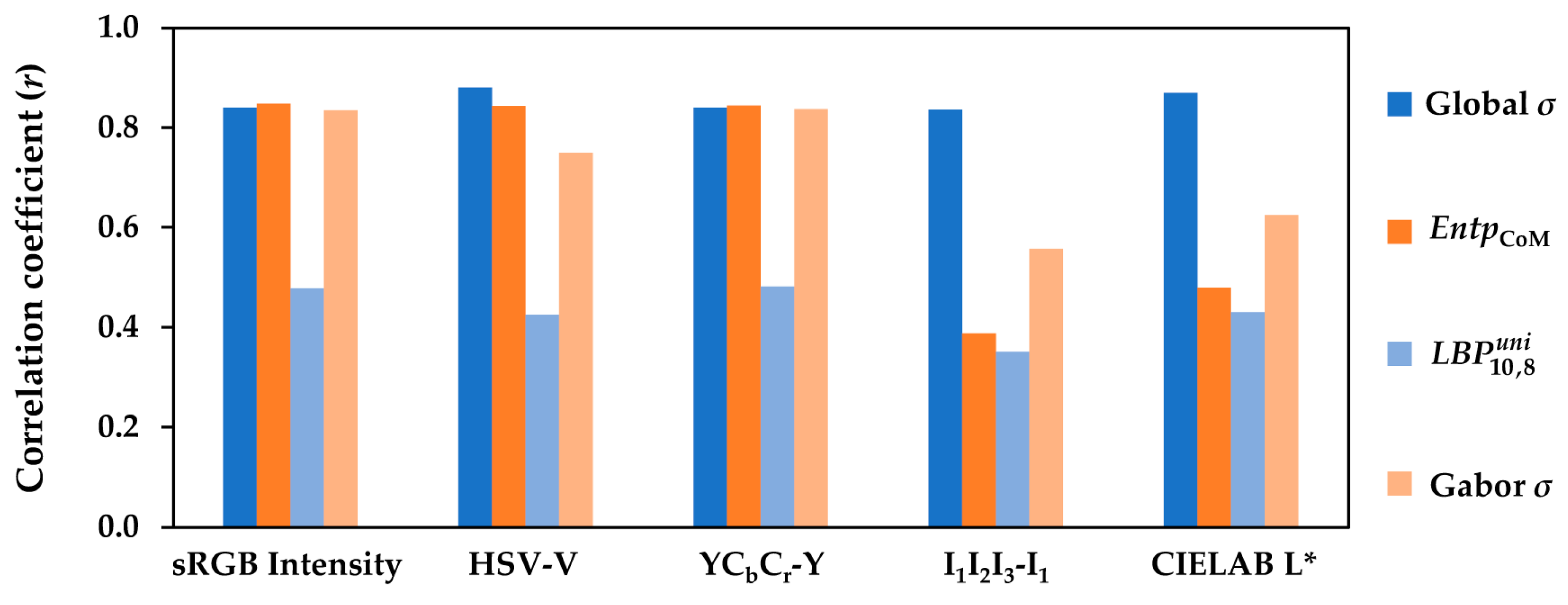

4.3. Correlation between Visual Texture Complexity and Texture Features

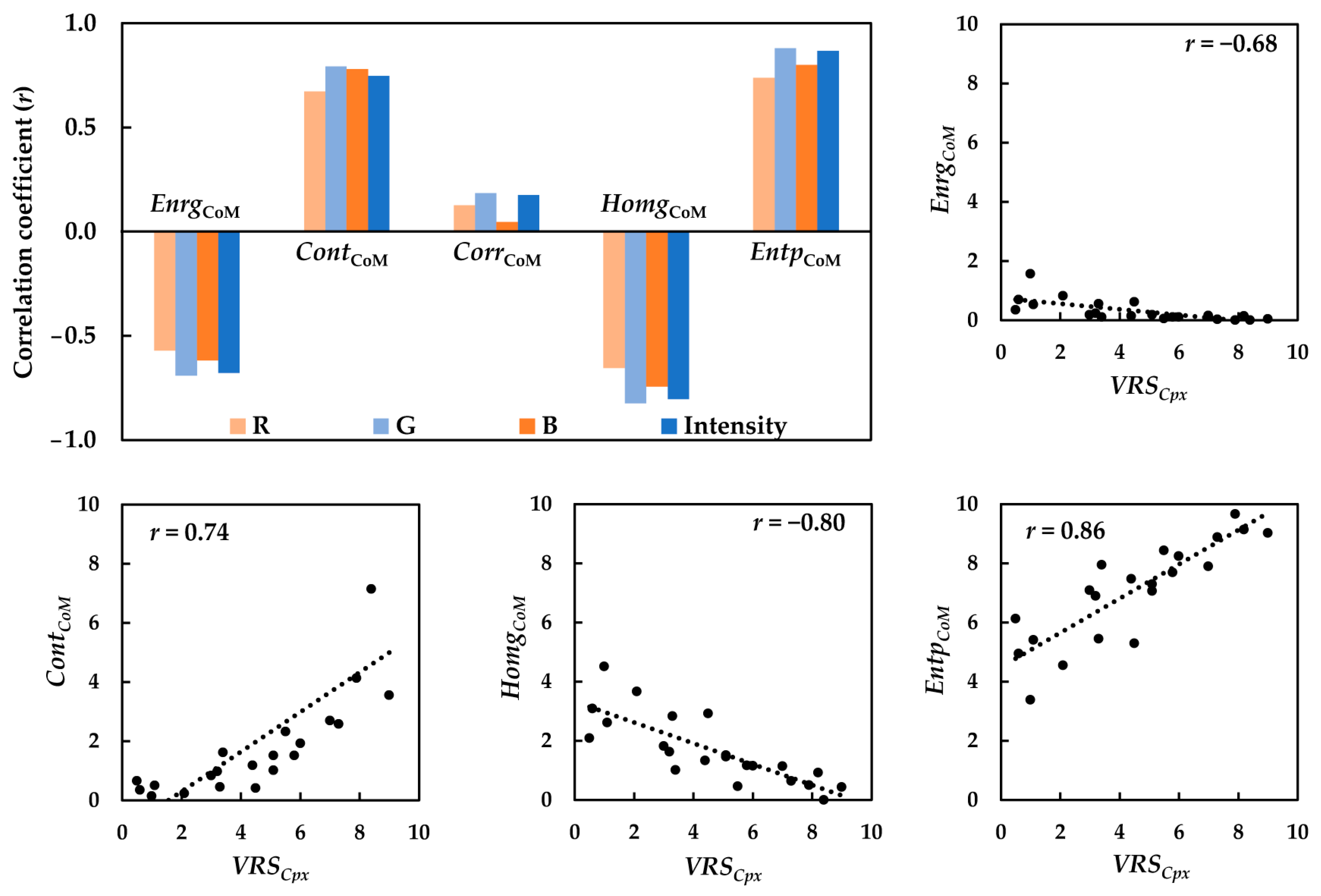

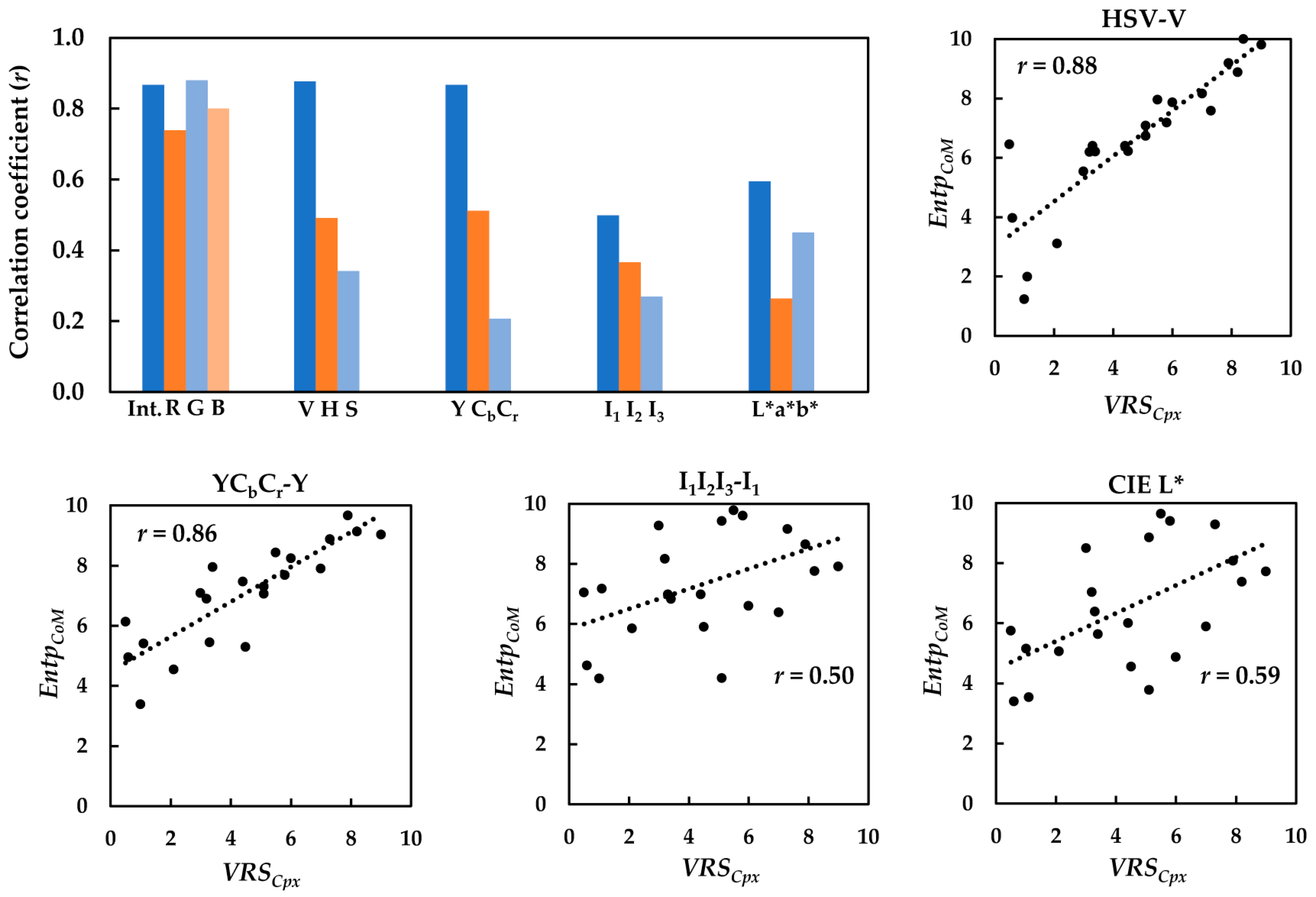

4.3.1. Co-Occurrence Matrix (CoM) Texture Features

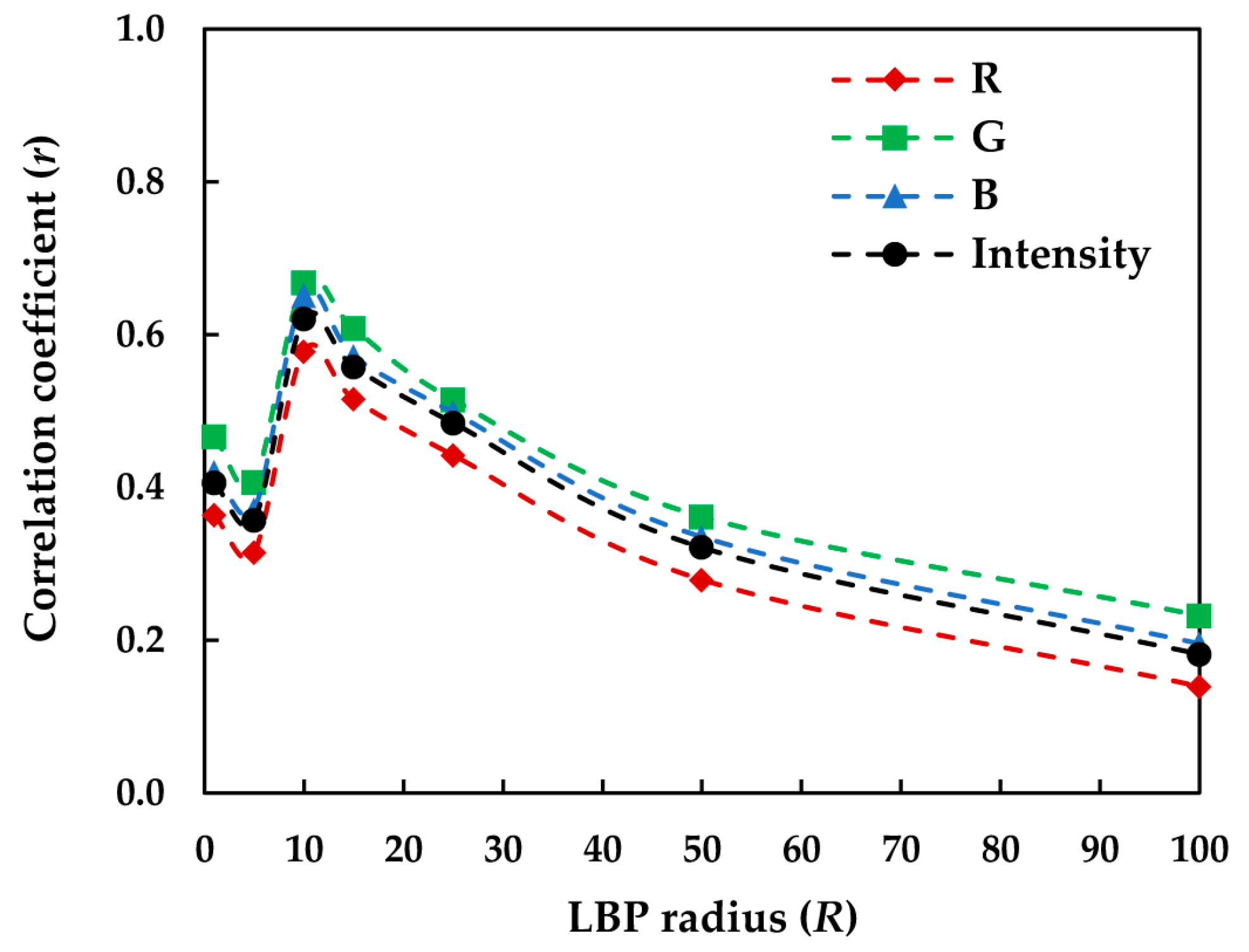

4.3.2. Local Binary Pattern (LBP) Texture Features

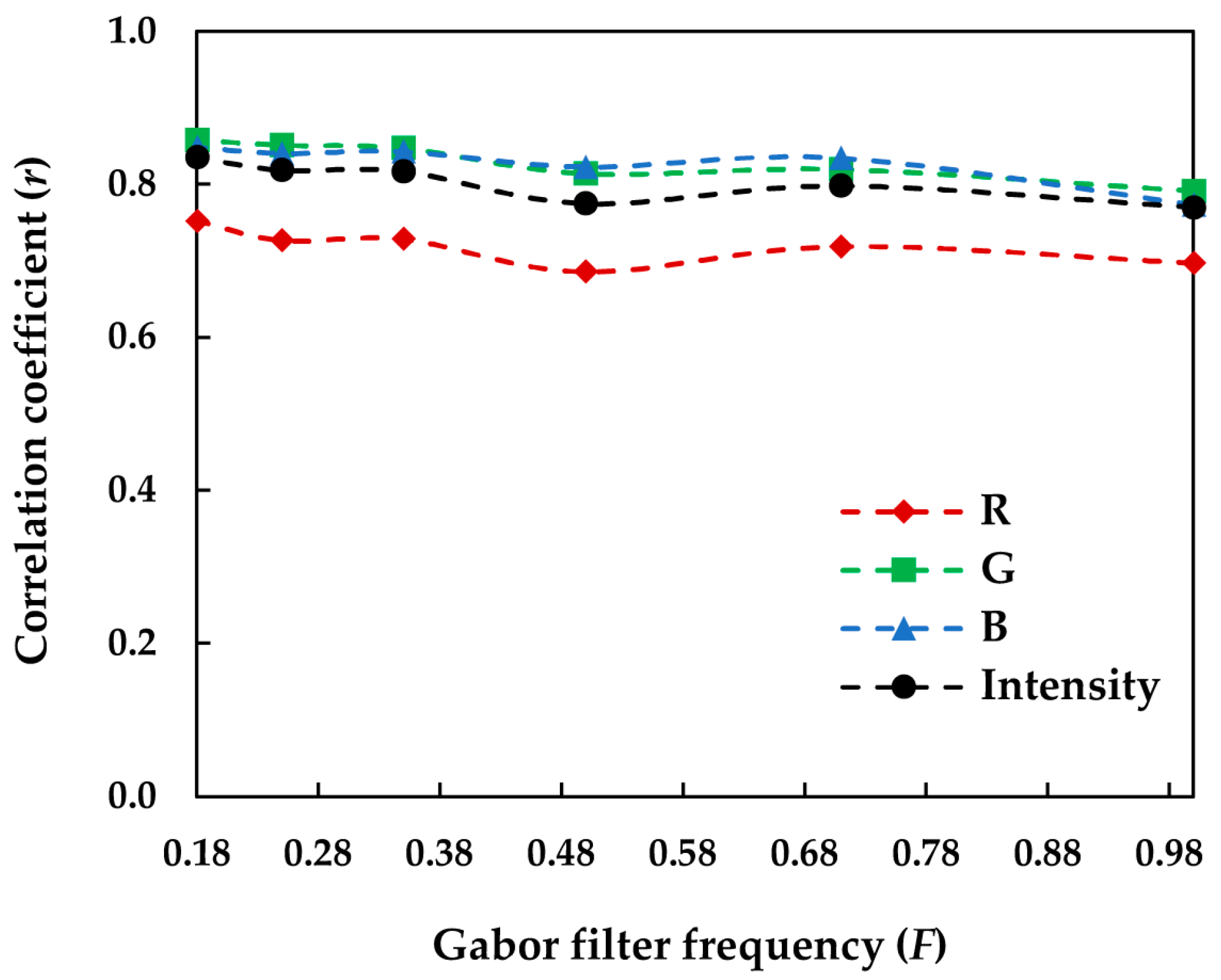

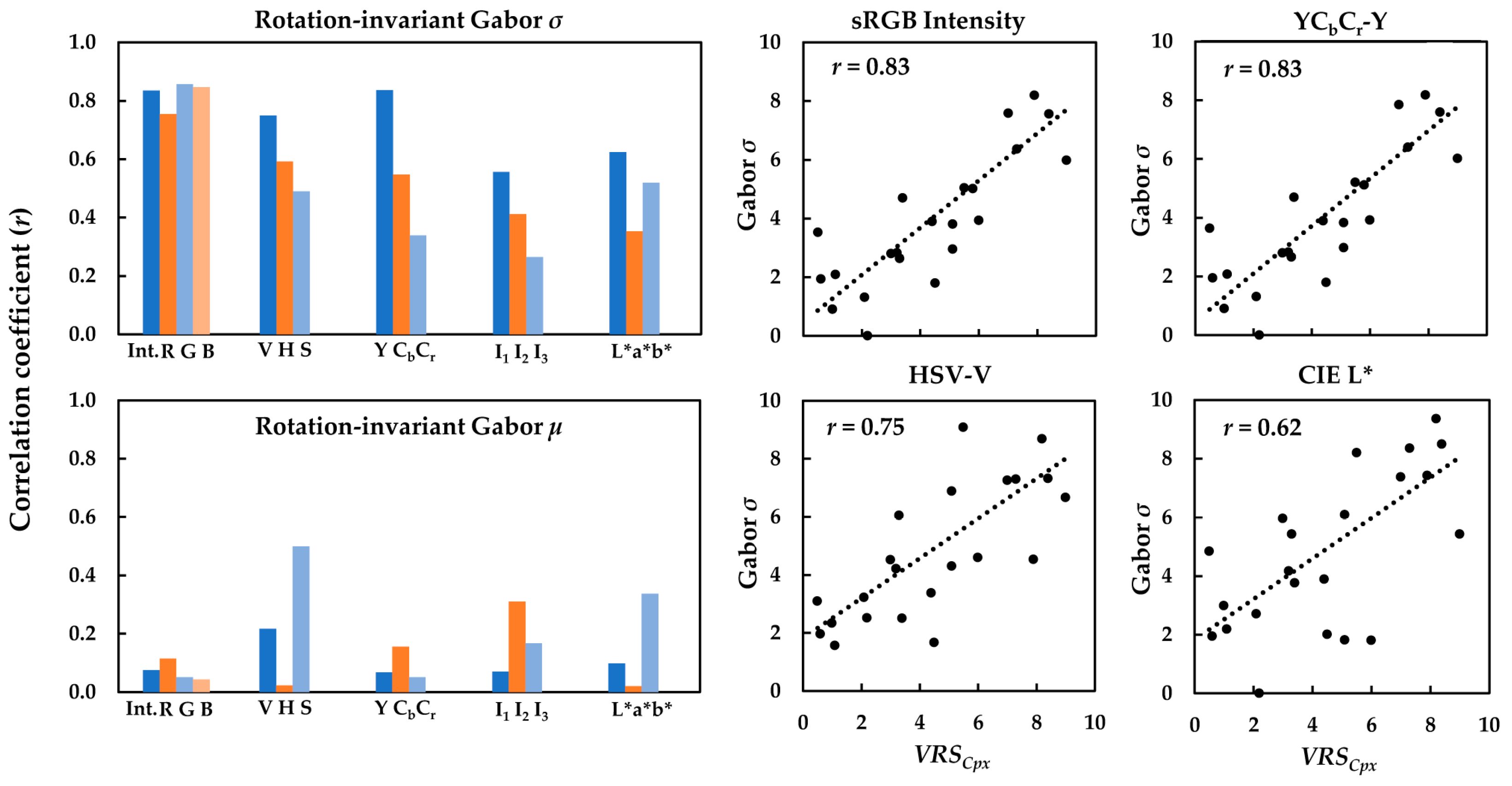

4.3.3. Gabor Features

5. Discussion

5.1. Perception of Visual Texture Complexity

5.2. Visual Texture Complexity and Its Correlation with Image Texture Measures

5.3. Most Suitable Color Spaces for Quantitative Evaluation of Visual Texture Complexity

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. The Written Instruction about the Visual Assessment Experiment

- Homogeneity

- Randomness

- Repetitiveness

- Regularity

- Color variation

- Strongness

- Complexity

- How homogeneous is fabric #1?

- Is there any fabric with higher homogeneity than fabric #1?

Appendix B. Formulas for Transforming sRGB to HSV, YCbCr, Ohta’s I1I2I3 and CIELAB Color Spaces

Appendix B.1. sRGB to Intensity Image

Appendix B.2. sRGB to HSV Color Space

Appendix B.3. sRGB to YCbCr Color Space

Appendix B.4. sRGB to Ohta’s I1I2I3 Color Space

Appendix B.5. sRGB to CIELAB Color Space

| Variable | PC1 | PC2 |
|---|---|---|
| VRSH | 0.44 | −0.04 |
| VRSR | −0.37 | −0.37 |
| VRSRp | −0.08 | 0.47 |
| VRSRg | 0.16 | 0.46 |
| VRSCV | −0.40 | 0.11 |
| VRSS | −0.34 | 0.05 |
| VRSCpx | −0.42 | −0.15 |

| Texture Measure | Factor Loading | Specific Variance |
|---|---|---|
| Global σ | 1.00 | 0.01 |
| EntpCoM | 0.83 | 0.32 |
| | 0.64 | 0.60 |
| Gabor σ | 0.96 | 0.08 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mirjalili, F.; Hardeberg, J.Y. On the Quantification of Visual Texture Complexity. J. Imaging 2022, 8, 248. https://doi.org/10.3390/jimaging8090248