Guided Filter-Based Edge Detection Algorithm for ICT Images of Solid Rocket Motor Propellant

Abstract

1. Introduction

2. Problem Description

3. The Proposed Algorithm Based on the Guided Filter

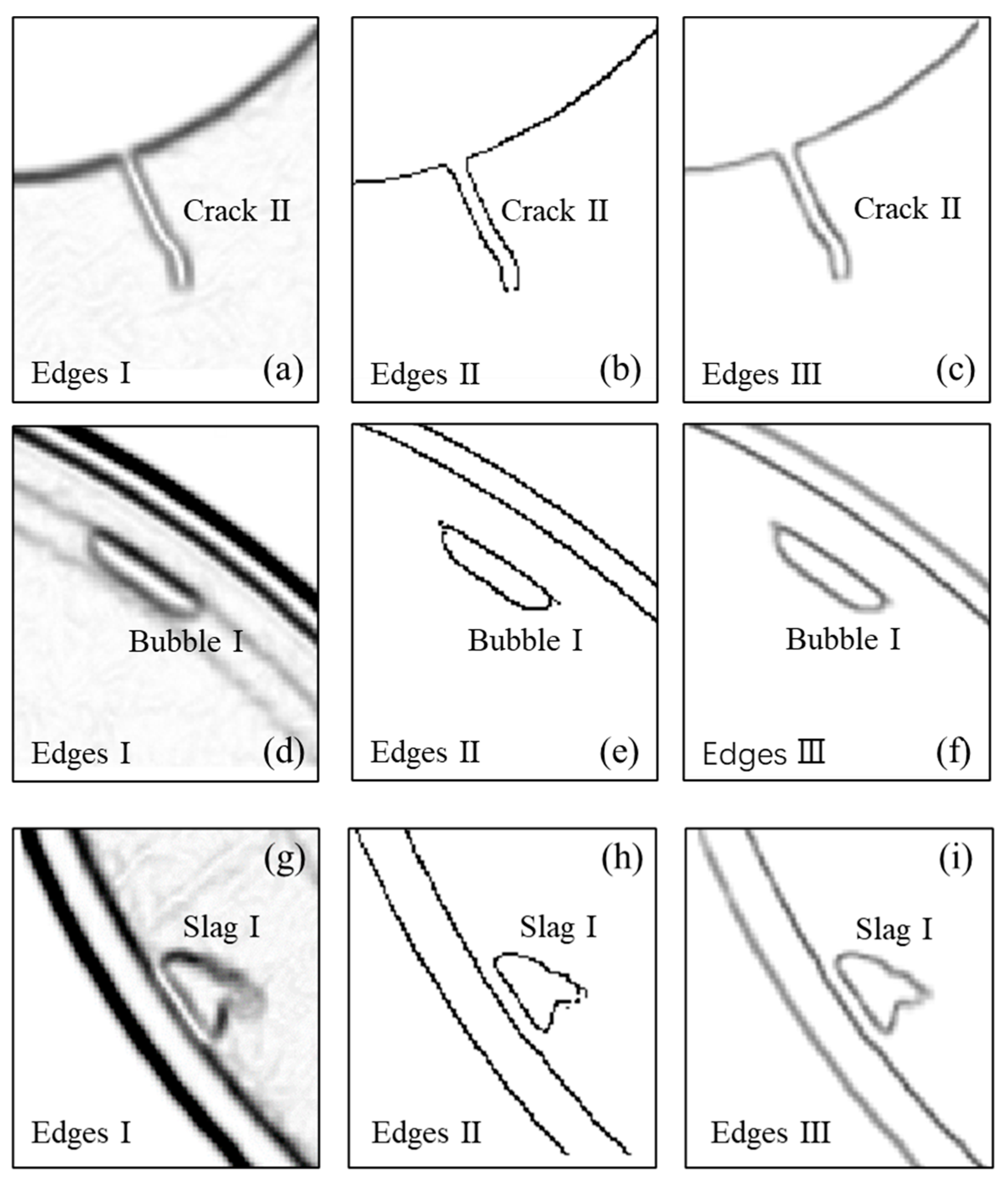

4. Experimental Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Edge Type | Crack | Bubble | Slag |
|---|---|---|---|
| Edges I | 11.7346 | 10.3247 | 8.7919 |
| Edges II | 10.9417 | 9.4380 | 7.6812 |
| Edges III | 11.2083 | 9.6185 | 7.8318 |

| Edge Type | Crack | Bubble | Slag |
|---|---|---|---|
| Edges I | 0.5980 | 0.5961 | 0.2324 |
| Edges II | 0.6092 | 0.6033 | 0.2382 |
| Edges III | 0.6106 | 0.6036 | 0.2389 |

| Metric | Image Number | Edges I | Edges II | Edges III |
|---|---|---|---|---|
| PSNR | 1 | 6.1767 | 6.1908 ↗ | 6.2003 ↗ |
| | 2 | 6.4420 | 6.4576 ↗ | 6.4672 ↗ |
| | 3 | 6.4228 | 6.4462 ↗ | 6.4566 ↗ |
| | 4 | 6.4516 | 6.4704 ↗ | 6.4798 ↗ |
| | 5 | 6.5189 | 6.5417 ↗ | 6.5450 ↗ |
| | 6 | 5.9414 | 5.9560 ↗ | 5.9566 ↗ |
| | 7 | 6.5196 | 6.5423 ↗ | 6.5455 ↗ |
| | 8 | 6.2127 | 6.2364 ↗ | 6.2473 ↗ |
| | 9 | 6.5203 | 6.5430 ↗ | 6.5463 ↗ |
| | 10 | 6.1763 | 6.1907 ↗ | 6.2002 ↗ |

| Metric | Image Number | Edges I | Edges II | Edges III |
|---|---|---|---|---|
| SSIM | 1 | 0.5989 | 0.6092 ↗ | 0.6115 ↗ |
| | 2 | 0.5915 | 0.6009 ↗ | 0.6030 ↗ |
| | 3 | 0.5967 | 0.6033 ↗ | 0.6043 ↗ |
| | 4 | 0.5907 | 0.5996 ↗ | 0.6006 ↗ |
| | 5 | 0.4926 | 0.5000 ↗ | 0.5016 ↗ |
| | 6 | 0.5667 | 0.5778 ↗ | 0.5796 ↗ |
| | 7 | 0.4927 | 0.4995 ↗ | 0.5017 ↗ |
| | 8 | 0.6068 | 0.6147 ↗ | 0.6157 ↗ |
| | 9 | 0.4927 | 0.5000 ↗ | 0.5017 ↗ |
| | 10 | 0.5990 | 0.6093 ↗ | 0.6116 ↗ |

| Metric | Image Number | Edges I | Edges II | Edges III | Total Time |
|---|---|---|---|---|---|
| Time (s) | 1 | 2.043 | 1.858 | 0.469 | 4.370 |
| | 2 | 2.391 | 1.640 | 0.520 | 4.551 |
| | 3 | 1.949 | 2.047 | 0.717 | 4.713 |
| | 4 | 1.319 | 2.519 | 0.790 | 4.628 |
| | 5 | 2.042 | 1.779 | 0.695 | 4.516 |
| | 6 | 1.899 | 1.957 | 0.643 | 4.499 |
| | 7 | 1.895 | 1.848 | 0.666 | 4.409 |
| | 8 | 1.469 | 2.392 | 0.776 | 4.637 |
| | 9 | 1.551 | 2.147 | 0.779 | 4.477 |
| | 10 | 1.934 | 1.977 | 0.598 | 4.509 |
| Average Time (s) | - | 1.849 | 2.016 | 0.665 | 4.530 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dai, J.; Li, T.; Xuan, Z. Guided Filter-Based Edge Detection Algorithm for ICT Images of Solid Rocket Motor Propellant. Electronics 2022, 11, 2118. https://doi.org/10.3390/electronics11142118
