Abstract

More accurate and standardised screening and assessment instruments are needed for studies to better understand the epidemiology of mild cognitive impairment (MCI) and dementia in Europe. The Survey of Health, Ageing and Retirement in Europe (SHARE) lacks a harmonised multi-domain cognitive test. The current study proposes and validates a new instrument, the SHARE cognitive instrument (SHARE-Cog), for this large European longitudinal cohort. Three cognitive domains/subtests were available across all main waves of the SHARE and were incorporated into SHARE-Cog: 10-word registration, verbal fluency (animal naming) and 10-word recall. Subtests were weighted using regression analysis. Diagnostic accuracy was assessed from the area under the curve (AUC) of receiver operating characteristic curves. Diagnostic categories included normal cognition (NC), subjective memory complaints (SMC), MCI and dementia. A total of 20,752 participants from wave 8 were included, with a mean age of 75 years; 55% were female. A 45-point SHARE-Cog was developed and validated; it had excellent diagnostic accuracy for identifying dementia (AUC = 0.91), very good diagnostic accuracy for cognitive impairment (MCI + dementia) (AUC = 0.81), and good diagnostic accuracy for distinguishing MCI from dementia (AUC = 0.76) and MCI from SMC + NC (AUC = 0.77). SHARE-Cog is a new, short cognitive screening instrument developed and validated to assess cognition in the SHARE. In this cross-sectional analysis, it had good to excellent diagnostic accuracy for identifying cognitive impairment in this wave of SHARE, but further study is required to confirm this in previous waves and over time.

1. Introduction

The world’s population is ageing: the number of people aged ≥65 years is expected to more than double, from 771 million in 2022 to 1.6 billion by 2050 [1]. This trend is associated with an increased prevalence of cognitive impairment, including dementia [2]. Estimates from the Global Burden of Disease study suggest that the number of people living with dementia will increase from 57.4 million in 2019 to 152.8 million by 2050 [3]. Ageing is also associated with other cognitive conditions that herald or predispose to the development of dementia, such as mild cognitive impairment (MCI), a minor neurocognitive disorder characterised by symptoms and observable deficits in cognition without a loss of function [4], and subjective memory complaints (SMC) [5].

For healthcare practitioners, the early detection of cognitive symptoms may improve outcomes by facilitating the prompt initiation of multi-modal management strategies, including lifestyle risk factor modification and, for those with dementia, treatment with cholinesterase inhibitors [6,7]. Recent studies showing the potential of disease-modifying therapies have added impetus to this. Although the evidence supporting population- or community-level cognitive screening of asymptomatic individuals is limited at present [8,9,10], short, accurate cognitive screening instruments (CSIs) improve case-finding and can aid the development of integrated care pathways [11,12]. Because CSIs often have poor sensitivity and specificity [12], and because of the well-recognised time–accuracy trade-off, whereby longer tests are more accurate but more time-consuming to administer [13], it is important to find approaches that balance brevity with sensitivity and specificity.

For researchers, especially in epidemiology, estimates of cognitive impairment are often missing and/or not comparable between studies. For example, the need for more standardised diagnostic criteria has been raised in numerous systematic reviews examining the prevalence [14,15,16] and incidence [17] of MCI. Some reviews have addressed this issue by applying strict inclusion criteria, which has produced more harmonised estimates but has also greatly limited the number of studies available for comparison [18,19]. Several efforts have also been made to document the cognitive items or domains (e.g., short-term memory or language) available across large studies of ageing [20,21,22] and to identify which are harmonised across different longitudinal studies [23,24]. Many of these population-level longitudinal studies are modelled on the Health and Retirement Study (HRS) in the United States [21] and thus contain similar questions.

The largest of these HRS-based longitudinal studies is the Survey of Health, Ageing and Retirement in Europe (SHARE), which, to date (2004 to 2023), has included 29 countries and 8 main study waves. It focuses on people aged ≥50 years and their partners. While the SHARE includes multiple cognitive assessments, it lacks a cognitive scale combining different cognitive domains to measure impairment over time. This limits the ability of the study and researchers accessing the dataset to (1) track cognitive decline over time in this population, (2) properly examine the epidemiology of MCI and dementia and (3) compare findings with other studies that include established instruments. In this context, the objective of this study is to propose and validate a new, short CSI for use in SHARE. This SHARE cognitive instrument (SHARE-Cog) could then be used to produce harmonised estimates of MCI and dementia in the SHARE, and other similar studies, where it could be replicated.

2. Materials and Methods

2.1. Sample

This study included participants aged ≥65 years who completed a longitudinal questionnaire in wave 8 (2019/20) of the SHARE [25] in 19 countries (Austria, Belgium, Croatia, Czech Republic, Denmark, Estonia, France, Germany, Greece, Hungary, Israel, Italy, Luxembourg, Netherlands, Poland, Slovenia, Spain, Sweden and Switzerland). These questionnaires were standardised across countries and were administered by trained personnel via computer-assisted interviews. Further methodological details of the SHARE study have been published elsewhere [26]. Only these participants were selected for the current analysis, because a greater number of cognitive tests was available for them. Participants were excluded if they had missing data for the variables of interest, were physically unable to complete the cognitive drawing tests or could not be reliably classified into one of the cognitive diagnostic groups.

2.2. Cognitive Tests

2.2.1. SHARE Cognitive Instrument (SHARE-Cog)

The CSI proposed for the SHARE contains three subtests/domains: 10-word registration, verbal fluency and 10-word recall.

For 10-word registration the interviewee was read a list of 10 words aloud and was asked to immediately recall as many as they could. The task was explained to the participant prior to reading the word list as follows: “Now, I am going to read a list of words from my computer screen. We have purposely made the list long so it will be difficult for anyone to recall all the words. Most people recall just a few. Please listen carefully, as the set of words cannot be repeated. When I have finished, I will ask you to recall aloud as many of the words as you can, in any order. Is this clear?”. One of four word lists was randomly chosen by the computer. The participant only had one attempt.

For verbal fluency, the participant was asked to name as many animals as they could within one minute. The test was timed and ended after exactly one minute, with no extension if the instructions needed to be repeated. The basic instruction, “I want you to tell me all the animals you can think of”, was repeated if the respondent was silent for 15 s, and respondents who appeared to stop early could be encouraged to try to find more words. Any member of the animal kingdom and mythical animals, including different breeds and male, female and infant names within a species, were accepted. Repetitions, redundancies and proper nouns were not.

The 10-word recall task took place after the verbal fluency test and a serial-7 subtraction task, and the participants were asked to recall as many words as they could from the 10-word registration list. The question was asked as: “A little while ago, the computer read you a list of words, and you repeated the ones you could remember. Please tell me any of the words that you can remember now?”.

The SHARE-Cog instrument (scoring template) and scoring instructions are presented in the Supplementary Material.

2.2.2. Cognitive Battery

Additional subtests/domains available in SHARE wave 8 were compiled into a more comprehensive cognitive battery comprising six subtests, which served as the reference (“gold”) standard for the diagnostic accuracy analysis. These subtests resembled, and often mirrored, items in the Montreal Cognitive Assessment (MoCA) [27], and the same item-scoring approach was applied, giving a battery with a total score of 16 points (from 0, indicating poor cognition and an inability to complete the items, to 16, suggesting normal cognition).

There were three visuospatial/executive function tasks (5 points): a clock drawing test and instructions to copy images of a cube and an infinity loop. The clock drawing test involved drawing the clock face and setting the time to ten past five. As in the MoCA, it was scored with one point for each correct part (contour, numbers and hands). The cube and infinity loop drawings were scored 1 point each if fully correct. Verbal object naming (3 points) was assessed by verbally describing three objects and asking the participant to name them: scissors (“What do people usually use to cut paper?”), a cactus (“What do you call the kind of prickly plant that grows in the desert?”) and a pharmacy (“Where do people usually go to buy medicine?”). Synonyms and names of cacti were acceptable, and each correct answer was scored 1 point. Attention/numeracy (4 points) included serial 7s subtraction (3 points) and counting backwards from 20 (1 point). The serial 7s task involved subtracting 7 five times in succession, starting from 100, and, as in the MoCA, was scored as 3 points for 4 or 5 correct subtractions, 2 points for 2 or 3, 1 point for 1 and 0 if none were correct. For the counting task, participants were asked to count backwards as quickly as they could starting from 20 and were given up to two attempts. They received one point if they successfully counted backwards from 19 to 10 or from 20 to 11. Orientation (4 points) was also assessed, with participants receiving one point for each of the following they gave correctly: date, month, year and day of the week.
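The item scoring above can be sketched as a small Python function. This is an illustration, not the authors' R code: the function names are hypothetical, the inputs are assumed to be already coded item by item, and serial 7s answers are assumed to be judged independently, following the usual MoCA convention (an answer counts if it is exactly 7 less than the previous number the participant gave).

```python
# Sketch of the 16-point MoCA-style battery total (hypothetical function names).
# Assumption: serial 7s answers are judged independently (MoCA convention).

def serial7_correct(answers, start=100):
    """Count correct subtractions: an answer is correct if it is 7 less than the previous number."""
    correct = 0
    previous = start
    for answer in answers[:5]:  # at most five subtractions are scored
        if previous - answer == 7:
            correct += 1
        previous = answer  # the next step is judged against whatever was said
    return correct


def serial7_points(n_correct):
    """MoCA mapping: 4-5 correct -> 3 points, 2-3 -> 2, 1 -> 1, 0 -> 0."""
    if n_correct >= 4:
        return 3
    if n_correct >= 2:
        return 2
    return n_correct  # 1 -> 1, 0 -> 0


def battery_total(clock_parts, cube_ok, loop_ok, objects_named,
                  serial7_answers, count_back_ok, orientation_correct):
    """Sum the four domains; inputs are assumed to be pre-coded item scores."""
    visuospatial = clock_parts + int(cube_ok) + int(loop_ok)   # 0-5 (clock 0-3)
    naming = objects_named                                      # 0-3
    attention = serial7_points(serial7_correct(serial7_answers)) + int(count_back_ok)  # 0-4
    orientation = orientation_correct                           # 0-4 (date, month, year, weekday)
    return visuospatial + naming + attention + orientation      # 0-16


print(battery_total(3, True, True, 3, [93, 86, 79, 72, 65], True, 4))  # 16
```

Under the independent-judging assumption, a sequence such as 93, 85, 78, 71, 64 still earns 4 correct subtractions (and therefore 3 points), because only the step from 93 to 85 is wrong.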

2.3. Descriptive Variables

The main descriptive variables included age, sex, education and activities of daily living (ADL). Age was calculated from the year and month of birth and of the interview, and was divided into 10-year age groups of 65–74, 75–84 and ≥85 years. Education was measured according to the International Standard Classification of Education (ISCED) 1997 [28] and was divided into the following groups: low (0–2, none to lower secondary), medium (3–4, upper secondary and post-secondary non-tertiary) and high (5–6, tertiary) [29]. The ambiguous responses “still in school” and “other” could not be categorised and were excluded.
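The age and education groupings can be expressed as simple mapping functions. This is a sketch under stated assumptions: age is computed in completed years from month-level dates, treating a birthday in the interview month as already passed, and any code outside ISCED levels 0–6 (such as “still in school” or “other”) is returned as None and excluded. The helper names are hypothetical.

```python
# Sketch of the age and education groupings (hypothetical helper names).

def age_at_interview(birth_year, birth_month, interview_year, interview_month):
    """Completed years; assumes a birthday in the interview month has already passed."""
    age = interview_year - birth_year
    if interview_month < birth_month:
        age -= 1
    return age


def age_group(age):
    """10-year groups used in the study; None for anyone under 65 (not in the sample)."""
    if age < 65:
        return None
    if age < 75:
        return "65-74"
    if age < 85:
        return "75-84"
    return ">=85"


def education_group(isced_level):
    """ISCED-1997 level 0-6 -> low/medium/high; any other code -> None (excluded)."""
    if isced_level in (0, 1, 2):
        return "low"      # none to lower secondary
    if isced_level in (3, 4):
        return "medium"   # upper secondary and post-secondary non-tertiary
    if isced_level in (5, 6):
        return "high"     # tertiary
    return None


print(age_group(age_at_interview(1945, 10, 2020, 3)), education_group(4))  # prints: 65-74 medium
```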

ADL difficulties were self-reported in response to questions such as: “Please tell me if you have any difficulty with these activities because of a physical, mental, emotional or memory problem… exclude any difficulties you expect to last less than three months”. For this study, three cognitively focused instrumental activities of daily living (IADL) were considered: “making telephone calls”; “taking medications”; and “managing money, such as paying bills and keeping track of expenses”. Disability, including physical causes, was assessed using the Global Activity Limitation Indicator (GALI): “For the past six months at least, to what extent have you been limited because of a health problem in activities people usually do? Severely limited; Limited, but not severely or Not limited” [30].

Additional descriptive variables included living alone, employment status, multimorbidity (defined as having at least two comorbidities based on a self-reported list of sixteen conditions), eyesight problems (self-rated as “fair” or “poor”), hearing problems (self-rated as “fair” or “poor”), low self-rated health (rated as “fair” or “poor”), physical frailty (according to the SHARE-FI [31]) and hospitalisation (self-reported as an overnight hospital stay in the last year).

2.4. Cognitive Classifications

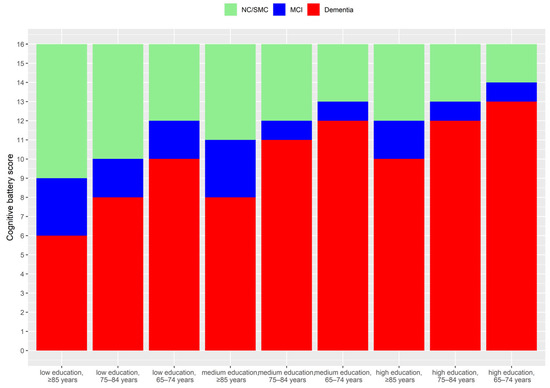

Participants were divided into the following diagnostic groups: dementia, MCI, SMC and normal cognition, using age- and education-specific cut-offs on the cognitive battery (Figure 1, Table S1) together with questions on IADL difficulties, subjective memory issues and reports of doctor-diagnosed memory problems. Each category is described below, and a detailed overview of the categorisation scheme is provided in Table S2.

Figure 1.

Age and education-specific cut-offs applied for the identification of an objective cognitive deficit on testing. Cut-offs were defined based on being 1 or 2 standard deviations (SD) below the mean cognitive score.
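The cut-offs in Figure 1 can be derived for each age × education stratum as the mean minus 1 SD and the mean minus 2 SD of the battery score. The sketch below assumes the sample SD and strata formed from the analytic sample itself; the exact reference sample and rounding behind Table S1 are not specified here, and the function name is hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev


def stratum_cutoffs(records):
    """Return {(age_group, education_group): (mean - 1 SD, mean - 2 SD)}.

    records: iterable of (age_group, education_group, battery_score) tuples.
    Assumes the sample SD; each stratum needs at least two scores.
    """
    scores = defaultdict(list)
    for age_grp, edu_grp, score in records:
        scores[(age_grp, edu_grp)].append(score)

    cutoffs = {}
    for stratum, values in scores.items():
        m, sd = mean(values), stdev(values)
        cutoffs[stratum] = (m - sd, m - 2 * sd)  # MCI boundary, dementia boundary
    return cutoffs


example = [("65-74", "low", 10), ("65-74", "low", 12), ("65-74", "low", 14)]
print(stratum_cutoffs(example))  # {('65-74', 'low'): (10.0, 8.0)}
```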

2.4.1. Dementia (D)

Dementia involves cognitive impairment severe enough to interfere with ADLs [32]. Although a clinically confirmed diagnosis of dementia is not available in the SHARE, participants could report that “a doctor told [them]… and [they] are either currently being treated for or bothered by… Alzheimer’s disease, dementia, organic brain syndrome, senility or any other serious memory impairment”. If these participants also reported difficulty with cognitively focused IADLs (telephone calls, taking medications and managing money), they were considered to have dementia. In addition, those reporting difficulties with these cognitively focused IADLs who also scored 2 or more standard deviations (SD) below the mean cognitive battery score for their age group and education level (Figure 1) were considered to have dementia [32].

2.4.2. Mild Cognitive Impairment (MCI)

MCI was determined based on the standard Petersen criteria [33]: objective evidence of cognitive impairment on testing, a subjective self-reported cognitive complaint, preserved independence in functional abilities (ADLs) and no dementia [33]. Objective cognitive impairment was defined as a score between 1 and 2 standard deviations below the mean cognitive battery score for the participant’s age group and education level [32], as shown in Figure 1. A subjective complaint was defined as a response of “fair” or “poor” to the question: “How would you rate your memory at the present time? Would you say it is excellent, very good, good, fair or poor?”. For independence in functional abilities, the three cognitively focused IADLs considered were telephone calls, taking medications and managing money. In addition, participants scoring within the MCI or normal cognitive range, with no IADL impairment, who reported that a doctor had diagnosed them with “serious memory impairment” were considered to have MCI.

2.4.3. Subjective Memory Complaints (SMC)

Participants who scored above the cut-off of 1 SD below the mean cognitive battery score (Figure 1), and who did not meet the criteria for dementia or MCI, were categorised as SMC if they responded “fair” or “poor” to the question: “How would you rate your memory at the present time? Would you say it is excellent, very good, good, fair or poor?”. Those reporting IADL difficulties were difficult to categorise and were excluded from this analysis (Table S2).
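The decision rules for dementia, MCI and SMC can be combined into a single classifier. This is a hedged sketch, not the authors' implementation, and the function name is hypothetical. Two points are assumptions: a score exactly on a cut-off is treated as below it, and the rule for normal cognition (score above the -1 SD cut-off, no memory complaint, no IADL difficulty and no doctor diagnosis) is inferred rather than taken from the text. Cases the rules do not assign to a group are returned as None, corresponding to the exclusion of unclassifiable participants.

```python
def classify(score, cut_1sd, cut_2sd, iadl_difficulty, memory_rating, doctor_diagnosis):
    """Return "dementia", "MCI", "SMC", "NC", or None (unclassifiable, excluded).

    memory_rating: "excellent", "very good", "good", "fair" or "poor".
    Assumptions: a score equal to a cut-off counts as below it, and the NC rule
    (no complaint, no IADL difficulty, no diagnosis, above -1 SD) is inferred.
    """
    complaint = memory_rating in ("fair", "poor")
    below_2sd = score <= cut_2sd
    below_1sd = score <= cut_1sd  # also True whenever below_2sd is True

    if iadl_difficulty:
        # Dementia: IADL difficulty plus a doctor diagnosis or a score at/below -2 SD.
        return "dementia" if (doctor_diagnosis or below_2sd) else None
    if doctor_diagnosis and not below_2sd:
        return "MCI"  # diagnosis, MCI or normal score range, no IADL difficulty
    if below_2sd:
        return None   # very low score without IADL difficulty: not assigned by the rules
    if below_1sd:
        return "MCI" if complaint else None  # Petersen criteria need a complaint
    return "SMC" if complaint else "NC"
```

For example, with cut-offs of 10 (-1 SD) and 8 (-2 SD), a participant scoring 9 who rates their memory as “fair” and reports no IADL difficulty is classified as MCI.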

2.4.4. Normal Cognition (NC)

2.5. Statistical Analysis

All analyses were carried out in R (version 4.2.1). The statistical significance of differences in categorical variables between cognitive groups was assessed using Pearson’s chi-squared test. To determine the optimal weight for each SHARE-Cog subtest, the subtests were included in a multivariable logistic regression model and their regression coefficients were compared. The overall performance (i.e., statistical fit) of each logistic model was assessed using Estrella’s pseudo R-squared, which ranges from 0 to 1, where 1 represents a perfect fit [34,35]. Dominance analysis was carried out to assess the relative importance of each subtest in the model, measured as the average additional pseudo R-squared gained by including that subtest [36]. The area under the curve (AUC) of the receiver operating characteristic (ROC) curve was used to assess diagnostic accuracy, and 95% confidence intervals (CI) were calculated using DeLong’s method [37]. Covariate-adjusted AUC estimates, obtained with a frequentist semiparametric approach, were used to assess whether differences in age, education, sex and country affected predictive accuracy [38]. For this study, the AUC was interpreted as “excellent” (0.9 to 1), “very good” (0.8 to 0.9), “good” (0.7 to 0.8), “sufficient” (0.6 to 0.7) and “bad” (0.5 to 0.6) [39]. Pearson’s correlation was used to assess the correlation between the three SHARE-Cog subtests. Internal consistency of the SHARE-Cog was measured using Cronbach’s alpha (α) with the rule-of-thumb cut-offs of unacceptable (<0.5), poor (0.5–0.6), questionable (0.6–0.7), acceptable (0.7–0.8), good (0.8–0.9) and excellent (>0.9) [40].
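Estrella’s pseudo R-squared can be computed from the log-likelihoods of the fitted and intercept-only logistic models. The sketch below uses the formula as we understand Estrella’s measure, R2_E = 1 - (ln L_full / ln L_null)^(-(2/n) ln L_null), and should be checked against the cited sources before reuse. For a binary outcome, the intercept-only log-likelihood has a closed form, included here as a hypothetical helper.

```python
import math


def estrella_r2(ll_full, ll_null, n):
    """Estrella's pseudo R-squared: 1 - (ll_full / ll_null) ** (-(2 / n) * ll_null).

    ll_full, ll_null: log-likelihoods of the fitted and intercept-only models (<= 0).
    Returns 0 when the model adds nothing and 1 for a perfect fit (ll_full == 0).
    """
    if ll_null >= 0 or ll_full > 0 or ll_full < ll_null:
        raise ValueError("expected ll_null <= ll_full <= 0 and ll_null < 0")
    ratio = abs(ll_full) / abs(ll_null)  # in [0, 1]
    return 1 - ratio ** (-(2 / n) * ll_null)


def null_loglik(n_cases, n_total):
    """Intercept-only log-likelihood for a binary outcome (0 < n_cases < n_total)."""
    p = n_cases / n_total
    return n_cases * math.log(p) + (n_total - n_cases) * math.log(1 - p)
```

For example, with n = 100, a null log-likelihood of -50 and a fitted log-likelihood of -40, the exponent is 1 and R2_E = 1 - 0.8 = 0.2.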

3. Results

3.1. Sample Description

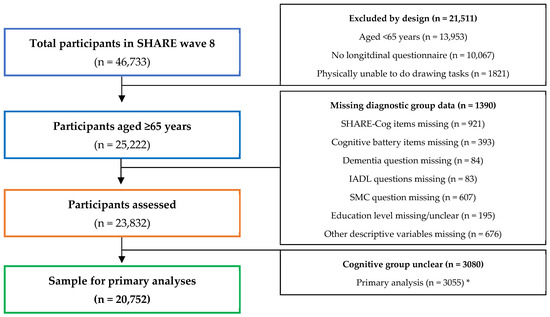

This study is a secondary analysis of participants from the SHARE wave 8 and the selection process for inclusion in this study is described in a flow diagram (Figure 2).

Figure 2.

Flow diagram illustrating participant selection and reasons for exclusion from this secondary analysis. * Sensitivity analyses (presented in the Supplementary Material) excluded different numbers of participants owing to unclear cognitive groups; details are provided in Table S2.

The mean (SD) age of the participants was 74.67 (6.61) years, and 55% were female. Additional descriptive statistics by cognitive group are provided in Table 1; they show that cognitive status was associated with statistically significant differences in numerous health characteristics, such as multimorbidity, frailty and an overnight hospital stay in the last year.

Table 1.

Descriptive characteristics of the participants by diagnostic category: dementia, mild cognitive impairment (MCI), subjective memory complaints (SMCs) and normal cognition (NC).

3.2. Regression Analysis and SHARE-Cog Weighting

Logistic regression was performed using the three cognitive tests (word registration, verbal fluency and delayed recall) to assess their relative diagnostic importance and to decide how they should be scored within the overall SHARE-Cog instrument (weighting).

3.2.1. Maximum Score for Verbal Fluency

The number of animals named in the verbal fluency task ranged from 0 to 100 in the SHARE. However, based on the pseudo R-squared and AUC values (Table S3), lowering the maximum animal count to as low as 30 had almost no impact on the diagnostic accuracy of the regression model. The maximum was therefore set to 30 for further analysis, equating to an average of one animal named every 2 s. Where participants named more than 30 animals, the count was capped at 30, with no additional points awarded above this threshold.

3.2.2. Relative Importance of Each Subtest

As presented in Table 2, the regression models including word registration, verbal fluency and word recall achieved good diagnostic accuracy (AUC) and explained a fair amount of the variability in the outcomes (pseudo R-squared). The dominance analysis found that all three subtests contributed to the performance of the models and are thus all worth including. Word registration contributed more than word recall when distinguishing dementia from MCI, whereas word recall contributed more when distinguishing MCI from NC.

Table 2.

Regression modelling (unadjusted) of the three SHARE-Cog items for different diagnostic comparisons, including the regression coefficients for the best fit, the overall diagnostic performance of the model and the relative importance of each item with regard to model performance (dominance analysis).

3.2.3. Scoring of Each Subtest for SHARE-Cog

As presented in Table 2, the regression coefficients indicate the optimal value per correct word for each subtest in each model. Because these optimal values varied between comparisons, a further analysis examined the accuracy (AUC) of all unique weighting ratios (n = 291) created by combinations of 0.5, 1, 2, 3 and 4 points per word, with the maximum for animal naming in verbal fluency set to 20, 30 or 40 (Table S4). Multiple weightings achieved very similar diagnostic accuracy, so clinical judgement based on the literature was also applied to decide on the final weighting. As verbal fluency and delayed recall are more accurate than registration in diagnostic accuracy studies of individual patients [41], a weighting of 1 point per word for registration, 0.5 points per animal for verbal fluency (counting a maximum of 30 animals and rounding half-points up to the nearest whole number) and 2 points per word for recall was selected. This gave the SHARE-Cog a total score of 45 points: 10 (registration), 15 (verbal fluency) and 20 (recall).
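The final scoring rule, and the count of candidate weightings, can both be checked with a short sketch; the function names are hypothetical. Because verbal fluency earns half-points, rounding half-points up is the same as taking the ceiling of n/2, and integer arithmetic is used because Python’s built-in `round` rounds halves to even. The second function assumes that “unique weighting ratios” means weight triples that differ by more than an overall scale factor; under that assumption the 125 triples drawn from {0.5, 1, 2, 3, 4} collapse to 97 distinct ratios, and 97 × 3 animal caps gives 291.

```python
from fractions import Fraction
from itertools import product


def share_cog_score(registered, animals, recalled, animal_cap=30):
    """SHARE-Cog total (0-45): 1 point per registered word, 0.5 per animal, 2 per recalled word."""
    if not (0 <= registered <= 10 and 0 <= recalled <= 10 and animals >= 0):
        raise ValueError("word counts must be 0-10 and the animal count non-negative")
    capped = min(animals, animal_cap)   # no extra credit above the cap
    fluency = (capped + 1) // 2         # half-points rounded up, i.e. ceil(capped / 2)
    return registered + fluency + 2 * recalled


def count_unique_weightings(levels=(0.5, 1, 2, 3, 4), caps=(20, 30, 40)):
    """Count weight triples that differ in ratio (not just scale), times the number of caps."""
    ratios = set()
    for weights in product(levels, repeat=3):
        w = [Fraction(x) for x in weights]       # exact arithmetic (0.5 is exact in binary)
        ratios.add((w[1] / w[0], w[2] / w[0]))  # scale-free representation of the triple
    return len(ratios) * len(caps)


print(share_cog_score(5, 21, 4))   # 5 + 11 + 8 = 24
print(count_unique_weightings())   # 97 ratios x 3 caps = 291
```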

3.3. SHARE-Cog Items and Internal Consistency

The 10-word registration subtest ranged between 0 and 10, with a mean of 5.29 words (SD = 1.61, 99th percentile = 9). Verbal fluency ranged between 0 and 100 animals, with a mean of 20.69 animals (SD = 7.09, 99th percentile = 39). The 10-word recall subtest ranged between 0 and 10, with a mean of 3.9 words (SD = 2.05, 99th percentile = 9). After applying the weights and the 30-animal limit for verbal fluency, the mean (SD) values of the SHARE-Cog subtests were 5.29 (1.61) for word registration, 10.14 (3.12) for verbal fluency and 7.81 (4.10) for word recall. The total SHARE-Cog score ranged from 0 to 45, with a mean of 23.24 points (SD = 7.43, 99th percentile = 40). The Pearson correlations (r) between the SHARE-Cog subtests were 0.49 for registration and verbal fluency, 0.46 for recall and verbal fluency and 0.73 for registration and recall. The overall internal consistency of SHARE-Cog was “acceptable” (Cronbach’s alpha = 0.71).
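Cronbach’s alpha for the three subtests follows the usual formula, alpha = k/(k - 1) × (1 - sum of item variances / variance of the total score). The sketch below uses sample (n - 1) variances from Python’s `statistics` module; the function name is hypothetical, and the input layout (one list of scores per subtest, aligned by participant) is an assumption.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha; items is a list of k score lists aligned by participant."""
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    totals = [sum(person) for person in zip(*items)]      # total score per participant
    item_var = sum(variance(scores) for scores in items)  # sample (n - 1) variances
    return k / (k - 1) * (1 - item_var / variance(totals))


print(round(cronbach_alpha([[1, 2, 3], [2, 2, 4]]), 4))  # 12/13 -> 0.9231
```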

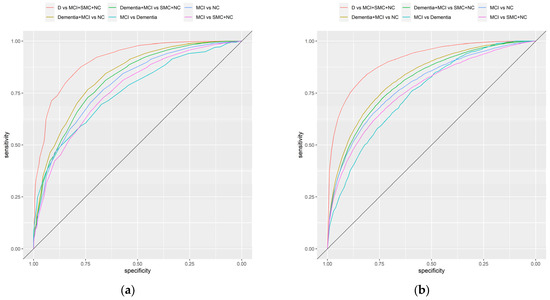

3.4. SHARE-Cog Diagnostic Accuracy

The diagnostic accuracy of SHARE-Cog (Figure 3a) was excellent for differentiating dementia from all other groups (AUC = 0.91, 95% CI: 0.89–0.92) and very good for separating cognitive impairment (dementia and MCI) from NC (AUC = 0.83, 95% CI: 0.82–0.84) and cognitive impairment from SMC and NC (AUC = 0.81, 95% CI: 0.80–0.82). It had good diagnostic accuracy for the other comparisons: MCI versus NC (AUC = 0.79, 95% CI: 0.77–0.81), MCI versus SMC and NC (AUC = 0.77, 95% CI: 0.75–0.78) and MCI versus dementia (AUC = 0.76, 95% CI: 0.72–0.79). Sensitivity analyses using different ways of measuring IADLs and excluding the self-reported medical diagnosis question (Table S5) gave similar diagnostic accuracies.
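A minimal sketch of the empirical AUC with a DeLong-style 95% CI for a single ROC curve is shown below. It is an illustration, not the R implementation used in the study, and the function name is hypothetical. Because lower SHARE-Cog scores indicate impairment, the scores are assumed to be negated before being passed in, so that higher values mean “more likely impaired”. Ties count as half, and the interval is clipped to [0, 1].

```python
import math
from statistics import mean, variance


def auc_delong(pos_scores, neg_scores, z=1.959964):
    """Empirical AUC with a DeLong-style 95% CI (single ROC curve).

    Higher scores are assumed to indicate the positive (impaired) class,
    so SHARE-Cog totals should be negated before calling this.
    """
    if len(pos_scores) < 2 or len(neg_scores) < 2:
        raise ValueError("need at least two scores in each group")

    def psi(x, y):
        # 1 if the positive case ranks higher, 0.5 for a tie, 0 otherwise
        return 1.0 if x > y else (0.5 if x == y else 0.0)

    # Structural components: one per positive case and one per negative case
    v10 = [mean([psi(x, y) for y in neg_scores]) for x in pos_scores]
    v01 = [mean([psi(x, y) for x in pos_scores]) for y in neg_scores]
    auc = mean(v10)
    se = math.sqrt(variance(v10) / len(pos_scores) + variance(v01) / len(neg_scores))
    return auc, max(0.0, auc - z * se), min(1.0, auc + z * se)


impaired = [12, 18, 20, 25]          # toy SHARE-Cog totals, not study data
unimpaired = [22, 28, 30, 35, 40]
print(auc_delong([-s for s in impaired], [-s for s in unimpaired]))  # AUC of about 0.95
```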

Figure 3.

Receiver operating characteristic curves illustrating the diagnostic accuracy of the SHARE-Cog including: (a) unadjusted curves and (b) covariate-adjusted (age, sex, education and country) curves.

3.5. Adjusting for Covariates

A covariate-adjusted ROC analysis was carried out to assess the impact of age, education, sex and country on the diagnostic accuracy of the SHARE-Cog (Figure 3b, Table S6). The covariate-adjusted AUC was slightly lower; for example, it was 0.80 (95% CI: 0.79–0.81) for separating cognitive impairment (dementia and MCI) from SMC and NC. Across all comparisons, age and education level were statistically significantly associated with diagnostic accuracy, with accuracy being better in those who were older and in those who were less educated. For sex, the results were less consistent and mostly not statistically significant. Similarly, few statistically significant differences in diagnostic accuracy were observed between countries, with Austria as the reference; only Denmark (p = 0.029) and Italy (p = 0.024) were marginally statistically significant in some comparisons (Table S6).

4. Discussion

This study proposed and validated a new short cognitive screening instrument, SHARE-Cog, which could be used to assess and measure cognitive impairment in the SHARE. It includes three commonly used cognitive items (subtests): word registration (10 words), verbal fluency (animal naming) and word recall. The weighted scoring of these items in the SHARE-Cog showed good to excellent diagnostic accuracy for cognitive impairment in the SHARE across the spectrum, from identifying MCI to differentiating dementia. Specifically, SHARE-Cog had excellent diagnostic accuracy for dementia (AUC = 0.91) and very good diagnostic accuracy for cognitive impairment including MCI (AUC = 0.81). Its accuracy was good, but lower, for differentiating MCI from normal objective performance, including SMC and NC (AUC = 0.77), and for differentiating MCI from dementia (AUC = 0.76). Given its relative brevity (an estimated 2 to 3 min to administer), these results suggest that SHARE-Cog could be a useful screening instrument for studies outside the SHARE and potentially also in clinical practice, though more research is required to confirm this.

These items are also widely available in other longitudinal studies of ageing [23,24], such as The Irish Longitudinal Study of Ageing (TILDA), the English Longitudinal Study of Ageing (ELSA) and the Study on Global Ageing and Adult Health (SAGE), which all share common questions with the University of Michigan’s HRS. Thus, this new tool has the potential to provide a harmonised approach to measuring cognitive impairment in a range of studies, both in Europe and internationally. Further, while studies have assessed differences in cognitive functioning in the SHARE [42,43,44], to the best of our knowledge, this is the first study to develop and validate a bespoke CSI for the SHARE, and the first step toward a harmonised definition of MCI, which the SHARE currently lacks.

The logistic regression analysis found that the optimal scoring of the three subtests depended on which diagnostic comparison was being made and, on further analysis, several weightings produced very similar predictive accuracy. Relative to the other two subtests, word registration was weighted higher for differentiating MCI from dementia, and word recall was weighted higher for differentiating MCI from NC. It was also found (Table S3) that reducing the maximum score for verbal fluency from 100 to 30 animals did not negatively impact the diagnostic accuracy of the model. This may be useful because some studies do not record such large numbers; for example, the Mexican Health and Ageing Study (MHAS) records only up to 30 [24]. The final SHARE-Cog scores 1 point for each word registered, half a point for each animal named in verbal fluency (rounded up to the nearest whole number) and 2 points for each word recalled, for a total score out of 45.

The dominance analysis assessed the relative importance of each of the three subtests to the performance of the logistic regression models (according to the pseudo R-squared). Because the order in which items are added to a model affects how much value they add, this method considers the average contribution across all possible combinations. This analysis found that all three items contributed positively to the model and suggested that word registration captured something statistically in addition to delayed recall. This may be because the registration list contained 10 words, which is a large number. Previous research has also suggested that immediate recall learning tasks, such as a short form of the California Verbal Learning Test, may engage networks beyond traditional episodic memory, such as those involved in semantic processing [45]. Nevertheless, CSIs vary in whether they score immediate recall: for example, both the Mini-Mental State Examination (MMSE) [46] and the Quick Mild Cognitive Impairment (Qmci) screen [47] score it, but the MoCA [27] does not. This needs to be considered carefully in future studies, where scoring immediate recall may improve diagnostic accuracy or reduce the number of additional subtests needed.
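The averaging over all subsets described above corresponds to what is usually called general dominance. The sketch below takes a precomputed fit statistic (for example, a pseudo R-squared) for every subset of subtests, with the intercept-only model included, and averages each subtest’s increment first within each subset size and then across sizes; with this scheme, the weights add up to the full model’s gain over the intercept-only model. The function name and the dictionary input are assumptions, and fitting the underlying logistic models is left out.

```python
from itertools import combinations
from statistics import mean


def general_dominance(predictors, fit):
    """General dominance weights from a precomputed fit for every predictor subset.

    fit: dict mapping frozenset(subset) -> fit statistic (e.g. pseudo R-squared),
    including frozenset() for the intercept-only model.
    """
    weights = {}
    for x in predictors:
        others = [p for p in predictors if p != x]
        per_size = []
        for k in range(len(others) + 1):
            # Average gain from adding x to every subset of k other predictors
            gains = [fit[frozenset(s) | {x}] - fit[frozenset(s)]
                     for s in combinations(others, k)]
            per_size.append(mean(gains))
        weights[x] = mean(per_size)  # then average across subset sizes
    return weights


toy_fit = {frozenset(): 0.0, frozenset({"reg"}): 0.3,
           frozenset({"rec"}): 0.2, frozenset({"reg", "rec"}): 0.4}
print(general_dominance(["reg", "rec"], toy_fit))  # toy values, not study results
```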

The covariate adjustment found that diagnostic accuracy was consistently affected by age and education, with screening being more accurate in those who were older and in those with lower education levels. Hence, SHARE-Cog may be a particularly useful tool for those with low literacy or education. This is supported by research finding that tasks involving “reading, writing, arithmetic, drawing, praxis, visuospatial and visuoconstructive skills have a greater educational bias than naming, orientation, or memory” [48]. Although such participants were excluded from the current study, SHARE-Cog can also be administered to those with physical limitations that affect their ability to complete drawing and written tasks, since all three SHARE-Cog tasks are verbal. SHARE-Cog may be slightly worse at distinguishing MCI from normal cognition in women (p = 0.02), although this may be a chance finding and, while statistically significant, the difference was marginal from a clinical perspective. Similarly, there were very few statistically significant differences between countries, suggesting that country has little to no impact on diagnostic accuracy within the European context.

The strengths of this study include the large sample size and its population-based cohort. Given that CSIs are mostly assessed in clinical settings, more population-level studies are needed to assess their utility in community settings. There are, however, several limitations relating to the accuracy of the dementia and MCI diagnostic categories. Firstly, the responses were self-reported, potentially introducing bias. For example, the self-reported “doctor-diagnosed serious memory problem” question likely captured a range of conditions, which could have resulted in misclassification; in this study, attempts were made to separate these responses into dementia and MCI. The SMC and ADL questions were also subjective “tick box” questions that lack the accuracy and rigour of a formal clinical investigation. Similarly, the reasons for ADL limitations were not available and could have been related to cognitive, physical or emotional issues. We attempted to isolate more cognitively focused tasks, but this approach may have introduced bias. However, sensitivity analyses assessing different approaches to measuring these found that varying them had little impact on the diagnostic accuracy of the SHARE-Cog for overall cognitive impairment (Table S5). In addition, many SHARE participants were excluded because their diagnostic group was too unclear or contradictory to classify.

It is also unclear whether the results of this study are generalisable to all waves of SHARE, other longitudinal studies or clinical practice (i.e., spectrum bias); hence, more research is needed to evaluate and externally validate SHARE-Cog. Because participants had received a previous SHARE interview, there may have been a small risk of practice effects, although this is unlikely, since there were, on average, 2–3 years between interviews. In addition, one of four word lists was randomly selected for word registration and recall, minimising learning effects. Verbal fluency used only the animal category, but this is not as prone to learning/practice effects as other subtests. A previous study suggested that, in those with MCI, practice effects can last up to one year but are more marked for word registration and recall than for other cognitive domains, such as verbal fluency [49]. Most of the subtests used in the broader cognitive battery were introduced only in the current wave.

Our analysis focused on developing a CSI (SHARE-Cog). Further research could develop a more complete risk-prediction model that combines cognitive measures with participant characteristics (such as age, sex and education) and likely risk factors (such as hypertension) [50].

5. Conclusions

In conclusion, SHARE-Cog performed well in this validation study, demonstrating good to excellent diagnostic accuracy across the spectrum of cognitive impairment. Although it comprises only three subtests, it could be used in analyses of the SHARE and of numerous other longitudinal studies of ageing to better understand the epidemiology of cognitive impairment. This could aid harmonisation efforts to quantify the prevalence of syndromes such as MCI and cognitive frailty. Prevalence estimates for these syndromes currently vary widely across studies, which makes epidemiological comparisons challenging.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/ijerph20196869/s1.

Table S1: Details of the age- and education-specific cut-offs for MCI and dementia obtained from the MoCA-based cognitive test battery.

Table S2: Details of the participants excluded from the analyses because their cognitive diagnostic group was too unclear or contradictory, including numbers and rationale.

Table S3: Impact of reducing the maximum number of animals in the verbal fluency subtest on the performance of the overall model (registration, verbal fluency and recall). This includes overall model fit, measured using the pseudo R-squared value, and overall diagnostic accuracy, measured using the area under the ROC curve (AUC).

Table S4: Diagnostic accuracy for all unique weighting combinations (n = 291). These were obtained by varying the weight of each of the three SHARE-Cog subtests (word registration, verbal fluency and word recall) among 0.5, 1, 2, 3 and 4 points per word, and by setting the maximum number of animals in the verbal fluency subtest to 20, 30 or 40.

Table S5: Sensitivity analysis of SHARE-Cog for different ways of defining the cognitive diagnostic groups.

Table S6: Results from the covariate-adjusted ROC curves of SHARE-Cog, including the adjusted area under the curve (AAUC), the regression coefficients (with p-values) for each covariate level and the combined R² of the covariates.

In addition, the following are provided: a SHARE-Cog instrument (scoring template), scoring instructions, and an R project file containing all the R code used to reproduce the analysis in this study.
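Table S4 reports n = 291 “unique” weighting combinations, but the rule for deciding which combinations count as duplicates is not stated here. The Python sketch below is not the authors' R code. It assumes that duplicates are weight triples that are positive multiples of one another. Under that assumption, the 5³ = 125 weight triples collapse to 97 distinct weight ratios, and 97 × 3 fluency caps gives 291, matching the stated count. The rule is plausible because multiplying all three weights by the same constant multiplies every participant's total score by that constant. That leaves the ranking of participants, and hence the ROC curve and AUC, unchanged. The function names, and the scoring function used to illustrate this point, are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Per-word weights listed for Table S4 (0.5, 1, 2, 3 and 4 points per word),
# held as exact fractions so that ratio comparisons are not affected by rounding.
WEIGHTS = [Fraction(1, 2), Fraction(1), Fraction(2), Fraction(3), Fraction(4)]
# Maximum number of animals credited in the verbal fluency subtest.
ANIMAL_CAPS = [20, 30, 40]


def ratio_key(weights):
    """Return the weights divided by the first weight.

    Two weight triples share a key exactly when one is a positive multiple
    of the other (assumed here to be what makes two weightings duplicates).
    """
    first = weights[0]
    return tuple(w / first for w in weights)


def unique_weightings(weights=WEIGHTS, caps=ANIMAL_CAPS):
    """Enumerate (registration, fluency, recall) weight triples crossed with the
    fluency caps, keeping one representative per class of proportional triples."""
    representatives = {}
    for triple in product(weights, repeat=3):
        # Keep the first triple encountered for each ratio class.
        representatives.setdefault(ratio_key(triple), triple)
    return [(triple, cap) for triple in representatives.values() for cap in caps]


def weighted_score(registration, fluency, recall, weights, cap):
    """Hypothetical weighted total: words registered, animals named (capped at
    `cap`) and words recalled, each multiplied by its per-item weight."""
    w_reg, w_flu, w_rec = weights
    return w_reg * registration + w_flu * min(fluency, cap) + w_rec * recall


if __name__ == "__main__":
    raw = len(WEIGHTS) ** 3 * len(ANIMAL_CAPS)
    grid = unique_weightings()
    print(f"raw combinations: {raw}")           # prints 375
    print(f"unique combinations: {len(grid)}")  # prints 291
```

Running the module prints 375 raw combinations and 291 unique ones. Doubling every weight, for example from (1, 1, 1) to (2, 2, 2), exactly doubles `weighted_score` for every participant, which is why such triples are treated as the same weighting.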

Author Contributions

Conceptualisation, M.R.O. and R.O.; methodology, M.R.O. and R.O.; software, M.R.O.; validation, M.R.O., N.C. and R.O.; formal analysis, M.R.O.; investigation, M.R.O. and R.O.; resources, M.R.O.; data curation, M.R.O.; writing—original draft preparation, M.R.O.; writing—review and editing, M.R.O., N.C. and R.O.; visualisation, M.R.O.; supervision, N.C. and R.O.; project administration, N.C. and R.O.; funding acquisition, N.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work is in part a contribution to a PhD funded as part of an EU Joint Programme—Neurodegenerative Disease Research (JPND) project. The project is supported by the following funding organisations under the aegis of JPND (www.jpnd.eu):
- Canada: Canadian Institutes of Health Research (grant number 161462)
- the Czech Republic: Ministry of Education, Youth and Sport (grant number 8F19005)
- the Netherlands: Netherlands Organisation for Health Research and Development (grant number 733051084)
- the Republic of Ireland: Health Research Board (grant number JPND-HSC-2018-002)
- the UK: Alzheimer’s Society (grant number AS-IGF-17-001)

Institutional Review Board Statement

Ethical approval was waived because this study is a secondary analysis of an anonymised dataset from the Survey of Health, Ageing and Retirement in Europe. Data access for scientific use is granted to individual users via an online registration process (https://share-eric.eu/data/become-a-user (accessed on 1 August 2023)).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data may be accessed by registering as a user of the Survey of Health, Ageing and Retirement in Europe (via www.share-project.org) (accessed on 7 September 2023).

Acknowledgments

This paper uses data from SHARE Wave 8 (DOI: 10.6103/SHARE.w8.800).

The SHARE data collection has been funded by the European Commission, DG RTD through:
- FP5: QLK6-CT-2001-00360
- FP6: SHARE-I3: RII-CT-2006-062193; COMPARE: CIT5-CT-2005-028857; SHARELIFE: CIT4-CT-2006-028812
- FP7: SHARE-PREP: GA N°211909; SHARE-LEAP: GA N°227822; SHARE M4: GA N°261982; DASISH: GA N°283646
- Horizon 2020: SHARE-DEV3: GA N°676536; SHARE-COHESION: GA N°870628; SERISS: GA N°654221; SSHOC: GA N°823782; SHARE-COVID19: GA N°101015924

It has also been funded by DG Employment, Social Affairs and Inclusion through VS 2015/0195, VS 2016/0135, VS 2018/0285, VS 2019/0332 and VS 2020/0313.

Additional funding is gratefully acknowledged from the German Ministry of Education and Research and the Max Planck Society for the Advancement of Science. Further funding came from the U.S. National Institute on Aging (U01_AG09740-13S2, P01_AG005842, P01_AG08291, P30_AG12815, R21_AG025169, Y1-AG-4553-01, IAG_BSR06-11, OGHA_04-064, HHSN271201300071C and RAG052527A) and from various national funding sources (see www.share-project.org) (accessed on 7 September 2023).

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. United Nations. World Population Prospects 2022: Summary of Results; United Nations: New York, NY, USA, 2022; ISBN 978-92-1-001438-0.

2. Park, H.L.; O’Connell, J.E.; Thomson, R.G. A Systematic Review of Cognitive Decline in the General Elderly Population. Int. J. Geriatr. Psychiatry 2003, 18, 1121–1134.

3. Nichols, E.; Steinmetz, J.D.; Vollset, S.E.; Fukutaki, K.; Chalek, J.; Abd-Allah, F.; Abdoli, A.; Abualhasan, A.; Abu-Gharbieh, E.; Akram, T.T.; et al. Estimation of the Global Prevalence of Dementia in 2019 and Forecasted Prevalence in 2050: An Analysis for the Global Burden of Disease Study 2019. Lancet Public Health 2022, 7, e105–e125.

4. Mitchell, A.J.; Shiri-Feshki, M. Rate of Progression of Mild Cognitive Impairment to Dementia—Meta-Analysis of 41 Robust Inception Cohort Studies. Acta Psychiatr. Scand. 2009, 119, 252–265.

5. Mendonça, M.D.; Alves, L.; Bugalho, P. From Subjective Cognitive Complaints to Dementia: Who Is at Risk? A Systematic Review. Am. J. Alzheimer’s Dis. Other Dement. 2016, 31, 105–114.

6. Ngandu, T.; Lehtisalo, J.; Solomon, A.; Levälahti, E.; Ahtiluoto, S.; Antikainen, R.; Bäckman, L.; Hänninen, T.; Jula, A.; Laatikainen, T.; et al. A 2 Year Multidomain Intervention of Diet, Exercise, Cognitive Training, and Vascular Risk Monitoring versus Control to Prevent Cognitive Decline in at-Risk Elderly People (FINGER): A Randomised Controlled Trial. Lancet 2015, 385, 2255–2263.

7. Cummings, J.; Passmore, P.; McGuinness, B.; Mok, V.; Chen, C.; Engelborghs, S.; Woodward, M.; Manzano, S.; Garcia-Ribas, G.; Cappa, S.; et al. Souvenaid in the Management of Mild Cognitive Impairment: An Expert Consensus Opinion. Alzheimer’s Res. Ther. 2019, 11, 73.

8. Canadian Task Force on Preventive Health Care; Pottie, K.; Rahal, R.; Jaramillo, A.; Birtwhistle, R.; Thombs, B.D.; Singh, H.; Gorber, S.C.; Dunfield, L.; Shane, A.; et al. Recommendations on Screening for Cognitive Impairment in Older Adults. CMAJ 2016, 188, 37–46.

9. Lin, J.S.; O’Connor, E.; Rossom, R.C.; Perdue, L.A.; Eckstrom, E. Screening for Cognitive Impairment in Older Adults: A Systematic Review for the U.S. Prev. Serv. Task Force. Ann. Intern. Med. 2013, 159, 601–612.

10. US Preventive Services Task Force; Owens, D.K.; Davidson, K.W.; Krist, A.H.; Barry, M.J.; Cabana, M.; Caughey, A.B.; Doubeni, C.A.; Epling, J.W.; Kubik, M.; et al. Screening for Cognitive Impairment in Older Adults: US Preventive Services Task Force Recommendation Statement. JAMA 2020, 323, 757–763.

11. Borson, S.; Frank, L.; Bayley, P.J.; Boustani, M.; Dean, M.; Lin, P.; McCarten, J.R.; Morris, J.C.; Salmon, D.P.; Schmitt, F.A.; et al. Improving Dementia Care: The Role of Screening and Detection of Cognitive Impairment. Alzheimer’s Dement. 2013, 9, 151–159.

12. Karimi, L.; Mahboub-Ahari, A.; Jahangiry, L.; Sadeghi-Bazargani, H.; Farahbakhsh, M. A Systematic Review and Meta-Analysis of Studies on Screening for Mild Cognitive Impairment in Primary Healthcare. BMC Psychiatry 2022, 22, 97.

13. Larner, A. Performance-Based Cognitive Screening Instruments: An Extended Analysis of the Time versus Accuracy Trade-Off. Diagnostics 2015, 5, 504–512.

14. Casagrande, M.; Marselli, G.; Agostini, F.; Forte, G.; Favieri, F.; Guarino, A. The Complex Burden of Determining Prevalence Rates of Mild Cognitive Impairment: A Systematic Review. Front. Psychiatry 2022, 13, 960648.

15. Bai, W.; Chen, P.; Cai, H.; Zhang, Q.; Su, Z.; Cheung, T.; Jackson, T.; Sha, S.; Xiang, Y.-T. Worldwide Prevalence of Mild Cognitive Impairment among Community Dwellers Aged 50 Years and Older: A Meta-Analysis and Systematic Review of Epidemiology Studies. Age Ageing 2022, 51, afac173.

16. Pessoa, R.M.P.; Bomfim, A.J.L.; Ferreira, B.L.C.; Chagas, M.H.N. Diagnostic Criteria and Prevalence of Mild Cognitive Impairment in Older Adults Living in the Community: A Systematic Review and Meta-Analysis. Arch. Clin. Psychiatry 2019, 46, 72–79.

17. Luck, T.; Luppa, M.; Briel, S.; Riedel-Heller, S. Incidence of Mild Cognitive Impairment—A Systematic Review. Dement. Geriatr. Cogn. Disord. 2010, 29, 164–175.

18. Sachdev, P.S.; Lipnicki, D.M.; Kochan, N.A.; Crawford, J.D.; Thalamuthu, A.; Andrews, G.; Brayne, C.; Matthews, F.E.; Stephan, B.C.M.; Lipton, R.B.; et al. The Prevalence of Mild Cognitive Impairment in Diverse Geographical and Ethnocultural Regions: The COSMIC Collaboration. PLoS ONE 2015, 10, e0142388.

19. Gillis, C.; Mirzaei, F.; Potashman, M.; Ikram, M.A.; Maserejian, N. The Incidence of Mild Cognitive Impairment: A Systematic Review and Data Synthesis. Alzheimer’s Dement. Diagn. Assess. Dis. Monit. 2019, 11, 248–256.

20. Maelstrom Research. Integrative Analysis of Longitudinal Studies of Aging and Dementia. Available online: http://www.maelstrom-research.org/ (accessed on 24 July 2023).

21. Lee, J.; Chien, S.; Phillips, D.; Weerman, B.; Wilkens, J.; Chen, Y.; Green, H.; Petrosyan, S.; Shao, K.; Young, C.; et al. Gateway to Global Aging Data. Available online: https://g2aging.org/ (accessed on 24 July 2023).

22. NACDA. NACDA: National Archive of Computerized Data on Aging. Available online: https://www.icpsr.umich.edu/web/pages/NACDA/index.html (accessed on 24 July 2023).

23. Stefler, D.; Prina, M.; Wu, Y.-T.; Sánchez-Niubò, A.; Lu, W.; Haro, J.M.; Marmot, M.; Bobak, M. Socioeconomic Inequalities in Physical and Cognitive Functioning: Cross-Sectional Evidence from 37 Cohorts across 28 Countries in the ATHLOS Project. J. Epidemiol. Community Health 2021, 75, 980–986.

24. Céline, D.L.; Feeney, J.; Kenny, R.A. The CANDID Initiative. Leveraging Cognitive Ageing Dementia Data from Around the World; The Irish Longitudinal Study on Ageing: Dublin, Ireland, 2021.

25. Börsch-Supan, A. Survey of Health, Ageing and Retirement in Europe (SHARE) Wave 8. Release Version: 8.0.0. SHARE-ERIC. Data Set. 2022. Available online: https://share-eric.eu/data/data-documentation/waves-overview/wave-8 (accessed on 7 July 2023).

26. Börsch-Supan, A.; Brandt, M.; Hunkler, C.; Kneip, T.; Korbmacher, J.; Malter, F.; Schaan, B.; Stuck, S.; Zuber, S. Data Resource Profile: The Survey of Health, Ageing and Retirement in Europe (SHARE). Int. J. Epidemiol. 2013, 42, 992–1001.

27. Nasreddine, Z.S.; Phillips, N.A.; Bédirian, V.; Charbonneau, S.; Whitehead, V.; Collin, I.; Cummings, J.L.; Chertkow, H. The Montreal Cognitive Assessment, MoCA: A Brief Screening Tool for Mild Cognitive Impairment. J. Am. Geriatr. Soc. 2005, 53, 695–699.

28. UNESCO. International Standard Classification of Education ISCED 1997; English edition, re-edition; UNESCO-UIS: Montreal, QC, Canada, 2006; ISBN 978-92-9189-035-4.

29. Fernández-Alvira, J.M.; Mouratidou, T.; Bammann, K.; Hebestreit, A.; Barba, G.; Sieri, S.; Reisch, L.; Eiben, G.; Hadjigeorgiou, C.; Kovacs, E.; et al. Parental Education and Frequency of Food Consumption in European Children: The IDEFICS Study. Public Health Nutr. 2013, 16, 487–498.

30. van Oyen, H.; Van der Heyden, J.; Perenboom, R.; Jagger, C. Monitoring Population Disability: Evaluation of a New Global Activity Limitation Indicator (GALI). Soz. Präventivmed. 2006, 51, 153–161.

31. Romero-Ortuno, R.; Walsh, C.D.; Lawlor, B.A.; Kenny, R.A. A Frailty Instrument for Primary Care: Findings from the Survey of Health, Ageing and Retirement in Europe (SHARE). BMC Geriatr. 2010, 10, 57.

32. Mast, B.T.; Yochim, B.P. Alzheimer’s Disease and Dementia; Advances in Psychotherapy Series; Hogrefe: Boston, MA, USA; Göttingen, Germany, 2018; ISBN 978-1-61676-503-3.

33. Petersen, R.C.; Caracciolo, B.; Brayne, C.; Gauthier, S.; Jelic, V.; Fratiglioni, L. Mild Cognitive Impairment: A Concept in Evolution. J. Intern. Med. 2014, 275, 214–228.

34. Estrella, A. Which Pseudo R-Squared? Conclusive New Evidence; Finance, Economics and Monetary Policy Discussion Papers; Discussion Paper No. 2202; 2022. Available online: http://financeecon.com/DPs/DP2202.pdf (accessed on 28 July 2023).

35. Estrella, A. A New Measure of Fit for Equations with Dichotomous Dependent Variables. J. Bus. Econ. Stat. 1998, 16, 198–205.

36. Azen, R.; Traxel, N. Using Dominance Analysis to Determine Predictor Importance in Logistic Regression. J. Educ. Behav. Stat. 2009, 34, 319–347.

37. DeLong, E.R.; DeLong, D.M.; Clarke-Pearson, D.L. Comparing the Areas under Two or More Correlated Receiver Operating Characteristic Curves: A Nonparametric Approach. Biometrics 1988, 44, 837–845.

38. Janes, H.; Pepe, M.S. Adjusting for Covariate Effects on Classification Accuracy Using the Covariate-Adjusted Receiver Operating Characteristic Curve. Biometrika 2009, 96, 371–382.

39. Šimundić, A.-M. Measures of Diagnostic Accuracy: Basic Definitions. EJIFCC 2009, 19, 203–211.

40. Adawi, M.; Zerbetto, R.; Re, T.S.; Bisharat, B.; Mahamid, M.; Amital, H.; Del Puente, G.; Bragazzi, N.L. Psychometric Properties of the Brief Symptom Inventory in Nomophobic Subjects: Insights from Preliminary Confirmatory Factor, Exploratory Factor, and Clustering Analyses in a Sample of Healthy Italian Volunteers. Psychol. Res. Behav. Manag. 2019, 12, 145.

41. O’Caoimh, R.; Gao, Y.; Gallagher, P.F.; Eustace, J.; McGlade, C.; Molloy, D.W. Which Part of the Quick Mild Cognitive Impairment Screen (Qmci) Discriminates between Normal Cognition, Mild Cognitive Impairment and Dementia? Age Ageing 2013, 42, 324–330.

42. Ahrenfeldt, L.J.; Lindahl-Jacobsen, R.; Rizzi, S.; Thinggaard, M.; Christensen, K.; Vaupel, J.W. Comparison of Cognitive and Physical Functioning of Europeans in 2004–05 and 2013. Int. J. Epidemiol. 2018, 47, 1518–1528.

43. Barbosa, R.; Midão, L.; Almada, M.; Costa, E. Cognitive Performance in Older Adults across Europe Based on the SHARE Database. Aging Neuropsychol. Cogn. 2021, 28, 584–599.

44. Formanek, T.; Kagstrom, A.; Winkler, P.; Cermakova, P. Differences in Cognitive Performance and Cognitive Decline across European Regions: A Population-Based Prospective Cohort Study. Eur. Psychiatry 2019, 58, 80–86.

45. Casaletto, K.B.; Marx, G.; Dutt, S.; Neuhaus, J.; Saloner, R.; Kritikos, L.; Miller, B.; Kramer, J.H. Is “Learning” Episodic Memory? Distinct Cognitive and Neuroanatomic Correlates of Immediate Recall during Learning Trials in Neurologically Normal Aging and Neurodegenerative Cohorts. Neuropsychologia 2017, 102, 19–28.

46. Folstein, M.F.; Folstein, S.E.; McHugh, P.R. “Mini-Mental State”. A Practical Method for Grading the Cognitive State of Patients for the Clinician. J. Psychiatr. Res. 1975, 12, 189–198.

47. O’Caoimh, R.; Gao, Y.; McGlade, C.; Healy, L.; Gallagher, P.; Timmons, S.; Molloy, D.W. Comparison of the Quick Mild Cognitive Impairment (Qmci) Screen and the SMMSE in Screening for Mild Cognitive Impairment. Age Ageing 2012, 41, 624–629.

48. Pellicer-Espinosa, I.; Díaz-Orueta, U. Cognitive Screening Instruments for Older Adults with Low Educational and Literacy Levels: A Systematic Review. J. Appl. Gerontol. 2022, 41, 1222–1231.

49. Goldberg, T.E.; Harvey, P.D.; Wesnes, K.A.; Snyder, P.J.; Schneider, L.S. Practice Effects Due to Serial Cognitive Assessment: Implications for Preclinical Alzheimer’s Disease Randomized Controlled Trials. Alzheimer’s Dement. Diagn. Assess. Dis. Monit. 2015, 1, 103–111.

50. Hu, M.; Gao, Y.; Kwok, T.C.Y.; Shao, Z.; Xiao, L.D.; Feng, H. Derivation and Validation of the Cognitive Impairment Prediction Model in Older Adults: A National Cohort Study. Front. Aging Neurosci. 2022, 14, 755005.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).