Codesign and Feasibility Testing of a Tool to Evaluate Overweight and Obesity Apps

Abstract

1. Introduction

2. Materials and Methods

2.1. The Co-Creation Process: Development of the Format and Sections of the EVALAPPS Tool

2.2. Pilot Testing: Feasibility Test of the EVALAPPS Tool

3. Results

3.1. Co-Creation Workshop: Development of the Format and Sections of the EVALAPPS Tool

3.1.1. General Issues

“The approach is different, because for the patient it is a data collection and for the professional it is an evaluation tool.”

“There are indicators or dimensions that may be more typical of the health professional and others that may be more specific to the patient. For example, motivational issues are purely the patient’s, as the health professional cannot evaluate it. Instead, other aspects are more typical of the health professional.”

“Tracking, adherence, … are more users. Instead, efficacy can be shared and safety would be more health professional-like.”

“There are dimensions that are more typical of an ICT professional, or about how data is encrypted, etc. And a user or health professional cannot assess these criteria.”

“Me, as a patient, may be particularly concerned about data security or confidentiality. Instead, someone else may not have this concern.”

“On safety issues, depending on which diets are promoted, the patient and health professional may see it differently. For the patient it may be a motivational element, but instead, the health professional may consider that depending on what type of diets may put the patient’s health at risk.”

“If we make two profiles, we are already making two products. They may be pretty much the same questions for each other. To avoid complexity it is best to make a single application.”

“Another possible option is to leave the choice at the discretion of each person so that everyone chooses the dimensions that they want to evaluate (the ones that concern them most and in which they have opinion). In this case, there would be no need to differentiate between profiles, but the content of the evaluation would vary by person.”

“That each person can choose, as a user, the criteria that he/she wants to value of the app, because they are the ones that he or she cares about the most. More than creating multiple profiles.”

“Two profiles will always be there, and perhaps the decision is if you ask at the beginning when you create the profile to discriminate, but this can be very aggressive for users who make use of the application because they already have the feeling that ‘they will study them’.”

“In any case, entry cannot be aggressive and differentiating between patients and health professionals can create a barrier. The question should go depending on the use that will be made of the application (as a patient, to recommend it to patients, …)”

3.1.2. Content of the Application

3.1.3. Dimension Weights

3.1.4. Mobile Phone EVALAPPS Tool

3.2. Pilot Testing: Feasibility Testing

Feasibility In-Depth Interviews

4. Discussion

Strengths and Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Access to the Application: Login-Free Access vs. Login Access |
|---|
| Login-free access: |
| |
| Login access: |
| |
| Evaluator’s profile |
| Gathering the evaluator’s profile (background, expertise, …) can be used to: |
| |
| Selection of the app to be evaluated |
| |
| Content of the evaluation |
| |
| - Change the way scales are presented, combining different types of icons (stars, faces, etc.); |
| - Use different color scales for each dimension; |
| - Use icons related to the content of the dimension to be evaluated; |
| - When a dimension evaluation is complete, an intermediate screen appears with a chart collecting the scores collected in that dimension throughout the evaluation process. |
| |
| - Present questions randomly, to prevent questions presented at the end from being systematically evaluated automatically; |
| - Start with the easiest questions to answer, and increase complexity as you progress through the assessment; |
| - Start with the most relevant questions to make the assessment, to ensure that you get the most answers to these questions, and then ask the rest. |
| |
| - Distribution of dimensions by tabs; |
| - List followed by criteria and scroll navigation; |
| - It was also suggested to incorporate information (using a pop-up screen) on the criteria to be evaluated, next to each question. |
| |
| Report |
| |
| - Overall score; |
| - Score disaggregated by dimensions; |
| - Comparison between user and median score obtained during the evaluation process (e.g., using spider charts). |
| |
| Gamification |
| |

| n | (%) | |

|---|---|---|

| Gender | ||

| Female | 23 | (74.2) |

| Male | 8 | (25.8) |

| Age group | ||

| 18–25 | 9 | (29.0) |

| 26–35 | 5 | (16.0) |

| 36–45 | 9 | (29.0) |

| 46–55 | 2 | (6.5) |

| 56–65 | 4 | (13.0) |

| >65 | 2 | (6.5) |

| Operating system | ||

| Android | 14 | (45.2) |

| iOS | 17 | (54.8) |

| App evaluated | ||

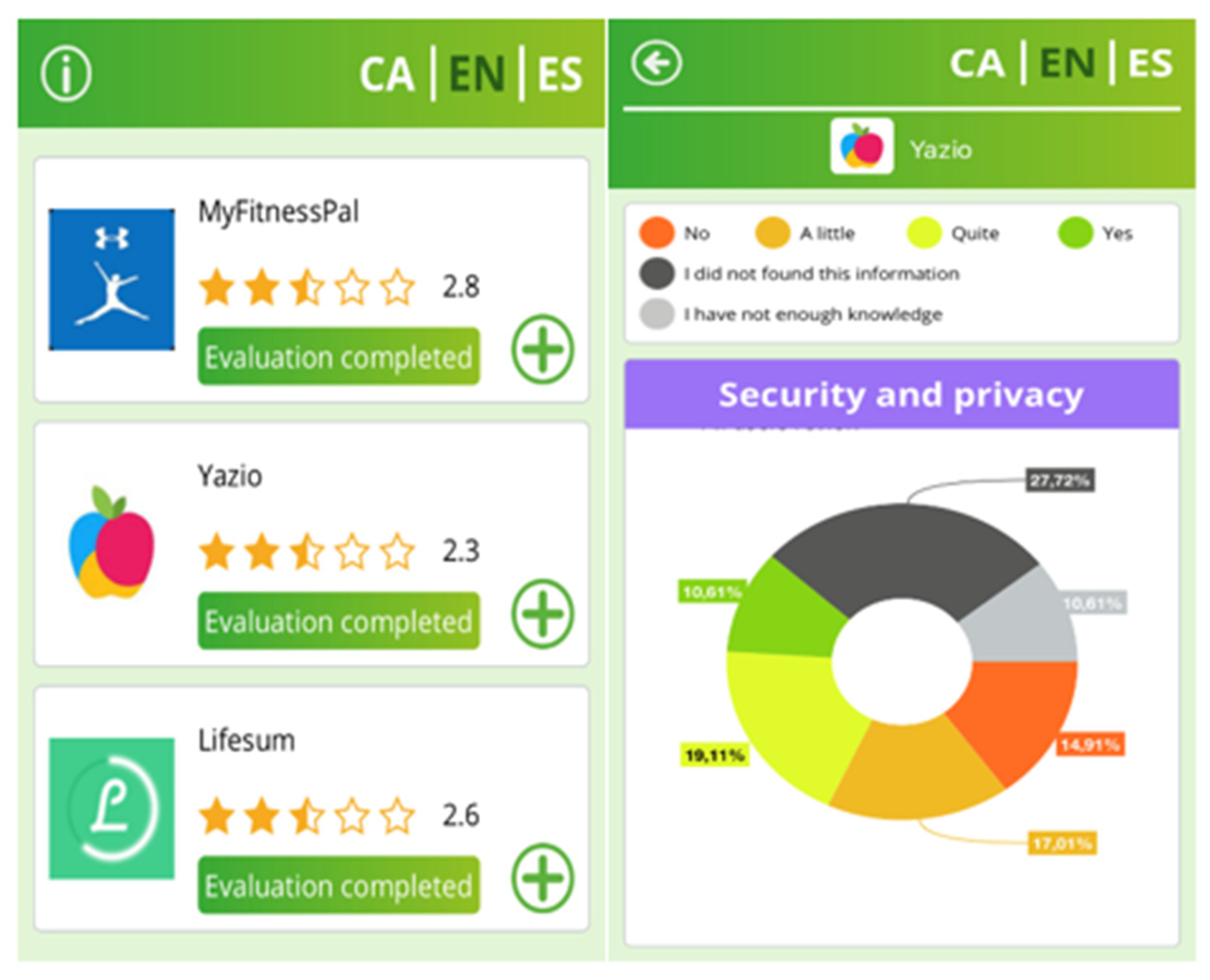

| MyFitnessPal | 10 | (41.7) |

| Yazio | 6 | (25.0) |

| MyPlate | 8 | (33.3) |

| Language used when using EVALAPSS tool | ||

| Catalan | 3 | (12.5) |

| Spanish | 20 | (83.3) |

| English | 1 | (4.2) |

| | Yazio (n = 6) | | MyPlate (n = 8) | | MyFitnessPal (n = 10) | |
|---|---|---|---|---|---|---|
| | Mean (SD) | Min–Max | Mean (SD) | Min–Max | Mean (SD) | Min–Max |
| App Purpose | 8.5 (3.4) | 4–12 | 7.3 (3.6) | 3–12 | 9.1 (2.7) | 2–12 |
| Development | 1 (1.1) | 0–2 | 0.7 (0.5) | 0–1 | 1 (0.8) | 0–2 |
| Reliability | 4.6 (4.6) | 0–11 | 2.9 (2.1) | 0–6 | 4.6 (3.3) | 0–11 |
| Usability | 17.8 (10.9) | 0–27 | 13.8 (9.7) | 0–27 | 15 (10.1) | 0–27 |
| Health indicators | 6.3 (3.9) | 0–11 | 4.8 (4.3) | 0–10 | 4.2 (4.2) | 0–10 |
| Clinical effectiveness | 0.8 (1.16) | 0–3 | 0.1 (0.3) | 0–1 | 1 (1.6) | 0–4 |
| Security/Privacy | 5.5 (5.4) | 0–12 | 1.5 (2.9) | 0–8 | 2.9 (3.6) | 0–9 |
| Total | 44.6 (23.9) | 4–65 | 31.3 (17.6) | 3–55 | 37.8 (17.6) | 10–62 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Puigdomènech, E.; Robles, N.; Balfegó, M.; Cuatrecasas, G.; Zamora, A.; Saigí-Rubió, F.; Paluzié, G.; Moharra, M.; Carrion, C. Codesign and Feasibility Testing of a Tool to Evaluate Overweight and Obesity Apps. Int. J. Environ. Res. Public Health 2022, 19, 5387. https://doi.org/10.3390/ijerph19095387