Empathy in Human–Robot Interaction: Designing for Social Robots

Abstract

1. Introduction

2. Methods

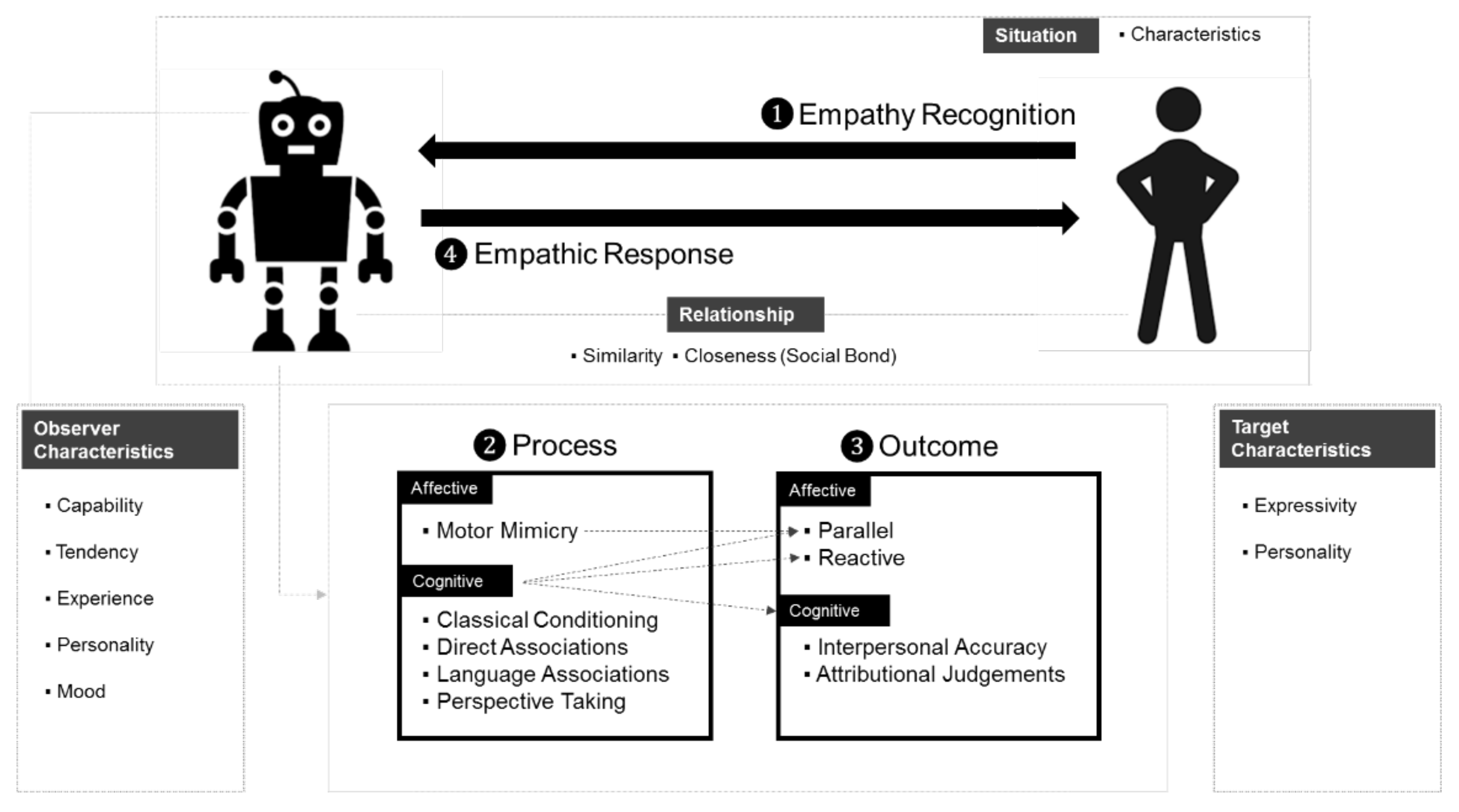

3. Empathy in Interpersonal Interaction

3.1. Processes

3.2. Outcome

3.3. Observer and Target Characteristics

3.4. Relationship

3.5. Situation

3.6. Empathic Recognition (❶)

3.7. Empathic Response (❹)

4. Empathy in Human–Agent and Human–Robot Interaction

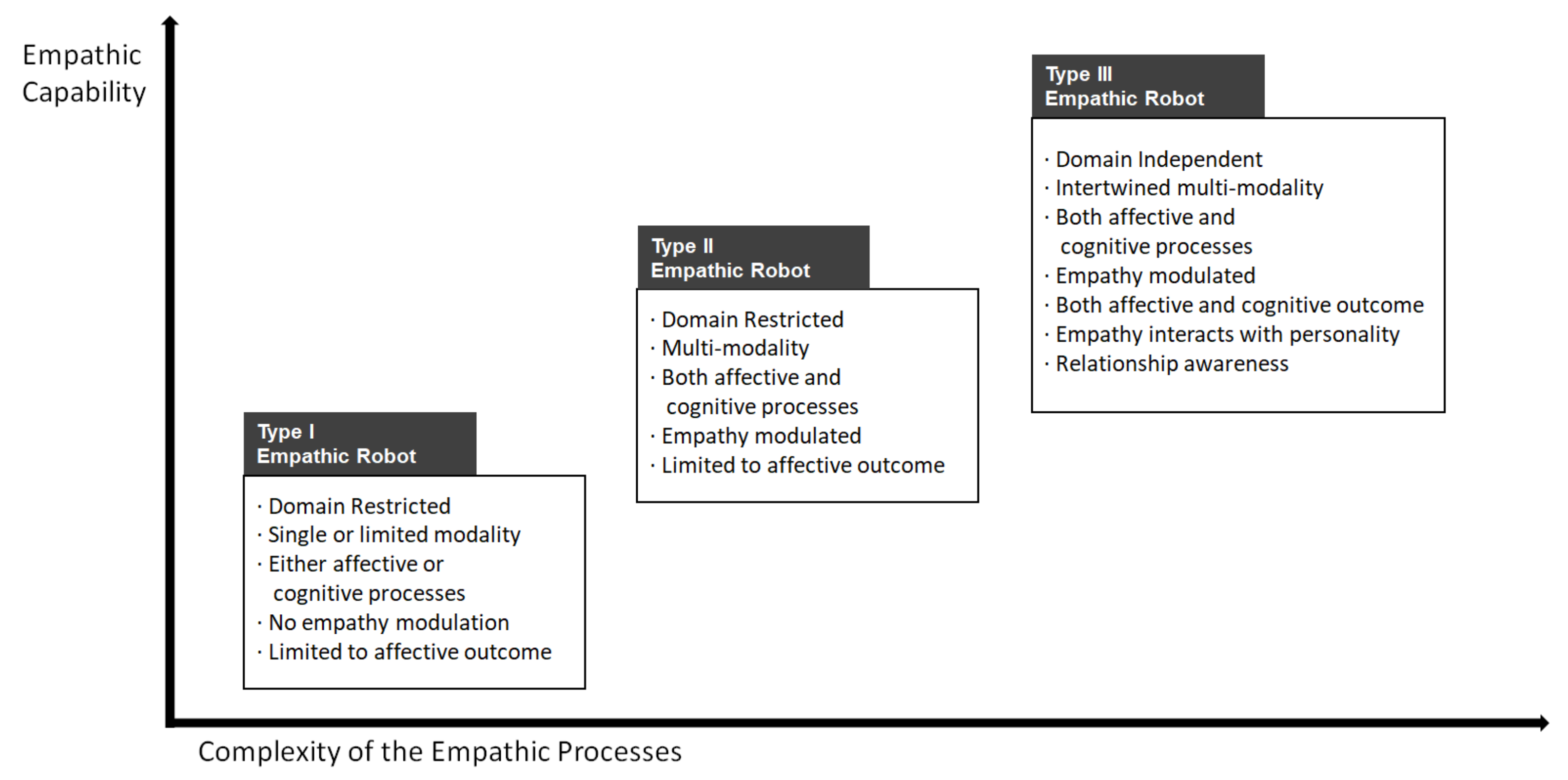

5. Synthesis and Discussion

5.1. Domain-Dependent vs. Domain-Independent

5.2. Emotion Modeling

5.3. Single vs. Multimodality

5.4. Empathy Modulation

5.5. Affective and Cognitive Outcomes

5.6. Interaction with Personality

5.7. Anthropomorphic versus Biomorphic

5.8. For a Hybrid Computational Model

Author Contributions

Funding

Conflicts of Interest

References

- [1] SoftBank Robotics. Pepper Robot: Characteristics. 2018. Available online: https://www.softbankrobotics.com/emea/en/robots/pepper/find-out-more-about-pepper (accessed on 8 August 2018).

- [2] Hoffman, M.L. Empathy and Moral Development: Implications for Caring and Justice; Cambridge University Press: Cambridge, UK, 2001.

- [3] Batson, C.D.; Shaw, L.L. Evidence for Altruism: Toward a Pluralism of Prosocial Motives. Psychol. Inq. 1991, 2, 107–122.

- [4] Eisenberg, N.; Morris, A.S. The origins and social significance of empathy-related responding. A review of empathy and moral development: Implications for caring and justice by ML Hoffman. Soc. Justice Res. 2001, 14, 95–120.

- [5] Hume, D. A Treatise of Human Nature; Dover Publications: Mineola, NY, USA, 1978.

- [6] Paiva, A.; Leite, I.; Boukricha, H.; Wachsmuth, I. Empathy in virtual agents and robots: A survey. ACM Trans. Interact. Intell. Syst. 2017, 7, 1–40.

- [7] Duradoni, M.; Colombini, G.; Russo, P.A.; Guazzini, A. Robotic Psychology: A PRISMA Systematic Review on Social-Robot-Based Interventions in Psychological Domains. J 2021, 4, 664–697.

- [8] Lipps, T. Einfühlung, innere nachahmung und organenempfindungen. Rev. Philos. Fr. L’etranger 1903, 56, 660–661.

- [9] Titchener, E.B. Lectures on the Experimental Psychology of the Thought-Processes; Macmillan: London, UK, 1909.

- [10] Gladstein, G.A. Understanding empathy: Integrating counseling, developmental, and social psychology perspectives. J. Couns. Psychol. 1983, 30, 467.

- [11] Eisenberg, N.; Strayer, J. Empathy and Its Development; Cambridge University Press: Cambridge, UK, 1990.

- [12] Mahrer, A.R.; Boulet, D.B.; Fairweather, D.R. Beyond empathy: Advances in the clinical theory and methods of empathy. Clin. Psychol. Rev. 1994, 14, 183–198.

- [13] Zaki, J.; Ochsner, K.N. The neuroscience of empathy: Progress, pitfalls and promise. Nat. Neurosci. 2012, 15, 675–680.

- [14] Rizzolatti, G.; Fadiga, L.; Gallese, V.; Fogassi, L. Premotor cortex and the recognition of motor actions. Cogn. Brain Res. 1996, 3, 131–141.

- [15] De Vignemont, F.; Singer, T. The empathic brain: How, when and why? Trends Cogn. Sci. 2006, 10, 435–441.

- [16] Wicker, B.; Keysers, C.; Plailly, J.; Royet, J.-P.; Gallese, V.; Rizzolatti, G. Both of Us Disgusted in My Insula: The Common Neural Basis of Seeing and Feeling Disgust. Neuron 2003, 40, 655–664.

- [17] Keysers, C.; Wicker, B.; Gazzola, V.; Anton, J.-L.; Fogassi, L.; Gallese, V. A Touching Sight: SII/PV Activation during the Observation and Experience of Touch. Neuron 2004, 42, 335–346.

- [18] Singer, T.; Seymour, B.; O’Doherty, J.; Kaube, H.; Dolan, R.J.; Frith, C.D. Empathy for Pain Involves the Affective but not Sensory Components of Pain. Science 2004, 303, 1157–1162.

- [19] Decety, J.; Jackson, P.L. The Functional Architecture of Human Empathy. Behav. Cogn. Neurosci. Rev. 2004, 3, 71–100.

- [20] Davis, M.H. A multidimensional approach to individual differences in empathy. JSAS Cat. Sel. Doc. Psychol. 1980, 10, 85.

- [21] Preston, S.D.; de Waal, F.B.M. Empathy: Its ultimate and proximate bases. Behav. Brain Sci. 2002, 25, 1–20.

- [22] Cuff, B.; Brown, S.; Taylor, L.; Howat, D.J. Empathy: A Review of the Concept. Emot. Rev. 2016, 8, 144–153.

- [23] Deutsch, F.; Madle, R.A. Empathy: Historic and Current Conceptualizations, Measurement, and a Cognitive Theoretical Perspective. Hum. Dev. 1975, 18, 267–287.

- [24] Shamay-Tsoory, S.G. The neural bases for empathy. Neuroscientist 2011, 17, 18–24.

- [25] Zaki, J. Empathy: A motivated account. Psychol. Bull. 2014, 140, 1608–1647.

- [26] Barnett, M.A. Empathy and Related Responses in Children. In Empathy and Its Development; Cambridge University Press: Cambridge, UK, 1987; pp. 146–162.

- [27] Eisenberg, N.; Eggum, N.D. Empathic Responding: Sympathy and Personal Distress. In The Social Neuroscience of Empathy; The MIT Press: Cambridge, MA, USA, 2009; pp. 71–84.

- [28] Goldman, A.I. Ethics and Cognitive Science. Ethics 1993, 103, 337–360.

- [29] Wondra, J.D.; Ellsworth, P.C. An appraisal theory of empathy and other vicarious emotional experiences. Psychol. Rev. 2015, 122, 411–428.

- [30] Dymond, R.F. Personality and empathy. J. Consult. Psychol. 1950, 14, 343–350.

- [31] Ickes, W.J. Empathic Accuracy; Guilford Press: New York, NY, USA, 1997.

- [32] Kerr, W.A.; Speroff, B.J. Validation and Evaluation of the Empathy Test. J. Gen. Psychol. 1954, 50, 269–276.

- [33] Colman, A.M. A Dictionary of Psychology; Oxford University Press: New York, NY, USA, 2015.

- [34] Davis, M.H. Empathy: A Social Psychological Approach; Routledge: Abingdon, UK, 2018.

- [35] Oliveira-Silva, P.; Gonçalves, Ó.F. Responding Empathically: A Question of Heart, not a Question of Skin. Appl. Psychophysiol. Biofeedback 2011, 36, 201–207.

- [36] Leite, I.; Pereira, A.; Mascarenhas, S.; Martinho, C.; Prada, R.; Paiva, A. The influence of empathy in human–robot relations. Int. J. Hum. Comput. Stud. 2013, 71, 250–260.

- [37] Batson, C.D.; Fultz, J.; Schoenrade, P.A. Distress and Empathy: Two Qualitatively Distinct Vicarious Emotions with Different Motivational Consequences. J. Pers. 1987, 55, 19–39.

- [38] Hoffman, M.L. Interaction of affect and cognition in empathy. In Emotions, Cognition, and Behavior; Cambridge University Press: Cambridge, UK, 1984; pp. 103–131.

- [39] Eisenberg, N.; Shea, C.L.; Carlo, G.; Knight, G.P. Empathy-related responding and cognition: A “chicken and the egg” dilemma. Handb. Moral Behav. Dev. 2014, 2, 63–88.

- [40] Hoffman, M.L. Toward a Theory of Empathic Arousal and Development. In The Development of Affect; Springer: Boston, MA, USA, 1978; pp. 227–256.

- [41] Hatfield, E.; Cacioppo, J.T.; Rapson, R.L. Emotional Contagion: Studies in Emotion and Social Interaction; Editions de la Maison des Sciences de l’homme; Cambridge University Press: New York, NY, USA, 1994.

- [42] Chartrand, T.L.; Bargh, J.A. The chameleon effect: The perception–behavior link and social interaction. J. Pers. Soc. Psychol. 1999, 76, 893.

- [43] Bavelas, J.B.; Black, A.; Lemery, C.R.; Mullett, J. “I show how you feel”: Motor mimicry as a communicative act. J. Pers. Soc. Psychol. 1986, 50, 322.

- [44] Bailenson, J.N.; Yee, N. Digital Chameleons: Automatic Assimilation of Nonverbal Gestures in Immersive Virtual Environments. Psychol. Sci. 2005, 16, 814–819.

- [45] Spencer, H. The Principles of Psychology; Appleton: Boston, MA, USA, 1895; Volume 1.

- [46] Humphrey, G. The conditioned reflex and the elementary social reaction. J. Abnorm. Psychol. Soc. Psychol. 1922, 17, 113–119.

- [47] Eisenberg, N.; Fabes, R.A.; Miller, P.A.; Fultz, J.; Shell, R.; Mathy, R.M.; Reno, R.R. Relation of sympathy and personal distress to prosocial behavior: A multimethod study. J. Pers. Soc. Psychol. 1989, 57, 55.

- [48] McDougall, W. An Introduction to Social Psychology; Psychology Press: Hove, UK, 2015.

- [49] Gruen, R.J.; Mendelsohn, G. Emotional responses to affective displays in others: The distinction between empathy and sympathy. J. Pers. Soc. Psychol. 1986, 51, 609.

- [50] Staub, E. Commentary on Part I. In Empathy and Its Development; Cambridge University Press: Cambridge, UK, 1987; pp. 103–115.

- [51] Wispé, L. The distinction between sympathy and empathy: To call forth a concept, a word is needed. J. Pers. Soc. Psychol. 1986, 50, 314.

- [52] Davis, M.H. Measuring individual differences in empathy: Evidence for a multidimensional approach. J. Pers. Soc. Psychol. 1983, 44, 113.

- [53] Cialdini, R.B.; Brown, S.L.; Lewis, B.P.; Luce, C.; Neuberg, S.L. Reinterpreting the empathy–altruism relationship: When one into one equals oneness. J. Pers. Soc. Psychol. 1997, 73, 481.

- [54] Vitaglione, G.D.; Barnett, M.A. Assessing a New Dimension of Empathy: Empathic Anger as a Predictor of Helping and Punishing Desires. Motiv. Emot. 2003, 27, 301–325.

- [55] Rogers, C.R. The attitude and orientation of the counselor in client-centered therapy. J. Consult. Psychol. 1949, 13, 82–94.

- [56] Regan, D.T.; Totten, J. Empathy and attribution: Turning observers into actors. J. Pers. Soc. Psychol. 1975, 32, 850.

- [57] Gould, R.; Sigall, H. The effects of empathy and outcome on attribution: An examination of the divergent-perspectives hypothesis. J. Exp. Soc. Psychol. 1977, 13, 480–491.

- [58] Hogan, R. Development of an empathy scale. J. Consult. Clin. Psychol. 1969, 33, 307–316.

- [59] Mehrabian, A.; Epstein, N. A measure of emotional empathy. J. Pers. 1972, 40, 525–543.

- [60] Zaki, J.; Bolger, N.; Ochsner, K. It takes two: The interpersonal nature of empathic accuracy. Psychol. Sci. 2008, 19, 399–404.

- [61] Nichols, S.; Stich, S.; Leslie, A.; Klein, D. Varieties of off-line simulation. In Theories of Theories of Mind; Cambridge University Press: Cambridge, UK, 1996; pp. 39–74.

- [62] Moffat, D. Personality parameters and programs. In Creating Personalities for Synthetic Actors; Springer: Berlin/Heidelberg, Germany, 1997; pp. 120–165.

- [63] Lee, K.M.; Peng, W.; Jin, S.V.; Yan, C. Can Robots Manifest Personality? An Empirical Test of Personality Recognition, Social Responses, and Social Presence in Human–Robot Interaction. J. Commun. 2006, 56, 754–772.

- [64] Walters, M.L.; Syrdal, D.S.; Dautenhahn, K.; Boekhorst, R.T.; Koay, K.L. Avoiding the uncanny valley: Robot appearance, personality and consistency of behavior in an attention-seeking home scenario for a robot companion. Auton. Robot. 2008, 24, 159–178.

- [65] Baron-Cohen, S.; Wheelwright, S. The Empathy Quotient: An Investigation of Adults with Asperger Syndrome or High Functioning Autism, and Normal Sex Differences. J. Autism Dev. Disord. 2004, 34, 163–175.

- [66] Riek, L.D.; Paul, P.C.; Robinson, P.M. When my robot smiles at me: Enabling human-robot rapport via real-time head gesture mimicry. J. Multimodal User Interfaces 2009, 3, 99–108.

- [67] Krebs, D. Empathy and altruism. J. Pers. Soc. Psychol. 1975, 32, 1134.

- [68] Krebs, D.L. Altruism: An examination of the concept and a review of the literature. Psychol. Bull. 1970, 73, 258–302.

- [69] Singer, T.; Seymour, B.; O’Doherty, J.P.; Stephan, K.E.; Dolan, R.J.; Frith, C.D. Empathic neural responses are modulated by the perceived fairness of others. Nature 2006, 439, 466–469.

- [70] Lamm, C.; Batson, C.D.; Decety, J. The Neural Substrate of Human Empathy: Effects of Perspective-taking and Cognitive Appraisal. J. Cogn. Neurosci. 2007, 19, 42–58.

- [71] Patterson, M.L. Invited article: A parallel process model of nonverbal communication. J. Nonverbal Behav. 1995, 19, 3–29.

- [72] Saarela, M.V.; Hlushchuk, Y.; Williams, A.C.d.C.; Schürmann, M.; Kalso, E.; Hari, R. The compassionate brain: Humans detect intensity of pain from another’s face. Cereb. Cortex 2006, 17, 230–237.

- [73] Becker, C.; Prendinger, H.; Ishizuka, M.; Wachsmuth, I. Evaluating affective feedback of the 3D agent max in a competitive cards game. In Affective Computing and Intelligent Interaction; Springer: Berlin/Heidelberg, Germany, 2005; pp. 466–473.

- [74] Bickmore, T.W.; Picard, R.W. Establishing and maintaining long-term human-computer relationships. ACM Trans. Comput.-Hum. Interact. 2005, 12, 293–327.

- [75] Boukricha, H.; Wachsmuth, I.; Carminati, M.N.; Knoeferle, P. A computational model of empathy: Empirical evaluation. In Proceedings of the 2013 Humaine Association Conference on Affective Computing and Intelligent Interaction, Geneva, Switzerland, 2–5 September 2013; pp. 1–6.

- [76] Brave, S.; Nass, C.; Hutchinson, K. Computers that care: Investigating the effects of orientation of emotion exhibited by an embodied computer agent. Int. J. Hum. Comput. Stud. 2005, 62, 161–178.

- [77] Lisetti, C.; Amini, R.; Yasavur, U.; Rishe, N. I Can Help You Change! An Empathic Virtual Agent Delivers Behavior Change Health Interventions. ACM Trans. Manag. Inf. Syst. 2013, 4, 19.

- [78] McQuiggan, S.W.; Robison, J.L.; Phillips, R.; Lester, J.C. Modeling parallel and reactive empathy in virtual agents: An inductive approach. In Proceedings of the 7th International Joint Conference on Autonomous Agents and Multiagent Systems, Volume 1. International Foundation for Autonomous Agents and Multiagent Systems, Estoril, Portugal, 12–16 May 2008; pp. 167–174.

- [79] Ochs, M.; Sadek, D.; Pelachaud, C. A formal model of emotions for an empathic rational dialog agent. Auton. Agents Multi-Agent Syst. 2012, 24, 410–440.

- [80] Prendinger, H.; Ishizuka, M. The Empathic Companion: A Character-Based Interface That Addresses Users’ Affective States. Appl. Artif. Intell. 2005, 19, 267–285.

- [81] Rodrigues, S.H.; Mascarenhas, S.F.; Dias, J.; Paiva, A. “I can feel it too!”: Emergent empathic reactions between synthetic characters. In Proceedings of the 2009 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops, Amsterdam, The Netherlands, 10–12 September 2009; pp. 1–7.

- [82] Boukricha, H.; Wachsmuth, I. Empathy-Based Emotional Alignment for a Virtual Human: A Three-Step Approach. KI-Künstliche Intelligenz 2011, 25, 195–204.

- [83] Cramer, H.; Goddijn, J.; Wielinga, B.; Evers, V. Effects of (in)accurate empathy and situational valence on attitudes towards robots. In Proceedings of the 5th ACM/IEEE International Conference on Human Robot Interaction, Osaka, Japan, 2–5 March 2010; pp. 141–142.

- [84] Leite, I.; Castellano, G.; Pereira, A.; Martinho, C.; Paiva, A. Empathic Robots for Long-term Interaction. Int. J. Soc. Robot. 2014, 6, 329–341.

- [85] Charrier, L.; Galdeano, A.; Cordier, A.; Lefort, M. Empathy display influence on human-robot interactions: A pilot study. In Proceedings of the Workshop on Towards Intelligent Social Robots: From Naive Robots to Robot Sapiens at the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2018), Madrid, Spain, 1–5 October 2018; p. 7.

- [86] James, J.; Watson, C.I.; MacDonald, B. Artificial empathy in social robots: An analysis of emotions in speech. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; pp. 632–637.

- [87] Hegel, F.; Spexard, T.; Wrede, B.; Horstmann, G.; Vogt, T. Playing a different imitation game: Interaction with an Empathic Android Robot. In Proceedings of the 2006 6th IEEE-RAS International Conference on Humanoid Robots, Genova, Italy, 4–6 December 2006; pp. 56–61.

- [88] Churamani, N.; Barros, P.; Strahl, E.; Wermter, S. Learning Empathy-Driven Emotion Expressions using Affective Modulations. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

- [89] Park, U.; Kim, M.; Jang, Y.; Lee, G.; Kim, K.; Kim, I.-J.; Choi, J. Robot facial expression framework for enhancing empathy in human-robot interaction. In Proceedings of the 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN), Vancouver, BC, Canada, 8–12 August 2021; pp. 832–838.

- [90] Bagheri, E.; Esteban, P.G.; Cao, H.-L.; De Beir, A.; Lefeber, D.; Vanderborght, B. An Autonomous Cognitive Empathy Model Responsive to Users’ Facial Emotion Expressions. ACM Trans. Interact. Intell. Syst. 2020, 10, 1–23.

- [91] Leite, I.; Martinho, C.; Paiva, A. Social Robots for Long-Term Interaction: A Survey. Int. J. Soc. Robot. 2013, 5, 291–308.

- [92] Hegel, F.; Krach, S.; Kircher, T.; Wrede, B.; Sagerer, G. Understanding social robots: A user study on anthropomorphism. In Proceedings of the RO-MAN 2008-The 17th IEEE International Symposium on Robot and Human Interactive Communication, Munich, Germany, 1–3 August 2008; pp. 574–579.

- [93] Chovil, N. Social determinants of facial displays. J. Nonverbal Behav. 1991, 15, 141–154.

- [94] Barrett-Lennard, G.T. Dimensions of therapist response as causal factors in therapeutic change. Psychol. Monogr. Gen. Appl. 1962, 76, 1–36.

- [95] Urakami, J.; Moore, B.A.; Sutthithatip, S.; Park, S. Users’ Perception of Empathic Expressions by an Advanced Intelligent System. In Proceedings of the 7th International Conference on Human-Agent Interaction, Kyoto, Japan, 6 October 2019; pp. 11–18.

- [96] Prendinger, H.; Mori, J.; Ishizuka, M. Using human physiology to evaluate subtle expressivity of a virtual quizmaster in a mathematical game. Int. J. Hum. Comput. Stud. 2005, 62, 231–245.

- [97] Bartneck, C.; Forlizzi, J. A design-centred framework for social human-robot interaction. In Proceedings of the RO-MAN 2004 13th IEEE International Workshop on Robot and Human Interactive Communication (IEEE Catalog No 04TH8759), Kurashiki, Japan, 20–22 September 2004; pp. 591–594.

- [98] Yalçın, Ö.N.; DiPaola, S. A computational model of empathy for interactive agents. Biol. Inspired Cogn. Archit. 2018, 26, 20–25.

| Screening stage | Interpersonal | Human–Agent | Human–Robot |
|---|---|---|---|
| Abstract Screened | 1116 | 128 | 76 |
| Full-text Assessed | 232 | 27 | 21 |
| Studies Included | 70 | 10 | 12 |
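
The screening table reports only raw counts. As a small worked example, the Python sketch below derives stage-by-stage yields from those counts; the dictionary keys and the function name `screening_yields` are illustrative, and the percentages are computed here rather than reported by the review.

```python
# Counts copied from the screening table above; the rates are derived here
# and are not reported in the table itself.
COUNTS = {
    "Interpersonal": {"screened": 1116, "assessed": 232, "included": 70},
    "Human-Agent": {"screened": 128, "assessed": 27, "included": 10},
    "Human-Robot": {"screened": 76, "assessed": 21, "included": 12},
}


def screening_yields(c: dict) -> tuple:
    """Return three percentages rounded to one decimal:
    abstracts passed to full-text assessment, full texts included,
    and abstracts ultimately included."""
    return (
        round(100 * c["assessed"] / c["screened"], 1),
        round(100 * c["included"] / c["assessed"], 1),
        round(100 * c["included"] / c["screened"], 1),
    )


for stream, c in COUNTS.items():
    to_full_text, kept, overall = screening_yields(c)
    print(f"{stream}: {to_full_text}% to full text, "
          f"{kept}% of full texts included, {overall}% overall")
```

For the human–robot stream, for instance, about 28% of screened abstracts reached full-text assessment and about 57% of those were included, whereas only about 6% of interpersonal abstracts were ultimately included.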

| Emphasis on | Author(s) | Definition |
|---|---|---|
| Affective | [26] | “The vicarious experiencing of an emotion that is congruent with, but not necessarily identical to, the emotion of another individual (p. 146).” |
| | | “One specific set of congruent emotions, those feelings that are more other-focused than self-focused.” |
| | [27] | “An affective response that stems from the apprehension or comprehension of another’s emotional state or condition, and which is similar to what the other person is feeling or would be expected to feel (p. 71).” |
| | [28] | “Consists of a sort of ‘mimicking’ of one person’s affective state by that of another.” |
| | [2] | “An affective response more appropriate to another’s situation than one’s own (p. 4).” |
| | [29] | “Feeling what another person feels because something happens to them which does not require understanding another’s internal states (pp. 411–412).” |
| Cognitive | [30] | “The imaginative transposing of oneself into the thinking, feeling, and acting of another (p. 343).” |
| | [31] | “A form of complex psychological inference in which observation, memory, knowledge, and reasoning are combined to yield insights into the thoughts and feelings of others (p. 2).” |
| | [32] | “Ability to put yourself in the other person’s position, establish rapport, and anticipate his reaction, feelings, and behaviors (p. 269).” |
| Affective and Cognitive | [33] | “The capacity to understand and enter into another person’s feelings and emotions or to experience something from the other person’s point of view (p. 248).” |
| | [34] | “A set of constructs having to do with the responses of one individual to the experiences of another. These constructs include the processes taking place within the observer and the affective and non-affective outcome which result from those processes (p. 12).” |
| | [35] | “The capacities to resonate with another person’s emotion, understand his/her thoughts and feelings, separate our own thoughts and emotions from those of the observed and responding with the appropriate prosocial and helpful behavior (p. 201).” |

| Author | Purpose | Observer | Target | Relationship | Situation | Results |
|---|---|---|---|---|---|---|
| [73] | To increase the level of social engagement | Agent competitor in a neutral, self-centered, or empathic condition | Participant competitor motivated by a monetary reward for winning | Competitive power relationship (fear and anger) | Card game Skip-Bo | Participants in the empathic condition felt less lonely and perceived the agent as more caring, attractive, and human-like, but felt more stressed |
| [74] | To improve long-term relationship quality | Exercise advisor with or without empathic relationship-building skills | Exercise client | Advisor–client | Daily conversation about the target’s physical activity for a month | Participants respected, liked, and trusted the empathic agent more and wished to continue the interaction |
| [75] | To understand factors modulating an agent’s empathic behavior | Agent EMMA with varied mood | Agent MAX | Liking and familiarity were varied between the virtual agents | Three-way conversation among EMMA, MAX, and a participant | Participants liked the agent more when it empathized with the other agent |
| [76] | For the positive perception of agents | Agent (photographic human face) game player in a self-oriented or empathic condition | Participant game player | Co-present gamer (not a competition) | Each plays blackjack with a dealer (split-screen) | Participants liked and trusted the empathic agent more, perceived it as more caring, and felt more supported by it |
| [77] | To change health-related behaviors (alcohol consumption, exercising, drug use) | 3D personalized on-demand virtual counselor | Participant counselee | Counselor–counselee | Behavioral change in health interventions on excessive alcohol consumption | Participants accepted and enjoyed the empathic agent more and showed an intention to use the system longer |
| [78] | To investigate the effects of parallel and reactive virtual agent responses | Six agents with a reactive or parallel response | Member of a research team on an island | Inhabitant–researcher | Participants solve a mystery on an island while interacting with agents | A model was induced from positively perceived agent responses in terms of appropriateness and effectiveness |
| [79] | To investigate the effects of a dialogue agent with beliefs, uncertainties, and intentions | Expressive 3D talking head in an empathic or non-congruent empathic condition | Email user | Assistant–user | Participants converse with an agent to find information about their email (sender, message) | Participants perceived the non-congruent agent more negatively |
| [80] | To support job-seekers preparing for an interview by reducing their stress levels | Mail companion agent in a suit invisible to the interviewer agent | Participant interviewee | Companion | Job interview | Participants’ stress levels were reduced by empathic feedback |
| [81] | To establish a generic computational model of empathy | Four interacting virtual agents (each can be either an observer or a target) varied in mood and personality | Four virtual agents | Relationships among agents were varied in similarity, social bond, and liking | A short narrative in which virtual agents interact (compliment, criticize) in a schoolyard | Participants evaluated virtual agent–agent interactions from a video. They perceived virtual agents equipped with an empathy model more positively, especially an agent that carried out a prosocial behavior (comforting) |

| Author | Empathy Recognition | Process | Outcome | Empathy Responses |
|---|---|---|---|---|
| [73] | Affective states - Physiological data (skin conductance, EMG); Situation - User actions (moves in the game Skip-Bo) | Cognitive - Estimates whether the participant is happy or distressed | Affective - Parallel (positive), reactive (sympathy); Cognitive - Estimation of feelings | Facial expression and nonverbal voice (grunts, moans) |
| [74] | Situation - Dialogue (multiple choices) | Cognitive | Affective; Cognitive | TTS voice (“I’m sorry to hear that”), synchronized hand gestures, posture, gaze |
| [75] | Affective states - Facial expression of the virtual human MAX; Situation - Dialogue (praise, insult) | Affective - Motor mimicry (shared representation system) | Affective - Parallel, reactive | Facial expression, speech prosody, verbal utterance |
| [76] | Situation - User actions (the outcome of each round of blackjack) | Cognitive | Affective - Parallel; Cognitive - Estimation of feelings | Facial expression |
| [77] | Affective states - Facial expression; Situation - Dialogue | Affective - Motor mimicry (head posture); Cognitive - Perspective taking | Affective - Parallel, reactive; Cognitive | TTS voice, nonverbal (head nod, direction) |
| [78] | Affective states - Emotions selected by participants when asked, galvanic skin response, heart rate; Situation - The context in the island narrative | Affective; Cognitive | Affective - Parallel, reactive | One- or two-sentence text responses |
| [79] | Situation - Dialogue (multiple choices) | Cognitive | Affective - Congruent, incongruent | Facial expression, text responses |
| [80] | Affective states - Physiological data (skin conductance, EMG); Situation - Dialogue (multiple choices) | Cognitive | Cognitive - Interpersonal accuracy | Text responses (“It seems you did not like this question so much.”) |
| [81] | Affective states - Facial expression; Situation - Self-projection appraisal | Affective; Cognitive | Affective; Cognitive | Facial expression, text responses |

| Author | Purpose | Observer | Target | Relationship | Situation | Measures and Results |
|---|---|---|---|---|---|---|
| [83] | To investigate attitudes toward a robot with accurate or inaccurate empathy | A robot with a synthetic female voice | A male user | Collaborator | A male user and a robot played an online collaborative game. | Participants viewed a video of a robot empathizing with a user. Their trust decreased when the robot’s empathic responses were incongruent with the user. |
| [87] | To evaluate the acceptance of mimicked emotion | A robot that recognizes the emotion in the target’s voice and mirrors it with a facial expression of the parallel emotion | Human participant | Not defined | Participants read an emotion-embedded story. | Participants perceived the robot’s mimicking response as more adequate and human-like than the neutral response. |
| [36] | To investigate the effects of a robot’s empathic responses when the relationship was varied | A robot that reacts to chess moves empathically toward one player and neutrally toward the other | Two participants | The relationship between the robot and each participant was varied | Two humans played chess. | Participants perceived the empathic robot as friendlier than the non-empathic one. |
| [84] | To evaluate an empathic model for social robots interacting with children | A robot that reacts to the children’s chess moves based on its empathic appraisal of the children’s affect and the game state | Children | Not defined | A child played chess against the robot. | Participants gave positive ratings of social presence, engagement, help, and self-validation when interacting with the robot, and these ratings remained similar after five weeks. |
| [66] | To understand humans’ perception of the robot’s imitation | A robot with full head-gesture mimicking, partial mimicking (nodding only), or no mimicking | Human participant | Not defined | Participants made non-emotional personal statements and described salient personal experiences. | Male participants made more gestures than female participants while interacting with the robot. Participants showed coordinated gestures (co-nodding). |
| [86] | To understand humans’ perception of robot speech | A robot conversed with the participants in three situations (greeting, medicine reminder, guiding the user to use the touch interface) | Human participant | Not defined | Participants interacted with a Healthbot in the role of a patient. | Participants were able to perceive empathy and emotions in the robot’s speech and preferred it over the standard robotic voice. |
| [89] | To develop a deep learning model for a social robot that mirrors humans | A robot with a display that animates facial expressions | Human participant | Not defined | Participants conversed with the robot with various facial expressions. | Participants’ interaction data were used to train the model. |
| [90] | To evaluate a robot with an empathy model that simulates advanced empathy (i.e., reactive emotions) | A robot (Pepper) embedded with the proposed Autonomous Cognitive Empathy Model that expresses parallel and reactive emotions | Human participant | Not defined | Participants watched emotion-eliciting videos on the robot’s tablet and interacted with the robot. | Participants rated the robot embedded with the proposed model more favorably than the baseline model on social and friendship constructs. |
| [88] | To evaluate a robot with a deep hybrid neural model for multimodal affect recognition | A robot embedded with a model that simulates intrinsic emotions (i.e., mood) | Human participant | Not defined | Participants told the robot a story portraying different emotional contexts. | Independent annotators rated the robot’s performance (i.e., the accuracy of its empathic emotion) higher than the participants did. |
| [85] | To evaluate a robot with an empathy model that draws the participant’s attention when they are inattentive | A robot (Pepper) embedded with the attention-based empathic module to hold the participant’s attention | Human participant | Not defined | Participants answered a quiz on the robot’s tablet. | Participants perceived the empathic robot as more engaging and empathic and as spending more time than the non-empathic robot. |

| Author | Empathy Recognition | Process | Outcome | Empathy Responses |
|---|---|---|---|---|
| [83] | Situation - Win or lose the game | Cognitive | Affective; Cognitive | Facial expression, verbal responses |
| [87] | Affective states - Speech signal | Affective - Motor mimicry | Affective - Parallel | Facial expression |
| [36] | Situation - Appraisal of each move | Cognitive - Perspective taking | Affective; Cognitive | Facial expression, verbal responses |
| [84] | Affective states - Facial cues, head direction; Situation - Appraisal of each move | Cognitive - Perspective taking | Affective; Cognitive | Facial expression, verbal responses |
| [66] | Affective states - Facial expression | Affective - Motor mimicry | Affective - Parallel | Facial expression |
| [86] | Situation - e.g., time to take medicine | Cognitive | Affective; Cognitive | Verbal responses (the robot’s facial expression did not vary with emotion) |
| [89] | Affective states - Facial expression | Affective - Motor mimicry | Affective - Parallel | Facial expression |
| [90] | Affective states - Facial expression | Affective; Cognitive - Perspective taking | Affective - Parallel; Cognitive - Reactive | Facial expression, verbal responses, gestures |
| [88] | Affective states - Facial expression, speech signal | Affective | Affective - Parallel | Facial expression |
| [85] | Situation - Participant’s attentiveness | Cognitive | Affective | Facial expression, verbal responses, gestures |
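
Both component tables decompose each system along the same chain: the cues used for recognition (the target’s affective state, the situation, or both), the empathic process, the outcome (parallel or reactive), and the response modality. The Python sketch below illustrates only the parallel/reactive distinction under stated assumptions: the emotion labels, the situation names, and both lookup tables are hypothetical and are not taken from any of the reviewed systems, and the fallback from affective cues to situational appraisal is one possible design, not the model proposed in this review.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Outcome(Enum):
    PARALLEL = "parallel"  # observer's emotion matches the target's
    REACTIVE = "reactive"  # observer responds with an other-oriented emotion


# Hypothetical lookup tables; labels are illustrative, not from any reviewed system.
SITUATION_APPRAISAL = {"lost_round": "sad", "won_round": "happy"}
REACTIVE_RESPONSE = {"sad": "sympathy", "fear": "concern", "anger": "concern", "happy": "joy"}


@dataclass
class Cues:
    affective_state: Optional[str] = None  # e.g. output of a facial-expression classifier
    situation: Optional[str] = None        # e.g. an event in a game


def recognize(cues: Cues) -> Optional[str]:
    """Use the observed affective state if present; otherwise appraise
    the situation to estimate what the target would be expected to feel."""
    if cues.affective_state is not None:
        return cues.affective_state
    if cues.situation is not None:
        return SITUATION_APPRAISAL.get(cues.situation)
    return None


def empathic_emotion(cues: Cues, outcome: Outcome) -> Optional[str]:
    """Return the emotion the robot should express, or None if nothing was recognized."""
    target = recognize(cues)
    if target is None:
        return None
    if outcome is Outcome.PARALLEL:
        return target  # mirror the target, as in the motor-mimicry entries
    return REACTIVE_RESPONSE.get(target, "neutral")  # unmapped emotions fall back to neutral


print(empathic_emotion(Cues(affective_state="sad"), Outcome.PARALLEL))
print(empathic_emotion(Cues(situation="lost_round"), Outcome.REACTIVE))
```

With a sad facial expression as input, the parallel outcome mirrors "sad", while a lost game round with no affective cue is appraised as sad and answered reactively with "sympathy". Keeping recognition separate from the outcome choice lets the same cues drive either kind of response, which mirrors how the tables report recognition and outcome as independent columns.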


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Park, S.; Whang, M. Empathy in Human–Robot Interaction: Designing for Social Robots. Int. J. Environ. Res. Public Health 2022, 19, 1889. https://doi.org/10.3390/ijerph19031889