HIGEA: An Intelligent Conversational Agent to Detect Caregiver Burden

Abstract

1. Introduction

2. Related Work

3. HIGEA: A Conversational Agent for Caregivers

3.1. Requirements

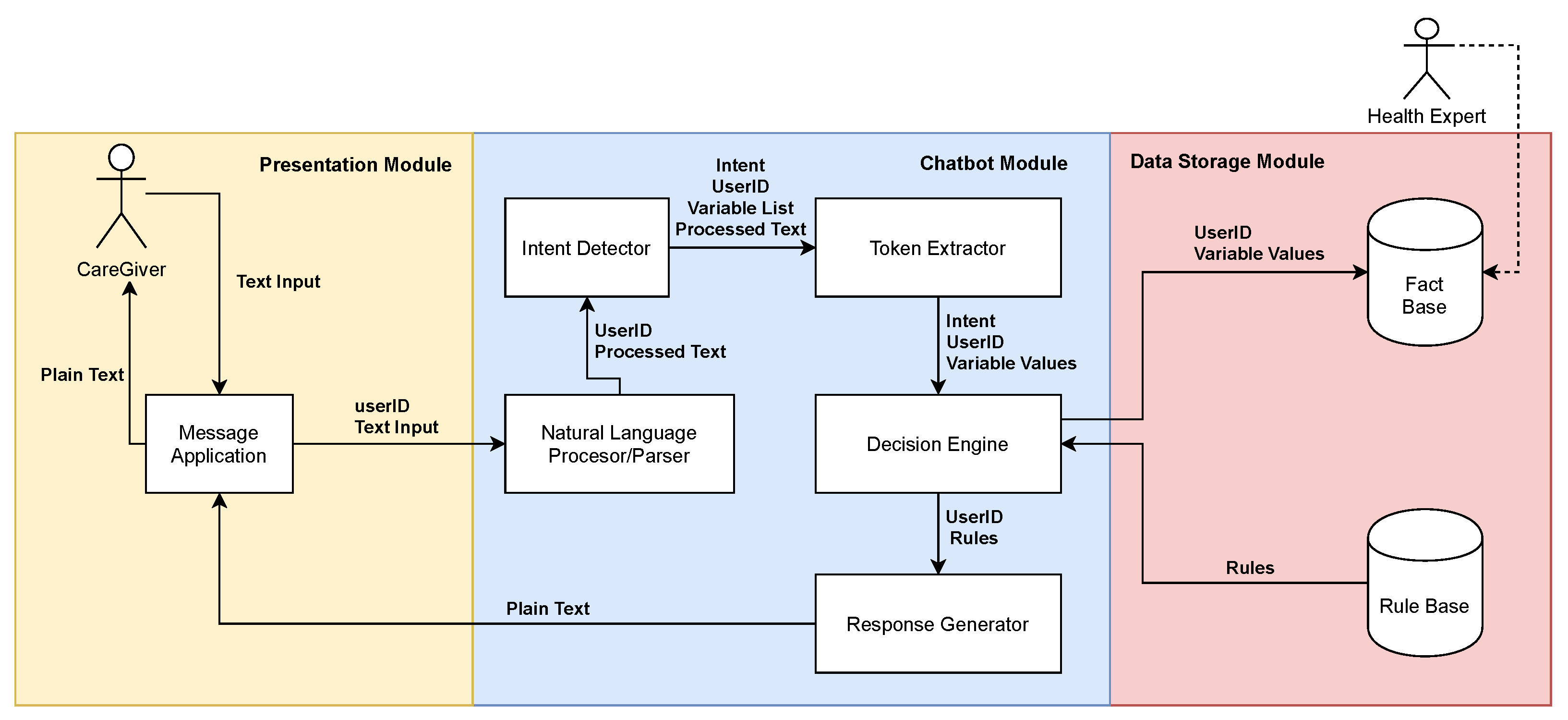

3.2. Architecture

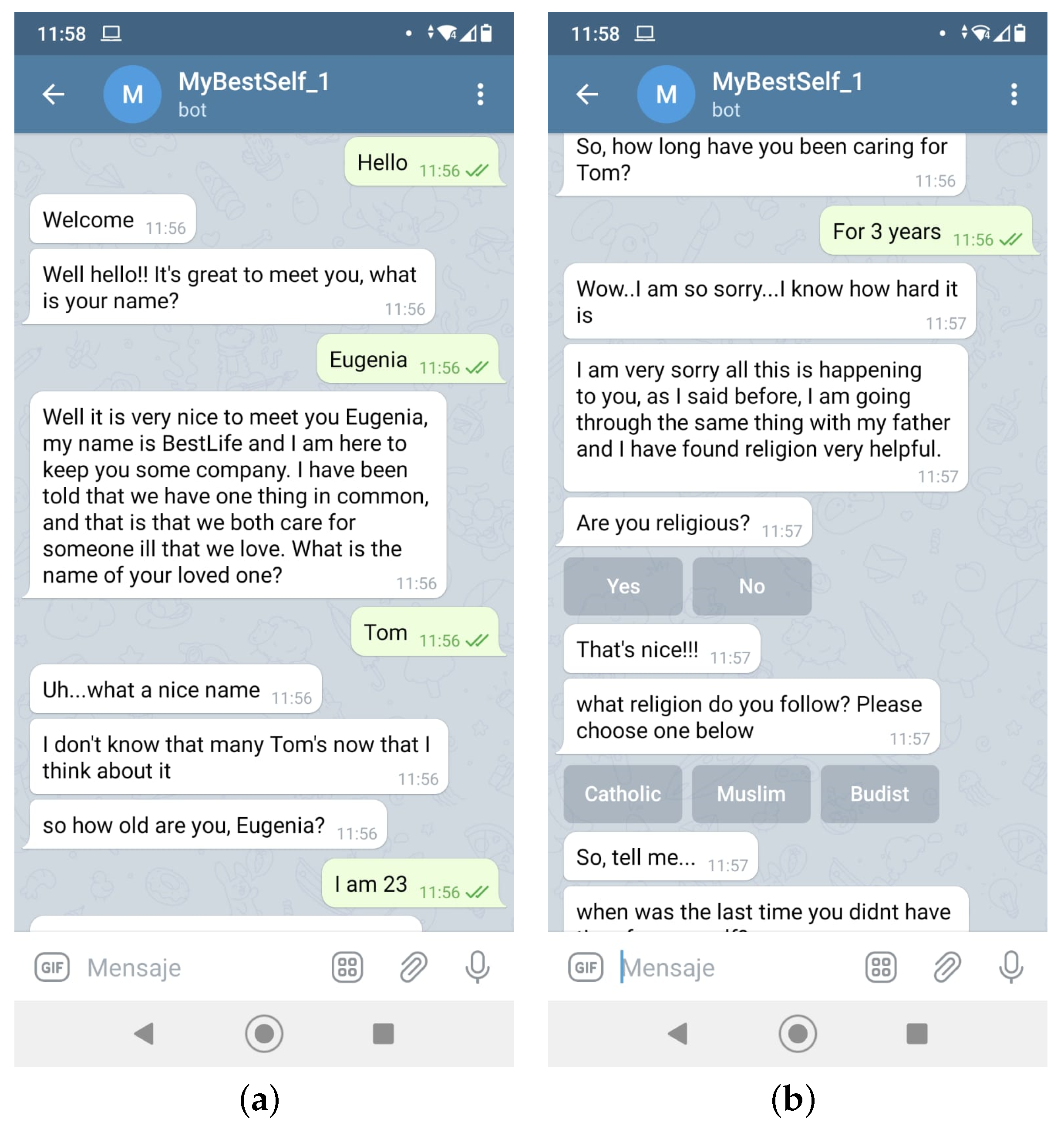

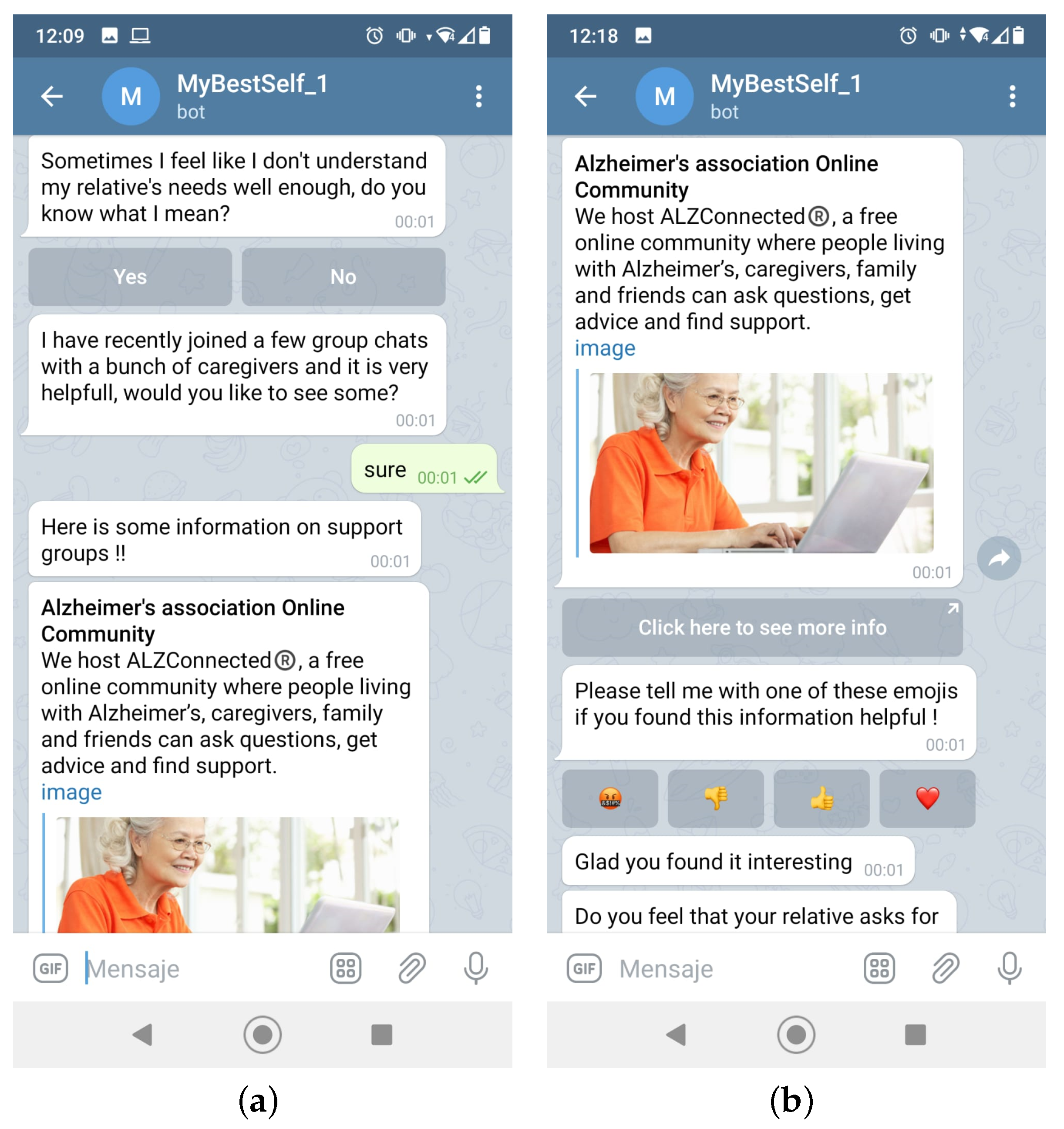

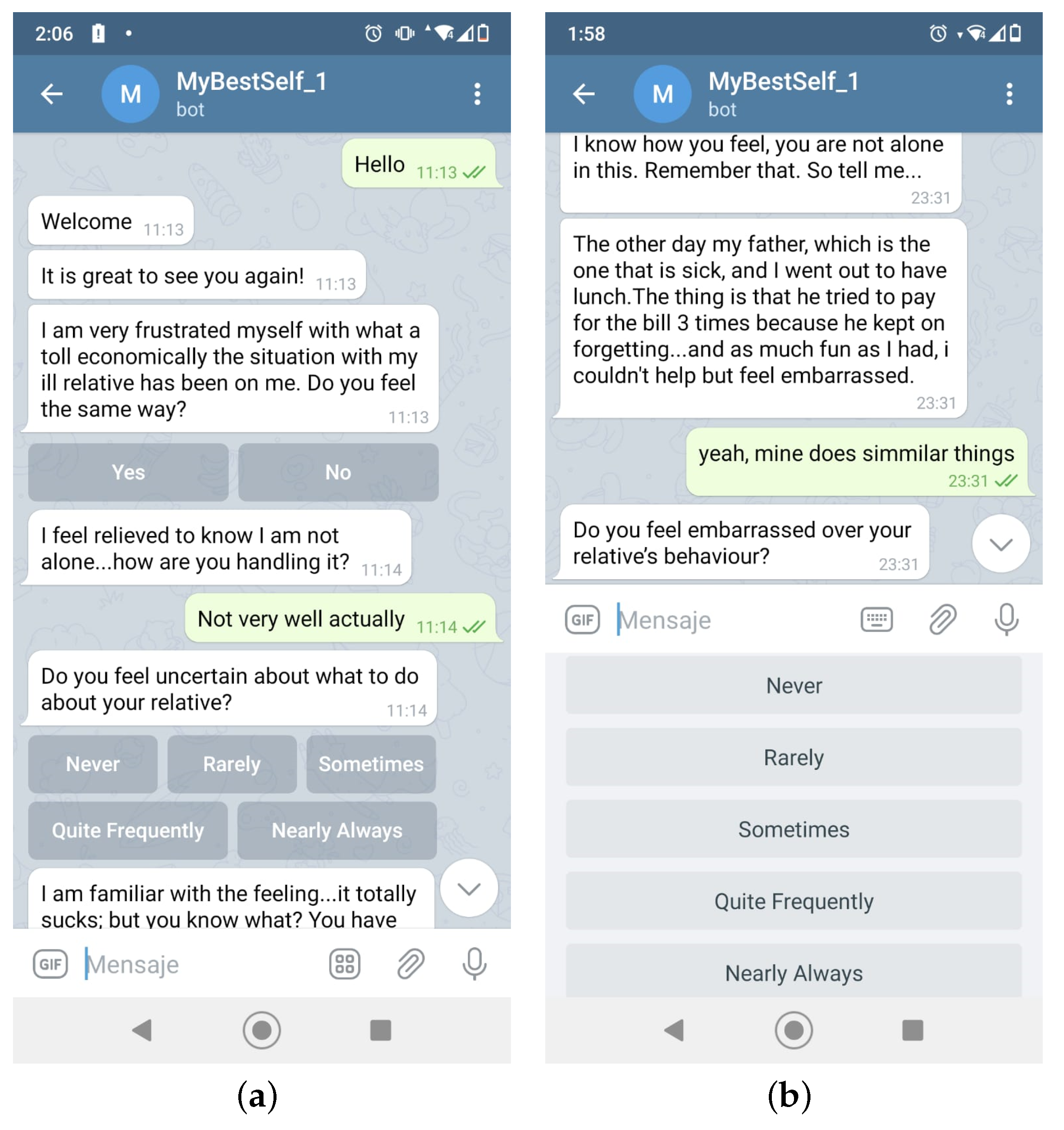

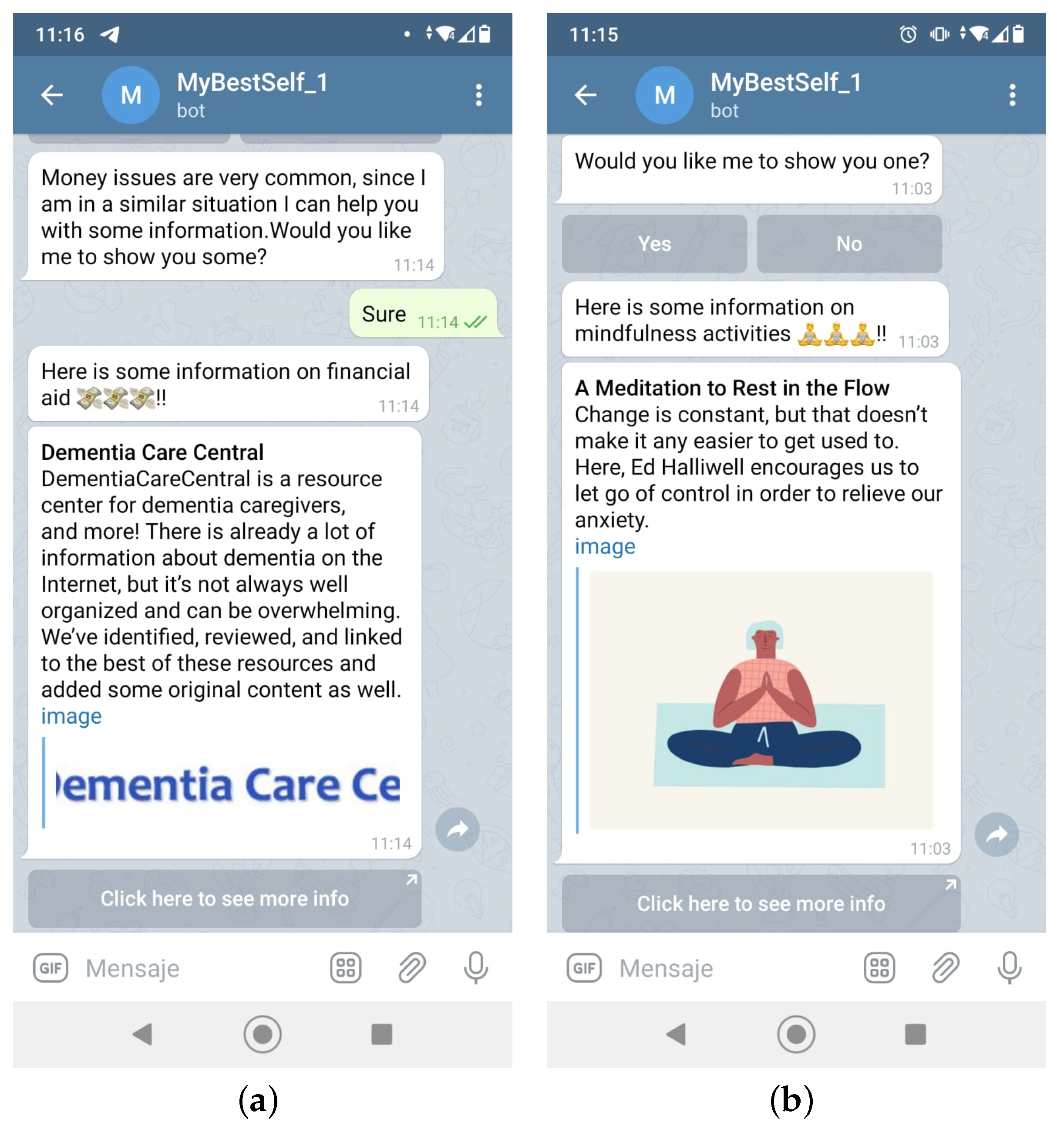

3.3. Implementation

4. Evaluation

4.1. Results

4.2. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| API | Application Programming Interface |
| ERDF | European Regional Development Fund |
| MBCT | Mindfulness-Based Cognitive Therapy |
| MYLO | Manage Your Life Online |
| NLP | Natural Language Processor |
| RB | Rule-Based |
| SUS | System Usability Scale |
| USA | United States of America |


| Group | A Social Life | B Money/Means | C Expectations | D Personal Values |
|---|---|---|---|---|
| Question IDs | 3, 6, 12, 13 | 7, 15, 16, 19 | 1, 8, 14, 18–22 | 2, 4, 5, 9, 10, 11, 17 |
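As a sketch of how the question grouping could be applied, the Python snippet below sums a caregiver's answers per group. It assumes answers are keyed by 1-based item ID and coded 0–4, as in the standard 22-item Zarit Burden Interview; `ZBI_GROUPS` and `group_subscores` are hypothetical names for illustration, not part of HIGEA's published code.

```python
from typing import Dict, List

# Hypothetical mapping: item IDs per group, copied from the grouping table.
# The range "18–22" is expanded literally, so item 19 appears in both B and C
# and the four subscores are not guaranteed to add up to the total score.
ZBI_GROUPS: Dict[str, List[int]] = {
    "A Social Life": [3, 6, 12, 13],
    "B Money/Means": [7, 15, 16, 19],
    "C Expectations": [1, 8, 14, 18, 19, 20, 21, 22],
    "D Personal Values": [2, 4, 5, 9, 10, 11, 17],
}


def group_subscores(responses: Dict[int, int]) -> Dict[str, int]:
    """Sum the answers that belong to each group.

    `responses` maps a 1-based item ID (1..22) to its answer, assumed to be
    coded 0-4. A missing item raises KeyError.
    """
    return {
        group: sum(responses[item] for item in items)
        for group, items in ZBI_GROUPS.items()
    }
```

For example, answering 2 to every item gives subscores of 8, 8, 16 and 14 for groups A to D.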

| Score | Interpretation |
|---|---|
| 0–20 | Little to no burden |
| 21–40 | Mild to moderate burden |
| 41–60 | Moderate to severe burden |
| 61–88 | Severe burden |
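The score bands can be applied mechanically once the 22 answers are summed. The sketch below assumes each answer is coded 0–4, which is consistent with the 0–88 range of the table (22 × 4 = 88); `zbi_total`, `interpret`, and `BURDEN_BANDS` are hypothetical names chosen for illustration.

```python
from typing import Sequence

# Score bands from the interpretation table (inclusive bounds).
BURDEN_BANDS = [
    (0, 20, "Little to no burden"),
    (21, 40, "Mild to moderate burden"),
    (41, 60, "Moderate to severe burden"),
    (61, 88, "Severe burden"),
]


def zbi_total(answers: Sequence[int]) -> int:
    """Total score from 22 answers, each assumed to be an integer 0-4."""
    if len(answers) != 22:
        raise ValueError("expected 22 answers")
    if any(a not in range(5) for a in answers):
        raise ValueError("each answer must be an integer from 0 to 4")
    return sum(answers)


def interpret(total: int) -> str:
    """Map a total score (0-88) to the burden level in the table."""
    for low, high, label in BURDEN_BANDS:
        if low <= total <= high:
            return label
    raise ValueError(f"score {total} is outside 0-88")
```

For instance, `interpret(zbi_total([2] * 22))` returns "Moderate to severe burden", since a total of 44 falls in the 41–60 band.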

| Options | Score 0–20 | Score 21–40 | Score 41–60 | Score 61–88 |
|---|---|---|---|---|
| Visualization | X | X | X | |
| Breathing | X | X | X | |
| Muscle | X | X | X | |
| Testimonies | X | X | X | |
| MBCT | X | X | | |
| Support | X | X | | |
| Economic | X | X | | |
| None | X | X | X | X |

| Participant | Gender | Age (years) | Current Job | Sick Family Member |
|---|---|---|---|---|
| A | Female | 53 | Housekeeper | Father |
| B | Female | 24 | Civil Servant | Grandfather |
| C | Male | 55 | Civil Servant | Mother |
| D | Female | 50 | Lawyer | Partner |
| E | Female | 15 | Student | Father |
| F | Female | 47 | Baker | Father |
| G | Female | 69 | Housekeeper | Husband |
| H | Female | 20 | Student | Grandmother |
| I | Female | 58 | Health Professional | Great aunt |
| J | Male | 59 | Civil Servant | Mother |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Castilla, E.; Escobar, J.J.; Villalonga, C.; Banos, O. HIGEA: An Intelligent Conversational Agent to Detect Caregiver Burden. Int. J. Environ. Res. Public Health 2022, 19, 16019. https://doi.org/10.3390/ijerph192316019