Evaluation of the Second Premolar’s Bud Position Using Computer Image Analysis and Neural Modelling Methods

Abstract

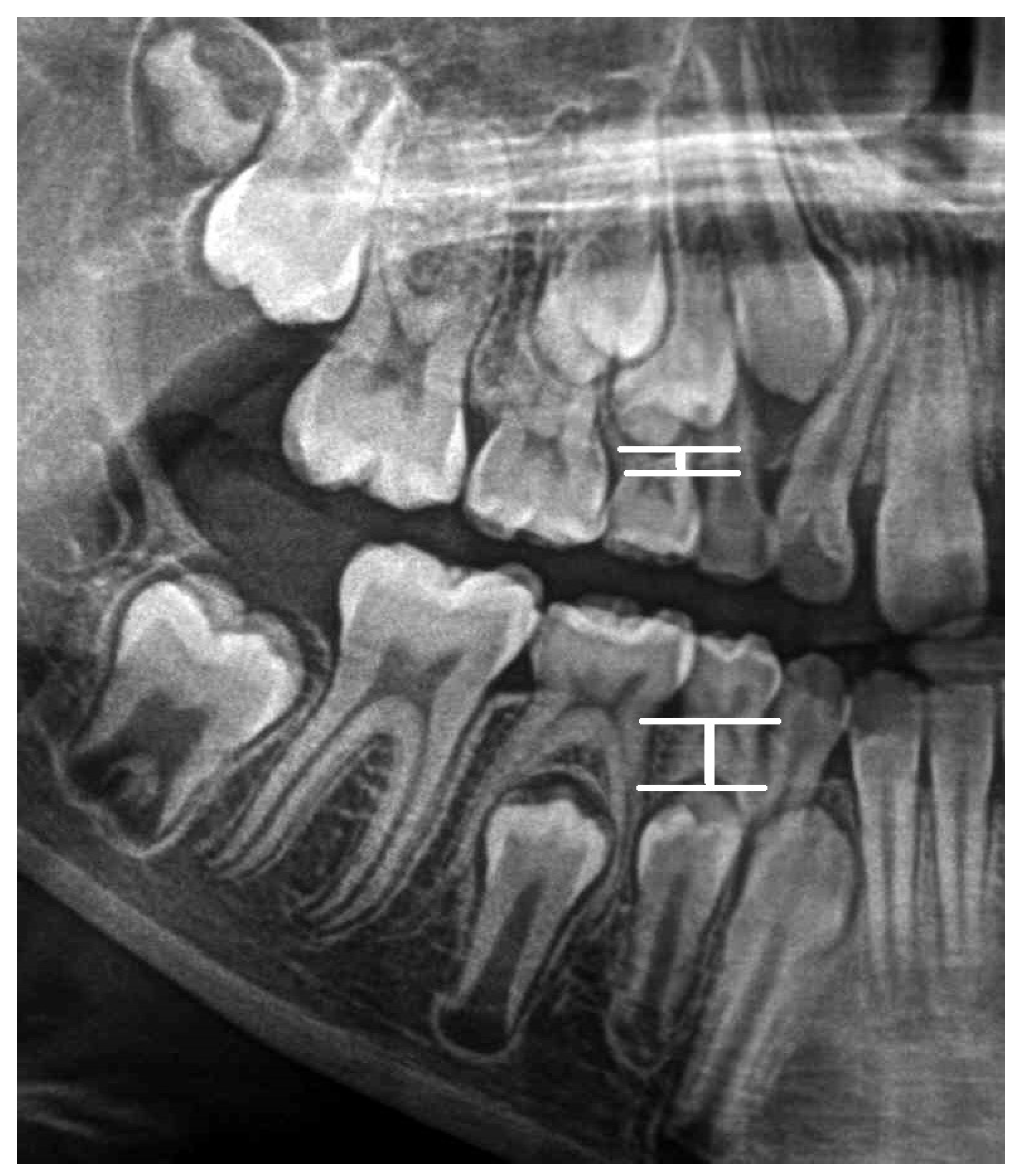

1. Introduction

2. The Aim

2.1. Material

2.2. Methods

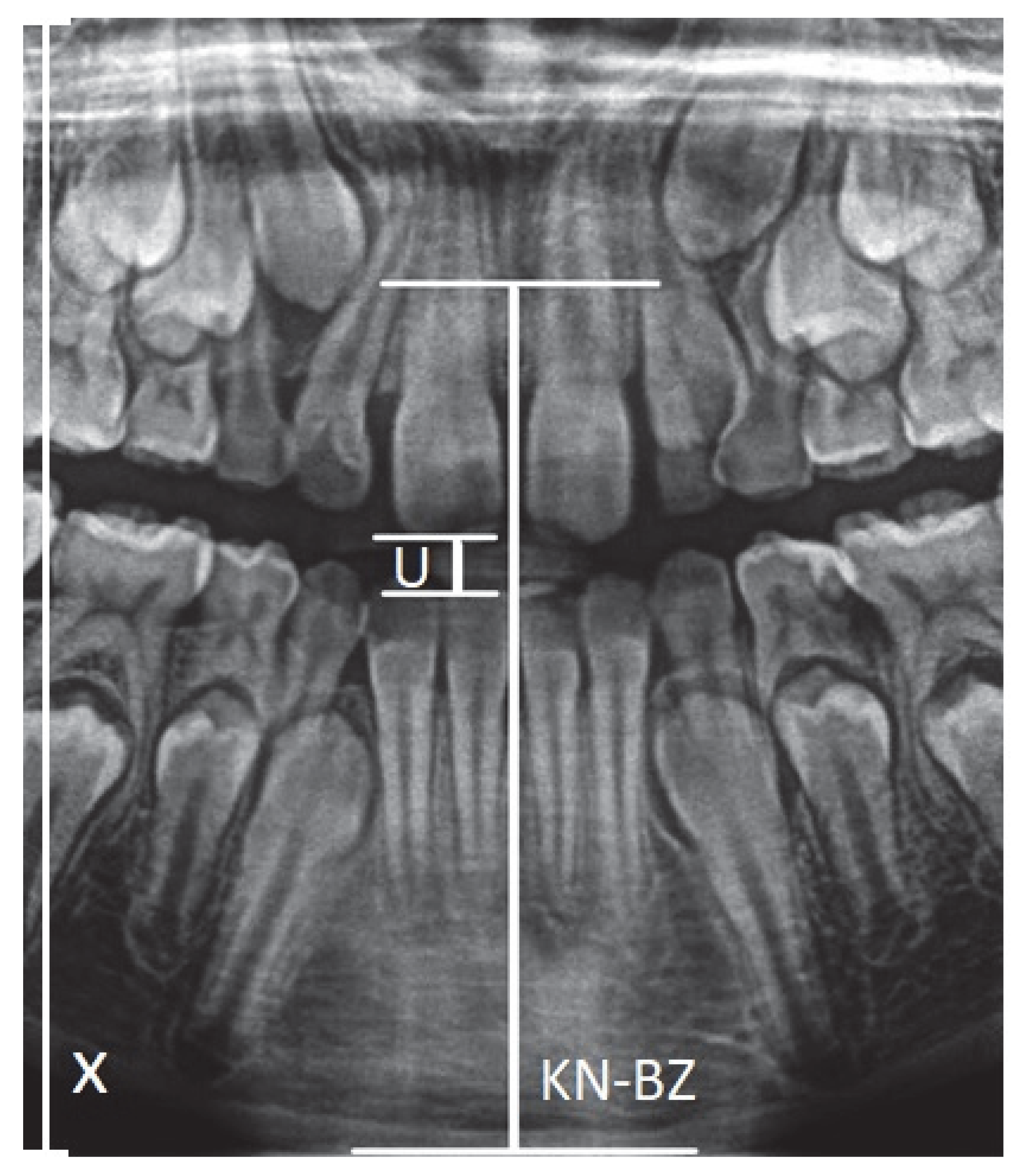

- X-axis: left vertical edge of the pantomographic radiograph.

- U: width of the mouthpiece of the X-ray equipment.

- KN-BZ: distance from the anterior nasal spine to the lower mandibular margin.
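The Abbreviations table defines AV as the ratio of the distance |KN-BZ| to U, and each tooth indicator (CQ, CS, ..., DQ) as the ratio of a tooth distance to AV. The following is a minimal sketch of that arithmetic, assuming the distances have already been measured on the radiograph in a common unit. The function names and the sample values are hypothetical and are not taken from the study.

```python
def av_ratio(kn_bz: float, u: float) -> float:
    """AV: the distance |KN-BZ| divided by the mouthpiece width U."""
    if u <= 0:
        raise ValueError("U must be positive")
    return kn_bz / u


def tooth_ratio(distance: float, av: float) -> float:
    """Tooth indicator (e.g. CQ or DI): a tooth distance divided by AV."""
    if av <= 0:
        raise ValueError("AV must be positive")
    return distance / av


# Hypothetical measurements in pixels, for illustration only.
av = av_ratio(kn_bz=900.0, u=60.0)
cq = tooth_ratio(distance=300.0, av=av)
```

Dividing by AV rather than using raw distances is one way to make indicators comparable between radiographs taken at different magnifications, since U is a physical reference of known width.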

2.3. Analysis of the Collected Data

- LS 2021,04,25 Q-I
  - NETWORK INPUT: GENDER, AGE, AV, CQ, CS, CU, CW, CY, DA, DC, DE, DG, DW, ED, EL
  - NETWORK OUTPUT: DI

- LS 2021,04,25 Q-II
  - NETWORK INPUT: GENDER, AGE, AV, CQ, CS, CU, CW, CY, DA, DC, DE, DK, DY, EE, EP
  - NETWORK OUTPUT: DM

- LS 2021,04,25 Q-III
  - NETWORK INPUT: GENDER, AGE, AV, CQ, CS, CU, CW, CY, DA, DC, DE, DS, EC, EG, EX
  - NETWORK OUTPUT: DU

- LS 2021,04,25 Q-IV
  - NETWORK INPUT: GENDER, AGE, AV, CQ, CS, CU, CW, CY, DA, DC, DE, DO, EA, EF, ET
  - NETWORK OUTPUT: DQ

- LS 2021,04,25 4Q
  - NETWORK INPUT: GENDER, AGE, AV, CQ, CS, CU, CW, CY, DA, DC, DE, DG, DK, DO, DS, DW, DY, EA, EC, ED, EE, EF, EG, EL, EP, ET, EX
  - NETWORK OUTPUT: DM, DI, DU, DQ
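The five learning sets above share eleven input variables and differ only in four quadrant-specific inputs and in the output. As a hedged illustration, the sets can be written down as a small Python mapping and used to split one patient record into network inputs and outputs. The names `COMMON`, `LEARNING_SETS`, and `split_record` are hypothetical, and the record format (a dict keyed by variable name) is an assumption, not the authors' data layout.

```python
# Inputs shared by every learning set listed above.
COMMON = ["GENDER", "AGE", "AV", "CQ", "CS", "CU", "CW", "CY", "DA", "DC", "DE"]

# Variable names are copied from the learning-set list; the structure is illustrative.
LEARNING_SETS = {
    "Q-I": {"inputs": COMMON + ["DG", "DW", "ED", "EL"], "outputs": ["DI"]},
    "Q-II": {"inputs": COMMON + ["DK", "DY", "EE", "EP"], "outputs": ["DM"]},
    "Q-III": {"inputs": COMMON + ["DS", "EC", "EG", "EX"], "outputs": ["DU"]},
    "Q-IV": {"inputs": COMMON + ["DO", "EA", "EF", "ET"], "outputs": ["DQ"]},
    "4Q": {
        "inputs": COMMON + [
            "DG", "DK", "DO", "DS", "DW", "DY", "EA", "EC",
            "ED", "EE", "EF", "EG", "EL", "EP", "ET", "EX",
        ],
        "outputs": ["DM", "DI", "DU", "DQ"],
    },
}


def split_record(record: dict, set_name: str) -> tuple[list, list]:
    """Return (inputs, outputs) for one record, ordered as in the learning set."""
    spec = LEARNING_SETS[set_name]
    x = [record[name] for name in spec["inputs"]]
    y = [record[name] for name in spec["outputs"]]
    return x, y
```

Written this way, it is easy to check that each quadrant set has 15 inputs and one output, and that the 27 inputs of the 4Q set are exactly the union of the four quadrant sets.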

2.4. Neural Modeling

2.4.1. LS 2021,04,25 Q-I

2.4.2. LS 2021,04,25 Q-II

2.4.3. LS 2021,04,25 Q-III

2.4.4. LS 2021,04,25 Q-IV

2.4.5. LS 2021,04,25 I-IV

2.4.6. Summary of the Models for Each Quadrant

2.4.7. LS 2021,04,25 4Q

3. Summary of Models

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Abbreviations

| No. | Abbr. | Description of Abbreviation |
|---|---|---|
| 1 | KN | anterior nasal spine |
| 2 | BZ | lower mandibular margin (a point in the projection of a line parallel to the X axis passing through the anterior nasal spine) |
| 3 | S11 | incisal margin of tooth 11 |
| 4 | S21 | incisal margin of tooth 21 |
| 5 | S12 | incisal margin of tooth 12 |
| 6 | S22 | incisal margin of tooth 22 |
| 7 | S31 | incisal margin of tooth 31 |
| 8 | S41 | incisal margin of tooth 41 |
| 9 | S32 | incisal margin of tooth 32 |
| 10 | S42 | incisal margin of tooth 42 |
| 11 | C13 | top of cusp of canine 13 |
| 12 | C23 | top of cusp of canine 23 |
| 13 | C33 | top of cusp of canine 33 |
| 14 | C43 | top of cusp of canine 43 |
| 15 | C14 | top of buccal cusp of tooth 14 |
| 16 | C15 | top of buccal cusp of tooth 15 |
| 17 | C24 | top of buccal cusp of tooth 24 |
| 18 | C25 | top of buccal cusp of tooth 25 |
| 19 | C34 | top of buccal cusp of tooth 34 |
| 20 | C35 | top of buccal cusp of tooth 35 |
| 21 | C44 | top of buccal cusp of tooth 44 |
| 22 | C45 | top of buccal cusp of tooth 45 |
| 23 | AV | ratio of the distance \|KN-BZ\| to U (the width of the mouthpiece of the X-ray equipment) |
| 24 | CQ | ratio of the S11-W11 distance to AV |
| 25 | CS | ratio of the S12-W12 distance to AV |
| 26 | CU | ratio of the S21-W21 distance to AV |
| 27 | CW | ratio of the S22-W22 distance to AV |
| 28 | CY | ratio of the S31-W31 distance to AV |
| 29 | DA | ratio of the S41-W41 distance to AV |
| 30 | DC | ratio of the S32-W32 distance to AV |
| 31 | DE | ratio of the S42-W42 distance to AV |
| 32 | DG | ratio of the S14-W14 distance to AV |
| 33 | DK | ratio of the S24-W24 distance to AV |
| 34 | DS | ratio of the S34-W34 distance to AV |
| 35 | DO | ratio of the S44-W44 distance to AV |
| 36 | DW | ratio of the S13-W13 distance to AV |
| 37 | DY | ratio of the S23-W23 distance to AV |
| 38 | EC | ratio of the S33-W33 distance to AV |
| 39 | EA | ratio of the S43-W43 distance to AV |
| 40 | DI | ratio of the S15-W15 distance to AV |
| 41 | DM | ratio of the S25-W25 distance to AV |
| 42 | DU | ratio of the S35-W35 distance to AV |
| 43 | DQ | ratio of the S45-W45 distance to AV |
| 44 | MLP | multilayer perceptron |
| 45 | RBF | radial basis function |
| 46 | RMSE | root mean square error |


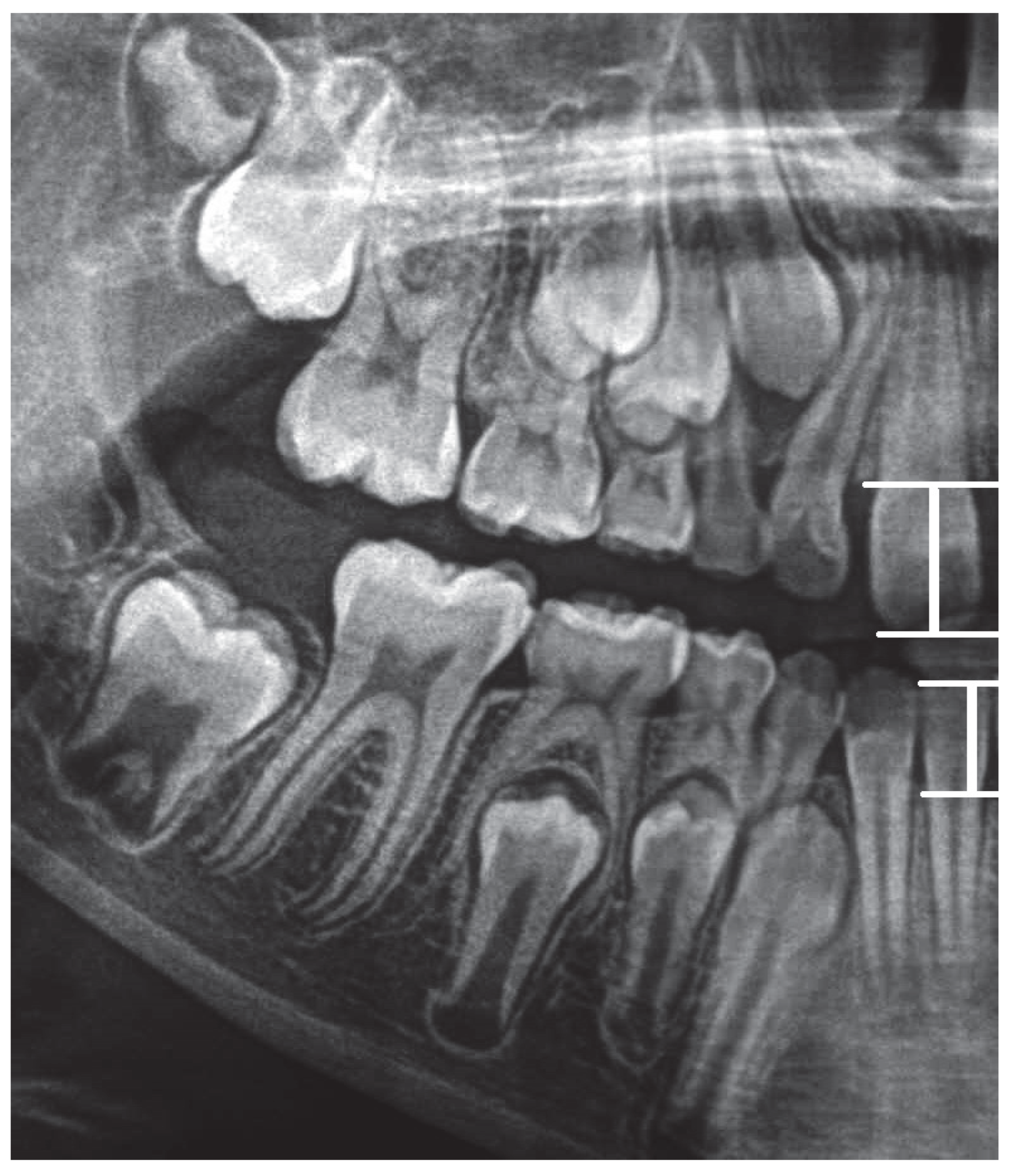

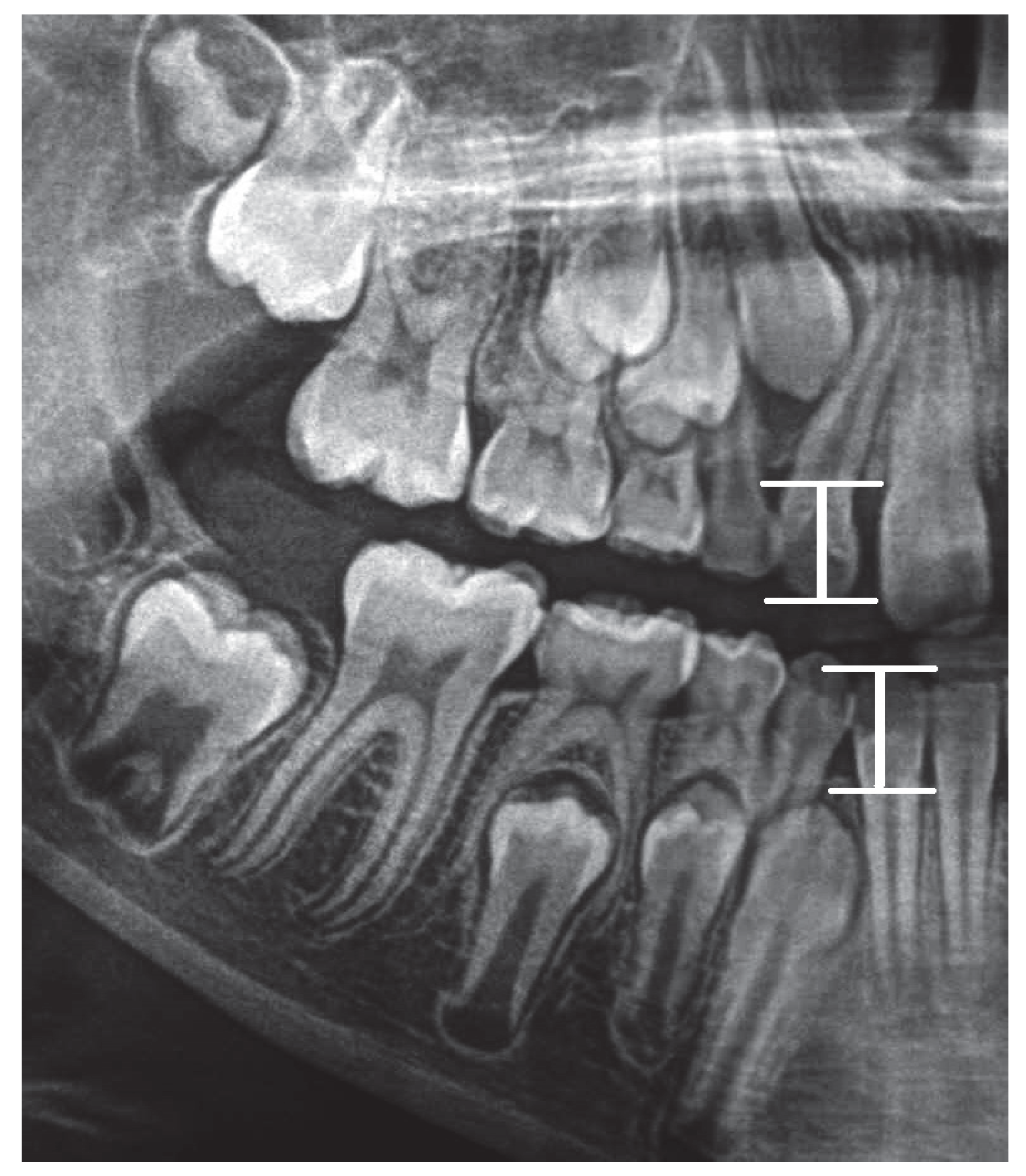

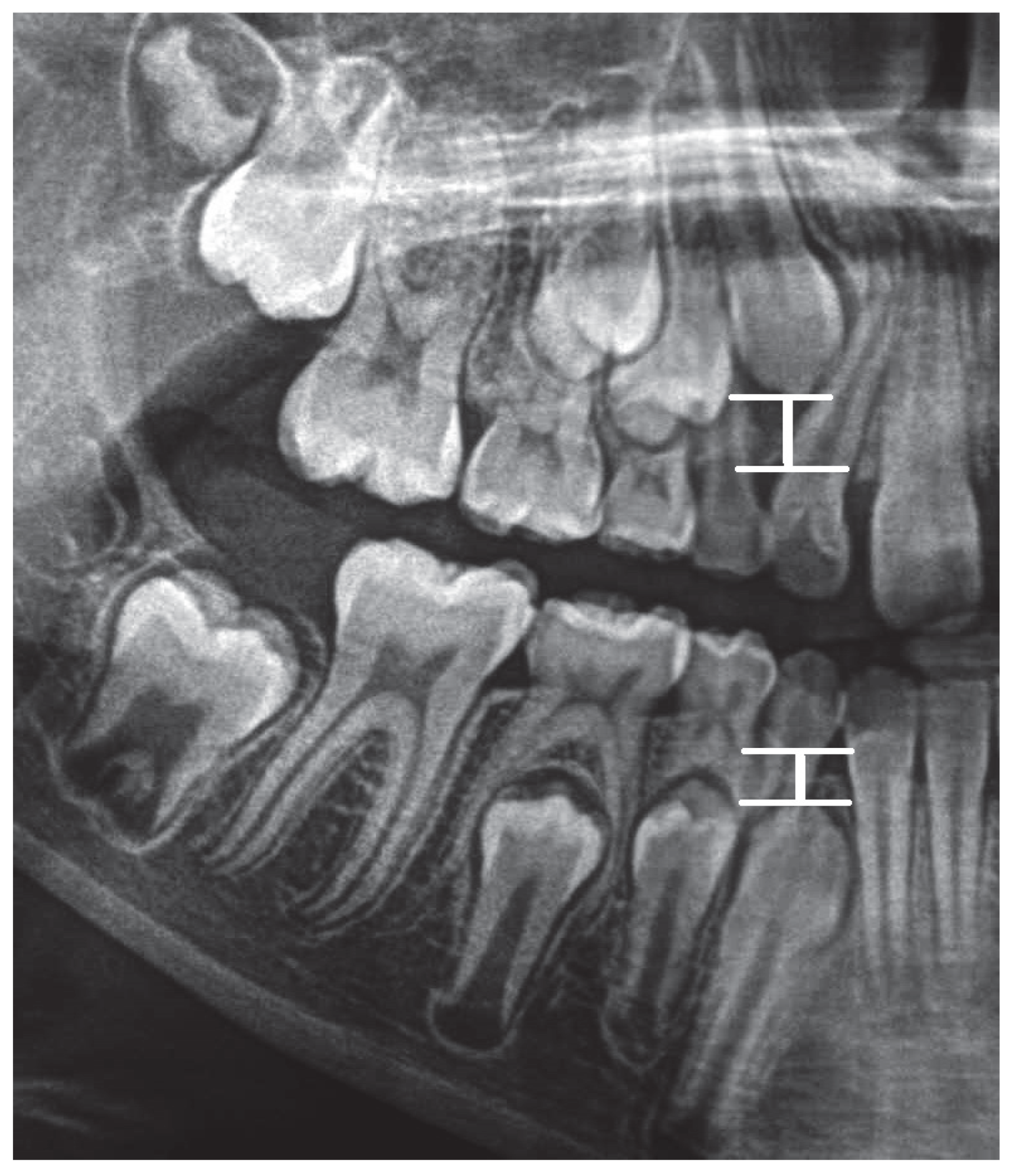

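| No. | Description of Ratio | Ratio |
|---|---|---|
| 1 | AV: ratio of the distance \|KN-BZ\| to U (the width of the mouthpiece of the X-ray equipment) | $\text{AV} = \lvert\text{KN-BZ}\rvert / U$ |
| 2 | CQ: ratio of the S11-W11 distance to AV | $\text{CQ} = \lvert\text{S11-W11}\rvert / \text{AV}$ |
| 3 | CS: ratio of the S12-W12 distance to AV | $\text{CS} = \lvert\text{S12-W12}\rvert / \text{AV}$ |
| 4 | CU: ratio of the S21-W21 distance to AV | $\text{CU} = \lvert\text{S21-W21}\rvert / \text{AV}$ |
| 5 | CW: ratio of the S22-W22 distance to AV | $\text{CW} = \lvert\text{S22-W22}\rvert / \text{AV}$ |
| 6 | CY: ratio of the S31-W31 distance to AV | $\text{CY} = \lvert\text{S31-W31}\rvert / \text{AV}$ |
| 7 | DA: ratio of the S41-W41 distance to AV | $\text{DA} = \lvert\text{S41-W41}\rvert / \text{AV}$ |
| 8 | DC: ratio of the S32-W32 distance to AV | $\text{DC} = \lvert\text{S32-W32}\rvert / \text{AV}$ |
| 9 | DE: ratio of the S42-W42 distance to AV | $\text{DE} = \lvert\text{S42-W42}\rvert / \text{AV}$ |
| 10 | DG: ratio of the S14-W14 distance to AV | $\text{DG} = \lvert\text{S14-W14}\rvert / \text{AV}$ |
| 11 | DK: ratio of the S24-W24 distance to AV | $\text{DK} = \lvert\text{S24-W24}\rvert / \text{AV}$ |
| 12 | DS: ratio of the S34-W34 distance to AV | $\text{DS} = \lvert\text{S34-W34}\rvert / \text{AV}$ |
| 13 | DO: ratio of the S44-W44 distance to AV | $\text{DO} = \lvert\text{S44-W44}\rvert / \text{AV}$ |
| 14 | DW: ratio of the S13-W13 distance to AV | $\text{DW} = \lvert\text{S13-W13}\rvert / \text{AV}$ |
| 15 | DY: ratio of the S23-W23 distance to AV | $\text{DY} = \lvert\text{S23-W23}\rvert / \text{AV}$ |
| 16 | EC: ratio of the S33-W33 distance to AV | $\text{EC} = \lvert\text{S33-W33}\rvert / \text{AV}$ |
| 17 | EA: ratio of the S43-W43 distance to AV | $\text{EA} = \lvert\text{S43-W43}\rvert / \text{AV}$ |
| 18 | DI: ratio of the S15-W15 distance to AV | $\text{DI} = \lvert\text{S15-W15}\rvert / \text{AV}$ |
| 19 | DM: ratio of the S25-W25 distance to AV | $\text{DM} = \lvert\text{S25-W25}\rvert / \text{AV}$ |
| 20 | DU: ratio of the S35-W35 distance to AV | $\text{DU} = \lvert\text{S35-W35}\rvert / \text{AV}$ |
| 21 | DQ: ratio of the S45-W45 distance to AV | $\text{DQ} = \lvert\text{S45-W45}\rvert / \text{AV}$ |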

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cieślińska, K.; Zaborowicz, K.; Zaborowicz, M.; Biedziak, B. Evaluation of the Second Premolar’s Bud Position Using Computer Image Analysis and Neural Modelling Methods. Int. J. Environ. Res. Public Health 2022, 19, 15240. https://doi.org/10.3390/ijerph192215240
