Deep Mobile Linguistic Therapy for Patients with ASD

Abstract

1. Introduction

Contributions

2. Related Work and Problem Statement

2.1. Related Work

- Speech and language therapy assessment: Speech and language therapy may be recommended for children who have difficulty developing communication skills [15]. It can be initiated by the child’s primary care physician or pediatrician, as well as by the parents. The speech and language therapist’s job is to examine a child’s communication abilities and work with parents (and schools) to help the child develop them. The importance of early diagnosis has drawn researchers’ attention to machine learning-based procedures [16]. Early and accurate detection of ASD is therefore required to support treatment planning; alongside the patient history and other medical tests, brain MR scans can contribute to distinguishing ASD from controls [17]. The focus of therapy is on successful communication in its broadest sense rather than on speech development alone. The therapist may conduct assessments of varying degrees of formality to provide advice that is specific to the individual child. Several tests have been standardized, which means we know how children normally perform at different ages and genders, taking into account the expected variation in the population. These tests, however, have all been standardized on children who are developing typically and do not have any physical, sensory, or cognitive disabilities. Because they frequently rely on physical responses such as pointing to pictures, handling toys, or speaking, administering them to children with Communication Problems (CP) may be unfair. When the tests are adapted for children who eye-point or need extra help such as signing, precise scoring criteria cannot be used, so any comparison with typically developing children should be interpreted with caution. Despite their flaws, assessments can be useful for establishing a profile of a child’s communication strengths and weaknesses, following the child’s progress over time, and advising on areas of development to focus on in therapy.
Most of these children need assistance in their daily lives, especially when communicating, interacting, and behaving with others [18,19].

- Other approaches: Hard coding and advances using Natural Language Processing with Sentiment Analysis are discussed in [20]. The authors also consider dividing verbs into three types: Descriptive Action Verbs (DAVS), Interpretation Action Verbs (IAVS), and State Verbs (SVS). In all of the experiments, a particular issue arose with participants regarding the position of a verb in the linguistic category model used, sometimes related to shared meaning between words, for example “A hurts B” and “A kicks B”. Other approaches studied in [20] combine Sentiment Analysis with Linguistic Semantic extraction. Pictures are not used because their use is reported to make no statistically significant difference. Finally, the use of Information and Communication Technologies (ICT) is not new. ICT has been widely studied [21], and most researchers agree on the following taxonomy:

- (a) Number of Vocalizations: In this approach, the software is important but limited. An evaluation of HyperStudio 3.2 with eight preschool children with autism (and eight matched children without autism) suggested the program’s potential for teaching problem-solving skills to children with and without autism. Children with autism were significantly less able than their peers to generate new ideas [22]. The software presents several screens with videos from which the user with ASD can select content. This content is related to a specific topic, for example, greetings and directions. The patient has to be assisted by a tutor but can, at the same time, indirectly develop communication skills. However, this approach is reported to be limited because the patient cannot select other peers. Spoken Impact Project Software (SIPS), which was introduced to emphasize the social aspects of online collaborative learning (OCL), expresses the degree to which online collaborative learning environments support social aspects through social affordances, captured by the sociability attribute [23]. It relates visuals to audio, but it is also limited by its low functionality for ASD patients.

- (b) Vocabulary expansion: The number of words presented increases, which stimulates the children to learn more. This approach is more graphical and reinforces the given commands with images and sounds. One example is Baldi, a 3D language tutor that offers reading and speech exercises to further stimulate learning. Baldi has been successful in teaching vocabulary and grammar to children with autism and those with hearing problems. The study in [24] assessed to what extent the face facilitated this learning process relative to the voice alone. Baldi was implemented in a Language Wizard/Tutor, which allows the easy creation and presentation of a vocabulary lesson involving the association of pictures and spoken words [24]. One reported limitation is that the software’s difficulty level is set high, which can demotivate children.

- (c) Communication in Social Context: In this approach, the authors investigate software that they developed themselves. The work relates to speech categories observed in ASD, such as Delayed Echolalia, Immediate Echolalia, Irrelevant Speech, Relevant Speech, and Communicative Initiations [25]. The patient selects an animation and the software provides all the possibilities; a reported drawback is that the user’s interest in communication skills then declines [26].

- (d) General Communication Learning: Using mobile devices, the patient selects pictures from the screen and the device stores them. In this way, the user can construct sentences. This software can support the learning process, but it complicates the situation because it does not allow direct communication with other people.

- (e) Commercial Tools: Different tools are available on the market, but most are very generic tools for cognitive and communication impairments. Others are created for populations with different levels of ASD and other needs. Tools mentioned include Zac Browser and Boardmaker, described as multimedia software created especially for recreation and entertainment with predefined content, and Teach Town, which covers other areas and was developed for children between 2 and 6 years old.

2.2. Problem Statement

- Teachers or relatives do not have experience. New teachers or relatives, and even the most experienced ones, have probably never handled such a case before, and they do not ask for help immediately.

- Lack of access to important information about symptoms. Information and its sources are sometimes lacking, and some people in the family and social group do not know the symptoms. For example, learning difficulty is an implicit symptom, which affects the ability to understand the students.

- Some students do not perceive their condition and do not understand it. Physically, patients with ASD look the same as other people, so the difference cannot be seen. If these disabilities are not disclosed, some teachers or relatives will simply assume that the children are being lazy or are not focused in class. This causes frustration because, even when they try to find ways of improving, teachers cannot see progress with conventional methods.

- Tools for rehabilitation: There are many systems and hardware devices that can provide therapy, but in most cases they focus on one specific autism syndrome that differs from the others. Furthermore, not all patients, families, hospitals, clinics, or physicians have access to such technology, especially in developing countries.

3. Proposed System and Architecture

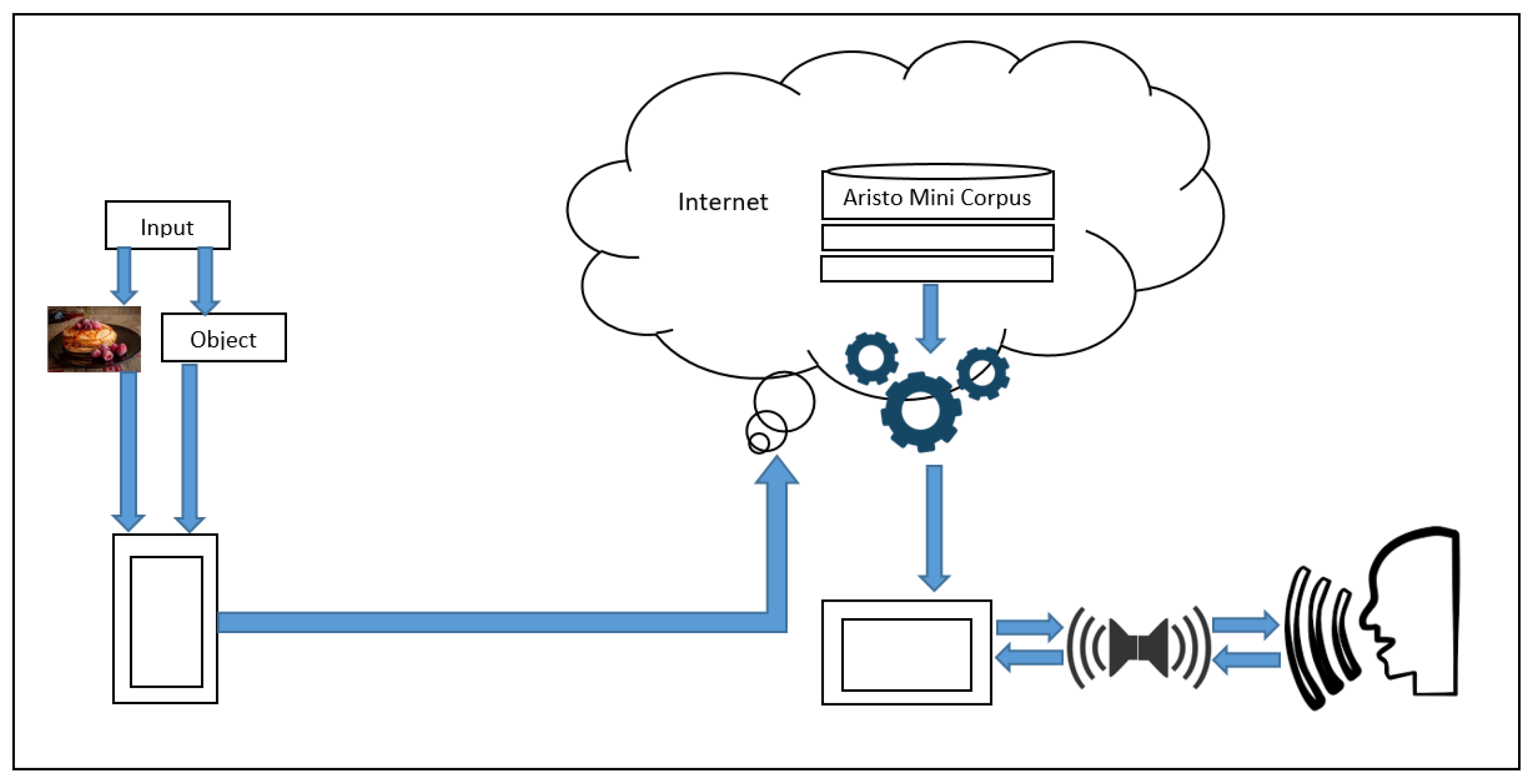

3.1. Software Architecture

- Mobile Image Classification;

- Image Labelling Annotation;

- Cloud Processing of words with sentence selection;

- Information Retrieval;

- Text-to-Speech;

- Speech-to-Text.

3.2. CALTECH 256 Dataset

3.3. System Design

3.4. Binary Search Algorithm

3.5. Aristo Mini-Corpus

3.6. iOS AVKit

4. Experiments and Discussion

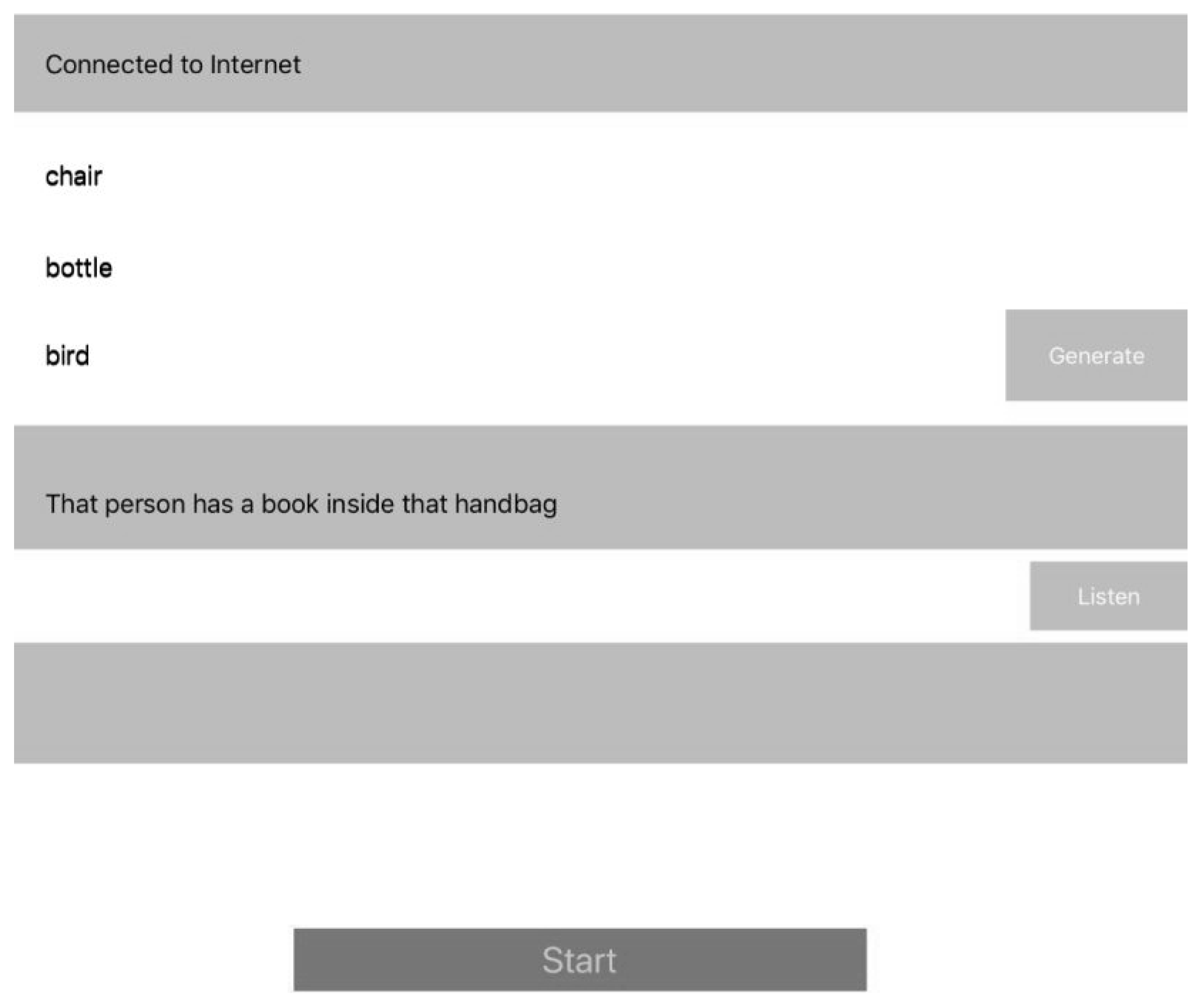

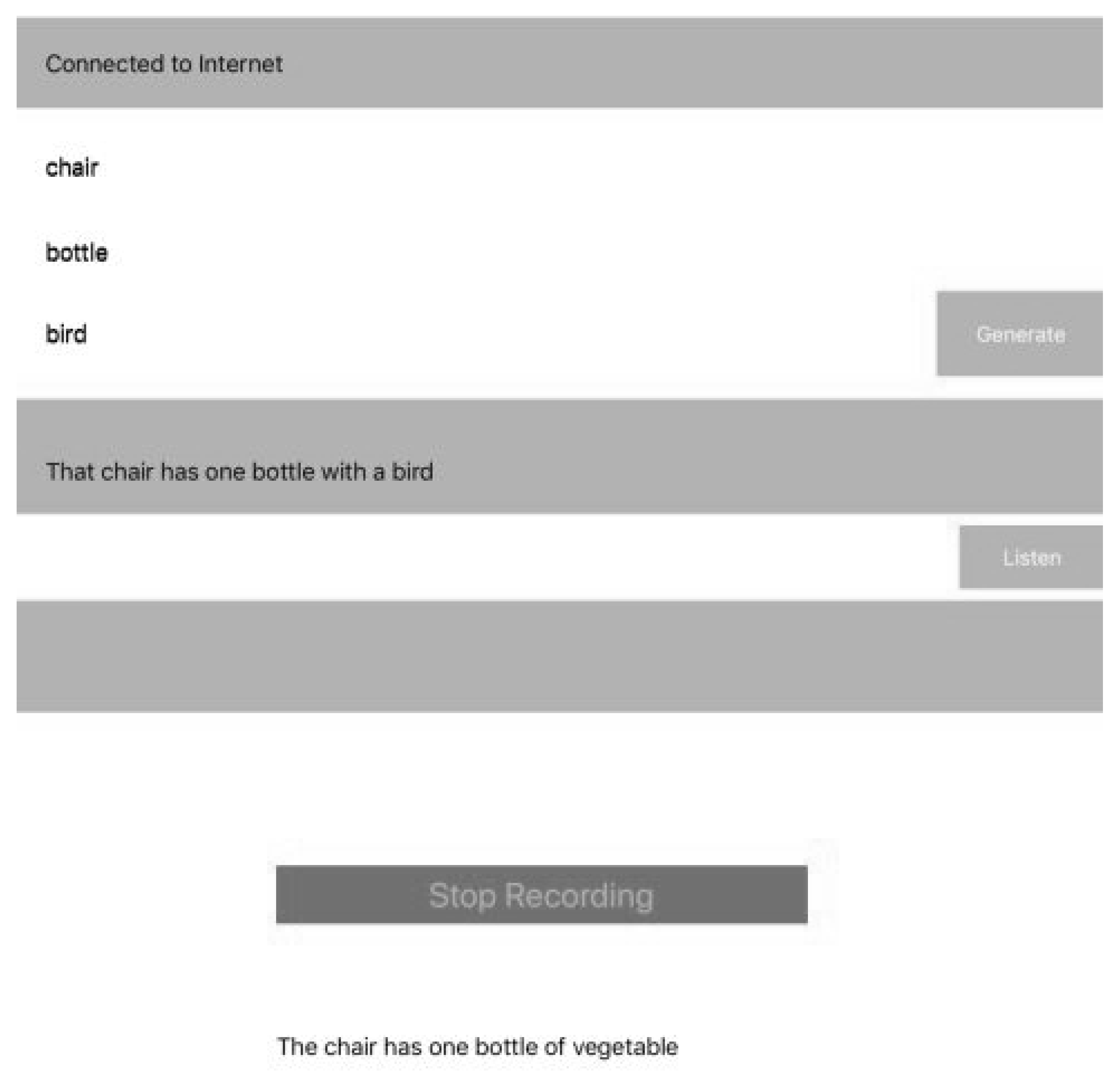

4.1. System Overview

4.2. Therapy System Operation Process Methodology

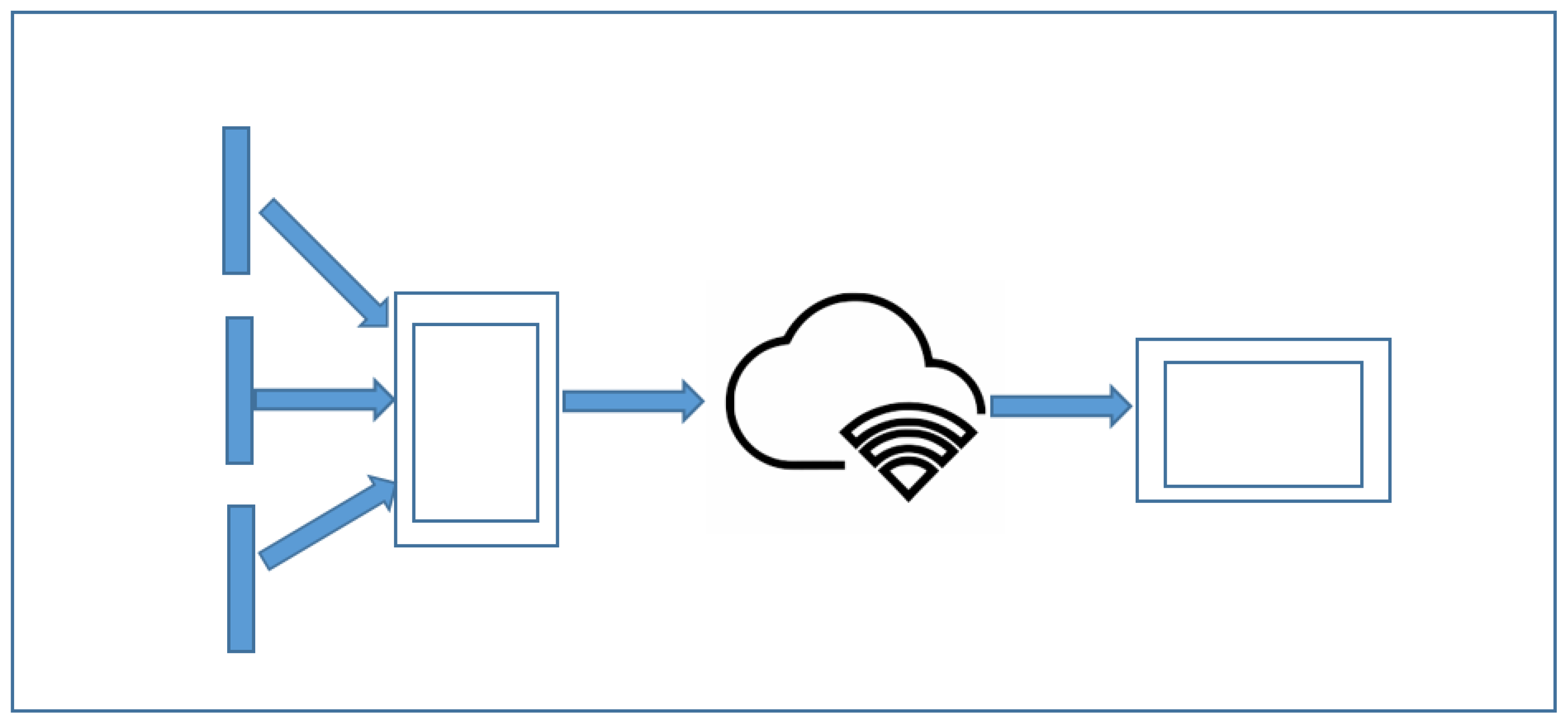

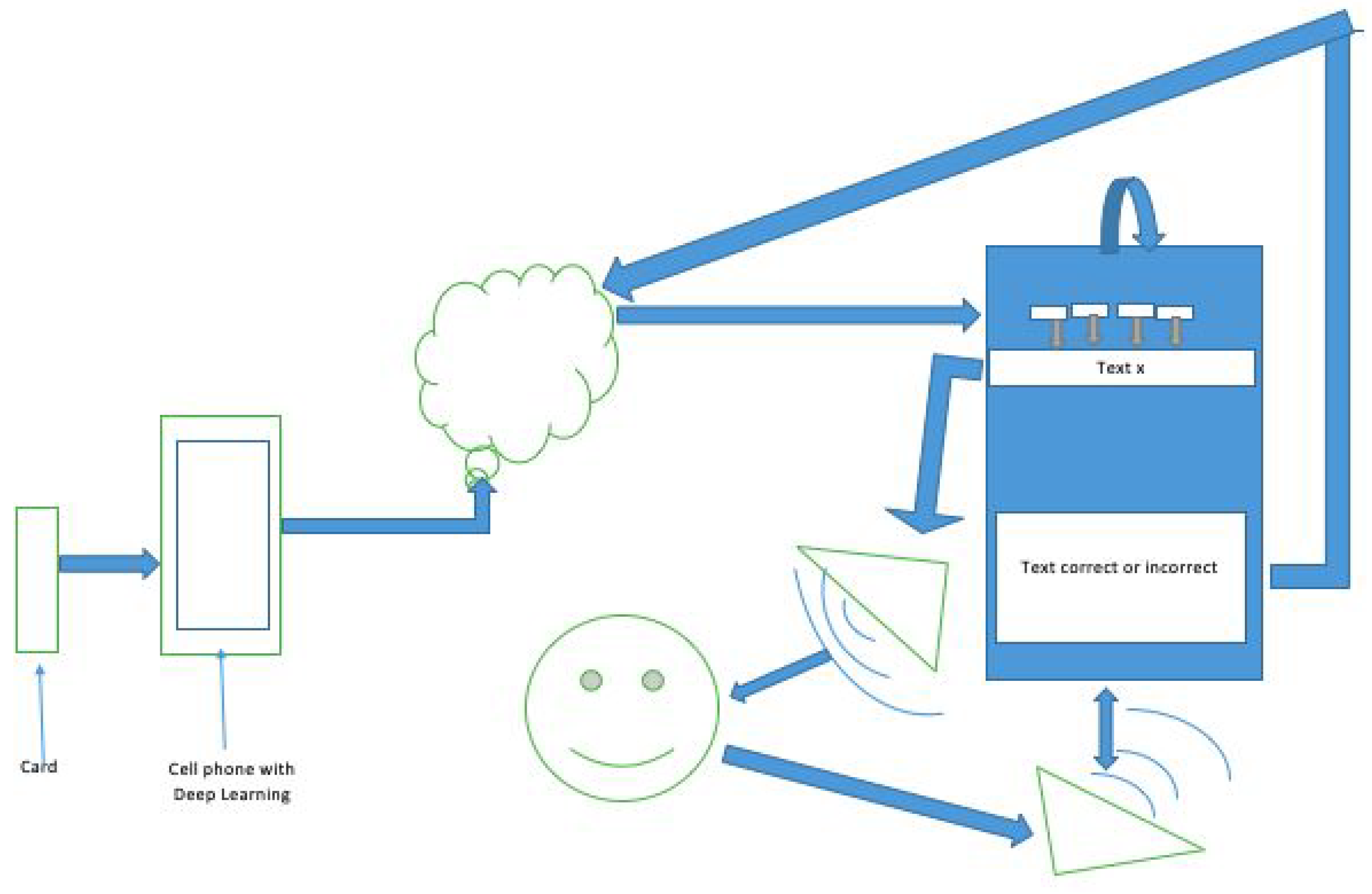

- The patient uses a certain number of picture cards, or selects pictures on the device where TensorFlow is installed and running;

- The person who attends to or cares for the patient verifies that the patient has completed step 1; the images are then converted into words (text);

- After step 2, Binary Search takes the words and selects the best-matching sentence from a small portion of the Aristo Mini Corpus dataset stored on the first device;

- Once step 3 is completed, the original words and the sentence are sent over the internet to the main Aristo Mini Corpus database for the same comparison;

- When step 4 ends, only one sentence remains, and an asynchronous process automatically downloads it to the iPad (the second device, which serves as the therapy device);

- When step 5 is over and the sentence appears, the iPad plays it automatically using AVKit;

- The patient should repeat what the iPad device tells them (patient, therapist, or others):

- (a) Yes: If the sentence is pronounced correctly, the patient receives a point and an attempt percentage.

- (b) No: The device asks the patient to repeat the sentence up to a certain number of times before the patient is allowed to change it, and the iPad saves the number of attempts and 0 points. However, if the patient then pronounces it correctly, a point is awarded and the number of repetitions is saved.

- When the therapy session ends, the iPad sends the collected statistics to the cloud, including the number of therapy sessions that day, the date, and the patient code;

- Once the statistics are in the cloud, the iPad notifies the doctor; Google provides its own tools for viewing the statistics of each patient, without the need for home visits or more frequent hospital visits.
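The sentence-selection and scoring steps above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the tiny `CORPUS` list stands in for the on-device portion of the Aristo Mini Corpus, and the sorted word index, the word-overlap ranking, the `judge` callback, and the default of three attempts are all assumptions made for illustration. The only elements taken from the text are the use of binary search to look up the recognized words and the rule of one point for a correct repetition and zero points otherwise, with the attempt count saved.

```python
import re
from bisect import bisect_left
from dataclasses import dataclass

# Hypothetical stand-in for the small on-device portion of the corpus.
CORPUS = [
    "A dog is a mammal.",
    "Plants need water and light to grow.",
    "The sun is a star.",
    "Frogs lay eggs in water.",
]


def tokenize(sentence):
    """Lowercase a sentence and split it into alphabetic words."""
    return re.findall(r"[a-z]+", sentence.lower())


def build_index(corpus):
    """Return a sorted list of unique (word, sentence_id) pairs."""
    pairs = {(word, sid) for sid, s in enumerate(corpus) for word in tokenize(s)}
    return sorted(pairs)


def lookup(index, word):
    """Binary-search the sorted index and return the ids of sentences containing word."""
    # (word, -1) sorts before every real (word, id) pair, so bisect_left
    # lands on the first entry for this word, if there is one.
    pos = bisect_left(index, (word, -1))
    ids = []
    while pos < len(index) and index[pos][0] == word:
        ids.append(index[pos][1])
        pos += 1
    return ids


def select_sentence(words, corpus, index):
    """Pick the sentence sharing the most words with the recognized labels (assumed ranking)."""
    hits = {}
    for word in {w.lower() for w in words}:
        for sid in lookup(index, word):
            hits[sid] = hits.get(sid, 0) + 1
    if not hits:
        return None
    # Highest overlap wins; ties go to the earliest sentence in the corpus.
    best = min(hits, key=lambda sid: (-hits[sid], sid))
    return corpus[best]


@dataclass
class SentenceResult:
    attempts: int  # number of repetitions the patient needed or used
    points: int    # 1 if the sentence was eventually pronounced correctly, else 0


def practice(judge, max_attempts=3):
    """Run the repeat loop; judge(attempt_number) returns True for a correct repetition."""
    for attempt in range(1, max_attempts + 1):
        if judge(attempt):
            return SentenceResult(attempts=attempt, points=1)
    return SentenceResult(attempts=max_attempts, points=0)
```

With this toy corpus, `select_sentence(["water", "eggs"], CORPUS, build_index(CORPUS))` returns `"Frogs lay eggs in water."`, and `practice(lambda n: n == 2)` records two attempts and one point. In a real deployment the `judge` callback would be replaced by whoever or whatever assesses the pronunciation (the therapist, a caregiver, or a speech-to-text comparison).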

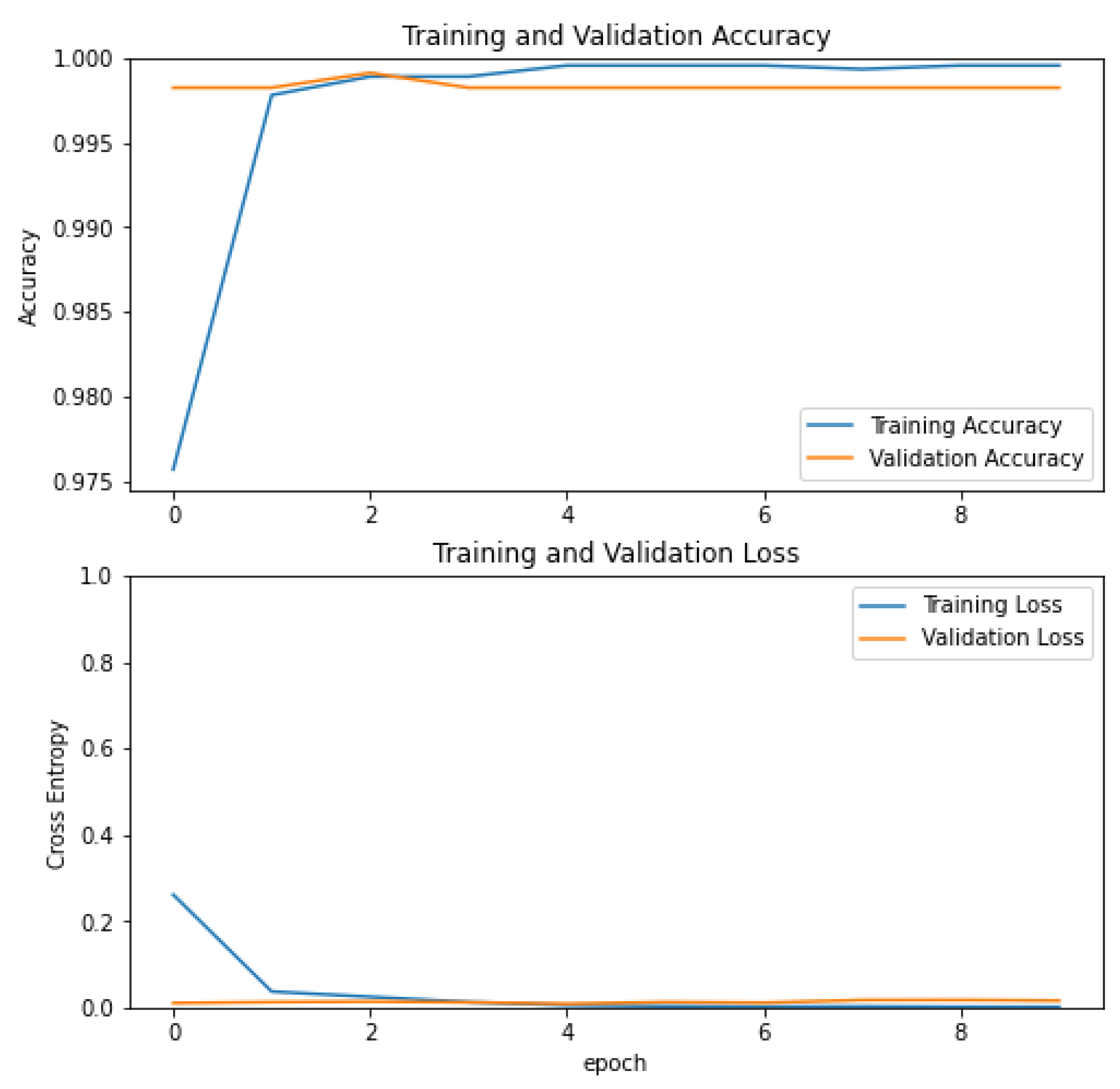

4.3. TensorFlow

4.4. Classification Model

4.5. Natural Language Processing

4.6. Technical Specifications

4.7. Results

4.8. Discussions

- Gestures and sign language. Some children have the intellectual ability to communicate, but have difficulty making sounds or composing sentences. These children can be trained to communicate with their caretakers via sign language. Children with more movement issues may still be able to express themselves by pointing or gesturing, such as pointing to their mouth to indicate hunger. This is a technique that does not require any technology, although the individual being addressed may need to know sign language or the precise gesture.

- Picture Exchange Communication System (PECS). PECS, a method that employs symbols or graphics to help people with autism learn particular phrases and how to communicate with others, is used by many devices, both physical and electronic. PECS devices may aid in the development of stronger communication abilities as well as social, cognitive, and physical skills in youngsters. This can aid with fine motor movements because younger children must learn to point precisely to images in order to have a conversation. The ability to communicate can increase one’s self-esteem and willingness to interact with others.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ASD | Autistic Spectrum Disorder |
| STs | Speech Therapists |
| CP | Communication Problems |
| DAVS | Descriptive Action Verbs |
| IAVS | Interpretation Action Verbs |
| SVS | State Verbs |
| ICT | Information and Communication Technologies |
| SIPS | Spoken Impact Project Software |
| ILSVRC | ImageNet Large Scale Visual Recognition Challenge |
| OS | Operating System |
| TF | TensorFlow |
| NLP | Natural Language Processing |
| CALTECH | California Institute of Technology |
| UI | User Interface |
| PECS | Picture Exchange Communication System |
| AAC | Alternative and Augmentative Communication |

References

- Chojnicka, I.; Wawer, A. Social language in autism spectrum disorder: A computational analysis of sentiment and linguistic abstraction. PLoS ONE 2020, 15, e0229985.

- Wang, Z.; Liu, J.; He, H.; Xu, Q.; Xiu, X.; Liu, H. Screening Early Children With Autism Spectrum Disorder via Response-to-Name Protocol. IEEE Trans. Ind. Inform. 2021, 17, 587–595.

- Xu, G.; Strathearn, L.; Liu, B.; Bao, W. Prevalence of autism spectrum disorder among US children and adolescents. Am. Med. Assoc. 2018, 319, 81–82.

- Jalal, A.; Ahmed, A.; Rafique, A.A.; Kim, K. Scene Semantic Recognition Based on Modified Fuzzy C-Mean and Maximum Entropy Using Object-to-Object Relations. IEEE Access 2021, 9, 27758.

- Huang, Q.Y.; Cai, Z.C.; Lan, T.L. A Single Neural Network for Mixed Style License Plate Detection and Recognition. IEEE Access 2021, 9, 21777.

- Rogge, N.; Janssen, J. The economic cost of Autism Spectrum Disorder: A Literature Review. Natl. Libr. Med. 2019, 49, 2873–2900.

- Qiang, B.H.; Zha, Y.J.; Zhou, M.L.; Yang, X.Y.; Peng, B.; Wang, Y.F.; Pang, Y.C. SqueezeNet and Fusion Network-Based Accurate Fast Fully Convolutional Network for Hand Detection and Gesture Recognition. IEEE Access 2021, 9, 2169.

- Duan, N.; Cui, J.J.; Zheng, L.R. An End to End Recognition for License Plates Using Convolutional Neural Networks. IEEE Intell. Transp. Syst. Mag. 2021, 13, 177.

- Mandalapu, H.; Reddy, A.; Ramachandra, R.; Rao, K.S.; Mitra, P.; Prasanna, S.R.M.; Busch, C. Audio-Visual Biometric Recognition and Presentation Attack Detection: A Comprehensive Survey. IEEE Access 2021, 9, 37341.

- Wang, J.; Zheng, Y.L.; Wang, M.; Shen, Q.; Huang, J. Object-Scale Adaptive Convolutional Neural Networks for High-Spatial Resolution Remote Sensing Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 283.

- Zhou, Q.; Bao, W.X.; Zhang, X.W.; Ma, X. A Joint Spatial-Spectral Representation Based Capsule Network for Hyperspectral Image Classification. In Proceedings of the WHISPERS, Amsterdam, The Netherlands, 24–26 March 2021.

- Sanz-Pena, I.; Blanco, J.; Kim, J.H. Computer Interface for Real-Time Gait Biofeedback Using a Wearable Integrated Sensor System for Data Acquisition. IEEE Trans. Hum.-Mach. Syst. 2021, 5, 484.

- Wang, Y.; Fan, Z.Q.; Wang, M.X.; Liu, J.T.; Xu, S.W.; Lu, Z.Y.; Wang, H.; Song, Y.; Wang, Y.D.; Qu, L.N.; et al. Research on the Specificity of Electrophysiological Signals of Human Acupoints Based on 90-Day Simulated Weightlessness Experiment on the Ground. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 2164.

- da Silva, M.L.; Goncalves, D. A survey of ICT tools for communication development in children with ASD. In Proceedings of the International Conference on Physiological Computing Systems, Lisbon, Portugal, 7–14 January 2016.

- Pennington, L.; Goldbart, J.; Marshall, J. Speech and language therapy to improve the communication skills of children with cerebral palsy. Natl. Libr. Med. 2004, 2, 285–292.

- Karim, S.; Akter, N.; Patwary, M.J.; Islam, M.R. A Review on Predicting Autism Spectrum Disorder (ASD) meltdown using Machine Learning Algorithms. In Proceedings of the 2021 5th International Conference on Electrical Engineering and Information Communication Technology (ICEEICT), Dhaka, Bangladesh, 18–20 November 2021.

- Lohar, M.; Chorage, S. Spectrum Disorder (ASD) from Brain MR Images Based on Feature Optimization and Machine Learning. In Proceedings of the International Conference on Smart Generation Computing, Communication and Networking (SMART GENCON), Pune, India, 29–30 October 2021.

- Mubin, S.A.; Poh, M.W.A.; Rohizan, R.; Abidin, A.A.Z.; Wei, W.C. A Gamification Design Framework for Supporting ASD Children Social Skills. In Proceedings of the International Conference on Developments in eSystems Engineering (DeSE), Sharjah, United Arab Emirates, 7–10 December 2021.

- Sharmin, M.; Hossain, M.; Saha, A.; Das, M.; Maxwell, M.; Ahmed, S. From research to practice: Informing the design of Autism support smart technology. In Proceedings of the Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018.

- Paek, Y.A.; Brantley, J.; Evans, B.J.; Contreras-Vidal, J.L. Concerns in the Blurred Divisions Between Medical and Consumer Neurotechnology. IEEE Syst. J. 2021, 15, 3069.

- Shahamiri, S.R. Speech Vision: An End-to-end Deep Learning-Based Dysarthric Automatic Speech Recognition System. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 852.

- Bernard-Opitz, V.; Sriram, N.; Nakhoda-Sapuan, S. Enhancing Social Problem Solving in Children with Autism and Normal Children through Computer-Assisted Instruction. J. Autism Dev. Disord. 2001, 31, 377–384.

- Kreijns, K.; Kirschner, P.A. Extending the SIPS-Model: A Research Framework for Online Collaborative Learning. In Proceedings of the European Conference on Technology Enhanced Learning, Leeds, UK, 3–5 September 2018.

- Massaro, D.W.; Bosseler, A. Read my lips: The importance of the face in a computer-animated tutor for vocabulary learning by children with autism. Autism 2006, 10, 495–510.

- Stiegler, L.N. Examining the Echolalia Literature: Where Do Speech-Language Pathologists Stand? Am. J. Speech Lang. Pathol. 2015, 24, 750–762.

- Mulholland, R.; Pete, A.M.; Popeson, J. Using Animated Language Software with Children Diagnosed with Autism Spectrum Disorders. Teach. Except. Child. Plus 2008, 4, n6.

- Griffin, G.; Holub, A.; Perona, P. Caltech-256 Object Category Dataset; California Institute of Technology: Pasadena, CA, USA, 2007.

- Slade, P.; Habib, A.; Hicks, J.L.; Delp, S.L. An open-source and wearable system for measuring 3D human motion in real-time. IEEE Trans. Biomed. Eng. 2021, 69, 2313.

- Hussain, A.; Memon, A.R.; Wang, Y.; Miao, Y.Z.; Zhang, X. S-VIT: Stereo Visual-Inertial Tracking of Lower Limb for Physiotherapy Rehabilitation in Context of Comprehensive Evaluation of SLAM Systems. IEEE Trans. Autom. Sci. Eng. 2021, 18, 1550–1562.

- Wang, C.; Peng, L.; Hou, Z.G.; Zhang, P. The Assessment of Upper-Limb Spasticity Based on a Multi-Layer Using a Portable Measurement System. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 2242.

- Yang, L.; Zhang, F.H.; Zhu, J.B.; Fu, Y.L. A Portable Device for Hand Rehabilitation with Force Cognition: Design, Interaction and Experiment. IEEE Trans. Cogn. Dev. Syst. 2021, 69, 678.

- Khan, S.; Sajjad, M.; Hussain, T.; Ullah, A.; Imran, A.S. A Review on Traditional Machine Learning and Deep Learning Models for WBCs Classification in Blood Smear Images. IEEE Access 2021, 9, 2169.

- Guan, Q.J.; Huang, Y.P.; Luo, Y.W.; Liu, P.; Xu, M.L.; Yang, Y. Discriminative Feature Learning for Thorax Disease Classification in Chest X-ray Images. IEEE Trans. Image Process. 2021, 30, 2476.

- Shao, G.F.; Tang, L.; Zhang, H. Introducing Image Classification Efficacies. IEEE Access 2021, 9, 134809.

- Diaz, N.; Ramirez, J.; Vera, E.; Arguello, H. Adaptive Multisensor Acquisition via Spatial Contextual Information for Compressive Spectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 9254–9266.

- Pang, S.M.; Pang, C.L.; Chen, Y.F.; Su, Z.H.; Zhou, Y.J.; Huang, M.Y.; Wei, Y.; Lu, H.; Feng, Q.J. SpineParseNet: Spine Parsing for Volumetric MR Image by a Two-Stage Segmentation Framework With Semantic Image Representation. IEEE Trans. Med. Imaging 2021, 40, 262–273.

| Device | Model | Storage | CPU | GPU | RAM |
|---|---|---|---|---|---|
| MacBook | Air 2013 | 128 GB SSD | i5 | Intel 5000 | 4 GB |
| iPhone | 6 Plus | 64 GB | Dual Core Typhoon | PowerVR GX6450 | 1 GB |
| Xiaomi | Redmi Note 6 Pro | 64 GB | Octa-core | Adreno 509 | 3 GB |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ortiz Castellanos, A.E.; Liu, C.-M.; Shi, C. Deep Mobile Linguistic Therapy for Patients with ASD. Int. J. Environ. Res. Public Health 2022, 19, 12857. https://doi.org/10.3390/ijerph191912857
