The Use of Binaural Based Spatial Audio in the Reduction of Auditory Hypersensitivity in Autistic Young People

Abstract

1. Introduction

2. Methods

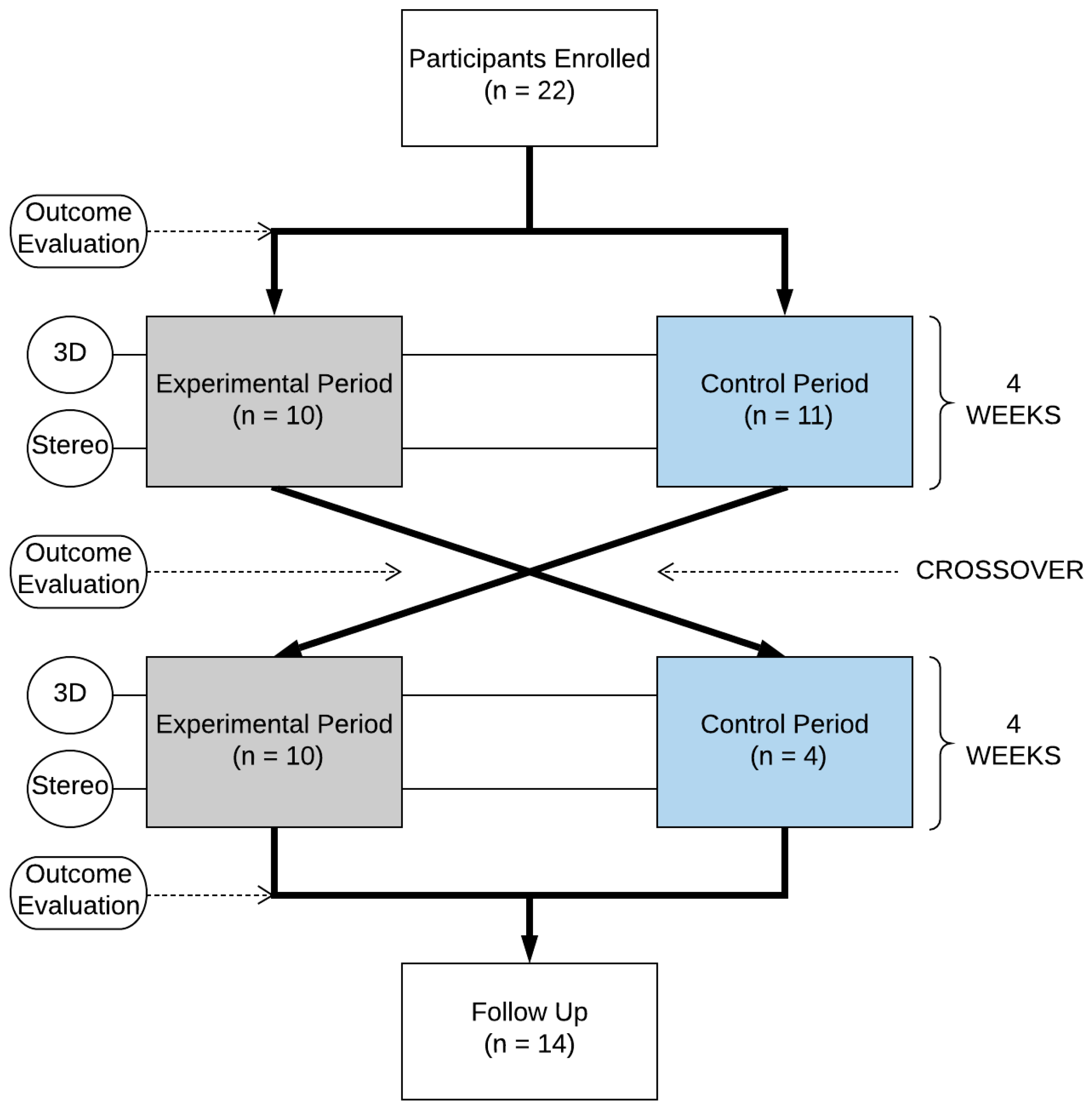

2.1. Study Design

2.2. Participants

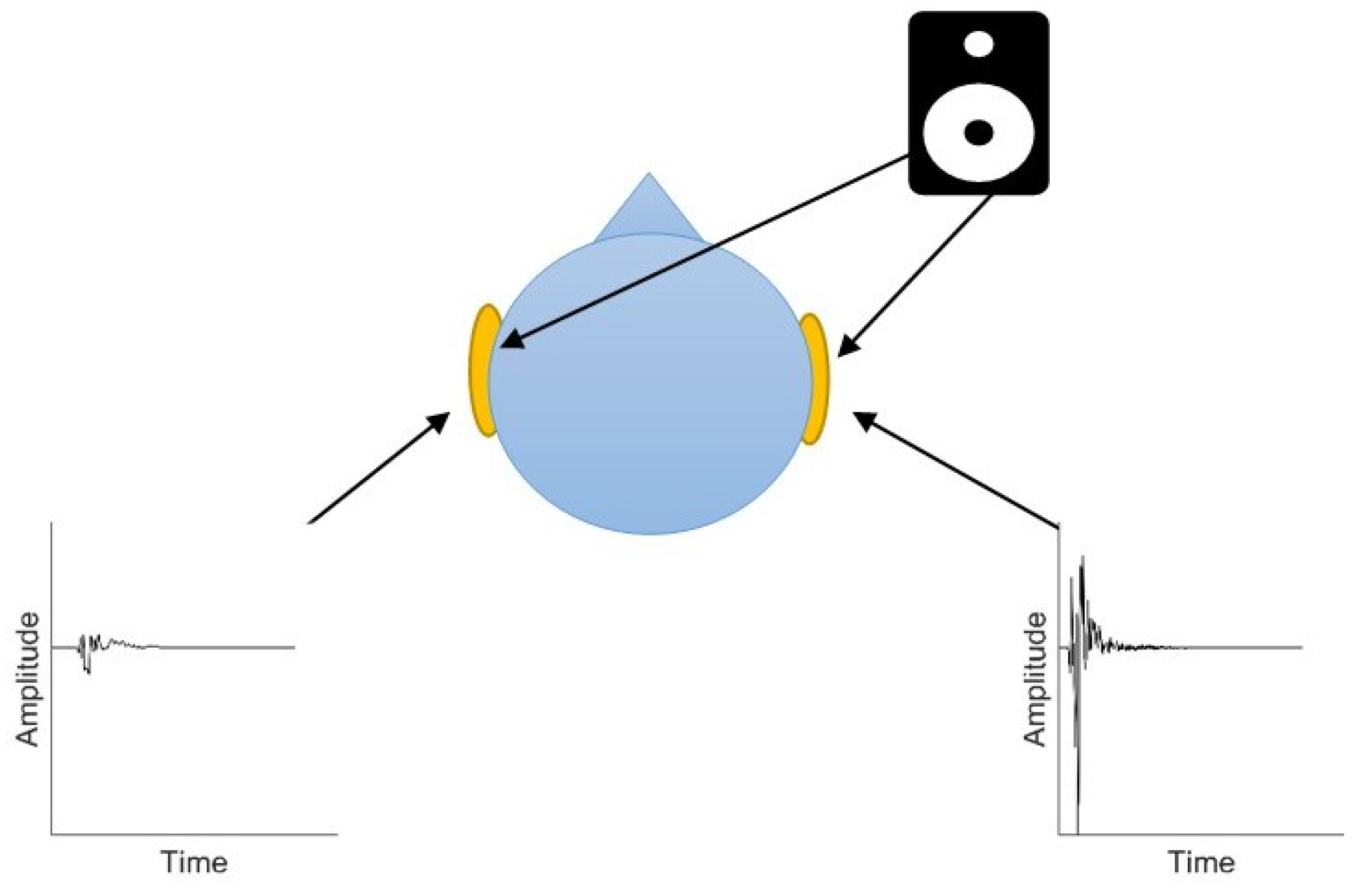

2.3. Equipment

2.4. Game Intervention

2.5. Experimental Procedure

2.5.1. Baseline Assessment

2.5.2. Session Procedure: Experimental Period

2.5.3. Session Procedure: Control Period

2.5.4. Session Procedure: Follow-Up Measurement

2.6. Data Collection

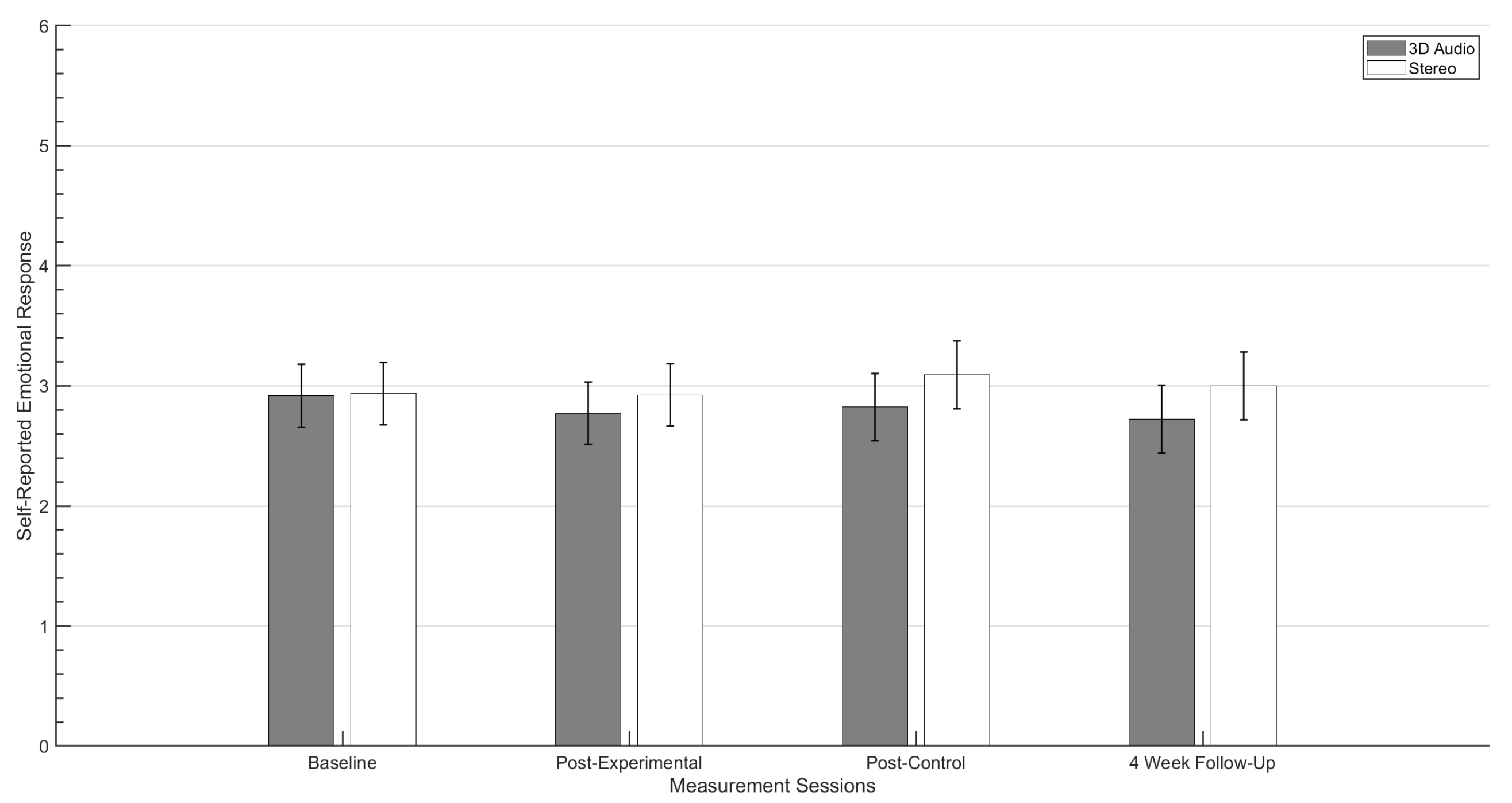

2.6.1. Primary Outcome: Self-Reported Emotional Response

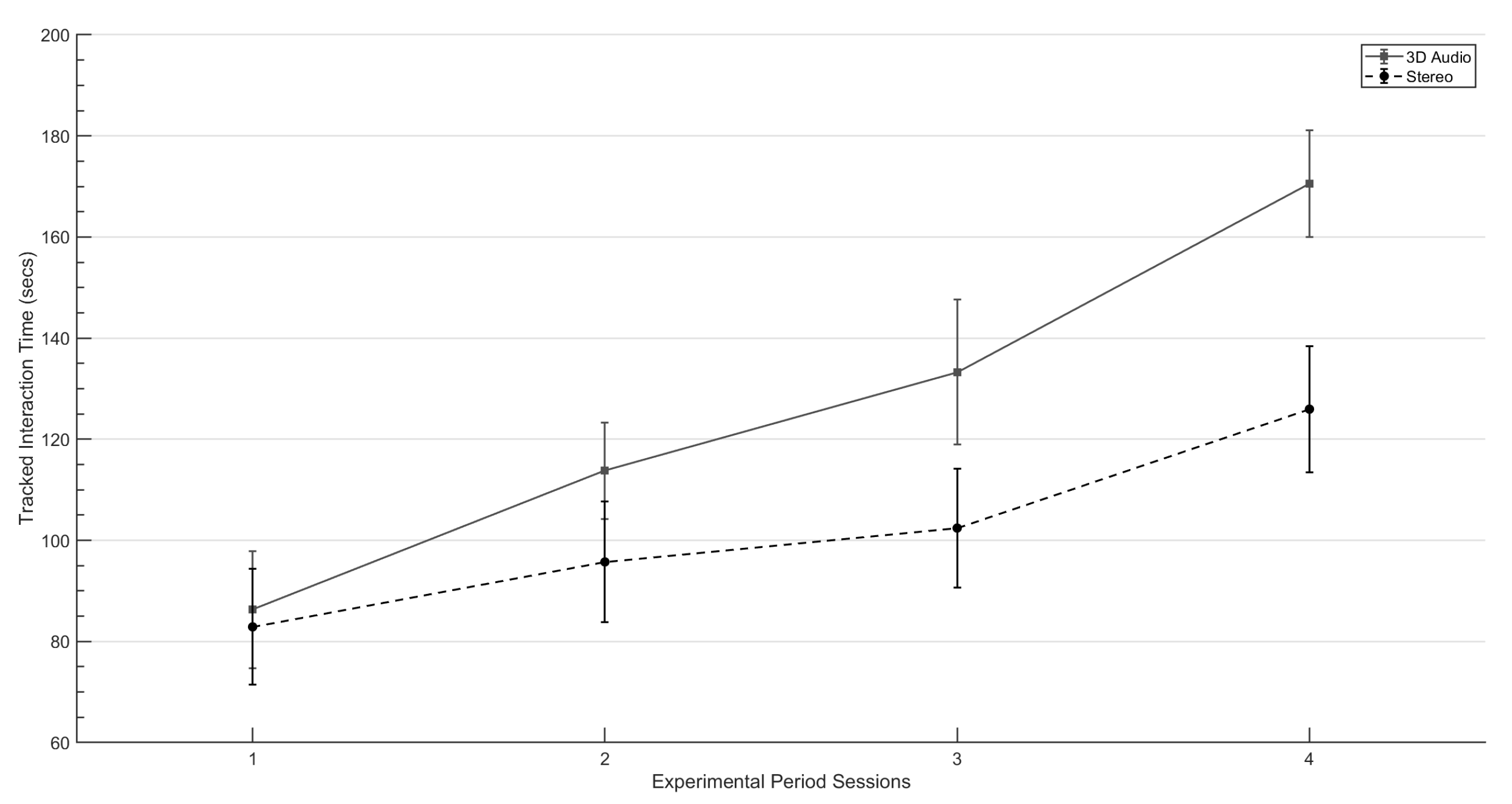

2.6.2. Secondary Outcome: Tracked Voluntary Participant Interaction with Target Auditory Stimuli

3. Results

3.1. Self-Reported Emotional Response

3.2. Tracked Interaction Times

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ASD | Autism Spectrum Disorders |
| HMD | Head Mounted Display |
| NPC | Non-Playable Character |
| SG | Serious Game |
| VE | Virtual Environment |
| VR | Virtual Reality |


| Exposure Level | Virtual Distance between Player and Stimulus |
|---|---|
| 1 | 25 m |
| 2 | 15 m |
| 3 | 5 m |
| 4 | 2.5 m |
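The exposure levels are defined purely by virtual distance. To illustrate how those distances might translate into loudness, the sketch below assumes a free-field point source whose sound pressure falls off as 1/r (about 6 dB per doubling of distance), referenced to the 25 m level. The names `EXPOSURE_DISTANCES_M` and `relative_level_db` are hypothetical, and the roll-off model is an assumption, not necessarily what the game's binaural renderer applied.

```python
import math

# Exposure level -> virtual player-stimulus distance in metres (from the table above).
EXPOSURE_DISTANCES_M = {1: 25.0, 2: 15.0, 3: 5.0, 4: 2.5}


def relative_level_db(distance_m: float, reference_m: float = 25.0) -> float:
    """Level change in dB relative to reference_m.

    ASSUMPTION: free-field inverse-distance (1/r) pressure attenuation for a
    point source, with no minimum-distance clamp. This is illustrative only
    and is not the study's documented roll-off curve.
    """
    if distance_m <= 0 or reference_m <= 0:
        raise ValueError("distances must be positive")
    return 20.0 * math.log10(reference_m / distance_m)


for level, distance in EXPOSURE_DISTANCES_M.items():
    print(f"Level {level}: {distance:>4} m -> {relative_level_db(distance):+.1f} dB")
```

Under this assumption, moving from level 1 (25 m) to level 4 (2.5 m) corresponds to a 20 dB increase, because the distance shrinks by a factor of ten.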

| Condition | Target Stimulus | Participants (n) | Pre-Test (M) | Post-Test (M) | % Decrease |
|---|---|---|---|---|---|
| 3D Audio | Alarm | 2 | 4.75 | 3.25 | 31.59 |
| 3D Audio | Baby | 2 | 5 | 4.25 | 10.53 |
| 3D Audio | Engine | 2 | 5 | 3.25 | 35 |
| 3D Audio | Fireworks | 2 | 5.5 | 4.5 | 18.18 |
| 3D Audio | Hair Dryer | 1 | 6 | 4 | 33.33 |
| 3D Audio | Children Fighting | 4 | 5.12 | 3.37 | 34.18 |
| 3D Audio | Children Playing | 7 | 5 | 2.5 | 50 |
| Stereo | Alarm | 4 | 5.25 | 4.37 | 16.76 |
| Stereo | Baby | 2 | 5.5 | 5 | 9.09 |
| Stereo | Engine | 2 | 5.25 | 4 | 23.81 |
| Stereo | Fireworks | 3 | 5.33 | 3.67 | 31.14 |
| Stereo | Hair Dryer | 3 | 5 | 5 | 20 |
| Stereo | Children Fighting | 2 | 5.25 | 4.75 | 9.52 |
| Stereo | Children Playing | 3 | 4.5 | 4.33 | 3.77 |
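The % Decrease column appears to express the drop from the pre-test to the post-test mean as a percentage of the pre-test mean. A minimal sketch of that calculation follows, using a few rows transcribed from the table. `StimulusResult` and `percent_decrease` are hypothetical names, and the formula is inferred from the published values rather than stated in the source. Because the table reports rounded means, recomputing from them may not reproduce every published percentage exactly.

```python
from typing import NamedTuple


class StimulusResult(NamedTuple):
    """One table row: condition, target stimulus, n, and pre/post-test means."""
    condition: str
    stimulus: str
    n: int
    pre_mean: float
    post_mean: float


def percent_decrease(pre: float, post: float) -> float:
    """Relative reduction from the pre-test to the post-test mean, in percent.

    ASSUMPTION: (pre - post) / pre * 100, inferred from the table values.
    """
    if pre == 0:
        raise ValueError("pre-test mean must be non-zero")
    return (pre - post) / pre * 100.0


# A few rows transcribed from the table (rounded published means).
rows = [
    StimulusResult("3D Audio", "Engine", 2, 5.0, 3.25),
    StimulusResult("3D Audio", "Children Playing", 7, 5.0, 2.5),
    StimulusResult("Stereo", "Baby", 2, 5.5, 5.0),
]

for r in rows:
    pct = percent_decrease(r.pre_mean, r.post_mean)
    print(f"{r.condition:<8} {r.stimulus:<17} {pct:6.2f}%")
```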

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Johnston, D.; Egermann, H.; Kearney, G. The Use of Binaural Based Spatial Audio in the Reduction of Auditory Hypersensitivity in Autistic Young People. Int. J. Environ. Res. Public Health 2022, 19, 12474. https://doi.org/10.3390/ijerph191912474

Johnston D, Egermann H, Kearney G. The Use of Binaural Based Spatial Audio in the Reduction of Auditory Hypersensitivity in Autistic Young People. International Journal of Environmental Research and Public Health. 2022; 19(19):12474. https://doi.org/10.3390/ijerph191912474

Chicago/Turabian Style: Johnston, Daniel, Hauke Egermann, and Gavin Kearney. 2022. "The Use of Binaural Based Spatial Audio in the Reduction of Auditory Hypersensitivity in Autistic Young People" International Journal of Environmental Research and Public Health 19, no. 19: 12474. https://doi.org/10.3390/ijerph191912474

APA Style: Johnston, D., Egermann, H., & Kearney, G. (2022). The Use of Binaural Based Spatial Audio in the Reduction of Auditory Hypersensitivity in Autistic Young People. International Journal of Environmental Research and Public Health, 19(19), 12474. https://doi.org/10.3390/ijerph191912474