Abstract

The use of the Internet to develop new technologies has brought about considerable change in teaching and student learning in higher education. The COVID-19 pandemic has forced universities to switch from face-to-face to online instruction. Moreover, this transition was planned and executed quickly, with urgent redesigns of courses originally conceived for live teaching. The aim of this work is to measure the service quality of online teaching delivered during the COVID-19 period. The methodology was based on an importance-performance analysis using a structural equations model. The data were obtained from a sample of 467 students attending a university in southern Spain. The results reveal five priority attributes of online teaching that need to be improved in order to enhance the service quality of the virtual instruction provided to students. Universities need to redefine their online format by integrating methodological and technological decisions and involving collaboration between teachers, students and administration staff and services. The results do not apply to educational institutions that teach courses exclusively online, but to institutions that had to adapt rapidly and shift course material originally designed for face-to-face training to an online format.

1. Introduction

The higher education sector is currently facing challenges it has never previously experienced, arising from sharper global competition, advances in technology and the growing number of universities that offer students an ever-broader range of courses to choose from. This has led universities and higher education centers to consider how they can improve the service quality they offer their students. The use of the Internet in education, especially in higher education, has considerably changed the way in which learning takes place. The e-learning model for teaching and learning has developed rapidly in recent years, mainly driven by technological advances [1]. E-learning spans a wide range of methods, applications, processes and academic areas, making it difficult to agree on a commonly accepted definition of what e-learning is [2]. With the substantial growth in demand for e-learning, further research is needed on the factors that influence the adoption of this form of instruction [3].

Due to COVID-19, face-to-face teaching declined rapidly in early 2020 with the closure of campuses, schools and other education centers, and on-site teaching shifted rapidly online. According to the United Nations Educational, Scientific and Cultural Organization (UNESCO), more than 1.5 billion students in 165 countries have been affected by the closure of education centers, around 90% of the world’s student population [4]. Students are not the only ones affected: the closures have also hit teachers and families, and will have long-term economic and social consequences. The type of online teaching that has taken place in recent months cannot be compared, in terms of experience, planning and development, to teaching specifically designed for online delivery before the pandemic [5]. Since the pandemic emerged, teachers have had to redesign and plan how they teach subjects that were originally conceived for the classroom. An important drawback is that not all teachers and students possess the technological resources or digital competences required for giving and receiving virtual classes. This new situation has highlighted the significant digital divide among both teachers and students [6]. In this regard, institutions need to provide more suitable platforms for online teaching [7]. Despite these obstacles, the drive towards online teaching worldwide continues to gather pace.

The COVID-19 crisis has presented an opportunity for the development of effective learning solutions [8]. Universities anchored to the traditional face-to-face teaching model have striven to adopt strategies that ensure the service quality of their online teaching and satisfy their clients, namely the students. This makes research on the service quality of virtual training more important than ever. Students’ perceptions of e-learning are a crucial indicator of the quality of the learning experience and of the results obtained, and studies of student satisfaction with e-learning abound [9]. The study of service quality in e-learning is vital to ensure that the implementation of any online learning system meets students’ learning needs. Service quality measurement models were first developed in the industrial and manufacturing sectors, but they cannot be applied directly to higher education. Many studies have highlighted the differences, hence the need for new service quality models designed specifically for online teaching [10,11]. As it stands, there is no standard model for measuring the quality of service in higher education [11], and there seems to be little consensus among researchers on how to measure the quality of teaching in higher education [12]. This work provides a method for measuring service quality in higher education at a time of considerable upheaval for educational institutions due to COVID-19.

Service quality in higher education has been widely studied by managers and researchers because of its economic importance, its role in reducing costs and its value in gauging student satisfaction [11,13]. Universities need to consider service quality and students’ perceptions when designing strategies that can boost their rankings [11]. Teaching and learning in the higher education setting differ from other industries in that the two cannot be separated [14]. Education and knowledge are unlike other products and services because they cannot be quantified in purely monetary terms. Higher education institutions bear the considerable responsibility of preparing students for life, not just for earning a good income [15].

In higher education, the main clients are the students [16], an idea that is by no means new, as the literature demonstrates [17,18]. Ref. [19] stated that student satisfaction is the only indicator for measuring service quality in higher education, and quality of service in higher education has a significant influence on student satisfaction [20]. Growing regional, national and international competition among higher education institutions has hastened acceptance of the notion of students as the main client [21], which means that universities need to develop the means to satisfy their students at this level of education [22,23]; this is achieved by improving the quality of service they offer [24,25]. Online learning should sustain students’ motivation and minimize their frustration with this new situation [26].

The main objective of this work is to measure the quality of the virtual teaching of subjects originally designed for classroom training. The methodology is based on a variant of the traditional Martilla and James importance-performance analysis [27], as proposed by Picón et al. [28]. Our purpose is to identify which elements or attributes of online teaching need to be improved and developed further. Before applying this methodology, the importance of each attribute is determined using a structural equations model based on the criteria posited by Allen et al. [29]. The data were obtained from a university in southern Spain. Spain has been one of the countries hit hardest by the COVID-19 pandemic, registering more than 28,000 deaths and around 250,000 cases at the time of writing. This work is justified by the need for higher education institutions and education centers whose model is based on traditional or face-to-face teaching to gain experience in, and knowledge of, e-learning methods and to improve their online teaching models.

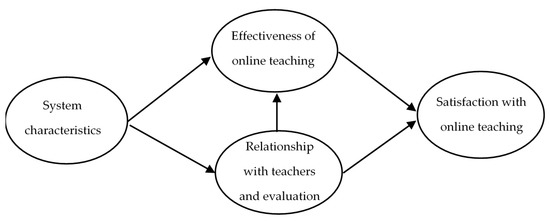

Based on the literature [30,31,32,33,34,35,36,37,38,39,40,41], we specify the structural equation model. We propose that the system characteristics affect both the effectiveness of online teaching and the relationship with teachers and evaluation, and that the effectiveness of online teaching is in turn influenced by the relationship with teachers and evaluation. Finally, we propose that these latent variables influence satisfaction with online teaching. Because importance-performance analysis is an exploratory method, we do not formulate formal hypotheses.

This work differs from others in this field in four respects. First, it seeks to measure quality in online teaching in undergraduate courses originally designed for traditional or on-site teaching scenarios. The impact of COVID-19 has forced institutions into a real-time restructuring of teaching methodologies and assessment systems originally conceived for classroom training. While universities adapt to these changes, both teachers and students have tried to maintain the access to knowledge, and the capacity to acquire it, that students enjoyed before the pandemic. Second, this work focuses on a university whose teaching before COVID-19 was neither fully online nor blended, but entirely on-site. Although this university had deployed a virtual platform to support face-to-face teaching, almost all classes were taught on-site. Third, the service quality of the online teaching at this university was measured not with the typical SERVQUAL and SERVPERF methods but with the importance-performance analysis proposed by Picón et al. [28], which has several additional advantages over the former. Finally, service quality in the online teaching was measured by consulting students not of a single subject or degree course but across a range of courses, in order to reinforce the external validity and increase the statistical power of the study.

2. Materials and Methods

2.1. Data

The data were obtained from a questionnaire administered to undergraduate students attending a public university in southern Spain, which was the inclusion criterion. As in Tzeng et al. [42], no exclusion criteria were applied. Convenience sampling was used to collect the maximum number of questionnaires, as in Tzeng et al., Năsui et al. and Zhuo et al. [42,43,44], since participation was voluntary [44,45]. This sampling is considered valid for this study because students are appropriate research subjects, especially if they represent a population of interest [46]. The method was chosen because it allows rapid and easy data collection, since the sampling units are easily accessible, and because it is cost-effective. Because the results are intended to be generalized to higher education students, the students were selected from a range of degree courses to reinforce external validity and increase statistical power [30]. The questionnaire was distributed to the students through several channels. First, it was delivered via the university’s virtual platform, with the collaboration of the teachers. The survey was also sent out via the university’s Twitter account. To maximize the number of responses, the researchers contacted the delegates and sub-delegates of the various faculties, who distributed the questionnaire by email and social media, as well as the student union, which sent out the survey through similar channels. A total of 467 valid responses were received, some 5% of the total number of students enrolled at the university across all degree courses.

2.2. Instrument and Variables

The data were obtained from a structured questionnaire in which the students rated the attributes on a scale of 0–7, where 0 represented “of lowest value” and 7 “of highest value”. The questionnaire contained 14 items grouped into four latent variables: system characteristics (CAR), effectiveness of online teaching (EFIC), relationship with teachers and evaluation (PROF), and satisfaction with online teaching (SAT). The items were selected from the literature on e-learning and quality of teaching (Table 1). The attributes representing the four dimensions were analyzed by means of a structural equations model.

Table 1.

Dimensions and attributes selected to measure quality.

The structural equations model was based on the literature (Figure 1). This model posits that the system’s characteristics have a direct effect on the efficacy of virtual training and on students’ relations with their teachers and aspects of the evaluation. Likewise, these latent variables have a direct effect on student satisfaction with online teaching. The structural equation model was estimated using the AMOS software (International Business Machines Corporation IBM, Armonk, NY, USA).

Figure 1.

Structural model specified for the derivation of the importance of attributes.

2.3. Statistical Analysis

The data were analyzed using SPSS (International Business Machines Corporation IBM, Armonk, NY, USA). Cronbach’s alpha coefficient for the rating scores was used to determine the reliability of the scales; the values exceeded 0.7, confirming the reliability of the questionnaire. To check the questionnaire’s validity, a factor analysis of the ratings was performed. The Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy was 0.75, and Bartlett’s test of sphericity rejected the null hypothesis that the correlation matrix is an identity matrix, so the use of factor analysis was deemed acceptable. The factor analysis yielded four factors representing the four dimensions in Table 1, thus confirming the validity of the questionnaire. The importance-performance analysis requires the importance of each attribute, which was obtained by applying the structural equations model following the criteria of Allen et al. [29].
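Cronbach’s alpha, used above to check scale reliability, compares the sum of the individual item variances with the variance of each respondent’s total score: α = k/(k − 1) · (1 − Σσᵢ² / σ²_total). The sketch below is a minimal standard-library illustration of that formula, assuming sample variances (n − 1 denominator) throughout; the ratings are hypothetical and are not the study’s data, which were analyzed in SPSS.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for k items; items[i] holds every respondent's score on item i."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n_respondents = len(items[0])
    # Sum of the individual item variances (sample variance, n - 1 denominator)
    item_var_sum = sum(variance(item) for item in items)
    # Variance of each respondent's total score across the k items
    totals = [sum(item[r] for item in items) for r in range(n_respondents)]
    total_var = variance(totals)
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Hypothetical 0-7 ratings from five respondents on three items of one dimension
ratings = [
    [5, 6, 4, 7, 3],
    [5, 7, 4, 6, 3],
    [4, 6, 5, 7, 2],
]
print(round(cronbach_alpha(ratings), 3))
```

Values above 0.7 are conventionally read as acceptable internal consistency, which is the threshold applied in the text.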

3. Results

The sociodemographic characteristics of the sample are shown in Table 2. Most of the students were women and in their first year of undergraduate studies, and the mean age of participants was about 21 years.

Table 2.

Sociodemographic characteristics of the sample.

The model parameters were estimated by maximum likelihood. Although the data did not meet the multivariate normality assumption, this method facilitates the convergence of estimates even in the absence of multivariate normality [49]. The model was evaluated following the criteria in Bollen [50] and Rindskopf and Rose [51], assessing the measurement and structural models separately. The measurement model was evaluated through reliability and validity analyses: reliability was tested for both the individual items and each construct, and validity was tested through convergent and discriminant validity. Results are shown in Table 3 and Table 4.

Table 3.

Standardized estimations for observable indicators, Cronbach’s α values, convergent validity, and reliability assessment.

Table 4.

Discriminant validity of measures.

Item reliability was assessed by checking whether the standardized factor loadings exceeded 0.7, so that the variance shared between each construct and its indicator is greater than the error variance [52,53] (a loading of 0.7 means the construct accounts for 0.7² ≈ 49% of the indicator’s variance), though some authors consider a loading above 0.5 to be acceptable [54]. Table 3 shows that all the standardized loadings but one exceed 0.7; the remaining loading, with a value of 0.580, was considered valid in line with the criteria provided by Chau [46]. Construct reliability was measured by Cronbach’s alpha and composite reliability (CR) coefficients. Table 3 shows that both the Cronbach’s alpha and CR values are higher than 0.7, confirming the reliability of the constructs.
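Composite reliability can be computed directly from a construct’s standardized loadings: CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)). A minimal sketch, assuming standardized loadings so that each indicator’s error variance is taken as 1 − λ²; the loading values are hypothetical apart from 0.580, which echoes the lowest loading mentioned above.

```python
def composite_reliability(loadings: list[float]) -> float:
    """Composite reliability from standardized loadings (error variance = 1 - loading**2)."""
    squared_sum = sum(loadings) ** 2
    error_sum = sum(1 - lam ** 2 for lam in loadings)
    return squared_sum / (squared_sum + error_sum)


# Hypothetical loadings of one construct's indicators (only 0.580 comes from the text)
loadings = [0.82, 0.76, 0.580]
print(round(composite_reliability(loadings), 3))
```

Unlike Cronbach’s alpha, CR weights each indicator by its own loading, so a single weaker indicator such as 0.580 lowers the coefficient without necessarily pushing it below 0.7.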

The validity of the measurement model was assessed through convergent and discriminant validity. Convergent validity was measured by the average variance extracted (AVE). Column 5 of Table 3 shows that all AVE values exceed 0.5, which verifies convergent validity. To assess discriminant validity, the correlations between all pairs of constructs were calculated (Table 4).

Table 4 shows that these correlations are lower than the square root of the AVE for each construct, except for the EFIC latent variable. Given that the correlations for this variable are very close to the square root of its AVE, discriminant validity can be considered acceptable.
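The convergent and discriminant validity checks described above (AVE above 0.5, and the Fornell–Larcker criterion that the square root of each construct’s AVE should exceed its correlations with the other constructs) can be sketched as follows. The AVE and correlation values are hypothetical, chosen only to reproduce a borderline case like the one reported for EFIC; they are not the values in Tables 3 and 4.

```python
from math import sqrt


def average_variance_extracted(loadings: list[float]) -> float:
    """AVE: mean of the squared standardized loadings of one construct."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def fornell_larcker(ave: dict[str, float],
                    corr: dict[tuple[str, str], float]) -> dict[str, bool]:
    """True for a construct if sqrt(AVE) exceeds |r| with every other construct."""
    passed = {c: True for c in ave}
    for (a, b), r in corr.items():
        for construct in (a, b):
            if abs(r) >= sqrt(ave[construct]):
                passed[construct] = False
    return passed


# Hypothetical AVEs and inter-construct correlations (illustration only)
ave = {"CAR": 0.62, "EFIC": 0.55, "PROF": 0.60, "SAT": 0.70}
corr = {
    ("CAR", "EFIC"): 0.70, ("CAR", "PROF"): 0.55, ("CAR", "SAT"): 0.60,
    ("EFIC", "PROF"): 0.75, ("EFIC", "SAT"): 0.68, ("PROF", "SAT"): 0.58,
}

print(round(average_variance_extracted([0.82, 0.76, 0.580]), 4))
result = fornell_larcker(ave, corr)
print(result)
```

Here EFIC fails narrowly (0.75 against √0.55 ≈ 0.742), the kind of near-miss the text judges acceptable.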

The structural model was assessed using the squared multiple correlations (R²) and the significance of the path (regression) coefficients. Table 5 shows that all the coefficients are significant at the 1% level and all the squared multiple correlations exceed 0.3, which supports the nomological or predictive validity. These results support the relationships proposed in the model.

Table 5.

Parameter estimates.

Finally, goodness of fit of the structural model was determined by a set of measures presented in Table 6, with reference to the absolute fit, incremental fit and parsimony of the model.

Table 6.

Goodness of fit of the structural model.

The χ² statistic indicates whether the discrepancy between the observed covariance matrix and the matrix reproduced by the model is significant. In this case, the p-value leads to rejection of the null hypothesis of no discrepancy. However, the χ² statistic is strongly influenced by the sample size, the model’s complexity and violation of the multivariate normality assumption. All the other measures have values within the limits that allow us to confirm the model’s goodness of fit. To calculate the importance of each attribute, we applied the criteria of Allen et al. [29], according to which importance derives from the total effect (direct plus indirect) of each latent variable on satisfaction with online teaching (Table 7).

Table 7.

Effects of predictor variables.

To calculate the relative importance of each attribute, the total effect of each latent variable was distributed among the attributes that constitute it using their standardized regression weights, as shown in Table 8. That is, the relative importance of an attribute is the total effect of its latent variable multiplied by the attribute’s standardized regression weight.
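Under the Allen et al. criterion described above, an attribute’s importance is the total effect of its latent variable on SAT multiplied by the attribute’s standardized regression weight. In a recursive path model, the total effect is the sum, over every directed path, of the product of the coefficients along it. The sketch below assumes the paths described in the text (CAR → EFIC, CAR → PROF, PROF → EFIC, and direct paths from CAR, EFIC and PROF to SAT); all coefficients and weights are hypothetical rather than the estimates in Tables 5, 7 and 8, and the attribute names a1–a3 are placeholders.

```python
# Hypothetical standardized path coefficients (source, target) -> beta.
# Illustrative only; these are NOT the estimates reported in Table 5.
PATHS = {
    ("CAR", "EFIC"): 0.40,
    ("CAR", "PROF"): 0.50,
    ("PROF", "EFIC"): 0.30,
    ("CAR", "SAT"): 0.20,
    ("EFIC", "SAT"): 0.45,
    ("PROF", "SAT"): 0.25,
}


def total_effect(source: str, target: str) -> float:
    """Total (direct + indirect) effect of source on target.

    Sums, over every directed path from source to target, the product of the
    coefficients along that path. Assumes the path diagram is acyclic.
    """
    if source == target:
        return 1.0
    return sum(beta * total_effect(dst, target)
               for (src, dst), beta in PATHS.items() if src == source)


# Hypothetical attributes: name -> (latent variable, standardized regression weight)
ATTRIBUTES = {
    "a1": ("CAR", 0.80),
    "a2": ("EFIC", 0.75),
    "a3": ("PROF", 0.70),
}

# Importance = total effect of the attribute's latent variable on SAT x its weight
importance = {name: total_effect(lv, "SAT") * weight
              for name, (lv, weight) in ATTRIBUTES.items()}

for name, value in importance.items():
    print(f"{name}: {value:.3f}")
```

With these numbers CAR’s total effect combines its direct path to SAT with the indirect routes through EFIC and PROF, which is why attributes of upstream constructs can rank high even when their direct paths are modest.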

Table 8.

Calculation of importance.

For the importance-performance analysis (IPA), we needed the values of importance and satisfaction for each attribute (Table 9). As previously mentioned, importance was determined by the structural equations model, while satisfaction was measured by the ratings given by participants in the questionnaire. Importance ranges between 0.33 (lowest) and 0.61 (highest), while satisfaction ranges between 0.39 (lowest) and 4.08 (highest). Because IPA requires both sets of values to be on the same scale, each was normalized so that its lowest value maps to 0.00 and its highest value to 1.00 (see columns 4 and 5 of Table 9). Column 6 of Table 9 presents the resulting discrepancy values, that is, the difference between importance and satisfaction.
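The normalization and discrepancy described above can be sketched as a min-max rescaling followed by a subtraction. Only the extremes of the raw ranges (0.33–0.61 for importance, 0.39–4.08 for satisfaction) come from the text; the intermediate scores are hypothetical.

```python
def min_max(values: list[float]) -> list[float]:
    """Rescale so the lowest value maps to 0.00 and the highest to 1.00."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical raw scores for four attributes; only the extremes match the text
importance_raw = [0.33, 0.45, 0.52, 0.61]
satisfaction_raw = [4.08, 1.20, 0.39, 2.50]

imp = min_max(importance_raw)
sat = min_max(satisfaction_raw)
# Positive discrepancy: importance exceeds satisfaction (candidate for improvement)
discrepancy = [i - s for i, s in zip(imp, sat)]
print([round(d, 3) for d in discrepancy])
```

Normalizing each scale separately makes the two comparable on a common 0–1 range, at the cost that the extreme attributes always land exactly on 0.00 and 1.00.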

Table 9.

Importance, satisfaction and discrepancies.

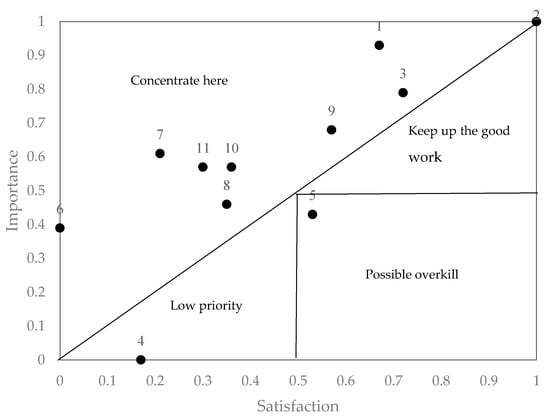

Figure 2 shows the IPA representation based on the values in Table 9. To enhance the Martilla and James [27] representation, the classic quadrant model was combined with the diagonal models, which divide the analysis space into two halves [28,55,56,57]. The diagonal models are based on the discrepancies: attributes with positive discrepancies are those in which importance exceeds satisfaction and therefore represent a high priority for improvement, and the greater the discrepancy, the more urgent the need to attend to the attribute. In Figure 2, the attributes with a positive discrepancy lie above the diagonal, in the area labeled “concentrate on this”, whereas the attributes with negative discrepancies lie below it. By combining the diagonal models with the classic Martilla and James [27] quadrants, the area below the diagonal is divided into three further zones which, based on the values of importance and satisfaction, identify attributes that indicate continuing with the good work, attributes of low priority and attributes representing a possible waste of resources.
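The zone assignment in the combined diagonal and quadrant model, and the ranking of the attributes above the diagonal by discrepancy, can be sketched as below. The quadrant lines are assumed to cross the diagonal at 0.5 on both normalized axes (the hypothetical `cut` parameter); the text does not state where these lines are drawn, so this is an illustrative assumption, and the attribute scores are hypothetical.

```python
def ipa_zone(imp: float, sat: float, cut: float = 0.5) -> str:
    """Zone of one attribute in a combined diagonal/quadrant IPA plot.

    imp and sat are normalized to [0, 1]; cut is an ASSUMED point where the
    quadrant lines cross the diagonal on both axes.
    """
    if imp - sat > 0:          # above the diagonal: importance exceeds satisfaction
        return "concentrate on this"
    if imp >= cut:             # below the diagonal, so sat >= imp >= cut as well
        return "continue with the good work"
    if sat < cut:              # low importance, low satisfaction
        return "low priority"
    return "possible waste of resources"  # low importance, high satisfaction


def top_priorities(scores: dict[str, tuple[float, float]], n: int = 5) -> list[str]:
    """Attributes above the diagonal, ordered by decreasing discrepancy (imp - sat)."""
    gaps = {name: i - s for name, (i, s) in scores.items() if i - s > 0}
    return sorted(gaps, key=gaps.get, reverse=True)[:n]


# Hypothetical normalized (importance, satisfaction) pairs
scores = {"A": (0.9, 0.2), "B": (0.6, 0.5), "C": (0.2, 0.3),
          "D": (0.3, 0.8), "E": (0.8, 0.9)}
zones = {name: ipa_zone(i, s) for name, (i, s) in scores.items()}
print(zones)
print(top_priorities(scores))
```

Because both cut-offs are equal, no attribute below the diagonal can combine high importance with low satisfaction, which is why only three zones appear there.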

Figure 2.

Representation of combined classic and diagonal models.

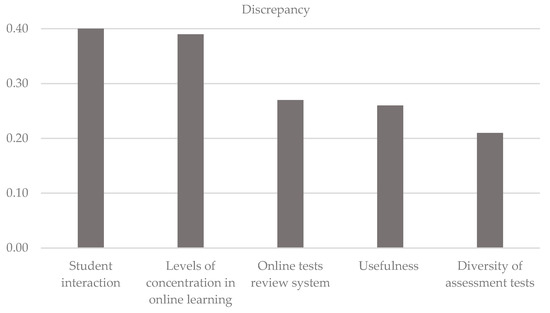

Eight attributes fall within the “concentrate on this” area, meaning that they need to be improved. The attributes that most urgently require attention are those furthest above the diagonal, that is, those with the largest discrepancies. In this case, the attributes most in need of improvement are interaction with other students (attribute 7), levels of concentration in online classes (attribute 6), online tests review system (attribute 11), usefulness of the system (attribute 1) and diversity of activities used in student assessment (attribute 10). No attribute falls in the “continue with the good work” zone, while the “low priority” zone contains learning speed in online teaching compared to face-to-face teaching (attribute 4), and the “possible waste of resources” zone contains the online learning autonomy attribute. The results show a general level of dissatisfaction among students with the online teaching rolled out since the outset of COVID-19. Finally, Figure 3 shows the five priority attributes of online teaching that need to be improved.

Figure 3.

Representation of the five priority attributes.

4. Discussion

Universities need to adapt to the new situation created by the COVID-19 pandemic, converting face-to-face teaching into an online format that is generally acceptable to their students. Integrating methodological and technological decisions should not just be a task for the higher education institutions as entities but should also involve collaboration among professors, students and administration staff and services. The results of this study suggest a set of priority areas that require attention in order to improve student satisfaction with online training. The first is interaction between students, the attribute with the highest discrepancy. According to Ferri et al. [58], the lack of interactivity is one of the main pedagogical challenges associated with online teaching. Even in online teaching, Verawardina et al. [59] hold that it is necessary to maintain direct contact with other people. Student interaction may have a decisive influence on the achievement of educational goals [60]. Such interaction may be highly beneficial for students, especially in learning situations where moderately divergent points of view can be debated. However, virtual training can leave students feeling lonely and isolated, and reduce their capacity to form interpersonal relationships and gain the educational benefits that follow from them. Nevertheless, online interactions can be useful and advantageous for students, especially when supported by well-planned group activities and conscientious teacher performance.

The second attribute that requires attention is the level of student concentration in online classes. One of the main problems that students face with virtual training is distraction; concentration wavers when students sit in front of a computer screen for long periods. Distraction can take the form of fatigue, email notifications, social network activity, phone calls, unexpected home visits, etc., all of which reduce student productivity and concentration during online classes. To improve student concentration, online sessions could be shortened, and mental breaks, mini-tasks or short activities could be built into the planning. Students’ attention may also be reduced if they have other apps open on the screen during online lessons. To address this, institutions can activate apps that block access to applications unrelated to the work students are doing in real-time online classes.

The third attribute that requires improvement in order to raise student satisfaction with virtual learning is the system for reviewing online tests. This attribute is also related to the problematic question of online assessment, in particular the need to verify the identity of the student taking the test and ensure that the work is genuine, to control the physical context in which the test takes place, and to comply with the relevant data protection legal framework. Many educational institutions are striving to overcome these problems using e-proctoring systems [61]. However, as García-Peñalvo et al. [62] indicate, assessment is a complex process that needs to be performed throughout a fixed instruction/learning period, not just at specific moments during the course. In most cases, the design of test reviews in the virtual model is similar to that in the face-to-face model; in other words, it is limited to indicating correct and incorrect answers, only now online rather than face-to-face. The problems that students perceive with this attribute and with the diversity of assessment tests could help explain their general dissatisfaction with online learning.

The fourth attribute that requires attention in order to improve students’ satisfaction with online teaching is the usefulness of the system. When the virtual instruction system functions well, students save time in the learning process, develop a stronger sense of learning self-sufficiency and can improve their results [31,32,33,63]. One possible reason for student dissatisfaction with how the system works is their lack of experience in using it. As this study has emphasized, the pandemic forced live classes to shift suddenly to the virtual format. A system’s usefulness is greatly affected by the technological resources available both to the educational institutions and to the users. This highlights the need to improve the systems for delivering virtual instruction and to address the digital divide among users [64].

The fifth attribute to be tackled is the diversity of assessment tests. The lockdowns ordered as a result of the pandemic obliged the majority of education centers to close and switch rapidly from a face-to-face teaching design to one that could be implemented online. Assessment tests have continued to be set and have been adapted to virtual learning, but mainly by changing their format. Now, however, teachers need to take advantage of the range of assessment activities available online to broaden the range of testing formats and increase student satisfaction with the assessment system. The remaining attributes that educational institutions need to attend to in order to enhance student satisfaction are of lower priority than those already discussed. One such low-priority attribute is learning speed in online teaching compared to face-to-face teaching, which has a negative discrepancy and low importance among students. This may be because knowledge acquisition is a semester-long process: the university courses for which data are available are taught in semesters, hence the very low importance students attach to this attribute.

Online learning autonomy is also an attribute of little importance to the students, but its level of satisfaction is high, so it is situated in the quadrant denoting a possible waste of resources. Learning autonomy refers to students’ gradual acquisition of their own criteria, methods and rules that make them more effective learners. In online instruction, students set their own pace of learning by accessing content at any time and as often as they need to acquire knowledge [65]. This could explain the low importance but high satisfaction associated with this attribute.

5. Conclusions

This work emphasizes the enormous efforts made by educational institutions to transform content, activities and assessment systems designed for face-to-face teaching into online formats. This was done urgently, and often without the main actors possessing the minimum technological resources or the digital competences and aptitudes required to adapt to such rapid change [66]. The results of this study show a general dissatisfaction among students with many of the features of online teaching that became apparent during the lockdown in Spain due to COVID-19. The results do not apply to educational institutions that teach courses exclusively online, but to institutions that had to adapt quickly and shift course material originally designed for face-to-face instruction to the online format without enough time to carry out a more thorough process of adaptation.

This work contributes to the generation of knowledge about online teaching, and its results provide valuable information for education professionals and educational institutions. The challenges posed by online teaching appear to be more closely related to the effectiveness of learning and to human relations than to the technical characteristics of the systems used. Aspects such as improving interaction between students and between students and teachers, improving concentration, and improving aspects of assessment must be taken into account in order to improve student satisfaction.

The COVID-19 situation should not be seen as a threat by students and by educational institutions based on conventional teaching, but as an opportunity to incorporate the benefits of online education into their teaching.

At the societal level, online education also has important implications. Travel costs and travel time, for example, are drastically reduced. On the other hand, for online learning to be effective, students must have reliable access to technology. This requires the development of social and economic policies to close the digital divide in many households and improve access to technology.

Like many other investigations in this field, our study has limitations. Although the results of this work may be extrapolated to other educational institutions that have undergone similar changes, the data for this study are drawn from a single Spanish university. Future research should compare our data with data from other universities, both inside and outside Spain. It would also be interesting to study whether student satisfaction with online instruction differs across course content and areas of knowledge.

Author Contributions

Conceptualization, J.M.R.-H., A.G.H.-D. and V.E.P.-L.; methodology, J.M.R.-H. and A.G.H.-D.; software, J.M.R.-H. and V.E.P.-L.; validation, A.G.H.-D.; formal analysis, J.M.R.-H. and A.G.H.-D.; investigation, V.E.P.-L.; writing—original draft preparation, A.D.L.-S.; writing—review and editing, J.M.R.-H., V.E.P.-L. and A.D.L.-S.; visualization, V.E.P.-L. and A.D.L.-S.; supervision, A.D.L.-S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study because it was non-interventional. Confidentiality was maintained by responses being completely anonymous and only aggregated data are presented.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.

Acknowledgments

The authors would also like to thank the three anonymous reviewers for their valuable comments and suggestions to improve the quality of the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rodrigues, H.; Almeida, F.; Figueiredo, V.; Lopes, S.L. Tracking e-learning through published papers: A systematic review. Comput. Educ. 2019, 136, 87–98.

- Arkorful, V.; Abaidoo, N. The Role of e-Learning, the Advantages and Disadvantages of Its Adoption in Higher Education. Int. J. Educ. Res. 2014, 2, 397–410.

- Ehlers, U.D.; Hilera, J.R. Special Issue on Quality in E-Learning. J. Comput. Assist. Learn. 2012, 28, 1–3.

- UNESCO. Impacto del Covid-19 en la Educación. 2020. Available online: https://es.unesco.org/covid19/educationresponse (accessed on 12 December 2020).

- Hodges, C.; Moore, S.; Lockee, B.; Trust, T.; Bond, A. The difference between emergency remote teaching and online learning. Educ. Rev. 2020, 27, 12. Available online: https://bit.ly/3b0Nzx7 (accessed on 22 July 2020).

- Fernández, M. Una Pandemia Imprevisible ha Traído la Brecha Previsible. 2020. Available online: https://bit.ly/2VT3kzU (accessed on 7 September 2020).

- Yusuf, B.N. Are we prepared enough? A case study of challenges in online learning in a private higher learning institution during the Covid-19 outbreaks. Adv. Soc. Sci. Res. J. 2020, 7, 205–212.

- Vlachopoulos, D. COVID-19: Threat or opportunity for online education? High. Learn. Res. Commun. 2020, 10, 2.

- Cole, M.T.; Shelley, D.J.; Swartz, L.B. Online instruction, e-learning, and student satisfaction: A three year study. Int. Rev. Res. Open Distance Learn. 2014, 15, 111–131.

- Dick, G.P.; Tarí, J.J. A Review of Quality Management Research in Higher Education Institutions; Kent Business School Working Paper Series, No. 274; University of Kent: Kent, UK, 2013.

- Noaman, A.Y.; Ragab, A.H.M.; Madbouly, A.I.; Khedra, A.M.; Fayoumi, A.G. Higher education quality assessment model: Towards achieving educational quality standard. Stud. High. Educ. 2017, 42, 23–46.

- Goos, M.; Salomons, A. Measuring teaching quality in higher education: Assessing selection bias in course evaluations. Res. High. Educ. 2017, 58, 341–364.

- Shauchenka, H.V.; Bleimann, U. Methodology and Measurement System for Higher Education Service Quality Estimation. In Proceedings of the Conference on Education Technologies and Education, Interlaken, Switzerland, 7–9 July 2014; pp. 21–28.

- Edler, F.H.W. How Accreditation Agencies in Higher Education are Pushing Total Quality Management: A Faculty Review of the Academic Quality Improvement Program (AQIP). Unpublished Paper. Available online: http://commhum.mccneb.edu/PHILOS/AQIP.htm.2003 (accessed on 19 February 2019).

- Sunder, V.M. Constructs of quality in higher education services. Int. J. Product. Perform. Manag. 2016, 65, 1091–1111.

- Sultan, P.; Wong, H.Y. Antecedents and consequences of service quality in a higher education context: A qualitative research approach. Qual. Assur. Educ. 2013, 21, 70–95.

- Kuh, G.D.; Hu, S. The effects of student-faculty interaction in the 1990s. Rev. High. Educ. 2001, 24, 309–321.

- Elliott, K.M.; Healy, M.A. Key factors influencing student satisfaction related to recruitment and retention. J. Mark. High. Educ. 2001, 10, 1–11.

- Barnett, R. The marketised university: Defending the indefensible. In The Marketisation of Higher Education and the Student as Consumer; Molesworth, M., Scullion, R., Nixon, E., Eds.; Routledge: London, UK, 2011; pp. 39–52.

- Alves, H.; Raposo, M. The influence of university image on students’ behaviour. Int. J. Educ. Manag. 2010, 24, 73–85.

- Yildiz, S.M.; Kara, A. Developing alternative measures for service quality in higher education: Empirical evidence from the school of physical education and sports sciences. In Proceedings of the 2009 Academy of Marketing Science (AMS) annual conference, Baltimore, MD, USA, 20–23 May 2009; Springer International: Baltimore, MD, USA, 2015; p. 185.

- Srikanthan, G.; Dalrymple, J.F. A conceptual overview of a holistic model for quality in higher education. Int. J. Educ. Manag. 2007, 21, 173–193.

- Telford, R.; Masson, R. The congruence of quality values in higher education. Qual. Assur. Educ. 2005, 13, 107–119.

- Kwek, L.C.; Lau, T.C.; Tan, H.P. Education quality process model and its influence on students’ perceived service quality. Int. J. Bus. Manag. 2010, 5, 154–165.

- Chong, Y.S.; Ahmed, P.K. An empirical investigation of students’ motivational impact upon university service quality perception: A self-determination perspective. Qual. High. Educ. 2012, 18, 37–41.

- Thomas, M.S.; Rogers, C. Education, the science of learning, and the COVID-19 crisis. Prospects 2020, 49, 87–90.

- Martilla, J.; James, J. The need for implementing total quality management in education. Int. J. Educ. Manag. 1977, 11, 131–135.

- Picón, E.; Varela, J.; Braña, T. La representación de los datos mediante el Análisis de Importancia-Valoración. Metodol. Encuestas 2011, 13, 121–142.

- Allen, J.; Bellizzi, M.G.; Eboli, L.; Forciniti, C.; Mazzulla, G. Latent factors on the assessment of service quality in an Italian peripheral airport. Transp. Res. Procedia 2020, 47, 91–98.

- Marks, R.B.; Sibley, S.D.; Arbaugh, J.B. A structural equation model of predictors for effective online learning. J. Manag. Educ. 2005, 29, 531–563.

- Chiu, C.-M.; Wang, E.T.G. Understanding web-based learning continuance intention: The role of subjective task value. Inf. Manag. 2008, 45, 194–201.

- DeLone, W.H.; McLean, E.R. The DeLone and McLean model of information systems success: A ten-year update. J. Manag. Inf. Syst. 2003, 19, 9–30.

- Hassanzadeh, A.; Kanaani, F.; Elahi, S. A model for measuring e-learning systems success in universities. Expert Syst. Appl. 2012, 39, 10959–10966.

- Abdalla, I. Evaluating effectiveness of e-blackboard system using TAM framework: A structural analysis approach. AACE J. 2007, 3, 279–287.

- Tarhini, A.; Masa’deh, R.; Al-Busaidi, K.A.; Mohammed, A.B.; Maqableh, M. Factors influencing students’ adoption of e-learning: A structural equation modeling approach. J. Int. Educ. Bus. 2017, 10, 164–182.

- Wang, Y.S.; Liao, Y.W. Assessing e-Government systems success: A validation of the DeLone and McLean model of information systems success. Gov. Inf. Q. 2008, 25, 717–733.

- Mohammadi, H. Investigating users’ perspectives on e-learning: An integration of TAM and IS success model. Comput. Hum. Behav. 2015, 45, 359–374.

- Wang, W.T.; Wang, C.C. An empirical study of instructor adoption of web-based learning systems. Comput. Educ. 2009, 53, 761–774.

- Liaw, S.S. Investigating students’ perceived satisfaction, behavioral intention, and effectiveness of e-learning: A case study of the Blackboard system. Comput. Educ. 2008, 51, 864–873.

- Eom, B.S.; Wen, H.J.; Ashill, N. The Determinants of Students’ Perceived Learning Outcomes and Satisfaction in University Online Education: An Empirical Investigation. Decis. Sci. J. Innov. Educ. 2006, 4, 215–235.

- Lee, M.C. Explaining and predicting users’ continuance intention toward e-learning: An extension of the expectation-confirmation model. Comput. Educ. 2010, 54, 506–516.

- Tzeng, H.-M.; Okpalauwaekwe, U.; Li, C.-Y. Older Adults’ Socio-Demographic Determinants of Health Related to Promoting Health and Getting Preventive Health Care in Southern United States: A Secondary Analysis of a Survey Project Dataset. Nurs. Rep. 2021, 11, 120–132.

- Năsui, B.A.; Ungur, R.A.; Talaba, P.; Varlas, V.N.; Ciuciuc, N.; Silaghi, C.A.; Silaghi, H.; Opre, D.; Pop, A.L. Is Alcohol Consumption Related to Lifestyle Factors in Romanian University Students? Int. J. Environ. Res. Public Health 2021, 18, 1835.

- Zhuo, L.; Wu, Q.; Le, H.; Li, H.; Zheng, L.; Ma, G.; Tao, H. COVID-19-Related Intolerance of Uncertainty and Mental Health among Back-To-School Students in Wuhan: The Moderation Effect of Social Support. Int. J. Environ. Res. Public Health 2021, 18, 981.

- Garvey, A.M.; García, I.J.; Otal Franco, S.H.; Fernández, C.M. The Psychological Impact of Strict and Prolonged Confinement on Business Students during the COVID-19 Pandemic at a Spanish University. Int. J. Environ. Res. Public Health 2021, 18, 1710.

- Peterson, R.A.; Merunka, D.R. Convenience samples of college students and research reproducibility. J. Bus. Res. 2014, 67, 1035–1041.

- Ho, C.L.; Dzeng, R.J. Construction safety training via e-learning: Learning effectiveness and user satisfaction. Comput. Educ. 2010, 55, 858–867.

- Thurmond, V.A.; Wambach, K.; Connors, H.R.; Frey, B.B. Evaluation of Student Satisfaction: Determining the Impact of a Web-Based Environment by Controlling for Student Characteristics. Am. J. Distance Educ. 2002, 16, 169–190.

- Lévy, M.J.-P.; Martín, M.T.; Román, G.M.V. Optimización según estructuras de covarianzas. In Modelización Con Estructuras de Covarianzas en Ciencias Sociales; Lévy, J.-P., Varela, M.J., Eds.; Netbiblo: A Coruña, Spain, 2016; pp. 11–30.

- Bollen, K.A. Structural Equations with Latent Variables; John Wiley & Sons: New York, NY, USA, 1989.

- Rindskopf, D.; Rose, T. Some theory and applications of confirmatory second-order factor analysis. Multivar. Behav. Res. 1988, 23, 51–67.

- Fornell, C.; Larcker, D. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 30–50.

- Hair, J.; Anderson, R.; Tatham, R.L.; Black, W.C. Multivariate Data Analysis; Prentice-Hall: Upper Saddle River, NJ, USA, 1998.

- Chau, P. Reexamining a model for evaluating information center success using a structural equation modeling approach. Decis. Sci. 1997, 28, 309–334.

- Ábalo, J.; Varela, J.; Rial, A. El análisis de Importancia-Valoración aplicado a la gestión de servicios. Psicothema 2006, 18, 730–737.

- Nale, R.D.; Rauch, D.A.; Wathen, S.A.; Barr, P.B. An exploratory look at the use of importance-performance analysis as a curricular assessment tool in a school of business. J. Workplace Learn. 2000, 12, 139–145.

- Sampson, S.E.; Showalter, M.J. The performance-importance response function: Observations and implications. Serv. Ind. J. 1999, 19, 1–26.

- Ferri, F.; Grifoni, P.; Guzzo, T. Online learning and emergency remote teaching: Opportunities and challenges in emergency situations. Societies 2020, 10, 86.

- Verawardina, U.; Asnur, L.; Lubis, A.L.; Hendriyani, Y.; Ramadhani, D.; Dewi, I.P.; Sriwahyuni, T. Reviewing online learning facing the Covid-19 outbreak. J. Talent Dev. Excell. 2020, 12, 385–392.

- Chiecher, A.C.; Donolo, D.S. Interacciones entre alumnos en aulas virtuales. Incidencia de distintos diseños instructivos. Pixel-Bit. Rev. Medios Educ. 2011, 39, 127–140.

- Adkins, J.; Kenkel, C.; Lim, C.L. Deterrents to online academic dishonesty. J. Learn. High. Educ. 2005, 1, 17–22.

- García-Peñalvo, F.J.; Corell, A.; Abella-García, V.; Grande, M. Online Assessment in Higher Education in the Time of COVID-19. Educ. Knowl. Soc. 2020, 21, 1–12.

- Vázquez-Cano, E.; Fombona, J.; Fernández, A. Virtual Attendance: Analysis of an Audiovisual over IP System for Distance Learning in the Spanish Open University (UNED). Int. Rev. Res. Open Distance Learn. 2013, 14, 402–426.

- Vázquez-Cano, E.; León Urrutia, M.; Parra-González, M.E.; López Meneses, E. Analysis of Interpersonal Competences in the Use of ICT in the Spanish University Context. Sustainability 2020, 12, 476.

- Vázquez-Cano, E. Mobile Distance learning with Smartphones and Apps in Higher Education. Educ. Sci. Theory Pract. 2014, 14, 1–16.

- Sevillano, M.L.; Vázquez-Cano, E. The impact of digital mobile devices in Higher Education. Educ. Technol. Soc. 2015, 18, 106–118.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).