1. Introduction

Although fatal construction injuries have declined, the construction industry still records more casualties than any other industry, so construction safety still needs attention to avoid fatal accidents [1]. Previous research on applying computer vision to the detection and tracking of on-site workers has shown its feasibility for safety management on construction sites. However, detection performance is often unsatisfactory because of occlusion and the limited coverage of a single camera. Moreover, few studies have addressed automatic systems that analyze workers’ safety status. These problems can be summarized as follows:

- (1) Is there a worker detection method that can adapt to the dynamic and ever-changing environment of the construction site?
- (2) Is there a worker detection method that can fuse visual information from multiple angles to avoid occlusion problems?
- (3) Is there a method with low computational cost for managing the safety of workers on the construction site?

To close these knowledge gaps, this paper proposes an improved worker detection method based on multi-angle information fusion and predicts workers’ movement trajectories to determine their safety status and issue an advance warning signal, contributing to on-site safety management. The paper focuses on real-time detection of the safety status of on-site workers and proposes two improvements: (1) multi-angle detection of construction workers; (2) low demand for computational resources in worker detection. Because construction sites change constantly, the detection system should be easy to redeploy without the laborious process of training a neural network. The worker detection framework should therefore be light and simple, and able to identify workers from multiple angles. Experimental studies were carried out to verify the effectiveness of the presented framework.

At present, numerous techniques have been applied to the safety management of construction sites [2,3,4]. However, among these techniques, manual methods are inefficient, costly, and subjective [5,6,7], and methods based on wearable equipment require frequent charging and incur high application costs, affecting the efficiency of workers [8,9,10]. To overcome the limitations of traditional methods, computer vision technology has been considered for intelligent management on construction sites [11,12,13], especially for automatic recognition and monitoring of on-site workers [14,15]. Moreover, previous research indicates that on-site safety performance can be improved by computer vision technology (e.g., detection of safety equipment, motion analysis, and tracking of workers) [16,17]. With these advantages, recognition and tracking of workers has become a trend in safety management, aiming to detect workers’ unsafe behavior and status on construction sites.

Despite these benefits, existing studies on the recognition and tracking of on-site workers still have limitations. Weerasinghe and Ruwanpura [18] set up an AMOT (automatic multi-target tracking system) to monitor on-site workers and equipment by analyzing both audio and video. Dong et al. [19] proposed a proactive struck-by risk detection method for workers near the laydown area to enhance construction site safety, but the method depends on tag-based tracking technology. Luo et al. [12] proposed a hierarchical statistical method for capturing and understanding workers’ high-level activities in far-field surveillance videos, but creating data sets to train the TSNs is indispensable and expensive whenever new worker actions are introduced. Yang et al. [20] established a machine-learning-based tracking algorithm to track multiple workers on construction sites using video cameras, but its recognition performance was poor. Brilakis et al. [21] presented a vision-based tracking framework to identify and track construction entities, providing 3D spatial coordinates of the entities. Ren et al. [22] tracked project-related entities on construction sites by particle filtering to cope with blocked detection targets, showing the importance of solving the problems caused by occlusion. Guo et al. [23] pointed out that existing location technologies perform well only in relatively small areas because of their generally poor penetrating performance. After reviewing many relevant studies, Zhong et al. [24] argued that multiple cameras need to be placed on site to fill some knowledge gaps in the field of computer vision technology. Moreover, Park et al. [25] transformed two-dimensional coordinates into three-dimensional coordinates using the on-site camera system to track construction resources; however, the video was not processed in real time. In another study, the location data of workers and construction equipment were collected and processed by visual detection technology, and relevant safety performance information was displayed to decision-makers in real time to support the safety management of on-site workers [26], but the collected information was not processed automatically.

As can be seen, some studies still require additional conditions (e.g., the placement of tags) to recognize and track workers’ movement trajectories. Although computer vision has been used to detect and track workers on construction sites, identification performance is still poor, and several factors affect the accuracy of worker identification, especially occlusion. Therefore, multiple cameras need to be placed on site and the information they gather combined to achieve wider coverage and improve detection. Furthermore, detection and tracking results should be processed automatically in real time, and movement estimation is crucial for safety management [27,28]. In general, this paper intends to solve the following research problems:

- (1) A background extraction method for solving the influence of illumination mutation.
- (2) A multi-angle worker detection method for solving the occlusion problem.
- (3) A method with low computational cost that can adapt to the construction site.

To address these limitations and implement an automatic process of site safety management, an improved GMM (Gaussian Mixture Model) and a HOG + SVM (Histogram of Oriented Gradients and Support Vector Machine) framework are proposed to enhance worker detection. Meanwhile, worker detection results from multiple angles are fused using the SURF (Speeded Up Robust Features) algorithm, overcoming the limited coverage of a single camera and achieving better detection. The effectiveness of the method was tested on real site videos collected by two cameras. A Kalman filter was applied to predict the movement trajectory of construction workers without any pre-positioned labels, after which the workers’ safety status can be determined automatically to issue an advance warning signal when they enter a danger zone. In general, this paper proposes an improved worker detection method utilizing multi-angle fusion and establishes an automatic analysis system for the safety status of on-site workers. The framework does not require any sensors to be installed on workers, which makes the method applicable at most construction sites, especially large-scale ones, because of the larger coverage of multiple cameras. Moreover, this paper provides an approach to implementing automatic safety management on construction sites, helping to manage site safety and prevent accidents.

2. Methodology Overview

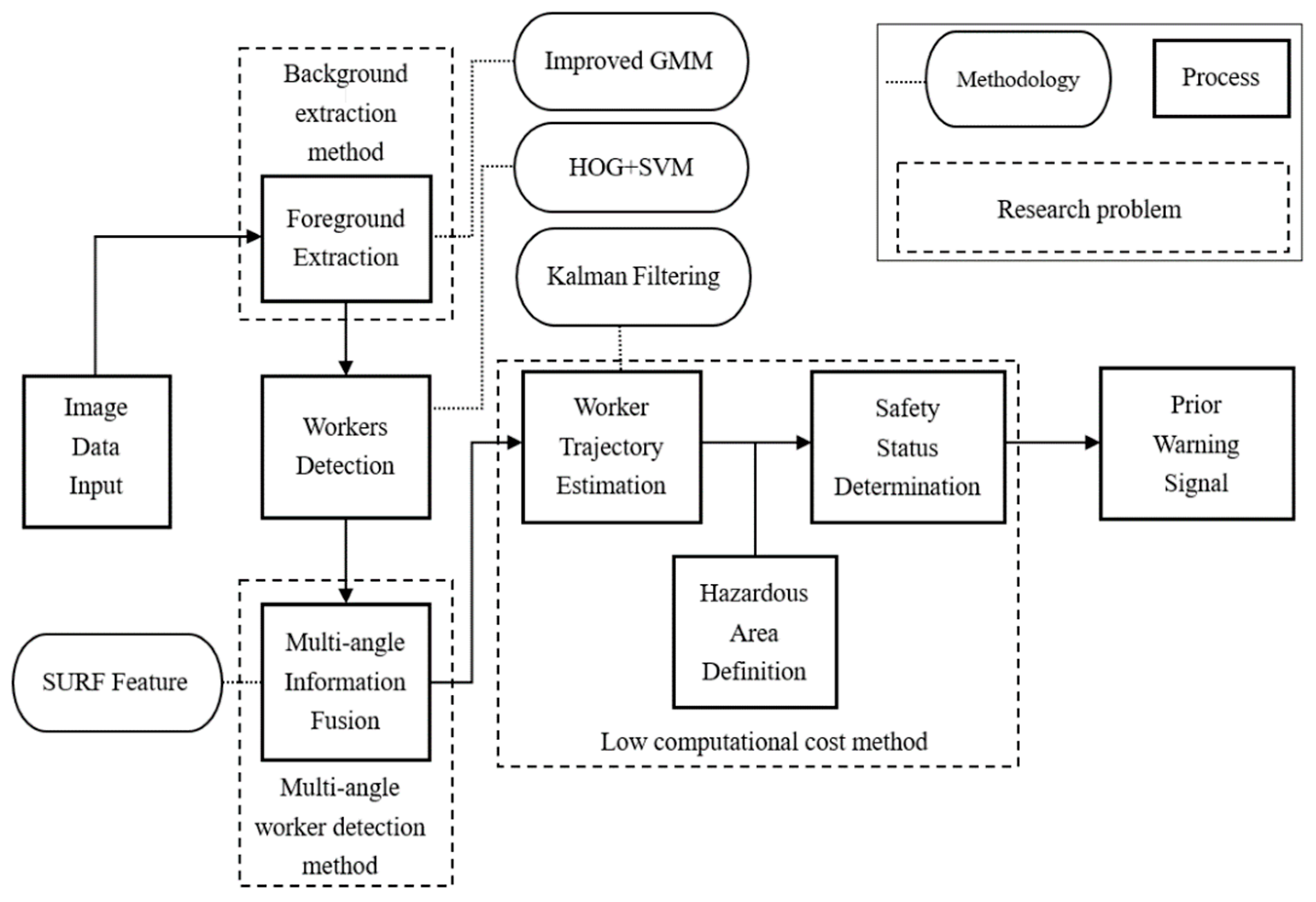

This paper introduces an automated method for detecting on-site workers from multiple angles and predicting their movement trajectories. This section is organized as follows: (1) an introduction to the methods and improvements used in this paper, namely the improved GMM, the HOG feature and SVM, the SURF feature, and Kalman filtering; (2) the improved GMM and HOG + SVM framework for the detection of construction workers; (3) the determination of the safety status of construction workers based on path prediction; (4) discussion, conclusions, limitations, and future work. The structure of the remainder of this paper is shown in Figure 1.

2.1. Improved GMM

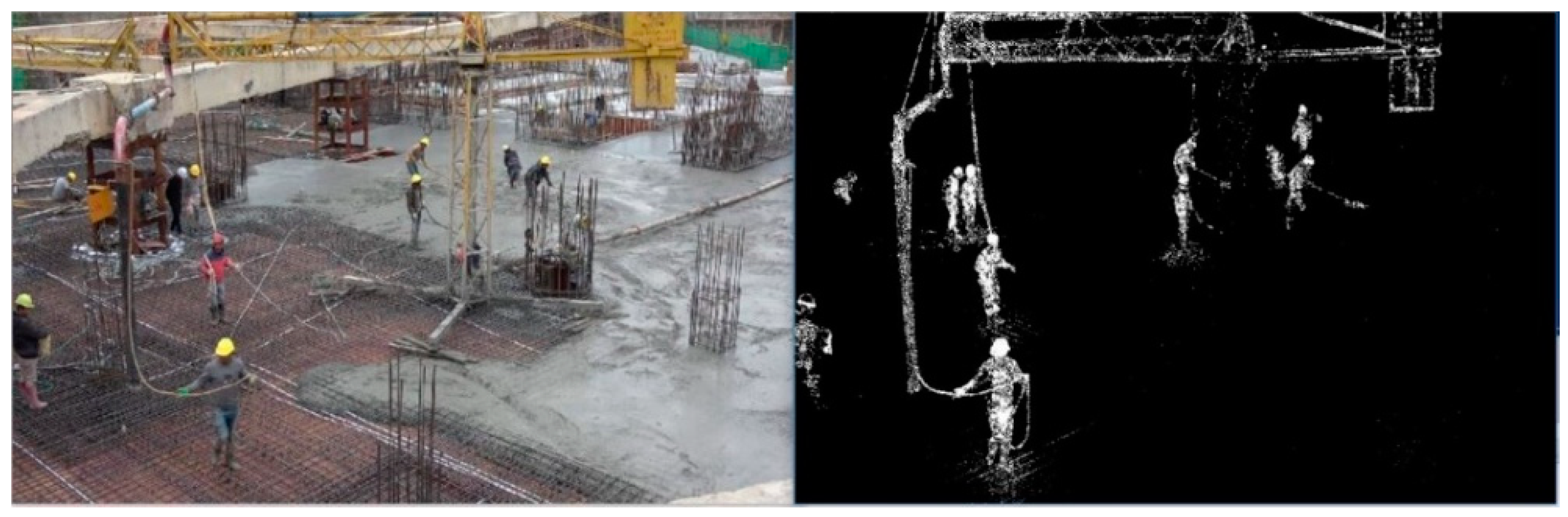

In this paper, the video background refers to the relatively stable picture content in the video, that is, the fixed scene with few changes, while the moving object in the video is considered as the foreground, the specific target to be detected. The purpose of background modeling is to segment the relatively stable part of the video frame from the moving target, achieving the dichotomy between the foreground and background of the video frame content.

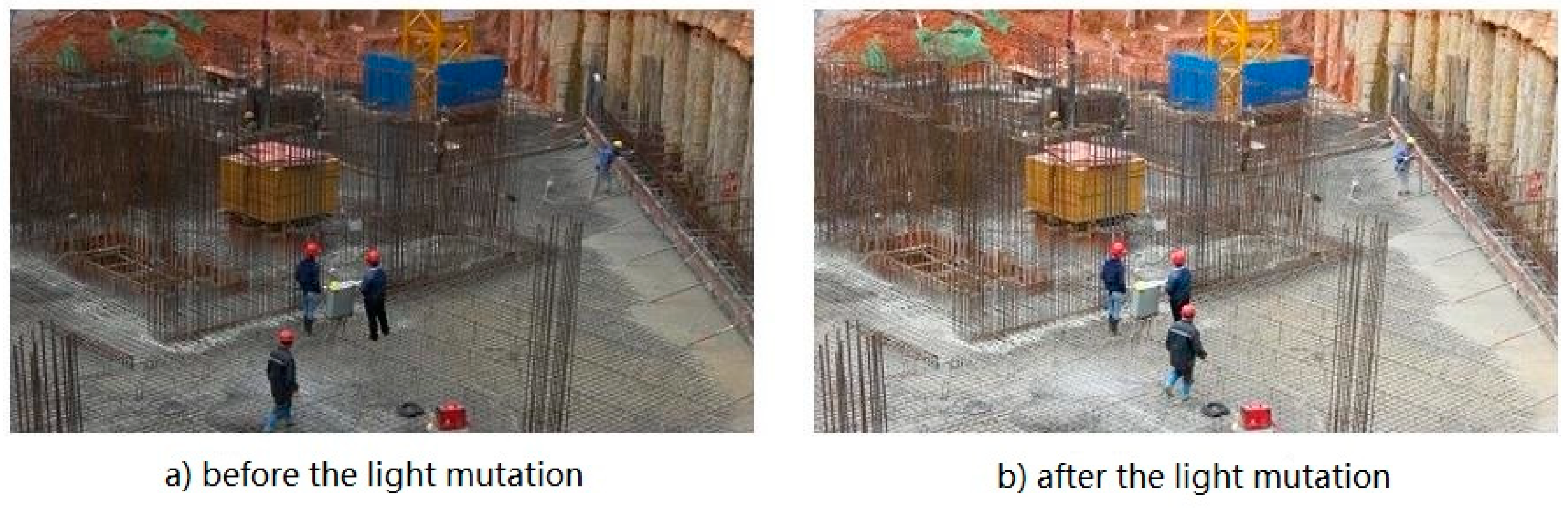

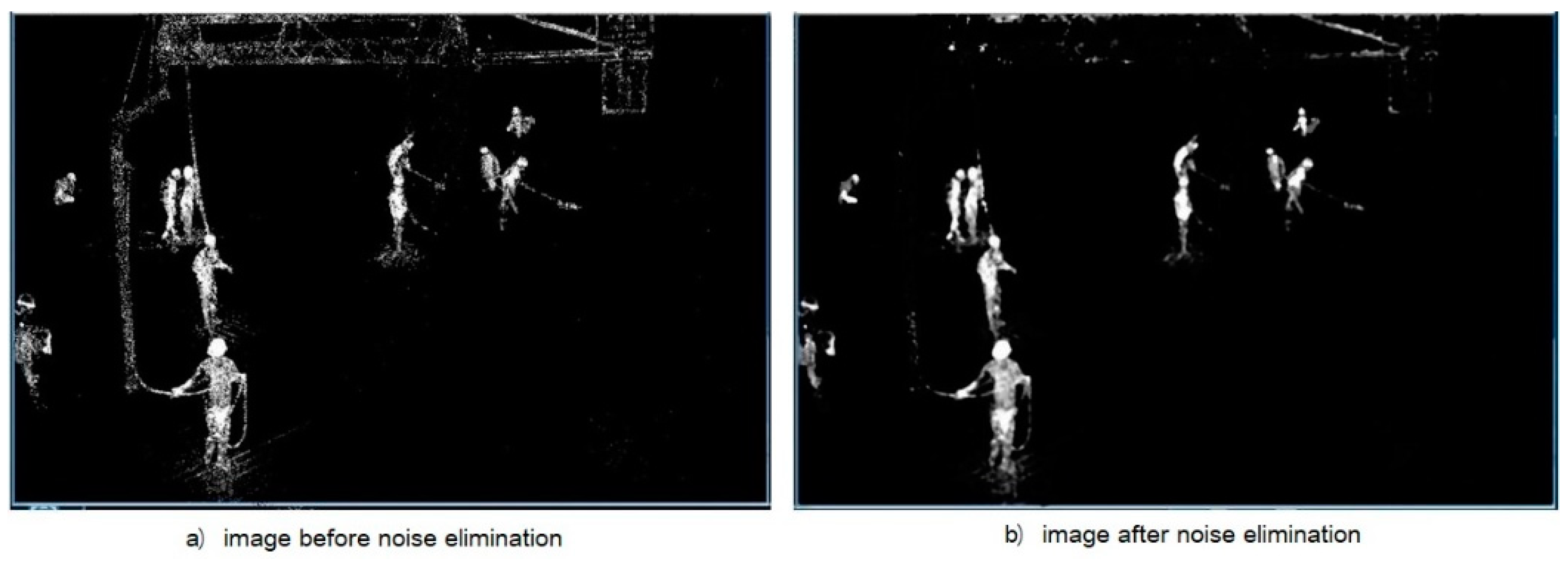

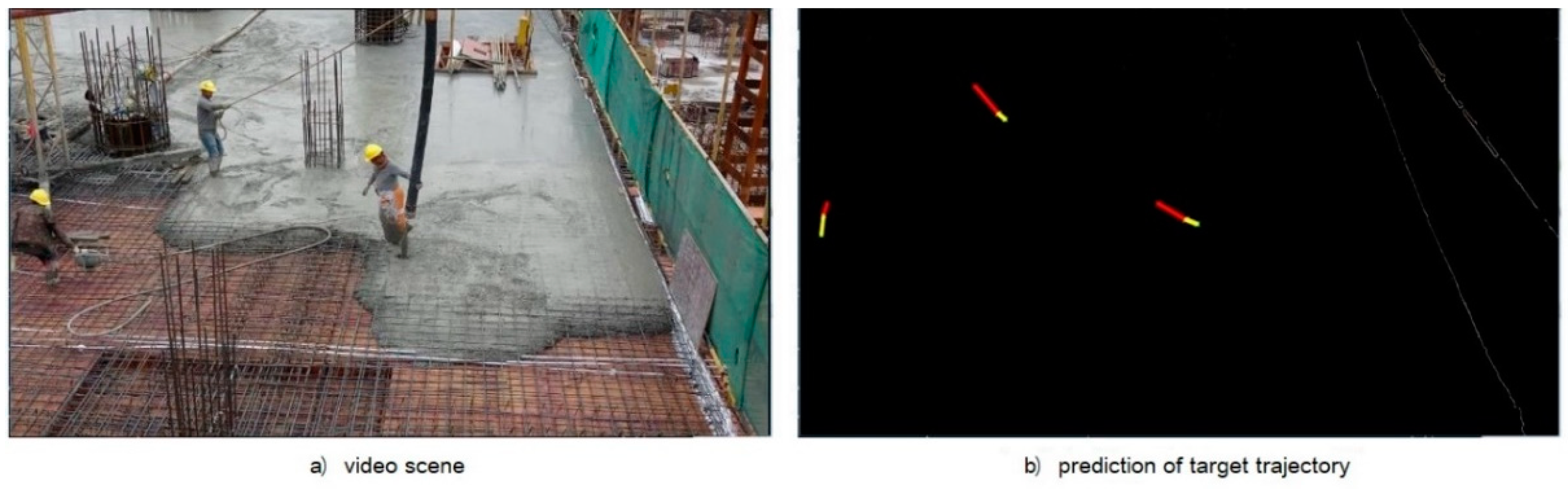

The GMM background modeling method establishes a color distribution model for each pixel in the video according to its statistics over time. This method is suitable for accurately modeling complex backgrounds such as gradual lighting changes and swaying trees. However, in practice, an abrupt change of the scene (shown in Figure 2) may cause the algorithm to misjudge. To account for this effect, this paper proposes a corresponding improvement to the GMM background modeling method.

When the overall brightness of the scene changes suddenly, the GMM background model cannot adapt. Mathematically, such a mutation appears as a change in the values of all pixels in the image, and its effect is estimated from the average change of pixel values in the common background between adjacent video frames. For the video frame at time t, the pixel areas of each moving entity in the video frames at times t − 1, t − 2, and t − 3 are known. The centroid coordinates of these areas are used to represent the positions of construction workers, and each motion region is marked with a different number. From the differences between the centroid coordinates of the region carrying the same numeric marker in the consecutive video frames before time t, the velocity and acceleration of each moving target can be obtained, as shown in Formula (1).

Formula (1) is written in terms of the pixel coordinates of the centroid of the ith motion region, the estimated velocity of that centroid at time t, and the estimated acceleration of that centroid at time t. Once the velocity and acceleration have been solved from Formula (1), the position of each target i at time t can be predicted by Formula (2).
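The prediction step described above can be illustrated with a minimal sketch. The exact form of Formulas (1) and (2) is not reproduced here, so the code assumes one plausible choice: backward finite differences for velocity and acceleration with a unit frame interval, followed by a constant-acceleration extrapolation. The function name `predict_centroid` and the argument order are hypothetical.

```python
from typing import Tuple

Point = Tuple[float, float]


def predict_centroid(p1: Point, p2: Point, p3: Point) -> Point:
    """Predict a centroid at time t from centroids at t-1 (p1), t-2 (p2), t-3 (p3).

    Assumption: one frame equals one time unit.
    """
    # Backward-difference velocity estimate from the two latest frames.
    vx, vy = p1[0] - p2[0], p1[1] - p2[1]
    # Acceleration estimate: change between two successive velocity estimates.
    ax = (p1[0] - p2[0]) - (p2[0] - p3[0])
    ay = (p1[1] - p2[1]) - (p2[1] - p3[1])
    # Extrapolate one frame ahead with the kinematic form p + v + a / 2.
    return (p1[0] + vx + 0.5 * ax, p1[1] + vy + 0.5 * ay)


# A worker moving at constant pixel velocity (1, 2) per frame.
next_position = predict_centroid((2.0, 4.0), (1.0, 2.0), (0.0, 0.0))
```

The predicted point would then serve as the centroid of the predicted bounding rectangle for that target.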

Taking the predicted position as the centroid of a rectangle, the prediction region of each target at time t can be identified. If the bounding rectangle of each target at time t − 1 and the predicted bounding rectangle at time t are combined as a union, then for times t − 1 and t the complement of this union in the image is the common background component, as shown in Figure 3. The pixel values of this region in the video frames at time t and time t − 1 are differenced to obtain the changes in the pixel values of the background region. The distribution of the change in background pixel values can be obtained by collecting the gray histogram of the change of each pixel value in the background region. If the overall brightness of the scene does not change suddenly, the mean of this distribution theoretically approaches 0. If the overall brightness of the scene changes suddenly, the mean of the distribution shifts greatly while the variance remains small.

Before the GMM background model is applied for foreground extraction, the mean of this distribution is first used to correct the means of the Gaussian distributions in the GMM. The correction is given in Formula (3), which relates the mean of each Gaussian distribution before and after the illumination change through the average variation in background brightness.
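The illumination correction of this subsection can be sketched as follows. This is an illustration under stated assumptions, not the paper's implementation: frames are plain nested lists of gray values, the difference is taken as current minus previous, the correction is assumed to be additive, and the `threshold` that decides whether a brightness jump occurred is a hypothetical parameter.

```python
from statistics import mean, pvariance
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), end-exclusive


def background_shift(prev: Sequence[Sequence[float]],
                     curr: Sequence[Sequence[float]],
                     boxes: Sequence[Box]) -> Tuple[float, float]:
    """Mean and variance of (curr - prev) over pixels outside every box.

    The boxes stand for the union of the target rectangles at t-1 and the
    predicted rectangles at t; everything else is the common background.
    """
    diffs = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (a, b) in enumerate(zip(row_prev, row_curr)):
            inside = any(x0 <= x < x1 and y0 <= y < y1
                         for x0, y0, x1, y1 in boxes)
            if not inside:
                diffs.append(b - a)
    return mean(diffs), pvariance(diffs)


def correct_means(means: Sequence[float], shift: float,
                  threshold: float = 10.0) -> List[float]:
    """Shift the Gaussian means only when the brightness jump is large."""
    if abs(shift) < threshold:
        return list(means)
    return [m + shift for m in means]


# 3x3 frames: the background brightens by 30, one foreground pixel is masked.
prev_frame = [[100, 100, 100], [100, 100, 100], [100, 100, 100]]
curr_frame = [[130, 130, 130], [130, 20, 130], [130, 130, 130]]
shift, variance = background_shift(prev_frame, curr_frame, [(1, 1, 2, 2)])
updated = correct_means([100.0, 50.0], shift)
```

A large mean with a small variance, as in this toy frame pair, matches the signature of a global brightness change described above.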

2.2. HOG Feature and SVM

The HOG feature computes and counts the gradient direction of each pixel in a local area of the image to build a histogram, and is widely used for pedestrian detection. To obtain the HOG feature of an image, the gradient direction of each pixel is first calculated, and the value range of the gradient direction is divided into a finite number of sub-intervals so that the direction can be discretized. Then, a designated detection window is set as the statistical region and divided into cell units, so that multiple histograms of oriented gradients can be constructed over the local region. According to which orientation sub-interval each gradient orientation value falls into, all the pixels within a cell unit vote into the discrete sub-intervals to obtain the HOG feature of that cell unit. Finally, the HOG features of the cell units are concatenated into a vector that forms the HOG feature of the local region.
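The voting procedure described above can be sketched in a few lines. The details here are assumptions for illustration only: 9 unsigned orientation bins over [0, π), central-difference gradients with replicated borders, magnitude-weighted votes, and a single L2 normalization over the whole descriptor. The names `cell_hog` and `hog_descriptor` are hypothetical.

```python
import math
from typing import List, Sequence


def cell_hog(cell: Sequence[Sequence[float]], bins: int = 9) -> List[float]:
    """Magnitude-weighted histogram of unsigned gradient orientations."""
    h, w = len(cell), len(cell[0])
    hist = [0.0] * bins
    bin_width = math.pi / bins  # unsigned orientations lie in [0, pi)
    for y in range(h):
        for x in range(w):
            # Central differences; border pixels are clamped (replicated).
            gx = cell[y][min(x + 1, w - 1)] - cell[y][max(x - 1, 0)]
            gy = cell[min(y + 1, h - 1)][x] - cell[max(y - 1, 0)][x]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0.0:
                continue
            angle = math.atan2(gy, gx) % math.pi
            index = min(int(angle / bin_width), bins - 1)
            hist[index] += magnitude
    return hist


def hog_descriptor(cells: Sequence[Sequence[Sequence[float]]],
                   bins: int = 9) -> List[float]:
    """Concatenate the per-cell histograms and L2-normalize the result."""
    vector = [v for cell in cells for v in cell_hog(cell, bins)]
    norm = math.sqrt(sum(v * v for v in vector)) or 1.0
    return [v / norm for v in vector]


# A cell with a vertical edge (horizontal gradient) and one with a
# horizontal edge (vertical gradient).
vertical_edge = [[0, 0, 1, 1]] * 4
horizontal_edge = [[0, 0, 0, 0], [0, 0, 0, 0], [1, 1, 1, 1], [1, 1, 1, 1]]
descriptor = hog_descriptor([vertical_edge, horizontal_edge])
```

In the framework of this paper, a vector of this kind would be computed for each candidate sub-image and passed to the SVM classifier.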

In the field of machine learning, SVM is a typical classifier that achieves classification by constructing an optimal decision hyperplane that maximizes the distance to the samples closest to it. For linearly inseparable cases, the general principle is to map the samples from the original sample space to a higher-dimensional space in which they become linearly separable. It has been proved mathematically that if the original space is finite-dimensional, there must be a higher-dimensional feature space in which the samples are linearly separable.
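The idea of mapping samples to a higher-dimensional space can be shown with a toy example. This sketch does not train an SVM: the data, the mapping x → (x, x²), and the hand-picked hyperplane are all hypothetical choices made only to illustrate that a set that no single threshold can split becomes linearly separable after lifting.

```python
from typing import List, Sequence, Tuple


def separable_by_threshold(xs: Sequence[float], ys: Sequence[int]) -> bool:
    """True if one threshold on x splits the two classes.

    Along a line, that holds exactly when the labels, read in order of
    increasing x, change at most once.
    """
    ordered = [y for _, y in sorted(zip(xs, ys))]
    changes = sum(1 for a, b in zip(ordered, ordered[1:]) if a != b)
    return changes <= 1


def lift(x: float) -> Tuple[float, float]:
    """Map a 1-D sample into the 2-D feature space (x, x^2)."""
    return (x, x * x)


def linear_decision(point: Sequence[float], weights: Sequence[float],
                    bias: float) -> int:
    """Sign of w . p + b, reported as +1 or -1."""
    score = sum(w * p for w, p in zip(weights, point)) + bias
    return 1 if score >= 0 else -1


# Outer samples are class +1, inner samples are class -1.
samples = [-2.0, -1.5, -0.5, 0.0, 0.5, 1.5, 2.0]
labels = [1, 1, -1, -1, -1, 1, 1]

# Hand-picked hyperplane x^2 = 1.25 in the lifted space (not trained).
predictions: List[int] = [
    linear_decision(lift(x), (0.0, 1.0), -1.25) for x in samples
]
```

An actual SVM would choose the separating hyperplane by maximizing the margin, usually through a kernel function rather than an explicit mapping.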

With the development of deep learning, most common target detection methods are now based on CNNs, which need large training sets and are costly and time-consuming to train [29,30]. To build a simple and fast target detection system, this paper adopts a machine-learning-based SVM to detect workers, which suits the limited and changeable computing resources available on site.

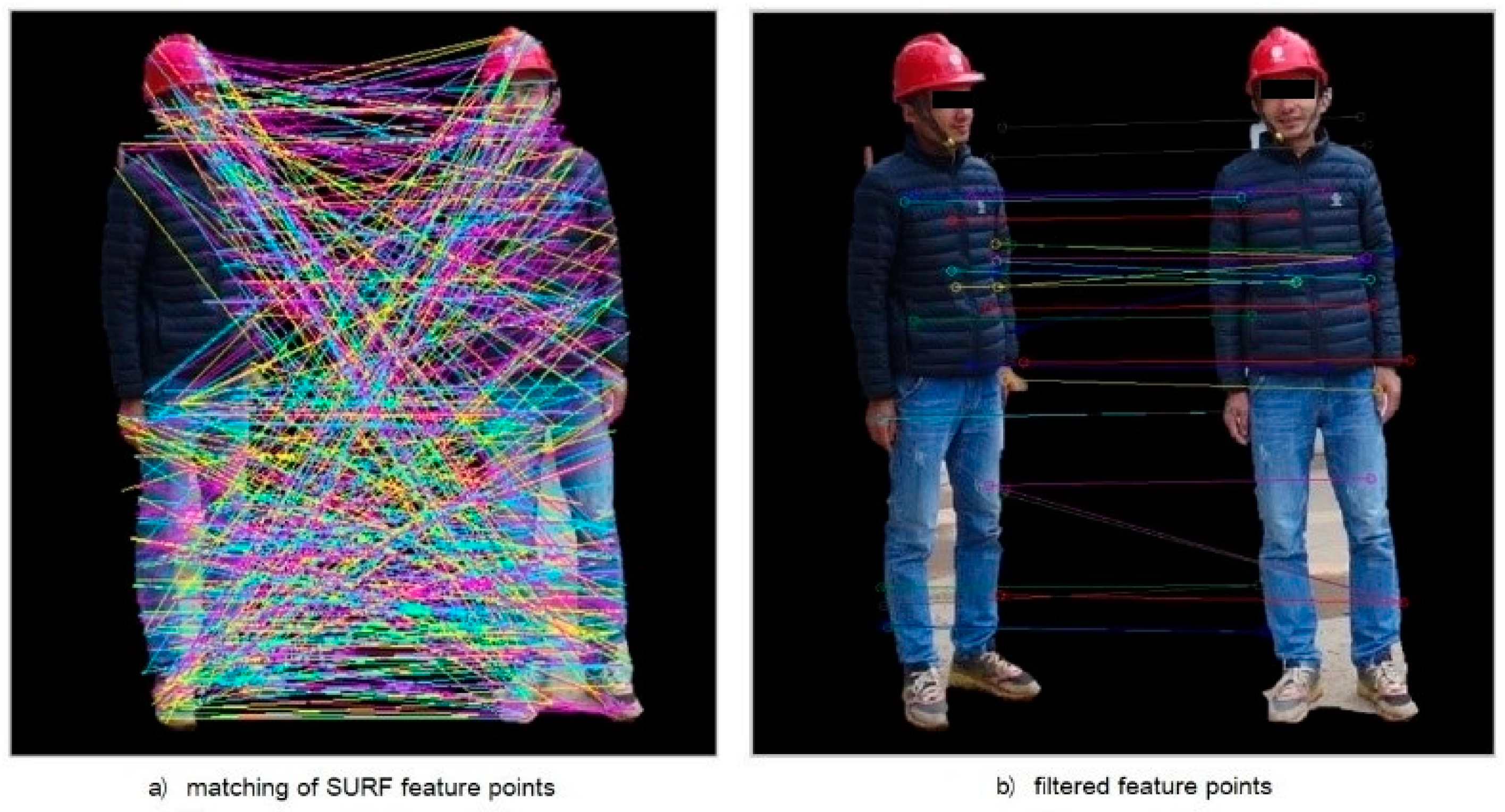

2.3. SURF Feature

The SURF feature is a robust method for detecting and describing local feature points in images, operating on specific pixel points in the image. Besides being efficient, the SURF algorithm matches better than other matching algorithms under pronounced lighting changes, which are frequent on construction sites. Therefore, the SURF algorithm meets the requirements of the proposed lightweight framework [31]. SURF uses the Hessian matrix to identify mutation points in preparation for feature extraction. The Hessian matrix is a square matrix composed of the second partial derivatives of a multivariate function and describes the local curvature of the function.

The Hessian matrix of the image is given in Formula (4). The larger the determinant of the H matrix, the larger the change in local curvature at the corresponding point, and a point with greater local curvature is more likely to be a corner point. Therefore, candidate feature points are selected with the discriminant in Formula (5), which compares the determinant of the H matrix with a threshold set for distinguishing candidate feature points.

Because the image is a discrete function of two variables, the pixel values in a small local area may contain mutations (noise points, noise spots, etc.), so the image in this small local area is often not smooth. Such abrupt changes of pixel value interfere with the information provided by the image and affect the determination of candidate feature points (Det(H) of a noise point is large, but the point is not a corner point). To improve consistency with the mathematical model and the accuracy of the algorithm, the image is usually smoothed (blurred) before numerical analysis, removing noise points, noise spots, and other interfering information. A common image smoothing method is to convolve the whole image with a Gaussian kernel, as expressed in Formula (6).

For the image, the H matrix after Gaussian smoothing is given in Formula (7). Accordingly, the discriminant of the H matrix for feature point discrimination becomes Formula (8). In practice, so that the integral image can be used to speed up computation, the SURF algorithm approximates the Gaussian filter with a box filter. To balance the error introduced by the box filter approximation, a term of the discriminant is multiplied by the coefficient 0.9, so the discriminant becomes Formula (9).
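The two ingredients mentioned above, the integral image and the weighted discriminant, can be sketched as follows. The code assumes the convention of the original SURF formulation, in which the 0.9 weight is applied to the mixed second-derivative response before squaring; whether Formula (9) is written in exactly this way is an assumption. The box-filter layouts that produce the responses `dxx`, `dyy`, and `dxy` are omitted, and all function names are hypothetical.

```python
from typing import List, Sequence


def integral_image(img: Sequence[Sequence[float]]) -> List[List[float]]:
    """ii[y][x] is the sum of img over rows < y and columns < x."""
    h, w = len(img), len(img[0])
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def box_sum(ii: Sequence[Sequence[float]],
            x0: int, y0: int, x1: int, y1: int) -> float:
    """Sum over columns x0..x1-1 and rows y0..y1-1 using four lookups."""
    return ii[y1][x1] - ii[y0][x1] - ii[y1][x0] + ii[y0][x0]


def hessian_response(dxx: float, dyy: float, dxy: float,
                     weight: float = 0.9) -> float:
    """Approximate determinant of the Hessian with a weighted mixed term."""
    return dxx * dyy - (weight * dxy) ** 2


def is_candidate(dxx: float, dyy: float, dxy: float,
                 threshold: float) -> bool:
    """Keep a point as a candidate when its response exceeds the threshold."""
    return hessian_response(dxx, dyy, dxy) > threshold


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
ii = integral_image(image)
lower_right = box_sum(ii, 1, 1, 3, 3)  # sums the block 5, 6, 8, 9
```

Because every box sum costs four lookups regardless of box size, the filter responses can be evaluated at any scale in constant time per pixel.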

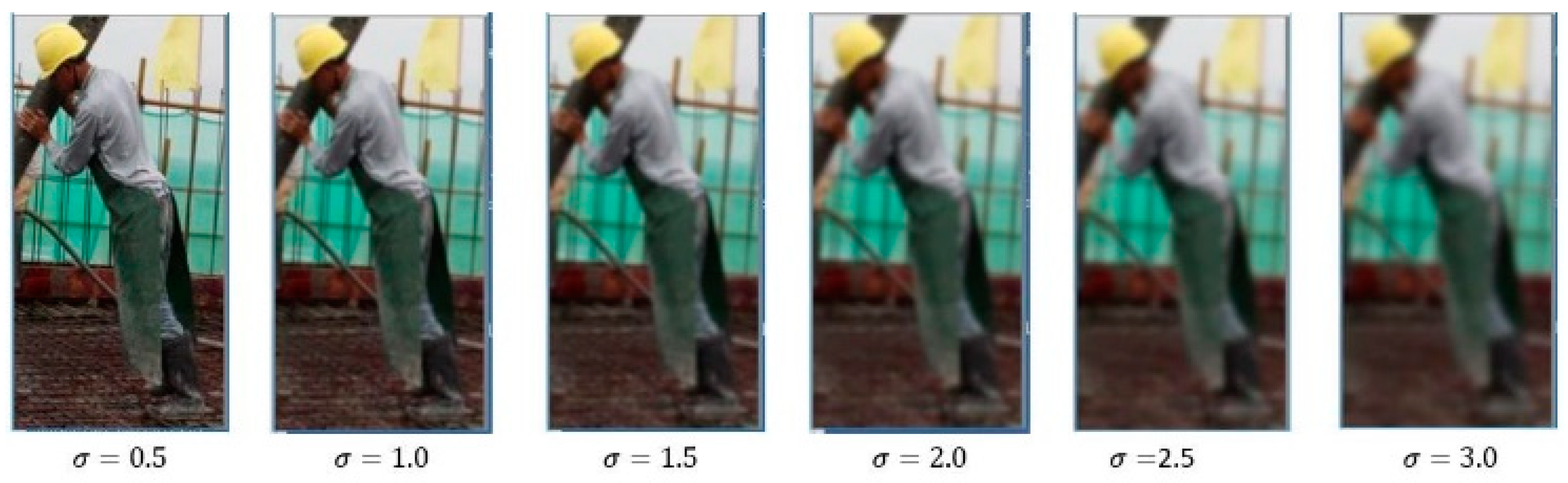

To make feature points scale-invariant (after the image is scaled to different sizes, the pixel points at the same position can still be identified as feature points), the scale space of the image must be constructed. The image is scaled to different sizes by a fixed ratio, and the image pyramid formed by these scaled images serves as the scale space. Conversely, different sizes of the same image can be regarded as different degrees of blurring of that image. Gaussian smoothing blurs the image, and the degree of blurring is controlled by σ. Different blurring degrees can therefore be constructed by adjusting σ to obtain the scale space of the image, as shown in Figure 4.
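The relation between σ and the degree of blurring can be illustrated with a one-dimensional sketch. A 1-D signal is used only for brevity (a 2-D Gaussian blur can be applied as one pass along rows and one along columns); the kernel radius of 3σ, the replicated border, and the function names are assumptions made for the example.

```python
import math
from typing import List, Optional, Sequence


def gaussian_kernel(sigma: float, radius: Optional[int] = None) -> List[float]:
    """Sampled Gaussian weights, normalized to sum to 1."""
    if radius is None:
        radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_1d(signal: Sequence[float], sigma: float) -> List[float]:
    """Convolve the signal with a Gaussian kernel, replicating the border."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * signal[j]
        out.append(acc)
    return out


def scale_space(signal: Sequence[float],
                sigmas: Sequence[float]) -> List[List[float]]:
    """One blurred copy of the signal per sigma, from fine to coarse."""
    return [blur_1d(signal, s) for s in sigmas]


# A single bright spike: larger sigma spreads it out and lowers its peak.
spike = [0.0] * 10 + [1.0] + [0.0] * 10
levels = scale_space(spike, [1.0, 2.0, 4.0])
peaks = [max(level) for level in levels]
```

Each level keeps the original resolution and differs only in how strongly it is blurred, which mirrors the idea of building the scale space by adjusting σ.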

The directional invariance of feature points means that, in photos of the same scene taken under different shooting conditions, the feature points keep a consistent direction across the different pictures. To construct directional invariance, the Haar wavelet responses in the circular neighborhood of each feature point are accumulated. Within this circular neighborhood, starting from the horizontal axis and using a step size of 0.2 radians, the sums of the horizontal and vertical Haar wavelet responses of all points in each sector subregion are calculated. The direction of the sector with the largest sum of wavelet responses is then selected as the principal direction of the feature point. Once the principal direction has been constructed, a feature point should have the same principal direction on different images, so that the direction of the feature point is invariant.
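The sector search described above can be sketched as follows. The sector width of π/3 is taken from the original SURF formulation and is an assumption here, as are the function name and the representation of the responses as plain (dx, dy) pairs; the Gaussian weighting of responses used in full implementations is omitted.

```python
import math
from typing import Sequence, Tuple


def dominant_orientation(responses: Sequence[Tuple[float, float]],
                         window: float = math.pi / 3,
                         step: float = 0.2) -> float:
    """Principal direction in [0, 2*pi) from Haar responses (dx, dy).

    A sector of angular width `window` is rotated in increments of `step`;
    the responses falling inside it are summed, and the sector with the
    longest summed vector defines the orientation.
    """
    two_pi = 2 * math.pi
    angles = [math.atan2(dy, dx) % two_pi for dx, dy in responses]
    best_norm, best_angle = -1.0, 0.0
    start = 0.0
    while start < two_pi:
        sum_dx = sum_dy = 0.0
        for (dx, dy), angle in zip(responses, angles):
            # Angular offset from the sector start, wrapped into [0, 2*pi).
            if (angle - start) % two_pi < window:
                sum_dx += dx
                sum_dy += dy
        norm = math.hypot(sum_dx, sum_dy)
        if norm > best_norm:
            best_norm = norm
            best_angle = math.atan2(sum_dy, sum_dx) % two_pi
        start += step
    return best_angle


# Five consistent responses along 45 degrees plus one weak outlier.
sample_responses = [(1.0, 1.0)] * 5 + [(-0.2, 0.1)]
orientation = dominant_orientation(sample_responses)
```

Rotating every response by the same angle rotates the returned orientation by that angle, which is the property that makes the descriptor comparable across differently oriented views.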

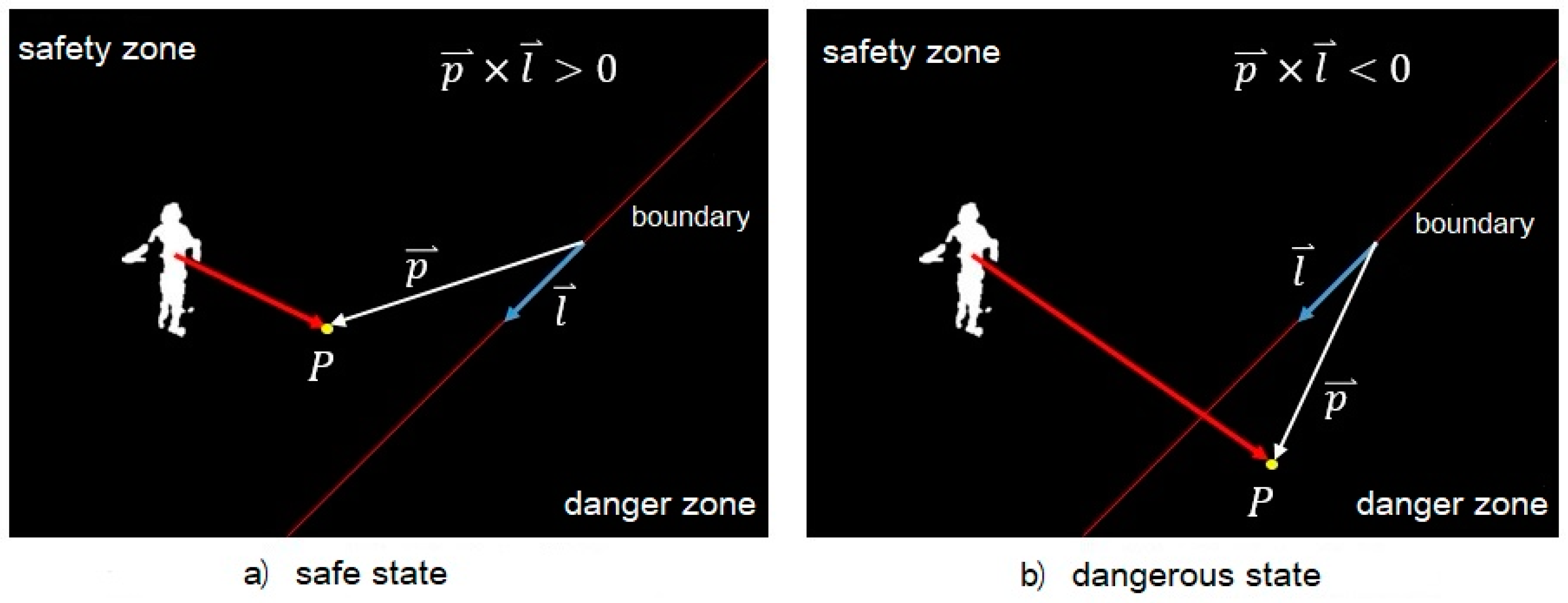

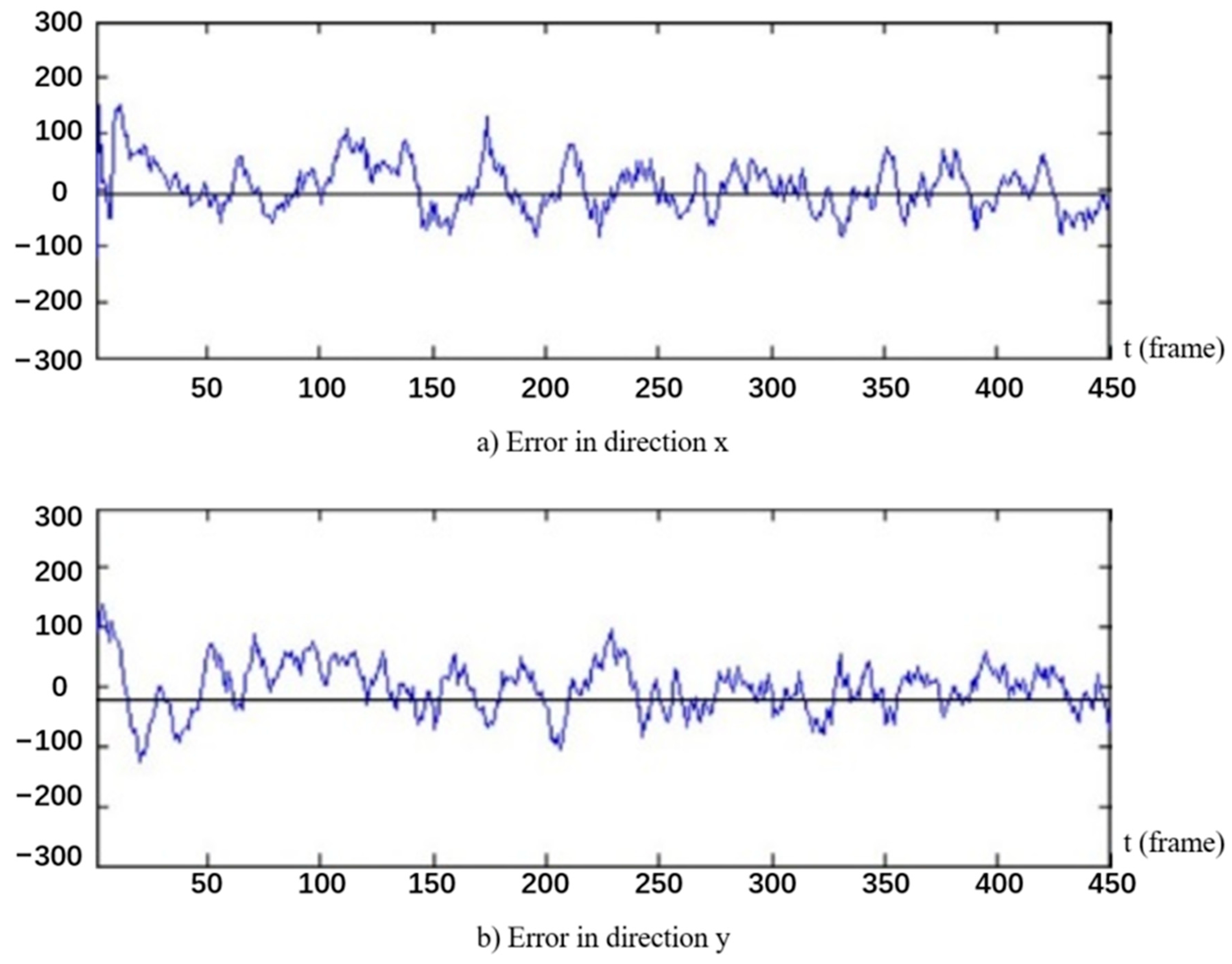

2.4. Kalman Filtering

The location prediction of construction workers refers to predicting workers’ movement trajectories by establishing a model of their dynamic movement. The Kalman filter is a commonly used method for predicting dynamic systems. In linear stochastic systems with interference, the Kalman filter can be used to predict the future state of the system, and the error of the predicted value is smaller than that of the direct observation. To apply the Kalman filter, a linear stochastic system model of the target must be established in advance, which consists of state modeling and measurement modeling. State modeling determines the state transition equation, a recursive function of the state over time, while measurement modeling determines the measurement equation, a function of the state. In this paper, the state transition equation is a set of recursive linear equations in the worker coordinate positions with process noise, and the measurement equation is a set of linear equations in the worker coordinate positions with measurement noise.

After the linear stochastic system is established, an arbitrary initial state is assumed for the system. The value of the state is obtained from the sensor, and the state transition equation is used to update the state of the system. The updated state variables and the measurement equation are then used to obtain the measurements of the target. Moreover, Kalman filtering reduces the errors generated by system noise by combining all historical observations.

To apply the Kalman filter, state modeling is needed to determine the state transition equation. In this paper, the Singer acceleration model is used to establish the motion state equation of construction workers [32,33]. The Singer model assumes that the target acceleration is a stationary first-order Markov process with zero mean, which yields the linear time-invariant system shown in Formula (10). In Formula (10), the parameter σ² is the instantaneous change of acceleration and is treated as a random variable.

Formula (10) involves the first derivative of the acceleration vector, the inverse of the maneuver time τ_m (10~20 s), the acceleration vector itself, and a zero-mean Gaussian white noise vector. The discrete form of Formula (10) is Formula (11).

Formula (11) contains a sequence of zero-mean white noise vectors and a constant parameter. With x as the state variable, the state space of the continuous-time Singer model of Formula (10) is expressed as Formula (12), and the equivalent discrete form of Formula (11) is obtained as Formula (13). In Formula (13), T represents the unit time interval.
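For orientation, the discrete state transition of the Singer model can be sketched in code. The matrix below is the standard textbook form for a state ordered as position, velocity, acceleration, with α the inverse of the maneuver time; that Formula (13) uses exactly this ordering and notation is an assumption, and the function names are hypothetical. The process noise covariance is omitted.

```python
import math
from typing import List, Sequence


def singer_transition(alpha: float, T: float) -> List[List[float]]:
    """Discrete Singer transition matrix for [position, velocity, acceleration].

    alpha is the inverse of the maneuver time; T is the sampling interval.
    """
    e = math.exp(-alpha * T)
    return [
        [1.0, T, (alpha * T - 1.0 + e) / (alpha * alpha)],
        [0.0, 1.0, (1.0 - e) / alpha],
        [0.0, 0.0, e],
    ]


def propagate(state: Sequence[float], alpha: float, T: float) -> List[float]:
    """Advance the state by one interval without process noise."""
    F = singer_transition(alpha, T)
    return [sum(F[i][j] * state[j] for j in range(3)) for i in range(3)]


# A maneuver time of 15 s lies inside the 10~20 s range quoted above.
alpha = 1.0 / 15.0
next_state = propagate([0.0, 1.0, 0.0], alpha, 1.0)
```

As α approaches zero the matrix tends to the constant-acceleration form with entries T²/2 and T in the last column, while a large α makes the acceleration decay quickly toward zero.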

The discrete-time linear stochastic dynamic system is described in Formula (14), in which k is the time index. The quantities in Formula (14) are the system state vector at time k, the measurement vector of the system state at time k, the system state transition matrix, the process evolution noise, the noise driving matrix, the measurement matrix, and the measurement noise. For the discrete-time linear stochastic dynamic system described by Formula (14), the one-step-ahead prediction of the measurement is expressed as Formula (15), assuming that all random variables are Gaussian. The corresponding one-step-ahead prediction error sequence is shown in Formula (16) and is called the innovation sequence.

If the random variables are non-Gaussian, the estimate can be obtained by the BLUE (Best Linear Unbiased Estimate) criterion, giving Formula (17). In this case, Formula (18) shows the corresponding prediction error sequence, which is called the pseudo-innovation sequence.

If the process noise and measurement noise of the discrete-time linear stochastic dynamic system described by Formula (14) are assumed to be independent processes, independent of each other and of the initial state, then the basic Kalman filtering formulas, Formulas (19) to (24), are valid for any loss function. Taking Formula (13) as the state equation of the discrete-time linear stochastic dynamic system described by Formula (14), the movement trajectory of construction workers can be predicted according to Formula (20), and the error of the predicted position is given by Formula (21).

- (1)
- (2) The predicted value of one step in advance.
- (3) The covariance matrix of the one-step prediction error. Formula (21) is expressed in terms of the prediction error.
- (4) The filter update value after the new measurement is obtained.
- (5) The corresponding filtering error covariance matrix of the filter update value. Formula (23) is expressed in terms of the filtering error.
- (6) The Kalman gain matrix at time k.
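The predict and update cycle listed above can be sketched for a single image axis. To keep the example short, it substitutes a two-state constant-velocity model for the three-state Singer model, measures position only, and uses a diagonal process noise; the class `Kalman1D`, its default parameters, and the helper `enters_zone` are hypothetical and are not the paper's implementation.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Kalman1D:
    """Constant-velocity Kalman filter for one coordinate (position, velocity)."""
    T: float = 1.0    # frame interval
    q: float = 1e-3   # process noise variance added to each state (assumed)
    r: float = 1.0    # measurement noise variance (assumed)
    x: List[float] = field(default_factory=lambda: [0.0, 0.0])
    P: List[List[float]] = field(
        default_factory=lambda: [[1e3, 0.0], [0.0, 1e3]])

    def predict(self) -> float:
        """One-step-ahead prediction: x <- F x, P <- F P F^T + Q."""
        p, v = self.x
        T = self.T
        self.x = [p + T * v, v]
        (a, b), (c, d) = self.P
        self.P = [
            [a + T * (b + c) + T * T * d + self.q, b + T * d],
            [c + T * d, d + self.q],
        ]
        return self.x[0]

    def update(self, z: float) -> float:
        """Correct the prediction with a new position measurement z."""
        (a, b), (c, d) = self.P
        innovation = z - self.x[0]   # measurement minus predicted measurement
        s = a + self.r               # innovation variance H P H^T + R
        k0, k1 = a / s, c / s        # Kalman gain K = P H^T / S
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # P <- (I - K H) P
        self.P = [
            [(1 - k0) * a, (1 - k0) * b],
            [c - k1 * a, d - k1 * b],
        ]
        return self.x[0]


def enters_zone(predicted: float, zone_min: float, zone_max: float) -> bool:
    """Flag a predicted coordinate that falls inside a danger interval."""
    return zone_min <= predicted <= zone_max


# A worker moving 2 pixels per frame, observed for 20 frames without noise.
kf = Kalman1D()
for k in range(20):
    kf.predict()
    kf.update(2.0 * k)
next_position = kf.predict()
warning = enters_zone(next_position, 39.0, 45.0)
```

Running one such filter per image axis, and testing the predicted point against the danger zone before the worker reaches it, is one way the advance warning described in this paper could be triggered.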

6. Conclusions

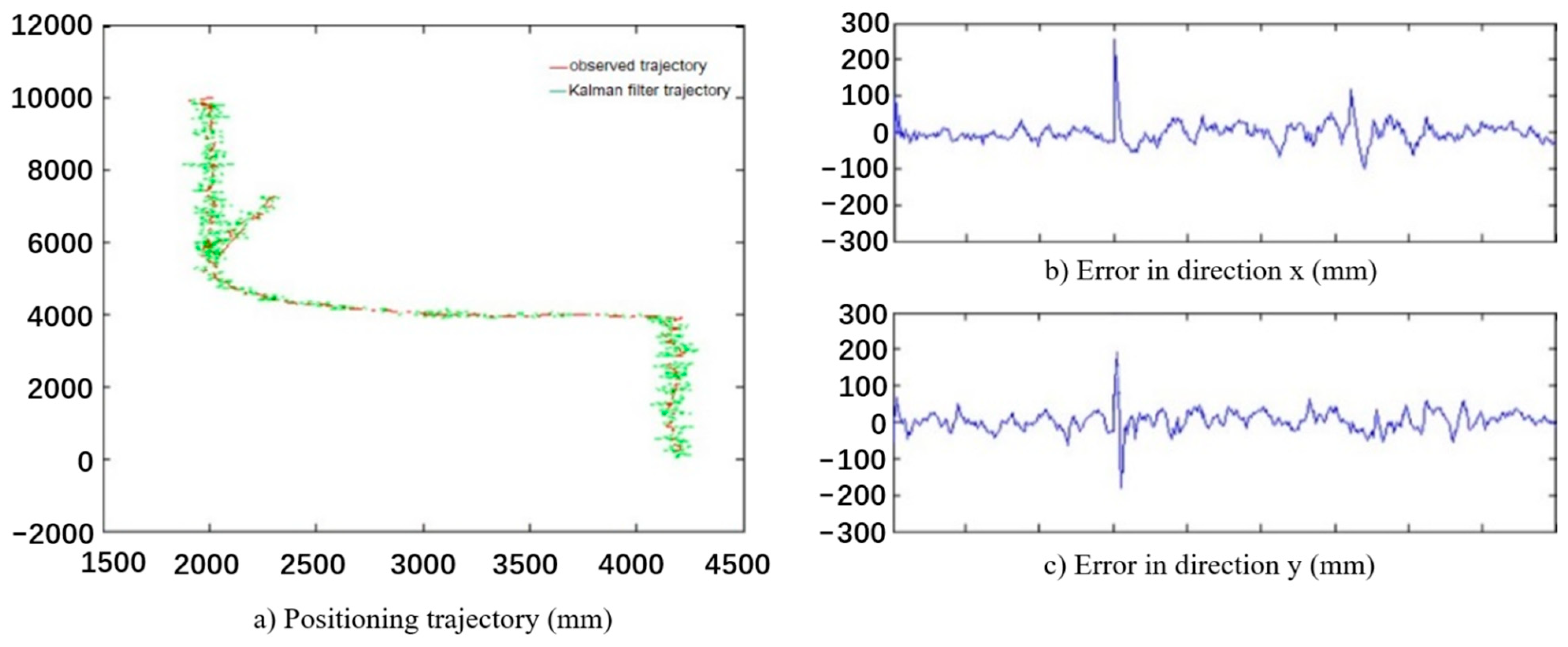

After reviewing the literature on the safety management of on-site workers, this paper proposed a method that fills the knowledge gap in multi-angle worker detection and implemented a pre-warning method for construction workers’ dangerous status. For worker detection on the construction site, the improved GMM was adopted to extract the moving foreground, reducing the influence of sudden changes in the site environment on the algorithm. Meanwhile, the HOG + SVM framework was used to detect and classify the sub-images of moving objects, thereby detecting workers on the construction site. The detection results indicate that the algorithm can accurately detect construction workers in the video. In addition, the SURF algorithm was applied to fuse information on construction workers from multiple angles, enabling worker detection across multiple cameras. Finally, the Kalman filter was applied to estimate the movement trajectory of workers on the construction site and determine their safety status. The locations of construction workers were tracked and predicted, and the results show that the algorithm can track and predict workers’ positions.

This study has some drawbacks that need further research. In the fusion of construction workers from multiple angles, the program is sensitive to camera resolution, and detection is limited when workers are far from the camera. The study also does not provide a device that can deliver an early warning signal. Therefore, future research should investigate physical equipment (such as a vibrating safety helmet) to provide workers with an early warning.