Design and Preliminary Evaluation of a Tongue-Operated Exoskeleton System for Upper Limb Rehabilitation

Abstract

1. Introduction

2. Materials and Methods

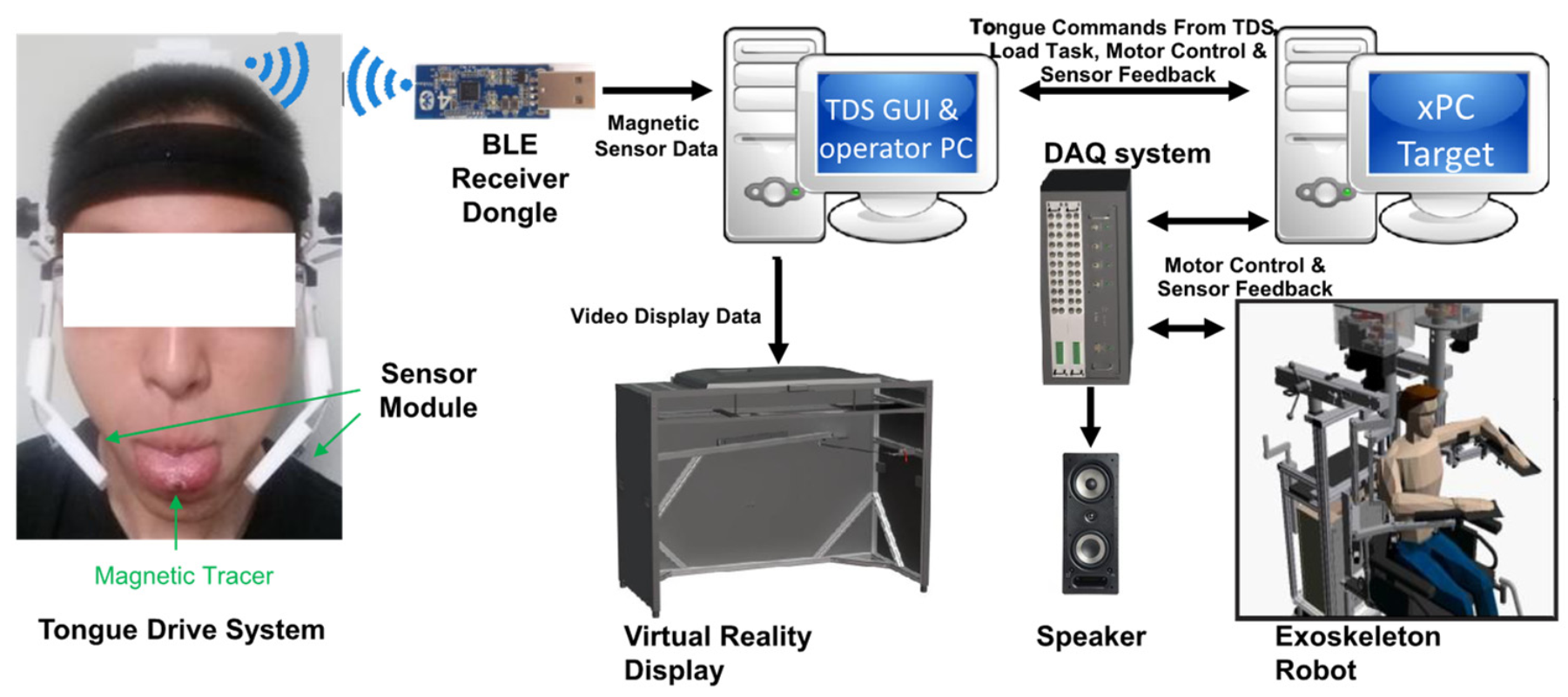

2.1. System Description

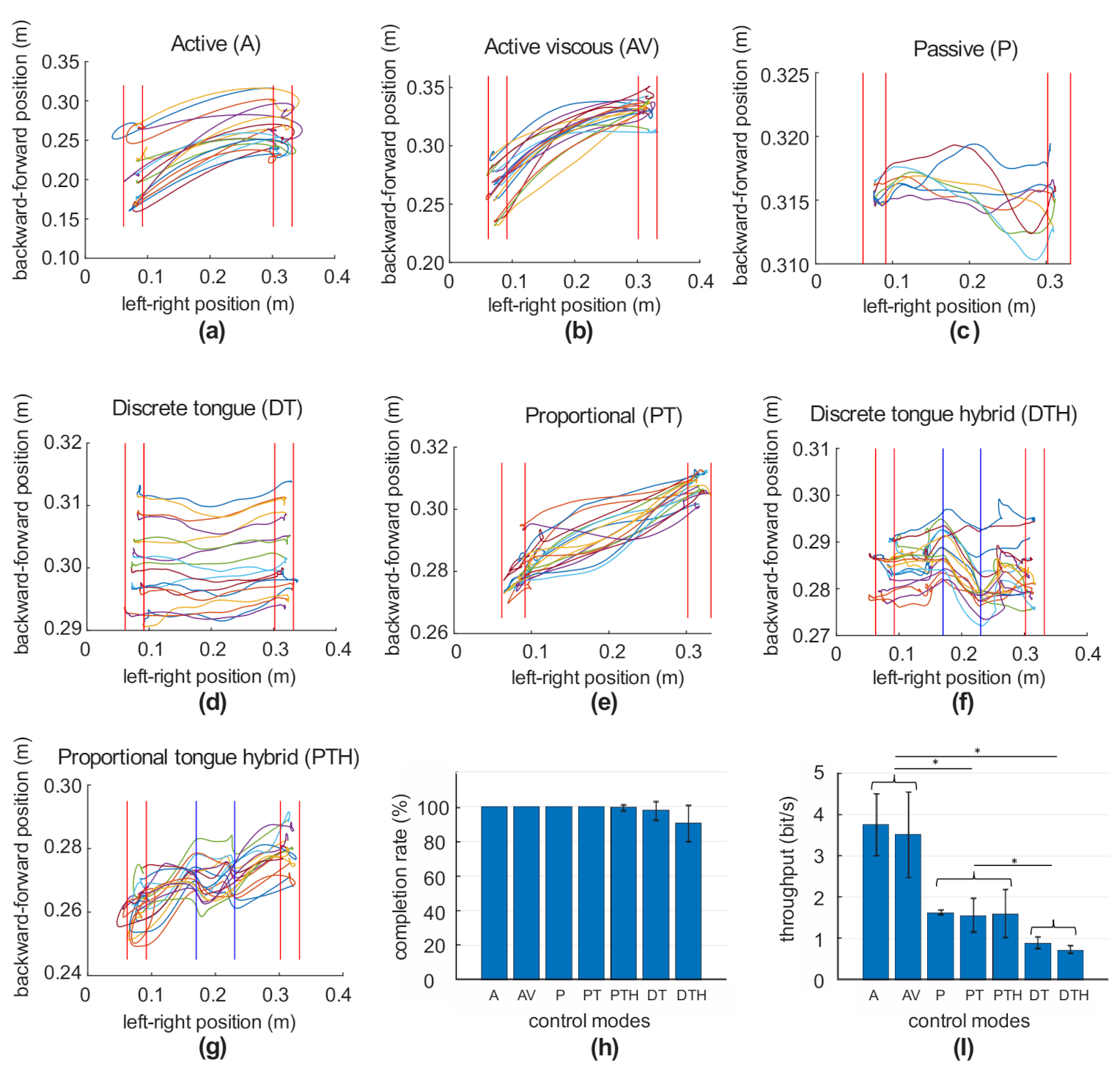

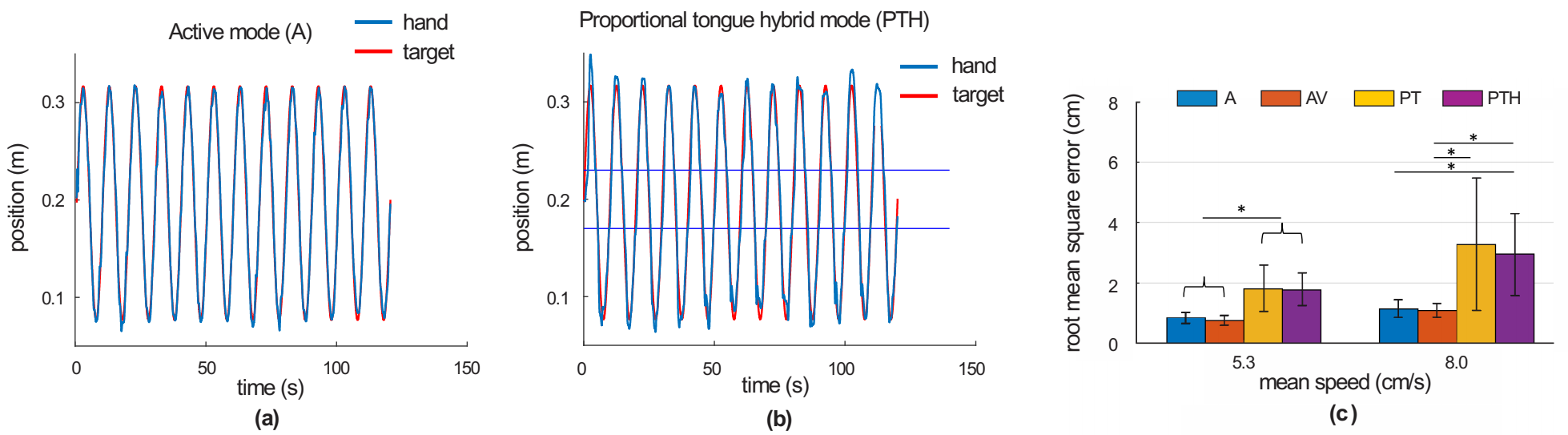

2.2. Tasks

2.3. Control Modes

2.4. Experimental Protocol

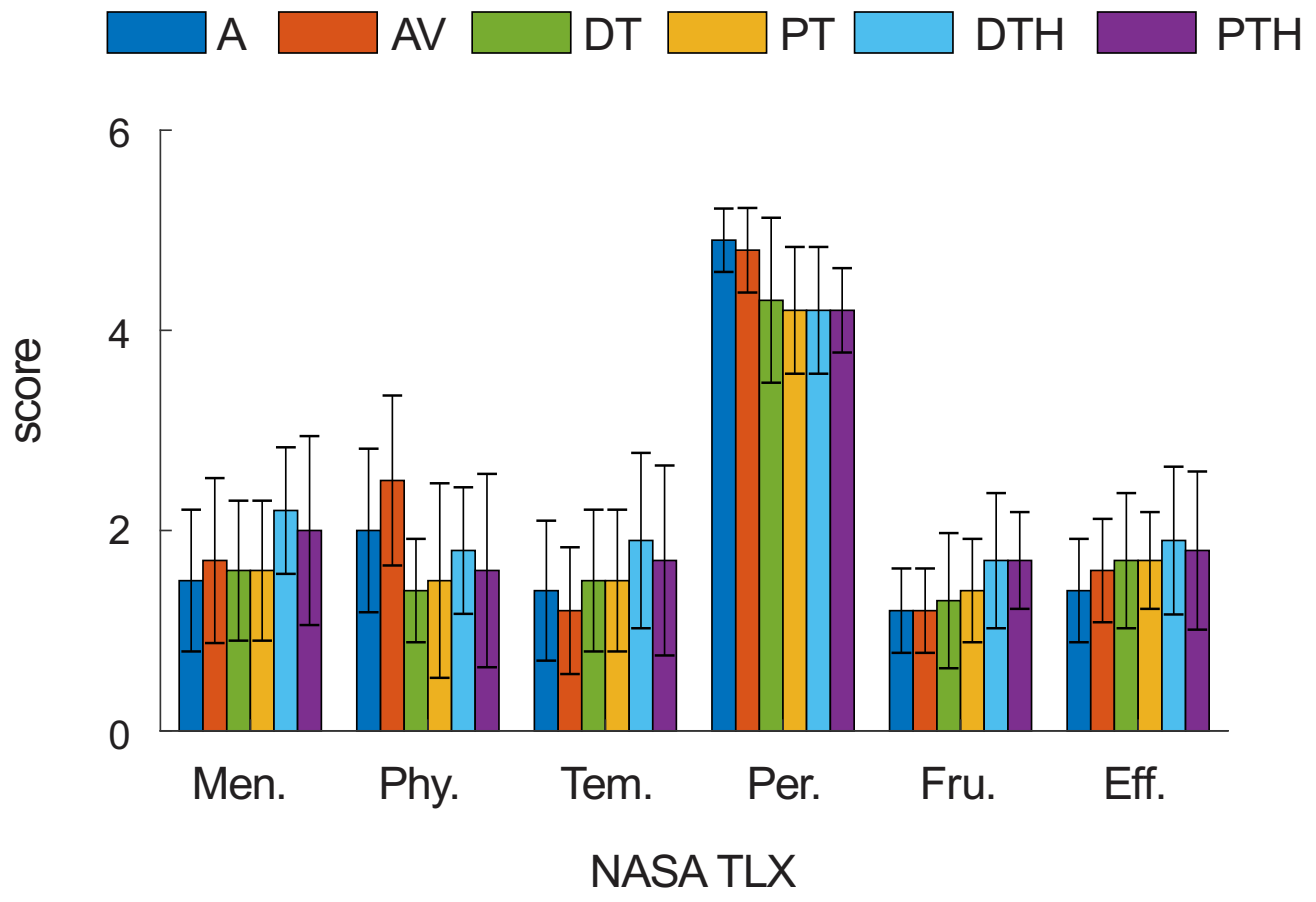

- Mental Demand: How much mental and perceptual activity was required? Was the task easy or demanding, simple or complex? 1 means low and 5 means high.

- Physical Demand: How much physical activity was required? Was the task easy or demanding, slack or strenuous? 1 means low and 5 means high.

- Temporal Demand: How much time pressure did you feel due to the pace at which the tasks or task elements occurred? Was the pace slow or rapid? 1 means low time pressure and 5 means high time pressure.

- Overall Performance: How successful were you in performing the task? How satisfied were you with your performance? 1 means not successful and 5 means successful.

- Frustration Level: How irritated, stressed, and annoyed versus content, relaxed, and complacent did you feel during the task? 1 means relaxed and 5 means stressed.

- Effort: How hard did you have to work (mentally and physically) to accomplish your level of performance? 1 means low effort and 5 means high effort.
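The six ratings above can be combined into a single workload score. This section does not state how the ratings were scored, so the sketch below assumes the unweighted "raw TLX" variant: the mean of the subscale ratings, without the pairwise-weighting step of the original NASA-TLX. The Overall Performance item is anchored the opposite way (5 = successful), so it is reverse-scored as 6 - rating to make a higher value mean higher workload on every subscale. The function name `raw_tlx` and the dictionary keys are hypothetical.

```python
from __future__ import annotations

# Hypothetical keys for the six questionnaire items listed above.
SUBSCALES = ("mental", "physical", "temporal", "performance", "frustration", "effort")


def raw_tlx(ratings: dict[str, int]) -> float:
    """Unweighted (raw) TLX score on the 1-5 scale used in this questionnaire.

    Assumption: all subscales are weighted equally; this is not necessarily
    the scoring used in the study.
    """
    missing = set(SUBSCALES) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    adjusted = []
    for name in SUBSCALES:
        value = ratings[name]
        if not 1 <= value <= 5:
            raise ValueError(f"{name} rating {value} is outside 1-5")
        # Performance is anchored in reverse (5 = successful), so flip it
        # to make 5 mean "high workload" like the other five items.
        adjusted.append(6 - value if name == "performance" else value)
    return sum(adjusted) / len(adjusted)


if __name__ == "__main__":
    example = {"mental": 3, "physical": 2, "temporal": 2,
               "performance": 4, "frustration": 1, "effort": 3}
    print(round(raw_tlx(example), 2))  # 2.17
```

Averaging keeps the result on the same 1 to 5 range as the individual items, which makes it easy to compare control modes rated on this questionnaire.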

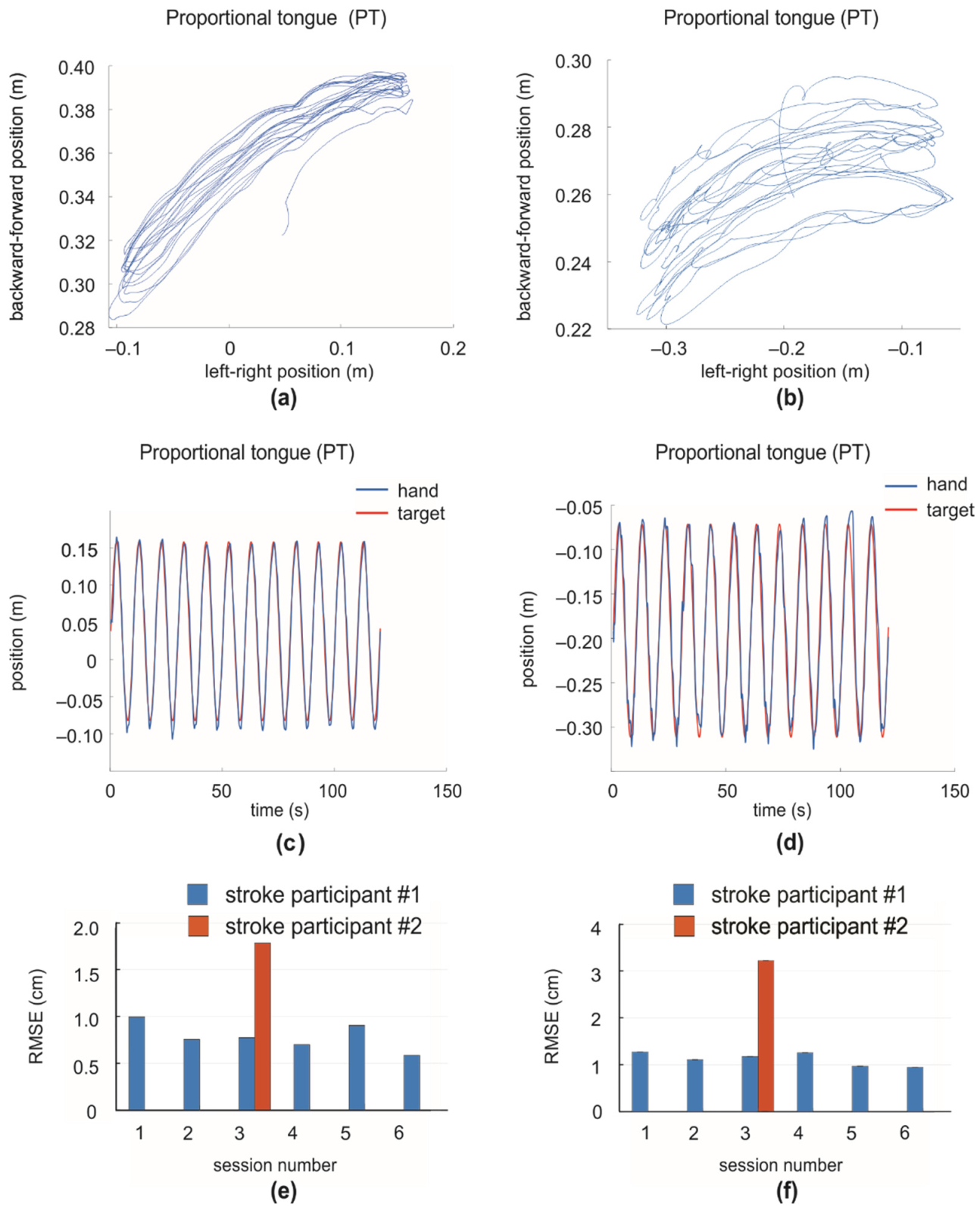

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Control Mode | Description |
|---|---|
| Discrete tongue (DT) | Discrete tongue commands control the robotic arm |
| Proportional tongue (PT) | Proportional tongue commands control the robotic arm |
| Discrete tongue hybrid (DTH) | Combination of discrete tongue control and active control |
| Proportional tongue hybrid (PTH) | Combination of proportional tongue control and active control |
| Active (A) | No robot assistance or resistance |
| Active with viscous resistance (AV) | Robot provides a velocity-dependent resistive load |
| Passive (P) | Robot controls arm movement |
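The Active with viscous resistance (AV) mode is described only as a velocity-dependent resistive load. One common way to realize such a load is linear viscous damping, in which each joint torque opposes that joint's angular velocity, tau_i = -b_i * omega_i. The sketch below assumes this model with an independent damping coefficient per joint; the joint ordering, the damping values, and the function names are illustrative assumptions, not the device's actual controller or gains.

```python
from __future__ import annotations

from typing import Sequence


def viscous_resistance_torques(
    joint_velocities: Sequence[float],  # rad/s, e.g. (shoulder, elbow)
    damping: Sequence[float],           # N*m*s/rad per joint; hypothetical gains
) -> list[float]:
    """Linear viscous load: each torque opposes its own joint's velocity."""
    if len(joint_velocities) != len(damping):
        raise ValueError("need exactly one damping coefficient per joint")
    if any(b < 0 for b in damping):
        raise ValueError("negative damping would inject energy (assist), not resist")
    return [-b * w for b, w in zip(damping, joint_velocities)]


def mechanical_power(torques: Sequence[float], joint_velocities: Sequence[float]) -> float:
    """Power delivered by the robot to the arm (W); <= 0 means the load absorbs energy."""
    return sum(t * w for t, w in zip(torques, joint_velocities))


if __name__ == "__main__":
    omega = [0.5, -1.0]  # shoulder moving one way, elbow the other
    tau = viscous_resistance_torques(omega, damping=[2.0, 1.0])
    print(tau)                           # [-1.0, 1.0]
    print(mechanical_power(tau, omega))  # -1.5
```

The power check shows why this mode can only resist: the delivered power is -sum(b_i * omega_i**2), which is never positive when every b_i is non-negative, so the load grows with movement speed and vanishes when the arm is still.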

| Subject | Sex | Age (years) | Upper Arm Length (cm) | Forearm + Hand Length (cm) |
|---|---|---|---|---|
| 1 | F | 26 | 27.6 | 39.5 |
| 2 | M | 44 | 28.6 | 47.4 |
| 3 | M | 23 | 28.5 | 42.9 |
| 4 | F | 23 | 27.5 | 35.0 |
| 5 | M | 24 | 30.6 | 41.9 |
| 6 | M | 59 | 31.6 | 50.1 |
| 7 | M | 23 | 28.6 | 41.0 |
| 8 | F | 24 | 28.1 | 37.7 |
| 9 | M | 24 | 31.0 | 45.6 |
| 10 | M | 30 | 30.6 | 42.4 |
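The participant table can be summarized with a few lines of standard-library Python. This is a convenience sketch, not an analysis reported in the study; the rows are transcribed from the table, while the tuple layout and the function name `summarize` are hypothetical.

```python
from statistics import mean, stdev

# (sex, age in years, upper arm length in cm, forearm + hand length in cm),
# transcribed from the table for subjects 1-10.
SUBJECTS = [
    ("F", 26, 27.6, 39.5),
    ("M", 44, 28.6, 47.4),
    ("M", 23, 28.5, 42.9),
    ("F", 23, 27.5, 35.0),
    ("M", 24, 30.6, 41.9),
    ("M", 59, 31.6, 50.1),
    ("M", 23, 28.6, 41.0),
    ("F", 24, 28.1, 37.7),
    ("M", 24, 31.0, 45.6),
    ("M", 30, 30.6, 42.4),
]


def summarize(rows):
    """Return group size, sex counts, and (mean, SD) of each numeric column."""
    summary = {
        "n": len(rows),
        "female": sum(1 for r in rows if r[0] == "F"),
        "male": sum(1 for r in rows if r[0] == "M"),
    }
    for index, label in ((1, "age_years"), (2, "upper_arm_cm"), (3, "forearm_hand_cm")):
        column = [r[index] for r in rows]
        # Sample standard deviation (n - 1 denominator).
        summary[label] = (mean(column), stdev(column))
    return summary


if __name__ == "__main__":
    for key, value in summarize(SUBJECTS).items():
        print(key, value)
```

Keeping the transcribed rows next to the summary code makes it straightforward to re-check the descriptive statistics if a table value is corrected.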

| Subject | Stroke Type | Sex | Affected Arm | Time Since Stroke (months) | Age (years) | FMA at Baseline | FMA at Start | FMA at End |
|---|---|---|---|---|---|---|---|---|
| 1 | Hemorrhagic | F | Right | 27 | 32 | 35/66 | 38/66 | 37/66 |
| 2 | Hemorrhagic | F | Left | 62 | 58 | 13/66 | 12/66 | 20/66 |

FMA: upper-extremity Fugl-Meyer Assessment motor score (maximum 66 points).
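The FMA columns give three assessments per participant. A small helper makes the point changes between them explicit. Reading "Baseline", "Start", and "End" as successive assessment points is an assumption, the names `parse_score` and `fma_changes` are hypothetical, and no clinical-significance threshold is implied by the computed differences.

```python
from __future__ import annotations


def parse_score(text: str) -> tuple[int, int]:
    """Split a score written as 'points/maximum', e.g. '38/66' -> (38, 66)."""
    points, maximum = (int(part) for part in text.split("/"))
    if not 0 <= points <= maximum:
        raise ValueError(f"score {text!r} is out of range")
    return points, maximum


# FMA at Baseline, Start, and End, transcribed from the table.
PATIENTS = {
    1: ("35/66", "38/66", "37/66"),
    2: ("13/66", "12/66", "20/66"),
}


def fma_changes(baseline: str, start: str, end: str) -> dict[str, int]:
    """Point changes between consecutive assessments and overall."""
    b, s, e = (parse_score(x)[0] for x in (baseline, start, end))
    return {"baseline_to_start": s - b, "start_to_end": e - s, "baseline_to_end": e - b}


if __name__ == "__main__":
    for subject, scores in PATIENTS.items():
        print(subject, fma_changes(*scores))
```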

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Z.; Prilutsky, B.I.; Butler, A.J.; Shinohara, M.; Ghovanloo, M. Design and Preliminary Evaluation of a Tongue-Operated Exoskeleton System for Upper Limb Rehabilitation. Int. J. Environ. Res. Public Health 2021, 18, 8708. https://doi.org/10.3390/ijerph18168708
