Depressive Mood Assessment Method Based on Emotion Level Derived from Voice: Comparison of Voice Features of Individuals with Major Depressive Disorders and Healthy Controls

Abstract

1. Introduction

2. Materials and Methods

2.1. Acquisition of Voices

2.2. Voice Emotion Analysis System

2.3. Algorithm

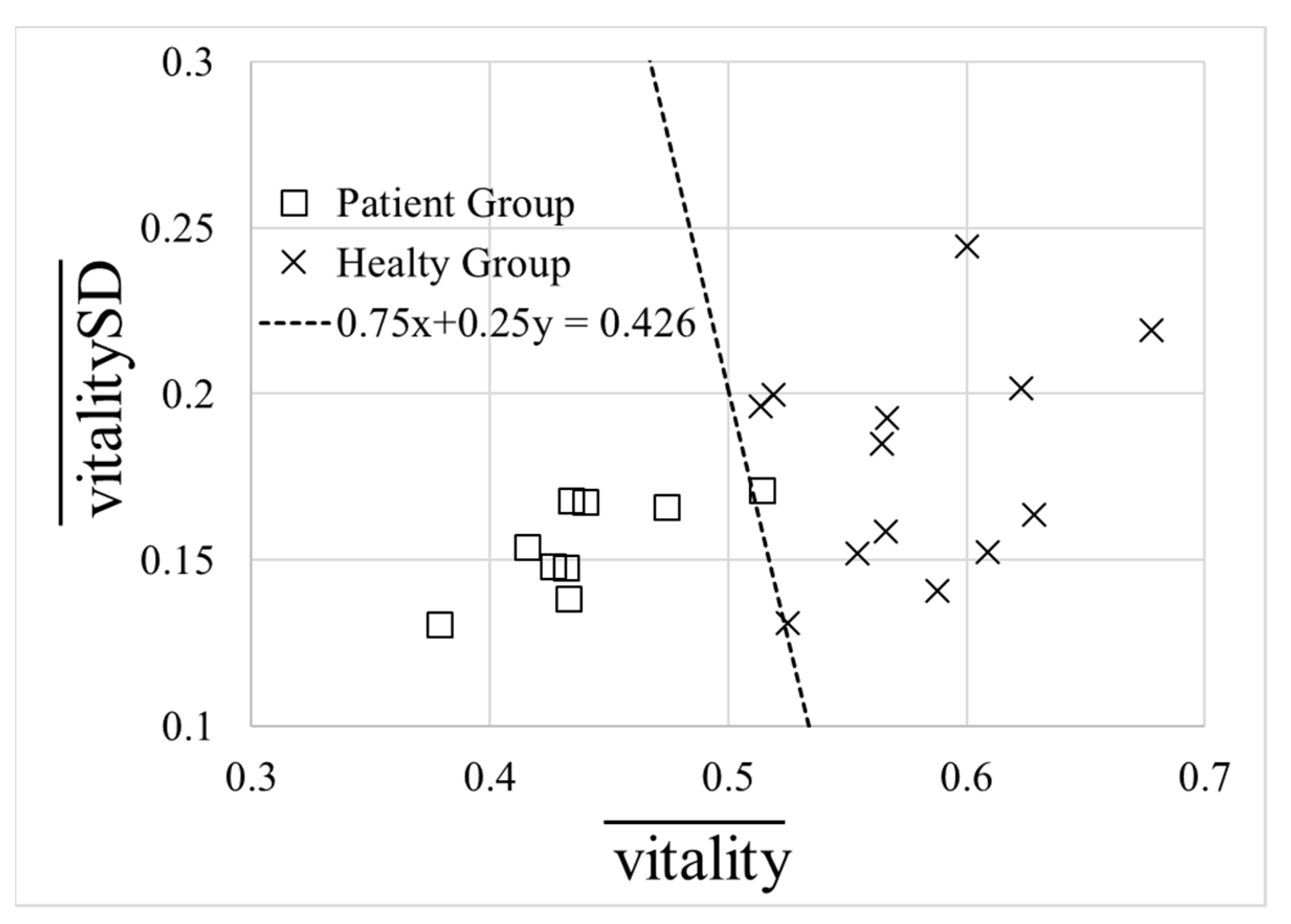

2.3.1. Vitality and Mental Activity

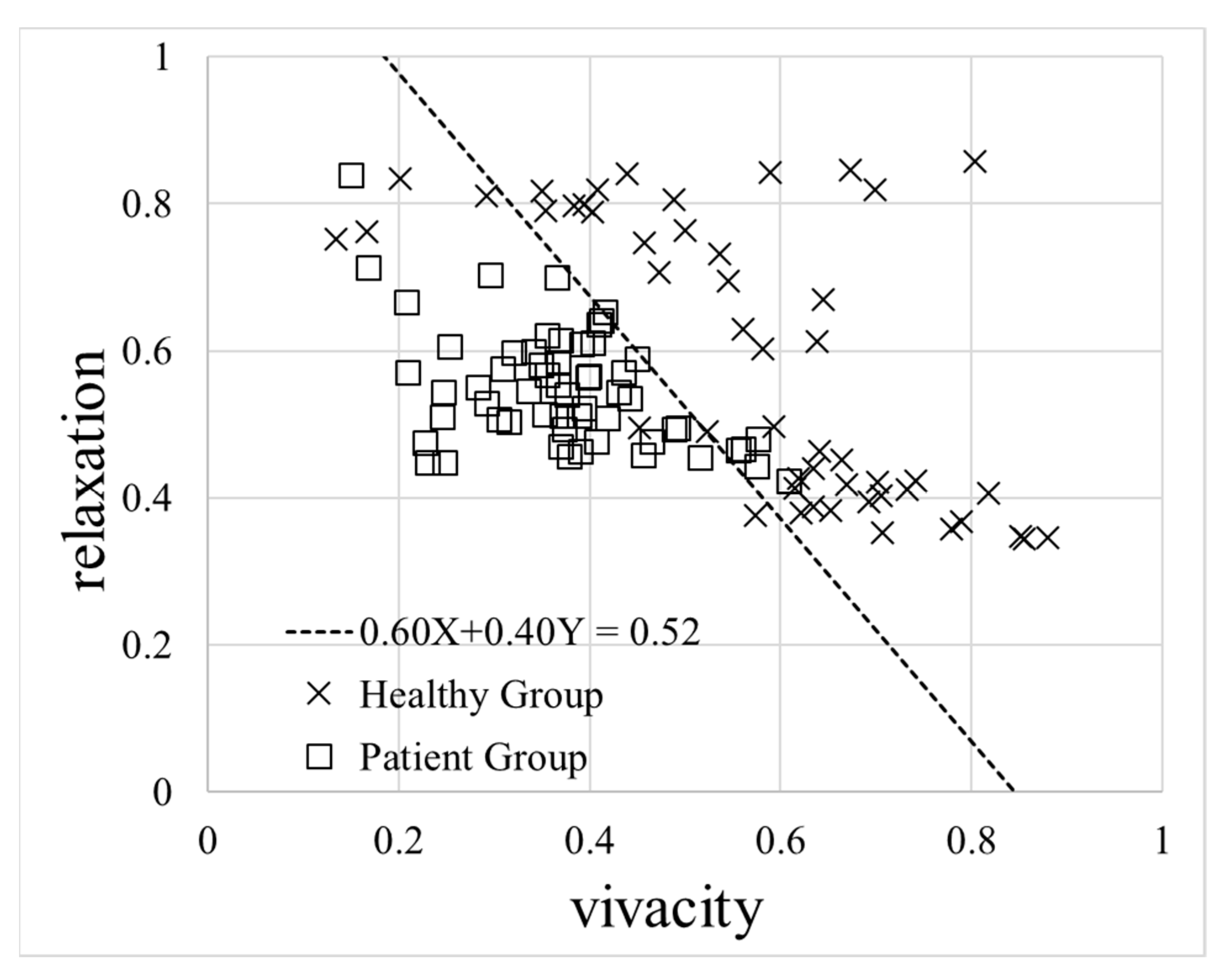

2.3.2. Vivacity and Relaxation

2.3.3. Vitality Calculation Algorithm

2.3.4. Mental Activity Calculation Algorithm

2.4. Analysis Method

3. Results

3.1. HAM-D Score

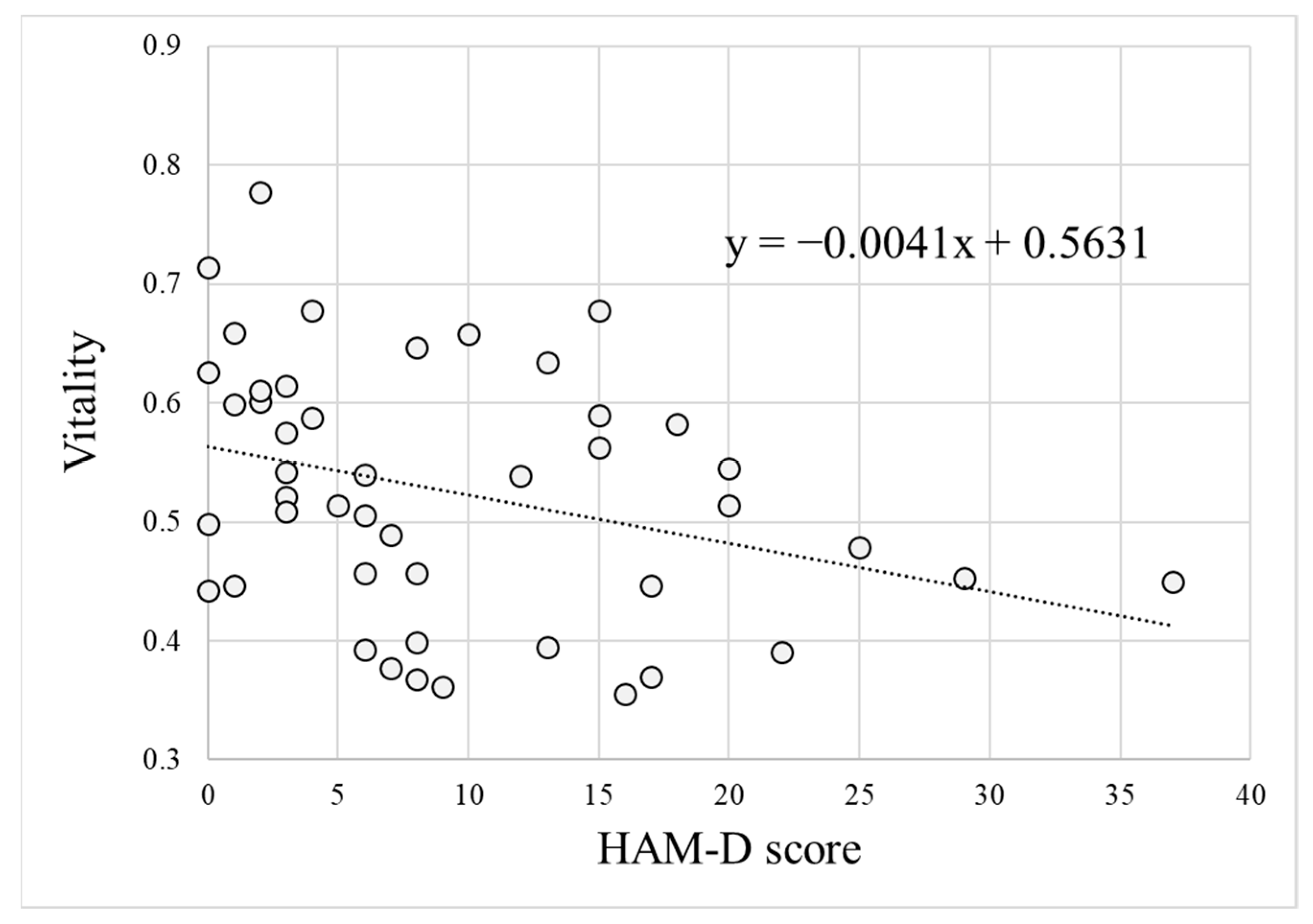

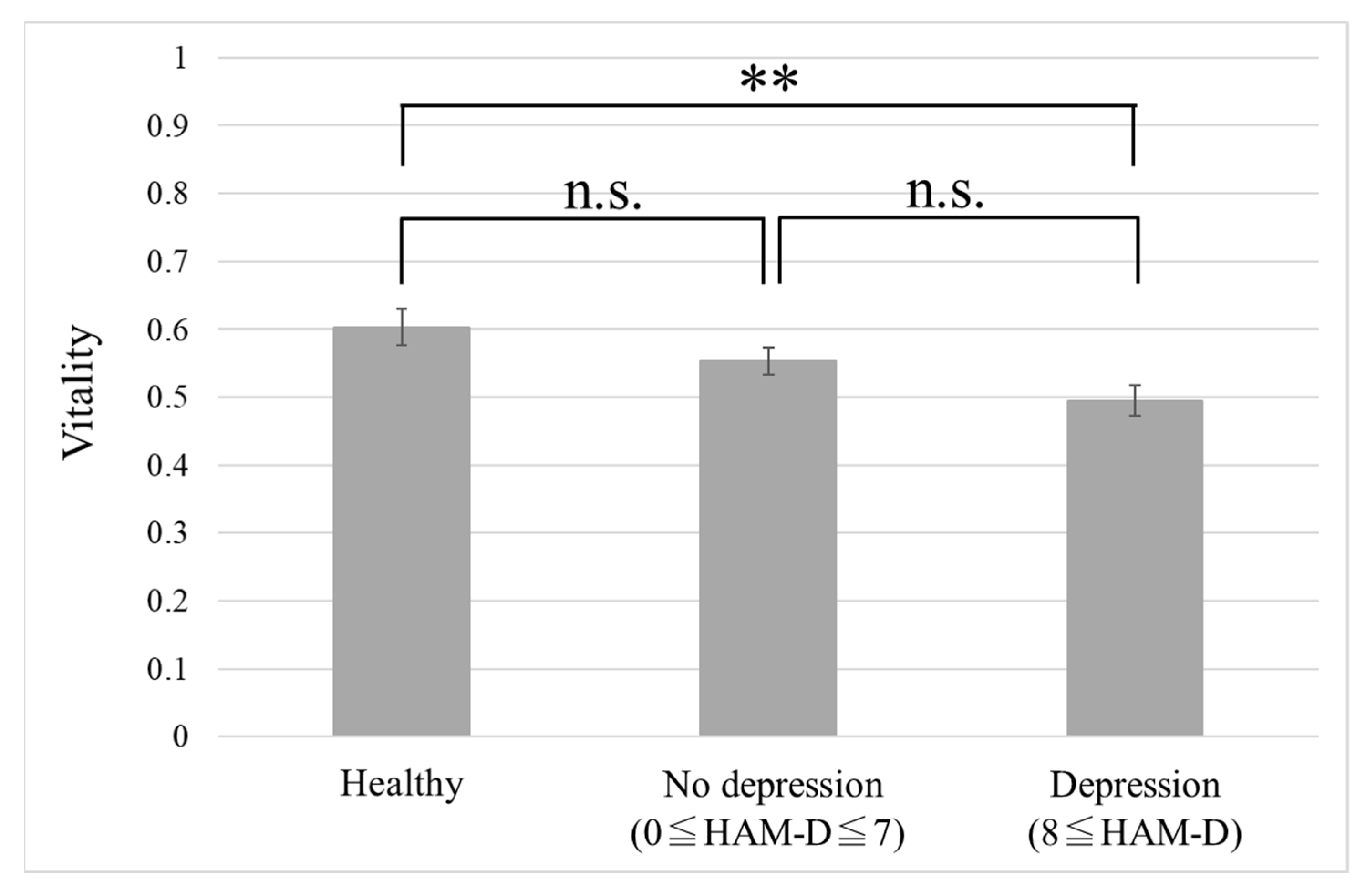

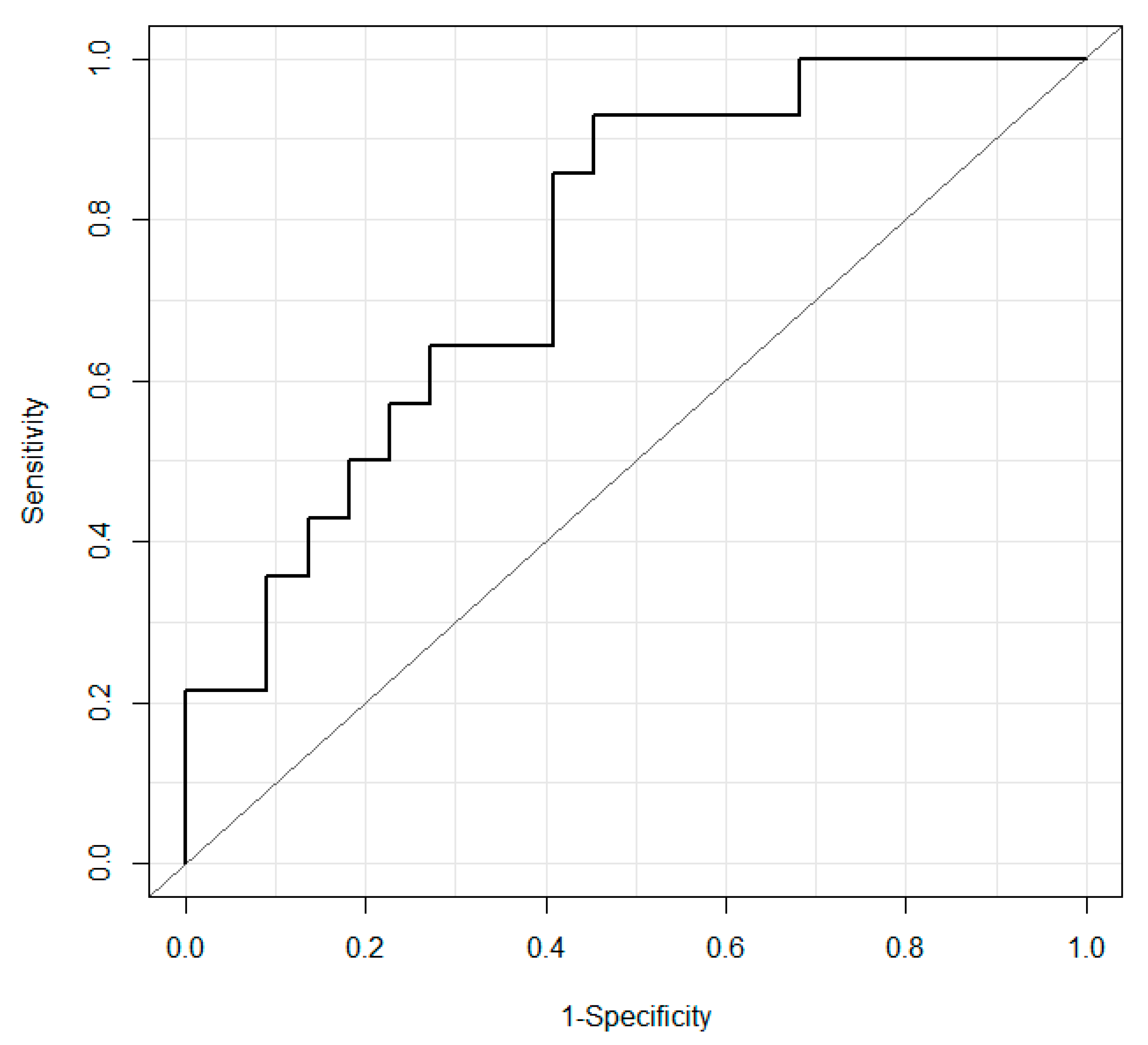

3.2. Performance Evaluation of Vitality

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Group | Sex | Number of Participants | Mean Age ± SD (years) | Number of Data |
|---|---|---|---|---|
| Healthy | Male | 9 | 42.9 ± 5.6 | 25 |
| Healthy | Female | 4 | 33.3 ± 15.4 | 25 |
| Major depression | Male | 4 | 54.0 ± 12.0 | 24 |
| Major depression | Female | 5 | 49.4 ± 15.4 | 34 |

| No. | Phrase in Japanese | Purpose (Meaning) |
|---|---|---|
| 1 | I-ro-ha-ni-ho-he-to | Non-emotional (no meaning, like “a-b-c”) |
| 2 | Honjitsu ha seiten nari | Non-emotional (It is fine today) |
| 3 | Tsurezurenaru mama ni | Non-emotional (Having nothing to do) |
| 4 | Wagahai ha neko dearu | Non-emotional (I am a cat) |
| 5 | Mukashi aru tokoro ni | Non-emotional (Once upon a time, there lived) |
| 6 | A-i-u-e-o | Check pronunciation of vowel sounds (no meaning, like “a-b-c”) |
| 7 | Ga-gi-gu-ge-go | Check sonant pronunciation (no meaning, like “a-b-c”) |
| 8 | Ra-ri-ru-re-ro | Check liquid sound pronunciation (no meaning, like “a-b-c”) |
| 9 | Pa-pi-pu-pe-po | Check p-sound pronunciation (no meaning, like “a-b-c”) |
| 10 | Omoeba tooku he kita monda | Non-emotional (While thinking, I have come far) |
| 11 | Garapagosu shotou | Check pronunciation (Galápagos Islands) |
| 12 | Tsukarete guttari shiteimasu | Emotional (I am tired/dead tired) |
| 13 | Totemo genki desu | Emotional (I am very cheerful) |
| 14 | Kinou ha yoku nemuremashita | Emotional (I was able to sleep well yesterday) |
| 15 | Shokuyoku ga arimasu | Emotional (I have an appetite) |
| 16 | Okorippoi desu | Emotional (I am irritable) |
| 17 | Kokoro ga odayaka desu | Emotional (My heart is calm) |

| Group | Sex | Number of Participants | Mean Age ± SD (years) | Number of Data |
|---|---|---|---|---|
| Healthy | Male | 10 | 42.7 ± 6.0 | 10 |
| Healthy | Female | 4 | 35.0 ± 14.4 | 4 |
| Major depression | Male | 19 | 43.7 ± 11.0 | 34 |
| Major depression | Female | 11 | 53.9 ± 8.2 | 12 |

| Group | Number of Participants | Number of Data | Mean HAM-D Score ± SD |
|---|---|---|---|
| No depression (HAM-D ≤ 7) | 19 | 24 | 3.1 ± 2.3 |
| Depression (HAM-D ≥ 8) | 11 | 22 | 16.1 ± 7.4 |
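
The grouping rule in the table above (HAM-D ≤ 7 versus HAM-D ≥ 8) can be written as a small helper. This is a minimal sketch, not the authors' code: the names `hamd_group`, `group_scores`, and `HAMD_NO_DEPRESSION_MAX` are hypothetical, and the example scores are made up for illustration only.

```python
from typing import List

# Cutoff taken from the table above: HAM-D <= 7 is labelled "No depression".
# The constant and function names are illustrative assumptions.
HAMD_NO_DEPRESSION_MAX = 7


def hamd_group(score: int) -> str:
    """Return the group label for a single HAM-D total score."""
    if score < 0:
        raise ValueError("HAM-D scores are non-negative")
    if score <= HAMD_NO_DEPRESSION_MAX:
        return "No depression"
    return "Depression"


def group_scores(scores: List[int]) -> List[str]:
    """Label each score in a list of HAM-D total scores."""
    return [hamd_group(s) for s in scores]


if __name__ == "__main__":
    # Made-up scores, chosen only to show both sides of the cutoff.
    print(group_scores([3, 7, 8, 16]))
```

Keeping the cutoff in a single named constant makes it easy to see which threshold was applied if a different severity boundary is used.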


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shinohara, S.; Nakamura, M.; Omiya, Y.; Higuchi, M.; Hagiwara, N.; Mitsuyoshi, S.; Toda, H.; Saito, T.; Tanichi, M.; Yoshino, A.; Tokuno, S. Depressive Mood Assessment Method Based on Emotion Level Derived from Voice: Comparison of Voice Features of Individuals with Major Depressive Disorders and Healthy Controls. Int. J. Environ. Res. Public Health 2021, 18, 5435. https://doi.org/10.3390/ijerph18105435