Effects of the Musical Sound Environment on Communicating Emotion

Abstract

1. Introduction

2. Material and Methods

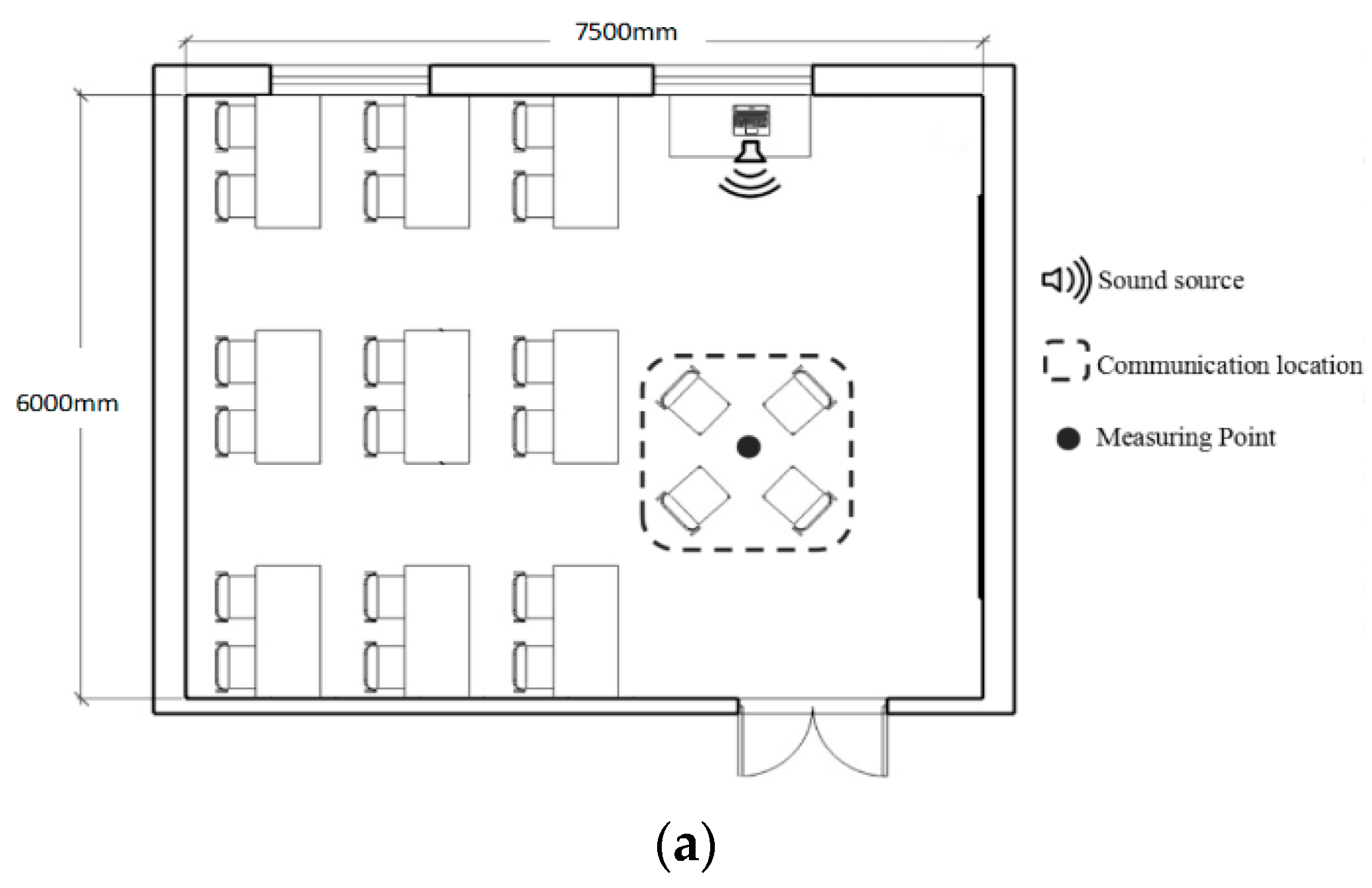

2.1. Experimental Site

2.2. Participants

2.3. Questionnaire Survey

2.4. Experimental Materials

2.4.1. Musical Emotion

2.4.2. Tempo

2.4.3. SPL of Music

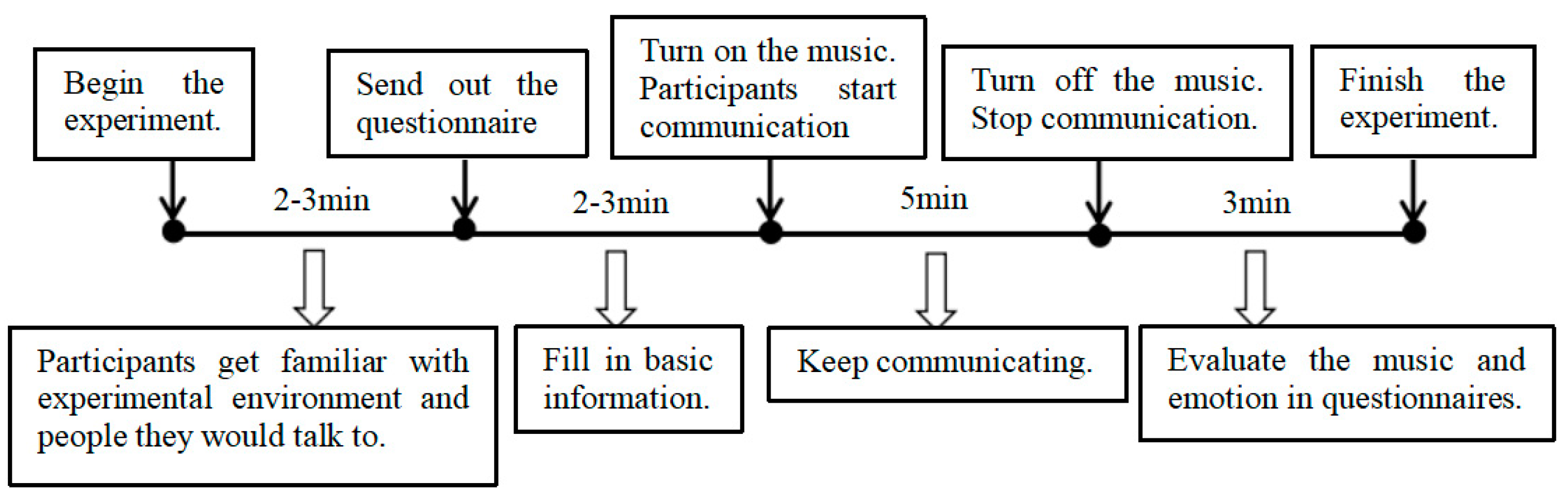

2.5. Experimental Design

2.6. Data Analysis

3. Results

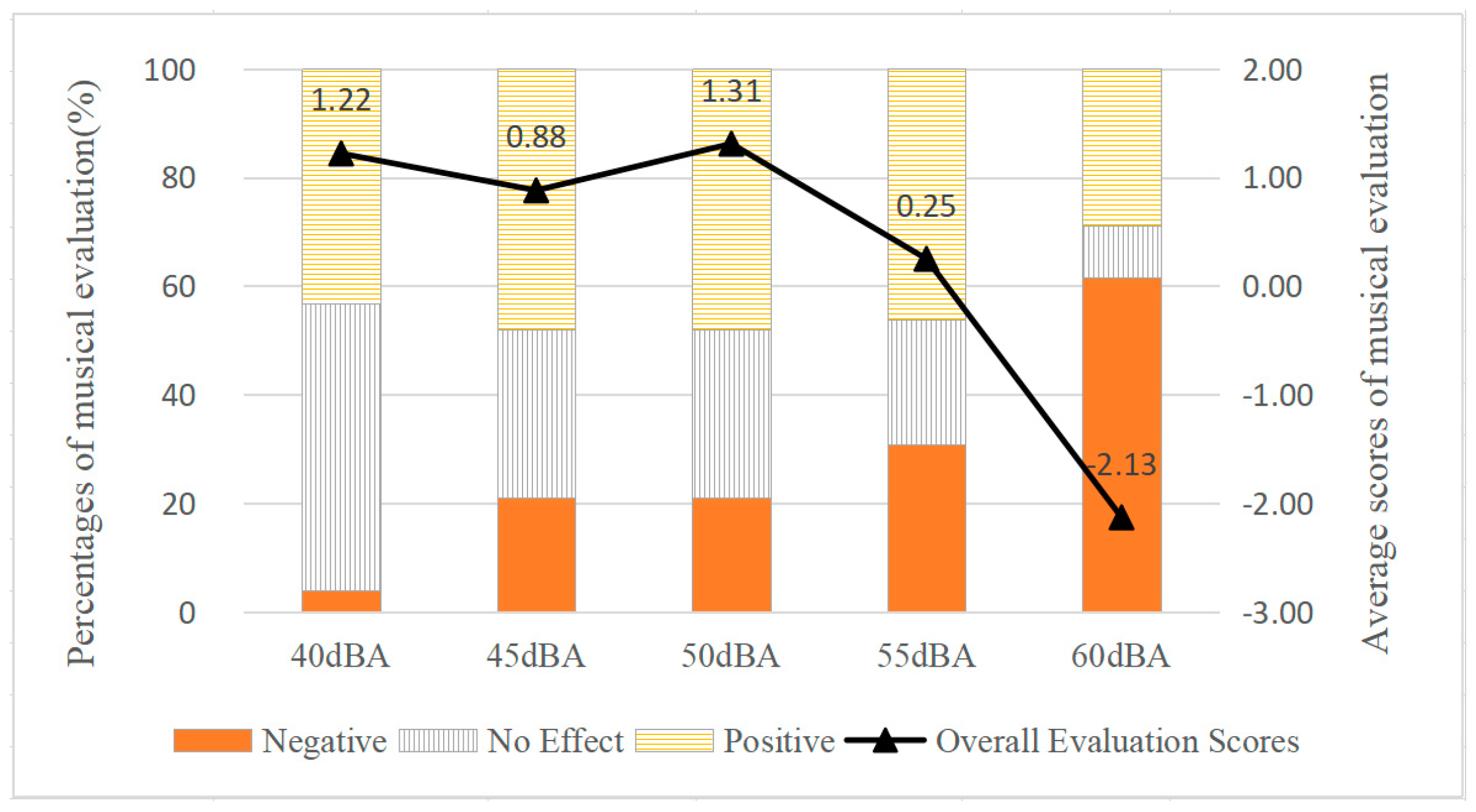

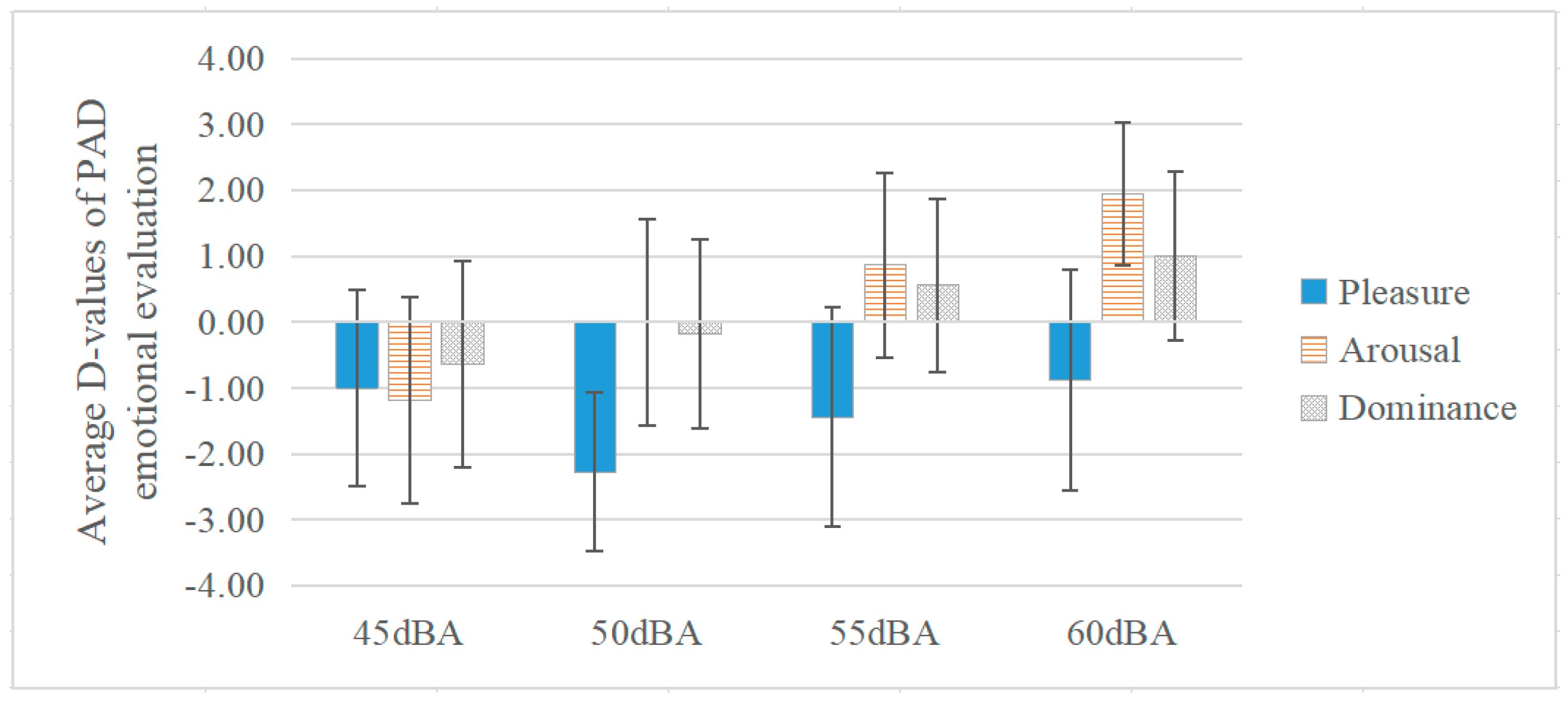

3.1. Effect of SPL of Music on Communication Emotion

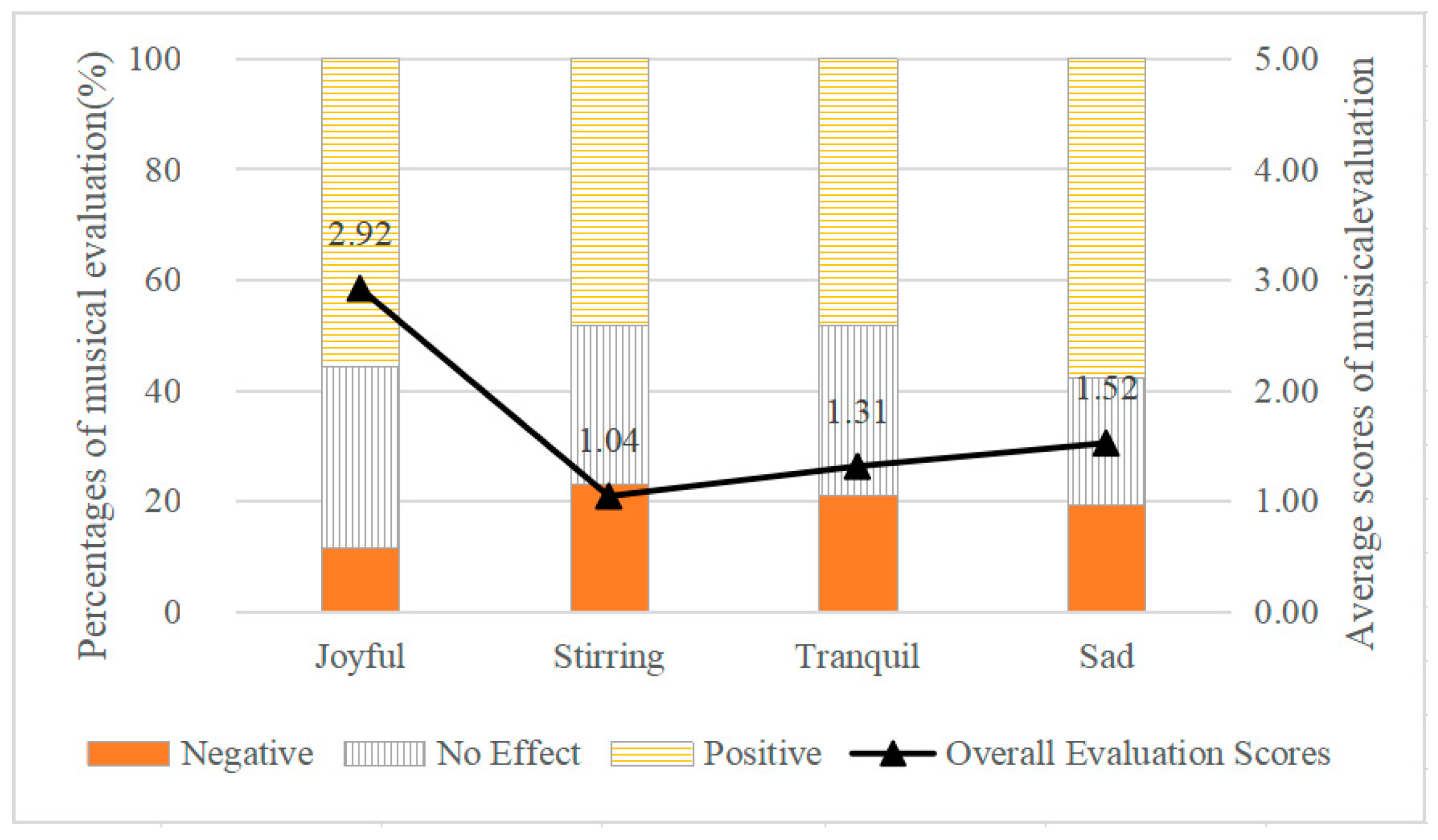

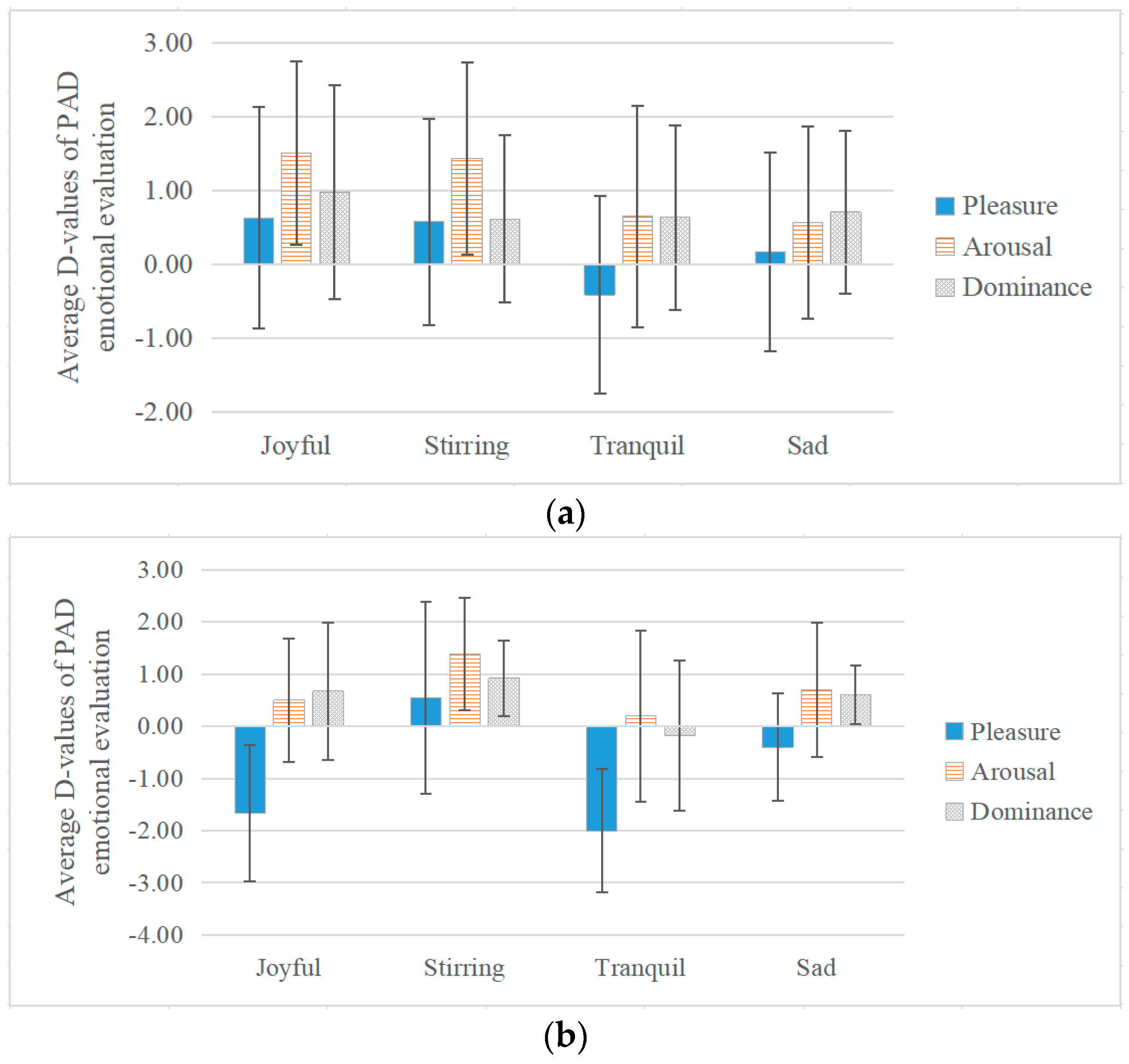

3.2. Effect of Musical Emotion on Communication Emotion

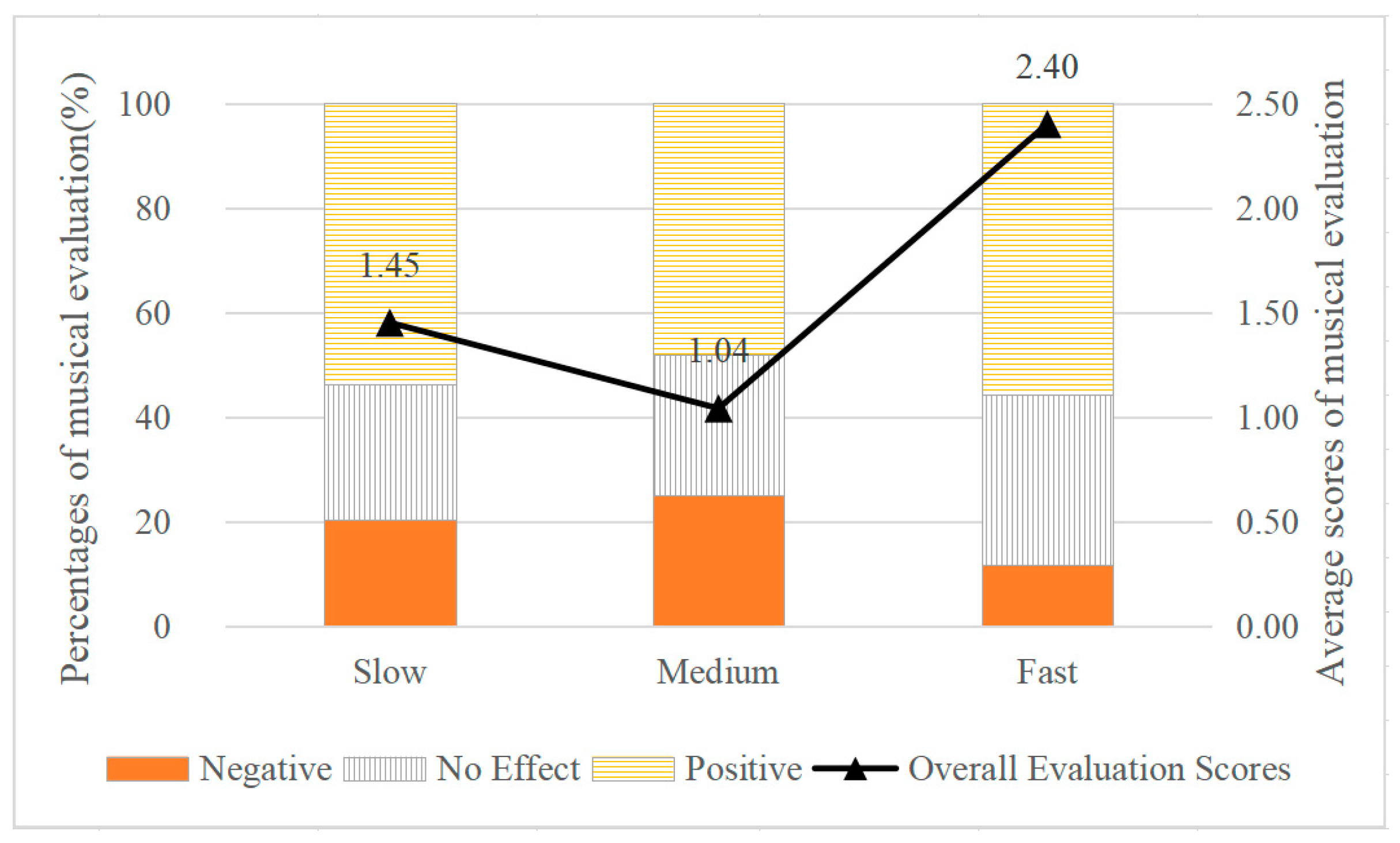

3.3. Effect of Tempo on Communication Emotion

3.4. Discussion

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Social Characteristics | | Number |
|---|---|---|
| Gender combination | Single gender | 26 |
| | Mixed gender | 26 |
| Familiarity | Low degree | 16 |
| | Middle degree | 16 |
| | High degree | 20 |
| Number of Participants | One-on-one group (2) | 38 |
| | Multi-participants group (3/4) | 14 |

| Subjective Evaluation | | Description |
|---|---|---|
| Basic Information | Name, Gender, Age | |
| Overall Musical Evaluation | No effect (0) | Music has no effect |
| | Conducive (1 to 10) | A little conducive to Conducive very much |
| | Distractive (–1 to –10) | A little distractive to Distractive very much |
| Emotion Dimension | Pleasure (1 to 10) | Depressed to Satisfied, Unhappy to Happy, Restless to Comfortable, Angry to Glad |
| | Arousal (1 to 10) | Peaceful to Fevered, Unexcited to Excited, Relaxed to Stimulated, Drowsy to Awakened |
| | Dominance (1 to 10) | Passive to Active, Controlled to Uncontrolled |

| Evaluation | Music Title | Joyful | Agitated | Tranquil | Sad |
|---|---|---|---|---|---|
| Joyful | Dance of The Golden Snake | 21 (70%) | 9 (30%) | 0 (0%) | 0 (0%) |
| | Ode an die Freude | 22 (73.33%) | 3 (10%) | 5 (16.67%) | 0 (0%) |
| | Spring Festival Prelude | 25 (83.33%) | 3 (10%) | 0 (0%) | 2 (6.67%) |
| Stirring | Croatian Rhapsody | 6 (20%) | 23 (76.67%) | 0 (0%) | 1 (3.33%) |
| | Carmen Overture | 14 (46.67%) | 13 (43.33%) | 1 (3.33%) | 2 (6.67%) |
| | Athletes March | 5 (16.67%) | 24 (80%) | 1 (3.33%) | 0 (0%) |
| Tranquil | A Comme Amour | 2 (6.67%) | 0 (0%) | 24 (80%) | 4 (13.33%) |
| | The Blue Danube | 6 (20%) | 5 (16.67%) | 14 (46.67%) | 5 (16.67%) |
| | For Elise | 6 (20%) | 0 (0%) | 21 (70%) | 3 (10%) |
| Sad | River Water | 0 (0%) | 7 (23.33%) | 1 (3.33%) | 22 (73.33%) |
| | MARIAGE D’AMOUR | 12 (40%) | 0 (0%) | 14 (46.67%) | 4 (13.33%) |
| | Butterfly Lovers | 4 (13.33%) | 2 (6.67%) | 0 (0%) | 27 (90%) |
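
The percentages in the evaluation table above are consistent with each count being divided by a panel of 30 listeners (for example, 21/30 = 70%). The following is a minimal Python sketch of that arithmetic, not the authors' procedure. It assumes a panel size of 30, which is inferred from the figures rather than stated in the table, and the names `PANEL_SIZE`, `shares`, and `dominant_category` are hypothetical helpers introduced only for illustration.

```python
# Assumption: 30 listeners rated each excerpt (inferred from 21 -> 70%).
PANEL_SIZE = 30
CATEGORIES = ("Joyful", "Agitated", "Tranquil", "Sad")


def shares(counts, panel_size=PANEL_SIZE):
    """Return each count as a percentage of the panel, rounded to 2 decimals."""
    return [round(100 * c / panel_size, 2) for c in counts]


def dominant_category(counts):
    """Return the emotion category chosen by the most listeners."""
    best_index = max(range(len(counts)), key=lambda i: counts[i])
    return CATEGORIES[best_index]


# Counts for "Ode an die Freude", in the column order of the table.
row = [22, 3, 5, 0]
print(shares(row))
print(dominant_category(row))
```

Comparing the dominant category with the category an excerpt was assigned to gives a quick check of how well listeners agreed with the intended musical emotion.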

| Social Characteristics | | Musical Evaluation | | Pleasure (D-value) | | Arousal (D-value) | | Dominance (D-value) | |
|---|---|---|---|---|---|---|---|---|---|
| ANOVA | | | | | | | | | |
| Familiarity | Low degree | 3.69 | | 0.88 | | 1.88 | | 1.06 | |
| | Middle degree | 0.69 | | −0.06 | | 0.19 | | 1.25 | |
| | High degree | −0.1 | | −0.45 | | 1.84 | | 0.40 | |
| T-test | | Mean | SD | Mean | SD | Mean | SD | Mean | SD |
| Gender Combination | Single gender | 0.85 | 4.628 | −0.27 | 2.616 | 0.69 | 3.222 | 1.08 | 2.365 |
| | Mixed gender | 1.77 | 4.403 | 0.42 | 2.774 | 1.65 | 2.058 | 0.65 | 2.529 |
| Number of Participants | 2 | 1.03 | 4.966 | −0.26 | 2.613 | 1.00 | 2.956 | 0.54 | 2.292 |
| | 2–4 | 2.15 | 2.609 | 1.08 | 2.783 | 1.69 | 1.843 | 1.85 | 2.672 |
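
The T-test rows above report only a mean and a standard deviation for each group. As a hedged illustration of how two such groups can be compared from summary statistics alone, the sketch below computes Welch's t statistic and its approximate degrees of freedom. Several things here are assumptions: the table does not say which t-test variant was used, so Welch's form is an illustrative choice; the group sizes (26 and 26) are taken from the social characteristics table and assumed to apply to these rows; and `welch_t` is a hypothetical helper name.

```python
import math


def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom
    computed from group means, standard deviations, and sizes."""
    var_a = sd_a ** 2 / n_a  # squared standard error of group A
    var_b = sd_b ** 2 / n_b  # squared standard error of group B
    t = (mean_a - mean_b) / math.sqrt(var_a + var_b)
    df = (var_a + var_b) ** 2 / (
        var_a ** 2 / (n_a - 1) + var_b ** 2 / (n_b - 1)
    )
    return t, df


# Musical evaluation, single-gender vs mixed-gender groups.
# Assumption: n = 26 per group, as listed in the social characteristics table.
t, df = welch_t(0.85, 4.628, 26, 1.77, 4.403, 26)
print(f"t = {t:.3f}, df = {df:.1f}")
```

The statistic would then be compared against a t distribution with `df` degrees of freedom to obtain a p-value. This is a sketch of the general technique only and does not reproduce any result reported by the article.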

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Meng, Q.; Jiang, J.; Liu, F.; Xu, X. Effects of the Musical Sound Environment on Communicating Emotion. Int. J. Environ. Res. Public Health 2020, 17, 2499. https://doi.org/10.3390/ijerph17072499