Augmenting Patient Education in Hand Surgery—Evaluation of ChatGPT as an Informational Tool in Carpal Tunnel Syndrome

Abstract

1. Introduction

2. Materials and Methods

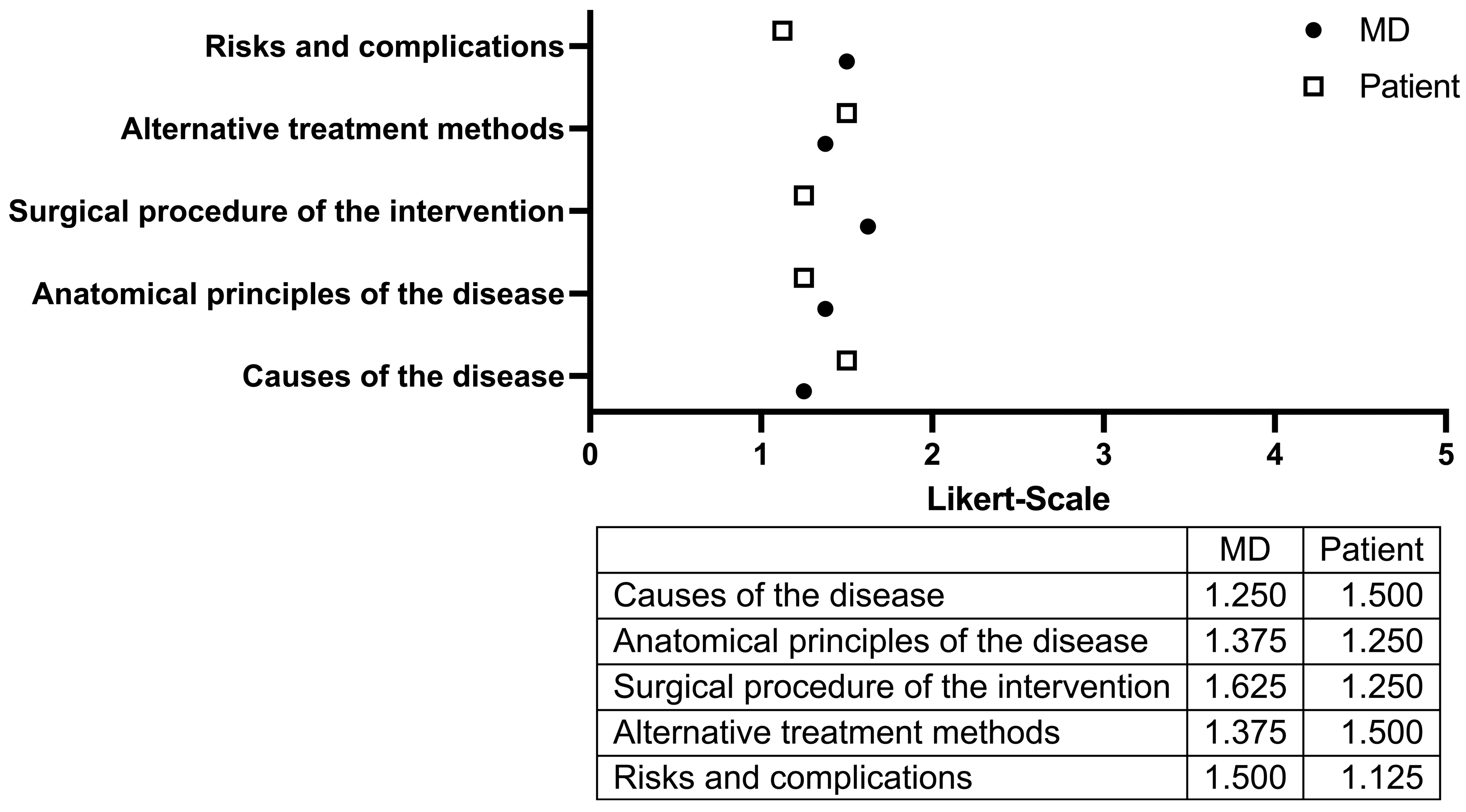

- To what extent were you informed about the causes of the disease?

- How thoroughly were the anatomical foundations of the condition explained?

- How clearly was the surgical procedure described?

- To what extent were alternative treatment modalities presented?

- How comprehensively were the potential risks and complications addressed?

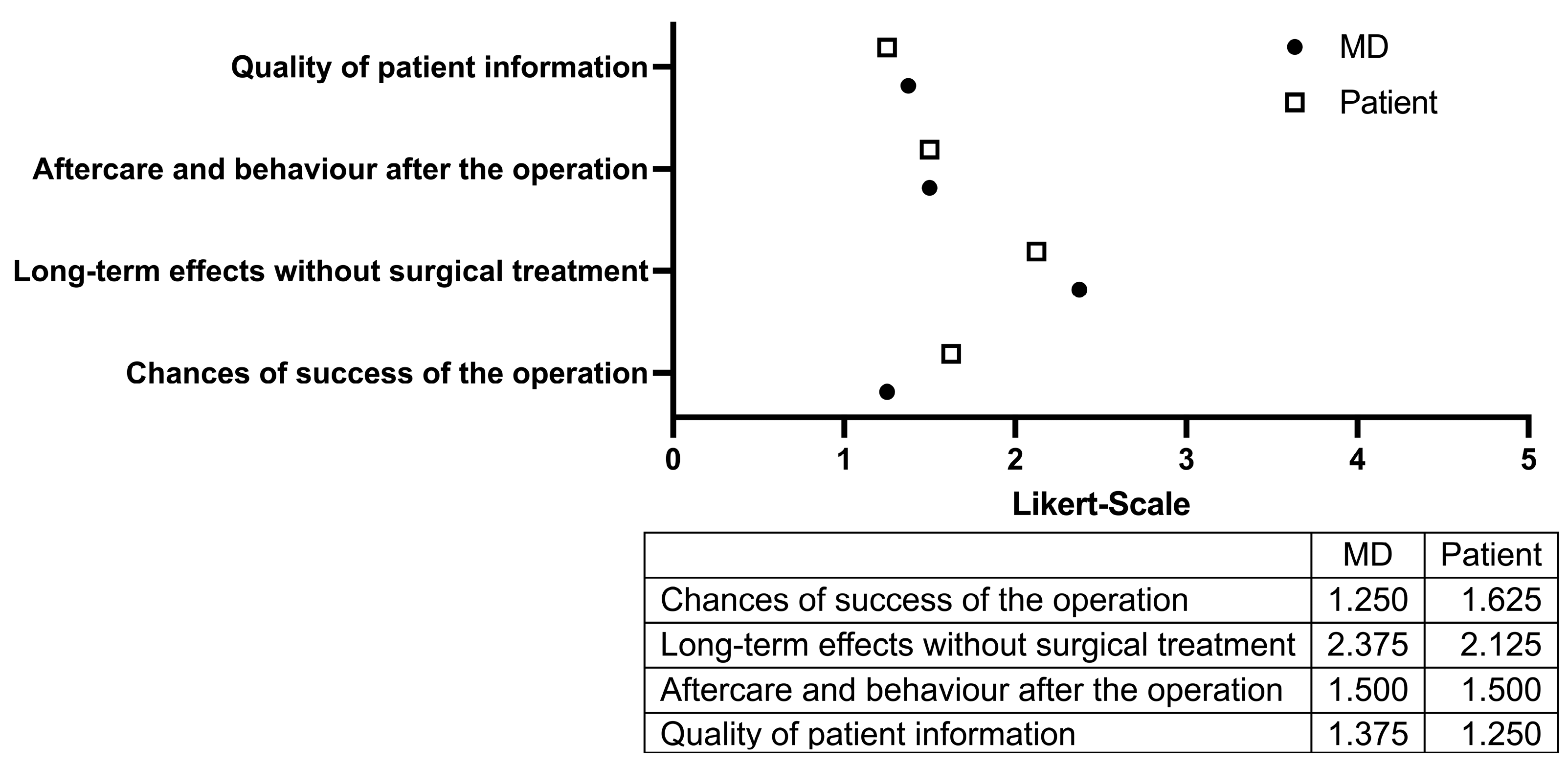

- To what extent were the anticipated benefits and chances of success of the surgery conveyed?

- How well were the long-term consequences of forgoing surgical treatment explained?

- To what extent were postoperative care and recommended patient behavior discussed?

- How would you rate the overall quality of the information provided?

- In your opinion, which relevant aspects were insufficiently addressed?

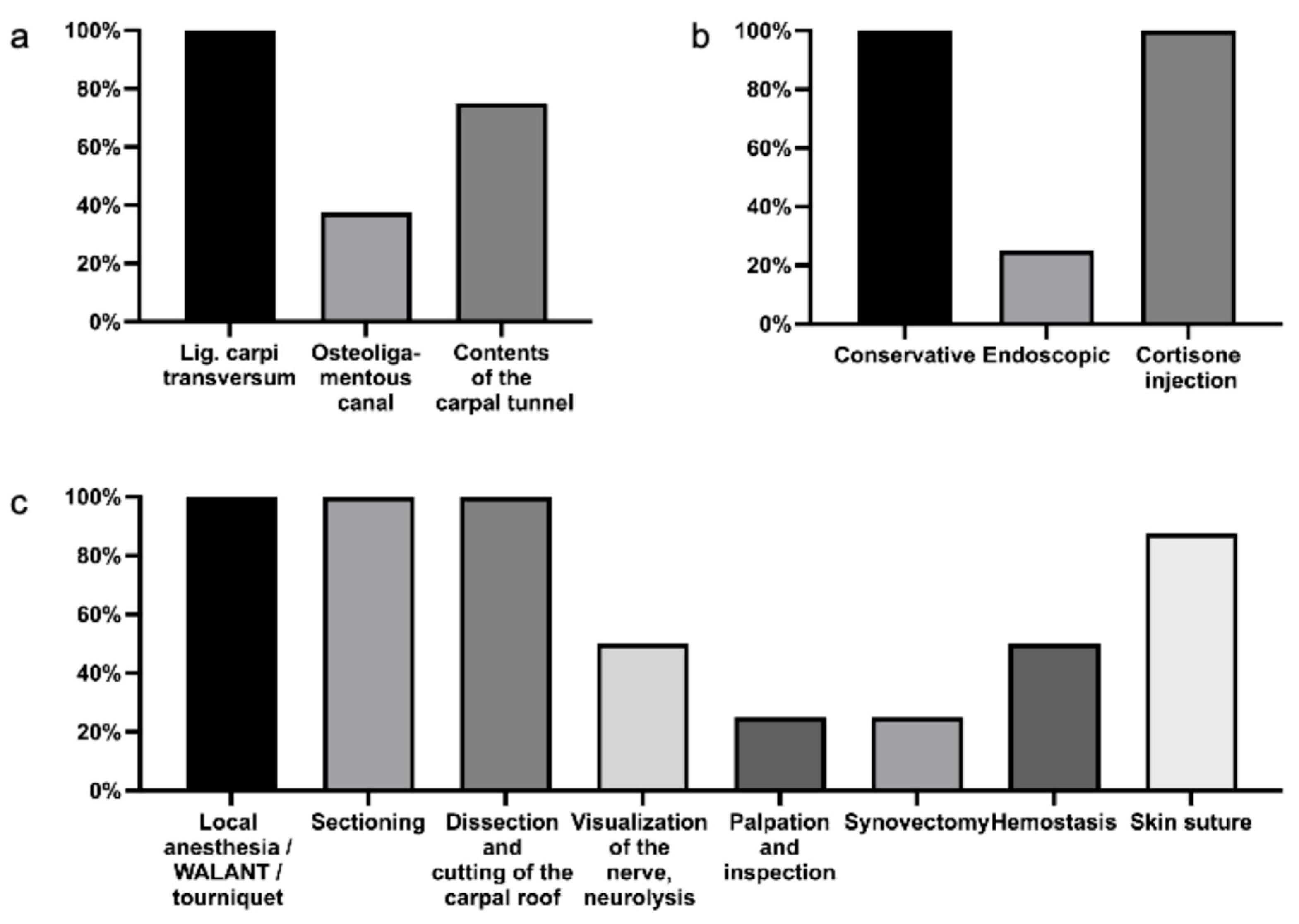

- Were all relevant anatomical structures of the carpal tunnel discussed (e.g., ligamentum carpi transversum, osteoligamentous canal, contents of the carpal tunnel)?

- Were all essential components of the surgical technique described (e.g., anesthesia modalities such as local anesthesia, WALANT (wide awake local anesthesia no tourniquet), tourniquet use; surgical steps including incision, dissection, division of the flexor retinaculum, nerve exposure and neurolysis, handling of the thenar branch, inspection and palpation, synovectomy, hemostasis, and wound closure)?

- Were all viable alternative treatment options mentioned (e.g., conservative management, endoscopic decompression, corticosteroid injection)?

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the Lithuanian University of Health Sciences. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fuchs, B.; Thierfelder, N.; Aranda, I.M.; Alt, V.; Kuhlmann, C.; Haas-Lützenberger, E.M.; Koban, K.C.; Giunta, R.E.; Mert, S. Augmenting Patient Education in Hand Surgery—Evaluation of ChatGPT as an Informational Tool in Carpal Tunnel Syndrome. Medicina 2025, 61, 1677. https://doi.org/10.3390/medicina61091677
