The Artificial Intelligence-Enhanced Echocardiographic Detection of Congenital Heart Defects in the Fetus: A Mini-Review

Abstract

1. Introduction

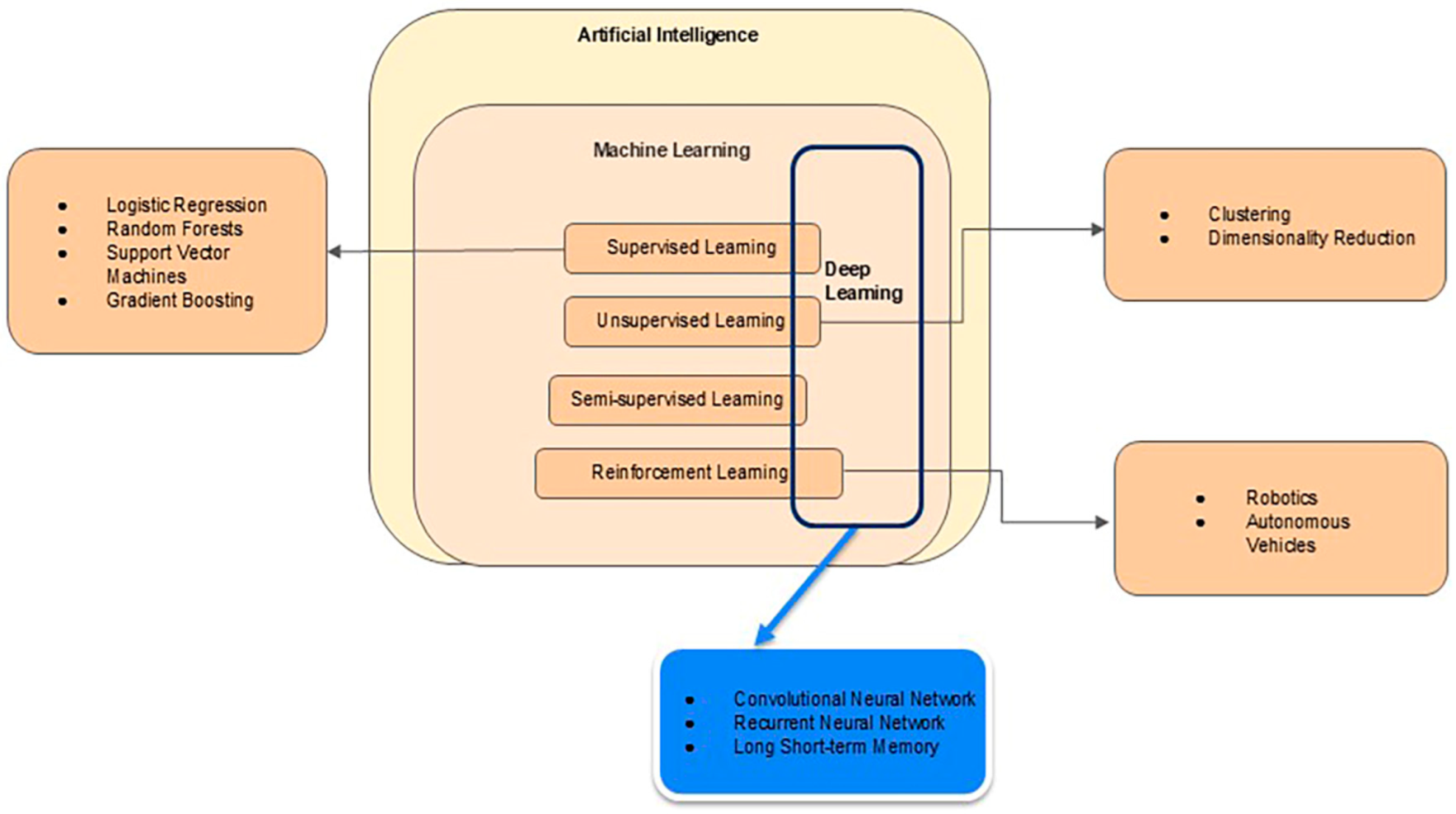

2. Technical Background

2.1. Techniques of AI

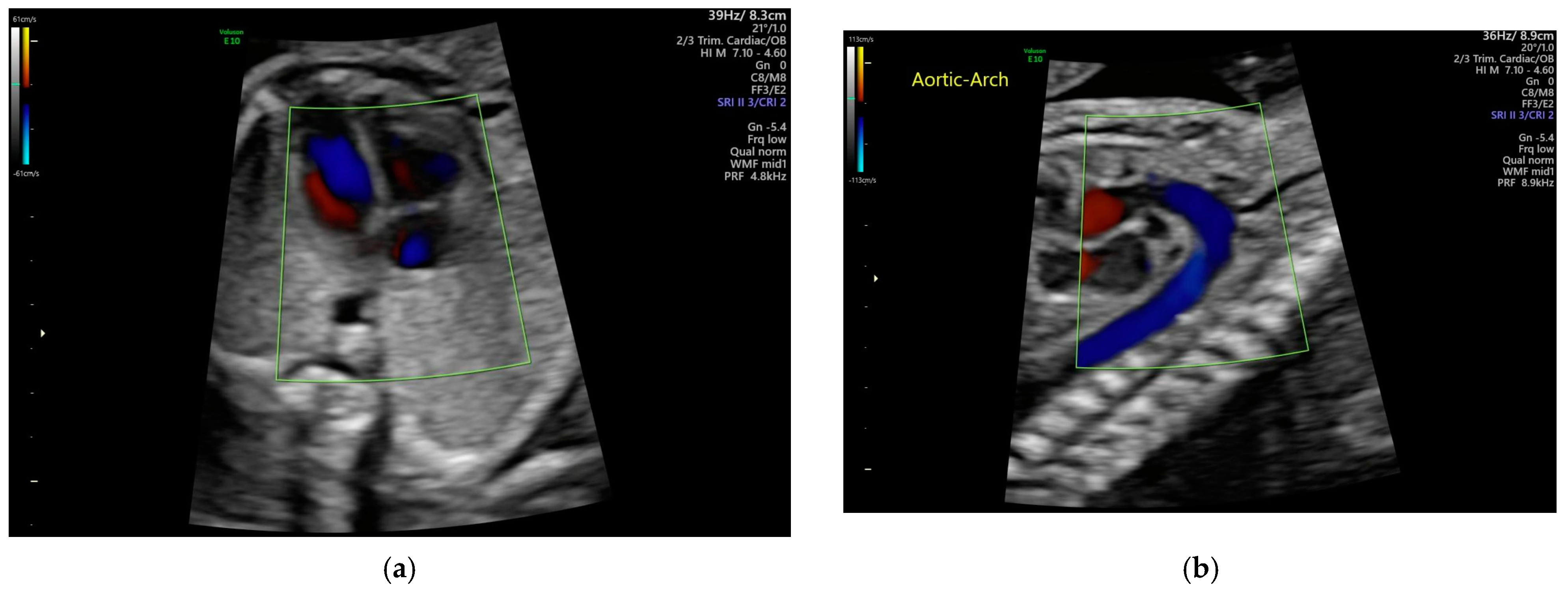

2.2. Echocardiogram Background

3. Artificial Intelligence (AI) Applications

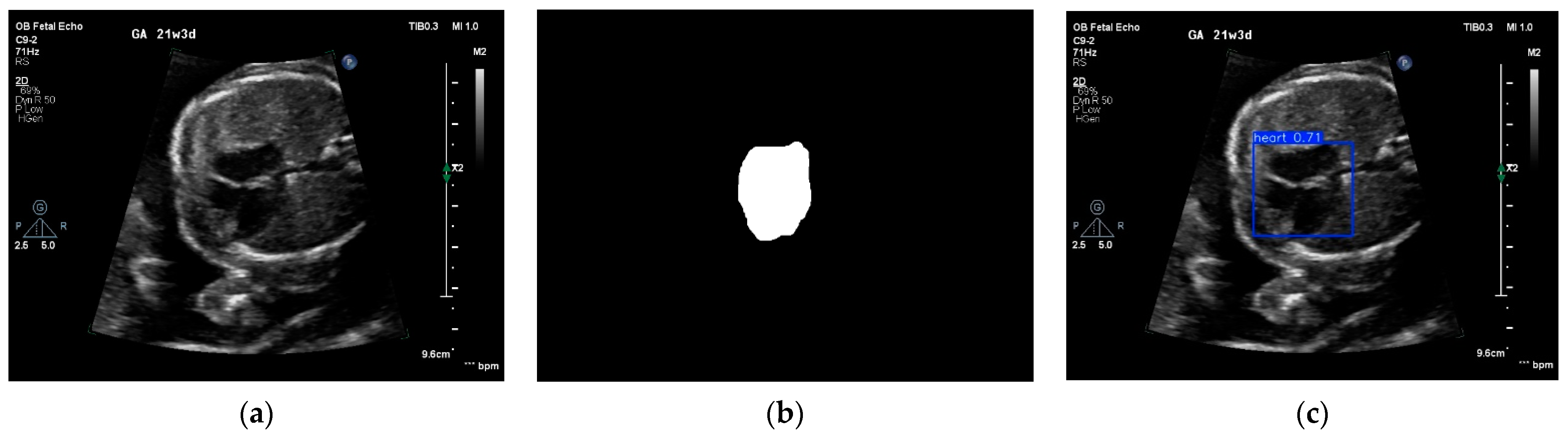

3.1. Image Quality Control and View Selection

3.2. Detection and Classification of Fetal Heart Defects

3.3. Measurements of Cardiac Function and Parameters

3.4. Diagnosis of Specific Abnormalities

3.5. Identification of Normal Heart from CHD

4. Critical Analysis

4.1. Categories of AI Models

4.2. Selection of Training Data

4.3. ML Models and Completeness of Their Descriptions

4.4. Generalization and Computational Requirements of Reviewed ML Models

4.5. AI Performance Versus Clinician’s Performance in Clinical Workflow

4.6. One Case Study [57]

4.7. Scientist–Physician Partnership

5. Ethical Concerns of Artificial Intelligence

6. Discussion

6.1. General Challenges

6.2. Clinical Translation/Implementation

7. Future Outlook and Closing Remarks

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AUC | Area Under the Curve |
| CHD | Congenital Heart Defects |
| CNN | Convolutional Neural Network |
| DL | Deep Learning |
| GAN | Generative Adversarial Network |
| GPU | Graphics Processing Unit |
| HLHS | Hypoplastic Left Heart Syndrome |
| HIPAA | Health Insurance Portability and Accountability Act |
| ML | Machine Learning |
| NN | Neural Network |
| PACS | Picture Archiving and Communication System |
| PWD | Pulse Wave Doppler |
| RF | Random Forest |
| VSD | Ventricular Septal Defect |
| YOLO | You Only Look Once |

Appendix A. Non-Technical Explanation of ML Models

Appendix A.1. Convolutional Neural Network (CNN)

Appendix A.2. Generative Adversarial Network (GAN)

Appendix A.3. Reinforcement Learning (RL)

Appendix B. Key Phrases Used in the Literature Search

| Person 1 | Person 2 |
|---|---|
| Deep learning in fetal echocardiography; Key anatomical structure detection in fetal echocardiography; Standard plane recognition in fetal heart; Fetal heart standard views; Deep learning in fetal heart echocardiography; Fetal echocardiography using artificial intelligence | Fetal echocardiography; Artificial intelligence; Congenital heart disease; Deep learning; Machine learning; Structural heart disease detection; Congenital heart disease detection; Heart disease |

References

- CDC. Congenital Heart Defects Data and Statistics. Available online: https://www.cdc.gov/heart-defects/data/index.html#:~:text=Babies%20born%20with%20heart%20defects&text=Heart%20defects%20affect%20nearly%201,year%20in%20the%20United%20States.&text=The%20prevalence%20of%20some%20heart,is%20a%20ventricular%20septal%20defect (accessed on 21 February 2025).

- Plana, M.N.; Zamora, J.; Suresh, G.; Fernandez-Pineda, L.; Thangaratinam, S.; Ewer, A.K. Pulse oximetry screening for critical congenital heart defects. Cochrane Database Syst. Rev. 2018.

- Zhang, Y.-F.; Zeng, X.-L.; Zhao, E.-F.; Lu, H.-W. Diagnostic Value of Fetal Echocardiography for Congenital Heart Disease: A Systematic Review and Meta-Analysis. Medicine 2015, 94, e1759.

- Sklansky, M.; DeVore, G.R. Fetal Cardiac Screening. J. Ultrasound Med. 2016, 35, 679–681.

- Sun, H.Y.; Proudfoot, J.A.; McCandless, R.T. Prenatal detection of critical cardiac outflow tract anomalies remains suboptimal despite revised obstetrical imaging guidelines. Congenit. Heart Dis. 2018, 13, 748–756.

- van Nisselrooij, A.E.L.; Teunissen, A.K.K.; Clur, S.A.; Rozendaal, L.; Pajkrt, E.; Linskens, I.H.; Rammeloo, L.; van Lith, J.M.M.; Blom, N.A.; Haak, M.C. Why are congenital heart defects being missed? Ultrasound Obstet. Gynecol. 2020, 55, 747–757.

- Wren, C.; Reinhardt, Z.; Khawaja, K. Twenty-year trends in diagnosis of life-threatening neonatal cardiovascular malformations. Arch. Dis. Child.-Fetal Neonatal Ed. 2008, 93, F33–F35.

- Riede, F.T.; Wörner, C.; Dähnert, I.; Möckel, A.; Kostelka, M.; Schneider, P. Effectiveness of neonatal pulse oximetry screening for detection of critical congenital heart disease in daily clinical routine—Results from a prospective multicenter study. Eur. J. Pediatr. 2010, 169, 975–981.

- Davis, A.; Billick, K.; Horton, K.; Jankowski, M.; Knoll, P.; Marshall, J.E.; Paloma, A.; Palma, R.; Adams, D.B. Artificial Intelligence and Echocardiography: A Primer for Cardiac Sonographers. J. Am. Soc. Echocardiogr. 2020, 33, 1061–1066.

- Goecks, J.; Jalili, V.; Heiser, L.M.; Gray, J.W. How Machine Learning Will Transform Biomedicine. Cell 2020, 181, 92–101.

- Abuelezz, I.; Hassan, A.; Jaber, B.A.; Sharique, M.; Abd-Alrazaq, A.; Househ, M.; Alam, T.; Shah, Z. Contribution of Artificial Intelligence in Pregnancy: A Scoping Review. Stud. Health Technol. Inf. 2022, 289, 333–336.

- Islam, M.N.; Mustafina, S.N.; Mahmud, T.; Khan, N.I. Machine learning to predict pregnancy outcomes: A systematic review, synthesizing framework and future research agenda. BMC Pregnancy Childbirth 2022, 22, 348.

- Mennickent, D.; Rodríguez, A.; Opazo, M.C.; Riedel, C.A.; Castro, E.; Eriz-Salinas, A.; Appel-Rubio, J.; Aguayo, C.; Damiano, A.E.; Guzmán-Gutiérrez, E.; et al. Machine learning applied in maternal and fetal health: A narrative review focused on pregnancy diseases and complications. Front. Endocrinol. 2023, 14, 1130139.

- Alsharqi, M.; Woodward, W.J.; Mumith, J.A.; Markham, D.C.; Upton, R.; Leeson, P. Artificial intelligence and echocardiography. Echo Res. Pract. 2018, 5, R115–R125.

- Barry, T.; Farina, J.M.; Chao, C.-J.; Ayoub, C.; Jeong, J.; Patel, B.N.; Banerjee, I.; Arsanjani, R. The Role of Artificial Intelligence in Echocardiography. J. Imaging 2023, 9, 50.

- Qiao, S.; Pan, S.; Luo, G.; Pang, S.; Chen, T.; Singh, A.K.; Lv, Z. A Pseudo-Siamese Feature Fusion Generative Adversarial Network for Synthesizing High-Quality Fetal Four-Chamber Views. IEEE J. Biomed. Health Inform. 2023, 27, 1193–1204.

- Wang, X.; Yang, T.-Y.; Zhang, Y.-Y.; Liu, X.-W.; Zhang, Y.; Sun, L.; Gu, X.-Y.; Chen, Z.; Guo, Y.; Xue, C.; et al. Diagnosis of fetal total anomalous pulmonary venous connection based on the post-left atrium space ratio using artificial intelligence. Prenat. Diagn. 2022, 42, 1323–1331.

- Yu, X.; Ma, L.; Wang, H.; Zhang, Y.; Du, H.; Xu, K.; Wang, L. Deep learning-based differentiation of ventricular septal defect from tetralogy of Fallot in fetal echocardiography images. Technol. Health Care 2024, 32, 457–464.

- Sutarno, S.; Nurmaini, S.; Partan, R.U.; Sapitri, A.I.; Tutuko, B.; Naufal Rachmatullah, M.; Darmawahyuni, A.; Firdaus, F.; Bernolian, N.; Sulistiyo, D. FetalNet: Low-light fetal echocardiography enhancement and dense convolutional network classifier for improving heart defect prediction. Inform. Med. Unlocked 2022, 35, 101136.

- Arnaout, R.; Curran, L.; Zhao, Y.; Levine, J.C.; Chinn, E.; Moon-Grady, A.J. An ensemble of neural networks provides expert-level prenatal detection of complex congenital heart disease. Nat. Med. 2021, 27, 882–891.

- Li, X.; Zhang, H.; Yue, J.; Yin, L.; Li, W.; Ding, G.; Peng, B.; Xie, S. A multi-task deep learning approach for real-time view classification and quality assessment of echocardiographic images. Sci. Rep. 2024, 14, 20484.

- Yang, X.; Huang, X.; Wei, C.; Yu, J.; Yu, X.; Dong, C.; Chen, J.; Chen, R.; Wu, X.; Yu, Z.; et al. An intelligent quantification system for fetal heart rhythm assessment: A multicenter prospective study. Heart Rhythm 2024, 21, 600–609.

- Dong, J.; Liu, S.; Liao, Y.; Wen, H.; Lei, B.; Li, S.; Wang, T. A Generic Quality Control Framework for Fetal Ultrasound Cardiac Four-Chamber Planes. IEEE J. Biomed. Health Inform. 2020, 24, 931–942.

- Qiao, S.; Pang, S.; Luo, G.; Xie, P.; Yin, W.; Pan, S.; Lyu, Z. A progressive growing generative adversarial network composed of enhanced style-consistent modulation for fetal ultrasound four-chamber view editing synthesis. Eng. Appl. Artif. Intell. 2024, 133, 108438.

- Truong, V.T.; Nguyen, B.P.; Nguyen-Vo, T.-H.; Mazur, W.; Chung, E.S.; Palmer, C.; Tretter, J.T.; Alsaied, T.; Pham, V.T.; Do, H.Q.; et al. Application of machine learning in screening for congenital heart diseases using fetal echocardiography. Int. J. Cardiovasc. Imaging 2022, 38, 1007–1015.

- Wong, K.C.; Sinkovskaya, E.; Abuhamad, A.; Syeda-Mahmood, T. Multiview and Multiclass Image Segmentation Using Deep Learning in Fetal Echocardiography; SPIE: Bellingham, WA, USA, 2021; Volume 11597.

- Milletari, F.; Navab, N.; Ahmadi, S.A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

- Yan, L.; Ling, S.; Mao, R.; Xi, H.; Wang, F. A deep learning framework for identifying and segmenting three vessels in fetal heart ultrasound images. BioMedical Eng. OnLine 2024, 23, 39.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Navab, N., Hornegger, J., Wells, W., Frangi, A., Eds.; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Dozen, A.; Komatsu, M.; Sakai, A.; Komatsu, R.; Shozu, K.; Machino, H.; Yasutomi, S.; Arakaki, T.; Asada, K.; Kaneko, S.; et al. Image Segmentation of the Ventricular Septum in Fetal Cardiac Ultrasound Videos Based on Deep Learning Using Time-Series Information. Biomolecules 2020, 10, 1526.

- Day, T.G.; Budd, S.; Tan, J.; Matthew, J.; Skelton, E.; Jowett, V.; Lloyd, D.; Gomez, A.; Hajnal, J.V.; Razavi, R.; et al. Prenatal diagnosis of hypoplastic left heart syndrome on ultrasound using artificial intelligence: How does performance compare to a current screening programme? Prenat. Diagn. 2024, 44, 717–724.

- Yang, Y.; Wu, B.; Wu, H.; Xu, W.; Lyu, G.; Liu, P.; He, S. Classification of normal and abnormal fetal heart ultrasound images and identification of ventricular septal defects based on deep learning. J. Perinat. Med. 2023, 51, 1052–1058.

- Li, F.; Li, P.; Liu, Z.; Liu, S.; Zeng, P.; Song, H.; Liu, P.; Lyu, G. Application of artificial intelligence in VSD prenatal diagnosis from fetal heart ultrasound images. BMC Pregnancy Childbirth 2024, 24, 758.

- Wu, H.; Wu, B.; Lai, F.; Liu, P.; Lyu, G.; He, S.; Dai, J. Application of Artificial Intelligence in Anatomical Structure Recognition of Standard Section of Fetal Heart. Comput. Math. Methods Med. 2023, 2023, 5650378.

- Magesh, S.; RajaKumar, P.S. Fetal Heart Disease Detection Via Deep Reg Network Based on Ultrasound Images. J. Appl. Eng. Technol. Sci. 2023, 5, 439–450.

- Veronese, P.; Guariento, A.; Cattapan, C.; Fedrigo, M.; Gervasi, M.T.; Angelini, A.; Riva, A.; Vida, V. Prenatal Diagnosis and Fetopsy Validation of Complete Atrioventricular Septal Defects Using the Fetal Intelligent Navigation Echocardiography Method. Diagnostics 2023, 13, 456.

- Qu, Y.; Deng, X.; Lin, S.; Han, F.; Chang, H.H.; Ou, Y.; Nie, Z.; Mai, J.; Wang, X.; Gao, X.; et al. Using Innovative Machine Learning Methods to Screen and Identify Predictors of Congenital Heart Diseases. Front. Cardiovasc. Med. 2022, 8, 797002.

- Ma, M.; Sun, L.-H.; Chen, R.; Zhu, J.; Zhao, B. Artificial intelligence in fetal echocardiography: Recent advances and future prospects. IJC Heart Vasc. 2024, 53, 101380.

- Pang, Y.; Zhao, X.; Xiang, T.-Z.; Zhang, L.; Lu, H. Zoom In and Out: A Mixed-scale Triplet Network for Camouflaged Object Detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 2150–2160.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- ASE. Policy Statement on AI. Available online: https://www.asecho.org/ase-policy-statements/ (accessed on 20 February 2025).

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Sfakianakis, C.; Simantiris, G.; Tziritas, G. GUDU: Geometrically-constrained Ultrasound Data augmentation in U-Net for echocardiography semantic segmentation. Biomed. Signal Process. Control 2023, 82, 104557.

- Chen, S.-H.; Weng, K.-P.; Hsieh, K.-S.; Chen, Y.-H.; Shih, J.-H.; Li, W.-R.; Zhang, R.-Y.; Chen, Y.-C.; Tsai, W.-R.; Kao, T.-Y. Optimizing Object Detection Algorithms for Congenital Heart Diseases in Echocardiography: Exploring Bounding Box Sizes and Data Augmentation Techniques. Rev. Cardiovasc. Med. 2024, 25, 335.

- Wang, Y.; Helminen, E.; Jiang, J. Building a virtual simulation platform for quasistatic breast ultrasound elastography using open source software: A preliminary investigation. Med. Phys. 2015, 42, 5453–5466.

- Wang, Y.; Peng, B.; Jiang, J. Building an open-source simulation platform of acoustic radiation force-based breast elastography. Phys. Med. Biol. 2017, 62, 1949.

- Peng, B.; Xian, Y.; Zhang, Q.; Jiang, J. Neural-network-based Motion Tracking for Breast Ultrasound Strain Elastography: An Initial Assessment of Performance and Feasibility. Ultrason. Imaging 2020, 42, 74–91.

- Yurdem, B.; Kuzlu, M.; Gullu, M.K.; Catak, F.O.; Tabassum, M. Federated learning: Overview, strategies, applications, tools and future directions. Heliyon 2024, 10, e38137.

- Goto, S.; Solanki, D.; John, J.E.; Yagi, R.; Homilius, M.; Ichihara, G.; Katsumata, Y.; Gaggin, H.K.; Itabashi, Y.; MacRae, C.A.; et al. Multinational Federated Learning Approach to Train ECG and Echocardiogram Models for Hypertrophic Cardiomyopathy Detection. Circulation 2022, 146, 755–769.

- Mu, N.; Lyu, Z.; Rezaeitaleshmahalleh, M.; Tang, J.; Jiang, J. An attention residual u-net with differential preprocessing and geometric postprocessing: Learning how to segment vasculature including intracranial aneurysms. Med. Image Anal. 2023, 84, 102697.

- Mu, N.; Lyu, Z.; Rezaeitaleshmahalleh, M.; Bonifas, C.; Gosnell, J.; Haw, M.; Vettukattil, J.; Jiang, J. S-Net: A multiple cross aggregation convolutional architecture for automatic segmentation of small/thin structures for cardiovascular applications. Front. Physiol. 2023, 14, 1209659.

- Zhu, F.; Gao, Z.; Zhao, C.; Zhu, H.; Nan, J.; Tian, Y.; Dong, Y.; Jiang, J.; Feng, X.; Dai, N.; et al. A Deep Learning-based Method to Extract Lumen and Media-Adventitia in Intravascular Ultrasound Images. Ultrason. Imaging 2022, 44, 191–203.

- Cui, S.; Mao, L.; Jiang, J.; Liu, C.; Xiong, S. Automatic Semantic Segmentation of Brain Gliomas from MRI Images Using a Deep Cascaded Neural Network. J. Healthc. Eng. 2018, 2018, 4940593.

- Aldridge, N.; Pandya, P.; Rankin, J.; Miller, N.; Broughan, J.; Permalloo, N.; McHugh, A.; Stevens, S. Detection rates of a national fetal anomaly screening programme: A national cohort study. BJOG Int. J. Obstet. Gynaecol. 2023, 130, 51–58.

- Copel, J.A.; Pilu, G.; Green, J.; Hobbins, J.C.; Kleinman, C.S. Fetal echocardiographic screening for congenital heart disease: The importance of the four-chamber view. Am. J. Obstet. Gynecol. 1987, 157, 648–655.

- Buskens, E.; Grobbee, D.E.; Frohn-Mulder, I.M.E.; Stewart, P.A.; Juttmann, R.E.; Wladimiroff, J.W.; Hess, J. Efficacy of Routine Fetal Ultrasound Screening for Congenital Heart Disease in Normal Pregnancy. Circulation 1996, 94, 67–72.

- Athalye, C.; van Nisselrooij, A.; Rizvi, S.; Haak, M.C.; Moon-Grady, A.J.; Arnaout, R. Deep-learning model for prenatal congenital heart disease screening generalizes to community setting and outperforms clinical detection. Ultrasound Obstet. Gynecol. 2024, 63, 44–52.

- Liyanage, H.; Liaw, S.-T.; Jonnagaddala, J.; Schreiber, R.; Kuziemsky, C.; Terry, A.L.; de Lusignan, S. Artificial Intelligence in Primary Health Care: Perceptions, Issues, and Challenges. Prim. Health Care Inform. Work. Group Contrib. Yearb. Med. Inform. 2019, 28, 041–046.

- Sullivan, H.R.; Schweikart, S.J. Are Current Tort Liability Doctrines Adequate for Addressing Injury Caused by AI? AMA J. Ethics 2019, 21, E160–E166.

- Abdullah, Y.I.; Schuman, J.S.; Shabsigh, R.; Caplan, A.; Al-Aswad, L.A. Ethics of Artificial Intelligence in Medicine and Ophthalmology. Asia Pac. J. Ophthalmol. 2021, 10, 289–298.

- Luxton, D. Should Watson be consulted for a second opinion? AMA J. Ethics 2019, 21, 131–137.

- Nordling, L. A fairer way forward for AI in health care. Nature 2019, 573, S103.

- Taksoee-Vester, C.A.; Mikolaj, K.; Bashir, Z.; Christensen, A.N.; Petersen, O.B.; Sundberg, K.; Feragen, A.; Svendsen, M.B.S.; Nielsen, M.; Tolsgaard, M.G. AI supported fetal echocardiography with quality assessment. Sci. Rep. 2024, 14, 5809.

- Day, T.G.; Kainz, B.; Hajnal, J.; Razavi, R.; Simpson, J.M. Artificial intelligence, fetal echocardiography, and congenital heart disease. Prenat. Diagn. 2021, 41, 733–742.

- Ting, D.S.W.; Pasquale, L.R.; Peng, L.; Campbell, J.P.; Lee, A.Y.; Raman, R.; Tan, G.S.W.; Schmetterer, L.; Keane, P.A.; Wong, T.Y. Artificial intelligence and deep learning in ophthalmology. Br. J. Ophthalmol. 2019, 103, 167–175.

- Cardiovascular Business. FDA Has Now Cleared More than 1,000 AI Models, Including Many in Cardiology. Available online: https://cardiovascularbusiness.com/topics/artificial-intelligence/fda-has-cleared-more-1000-ai-algorithms-many-cardiology (accessed on 6 March 2025).

- Blezek, D.J.; Olson-Williams, L.; Missert, A.; Korfiatis, P. AI Integration in the Clinical Workflow. J. Digit. Imaging 2021, 34, 1435–1446.

- Mu, N.; Rezaeitaleshmahalleh, M.; Lyu, Z.; Wang, M.; Tang, J.; Strother, C.M.; Gemmete, J.J.; Pandey, A.S.; Jiang, J. Can we explain machine learning-based prediction for rupture status assessments of intracranial aneurysms? Biomed. Phys. Eng. Express 2023, 9, 037001.

- Satou, G.M.; Rheuban, K.; Alverson, D.; Lewin, M.; Mahnke, C.; Marcin, J.; Martin, G.R.; Mazur, L.S.; Sahn, D.J.; Shah, S.; et al. Telemedicine in Pediatric Cardiology: A Scientific Statement From the American Heart Association. Circulation 2017, 135, e648–e678.

- Stagg, A.; Giglia, T.M.; Gardner, M.M.; Offit, B.F.; Fuller, K.M.; Natarajan, S.S.; Hehir, D.A.; Szwast, A.L.; Rome, J.J.; Ravishankar, C.; et al. Initial Experience with Telemedicine for Interstage Monitoring in Infants with Palliated Congenital Heart Disease. Pediatr. Cardiol. 2023, 44, 196–203.

- Rajpal, S.; Alshawabkeh, L.; Opotowsky, A.R. Current Role of Blood and Urine Biomarkers in the Clinical Care of Adults with Congenital Heart Disease. Curr. Cardiol. Rep. 2017, 19, 50.

- Tandon, A.; Avari Silva, J.N.; Bhatt, A.B.; Drummond, C.K.; Hill, A.C.; Paluch, A.E.; Waits, S.; Zablah, J.E.; Harris, K.C.; Council on Lifelong Congenital Heart Disease and Heart Health in the Young (Young Hearts); et al. Advancing Wearable Biosensors for Congenital Heart Disease: Patient and Clinician Perspectives: A Science Advisory From the American Heart Association. Circulation 2024, 149, e1134–e1142.

- Alim, A.; Imtiaz, M.H. Wearable Sensors for the Monitoring of Maternal Health—A Systematic Review. Sensors 2023, 23, 2411.

| Titles | Input Data | Types of AI Algorithms | Application | Clinical Relevance |
|---|---|---|---|---|
| A Pseudo-Siamese Feature Fusion Generative Adversarial Network for Synthesizing High-Quality Fetal Four-Chamber Views [16] | Four-chamber view ultrasound images | GAN: Pseudo-Siamese Feature Fusion Generative Adversarial Network (PSFFGAN) | Synthesize echocardiograms | Enrich training database for designing better diagnostic CHD AI tools |
| Diagnosis of fetal total anomalous pulmonary venous connection based on the post-left atrium space ratio using artificial intelligence [17] | Four-chamber view time-resolved ultrasound videos | CNN: DeepLabv3+, FastFCN, PSPNet, and DenseASPP | Segmentation, biometric measurement, and diagnosis of specific abnormality | Diagnosis of fetal total anomalous pulmonary venous connection |
| Deep learning-based differentiation of ventricular septal defect from tetralogy of Fallot in fetal echocardiography images [18] | Ultrasound images | CNN: VGG19, ResNet50, NTS-Net, and WSDAN | Diagnosis of abnormality | Differentiation of ventricular septal defect from tetralogy of Fallot |
| FetalNet: Low-light fetal echocardiography enhancement and dense convolutional network classifier for improving heart defect prediction [19] | Four-chamber view ultrasound images | CNN-based LLIE model | Image enhancement | DL-based image processing to improve AI-based CHD detection |
| An ensemble of neural networks provides expert-level prenatal detection of complex congenital heart disease [20] | Ultrasound images with five standard views | CNN: Ensemble Network | Detection of cardiac views and classification of normal and abnormal hearts | Differentiate complex CHD from normal hearts |
| A multi-task deep learning approach for real-time view classification and quality assessment of echocardiographic images [21] | Ultrasound images with multiple views | A multi-task CNN model with four components: a backbone network, a neck network, a view classification branch, and a quality assessment branch | Selection of high-quality views | Improve automation for view selection in the clinical workflow |
| An intelligent quantification system for fetal heart rhythm assessment: A multicenter prospective study [22] | Ultrasound Pulse Wave Doppler | R-CNN | Calculate fetal cardiac time intervals | Assess fetal rhythm and function |
| A Generic Quality Control Framework for Fetal Ultrasound Cardiac Four-Chamber Planes [23] | Ultrasound images with multiple views | Varying CNN models | Quality assessment of ultrasound images | Improve automation for image selection in the clinical workflow |
| A progressive growing generative adversarial network composed of enhanced style-consistent modulation for fetal ultrasound four-chamber view editing synthesis [24] | Four-chamber view ultrasound images | An enhanced Generative Adversarial Network (GAN) | Synthesize echocardiograms | Enrich training database for designing better diagnostic CHD AI tools |
| Application of machine learning in screening for congenital heart diseases using fetal echocardiography [25] | Manually measured parameters | Random Forest | Detecting CHD | Screen for CHDs |
| Multiview and multiclass image segmentation using deep learning in fetal echocardiography [26] | Ultrasound images with multiple views | V-Net [27] | Heart structure segmentation | Improve the automation for detecting CHDs |
| A deep learning framework for identifying and segmenting three vessels in fetal heart ultrasound images [28] | Ultrasound images with three-vessel view | Varying U-Net [29] models | Segmenting heart structure | Improve the automation for detecting CHDs |
| Image segmentation of the ventricular septum in fetal cardiac ultrasound videos based on deep learning using time-series information [30] | Four-chamber view ultrasound images | CNN: Cropping–Segmentation–Calibration (CSC) | Detection of ventricular septum | Foundation for detecting VSD |
| Prenatal diagnosis of hypoplastic left heart syndrome on ultrasound using artificial intelligence: How does performance compare to a current screening programme? [31] | Four-chamber view ultrasound images | ResNet | Detecting hypoplastic left heart syndrome | Detect hypoplastic left heart syndrome |
| Classification of normal and abnormal fetal heart ultrasound images and identification of ventricular septal defects based on deep learning [32] | Ultrasound images with five standard views | YOLO (version 5) and other CNN models | Classification of normal and abnormal fetal hearts | Screen fetal CHDs |
| Application of artificial intelligence in VSD prenatal diagnosis from fetal heart ultrasound images [33] | Ultrasound images with the four-chamber view and left ventricular outflow view | ResNet-18, DenseNet, and MobileNet | Detect VSD | Auto-detection of VSD |
| Application of Artificial Intelligence in Anatomical Structure Recognition of Standard Section of Fetal Heart [34] | Ultrasound images with five views | U-Y-Net derived from YOLO (version 5) | View recognition of standard ultrasound views | Foundation for detecting CHD |
| Fetal Heart Disease Detection Via Deep Reg Network Based on Ultrasound Images [35] | Not specified | AlexNet, ResNet-50, VGG-16, DenseNet, MobileNet, and RegNet | Characterize normal vs. abnormal fetal hearts | Screen fetal CHDs |
| Prenatal Diagnosis and Fetopsy Validation of Complete Atrioventricular Septal Defects Using the Fetal Intelligent Navigation Echocardiography Method [36] | Four-chamber view ultrasound images | Spatial image correlation | Ultrasound view navigation | Improve visualization of ventricular walls |
| Using Innovative Machine Learning Methods to Screen and Identify Predictors of Congenital Heart Diseases [37] | Self-reported questionnaires and routine clinical laboratory test results | Explainable Boosting Machine | Identify predictors of CHDs | Improve the detection of fetal CHDs |

| Paper | No. of Centers | No. of Vendors | Data Acquisition Protocol | Training Data Size | ASE Category [41] |
|---|---|---|---|---|---|
| Qiao et al. [16] | 1 | N/P | N/P | ~1000 | N/A |
| Wang et al. [17] | 1 | 1 | Minimal | ~300 | Assisted |
| Yu et al. [18] | 1 | N/P | N/P | ~200 | Autonomous |
| Sutarno et al. [19] | 1 | 1 | N/P | ~500 | Assisted |
| Arnaout et al. [20] | 2 | 4 | Yes | ~100,000 | Autonomous |
| Li et al. [21] | 1 | 4 | N/P | ~100,000 | Autonomous |
| Yang et al. [22] | 14 | 2 | Yes | ~10,000 | Autonomous |
| Dong et al. [23] | 1 | N/P | N/P | ~7000 | Assisted |
| Qiao et al. [24] | 1 | N/P | N/P | ~600 | N/A |
| Truong et al. [25] | 1 | 1 | N/P | ~4000 | Autonomous |
| Wong et al. [26] | N/P | N/P | N/P | ~300 | Assisted |
| Yan et al. [28] | 1 | 1 | Minimal | ~500 | Assisted |
| Dozen et al. [30] | 1 | 1 | Minimal | ~600 | Assisted |
| Day et al. [31] | 1 | 1 | N/P | ~10,000 | Autonomous |
| Yang et al. [32] | 1 | 3 | Minimal | ~1800 | Autonomous |
| Li et al. [33] | 1 | 5 | N/P | ~1500 | Autonomous |
| Wu et al. [34] | 1 | 5 | N/P | ~3400 | Assisted |
| Magesh et al. [35] | N/P | N/P | N/P | ~400 | Autonomous |

| Paper | Accuracy | AUC | Sensitivity | Specificity | PPV | NPV |
|---|---|---|---|---|---|---|
| Arnaout et al. [20] | ~100% | 0.99 | 95% | 96% | 20% | 100% |
| Truong et al. [25] | 88% | 0.94 | 85% | 88% | 55% | 97% |
| Yang et al. [32] | 80% | N/P | 90% | N/P | 90% | N/P |
| Magesh et al. [35] | 97% | 0.97 | 92% | 95% | N/P | N/P |
| Meta-analysis of clinical studies [3] | N/P | 0.99 | 68.5% | 99.8% | N/P | N/P |
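The PPV and NPV columns above depend on how common CHD is in the evaluated cohort, not only on sensitivity and specificity, which may help explain why a model with 95% sensitivity and 96% specificity can still show a PPV near 20%. The following is a minimal sketch of that relationship using Bayes' rule. The function name `predictive_values` and the 1% prevalence are illustrative assumptions made here; they are not values or methods reported by the cited studies, whose cohort prevalences may differ.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a binary screening test via Bayes' rule.

    All three arguments are fractions in [0, 1].
    """
    # Expected fractions of the screened population in each outcome cell.
    true_pos = sensitivity * prevalence
    false_neg = (1.0 - sensitivity) * prevalence
    true_neg = specificity * (1.0 - prevalence)
    false_pos = (1.0 - specificity) * (1.0 - prevalence)

    ppv = true_pos / (true_pos + false_pos)  # P(disease | positive test)
    npv = true_neg / (true_neg + false_neg)  # P(no disease | negative test)
    return ppv, npv


# Assumption for illustration only: CHD prevalence of 1% in the cohort.
ASSUMED_PREVALENCE = 0.01

# Sensitivity and specificity taken from the Arnaout et al. [20] row.
ppv, npv = predictive_values(0.95, 0.96, ASSUMED_PREVALENCE)
print(f"PPV = {ppv:.1%}, NPV = {npv:.1%}")  # PPV = 19.3%, NPV = 99.9%
```

Under this assumed prevalence, the computed values land close to the tabulated 20% PPV and 100% NPV for that row. Rerunning the function with a higher prevalence (for example, an enriched referral cohort) raises the PPV considerably, which is one reason PPV figures from different studies are hard to compare directly.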

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the Lithuanian University of Health Sciences. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Suha, K.T.; Lubenow, H.; Soria-Zurita, S.; Haw, M.; Vettukattil, J.; Jiang, J. The Artificial Intelligence-Enhanced Echocardiographic Detection of Congenital Heart Defects in the Fetus: A Mini-Review. Medicina 2025, 61, 561. https://doi.org/10.3390/medicina61040561