Abstract

Background and Objectives: Device-assisted enteroscopy (DAE) has a significant role in approaching enteric lesions. Endoscopic observation of ulcers or erosions is frequent and can be associated with many nosological entities, namely Crohn’s disease. Although the application of artificial intelligence (AI) is growing exponentially in various image-based gastroenterology procedures, there is still a lack of evidence of the technical feasibility and clinical applicability of AI in DAE. This study aimed to develop and test a multi-brand convolutional neural network (CNN)-based algorithm for automatically detecting ulcers and erosions in DAE. Materials and Methods: A unicentric retrospective study was conducted for the development of a CNN, based on a total of 250 DAE exams. A total of 6772 images were used, of which 633 were considered ulcers or erosions after double-validation. Data were divided into a training and a validation set, the latter being used for the performance assessment of the model. Our primary outcome measures were sensitivity, specificity, accuracy, positive predictive value (PPV), negative predictive value (NPV), and area under the precision–recall curve (AUC-PR). Results: Sensitivity, specificity, PPV, and NPV were respectively 88.5%, 99.7%, 96.4%, and 98.9%. The algorithm’s accuracy was 98.7%. The AUC-PR was 1.00. The CNN processed 293.6 frames per second, enabling AI live application in a real-life clinical setting in DAE. Conclusion: To the best of our knowledge, this is the first study regarding the automatic multi-brand panendoscopic detection of ulcers and erosions throughout the digestive tract during DAE, overcoming a relevant interoperability challenge. Our results highlight that using a CNN to detect this type of lesion is associated with high overall accuracy. The development of binary CNNs for automatically detecting clinically relevant endoscopic findings and assessing endoscopic inflammatory activity is a relevant step toward AI application in digestive endoscopy, particularly for panendoscopic evaluation.

1. Introduction

Ulcers and erosions are the most prevalent lesions of the small bowel [1,2]. Indeed, ulcerative and erosive lesions of the small intestine have been easier to detect since the introduction of capsule endoscopy (CE) in the early 2000s [3]. The etiologies of these lesions are varied and include nonsteroidal anti-inflammatory drugs, as well as many diseases, such as Crohn’s disease, refractory celiac disease, neoplasms, and infections [4].

While the introduction of CE exponentially increased the capacity for mucosal evaluation and detection of bowel lesions, its purely diagnostic nature demanded the development of therapeutic deep enteroscopy procedures. Device-assisted enteroscopy (DAE), which comprises single- and double-balloon enteroscopy, plus motorized spiral enteroscopy, enabled gastroenterologists to sample tissue and treat lesions [5]. DAE has a significant role in the approach to small intestinal lesions, mainly after a positive CE [5,6]. Although the clinical setting and characterization of ulcerative lesions in CE may suggest probable etiologies, no distinctive macroscopic aspect can be used to make a definitive diagnosis. Moreover, reviewing CE videos is monotonous and prone to errors and missed lesions. DAE might be crucial in these cases by contributing to a correct final histological diagnosis (e.g., excluding infectious enteropathy or the presence of malignant cells).

Artificial intelligence (AI) application in gastroenterology is growing exponentially in many areas, such as upper and lower gastrointestinal endoscopy and hepatology [7,8,9,10]. Implementing AI algorithms for CE evaluation has also shown promising improvements, making the assessment of intestinal mucosa less time-consuming [4,11]. Despite significant advances in efficiency and identification of lesions by applying deep-learning technologies in CE, there is still a lack of evidence of the relevance of AI during DAE. Although AI application during DAE has been studied for the automatic detection of vascular and protruding enteric lesions, no proof-of-concept studies on ulcerative lesions have been conducted [12,13].

This study aimed to develop and test a CNN-based algorithm for automatically detecting ulcers and erosions in DAE.

2. Materials and Methods

2.1. Study Design and Data Collection

Consecutive patients who underwent DAE between January 2020 and July 2022, regardless of intubation direction, at a single tertiary center (Centro Hospitalar Universitário São João, in Porto, Portugal) were included (n = 250). In that period, DAE was performed by two experienced endoscopists using the double-balloon enteroscopy system Fujifilm EN-580T (n = 152) and the single-balloon enteroscopy system Olympus EVIS EXERA II SIF-Q180 (n = 98). Recorded videos from all these procedures were collected retrospectively. Images from the esophagus, stomach, small intestine, and colon were recovered. Each video was segmented into still frames using dedicated video software (VLC media player, Paris, France).

Each extracted frame was then evaluated for the presence of ulcers or erosions, defined as mucosal breaks with white or yellow bases, surrounded by reddish or pink mucosa [14]. Images were divided into two groups, one with normal mucosa and the other with ulcerative lesions. The final classification of each frame required a consensus between three experienced gastroenterologists. When a common agreement was not possible, the frame was excluded. A total of 6772 images were used, of which 633 contained ulcers or erosions.

2.2. Development of the CNN and Performance Analysis

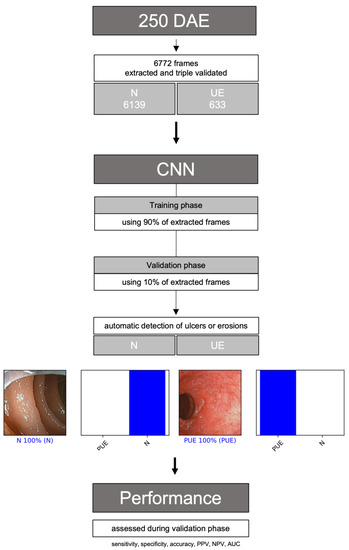

A deep-learning convolutional neural network (CNN) was constructed to detect gastrointestinal erosions or ulcers automatically, enabling panendoscopic inflammatory assessment. From the complete data set, 90% (n = 6094) was used to develop and train the algorithm. The remaining 10% (n = 678) was used to validate CNN performance independently. Figure 1 represents a graphical flowchart of the study design.
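The 90%/10% partition described above can be illustrated with a minimal sketch. It assumes a frame-level, class-stratified random split; the text does not state whether the split was stratified or grouped by patient, so the helper `stratified_split` and its rounding rule are hypothetical, and the resulting counts differ slightly from the reported 6094/678.

```python
import random


def stratified_split(labels, test_fraction=0.10, seed=0):
    """Return (train_idx, test_idx), holding out test_fraction of each class."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Class counts reported in the text: 6772 frames, 633 with ulcers or erosions.
# Label 1 = ulcers or erosions, label 0 = normal mucosa.
labels = [1] * 633 + [0] * (6772 - 633)
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```

Stratifying keeps the proportion of ulcer or erosion frames similar in both partitions, which matters when positives make up less than 10% of the data.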

Figure 1.

Flowchart describing the study design. N: normal mucosa; UE: ulcers or erosions; PPV: positive predictive value; NPV: negative predictive value; AUC-PR: area under the precision–recall curve.

We used the Xception model to build the CNN. Its weights were pretrained on ImageNet, a large-scale image dataset created for developing object recognition software. We kept its convolutional layers to transfer their learning to our model. We removed the last fully connected layers and replaced them with our own classifier of dense and dropout layers. We used two blocks, each consisting of a fully connected layer followed by a dropout layer with a 0.2 drop rate. Finally, we added a dense layer whose size was defined by the number of categories to be classified (two: normal or ulcers/erosions). The learning rate (varying between 0.0000625 and 0.0005), batch size (128), and number of epochs (20) were set by trial and error. The model was prepared using the PyTorch and scikit-learn libraries [15,16]. Standard data augmentation techniques, including image rotations and mirroring, were applied during the training stage. The computers were powered by a 2.1 GHz Intel® Xeon® Gold 6130 processor (Intel, Santa Clara, CA, USA) and two NVIDIA Quadro® RTX™ 8000 graphics processing units (NVIDIA Corp, Santa Clara, CA, USA).
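The augmentation step mentioned above (rotations and mirroring) can be sketched on a toy image. This is only an illustration in plain Python on a nested list; the rotation angles and the exact transforms used in the study are not stated, so the 90-degree steps and the function names below are assumptions.

```python
def mirror_horizontal(image):
    """Flip a 2D image (list of rows) left to right."""
    return [list(reversed(row)) for row in image]


def rotate_90_clockwise(image):
    """Rotate a 2D image (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image):
    """Return the original image plus three rotations and one mirrored copy."""
    variants = [image]
    rotated = image
    for _ in range(3):
        rotated = rotate_90_clockwise(rotated)
        variants.append(rotated)
    variants.append(mirror_horizontal(image))
    return variants


# Toy 2x2 "frame"; a real frame would be a height x width x channel array.
frame = [[1, 2],
         [3, 4]]
for variant in augment(frame):
    print(variant)
```

Each variant keeps the label of the original frame, so the training set grows without additional annotation.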

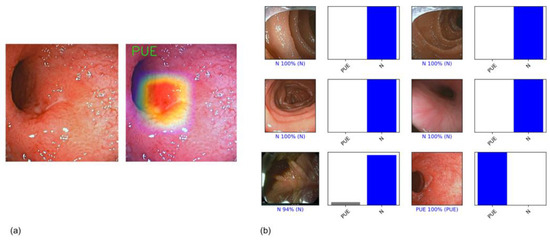

For each frame, the algorithm calculated the probability of normal mucosa and the probability of mucosa with ulcers or erosions. Each frame was assigned to the category with the higher probability (Figure 2). The algorithm’s final classification of each image was compared with the corresponding evaluation provided by the three experts, the latter being considered the gold standard. Our primary outcome measures were sensitivity, specificity, accuracy, positive predictive value (PPV), negative predictive value (NPV), and area under the precision–recall curve (AUC-PR). The interpretation of a conventional receiver operating characteristic (ROC) curve can be misleading for an imbalanced dataset such as ours, which has a much higher proportion of normal mucosa frames (negatives) than frames containing ulcerative lesions (positives) [17]. A large number of true negatives keeps the false-positive rate low, which can shift the ROC curve to the left without necessarily implying improved diagnostic accuracy. Thus, we decided to use the precision–recall metric, since it is unaffected by true negatives.
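The argument for the precision–recall metric can be checked with a short sketch. The counts below are hypothetical and chosen only for illustration. Increasing the number of true negatives lowers the false-positive rate (the ROC x-axis) while precision and recall stay the same.

```python
def false_positive_rate(fp, tn):
    """ROC x-axis: share of negatives wrongly flagged as positive."""
    return fp / (fp + tn)


def precision(tp, fp):
    """Share of positive predictions that are correct (PPV)."""
    return tp / (tp + fp)


def recall(tp, fn):
    """Share of actual positives that are detected (sensitivity)."""
    return tp / (tp + fn)


# Hypothetical counts, not taken from the study.
tp, fp, fn = 50, 10, 10
for tn in (100, 1000, 10000):
    print(
        f"TN={tn:>5}  FPR={false_positive_rate(fp, tn):.4f}  "
        f"precision={precision(tp, fp):.4f}  recall={recall(tp, fn):.4f}"
    )
```

Neither precision nor recall takes the true-negative count as an input, which is why a large pool of normal frames cannot inflate them.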

Figure 2.

(a) Example of a heatmap highlighting the CNN’s detection of an ulcerative lesion; (b) output obtained from CNN application. The bars represent the probabilities estimated by the algorithm for normal mucosa and for ulceration or erosion. Each frame was assigned to the category with the higher probability. The corresponding evaluation provided by the three expert endoscopists, which was considered the gold standard, is written between parentheses. In the case of a correct prediction, the bar was painted blue. In the case of an incorrect one, the bar was painted red.

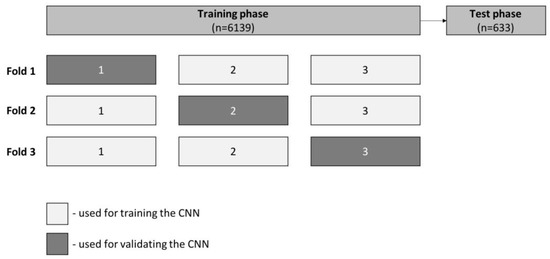

During the training stage, which included 90% of the full dataset, we performed three-fold cross-validation. In this experiment, the training dataset was divided into three even-sized groups. Within each fold, two groups were used for training (66.7%) and one group for validation (33.3%). The groups used for training and validation varied from fold to fold, as shown in Figure 3. Sensitivity, specificity, accuracy, PPV, NPV and AUC were calculated for each run. The remaining 10% of the dataset was used to test CNN performance independently (test phase), using the model specifications associated with the best-trained model in the previous phase. We also evaluated its computational performance by calculating the algorithm processing time of all frames from the validation set. We used scikit-learn v0.22.2 to perform statistical analysis [18].

Figure 3.

Schematic representation of the three-fold cross-validation performed during the training phase. Frames from the total training set were randomly divided into three equal groups. We used two of the three groups (66.7%) for training and one (33.3%) for model validation. We performed three runs with these proportions, varying the groups used for training and validation. The remaining 10% of the data set was used to test CNN performance independently, during the test phase.
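The three-fold scheme described above can be sketched as follows. This is a minimal illustration in plain Python, assuming a simple random partition of frame indices; the helper name `k_fold_splits` and the seed are hypothetical, and the study’s own partitioning code is not described in the text.

```python
import random


def k_fold_splits(n_items, n_folds=3, seed=0):
    """Yield (train_idx, val_idx) pairs, one per fold."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into n_folds groups of near-equal size.
    folds = [indices[i::n_folds] for i in range(n_folds)]
    for k in range(n_folds):
        val_idx = folds[k]
        train_idx = [i for j, fold in enumerate(folds) if j != k for i in fold]
        yield train_idx, val_idx


# 6094 is the training-set size reported in the text.
for run, (train_idx, val_idx) in enumerate(k_fold_splits(6094), start=1):
    print(f"run {run}: train={len(train_idx)} validation={len(val_idx)}")
```

Every frame is used for validation exactly once across the three runs, so the per-run metrics together cover the whole training set.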

3. Results

3.1. CNN Development during Training Phase

A total of 6772 images were used, of which 633 were considered ulcers or erosions after double-validation. From the full data set, 90% (n = 6094) was used to develop and train the algorithm.

The results of the three-fold cross-validation experiment are shown in Table 1. The mean model sensitivity and specificity were 89.7% (CI 95%: 84.2–95.6%) and 99.5% (CI 95%: 99.2–99.8%). The mean CNN accuracy was 98.6% (CI 95%: 98.1–99.1%).

Table 1.

Three-fold cross-validation, which was performed during the training phase.

3.2. CNN Performance during Test Phase

The remaining 10% (n = 678) of data were used to validate CNN performance independently.

The distribution of the main outcomes is shown in Table 2. The sensitivity and specificity of the model were 88.5% and 99.7%, respectively. The PPV was 96.4% and the NPV was 98.9%. Overall, our algorithm accuracy was 98.7%. The AUC-PR predicting enteric ulcers and erosions was 1.00. The CNN algorithm had a reading rate of approximately 293.6 frames per second.
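The reported metrics follow directly from a 2 × 2 confusion matrix. The sketch below uses counts that are an assumption: they were inferred as one set of integers that sums to the 678 test frames and reproduces the reported percentages, and they were not copied from Table 2.

```python
def binary_metrics(tp, fn, fp, tn):
    """Compute standard diagnostic metrics from confusion-matrix counts."""
    total = tp + fn + fp + tn
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "accuracy": (tp + tn) / total,
    }


# ASSUMPTION: inferred counts consistent with the reported percentages,
# not values taken from Table 2.
counts = {"tp": 54, "fn": 7, "fp": 2, "tn": 615}
for name, value in binary_metrics(**counts).items():
    print(f"{name}: {100 * value:.1f}%")
```

With these counts the sketch returns 88.5%, 99.7%, 96.4%, 98.9%, and 98.7% for sensitivity, specificity, PPV, NPV, and accuracy, matching the values reported above.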

Table 2.

Confusion matrix of the test set versus expert classification (considered the final diagnosis).

4. Discussion

This is the first study evaluating AI applicability in the automatic detection of ulcers and erosions in DAE. Our findings suggest that the automatic detection of ulcerative lesions with this algorithm can be performed effectively, as it achieved high sensitivity and specificity and may be feasible during real-time procedures. Furthermore, this model is the first to automatically detect ulcers and erosions throughout the entire digestive tract during enteroscopy, paving the way to panendoscopic AI analysis.

Our findings show that the performance of our algorithm was high, with sensitivity and specificity of 88.5% and 99.7%, respectively, and an overall diagnostic accuracy of 98.7%. This is combined with a high frame-processing capacity, with a reading rate of approximately 294 frames per second. This supports the real-time applicability of the AI model for endoscopic analysis, increasing the technology readiness level (TRL) of the developed AI algorithm. In addition, our CNN was constructed from data acquired with two different DAE systems, which may also increase the TRL of the technology and its usefulness in real-life clinical practice, addressing significant interoperability challenges.
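The real-time claim can be put in perspective with simple arithmetic. The sketch below converts the reported reading rate into a per-frame processing time and compares it with the time available per frame at two video frame rates. The 30 and 60 frames-per-second figures are assumptions used for illustration; the text does not state the frame rate of the enteroscopy video feed.

```python
FRAMES_PER_SECOND = 293.6  # reading rate reported in the text
TEST_FRAMES = 678          # test-set size reported in the text

latency_ms = 1000 / FRAMES_PER_SECOND
test_set_seconds = TEST_FRAMES / FRAMES_PER_SECOND
print(f"per-frame processing time: {latency_ms:.2f} ms")
print(f"time to read the test set: {test_set_seconds:.2f} s")

# ASSUMPTION: typical video frame rates, not values from the study.
for video_fps in (30, 60):
    budget_ms = 1000 / video_fps
    headroom = budget_ms / latency_ms
    print(f"{video_fps} fps feed: {budget_ms:.1f} ms per frame, headroom x{headroom:.1f}")
```

This estimate covers model inference on stored frames only; video capture, preprocessing, and display would add latency in a live setting.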

These findings are consistent with previous research on the automatic detection of ulcerative lesions using other gastroenterology procedures. Regarding CE, Aoki et al. demonstrated good diagnostic performance in automatically detecting ulcers and erosions, with a sensitivity of 88.2%, specificity of 90.9%, and accuracy of 90.8% [4]. Another group reported the development of a CNN for detecting mucosal breaks during CE, which can also predict their bleeding potential, with sensitivity, specificity and accuracy of 90.8%, 97.1% and 95.1%, respectively [19]. AI models also achieve good diagnostic performance in colon capsule endoscopy (CCE), with a sensitivity of 96.9%, specificity of 99.9%, and accuracy of 99.6% for automatically detecting ulcers and erosions [20]. AI not only enables effective panendoscopic noninvasive evaluation, but may also offer valuable guidance in device-assisted enteroscopy, supporting the entire endoscopic diagnostic workflow.

This study has some limitations. First, this was a unicentric study based on retrospective data, which might be associated with selection bias. Second, the number of frames used to develop and test this model was relatively small, which compromises the external validity of our results. Although our results look promising, the risk of overfitting should not be overlooked. Therefore, a final conclusion cannot be reached regarding the model’s applicability in everyday practice. Multicentric studies are needed, with more extensive and prospective data collection, to ensure appropriate dataset variability. Moreover, it should be noted that the anatomopathological diagnosis of Crohn’s disease remains challenging, since there is a high proportion of false negatives when biopsies are taken during enteroscopy [21]. Clinical assessment and non-endoscopic imaging techniques remain crucial, particularly when a strong suspicion exists despite a negative microscopic result. AI applications during device-assisted enteroscopy, namely AI-guided biopsies, may increase the diagnostic yield of this technique, particularly for Crohn’s disease.

5. Conclusions

AI application is growing in various image-based gastroenterology procedures, considering that it might lead to higher-quality endoscopy and better clinical decisions while still being potentially cost-effective. This study is a continuation of our previous foundational studies regarding AI and DAE. It suggests that automatic detection of ulcers and erosions using a CNN during DAE is associated with high overall accuracy. Furthermore, implementation of this CNN during DAE might contribute to a higher detection rate of ulcerative lesions through real-time feedback during the procedure, which in turn may contribute to lower inter-observer variability and a lower false-negative rate.

Author Contributions

Conceptualization, M.M. (Miguel Mascarenhas), P.A., H.C. and G.M.; methodology, M.M. (Miguel Martins), M.M. (Miguel Mascarenhas), J.A., T.R., P.C. and F.M.; software, J.F.; validation, M.M. (Miguel Mascarenhas), P.A., H.C. and G.M.; formal analysis, M.M. (Miguel Martins), M.M. (Miguel Mascarenhas) and J.F.; investigation, M.M. (Miguel Martins), M.M. (Miguel Mascarenhas), J.A., T.R., P.C. and F.M.; resources, M.M. (Miguel Mascarenhas), P.A., H.C. and G.M.; data curation, J.F.; writing—original draft preparation, M.M. (Miguel Martins) and M.M. (Miguel Mascarenhas), with equal contribution; writing—review and editing, M.M. (Miguel Martins), M.M. (Miguel Mascarenhas), J.A., T.R., P.C., F.M., P.A., H.C. and G.M.; visualization, P.C. and F.M.; supervision, P.A., H.C. and G.M.; project administration, M.M. (Miguel Mascarenhas); funding acquisition, M.M. (Miguel Mascarenhas). All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by FUNDAÇÃO PARA A CIÊNCIA E TECNOLOGIA (FCT), grant number CPCA/A0/7363/2020. This entity had no role in study design, data collection, data analysis, preparation of the manuscript, or the decision to publish.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of CENTRO HOSPITALAR UNIVERSITÁRIO SÃO JOÃO (No. CE 188/2021).

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We thank NVIDIA for donating two Quadro RTX 8000 48 GB graphics processing units through their Applied Research Program.

Conflicts of Interest

J.F. is a paid employee of DigestAID-Digestive Artificial Intelligence Development. The other authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Goenka, M.K.; Majumder, S.; Kumar, S.; Sethy, P.K.; Goenka, U. Single center experience of capsule endoscopy in patients with obscure gastrointestinal bleeding. World J. Gastroenterol. 2011, 17, 774–778.

- Shahidi, N.C.; Ou, G.; Svarta, S.; Law, J.K.; Kwok, R.; Tong, J.; Lam, E.C.; Enns, R. Factors associated with positive findings from capsule endoscopy in patients with obscure gastrointestinal bleeding. Clin. Gastroenterol. Hepatol. 2012, 10, 1381–1385.

- Iddan, G.; Meron, G.; Glukhovsky, A.; Swain, P. Wireless capsule endoscopy. Nature 2000, 405, 417.

- Aoki, T.; Yamada, A.; Aoyama, K.; Saito, H.; Tsuboi, A.; Nakada, A.; Niikura, R.; Fujishiro, M.; Oka, S.; Ishihara, S.; et al. Automatic detection of erosions and ulcerations in wireless capsule endoscopy images based on a deep convolutional neural network. Gastrointest. Endosc. 2019, 89, 357–363.e2.

- Pennazio, M.; Rondonotti, E.; Despott, E.J.; Dray, X.; Keuchel, M.; Moreels, T.; Sanders, D.S.; Spada, C.; Carretero, C.; Cortegoso Valdivia, P.; et al. Small-bowel capsule endoscopy and device-assisted enteroscopy for diagnosis and treatment of small-bowel disorders: European Society of Gastrointestinal Endoscopy (ESGE) Guideline—Update 2022. Endoscopy 2023, 55, 58–95.

- Rondonotti, E.; Spada, C.; Adler, S.; May, A.; Despott, E.J.; Koulaouzidis, A.; Panter, S.; Domagk, D.; Fernandez-Urien, I.; Rahmi, G.; et al. Small-bowel capsule endoscopy and device-assisted enteroscopy for diagnosis and treatment of small-bowel disorders: European Society of Gastrointestinal Endoscopy (ESGE) Technical Review. Endoscopy 2018, 50, 423–446.

- Marlicz, W.; Koulaouzidis, G.; Koulaouzidis, A. Artificial Intelligence in Gastroenterology-Walking into the Room of Little Miracles. J. Clin. Med. 2020, 9, 3675.

- Wu, L.; Xu, M.; Jiang, X.; He, X.; Zhang, H.; Ai, Y.; Tong, Q.; Lv, P.; Lu, B.; Guo, M.; et al. Real-time artificial intelligence for detecting focal lesions and diagnosing neoplasms of the stomach by white-light endoscopy (with videos). Gastrointest. Endosc. 2022, 95, 269–280.e6.

- Repici, A.; Badalamenti, M.; Maselli, R.; Correale, L.; Radaelli, F.; Rondonotti, E.; Ferrara, E.; Spadaccini, M.; Alkandari, A.; Fugazza, A.; et al. Efficacy of Real-Time Computer-Aided Detection of Colorectal Neoplasia in a Randomized Trial. Gastroenterology 2020, 159, 512–520.e7.

- Le Berre, C.; Sandborn, W.J.; Aridhi, S.; Devignes, M.D.; Fournier, L.; Smaïl-Tabbone, M.; Danese, S.; Peyrin-Biroulet, L. Application of Artificial Intelligence to Gastroenterology and Hepatology. Gastroenterology 2020, 158, 76–94.e2.

- Aoki, T.; Yamada, A.; Kato, Y.; Saito, H.; Tsuboi, A.; Nakada, A.; Niikura, R.; Fujishiro, M.; Oka, S.; Ishihara, S.; et al. Automatic detection of various abnormalities in capsule endoscopy videos by a deep learning-based system: A multicenter study. Gastrointest. Endosc. 2021, 93, 165–173.e1.

- Mascarenhas Saraiva, M.; Ribeiro, T.; Afonso, J.; Andrade, P.; Cardoso, P.; Ferreira, J.; Cardoso, H.; Macedo, G. Deep Learning and Device-Assisted Enteroscopy: Automatic Detection of Gastrointestinal Angioectasia. Medicina 2021, 57, 1378.

- Cardoso, P.; Mascarenhas Saraiva, M.; Afonso, J.; Ribeiro, T.; Andrade, P.; Ferreira, J.; Cardoso, H.; Macedo, G. Artificial Intelligence and Device-Assisted Enteroscopy: Automatic detection of enteric protruding lesions using a convolutional neural network: Application of AI for detection of protruding lesions in enteroscopy. Clin. Transl. Gastroenterol. 2022, 13, e00514.

- Gralnek, I.M.; Defranchis, R.; Seidman, E.; Leighton, J.A.; Legnani, P.; Lewis, B.S. Development of a capsule endoscopy scoring index for small bowel mucosal inflammatory change. Aliment. Pharmacol. Ther. 2008, 27, 146–154.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019; pp. 8024–8035.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Fu, G.H.; Yi, L.Z.; Pan, J. Tuning model parameters in class-imbalanced learning with precision-recall curve. Biom. J. 2019, 61, 652–664.

- Rodriguez-Diaz, E.; Baffy, G.; Lo, W.K.; Mashimo, H.; Vidyarthi, G.; Mohapatra, S.S.; Singh, S.K. Real-time artificial intelligence-based histologic classification of colorectal polyps with augmented visualization. Gastrointest. Endosc. 2021, 93, 662–670.

- Afonso, J.; Saraiva, M.M.; Ferreira, J.P.S.; Cardoso, H.; Ribeiro, T.; Andrade, P.; Parente, M.; Jorge, R.N.; Macedo, G. Automated detection of ulcers and erosions in capsule endoscopy images using a convolutional neural network. Med. Biol. Eng. Comput. 2022, 60, 719–725.

- Ribeiro, T.; Mascarenhas, M.; Afonso, J.; Cardoso, H.; Andrade, P.; Lopes, S.; Ferreira, J.; Mascarenhas Saraiva, M.; Macedo, G. Artificial intelligence and colon capsule endoscopy: Automatic detection of ulcers and erosions using a convolutional neural network. J. Gastroenterol. Hepatol. 2022, 37, 2282–2288.

- Xin, L.; Liao, Z.; Jiang, Y.P.; Li, Z.S. Indications, detectability, positive findings, total enteroscopy, and complications of diagnostic double-balloon endoscopy: A systematic review of data over the first decade of use. Gastrointest. Endosc. 2011, 74, 563–570.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).