Abstract

Objective: Falciparum malaria is a major global health concern, affecting more than half of the world’s population and causing over half a million deaths annually. Red cell invasion is a crucial step in the parasite’s life cycle, in which the parasite invades human erythrocytes to sustain infection and ensure its survival. Two parasite proteins, Apical Membrane Antigen 1 (AMA-1) and Rhoptry Neck Protein 2 (RON2), are involved in tight junction formation, an essential step in parasite invasion of the red blood cell. Inhibitors of the AMA-1–RON2 interaction block tight junction formation and thereby prevent invasion, which is detrimental to parasite survival. This study leverages machine learning (ML) to predict potential small molecule inhibitors of the AMA-1–RON2 interaction, providing putative antimalarial compounds for further chemotherapeutic exploration.

Method: Data were retrieved from the PubChem database (AID 720542), comprising 364,447 compounds screened for inhibition of the AMA-1–RON2 interaction. Morgan fingerprints were computed, the data were split 80:20 into training and test sets, and the classes in the training data were balanced using the Synthetic Minority Oversampling Technique (SMOTE). Five ML models were developed: Random Forest (RF), Gradient Boost Machines (GBMs), CatBoost (CB), AdaBoost (AB), and Support Vector Machine (SVM). Model performance was evaluated using accuracy, F1-score, and the area under the receiver operating characteristic curve (ROC-AUC), and the models were validated using held-out data and a y-randomization test. An applicability domain analysis using the Tanimoto distance, with a threshold of 0.04, was carried out to define the sample space in which the models predict with confidence.

Results: The GBMs model performed best, achieving an accuracy of 89% and a ROC-AUC of 0.92. CB and RF achieved accuracies of 88% and 87% and ROC-AUC scores of 0.93 and 0.91, respectively.
Conclusions: Once experimentally validated, inhibitors of the AMA-1–RON2 interaction predicted by these models could serve as starting points for next-generation antimalarial drugs. The models were deployed as a web-based application, PLASMOpred.

1. Introduction

Malaria is a life-threatening disease caused by Plasmodium parasites, primarily transmitted through the bites of infected female Anopheles mosquitoes [1,2,3]. Among the five Plasmodium species responsible for malaria in humans, Plasmodium falciparum is the most fatal [4,5,6,7], accounting for the majority of severe malaria cases, mostly in pregnant women and children [8,9,10]. According to the World Health Organization (WHO), malaria caused an estimated 627,000 deaths in 2022, with the vast majority of malaria-associated mortality occurring in sub-Saharan Africa and disproportionately affecting children below the age of five, who comprise 67% of malaria-related deaths [11].

The clinical manifestations of malaria occur during the blood stages of the parasite’s life cycle, when Plasmodium merozoites invade and exit red blood cells (RBCs) [12,13,14,15]. Red cell invasion is essential for the parasite’s survival, the sustenance of infection, and its transmission to mosquito vectors [16,17,18,19]. Erythrocyte invasion involves multiple highly coordinated steps, culminating in the formation of a tight junction, a transient but essential structure that acts as a molecular anchor between the merozoite and the host erythrocyte [20]. Tight junction formation begins with the interaction between Apical Membrane Antigen 1 (AMA-1), located on the merozoite surface, and Rhoptry Neck Protein 2 (RON2), which is secreted by the parasite and embedded into the RBC membrane [21,22]. Following egress from liver cells and entry into the bloodstream, the merozoite attaches to RBC receptors and rapidly reorients so that its apical end, which contains the rhoptry organelles required for invasion, faces the RBC membrane [23,24,25]. This is followed by an interaction between Reticulocyte Binding Protein Homologue 5 (PfRH5) and the host cell surface protein Basigin (CD147) [26,27,28], which triggers the release of rhoptry proteins into the RBC cytoplasm [29]. Among these rhoptry proteins, RON2 integrates into the RBC membrane, creating an extracellular loop that binds specifically to a hydrophobic cleft on AMA-1 [30].

The interaction between AMA-1 and RON2 is pivotal in stabilizing the initial contact between the parasite and the host cell. It provides the structural framework for the tight junction, a junctional complex that migrates along the surface of the merozoite as the parasite invades the RBC [31,32]. This movement is powered by the parasite’s actomyosin motor, which generates the mechanical force needed to penetrate the RBC membrane [20,33]. Other rhoptry proteins, such as RON4 and RON5, contribute to stabilizing the tight junction by interacting with the host cell’s cytoskeleton, ensuring the parasite’s successful invasion [29,30].

Although artemisinin-based combination therapies (ACTs) have significantly reduced malaria morbidity and mortality, the emergence of artemisinin resistance underscores the urgent need for new therapeutic strategies [34,35]. Various malaria vaccines have also been developed to combat the spread of malaria; however, these vaccines have shown limited efficacy against the parasite, particularly Plasmodium falciparum [36,37]. This highlights the need for innovative drug discovery approaches. Notably, the AMA-1–RON2 interaction is indispensable for the invasion process because no alternative pathway is known, making it an ideal therapeutic target [21,22]. Disrupting this interaction would prevent tight junction formation, halting the merozoite’s entry into RBCs and breaking the parasite’s life cycle, which offers a promising approach for malaria drug discovery [22,29].

Experimental techniques such as quantitative high-throughput screening (qHTS) and structure–activity studies have been used to identify small molecule inhibitors of the AMA-1–RON2 interaction [21,22]. However, these methods are time-consuming, costly, and require significant expertise [38]. Computational approaches, particularly machine learning (ML), provide a faster, cost-effective alternative for identifying promising drug candidates [39,40]. Several studies have applied ML to drug discovery for various diseases [41,42,43,44,45]. In the field of malaria, ML has been applied to the early diagnosis of malaria based on clinical information [46]. For the AMA-1–RON2 complex, ML models have been developed to predict small molecule inhibitors of the invasion complex [47]. ML has also been applied in a study that designed chemical inhibitors of the hydrophobic cleft of the AMA-1 protein in Toxoplasma gondii [48]. Another study developed QSAR (Quantitative Structure–Activity Relationship) models for predicting antimalarial molecules targeting the Plasmodium falciparum apicoplast organelle [49]. Although there have been major strides in the application of ML to the prediction of antimalarial compounds, there is still a need to explore other models to consolidate efforts toward the early prediction of potential small molecule inhibitors of the AMA-1–RON2 invasion complex.

This study applied supervised ML techniques to predict small molecule inhibitors of the AMA-1–RON2 interaction. Using qHTS data, several ML models, namely Random Forest (RF), Gradient Boost Machines (GBMs), CatBoost (CB), and AdaBoost (AB), were trained and evaluated. A Support Vector Machine (SVM) was also developed to assess how a non-tree-based algorithm performs relative to the tree-based models. The models were then validated to assess their robustness. The top-performing models were deployed as a web-based application, PLASMOpred, to support the prediction of antimalarial compounds and the identification of novel therapeutic compounds.

2. Results and Discussion

2.1. Data Collection and Pre-Processing

The dataset obtained from PubChem (AID 720542) was generated by qHTS to evaluate compound activity [50,51,52]. Compounds were categorized as active (scores 40–100), inconclusive (scores 1–39), or inactive (score 0) [53]. After the inconclusive compounds were removed, the working dataset comprised 738 active and 356,551 inactive compounds. Compounds were represented as canonical SMILES (input), with the activity outcome as the output, and the data contained no duplicates or null values. The activity outcomes were converted into binary labels, with active represented as 1 and inactive as 0 [51,54,55]. To evaluate the models on new data, a validation dataset of 20 active and 20 inactive compounds was randomly selected from the processed dataset. These compounds were reserved for the final evaluation of the models and were not included in the training or testing phases. To manage computational complexity and address the extreme imbalance between the active and inactive classes, the inactive class was randomly undersampled, reducing the dataset to 2000 compounds [49]. This final dataset comprised 718 active and 1282 inactive compounds, retaining all remaining active compounds while reducing the models’ bias toward the majority class.
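
The filtering, hold-out, and undersampling steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions: records are (SMILES, outcome) pairs using PubChem’s "Active"/"Inactive"/"Inconclusive" outcome strings, the seed is arbitrary, and the function name `prepare_dataset` is hypothetical rather than the authors’ code.

```python
import random

# PubChem outcome strings mapped to binary labels; "Inconclusive" (and any other
# value) is dropped, mirroring the filtering described in the text.
LABELS = {"Active": 1, "Inactive": 0}


def prepare_dataset(records, n_holdout_per_class=20, n_total=2000, seed=0):
    """Split (smiles, outcome) records into an undersampled working set and a
    balanced held-out validation set (hypothetical helper)."""
    seen, data = set(), []
    for smiles, outcome in records:
        if not smiles or outcome not in LABELS or smiles in seen:
            continue  # skip missing SMILES, inconclusive entries, and duplicates
        seen.add(smiles)
        data.append((smiles, LABELS[outcome]))

    rng = random.Random(seed)
    actives = [r for r in data if r[1] == 1]
    inactives = [r for r in data if r[1] == 0]
    rng.shuffle(actives)
    rng.shuffle(inactives)

    # Hold out a balanced validation set before any undersampling or training.
    k = n_holdout_per_class
    validation = actives[:k] + inactives[:k]
    actives, inactives = actives[k:], inactives[k:]

    # Random undersampling: keep every remaining active and draw just enough
    # inactives to reach n_total compounds.
    n_inactive = n_total - len(actives)
    if not 0 <= n_inactive <= len(inactives):
        raise ValueError("n_total is incompatible with the class sizes")
    working = actives + rng.sample(inactives, n_inactive)
    rng.shuffle(working)
    return working, validation
```

With the reported counts (738 actives), holding out 20 actives leaves 718, so the sketch draws 2000 - 718 = 1282 inactives, matching the final working dataset.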

2.2. Descriptor Generation and Feature Engineering

Morgan fingerprints were generated using RDKit 2024.9.4 to encode molecular structures into binary vectors that capture chemical features within a defined radius [56,57]. Each fingerprint comprised 2048 bits (features), enabling the ML models to analyze molecular structures and identify patterns associated with activity [56,57,58,59]. To further enhance model performance, a variance threshold of 0.1 was applied to remove low-variance features, such as columns containing all zeros or all ones, which contribute little to model learning and prediction because they lack variability [60]. Only one feature was removed by this filter, reducing the dimensionality of the dataset to 2047 features.
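
A variance filter of this kind can be sketched as below. It keeps columns whose variance is strictly greater than the threshold, which is how scikit-learn’s `VarianceThreshold` selects features. For a 0/1 bit set in a fraction p of compounds, the variance is p(1 - p), so a threshold of 0.1 retains only bits set in roughly 11% to 89% of compounds. The function name is hypothetical.

```python
def variance_threshold(rows, threshold=0.1):
    """Return the indices of columns whose population variance exceeds `threshold`.

    `rows` is a list of equal-length fingerprint bit vectors (0/1 values).
    """
    n = len(rows)
    kept = []
    for j in range(len(rows[0])):
        column = [row[j] for row in rows]
        mean = sum(column) / n
        variance = sum((x - mean) ** 2 for x in column) / n
        if variance > threshold:  # constant columns (all 0 or all 1) have variance 0
            kept.append(j)
    return kept


rows = [[0, 1, 1], [0, 0, 1], [0, 1, 0], [0, 0, 0]]
print(variance_threshold(rows))  # [1, 2]: column 0 is constant and is dropped
```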

2.3. Data Splitting and Data Balancing

The processed dataset was split into training and test sets in an 80:20 ratio, with a random state of 18 to ensure reproducibility. The split resulted in a training set of 1568 compounds and a test set of 392 compounds, each with 2047 features. Using the same split (random state 18) for all ML models allowed their performance metrics to be compared directly. To address the class imbalance between active and inactive compounds, the Synthetic Minority Oversampling Technique (SMOTE) was applied to the training dataset. SMOTE generates synthetic samples of the minority class (active compounds) by interpolating between existing active compounds and their nearest active neighbors, thereby balancing the class distribution [61,62,63]. This helps the models predict active and inactive compounds without bias toward the majority class. Applying SMOTE increased the training dataset to 1998 compounds through the addition of 430 synthetic active compounds.
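
SMOTE interpolation can be sketched as follows: for each synthetic sample, pick a random minority (active) compound, choose one of its k nearest minority neighbours, and place a new point at a random position on the segment between them. This is a minimal pure-Python illustration rather than a library implementation such as imbalanced-learn’s `SMOTE`; `k=5` mirrors that library’s default `k_neighbors`, and the function name is hypothetical. Interpolating 0/1 fingerprint bits yields fractional feature values.

```python
import math
import random


def smote(minority, n_synthetic, k=5, seed=0):
    """Generate `n_synthetic` SMOTE-style samples from a list of minority feature vectors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        x = minority[i]
        # k nearest minority neighbours of x (Euclidean distance), excluding x itself
        others = [m for j, m in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda m: math.dist(x, m))[:k]
        nn = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from x to nn, in [0, 1)
        synthetic.append([xi + gap * (ni - xi) for xi, ni in zip(x, nn)])
    return synthetic
```

The number of samples to generate is the majority count minus the minority count. If the balanced training set holds 999 compounds per class (1998 in total), the 430 synthetic actives imply 569 original actives, since 569 + 999 = 1568 and 999 - 569 = 430.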

2.4. Development and Evaluation of Machine Learning Models

This study utilized five ML models (RF, GBMs, CB, AB, and SVM), with an emphasis on tree-based models because of their effective handling of class imbalance [64,65,66]. Hyperparameter tuning with grid search optimized each model by identifying the best hyperparameter combination [67,68,69]. For the RF model, the optimal parameters were a maximum number of features of 5, 185 estimators, and a random state of 2. The GBMs model achieved its best performance with 150 estimators, a learning rate of 0.2, a maximum depth of 9, and a random state of 5. For the CB model, the best hyperparameter combination consisted of 185 iterations, a depth of 5, a learning rate of 0.1, and a random seed of 2. The AB model performed optimally with a base estimator configured with 185 estimators, a learning rate of 1.0, and a random state of 2. The SVM model achieved its best results using a polynomial kernel with a degree of 3, a coefficient value of 1.0, and probability set to True.
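
The tuned settings above map naturally onto constructor keywords. The following configuration sketch assumes scikit-learn’s `RandomForestClassifier`, `GradientBoostingClassifier`, `AdaBoostClassifier`, and `SVC`, and CatBoost’s `CatBoostClassifier`. The text does not name the libraries, so the keyword mapping (for example, reading the SVM "coefficient" as `coef0` and AB’s 185 estimators as `n_estimators`) is an assumption.

```python
# Reported optimal hyperparameters. Keyword names ASSUME scikit-learn constructors
# (RF, GBMs, AB, SVM) and CatBoost's CatBoostClassifier (CB).
BEST_PARAMS = {
    "RF": {"max_features": 5, "n_estimators": 185, "random_state": 2},
    "GBMs": {"n_estimators": 150, "learning_rate": 0.2, "max_depth": 9, "random_state": 5},
    "CB": {"iterations": 185, "depth": 5, "learning_rate": 0.1, "random_seed": 2},
    "AB": {"n_estimators": 185, "learning_rate": 1.0, "random_state": 2},
    # "coefficient" read as the polynomial kernel's independent term, coef0
    "SVM": {"kernel": "poly", "degree": 3, "coef0": 1.0, "probability": True},
}


def build_models(params=BEST_PARAMS):
    """Instantiate the five classifiers (requires scikit-learn and catboost)."""
    from catboost import CatBoostClassifier
    from sklearn.ensemble import (AdaBoostClassifier, GradientBoostingClassifier,
                                  RandomForestClassifier)
    from sklearn.svm import SVC

    return {
        "RF": RandomForestClassifier(**params["RF"]),
        "GBMs": GradientBoostingClassifier(**params["GBMs"]),
        "CB": CatBoostClassifier(**params["CB"], verbose=0),
        "AB": AdaBoostClassifier(**params["AB"]),
        "SVM": SVC(**params["SVM"]),
    }
```

Because the SVM relies on distances, its inputs would normally be scaled before fitting, as the Methods section notes.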

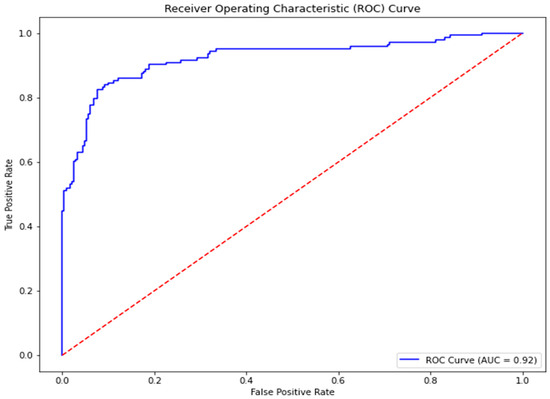

The models were evaluated using specificity, sensitivity, F1-score, precision, accuracy, and the area under the receiver operating characteristic curve (ROC-AUC) (Table 1). Sensitivity measures a model’s ability to correctly identify active compounds (true positives) among all actual active compounds [70,71,72]. CB achieved the highest sensitivity (0.87), indicating strong performance in detecting active compounds. It was followed by SVM (0.86) and by GBMs and RF (0.85 each). AB had the lowest sensitivity (0.77), suggesting that it may be less effective at identifying active compounds and may miss some true positives. Specificity measures a model’s ability to correctly identify inactive compounds (true negatives) among all actual inactive compounds [70,71,72]. AB achieved the highest specificity (0.92), demonstrating its effectiveness in classifying inactive compounds. GBMs, CB, and RF also performed strongly, with scores of 0.90, 0.88, and 0.88, respectively, whereas SVM scored the lowest (0.80). Precision is the proportion of true positives among all positive predictions made by the model [73]. AB achieved the highest precision (0.85), followed closely by GBMs (0.84). RF and CB scored 0.81 and 0.80, respectively, while SVM had the lowest precision (0.71), indicating a higher rate of false positives than the other models, in line with its low specificity. The F1-score is the harmonic mean of precision and sensitivity, combining the two into a single measure of performance [73,74,75]. CB and GBMs achieved an F1-score of 0.84 and RF scored 0.83, indicating a strong overall balance between precision and sensitivity.
AB and SVM scored slightly lower, at 0.81 and 0.78, respectively. Accuracy is the proportion of correctly predicted compounds (active and inactive) out of the total number of predictions [76,77]. GBMs achieved the highest accuracy (0.89), followed by CB (0.88) and RF (0.87). AB scored 0.86, while SVM had the lowest accuracy (0.82), reflecting comparatively weaker overall performance. The ROC-AUC measures a model’s ability to distinguish between active and inactive compounds across all classification thresholds [78,79]. CB and AB achieved the highest ROC-AUC scores, both at 0.93, highlighting their strong discriminative capability. GBMs and RF also performed strongly, with scores of 0.92 (Figure 1) and 0.91, respectively, while SVM scored slightly lower at 0.90. The performance of the models is comparable to that reported in other similar ML studies [46,47,48,49]. In one study, the best-performing RF model achieved an accuracy of 0.93 and a ROC-AUC of 0.84 [46]; however, its F1-score and precision were low, at 0.19 and 0.3, respectively, probably because of data limitations [46]. In the study that identified inhibitors of the AMA-1–RON2 interaction using PubChem AID 720542, the best-performing model was RF, which achieved specificity and ROC-AUC scores of 0.8 and 0.86, respectively [47]. In comparison, the RF model in this study achieved a sensitivity of 0.85, a specificity of 0.88, and a ROC-AUC of 0.91. These differences might be attributed to the use of random undersampling and SMOTE to balance the active and inactive classes in this study.
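
The ROC-AUC scores reported above can be read as the probability that a randomly chosen active compound receives a higher predicted score than a randomly chosen inactive one (the Mann–Whitney interpretation). A minimal sketch, assuming a predicted probability is available for each test compound; the function name is hypothetical, and in practice a library routine such as scikit-learn’s `roc_auc_score` would be used instead of this quadratic-time loop.

```python
def roc_auc(labels, scores):
    """ROC-AUC as P(score of a random active > score of a random inactive); ties count 1/2."""
    actives = [s for y, s in zip(labels, scores) if y == 1]
    inactives = [s for y, s in zip(labels, scores) if y == 0]
    if not actives or not inactives:
        raise ValueError("ROC-AUC needs at least one active and one inactive compound")
    wins = 0.0
    for a in actives:
        for i in inactives:
            if a > i:
                wins += 1.0
            elif a == i:
                wins += 0.5
    return wins / (len(actives) * len(inactives))


print(roc_auc([1, 1, 0, 0], [0.9, 0.2, 0.5, 0.1]))  # 0.75
```

Here the active scored 0.9 outranks both inactives and the active scored 0.2 outranks one of them, giving 3 of 4 winning pairs.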

Table 1.

Evaluation of the optimized ML models for RF, GBMs, CB, AB and SVM.

Figure 1.

Receiver operating characteristic (ROC) curve for the best-performing model, GBMs. The ROC-AUC obtained was 0.92.

In the study that developed QSAR models to predict antimalarial molecules targeting the apicoplast organelle of Plasmodium falciparum, the best-performing model was also RF, which achieved accuracy, ROC-AUC, and sensitivity scores of 0.87, 0.88, and 0.86, respectively [49]. These results are comparable to those of this study. Additionally, an SVM model in that study achieved accuracy, ROC-AUC, and sensitivity scores of 0.83, 0.88, and 0.82, respectively [49], which are also comparable to those of the SVM model in this study (Table 1). Although that study predicted potential antimalarial molecules against a different target [49], the methods it used to handle class imbalance, such as undersampling, were consistent with those in this study. A key difference was its use of Recursive Feature Elimination (RFE) [49], whereas this study employed a variance threshold.

GBMs likely outperformed the other models because of their ability to capture complex feature interactions: each new tree is fitted to the residual errors (the gradients of the loss) of the current ensemble, so the model iteratively refines weak learners and concentrates on the samples it currently predicts poorly, improving generalization. The weaker performance of SVM was likely due to its sensitivity to large datasets and imbalanced classes. Unlike tree-based models, which adaptively handle features, SVM relies on finding an optimal decision boundary, which can be challenging when class distributions overlap.

2.5. Validation of Machine Learning Models

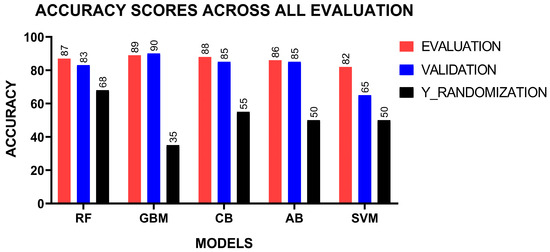

The models were validated using the 40 compounds (20 active and 20 inactive) held out at the beginning of the study. As in the training and testing phases, Morgan fingerprints computed from the canonical SMILES served as input features, and the activity outcome served as the output. Each model was evaluated on this new dataset based on accuracy. Additionally, a y-randomization test was performed on the validation dataset by shuffling the activity outcome labels. The expected outcome of this test is reduced model performance, which indicates that the models’ predictive ability is not due to chance correlation and helps rule out overfitting [80,81]. The degree of performance reduction depends on the extent of shuffling for each model. The performance of the models during validation was comparable to their initial evaluation on the test dataset (Figure 2), suggesting that they maintain high performance on previously unseen data. GBMs achieved the highest accuracy (0.90), followed by CB and AB (0.85 each) (Table 2). RF achieved an accuracy of 0.83, whereas SVM had a lower accuracy of 0.65. This supports the hypothesis that tree-based methods are well suited to this task. The accuracy scores in the y-randomization test decreased for all models, which supports the effectiveness of the models and argues against overfitting.
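
The y-randomization test described above shuffles the validation labels while the trained models’ predictions stay fixed. A minimal sketch of that procedure, assuming predictions `y_pred` are already available; the function names and the number of shuffling rounds are hypothetical. A more common variant of y-randomization instead refits the model on shuffled training labels and checks that the refitted models score poorly.

```python
import random


def accuracy(y_true, y_pred):
    """Fraction of positions where the label and the prediction agree."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def y_randomization(y_true, y_pred, n_rounds=100, seed=0):
    """Return (accuracy on true labels, mean accuracy on shuffled labels).

    Predictions stay fixed; only the reference labels are permuted, which keeps
    the class counts but breaks the link between compounds and outcomes.
    """
    rng = random.Random(seed)
    observed = accuracy(y_true, y_pred)
    shuffled_scores = []
    for _ in range(n_rounds):
        y_shuffled = list(y_true)
        rng.shuffle(y_shuffled)
        shuffled_scores.append(accuracy(y_shuffled, y_pred))
    return observed, sum(shuffled_scores) / n_rounds
```

For a balanced 40-compound set, a model that is right on 36 compounds scores 0.90 on the true labels, while its mean accuracy on shuffled labels should fall to around 0.5, the level expected by chance.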

Figure 2.

Assessment of the models on the test data, the held-out validation data, and the y-randomized validation data.

Table 2.

Evaluation of the optimized ML models on held-out data.

2.6. Model Deployment

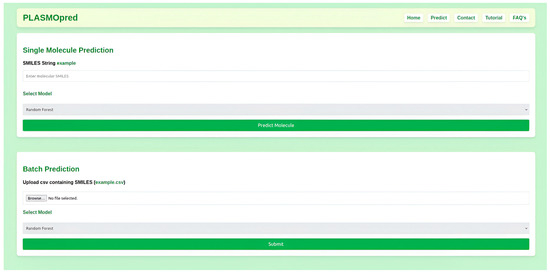

For the three top-performing models (RF, GBMs, and CB), a user-friendly platform was provided that allows researchers to input chemical structural data, such as SMILES, and receive results instantly (Figure 3, Figure 4 and Figure 5). The web application was built with scalable technologies intended to handle many simultaneous users while maintaining computational efficiency. It also includes data visualization features that present the model results in a more accessible format. This deployment enhances the accessibility of the models. The web application is accessible at http://197.255.126.13:8081 (accessed on 14 April 2025).

Figure 3.

A display of the homepage of the deployed web application PLASMOpred.

Figure 4.

A graphical user interface of PLASMOpred for the prediction of antimalarial activity.

Figure 5.

Frequently asked questions page of the deployed web application PLASMOpred.

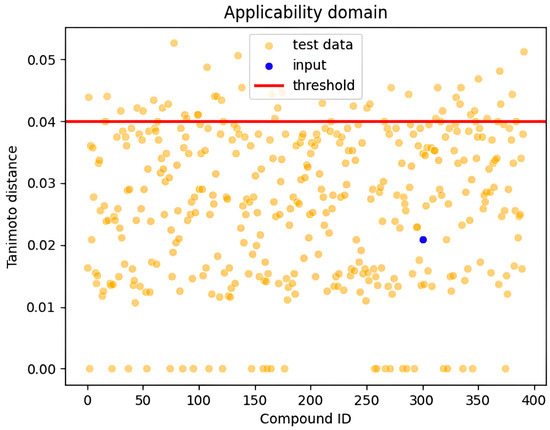

3. Applicability Domain

In this study, the applicability domain (AD) was defined using the Tanimoto distance with a threshold of 0.04. The applicability domain is the region of response and chemical structure space in which a model makes predictions with a given reliability; relevant considerations include boundaries, likelihood, applicability, and reliability [82,83]. Common approaches for determining the applicability domain examine molecular classes, features, and agreement in both the chemical and response domains, typically using distance-based measures within these domains [82,83]. The Tanimoto distance is a widely used chemoinformatics metric that quantifies the dissimilarity between the feature vectors of new samples and those of the training data [82,83]. Samples with a Tanimoto distance below the threshold of 0.04 were considered to fall within the applicability domain, where the model showed reliable predictive performance [82]. In contrast, samples whose Tanimoto distance exceeded this threshold were deemed outside the applicability domain, and the model’s predictions for them were considered less reliable. The threshold of 0.04 was chosen based on the model’s performance on validation data, so that predictions remain valid for most practical scenarios while flagging the risk of inaccuracy for samples that differ substantially from the training set. This approach balances model confidence against generalizability [82,83]. Figure 6 illustrates the applicability domain of our samples, showing the distribution of Tanimoto distances for the training set with a vertical line at the selected threshold of 0.04; compounds below the threshold have reliable predictions, whereas predictions for compounds above it should be treated with caution.

Figure 6.

Visualization of the applicability domains showing all test data points and the input point.

The Tanimoto distance is calculated as follows:

$$ d_T(A, B) = 1 - \frac{|A \cap B|}{|A| + |B| - |A \cap B|} \qquad (1) $$

where A and B are two sets (or feature vectors), |A ∩ B| is the number of elements common to both sets, and |A| and |B| are the sizes of the individual sets.
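
A minimal sketch of the Tanimoto distance and an applicability-domain check, representing each fingerprint as the set of its "on" bit indices (matching the set definition of A and B above). The text does not say how a query’s distances to the training set are aggregated, so this sketch assumes a nearest-neighbour rule: a query is in domain when its closest training compound lies within the 0.04 threshold. The function names are hypothetical.

```python
def tanimoto_distance(a, b):
    """Tanimoto distance 1 - |A∩B| / (|A| + |B| - |A∩B|) between two sets of on-bits."""
    a, b = set(a), set(b)
    common = len(a & b)
    union = len(a) + len(b) - common
    if union == 0:
        return 0.0  # two empty fingerprints are treated as identical here
    return 1.0 - common / union


def in_domain(query_bits, training_fps, threshold=0.04):
    """Nearest-neighbour AD check (assumed rule): returns (inside, nearest_distance)."""
    nearest = min(tanimoto_distance(query_bits, fp) for fp in training_fps)
    # The text calls distances "below" 0.04 in-domain; equality is treated as in-domain here.
    return nearest <= threshold, nearest


print(tanimoto_distance({1, 2, 3, 4}, {1, 2, 3}))  # 0.25
print(in_domain({1, 2}, [{3}]))  # (False, 1.0)
```

With RDKit fingerprints, `DataStructs.BulkTanimotoSimilarity` returns similarities against a list of fingerprints, and the distance is one minus each value.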

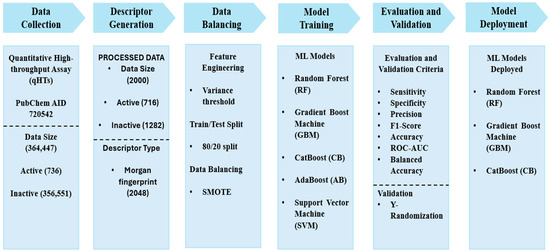

4. Materials and Methods

4.1. Data Collection

The dataset used for the classification of inhibitors was sourced from PubChem (AID 720542). The assay was performed by the National Center for Advancing Translational Sciences (NCATS) within the National Institutes of Health (NIH) in the United States and comprised 364,447 compounds screened through qHTS. The purpose of the qHTS assay was to identify inhibitors of the AMA-1–RON2 interaction for the development of antimalarial drug leads [53]. qHTS is an advanced screening technique that tests compounds over a range of concentrations to generate detailed dose–response curves [50,51]. Compounds in the dataset were classified based on an activity score derived from efficacy, AC50, and curve class. PubChem activity scores ranged from 40 to 100 for active compounds and from 1 to 39 for inconclusive compounds, and were 0 for inactive compounds. Fit_LogAC50 was used to scale scores within each curve class, providing a relative ranking of compound activity [53]. Of the total dataset, 738 compounds were classified as active, 7158 as inconclusive, and the remaining 356,551 as inactive (Figure 7). Before descriptors were generated, the dataset was pre-processed to ensure accurate and reliable models. The PubChem canonical SMILES column, which contains the SMILES notation for each compound, was used as the input, and the PubChem activity outcome column, which contains the activity outcome for each compound, was used as the output. The activity outcome was converted from strings to integers, with 1 (active) and 0 (inactive) [51], and inconclusive entries were excluded, leaving only active and inactive compounds in the dataset. To create a validation dataset, 20 active and 20 inactive compounds were randomly held out from the processed dataset.
Given the large dataset size, the inactive compounds were randomly downsampled using the NumPy (version 1.21.4) Python library to create a combined dataset of 2000 compounds (718 active and 1282 inactive), which managed the high computational demand and addressed the extreme class imbalance. This reduced dataset was used for training and testing the ML models.

Figure 7.

Methodology schema highlighting the project pipeline. It shows information on data collection, descriptor generation, data balance, model training, evaluation, validation and deployment.

4.2. Descriptor Generation and Feature Engineering

Morgan fingerprints were computed for each compound, resulting in 2048 features. These fingerprints, which encode molecular structures as numerical bit vectors, served as input features for the models [56,57]. To improve performance and minimize redundancy, a variance threshold of 0.1 was applied to remove low-variance features [60]. The dataset was then split in an 80:20 ratio, with 80% of the data allocated for training and 20% for testing, as this ratio provided the best model performance [84,85]. To address the class imbalance in the training data, SMOTE was employed [61,86]. SMOTE was applied to the active compounds, the minority class, generating 430 synthetic samples to produce a balanced training set.
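
A random 80:20 split of this kind can be sketched as below. It rounds the test size up, as scikit-learn’s `train_test_split` does for a fractional `test_size`. The seed of 18 reuses the reported random state only as an illustration: Python’s `random` module will not reproduce the same partition as a NumPy-based splitter. The function name is hypothetical.

```python
import math
import random


def split_indices(n, test_fraction=0.2, seed=18):
    """Randomly partition indices 0..n-1 into (train, test) index lists."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_test = math.ceil(test_fraction * n)  # test size rounded up
    return indices[n_test:], indices[:n_test]


train, test = split_indices(1960)
print(len(train), len(test))  # 1568 392
```

The same index lists would be reused for every model so that their metrics are computed on identical test compounds.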

4.3. Development and Evaluation of Machine Learning Models

Five ML models (RF, GBMs, CB, AB, and SVM) were developed as web servers for the prediction of small molecule inhibitors [87,88,89]. The tree-based methods (RF, GBMs, CB, and AB) were hypothesized to fit the dataset better because of their ability to handle complex feature interactions and class imbalance effectively [64]. The SVM model was included as a model with a different classification mechanism. Each model was built and optimized using hyperparameters specific to its architecture. RF was constructed with parameters such as the maximum number of features, the number of estimators, and the random state [90]. GBMs were trained using the number of estimators, maximum depth, learning rate, and random state [91,92,93]. For CB, the parameters included iterations, depth, learning rate, and random seed [94,95]. The AB model was trained with the number of estimators, learning rate, and random state [96,97,98]. For the SVM, the training data were scaled because the algorithm relies on distance-based metrics [99,100], and the model was built using parameters including kernel, degree, probability, and coefficient [101,102,103]. Hyperparameter optimization was performed for each model using a grid search to identify the best combination of parameters [67]. For the RF, GBMs, and AB models, the number of estimators was searched from 50 to 300 in steps of 5, while the maximum depth ranged from 3 to 10 for all models that used it. The CB, AB, and GBMs models incorporated a learning rate, which was searched within 0.01 to 0.3. For RF, the minimum samples per leaf ranged from 1 to 6 in steps of 1, while the minimum samples per split ranged from 2 to 11 in steps of 2. For the SVM, the degree ranged from 2 to 5 in steps of 1, while the coefficient ranged from 0.0 to 2.0.
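
Exhaustive grid search over such ranges can be sketched as follows, using the GBMs ranges from the text (50 to 300 estimators in steps of 5, maximum depth 3 to 10, learning rate between 0.01 and 0.3). The learning-rate step is not reported, so the five values listed are hypothetical, giving 51 × 8 × 5 = 2,040 combinations. `score_fn` stands in for whatever validation score was maximized (for example, a cross-validated accuracy as computed by scikit-learn’s `GridSearchCV`); the names are illustrative.

```python
import itertools

# GBMs search space from the text; the learning-rate values are a HYPOTHETICAL
# discretization of the reported 0.01-0.3 range.
GBM_GRID = {
    "n_estimators": list(range(50, 301, 5)),
    "max_depth": list(range(3, 11)),
    "learning_rate": [0.01, 0.05, 0.1, 0.2, 0.3],
}


def grid_search(grid, score_fn):
    """Evaluate every parameter combination; return (best_params, best_score)."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)  # e.g. mean cross-validated accuracy
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score
```

The reported GBMs optimum (150 estimators, depth 9, learning rate 0.2) lies inside this grid.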
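The optimized models were evaluated using several classification metrics, including specificity, sensitivity, F1-score, precision, accuracy, and ROC-AUC [78,104,105,106]. These metrics (Equations (2)–(6)) provide a comprehensive assessment of the models’ performance and suitability for the prediction task.

$$ \text{Specificity} = \frac{TN}{TN + FP} \qquad (2) $$

$$ \text{Sensitivity} = \frac{TP}{TP + FN} \qquad (3) $$

$$ \text{F1-score} = \frac{2 \times \text{Precision} \times \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}} \qquad (4) $$

$$ \text{Precision} = \frac{TP}{TP + FP} \qquad (5) $$

$$ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (6) $$

Note: TP, TN, FP, and FN represent true positives, true negatives, false positives, and false negatives, respectively.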
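
The threshold-based metrics listed above can all be computed from the four confusion-matrix counts. A minimal sketch; the function names are hypothetical, and the sketch does not guard against empty denominators (for example, a model that predicts no actives has undefined precision).

```python
def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP, FN for binary labels (1 = active, 0 = inactive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def classification_metrics(tp, tn, fp, fn):
    """Specificity, sensitivity, precision, F1-score, and accuracy from counts."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return {"sensitivity": sensitivity, "specificity": specificity,
            "precision": precision, "f1": f1, "accuracy": accuracy}
```

For a hypothetical 40-compound set with TP = 17, TN = 19, FP = 1, and FN = 3, this gives a sensitivity of 0.85, a specificity of 0.95, and an accuracy of 0.90.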

4.4. Validation of Machine Learning Models

The optimized ML models were validated using a held-out dataset of 20 active and 20 inactive compounds. This dataset was entirely excluded from the training process and was used to evaluate the models’ performance on new, unseen data. The held-out validation aimed to assess the models’ generalizability and robustness in predicting the activity of novel compounds. To further validate the models and check for overfitting, a y-randomization test was conducted. In this test, the activity outcomes (outputs) were randomly shuffled while the descriptors (inputs) were kept intact [80,81]. The models were then re-evaluated on this randomized dataset, and a significant decrease in performance was taken to confirm the models’ reliability and rule out overfitting. An applicability domain analysis was also carried out using the Tanimoto distance with a threshold of 0.04 to define the chemical space in which the models can make accurate predictions.

4.5. Model Deployment

The models were deployed as a web application, making them accessible for users to perform predictions. The front end of the web application was built using Hypertext Markup Language (HTML) and Cascading Style Sheets (CSS), which together provide an intuitive and visually appealing user interface. HTML provides the structural framework of the application, defining how content such as text, forms, and buttons is organized on the web page, while CSS controls the layout, colors, fonts, and overall styling of the interface. For the back end, Python version 3.10.0 and Flask were used to implement the logic and functionality of the application. Python handles the core predictive computations, including pre-processing user-submitted data and running the ML models [107]. Flask provides the application’s back end, enabling communication between the front end and the back end and managing Hypertext Transfer Protocol (HTTP) requests and responses [108]. Additionally, JavaScript was incorporated to enhance the interactivity of the application, enabling dynamic features such as updates and interactive components, thereby improving the user experience [109].
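
The back-end flow described above (receive SMILES, run a model, return results over HTTP) can be sketched independently of the web framework. This is a hypothetical request handler, not PLASMOpred’s actual code: the JSON schema, the 0.5 decision threshold, and the `models` callables are assumptions, and featurization (computing Morgan fingerprints) is assumed to happen inside each callable. The trailing comment shows how a Flask route might wrap it.

```python
import json


def handle_predict(body, models, threshold=0.5):
    """Turn a JSON request body into a (status_code, json_bytes) response.

    Expected request (hypothetical schema): {"smiles": ["CCO", ...], "model": "GBMs"}.
    `models` maps a model name to a callable that takes one SMILES string and
    returns a probability of activity.
    """
    def reply(status, obj):
        return status, json.dumps(obj).encode("utf-8")

    try:
        payload = json.loads(body)
    except ValueError:  # covers JSONDecodeError and undecodable bytes
        return reply(400, {"error": "request body is not valid JSON"})
    if not isinstance(payload, dict):
        return reply(400, {"error": "expected a JSON object"})

    name = payload.get("model")
    smiles_list = payload.get("smiles")
    if not isinstance(name, str) or name not in models:
        return reply(400, {"error": f"unknown model: {name!r}"})
    if (not isinstance(smiles_list, list) or not smiles_list
            or not all(isinstance(s, str) and s.strip() for s in smiles_list)):
        return reply(400, {"error": "'smiles' must be a non-empty list of strings"})

    predictions = []
    for smiles in smiles_list:
        probability = float(models[name](smiles))
        predictions.append({
            "smiles": smiles,
            "probability": probability,
            "label": int(probability >= threshold),  # 1 = predicted inhibitor
        })
    return reply(200, {"model": name, "predictions": predictions})


# A Flask route could wrap this function, e.g.:
# @app.post("/predict")
# def predict():
#     status, body = handle_predict(request.get_data(), MODELS)
#     return Response(body, status=status, mimetype="application/json")
```

Keeping validation and prediction in a plain function makes the logic testable without starting a server.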

5. Limitations

This study employed random undersampling to address the significant class imbalance and enhance computational efficiency. Random undersampling reduces the size of the majority class (inactive compounds) by randomly removing a portion of its instances. This technique is widely used in ML to balance datasets and prevent models from being biased toward the majority class. It can also help mitigate overfitting by ensuring that the classifier does not learn a decision boundary heavily skewed toward the majority class [110]. However, a key limitation of this approach is the potential loss of valuable information. Because the process randomly removes inactive compounds, it may discard instances that contain critical patterns or edge cases needed to distinguish between active and inactive compounds. This loss can impair the model’s ability to generalize to unseen data. In addition, the models’ predictions require experimental validation to corroborate the computed biological activity. In future work, larger datasets without class imbalance may be used to optimize and enhance the performance of the models.

6. Conclusions

The study developed five ML models (RF, GBMs, CB, AB, and SVM) using bioactivity data sourced from the PubChem database to predict potential small molecule inhibitors of the AMA-1–RON2 interaction, which is essential for erythrocyte invasion by Plasmodium falciparum. Morgan fingerprints of the compounds were calculated, yielding 2047 features after variance filtering, which were used to train the predictive models. GBMs emerged as the best model, with an accuracy of 0.89 on the test set (0.90 on the held-out validation set) and a ROC-AUC score of 0.92. CB and AB achieved the highest ROC-AUC scores, at 0.93 each. The models were validated using a held-out dataset and y-randomization tests, which supported their robustness and argued against overfitting. The optimized models were deployed as a web application, PLASMOpred, providing a user-friendly interface to support the prediction of AMA-1–RON2 inhibitors. These models enhance the ability to identify putative inhibitors and contribute to computer-aided antimalarial drug discovery.

Author Contributions

H.E.M.-B. conceptualized the project. The machine learning work, including data processing, model building, evaluation, and validation, was carried out by E.L. The model deployment was carried out by G.A. and E.L.; O.A. was responsible for hosting and maintaining the web application on the University of Ghana server. The manuscript was written by E.L. under the supervision of H.E.M.-B. with contributions from J.O., G.A., B.O., G.H., P.O.S., and D.S.Y.A.; H.E.M.-B., S.K.K., and W.A.M.III supervised the project. All authors have read and agreed to the published version of the manuscript.

Funding

This research project was funded by the Bill and Melinda Gates Foundation under the African Postdoctoral Training Initiative (APTI) in collaboration with the African Academy of Sciences (Grant Number: AAS/APTI2/005, Awardee: Mensah-Brown).

Data Availability Statement

All data utilized and obtained for this project are provided within the manuscript.

Acknowledgments

This document has been produced with the financial assistance of the Bill and Melinda Gates Foundation Investment ID INV-007175 (formerly OPP1191735) through the African Postdoctoral Training Initiative (APTI) programme. APTI is implemented by the African Academy of Sciences with support from the Bill and Melinda Gates Foundation and in partnership with the US National Institutes of Health, where the grantees receive mentorship during their first and second years of the fellowship. The contents of this document are the sole responsibility of the author(s) and can under no circumstances be regarded as reflecting the position of the Bill and Melinda Gates Foundation, the African Academy of Sciences, or the US National Institutes of Health. The authors are grateful to the West African Center for Cell Biology of Infectious Pathogens (WACCBIP) at the University of Ghana for the use of high-performance Linux computers for running the code.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Munisi, D.Z.; Mathania, M.M. Adult Anopheles Mosquito Distribution at a Low and High Malaria Transmission Site in Tanzania. Biomed Res. Int. 2022, 2022, 6098536. [Google Scholar] [CrossRef] [PubMed]

- Ogola, E.O.; Fillinger, U.; Ondiba, I.M.; Villinger, J.; Masiga, D.K.; Torto, B.; Tchouassi, D.P. Insights into malaria transmission among Anopheles funestus mosquitoes, Kenya. Parasites Vectors 2018, 11, 577. [Google Scholar] [CrossRef] [PubMed]

- Scheiner, M.; Burda, P.C.; Ingmundson, A. Moving on: How malaria parasites exit the liver. Mol. Microbiol. 2024, 121, 328–340. [Google Scholar] [CrossRef] [PubMed]

- Garcia, L.S. Malaria. Clin. Lab. Med. 2010, 30, 93–129. [Google Scholar] [CrossRef]

- Maeno, Y.; Tuyen Quang, N.; Culleton, R.; Kawai, S.; Masuda, G.; Nakazawa, S.; Marchand, R.P. Humans frequently exposed to a range of non-human primate malaria parasite species through the bites of Anopheles dirus mosquitoes in South-central Vietnam. Parasites Vectors 2015, 8, 376. [Google Scholar] [CrossRef]

- Kho, S.; Anstey, N.M.; Barber, B.E.; Piera, K.; William, T.; Kenangalem, E.; McCarthy, J.S.; Jang, I.K.; Domingo, G.J.; Britton, S.; et al. Diagnostic performance of a 5-plex malaria immunoassay in regions co-endemic for Plasmodium falciparum, P. vivax, P. knowlesi, P. malariae and P. ovale. Sci. Rep. 2022, 12, 7286. [Google Scholar] [CrossRef]

- Anwar, M. Introduction: An Overview of Malaria and Plasmodium. In Drug Targets for Plasmodium Falciparum: Historic to Future Perspectives; Springer: Singapore, 2024; pp. 1–17. [Google Scholar] [CrossRef]

- Milner, D.A. Malaria Pathogenesis. Cold Spring Harb. Perspect. Med. 2018, 8, a025569. [Google Scholar] [CrossRef]

- White, N.J. Anaemia and malaria. Malar. J. 2018, 17, 371. [Google Scholar] [CrossRef]

- White, N.J. Severe malaria. Malar. J. 2022, 21, 284. [Google Scholar] [CrossRef]

- Shin, H.-I.; Ku, B.; Jung, H.; Lee, S.; Lee, S.-Y.; Ju, J.-W.; Kim, J.; Lee, H.-I. 2023 World Malaria Report (Status of World Malaria in 2022). Public Heal. Wkly. Rep. 2024, 17, 1351–1377. [Google Scholar] [CrossRef]

- Tewari, S.G.; Swift, R.P.; Reifman, J.; Prigge, S.T.; Wallqvist, A. Metabolic alterations in the erythrocyte during blood-stage development of the malaria parasite. Malar. J. 2020, 19, 94. [Google Scholar] [CrossRef] [PubMed]

- Salinas, N.D.; Tang, W.K.; Tolia, N.H. Blood-Stage Malaria Parasite Antigens: Structure, Function, and Vaccine Potential. J. Mol. Biol. 2019, 431, 4259–4280. [Google Scholar] [CrossRef]

- Balaji, S.; Deshmukh, R.; Trivedi, V. Severe malaria: Biology, clinical manifestation, pathogenesis and consequences. J. Vector Borne Dis. 2020, 57, 1–13. [Google Scholar] [CrossRef]

- Vijayan, A.; Chitnis, C.E. Development of Blood Stage Malaria Vaccines. Methods Mol. Biol. 2019, 2013, 199–218. [Google Scholar] [CrossRef]

- Salamanca, D.R.; Gómez, M.; Camargo, A.; Cuy-Chaparro, L.; Molina-Franky, J.; Reyes, C.; Patarroyo, M.A.; Patarroyo, M.E. Plasmodium falciparum Blood Stage Antimalarial Vaccines: An Analysis of Ongoing Clinical Trials and New Perspectives Related to Synthetic Vaccines. Front. Microbiol. 2019, 10, 2712. [Google Scholar] [CrossRef]

- Hillringhaus, S.; Dasanna, A.K.; Gompper, G.; Fedosov, D.A. Importance of Erythrocyte Deformability for the Alignment of Malaria Parasite upon Invasion. Biophys. J. 2019, 117, 1202–1214. [Google Scholar] [CrossRef]

- Fikri Heikal, M.; Eka Putra, W.; Sustiprijatno; Hidayatullah, A.; Widiastuti, D.; Lelitawati, M. A Molecular Docking and Dynamics Simulation Study on Prevention of Merozoite Red Blood Cell Invasion by Targeting Plasmodium vivax Duffy Binding Protein with Zingiberaceae Bioactive Compounds. Uniciencia 2024, 38, 326–340. [Google Scholar] [CrossRef]

- King, N.R.; Freire, C.M.; Touhami, J.; Sitbon, M.; Toye, A.M.; Satchwell, T.J. Basigin mediation of Plasmodium falciparum red blood cell invasion does not require its transmembrane domain or interaction with monocarboxylate transporter 1. PLOS Pathog. 2024, 20, e1011989. [Google Scholar] [CrossRef]

- Molina-Franky, J.; Patarroyo, M.E.; Kalkum, M.; Patarroyo, M.A. The Cellular and Molecular Interaction Between Erythrocytes and Plasmodium falciparum Merozoites. Front. Cell. Infect. Microbiol. 2022, 12, 816574. [Google Scholar] [CrossRef]

- Srinivasan, P.; Yasgar, A.; Luci, D.K.; Beatty, W.L.; Hu, X.; Andersen, J.; Narum, D.L.; Moch, J.K.; Sun, H.; Haynes, J.D.; et al. Disrupting malaria parasite AMA1–RON2 interaction with a small molecule prevents erythrocyte invasion. Nat. Commun. 2013, 4, 2261. [Google Scholar] [CrossRef]

- Wang, G.; Drinkwater, N.; Drew, D.R.; MacRaild, C.A.; Chalmers, D.K.; Mohanty, B.; Lim, S.S.; Anders, R.F.; Beeson, J.G.; Thompson, P.E.; et al. Structure–Activity Studies of β-Hairpin Peptide Inhibitors of the Plasmodium falciparum AMA1–RON2 Interaction. J. Mol. Biol. 2016, 428, 3986–3998. [Google Scholar] [CrossRef] [PubMed]

- Wright, K.E.; Hjerrild, K.A.; Bartlett, J.; Douglas, A.D.; Jin, J.; Brown, R.E.; Illingworth, J.J.; Ashfield, R.; Clemmensen, S.B.; De Jongh, W.A.; et al. Structure of malaria invasion protein RH5 with erythrocyte basigin and blocking antibodies. Nature 2014, 515, 427–430. [Google Scholar] [CrossRef]

- Wong, W.; Huang, R.; Menant, S.; Hong, C.; Sandow, J.J.; Birkinshaw, R.W.; Healer, J.; Hodder, A.N.; Kanjee, U.; Tonkin, C.J.; et al. Structure of Plasmodium falciparum Rh5-CyRPA-Ripr invasion complex. Nature 2019, 565, 118–121. [Google Scholar] [CrossRef]

- Ragotte, R.J.; Higgins, M.K.; Draper, S.J. The RH5-CyRPA-Ripr Complex as a Malaria Vaccine Target. Trends Parasitol. 2020, 36, 545. [Google Scholar] [CrossRef]

- Sleebs, B.E.; Jarman, K.E.; Frolich, S.; Wong, W.; Healer, J.; Dai, W.; Lucet, I.S.; Wilson, D.W.; Cowman, A.F. Development and application of a high-throughput screening assay for identification of small molecule inhibitors of the P. falciparum reticulocyte binding-like homologue 5 protein. Int. J. Parasitol. Drugs Drug Resist. 2020, 14, 188–200. [Google Scholar] [CrossRef]

- Volz, J.C.; Yap, A.; Sisquella, X.; Thompson, J.K.; Lim, N.T.Y.; Whitehead, L.W.; Chen, L.; Lampe, M.; Tham, W.H.; Wilson, D.; et al. Essential Role of the PfRh5/PfRipr/CyRPA Complex during Plasmodium falciparum Invasion of Erythrocytes. Cell Host Microbe 2016, 20, 60–71. [Google Scholar] [CrossRef]

- Wanaguru, M.; Liu, W.; Hahn, B.H.; Rayner, J.C.; Wright, G.J. RH5-Basigin interaction plays a major role in the host tropism of Plasmodium falciparum. Proc. Natl. Acad. Sci. USA 2013, 110, 20735–20740. [Google Scholar] [CrossRef]

- Miller, L.H.; Ackerman, H.C.; Su, X.Z.; Wellems, T.E. Malaria biology and disease pathogenesis: Insights for new treatments. Nat. Med. 2013, 19, 156–167. [Google Scholar] [CrossRef]

- Lamarque, M.; Besteiro, S.; Papoin, J.; Roques, M.; Vulliez-Le Normand, B.; Morlon-Guyot, J.; Dubremetz, J.F.; Fauquenoy, S.; Tomavo, S.; Faber, B.W.; et al. The RON2-AMA1 Interaction is a Critical Step in Moving Junction-Dependent Invasion by Apicomplexan Parasites. PLOS Pathog. 2011, 7, e1001276. [Google Scholar] [CrossRef]

- Devine, S.M.; MacRaild, C.A.; Norton, R.S.; Scammells, P.J. Antimalarial drug discovery targeting apical membrane antigen 1. Medchemcomm 2016, 8, 13. [Google Scholar] [CrossRef]

- Srinivasan, P.; Beatty, W.L.; Diouf, A.; Herrera, R.; Ambroggio, X.; Moch, J.K.; Tyler, J.S.; Narum, D.L.; Pierce, S.K.; Boothroyd, J.C.; et al. Binding of Plasmodium merozoite proteins RON2 and AMA1 triggers commitment to invasion. Proc. Natl. Acad. Sci. USA 2011, 108, 13275–13280. [Google Scholar] [CrossRef] [PubMed]

- Straub, K.W.; Peng, E.D.; Hajagos, B.E.; Tyler, J.S.; Bradley, P.J. The Moving Junction Protein RON8 Facilitates Firm Attachment and Host Cell Invasion in Toxoplasma gondii. PLOS Pathog. 2011, 7, e1002007. [Google Scholar] [CrossRef]

- Su, X.Z.; Lane, K.D.; Xia, L.; Sá, J.M.; Wellems, T.E. Plasmodium Genomics and Genetics: New Insights into Malaria Pathogenesis, Drug Resistance, Epidemiology, and Evolution. Clin. Microbiol. Rev. 2019, 32. [Google Scholar] [CrossRef]

- Girgis, S.T.; Adika, E.; Nenyewodey, F.E.; Senoo Jnr, D.K.; Ngoi, J.M.; Bandoh, K.; Lorenz, O.; van de Steeg, G.; Harrott, A.J.R.; Nsoh, S.; et al. Drug resistance and vaccine target surveillance of Plasmodium falciparum using nanopore sequencing in Ghana. Nat. Microbiol. 2023, 8, 2365–2377. [Google Scholar] [CrossRef] [PubMed]

- Duffy, P.E.; Patrick Gorres, J. Malaria vaccines since 2000: Progress, priorities, products. npj Vaccines 2020, 5, 48. [Google Scholar] [CrossRef]

- de Almeida, M.E.M.; de Vasconcelos, M.G.S.; Tarragô, A.M.; Mariúba, L.A.M. Circumsporozoite Surface Protein-based malaria vaccines: A review. Rev. Inst. Med. Trop. Sao Paulo 2021, 63, e11. [Google Scholar] [CrossRef]

- Shockley, K.R. Quantitative high-throughput screening data analysis: Challenges and recent advances. Drug Discov. Today 2015, 20, 296–300. [Google Scholar] [CrossRef]

- Vamathevan, J.; Clark, D.; Czodrowski, P.; Dunham, I.; Ferran, E.; Lee, G.; Li, B.; Madabhushi, A.; Shah, P.; Spitzer, M.; et al. Applications of machine learning in drug discovery and development. Nat. Rev. Drug Discov. 2019, 18, 463–477. [Google Scholar] [CrossRef]

- Lavecchia, A. Machine-learning approaches in drug discovery: Methods and applications. Drug Discov. Today 2015, 20, 318–331. [Google Scholar] [CrossRef]

- Hochreiter, S.; Klambauer, G.; Rarey, M. Machine Learning in Drug Discovery. J. Chem. Inf. Model. 2018, 58, 1723–1724. [Google Scholar] [CrossRef]

- Dara, S.; Dhamercherla, S.; Jadav, S.S.; Babu, C.M.; Ahsan, M.J. Machine Learning in Drug Discovery: A Review. Artif. Intell. Rev. 2021, 55, 1947–1999. [Google Scholar] [CrossRef] [PubMed]

- Kwofie, S.K.; Adams, J.; Broni, E.; Enninful, K.S.; Agoni, C.; Soliman, M.E.S.; Wilson, M.D. Artificial Intelligence, Machine Learning, and Big Data for Ebola Virus Drug Discovery. Pharmaceuticals 2023, 16, 332. [Google Scholar] [CrossRef]

- Agyapong, O.; Miller, W.A.; Wilson, M.D.; Kwofie, S.K. Development of a proteochemometric-based support vector machine model for predicting bioactive molecules of tubulin receptors. Mol. Divers. 2022, 26, 2231–2242. [Google Scholar] [CrossRef]

- Adams, J.; Agyenkwa-Mawuli, K.; Agyapong, O.; Wilson, M.D.; Kwofie, S.K. EBOLApred: A Machine Learning-Based Web Application for Predicting Cell Entry Inhibitors of the Ebola Virus. Comput. Biol. Chem. 2022, 101, 107766. [Google Scholar] [CrossRef]

- Lee, Y.W.; Choi, J.W.; Shin, E.H. Machine learning model for predicting malaria using clinical information. Comput. Biol. Med. 2021, 129, 104151. [Google Scholar] [CrossRef]

- Maindola, P.; Jamal, S.; Grover, A. Cheminformatics Based Machine Learning Models for AMA1-RON2 Abrogators for Inhibiting Plasmodium falciparum Erythrocyte Invasion. Mol. Inform. 2015, 34, 655–664. [Google Scholar] [CrossRef]

- Vetrivel, U.; Muralikumar, S.; Mahalakshmi, B.; Lily Therese, K.; Madhavan, H.; Alameen, M.; Thirumudi, I. Multilevel Precision-Based Rational Design of Chemical Inhibitors Targeting the Hydrophobic Cleft of Toxoplasma gondii Apical Membrane Antigen 1 (AMA1). Genomics Inform. 2016, 14, 53. [Google Scholar] [CrossRef]

- Bharti, D.R.; Lynn, A.M. QSAR based predictive modeling for anti-malarial molecules. Bioinformation 2017, 13, 154. [Google Scholar] [CrossRef]

- Inglese, J.; Auld, D.S.; Jadhav, A.; Johnson, R.L.; Simeonov, A.; Yasgar, A.; Zheng, W.; Austin, C.P. Quantitative high-throughput screening: A titration-based approach that efficiently identifies biological activities in large chemical libraries. Proc. Natl. Acad. Sci. USA 2006, 103, 11473–11478. [Google Scholar] [CrossRef]

- Yasgar, A.; Shinn, P.; Jadhav, A.; Auld, D.; Michael, S.; Zheng, W.; Austin, C.P.; Inglese, J.; Simeonov, A. Compound Management for Quantitative High-Throughput Screening. J. Lab. Autom. 2008, 13, 79–89. [Google Scholar] [CrossRef]

- Huang, R. A quantitative high-throughput screening data analysis pipeline for activity profiling. Methods Mol. Biol. 2016, 1473, 111–122. [Google Scholar] [CrossRef] [PubMed]

- AID 720542—qHTS for Inhibitors of AMA1-RON.; Towards Development of Antimalarial Drug Lead: Primary Screen—PubChem. Available online: https://pubchem.ncbi.nlm.nih.gov/bioassay/720542 (accessed on 16 February 2025).

- Severa, W.; Vineyard, C.M.; Dellana, R.; Verzi, S.J.; Aimone, J.B. Training deep neural networks for binary communication with the Whetstone method. Nat. Mach. Intell. 2019, 1, 86–94. [Google Scholar] [CrossRef]

- Korshunova, M.; Huang, N.; Capuzzi, S.; Radchenko, D.S.; Savych, O.; Moroz, Y.S.; Wells, C.I.; Willson, T.M.; Tropsha, A.; Isayev, O. Generative and reinforcement learning approaches for the automated de novo design of bioactive compounds. Commun. Chem. 2022, 5, 129. [Google Scholar] [CrossRef] [PubMed]

- Zhou, H.; Skolnick, J. Utility of the Morgan Fingerprint in Structure-Based Virtual Ligand Screening. J. Phys. Chem. B 2024, 128, 5363–5370. [Google Scholar] [CrossRef]

- Zhong, S.; Guan, X. Count-Based Morgan Fingerprint: A More Efficient and Interpretable Molecular Representation in Developing Machine Learning-Based Predictive Regression Models for Water Contaminants’ Activities and Properties. Environ. Sci. Technol. 2023, 57, 18193–18202. [Google Scholar] [CrossRef]

- Morgan, L.O.; Johnson, M.; Cornelison, J.B.; Isaac, C.V.; deJong, J.L.; Prahlow, J.A. Autopsy Fingerprint Technique Using Fingerprint Powder. J. Forensic Sci. 2018, 63, 262–265. [Google Scholar] [CrossRef]

- Ehiro, T. Descriptor generation from Morgan fingerprint using persistent homology. SAR QSAR Environ. Res. 2024, 35, 31–51. [Google Scholar] [CrossRef]

- Cherrington, M.; Thabtah, F.; Lu, J.; Xu, Q. Feature selection: Filter methods performance challenges. In Proceedings of the 2019 International Conference on Computer and Information Sciences ICCIS, Sakaka, Saudi Arabia, 3–4 April 2019. [Google Scholar] [CrossRef]

- Blagus, R.; Lusa, L. SMOTE for high-dimensional class-imbalanced data. BMC Bioinformatics 2013, 14, 106. [Google Scholar] [CrossRef]

- Dablain, D.; Krawczyk, B.; Chawla, N.V. DeepSMOTE: Fusing Deep Learning and SMOTE for Imbalanced Data. IEEE Trans. Neural Networks Learn. Syst. 2023, 34, 6390–6404. [Google Scholar] [CrossRef]

- Pradipta, G.A.; Wardoyo, R.; Musdholifah, A.; Sanjaya, I.N.H.; Ismail, M. SMOTE for Handling Imbalanced Data Problem: A Review. In Proceedings of the 2021 6th International Conference on Informatics and Computing ICIC, Jakarta, Indonesia, 3–4 November 2021. [Google Scholar] [CrossRef]

- Liu, X.Y.; Zhou, Z.H. Ensemble Methods for Class Imbalance Learning. In Imbalanced Learning: Foundations, Algorithms, and Applications; Wiley-IEEE Press: Hoboken, NJ, USA, 2013; pp. 61–82. [Google Scholar] [CrossRef]

- Huda, S.; Liu, K.; Abdelrazek, M.; Ibrahim, A.; Alyahya, S.; Al-Dossari, H.; Ahmad, S. An Ensemble Oversampling Model for Class Imbalance Problem in Software Defect Prediction. IEEE Access 2018, 6, 24184–24195. [Google Scholar] [CrossRef]

- Galar, M.; Fernandez, A.; Barrenechea, E.; Bustince, H.; Herrera, F. A review on ensembles for the class imbalance problem: Bagging-, boosting-, and hybrid-based approaches. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2012, 42, 463–484. [Google Scholar] [CrossRef]

- Ogunsanya, M.; Isichei, J.; Desai, S. Grid search hyperparameter tuning in additive manufacturing processes. Manuf. Lett. 2023, 35, 1031–1042. [Google Scholar] [CrossRef]

- Belete, D.M.; Huchaiah, M.D. Grid search in hyperparameter optimization of machine learning models for prediction of HIV/AIDS test results. Int. J. Comput. Appl. 2022, 44, 875–886. [Google Scholar] [CrossRef]

- Shekar, B.H.; Dagnew, G. Grid search-based hyperparameter tuning and classification of microarray cancer data. In Proceedings of the 2019 Second International Conference on Advanced Computational and Communication Paradigms ICACCP, Gangtok, India, 25–28 February 2019. [Google Scholar] [CrossRef]

- Alireza, B.; Mostafa, H.; Ahmed, N.; Gehad, E.A. Part 1: Simple Definition and Calculation of Accuracy, Sensitivity and Specificity. Arch. Acad. Emerg. Med. 2015, 3, 48–49. [Google Scholar]

- Kelly, H.; Bull, A.; Russo, P.; McBryde, E.S. Estimating sensitivity and specificity from positive predictive value, negative predictive value and prevalence: Application to surveillance systems for hospital-acquired infections. J. Hosp. Infect. 2008, 69, 164–168. [Google Scholar] [CrossRef]

- Hawass, N.E.D. Comparing the sensitivities and specificities of two diagnostic procedures performed on the same group of patients. Br. J. Radiol. 1997, 70, 360–366. [Google Scholar] [CrossRef]

- Goutte, C.; Gaussier, E. A Probabilistic Interpretation of Precision, Recall and F-Score, with Implication for Evaluation. Lect. Notes Comput. Sci. 2005, 3408, 345–359. [Google Scholar] [CrossRef]

- Wardhani, N.W.S.; Rochayani, M.Y.; Iriany, A.; Sulistyono, A.D.; Lestantyo, P. Cross-validation Metrics for Evaluating Classification Performance on Imbalanced Data. In Proceedings of the 2019 International Conference on Computer, Control, Informatics and its Applications IC3INA, Tangerang, Indonesia, 23–24 October 2019; pp. 14–18. [Google Scholar] [CrossRef]

- Haque, M.R.; Islam, M.M.; Iqbal, H.; Reza, M.S.; Hasan, M.K. Performance Evaluation of Random Forests and Artificial Neural Networks for the Classification of Liver Disorder. In Proceedings of the 2018 International Conference on Computer, Communication, Chemical, Material and Electronic Engineering IC4ME2, Rajshahi, Bangladesh, 8–9 February 2018. [Google Scholar] [CrossRef]

- Maxwell, A.E.; Warner, T.A.; Guillén, L.A. Accuracy Assessment in Convolutional Neural Network-Based Deep Learning Remote Sensing Studies—Part 1: Literature Review. Remote Sens. 2021, 13, 2450. [Google Scholar] [CrossRef]

- Eusebi, P. Diagnostic Accuracy Measures. Cerebrovasc. Dis. 2013, 36, 267–272. [Google Scholar] [CrossRef]

- Varoquaux, G.; Colliot, O. Evaluating Machine Learning Models and Their Diagnostic Value. Neuromethods 2023, 197, 601–630. [Google Scholar] [CrossRef]

- Ferris, M.H.; McLaughlin, M.; Grieggs, S.; Ezekiel, S.; Blasch, E.; Alford, M.; Cornacchia, M.; Bubalo, A. Using ROC curves and AUC to evaluate performance of no-reference image fusion metrics. In Proceedings of the 2015 National Aerospace and Electronics Conference (NAECON), Dayton, OH, USA, 15–19 June 2015; pp. 27–34. [Google Scholar] [CrossRef]

- Rücker, C.; Rücker, G.; Meringer, M. Y-randomization and its variants in QSPR/QSAR. J. Chem. Inf. Model. 2007, 47, 2345–2357. [Google Scholar] [CrossRef] [PubMed]

- Kaneko, H. Estimation of predictive performance for test data in applicability domains using y-randomization. J. Chemom. 2019, 33, e3171. [Google Scholar] [CrossRef]

- Chung, N.C.; Miasojedow, B.Z.; Startek, M.; Gambin, A. Jaccard/Tanimoto similarity test and estimation methods for biological presence-absence data. BMC Bioinformatics 2019, 20, 644. [Google Scholar] [CrossRef]

- Mathea, M.; Klingspohn, W.; Baumann, K. Chemoinformatic Classification Methods and their Applicability Domain. Mol. Inform. 2016, 35, 160–180. [Google Scholar] [CrossRef]

- Bichri, H.; Chergui, A.; Hain, M. Investigating the Impact of Train / Test Split Ratio on the Performance of Pre-Trained Models with Custom Datasets. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 331. [Google Scholar] [CrossRef]

- Rácz, A.; Bajusz, D.; Héberger, K. Effect of Dataset Size and Train/Test Split Ratios in QSAR/QSPR Multiclass Classification. Molecules 2021, 26, 1111. [Google Scholar] [CrossRef]

- Han, H.; Wang, W.Y.; Mao, B.H. Borderline-SMOTE: A new over-sampling method in imbalanced data sets learning. In Advances in Intelligent Computing; Lecture Notes in Computer Science (PART I); Springer: Berlin/Heidelberg, Germany, 2005; Volume 3644, pp. 878–887. [Google Scholar] [CrossRef]

- Nagassou, M.; Mwangi, R.W.; Nyarige, E. A Hybrid Ensemble Learning Approach Utilizing Light Gradient Boosting Machine and Category Boosting Model for Lifestyle-Based Prediction of Type-II Diabetes Mellitus. J. Data Anal. Inf. Process. 2023, 11, 480–511. [Google Scholar] [CrossRef]

- Kumar, V.; Kedam, N.; Sharma, K.V.; Khedher, K.M.; Alluqmani, A.E. A Comparison of Machine Learning Models for Predicting Rainfall in Urban Metropolitan Cities. Sustainability 2023, 15, 13724. [Google Scholar] [CrossRef]

- Joshi, A.; Saggar, P.; Jain, R.; Sharma, M.; Gupta, D.; Khanna, A. CatBoost—An Ensemble Machine Learning Model for Prediction and Classification of Student Academic Performance. Adv. Data Sci. Adapt. Anal. 2021, 13, 2141002. [Google Scholar] [CrossRef]

- Contreras, P.; Orellana-Alvear, J.; Muñoz, P.; Bendix, J.; Célleri, R. Influence of Random Forest Hyperparameterization on Short-Term Runoff Forecasting in an Andean Mountain Catchment. Atmosphere 2021, 12, 238. [Google Scholar] [CrossRef]

- Fan, M.; Xiao, K.; Sun, L.; Zhang, S.; Xu, Y. Automated Hyperparameter Optimization of Gradient Boosting Decision Tree Approach for Gold Mineral Prospectivity Mapping in the Xiong’ershan Area. Minerals 2022, 12, 1621. [Google Scholar] [CrossRef]

- Kiatkarun, K.; Phunchongharn, P. Automatic Hyper-Parameter Tuning for Gradient Boosting Machine. In Proceedings of the 2020 1st International Conference on Big Data Analytics and Practices IBDAP, Bangkok, Thailand, 25–26 September 2020. [Google Scholar] [CrossRef]

- Kavzoglu, T.; Teke, A. Advanced hyperparameter optimization for improved spatial prediction of shallow landslides using extreme gradient boosting (XGBoost). Bull. Eng. Geol. Environ. 2022, 81, 201. [Google Scholar] [CrossRef]

- Hancock, J.T.; Khoshgoftaar, T.M. CatBoost for big data: An interdisciplinary review. J. Big Data 2020, 7, 94. [Google Scholar] [CrossRef] [PubMed]

- Lu, C.; Zhang, S.; Xue, D.; Xiao, F.; Liu, C. Improved estimation of coalbed methane content using the revised estimate of depth and CatBoost algorithm: A case study from southern Sichuan Basin, China. Comput. Geosci. 2022, 158, 104973. [Google Scholar] [CrossRef]

- Krithiga, R.; Ilavarasan, E. Hyperparameter tuning of AdaBoost algorithm for social spammer identification. Int. J. Pervasive Comput. Commun. 2020, 17, 462–482. [Google Scholar] [CrossRef]

- Gao, R.; Liu, Z. An Improved AdaBoost Algorithm for Hyperparameter Optimization. J. Phys. Conf. Ser. 2020, 1631, 012048. [Google Scholar] [CrossRef]

- Fahrezi, S.F.; Nugraha, A.; Luthfiarta, A.; Primadya, N.D. Optimizing Performance of AdaBoost Algorithm through Undersampling and Hyperparameter Tuning on CICIoT 2023 Dataset. Techné J. Ilm. Elektrotek. 2024, 10. [Google Scholar] [CrossRef]

- Chen, Y.C.; Su, C.T. Distance-based margin support vector machine for classification. Appl. Math. Comput. 2016, 283, 141–152. [Google Scholar] [CrossRef]

- Aoudi, W.; Barbar, A.M. Support vector machines: A distance-based approach to multi-class classification. In Proceedings of the 2016 IEEE International Multidisciplinary Conference on Engineering Technology IMCET, Beirut, Lebanon, 2–4 November 2016; pp. 75–80. [Google Scholar] [CrossRef]

- Gaspar, P.; Carbonell, J.; Oliveira, J.L. On the parameter optimization of Support Vector Machines for binary classification. J. Integr. Bioinform. 2012, 9, 201. [Google Scholar] [CrossRef]

- Min, J.H.; Lee, Y.C. Bankruptcy prediction using support vector machine with optimal choice of kernel function parameters. Expert Syst. Appl. 2005, 28, 603–614. [Google Scholar] [CrossRef]

- Dioşan, L.; Rogozan, A.; Pecuchet, J.P. Improving classification performance of Support Vector Machine by genetically optimising kernel shape and hyper-parameters. Appl. Intell. 2012, 36, 280–294. [Google Scholar] [CrossRef]

- Naidu, G.; Zuva, T.; Sibanda, E.M. A Review of Evaluation Metrics in Machine Learning Algorithms. In Artificial Intelligence Application in Networks and Systems; Lecture Notes in Networks and Systems (LNNS); Springer: Cham, Switzerland, 2023; Volume 724, pp. 15–25. [Google Scholar] [CrossRef]

- Rainio, O.; Teuho, J.; Klén, R. Evaluation metrics and statistical tests for machine learning. Sci. Rep. 2024, 14, 6086. [Google Scholar] [CrossRef]

- Erickson, B.J.; Kitamura, F. Magician’s corner: 9. performance metrics for machine learning models. Radiol. Artif. Intell. 2021, 7. [Google Scholar] [CrossRef]

- Taneja, S.; Gupta, P.R. Python as a tool for web server application development. JIMS 8i-Int’l J. Inf. Comm. Comput. Technol. 2014, 2, 77–83. [Google Scholar]

- Mufid, M.R.; Basofi, A.; Al Rasyid, M.U.H.; Rochimansyah, I.F.; Rokhim, A. Design an MVC Model using Python for Flask Framework Development. In Proceedings of the 2019 International Electronics Symposium (IES), Surabaya, Indonesia, 27–28 September 2019; pp. 214–219. [Google Scholar] [CrossRef]

- Uzayr, S.B.; Cloud, N.; Ambler, T. JavaScript Frameworks for Modern Web Development; Apress: Berkeley, CA, USA, 2019. [Google Scholar]

- Prusa, J.; Khoshgoftaar, T.M.; Dittman, D.J.; Napolitano, A. Using Random Undersampling to Alleviate Class Imbalance on Tweet Sentiment Data. In Proceedings of the 2015 IEEE International Conference on Information Reuse and Integration, San Francisco, CA, USA, 13–15 August 2015; pp. 197–202. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).