Robust Activity Recognition via Redundancy-Aware CNNs and Novel Pooling for Noisy Mobile Sensor Data

Abstract

1. Introduction

2. Related Work

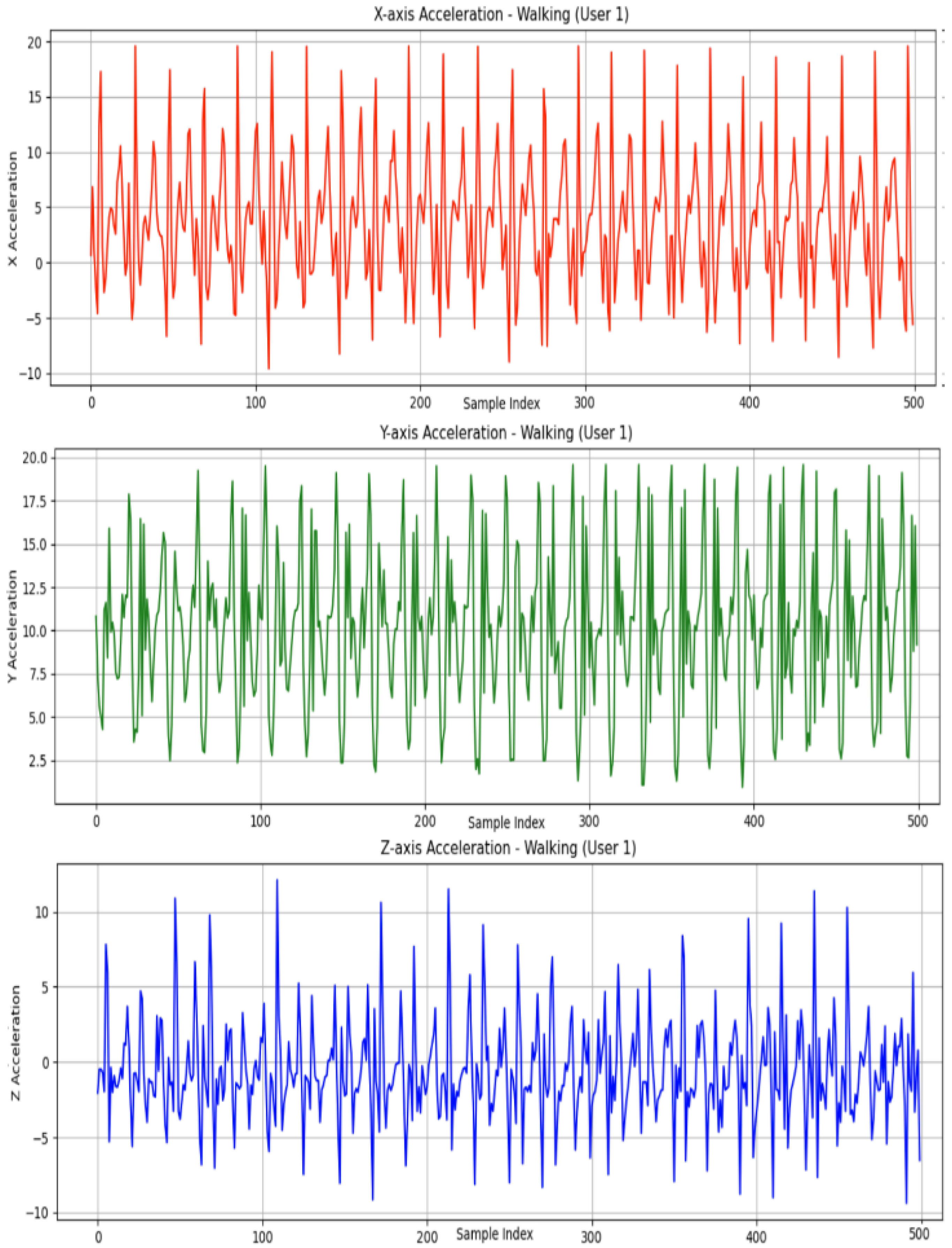

3. Dataset Description

3.1. Data Collection Methodology

3.2. Necessary Preprocessing for Human Activity Recognition (HAR) Models

3.3. Relevance to This Study

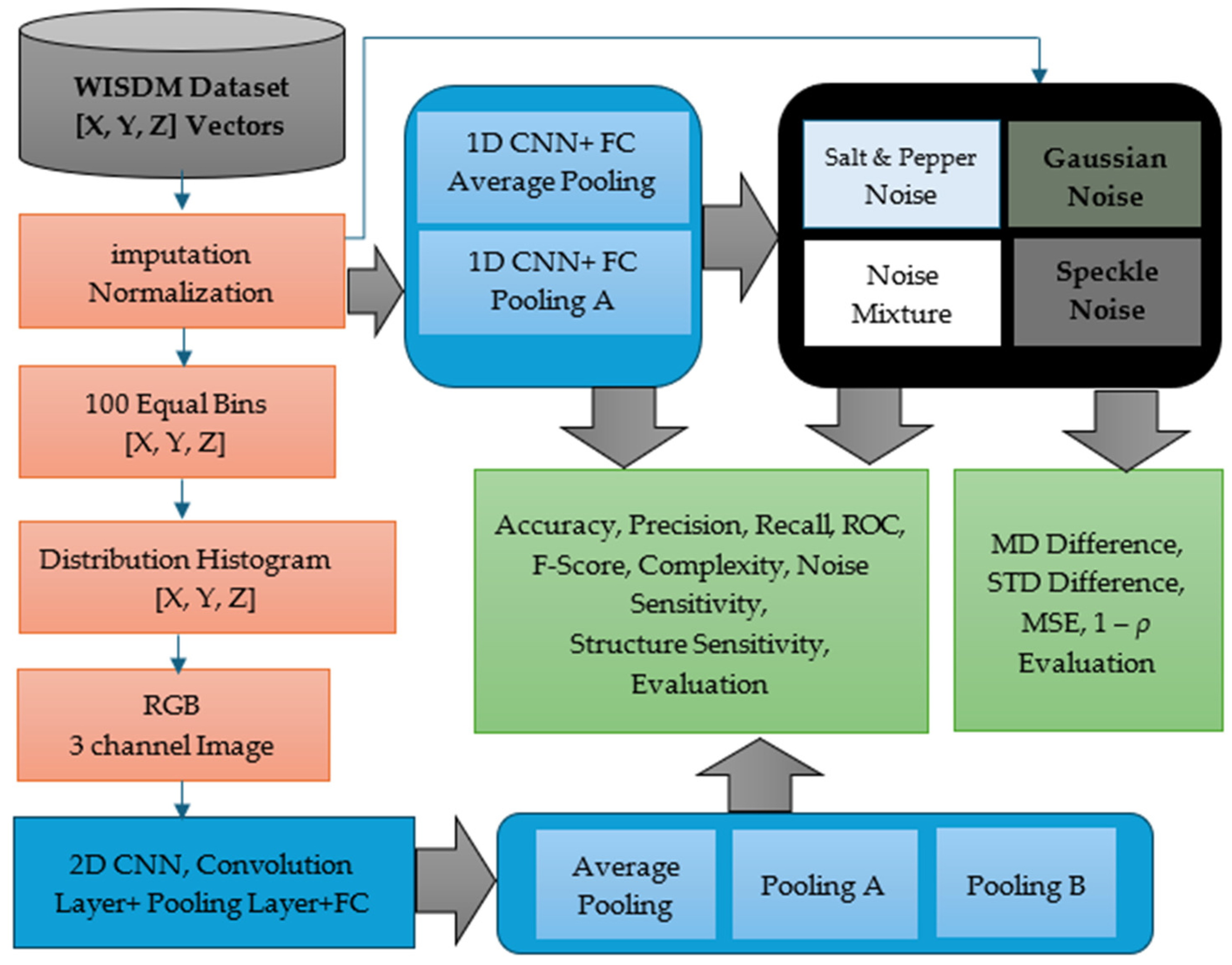

4. Methodology

4.1. Pooling Mechanisms

- When the signal is flat:

- At maximum possible contrast:

4.2. Raw Sensor Path: Enhanced 1D CNN

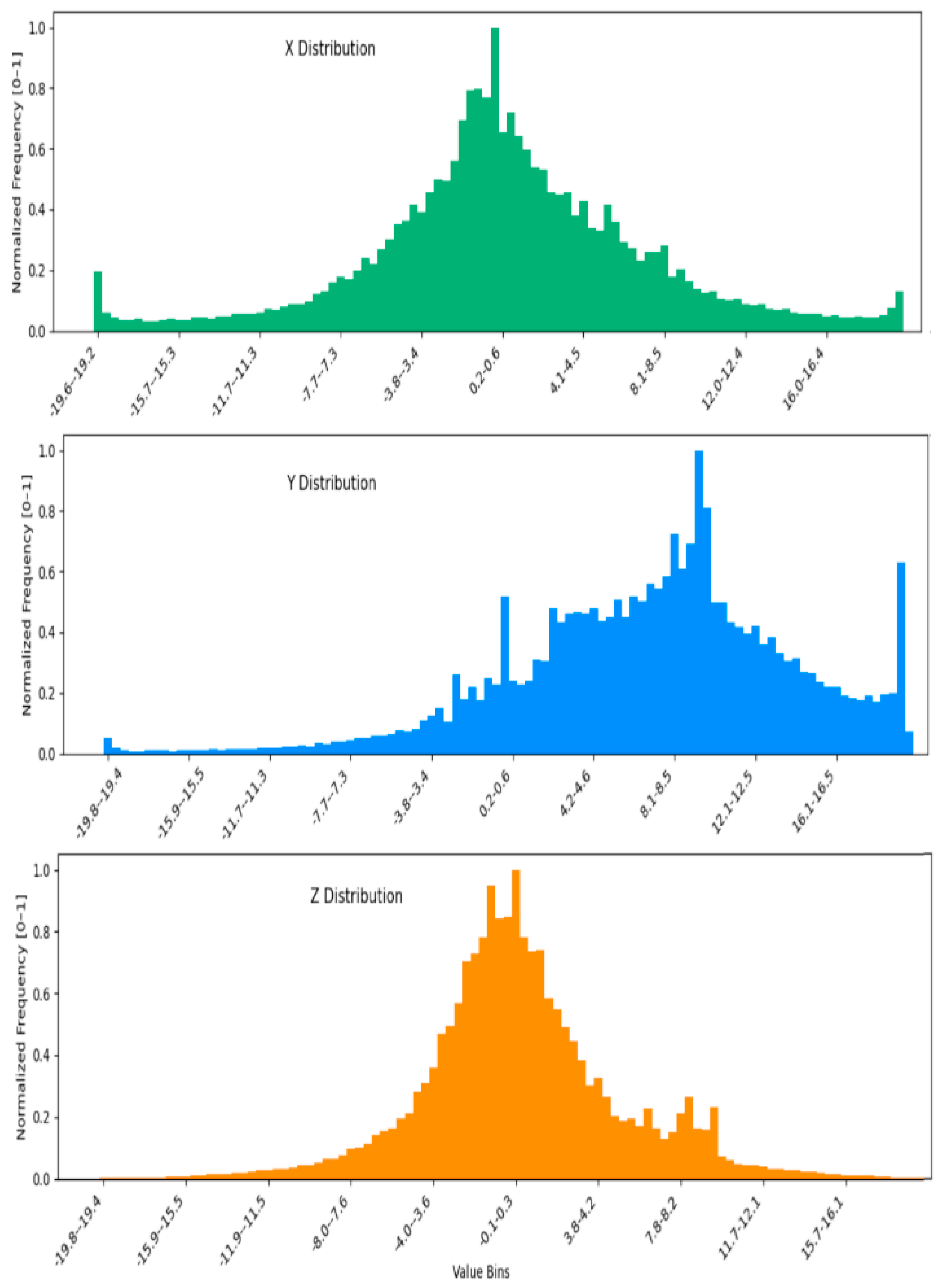

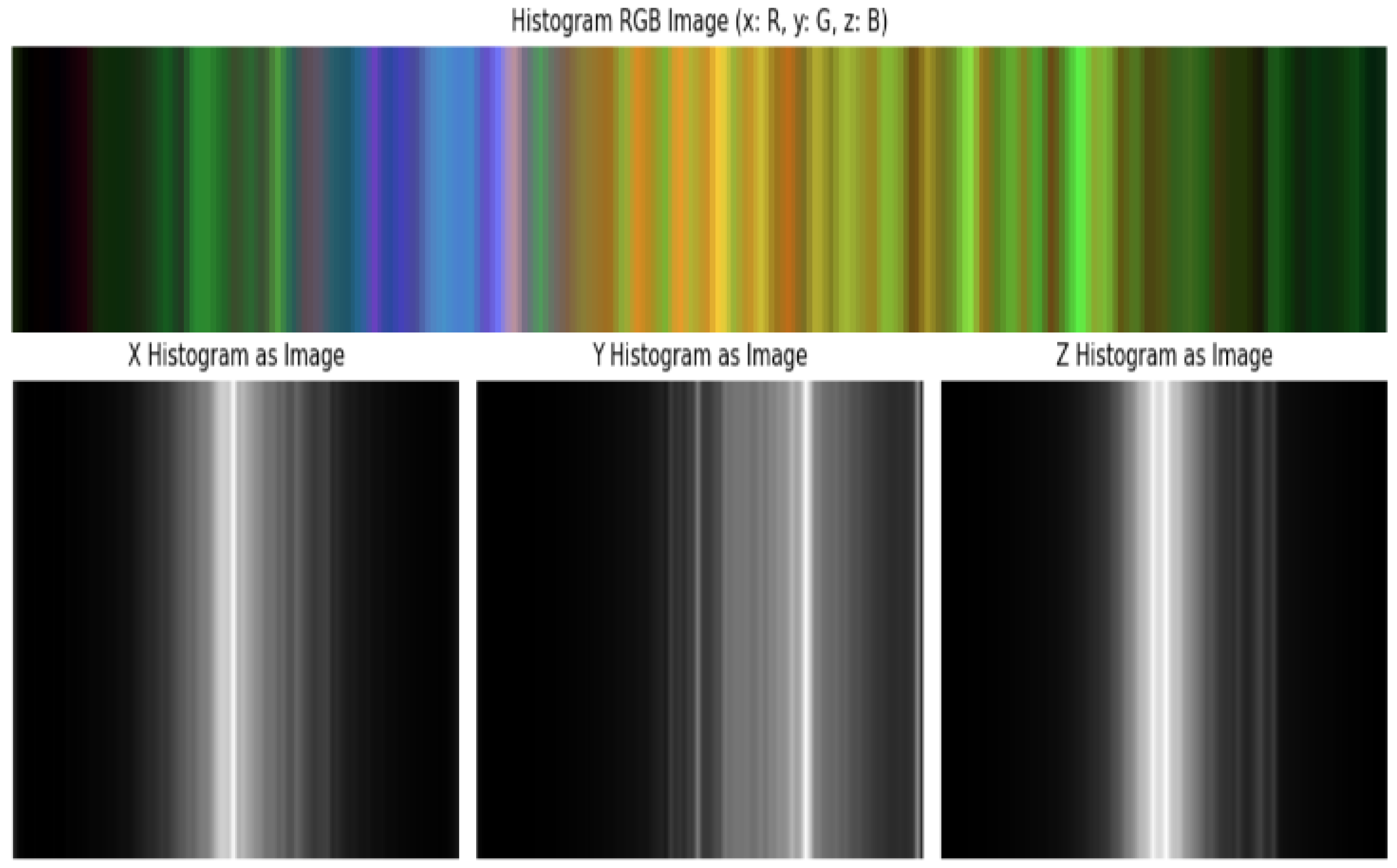

4.3. Histogram-Based Image Path: 2D CNN with Histogram Encoding

4.4. Noise Injection for Robustness Testing

4.5. Classification and Metrics

5. Experiments, Results, and Discussion

5.1. Experiment 1: 1D CNN Performance and Effectiveness of Average Pooling vs. Proposed Pooling A

5.1.1. Pooling A: Enhanced Temporal Discrimination via Nonlinear Aggregation

5.1.2. Average Pooling: Stable Smoothing with Limited Granularity

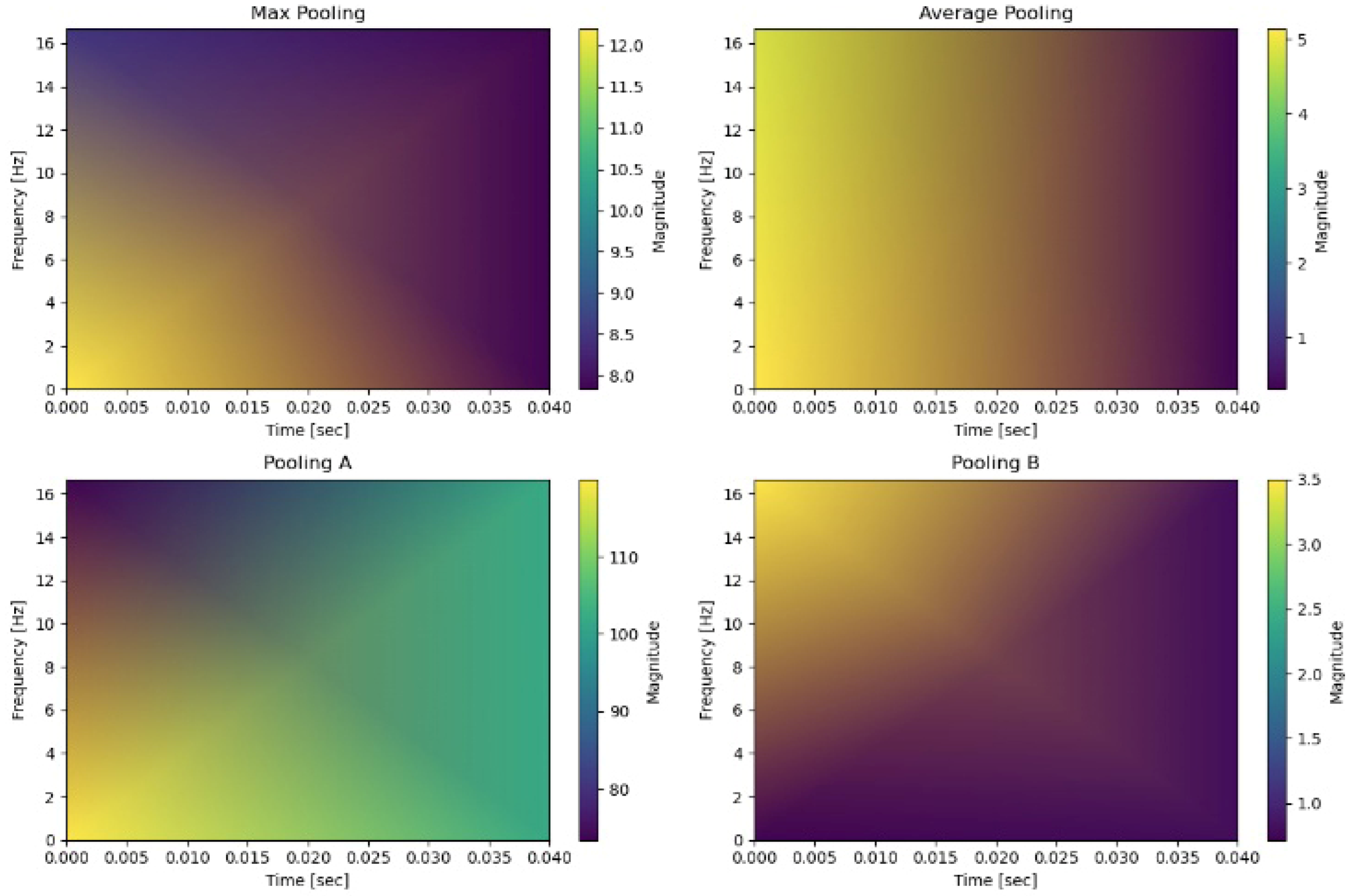

5.1.3. Short-Time Fourier Transform (STFT)

5.1.4. Precision–Recall Dynamics in Transitional Activities

5.1.5. Overall Performance

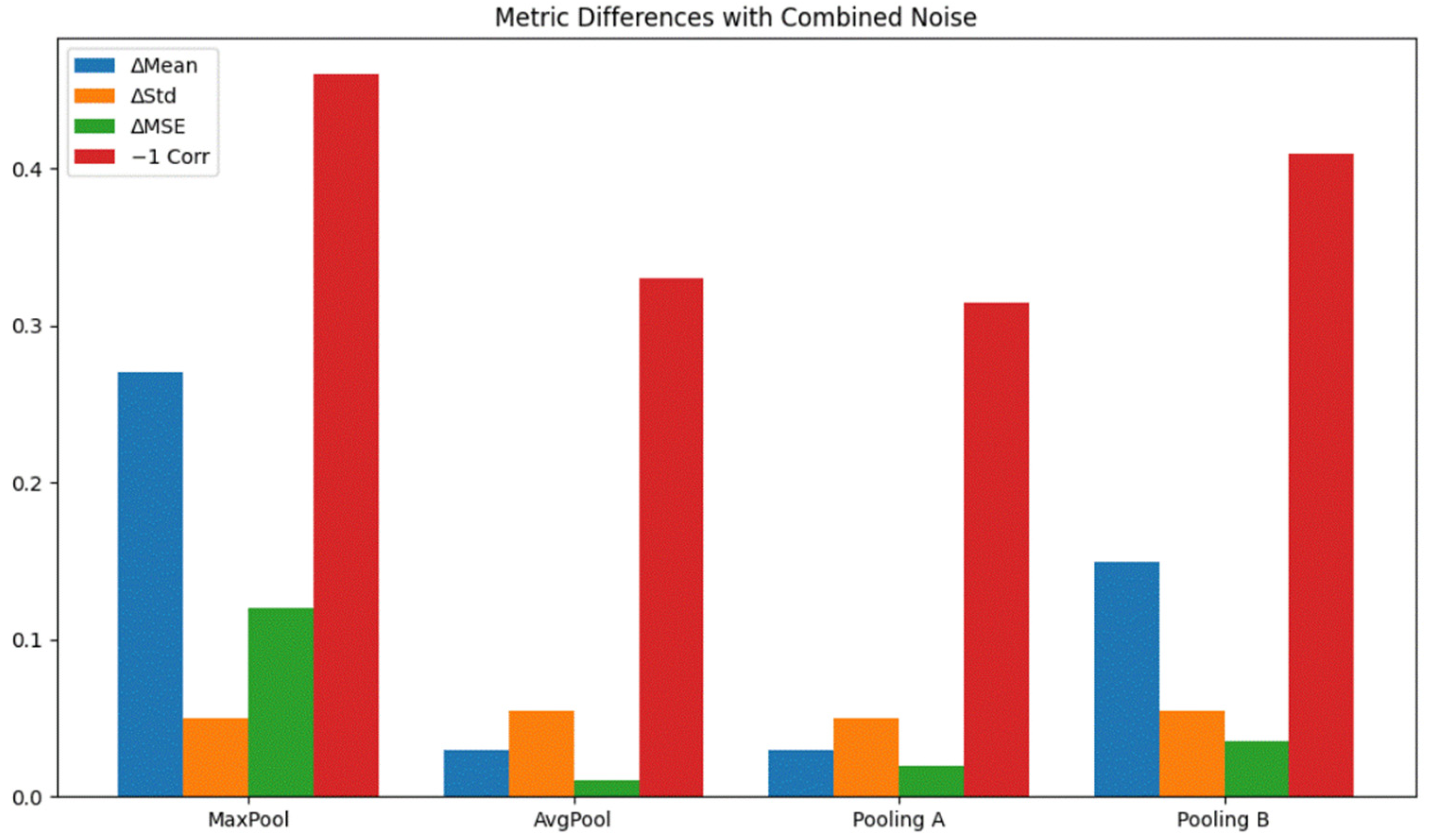

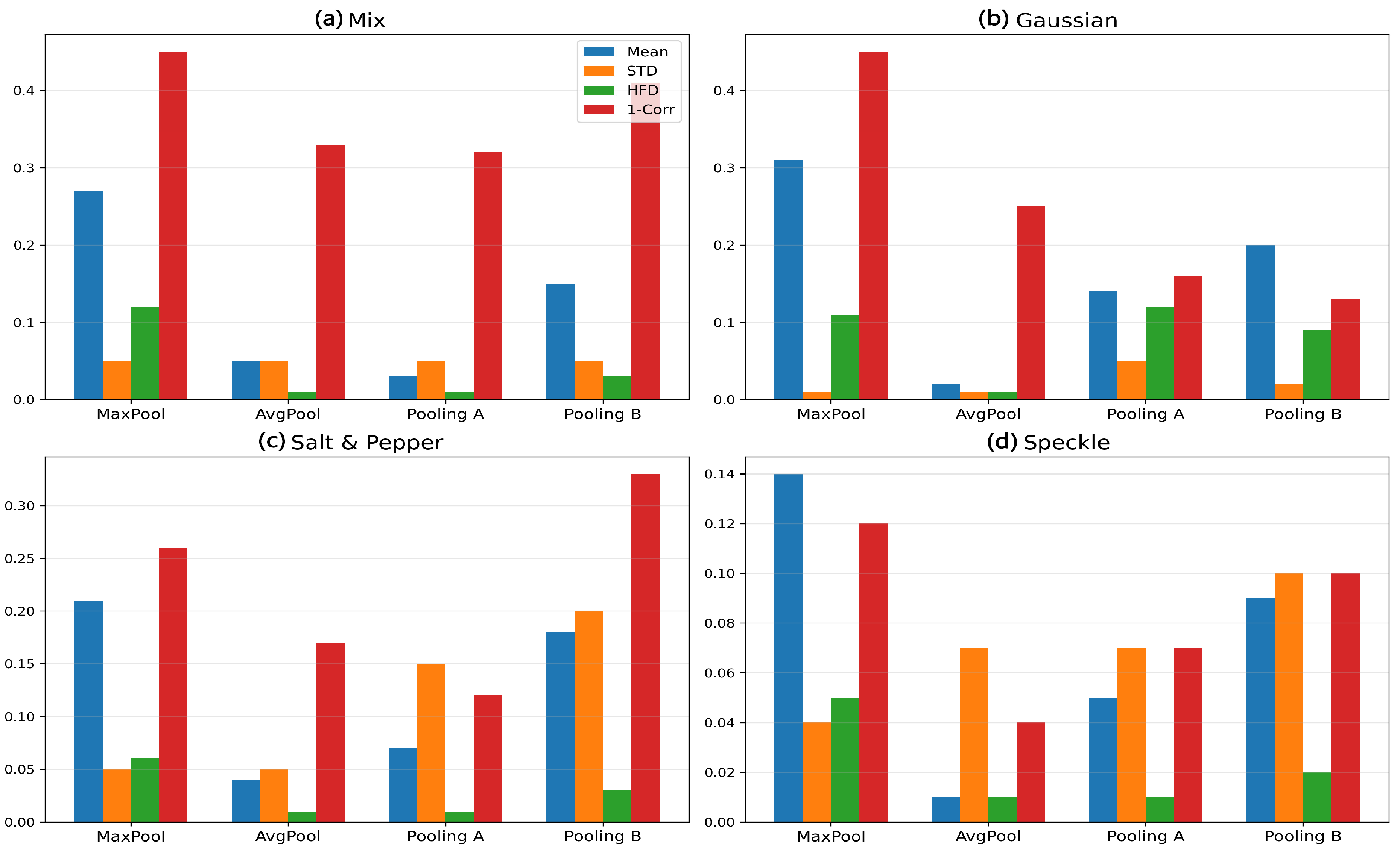

5.2. Experiment 2: Noise Robustness Evaluation of Pooling Methods

- a. Pooling A performed consistently well across noise environments. It matched or outperformed Average Pooling in several conditions, particularly under salt-and-pepper noise (MD = 0.02, MSE = 0.01, 1 − ρ = 0.11) and in the No Noise baseline (MSE = 0.01, 1 − ρ = 0.09). It kept distortion low for every noise type, with MD never exceeding 0.03 and MSE never exceeding 0.02.

- b. Max Pooling performed worst overall. In every condition, including the No Noise baseline, it produced the largest MD (e.g., 0.41 under Gaussian and 0.27 under Mixture Noise) and the highest MSE (0.22 under Gaussian and 0.11 under Mixture Noise). It also suffered the largest correlation loss under Gaussian, Speckle, and Mixture Noise, with 1 − ρ reaching 0.70 under Gaussian noise; under salt-and-pepper noise and in the No Noise baseline, only Pooling B lost more correlation. These results indicate that Max Pooling is highly sensitive to outliers and passes noise spikes straight through, making it unsuitable for preserving fine temporal signal characteristics.

- c. Pooling B was most competitive under Gaussian noise, the only condition in which it outperformed every other method on a metric: it achieved the lowest correlation loss of all methods (1 − ρ = 0.12) with a moderate MSE (0.09). Average Pooling had lower MSE (0.02) and STD (0.00), but Pooling B preserved correlation better than any other method for this noise type.

- d. Average Pooling remained a strong general-purpose baseline. It performed especially well under Speckle noise, with the lowest MD (0.02), MSE (0.01), and correlation loss (1 − ρ = 0.06) of all pooling methods for that condition, likely because its smoothing suppresses multiplicative noise components. Under impulsive noise, however, Pooling A preserved correlation better (1 − ρ = 0.11 vs. 0.18 under salt-and-pepper), and under Mixture Noise the two methods tied on correlation loss (1 − ρ = 0.20).

- e. Performance depends on the noise characteristics. Pooling A is the most versatile, with consistently low MD, MSE, and correlation loss across all noise types, including the No Noise condition. Pooling B excels under Gaussian noise because of its superior correlation preservation. Average Pooling is highly effective for smooth or speckle-like distortions. Max Pooling yields the poorest MD and MSE throughout and is unsuitable for noise-sensitive applications.
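To make the outlier argument concrete, the minimal sketch below compares standard max pooling and average pooling on a short synthetic signal containing one impulsive "salt" spike. Pooling A and Pooling B are not reproduced, because their definitions are not given here; the signal values, the window size of 4, and the helper names (`pool1d`, `worst_case_shift`) are illustrative assumptions.

```python
def pool1d(signal, size, reducer):
    """Non-overlapping 1D pooling: apply `reducer` to each full window of `size` samples."""
    return [reducer(signal[i:i + size]) for i in range(0, len(signal) - size + 1, size)]


def mean(window):
    return sum(window) / len(window)


def worst_case_shift(clean, noisy, size, reducer):
    """Largest absolute change in any pooled output caused by the noise."""
    return max(abs(a - b) for a, b in zip(pool1d(clean, size, reducer),
                                          pool1d(noisy, size, reducer)))


clean = [0.1, 0.2, 0.1, 0.2, 0.3, 0.2, 0.3, 0.2]
noisy = list(clean)
noisy[2] = 1.0  # one "salt" impulse inside the first pooling window

max_shift = worst_case_shift(clean, noisy, 4, max)   # ~0.8: the spike passes straight through
avg_shift = worst_case_shift(clean, noisy, 4, mean)  # ~0.225: the 0.9 jump is spread over 4 samples
print(f"max pooling shift: {max_shift:.3f}, average pooling shift: {avg_shift:.3f}")
```

The comparison depends on the sign of the outlier: a negative (pepper) impulse would leave max pooling untouched while still shifting the average, which is one reason the tables report several noise types rather than a single one.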

5.2.1. Class-Specific Robustness Analysis via Confusion Matrices Under Noise

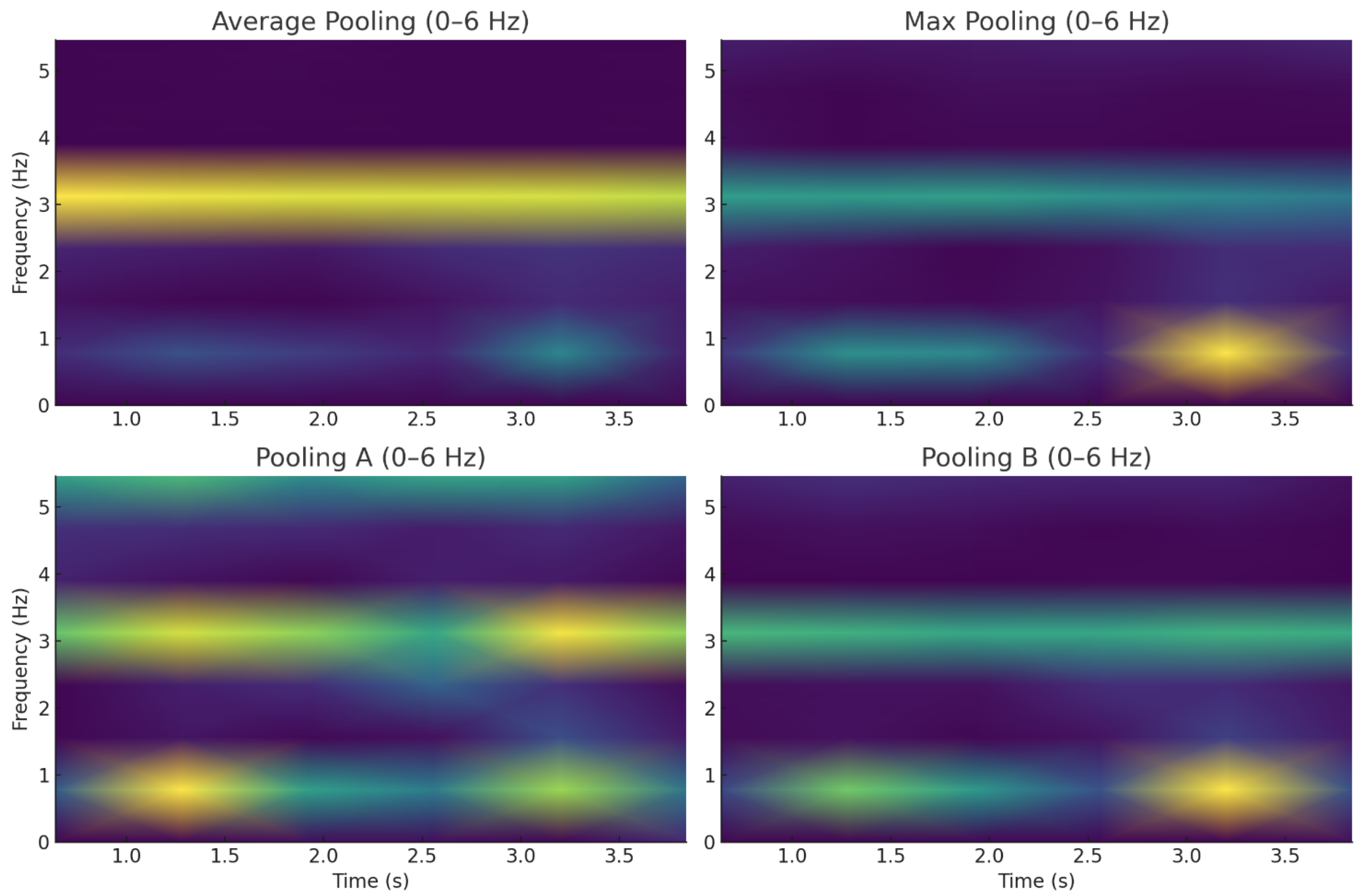

5.2.2. STFT Visualizations Under Simulated Device Displacement Drift

- is the original signal;

- controls the linear drift slope;

- is the amplitude of sinusoidal drift;

- is the drift frequency (set to 0.5 Hz to mimic low-frequency oscillatory displacement).

- Average Pooling shows uniform attenuation with minimal spectral structure, reflecting its smoothing behavior and reduced sensitivity to drift-induced variations;

- Pooling A retains subtle mid-frequency residuals due to its extrema-sensitive design, preserving some transient features despite drift;

- Max Pooling exhibits sporadic high-energy patches, indicating sensitivity to outliers and drift-induced spikes;

- Pooling B demonstrates strong suppression of low-frequency drift components, consistent with its variance-reduction mechanism and robustness to baseline shifts.
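The drift experiment can be sketched as follows, assuming the displacement model is additive: the original signal plus a linear ramp plus a sinusoid at the 0.5 Hz drift frequency stated above. The slope and amplitude values, and the helper names `add_drift` and `stft_magnitude`, are hypothetical. The STFT is a naive, dependency-free Hann-windowed DFT chosen for readability, not the implementation used for the figures; with 20 Hz sampling and 40-sample frames each bin spans 0.5 Hz, so the drift lands in the lowest bins.

```python
import math

FS = 20.0  # Hz; approximate WISDM sampling rate from the dataset table


def add_drift(x, slope=0.01, amp=0.5, f_drift=0.5, fs=FS):
    """Assumed additive drift model: x[n] + slope*t + amp*sin(2*pi*f_drift*t), with t = n/fs."""
    return [v + slope * (n / fs) + amp * math.sin(2 * math.pi * f_drift * n / fs)
            for n, v in enumerate(x)]


def stft_magnitude(x, frame=40, hop=20):
    """Naive STFT: Hann-windowed frames, DFT magnitudes for bins 0..frame//2.

    Bin k corresponds to k * fs / frame Hz (0.5 Hz per bin for fs=20, frame=40).
    """
    hann = [0.5 - 0.5 * math.cos(2 * math.pi * i / frame) for i in range(frame)]
    spectrogram = []
    for start in range(0, len(x) - frame + 1, hop):
        seg = [x[start + i] * hann[i] for i in range(frame)]
        mags = []
        for k in range(frame // 2 + 1):
            re = sum(s * math.cos(2 * math.pi * k * i / frame) for i, s in enumerate(seg))
            im = -sum(s * math.sin(2 * math.pi * k * i / frame) for i, s in enumerate(seg))
            mags.append(math.hypot(re, im))
        spectrogram.append(mags)
    return spectrogram  # spectrogram[frame_index][bin]


# 10 s of a 2 Hz "gait-like" tone, before and after drift.
clean = [math.sin(2 * math.pi * 2.0 * n / FS) for n in range(200)]
drifted = add_drift(clean)
low_clean = sum(stft_magnitude(clean)[0][:2])    # magnitude in bins 0-1 (0 and 0.5 Hz)
low_drift = sum(stft_magnitude(drifted)[0][:2])
```

In this toy case the clean tone has essentially no energy in bins 0 and 1, while the drifted version does; a pooling method that suppresses drift would reduce exactly that low-frequency content.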

5.2.3. Latency and Computational Cost Analysis of Pooling Strategies

5.3. Experiment 3: 2D CNN + FC Using Histogram-Based Sensor Representations with Different Pooling Strategies

5.3.1. Structure and Hyperparameters of the Designed 2D CNN

5.3.2. Performance Evaluation and Class-Level Observations

- (a) Downstairs, Jogging, Standing, and Walking: Pooling A consistently delivered the highest precision, recall, and F1 scores. These classes tend to contain dynamic, edge-rich patterns (e.g., rapid signal changes during stair descent or jogging), which benefit from Pooling A's sensitivity to edges.

- (b) Sitting and Upstairs: Pooling B slightly outperformed the other methods on Sitting (F1: 0.99), a class associated with stable, stationary signals. This suggests that Pooling B's reliance on the standard deviation offers better discrimination when signal changes are subtle. On Upstairs, Average Pooling and Pooling A were closely matched.

- (c) Upstairs (precision, recall, and F1): Average Pooling slightly edged out Pooling A (0.88 vs. 0.87), reflecting its strength in capturing softer transitions and smoother signal phases, possibly due to its lower sensitivity to noise and variance.

5.3.3. Methodological Impact of Histogram-Based Representation

- (a) Average Pooling provided stability and denoising but sometimes blurred class-discriminative edges;

- (b) Pooling A preserved local variations while limiting the influence of outliers, resulting in consistent superiority across most classes;

- (c) Pooling B showed promise for uniform or slowly changing signals (e.g., Sitting), where deviations within the pooling region are small and therefore meaningful.

- (a) Pooling A and Average Pooling both demonstrated low sensitivity to noise, supporting their utility in real-world sensor environments where measurement fluctuations are common;

- (b) Pooling A's strong edge sensitivity appeared critical for dynamic classes (Jogging, Downstairs), while Average Pooling's smoother behavior proved beneficial for Upstairs, the only class in which it achieved the highest F1 score (0.88 vs. 0.87 for Pooling A).

5.3.4. Ablation Study: Effect of Histogram Bin Size and Raw Signal Baseline in 2D CNN Path

5.3.5. Evaluation on Sliding Windows to Capture Transitional Activities

5.3.6. Robustness to Motion Artifact Noise

5.4. Model-Wise Performance Comparison

5.5. Comparison with Similar Works and Discussion

5.6. Cross-Dataset Generalization and Contextualizing Performance

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Kwapisz, J.R.; Weiss, G.M.; Moore, S.A. Activity recognition using cell phone accelerometers. ACM SIGKDD Explor. Newsl. 2011, 12, 74–82.

2. Walse, K.H.; Dharaskar, R.V.; Thakare, V.M. Performance evaluation of classifiers on WISDM dataset for human activity recognition. In Proceedings of the 2nd International Conference on Information and Communication Technology for Competitive Strategies (ICTCS), Udaipur, India, 4–5 March 2016; pp. 1–7.

3. Min, Y.; Htay, Y.Y.; Oo, K.K. Comparing the performance of machine learning algorithms for human activities recognition using WISDM dataset. Int. J. Comput. (IJC) 2020, 38, 61–72.

4. Seelwal, P.; Srinivas, C. Human activity recognition using WISDM datasets. J. Online Eng. Educ. 2023, 14, 88–94.

5. Heydarian, M.; Doyle, T.E. rWISDM: Repaired WISDM, a public dataset for human activity recognition. arXiv 2023, arXiv:2305.10222.

6. Sharen, H.; Anbarasi, L.J.; Rukmani, P.; Gandomi, A.H.; Neeraja, R.; Narendra, M. WISNet: A deep neural network based human activity recognition system. Expert Syst. Appl. 2024, 258, 124999.

7. Abdellatef, E.; Al-Makhlasawy, R.M.; Shalaby, W.A. Detection of human activities using multi-layer convolutional neural network. Sci. Rep. 2025, 15, 7004.

8. Oddo, C.M.; Valdastri, P.; Beccai, L.; Roccella, S.; Carrozza, M.C.; Dario, P. Investigation on calibration methods for multi-axis, linear and redundant force sensors. Meas. Sci. Technol. 2007, 18, 623.

9. Gao, Z.; Wang, Y.; Chen, J.; Xing, J.; Patel, S.; Liu, X.; Shi, Y. MMTSA: Multi-modal temporal segment attention network for efficient human activity recognition. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies; ACM: New York, NY, USA, 2023; Volume 7, pp. 1–26.

10. Genc, E.; Yildirim, M.E.; Yucel, B.S. Human activity recognition with fine-tuned CNN-LSTM. J. Electr. Eng. 2024, 75, 8–13.

11. Wang, J.; Li, C.; Zhang, B.; Zhang, Y.; Shi, L.; Wang, X.; Zhou, L.; Xiong, D. Automatic rehabilitation exercise task assessment of stroke patients based on wearable sensors with a lightweight multichannel 1D-CNN model. Sci. Rep. 2024, 14, 19204.

12. Liu, Y.; Qin, X.; Gao, Y.; Li, X.; Feng, C. SETransformer: A hybrid attention-based architecture for robust human activity recognition. arXiv 2025, arXiv:2505.19369.

13. Zhu, P.; Qi, M.; Li, X.; Li, W.; Ma, H. Unsupervised self-driving attention prediction via uncertainty mining and knowledge embedding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 8558–8568.

14. Momeny, M.; Sarram, M.A.; Latif, A.; Sheikhpour, R. A convolutional neural network based on adaptive pooling for classification of noisy images. Signal Data Process. 2021, 17, 139–154.

15. Shang, Z.; Tang, L.; Pan, C.; Cheng, H. A hybrid semantic attribute-based zero-shot learning model for bearing fault diagnosis under unknown working conditions. Eng. Appl. Artif. Intell. 2024, 136, 109020.

16. Kobayashi, S.; Hasegawa, T.; Miyoshi, T.; Koshino, M. MarNASNets: Toward CNN model architectures specific to sensor-based human activity recognition. IEEE Sens. J. 2023, 23, 18708–18717.

17. Chu, J.; Li, X.; Zhang, J.; Lu, W. Super-resolution using multi-channel merged convolutional network. Neurocomputing 2020, 394, 136–145.

18. Ma, X.; Liu, S. A Simple but Effective Non-local Means Method For Salt and Pepper Noise Removal. In Proceedings of the 2022 IEEE 4th International Conference on Civil Aviation Safety and Information Technology (ICCASIT), Dali, China, 12–14 October 2022; pp. 1423–1432.

19. Wu, H.; Han, Y.; Zhu, Q.; Geng, Z. Novel feature-disentangled autoencoder integrating residual network for industrial soft sensor. IEEE Trans. Ind. Inform. 2023, 19, 10299–10308.

20. Tang, Y.; Zhang, L.; Min, F.; He, J. Multiscale deep feature learning for human activity recognition using wearable sensors. IEEE Trans. Ind. Electron. 2022, 70, 2106–2116.

21. Negri, V.; Mingotti, A.; Tinarelli, R.; Peretto, L. Uncertainty-Aware Human Activity Recognition: Investigating Sensor Impact in ML Models. In Proceedings of the 2025 IEEE Medical Measurements & Applications (MeMeA), Chania, Greece, 28–30 May 2025; pp. 1–6.

22. Zeiler, M.D.; Fergus, R. Stochastic pooling for regularization of deep convolutional neural networks. arXiv 2013, arXiv:1301.3557.

23. Hang, S.T.; Aono, M. Bi-linearly weighted fractional max pooling: An extension to conventional max pooling for deep convolutional neural network. Multimed. Tools Appl. 2017, 76, 22095–22117.

24. Xu, X.; Li, X.; Ming, W.; Chen, M. A novel multi-scale CNN and attention mechanism method with multi-sensor signal for remaining useful life prediction. Comput. Ind. Eng. 2022, 169, 108204.

25. Zafar, A.; Aamir, M.; Mohd Nawi, N.; Arshad, A.; Riaz, S.; Alruban, A.; Dutta, A.K.; Almotairi, S. A comparison of pooling methods for convolutional neural networks. Appl. Sci. 2022, 12, 8643.

26. Mohamed, E.A.; Gaber, T.; Karam, O.; Rashed, E.A. A Novel CNN pooling layer for breast cancer segmentation and classification from thermograms. PLoS ONE 2022, 17, e0276523.

27. Aminifar, S.; bin Marzuki, A. Horizontal and vertical rule bases method in fuzzy controllers. Math. Probl. Eng. 2013, 2013, 532046.

28. Kekshar, S.M.; Aminifar, S.A. Uncertainty Avoider Defuzzification of General Type-2 Multi-Layer Fuzzy Membership Functions for Image Segmentation. IEEE Access 2025, 13, 58120–58135.

29. Khorsheed, H.A.; Aminifar, S. Measuring uncertainty to extract fuzzy membership functions in recommender systems. J. Comput. Sci. 2023, 19, 1359–1368.

30. Sachdeva, R.; Gakhar, R.; Awasthi, S.; Singh, K.; Pandey, A.; Parihar, A.S. Uncertainty and Noise Aware Decision Making for Autonomous Vehicles-A Bayesian Approach. IEEE Trans. Veh. Technol. 2024, 74, 378–389.

31. Aminifar, S. Uncertainty avoider interval type II defuzzification method. Math. Probl. Eng. 2020, 2020, 5812163.

32. Maulud, R.H.; Aminifar, S.A. Enhancing indoor asset tracking: IoT integration and machine learning approaches for optimized performance. J. Comput. Sci. 2025, 21, 1512–1525.

33. Bi, Q.; Zhang, H.; Qin, K. Multi-scale stacking attention pooling for remote sensing scene classification. Neurocomputing 2021, 436, 147–161.

34. Nirthika, R.; Manivannan, S.; Ramanan, A.; Wang, R. Pooling in convolutional neural networks for medical image analysis: A survey and an empirical study. Neural Comput. Appl. 2022, 34, 5321–5347.

35. Han, H.; Zheng, Q.; Luo, M.; Miao, K.; Tian, F.; Chen, Y. Noise-tolerant learning for audio-visual action recognition. IEEE Trans. Multimed. 2024, 26, 7761–7774.

36. Chen, K.Y.; Li, J.L.; Ding, J.J. SeamlessEdit: Background Noise Aware Zero-Shot Speech Editing with in-Context Enhancement. arXiv 2025, arXiv:2505.14066.

37. Miah, A.S.M.; Hwang, Y.S.; Shin, J. Sensor-based human activity recognition based on multi-stream time-varying features with ECA-Net dimensionality reduction. IEEE Access 2024, 12, 151649–151668.

38. Choudhury, N.A.; Soni, B. An adaptive batch size-based-CNN-LSTM framework for human activity recognition in uncontrolled environment. IEEE Trans. Ind. Inform. 2023, 19, 10379–10387.

39. Qureshi, T.S.; Shahid, M.H.; Farhan, A.A.; Alamri, S. A systematic literature review on human activity recognition using smart devices: Advances, challenges, future directions. Artif. Intell. Rev. 2025, 58, 276.

40. Gaud, N.; Rathore, M.; Suman, U. Hybrid deep learning-based human activity recognition (HAR) using wearable sensors: An edge computing approach. In International Conference on Data Analytics & Management; Springer Nature Singapore: Singapore, 2023; pp. 399–410.

41. Guo, P.; Nakayama, M. Transformer-based Human Activity Recognition Using Wearable Sensors for Health Monitoring. In Proceedings of the 2025 9th International Conference on Biomedical Engineering and Applications (ICBEA), Seoul, Republic of Korea, 27 February–2 March 2025; pp. 68–72.

| Parameter | Description |

|---|---|

| Device Used | Android smartphone with built-in accelerometer |

| Sampling Rate | ~20 Hz |

| Axes Captured | x, y, z |

| Number of Subjects | 36 |

| Activities Labeled | 6 (Walking, Jogging, Upstairs, Downstairs, Sitting, Standing) |

| Windowing Scheme | 10 s non-overlapping segments; 10 s windows with 2 s overlap in the overlapping setting |

| Label Granularity | Per window (not per sample) |

| Data Format | CSV: user, activity, timestamp, x, y, z |
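A minimal loader for the record format in the table above (user, activity, timestamp, x, y, z) might look like the sketch below. It assumes that records may end in a stray semicolon and that malformed rows should be skipped rather than repaired; a repaired variant of the dataset, rWISDM [5], exists precisely because the raw release contains such irregularities. The function name `parse_wisdm` is hypothetical.

```python
import csv
import io


def parse_wisdm(text):
    """Parse 'user,activity,timestamp,x,y,z' records, skipping malformed rows.

    Assumption: fields may carry a trailing ';' and rows may have empty trailing fields.
    """
    records = []
    for row in csv.reader(io.StringIO(text)):
        fields = [f.strip().rstrip(";") for f in row]
        fields = [f for f in fields if f]  # drop empty fields (e.g., from a trailing comma)
        if len(fields) != 6:
            continue  # wrong field count: skip instead of guessing
        try:
            user, timestamp = int(fields[0]), int(fields[2])
            x, y, z = (float(v) for v in fields[3:6])
        except ValueError:
            continue  # non-numeric user, timestamp, or axis value
        records.append((user, fields[1], timestamp, x, y, z))
    return records


sample = (
    "33,Jogging,49105962326000,-0.6946377,12.680544,0.50395286;\n"
    "33,Jogging,49106062271000,5.012288,11.264028,0.95342433;\n"
    "bad,line\n"
)
rows = parse_wisdm(sample)
```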

| User_ID | Downstairs | Jogging | Sitting | Standing | Upstairs | Walking |

|---|---|---|---|---|---|---|

| 1 | 2941 | 11,056 | 0 | 0 | 3120 | 12,861 |

| 2 | 0 | 11,786 | 0 | 0 | 0 | 11,739 |

| 3 | 3326 | 11,018 | 1609 | 2824 | 3411 | 12,973 |

| 4 | 1763 | 895 | 1257 | 0 | 1377 | 6079 |

| 5 | 3281 | 6405 | 1664 | 1515 | 3387 | 12,257 |

| 6 | 1438 | 11,818 | 1679 | 769 | 1665 | 15,058 |

| 7 | 2257 | 9183 | 2529 | 2360 | 3601 | 11,339 |

| 8 | 3346 | 10,313 | 2699 | 3269 | 4453 | 17,108 |

| 9 | 0 | 0 | 0 | 0 | 0 | 12,923 |

| 10 | 3795 | 12,084 | 0 | 1660 | 4296 | 13,048 |

| 11 | 2674 | 12,454 | 0 | 0 | 4392 | 11,436 |

| 12 | 2870 | 12,360 | 2289 | 1670 | 2654 | 10,798 |

| 13 | 4240 | 12,329 | 1179 | 1659 | 4638 | 13,047 |

| 14 | 2875 | 13,279 | 0 | 0 | 8179 | 13,859 |

| 15 | 1762 | 12,799 | 0 | 0 | 2064 | 11,529 |

| 16 | 1574 | 0 | 2984 | 1979 | 1411 | 12,271 |

| 17 | 3767 | 2887 | 0 | 5689 | 1559 | 9677 |

| 18 | 2415 | 11,991 | 1467 | 1954 | 2425 | 12,558 |

| 19 | 2614 | 16,201 | 2534 | 2132 | 4280 | 17,622 |

| 20 | 4673 | 12,948 | 15,644 | 5389 | 4844 | 13,134 |

| 21 | 4036 | 3864 | 1609 | 2859 | 4841 | 6494 |

| 22 | 3627 | 6224 | 0 | 0 | 5430 | 7029 |

| 23 | 1939 | 12,309 | 0 | 0 | 4836 | 6589 |

| 24 | 2929 | 12,278 | 690 | 544 | 3039 | 6256 |

| 25 | 0 | 6489 | 0 | 0 | 0 | 6979 |

| 26 | 3837 | 11,913 | 0 | 0 | 3618 | 13,210 |

| 27 | 3460 | 12,037 | 2099 | 1630 | 3225 | 12,476 |

| 28 | 2997 | 0 | 0 | 1308 | 2892 | 14,169 |

| 29 | 4329 | 12,788 | 2319 | 1603 | 4786 | 12,420 |

| 30 | 3872 | 0 | 1559 | 3098 | 4226 | 12,579 |

| 31 | 3892 | 14,075 | 2148 | 2612 | 4679 | 16,876 |

| 32 | 2343 | 12,245 | 3059 | 1669 | 2214 | 14,897 |

| 33 | 4535 | 2946 | 3248 | 1612 | 2214 | 14,897 |

| 34 | 2856 | 12,869 | 1575 | 1349 | 3921 | 13,377 |

| 35 | 0 | 12,564 | 1599 | 1069 | 0 | 7162 |

| 36 | 4167 | 12,038 | 2500 | 1925 | 5431 | 6200 |

| Activity | Total Samples |

|---|---|

| Walking | 398,795 |

| Jogging | 340,511 |

| Upstairs | 121,168 |

| Downstairs | 100,269 |

| Sitting | 69,072 |

| Standing | 50,481 |

| Class | Precision (Avg) | Precision (A) | Recall (Avg) | Recall (A) | F1 Score (Avg) | F1 Score (A) | F2 (Avg) | F2 (A) | F0.5 (Avg) | F0.5 (A) | Support |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Downstairs | 0.82 | 0.87 | 0.76 | 0.85 | 0.79 | 0.86 | 0.77 | 0.85 | 0.81 | 0.87 | 402 |

| Jogging | 0.98 | 0.95 | 0.98 | 0.96 | 0.98 | 0.96 | 0.98 | 0.96 | 0.98 | 0.96 | 1369 |

| Sitting | 0.97 | 0.98 | 0.96 | 0.98 | 0.97 | 0.98 | 0.96 | 0.98 | 0.97 | 0.98 | 239 |

| Standing | 0.99 | 1.00 | 0.99 | 0.99 | 0.99 | 1.00 | 0.99 | 0.99 | 0.99 | 1.00 | 193 |

| Upstairs | 0.79 | 0.92 | 0.92 | 0.96 | 0.85 | 0.94 | 0.89 | 0.95 | 0.81 | 0.93 | 491 |

| Walking | 0.97 | 0.99 | 0.96 | 0.98 | 0.96 | 0.99 | 0.96 | 0.98 | 0.97 | 0.99 | 1697 |

| Macro Avg | 0.92 | 0.95 | 0.93 | 0.95 | 0.92 | 0.95 | 0.92 | 0.95 | 0.92 | 0.95 | 4391 |

| Weighted Avg | 0.95 | 0.96 | 0.94 | 0.95 | 0.94 | 0.96 | 0.94 | 0.95 | 0.95 | 0.96 | 4391 |
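The F2 and F0.5 columns in the table above follow from precision and recall through the standard F-beta formula, F_β = (1 + β²)·P·R / (β²·P + R), where β > 1 weights recall more heavily and β < 1 weights precision more heavily. The short sketch below (the function name is illustrative) reproduces the Upstairs row for Average Pooling from its rounded precision (0.79) and recall (0.92).

```python
def f_beta(precision, recall, beta=1.0):
    """F-beta score: (1 + b^2) * P * R / (b^2 * P + R); beta > 1 favors recall."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Upstairs, 1D CNN with Average Pooling: P = 0.79, R = 0.92.
scores = {beta: round(f_beta(0.79, 0.92, beta), 2) for beta in (0.5, 1.0, 2.0)}
print(scores)
```

Because the table reports rounded precision and recall, recomputing F-scores from them matches here but may differ by 0.01 for other rows.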

| Metric/Class | Pooling A | Average Pooling | Preferred Approach |

|---|---|---|---|

| Accuracy (Weighted Avg) | 95.1% | 93.0% | Pooling A (slight improvement) |

| F1 Score (Downstairs) | 0.86 | 0.79 | Pooling A |

| F1 Score (Upstairs) | 0.96 | 0.87 | Pooling A (clear margin) |

| Recall (Upstairs) | 0.96 | 0.87 | Pooling A |

| Precision (Upstairs) | 0.95 | 0.86 | Pooling A |

| F1 Score (Jogging) | 0.96 | 0.98 | Average Pooling (slightly better) |

| ROC (Macro Average) | 0.97 | 0.95 | Pooling A |

| Computational Cost | Moderate | Low | Avg Pooling |

| Sensitivity to Noise | Low | Low | Pooling A/Avg |

| Sensitivity to Edges | Strong | Weak | Pooling A |

| Noise Density (Amount) | Description | Example in Python 3.13.5 (skimage.util.random_noise) |

|---|---|---|

| 0.10 (10%) | Light corruption; ~1 in 10 samples replaced | `random_noise(x, mode='s&p', amount=0.10)` |

| 0.20 (20%) | Moderate corruption; affects local features | `random_noise(x, mode='s&p', amount=0.20)` |

| 0.30 (30%) | Heavy corruption; severe loss of fine detail | `random_noise(x, mode='s&p', amount=0.30)` |
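With `mode='s&p'`, `skimage.util.random_noise` replaces roughly a fraction `amount` of the values with salt or pepper levels, which assumes image-like data in a unit range. The dependency-free sketch below captures the same idea for an unscaled 1D sensor channel; it is not scikit-image's implementation. Using the signal's own minimum and maximum as the pepper and salt levels, and replacing exactly `round(amount * n)` samples, are assumptions made for illustration.

```python
import random


def salt_and_pepper(signal, amount=0.10, salt_vs_pepper=0.5, low=None, high=None, seed=None):
    """Replace round(amount * len(signal)) randomly chosen samples with `low` or `high`.

    Assumption: salt/pepper levels default to the signal's max/min, so the noise stays
    within the sensor's observed range instead of a fixed [0, 1] image range.
    """
    rng = random.Random(seed)
    low = min(signal) if low is None else low
    high = max(signal) if high is None else high
    out = list(signal)
    k = round(amount * len(out))
    for idx in rng.sample(range(len(out)), k):  # k distinct positions
        out[idx] = high if rng.random() < salt_vs_pepper else low
    return out


signal = [(i % 17) / 16 for i in range(200)]
noisy = salt_and_pepper(signal, amount=0.20, seed=0)
```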

| Noise Type | Pooling | MD | STD | MSE | 1 − ρ |

|---|---|---|---|---|---|

| Salt-and-Pepper | Max Pooling | 0.16 | 0.07 | 0.05 | 0.23 |

| | Average Pooling | 0.02 | 0.05 | 0.01 | 0.18 |

| | Pooling A | 0.02 | 0.07 | 0.01 | 0.11 |

| | Pooling B | 0.09 | 0.15 | 0.02 | 0.27 |

| Gaussian | Max Pooling | 0.41 | 0.01 | 0.22 | 0.70 |

| | Average Pooling | 0.01 | 0.00 | 0.02 | 0.33 |

| | Pooling A | 0.02 | 0.03 | 0.02 | 0.15 |

| | Pooling B | 0.29 | 0.02 | 0.09 | 0.12 |

| Speckle | Max Pooling | 0.17 | 0.03 | 0.05 | 0.16 |

| | Average Pooling | 0.02 | 0.08 | 0.01 | 0.06 |

| | Pooling A | 0.03 | 0.08 | 0.02 | 0.08 |

| | Pooling B | 0.09 | 0.13 | 0.04 | 0.10 |

| Mixture Noise | Max Pooling | 0.27 | 0.06 | 0.11 | 0.44 |

| | Average Pooling | 0.04 | 0.06 | 0.01 | 0.20 |

| | Pooling A | 0.02 | 0.05 | 0.02 | 0.20 |

| | Pooling B | 0.16 | 0.06 | 0.03 | 0.20 |

| No Noise | Max Pooling | 0.14 | 0.07 | 0.05 | 0.18 |

| | Average Pooling | 0.02 | 0.06 | 0.01 | 0.13 |

| | Pooling A | 0.03 | 0.08 | 0.01 | 0.09 |

| | Pooling B | 0.09 | 0.16 | 0.03 | 0.27 |

| Noise Type | Best Metric (Per Pooling) | Worst Performing |

|---|---|---|

| Salt-and-Pepper | Pooling A shows the lowest 1 − ρ (highest correlation) and ties Average Pooling for the lowest MD and MSE; Average Pooling is competitive. | Max Pooling |

| Gaussian | Pooling B achieves the best 1 − ρ (0.12), with Pooling A close behind (0.15); Average Pooling is best in MD and STD and ties Pooling A for the lowest MSE. | Max Pooling |

| Speckle | Average Pooling performs best in MD, MSE, and 1 − ρ; Pooling A remains a close second. | Max Pooling |

| Mixture Noise | Pooling A achieves the lowest MD and STD; Average Pooling the lowest MSE; Average Pooling, Pooling A, and Pooling B tie on 1 − ρ (0.20). | Max Pooling |

| No Noise | Pooling A shows the best 1 − ρ; Average Pooling is marginally better in MD and STD; both tie on MSE (0.01). | Max Pooling |

| True Class | Predicted (Clean)—Top Confusion | Predicted (Gaussian Noise σ = 0.2)—Top Confusion | Comment |

|---|---|---|---|

| Walking | 96% Walking (4% Jogging) | 80% Walking (17% Jogging, 3% Standing) | Misclassified mainly as Jogging under noise |

| Jogging | 96% Jogging (4% Walking) | 78% Jogging (19% Walking, 3% Upstairs) | Overlaps with Walking under noise |

| Sitting | 98% Sitting (2% Standing) | 97% Sitting (3% Standing) | Robust to Gaussian noise |

| Standing | 98% Standing (2% Sitting) | 95% Standing (5% Sitting) | Slight degradation |

| Upstairs | 96% Upstairs (4% Downstairs) | 81% Upstairs (14% Downstairs, 5% Jogging) | Degraded, confuses with Downstairs |

| Downstairs | 94% Downstairs (6% Upstairs) | 82% Downstairs (16% Upstairs, 2% Jogging) | Degraded, confuses with Upstairs |

| Pooling Type | Latency per Window (ms) | FLOPs Estimate | Epochs to Converge (1D CNN) | Epochs to Converge (2D CNN) | Total Training Time, 1D CNN (min) | Total Training Time, 2D CNN (min) | Model Size, 1D CNN (MB) | Model Size, 2D CNN (MB) |

|---|---|---|---|---|---|---|---|---|

| Average Pooling | 0.05 | 12.2 | 50 | 46 | 35 | 45 | 1.33 | 3.01 |

| Max Pooling | 0.06 | 13.3 | 43 | 45 | 28 | 33 | 1.21 | 2.88 |

| Pooling A | 0.08 | 13.1 | 45 | 39 | 30 | 39 | 1.33 | 3.12 |

| Pooling B | 0.15 | 14.1 | 48 | 41 | 37 | 48 | 1.45 | 3.98 |
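The measurement protocol behind the latency column is not described here. One common way to obtain a per-window figure, sketched below under that assumption, is to time a batch of windows with `time.perf_counter` after a short warm-up and report the median over several repeats. The function name and the toy average-pooling workload are hypothetical stand-ins for a full model forward pass.

```python
import statistics
import time


def latency_per_window_ms(fn, windows, warmup=10, repeats=5):
    """Median wall-clock latency of fn(window) in milliseconds, measured after a warm-up."""
    if not windows:
        raise ValueError("need at least one window")
    for w in windows[:warmup]:
        fn(w)  # warm-up calls (caches, lazy initialization) are not timed
    runs = []
    for _ in range(repeats):
        start = time.perf_counter()
        for w in windows:
            fn(w)
        runs.append((time.perf_counter() - start) * 1000.0 / len(windows))
    return statistics.median(runs)  # the median is less sensitive to scheduler hiccups


def toy_avg_pool(window, size=4):
    """Hypothetical workload: non-overlapping average pooling of one window."""
    return [sum(window[i:i + size]) / size for i in range(0, len(window) - size + 1, size)]


windows = [[float(i % 7) for i in range(200)] for _ in range(50)]  # 50 windows of 10 s at 20 Hz
ms = latency_per_window_ms(toy_avg_pool, windows)
```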

| Metric/Method | Downstairs | Jogging | Sitting | Standing | Upstairs | Walking | Weighted Avg |

|---|---|---|---|---|---|---|---|

| Precision | |||||||

| Avg Pooling | 0.81 | 0.98 | 0.97 | 0.98 | 0.88 | 0.95 | 0.94 |

| Proposed Pooling A | 0.88 | 0.99 | 0.97 | 1.00 | 0.87 | 0.96 | 0.96 |

| Proposed Pooling B | 0.87 | 0.98 | 0.99 | 0.97 | 0.80 | 0.95 | 0.94 |

| Recall | |||||||

| Avg Pooling | 0.78 | 0.98 | 0.97 | 0.98 | 0.88 | 0.95 | 0.93 |

| Proposed Pooling A | 0.88 | 0.99 | 0.97 | 1.00 | 0.87 | 0.96 | 0.96 |

| Proposed Pooling B | 0.87 | 0.98 | 0.99 | 0.97 | 0.80 | 0.95 | 0.94 |

| F1 Score | |||||||

| Avg Pooling | 0.79 | 0.98 | 0.97 | 0.98 | 0.88 | 0.95 | 0.93 |

| Proposed Pooling A | 0.88 | 0.99 | 0.97 | 1.00 | 0.87 | 0.96 | 0.96 |

| Proposed Pooling B | 0.87 | 0.98 | 0.99 | 0.97 | 0.80 | 0.95 | 0.94 |

| Metric/Class | Proposed Pooling A | Proposed Pooling B | Average Pooling | Preferred Approach |

|---|---|---|---|---|

| Accuracy (Weighted Avg) | 96.5% | 93.3% | 93% | Pooling A (highest accuracy) |

| F1 Score (Downstairs) | 0.89 | 0.85 | 0.79 | Pooling A |

| F1 Score (Upstairs) | 0.87 | 0.80 | 0.88 | Average Pooling (slightly higher F1) |

| Recall (Upstairs) | 0.87 | 0.80 | 0.88 | Average Pooling |

| Precision (Upstairs) | 0.87 | 0.80 | 0.88 | Average Pooling |

| F1 Score (Jogging) | 0.99 | 0.97 | 0.97 | Pooling A (highest) |

| F1 Score (Sitting) | 0.97 | 0.99 | 0.96 | Pooling B (slightly better) |

| F1 Score (Standing) | 1.00 | 0.96 | 0.97 | Pooling A |

| F1 Score (Walking) | 0.96 | 0.94 | 0.94 | Pooling A |

| ROC (Weighted Average) | 0.98 | 0.93 | 0.94 | Pooling A |

| Computational Cost | Moderate | Moderate | Low | Average Pooling |

| Sensitivity to Noise | Low | Moderate | Low | Pooling A/Avg |

| Sensitivity to Edges | Strong | Moderate | Weak | Pooling A |

| Input Representation | Bin Size | Accuracy (%) | Macro F1 | Weighted F1 | Remarks |

|---|---|---|---|---|---|

| Histogram Encoding | 50 | 93.8 | 0.93 | 0.94 | Coarse bins, lower resolution |

| Histogram Encoding | 100 | 95.0 | 0.95 | 0.95 | Balanced trade-off |

| Histogram Encoding | 200 | 95.1 | 0.95 | 0.95 | Slight gain, higher cost |

| Raw Window (2D CNN) | – | 92.6 | 0.92 | 0.92 | Transformation ablated |
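The bin-size ablation above varies how finely sensor values are quantized before the 2D CNN. The exact histogram-to-image encoding is not given in this section, so the sketch below is a hypothetical layout: rows are time slices of the window, columns are value bins, and the three channels hold the x, y, and z axes (analogous to R, G, B). The value range of ±20 m/s² (about ±2 g) and the slice count are assumptions; larger `bins` values give finer resolution at higher cost, mirroring the 50/100/200 trade-off in the table.

```python
def histogram_image(window, bins=100, lo=-20.0, hi=20.0, slices=10):
    """Hypothetical encoding: rows = time slices, columns = value bins, channels = (x, y, z).

    window: list of (x, y, z) samples. Each cell holds the fraction of samples in that
    slice whose value on that axis falls in that bin; out-of-range values go to edge bins.
    Samples left over when len(window) is not divisible by `slices` are ignored.
    """
    if len(window) < slices:
        raise ValueError("window must contain at least one sample per slice")
    size = len(window) // slices
    width = (hi - lo) / bins
    image = [[[0.0, 0.0, 0.0] for _ in range(bins)] for _ in range(slices)]
    for s in range(slices):
        chunk = window[s * size:(s + 1) * size]
        for sample in chunk:
            for axis, value in enumerate(sample):
                b = min(max(int((value - lo) / width), 0), bins - 1)  # clip into [0, bins-1]
                image[s][b][axis] += 1.0 / len(chunk)
    return image


# A 10 s window at 20 Hz of a phone lying still (gravity mostly on the y axis).
img = histogram_image([(0.0, 9.8, -1.0)] * 200, bins=100)
```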

| Activity | Precision | Recall | F1 Score | Comparison vs. Non-Overlapping (20%) |

|---|---|---|---|---|

| Downstairs | 0.82 | 0.80 | 0.81 | −5 |

| Jogging | 0.96 | 0.95 | 0.95 | −1 |

| Sitting | 0.97 | 0.97 | 0.97 | −1 |

| Standing | 0.98 | 0.97 | 0.97 | −3 |

| Upstairs | 0.88 | 0.85 | 0.86 | −8 |

| Walking | 0.96 | 0.95 | 0.95 | −4 |

| Macro Avg | 0.93 | 0.91 | 0.92 | −4 |

| Weighted Avg | 0.94 | 0.92 | 0.93 | −3 |
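Windows that straddle an activity change are where transitional errors arise. The sketch below segments a labeled stream into fixed-length windows, assuming the dataset table's 20 Hz sampling and 10 s windows (200 samples) with a 2 s overlap (a step of 160 samples), and assigns each window its majority label in line with the per-window label granularity. Majority labeling is an assumption; the study's exact labeling rule for mixed windows is not stated here.

```python
from collections import Counter


def sliding_windows(samples, labels, window=200, step=160):
    """Segment a labeled stream into fixed-length windows with per-window majority labels.

    With 20 Hz sampling, window=200 is 10 s and step=160 leaves a 40-sample (2 s) overlap;
    step=window reproduces non-overlapping segmentation.
    """
    if len(samples) != len(labels):
        raise ValueError("samples and labels must have equal length")
    out = []
    for start in range(0, len(samples) - window + 1, step):
        label = Counter(labels[start:start + window]).most_common(1)[0][0]
        out.append((samples[start:start + window], label))
    return out


# 25 s of Walking followed by 25 s of Sitting.
labels = ["Walking"] * 500 + ["Sitting"] * 500
samples = list(range(1000))
windows = sliding_windows(samples, labels)
```

A window such as the one covering samples 320 to 519 mixes 180 Walking samples with 20 Sitting samples, so a transition shows up as label noise inside an otherwise clean-looking window.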

| Activity (True Label) | Noise-Free Accuracy (%) | Accuracy with Motion Artifact (%) (Avg. Shaking Amplitude 0.5 g) | False Positive Rate (%) | Most Frequent Misclassification |

|---|---|---|---|---|

| Standing | 99.8 | 92.1 | 7.9 | Walking |

| Sitting | 98.9 | 90.7 | 8.2 | Standing |

| Walking | 96.7 | 91.4 | 5.3 | Jogging |

| Jogging | 95.2 | 91.9 | 3.3 | Walking |

| Upstairs | 93.5 | 86.4 | 7.1 | Downstairs |

| Downstairs | 92.7 | 85.5 | 7.2 | Upstairs |
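The motion-artifact condition specifies only an average shaking amplitude of 0.5 g. The sketch below is one hypothetical way to inject such an artifact into accelerometer windows expressed in m/s². The sinusoidal form, the 4 Hz shaking frequency (below the 10 Hz Nyquist limit at 20 Hz), and the random per-axis phase are assumptions, and the amplitude is treated as the sinusoid's peak value.

```python
import math
import random

G = 9.80665  # standard gravity in m/s^2, used to convert the 0.5 g amplitude


def add_shaking(window, amplitude_g=0.5, freq_hz=4.0, fs=20.0, seed=None):
    """Hypothetical motion artifact: per-axis sinusoidal shaking with a random phase.

    window: list of (x, y, z) samples in m/s^2. amplitude_g follows the table (0.5 g);
    the sinusoidal form and freq_hz are assumptions.
    """
    rng = random.Random(seed)
    amp = amplitude_g * G
    phases = [rng.uniform(0.0, 2 * math.pi) for _ in range(3)]
    out = []
    for n, sample in enumerate(window):
        t = n / fs
        out.append(tuple(v + amp * math.sin(2 * math.pi * freq_hz * t + p)
                         for v, p in zip(sample, phases)))
    return out


still = [(0.0, 9.8, 0.0)] * 40  # 2 s of a stationary phone
shaken = add_shaking(still, seed=1)
```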

| Class | Histogram-Aware CNN + FC (Average Pooling) | Histogram-Aware CNN + FC (Proposed Pooling A) | Histogram-Aware CNN + FC (Proposed Pooling B) | One-Dimensional CNN (Average Pooling) | One-Dimensional CNN (Pooling A) |

|---|---|---|---|---|---|

| Downstairs | 78.3 | 87.8 | 87.3 | 77.8 | 85.8 |

| Jogging | 98.2 | 98.9 | 98.1 | 97.3 | 95.6 |

| Sitting | 97.0 | 97.4 | 98.7 | 95.0 | 96.9 |

| Standing | 98.1 | 100.0 | 97.0 | 97.9 | 99.4 |

| Upstairs | 88.3 | 86.5 | 80.0 | 86.3 | 95.8 |

| Walking | 95.0 | 96.1 | 95.4 | 94.3 | 97.3 |

| Weighted Average | 94.0 | 96.5 | 94.3 | 93.0 | 95.1 |

| Model Variant | Histogram Encoding | ECP | CMV | Accuracy (%) |

|---|---|---|---|---|

| Baseline CNN | × | × | × | 79.91 |

| +Histogram Only | ✓ | × | × | 91.6 |

| +ECP Only | × | ✓ | × | 87.4 |

| +CMV Only | × | × | ✓ | 83.6 |

| +ECP + CMV | × | ✓ | ✓ | 89.1 |

| Full Model (ECP + CMV + Histogram) | ✓ | ✓ | ✓ | 96.84 |

| ECP + Histogram | ✓ | ✓ | × | 96.5 |

| Study | Dataset Used | Model Type | Feature Representation | Pooling Strategy | Noise Robustness | Reported Accuracy (%) | Remarks |

|---|---|---|---|---|---|---|---|

| Kwapisz et al. (2011) [ACM SigKDD] [1] | Original WISDM v1.0 | Multilayer Perceptron (MLP) | Handcrafted features (mean, std, etc.) | N/A | Not addressed | 91.7 | Classical benchmark; no deep learning used |

| Walse et al. (2016) [ICTCS] [2] | WISDM v1.0 | k-NN, J48, Random Forest | Manual statistical features | N/A | Not addressed | 84–89 | Focus on classical ML classifiers |

| Min et al. (2020) [IJC] [3] | WISDM v1.0 | SVM, RF, KNN | Time-domain features | N/A | Not addressed | 90.2 (best) | No deep architectures; limited generalization |

| Seelwal & Srinivas (2023) [JOEE] [4] | WISDM v1.1 | CNN | Raw time-series input | Max pooling | Not addressed | 92.8 | Used basic CNN without preprocessing enhancements |

| Heydarian & Doyle (2023) [arXiv] [5] | rWISDM (Repaired WISDM) | CNN-LSTM Hybrid | Denoised signals | Max pooling | Basic denoising applied | 93.1 | Enhanced input via preprocessing, but no custom pooling |

| Sharen et al. (2024) [ESWA] [6] | WISDM v1.1 | WISNet (Custom DNN) | Raw signals + domain features | Avg/Max pooling | Minimal robustness | 94.5 | Deep model, but no redundancy modeling or pooling innovation |

| Abdellatef et al. (2025) [Sci. Reports] [7] | WISDM v1.1 | Multi-layer CNN | Raw time-series | Avg pooling | Not addressed | 94.2 | Strong architecture but lacks input transformations |

| This Work (2025) | Based on WISDM | Two-dimensional CNN + Histogram | Raw time-series + Histogram-to-RGB image | Proposed Pooling A & B | Robust to Gaussian, S&P, Mixed noise | 96.5 | First to combine redundancy modeling + pooling design for noise-aware HAR |

| Dataset | Model/Pooling | Accuracy (%), 2D CNN (Histogram) | Accuracy (%), 1D CNN (Raw Signal) | Macro F1 | Notes |

|---|---|---|---|---|---|

| WISDM | Proposed (Pooling A) | 96.5 | 95.1 | 0.95 | Our main benchmark, segmentation-based windows |

| UCI-HAR | Proposed (Pooling A) | 95.2 | 93.0 | 0.94 | Slightly below SOTA (>97%); suggests transferability |

| MobiAct | Proposed (Pooling A) | 94.7 | 93.1 | 0.93 | Generalizes, but accuracy drops for activities with high inter-user variability |

| WISDM | Average Pooling (CNN) | 94.1 | 93.0 | 0.92 | Baseline comparison |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.


Hamad Ameen, B.A.; Aminifar, S.A. Robust Activity Recognition via Redundancy-Aware CNNs and Novel Pooling for Noisy Mobile Sensor Data. Sensors 2026, 26, 710. https://doi.org/10.3390/s26020710


Chicago/Turabian Style: Hamad Ameen, Bnar Azad, and Sadegh Abdollah Aminifar. 2026. "Robust Activity Recognition via Redundancy-Aware CNNs and Novel Pooling for Noisy Mobile Sensor Data" Sensors 26, no. 2: 710. https://doi.org/10.3390/s26020710
